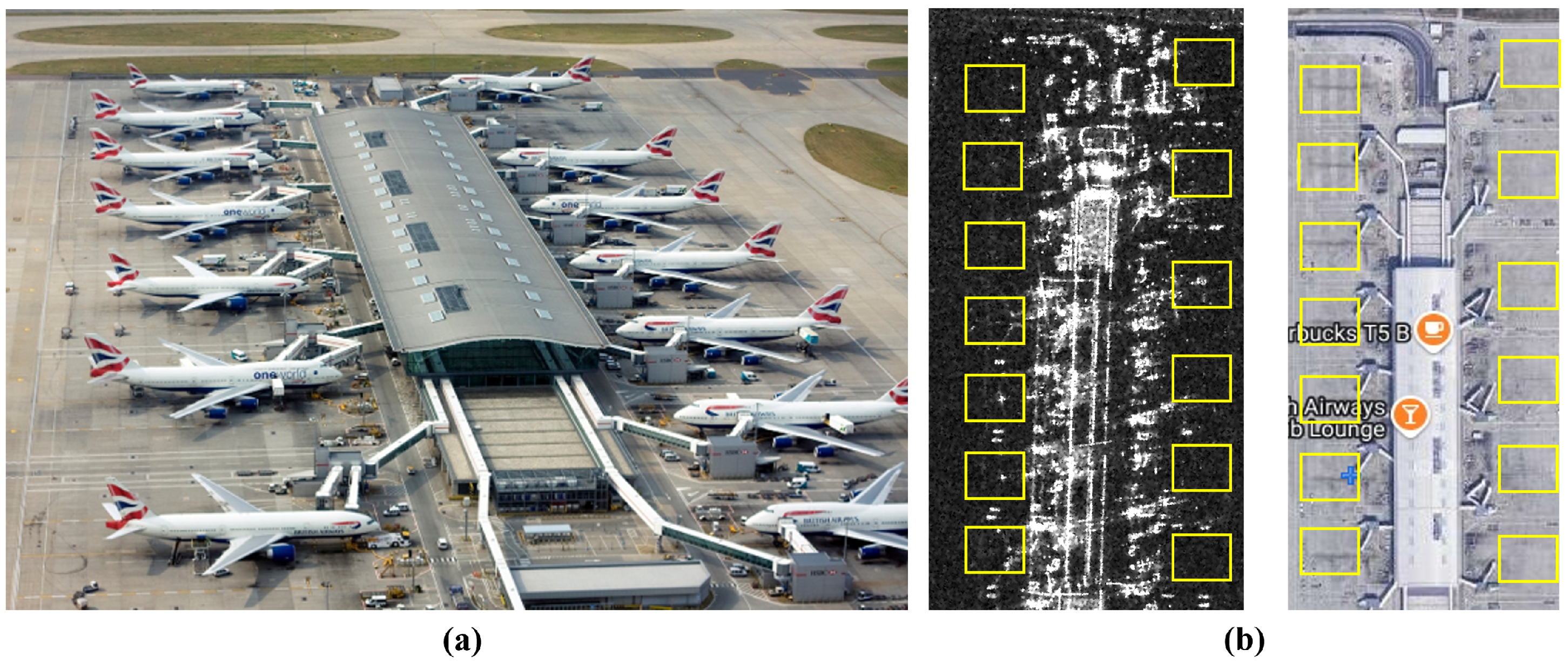

Parking Pattern Guided Vehicle and Aircraft Detection in Aligned SAR-EO Aerial View Images

Abstract

1. Introduction

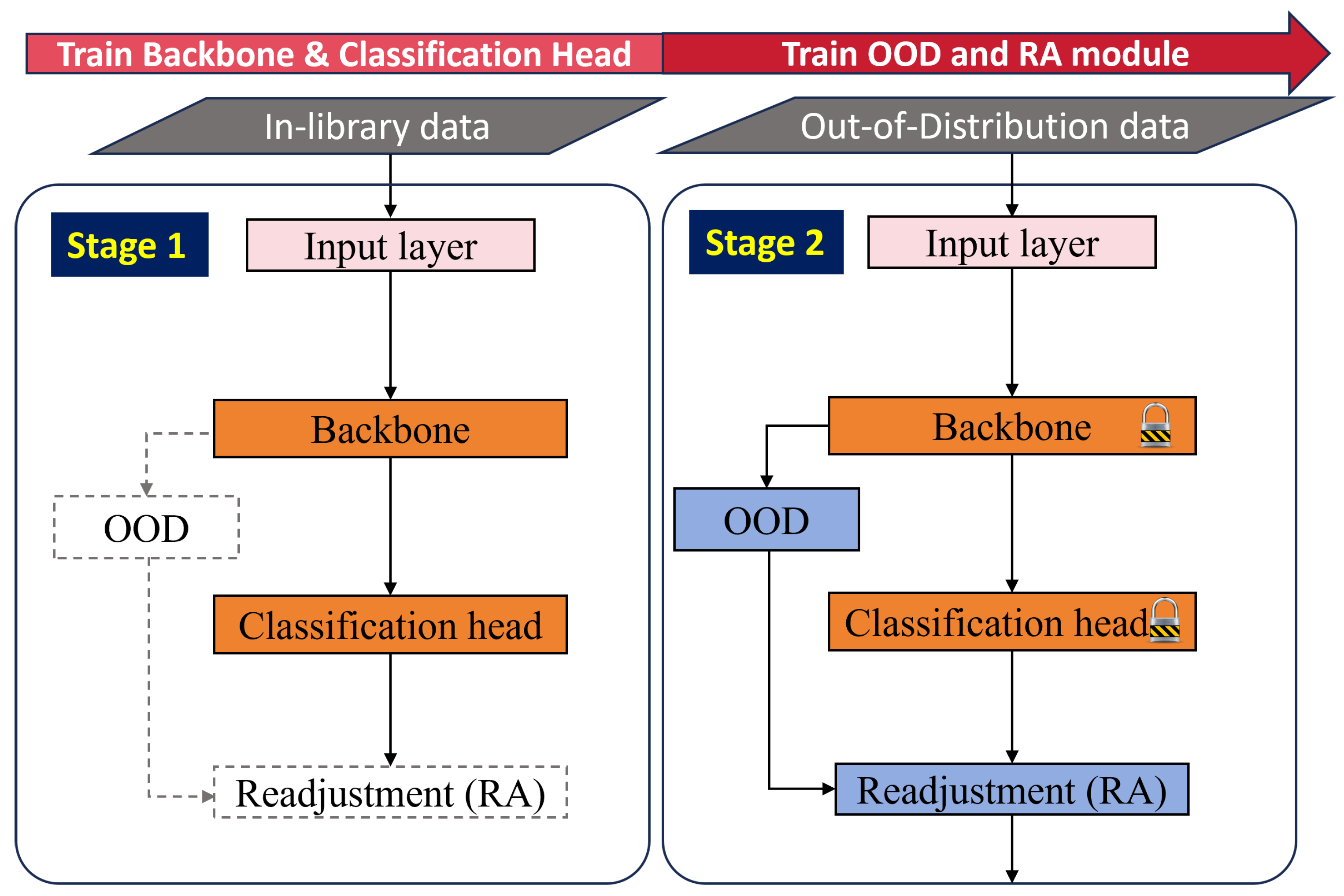

2. ATR Based on Optical-SAR Image Scene Grammar Alignment with SRM and CATRM

2.1. The SRM for In-Library and OOD Scene Detection Based on MSP and AdvOE

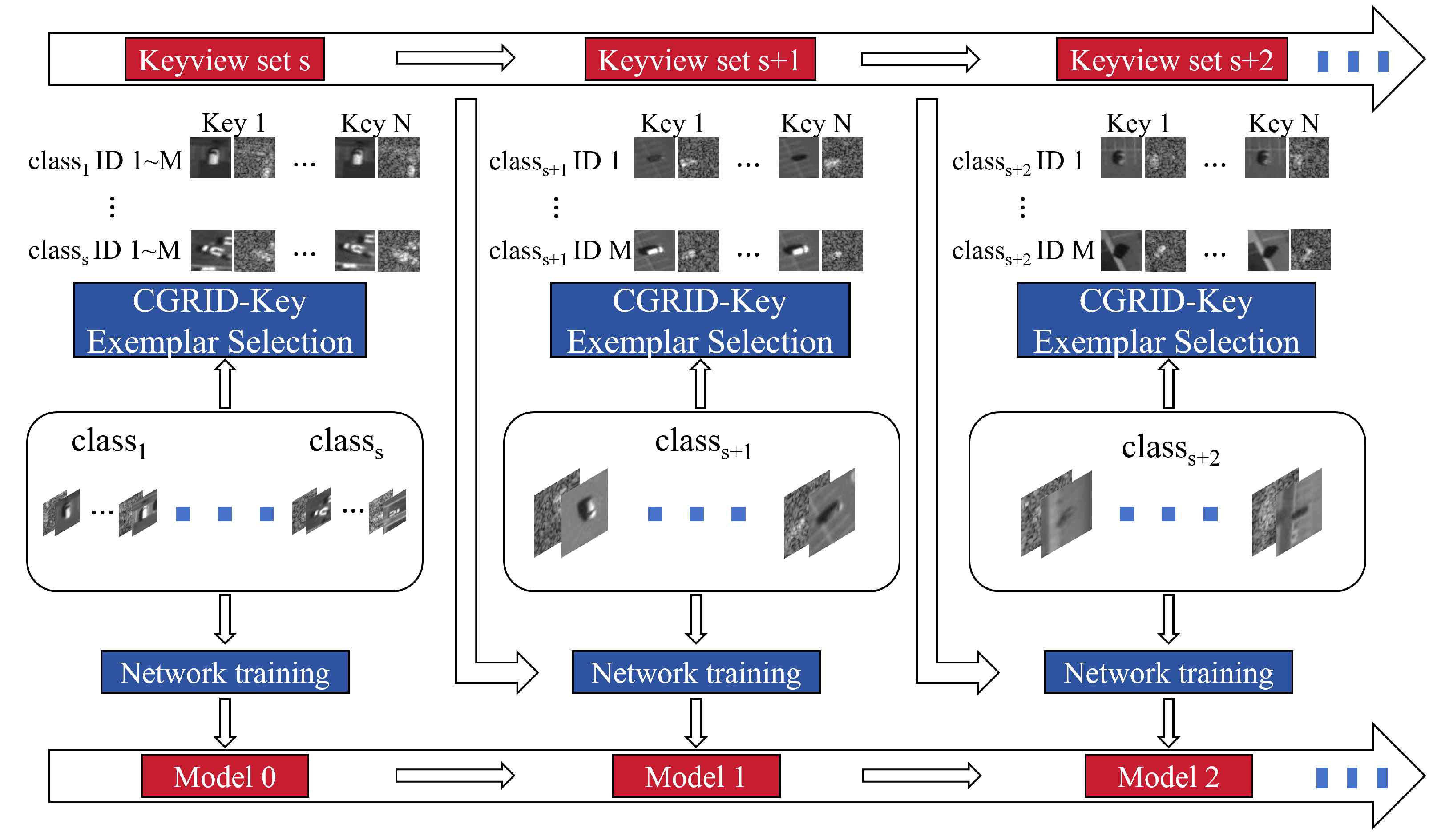
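As a point of reference for the MSP part of this section's title, the following is a minimal sketch of maximum-softmax-probability (MSP) thresholding for OOD detection. The function names and the threshold value are illustrative assumptions, not taken from the paper, and the AdvOE training stage is not shown.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def msp_score(logits):
    """Maximum softmax probability: higher means a more confident prediction."""
    return max(softmax(logits))


def is_ood(logits, threshold=0.5):
    """Flag a sample as OOD when its MSP falls below the threshold.

    The default threshold is a placeholder (assumption); in practice it
    would be tuned on held-out data.
    """
    return msp_score(logits) < threshold
```

A flat logit vector yields a low MSP and is flagged, whereas a strongly peaked one is treated as in-library.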

2.2. The CATRM for Target Recognition Based on the CGRID-Key Strategy

- Step 1: Perform offline in-library/In-Distribution (ID) target detection training with image samples featuring s categories of targets, together with offline Outlier Exposure (OE) training with diverse unlabeled EO-SAR image samples from the wider OOD set, to obtain Model 0;

- Step 2: Deploy the trained system into the test environment and present N OOD samples to Model 0;

- Step 3: Divide the N OOD samples into C clusters with a clustering module that jointly considers the target features and the context information, with each cluster consisting of a certain number of samples;

- Step 4: If the number of samples in a cluster passes a predetermined threshold, or if the total number of OOD samples in a cluster and its nearest neighbor in feature space passes a second threshold, allow a human observer to add a new target category label to the library and annotate a bounded number of representative OOD EO-SAR pairs according to the CGRID-Key exemplar selection strategy;

- Step 5: Update Model 0 using the samples belonging to the newly labeled category and representative old samples (to avoid catastrophic forgetting) to obtain Model 1;

- Step 6: (optional) Repeat Steps 2–5 if necessary;

- Step 7: Redeploy the system to the test environment to test the ID target classification performance and the OOD sample detection performance.
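The cluster-size test in Steps 3 and 4 can be sketched as follows. This is a minimal illustration under stated assumptions: the cluster labels and centroids are taken as given (the clustering module itself is not shown), "nearest neighbor" is read as the nearest other cluster by Euclidean centroid distance, "passes" is read as strictly greater than, and all function and parameter names are hypothetical.

```python
import math
from collections import Counter


def nearest_cluster(idx, centroids):
    """Return the index of the centroid closest to centroids[idx], or None."""
    best, best_dist = None, math.inf
    for j, c in enumerate(centroids):
        if j == idx:
            continue
        d = math.dist(centroids[idx], c)  # Euclidean distance (Python 3.8+)
        if d < best_dist:
            best, best_dist = j, d
    return best


def clusters_to_annotate(labels, centroids, size_threshold, pair_threshold):
    """List clusters that should be handed to a human annotator.

    A cluster is flagged when its own sample count exceeds size_threshold,
    or when its count plus that of its nearest cluster exceeds pair_threshold.
    """
    sizes = Counter(labels)
    flagged = []
    for k in range(len(centroids)):
        n_k = sizes.get(k, 0)
        neighbor = nearest_cluster(k, centroids)
        n_pair = n_k + (sizes.get(neighbor, 0) if neighbor is not None else 0)
        if n_k > size_threshold or n_pair > pair_threshold:
            flagged.append(k)
    return flagged
```

Only the flagged clusters would then go through exemplar selection and annotation; the CGRID-Key selection itself is outside the scope of this sketch.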

3. Simulation Results

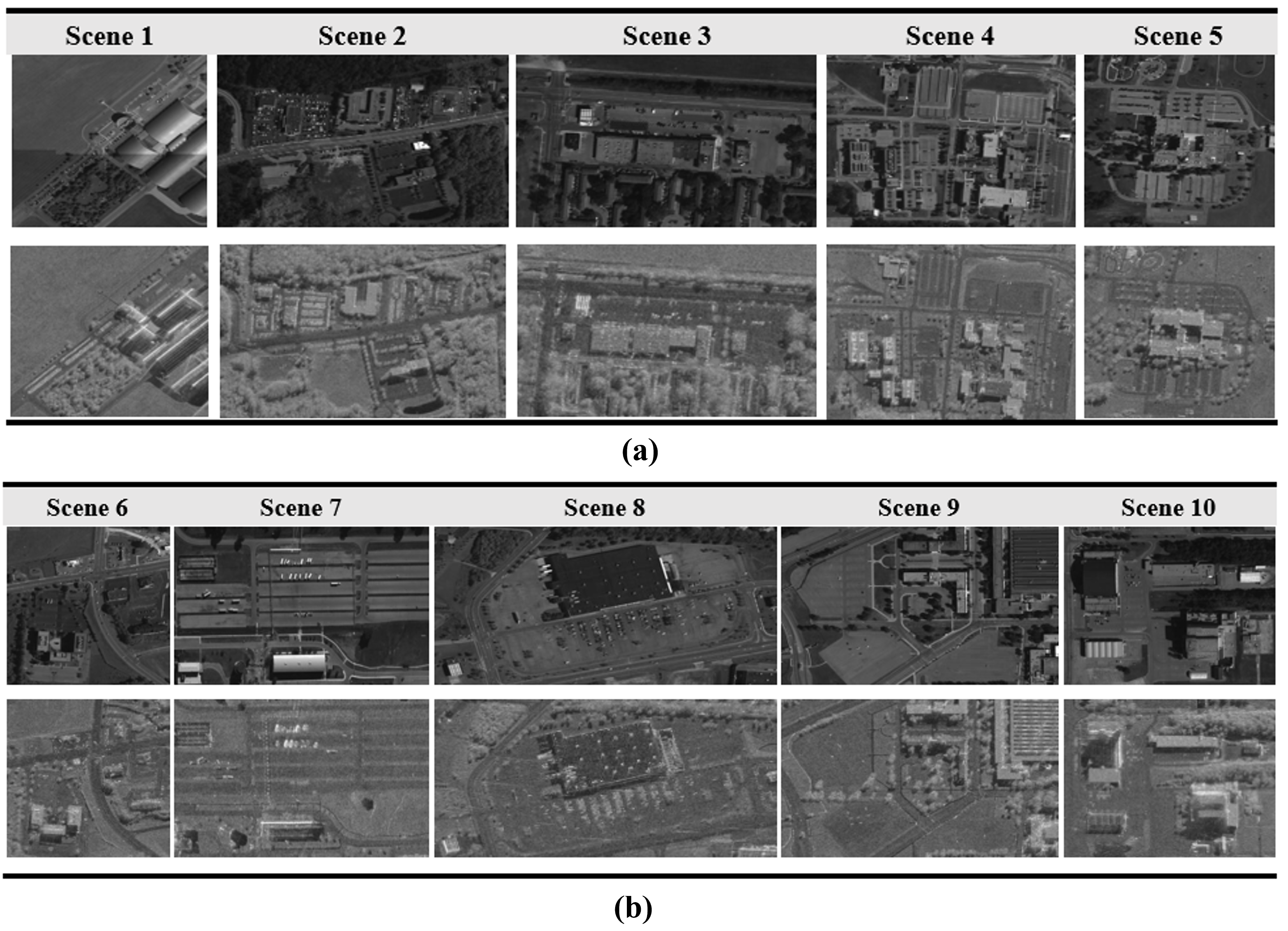

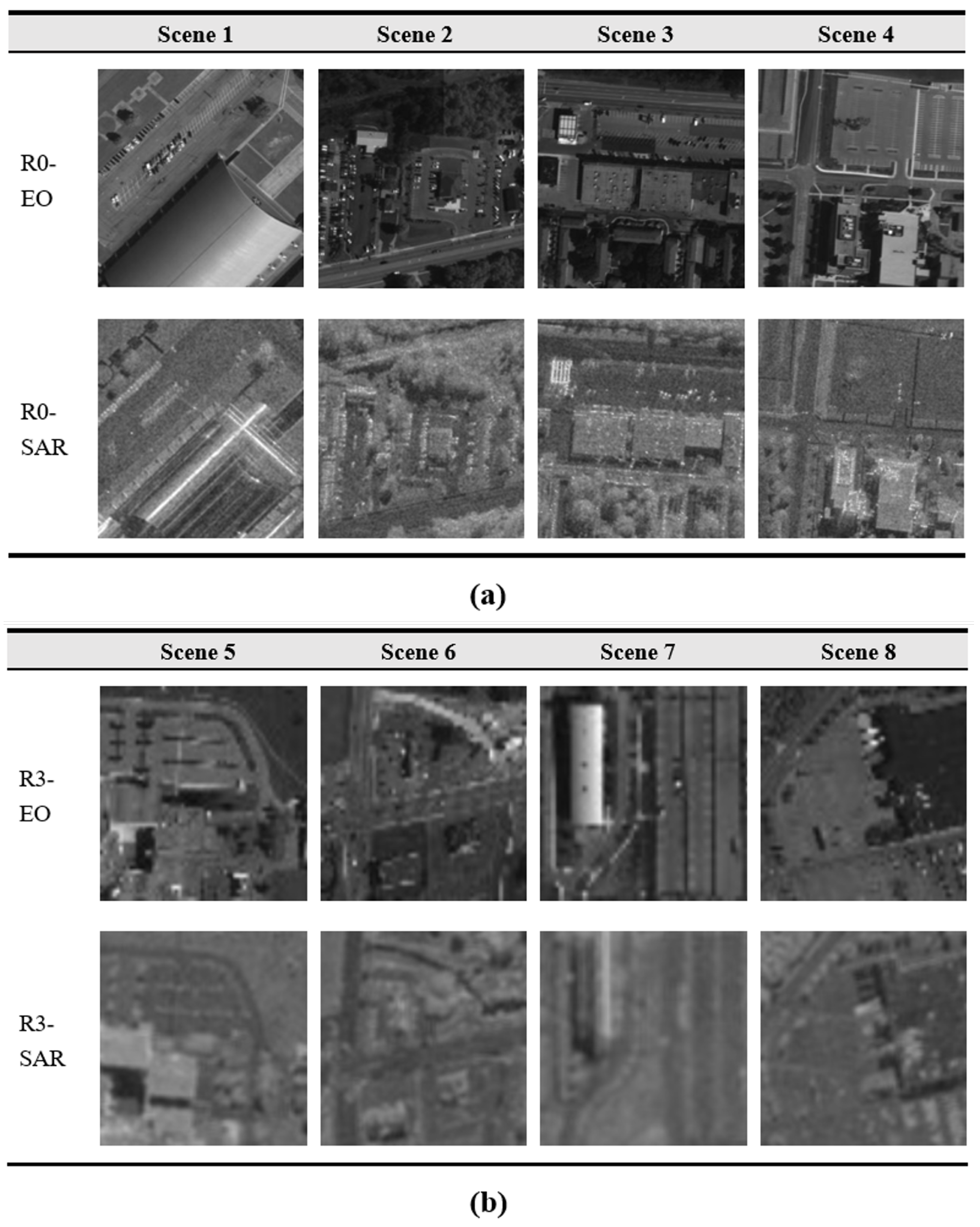

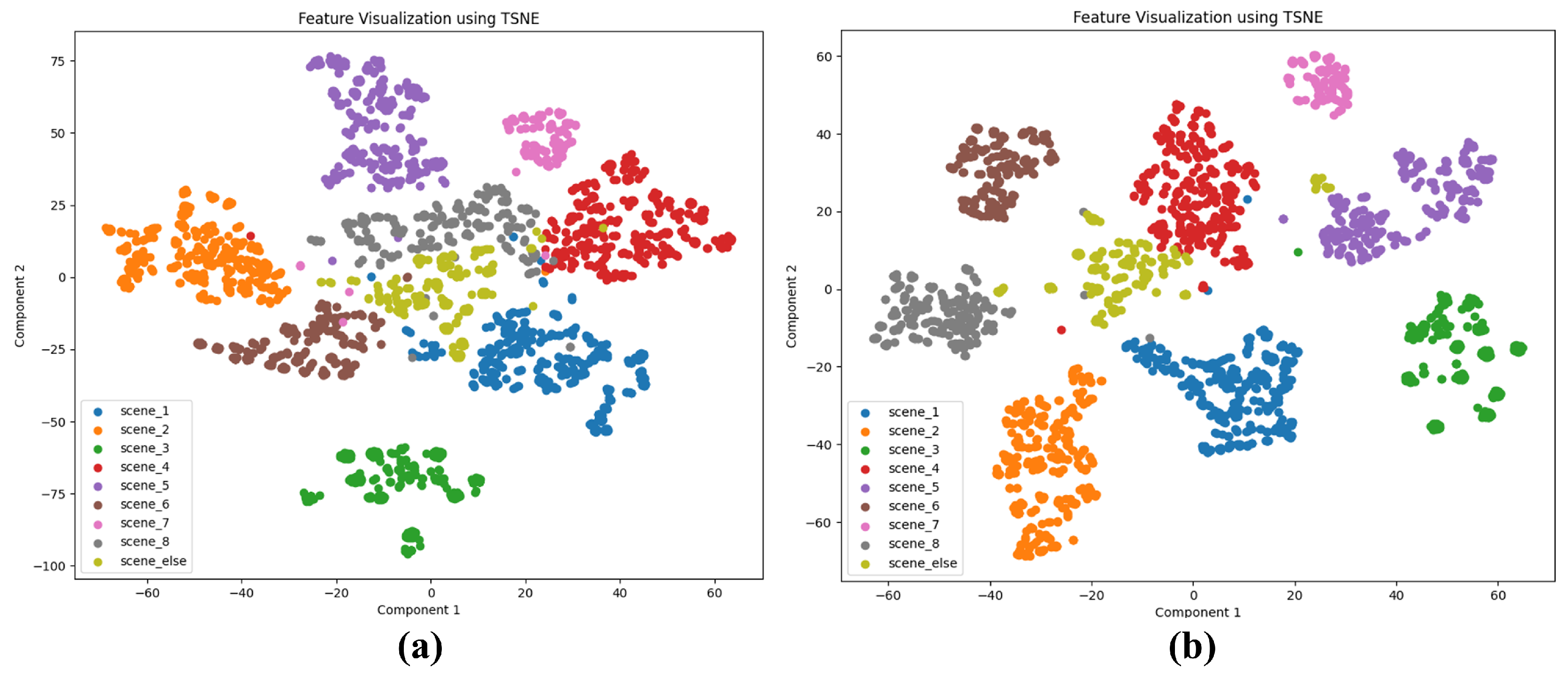

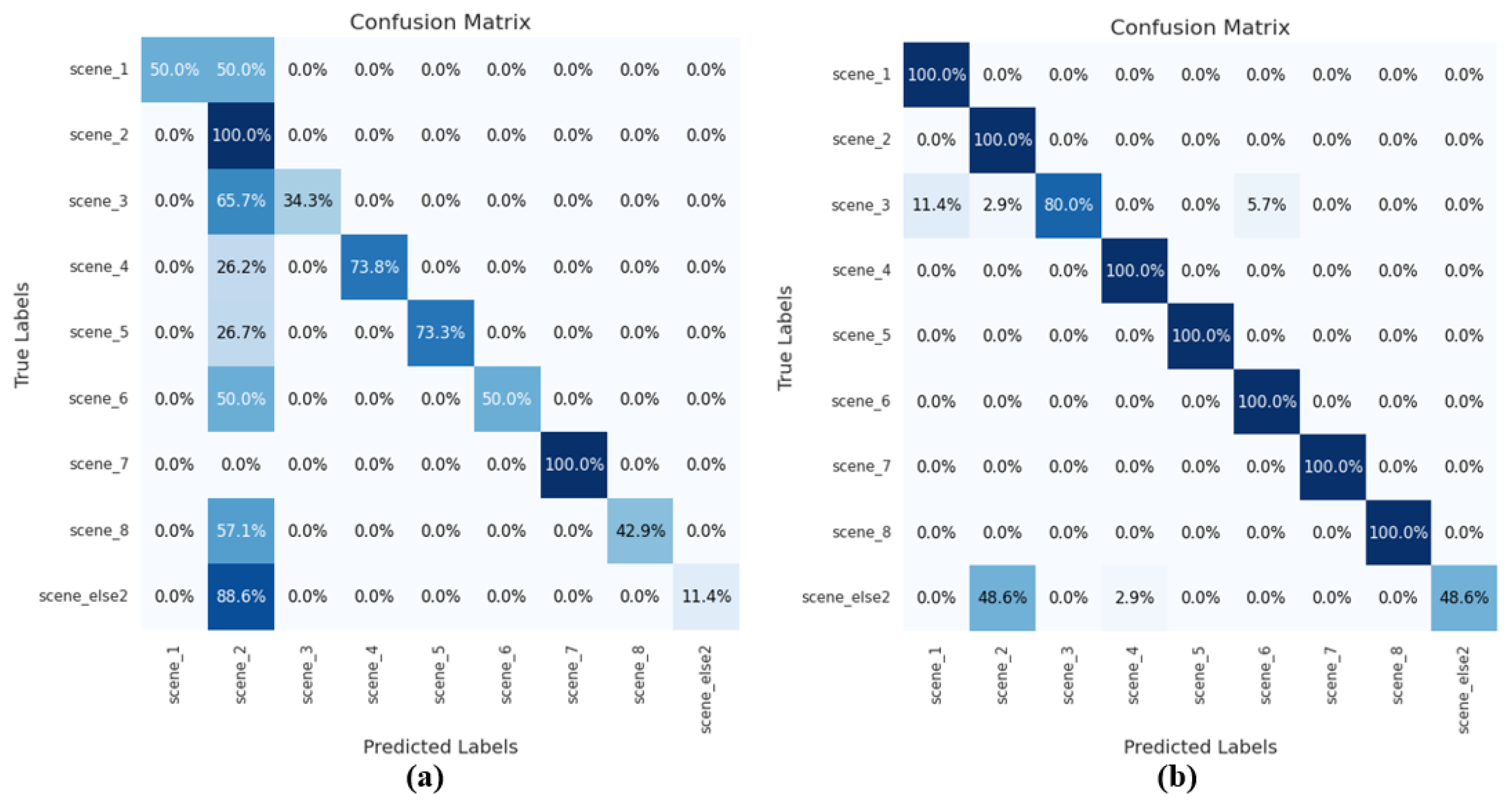

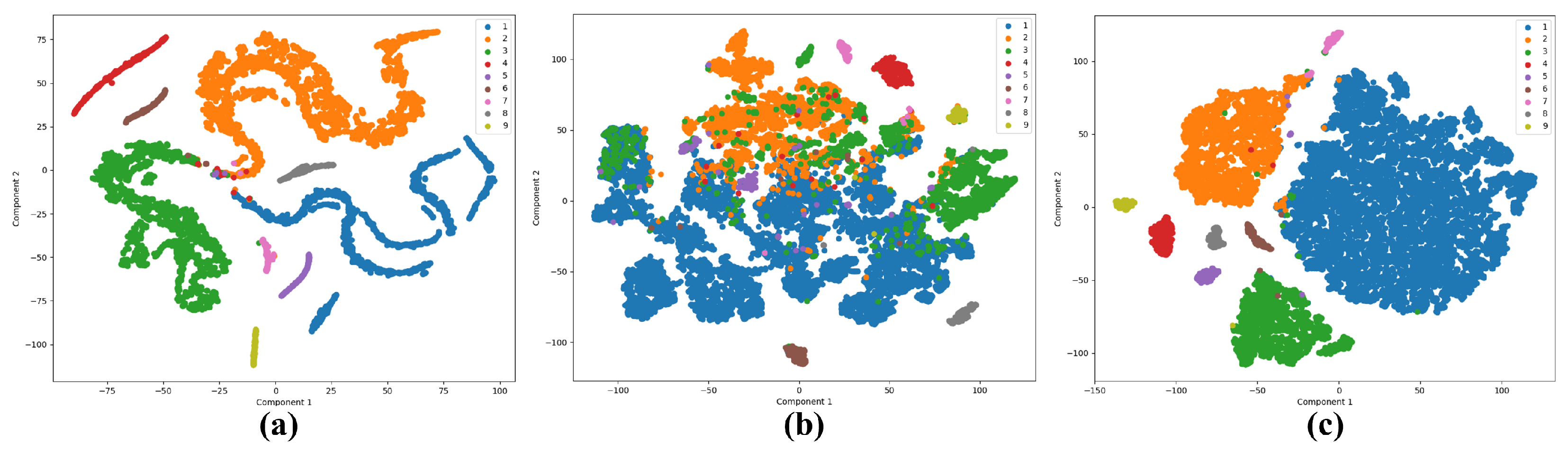

3.1. Experiment Results on the UNICORN Dataset

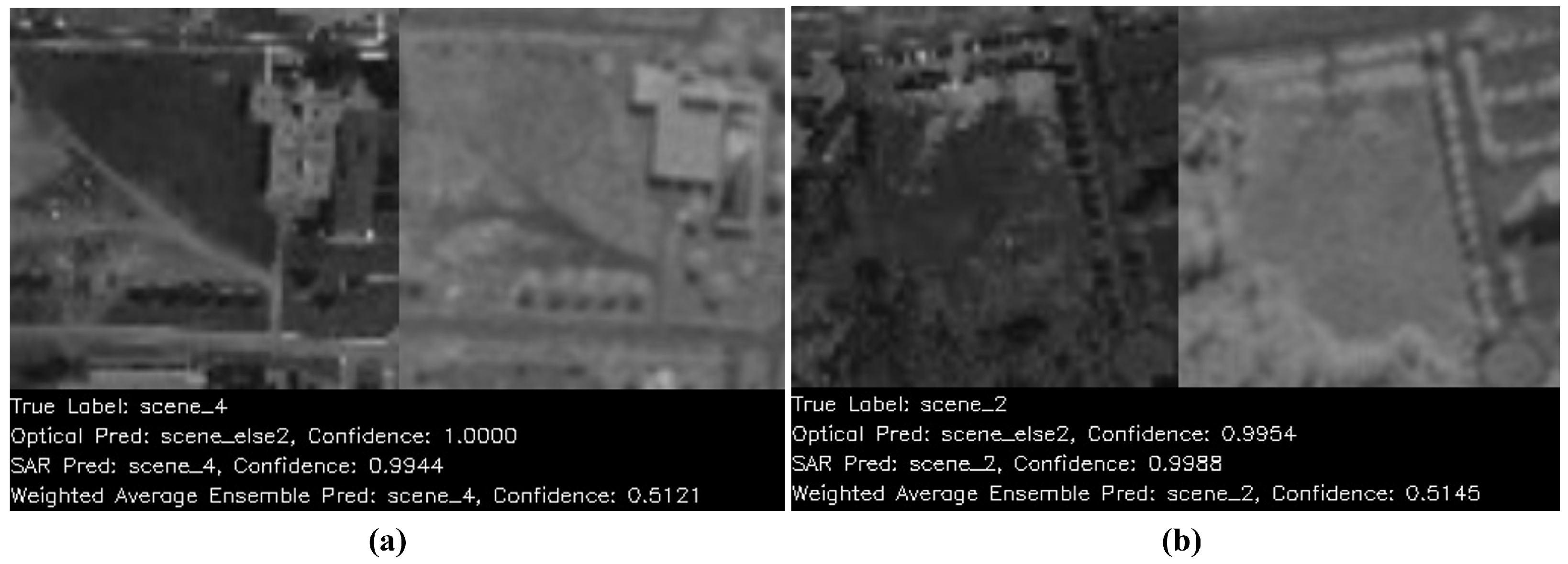

3.1.1. Parking Lot Recognition with SRM

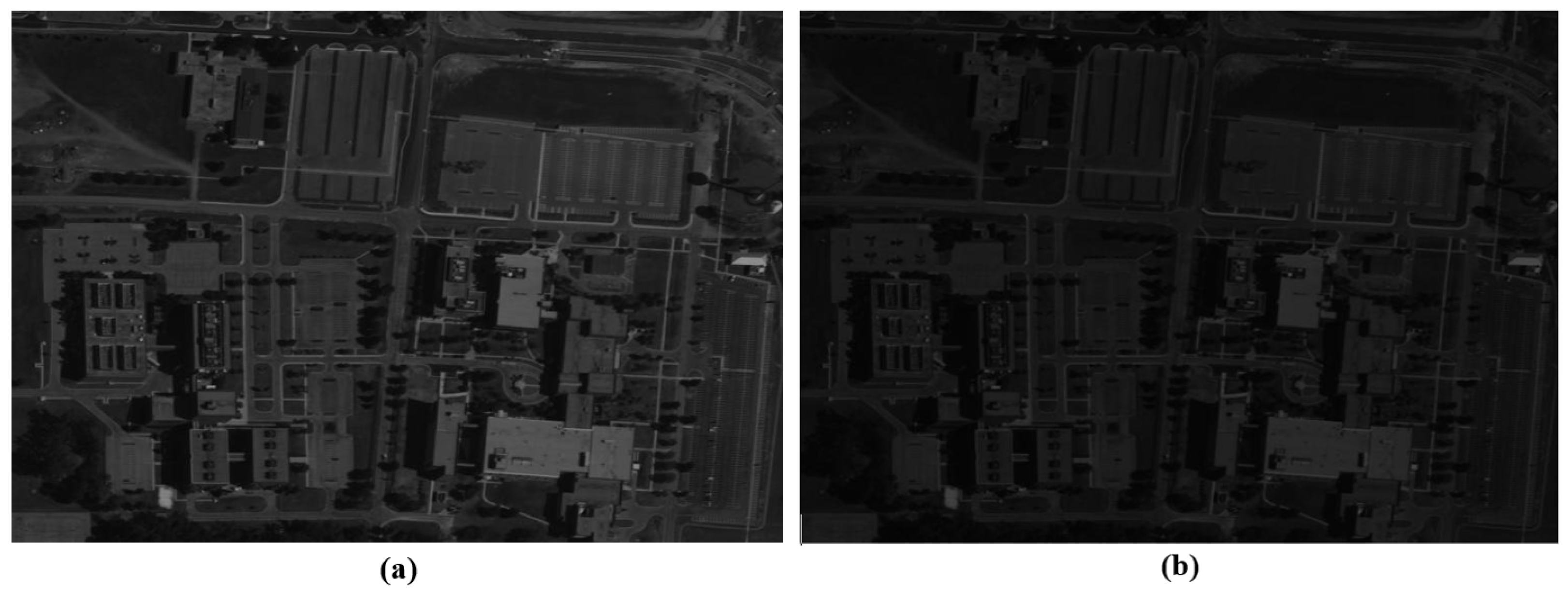

3.1.2. Target Recognition Based on the CGRID-Key Strategy

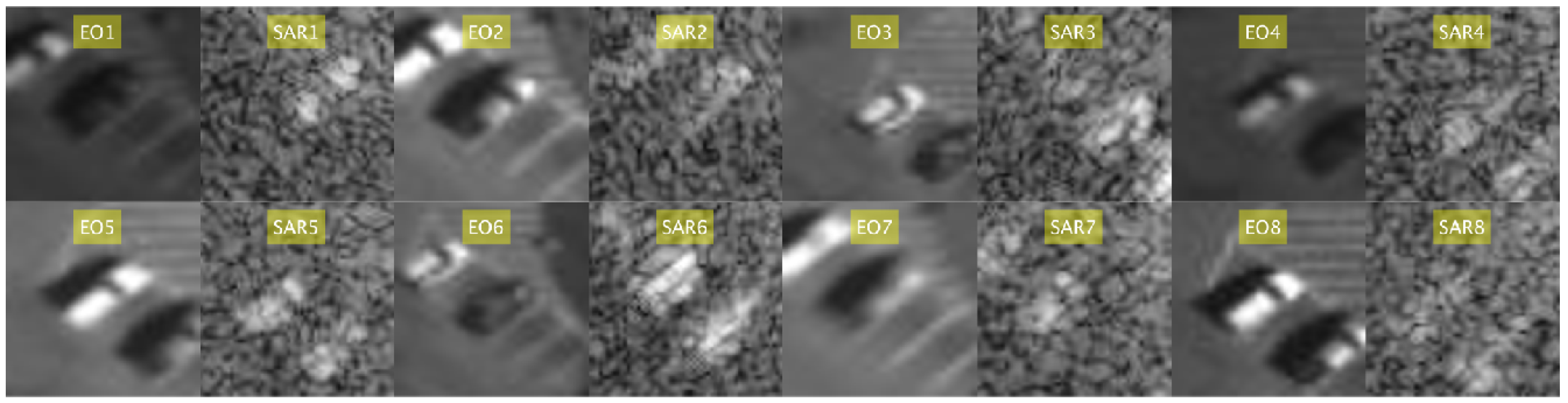

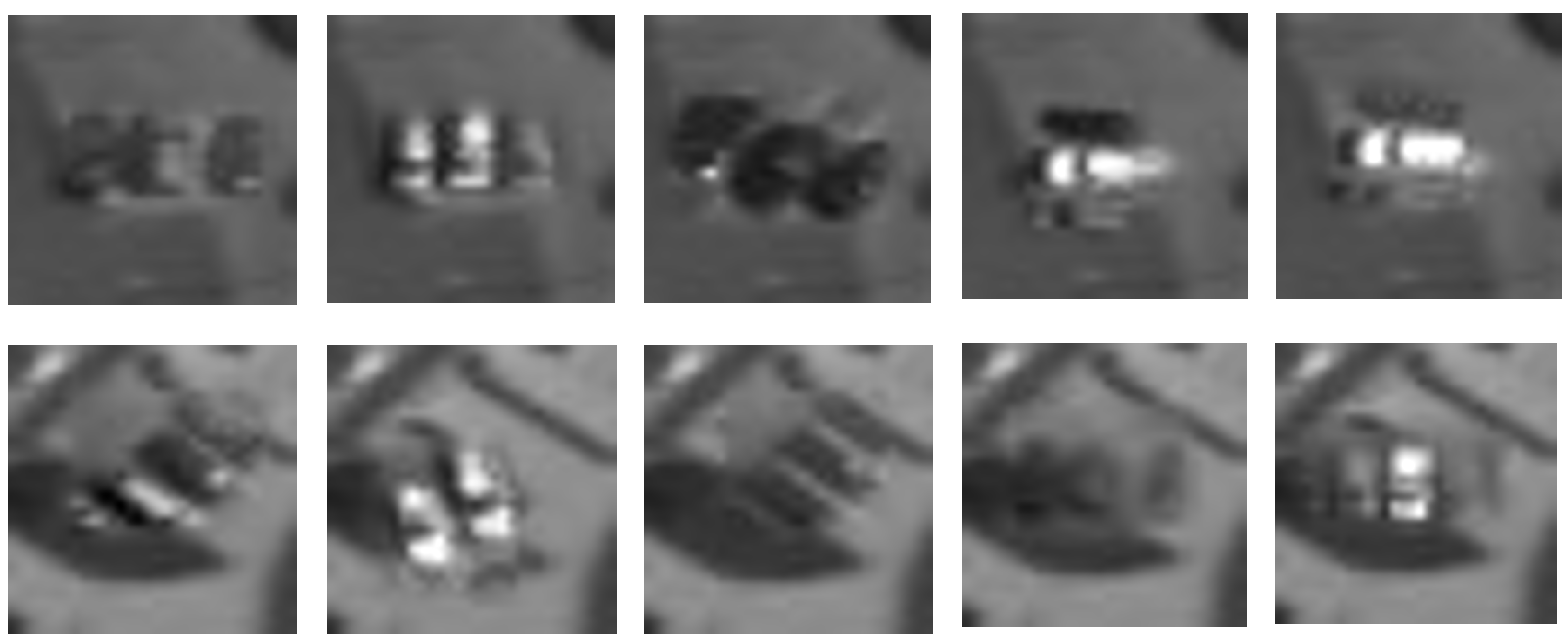

- Divide the images into subsets, with one subset accounting for the cases in which 1 or 2 vehicles are present and another accounting for the cases in which 3+ vehicles are present.

- Use the images in the subset designated for OE training to obtain Model 0; in this process, random vehicle cropping and Poisson image fusion are employed to reduce the impact of vehicle quantity and the background (see Figure 15).

- Assume that the current library contains a given number of prototypes, add a new vehicle category, and have a number of prototypes of the incremental class annotated manually.

- Use the annotated samples as seeds and generate training samples via clustering.

- Train Model 0 with synthesized samples and representative old samples (to avoid catastrophic forgetting) to obtain Model 1.

- Test the performance of Model 1 with prototypes based on the key-view exemplars.
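The data handling in the procedure above can be sketched as follows: splitting images by vehicle count, and mixing new-class samples with retained old samples before the incremental update. This is a minimal illustration, not the authors' implementation. The `(image_id, vehicle_count)` sample format and all function names are hypothetical, and random sampling is used here only as a stand-in for the CGRID-Key exemplar selection.

```python
import random


def split_by_vehicle_count(samples):
    """Split (image_id, vehicle_count) pairs into 1-2 vehicle and 3+ vehicle subsets."""
    few = [s for s in samples if 1 <= s[1] <= 2]
    many = [s for s in samples if s[1] >= 3]
    return few, many


def build_incremental_set(new_samples, old_samples, n_old, seed=0):
    """Combine new-class samples with a retained subset of old samples.

    Replaying old samples alongside the new class is a common way to limit
    catastrophic forgetting. Random selection is an assumption made for
    brevity; it replaces the exemplar selection strategy of the paper.
    """
    rng = random.Random(seed)
    kept_old = rng.sample(old_samples, min(n_old, len(old_samples)))
    return list(new_samples) + kept_old
```

Images with no vehicles fall into neither subset in this sketch.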

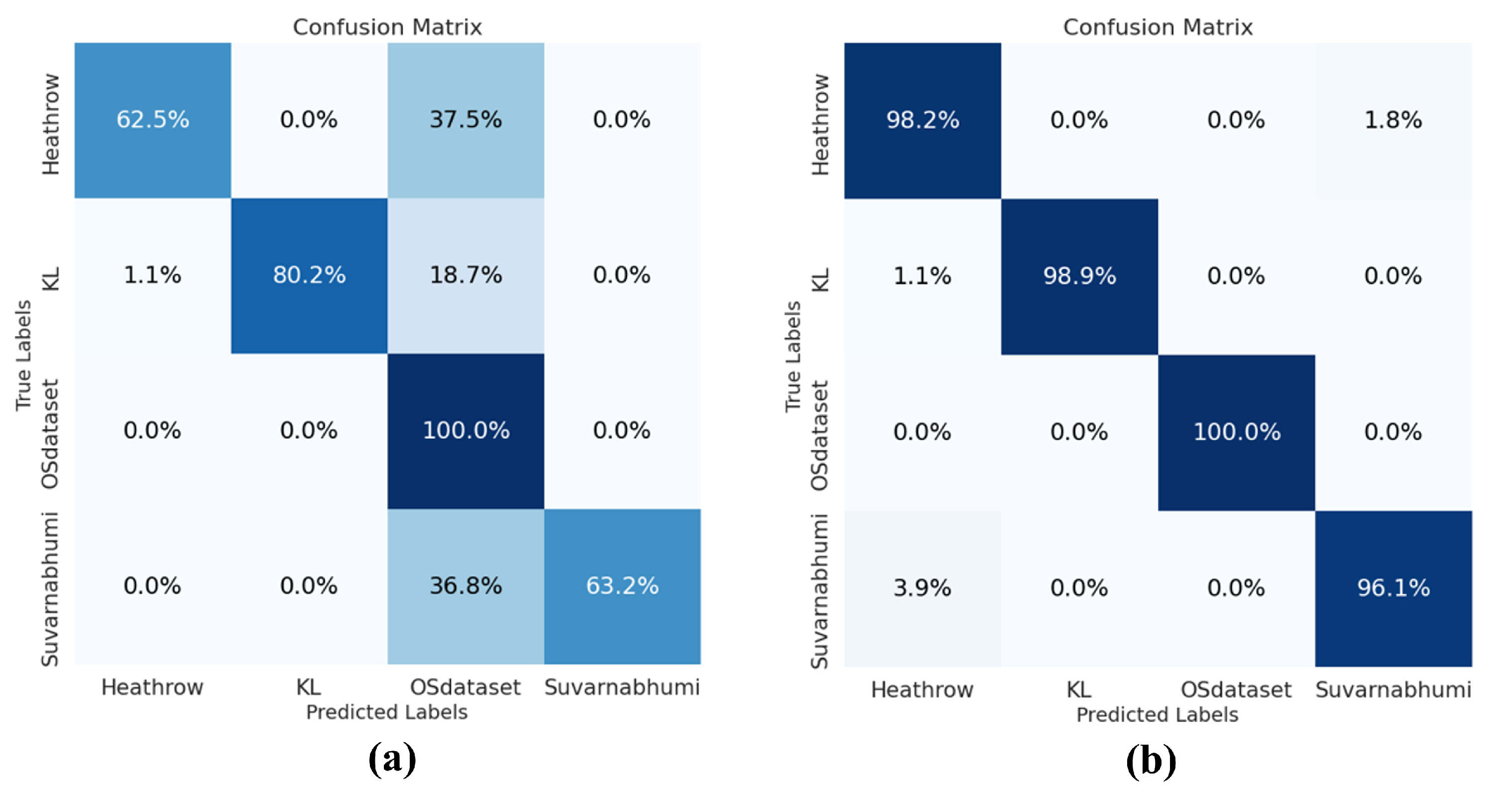

| Class | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | ||||||||||||

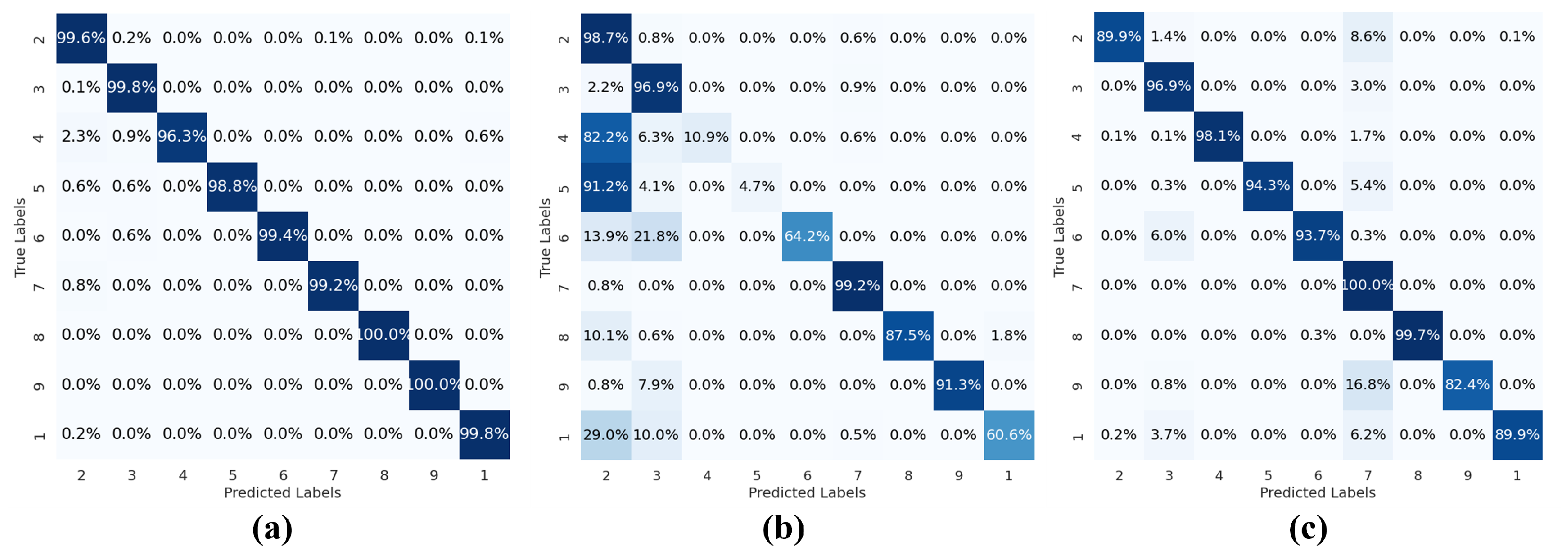

| Exp-1 | OracleSam | - | - | 99.8 | 99.6 | 98.0 | 100 | 100 | 98.4 | 100 | 100 | |

| Exp-2A | OracleSam | - | 99.8 | 99.6 | 99.8 | 96.3 | 98.8 | 99.4 | 99.2 | 100 | 100 | |

| Baseline | - | 60.6 | 98.7 | 96.9 | 10.9 | 4.7 | 64.2 | 99.2 | 87.5 | 91.3 | ||

| CGRID-Key | - | 89.9 | 89.9 | 96.9 | 98.1 | 94.3 | 93.7 | 100 | 99.7 | 82.4 | ||

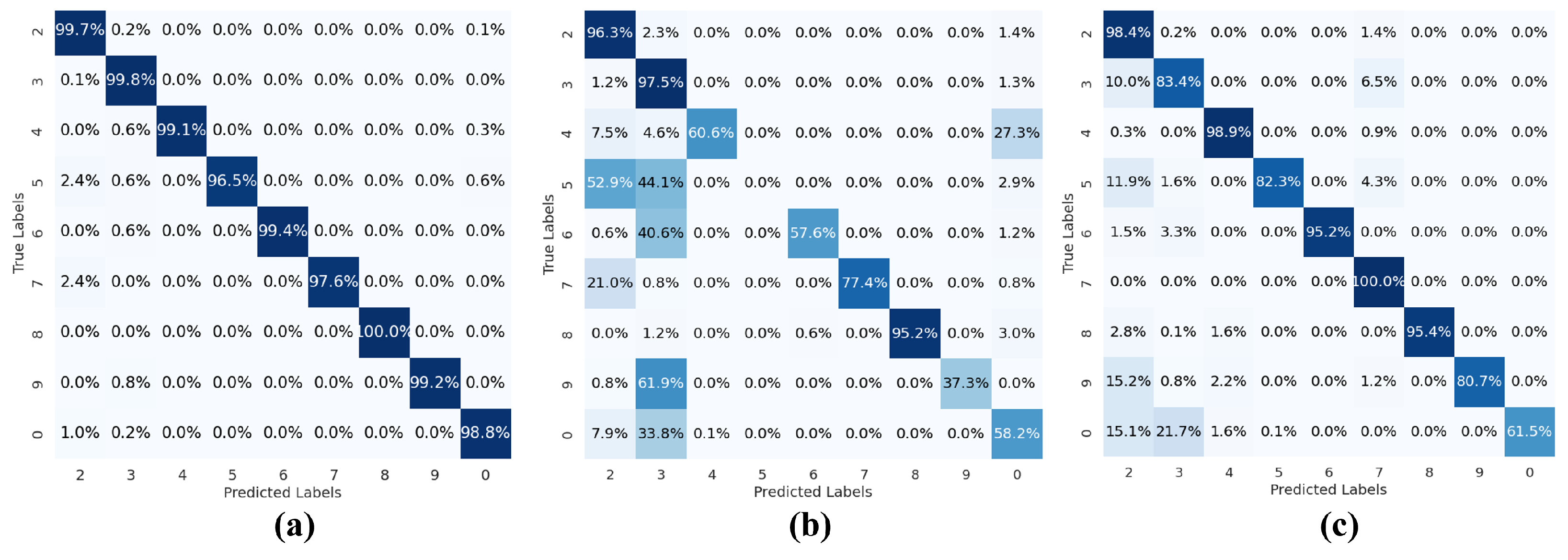

| Exp-2B | OracleSam | 98.8 | - | 99.7 | 99.8 | 99.1 | 96.5 | 99.4 | 97.6 | 100 | 99.2 | |

| Baseline | 58.2 | - | 96.3 | 97.5 | 60.6 | 0 | 57.6 | 77.4 | 95.2 | 37.3 | ||

| CGRID-Key | 61.5 | - | 98.4 | 83.4 | 98.9 | 82.3 | 95.2 | 100 | 95.4 | 80.7 | ||

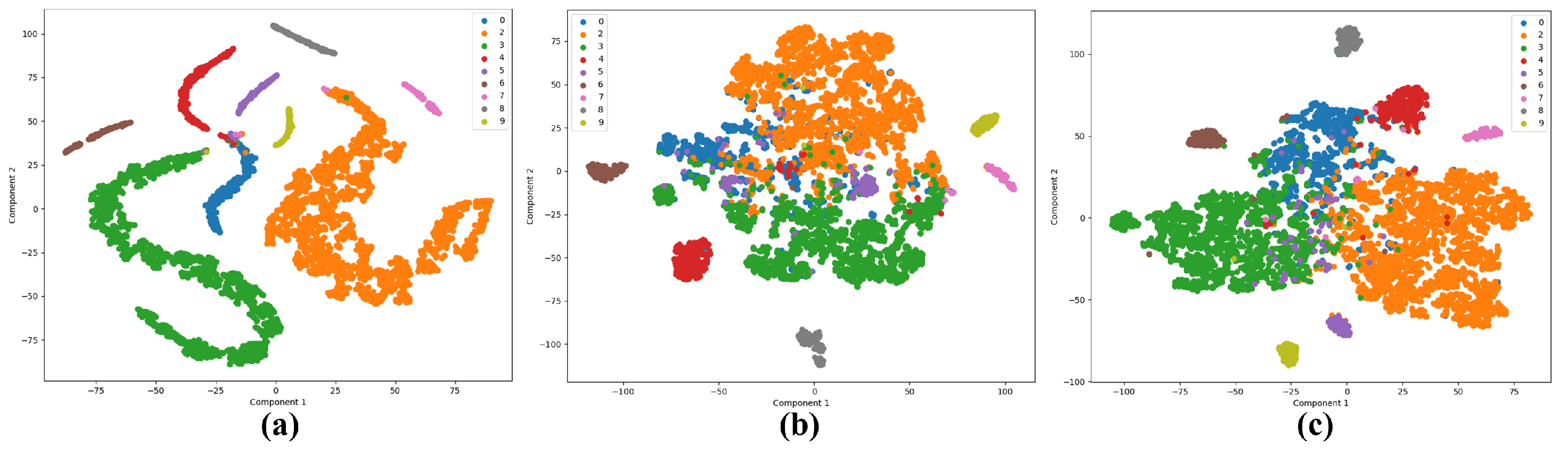

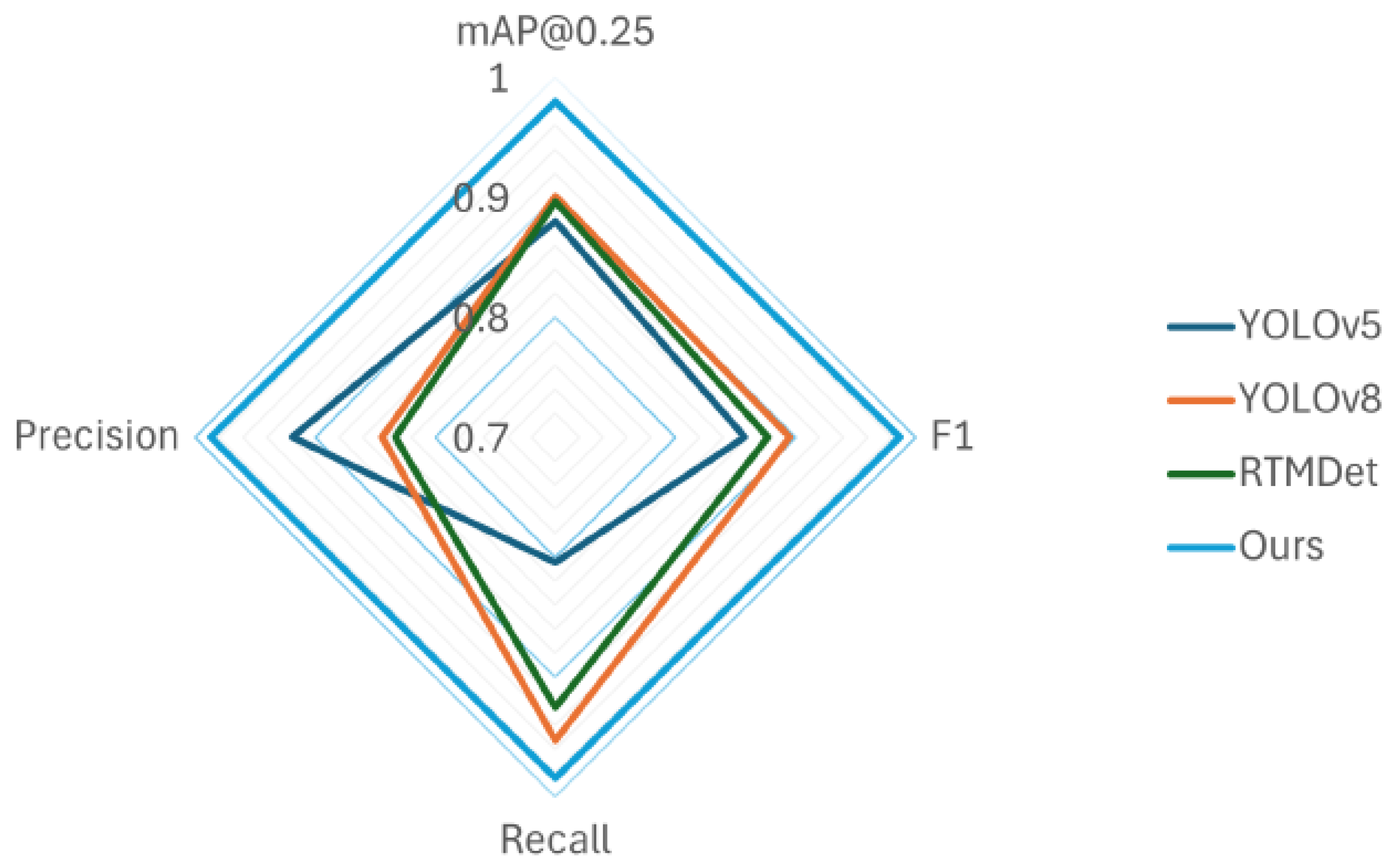

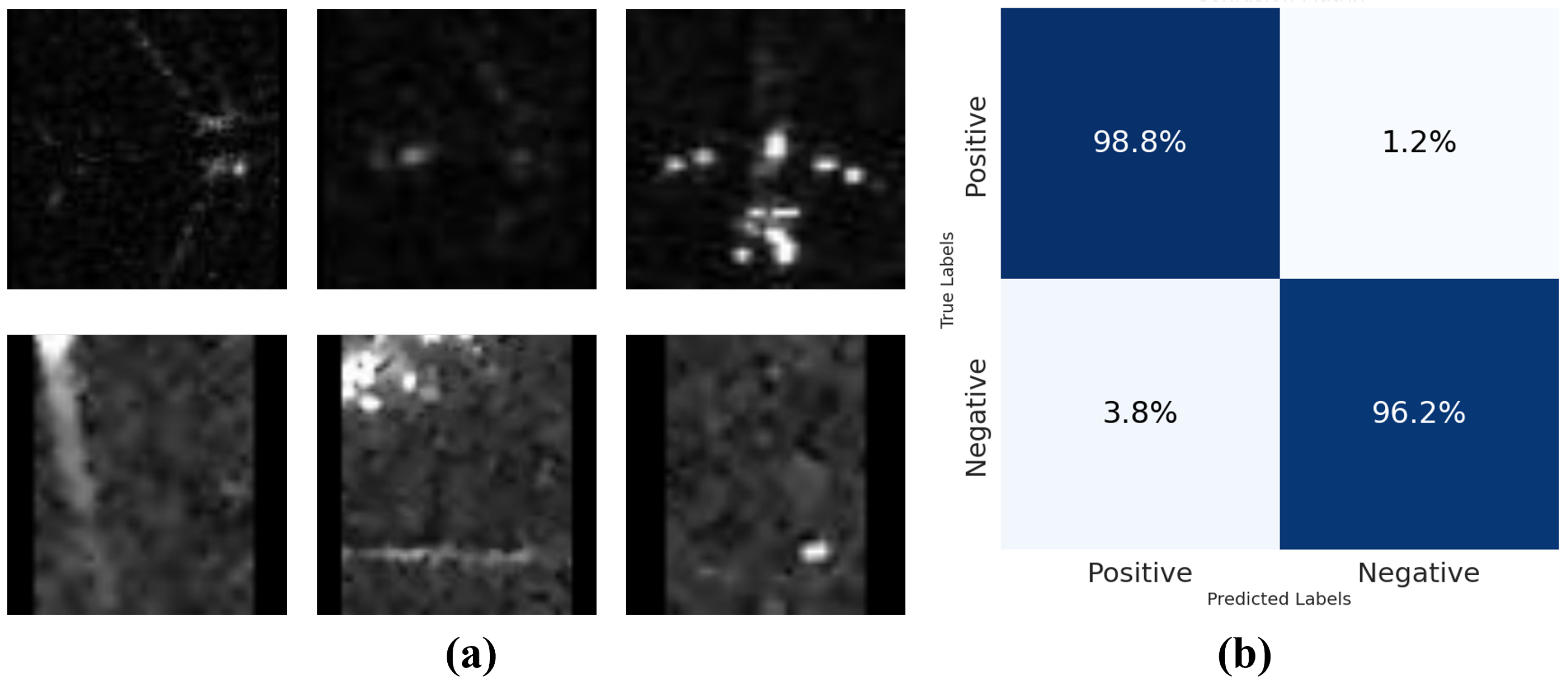

3.2. Experiment Results on the Self-Constructed EO-SAR Aircraft Dataset

4. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| | Average | Score Ranking | Weighted Average |
|---|---|---|---|
| Lux-original + SAR | 0.9574 | 0.9565 | 0.9602 |
| Lux-50 + SAR | 0.9091 | 0.9026 | 0.9318 |
| Lux-40 + SAR | 0.8977 | 0.8850 | 0.9290 |
| Lux-30 + SAR | 0.8778 | 0.8654 | 0.9290 |
| Lux-20 + SAR | 0.5881 | 0.5824 | 0.9290 |

| | Lux-Original | Lux-50 | Lux-40 | Lux-30 | Lux-20 |
|---|---|---|---|---|---|
| EO weight | 0.485 | 0.42 | 0.3 | 0.15 | 0.15 |
| SAR weight | 0.515 | 0.58 | 0.7 | 0.85 | 0.85 |

| | Average | Score Ranking | Weighted Average |
|---|---|---|---|
| Lux-original + SAR | 1.0000 | 1.0000 | 1.0000 |
| Lux-120 + SAR | 0.9758 | 0.9728 | 1.0000 |
| Lux-100 + SAR | 0.9154 | 0.9033 | 1.0000 |
| Lux-80 + SAR | 0.8097 | 0.8006 | 0.9849 |
| Lux-60 + SAR | 0.7976 | 0.7946 | 0.9849 |

| | Lux-Original | Lux-120 | Lux-100 | Lux-80 | Lux-60 |
|---|---|---|---|---|---|
| EO weight | 0.5 | 0.45 | 0.4 | 0.3 | 0.2 |
| SAR weight | 0.5 | 0.55 | 0.6 | 0.7 | 0.8 |
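The EO and SAR weights tabulated above suggest a decision-level weighted average of the two sensors' class scores. The following is a minimal sketch of such a fusion, assuming each branch outputs a per-class probability vector. The function names and example probabilities are hypothetical, and the exact fusion rule used in the experiments is not given in this excerpt.

```python
def fuse_scores(eo_probs, sar_probs, eo_weight, sar_weight):
    """Weighted average of per-class scores from the EO and SAR branches."""
    if len(eo_probs) != len(sar_probs):
        raise ValueError("EO and SAR score vectors must have the same length")
    return [eo_weight * e + sar_weight * s for e, s in zip(eo_probs, sar_probs)]


def predict(fused):
    """Return the index of the class with the highest fused score."""
    return max(range(len(fused)), key=fused.__getitem__)


# Hypothetical scores: the EO branch favors class 0, the SAR branch class 1.
eo = [0.9, 0.1]
sar = [0.3, 0.7]

equal = fuse_scores(eo, sar, 0.5, 0.5)        # equal weighting
sar_heavy = fuse_scores(eo, sar, 0.15, 0.85)  # SAR-dominated weighting
```

With equal weights the EO branch decides the outcome (class 0), whereas the SAR-dominated weighting follows the SAR branch (class 1). This matches the pattern in the weight tables, where the EO weight shrinks as illumination drops.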


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Geng, Z.; Zhang, S.; Zhang, Y.; Xu, C.; Wu, L.; Zhu, D. Parking Pattern Guided Vehicle and Aircraft Detection in Aligned SAR-EO Aerial View Images. Remote Sens. 2025, 17, 2808. https://doi.org/10.3390/rs17162808
