Adaptive Clustering-Guided Multi-Scale Integration for Traffic Density Estimation in Remote Sensing Images

Abstract

1. Introduction

- (1)

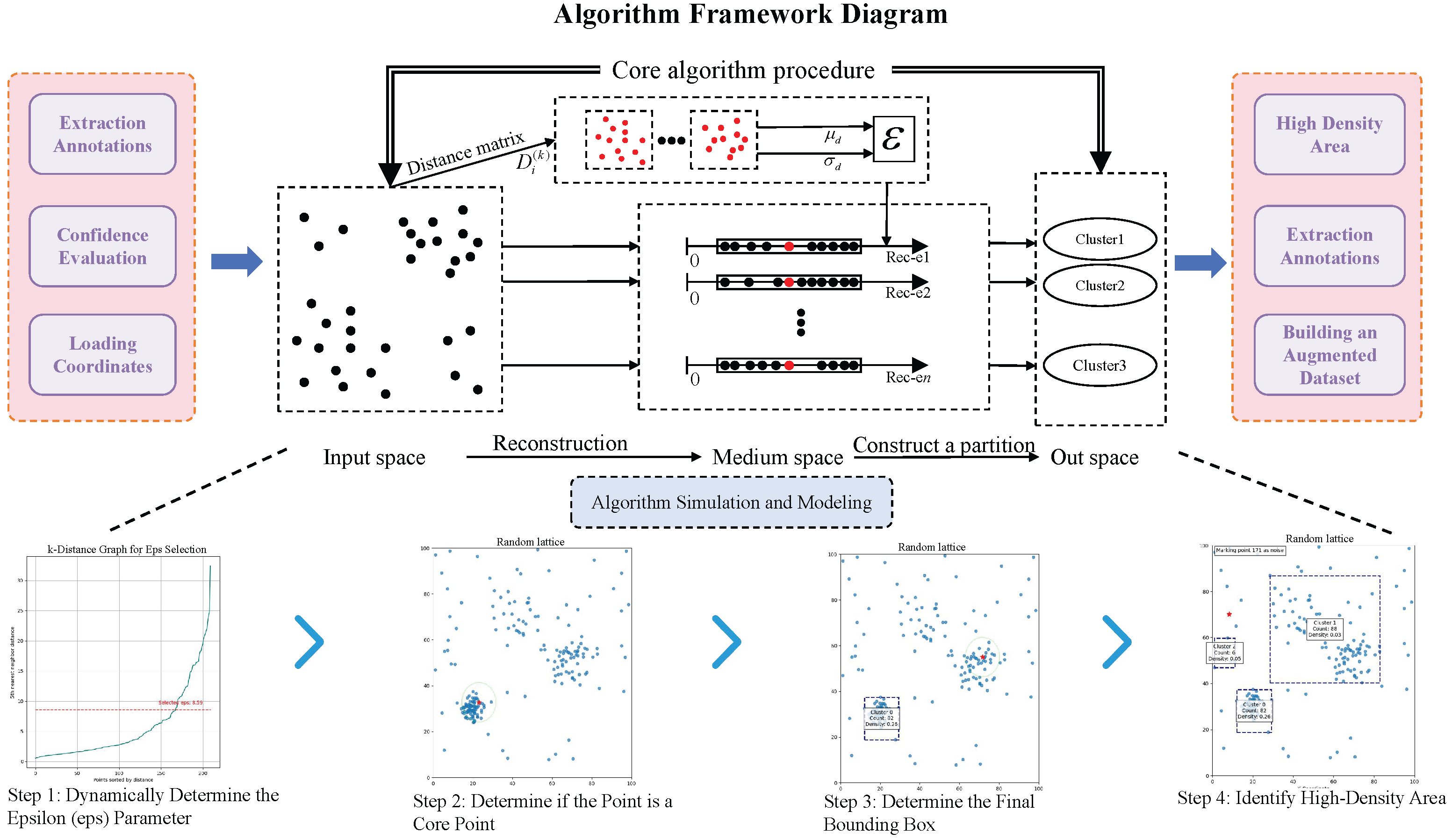

- An adaptive density-based clustering and pruning algorithm constrained by dual spatial factors is proposed. By dynamically adjusting the neighborhood radius and aligning the density clustering parameter space with the pruning region, the proposed method enables adaptive enhancement processing of remote sensing imagery. It overcomes the dependence on deep neural network training found in conventional approaches, leading to reduced computational overhead and time consumption.

- (2) A dynamic traffic density zoning technique based on density analysis and pixel-level gradient quantization is proposed. By combining precise target localization with a dual-optimization mechanism, it yields a traffic state evaluation model with visual interpretability. This model improves both the accuracy of dense object detection and the interpretability of the decision-making process, providing a reliable basis for regional congestion assessment.

- (3) A collaborative optimization strategy that combines multi-stage training with multi-scale inference is proposed. It establishes a hierarchical detection framework that links the raw input image with region-of-interest refinement. Through a dynamic fine-tuning strategy that suppresses background interference and a multi-level detection mechanism, the method achieves accurate localization of traffic-related targets.
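Contribution (3) links detection on the raw input with region-of-interest refinement, and the experiments toggle a "Whether to Merge" option. Assuming that option refers to merging detections from the original image with detections from the cropped regions, one common way to realize such a two-level scheme is sketched below: map each crop-level box back to full-image coordinates, pool it with the full-image boxes, and fuse the pool with class-wise greedy non-maximum suppression (NMS). This is not the paper's exact fusion rule, which is not given in this text; the tuple layouts, the per-crop resize factor `s`, the function names, and the 0.5 IoU default are all illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Det = Tuple[Box, float, str]             # (box, confidence score, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Det], iou_thr: float) -> List[Det]:
    """Greedy NMS: keep boxes in descending score order unless they overlap a kept box."""
    kept: List[Det] = []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) < iou_thr for k in kept):
            kept.append(det)
    return kept


def merge_detections(global_dets: List[Det],
                     crop_dets: Sequence[Tuple[Tuple[float, float, float], List[Det]]],
                     iou_thr: float = 0.5) -> List[Det]:
    """Fuse full-image detections with crop-level detections (hypothetical helper).

    Each crop entry is ((ox, oy, s), dets): (ox, oy) is the crop's top-left corner in
    the full image and s is the factor by which the crop was resized before detection,
    so a crop coordinate maps back as x_full = x_crop / s + ox.
    """
    pooled = list(global_dets)
    for (ox, oy, s), dets in crop_dets:
        for (x1, y1, x2, y2), score, label in dets:
            pooled.append(((x1 / s + ox, y1 / s + oy, x2 / s + ox, y2 / s + oy), score, label))
    merged: List[Det] = []
    for label in sorted({d[2] for d in pooled}):  # class-wise suppression
        merged.extend(nms([d for d in pooled if d[2] == label], iou_thr))
    return merged
```

When a vehicle is seen both in the full image and in an upscaled crop, the two boxes land on the same full-image location and NMS keeps only the higher-scoring one, while objects found only in the crop are added to the output.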

2. Related Works

2.1. Target Detection

2.2. Density Map Estimation

2.3. Density Zone Grading

3. Methodology

3.1. Overall Framework

3.2. Adaptive Pruning of Density-Based Clustering

- Analysis of target distribution characteristics and the fundamental principles of DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

- If q is directly density-reachable from a core point P (that is, q lies within the neighborhood radius of P), then q belongs to the same cluster as P.

- A point q is density-reachable from P if there exists a chain of points $p_1, \dots, p_n$ with $p_1 = P$ and $p_n = q$, in which each $p_{i+1}$ is directly density-reachable from $p_i$ and each $p_i$ with $i < n$ is a core point.

- A point q is density-connected to P if there exists a core point o such that both P and q are density-reachable from o.

- Dynamic adjustment of the clustering parameters.

- Generating the optimal detection region.
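The DBSCAN definitions above (core point, direct density-reachability, density-reachability) and the idea of turning clusters into detection regions can be illustrated with the compact sketch below, which clusters detection-box centers and converts each cluster into a padded crop rectangle. It is not the authors' implementation: the k-distance rule in `adaptive_eps` is a classic radius-selection heuristic standing in for the paper's dual-spatial-factor adjustment, and the names `adaptive_eps`, `dbscan`, `cluster_regions`, as well as the `scale` and `margin` parameters, are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def adaptive_eps(points: Sequence[Point], k: int, scale: float = 1.0) -> float:
    """Hypothetical radius rule: median k-th-nearest-neighbor distance times `scale`."""
    kth = []
    for i, (px, py) in enumerate(points):
        d = sorted(math.hypot(px - qx, py - qy)
                   for j, (qx, qy) in enumerate(points) if j != i)
        kth.append(d[min(k, len(d)) - 1])
    kth.sort()
    return scale * kth[len(kth) // 2]


def region_query(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of point i (including i itself)."""
    px, py = points[i]
    return [j for j, (qx, qy) in enumerate(points) if math.hypot(px - qx, py - qy) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Plain DBSCAN: returns a cluster id >= 0 for each point, or -1 for noise."""
    NOISE = -1
    labels: List[Optional[int]] = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:  # not a core point (may later become a border point)
            labels[i] = NOISE
            continue
        labels[i] = cluster  # i is a core point: it starts a new cluster
        seeds = list(neighbors)
        while seeds:
            j = seeds.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: density-reachable but not core
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:  # j is core: extend the reachability chain
                seeds.extend(j_neighbors)
        cluster += 1
    return labels


def cluster_regions(boxes: Sequence[Box], labels: Sequence[int], margin: int,
                    img_w: int, img_h: int) -> Dict[int, Box]:
    """Hypothetical crop generation: padded, image-clipped union box of each cluster."""
    extent: Dict[int, Box] = {}
    for (x1, y1, x2, y2), lab in zip(boxes, labels):
        if lab < 0:
            continue  # noise detections do not spawn a crop
        if lab in extent:
            a1, b1, a2, b2 = extent[lab]
            extent[lab] = (min(a1, x1), min(b1, y1), max(a2, x2), max(b2, y2))
        else:
            extent[lab] = (x1, y1, x2, y2)
    return {lab: (max(0, x1 - margin), max(0, y1 - margin),
                  min(img_w, x2 + margin), min(img_h, y2 + margin))
            for lab, (x1, y1, x2, y2) in extent.items()}
```

In use, one would compute box centers from a first detection pass, pick the radius with `adaptive_eps(centers, k=2, scale=1.1)`, run `dbscan`, and pass the labels to `cluster_regions` to obtain candidate crops; isolated detections are labeled as noise and produce no crop.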

3.3. Joint Optimization of Multi-Stage Training and Multi-Scale Inference

3.4. Maximum Density Region Search and Congestion Grading Methodology

- is the area of the k-th detection box (detected target area).

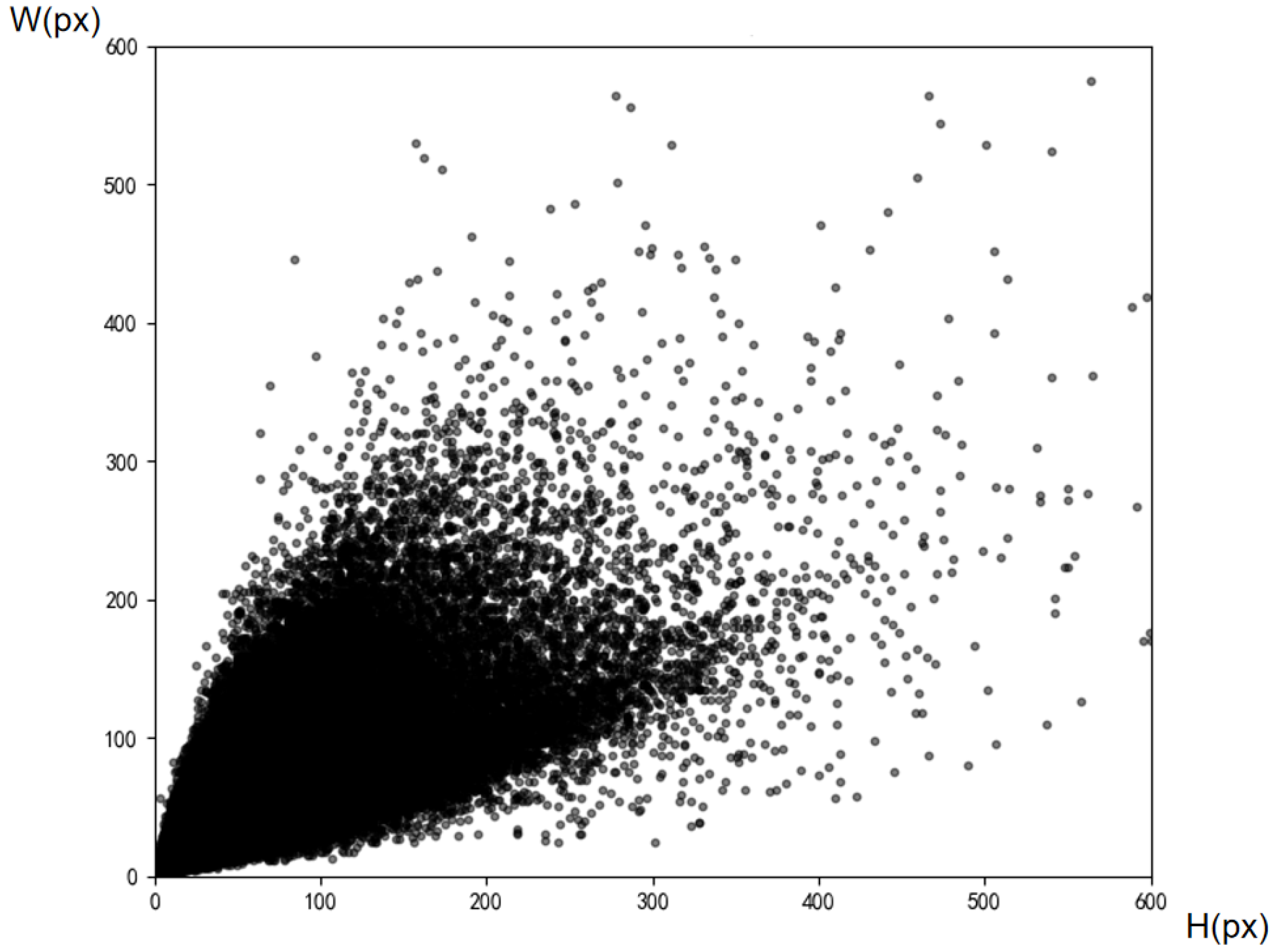

- W and H denote the width and height, respectively, of the bounding box of the density region identified by the search.

- Numerator: the total number of pixels in the union of the detection masks within the candidate window. Overlapping regions are merged through pixel-wise Boolean operations so that no pixel is counted twice; the numerator therefore represents the actual target coverage.

- Denominator: the total number of pixels in the candidate window (W × H), corresponding to the maximum theoretical coverage area.
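The coverage ratio defined above (union of detection masks over W × H) can be computed from a Boolean mask, and the same mask supports a fast search for the densest window. The sketch below assumes axis-aligned integer pixel boxes and assumes the maximum density region is found by an exhaustive search over all W × H windows; the paper's actual search strategy is not given in this text. A summed-area table (integral image) makes each window's covered-pixel count an O(1) lookup. For contrast, `summed_box_ratio` divides the plain sum of box areas by W × H, which double-counts overlaps. All function names are hypothetical.

```python
import numpy as np


def union_mask(boxes, img_h, img_w):
    """Boolean coverage mask; boxes are integer (x1, y1, x2, y2) with exclusive x2, y2."""
    mask = np.zeros((img_h, img_w), dtype=bool)
    for x1, y1, x2, y2 in boxes:
        mask[y1:y2, x1:x2] = True  # pixel-wise OR: overlapping pixels are counted once
    return mask


def summed_box_ratio(boxes, w, h):
    """Sum of individual box areas over W*H; double-counts overlaps and can exceed 1."""
    return sum((x2 - x1) * (y2 - y1) for x1, y1, x2, y2 in boxes) / (w * h)


def occupancy(mask, x, y, w, h):
    """Covered pixels of the union mask in the w*h window at (x, y), divided by w*h."""
    return mask[y:y + h, x:x + w].sum() / (w * h)


def max_density_window(mask, w, h):
    """Exhaustive search for the w*h window with the highest occupancy (assumed strategy)."""
    ii = np.zeros((mask.shape[0] + 1, mask.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = mask.cumsum(axis=0).cumsum(axis=1)  # summed-area table
    # covered[y, x] = number of covered pixels in the window whose top-left corner is (x, y)
    covered = ii[h:, w:] - ii[:-h, w:] - ii[h:, :-w] + ii[:-h, :-w]
    y, x = np.unravel_index(np.argmax(covered), covered.shape)
    return (int(x), int(y)), float(covered[y, x]) / (w * h)
```

For two 4 × 4 boxes overlapping in a 2 × 2 patch inside a 10 × 10 window, the union covers 28 pixels (ratio 0.28), whereas summing box areas gives 32 pixels (ratio 0.32); the Boolean union is what prevents that inflation.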

4. Experiments and Results

4.1. Dataset Introduction

4.2. Experimental Settings and Evaluation Indicators

4.3. Experimental Results

4.4. Ablation Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Shaygan, M.; Meese, C.; Li, W.; Zhao, X.G.; Nejad, M. Traffic prediction using artificial intelligence: Review of recent advances and emerging opportunities. Transp. Res. Part C Emerg. Technol. 2022, 145, 103921.

- Zhang, K.; Chu, Z.; Xing, J.; Zhang, H.; Cheng, Q. Urban Traffic Flow Congestion Prediction Based on a Data-Driven Model. Mathematics 2023, 11, 4075.

- Tang, F.; Fu, X.; Cai, M.; Lu, Y.; Zeng, Y.; Zhong, S.; Huang, Y.; Lu, C. Multilevel Traffic State Detection in Traffic Surveillance System Using a Deep Residual Squeeze-and-Excitation Network and an Improved Triplet Loss. IEEE Access 2020, 8, 114460–114474.

- Macioszek, E.; Kurek, A. Extracting Road Traffic Volume in the City before and during COVID-19 through Video Remote Sensing. Remote Sens. 2021, 13, 2329.

- Bisio, I.; Garibotto, C.; Haleem, H.; Lavagetto, F.; Sciarrone, A. A Systematic Review of Drone Based Road Traffic Monitoring System. IEEE Access 2022, 10, 101537–101555.

- Ünlüleblebici, S.; Taşyürek, M.; Öztürk, C. Traffic Density Estimation using Machine Learning Methods. Int. J. Artif. Intell. Data Sci. 2021, 1, 136–143.

- Chakraborty, D.; Dutta, D.; Jha, C.S. Remote Sensing and Deep Learning for Traffic Density Assessment. In Geospatial Technologies for Resources Planning and Management; Jha, C.S., Pandey, A., Chowdary, V., Singh, V., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 611–630.

- Nam, D.; Lavanya, R.; Jayakrishnan, R.; Yang, I.; Jeon, W.H. A Deep Learning Approach for Estimating Traffic Density Using Data Obtained from Connected and Autonomous Probes. Sensors 2020, 20, 4824.

- Li, Y.; Ma, L.; Yang, S.; Fu, Q.; Sun, H.; Wang, C. Infrared Image-Enhancement Algorithm for Weak Targets in Complex Backgrounds. Sensors 2023, 23, 6215.

- Singh, M.P.; Gayathri, V.; Chaudhuri, D. A Simple Data Preprocessing and Postprocessing Techniques for SVM Classifier of Remote Sensing Multispectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7248–7262.

- Guo, Y.; Wu, C.; Du, B.; Zhang, L. Density Map-based vehicle counting in remote sensing images with limited resolution. ISPRS J. Photogramm. Remote Sens. 2022, 189, 201–217.

- Unel, F.O.; Ozkalayci, B.O.; Cigla, C. The Power of Tiling for Small Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–20 June 2019; pp. 582–591.

- Zhang, X.; Feng, Y.; Zhang, S.; Wang, N.; Mei, S. Finding Nonrigid Tiny Person With Densely Cropped and Local Attention Object Detector Networks in Low-Altitude Aerial Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4371–4385.

- Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density Map Guided Object Detection in Aerial Images. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 737–746.

- Mei, S.; Chen, X.; Zhang, Y.; Li, J.; Plaza, A. Accelerating Convolutional Neural Network-Based Hyperspectral Image Classification by Step Activation Quantization. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Zhang, X.; Izquierdo, E.; Chandramouli, K. Dense and Small Object Detection in UAV Vision Based on Cascade Network. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 118–126.

- Ma, X.; Zhang, X.; Pun, M.O. RS3Mamba: Visual State Space Model for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005; pp. 886–893.

- Mu, K.; Hui, F.; Zhao, X. Multiple Vehicle Detection and Tracking in Highway Traffic Surveillance Video Based on SIFT Feature Matching. J. Inf. Process. Syst. 2016, 12, 183–195.

- Greeshma, K.; Gripsy, J.V. Image classification using HOG and LBP feature descriptors with SVM and CNN. Int. J. Eng. Res. Technol. 2020, 8, 1–4.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems, Proceedings of the NeurIPS 2012, Lake Tahoe, NV, USA, 3–8 December 2012; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2012; Volume 25.

- Mei, S.; Jiang, R.; Ma, M.; Song, C. Rotation-Invariant Feature Learning via Convolutional Neural Network With Cyclic Polar Coordinates Convolutional Layer. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5600713.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.U.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems, Proceedings of the NIPS 2017, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Yao, Z.; Ai, J.; Li, B.; Zhang, C. Efficient DETR: Improving End-to-End Object Detector with Dense Prior. arXiv 2021, arXiv:2104.01318.

- Donahue, J.; Hendricks, L.A.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Darrell, T.; Saenko, K. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; Volume 97, pp. 6105–6114.

- Zhang, X.; Feng, Y.; Zhang, S.; Wang, N.; Lu, G.; Mei, S. Robust Aerial Person Detection With Lightweight Distillation Network for Edge Deployment. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Li, J.; Guo, S.; Yi, S.; He, R.; Jia, Y. DMCTDet: A density map-guided composite transformer network for object detection of UAV images. Signal Process. Image Commun. 2025, 136, 117284.

- Shorewala, S.; Ashfaque, A.; Sidharth, R.; Verma, U. Weed Density and Distribution Estimation for Precision Agriculture Using Semi-Supervised Learning. IEEE Access 2021, 9, 27971–27986.

- Wu, Q.; Zhou, Y.; Wu, X.; Liang, G.; Ou, Y.; Sun, T. Real-time running detection system for UAV imagery based on optical flow and deep convolutional networks. IET Intell. Transp. Syst. 2020, 14, 278–287.

- Li, Y.; Zhang, X.; Chen, D. CSRNet: Dilated Convolutional Neural Networks for Understanding the Highly Congested Scenes. arXiv 2018, arXiv:1802.10062.

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-Image Crowd Counting via Multi-Column Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

- Ding, X.; He, F.; Lin, Z.; Wang, Y.; Guo, H.; Huang, Y. Crowd Density Estimation Using Fusion of Multi-Layer Features. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4776–4787.

- Yang, L.; Guo, Y.; Sang, J.; Wu, W.; Wu, Z.; Liu, Q.; Xia, X. A crowd counting method via density map and counting residual estimation. Multimed. Tools Appl. 2022, 81, 43503–43512.

- Wang, Y.; Zou, Y.X.; Chen, J.; Huang, X.; Cai, C. Example-based visual object counting with a sparsity constraint. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 June 2016; pp. 1–6.

- Kumar, K.N.; Roy, D.; Suman, T.A.; Vishnu, C.; Mohan, C.K. TSANet: Forecasting traffic congestion patterns from aerial videos using graphs and transformers. Pattern Recognit. 2024, 155, 110721.

- Jiang, S.; Feng, Y.; Zhang, W.; Liao, X.; Dai, X.; Onasanya, B.O. A New Multi-Branch Convolutional Neural Network and Feature Map Extraction Method for Traffic Congestion Detection. Sensors 2024, 24, 4272.

- Li, L.; Coskun, S.; Wang, J.; Fan, Y.; Zhang, F.; Langari, R. Velocity Prediction Based on Vehicle Lateral Risk Assessment and Traffic Flow: A Brief Review and Application Examples. Energies 2021, 14, 3431.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, KDD’96, Portland, OR, USA, 2–4 August 1996; AAAI Press: Washington, DC, USA, 1996; pp. 226–231.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- Wang, J.; Guo, W.; Pan, T.; Yu, H.; Duan, L.; Yang, W. Bottle Detection in the Wild Using Low-Altitude Unmanned Aerial Vehicles. In Proceedings of the 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 439–444.

- Waqas Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Shahbaz Khan, F.; Zhu, F.; Shao, L.; Xia, G.S.; Bai, X. iSAID: A Large-scale Dataset for Instance Segmentation in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

| Benchmark Model | Training Dataset | Whether to Merge | AP (%) | AP50 (%) | AP75 (%) | APsmall (%) | APmid (%) | APlarge (%) |
|---|---|---|---|---|---|---|---|---|
| FastRCNN | – | – | 18.6 | 33.6 | 17.9 | 10.1 | 28.8 | 40.3 |
| DINO | – | – | 30.5 | 52.4 | 29.8 | 22.0 | 41.0 | 48.9 |
| RTMDet | – | – | 15.4 | 26.2 | 15.6 | 7.4 | 24.5 | 33.6 |
| RetinaNet | – | – | 10.7 | 17.6 | 10.5 | 5.9 | 18.0 | 23.7 |
| VFNet | – | – | 27.5 | 43.3 | 29.0 | 18.1 | 39.8 | 56.5 |
| DETR | – | – | 19.5 | 34.9 | 18.9 | 11.4 | 28.8 | 45.2 |
| DMNet | – | – | 26.8 | 43.9 | 29.6 | 19.6 | 38.7 | 50.9 |
| YOLOv8 | original | N | 20.9 | 32.1 | 22.7 | 11.1 | 34.3 | 57.5 |
| | original | Y | 21.7 | 33.6 | 23.3 | 11.7 | 35.2 | 54.3 |
| | original+Enhancement | N | 30.0 | 46.2 | 32.4 | 22.4 | 40.7 | 57.2 |
| | original+Enhancement | Y | 31.2 | 47.9 | 33.2 | 23.4 | 42.2 | 55.2 |
| YOLOv11 | original | N | 23.4 | 35.3 | 25.0 | 13.6 | 36.4 | 50.9 |
| | original+Enhancement | Y | 32.6 | 49.7 | 35.1 | 24.3 | 44.4 | 56.9 |

| Benchmark Model | Training Dataset | Whether to Merge | Pedestrian (%) | People (%) | Bicycle (%) | Car (%) | Van (%) | Truck (%) | Tricycle (%) | Awning-Tricycle (%) | Bus (%) | Motor (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FastRCNN | – | – | 18.1 | 10.0 | 5.5 | 48.2 | 23.2 | 22.1 | 10.1 | 5.2 | 27.7 | 15.1 |
| DINO | – | – | 30.1 | 20.6 | 14.6 | 60.7 | 38.2 | 29.5 | 22.0 | 13.1 | 47.4 | 28.9 |
| RTMDet | – | – | 10.6 | 6.9 | 2.1 | 48.8 | 24.5 | 14.7 | 9.6 | 7.2 | 28.8 | 11.7 |
| RetinaNet | – | – | 8.9 | 4.2 | 2.3 | 43.2 | 15.6 | 9.3 | 3.3 | 2.7 | 10.3 | 9.3 |
| VFNet | – | – | 24.6 | 13.6 | 11.8 | 58.0 | 36.2 | 29.6 | 20.6 | 11.0 | 45.9 | 24.1 |
| DETR | – | – | 19.9 | 13.2 | 7.2 | 47.3 | 24.7 | 19.6 | 12.3 | 5.9 | 24.9 | 19.7 |
| DMNet | – | – | 23.3 | 15.5 | 13.8 | 56.8 | 37.5 | 29.7 | 22.4 | 12.4 | 46.9 | 24.7 |
| YOLOv8 | original | N | 17.4 | 11.1 | 5.6 | 51.3 | 26.2 | 22.6 | 12.0 | 8.8 | 36.5 | 16.8 |
| | original | Y | 18.7 | 11.6 | 7.1 | 51.8 | 24.3 | 25.1 | 13.6 | 7.5 | 39.6 | 17.6 |
| | original+Enhancement | N | 29.9 | 19.5 | 12.7 | 60.9 | 36.6 | 31.1 | 19.9 | 12.7 | 49.1 | 27.3 |
| | original+Enhancement | Y | 30.7 | 20.3 | 14.7 | 61.3 | 35.1 | 33.4 | 22.2 | 12.7 | 52.5 | 28.5 |
| YOLOv11 | original | N | 20.1 | 10.6 | 7.4 | 55.2 | 29.3 | 25.5 | 15.5 | 7.1 | 42.1 | 20.8 |
| | original+Enhancement | Y | 31.5 | 20.7 | 15.9 | 61.5 | 39.4 | 35.6 | 24.6 | 13.6 | 52.1 | 30.7 |

| Benchmark Model | Training Dataset | Whether to Merge | AP (%) | AP50 (%) | AP75 (%) | APsmall (%) | APmid (%) | APlarge (%) |
|---|---|---|---|---|---|---|---|---|
| FastRCNN | – | – | 6.2 | 16.8 | 2.7 | 4.5 | 14.6 | 10.2 |
| DINO | – | – | 14.2 | 24.7 | 15.7 | 8.6 | 23.2 | 31.8 |
| RTMDet | – | – | 14.7 | 25.3 | 14.1 | 8.7 | 20.3 | 32.1 |
| RetinaNet | – | – | 15.4 | 26.6 | 14.4 | 9.2 | 21.5 | 31.2 |
| VFNet | – | – | 15.4 | 27.0 | 17.6 | 10.3 | 22.4 | 37.8 |
| DETR | – | – | 13.4 | 24.1 | 13.7 | 8.9 | 19.9 | 32.3 |
| DMNet | – | – | 12.6 | 22.8 | 14.9 | 8.1 | 24.1 | 34.7 |
| YOLOv8 | original | N | 14.0 | 23.7 | 14.9 | 8.2 | 24.7 | 30.2 |
| | original | Y | 15.0 | 26.5 | 15.2 | 8.6 | 26.4 | 30.6 |
| | original+Enhancement | N | 12.5 | 21.5 | 13.5 | 7.4 | 22.9 | 33.9 |
| | original+Enhancement | Y | 16.1 | 27.6 | 17.2 | 10.1 | 26.7 | 34.4 |
| YOLOv11 | original | N | 14.6 | 25.7 | 15.2 | 10.6 | 26.3 | 33.2 |
| | original+Enhancement | Y | 16.2 | 26.5 | 17.7 | 10.7 | 25.6 | 39.7 |

| Benchmark Model | Cropping Method | Training Dataset | Whether to Merge | AP (%) | APsmall (%) | APmid (%) | APlarge (%) | Processing Speed (ms/img) |
|---|---|---|---|---|---|---|---|---|
| YOLOv11 | Image equalization (four pieces) | original+Enhancement | Y | 19.9 | 12.9 | 28.4 | 39.9 | 15.0 |
| | Image equalization (six pieces) | original+Enhancement | Y | 19.4 | 14.9 | 26.3 | 37.3 | 15.0 |
| | MCNN | original+Enhancement | Y | 17.5 | 10.9 | 24.9 | 40.7 | 20.5 |
| | The cropping algorithm proposed in this paper | original | N | 23.4 | 13.6 | 36.4 | 50.9 | 8.0 |
| | | Enhancement | N | 22.1 | 11.3 | 36.7 | 56.3 | 8.0 |
| | | original | Y | 28.5 | 20.8 | 38.5 | 50.6 | 16.2 |
| | | Enhancement | Y | 30.7 | 23.3 | 43.1 | 55.1 | 16.2 |
| | | original+Enhancement | Y | 32.6 | 24.3 | 44.4 | 56.9 | 16.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Meng, Q.; Zhang, X.; Li, X.; Li, S. Adaptive Clustering-Guided Multi-Scale Integration for Traffic Density Estimation in Remote Sensing Images. Remote Sens. 2025, 17, 2796. https://doi.org/10.3390/rs17162796
