Landslide Detection with MSTA-YOLO in Remote Sensing Images

Abstract

1. Introduction

- (1) A novel attention mechanism, receptive field attention (RFA), is proposed. Its design is modeled on the structure of human receptive fields, allowing the model to extract more effective features along both the channel and spatial dimensions.

- (2) The normalized Wasserstein distance (NWD) [39] is introduced into the loss function in place of the traditional intersection over union (IoU) calculation. This reduces the sensitivity that IoU shows to small positional offsets and further improves the performance of the model on small targets.

- (3) The MSTA-YOLO model achieves significant performance improvements on both the Bijie and Luding landslide datasets, outperforming existing state-of-the-art models. Furthermore, its application to the Southwest landslide dataset validates the enhanced transfer learning capability enabled by the proposed modules.
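As a rough illustration of contribution (2), the sketch below contrasts IoU with NWD as defined in [39], where each box `(cx, cy, w, h)` is modeled as a 2D Gaussian and the similarity is `exp(-W2 / C)`. The function names, the example boxes, and the constant `c=12.8` are assumptions chosen for illustration; `C` is dataset-dependent, and the way MSTA-YOLO weights this term inside its full loss is not reproduced here.

```python
import math


def iou(a, b):
    """IoU of two axis-aligned boxes given as (cx, cy, w, h)."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nwd(a, b, c=12.8):
    """Normalized Wasserstein distance between two (cx, cy, w, h) boxes.

    Each box is treated as a Gaussian with mean (cx, cy) and covariance
    diag(w^2 / 4, h^2 / 4). `c` is a placeholder normalizing constant.
    """
    w2 = math.sqrt(
        (a[0] - b[0]) ** 2
        + (a[1] - b[1]) ** 2
        + ((a[2] - b[2]) / 2) ** 2
        + ((a[3] - b[3]) / 2) ** 2
    )
    return math.exp(-w2 / c)


def nwd_loss(a, b, c=12.8):
    """A simple regression loss built from NWD (assumed form: 1 - NWD)."""
    return 1.0 - nwd(a, b, c)


# Hypothetical boxes: the same 3-pixel shift applied to a small and a large target.
small, small_shifted = (10, 10, 6, 6), (13, 10, 6, 6)
large, large_shifted = (100, 100, 60, 60), (103, 100, 60, 60)
small_far = (18, 10, 6, 6)  # no overlap with `small`

for name, gt, pred in [
    ("small, 3 px shift", small, small_shifted),
    ("large, 3 px shift", large, large_shifted),
    ("small, no overlap", small, small_far),
]:
    print(f"{name}: IoU={iou(gt, pred):.3f}  NWD={nwd(gt, pred):.3f}")
```

With these inputs, the 3-pixel shift lowers IoU to about 0.33 for the 6 × 6 box but only to about 0.90 for the 60 × 60 box, whereas NWD is about 0.79 in both cases. For the non-overlapping pair, IoU is 0 while NWD remains positive, so a loss based on it still carries a useful signal.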

2. Data and Study Areas

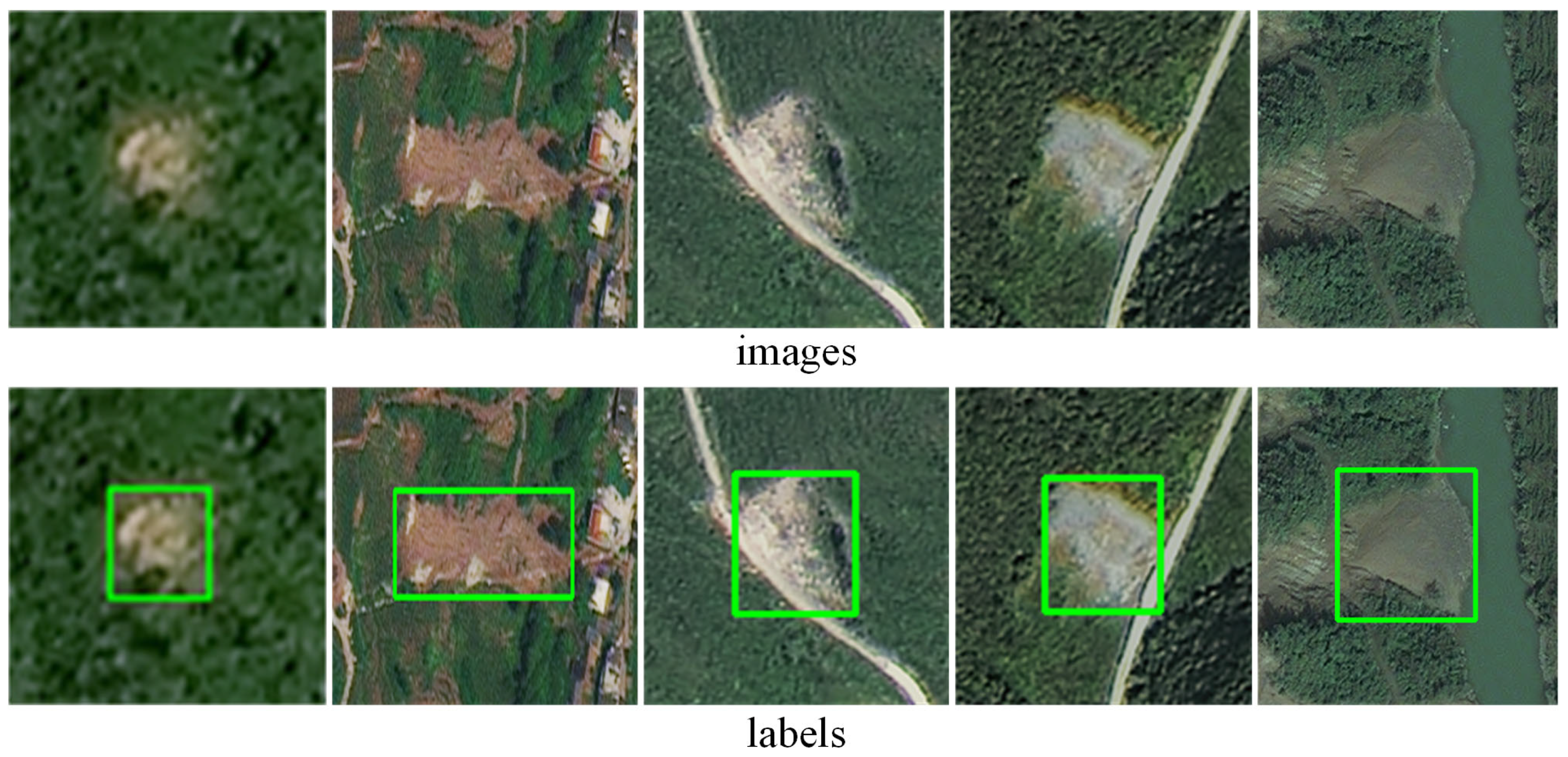

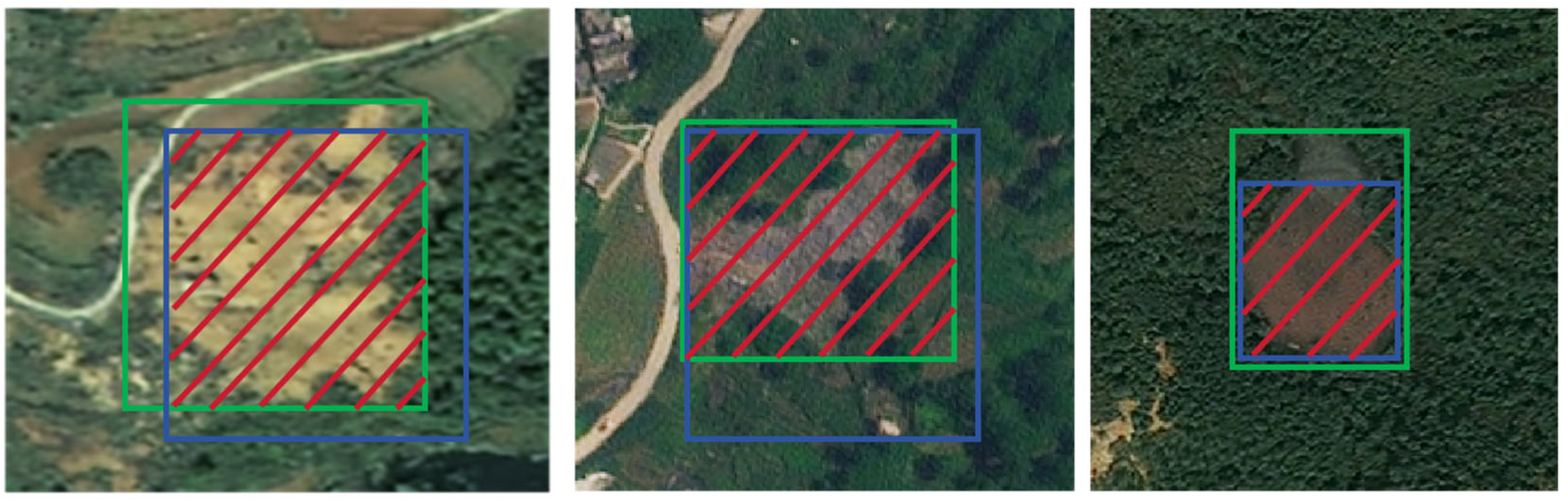

2.1. Bijie Landslide Dataset

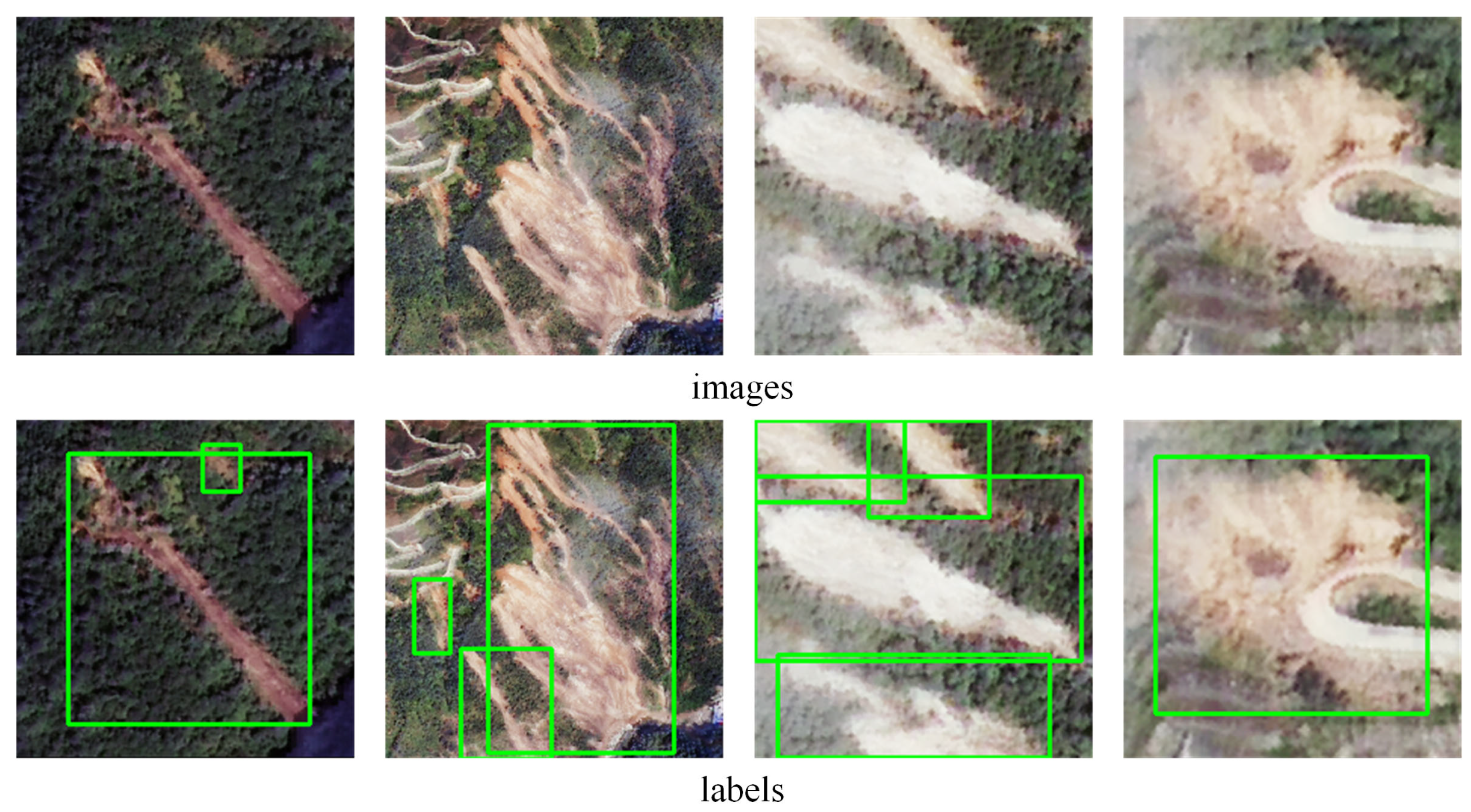

2.2. Luding Landslide Dataset

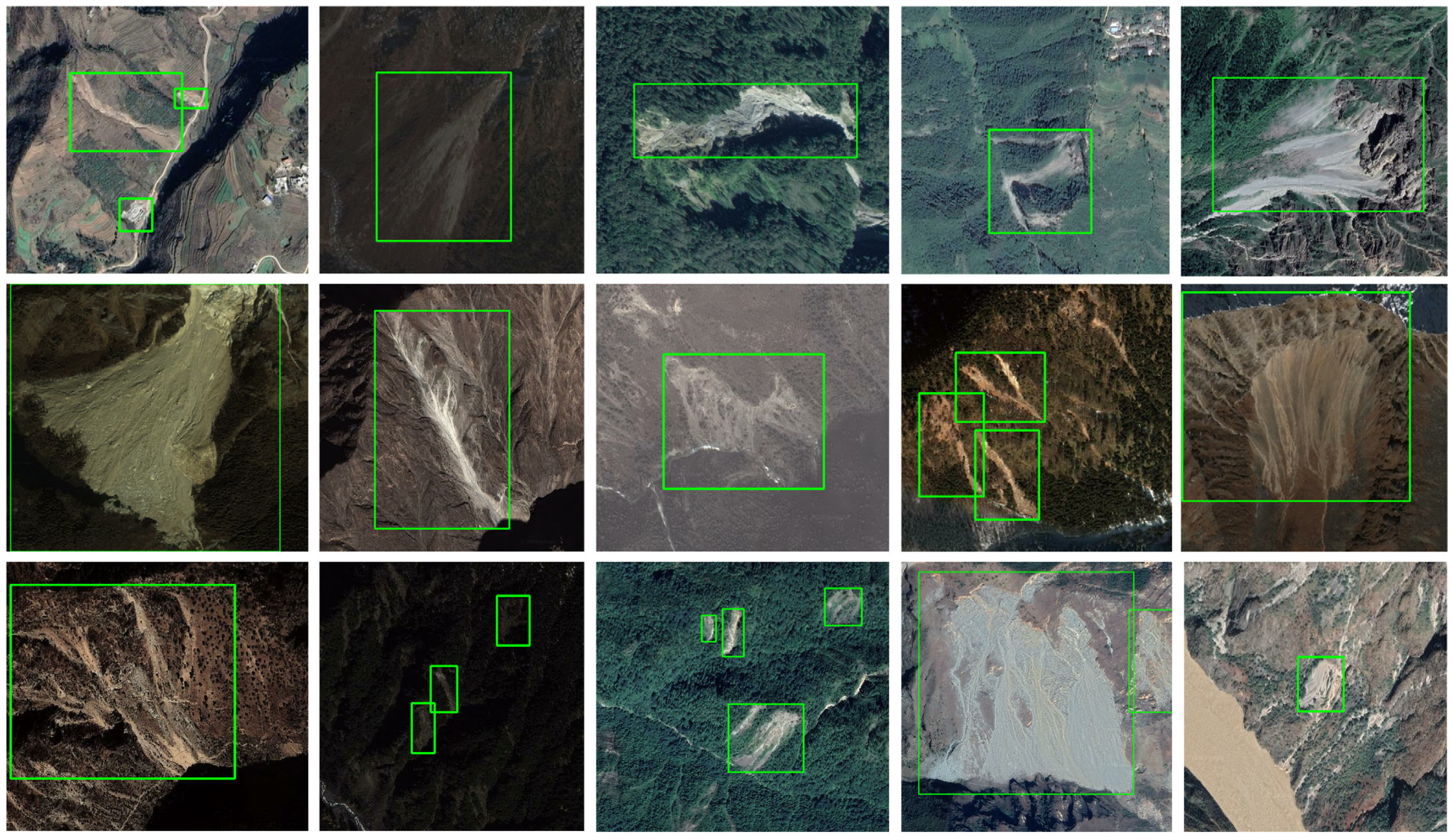

2.3. Southwest Landslide Dataset

3. Methods

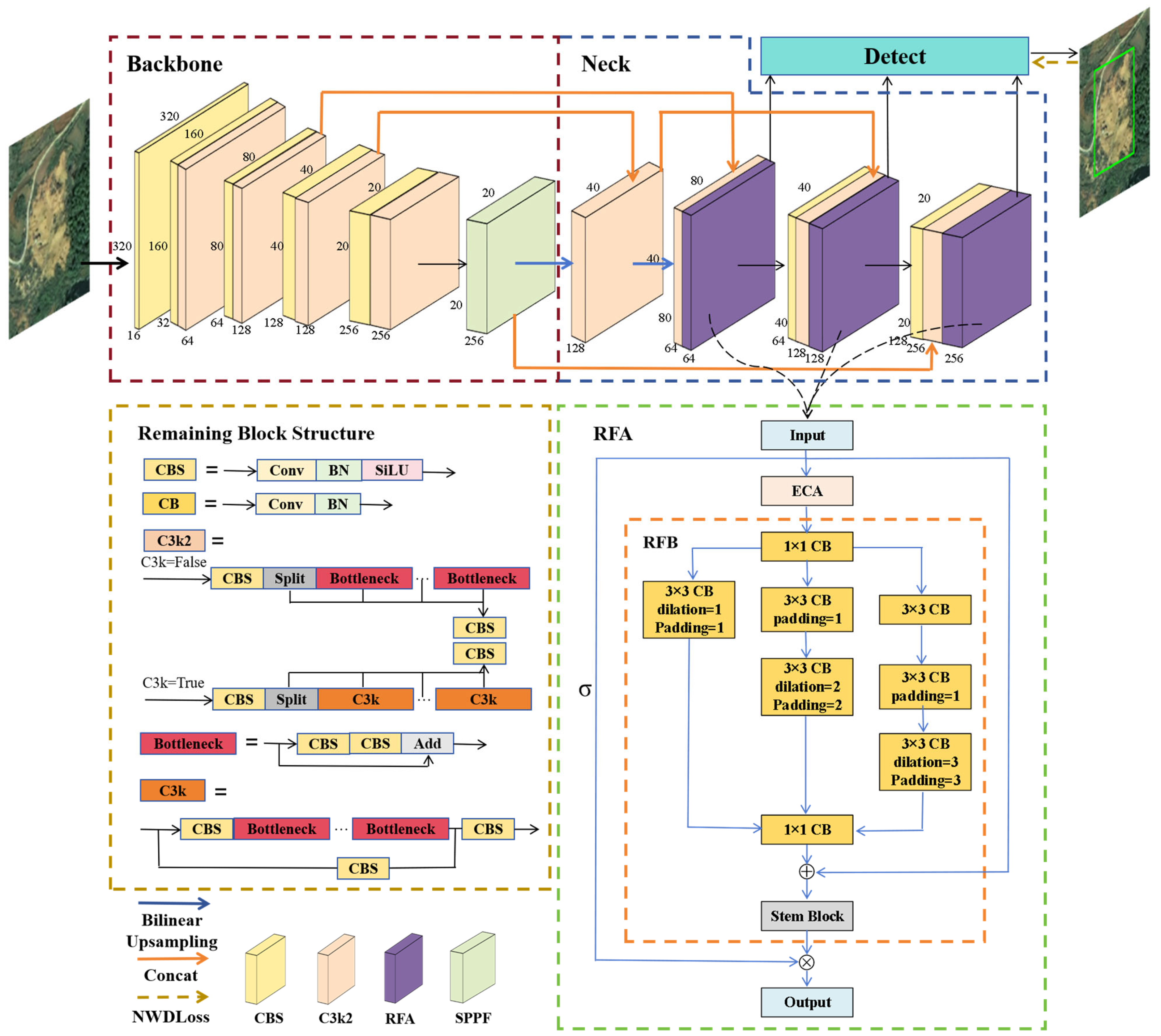

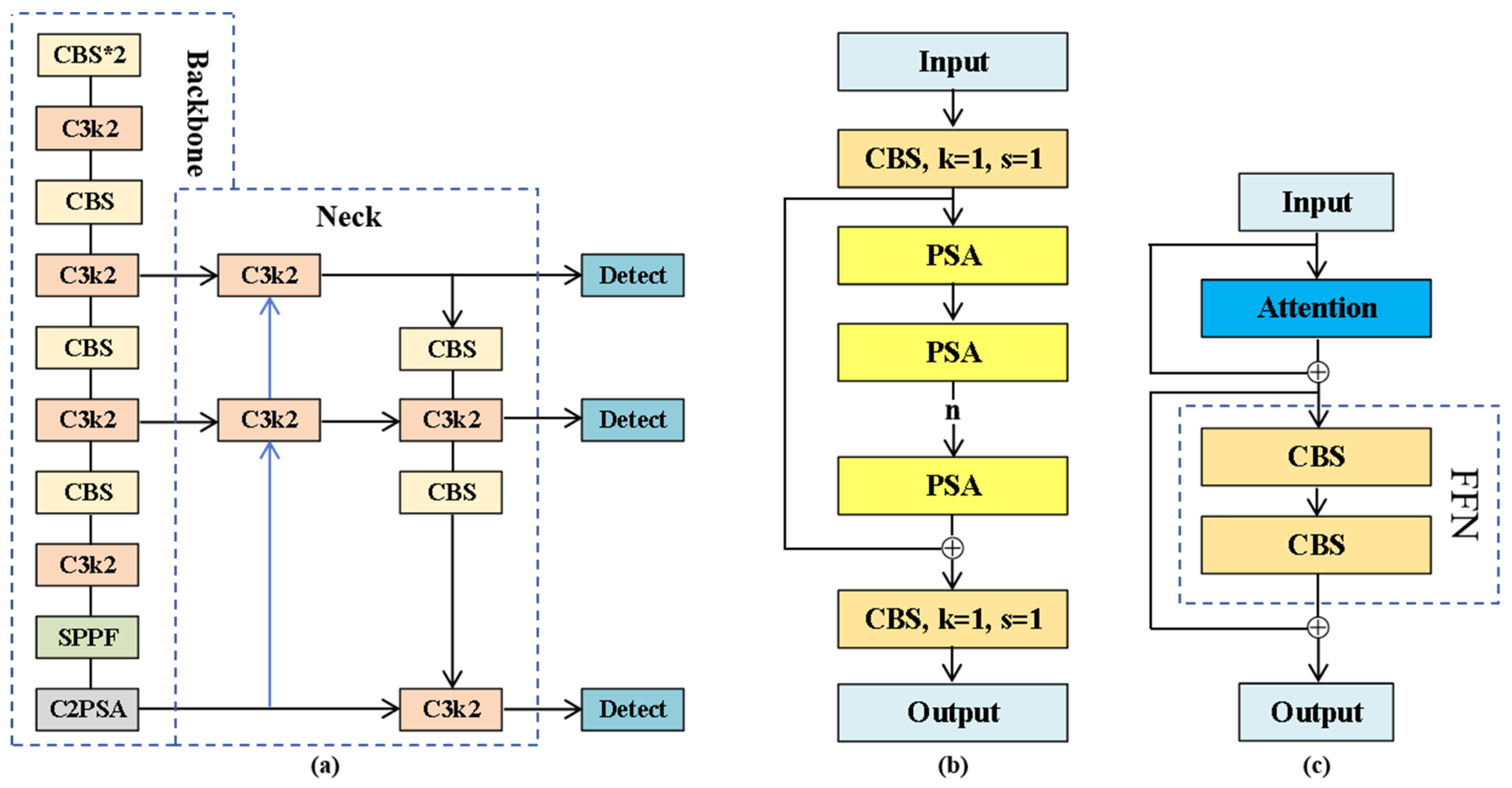

3.1. MSTA-YOLO Network Structure

3.2. YOLOv11 and C2PSA Block

3.3. Receptive Field Attention

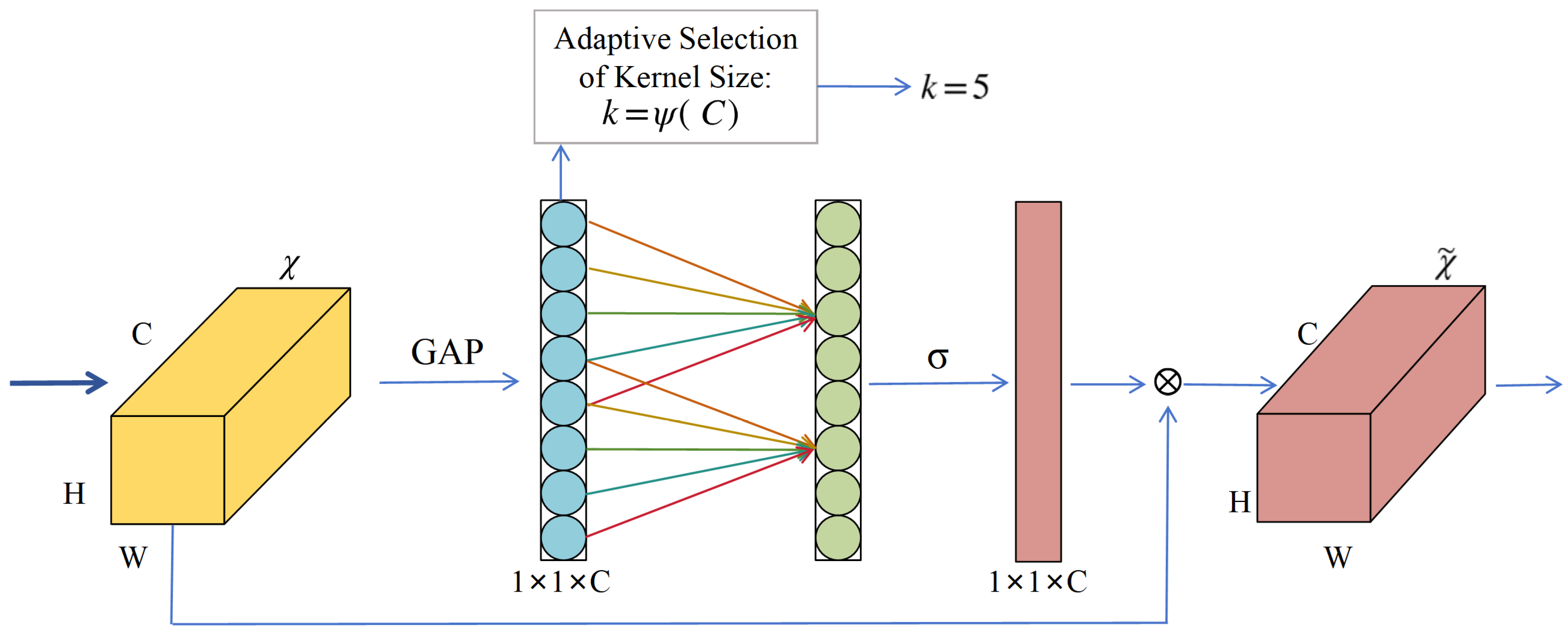

3.3.1. Receptive Field Block

3.3.2. Efficient Channel Attention
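For orientation, here is a minimal pure-Python sketch of the channel-attention step described in the ECA-Net paper [37]: global average pooling per channel, a 1D convolution across neighbouring channels, and a sigmoid gate that rescales each channel. The helper names, the nested-list tensor layout, and the example weights are assumptions made for illustration (in a trained network the convolution weights are learned), and how RFA combines this step with the receptive field block is not reproduced here.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size from ECA-Net: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature_map, weights):
    """Apply ECA-style channel gating.

    feature_map: list of C channels, each an H x W nested list of floats.
    weights: odd-length list of 1D convolution weights (placeholder values here).
    """
    num_channels = len(feature_map)
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map
    ]
    # Local cross-channel interaction: zero-padded 1D convolution over channels.
    k = len(weights)
    pad = k // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    gates = []
    for c in range(num_channels):
        s = sum(weights[j] * padded[c + j] for j in range(k))
        gates.append(1.0 / (1.0 + math.exp(-s)))  # sigmoid gate in (0, 1)
    # Excite: rescale every spatial position of a channel by its gate.
    return [
        [[v * gates[c] for v in row] for row in ch]
        for c, ch in enumerate(feature_map)
    ]


# Hypothetical 3-channel, 2 x 2 feature map and hand-picked weights.
x = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
y = eca(x, weights=[0.2, 0.6, 0.2])
print(eca_kernel_size(64), eca_kernel_size(256))
print(y[0][0][0], y[1][0][0], y[2][0][0])
```

Because the gate depends only on a few neighbouring channel descriptors, the step adds just `k` parameters per block, which is the property that makes ECA attractive in lightweight detectors.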

3.4. Normalized Wasserstein Distance

3.5. Evaluation Metrics

4. Results

4.1. Experimental Setup

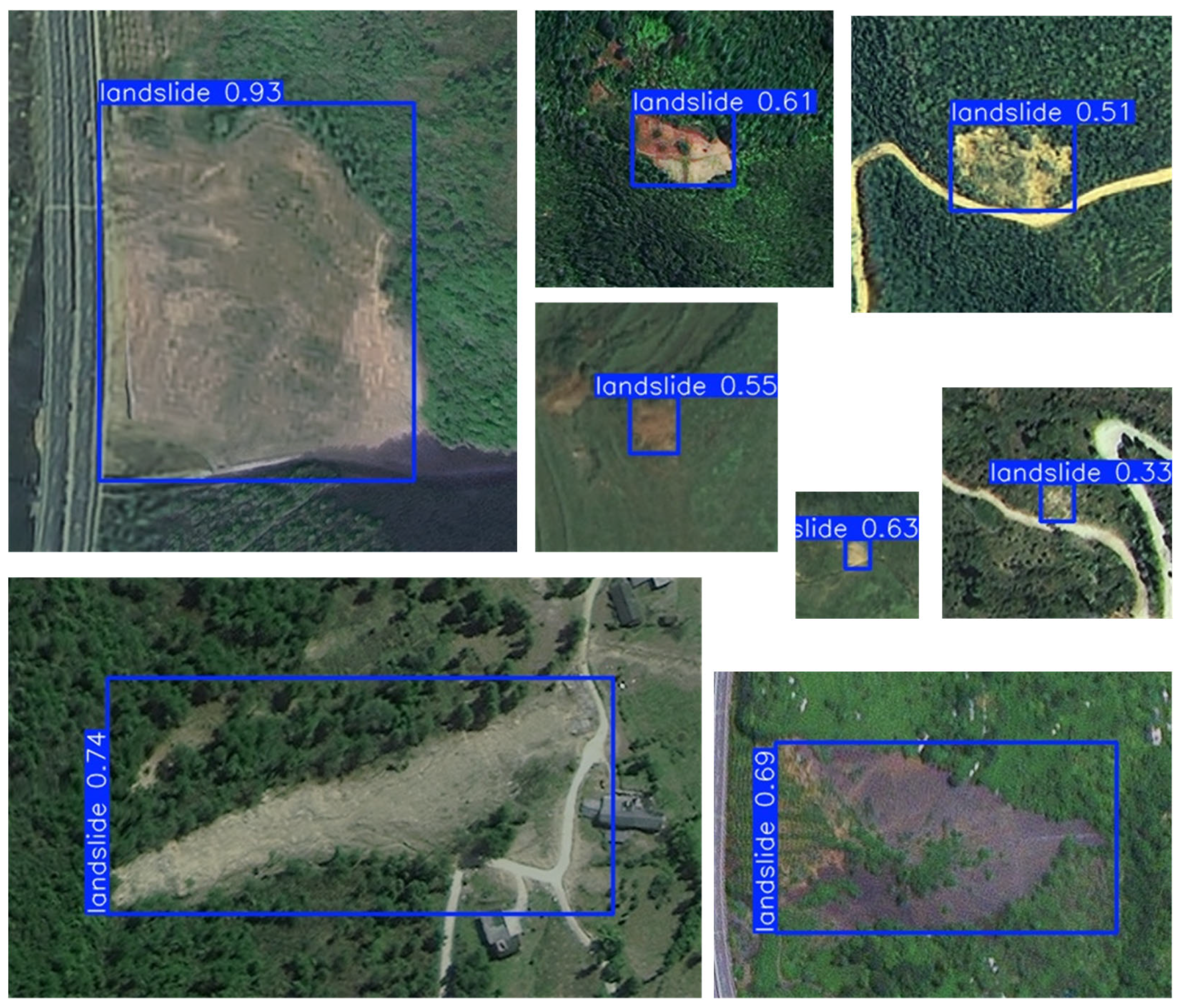

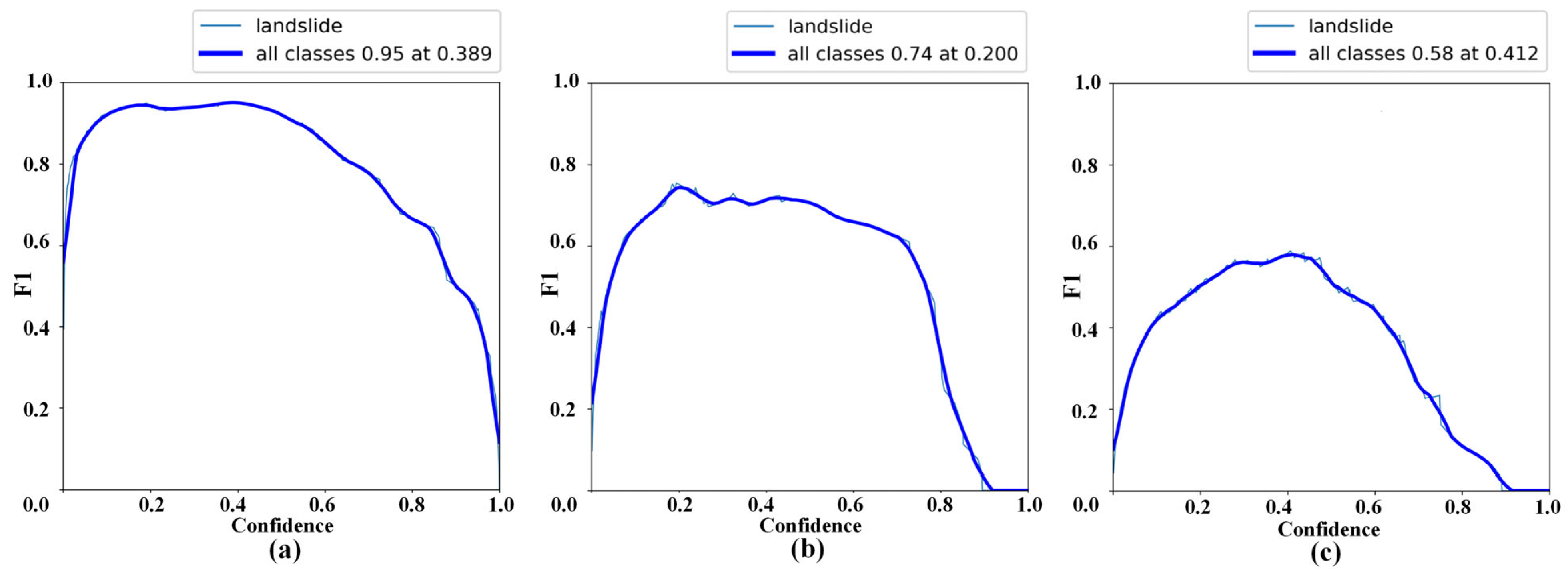

4.2. Accuracy Verification of MSTA-YOLO on Bijie Landslide Dataset

4.3. Ablation Experiment

4.4. Performance Test Based on the Luding Landslide Dataset

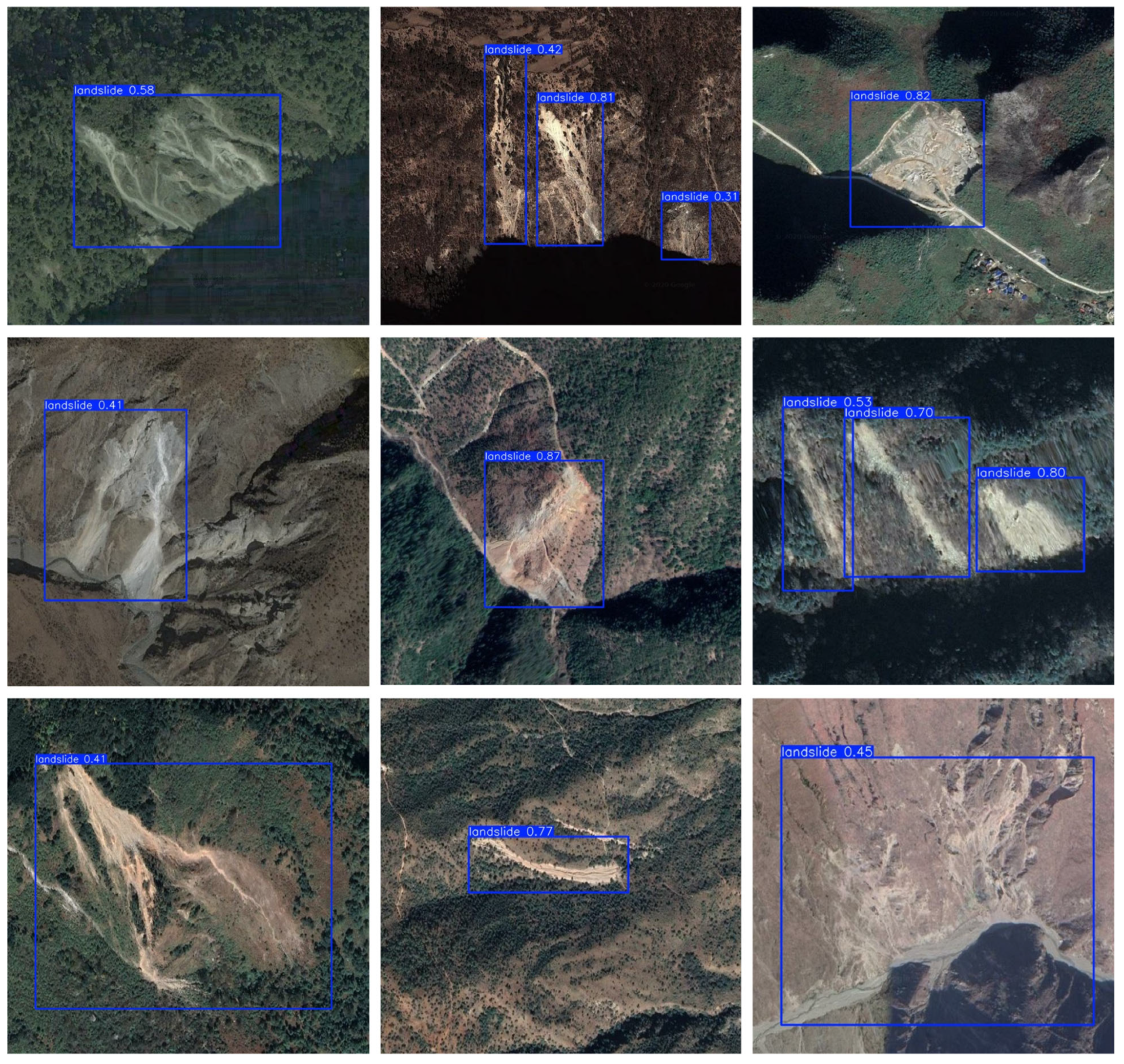

4.5. Verification of Difficult Areas Based on the Southwest Landslide Dataset

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868.

2. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

3. Haque, U.; Da Silva, P.F.; Devoli, G.; Pilz, J.; Zhao, B.; Khaloua, A.; Wilopo, W.; Andersen, P.; Lu, P.; Glass, G.E.; et al. The human cost of global warming: Deadly landslides and their triggers (1995–2014). Sci. Total Environ. 2019, 682, 673–684.

4. Tian, Y.; Xu, C.; Ma, S.; Xu, X.; Wang, S.; Zhang, H. Inventory and spatial distribution of landslides triggered by the 8th August 2017 MW 6.5 Jiuzhaigou earthquake, China. J. Earth Sci. 2019, 30, 206–217.

5. Gorum, T.; Korup, O.; van Westen, C.J.; van der Meijde, M.; Xu, C.; van der Meer, F.D. Why so few? Landslides triggered by the 2002 Denali earthquake, Alaska. Quat. Sci. Rev. 2014, 95, 80–94.

6. Qu, F.; Qiu, H.; Sun, H.; Tang, M. Post-failure landslide change detection and analysis using optical satellite Sentinel-2 images. Landslides 2021, 18, 447–455.

7. Qiu, W.; Gu, L.; Gao, F.; Jiang, T. Building extraction from very high-resolution remote sensing images using refine-UNet. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6002905.

8. Xing, Y.; Han, G.; Mao, H.; He, H.; Bo, Z.; Gong, R.; Ma, X.; Gong, W. MAM-YOLOv9: A Multi-Attention Mechanism Network for Methane Emission Facility Detection in High-Resolution Satellite Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5614516.

9. Chen, X.; Zhao, C.; Lu, Z.; Xi, J. Landslide Inventory Mapping Based on Independent Component Analysis and UNet3+: A Case of Jiuzhaigou, China. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2213–2223.

10. Chen, X.; Zhao, C.; Liu, X.; Zhang, S.; Xi, J.; Khan, B.A. An Embedding Swin Transformer Model for Automatic Slow-moving Landslides Detection based on InSAR Products. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5223915.

11. Zhang, X.; Zhang, B.; Yu, W.; Kang, X. Federated deep learning with prototype matching for object extraction from very-high-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5603316.

12. Wang, B.; Liu, Z.; Xi, J.; Gao, S.; Cong, M.; Shang, H. Detection of Greenhouse and Typical Rural Buildings with Efficient Weighted YOLOv8 in Hebei Province, China. Remote Sens. 2025, 17, 1883.

13. Li, X.; Chen, P.; Yang, J.; An, W.; Zheng, G.; Luo, D.; Lu, A. Ship Target Search in Multi-Source Visible Remote Sensing Images Based on Two-Branch Deep Learning. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5003205.

14. Cai, H.; Chen, T.; Niu, R.; Plaza, A. Landslide detection using densely connected convolutional networks and environmental conditions. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5235–5247.

15. Wang, L.; Lei, H.; Jian, W.; Wang, W.; Wang, H.; Wei, N. Enhancing Landslide Detection: A Novel LA-YOLO Model for Rainfall-Induced Shallow Landslides. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6004905.

16. Chen, X.; Liu, C.; Wang, S.; Deng, X. LSI-YOLOv8: An improved rapid and high accuracy landslide identification model based on YOLOv8 from remote sensing images. IEEE Access 2024, 12, 97739–97751.

17. Lv, Z.; Yang, T.; Lei, T.; Zhou, W.; Zhang, Z.; You, Z. Spatial-spectral similarity based on adaptive region for landslide inventory mapping with remote sensed images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4405111.

18. Chen, Y.; Ming, D.; Yu, J.; Xu, L.; Ma, Y.; Li, Y.; Ling, X.; Zhu, Y. Susceptibility-guided landslide detection using fully convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 998–1018.

19. Zhang, W.; Liu, Z.; Zhou, S.; Qi, W.; Wu, X.; Zhang, T.; Han, L. LS-YOLO: A novel model for detecting multiscale landslides with remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 4952–4965.

20. Gao, M.; Chen, F.; Wang, L.; Zhao, H.; Yu, B. Swin Transformer-based Multi-scale Attention Model for Landslide Extraction from Large-scale Area. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4415314.

21. Dong, A.; Dou, J.; Li, C.; Chen, Z.; Ji, J.; Xing, K.; Zhang, J.; Daud, H. Accelerating cross-scene co-seismic landslide detection through progressive transfer learning and lightweight deep learning strategies. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4410213.

22. Huang, Y.; Zhang, J.; He, H.; Jia, Y.; Chen, R.; Ge, Y.; Ming, Z.; Zhang, L.; Li, H. MAST: An earthquake-triggered landslides extraction method combining morphological analysis edge recognition with swin-transformer deep learning model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2586–2595.

23. Xu, G.; Wang, Y.; Wang, L.; Soares, L.P.; Grohmann, C.H. Feature-based constraint deep CNN method for mapping rainfall-induced landslides in remote regions with mountainous terrain: An application to Brazil. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2644–2659.

24. Zhao, Z.; Chen, T.; Dou, J.; Liu, G.; Plaza, A. Landslide susceptibility mapping considering landslide local-global features based on CNN and transformer. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7475–7489.

25. Gao, S.; Xi, J.; Li, Z.; Ge, D.; Guo, Z.; Yu, J.; Wu, Q.; Zhao, Z.; Xu, J. Optimal and multi-view strategic hybrid deep learning for old landslide detection in the loess plateau, Northwest China. Remote Sens. 2024, 16, 1362.

26. Chen, T.; Wang, Q.; Zhao, Z.; Liu, G.; Dou, J.; Plaza, A. LCFSTE: Landslide conditioning factors and swin transformer ensemble for landslide susceptibility assessment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6444–6454.

27. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

28. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

29. Chen, B.; Huang, Y.; Xia, Q.; Zhang, Q. Nonlocal spatial attention module for image classification. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420938927.

30. Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to attend: Convolutional triplet attention module. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Online, 5–9 January 2021; pp. 3139–3148.

31. Chen, X.; Wang, X.; Zhou, J.; Qiao, Y.; Dong, C. Activating more pixels in image super-resolution transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22367–22377.

32. Han, D.; Ye, T.; Han, Y.; Xia, Z.; Pan, S.; Wan, P.; Song, S.; Huang, G. Agent attention: On the integration of softmax and linear attention. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 124–140.

33. Li, Y.; Li, X.; Yang, J. Spatial group-wise enhance: Enhancing semantic feature learning in CNN. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 687–702.

34. Xiao, Y.; Xu, T.; Yu, X.; Fang, Y.; Li, J. A Lightweight Fusion Strategy with Enhanced Inter-layer Feature Correlation for Small Object Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4708011.

35. Lou, M.; Zhang, S.; Zhou, H.Y.; Yang, S.; Wu, C.; Yu, Y. TransXNet: Learning both global and local dynamics with a dual dynamic token mixer for visual recognition. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 11534–11547.

36. Li, M.; Huang, H.; Huang, K. FCAnet: A novel feature fusion approach to EEG emotion recognition based on cross-attention networks. Neurocomputing 2025, 638, 130102.

37. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

38. Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400.

39. Wang, J.; Xu, C.; Yang, W.; Yu, L. A normalized Gaussian Wasserstein distance for tiny object detection. arXiv 2021, arXiv:2110.13389.

40. Ji, S.; Yu, D.; Shen, C.; Li, W.; Xu, Q. Landslide detection from an open satellite imagery and digital elevation model dataset using attention boosted convolutional neural networks. Landslides 2020, 17, 1337–1352.

41. Li, Y.; Wu, Z.; Wu, J.; Zhang, R.; Xu, X.; Zhou, Y. DBSANet: A Dual-Branch Semantic Aggregation Network Integrating CNNs and Transformers for Landslide Detection in Remote Sensing Images. Remote Sens. 2025, 17, 807.

42. Peng, H.; Yu, S. A systematic IOU-related method: Beyond simplified regression for better localization. IEEE Trans. Image Process. 2021, 30, 5032–5044.

43. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

44. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

45. Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

46. Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

47. Zhang, R.X.; Zhu, W.; Li, Z.H.; Zhang, B.C.; Chen, B. Re-net: Multibranch network with structural reparameterization for landslide detection in optical imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2828–2837.

48. Xiang, X.; Gong, W.; Li, S.; Chen, J.; Ren, T. TCNet: Multiscale fusion of transformer and CNN for semantic segmentation of remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 3123–3136.

49. Lv, P.; Ma, L.; Li, Q.; Du, F. ShapeFormer: A shape-enhanced vision transformer model for optical remote sensing image landslide detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2681–2689.

50. Du, Y.; Xu, X.; He, X. Optimizing geo-hazard response: LBE-YOLO’s innovative lightweight framework for enhanced real-time landslide detection and risk mitigation. Remote Sens. 2024, 16, 534.

51. Fan, S.; Fu, Y.; Li, W.; Bai, H.; Jiang, Y. ETGC2-net: An enhanced transformer and graph convolution combined network for landslide detection. Nat. Hazards 2025, 121, 135–160.

52. Jiang, W.; Xi, J.; Li, Z.; Ding, M.; Yang, L.; Xie, D. Landslide detection and segmentation using mask R-CNN with simulated hard samples. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1931–1942.

53. Yang, Y.; Miao, Z.; Zhang, H.; Wang, B.; Wu, L. Lightweight attention-guided YOLO with level set layer for landslide detection from optical satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 3543–3559.

| Method | P | R | F1 | mAP@50 | mAP@50–95 |
|---|---|---|---|---|---|
| YOLOv10 | 69.2% | 75.3% | 72.1% | 80.7% | 50.8% |
| YOLOv9 | 79.8% | 80.5% | 80.1% | 86.4% | 52.5% |
| Re-Net [47] | 83.88% | | | | |
| TCNet [48] | 84.19% | 89.2% | 85.12% | | |
| DBSANet [41] | 87.08% | | | | |
| ShapeFormer [49] | 86.74% | 89.52% | 88.11% | | |
| LBE-YOLO [50] | 90.6% | 86.5% | 88.5% | 91.0% | |
| ETGC2-net [51] | 90.11% | 89.98% | 90.04% | | |
| Jiang et al. [52] | 92.3% | 96.0% | 94.1% | | |
| YOLOv8 | 86.7% | 96.1% | 91.2% | 95.7% | 66.7% |
| YOLOv11 | 88.9% | 93.4% | 91.1% | 96.1% | 66.1% |
| LA-YOLO-LLL [53] | 96.17% | 91.98% | 94.03% | 95.4% | |
| MSTA-YOLO (ours) | 95% | 99.5% | 97.2% | 99.1% | 69.9% |
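The F1 column in the table above can be cross-checked from the P and R columns, assuming F1 is the usual harmonic mean of precision and recall (the scraped text does not restate the definition). The function name and the two sample rows below are picked for illustration; the values are copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units, e.g. percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R) pairs in percent, taken from the comparison table.
rows = {
    "YOLOv11": (88.9, 93.4),
    "MSTA-YOLO (ours)": (95.0, 99.5),
}

for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.1f}%")
# YOLOv11: F1 = 91.1%
# MSTA-YOLO (ours): F1 = 97.2%
```

Both values agree with the reported F1 entries, which suggests the column was derived this way for the rows that list P and R.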

| Method | P | R | mAP@50 | mAP@50–95 |
|---|---|---|---|---|
| YOLOv11 | 88.9% | 93.4% | 96.1% | 66.1% |
| YOLOv11 + RFA | 91% | 93.5% | 97% | 67.1% |
| YOLOv11 + NWD | 88.4% | 89.6% | 94.6% | 65.2% |
| YOLOv11 + RFA + NWD | 91.4% | 96.5% | 97.8% | 60.6% |
| YOLOv11 * | 89.1% | 97.4% | 95.3% | 70.3% |
| YOLOv11 * + RFA | 93.7% | 96.2% | 98% | 70.9% |
| YOLOv11 * + NWD | 90.5% | 99.4% | 98.2% | 68.3% |
| MSTA-YOLO (ours) | 95% | 99.5% | 99.1% | 69.9% |

| Method | P | R | F1 | mAP@50–95 |
|---|---|---|---|---|
| YOLOv9 | 89.7% | 68.3% | 77.6% | 49.2% |
| YOLOv10 | 77.0% | 68.3% | 72.4% | 44.8% |
| YOLOv11 | 92.1% | 58.5% | 71.6% | 37.2% |
| MSTA-YOLO (ours) | 87.1% | 79.5% | 83.1% | 56.3% |

| Method | P | R | F1 | mAP@50–95 |
|---|---|---|---|---|
| YOLOv11 | 92.1% | 58.5% | 71.6% | 37.2% |
| YOLOv11 + RFA | 84.7% | 74.4% | 79.2% | 54.5% |
| YOLOv11 + NWD | 90.5% | 73.1% | 80.8% | 55.9% |
| YOLOv11 * + RFA | 86.0% | 75.0% | 80.1% | 55.4% |
| YOLOv11 * + NWD | 92.6% | 69.2% | 79.2% | 51.2% |
| MSTA-YOLO (ours) | 87.1% | 79.5% | 83.1% | 56.3% |

| Method | P | R | mAP@50 | mAP@50–95 | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| YOLOv11 | 69.8% | 48.5% | 57.6% | 26.5% | 6.4 | 29.77 |
| MSTA-YOLO | 72.1% | 49.9% | 59.1% | 30.5% | 7.0 | 29.31 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, B.; Su, J.; Xi, J.; Chen, Y.; Cheng, H.; Li, H.; Chen, C.; Shang, H.; Yang, Y. Landslide Detection with MSTA-YOLO in Remote Sensing Images. Remote Sens. 2025, 17, 2795. https://doi.org/10.3390/rs17162795
