Land8Fire: A Complete Study on Wildfire Segmentation Through Comprehensive Review, Human-Annotated Multispectral Dataset, and Extensive Benchmarking

Abstract

1. Introduction

- Literature Review: We present a comprehensive review of the existing wildfire segmentation methods, covering both traditional approaches and the recent advancements in deep learning.

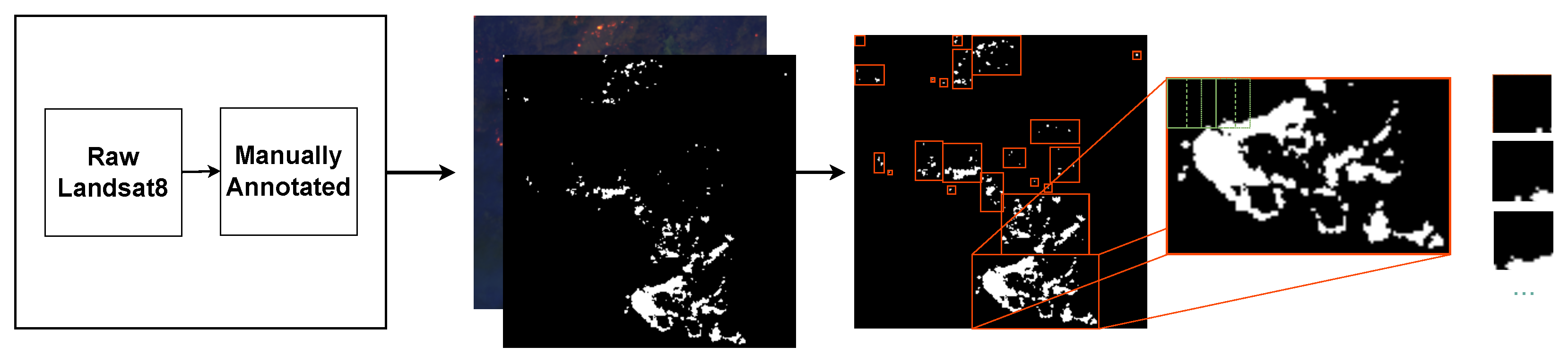

- Dataset: We introduce the Land8Fire dataset, a large-scale, high-resolution, human-annotated multispectral wildfire segmentation dataset designed to support the development and evaluation of wildfire detection models.

- Benchmark: We conduct extensive benchmarking and a comprehensive comparison of deep learning methods to provide baselines for future wildfire segmentation research. In addition, we investigate how different loss functions and spectral band combinations influence model performance.

2. Literature Review

2.1. Threshold-Based Wildfire Segmentation Methods

2.1.1. Murphy et al.’s Method

2.1.2. Schroeder et al.’s Method

2.1.3. Kumar and Roy’s Method

2.1.4. Thresholding Methods Strengths and Limitations

- Sensitivity to Environmental Illumination: During daylight hours, the sun’s intensity can significantly influence surface reflectance, introducing variability that may lead to false detections in remote sensing applications. This is particularly evident in channels sensitive to solar radiation. Furthermore, cloud occlusions can obscure parts of the surface, altering the intensity and distribution of light, which affects the accuracy of fire detection. Weather conditions, such as haze, fog, or varying cloud cover, can further impact the reflectance and absorption of light, introducing additional challenges in accurately detecting fire pixels.

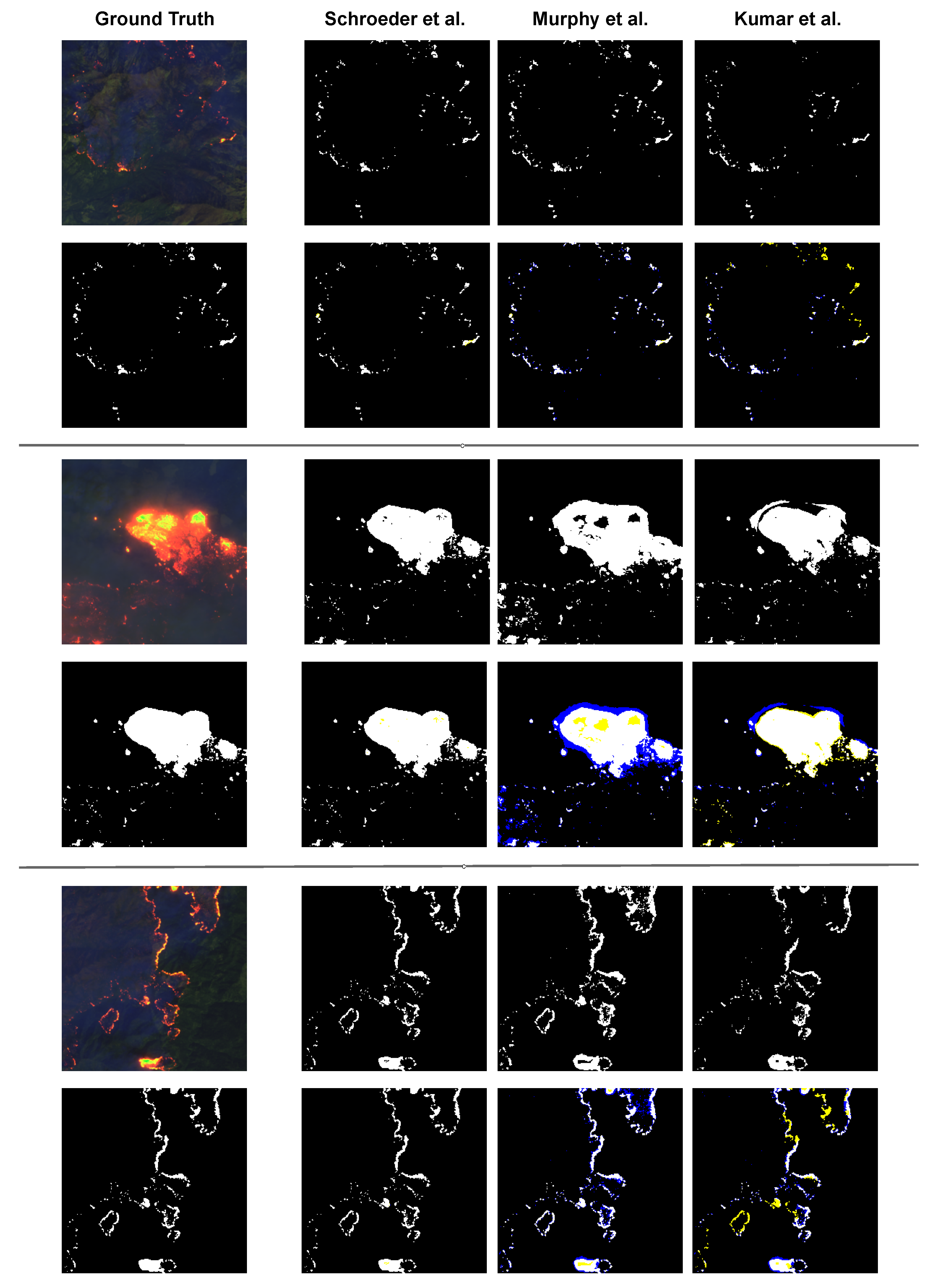

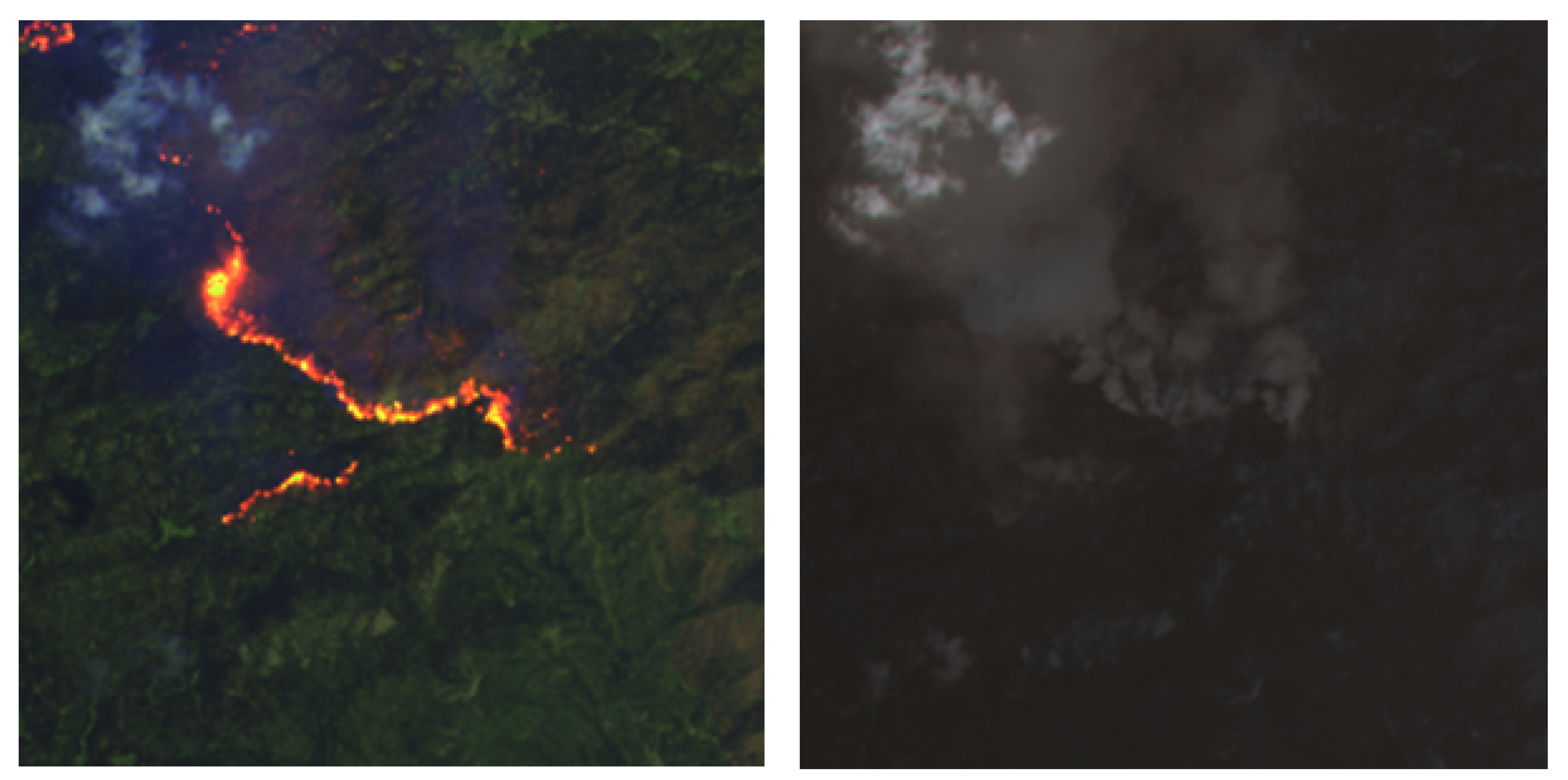

- Reliance on Fixed Thresholds: These methods depend on predefined thresholds for reflectance channels, which makes them rigid and error-prone in dynamic environments. Because the thresholds do not adapt to changing weather or to different landscapes, highly reflective non-fire surfaces, such as urban areas or deserts, are often misclassified as fire. For a detailed study of false detections in various settings, we refer readers to [20]. The same inflexibility can cause overly intense fire pixels to be missed, as illustrated in Figure 1 (middle).
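To make the fixed-threshold limitation concrete, the following minimal sketch applies one hard-coded cutoff to SWIR2 (B7) reflectance. The cutoff value, the function name `fixed_threshold_fire_mask`, and the toy reflectance values are illustrative assumptions; this is not the rule used by any of the methods reviewed above.

```python
import numpy as np

# Hypothetical cutoff chosen only for illustration; not taken from any published method.
SWIR2_CUTOFF = 0.5

def fixed_threshold_fire_mask(swir2, cutoff=SWIR2_CUTOFF):
    """Flag a pixel as fire when its SWIR2 (B7) reflectance exceeds a fixed cutoff."""
    return np.asarray(swir2, dtype=float) > cutoff

# Toy 1x4 scene: vegetation, a bright desert surface, a fire below the cutoff, a strong fire.
swir2 = np.array([[0.10, 0.55, 0.45, 0.90]])
is_fire = np.array([[False, False, True, True]])  # assumed ground truth for the toy scene

pred = fixed_threshold_fire_mask(swir2)
false_positives = int(np.sum(pred & ~is_fire))  # bright non-fire surface flagged as fire
false_negatives = int(np.sum(~pred & is_fire))  # fire pixel that the fixed cutoff misses
print(pred, false_positives, false_negatives)
```

In this toy scene the single cutoff produces both kinds of error at once, and no other single value would separate the bright desert pixel from the weaker fire pixel, which is the rigidity described above.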

2.2. Machine Learning-Based Wildfire Segmentation Methods

2.2.1. Conventional ML-Based Methods

2.2.2. Deep Learning-Based Methods

2.3. Wildfire Datasets

3. Land8Fire Dataset Curation

4. Experiments

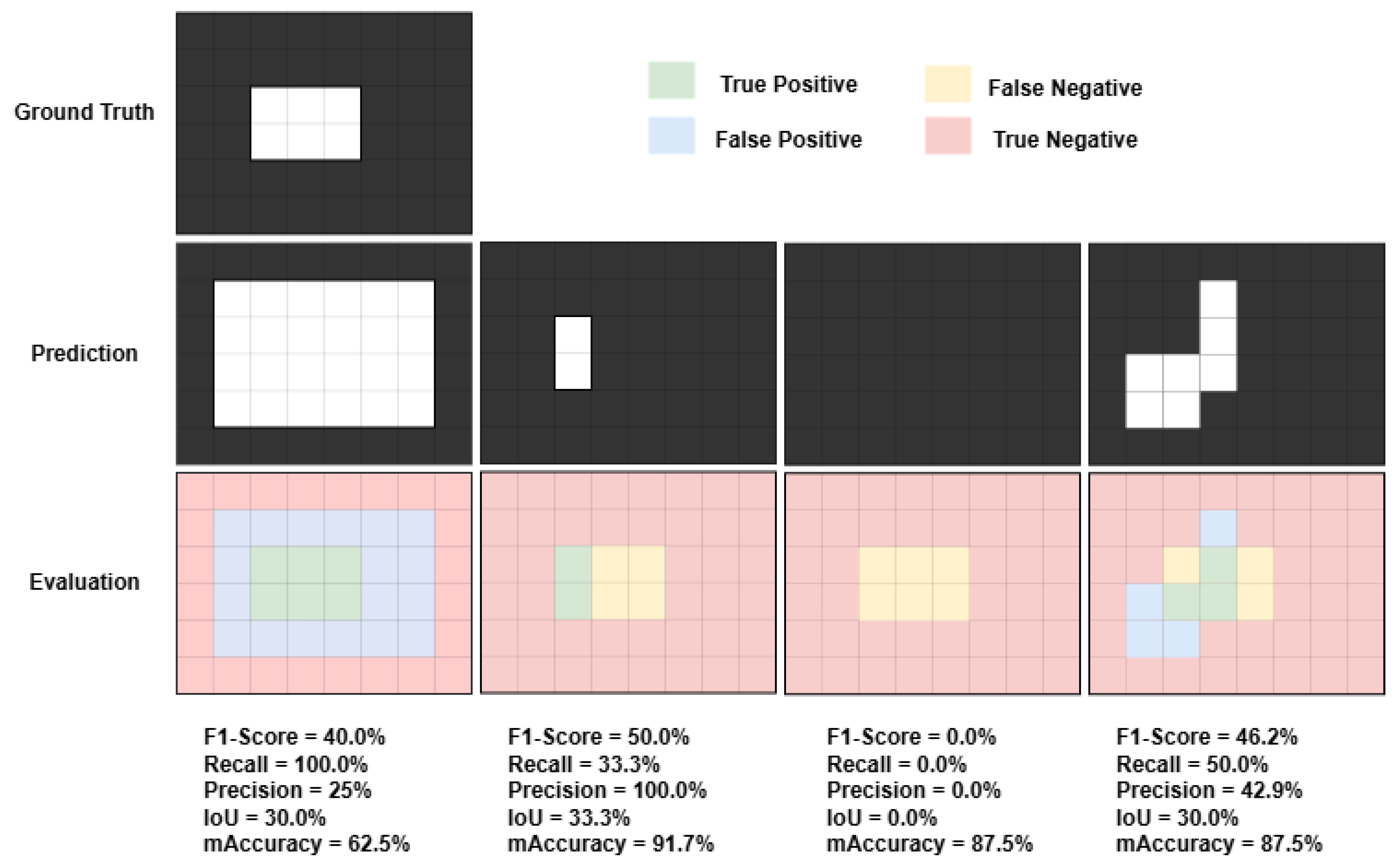

4.1. Evaluation Metrics
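The result tables in this article report F1-score, recall, precision, mAccuracy, and IoU. As a minimal sketch, and not the authors' evaluation code, these can be computed from pixel-wise confusion counts for a binary fire mask. The function name `segmentation_metrics` is hypothetical, and treating mAccuracy as the mean of the fire and background recalls is an assumption.

```python
import numpy as np

def segmentation_metrics(pred, target):
    """Pixel-wise metrics for a binary fire mask (True or 1 = fire)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)    # fire predicted, fire in ground truth
    fp = np.sum(pred & ~target)   # fire predicted, background in ground truth
    fn = np.sum(~pred & target)   # background predicted, fire in ground truth
    tn = np.sum(~pred & ~target)  # background predicted, background in ground truth

    def ratio(num, den):
        # Return 0.0 when the denominator is empty to avoid division by zero.
        return float(num) / float(den) if den > 0 else 0.0

    recall = ratio(tp, tp + fn)
    return {
        "precision": ratio(tp, tp + fp),
        "recall": recall,
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "iou": ratio(tp, tp + fp + fn),
        # Assumption: mAccuracy is the mean of the per-class recalls (fire, background).
        "m_accuracy": 0.5 * (recall + ratio(tn, tn + fp)),
    }

pred = np.array([[1, 1, 0, 0], [0, 1, 0, 0]])
target = np.array([[1, 0, 0, 0], [0, 1, 1, 0]])
metrics = segmentation_metrics(pred, target)
print(metrics)
```

Because fire pixels are usually a small fraction of a scene, IoU and F1 ignore true negatives on purpose, whereas an accuracy-style metric is dominated by the background class.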

4.2. Evaluation Metric Analysis

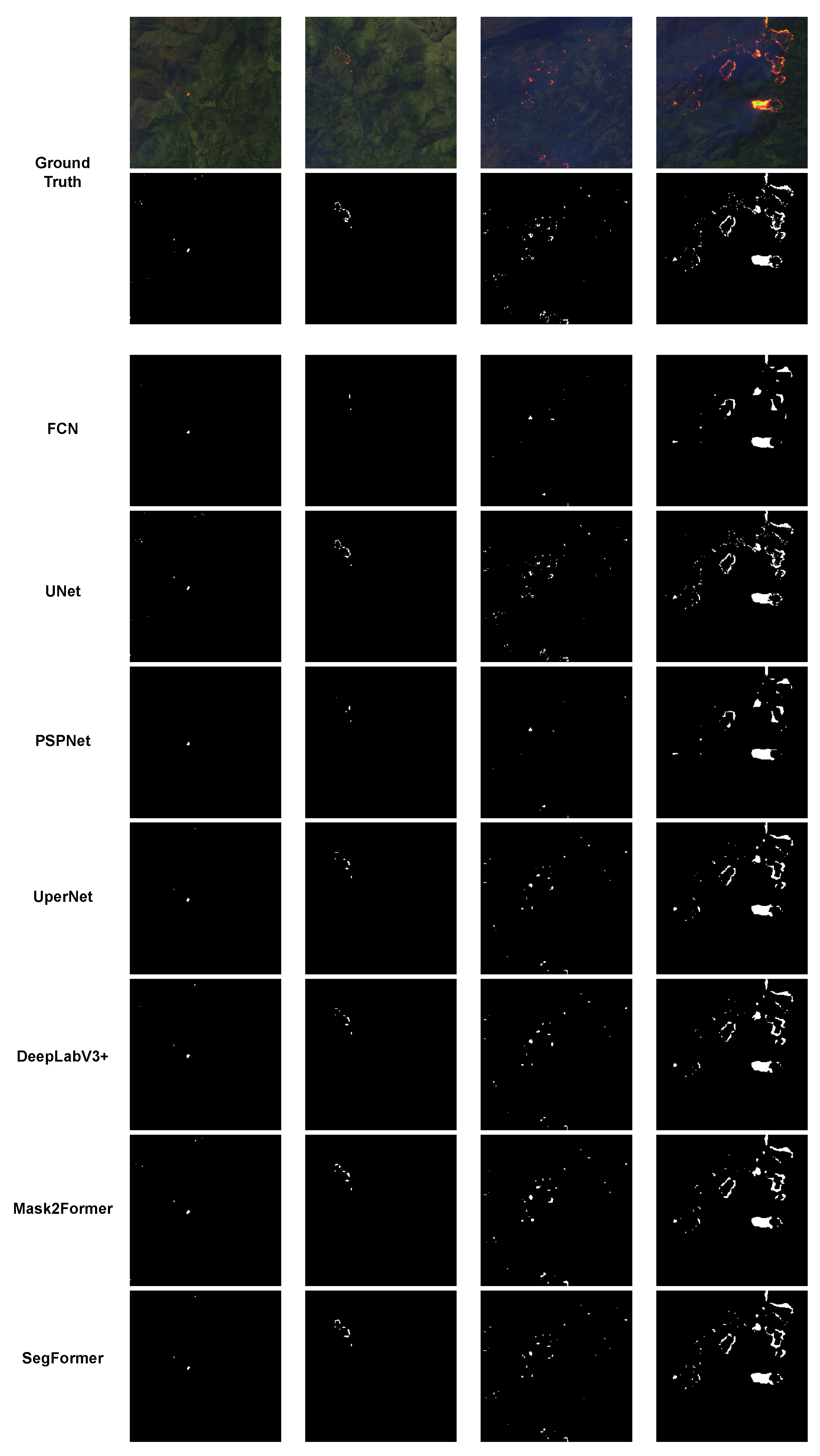

4.3. Deep Learning Architecture Analysis

4.3.1. CNN-Based Segmentation Models

1. Fully Convolutional Networks (FCNs)

2. UNet

3. Pyramid Scene Parsing Network (PSPNet)

4. Unified Perceptual Parsing Network (UPerNet)

5. DeepLabV3+

4.3.2. Transformer-Based Segmentation Models

1. Mask2Former

2. SegFormer

4.4. k-Fold Cross-Validation
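As a rough illustration of the procedure named in this heading, the sketch below partitions sample indices into k folds, scores each held-out fold, and summarizes the fold scores by their mean and standard deviation. The helper names `k_fold_indices` and `evaluate_fold`, the seed, and the values k = 5 and 23 samples are assumptions made for the example; they are not the settings used in the experiments.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs that partition shuffled sample indices into k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        # Every fold except the i-th is used for training; the i-th is held out.
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

def evaluate_fold(train_idx, val_idx):
    # Placeholder for "train on train_idx, score on val_idx"; returns a dummy score.
    return len(val_idx) / (len(train_idx) + len(val_idx))

# Illustrative values only.
scores = [evaluate_fold(tr, va) for tr, va in k_fold_indices(n_samples=23, k=5)]
mean_score, std_score = float(np.mean(scores)), float(np.std(scores))
print(f"{mean_score:.4f} +/- {std_score:.4f}")
```

In a real run, `evaluate_fold` would train a segmentation model on the training indices and return a metric such as IoU on the held-out fold.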

4.5. Implementation Details

4.6. Wildfire Detection Results

4.7. Discussion

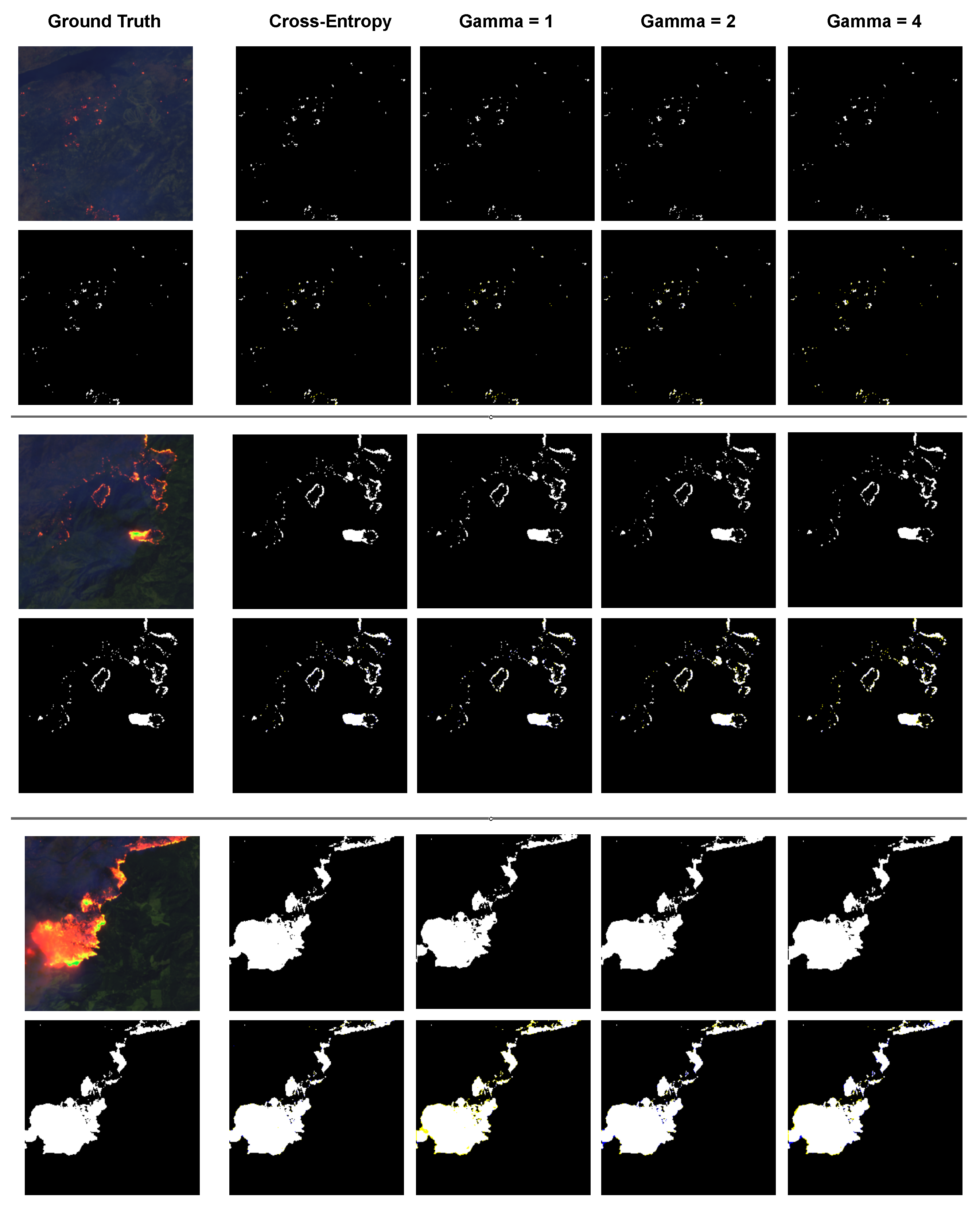

4.8. Ablation Study: Objective Function
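The loss table in this article compares cross-entropy with focal loss at gamma values of 1, 2, and 4. The sketch below shows the standard focal loss form, -(1 - p_t)^gamma * log(p_t), for a binary fire mask. It is a simplified illustration rather than the training code: the function name `binary_focal_loss` is hypothetical, no class-balancing weight is applied, and the probabilities are toy values.

```python
import numpy as np

def binary_focal_loss(prob_fire, target, gamma=2.0, eps=1e-7):
    """Mean focal loss over pixels; gamma = 0 reduces to binary cross-entropy."""
    p = np.clip(np.asarray(prob_fire, dtype=float), eps, 1.0 - eps)
    t = np.asarray(target, dtype=float)
    p_t = t * p + (1.0 - t) * (1.0 - p)  # probability assigned to the true class
    # The (1 - p_t)^gamma factor shrinks the contribution of well-classified pixels.
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

prob_fire = np.array([0.9, 0.6, 0.2, 0.1])  # toy predicted fire probabilities
target = np.array([1, 1, 1, 0])             # toy ground truth (1 = fire)
for gamma in (0, 1, 2, 4):
    print(gamma, round(binary_focal_loss(prob_fire, target, gamma), 4))
```

Larger gamma values concentrate the loss on hard pixels, here the fire pixel predicted with probability 0.2, which is the trade-off the ablation explores.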

4.9. Band Analysis
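A band-combination study amounts to training the same model on different channel subsets of the multispectral tile. The sketch below selects named bands from a stacked array. The channel-first layout, the `BAND_ORDER` list (which follows the band table in this article), and the helper name `select_bands` are assumptions for illustration.

```python
import numpy as np

# Assumed channel order of the stacked array; B8 is not listed in the band table.
BAND_ORDER = ["B1", "B2", "B3", "B4", "B5", "B6", "B7", "B9", "B10", "B11"]

def select_bands(image, bands, band_order=BAND_ORDER):
    """Return the channels of a (bands, height, width) array that match the named bands."""
    idx = [band_order.index(b) for b in bands]
    return image[idx]

# Toy tile in which every pixel of channel i holds the value i, so the selection is easy to check.
tile = np.arange(len(BAND_ORDER), dtype=float)[:, None, None] * np.ones((1, 4, 4))
nir_swir = select_bands(tile, ["B5", "B6", "B7"])  # one combination from the band-analysis table
rgb = select_bands(tile, ["B4", "B3", "B2"])       # visible bands in red, green, blue order
print(nir_swir.shape, rgb[:, 0, 0])
```

Changing the subset changes the number of input channels, so the first layer of the segmentation model has to be configured to match.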

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jaffe, D.A.; O’Neill, S.M.; Larkin, N.K.; Holder, A.L.; Peterson, D.L.; Halofsky, J.E.; Rappold, A.G. Wildfire and prescribed burning impacts on air quality in the United States. J. Air Waste Manag. Assoc. 2020, 70, 583–615.

2. NOAA National Centers for Environmental Information. Billion-Dollar Weather and Climate Disasters: Summary Statistics; Technical Report; NOAA National Centers for Environmental Information: Asheville, NC, USA, 2024.

3. Dampage, U.; Bandaranayake, L.; Wanasinghe, R.; Kottahachchi, K.; Jayasanka, B. Forest fire detection system using wireless sensor networks and machine learning. Sci. Rep. 2022, 12, 46.

4. Huang, P.; Chen, M.; Chen, K.; Zhang, H.; Yu, L.; Liu, C. A combined real-time intelligent fire detection and forecasting approach through cameras based on computer vision method. Process Saf. Environ. Prot. 2022, 164, 629–638.

5. Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

6. Upadhyay, P.; Gupta, S. Introduction To Satellite Imaging Technology And Creating Images Using Raw Data Obtained From Landsat Satellite. ICGTI-2012 2012, 1, 126–134.

7. Koltunov, A.; Ustin, S.L.; Prins, E.M. On timeliness and accuracy of wildfire detection by the GOES WF-ABBA algorithm over California during the 2006 fire season. Remote Sens. Environ. 2012, 127, 194–209.

8. Badhan, M.; Shamsaei, K.; Ebrahimian, H.; Bebis, G.; Lareau, N.P.; Rowell, E. Deep Learning Approach to Improve Spatial Resolution of GOES-17 Wildfire Boundaries using VIIRS Satellite Data. Remote Sens. 2024, 16, 715.

9. Wooster, M.J.; Roberts, G.J.; Giglio, L.; Roy, D.P.; Freeborn, P.H.; Boschetti, L.; Justice, C.; Ichoku, C.; Schroeder, W.; Davies, D.; et al. Satellite remote sensing of active fires: History and current status, applications and future requirements. Remote Sens. Environ. 2021, 267, 112694.

10. Justice, C.; Giglio, L.; Korontzi, S.; Owens, J.; Morisette, J.; Roy, D.; Descloitres, J.; Alleaume, S.; Petitcolin, F.; Kaufman, Y. The MODIS fire products. Remote Sens. Environ. 2002, 83, 244–262.

11. Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I.A. The New VIIRS 375 m active fire detection data product: Algorithm description and initial assessment. Remote Sens. Environ. 2014, 143, 85–96.

12. Murphy, S.W.; de Souza Filho, C.R.; Wright, R.; Sabatino, G.; Pabon, R.C. HOTMAP: Global hot target detection at moderate spatial resolution. Remote Sens. Environ. 2016, 177, 78–88.

13. Kumar, S.S.; Roy, D.P. Global operational land imager Landsat-8 reflectance-based active fire detection algorithm. Int. J. Digit. Earth 2018, 11, 154–178.

14. Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Halin, A.A. Fire-Net: A Deep Learning Framework for Active Forest Fire Detection. J. Sens. 2022, 2022, 8044390.

15. Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

16. De Luis, A.; Tran, M.; Hanyu, T.; Tran, A.; Haitao, L.; McCann, R.; Mantooth, A.; Huang, Y.; Le, N. SolarFormer: Multi-scale transformer for solar PV profiling. In Proceedings of the 2024 International Conference on Smart Grid Synchronized Measurements and Analytics (SGSMA), Washington, DC, USA, 21–23 May 2024; pp. 1–8.

17. Chen, Y.; Fan, R.; Yang, X.; Wang, J.; Latif, A. Extraction of urban water bodies from high-resolution remote-sensing imagery using deep learning. Water 2018, 10, 585.

18. Ghali, R.; Akhloufi, M.A. Deep learning approaches for wildland fires using satellite remote sensing data: Detection, mapping, and prediction. Fire 2023, 6, 192.

19. Rashkovetsky, D.; Mauracher, F.; Langer, M.; Schmitt, M. Wildfire detection from multisensor satellite imagery using deep semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7001–7016.

20. de Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

21. Bhargavi, K.; Jyothi, S. A survey on threshold based segmentation technique in image processing. Int. J. Innov. Res. Dev. 2014, 3, 234–239.

22. Schroeder, W.; Oliva, P.; Giglio, L.; Quayle, B.; Lorenz, E.; Morelli, F. Active fire detection using Landsat-8/OLI data. Remote Sens. Environ. 2016, 185, 210–220.

23. Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

24. Milanović, S.; Marković, N.; Pamučar, D.; Gigović, L.; Kostić, P.; Milanović, S.D. Forest fire probability mapping in eastern Serbia: Logistic regression versus random forest method. Forests 2020, 12, 5.

25. Molovtsev, M.D.; Sineva, I.S. Classification algorithms analysis in the forest fire detection problem. In Proceedings of the 2019 International Conference “Quality Management, Transport and Information Security, Information Technologies” (IT&QM&IS), Sochi, Russia, 23–27 September 2019; pp. 548–553.

26. Hong, Z.; Tang, Z.; Pan, H.; Zhang, Y.; Zheng, Z.; Zhou, R.; Ma, Z.; Zhang, Y.; Han, Y.; Wang, J.; et al. Active fire detection using a novel convolutional neural network based on Himawari-8 satellite images. Front. Environ. Sci. 2022, 10, 794028.

27. Collins, L.; McCarthy, G.; Mellor, A.; Newell, G.; Smith, L. Training data requirements for fire severity mapping using Landsat imagery and random forest. Remote Sens. Environ. 2020, 245, 111839.

28. Reis, C.E.P.; dos Santos, L.B.R.; Morelli, F.; Vijaykumar, N.L. Deep Learning-Based Active Fire Detection Using Satellite Imagery. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Olten, Switzerland, 11–13 December 2023; pp. 148–157.

29. Akbari Asanjan, A.; Memarzadeh, M.; Lott, P.A.; Rieffel, E.; Grabbe, S. Probabilistic wildfire segmentation using supervised deep generative model from satellite imagery. Remote Sens. 2023, 15, 2718.

30. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

31. Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland fire detection and monitoring using a drone-collected rgb/ir image dataset. IEEE Access 2022, 10, 121301–121317.

32. Mohapatra, A.; Trinh, T. Early wildfire detection technologies in practice—A review. Sustainability 2022, 14, 12270.

33. California Department of Forestry and Fire Protection (CAL FIRE). CAL FIRE Data Portal. 2025. Available online: https://data.ca.gov/group/fire (accessed on 7 March 2025).

34. Saah, D. Open Science in Wildfire Risk Management: Bridging the Gap with Innovations from Pyregence, Climate and Wildfire Institute, RiskFactor, Delos, and Planscape. In Proceedings of the AGU Fall Meeting Abstracts, San Francisco, CA, USA, 11–15 December 2023; Volume 2023, p. NH32A-03.

35. Xu, Y.; Berg, A.; Haglund, L. Sen2Fire: A Challenging Benchmark Dataset for Wildfire Detection using Sentinel Data. arXiv 2024, arXiv:2403.17884.

36. Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

37. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

38. Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 12–14 December 2016; pp. 234–244.

39. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

40. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

41. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

42. Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified perceptual parsing for scene understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 418–434.

43. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

44. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.

45. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

46. Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Statist. Surv. 2010, 4, 40–79.

47. Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. Ijcai 1995, 14, 1137–1145.

48. Manual, A.B. An introduction to Statistical Learning with Applications in R; Springer: New York, NY, USA, 2013.

49. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

50. Singh, H.; Ang, L.M.; Srivastava, S.K. Active wildfire detection via satellite imagery and machine learning: An empirical investigation of Australian wildfires. Nat. Hazards 2025, 121, 9777–9800.

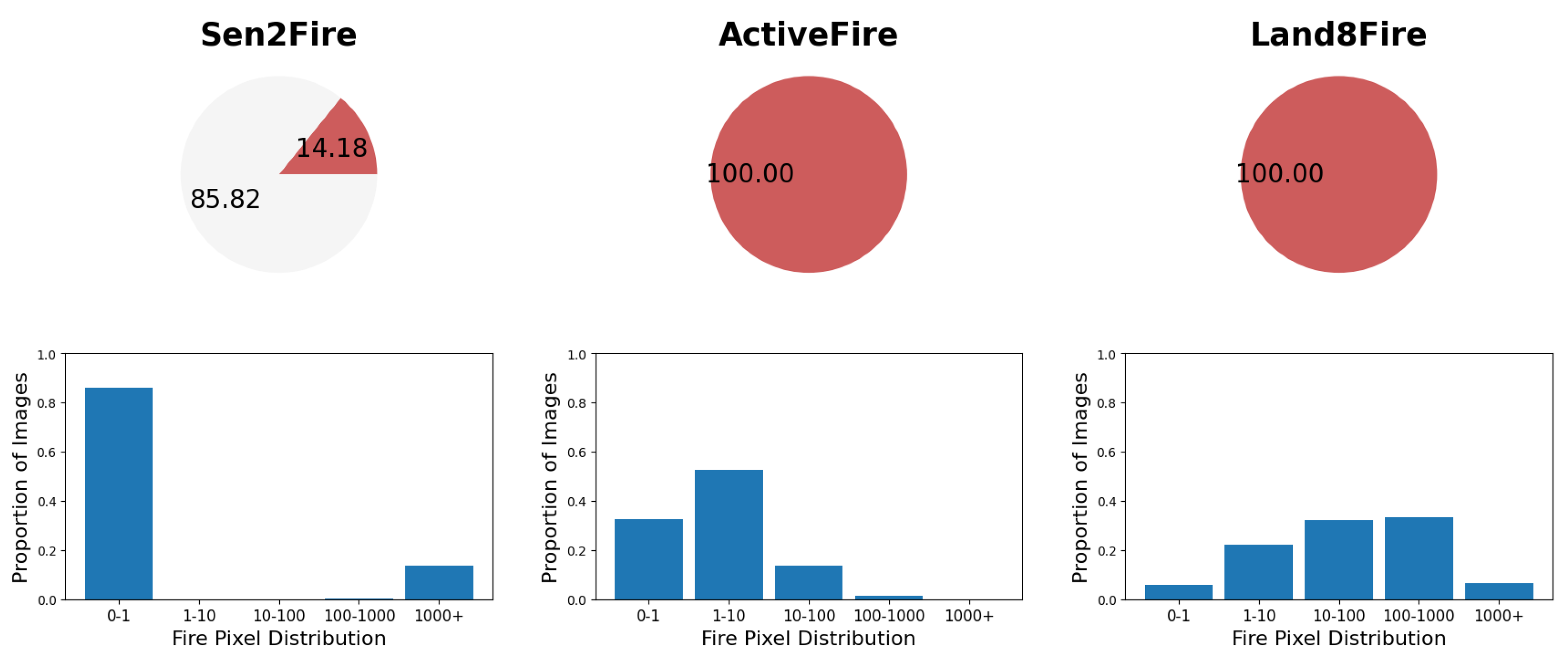

| Aerial Datasets | Dataset Size | Fire Pixel Distribution | Ground Truth Annotation | Data Reliability |
|---|---|---|---|---|
| Sen2Fire | 2466 | High imbalance | Software (MOD14A1 V6.1) | Low; depends on the existing software |
| ActiveFire | 150,000+ | Imbalance (long tail) | Automated (algorithm-based) | Low; depends on the existing algorithm |
| Land8Fire (ours) | 23,193 | Low imbalance | Manual annotation | High |

| Band Number | Description | Wavelength (μm) | Resolution |
|---|---|---|---|
| B1 | Coastal aerosol | 0.433–0.453 | 30 m |
| B2 | Blue | 0.450–0.515 | 30 m |
| B3 | Green | 0.525–0.600 | 30 m |
| B4 | Red | 0.630–0.680 | 30 m |
| B5 | Near Infrared (NIR) | 0.845–0.885 | 30 m |
| B6 | Shortwave Infrared 1 (SWIR1) | 1.560–1.660 | 30 m |
| B7 | Shortwave Infrared 2 (SWIR2) | 2.100–2.300 | 30 m |
| B9 | Cirrus | 1.360–1.390 | 30 m |
| B10 | Thermal Infrared 1 | 10.6–11.2 | 100 m |
| B11 | Thermal Infrared 2 | 11.50–12.51 | 100 m |

| Category | Method | Bands | F1-Score | Recall | Precision | mAccuracy | IoU |
|---|---|---|---|---|---|---|---|
| Threshold-based | Schroeder | {B1, B5, B6, B7} | 87.58 | 82.98 | 99.76 | 91.49 | 82.83 |
| Threshold-based | Kumar and Roy | {B5, B6, B7} | 70.75 | 91.96 | 61.08 | 95.89 | 57.24 |
| Threshold-based | Murphy | {B4, B5, B6, B7} | 74.25 | 98.62 | 62.44 | 99.11 | 61.45 |
| Deep learning-based | UNet | {B2, B6, B7} | 94.49 ± 1.42 | 93.28 ± 3.01 | 95.79 ± 1.11 | 96.62 ± 1.49 | 89.58 ± 2.53 |
| Deep learning-based | UPerNet | {B2, B6, B7} | 80.76 ± 3.80 | 74.42 ± 8.11 | 83.83 ± 5.89 | 87.17 ± 4.05 | 65.35 ± 8.91 |
| Deep learning-based | Mask2Former | {B2, B6, B7} | 80.27 ± 5.25 | 77.07 ± 6.01 | 83.90 ± 5.83 | 88.50 ± 3.00 | 67.29 ± 7.16 |
| Deep learning-based | SegFormer | {B2, B6, B7} | 80.26 ± 6.13 | 77.20 ± 7.95 | 83.82 ± 5.43 | 88.56 ± 3.96 | 67.36 ± 8.31 |
| Deep learning-based | DeepLabV3+ | {B2, B6, B7} | 78.96 ± 6.69 | 75.76 ± 7.99 | 82.54 ± 5.68 | 87.84 ± 3.98 | 65.62 ± 8.88 |
| Deep learning-based | FCN | {B2, B6, B7} | 64.99 ± 14.12 | 55.77 ± 16.13 | 79.54 ± 8.56 | 77.85 ± 8.06 | 49.38 ± 14.88 |
| Deep learning-based | PSPNet | {B2, B6, B7} | 64.77 ± 14.77 | 55.34 ± 16.49 | 80.38 ± 8.50 | 77.64 ± 8.24 | 49.15 ± 14.99 |

| Losses | Gamma | F1-Score | Recall | Precision | mAccuracy | IoU |
|---|---|---|---|---|---|---|
| Cross-Entropy | – | 95.63 | 95.13 | 97.71 | 90.23 | 86.17 |
| Focal | 1 | 90.77 | 85.4 | 97.51 | 92.70 | 83.38 |
| Focal | 2 | 92.62 | 89.73 | 95.86 | 94.85 | 86.28 |
| Focal | 4 | 89.91 | 85.09 | 95.51 | 92.53 | 81.72 |

| Bands | F1-Score | Recall | Precision | mAccuracy | IoU |
|---|---|---|---|---|---|
| {B5, B6, B7} | 96.99 | 97.24 | 96.73 | 98.61 | 94.15 |
| {B4, B5, B6, B7} | 96.39 | 95.71 | 97.39 | 97.85 | 93.31 |
| {B1, B2, B4, B5, B6, B7} | 96.20 | 94.40 | 98.08 | 97.19 | 92.68 |
| {B1, B2, …, B11} | 96.17 | 98.89 | 93.59 | 99.42 | 92.62 |
| {B2, B6, B7} | 96.00 | 96.53 | 95.48 | 98.25 | 92.31 |
| {B1, B5, B6, B7} | 95.16 | 96.5 | 93.86 | 98.23 | 90.77 |
| {B6, B7} | 94.98 | 93.52 | 96.49 | 96.75 | 90.44 |
| {B2, B3, B4} | 32.70 | 20.47 | 81.32 | 60.22 | 19.55 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tran, A.; Tran, M.; Marti, E.; Cothren, J.; Rainwater, C.; Eksioglu, S.; Le, N. Land8Fire: A Complete Study on Wildfire Segmentation Through Comprehensive Review, Human-Annotated Multispectral Dataset, and Extensive Benchmarking. Remote Sens. 2025, 17, 2776. https://doi.org/10.3390/rs17162776
