Deep Learning Small Water Body Mapping by Transfer Learning from Sentinel-2 to PlanetScope

Abstract

1. Introduction

2. Study Area, Data and Methods

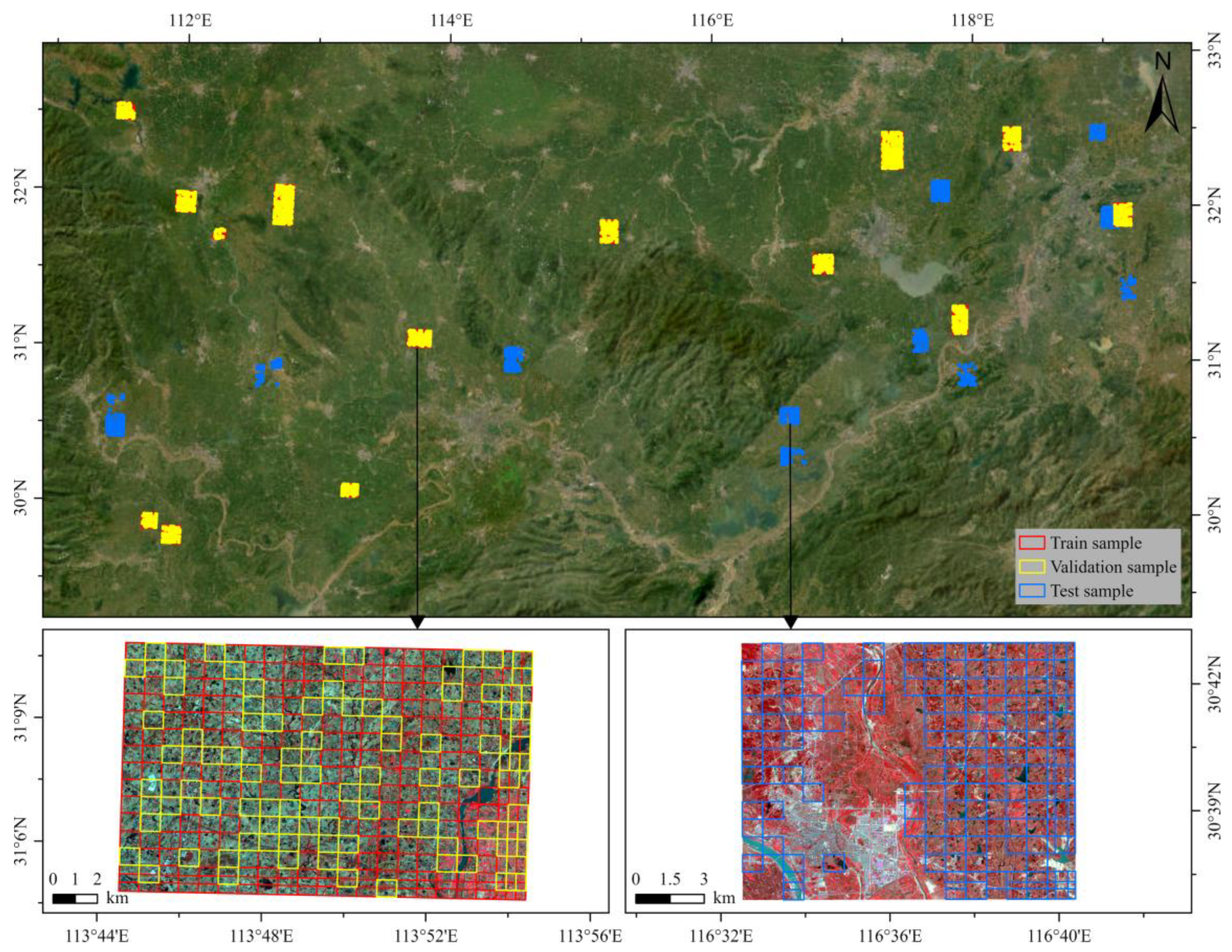

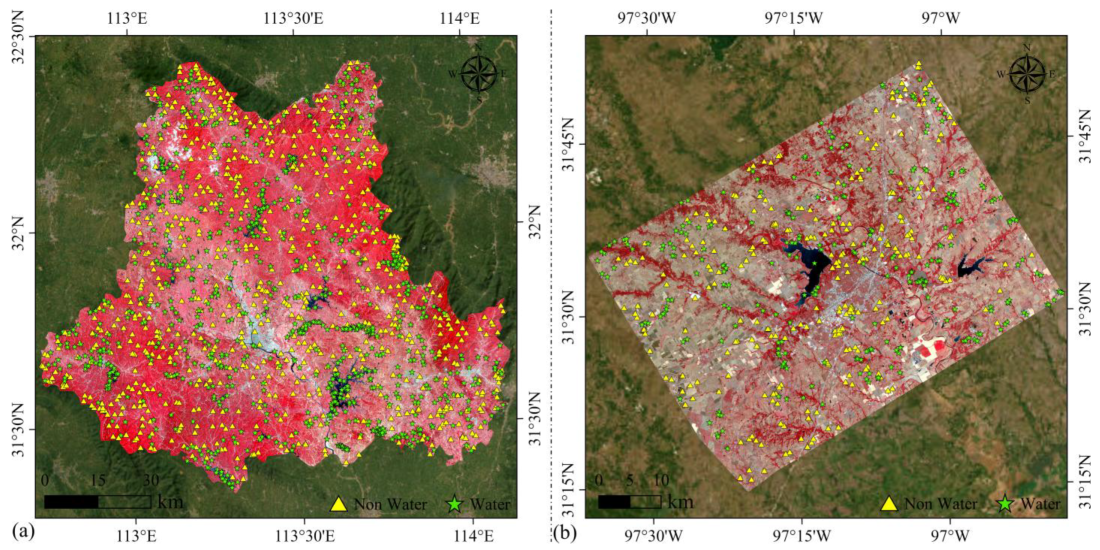

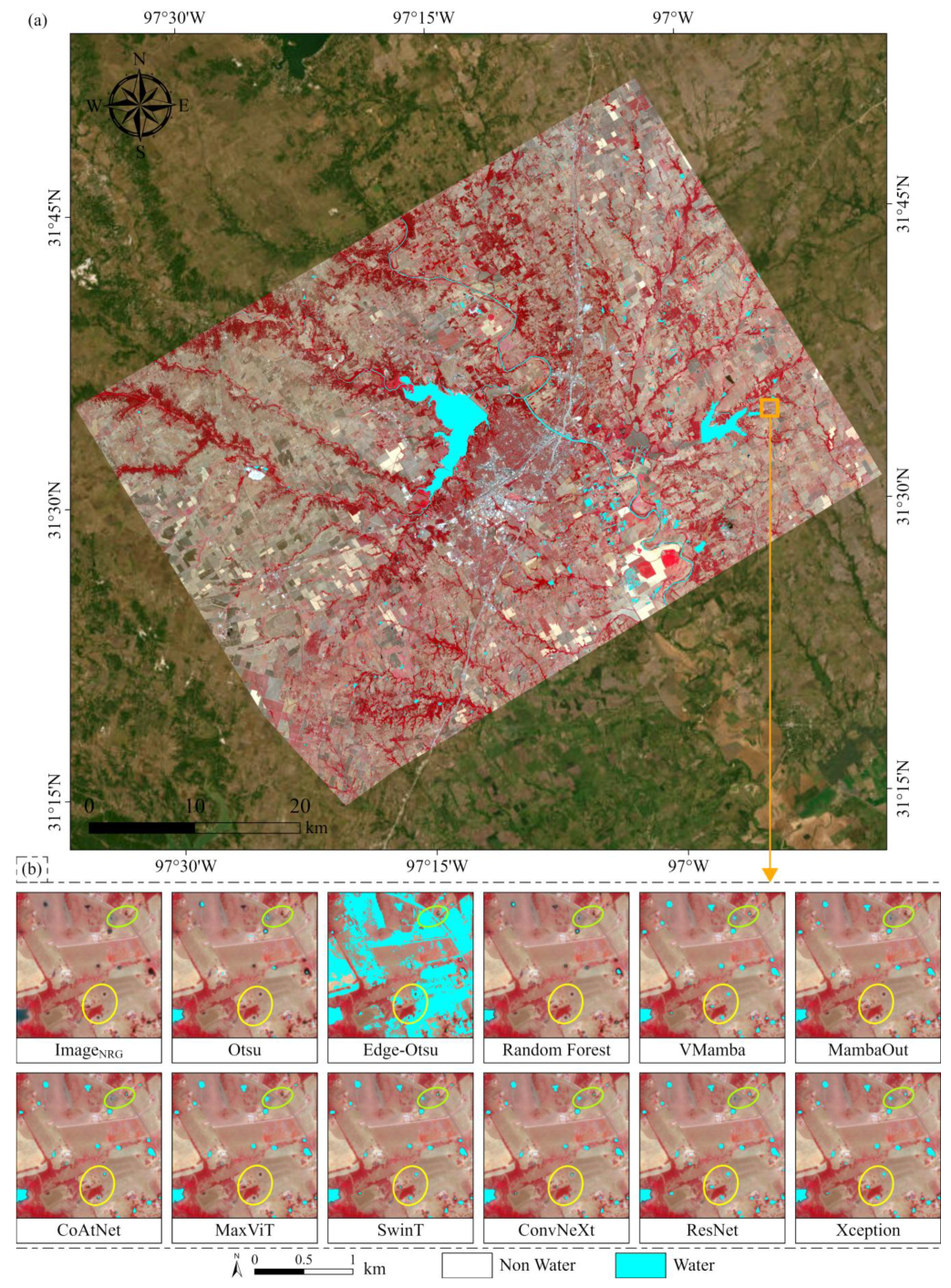

2.1. Study Area

2.2. Data Sources

2.2.1. Sentinel-2 Data

2.2.2. PlanetScope Data

2.3. Dataset Pre-Processing

2.4. Model Architecture and Training

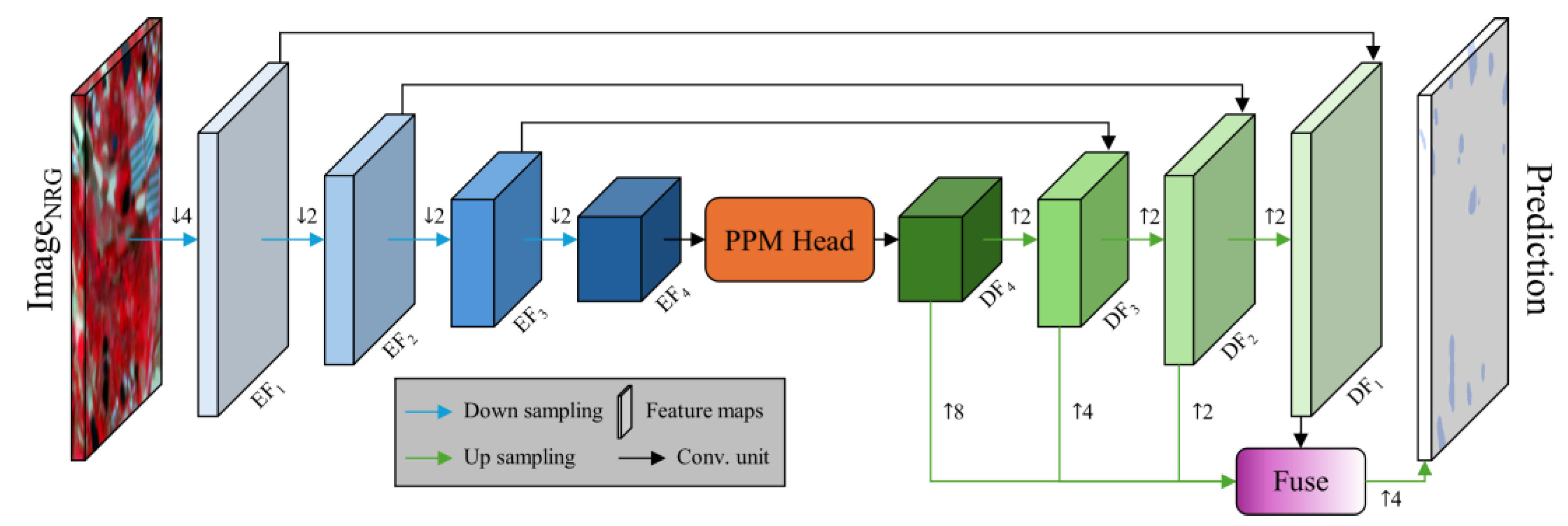

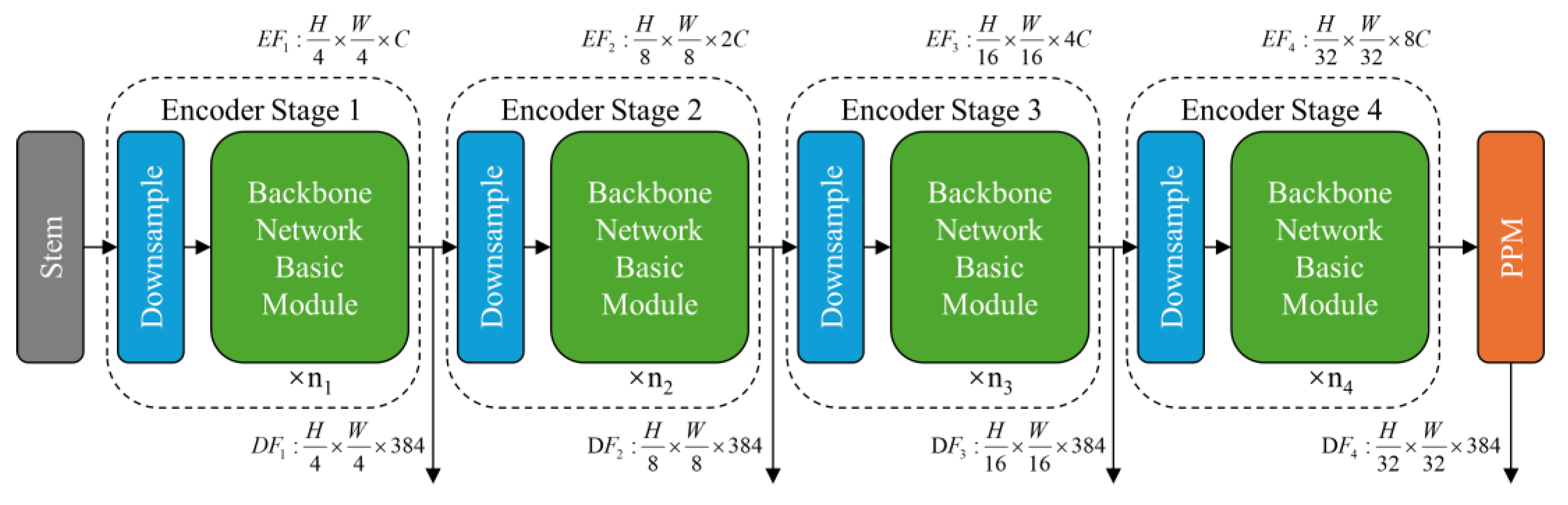

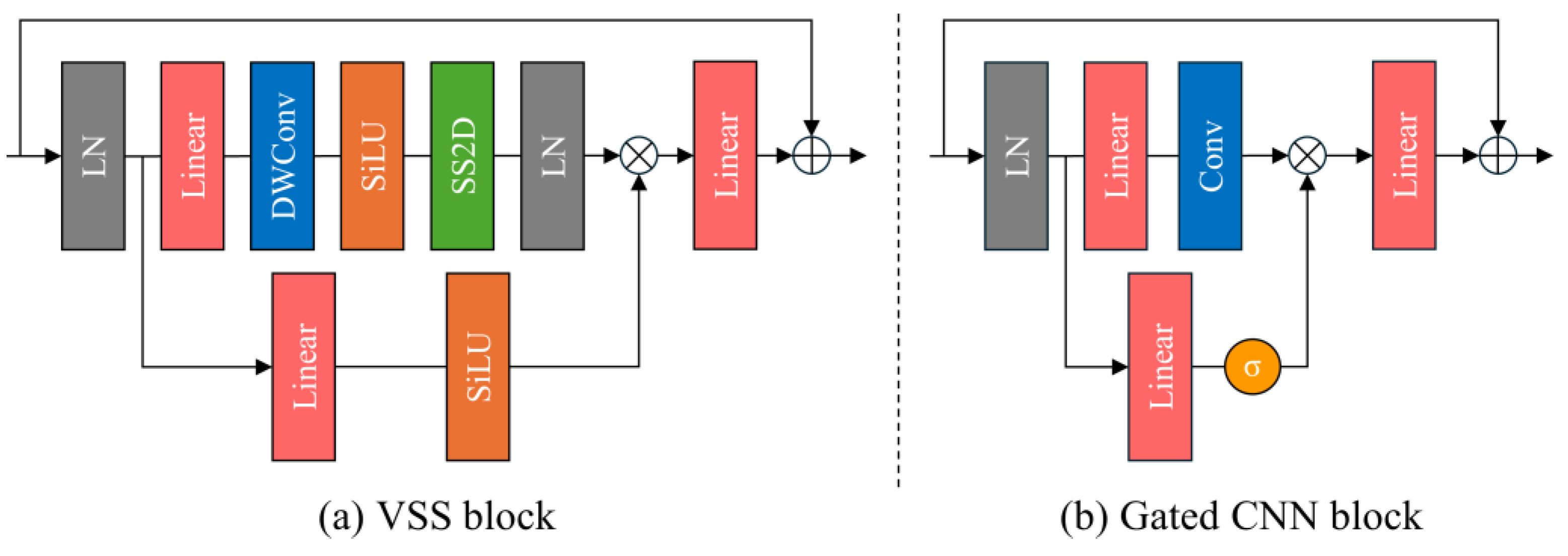

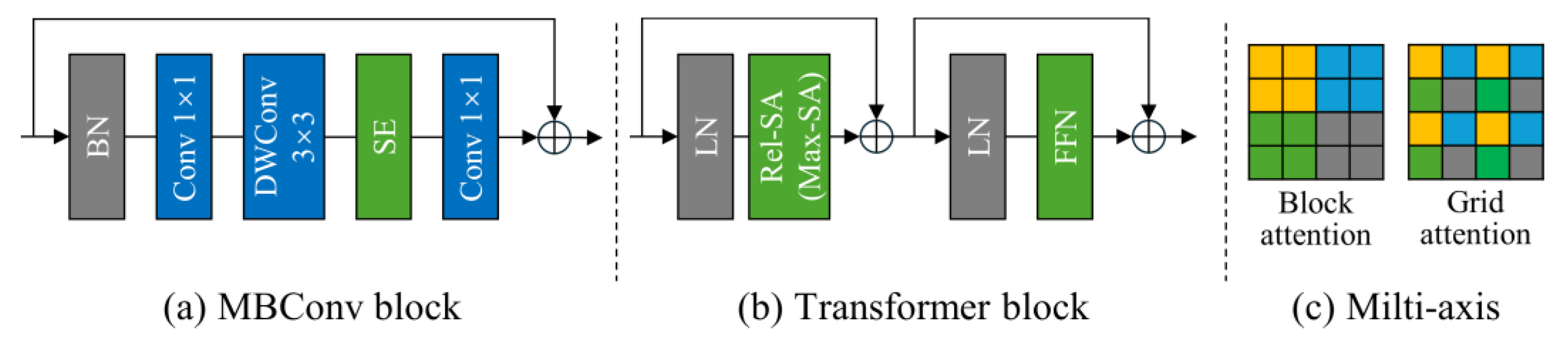

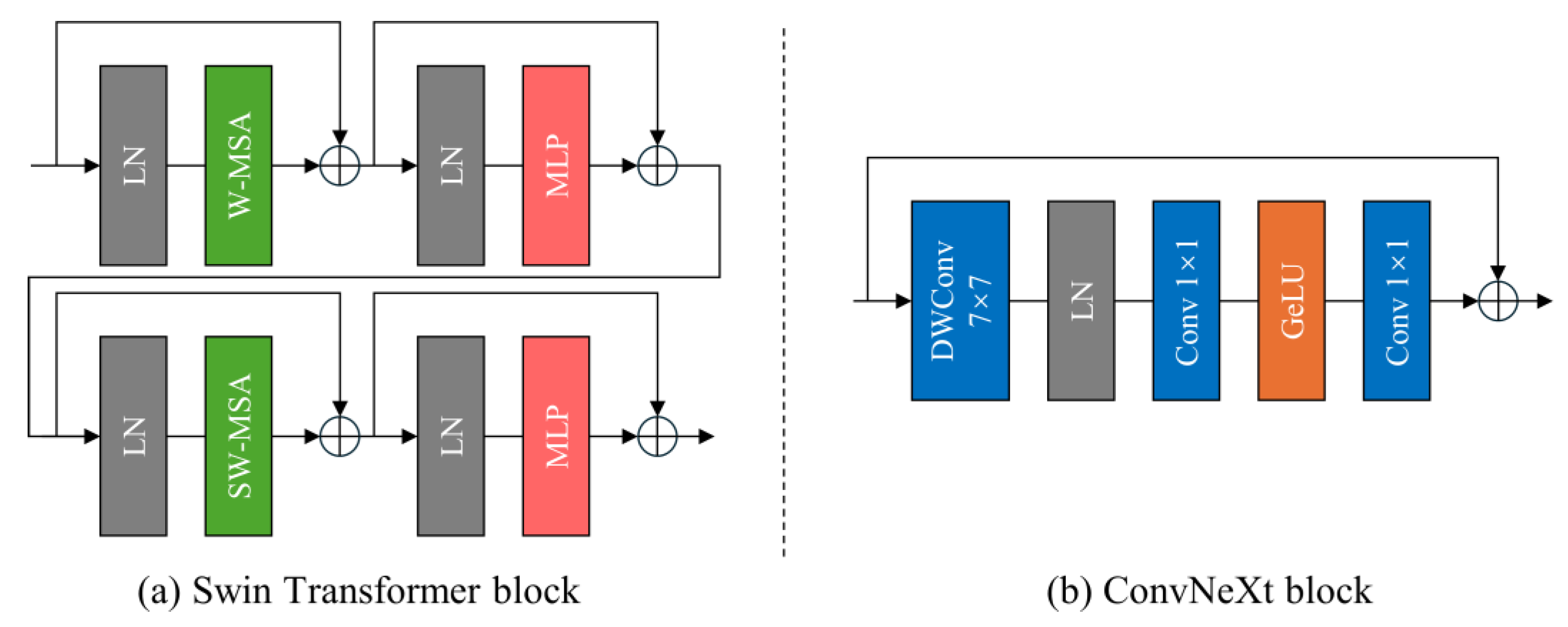

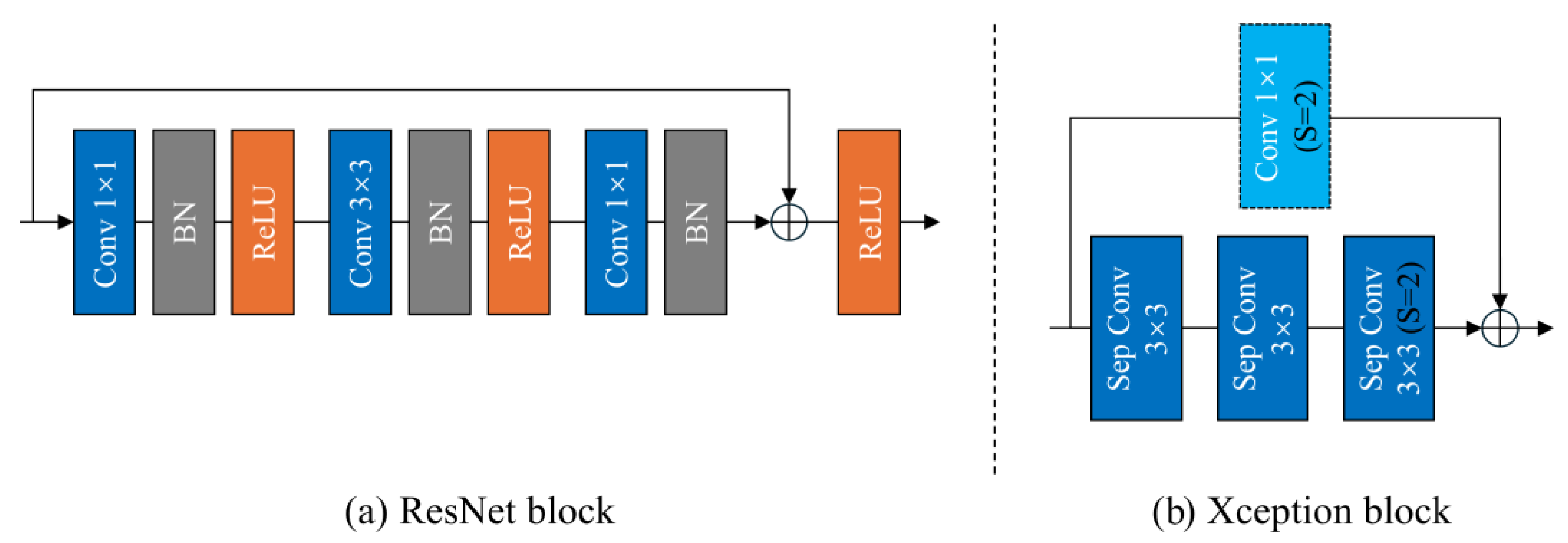

2.4.1. Deep Learning Network for Small Water Body Segmentation

2.4.2. Model Training Strategy and Comparison

2.5. Accuracy Assessment

3. Results

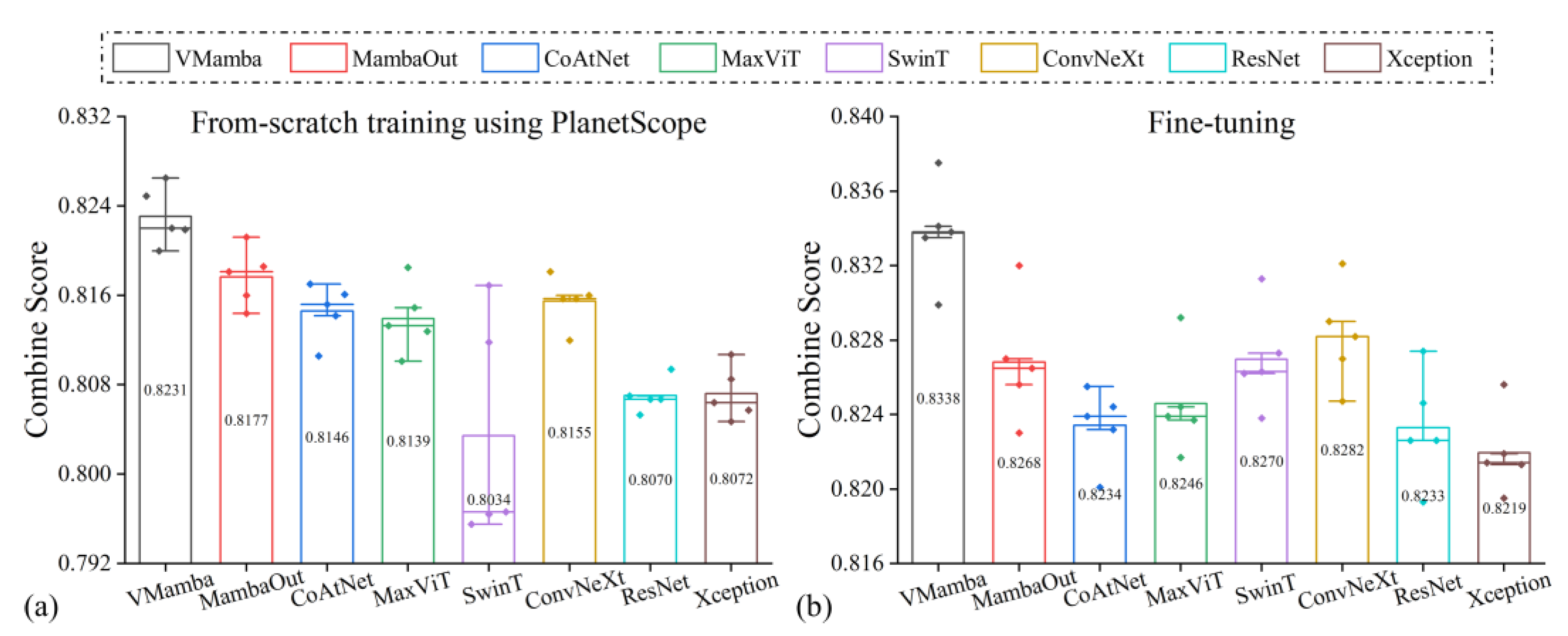

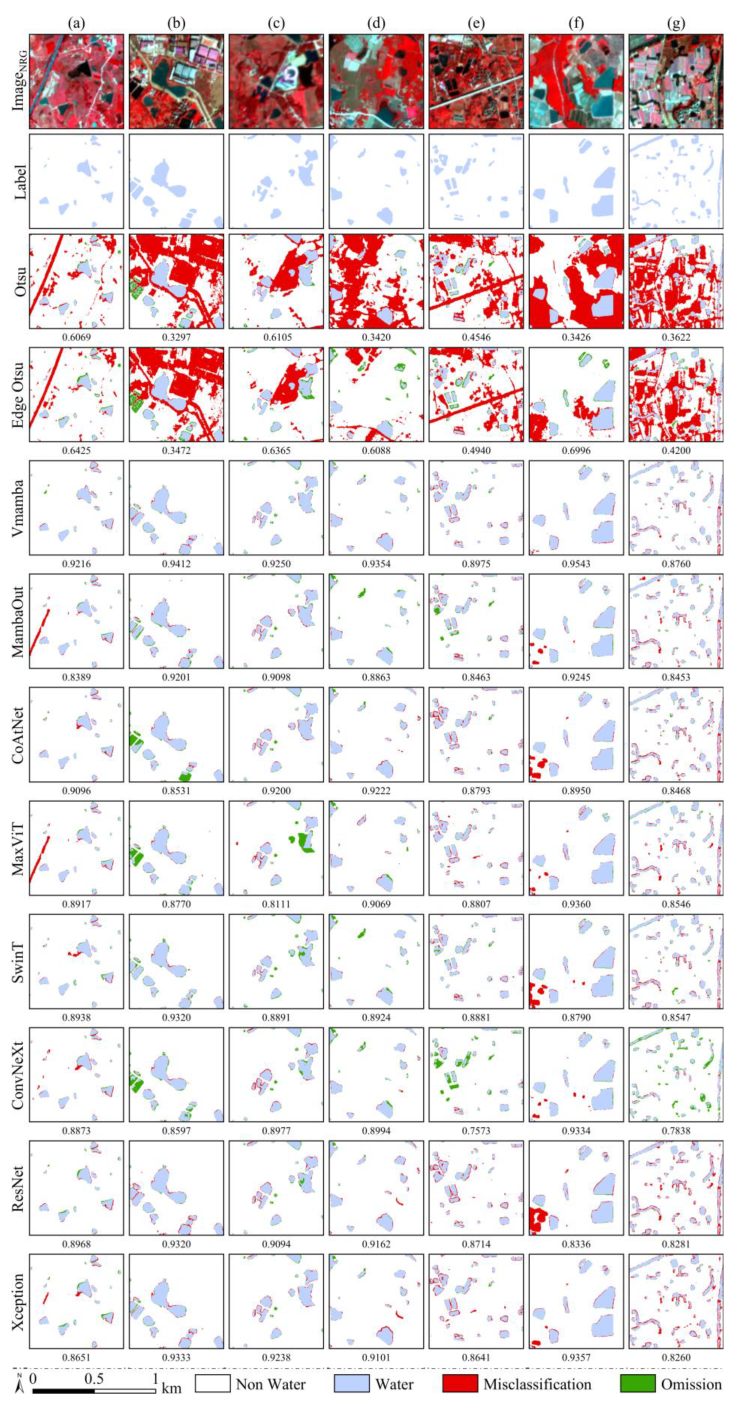

3.1. Five-Fold Cross-Validation of Fine-Tuning Strategies and From-Scratch Training Strategies Using PlanetScope

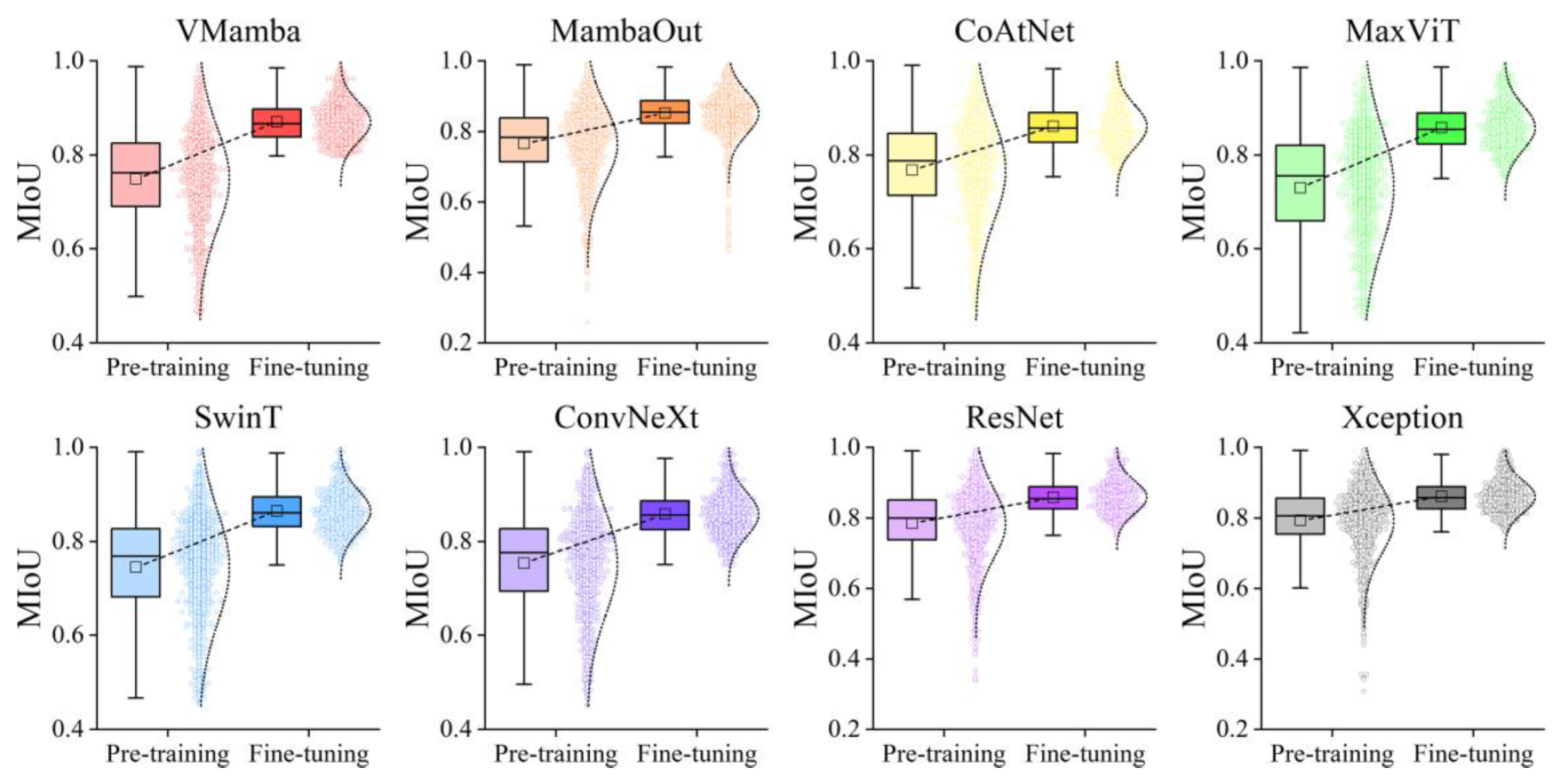

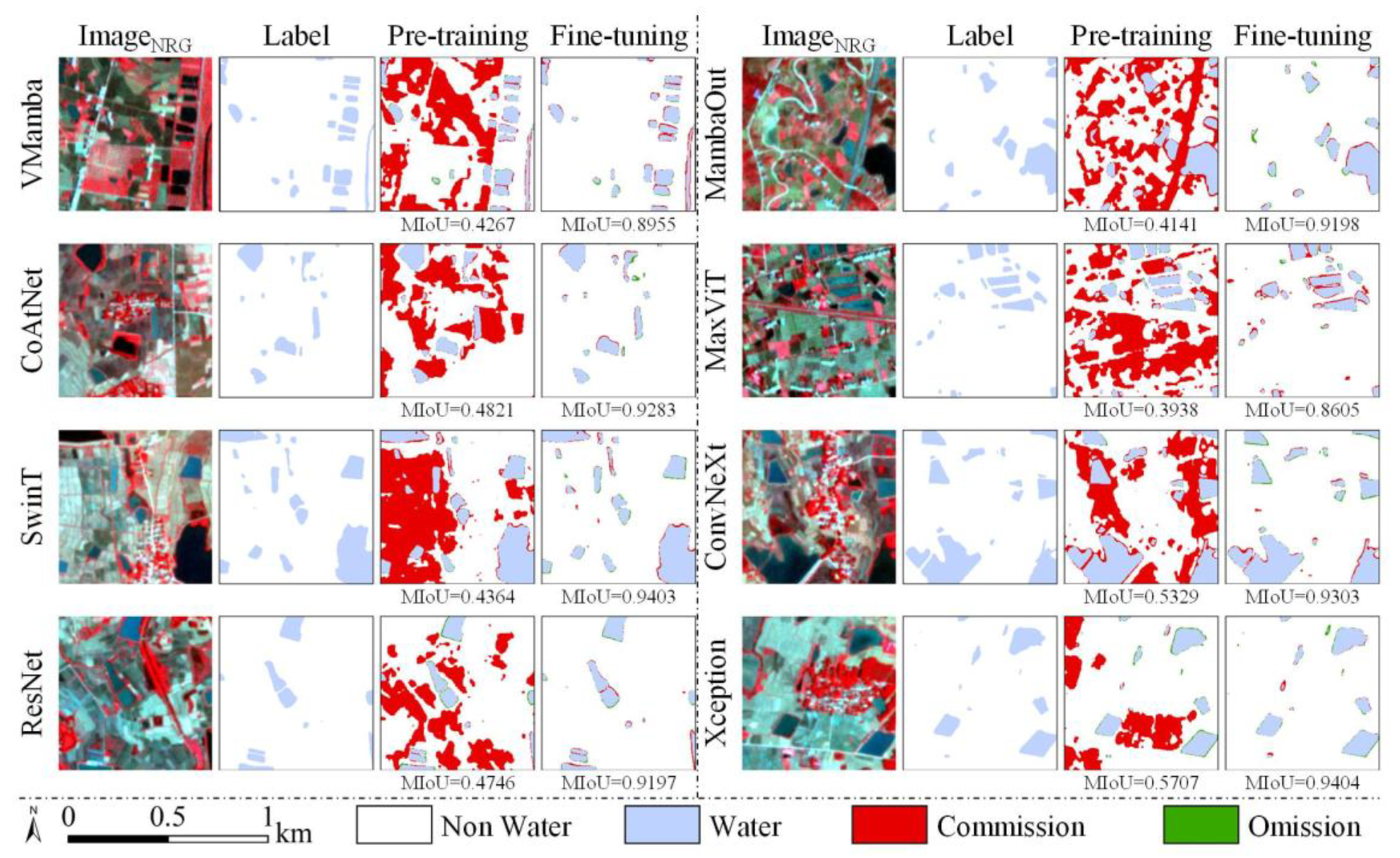

3.2. Comparison Between Pre-Training and Fine-Tuning

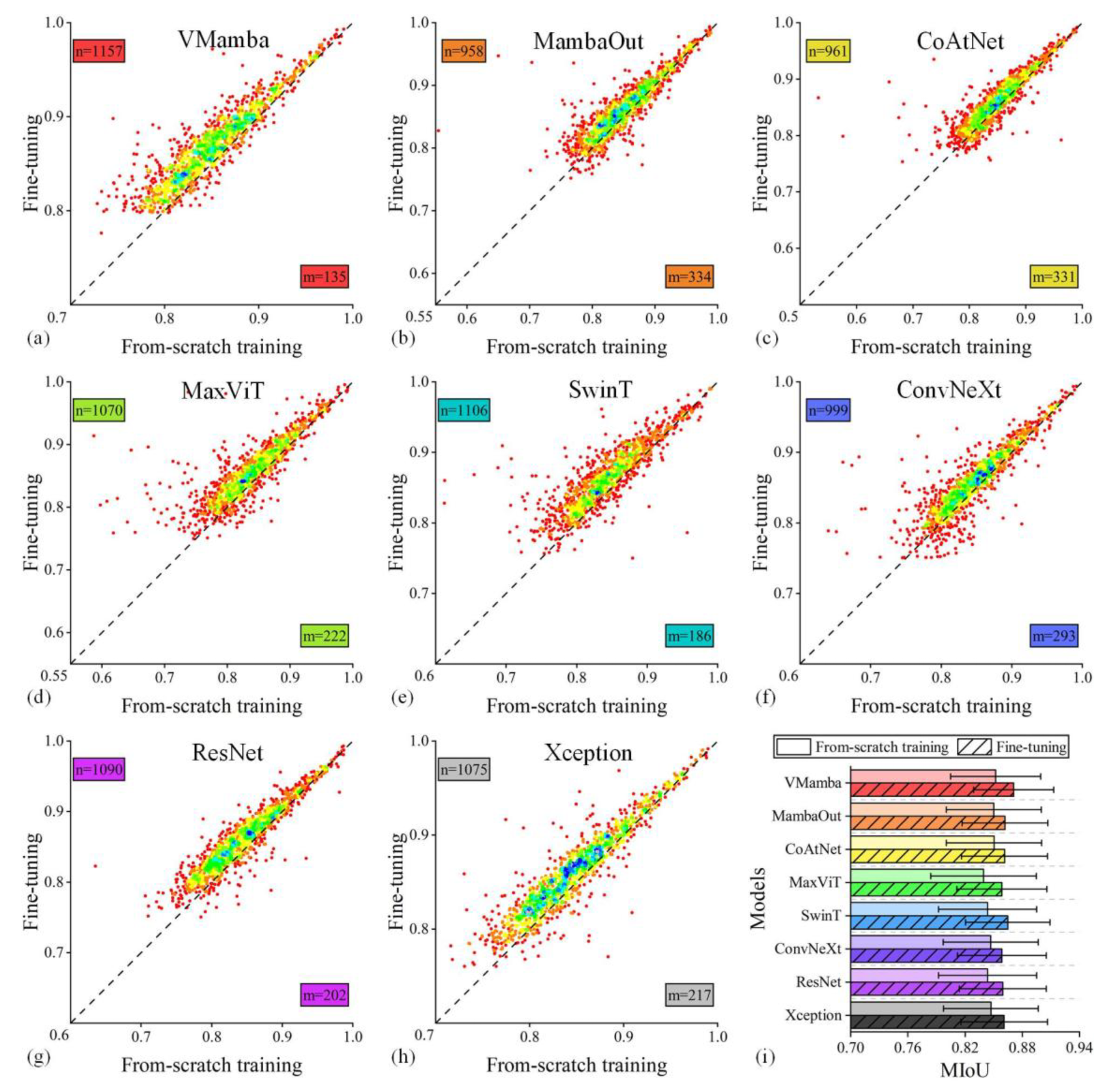

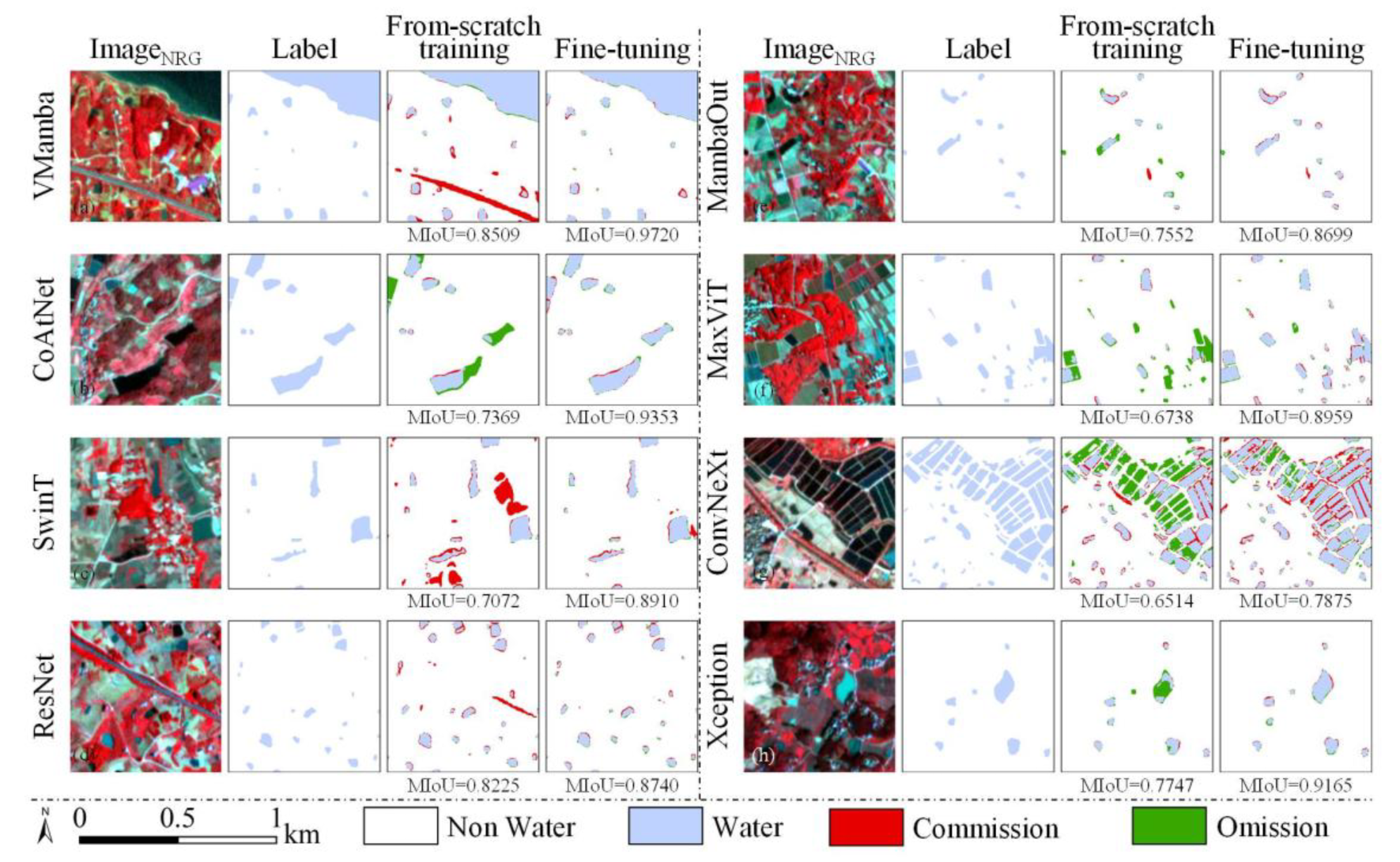

3.3. Comparison of Fine-Tuning with From-Scratch Training Using PlanetScope

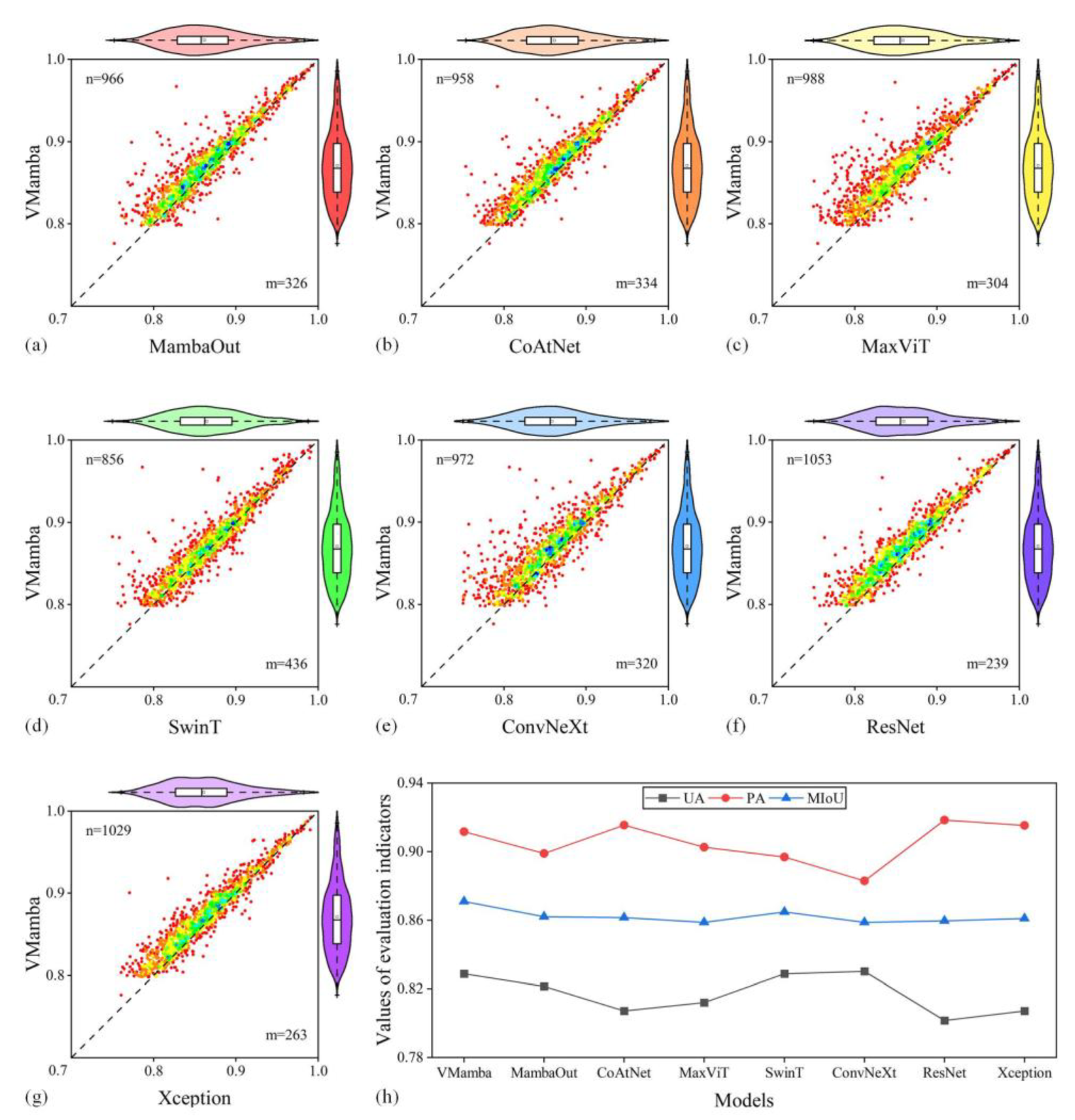

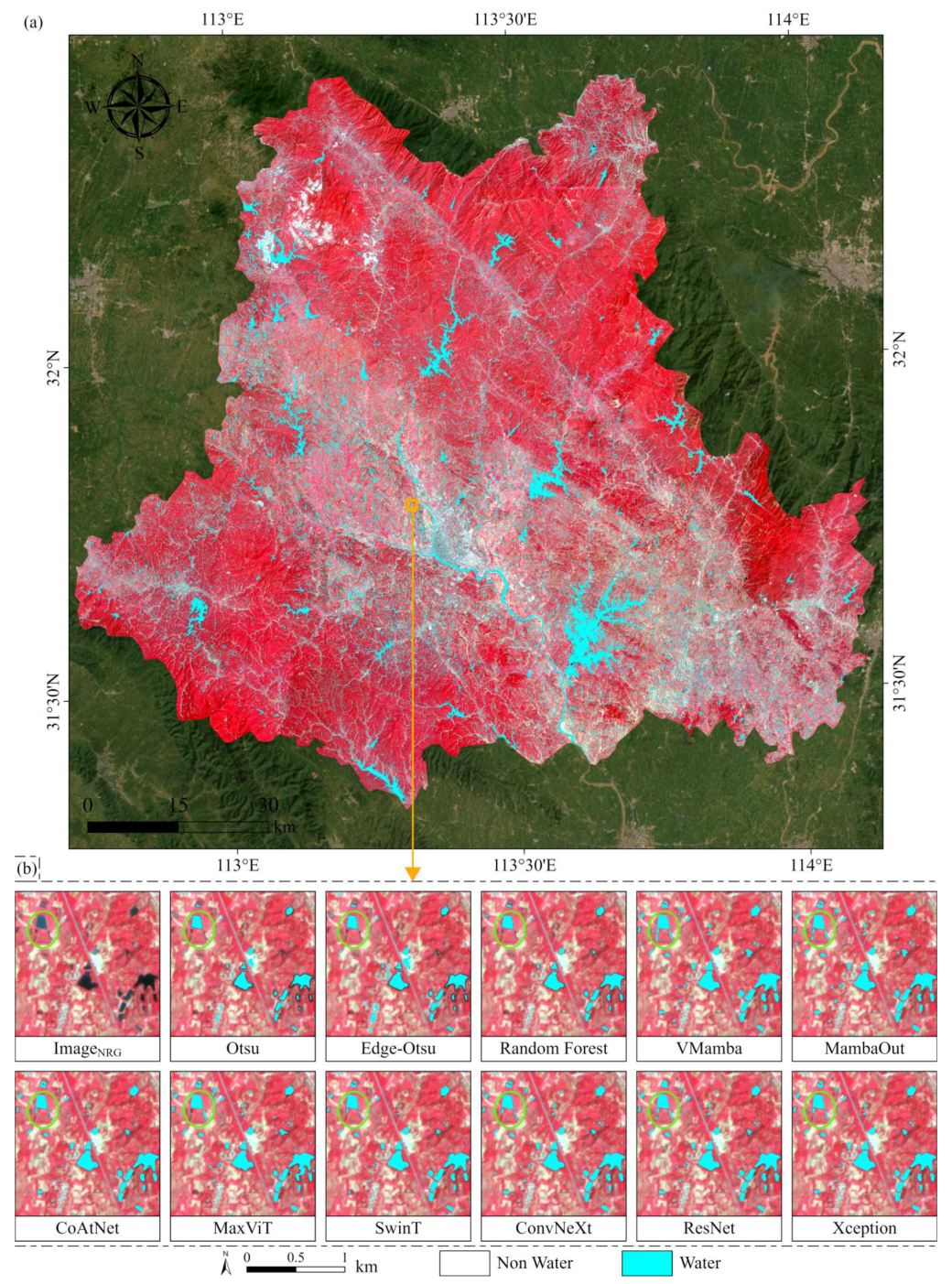

3.4. Validating the Generalization Capability of Transfer Learning Models Across Diverse Networks

4. Discussion

4.1. Impact of the Pre-Trained Data in Transfer Learning

4.2. The Impact of Model Hyperparameters

4.3. The Impact of Model Complexity

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| ID | Image Acquisition Date | Image Location (Upper Left Corner) | Image Size in Pixels (Height × Width) |
|---|---|---|---|
| 1 | 26 May 2017 | 117.68°E, 32.18°N | 3973 × 4804 |
| 2 | 17 July 2017 | 111.97°E, 29.86°N | 4018 × 4160 |
| 3 | 23 July 2017 | 111.96°E, 32.02°N | 4695 × 4367 |
| 4 | 2 January 2018 | 109.50°E, 23.08°N | 5073 × 3855 |
| 5 | 12 January 2018 | 119.07°E, 32.02°N | 5198 × 3958 |
| 6 | 28 March 2018 | 115.17°E, 31.91°N | 5207 × 3871 |
| 7 | 1 April 2018 | 116.21°E, 28.15°N | 1754 × 6995 |
| 8 | 8 April 2018 | 116.54°E, 30.72°N | 3733 × 4193 |
| 9 | 7 June 2018 | 112.62°E, 30.97°N | 6668 × 5668 |
| 10 | 28 June 2018 | 113.28°E, 30.17°N | 2864 × 3762 |
| 11 | 8 September 2018 | 114.47°E, 31.08°N | 5667 × 4054 |
| 12 | 14 March 2019 | 111.52°E, 30.69°N | 4002 × 3668 |
| 13 | 6 April 2019 | 111.48°E, 32.57°N | 3668 × 4001 |
| 14 | 24 August 2019 | 111.81°E, 29.94°N | 3335 × 3334 |
| 15 | 20 March 2020 | 112.25°E, 31.79°N | 2431 × 2611 |
| 16 | 26 April 2020 | 111.51°E, 30.56°N | 4961 × 4146 |
| 17 | 20 May 2020 | 119.11°E, 31.55°N | 5423 × 3196 |
| 18 | 11 October 2020 | 116.79°E, 31.71°N | 4257 × 4471 |
| 19 | 24 October 2020 | 118.90°E, 32.53°N | 3423 × 3220 |
| 20 | 10 November 2020 | 117.84°E, 31.37°N | 6616 × 3300 |
| 21 | 12 November 2020 | 117.31°E, 32.50°N | 8691 × 4745 |
| 22 | 30 November 2020 | 117.54°E, 31.22°N | 5145 × 3255 |
| 23 | 7 May 2021 | 117.87°E, 31.00°N | 5223 × 4104 |
| 24 | 26 September 2021 | 118.23°E, 32.52°N | 5251 × 3879 |
| 25 | 26 May 2017 | 118.97°E, 32.00°N | 4862 × 3882 |
| 26 | 17 July 2017 | 113.75°E, 31.18°N | 3723 × 5249 |

Appendix B

| Layer Name | Output Size | VMamba | MambaOut |
|---|---|---|---|
| Stem | 64 × 64 | | |
| Stage1 | 64 × 64 | | |
| Stage2 | 32 × 32 | | |
| Stage3 | 16 × 16 | | |
| Stage4 | 8 × 8 | | |

| Layer Name | Output Size | CoAtNet | MaxViT |
|---|---|---|---|
| Stem | 128 × 128 | | |
| Stage1 | 64 × 64 | | |
| Stage2 | 32 × 32 | | |
| Stage3 | 16 × 16 | | |
| Stage4 | 8 × 8 | | |

| Layer Name | Output Size | Swin Transformer | ConvNeXt |
|---|---|---|---|
| Stem | 64 × 64 | | |
| Stage1 | 64 × 64 | | |
| Stage2 | 32 × 32 | | |
| Stage3 | 16 × 16 | | |
| Stage4 | 8 × 8 | | |

| Layer Name | Output Size | ResNet | Xception |
|---|---|---|---|
| Stem | 128 × 128 | | |
| Stage1 | 64 × 64 | | |
| Stage2 | 32 × 32 | | |
| Stage3 | 16 × 16 | | |
| Stage4 | 8 × 8 | | |

| PlanetScope Band | Band Name | Center Wavelength (FWHM), nm | Interoperable with Sentinel-2 |
|---|---|---|---|
| 1 | Coastal Blue | 443 (20) | Yes, with Sentinel-2 band 1 |
| 2 | Blue | 490 (50) | Yes, with Sentinel-2 band 2 |
| 3 | Green I | 531 (36) | No equivalent in Sentinel-2 |
| 4 | Green | 565 (36) | Yes, with Sentinel-2 band 3 |
| 5 | Yellow | 610 (20) | No equivalent in Sentinel-2 |
| 6 | Red | 665 (31) | Yes, with Sentinel-2 band 4 |
| 7 | Red Edge | 705 (15) | Yes, with Sentinel-2 band 5 |
| 8 | NIR | 865 (40) | Yes, with Sentinel-2 band 8a |
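
The band correspondence in the table above can be written down as a small lookup, which is handy when aligning PlanetScope channels with Sentinel-2 channels before transfer learning. The sketch below only transcribes the table; the names `PS_TO_S2`, `interoperable_bands`, and `select_channels` and the `B1`/`B8A` labels are illustrative assumptions, and whether the models actually used only the six interoperable bands is not stated in this section.

```python
# PlanetScope band number -> (band name, Sentinel-2 counterpart or None).
# Values are transcribed from the band table; the "B..." labels are an
# assumed naming convention for the Sentinel-2 bands.
PS_TO_S2 = {
    1: ("Coastal Blue", "B1"),
    2: ("Blue", "B2"),
    3: ("Green I", None),
    4: ("Green", "B3"),
    5: ("Yellow", None),
    6: ("Red", "B4"),
    7: ("Red Edge", "B5"),
    8: ("NIR", "B8A"),
}


def interoperable_bands(mapping=PS_TO_S2):
    """Return (planetscope_band, sentinel2_band) pairs that have a counterpart."""
    return [(ps, s2) for ps, (_, s2) in sorted(mapping.items()) if s2 is not None]


def select_channels(pixel, mapping=PS_TO_S2):
    """Keep only the interoperable channels of an 8-band PlanetScope pixel.

    `pixel` is a sequence of 8 values ordered by PlanetScope band number.
    """
    return [pixel[ps - 1] for ps, _ in interoperable_bands(mapping)]


if __name__ == "__main__":
    print(interoperable_bands())
    print(select_channels([10, 20, 30, 40, 50, 60, 70, 80]))
```
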

| Name of Strategy | Pre-Training | From-Scratch Training | Fine-Tuning |
|---|---|---|---|
| Random seed | 42 | | |
| Batch size | 16 | | |
| Minimum epochs | 30 | 50 | |
| Maximum epochs | 60 | 100 | |
| Early stop epochs | 10 | | |
| Learning rate scheduler | LinearLR + CosineAnnealingLR | | |
| Warmup epochs | 10 | | |
| Maximum learning rate | 1 × 10⁻³ | 1 × 10⁻⁴ (backbone: 1 × 10⁻⁵) | |
| Minimum learning rate | 1 × 10⁻⁴ | 1 × 10⁻⁵ (backbone: 1 × 10⁻⁶) | |
| Optimizer | AdamW | | |
| Weight decay | 1 × 10⁻² | 1 × 10⁻³ | |
| Betas | (0.9, 0.999) | | |
| Loss function | 0.5 × Focal Weighted Cross Entropy Loss + 0.5 × Focal Dice Loss ¹ | | |
| Label smoothing | 0.05 | | |
| Evaluation metric (combined score) | 0.5 × Mean Intersection over Union + 0.3 × Generalized Dice Score + 0.15 × Kappa + 0.05 × F1 score | | |
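
The combined evaluation score in the last row of the table is a fixed weighted sum of four metrics. A minimal sketch of that arithmetic follows; the function and variable names are hypothetical, and the four inputs are assumed to be already computed on the same validation set and scaled to the range 0 to 1.

```python
# Weights taken from the "combined score" row of the hyperparameter table.
WEIGHTS = {"miou": 0.5, "gds": 0.3, "kappa": 0.15, "f1": 0.05}


def combined_score(miou, gds, kappa, f1):
    """Weighted sum: 0.5*MIoU + 0.3*Generalized Dice + 0.15*Kappa + 0.05*F1."""
    values = {"miou": miou, "gds": gds, "kappa": kappa, "f1": f1}
    return sum(WEIGHTS[name] * values[name] for name in WEIGHTS)


if __name__ == "__main__":
    # Made-up inputs, used only to show the arithmetic.
    print(combined_score(miou=0.8, gds=0.9, kappa=0.7, f1=0.6))
```

Because the weights sum to 1, a model that scores 1.0 on every metric gets a combined score of 1.0, and MIoU alone accounts for half of the total.
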

| Strategy | ID | VMamba | MambaOut | CoAtNet | MaxViT | SwinT | ConvNeXt | ResNet | Xception |
|---|---|---|---|---|---|---|---|---|---|
| From-scratch training using PlanetScope | 1 | 0.8220 | 0.8160 | 0.8152 | 0.8149 | 0.7964 | 0.8157 | 0.8067 | 0.8085 |
| | 2 | 0.8200 | 0.8144 | 0.8170 | 0.8101 | 0.8118 | 0.8120 | 0.8053 | 0.8047 |
| | 3 | 0.8265 | 0.8181 | 0.8161 | 0.8128 | 0.7955 | 0.8157 | 0.8067 | 0.8064 |
| | 4 | 0.8219 | 0.8186 | 0.8142 | 0.8133 | 0.7966 | 0.8160 | 0.8070 | 0.8057 |
| | 5 | 0.8249 | 0.8212 | 0.8106 | 0.8185 | 0.8169 | 0.8181 | 0.8094 | 0.8107 |
| Fine-tuning | 1 | 0.8338 | 0.8265 | 0.8232 | 0.8237 | 0.8263 | 0.8270 | 0.8226 | 0.8219 |
| | 2 | 0.8299 | 0.8230 | 0.8201 | 0.8217 | 0.8238 | 0.8247 | 0.8193 | 0.8195 |
| | 3 | 0.8335 | 0.8256 | 0.8239 | 0.8244 | 0.8262 | 0.8282 | 0.8226 | 0.8214 |
| | 4 | 0.8341 | 0.8270 | 0.8244 | 0.8239 | 0.8273 | 0.8290 | 0.8246 | 0.8213 |
| | 5 | 0.8375 | 0.8320 | 0.8255 | 0.8292 | 0.8313 | 0.8321 | 0.8274 | 0.8256 |
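
Per-fold scores like those above are usually summarized as a mean and standard deviation per strategy. The sketch below does this for the VMamba column only, using the five values transcribed from the table; the helper names are hypothetical, and the section does not say which metric the fold scores represent.

```python
from statistics import mean, stdev

# VMamba column of the five-fold table (folds 1 to 5).
from_scratch = [0.8220, 0.8200, 0.8265, 0.8219, 0.8249]
fine_tuned = [0.8338, 0.8299, 0.8335, 0.8341, 0.8375]


def summarize(scores):
    """Return (mean, sample standard deviation) of the fold scores."""
    return mean(scores), stdev(scores)


def mean_gain(baseline, candidate):
    """Difference between the mean fold score of two strategies."""
    return mean(candidate) - mean(baseline)


if __name__ == "__main__":
    print("from scratch:", summarize(from_scratch))
    print("fine-tuned:  ", summarize(fine_tuned))
    print("mean gain:   ", mean_gain(from_scratch, fine_tuned))
```

For this column, fine-tuning scores higher than from-scratch training in every fold, and the mean gain is roughly 0.011.
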

| Strategy | Backbone Network | MIoU | OA | F1 | PA | UA |
|---|---|---|---|---|---|---|
| Pre-training | VMamba | 0.7498 | 0.9405 | 0.7049 | 0.8497 | 0.6473 |
| | MambaOut | 0.7624 | 0.9415 | 0.7235 | 0.8257 | 0.7037 |
| | CoAtNet | 0.7687 | 0.9470 | 0.7302 | 0.8156 | 0.7086 |
| | MaxViT | 0.7310 | 0.9276 | 0.6811 | 0.8344 | 0.6326 |
| | SwinT | 0.7459 | 0.9353 | 0.7009 | 0.8401 | 0.6556 |
| | ConvNeXt | 0.7546 | 0.9414 | 0.7116 | 0.8315 | 0.6781 |
| | ResNet | 0.7848 | 0.9528 | 0.7533 | 0.8490 | 0.7070 |
| | Xception | 0.7938 | 0.9555 | 0.7668 | 0.8457 | 0.7290 |
| Fine-tuning | VMamba | 0.8710 | 0.9781 | 0.8661 | 0.9117 | 0.8288 |
| | MambaOut | 0.8619 | 0.9764 | 0.8551 | 0.8989 | 0.8214 |
| | CoAtNet | 0.8615 | 0.9761 | 0.8547 | 0.9154 | 0.8071 |
| | MaxViT | 0.8587 | 0.9756 | 0.8511 | 0.9026 | 0.8120 |
| | SwinT | 0.8648 | 0.9769 | 0.8587 | 0.8968 | 0.8287 |
| | ConvNeXt | 0.8587 | 0.9762 | 0.8507 | 0.8829 | 0.8301 |
| | ResNet | 0.8595 | 0.9754 | 0.8527 | 0.9184 | 0.8017 |
| | Xception | 0.8610 | 0.9758 | 0.8544 | 0.9153 | 0.8072 |
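
The five indicators in this table can all be derived from a binary water/non-water confusion matrix. The sketch below uses the conventional remote-sensing definitions and assumes that OA is overall accuracy, PA is producer's accuracy (recall of the water class), UA is user's accuracy (precision of the water class), and MIoU is the mean of the water and non-water IoU. The section does not spell these definitions out, so treat them as assumptions; the function name and the counts in the usage example are made up.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute OA, PA, UA, F1 and MIoU from pixel counts.

    tp: water pixels predicted as water
    fp: non-water pixels predicted as water
    fn: water pixels predicted as non-water
    tn: non-water pixels predicted as non-water
    Assumes every denominator is non-zero.
    """
    total = tp + fp + fn + tn
    oa = (tp + tn) / total                # overall accuracy
    pa = tp / (tp + fn)                   # producer's accuracy (recall)
    ua = tp / (tp + fp)                   # user's accuracy (precision)
    f1 = 2 * pa * ua / (pa + ua)          # harmonic mean of PA and UA
    iou_water = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)
    miou = (iou_water + iou_background) / 2
    return {"MIoU": miou, "OA": oa, "F1": f1, "PA": pa, "UA": ua}


if __name__ == "__main__":
    # Hypothetical counts for a 1000-pixel tile.
    for name, value in binary_metrics(tp=80, fp=20, fn=10, tn=890).items():
        print(f"{name}: {value:.4f}")
```

Scores aggregated over many tiles need not satisfy the F1 identity exactly when each metric is averaged separately, so values recomputed this way may differ slightly from tabulated ones.
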

| Strategy | Backbone Network | MIoU | OA | F1 | PA | UA |
|---|---|---|---|---|---|---|
| From-scratch training using PlanetScope | VMamba | 0.8520 | 0.9737 | 0.8437 | 0.9230 | 0.7832 |
| | MambaOut | 0.8501 | 0.9736 | 0.8408 | 0.9157 | 0.7839 |
| | CoAtNet | 0.8504 | 0.9737 | 0.8409 | 0.9067 | 0.7922 |
| | MaxViT | 0.8394 | 0.9709 | 0.8274 | 0.9084 | 0.7710 |
| | SwinT | 0.8436 | 0.9722 | 0.8326 | 0.9309 | 0.7603 |
| | ConvNeXt | 0.8469 | 0.9730 | 0.8370 | 0.9019 | 0.7890 |
| | ResNet | 0.8436 | 0.9715 | 0.8333 | 0.9360 | 0.7587 |
| | Xception | 0.8470 | 0.9727 | 0.8374 | 0.9246 | 0.7728 |

| Method | OA | F1 | PA | UA |
|---|---|---|---|---|
| Otsu | 0.7577 | 0.7061 | 0.5581 | 0.9608 |
| Edge-Otsu | 0.7901 | 0.7576 | 0.6287 | 0.9528 |
| Random Forest | 0.8501 | 0.8336 | 0.7198 | 0.9901 |
| VMamba | 0.9825 | 0.9832 | 0.9820 | 0.9844 |
| MambaOut | 0.9325 | 0.9349 | 0.9293 | 0.9406 |
| CoAtNet | 0.9282 | 0.9297 | 0.9114 | 0.9489 |
| MaxViT | 0.9369 | 0.9364 | 0.8910 | 0.9867 |
| SwinT | 0.9338 | 0.9337 | 0.8934 | 0.9777 |
| ConvNeXt | 0.9357 | 0.9377 | 0.9281 | 0.9474 |
| ResNet | 0.9544 | 0.9556 | 0.9413 | 0.9704 |
| Xception | 0.9563 | 0.9568 | 0.9281 | 0.9873 |
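
For context on the Otsu baseline in this table, the sketch below shows plain Otsu thresholding: it picks the threshold that maximizes the between-class variance of a histogram. This is a generic illustration, not the authors' implementation. The input is assumed to be a flat list of per-pixel values of some water index (the index used is not named in this section), the Edge-Otsu variant is not shown, and `otsu_threshold` is a hypothetical name.

```python
def otsu_threshold(values, bins=256):
    """Return the threshold that maximizes between-class variance.

    `values` is a flat sequence of numbers; pixels with a value above the
    returned threshold would be labelled as the high-valued class.
    """
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant input: nothing to split
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0 = 0          # pixel count of the low class so far
    sum0 = 0.0      # sum of bin indices of the low class so far
    best_var, best_bin = -1.0, 0
    for i in range(bins - 1):
        w0 += hist[i]
        sum0 += i * hist[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_var, best_bin = between, i
    # Upper edge of the best bin, mapped back to the value range.
    return lo + (best_bin + 1) * width


if __name__ == "__main__":
    # Synthetic bimodal sample: 50 "land" and 50 "water" index values.
    sample = [0.1] * 50 + [0.9] * 50
    t = otsu_threshold(sample)
    print(t, sum(v > t for v in sample))
```
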

| Method | OA | F1 | PA | UA |
|---|---|---|---|---|
| Otsu | 0.7396 | 0.6878 | 0.5736 | 0.8588 |
| Edge-Otsu | 0.5755 | 0.6955 | 0.9698 | 0.5422 |
| Random Forest | 0.7585 | 0.6878 | 0.5321 | 0.9724 |
| VMamba | 0.9604 | 0.9615 | 0.9887 | 0.9357 |
| MambaOut | 0.9302 | 0.9319 | 0.9547 | 0.9101 |
| CoAtNet | 0.9396 | 0.9420 | 0.9811 | 0.9059 |
| MaxViT | 0.9302 | 0.9314 | 0.9472 | 0.9161 |
| SwinT | 0.8925 | 0.9012 | 0.9811 | 0.8333 |
| ConvNeXt | 0.9283 | 0.9307 | 0.9623 | 0.9011 |
| ResNet | 0.8208 | 0.8382 | 0.9283 | 0.7640 |
| Xception | 0.7472 | 0.7900 | 0.9509 | 0.6756 |

| Backbone | Params. (M) | Weight Size (MB) | FLOPs (G) | Training Time per 10 Epochs (min) |
|---|---|---|---|---|
| VMamba | 35.23 | 134.49 | 19.46 | 40 |
| MambaOut | 25.91 | 98.93 | 18.96 | 36 |
| CoAtNet | 30.05 | 114.81 | 19.03 | 32 |
| MaxViT | 31.92 | 122.02 | 19.79 | 36 |
| SwinT | 30.90 | 117.97 | 18.67 | 30 |
| ConvNeXt | 31.20 | 119.09 | 18.93 | 30 |
| ResNet | 29.78 | 113.68 | 19.58 | 28 |
| Xception | 44.74 | 170.81 | 28.40 | 28 |
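
The weight sizes in this table are close to what the parameter counts alone would predict. As a rough consistency check, the sketch below assumes each parameter is stored as a 32-bit float and that "MB" means 2²⁰ bytes; both are assumptions, not statements from the source, and the small remaining gap may reflect non-parameter buffers or file metadata.

```python
# (parameters in millions, reported weight size in MB), from the table above.
BACKBONES = {
    "VMamba": (35.23, 134.49),
    "MambaOut": (25.91, 98.93),
    "CoAtNet": (30.05, 114.81),
    "MaxViT": (31.92, 122.02),
    "SwinT": (30.90, 117.97),
    "ConvNeXt": (31.20, 119.09),
    "ResNet": (29.78, 113.68),
    "Xception": (44.74, 170.81),
}


def estimated_size_mb(params_millions, bytes_per_param=4):
    """Estimate checkpoint size, assuming float32 weights and 1 MB = 2**20 bytes."""
    return params_millions * 1e6 * bytes_per_param / 2**20


if __name__ == "__main__":
    for name, (params, reported) in BACKBONES.items():
        est = estimated_size_mb(params)
        print(f"{name:9s} estimated {est:7.2f} MB, reported {reported:7.2f} MB")
```
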

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Zhou, P.; Wang, Y.; Li, X.; Zhang, Y.; Li, X. Deep Learning Small Water Body Mapping by Transfer Learning from Sentinel-2 to PlanetScope. Remote Sens. 2025, 17, 2738. https://doi.org/10.3390/rs17152738