1. Introduction

Thermal Power Plants (TPPs) play a vital role in national energy infrastructure and environmental monitoring, making their automatic detection in remote sensing imagery increasingly important. As complex industrial facilities composed of diverse sub-components such as cooling towers, chimneys, coal yards, and boiler houses, TPPs exhibit irregular spatial arrangements and diverse appearances, posing significant challenges for object detection models [1, 2].

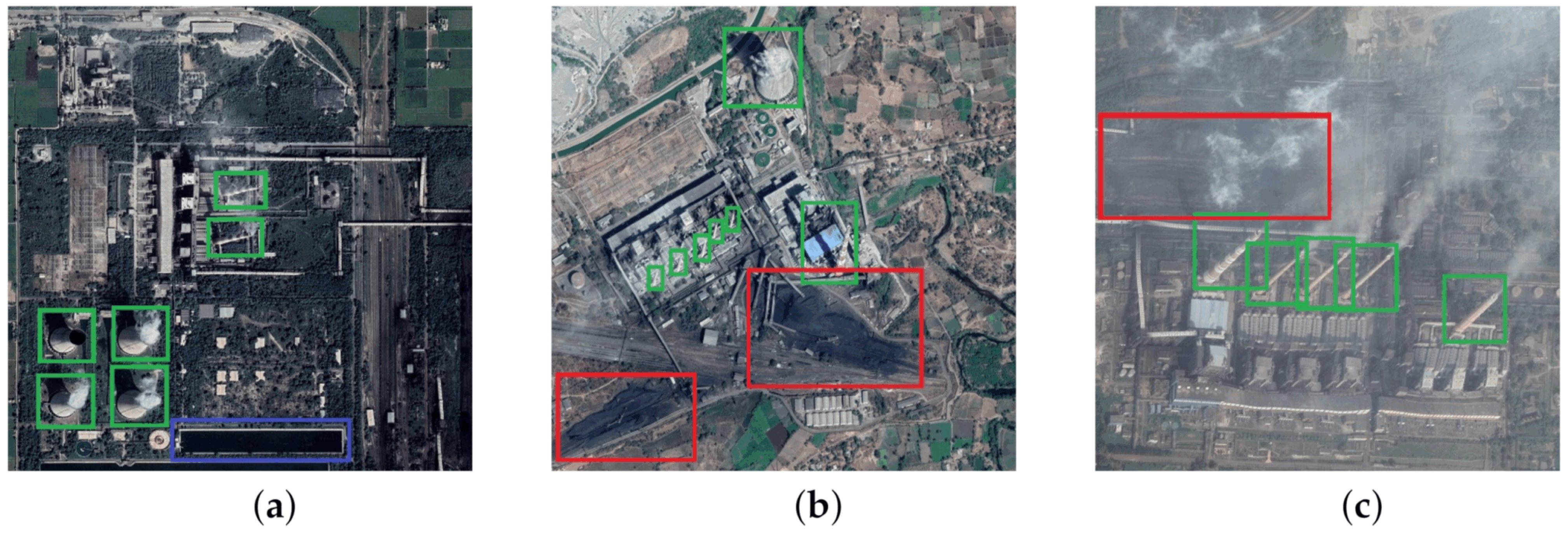

Figure 1 shows representative samples of TPPs with varied spatial configurations and separate, irregular components.

Conventional object detection methods in remote sensing have largely focused on compact, visually coherent targets such as vehicles or buildings [3, 4, 5]. Fan et al. [6] proposed a CSDP-enhanced YOLOv7 framework with MPDIoU loss to improve the detection of small, ambiguous ships under complex conditions. Wang et al. [7] introduced a general foundation model for building interpretation that unifies building extraction and change detection tasks, demonstrating strong generalization and cross-task learning capabilities across diverse remote sensing datasets. These methods often rely on RGB imagery and are typically optimized for targets with consistent shape and texture. In contrast, TPPs are representative composite objects, where the spatial configuration and heterogeneous components must be jointly interpreted. However, several obstacles, such as the lack of public datasets and ambiguous definitions, still exist. Several works [8, 9] have addressed related problems. For example, Liu et al. [8] proposed ABNet, which adapts to multiscale object detection and is effective for detecting airports, harbors, and train stations. Cai et al. [9] designed a weight-balanced loss function for hard example mining, making detection more robust on the few hard examples. Sun et al. [10] introduced an anchor-free network for irregular objects in everyday scenes. Yin et al. [11] introduced a two-stage detection framework, sal-MFN, for TPP detection in remote sensing images. Their model incorporates a saliency-enhanced module to emphasize prominent regions and a multi-scale feature network (MFN) to adapt to components of varying scales. Yin et al. [12] proposed PCAN, a one-stage detection framework for TPPs in remote sensing imagery. Their method integrates context attention and multi-scale feature extraction via deformable convolutions, and introduces a part-based attention module to enhance the recognition of structural components in facility-type objects. Recently, Yuan et al. [2] introduced REPAN, which combines part-level proposals with a Transformer-based global context modeling strategy, demonstrating strong capabilities in complex composite object detection. Most of the aforementioned methods rely on RGB imagery from Google Earth for analysis, while the use of other high-resolution multispectral satellite data remains limited. Overall, CNN-based detectors [13, 14] struggle with such complex semantics due to their limited receptive fields and lack of explicit spatial reasoning capabilities. Part-based approaches [1, 12, 15] attempt to mitigate this by modeling constituent components, but frequently depend on unsupervised clustering methods like K-means for part localization, leading to unstable learning and poor interpretability.

Figure 1. Representative TPPs featuring diverse and irregularly distributed components, including chimneys (green boxes), coal yards (red boxes), pools (blue boxes), and other industrial buildings. (a) Bathinda; (b) Ukai; (c) Korba [12].


Another limitation of most current TPP detection methods lies in their reliance on a single modality. RGB images alone may not sufficiently capture spectral diversity, especially in scenes with vegetation occlusion, thermal interference, or complex backgrounds [16]. Recent advances in multispectral imaging, particularly the use of near-infrared (NIR) bands, have shown that combining RGB and NIR modalities can enhance material and structural contrast [17, 18], thus improving detection under challenging conditions. Compared with single-modal object detection methods (e.g., SSD [19], RetinaNet [20], Faster R-CNN [21], DETR [22]), multimodal approaches integrate complementary spectral information from different modalities (such as RGB, thermal, or depth data), leading to more robust and accurate object detection under challenging conditions. By leveraging the strengths of each modality, these methods can better handle issues such as low lighting, occlusion, or background clutter, which often hinder the performance of single-modal detectors. Consequently, multimodal object detection exhibits greater adaptability and reduced limitations, making it more suitable for real-world applications in complex environments. However, simply concatenating multispectral channels often fails to exploit the full potential of cross-modal interactions. This has motivated a growing body of research focused on designing more effective fusion strategies to fully leverage the complementary information between modalities. For example, to enhance multispectral object detection, Shen et al. [23] proposed a dual cross-attention fusion framework that models global feature interactions across RGB and thermal modalities, demonstrating that effective cross-modal integration significantly improves detection accuracy and efficiency. Fang et al. [24] introduced CMAFF, a lightweight cross-modality fusion module based on joint attention to common and differential features, enabling high-performance multispectral detection with minimal computational overhead.

Most of the commonly used attention mechanisms, such as SE [25], CBAM [26], ECA [27], CA [28], and Swin Transformer [29], operate in the spatial or channel domain. These spatial-domain attention mechanisms typically rely on convolutional or MLP-based structures to process and enhance the original image features. In parallel, frequency-domain information has demonstrated its effectiveness in enhancing visual representations. For example, Chi et al. [30] proposed Fast Fourier Convolution (FFC), which uses Fourier spectral theory to construct non-local receptive fields and perform cross-scale fusion efficiently. Suvorov et al. [31] proposed LaMa, a large-mask inpainting network that leverages FFCs to achieve a global receptive field even in early layers. This design enables the network to generalize well to high-resolution images despite being trained on low-resolution data. Chaudhuri et al. [32] proposed a Fourier-Guided Attention (FGA) module that integrates FFC with attention mechanisms to capture both local and global context in crowd counting tasks. Lyu et al. [33] introduced DFENet, an FFT-based supervised network for RGB-T salient object detection (SOD), which efficiently processes high-resolution bi-modal inputs. By leveraging frequency-domain modules such as FRCAB and CFL, DFENet achieves competitive performance with reduced memory usage. Furthermore, frequency-aware attention has shown promise in preserving both global and local information by analyzing feature maps in the frequency domain [34]. Low-frequency components can encode overall structure, while high-frequency components capture texture and edge information. Despite this, few works integrate frequency priors with multispectral data for detecting large, composite objects such as TPPs.

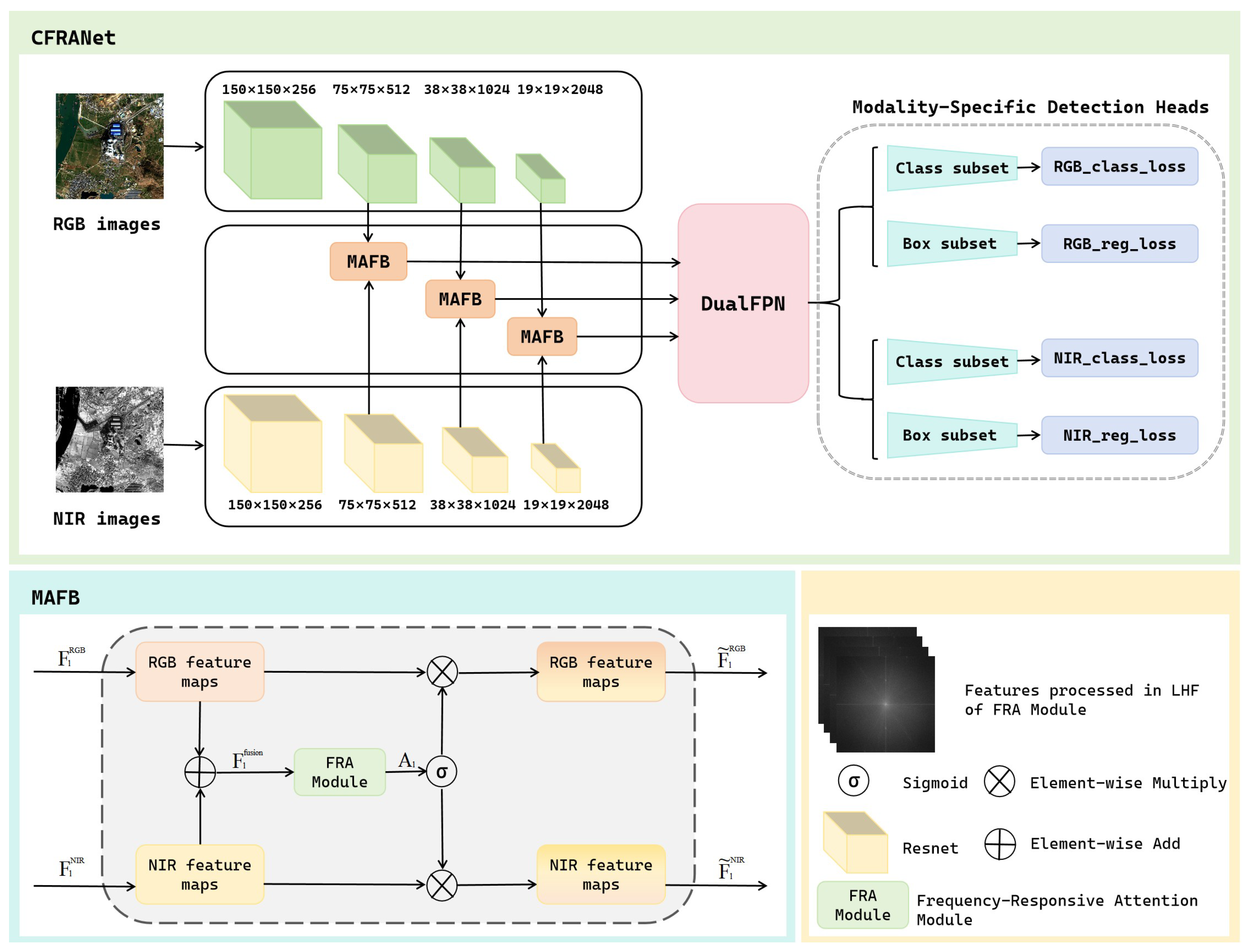

To tackle these limitations, we propose CFRANet (Cross-Modal Frequency-Responsive Attention Network), a novel dual-stream detection framework specifically designed for TPP detection in complex remote sensing scenes. CFRANet introduces two core modules: the Modality-Aware Fusion Block (MAFB), which selectively integrates RGB and NIR features to exploit modality-specific strengths, and the Frequency-Responsive Attention (FRA) module, which enhances feature representations via dual-branch attention in both spatial and frequency domains.

Our contributions are summarized as follows:

We propose CFRANet, a dual-stream multispectral detection framework that effectively leverages RGB and NIR modalities, tailored for detecting thermal power plants with diverse and irregular structures.

We design a lightweight Modality-Aware Fusion Block (MAFB) to perform adaptive, hierarchical fusion of spectral features, enhancing cross-modal complementarity.

We introduce the Frequency-Responsive Attention (FRA) module, which integrates spatial and frequency-domain cues to simultaneously capture global structures and local textures.

We construct a new high-resolution multispectral dataset, AIR-MTPP, and demonstrate that CFRANet achieves state-of-the-art performance, with an average precision of 82.41%.

The remainder of this paper is organized as follows. Section 2 describes the proposed CFRANet in detail. Section 3 presents experiments and analysis. Section 4 provides discussion, and Section 5 concludes the paper.

2. Materials and Methods

2.1. Overview

To tackle the challenges of multispectral composite object detection, we propose CFRANet, a network designed to exploit complementary information from the RGB and NIR modalities. As shown in Figure 2, the architecture is composed of three main components: a dual-stream feature extraction backbone, a frequency-aware cross-modal attention mechanism, and a modality-aware loss. The overall pipeline comprises the backbone, cross-modal attention, a Feature Pyramid Network (FPN), and modality-specific detection heads, and proceeds as follows.

In the beginning, separate ResNet branches are employed to extract modality-specific features, which are later enhanced and fused using the MAFB. To bridge the semantic gap between modalities and enable cross-modal feature interaction, MAFB uses an FRA module. This module combines spatial attention and frequency-domain decomposition to reinforce both global and local semantics. The fused multi-scale features are then processed by an FPN and finally passed through detection heads for object localization and classification. A modality-aware loss function guides the learning process by balancing performance on both RGB and NIR modalities. Although the network extracts modality-specific features using two separate ResNet branches and generates intermediate regression and classification predictions for both RGB and NIR modalities, it does not independently output two final detection results. Instead, modality-specific predictions are first decoded and filtered using Non-Maximum Suppression (NMS) individually. Subsequently, the retained detections from both modalities are merged, and a second round of NMS is applied to obtain a unified final detection output. This late fusion strategy leverages complementary information from both modalities while maintaining a single consistent output for downstream evaluation.
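The late-fusion step described above (per-modality NMS, merging, then a second NMS round) can be sketched in plain Python. This is only an illustrative sketch: the helper names `iou`, `nms`, and `late_fusion`, the greedy NMS variant, and the 0.5 IoU threshold are assumptions, not details given in the paper.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thr=0.5):
    """Greedy NMS over (box, score) pairs; returns the kept pairs."""
    kept = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(box, k[0]) <= iou_thr for k in kept):
            kept.append((box, score))
    return kept


def late_fusion(rgb_dets, nir_dets, iou_thr=0.5):
    """Per-modality NMS, then merge, then a second NMS round."""
    merged = nms(rgb_dets, iou_thr) + nms(nir_dets, iou_thr)
    return nms(merged, iou_thr)


# Toy detections (assumed values): both streams see the same plant,
# and the NIR stream additionally finds a second one.
rgb = [((10, 10, 110, 110), 0.90), ((12, 12, 112, 112), 0.60)]
nir = [((11, 9, 109, 111), 0.80), ((300, 300, 380, 380), 0.70)]
fused = late_fusion(rgb, nir)
```

In this toy case the overlapping RGB and NIR boxes collapse into the highest-scoring one, while the NIR-only detection survives, which is the complementary behaviour the late-fusion strategy is meant to provide.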

2.2. Dual-Stream Feature Extraction Backbone and Modality-Aware Fusion Block

To effectively capture complementary characteristics of visible and NIR imagery, we introduce a dual-stream backbone, which consists of two independent ResNet branches: one dedicated to the RGB modality and another to the NIR modality. Both streams share the same architectural configuration (e.g., ResNet-50 or ResNet-101), but they maintain separate weights to preserve modality-specific representations. The four residual blocks, layer1 to layer4, correspond to the feature stages C2 to C5 in standard ResNet terminology. In this work, we focus on layer2, layer3, and layer4 (i.e., C3, C4, C5) for cross-modal feature fusion.

Each stream initially processes its input through a convolutional stem comprising a convolution, batch normalization, ReLU activation, and a max-pooling layer. This is followed by four sequential residual blocks, denoted as layer1 through layer4.

To facilitate semantic-level cross-modal interaction, we incorporate a MAFB module (Figure 2) after layer2, layer3, and layer4. At each of these levels, the corresponding RGB and NIR features are summed element-wise and passed through the FRA module to generate a shared cross-modal attention map. This attention map enhances both modality streams via channel-wise modulation.

Formally, let $F_{rgb}^{l}$ and $F_{nir}^{l}$ denote the RGB and NIR feature maps at level $l$. The shared fused feature is computed as:

$$F_{fuse}^{l} = F_{rgb}^{l} + F_{nir}^{l}$$

This fused representation is then passed to the FRA module to generate the attention map:

$$A^{l} = \mathrm{FRA}\left(F_{fuse}^{l}\right)$$

which, after a sigmoid activation $\sigma$, is used to modulate each modality-specific feature.

This shared attention mechanism allows the two modalities to benefit from common semantic cues while retaining modality-specific characteristics. The outputs of this dual-stream backbone are the modulated multi-scale features from both streams. These correspond to the C3, C4, and C5 stages in the traditional ResNet and are passed to a dual FPN for further refinement.
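A minimal NumPy sketch of this shared-attention idea follows. It assumes that the modulation is an element-wise product with the sigmoid of the attention map, and it uses a per-channel global average (`placeholder_fra`, a hypothetical stand-in) in place of the real FRA module; neither detail is confirmed by the text above.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def placeholder_fra(fused):
    """Hypothetical stand-in for the FRA module: per-channel global average."""
    return fused.mean(axis=(1, 2), keepdims=True)  # shape (C, 1, 1)


def mafb(f_rgb, f_nir, attention_fn=placeholder_fra):
    fused = f_rgb + f_nir                 # element-wise sum of both streams
    gate = sigmoid(attention_fn(fused))   # shared attention, squashed to (0, 1)
    # Assumed multiplicative modulation: the same gate rescales both streams.
    return f_rgb * gate, f_nir * gate


rng = np.random.default_rng(0)
f_rgb = rng.standard_normal((8, 16, 16))  # toy (C, H, W) feature maps
f_nir = rng.standard_normal((8, 16, 16))
out_rgb, out_nir = mafb(f_rgb, f_nir)
```

Because one gate is shared, both streams are re-weighted by the same cross-modal cue while keeping their own feature values, which mirrors the "common semantic cues, modality-specific characteristics" behaviour described above.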

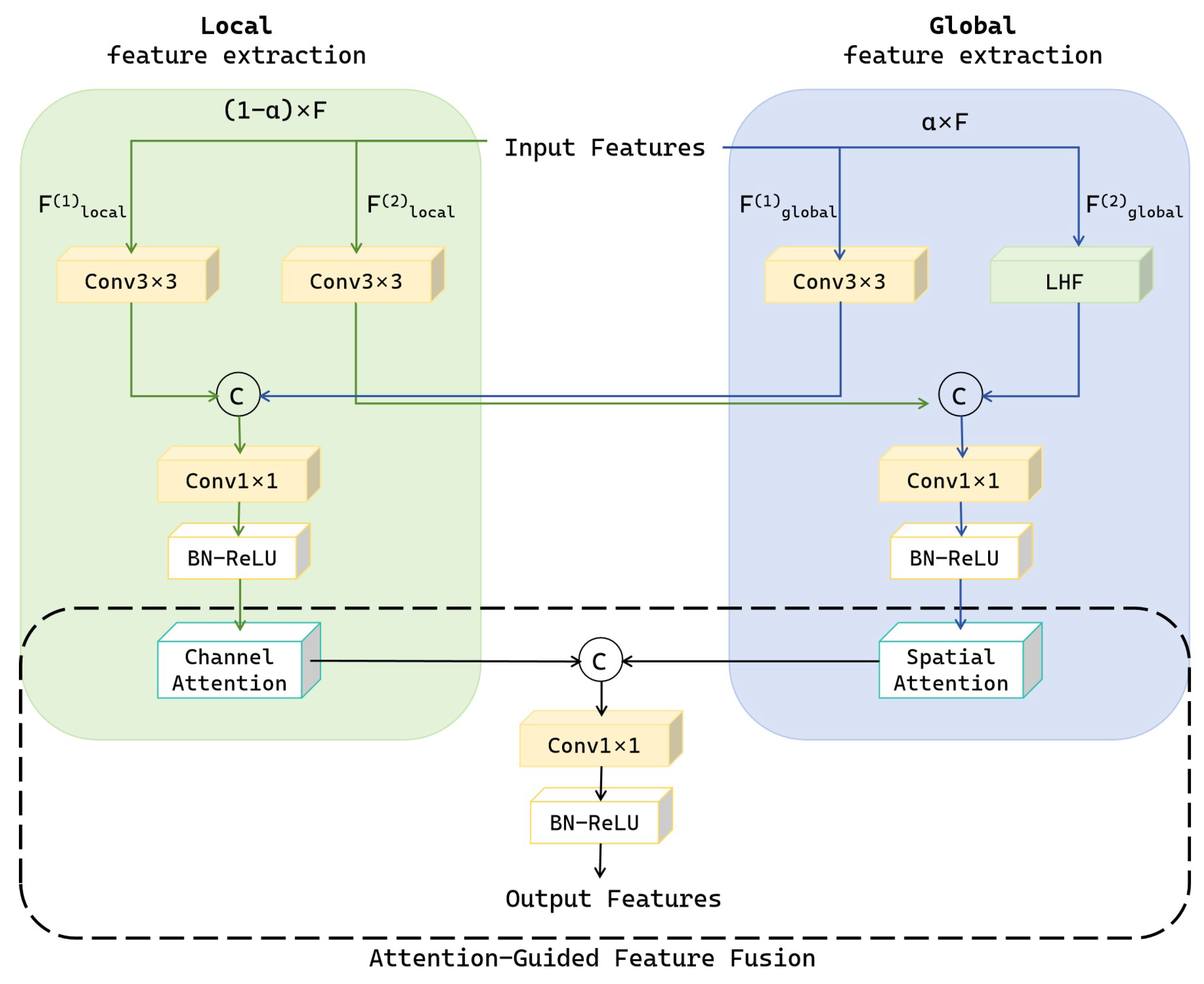

2.3. Frequency-Responsive Attention Module

To enhance cross-modal feature interaction in dual-stream networks and better integrate both spatial-local and frequency-global information in feature extraction, we propose an FRA Module, as shown in Figure 3. The module incorporates both spatial convolution and frequency domain processing in a dual-branch structure, effectively enhancing feature representation capabilities for downstream tasks. The full processing steps of the proposed FRA Module are summarized in Algorithm 1, which outlines the training procedure and feature fusion pipeline.

2.3.1. Dual-Branch Local and Global Feature Extraction

The FRA Module consists of two major branches: the Local Branch, which applies two convolutional layers to independently extract local spatial features and scales them by a branch weight, and the Global Branch, which employs one convolution followed by an LHF module to extract frequency-enhanced global features, scaled by the global weight.

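| Algorithm 1 FRA Module: Frequency-Responsive Attention Module Pipeline |

The FRA Module integrates frequency-enhanced global features and local CNN features to improve attention mechanisms.

Input: feature map $x$, frequency ratio $r$, global weight $\alpha$, max epochs $T$

Output: trained FRA Module and fused feature map $F_{out}$

1: Initialize the parameters of the FRA Module

2: for $t = 1$ to $T$ do

3: Extract the local feature $F_{loc}$ with the Local Branch, scaled by its branch weight

4: Extract the global convolutional feature $F_{g}$

5: $F_{lhf} = \mathrm{LHF}(F_{g}, r)$, scaled by $\alpha$

6: $F_{CA} = \mathrm{CA}(\mathrm{Conv}(\mathrm{Concat}(F_{loc}, F_{g})))$

7: $F_{SA} = \mathrm{SA}(\mathrm{Fuse}(\mathrm{Concat}(F_{loc}, F_{lhf})))$

8: $F_{out} = \mathrm{Conv}(\mathrm{Concat}(F_{CA}, F_{SA}))$

9: end for

10: return trained FRA Module and $F_{out}$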

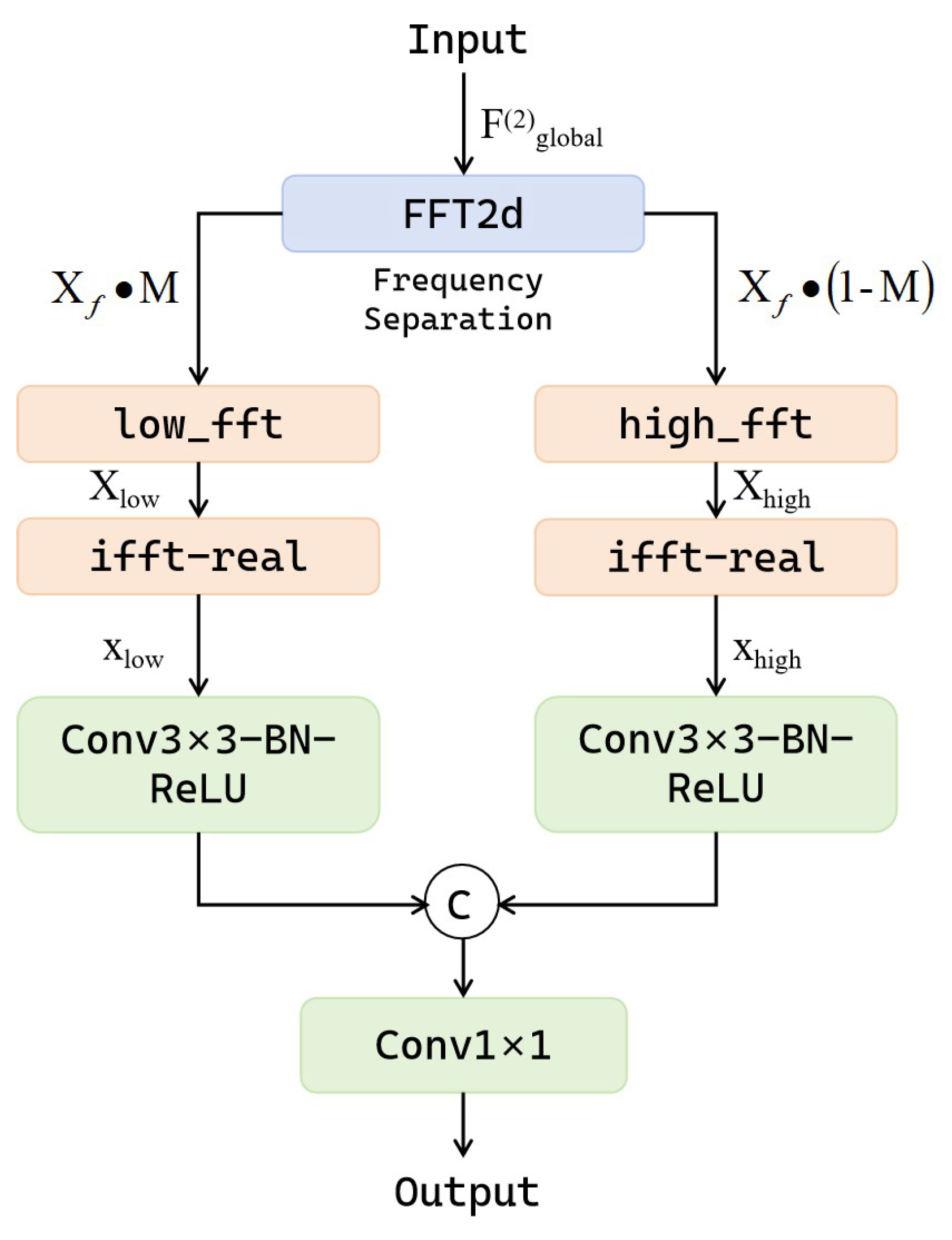

2.3.2. Low-High Frequency Decomposition

To enhance global feature extraction, we incorporate a Fourier-based Low-High Frequency (LHF) module into the global attention branch. This module decomposes the input features in the frequency domain using a learnable frequency mask, explicitly separating low-frequency (global structure) and high-frequency (detail boundary) components. These frequency-specific features are then independently processed and adaptively fused. The motivation is that global semantic context and fine-grained texture cues often manifest in different frequency bands, and directly modeling this distinction facilitates more discriminative representation learning, especially for complex targets like TPPs. The core of the global branch is the LHF module (Figure 4), which performs low- and high-frequency decomposition in the frequency domain. Given an input feature map $x$, we apply a 2D Fourier Transform $\mathcal{F}$ to obtain complex-valued frequency features:

$$X = \mathcal{F}(x)$$

A binary mask $M$ is constructed to separate the spectrum into low- and high-frequency components based on a ratio parameter $r$:

$$X_{low} = M \odot X, \qquad X_{high} = (1 - M) \odot X$$

These components are then transformed back to the spatial domain via the inverse Fourier transform $\mathcal{F}^{-1}$, with only the real part retained:

$$x_{low} = \mathrm{Re}\left(\mathcal{F}^{-1}(X_{low})\right), \qquad x_{high} = \mathrm{Re}\left(\mathcal{F}^{-1}(X_{high})\right)$$

Each branch is followed by a convolutional block, and the outputs are concatenated and fused via a convolution to form the final output of the LHF module. In our experiments, the ratio $r$ is set to balance retaining the essential low-frequency components, which capture overall structure and semantics, against preserving high-frequency details such as edges and textures.

The detailed process for low- and high-frequency decomposition in the frequency domain is described in Algorithm 2.

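| Algorithm 2 LHF: Low/High Frequency Separation |

Input: feature map $x$, frequency ratio $r$

Output: fused low/high frequency feature $y$

1: $X = \mathcal{F}(x)$ ▹ Apply 2D Fourier Transform

2: Create binary mask $M$ based on ratio $r$

3: $X_{low} = M \odot X$, $X_{high} = (1 - M) \odot X$

4: $x_{low} = \mathrm{Re}(\mathcal{F}^{-1}(X_{low}))$, $x_{high} = \mathrm{Re}(\mathcal{F}^{-1}(X_{high}))$

5: $y_{low} = \mathrm{ConvBlock}_{low}(x_{low})$, $y_{high} = \mathrm{ConvBlock}_{high}(x_{high})$

6: return $y = \mathrm{Conv}(\mathrm{Concat}(y_{low}, y_{high}))$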
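The frequency split at the heart of the LHF module can be illustrated with NumPy's FFT routines. The sketch below covers only the decomposition (transform, mask, inverse transform, real part) and omits the convolutional blocks. The centred rectangular mask, the function name `low_high_split`, and the default `ratio=0.25` are assumptions made for illustration, since the exact mask shape and ratio are not stated here.

```python
import numpy as np


def low_high_split(x, ratio=0.25):
    """Split a (C, H, W) feature map into low- and high-frequency parts."""
    _, h, w = x.shape
    # 2D FFT over the spatial axes, with the zero frequency shifted to the centre.
    spec = np.fft.fftshift(np.fft.fft2(x, axes=(-2, -1)), axes=(-2, -1))

    # Assumed mask shape: a centred rectangle covering `ratio` of each axis.
    mask = np.zeros((h, w), dtype=bool)
    rh, rw = max(1, int(h * ratio / 2)), max(1, int(w * ratio / 2))
    mask[h // 2 - rh:h // 2 + rh + 1, w // 2 - rw:w // 2 + rw + 1] = True

    def to_spatial(s):
        s = np.fft.ifftshift(s, axes=(-2, -1))
        return np.fft.ifft2(s, axes=(-2, -1)).real  # keep the real part only

    return to_spatial(spec * mask), to_spatial(spec * ~mask)


x = np.random.default_rng(0).standard_normal((4, 32, 32))
x_low, x_high = low_high_split(x)
```

Since the two masks are complementary, `x_low + x_high` reproduces the input up to floating-point error, so the split itself loses no information; any selectivity comes from how the two parts are processed afterwards.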

2.3.3. Attention-Guided Feature Fusion

Two attention mechanisms guide the fusion of local and global features: Channel Attention (CA), where the local feature and the global convolutional feature are concatenated and processed through a convolution followed by a channel attention block, and Spatial Attention (SA), where the local feature and the frequency-enhanced global feature are concatenated and passed through another fusion block and a spatial attention module.

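The outputs of both attention paths, denoted $F_{CA}$ and $F_{SA}$, are concatenated and passed through a final convolution for fusion:

$$F_{out} = \mathrm{Conv}\left(\mathrm{Concat}\left(F_{CA}, F_{SA}\right)\right)$$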

This architecture ensures that both frequency-aware global context and spatially detailed local patterns are effectively captured and integrated.

2.4. Loss Function

To effectively leverage complementary information from both RGB and NIR modalities, we design a multi-component loss function that integrates modality-specific detection losses. This enables the model to optimize each modality independently while benefiting from joint training. Specifically, the total loss combines, for each modality, the classification loss $\mathcal{L}_{cls}$ and the regression loss $\mathcal{L}_{reg}$ used in RetinaNet [20]. A hyperparameter $\lambda$ balances the contributions of the two modalities. In our experiments, $\lambda$ is set to emphasize RGB features while still leveraging the complementary information from NIR.

This loss formulation encourages the model to adaptively learn from both modalities and improves detection performance, particularly under challenging visual conditions.
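One plausible way to write such a modality-balanced loss is a convex combination of the two per-modality detection losses. The form below is an assumption made for illustration (in particular the $\lambda$ and $1 - \lambda$ weighting and the superscripts $rgb$ and $nir$); it is not taken from the paper.

```latex
\mathcal{L}_{total}
  = \lambda \left( \mathcal{L}_{cls}^{rgb} + \mathcal{L}_{reg}^{rgb} \right)
  + (1 - \lambda) \left( \mathcal{L}_{cls}^{nir} + \mathcal{L}_{reg}^{nir} \right),
  \qquad 0 \le \lambda \le 1
```

Under this assumed form, any $\lambda > 0.5$ weights the RGB stream more heavily while the NIR terms still contribute gradients.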

3. Results

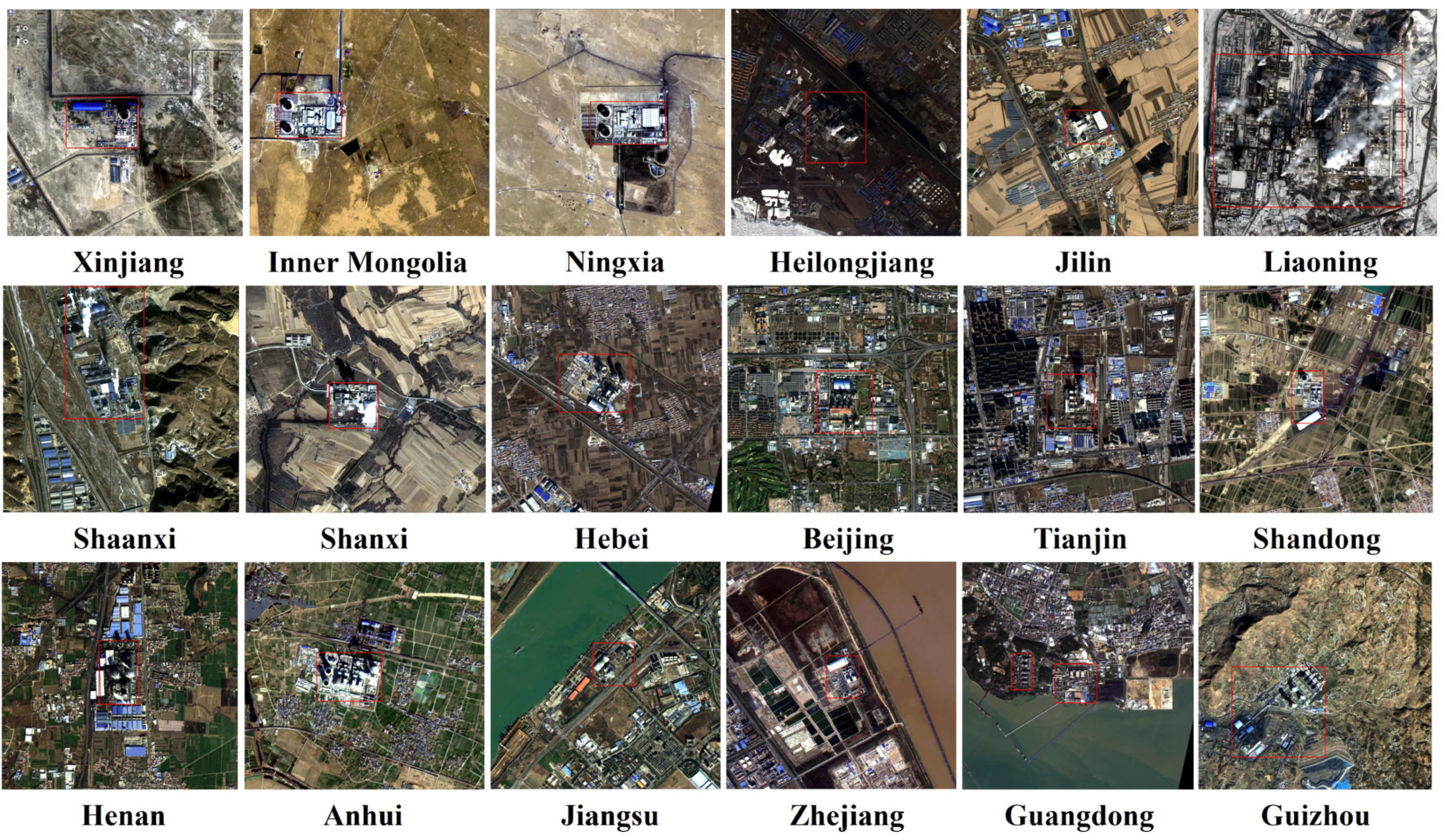

3.1. Datasets

Since there are few open-source composite object detection datasets in RSIs, we constructed a high-resolution multispectral dataset named AIR-MTPP, which contains 481 images of TPPs. The images were carefully selected from 18 provinces and regions across China, including Xinjiang, Inner Mongolia, Ningxia, Heilongjiang, Jilin, Liaoning, Shaanxi, Shanxi, Hebei, Beijing, Tianjin, Shandong, Henan, Anhui, Jiangsu, Zhejiang, Guangdong, and Guizhou. These locations were chosen to ensure a diverse geographical distribution, covering plains, mountainous areas, and coastal zones. The primary data source is the Gaofen-6 (GF-6) satellite, and the acquisition period spans from January 2023 to March 2025. The dataset captures TPPs under various seasonal conditions (spring, summer, autumn, and winter), ensuring both spatial and temporal diversity. In total, 49 scenes were utilized, with 2024 as the main acquisition period. When image quality was insufficient (e.g., due to cloud cover or atmospheric distortion), imagery from 2023 or early 2025 was used as a supplement.

All raw scenes underwent a rigorous preprocessing pipeline including atmospheric correction, pansharpening fusion, and bit-depth adjustment. The resulting multispectral images have a 2-meter spatial resolution. Each sample was then cropped around the TPPs to a fixed size. After strict quality control and filtering, we obtained 481 valid images. All objects were annotated with horizontal bounding boxes in VOC format, resulting in a well-structured, composite-object-focused dataset suitable for training and evaluating object detection models in complex remote sensing scenarios. The dataset was randomly divided into training, validation, and test sets with proportions of 81%, 9%, and 10%, respectively. Specifically, 90% of the data was allocated to the combined training and validation set, and the remaining 10% to the test set. Within the combined subset, a further split was performed: 90% for training and 10% for validation, which gives 0.9 × 0.9 = 81% and 0.9 × 0.1 = 9% of the full dataset. This ensures a balanced and representative partitioning of the dataset for model development and evaluation.
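The two-stage split can be reproduced with a few lines of Python. This is a sketch under assumptions: the function name `split_dataset`, the fixed seed, and the truncating rounding are illustrative choices, so the exact per-set counts used for AIR-MTPP may differ by an image or two.

```python
import random


def split_dataset(items, trainval_frac=0.9, train_frac=0.9, seed=0):
    """Two-stage random split: train+val vs. test first, then train vs. val."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_trainval = int(len(items) * trainval_frac)   # 90% for train + val
    n_train = int(n_trainval * train_frac)         # 90% of that for training
    train = items[:n_train]
    val = items[n_train:n_trainval]
    test = items[n_trainval:]
    return train, val, test


# 481 image indices, matching the dataset size reported above.
train, val, test = split_dataset(range(481))
print(len(train), len(val), len(test))
```

With truncating rounding this yields 388, 44, and 49 images, i.e., roughly 81%, 9%, and 10% of the 481 samples.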

3.2. Evaluation Metrics

To comprehensively evaluate our TPP detection method in practical engineering scenarios, we employ standard quantitative metrics, including precision, recall, average precision (AP), frames per second (FPS), and Giga floating-point operations (GFLOPs).

Precision and Recall: A predicted bounding box is considered a true positive (TP) if its intersection over union (IoU) with a ground-truth box exceeds a predefined threshold [35]; otherwise, it is a false positive (FP). Unmatched ground-truth boxes are false negatives (FN). The IoU is defined as:

$$\mathrm{IoU} = \frac{|G \cap D|}{|G \cup D|}$$

where $G$ and $D$ are the ground-truth and predicted boxes [36]. Precision and recall are then computed as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

AP and mAP: Average precision (AP) is the area under the precision-recall (PR) curve [35]. Mean average precision (mAP), denoted as $\mathrm{mAP}_{50:95}$, averages AP across IoU thresholds from 0.5 to 0.95 in steps of 0.05 [37]. In this study, we adopt $\mathrm{mAP}_{50}$ (i.e., AP at an IoU threshold of 0.5) as the primary evaluation metric due to its widespread usage and interpretability in remote sensing object detection.
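The IoU-based matching and the precision and recall definitions above can be sketched as follows. The greedy one-to-one matching rule and the helper names are assumptions for illustration; evaluation toolkits differ in such details.

```python
def iou(g, d):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(g[0], d[0]), max(g[1], d[1])
    ix2, iy2 = min(g[2], d[2]), min(g[3], d[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (g[2] - g[0]) * (g[3] - g[1]) + (d[2] - d[0]) * (d[3] - d[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(gts, dets, iou_thr=0.5):
    """Match detections (assumed sorted by descending confidence) to ground truth."""
    matched = set()
    tp = 0
    for det in dets:
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            v = iou(gt, det)
            if v > best_iou:  # IoU must exceed the threshold to count
                best_j, best_iou = j, v
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(dets) - tp   # detections without a matching ground-truth box
    fn = len(gts) - tp    # ground-truth boxes that were never matched
    precision = tp / (tp + fp) if dets else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(1, 1, 10, 10), (50, 50, 60, 60), (0, 0, 9, 9)]
precision, recall = precision_recall(gts, dets)
```

In this toy example one detection matches (IoU 0.81), one lies on background, and one duplicates an already matched box, giving a precision of 1/3 and a recall of 1/2.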

Efficiency Metrics: Frames per second (FPS) measures inference speed, GFLOPs (giga floating-point operations) reflects computational complexity, and the number of parameters (Params) indicates the model’s size and memory requirements.

All models are evaluated under identical hardware settings for fair comparison.

3.3. Experimental Settings

All experiments are conducted using the PyTorch 1.11.0 deep learning framework on a workstation equipped with an NVIDIA RTX 3090 GPU (24 GB memory) and CUDA 11.3. To initialize the network, we adopt a ResNet-50 backbone pre-trained on the ImageNet dataset [38], which provides a good feature representation and accelerates convergence.

To balance the demands of large-scale scenes against the efficiency of deep network training, all input images are resized to a fixed input size, and random cropping and horizontal flipping are applied as data augmentation.

The model is trained using the Adam optimizer, with an initial learning rate of 0.001, momentum set to 0.9, and a minimum learning rate of 0.00001. In the classification loss, we adopt the focal loss formulation following RetinaNet [20], with a focusing parameter $\gamma$ and a balancing factor $\alpha$.

To improve optimization, we adopt a cosine annealing learning rate schedule with a warm-up strategy. Specifically, the learning rate increases linearly from a lower starting value to the initial value over the first 5% of total training iterations (warm-up phase), followed by a smooth decay to the minimum learning rate using the cosine function. During the final 5% of iterations, the learning rate is fixed at the minimum value to ensure training stability. The learning rate is updated dynamically before each iteration according to this schedule.
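The three-phase schedule can be written as a small pure-Python function. The initial and minimum learning rates (0.001 and 0.00001) and the 5% phase lengths come from the text; the warm-up starting value `warmup_start_lr=1e-4` and the function name `learning_rate` are assumptions, since the starting value is not given above.

```python
import math


def learning_rate(step, total_steps, base_lr=1e-3, min_lr=1e-5,
                  warmup_start_lr=1e-4, warmup_frac=0.05, hold_frac=0.05):
    """Linear warm-up, cosine decay, then a constant tail at min_lr."""
    warmup_steps = max(1, int(total_steps * warmup_frac))
    hold_steps = max(1, int(total_steps * hold_frac))
    decay_steps = total_steps - warmup_steps - hold_steps
    if step < warmup_steps:                      # phase 1: linear warm-up
        t = step / warmup_steps
        return warmup_start_lr + t * (base_lr - warmup_start_lr)
    if step >= total_steps - hold_steps:         # phase 3: fixed minimum
        return min_lr
    t = (step - warmup_steps) / decay_steps      # phase 2: cosine decay
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t))


# Evaluate the schedule for a hypothetical run of 1000 iterations.
schedule = [learning_rate(s, 1000) for s in range(1000)]
```

For 1000 iterations this gives 50 warm-up steps, 900 cosine-decay steps, and 50 final steps at the minimum rate; the value is queried once per iteration, matching the per-iteration update described above.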

Unless otherwise specified, the training is performed for a fixed number of epochs, and the learning rate follows the above schedule. All other hyperparameters are kept consistent across experiments for fair comparison.

3.4. Comparisons with State of the Art

To comprehensively evaluate the performance of our proposed CFRANet, we compare it with several state-of-the-art object detectors, covering both single-modality (RGB-only) and multi-modality (RGB+NIR) approaches. Among the single-modality methods, RetinaNet [20] is a one-stage detector that introduces Focal Loss to address the extreme foreground–background class imbalance, making it particularly suitable for dense object detection tasks; Faster R-CNN [21] is a classical two-stage detector that combines Region Proposal Networks (RPN) with Fast R-CNN, offering high accuracy at the cost of slower inference; SSD [19] is a fast one-stage detector that uses multiple feature maps for multi-scale detection, offering a good trade-off between speed and accuracy; and FCOS [39] is an anchor-free one-stage detector that predicts object centers and regresses boxes in a per-pixel fashion, effectively simplifying the detection pipeline. For multi-modality detectors, CSSA [40] utilizes channel switching and spatial attention mechanisms to fuse information from RGB and NIR modalities, aiming to improve cross-modal detection performance, while ICAFusion [23] employs a query-guided feature fusion framework, showing effectiveness in diverse multispectral scenarios. These two methods were chosen due to their strong performance and widespread adoption as general multimodal detectors in remote sensing, providing meaningful baselines for comparison.

Table 1 presents a quantitative comparison of CFRANet with these representative object detectors on multispectral object detection tasks. Among all methods, our proposed CFRANet, which also falls into the multi-modality category, achieves the highest detection accuracy with an $\mathrm{mAP}_{50}$ of 82.41%, outperforming the second-best method, FCOS (73.36%), by a significant margin of 9.05 percentage points. This substantial improvement highlights the strong discriminative capability and cross-modal robustness of CFRANet, which benefits from its dual-stream architecture and the integration of the FRA module and MAFB for adaptive feature representation and fusion. While CFRANet incurs the highest computational complexity (384.12 GFLOPs) due to its dual-stream design and separate processing of RGB and NIR inputs, it still maintains a moderate inference speed of 30.96 FPS. Compared with other multi-modality methods like ICAFusion (26.45 FPS), CFRANet offers better performance at comparable speed. Although single-modality detectors such as SSD achieve higher FPS (e.g., 176.77), they fall short in terms of accuracy. Overall, the results demonstrate that CFRANet provides an effective trade-off between accuracy and efficiency, making it particularly suitable for remote sensing scenarios where robustness and precision in multispectral environments are crucial.

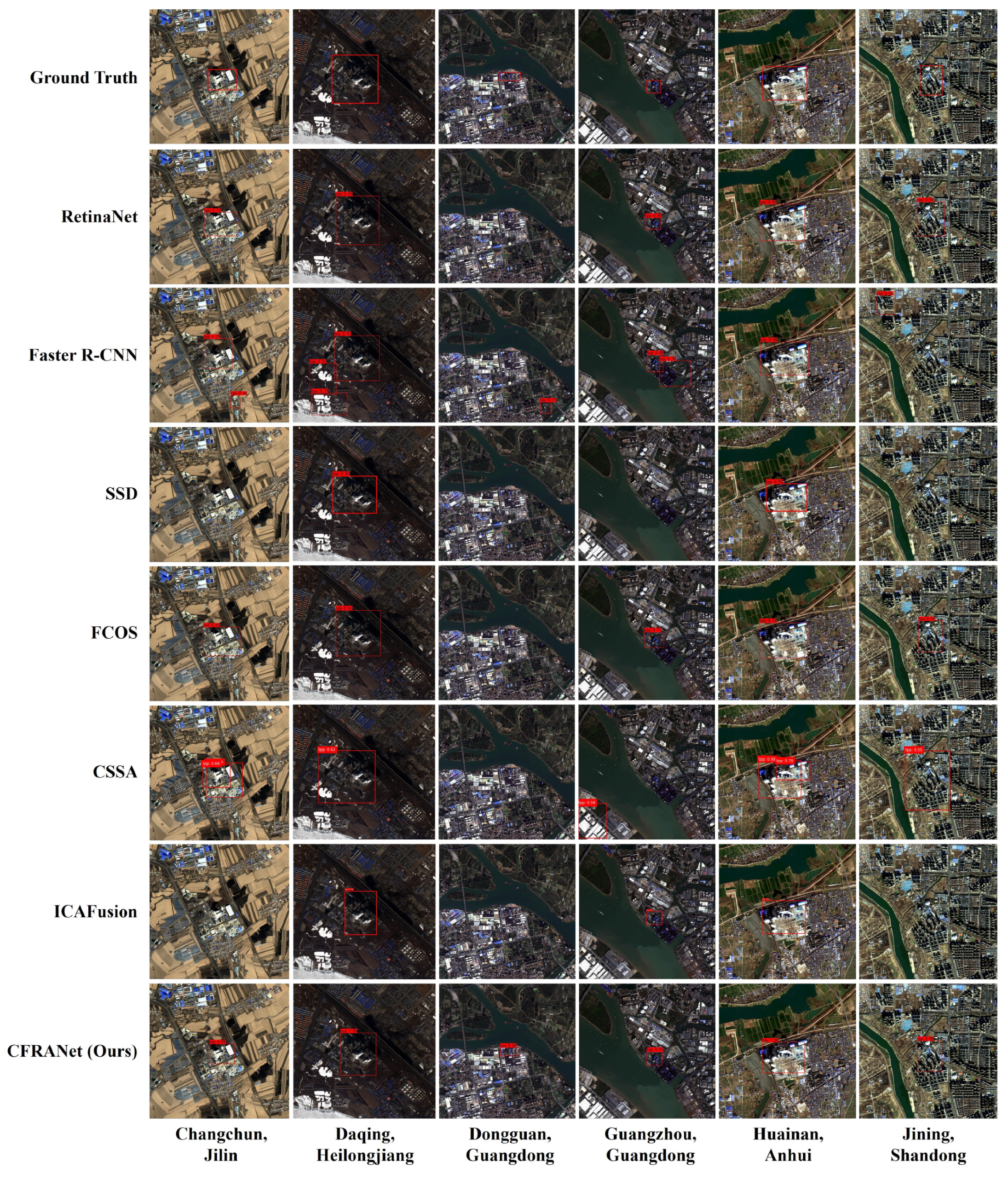

Figure 5 presents a qualitative comparison of detection results produced by different methods on six representative TPP images. These TPPs are located in diverse regions of China—specifically, Changchun (Jilin), Daqing (Heilongjiang), Dongguan (Guangdong), Guangzhou (Guangdong), and Huainan (Anhui)—covering a wide range of environmental and visual conditions, which enhances the credibility and representativeness of the comparison. Each column in the figure corresponds to one TPP image and includes the ground truth annotations alongside the detection results from RetinaNet, Faster R-CNN, SSD, FCOS, CSSA, ICAFusion, and the proposed CFRANet. As illustrated, CFRANet consistently delivers the most accurate and complete detections, demonstrating strong robustness and high precision across various complex scenes. In contrast, RetinaNet, SSD, FCOS, and ICAFusion exhibit varying degrees of missed detections, with SSD showing the highest miss rate. CSSA also presents a few missed and false detections. Meanwhile, Faster R-CNN tends to produce more false positives, leading to a higher false alarm rate. These qualitative results further validate the effectiveness and reliability of CFRANet in handling challenging multispectral remote sensing TPP detection tasks.

We also analyzed the correlation between the Params and FPS across the evaluated detectors. The Pearson correlation coefficient was −0.32 with a p-value of 0.4819, suggesting a weak and statistically insignificant negative correlation. This indicates that while model size may affect runtime to some extent, other architectural factors—such as model design, parallelizability, and feature fusion strategy—also play a crucial role in determining inference efficiency.

3.5. Ablation Study

3.5.1. Effect of MAFB

To verify the effectiveness of the proposed cross-modal fusion and attention modules, we conduct an ablation study based on RetinaNet using RGB and NIR modalities. The results are summarized in Table 2, where all models are sorted by $\mathrm{mAP}_{50}$ in ascending order. We focus on analyzing the impact of different fusion locations and modality combinations on detection accuracy and inference speed.

Compared with the baseline RetinaNet (RGB), using only NIR images leads to a significant performance drop (69.63% vs. 72.13% mAP, −2.50%), indicating that NIR images alone lack sufficient discriminative information. Simply concatenating RGB and NIR modalities (concat fusion) improves mAP to 73.83% (+1.70%), demonstrating the potential of multi-modal fusion. However, this naive fusion strategy lacks fine-grained cross-modal interactions. To address this limitation, we progressively introduce our proposed MAFB into different feature levels ($C_3$, $C_4$, $C_5$) of the RetinaNet backbone. The variants “MAFB@C5”, “MAFB@C3,C5”, “MAFB@C4,C5”, and “MAFB@C3,C4” denote fusion at different layer combinations. We observe consistent improvements across all configurations, confirming that cross-modal interactions at multiple levels significantly boost detection performance. Notably, fusing only at $C_3$ (MAFB@C3) achieves 79.74% mAP (+7.61%) with relatively low computational overhead, indicating that early-layer attention is particularly effective for fine-grained detail extraction. Using MAFB solely at $C_4$ (MAFB@C4) also achieves strong performance (79.30% mAP), suggesting the importance of mid-level semantic features in modality fusion. In contrast, fusing only at $C_5$ (MAFB@C5) results in a relatively modest improvement (75.50% mAP, +3.37%). This is primarily because high-level features in $C_5$ are highly abstract and spatially coarse, which limits the effectiveness of cross-modal interactions. The NIR modality’s strengths, such as preserving structural edges and enhancing contrast, are difficult to exploit at this stage due to the reduced spatial resolution and lack of fine detail. As a result, attention-based fusion in $C_5$ alone cannot fully leverage the complementary advantages of RGB and NIR inputs. Interestingly, fusion at two levels does not always outperform single-level fusion at $C_3$ or $C_4$. For instance, MAFB@C3,C4 achieves 78.91% mAP, which is slightly lower than MAFB@C3. This may be due to potential redundancy or interference introduced when combining features from layers of different abstraction levels without sufficient semantic alignment. The overlapping or conflicting attention from multiple fusion stages may disrupt the learning of distinctive cross-modal patterns, leading to sub-optimal performance. Moreover, mid- and high-level features may not capture complementary modality cues as effectively as lower-level features do, further limiting the benefit of multi-level fusion when it is not carefully designed.

Ultimately, fusion across all three levels (CFRANet) yields the best result of 82.41% mAP (+10.28%), validating the effectiveness of the proposed Cross-Modal Frequency-Responsive Attention Network (CFRANet), which fully exploits complementary information from both modalities across different semantic levels. In terms of inference speed, the introduction of attention modules does incur additional computational overhead, leading to a gradual reduction in FPS. Nevertheless, the accuracy gains outweigh this cost. CFRANet, despite being slightly slower (30.96 FPS), still maintains real-time performance on modern GPUs, making it a practical and effective solution for real-world multimodal detection tasks.

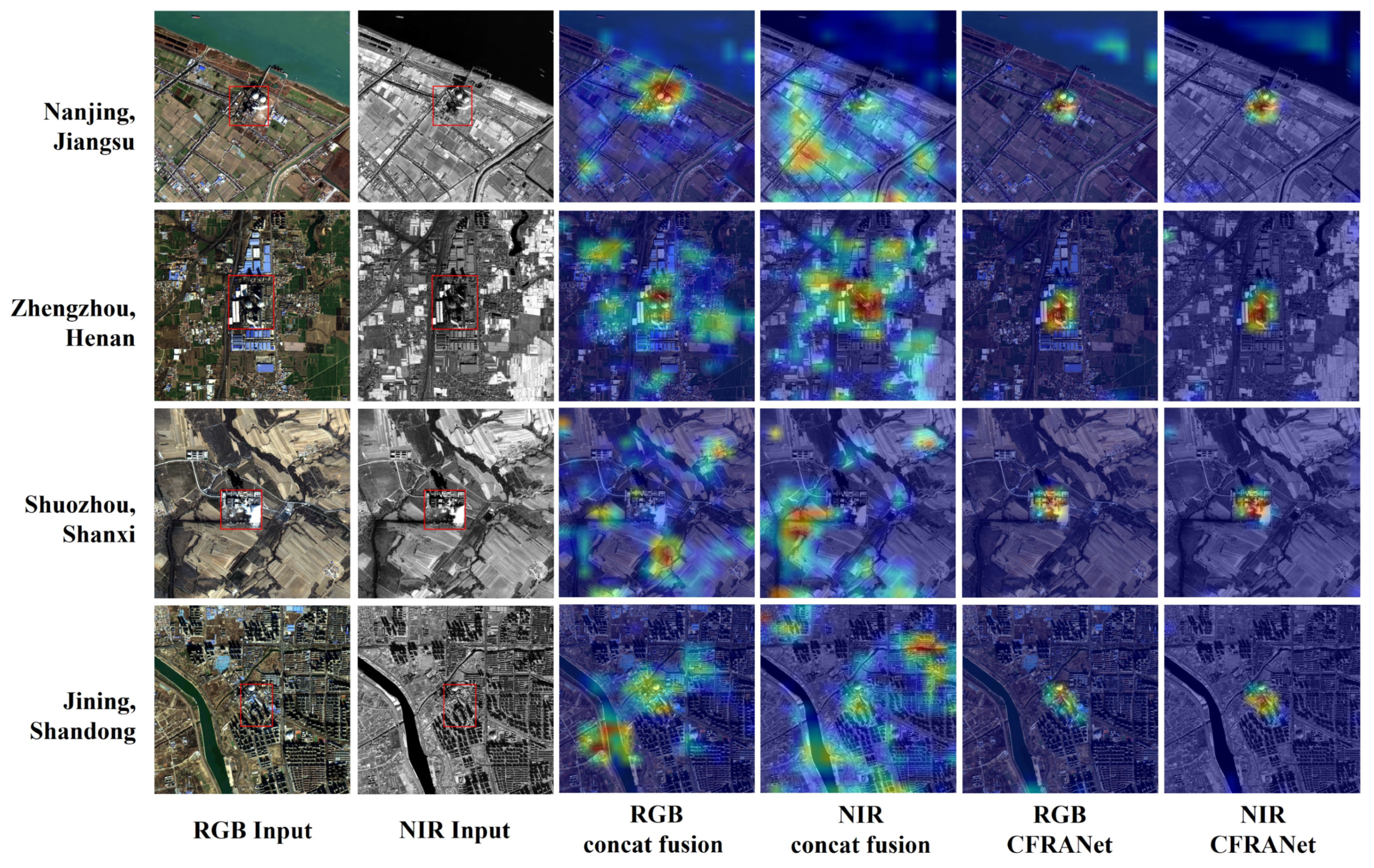

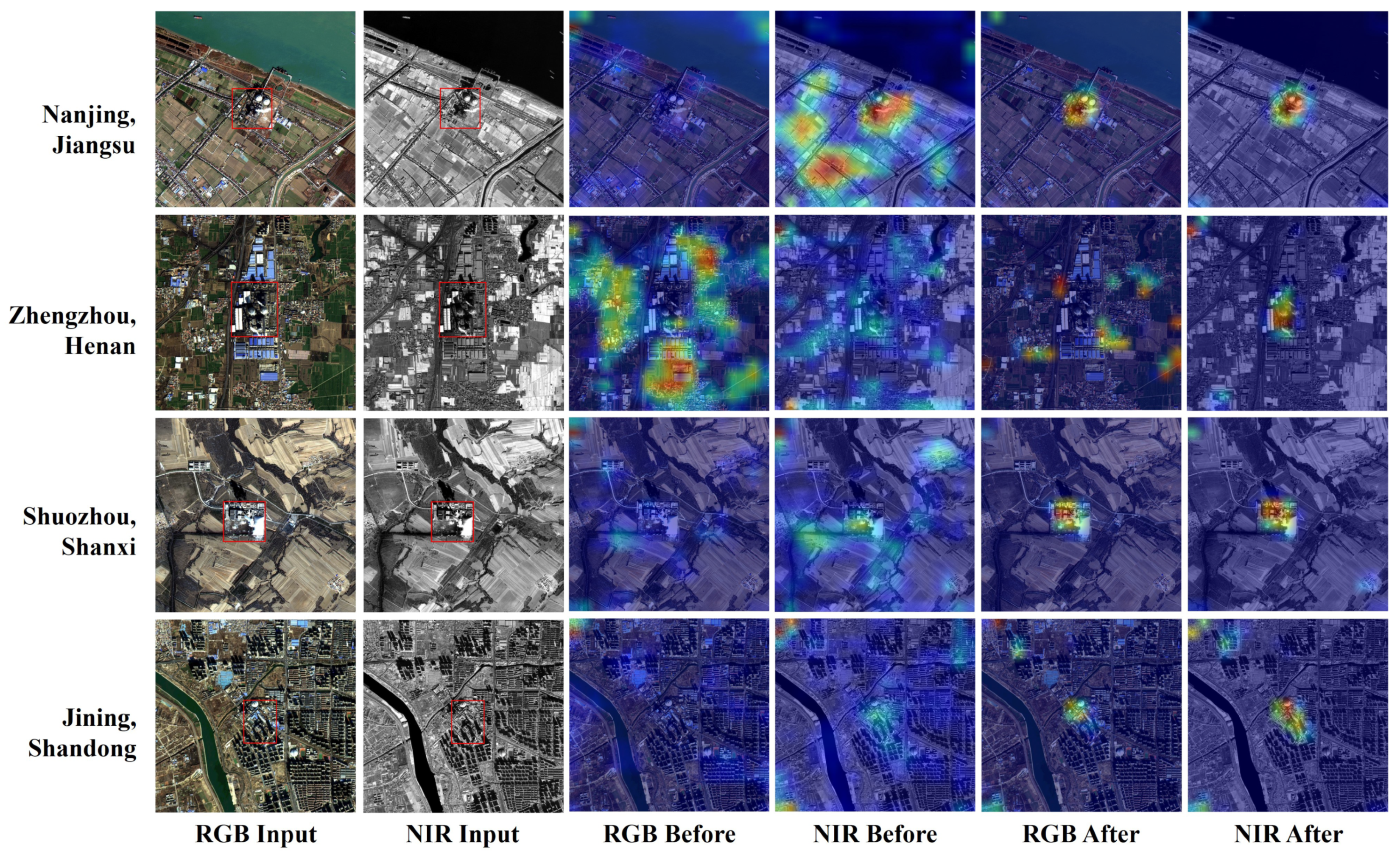

To provide further qualitative evidence of the effectiveness of MAFB, we visualize the Grad-CAM activation maps for two representative configurations: the baseline dual-stream model with simple RGB-NIR concatenation, and the full CFRANet model with MAFB applied across all three levels ($C_3$, $C_4$, $C_5$).

Figure 6 further demonstrates the effectiveness of the proposed MAFB fusion strategy through Grad-CAM visualizations on four representative TPPs located in Nanjing (Jiangsu), Zhengzhou (Henan), Shuozhou (Shanxi), and Jining (Shandong). Each row corresponds to one TPP sample, and the columns are arranged as follows: original RGB and NIR images (both with red bounding boxes indicating ground truth annotations), Grad-CAM heatmaps from the concat fusion model on the RGB and NIR streams, and Grad-CAM heatmaps from CFRANet on the RGB and NIR streams. The concat fusion model tends to activate broadly around background or irrelevant regions, whereas CFRANet produces more focused and discriminative responses, effectively highlighting the TPP structure in both modalities. This visual comparison further validates that MAFB enables more effective cross-modal feature interaction, facilitating precise localization and enhanced semantic understanding.
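For readers unfamiliar with how such heatmaps are produced, the following is a minimal sketch of the standard Grad-CAM computation: each channel of a chosen feature map is weighted by the spatial average of the gradient of the target score with respect to that channel, the weighted maps are summed, and a ReLU is applied. The text does not state which layer or implementation was used, so the function name `grad_cam`, the nested-list layout, and the toy inputs are assumptions for illustration only.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one layer's activations and the gradients of the
    target score with respect to them, both shaped [channels][height][width]."""
    n_channels = len(activations)
    height, width = len(activations[0]), len(activations[0][0])

    # Channel weights: global average of the gradients over spatial positions.
    weights = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(n_channels)
    ]

    # Weighted sum of the activation maps, followed by ReLU.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(n_channels)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Normalise to [0, 1] so the map can be rendered as a heatmap.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy example: channel 0 supports the target (positive gradients),
# channel 1 argues against it (negative gradients).
toy_activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
toy_gradients = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]
heatmap = grad_cam(toy_activations, toy_gradients)
print(heatmap)
```

In the toy example only the location activated by the supporting channel survives the ReLU, which is the behaviour that makes focused versus diffuse heatmaps a useful qualitative comparison.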

3.5.2. Effect of FRA Module

To further evaluate the effectiveness of the proposed cross-modal attention design, we replace the FRA module in the MAFB of CFRANet with one frequency-domain attention module, Fca (Frequency Channel Attention) [41], and two widely used attention mechanisms, SE [25] and CBAM [26], and compare their performance within the same CFRANet architecture. Fca enhances channel attention by analyzing frequency-domain representations of feature maps. It applies the 2D Discrete Cosine Transform (DCT) to capture global dependencies and assigns higher weights to informative frequency components, thereby highlighting semantically important features. SE modules recalibrate channel-wise feature responses by explicitly modeling inter-channel dependencies through a lightweight gating mechanism, enhancing discriminative features while suppressing less informative ones. CBAM extends this idea by introducing both channel and spatial attention in a sequential manner, allowing the network to focus not only on what to emphasize (channel) but also on where to emphasize (spatial).

As shown in Table 3, the configuration “CFRANet with Fca replacing FRA module” yields 71.46% mAP, indicating that although Fca effectively models frequency information, it may not fully capture the cross-modal interactions essential for the TPP detection task. “CFRANet with SE replacing FRA module” achieves 76.25% mAP, a modest improvement over the simple concat fusion. “CFRANet with CBAM replacing FRA module” yields a higher mAP of 78.09%, thanks to its more comprehensive attention modeling. However, all three methods still fall short of the original CFRANet, which achieves the best performance of 82.41% mAP. This result validates the advantage of CFRANet’s modality-aware multi-scale fusion strategy over traditional intra-modal attention mechanisms. In terms of inference speed, the Fca, SE and CBAM modules introduce only minor computational overhead, maintaining real-time performance (47.16, 41.59 and 41.00 FPS, respectively). Although CFRANet incurs a larger drop in FPS due to its cross-modal and multi-level attention design, it still operates at 30.96 FPS, which is adequate for real-time applications, while delivering significantly better detection accuracy. This demonstrates that CFRANet strikes an effective balance between performance and computational cost.
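To make the SE baseline concrete, the sketch below follows the squeeze, excitation, and scale steps described above: global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, and channel-wise rescaling. It is a plain-Python illustration under simplifying assumptions (biases omitted, hand-picked toy weights); the name `se_recalibrate` and the weight layout are hypothetical, and this is not the configuration evaluated in Table 3.

```python
import math


def se_recalibrate(features, w_reduce, w_expand):
    """Squeeze-and-Excitation style channel gating.

    features: [channels][height][width]
    w_reduce: [hidden][channels]  (bottleneck layer, biases omitted)
    w_expand: [channels][hidden]  (expansion layer, biases omitted)
    Returns the rescaled features and the per-channel gates.
    """
    # Squeeze: one descriptor per channel via global average pooling.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in features
    ]
    # Excitation: bottleneck with ReLU, then expansion with sigmoid.
    hidden = [
        max(0.0, sum(w * z for w, z in zip(row, squeezed))) for row in w_reduce
    ]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: multiply every value of a channel by that channel's gate.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(features, gates)
    ]
    return scaled, gates


toy_features = [[[2.0, 4.0]], [[1.0, 1.0]]]  # two channels, 1 x 2 each
toy_w_reduce = [[1.0, -1.0]]                 # 2 channels -> 1 hidden unit
toy_w_expand = [[1.0], [-1.0]]               # 1 hidden unit -> 2 channels
scaled, gates = se_recalibrate(toy_features, toy_w_reduce, toy_w_expand)
print(gates)
```

Note that the gate of each channel depends only on statistics of the single feature map it is applied to, which is why the text characterizes SE as an intra-modal mechanism.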

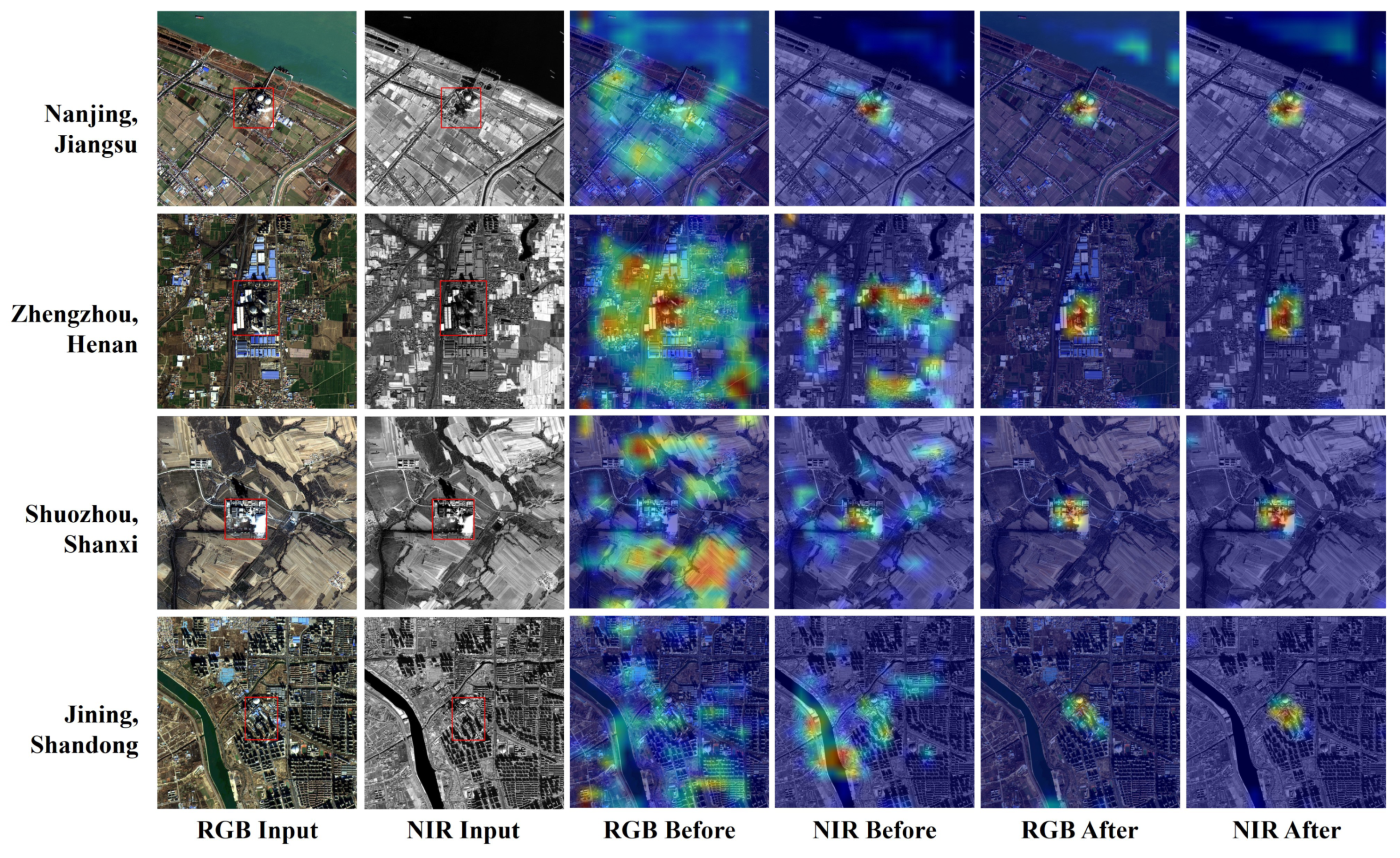

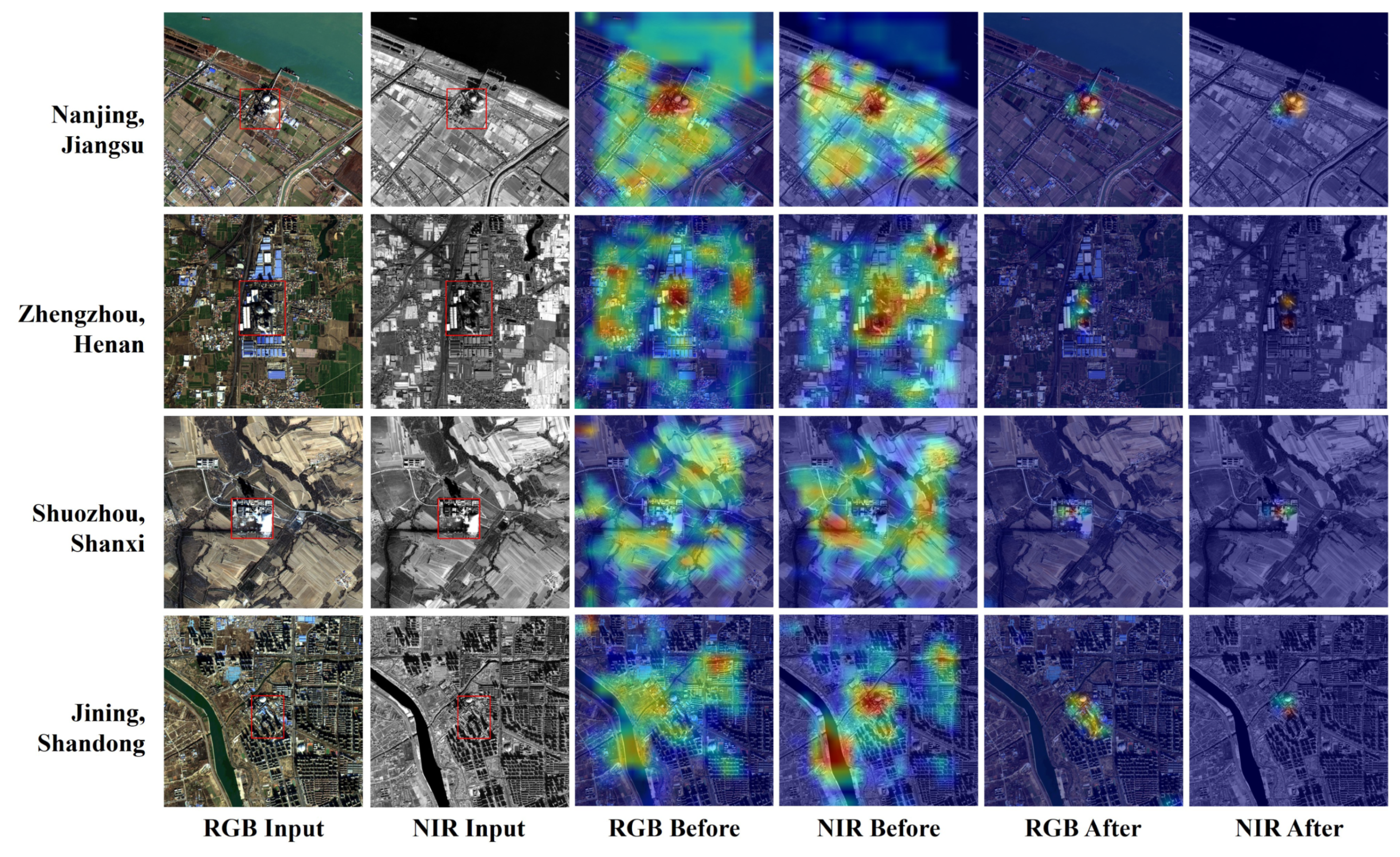

Figure 7 demonstrates the effectiveness of the proposed MAFB fusion strategy through Grad-CAM visualizations on four representative TPPs. Each row corresponds to one TPP and includes six columns: original RGB and NIR images (both with red bounding boxes indicating ground truth annotations), Grad-CAM heatmaps before fusion, and Grad-CAM heatmaps after applying MAFB fusion. It is evident that, after applying MAFB, both RGB and NIR modalities yield sharper and more localized activations around TPP structures. The post-fusion heatmaps exhibit higher concentration and clearer semantic focus, verifying the capability of MAFB to enhance cross-modal feature alignment and discriminative power.

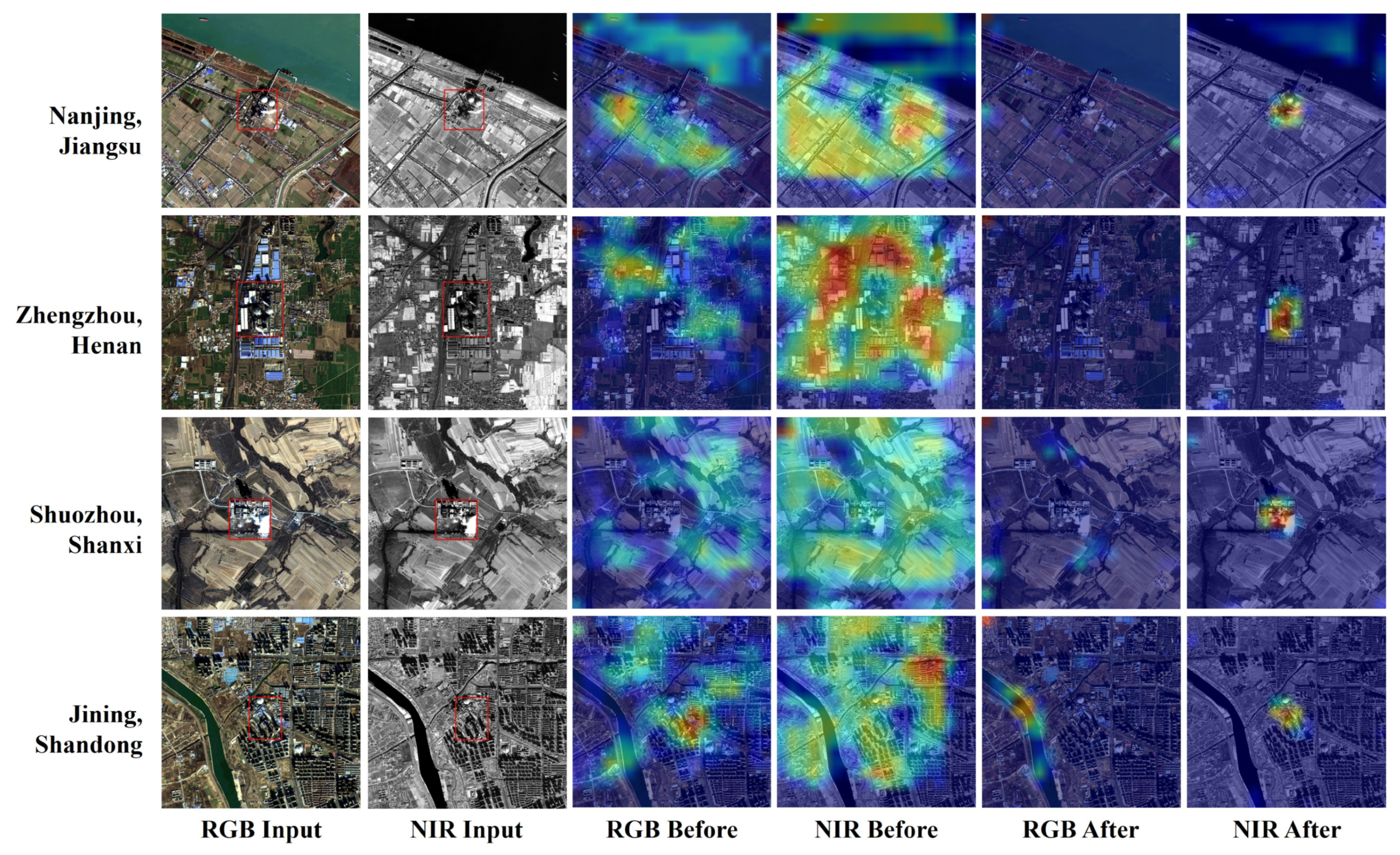

Figure 8 illustrates the effect of substituting FRA with the Fca module. While the NIR stream shows relatively good attention concentration around TPP structures, the RGB stream exhibits minimal improvement, with Grad-CAM activations remaining diffuse and poorly localized. This indicates that Fca provides limited cross-modal enhancement, especially in the RGB pathway.

Figure 9 shows the visualizations when the FRA module in MAFB is replaced with the SE attention module. The results suggest that SE also improves the focus of Grad-CAM activation in many cases, especially in the NIR stream. However, the enhancement is generally less consistent compared to the original MAFB configuration. In contrast, Figure 10 presents the results when the FRA module is replaced with CBAM, where the improvements in activation focus are less noticeable.

Overall, these observations highlight that the proposed FRA module, with its frequency-responsive design, provides more robust cross-modal enhancement compared to generic attention mechanisms such as Fca, SE and CBAM.

3.6. Hyperparameters

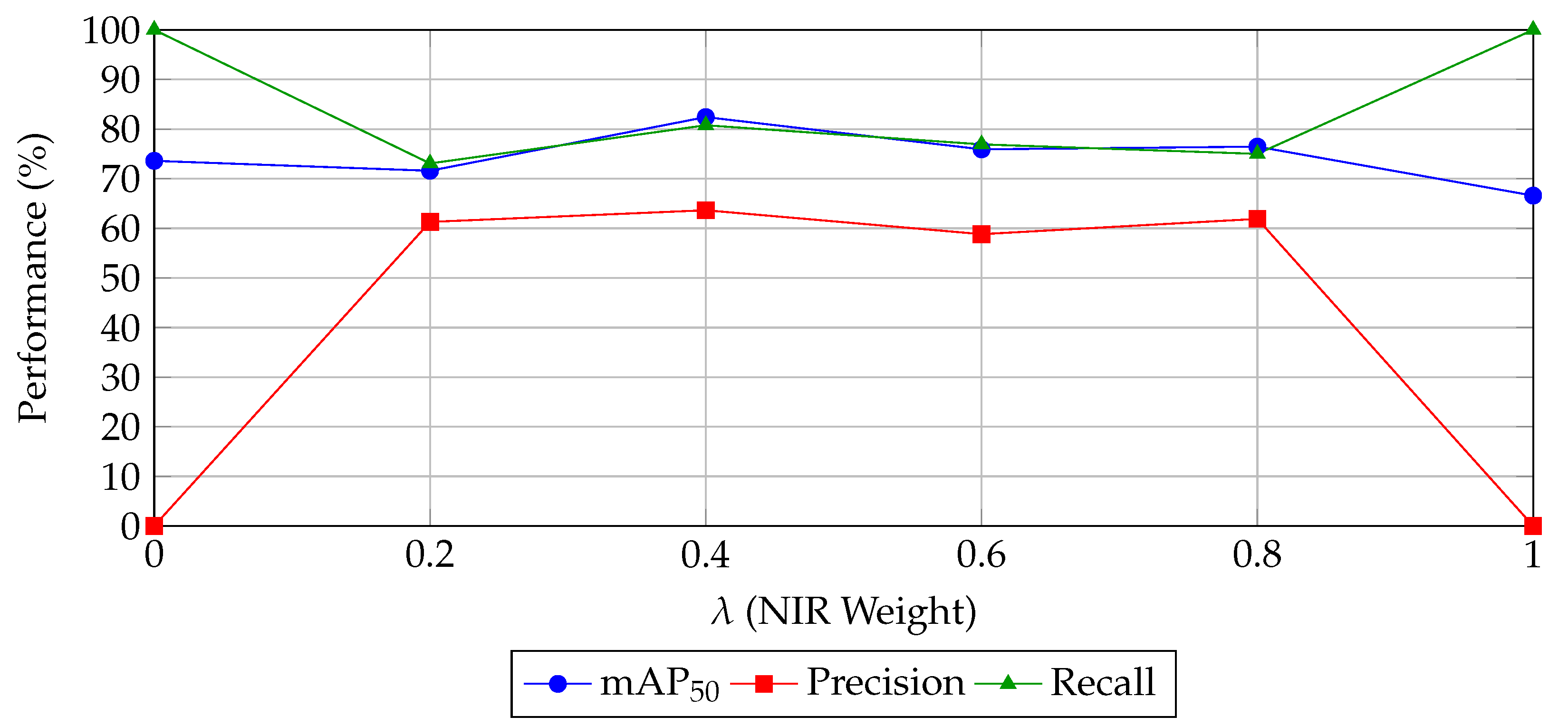

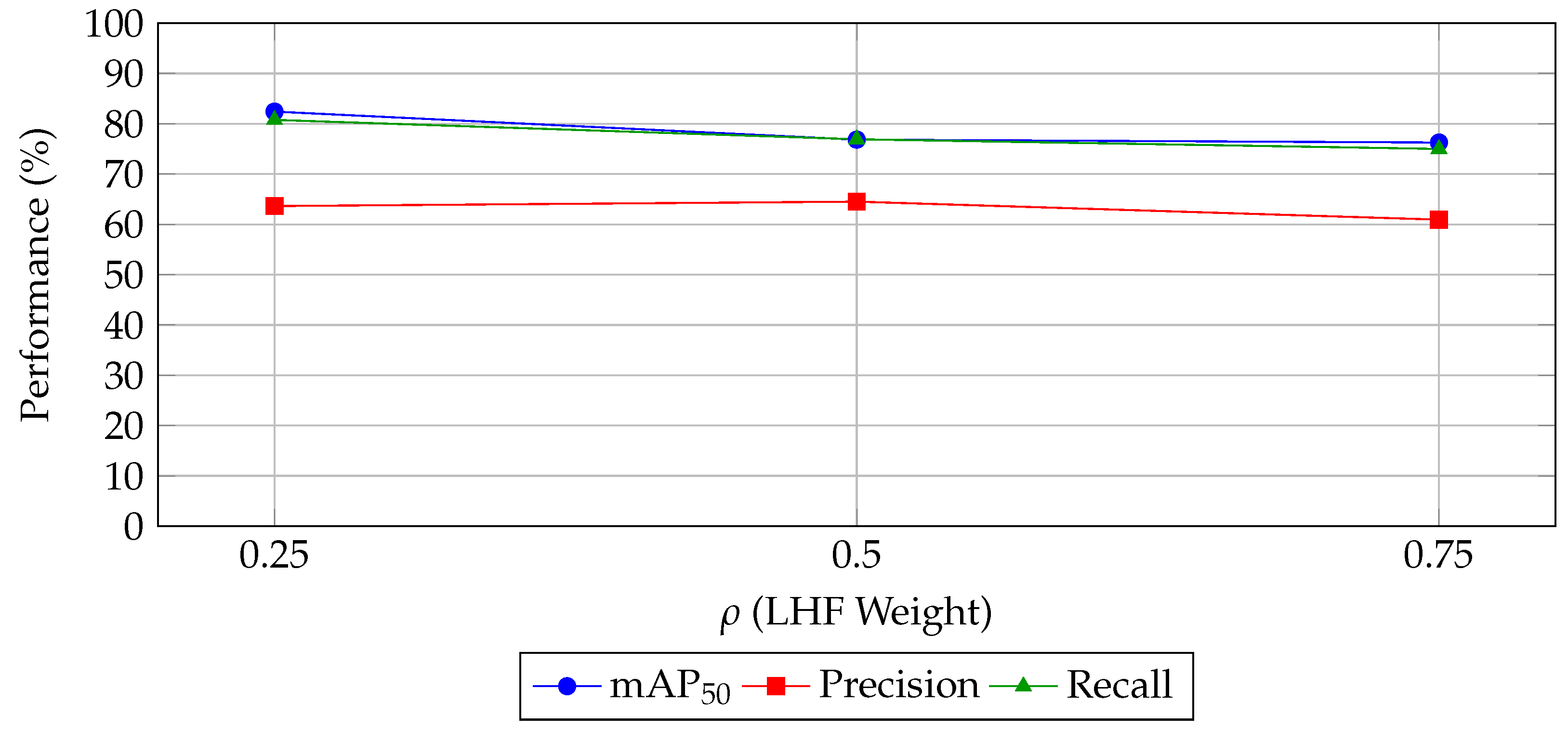

3.6.1. Effect of on Detection Performance

Figure 11 illustrates the impact of varying the hyperparameter on detection performance, as measured by mAP, Precision, and Recall. As increases from to with an interval of , the contribution of the NIR modality in the total loss becomes more significant. When , the model relies solely on RGB supervision, resulting in a relatively high Recall ( ) but extremely low Precision ( ), indicating a high false positive rate. Conversely, (which corresponds to exclusive NIR supervision) also yields poor Precision ( ), despite maintaining full Recall.

The hyperparameter controls the weighting balance between the RGB loss and the NIR loss. Based on empirical ablation experiments, was found to achieve the best overall performance. Specifically, RGB images typically contain richer spatial and structural cues, so slightly emphasizing the RGB loss helps improve detection accuracy. At the same time, retaining the contribution from the NIR modality supplements complementary spectral information. This setting theoretically realizes a reasonable fusion of complementary information from both modalities, effectively enhancing the overall model performance. The best overall performance is observed when , where the model achieves a maximum mAP of , a balanced Precision of , and a Recall of . This setting outperforms both single-modality baselines, demonstrating that moderate fusion of RGB and NIR supervision provides complementary benefits. Additionally, strikes an effective trade-off between modality-specific contributions. Therefore, we adopt as the optimal configuration in our experiments.
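One form consistent with this description is a convex combination of the two per-modality losses, in which a weight of 0 leaves only the RGB term and a weight of 1 leaves only the NIR term. The sketch below illustrates that form only; the exact loss used in the experiments is not reproduced here, and the names `combined_loss` and `nir_weight`, as well as the example loss values and sweep points, are assumptions.

```python
def combined_loss(loss_rgb, loss_nir, nir_weight):
    """Blend the two per-modality losses.

    nir_weight is a hypothetical name for the weighting hyperparameter:
    0.0 keeps only the RGB loss, 1.0 keeps only the NIR loss.
    """
    if not 0.0 <= nir_weight <= 1.0:
        raise ValueError("nir_weight must lie in [0, 1]")
    return (1.0 - nir_weight) * loss_rgb + nir_weight * loss_nir


# Made-up loss values, used only to show how the blend behaves.
example_rgb_loss, example_nir_loss = 0.8, 1.2
for nir_weight in (0.0, 0.25, 0.5, 0.75, 1.0):
    total = combined_loss(example_rgb_loss, example_nir_loss, nir_weight)
    print(f"nir_weight={nir_weight:.2f} -> total loss {total:.3f}")
```

Under this parameterization, emphasizing the RGB loss slightly corresponds to choosing a weight somewhat below the midpoint, while still keeping a non-zero NIR contribution.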

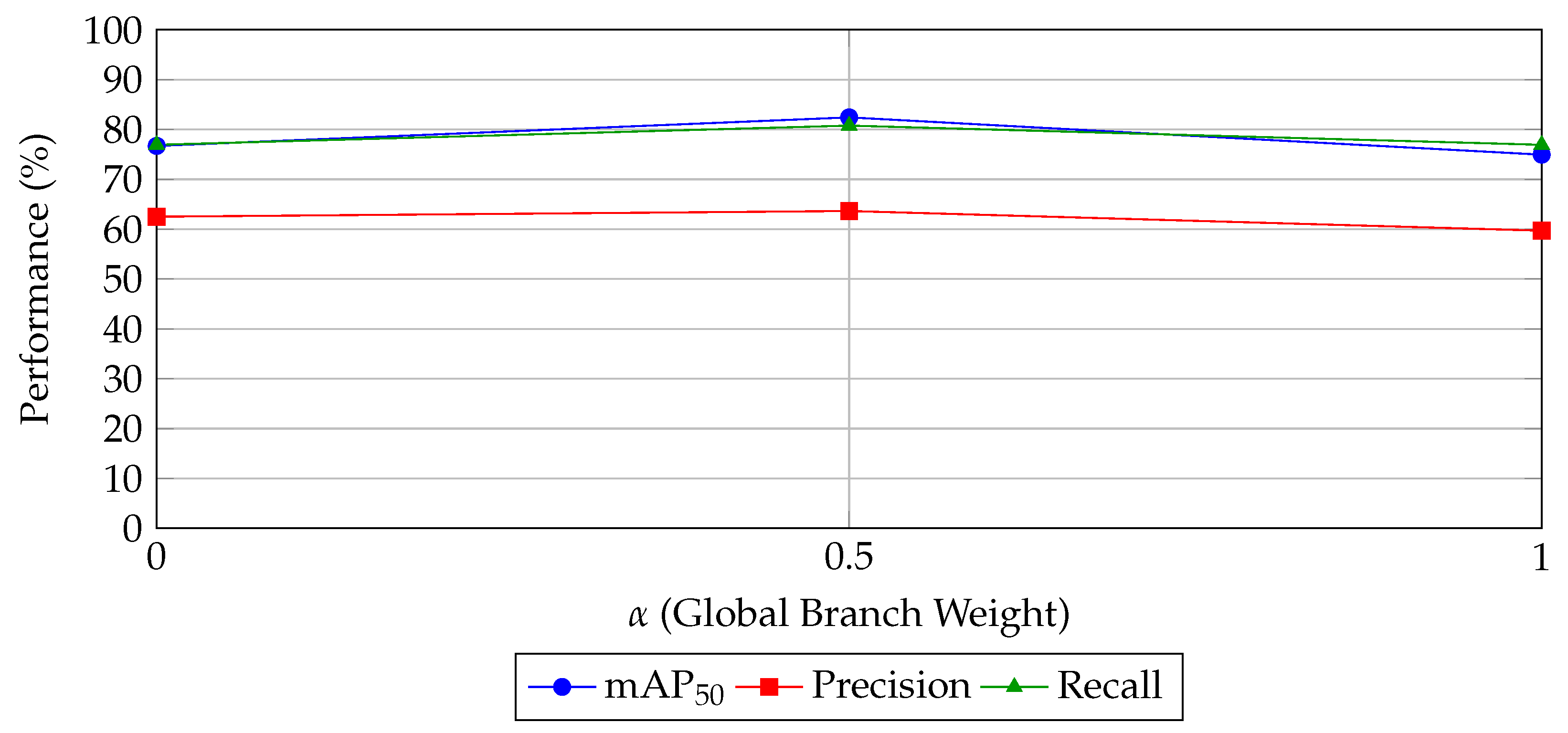

3.6.2. Effect of on Frequency-Responsive Attention

We evaluate the influence of the global-branch weighting parameter in the FRA Module by testing three configurations: (local-only), (balanced local–global fusion), and (global-only). As illustrated in Figure 12, the best performance is achieved when , where the model attains the highest mAP of , along with balanced Precision ( ) and Recall ( ). When , the network relies solely on local spatial cues, which limits its ability to capture long-range dependencies, resulting in a lower mAP of . In contrast, setting fully emphasizes the global frequency-aware branch, but omits crucial local details, leading to a further performance drop (mAP of ).

The FRA module consists of a local frequency branch and a global frequency branch, with balancing their respective contributions. Empirical results indicate that setting , i.e., equal weighting between local and global branches, enables the model to better integrate local texture details and global structural information. From a theoretical perspective, this symmetric weighting simplifies optimization by avoiding bias toward either scale, thereby facilitating the complementary enhancement of multi-scale frequency features. This design aligns well with the module’s objective of capturing complex multi-level features of the target objects. These results also confirm that both local spatial features and global frequency information are indispensable for robust representation learning. Accordingly, we adopt as the default setting in the FRA Module for our experiments.

3.6.3. Effect of on LHF

We further analyze the effect of the weighting factor in the LHF, which controls the relative importance of low- and high-frequency components during frequency-domain fusion. Specifically, we test three settings: (low-frequency dominant), (equal weighting), and (high-frequency dominant). As illustrated in Figure 13, the best performance is obtained when , achieving a maximum mAP of , Precision of , and Recall of . When increases, assigning greater emphasis to high-frequency features, a slight but consistent performance drop is observed. This trend suggests that while high-frequency components contain valuable texture and boundary information, overemphasizing them can lead to loss of global structural cues. Conversely, prioritizing low-frequency information (as in ) leads to more stable and discriminative representations.

These findings confirm that low-frequency global context plays a critical role in complementing local and high-frequency details within the LHF design. Therefore, we adopt as the default configuration in the LHF.
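The idea of trading off low- and high-frequency content can be illustrated on a one-dimensional signal: transform to the frequency domain, scale the low band by one weight and the high band by the complementary weight, and transform back. The sketch below is a toy illustration under that assumption and is not the LHF implementation; `weighted_low_high`, `cutoff`, and `high_weight` are hypothetical names, with a larger `high_weight` placing more emphasis on high frequencies.

```python
import cmath


def dft(signal):
    """Discrete Fourier transform of a real or complex 1-D sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse DFT, returning the real part of each sample."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


def weighted_low_high(signal, cutoff, high_weight):
    """Scale bins up to `cutoff` by (1 - high_weight) and the rest by high_weight."""
    n = len(signal)
    reweighted = []
    for k, coefficient in enumerate(dft(signal)):
        frequency = min(k, n - k)  # bins k and n - k represent the same frequency
        weight = (1.0 - high_weight) if frequency <= cutoff else high_weight
        reweighted.append(weight * coefficient)
    return idft(reweighted)


# A constant level (low frequency) plus an alternating pattern (highest frequency).
toy_signal = [2.0, 0.0] * 4
low_only = weighted_low_high(toy_signal, cutoff=1, high_weight=0.0)
high_only = weighted_low_high(toy_signal, cutoff=1, high_weight=1.0)
print([round(v, 6) for v in low_only])
print([round(v, 6) for v in high_only])
```

With `high_weight=0.0` only the smooth level remains, and with `high_weight=1.0` only the rapid alternation remains, mirroring the observation that overemphasizing high frequencies discards global structure.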

3.7. Cross-Domain Generalization Analysis

To further assess the generalization ability of the proposed CFRANet in diverse visual conditions, we also conduct experiments on the publicly available LLVIP dataset [42]. LLVIP is a standardized multi-modal benchmark specifically designed for various low-light visual tasks such as image fusion, pedestrian detection, and image-to-image translation. It contains 30,976 images (15,488 aligned visible–infrared pairs), most of which were captured under extremely dark environments. Pedestrian annotations are provided, making it a challenging yet representative benchmark for low-light pedestrian detection and multispectral fusion performance evaluation.

Table 4 compares the performance of CFRANet with two state-of-the-art multi-modality methods on the LLVIP dataset. Although our model was originally designed for TPP detection in complex multispectral remote sensing imagery, it still achieves competitive performance on LLVIP, with an mAP50 of 96.2%. This result demonstrates the robustness and adaptability of CFRANet to different cross-modal tasks. While ICAFusion achieves the highest mAP50 of 96.3% and the best overall mAP of 62.3%, CFRANet remains competitive with a comparable mAP50 and an mAP of 59.0%, outperforming CSSA. The relatively lower mAP75 of CFRANet (67.6%) compared to ICAFusion (71.7%) suggests slightly less precise localization at stricter IoU thresholds.
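The effect of a stricter IoU threshold can be seen with a small worked example. The sketch below computes the intersection over union of two axis-aligned boxes and checks the result against 0.5 and 0.75, the two fixed thresholds commonly reported in COCO-style evaluation; the box coordinates are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# A prediction shifted by 10 pixels in both directions from a 100 x 100 target.
ground_truth = (0.0, 0.0, 100.0, 100.0)
prediction = (10.0, 10.0, 110.0, 110.0)

overlap = iou(ground_truth, prediction)
print(f"IoU = {overlap:.3f}")
print("match at IoU >= 0.50:", overlap >= 0.50)
print("match at IoU >= 0.75:", overlap >= 0.75)
```

The shifted box overlaps the target by 8100 of 11900 square pixels (IoU of about 0.68), so it counts as a correct detection at the 0.5 threshold but as a miss at 0.75. A detector with slightly looser localization can therefore score similarly at the lenient threshold and noticeably lower at the strict one.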

This marginal difference can be attributed to CFRANet’s architectural design, which is optimized for detecting structured composite objects such as TPPs in high-resolution satellite images. Despite not being tailored for pedestrian detection in low-light conditions, CFRANet’s strong performance on LLVIP validates the generalizability of its dual-stream cross-modal fusion framework.

4. Discussion

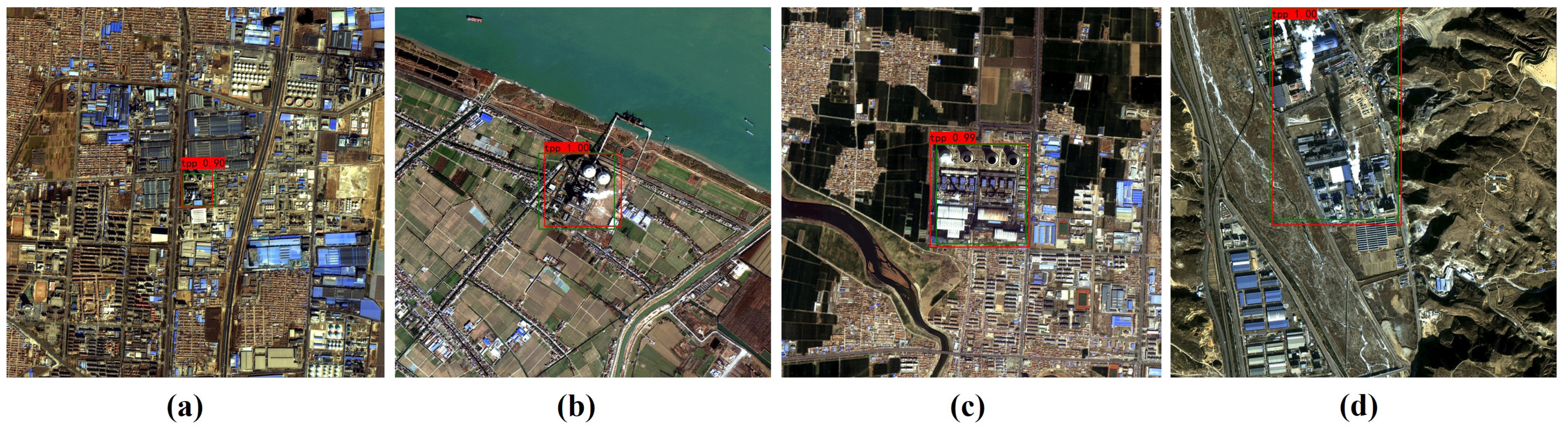

We propose a robust detection framework for TPPs with strong generalization across diverse and complex geographic environments. As shown in Figure 14, CFRANet accurately detects large, medium, and small-scale TPPs under challenging background conditions. In the spatial domain, TPPs typically occupy limited regions of an image. After Fourier transform, these localized features (e.g., smokestacks, cooling towers) are dispersed across the frequency spectrum, potentially causing aliasing when multiple TPPs are present. However, CFRANet remains effective in such cases due to two key factors: (1) deep networks can learn robust global frequency patterns associated with key TPP structures, even when spatially mixed; (2) the joint fusion of spatial and frequency-domain features helps resolve ambiguities by preserving local cues while leveraging global context. Furthermore, extensive experiments across 18 provinces and regions (Figure 15) confirm CFRANet’s robustness and generalization ability. Notably, in the Guangdong example, two spatially separated TPPs within a single image are both correctly identified, demonstrating its capability to handle multi-instance and aliasing-prone scenarios.

These results underscore CFRANet’s effectiveness and reliability in real-world deployments across heterogeneous spatial and spectral environments.
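The remark that spatially localized features are dispersed across the frequency spectrum can be checked on a one-dimensional toy signal. In the sketch below, a single non-zero sample stands in for one localized structure, and a second sample is added to mimic two separated structures in the same image; the signal length and positions are arbitrary choices for illustration.

```python
import cmath


def dft_magnitudes(signal):
    """Magnitude of each bin of the discrete Fourier transform of a 1-D signal."""
    n = len(signal)
    return [
        abs(sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]


# One spatially localized "structure": a single non-zero sample.
single = [0.0] * 16
single[5] = 1.0

# Two separated structures in the same signal.
double = list(single)
double[11] = 1.0

single_spectrum = dft_magnitudes(single)
double_spectrum = dft_magnitudes(double)

print([round(m, 3) for m in single_spectrum])
print([round(m, 3) for m in double_spectrum])
```

The single impulse contributes equally to every frequency bin, so no bin can be attributed to one spatial location. With two impulses, their contributions add in every bin and interfere, which is the mixing that the spatial branch helps to disentangle.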

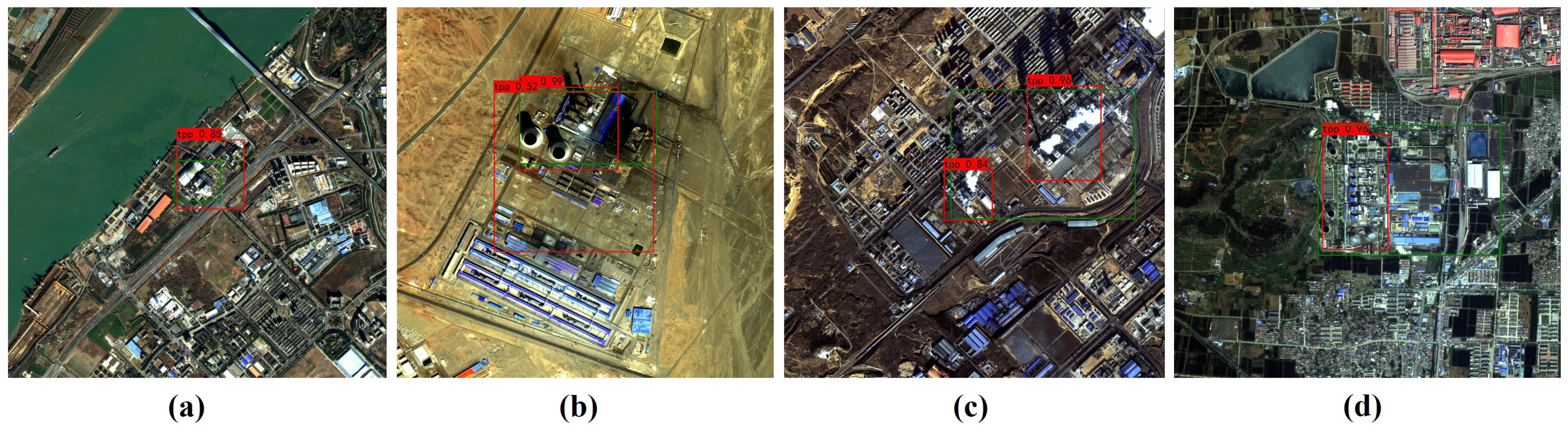

Figure 16 illustrates common failure cases of our method, primarily involving TPPs with irregular shapes or dispersed internal components. These structures are often situated in dense urban areas, making them challenging to distinguish from surrounding buildings. Consequently, conventional rectangular annotations may not precisely outline the true extent of the plant, occasionally covering non-relevant structures. In future work, we aim to enhance the robustness of our framework by introducing component-level instance segmentation or oriented bounding boxes to better capture object geometries. We also plan to improve efficiency by exploring model optimization strategies such as network pruning, quantization, or lightweight backbone architectures (e.g., MobileNetV3, ShuffleNetV2). These approaches have the potential to reduce the computational footprint without significantly compromising detection accuracy.
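The motivation for oriented bounding boxes can be quantified with simple geometry. The sketch below takes a hypothetical elongated plant footprint rotated by 45 degrees and compares the area of its tight oriented box with the area of the axis-aligned box that encloses it; the dimensions and helper names are illustrative assumptions, not measurements from the dataset.

```python
import math


def rotated_corners(cx, cy, width, height, angle_deg):
    """Corner points of a width x height rectangle rotated about its centre."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_extents = [
        (-width / 2, -height / 2),
        (width / 2, -height / 2),
        (width / 2, height / 2),
        (-width / 2, height / 2),
    ]
    return [
        (cx + x * cos_t - y * sin_t, cy + x * sin_t + y * cos_t)
        for x, y in half_extents
    ]


def axis_aligned_area(points):
    """Area of the smallest axis-aligned rectangle enclosing the points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical footprint: 200 x 50 units, rotated by 45 degrees.
footprint_width, footprint_height = 200.0, 50.0
corners = rotated_corners(0.0, 0.0, footprint_width, footprint_height, 45.0)

oriented_area = footprint_width * footprint_height
enclosing_area = axis_aligned_area(corners)
fill_ratio = oriented_area / enclosing_area

print(f"oriented box area:     {oriented_area:.0f}")
print(f"axis-aligned box area: {enclosing_area:.0f}")
print(f"fraction of the axis-aligned box covered by the plant: {fill_ratio:.2f}")
```

In this example the plant covers only about a third of its axis-aligned box, so roughly two thirds of the annotated region would be surrounding structures. An oriented box removes most of that slack for elongated, rotated layouts.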