Abstract

Dual polarimetric SAR data, which are openly available, can reflect the biophysical and geometric information of terrain. Combined with time-series observations, they can effectively capture the dynamic evolution of crop scattering characteristics across growth stages. In practice, however, crop planting is often spatially dispersed: the same crop may be grown in many separate plots, and existing methods do not adequately exploit the correlation between such dispersed plots of the same crop. This study proposes a crop classification method based on multi-temporal dual polarimetric data that exploits information from both nearby and distant plots, using superpixel segmentation and a HyperGraph neural network, respectively. First, the dual polarimetric covariance matrices of the multi-temporal data are used to perform superpixel segmentation of neighboring pixels, so that the resulting superpixels conform closely to the actual plot shapes over the whole observation period. Then, a HyperGraph adjacency matrix is constructed, and a HyperGraph neural network (HGNN) is used to learn the features of plots of the same crop that lie far apart. The method exploits the complementary temporal, polarimetric, and spatial dimensions of the data to achieve high-precision crop classification. Experiments on Sentinel-1 data show that, under the optimal parameter settings, classifying combined temporal superpixel scattering features with the HGNN clearly improves classification accuracy by accounting for both near- and far-distance spatial correlations of crop types.

1. Introduction

Synthetic Aperture Radar (SAR) is an active microwave remote sensing technology. Owing to its working principle, it provides day-and-night, all-weather observation and high-resolution imaging [1]. It can penetrate clouds and, partially, vegetation cover to obtain information on the scattering characteristics of terrain. Among existing space-borne SAR systems, dual polarimetric SAR has become an important data source for agricultural monitoring because it offers a good compromise among resolution, swath width, scattering representation, and timely acquisition. By transmitting one polarization and receiving two orthogonal polarizations, dual polarimetric SAR roughly doubles the information dimension compared with single-polarization SAR. Compared with fully polarimetric SAR systems, it strikes a balance between information content and acquisition efficiency.

Although dual polarimetric data provide some polarimetric information, they contain only two channels, which to some extent limits their ability to fully characterize crops. Multi-temporal observations have the unique advantage of continuously recording how crops change during the growth cycle, including the evolution of their morphology, structure, and physiological characteristics. This temporal information can effectively compensate for the limited information content of dual polarimetric data. Different crops show markedly different characteristics at their respective growth stages [2]. Combining multi-temporal data with dual polarization exploits the advantages of both [3], allowing different crop types to be identified more accurately and improving the classification accuracy of PolSAR data [4].

In the literature, multi-temporal dual PolSAR crop classification methods use image pixels as the processing unit and rely mainly on the polarimetric features and texture properties of each pixel. Salma et al. applied unsupervised K-means clustering to two polarimetric parameters and then analyzed how the scattering mechanisms of ginger, tobacco, rice, cabbage, and pumpkin changed in the corresponding two-dimensional feature plane during their growth stages [5]; Wang et al. extracted 12 polarimetric parameters from the covariance matrix and its decomposition, evaluated the sensitivity of each parameter to crop phenology, and built a classification model from the best parameter combination [6]. Machine learning algorithms have gradually been adopted to make better use of polarimetric features; common choices include maximum likelihood classification, decision trees [7], random forest (RF) [8,9], and Support Vector Machine (SVM) [10,11]. Their ability to process large volumes of data efficiently makes high-precision crop identification feasible. Liu Rui et al. used multi-temporal Sentinel-1A SAR data over Shihezi city, Xinjiang, with three classifiers (RF, CART decision tree, and SVM), and found that RF achieved the highest accuracy [12]. Dobrinić et al. also used RF to classify crop types from multi-temporal Sentinel-1 data [13]. Meanwhile, deep learning is showing strong advantages in crop classification: by training neural network models, it can capture subtle changes during crop growth more accurately and thus improve classification accuracy. Xiao et al. investigated multi-temporal SAR crop classification in rural areas of China using a pixel-based k-nearest neighbor algorithm in a subspace, reaching an overall accuracy of up to 98.2% for ten categories [14]. Xue et al. proposed a sequential SAR target classification method based on a spatial–temporal ensemble convolutional network, which achieved higher classification accuracy [15]. Teimouri et al. classified 14 crop categories in Denmark by combining an FCN with a ConvLSTM, achieving higher accuracy [16]; Wei et al. proposed a U-Net-based classification method for multi-temporal dual polarimetric crop data that achieved high accuracy in complex crop growing environments [17].

However, as the resolution of dual polarimetric SAR systems increases, pixel-based classification of high-resolution images usually suffers from speckle noise effects [18], and its accuracy degrades because of the high intraclass variability and low interclass separability of individual pixels. Some researchers have therefore moved beyond the pixel-based framework and developed object-based methods, which aggregate neighboring pixels with similar features [19,20,21,22]. Object-level processing better matches the way farmland is actually distributed in plots. For example, when a large area of wheat is classified, an object-based method can recognize the contiguous wheat area as a single object, instead of producing the fragmented, scattered results typical of pixel-based classification. Unlike pixel-level methods that ignore spatial context, superpixels group pixels into locally homogeneous regions that align well with field boundaries, so using them as the basic processing unit lets the model exploit these inherent spatial relationships for more accurate classification.

In practice, Clauss et al. segmented a Sentinel-1 time series with Simple Linear Iterative Clustering (SLIC) and extracted the mean VH backscatter coefficient of each superpixel; applied to six different rice growing regions, the approach reached an average overall accuracy of 83% [23]. Other researchers have used Simple Non-Iterative Clustering (SNIC) for segmentation and shown that object-based algorithms are more accurate than pixel-based ones. Xiang et al. combined backscatter coefficients with texture, elevation, and slope information in an object-oriented approach to improve land cover classification accuracy [24]. Emilie et al. used an object-level random forest classifier to classify crops from a Sentinel-1 time series spanning January to August 2020 [25]. Gao et al. proposed a root-mean-square-based temporal polarization similarity metric and generated superpixels with an edge detection method using stacked two-dimensional Gaussian-shaped windows, which outperformed the traditional method on Sentinel-1 dual polarimetric SAR data [26]. Huang Chong et al. proposed an Object-Based Dynamic Time Warping (OBDTW) algorithm that exploits long-term Sentinel-1 SAR features to improve rice classification accuracy [27].

However, effectively fusing multi-temporal dual polarimetric data remains challenging. First, crop scattering properties change dynamically over time, so simple feature concatenation is ineffective. Second, the high-dimensional data contain both redundant and complementary information, which calls for a model that can selectively exploit useful features while suppressing noise and redundancy.

Although many researchers have studied multi-temporal dual polarimetric SAR crop classification, some problems still need further investigation. First, the polarimetric scattering features of crops may change between acquisition dates, so segmenting each date separately easily yields superpixel boundaries that are inconsistent across dates. Second, most existing classification algorithms rely on local features or neighborhood information, which makes it difficult to capture the similarities and associations between distant plots.

To address these problems, this article designs a multi-temporal dual polarimetric SAR crop classification method based on plot distribution information. The main contributions are as follows.

(1) A superpixel segmentation model based on multi-temporal data was constructed. Superpixels were generated from the combined covariance matrices of all acquisition dates, so that the temporal covariance matrix constrains the segmentation and keeps superpixel boundaries consistent and stable. This strategy integrates the information of all dates over the whole observation period and effectively alleviates the boundary mismatch caused by single-date segmentation.

(2) A HyperGraph neural network was used to connect distant crop plots with other plots of the same type. Although plots of the same crop may be spatially dispersed, their scattering characteristics are similar and correlated. This article therefore used a HyperGraph neural network to establish higher-order relationships among multiple superpixels, capturing latent feature correlations and category consistency and thereby improving the accuracy and robustness of crop classification from PolSAR data.

(3) Different scattering features were extracted and combined to explore their classification performance in complex scenes. Four typical dual polarimetric features of the superpixels were extracted, and classification was first performed with each feature individually to assess its suitability for crop classification. Feature combinations were then investigated, and the best feature combination achieved the highest accuracy on the experimental dataset.

2. Materials and Methods

2.1. Study Area

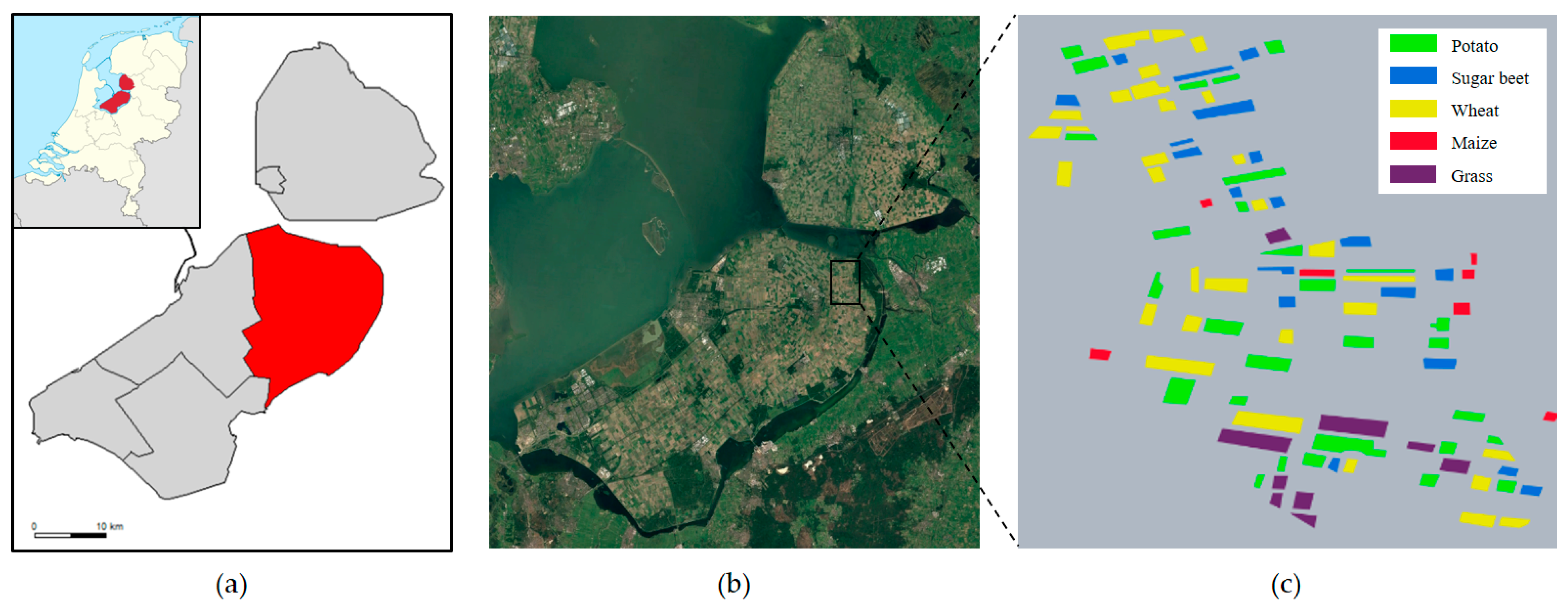

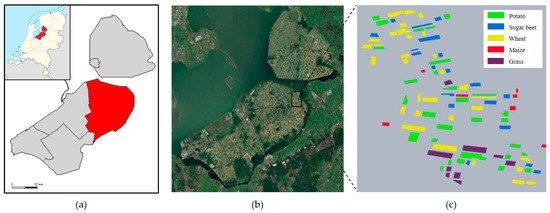

The study area is the Flevoland region in the Netherlands. To avoid the influence of alignment errors between observations from different sensors [28], only Sentinel-1A SLC data acquired in IW mode are used in this article. The multi-temporal dataset for this region was downloaded from the Sentinel-1 open access platform. Ground-truth classes were labeled according to the map provided by Khabbazan et al. [29] and historical Google Earth images.

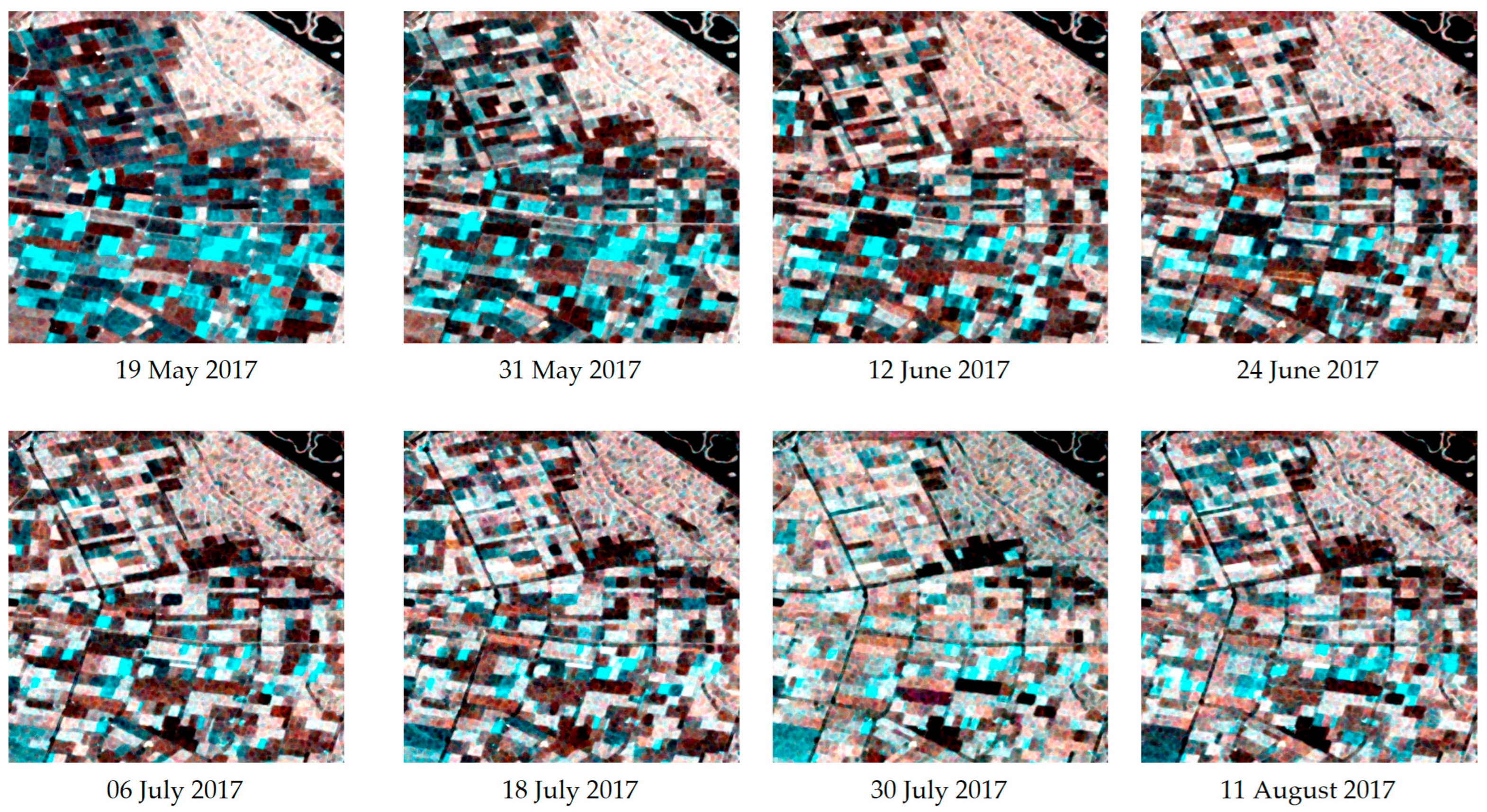

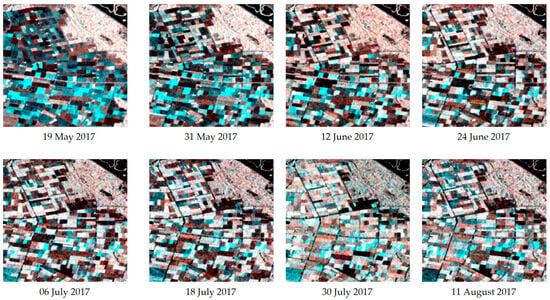

The Flevoland time-series dataset consists of 8 scenes acquired from May to August 2017. Each scene is 637 × 644 pixels; the ground-truth crop map and the pseudo-color images are shown in Figure 1 and Figure 2. The dataset covers five crop types: potato, sugar beet, winter wheat, corn, and grass.

Figure 1.

Study area and real crop labeling; (a) presents the division of Flevoland into districts; (b) shows an optical image of Google Earth; (c) displays the real crop information.

Figure 2.

Pseudo-color images of Flevoland in 8 temporal data scenes. The three-channel composition of the pseudo-color image is (red), (green), and (blue).

2.2. Related Works

2.2.1. Dual PolSAR Data Representation

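Dual polarimetric SAR acquires the scattering information of targets under different combinations of transmit and receive polarizations [30], and the polarimetric covariance matrix can be used to analyze and interpret this information and reveal the polarimetric scattering features of the target. In the typical dual polarization VV/VH mode, the polarimetric covariance matrix is expressed as

$$
\mathbf{C}_2=\left\langle \mathbf{k}\mathbf{k}^{H}\right\rangle=\begin{bmatrix}\langle |S_{VV}|^{2}\rangle & \langle S_{VV}S_{VH}^{*}\rangle\\ \langle S_{VH}S_{VV}^{*}\rangle & \langle |S_{VH}|^{2}\rangle\end{bmatrix},\qquad \mathbf{k}=\begin{bmatrix}S_{VV}\\ S_{VH}\end{bmatrix} \tag{1}
$$

where $S_{VV}$ and $S_{VH}$ are the scattering coefficients for vertical transmission with vertical reception and for vertical transmission with horizontal reception, respectively, $\langle\cdot\rangle$ denotes spatial averaging, $^{*}$ denotes complex conjugation, and $(\cdot)^{H}$ denotes the conjugate transpose.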

Other dual polarimetric modes (e.g., HH/HV) are treated analogously to the VV/VH mode. Because VV/VH is the main acquisition configuration of the Sentinel-1 TOPS mode, the VV/VH mode is described in detail.

The eigenvalue decomposition of the covariance matrix is expressed as Equation (2).

$$
\mathbf{C}_2=\mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{H}=\sum_{i=1}^{2}\lambda_i\,\mathbf{u}_i\mathbf{u}_i^{H} \tag{2}
$$

where $\boldsymbol{\Lambda}=\operatorname{diag}(\lambda_1,\lambda_2)$ is the diagonal eigenvalue matrix, with $\lambda_1\ge\lambda_2\ge 0$; $\lambda_1$ reflects the dominant scattering mechanism of the target. $\mathbf{U}=[\mathbf{u}_1,\mathbf{u}_2]$ is the corresponding unitary eigenvector matrix, and each eigenvector has unit norm. Three polarimetric features can be derived from this eigenvalue decomposition.

The scattering entropy ($H$) describes the randomness of the target's scattering and takes values from 0 to 1. It is defined as

$$
H=-\sum_{i=1}^{2}P_i\log_{2}P_i,\qquad P_i=\frac{\lambda_i}{\lambda_1+\lambda_2}
$$

Complementing the scattering entropy, the polarization anisotropy ($A$) describes the relative importance of the two eigenvalues and is defined as

$$
A=\frac{\lambda_1-\lambda_2}{\lambda_1+\lambda_2}
$$

$A$ also ranges from 0 to 1. When the two scattering mechanisms have similar intensities, $A$ is close to 0, indicating that neither mechanism dominates the observed area. When the two eigenvalues differ greatly, $A$ increases, indicating that one scattering mechanism dominates.

The mean scattering angle ($\alpha$) is closely related to the scattering mechanism and ranges from 0° to 45°.
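To illustrate the eigenvalue-based features above, the following NumPy sketch computes $H$ and $A$ for a single 2 × 2 covariance matrix. It assumes the base-2 logarithm, which keeps $H$ within [0, 1] for two eigenvalues; $\alpha$ is omitted because its computation depends on eigenvector conventions not detailed here. The function name `dual_pol_h_a` is hypothetical.

```python
import numpy as np

def dual_pol_h_a(C: np.ndarray) -> tuple:
    """Entropy H and anisotropy A of a 2x2 Hermitian dual-pol covariance matrix."""
    # eigvalsh returns the real eigenvalues of a Hermitian matrix in ascending order
    lam = np.linalg.eigvalsh(C)[::-1]          # reorder so that lambda1 >= lambda2
    lam = np.clip(lam, 0.0, None)              # guard against tiny negative round-off
    p = lam / lam.sum()                        # pseudo-probabilities P_i
    nz = p[p > 0]                              # 0 * log(0) is taken as 0
    H = float(-(nz * np.log2(nz)).sum())       # base-2 log keeps H in [0, 1]
    A = float((lam[0] - lam[1]) / lam.sum())
    return H, A

C = np.array([[2.0, 0.5 + 0.5j], [0.5 - 0.5j, 1.0]])
H, A = dual_pol_h_a(C)
```

Equal eigenvalues give maximal entropy and zero anisotropy, while a single non-zero eigenvalue gives the opposite, matching the interpretation of $H$ and $A$ above.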

2.2.2. Dual PolSAR Wishart Distribution Characteristics

Dual polarimetric SAR data usually require multi-look processing to reduce speckle, compress the data, and improve data quality. This is achieved by averaging several independent 1-look polarimetric covariance matrices [31]. Equation (6) defines the average covariance matrix after $n$-look processing:

$$
\mathbf{C}=\frac{1}{n}\sum_{i=1}^{n}\mathbf{k}_i\mathbf{k}_i^{H} \tag{6}
$$

where $n$ denotes the number of looks and the vector $\mathbf{k}_i$ denotes the $i$th 1-look data sample.
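Equation (6) reduces to a single matrix product over the looks. The sketch below assumes the $n$ 1-look vectors $[S_{VV}, S_{VH}]$ are stored as the rows of an (n, 2) complex array; the function name `multilook_covariance` and the random samples are hypothetical. Multiplying the result by $n$ gives the matrix $\mathbf{Z}$ of Equation (7).

```python
import numpy as np

def multilook_covariance(k: np.ndarray) -> np.ndarray:
    """n-look covariance (1/n) * sum_i k_i k_i^H from an (n, 2) array of 1-look vectors."""
    k = np.asarray(k)
    n = k.shape[0]
    # (k.T @ k.conj())[a, b] = sum_i k_i[a] * conj(k_i[b]): the sum of outer products k_i k_i^H
    return (k.T @ k.conj()) / n

rng = np.random.default_rng(1)
k = rng.standard_normal((9, 2)) + 1j * rng.standard_normal((9, 2))  # e.g. a 3 x 3 look window
C = multilook_covariance(k)
Z = k.shape[0] * C  # un-normalized sum of outer products
```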

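The polarimetric covariance matrix after $n$-look processing can be expressed as Equation (7).

$$
\mathbf{Z}=n\mathbf{C}=\sum_{i=1}^{n}\mathbf{k}_i\mathbf{k}_i^{H} \tag{7}
$$

It has been shown [32] that for multi-look polarimetric SAR data, the matrix $\mathbf{Z}$ follows the complex Wishart distribution:

$$
p(\mathbf{Z})=\frac{|\mathbf{Z}|^{\,n-q}\exp\!\left[-\operatorname{Tr}\!\left(\boldsymbol{\Sigma}^{-1}\mathbf{Z}\right)\right]}{K(n,q)\,|\boldsymbol{\Sigma}|^{n}},\qquad K(n,q)=\pi^{\frac{q(q-1)}{2}}\prod_{i=1}^{q}\Gamma(n-i+1)
$$

where $\boldsymbol{\Sigma}=E\left[\mathbf{k}\mathbf{k}^{H}\right]$ is the spatial ensemble average of the multi-look polarimetric covariance matrix, $\operatorname{Tr}(\cdot)$ denotes the matrix trace, $q$ denotes the dimension of the vector $\mathbf{k}$, and $K(n,q)$ is the normalization factor. For dual polarization data, $q=2$. $\Gamma(\cdot)$ is the gamma function, defined as

$$
\Gamma(x)=\int_{0}^{\infty}t^{x-1}e^{-t}\,dt
$$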

Replacing $\boldsymbol{\Sigma}$ with $\boldsymbol{\Sigma}_m$, the covariance matrix of class $\omega_m$, the density is written as $p(\mathbf{Z}\mid\omega_m)$, $m=1,\dots,M$. The maximum likelihood classifier evaluates whether $\mathbf{Z}$ belongs to $\omega_m$. Lee et al. [31] derived a distance measure by maximizing $P(\omega_m)\,p(\mathbf{Z}\mid\omega_m)$ over $m$, defining the distance metric for classifying $n$-look polarimetric SAR data as

$$
d(\mathbf{Z},\omega_m)=n\ln|\boldsymbol{\Sigma}_m|+\operatorname{Tr}\!\left(\boldsymbol{\Sigma}_m^{-1}\mathbf{Z}\right)-\ln P(\omega_m) \tag{11}
$$

As the number of looks $n$ increases, the influence of the prior probability $P(\omega_m)$ on class discrimination decreases. If nothing is known about the prior probabilities of the classes, they can be assumed equal, in which case the distance metric no longer depends on $P(\omega_m)$. Dropping this constant term, dividing by $n$, and substituting $\mathbf{Z}=n\mathbf{C}$, Equation (11) simplifies to

$$
d(\mathbf{C},\omega_m)=\ln|\boldsymbol{\Sigma}_m|+\operatorname{Tr}\!\left(\boldsymbol{\Sigma}_m^{-1}\mathbf{C}\right) \tag{12}
$$

Equation (12) is the Wishart distance metric. Because crop categories differ markedly in their polarimetric scattering characteristics, modeling the polarimetric covariance matrix with the Wishart distribution captures these differences accurately and enables a finer distinction between crop types.
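Minimum-distance assignment with Equation (12) can be sketched as follows, assuming equal class priors and positive definite class covariance matrices; the helper names are hypothetical. The sketch uses `slogdet` and `solve` instead of an explicit determinant and inverse for numerical stability.

```python
import numpy as np

def wishart_distance(C: np.ndarray, Sigma_m: np.ndarray) -> float:
    """ln|Sigma_m| + Tr(Sigma_m^{-1} C), i.e. Equation (12) with equal priors."""
    _, logdet = np.linalg.slogdet(Sigma_m)          # log|Sigma_m| without overflow
    trace = np.trace(np.linalg.solve(Sigma_m, C))   # Tr(Sigma_m^{-1} C) via a linear solve
    return float(logdet + trace.real)               # trace is real for Hermitian inputs

def wishart_classify(C: np.ndarray, class_covariances) -> int:
    """Index of the class covariance with the smallest Wishart distance to C."""
    return int(np.argmin([wishart_distance(C, S) for S in class_covariances]))

C = np.array([[2.0, 0.3 + 0.4j], [0.3 - 0.4j, 1.0]])
label = wishart_classify(C, [3.0 * C, C, 0.5 * C])
```

For a fixed $\mathbf{C}$, the distance is smallest when $\boldsymbol{\Sigma}_m=\mathbf{C}$, where it equals $\ln|\mathbf{C}|+q$; this is why the example assigns `C` to the middle class.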

2.2.3. SLIC Superpixel Segmentation

Simple Linear Iterative Clustering (SLIC) is an efficient and widely used superpixel segmentation algorithm. When applied to polarimetric data, its core idea is to jointly cluster the colors and spatial locations of the pixels of a three-channel pseudo-color image. It uses a weighted distance metric to balance color and spatial features, ensuring uniform superpixel regions and intact boundaries. The main steps of SLIC are as follows [33]:

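(1) Initialize seed points: the image is first divided into a regular grid according to the desired number of superpixels $K$, and the pixel at the center of each grid cell is selected as an initial seed point. Each seed point has position $(x_k,y_k)$, and its color is usually represented by its $[l_k,a_k,b_k]$ value in the CIELAB color space.

(2) Define the search range: the search range of each seed point is limited to a $2S\times 2S$ square area, where $S=\sqrt{N/K}$ is the spacing between seed points and $N$ denotes the total number of pixels in the image.

(3) Compute the distance metric: for each pixel, compute its distance from the nearby seed points. SLIC uses a combined metric $D$ of the color distance $d_c$ and the spatial distance $d_s$:

$$
D=\sqrt{d_c^{2}+\left(\frac{d_s}{S}\right)^{2}m^{2}} \tag{13}
$$

$$
d_c=\sqrt{(l_j-l_k)^{2}+(a_j-a_k)^{2}+(b_j-b_k)^{2}},\qquad d_s=\sqrt{(x_j-x_k)^{2}+(y_j-y_k)^{2}}
$$

where $j$ indexes the pixel, $k$ the seed point, and $m$ is the compactness weight that balances color similarity against spatial proximity.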

(4) Assign pixels to the nearest seed point: based on the computed distance $D$, each pixel is assigned to the superpixel of its nearest seed point, so that pixels with similar color and spatial features fall into the same superpixel.

(5) Update the seed point positions: for each superpixel, the mean color and spatial coordinates of all its pixels are computed, and the seed point is moved to this centroid, making it more representative.

(6) Iterate optimization: repeat step (3) to step (5) until the seed point position no longer changes obviously, or the preset maximum number of iterations is reached. It has been shown [34] that the algorithm can converge after 10 iterations or less, so the number of iterations is often set to 10.

(7) Post-processing: each superpixel region is checked for connectivity, and disconnected small regions are subsumed into their neighboring superpixels, eliminating small isolated regions that may occur.
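The steps above can be sketched in NumPy as follows. This is a simplified, hypothetical implementation, not the reference SLIC code of [33]: it uses the combined distance of step (3) with an assumed compactness weight `m`, runs a fixed number of iterations, and omits the connectivity post-processing of step (7). The per-pixel feature distance `feat_dist` is a pluggable assumption, so a Wishart distance over stacked covariance-matrix features could be passed in place of the default Euclidean color distance.

```python
import numpy as np

def slic(features, K, m=10.0, n_iter=10, feat_dist=None):
    """Minimal SLIC sketch; features has shape (rows, cols, ...) with per-pixel features."""
    features = np.asarray(features)
    features = features.astype(np.result_type(features.dtype, np.float64))
    rows, cols = features.shape[:2]
    S = max(1, int(round(np.sqrt(rows * cols / K))))     # seed spacing S = sqrt(N / K)
    if feat_dist is None:
        # default "color" distance d_c: Euclidean over the trailing feature axis
        feat_dist = lambda f, c: np.sqrt(((f - c) ** 2).sum(axis=-1))
    # step (1): seeds at the centres of an S x S grid
    gy, gx = np.meshgrid(np.arange(S // 2, rows, S), np.arange(S // 2, cols, S), indexing="ij")
    centers_yx = np.stack([gy.ravel(), gx.ravel()], axis=1).astype(float)
    centers_f = features[gy.ravel(), gx.ravel()].copy()
    yy, xx = np.mgrid[0:rows, 0:cols]
    labels = np.full((rows, cols), -1)
    for _ in range(n_iter):                               # step (6): fixed iteration count
        dist = np.full((rows, cols), np.inf)
        for k in range(len(centers_yx)):
            cy, cx = centers_yx[k]
            # step (2): search only a 2S x 2S window around the seed
            y0, y1 = max(int(cy) - S, 0), min(int(cy) + S + 1, rows)
            x0, x1 = max(int(cx) - S, 0), min(int(cx) + S + 1, cols)
            d_c = feat_dist(features[y0:y1, x0:x1], centers_f[k])
            d_s = np.hypot(yy[y0:y1, x0:x1] - cy, xx[y0:y1, x0:x1] - cx)
            # step (3): combined distance D = sqrt(d_c^2 + (d_s / S)^2 m^2)
            D = np.sqrt(d_c ** 2 + (d_s / S) ** 2 * m ** 2)
            # step (4): keep the closest seed seen so far (basic slices are views)
            win_d, win_l = dist[y0:y1, x0:x1], labels[y0:y1, x0:x1]
            closer = D < win_d
            win_d[closer] = D[closer]
            win_l[closer] = k
        # step (5): move each seed to the centroid and mean feature of its pixels
        for k in range(len(centers_yx)):
            mask = labels == k
            if mask.any():
                centers_yx[k] = yy[mask].mean(), xx[mask].mean()
                centers_f[k] = features[mask].mean(axis=0)
    return labels  # step (7), connectivity post-processing, is not implemented here

img = np.zeros((20, 20, 1))
img[:, 10:, 0] = 10.0          # two homogeneous "fields"
lab = slic(img, K=4, m=1.0)
```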

2.3. Proposed Method

2.3.1. SLIC Superpixel Segmentation Based on Multi-Temporal Dual Polarimetric Covariance Matrix

To address the inconsistency of superpixels across dates caused by color variations, this paper proposes an approach that segments the multi-temporal data jointly. The dual polarimetric covariance matrices of all dates are assembled into a block diagonal matrix. This structure preserves the polarimetric feature structure of each date while ensuring that all dates are considered as a whole during segmentation, which improves the accuracy and consistency of the segmentation across the growth period.

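Suppose $\mathbf{C}_t$ ($t=1,\dots,T$) is the dual polarimetric covariance matrix at date $t$, where $n\mathbf{C}_t$ follows the complex Wishart distribution $W_C(n,\boldsymbol{\Sigma}_t)$ with $n$ degrees of freedom and covariance $\boldsymbol{\Sigma}_t$. Assuming that the $\mathbf{C}_t$ are mutually independent, there is no correlation between dates, and the block diagonal matrix $\mathbf{C}$ can be described as follows:

$$
\mathbf{C}=\operatorname{blkdiag}(\mathbf{C}_1,\mathbf{C}_2,\dots,\mathbf{C}_T)=\begin{bmatrix}\mathbf{C}_1 & \mathbf{0} & \cdots & \mathbf{0}\\ \mathbf{0} & \mathbf{C}_2 & \cdots & \mathbf{0}\\ \vdots & \vdots & \ddots & \vdots\\ \mathbf{0} & \mathbf{0} & \cdots & \mathbf{C}_T\end{bmatrix} \tag{16}
$$

The $\mathbf{C}_t$ of all dates are thus jointly assembled into the block diagonal covariance matrix of Equation (16).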

For each sub-block $\mathbf{C}_t$, this can be expressed as

Then

The Wishart distribution is additive for independent matrices. In the construction of the block diagonal matrix $\mathbf{C}$, each submatrix satisfies the above conditions, and the submatrices are mutually independent. Therefore, the block diagonal matrix assembled from them can also be modeled with the Wishart distribution, its covariance keeps the block diagonal structure, and its density factorizes over the sub-blocks.

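The probability density function of the block diagonal matrix $\mathbf{C}$ is shown in Equation (20).

$$
p(\mathbf{C})=\prod_{t=1}^{T}p(\mathbf{C}_t) \tag{20}
$$

where $p(\mathbf{C}_t)$ is the probability density function of each sub-block. This study replaces the color distance $d_c$ in the SLIC method with the Wishart distance $d_W$. For each sub-block, the Wishart distance is computed as in Equation (12):

$$
d_W(\mathbf{C}_{i,t},\mathbf{V}_{j,t})=\ln|\mathbf{V}_{j,t}|+\operatorname{Tr}\!\left(\mathbf{V}_{j,t}^{-1}\mathbf{C}_{i,t}\right)
$$

The Wishart distance of the block diagonal matrix is then the sum of the Wishart distances of the sub-blocks:

$$
d_W(i,j)=\sum_{t=1}^{T}\left[\ln|\mathbf{V}_{j,t}|+\operatorname{Tr}\!\left(\mathbf{V}_{j,t}^{-1}\mathbf{C}_{i,t}\right)\right]=\ln|\mathbf{V}_j|+\operatorname{Tr}\!\left(\mathbf{V}_j^{-1}\mathbf{C}_i\right)
$$

where $i$ denotes a pixel, $j$ denotes a superpixel, and $\mathbf{V}_j=\operatorname{blkdiag}(\mathbf{V}_{j,1},\dots,\mathbf{V}_{j,T})$ denotes the covariance matrix at the center of superpixel $j$.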

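The new SLIC distance, Equation (23), is finally obtained by substituting $d_W$ for $d_c$ in Equation (13), with $d_s$, $S$, and $m$ as defined there:

$$
D=\sqrt{d_W(i,j)^{2}+\left(\frac{d_s}{S}\right)^{2}m^{2}} \tag{23}
$$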
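For a pixel matrix $\mathbf{C}=\operatorname{blkdiag}(\mathbf{C}_1,\dots,\mathbf{C}_T)$ and a superpixel-center matrix $\mathbf{V}=\operatorname{blkdiag}(\mathbf{V}_1,\dots,\mathbf{V}_T)$, the Wishart distance of the block diagonal matrices equals the sum of the per-date distances, because $|\mathbf{V}|=\prod_t|\mathbf{V}_t|$ and $\mathbf{V}^{-1}\mathbf{C}$ is itself block diagonal. The sketch below checks this numerically; the helper names are hypothetical, and random Hermitian positive definite matrices stand in for real covariance data.

```python
import numpy as np

def block_diag(*blocks):
    """Place square blocks on the diagonal of a zero matrix."""
    size = sum(b.shape[0] for b in blocks)
    out = np.zeros((size, size), dtype=np.result_type(*blocks))
    i = 0
    for b in blocks:
        k = b.shape[0]
        out[i:i + k, i:i + k] = b
        i += k
    return out

def wishart_distance(C, V):
    """ln|V| + Tr(V^{-1} C) for Hermitian positive definite V."""
    _, logdet = np.linalg.slogdet(V)
    return float(logdet + np.trace(np.linalg.solve(V, C)).real)

def multitemporal_wishart_distance(C_blocks, V_blocks):
    """Sum of per-date Wishart distances between a pixel and a superpixel centre."""
    return sum(wishart_distance(C, V) for C, V in zip(C_blocks, V_blocks))

def random_covariance(rng, q=2):
    """Hypothetical stand-in for a dual-pol covariance matrix: X X^H + I is Hermitian PD."""
    X = rng.standard_normal((q, q)) + 1j * rng.standard_normal((q, q))
    return X @ X.conj().T + np.eye(q)

rng = np.random.default_rng(0)
C_t = [random_covariance(rng) for _ in range(8)]   # e.g. 8 acquisition dates
V_t = [random_covariance(rng) for _ in range(8)]
d_sum = multitemporal_wishart_distance(C_t, V_t)
d_block = wishart_distance(block_diag(*C_t), block_diag(*V_t))
print(d_sum, d_block)  # equal up to floating-point round-off
```

In practice the per-date form avoids building the 2T × 2T matrices explicitly.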

2.3.2. Extraction of Superpixel Polarimetric Features

The superpixel segmentation based on the temporal dual polarimetric covariance matrices divides the data into superpixel regions using the combined spatial and Wishart distance. The segmentation result can be represented as a set of superpixel regions $\mathcal{S}=\{S_1,S_2,\dots,S_M\}$, where each $S_m$ is a superpixel region and the union of all superpixels is the entire image region $\Omega$, i.e., $\bigcup_{m=1}^{M}S_m=\Omega$.

For PolSAR data, scattering features are the main form of representation and carry rich discriminative information. After superpixel segmentation, each superpixel region was used as a mask to localize the features, so as to fully exploit the information within each region.

Suppose a superpixel region $S_m$ contains the set of pixels $P_m=\{p_1,p_2,\dots,p_{N_m}\}$, and let $\mathbf{F}$ be a scattering feature matrix in which each pixel $p$ has a scattering feature $\mathbf{f}_p$. Applying a mask for the region $S_m$ extracts the features of all its pixels, forming the masked feature set $\mathbf{F}_m=\{\mathbf{f}_p\mid p\in P_m\}$.

Statistics were then computed for the features within each superpixel region. Because the superpixel segmentation accounts both for the similarity between pixels and for the temporal changes in the time-series data, the pixels within each superpixel largely belong to the same category. On this basis, the mean feature within each region was taken as its representative feature:

$$
\bar{\mathbf{f}}_m=\frac{1}{N_m}\sum_{p\in P_m}\mathbf{f}_p
$$

Computing this statistic for all superpixel regions yields a new feature set in which each superpixel is represented by the mean of its local features. These means effectively reflect the dual polarimetric characteristics of each region; four such dual polarimetric features were extracted in this study. The resulting superpixel features are used in the subsequent classification to help the model capture differences in crop type and growth state.
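Computing the per-superpixel mean does not require a separate mask per region: given a label map, `np.bincount` accumulates the sums of all regions at once. The sketch assumes superpixel labels are consecutive integers 0 to M-1 and that per-date features are stacked along the last axis; the function name is hypothetical.

```python
import numpy as np

def superpixel_means(labels, features):
    """Mean feature vector of every superpixel.

    labels:   (rows, cols) int array, superpixel index 0..M-1 for each pixel
    features: (rows, cols, F) array, e.g. per-date scattering features stacked on the last axis
    returns:  (M, F) array; row m is the mean feature of superpixel m
    """
    lab = np.asarray(labels).ravel()
    feat = np.asarray(features, dtype=float).reshape(lab.size, -1)
    M = lab.max() + 1
    counts = np.bincount(lab, minlength=M)
    # one weighted bincount per feature channel sums that channel over each superpixel
    sums = np.stack([np.bincount(lab, weights=feat[:, j], minlength=M)
                     for j in range(feat.shape[1])], axis=1)
    return sums / counts[:, None]

labels = np.array([[0, 0], [1, 1]])
features = np.array([[[1.0, 10.0], [3.0, 30.0]], [[5.0, 50.0], [7.0, 70.0]]])
means = superpixel_means(labels, features)
```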

2.3.3. Establishing Spatial Distribution Relationship with HyperGraph Neural Network

In crop classification, crop plots are often spatially dispersed; that is, the same crop is not necessarily planted contiguously, which makes classification more difficult. For such dispersed plots, a HyperGraph can model relationships from the local superpixel scale up to the larger farm scale. At the local scale, it focuses on the features inside each superpixel to capture detailed information; at the farm scale, its hyperedges combine scattered superpixels into larger groups, capturing the distribution pattern of plots of the same crop and enabling these scattered plots to be classified effectively. Each HyperGraph node represents the features of a single plot, while each hyperedge links multiple plots with similar features. Regardless of whether these plots are spatially adjacent, they can be connected by a hyperedge as long as they share similar features. Nodes and hyperedges together make the HyperGraph well suited to tasks with complex similarity relationships; in crop classification, this allows plots of the same category to be recognized regardless of the distance between them.

The core of the HGNN lies in the construction of the HyperGraph: a hyperedge can connect multiple nodes, so the network can learn from the features of all nodes on the same hyperedge and thus capture higher-order associations between objects. In this study, the HyperGraph was constructed from the ground-truth labels of the training superpixels, so each crop type has its own hyperedge that groups the known samples of that class regardless of their spatial location. The HGNN then uses this structure to learn features through the hyperedges. The construction is detailed in Algorithm 1. A HyperGraph is a pair $\mathcal{G}=(\mathcal{V},\mathcal{E})$, where $\mathcal{V}$ is the set of $N$ nodes and $\mathcal{E}$ is the set of hyperedges; each hyperedge can connect multiple nodes.

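| Algorithm 1. HyperGraph Construction |
|---|
| Input: $Y$: set of node labels, $\mathcal{V}$: set of nodes, $\mathbf{X}$: feature matrix for nodes |
| Output: $\mathbf{H}$: adjacency matrix of the HyperGraph |
| Steps: |
| 1: Initialize HyperGraph $\mathcal{G}=(\mathcal{V},\mathcal{E})$ with $\mathcal{E}=\varnothing$ |
| 2: Extract unique labels $\{c_1,\dots,c_K\}$ from $Y$ |
| 3: For each unique label $c_k$ where $k=1,\dots,K$: |
| Find node indices $\mathcal{V}_k=\{v_i\in\mathcal{V}\mid y_i=c_k\}$ |
| Add hyperedge $e_k=\mathcal{V}_k$ to $\mathcal{E}$, $\mathcal{E}\leftarrow\mathcal{E}\cup\{e_k\}$ |
| 4: Construct adjacency matrix $\mathbf{H}\in\{0,1\}^{\lvert\mathcal{V}\rvert\times\lvert\mathcal{E}\rvert}$ where $v\in\mathcal{V}$, $e\in\mathcal{E}$: |
| Set $h(v,e)=1$ if $v\in e$, otherwise $h(v,e)=0$ |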

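Given a HyperGraph $\mathcal{G}$, its structure can be represented by an adjacency (incidence) matrix $\mathbf{H}\in\{0,1\}^{|\mathcal{V}|\times|\mathcal{E}|}$:

$$
h(v,e)=\begin{cases}1, & v\in e\\ 0, & v\notin e\end{cases}
$$

where $h(v,e)$ indicates whether node $v$ belongs to hyperedge $e$: if $h(v,e)=1$, $v$ belongs to $e$; otherwise $h(v,e)=0$. The degree of a node in the HyperGraph is the number of hyperedges connected to it:

$$
d(v)=\sum_{e\in\mathcal{E}}h(v,e)
$$

and the degree of a hyperedge is the number of nodes it contains:

$$
\delta(e)=\sum_{v\in\mathcal{V}}h(v,e)
$$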
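Algorithm 1 and the degree definitions can be sketched as follows, assuming superpixels are indexed 0 to N-1 and only the training superpixels carry labels; the names `class_hypergraph`, `train_idx`, and `train_labels` are hypothetical. In this construction, unlabeled nodes belong to no hyperedge and therefore have degree zero.

```python
import numpy as np

def class_hypergraph(train_idx, train_labels, n_nodes):
    """Incidence matrix H (n_nodes x n_classes) with one hyperedge per crop class."""
    train_idx = np.asarray(train_idx)
    train_labels = np.asarray(train_labels)
    classes = np.unique(train_labels)              # step 2: unique labels
    H = np.zeros((n_nodes, classes.size))          # step 1: start with no memberships
    for e, c in enumerate(classes):                # step 3: one hyperedge per label
        H[train_idx[train_labels == c], e] = 1.0   # step 4: h(v, e) = 1 if v is in e
    return H, classes

H, classes = class_hypergraph([0, 2, 3, 5], [1, 0, 1, 0], n_nodes=6)
d_v = H.sum(axis=1)   # node degrees d(v)
d_e = H.sum(axis=0)   # hyperedge degrees delta(e)
```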

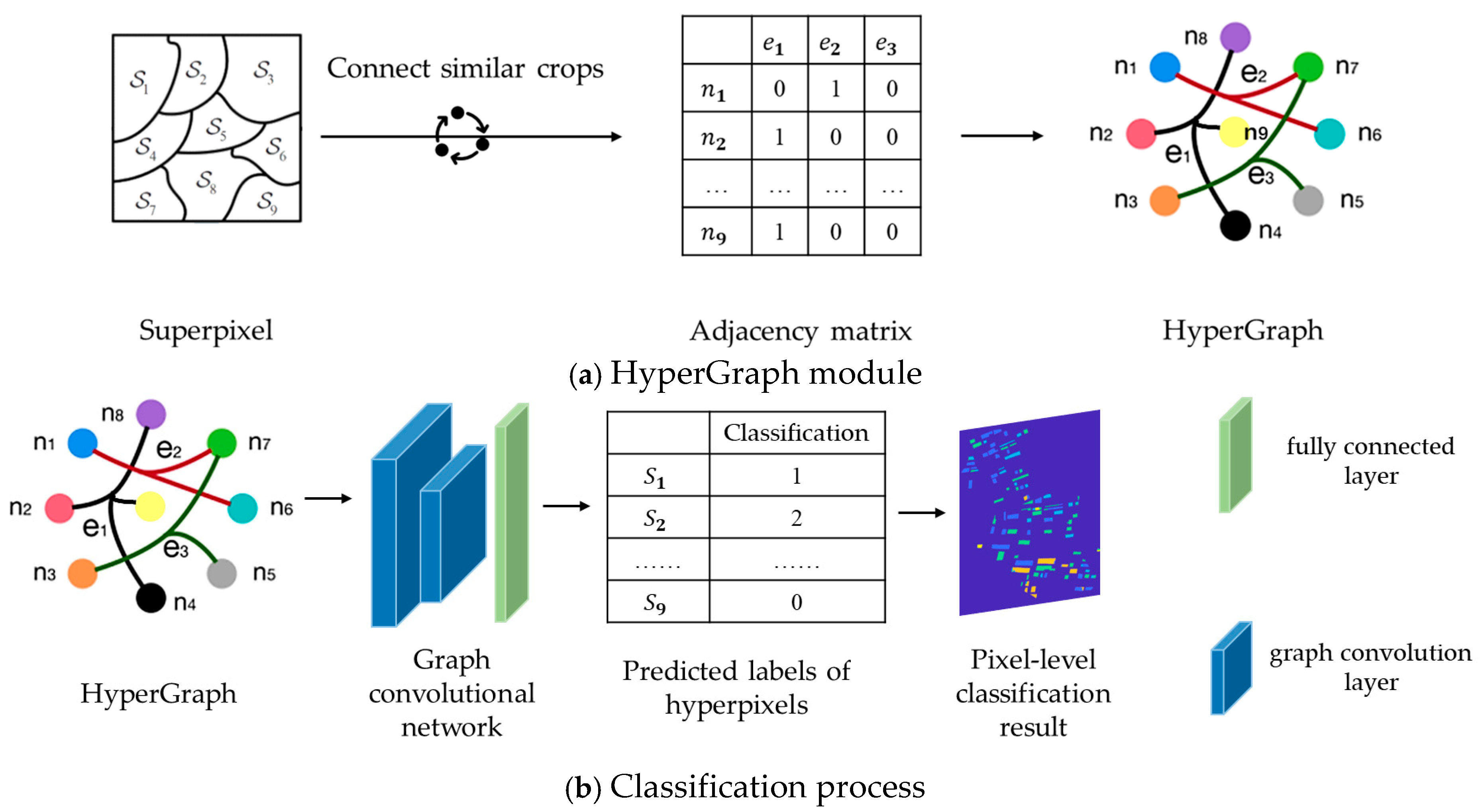

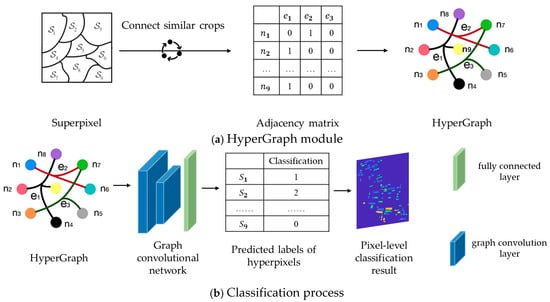

In the HGNN, the HyperGraph structure is mainly utilized and combined with multiple graph convolutional layers and fully connected layers. The purpose of these structures is to achieve feature aggregation, propagation and classification. The process of feature aggregation and propagation is carried out through hyperedges, the structure is shown in Figure 3a. Each node receives information from other nodes through the hyperedges, similar to the “message passing mechanism” in a traditional graph neural network (GNN). However, unlike a GNN, an HGNN can receive information from multiple related nodes through the hyperedge at the same time, and learn the features of each node on the hyperedge, which makes it possible to learn richer feature expressions in the classification task. The classification process based on HGNN is shown in Figure 3b.

Figure 3.

Classification flowchart of HGNN.

The layers of the HGNN are as follows. The input layer receives the node features

$$
\mathbf{X}^{(0)}\in\mathbb{R}^{N\times F}
$$

where $N$ is the number of nodes and $F$ is the feature dimension of each node. In this study, the input features are the superpixel scattering features.

The first graph convolution layer updates the representation of each node by aggregating information from other nodes through the hyperedges. This relies on the adjacency matrix $\mathbf{H}$ and the node features $\mathbf{X}^{(0)}$: the connections in $\mathbf{H}$ identify which nodes share a hyperedge, and the node information is aggregated along these connections. The node features are updated as in Equation (31), using the normalized HyperGraph matrix $\mathbf{G}$ derived from $\mathbf{H}$ in Equation (32), with all hyperedges weighted equally:

$$
\mathbf{X}^{(1)}=\sigma\!\left(\mathbf{G}\,\mathbf{X}^{(0)}\boldsymbol{\Theta}^{(1)}\right) \tag{31}
$$

$$
\mathbf{G}=\mathbf{D}_v^{-1/2}\,\mathbf{H}\,\mathbf{D}_e^{-1}\,\mathbf{H}^{T}\,\mathbf{D}_v^{-1/2} \tag{32}
$$

where $\boldsymbol{\Theta}^{(1)}$ is the learnable weight matrix of the first layer, which the network adjusts during training to learn the most effective feature representation; $\sigma(\cdot)$ is the nonlinear activation function, here ReLU, which introduces nonlinearity and increases the expressive power of the network; and $\mathbf{D}_v=\operatorname{diag}\big(d(v)\big)$ and $\mathbf{D}_e=\operatorname{diag}\big(\delta(e)\big)$ are the node degree matrix and the hyperedge degree matrix, respectively, which normalize the incidence matrix and keep the aggregation numerically stable.

The second graph convolution layer works like the first, again aggregating the updated features through the hyperedges:

$$
\mathbf{X}^{(2)}=\sigma\!\left(\mathbf{G}\,\mathbf{X}^{(1)}\boldsymbol{\Theta}^{(2)}\right)
$$

where $\boldsymbol{\Theta}^{(2)}$ is the learnable weight matrix of the second layer, likewise adjusted during training to extract more discriminative features.

After the two graph convolution layers, the resulting features are fed to a fully connected layer for the final classification, which maps them to the category space as in Equation (34):

$$
\hat{\mathbf{Y}}=\operatorname{softmax}\!\left(\mathbf{X}^{(2)}\mathbf{W}_{fc}+\mathbf{b}\right) \tag{34}
$$

where $\mathbf{W}_{fc}$ is the weight matrix of the fully connected layer, $\mathbf{b}$ is the bias term, and $\hat{\mathbf{Y}}$ is the softmax output giving the predicted probability of each category.
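A forward pass of Equations (31), (32), and (34) can be sketched in NumPy as follows. The sketch assumes unit hyperedge weights, uses random weights in place of trained ones, and maps zero degrees to a zero scaling so that nodes outside every hyperedge do not cause a division by zero; training with Adam (Section 3.1) would require an autodiff framework and is not shown. All function names and layer sizes are hypothetical.

```python
import numpy as np

def hgnn_propagation_matrix(H):
    """Dv^{-1/2} H De^{-1} H^T Dv^{-1/2} with unit hyperedge weights."""
    d_v, d_e = H.sum(axis=1), H.sum(axis=0)
    # zero-degree nodes/hyperedges get a zero factor instead of a division by zero
    inv_sqrt_dv = np.zeros_like(d_v)
    inv_sqrt_dv[d_v > 0] = 1.0 / np.sqrt(d_v[d_v > 0])
    inv_de = np.zeros_like(d_e)
    inv_de[d_e > 0] = 1.0 / d_e[d_e > 0]
    left = inv_sqrt_dv[:, None] * H * inv_de[None, :]   # Dv^{-1/2} H De^{-1}
    return (left @ H.T) * inv_sqrt_dv[None, :]          # (...) H^T Dv^{-1/2}

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def hgnn_forward(X, G, theta1, theta2, W_fc, b):
    X1 = relu(G @ X @ theta1)        # first hypergraph convolution
    X2 = relu(G @ X1 @ theta2)       # second hypergraph convolution
    return softmax(X2 @ W_fc + b)    # fully connected layer + softmax

rng = np.random.default_rng(0)
N, F, hidden, n_cls = 6, 32, 16, 5   # illustrative sizes only
H = np.zeros((N, 2))
H[[0, 3, 4], 0] = 1.0                # hyperedge of one class
H[[1, 2, 5], 1] = 1.0                # hyperedge of another class
G = hgnn_propagation_matrix(H)
probs = hgnn_forward(rng.standard_normal((N, F)), G,
                     rng.standard_normal((F, hidden)),
                     rng.standard_normal((hidden, hidden)),
                     rng.standard_normal((hidden, n_cls)), np.zeros(n_cls))
```

Each row of `G` averages the features of the nodes that share a hyperedge, which is how distant superpixels of the same class exchange information.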

By constructing the HGNN and performing multi-layer message passing, the HyperGraph is able to capture the higher-order relationships between plots and gradually learn the complex relationships between nodes through multi-layer convolution. This multi-layer message propagation mechanism enables the HyperGraph to better handle spatial dispersion and complex plot characteristics in crop classification.

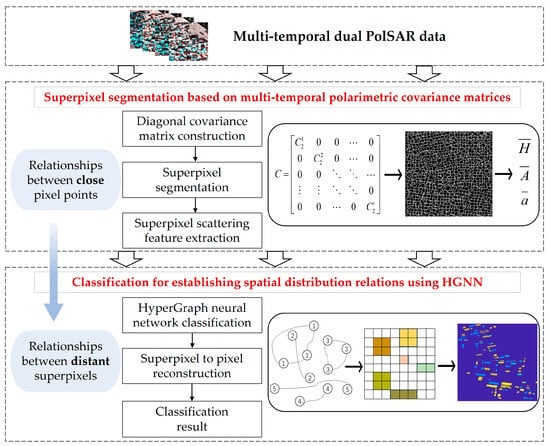

2.4. Overall Process

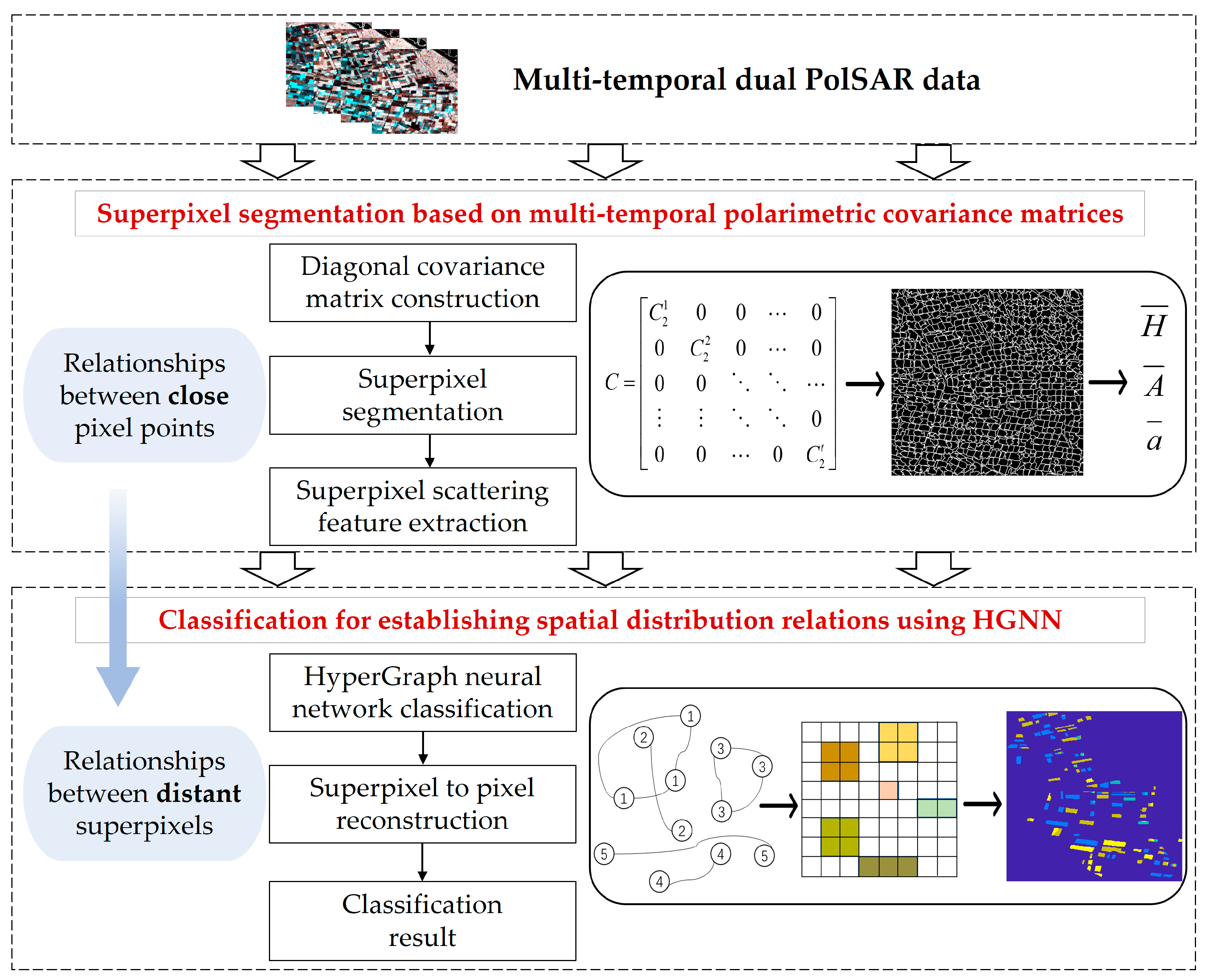

Figure 4 gives the overall flowchart of this article’s method. The proposed method consists of two main steps: superpixel segmentation based on multi-temporal polarimetric covariance matrices and classification for establishing spatial distribution relations using a HyperGraph neural network.

Figure 4.

The overall flowchart of proposed method.

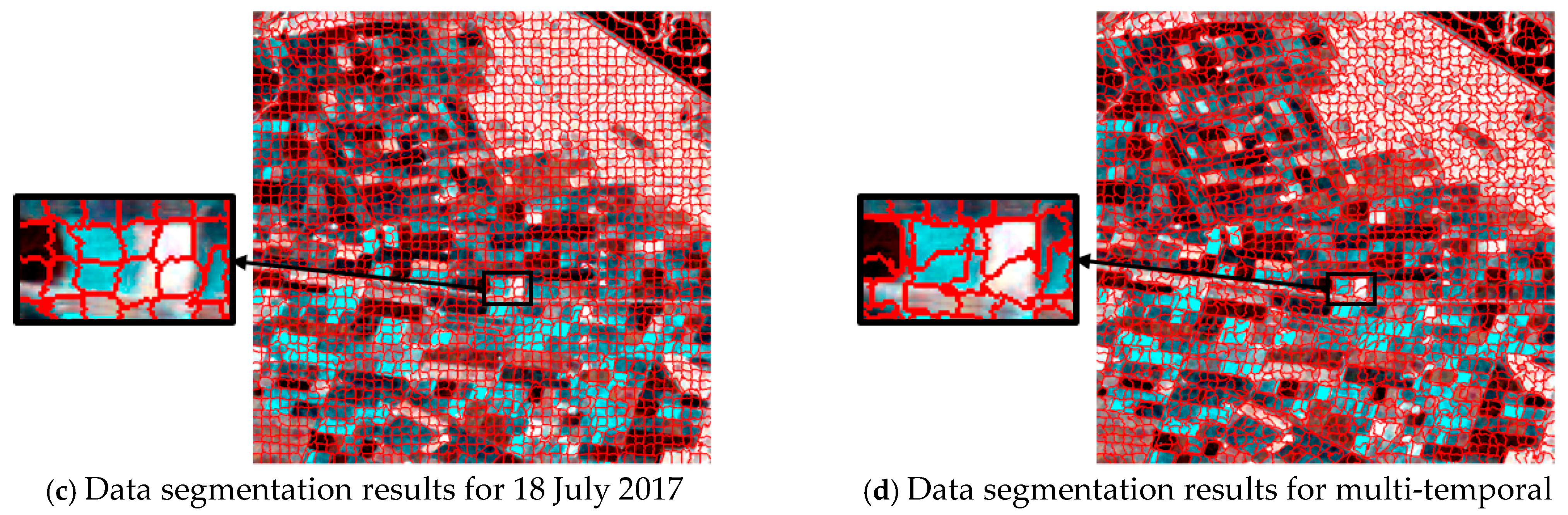

In the first step, the block diagonal polarimetric covariance matrix is constructed by combining the dual polarimetric covariance matrices of all dates. Then, since the dual polarimetric covariance matrix follows the Wishart distribution, the traditional SLIC distance is modified for multi-temporal data to obtain more stable superpixel segmentation results, and the scattering features of each multi-temporal superpixel are extracted.

In the second step, a HyperGraph neural network classifies the multi-temporal superpixels obtained in the previous step. Compared with a traditional neural network, an HGNN handles complex unstructured data better: it captures the complex relationships between superpixels and makes full use of the multi-dimensional time–polarization–space information to classify them. To obtain a map of the real scene, the predicted superpixel labels are finally mapped back to the pixel level to produce the final classification result.

3. Results and Discussion

3.1. Experimental Settings

To ensure reliable training data, labeled superpixels amounting to about 6% of all superpixels are selected from the segmentation results; these superpixels are used both to construct the HyperGraph adjacency matrix and as the training set. In the testing step, all superpixels are used to comprehensively assess the performance of the model. The HyperGraph classification model is trained with the Adam optimizer for 200 epochs at a learning rate of 0.01.

To present the classification results more intuitively and accurately, the pixel locations belonging to each superpixel are recorded during segmentation. After the HGNN completes classification, the predicted category label of each superpixel is assigned to all of its pixels. This reverse mapping converts the superpixel-level results into a pixel-level classification map at the original image resolution.
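The reverse mapping described above can be sketched as follows, assuming the segmentation is stored as an (H, W) array of integer superpixel ids. The function names are illustrative; recording the flat pixel indices of each superpixel mirrors the "recorded pixel locations" in the text.

```python
import numpy as np


def record_pixel_locations(segments):
    """Map each superpixel id to the flat indices of its pixels."""
    flat = segments.ravel()
    order = np.argsort(flat, kind="stable")                # group pixel indices by superpixel id
    ids, starts = np.unique(flat[order], return_index=True)
    groups = np.split(order, starts[1:])
    return dict(zip(ids.tolist(), groups))


def superpixel_to_pixel_labels(segments, locations, sp_labels):
    """Assign every pixel the predicted label of the superpixel that contains it."""
    out = np.empty(segments.size, dtype=np.asarray(sp_labels).dtype)
    for sp_id, idx in locations.items():
        out[idx] = sp_labels[sp_id]
    return out.reshape(segments.shape)


segments = np.array([[0, 0, 1],
                     [2, 1, 1]])
sp_labels = np.array([5, 7, 9])                            # hypothetical predicted classes
label_map = superpixel_to_pixel_labels(segments, record_pixel_locations(segments), sp_labels)
```

With numpy, the same result is also obtained by the one-line lookup `sp_labels[segments]`; the explicit version simply makes the recorded-location step visible.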

3.2. Experimental Results

In this article, the method is validated and discussed through experiments in three aspects: (1) In the superpixel segmentation step, the effects of different parameters on the segmentation results are analyzed, and single-temporal and multi-temporal superpixel segmentation are compared to show the advantages of multi-temporal information. (2) For the superpixel classification features, the classification performance of different polarimetric features of the dual polarimetric data under the HGNN is compared, together with possible feature combinations, to examine the influence of the input features and obtain the optimal feature combination. (3) For model performance evaluation, the HGNN, GNN, and RF are compared on the superpixel classification task to validate the advantages of combining superpixels and a HyperGraph for crop classification.

3.2.1. Analysis of Superpixel Segmentation Results

- Effect of seed points on superpixel segmentation

The number of seed points is a key parameter that noticeably changes the superpixel segmentation result, and this section describes experiments with different numbers of seed points. The number of seed points directly determines the step length (grid interval) of superpixel segmentation: the more seed points, the shorter the step length. The step length in turn determines the size and density of the superpixels. A smaller step length typically generates more, smaller, and finer superpixels, whereas a larger step length generates fewer but larger superpixels. Tuning this parameter therefore balances segmentation accuracy against computational complexity.
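In standard SLIC (Achanta et al., 2012), K seed points on an image of N pixels are placed on a regular grid with interval S = √(N/K), so the step length falls as the number of seeds grows. The sketch below assumes this standard seeding; the 1000 × 1000 image size in the example is hypothetical, since the scene size is not given in this section.

```python
import math

import numpy as np


def slic_step_length(height, width, n_seeds):
    """Grid interval S = sqrt(N / K) used by standard SLIC seeding."""
    return math.sqrt(height * width / n_seeds)


def initial_seed_grid(height, width, n_seeds):
    """Seed positions (row, col) at the centers of a regular grid with spacing S."""
    s = slic_step_length(height, width, n_seeds)
    rows = np.arange(s / 2, height, s)
    cols = np.arange(s / 2, width, s)
    rr, cc = np.meshgrid(rows, cols, indexing="ij")
    return np.stack([rr.ravel(), cc.ravel()], axis=1).astype(int)


# Step lengths for the tested seed counts on a hypothetical 1000 x 1000 image.
for k in (1000, 1500, 2000, 2500, 3000, 3500):
    print(k, round(slic_step_length(1000, 1000, k), 2))
```

When S does not divide the image evenly, the grid yields only approximately K seeds, which is why implementations usually treat K as a target rather than an exact count.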

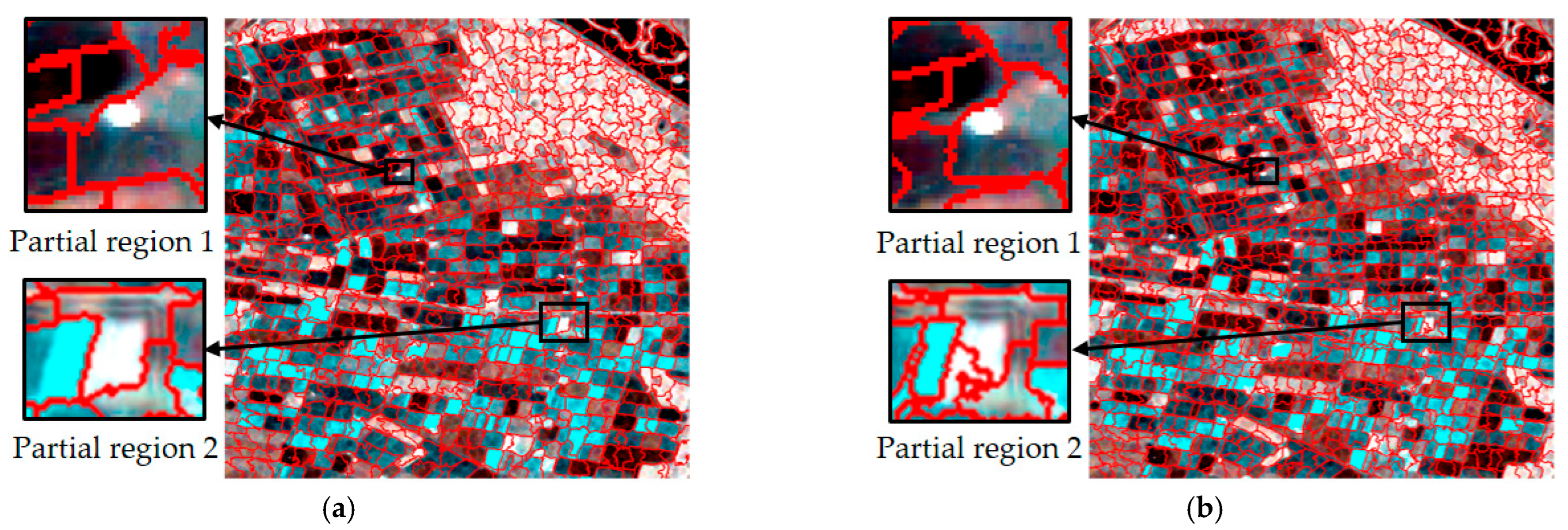

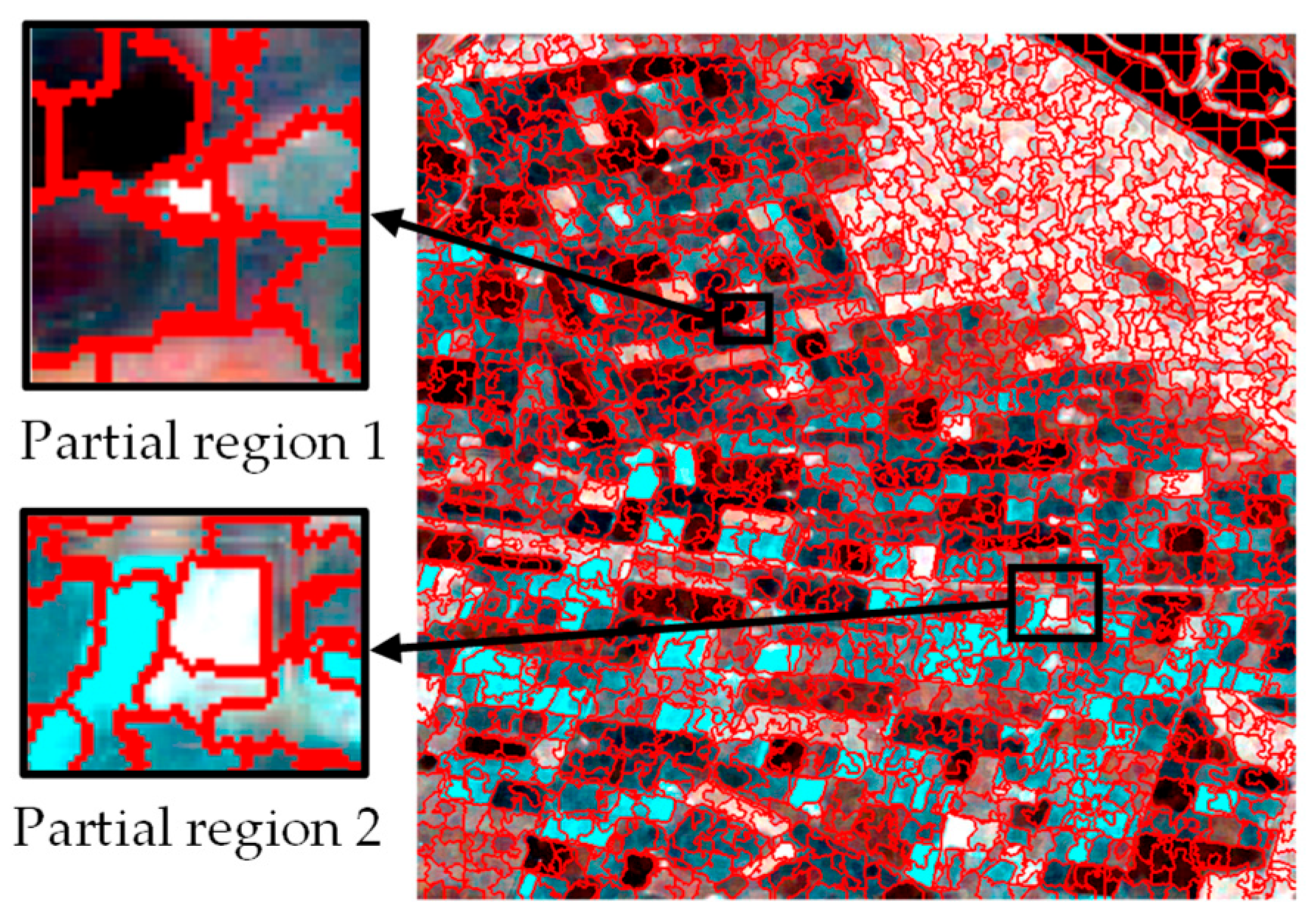

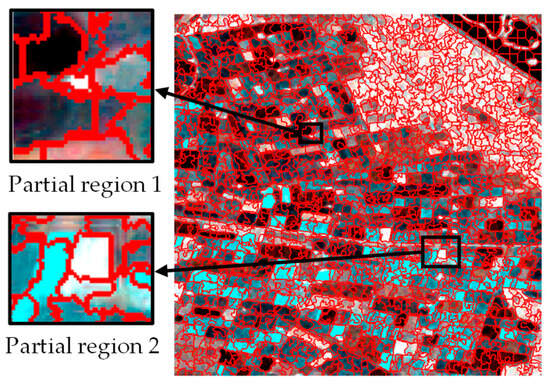

In the experiments, the number of seed points was set to 1000, 1500, 2000, 2500, 3000, and 3500; the resulting superpixel boundaries are shown in Figure 5. With 2500 seed points, the superpixels were moderate in number and reasonable in size, effectively capturing the parcel boundaries in the image while avoiding both over-segmentation and overly coarse segmentation.

Figure 5.

Superpixel segmentation results with different numbers of seed points. (a) 1000. (b) 1500. (c) 2000. (d) 2500. (e) 3000. (f) 3500.

In contrast, with 1000, 1500, and 2000 seed points, the large step length made the segmentation too coarse to accurately delineate the boundaries between parcels. With 1000 seed points in particular, the superpixels were so large that neighboring regions could not be separated, and single superpixels merged several different plots into one large parcel. With 3000 and 3500 seed points, the smaller step length produced more detailed superpixels, but this over-segmentation increased the computational complexity and processing time and made the segmentation results redundant, reducing the efficiency of subsequent processing.

Based on the above results and analysis, 2500 seed points is considered optimal for this experiment, as it provides a good balance between segmentation accuracy and computational complexity.

- Comparative analysis of single-temporal with multi-temporal superpixel segmentation

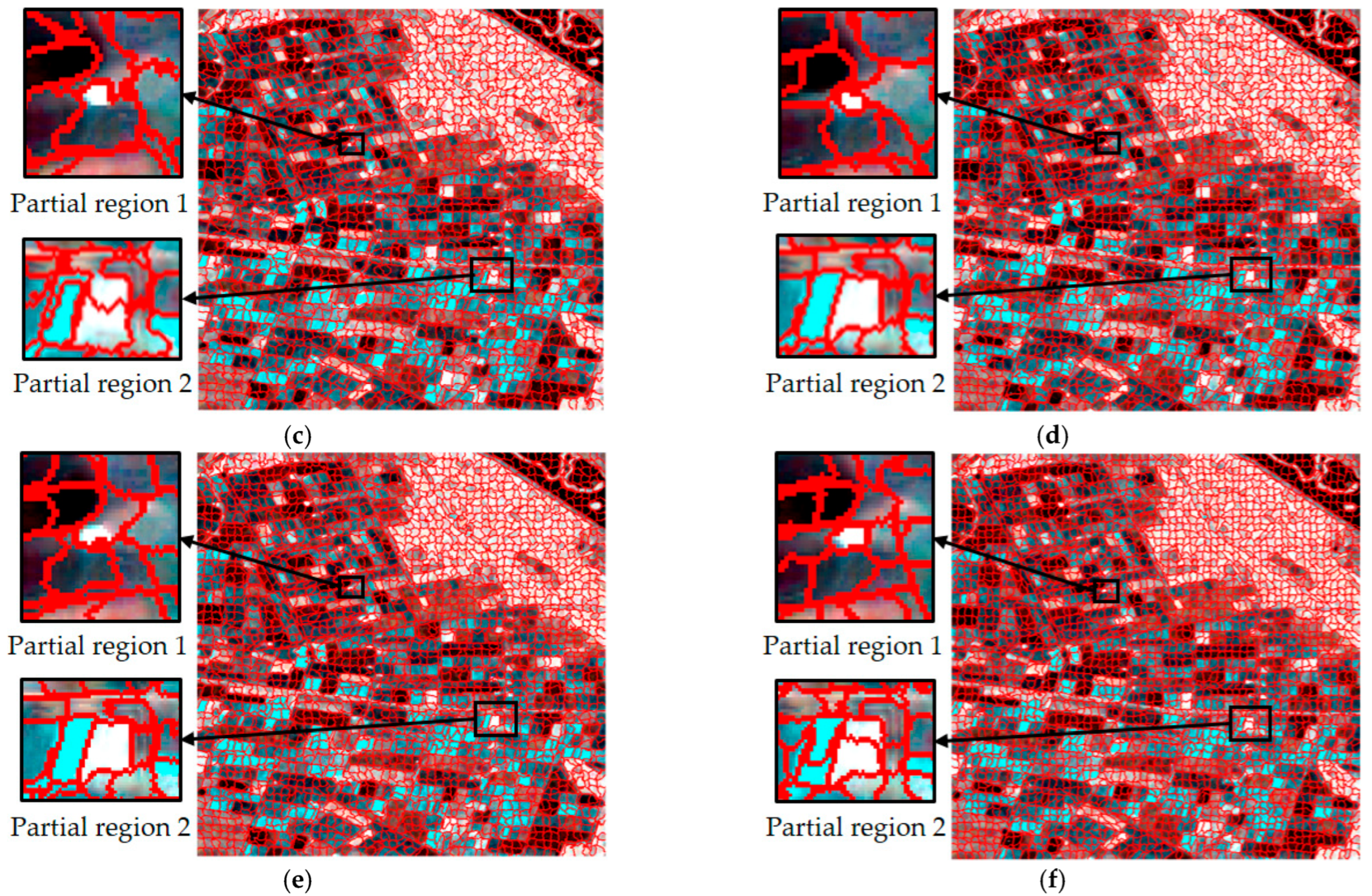

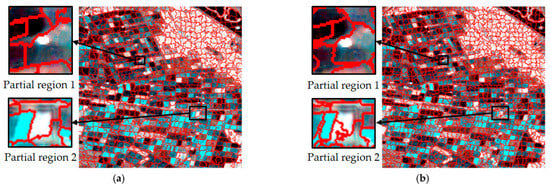

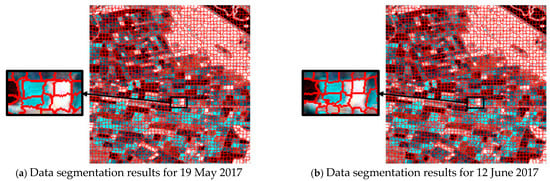

We further compared single-temporal and multi-temporal superpixel segmentation to verify the contribution of temporal information to the segmentation results.

For single-temporal superpixel segmentation, superpixels were generated with the same SLIC method, with the color distance replaced by the Wishart distance. The algorithm was applied independently to the data of each acquisition date, so that the segmentation processes did not interfere with each other. For comparability, the number of seed points was kept at 2500; the results are shown in Figure 6. As Figure 6a–c shows, the size and shape of the segmented regions differed markedly between dates, and different types of plots were not effectively distinguished in the segmentation images.

Figure 6.

Comparison of single-temporal and multi-temporal superpixel segmentation results based on the Wishart distance. (a–c) Single-temporal segmentation results; (d) multi-temporal segmentation result.

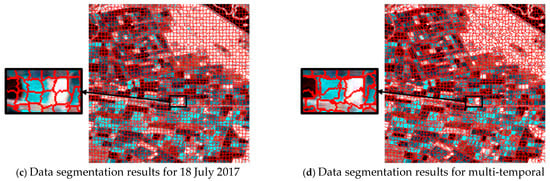

For further comparison, we also segmented single-date data using the traditional SLIC algorithm based on RGB color distance, again with 2500 seed points. As shown in Figure 7, the traditional SLIC method was unable to distinguish between different crop regions.
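For reference, the traditional SLIC distance of Achanta et al. (2012) combines a color distance d_c with a spatial distance d_s normalized by the grid interval S and weighted by a compactness factor m: D = √(d_c² + (d_s/S)² m²). Achanta et al. use CIELAB color; here the color vector is simply whatever channels are supplied (e.g., RGB, as in this comparison). In the Wishart-based variants, d_c is replaced by a Wishart distance between covariance matrices. The compactness value in the example is hypothetical.

```python
import numpy as np


def slic_distance(color_p, color_k, xy_p, xy_k, step, compactness):
    """Standard SLIC distance between pixel p and cluster center k.

    D = sqrt(d_c^2 + (d_s / S)^2 * m^2), with S the grid interval and m the compactness.
    """
    d_c = np.linalg.norm(np.asarray(color_p, float) - np.asarray(color_k, float))
    d_s = np.linalg.norm(np.asarray(xy_p, float) - np.asarray(xy_k, float))
    return float(np.sqrt(d_c ** 2 + (d_s / step) ** 2 * compactness ** 2))


# Same color, spatial offset (3, 4): d_s = 5, S = 20 (2500 seeds on a hypothetical 1000 x 1000 image).
d = slic_distance([120, 80, 40], [120, 80, 40], [0, 0], [3, 4], step=20, compactness=10)
```

Because d_c depends only on intensity or color, speckle in a single SAR image inflates it strongly, which is consistent with the poor separation of crop regions seen in Figure 7.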

Figure 7.

Superpixel segmentation results for single-date data based on color distance.

In contrast to single-temporal segmentation, multi-temporal superpixel segmentation yielded clearly better results, as shown in Figures 5d and 6d. The algorithm exploits how the scattering features change over time, so that pixels of parcels that behave similarly across all acquisition dates are grouped into the same superpixel, improving the consistency and accuracy of segmentation. Multi-temporal segmentation thus considers not only spatial information but also the subtle changes caused by temporal variation.

During the crop growth cycle, differences between plots at a given stage may be subtle in SAR images and difficult to distinguish with traditional single-temporal segmentation methods. By jointly analyzing the multi-temporal data, joint temporal segmentation can resolve these subtle differences and use them as the basis for superpixel division, so that crop plots at different growth stages are delineated more accurately.
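The toy example below illustrates why joint temporal segmentation can separate plots that a single date cannot. It assumes a symmetric form of the revised Wishart distance, d(Cᵢ, Cⱼ) = ½[tr(Cᵢ⁻¹Cⱼ) + tr(Cⱼ⁻¹Cᵢ)] − q, with q the matrix dimension; the exact distance used in this study is not restated in this section, so this choice and the covariance values are assumptions. Because the multi-temporal matrix is block-diagonal, this distance equals the sum of the per-date distances.

```python
import numpy as np


def block_diag(blocks):
    """Place square matrices on the diagonal of a larger zero matrix."""
    size = sum(b.shape[0] for b in blocks)
    out = np.zeros((size, size), dtype=complex)
    i = 0
    for b in blocks:
        n = b.shape[0]
        out[i:i + n, i:i + n] = b
        i += n
    return out


def symmetric_wishart_distance(c_i, c_j):
    """0.5 * [tr(Ci^-1 Cj) + tr(Cj^-1 Ci)] - q; zero when Ci == Cj."""
    q = c_i.shape[0]
    t_ij = np.trace(np.linalg.solve(c_i, c_j)).real     # solve(A, B) = A^-1 B
    t_ji = np.trace(np.linalg.solve(c_j, c_i)).real
    return 0.5 * (t_ij + t_ji) - q


# Hypothetical 2x2 covariances: plots A and B look identical on date 1 but differ on date 2.
a_t1, b_t1 = np.diag([1.0, 0.2]), np.diag([1.0, 0.2])
a_t2, b_t2 = np.diag([1.0, 0.2]), np.diag([3.0, 0.6])

d_single = symmetric_wishart_distance(a_t1, b_t1)        # date 1 alone cannot separate A and B
d_multi = symmetric_wishart_distance(block_diag([a_t1, a_t2]), block_diag([b_t1, b_t2]))
```

Here `d_single` is 0 while `d_multi` is 4/3, so a SLIC assignment step driven by the multi-temporal distance can place A and B in different superpixels even though they coincide on date 1.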

3.2.2. Classification Results of HGNN

The scattering features extracted for each superpixel were input into the HGNN. Based on the labeled superpixels, one hyperedge was defined for each category, grouping all superpixels of that category, and the node–hyperedge adjacency (incidence) matrix was constructed from this set of hyperedges.
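The class-based hyperedge construction can be sketched as an incidence matrix H with one column per category, where H[v, e] = 1 if labeled superpixel v belongs to category e. This is an illustration, not the authors' code: in particular, the optional singleton hyperedge added for every superpixel is an assumption introduced here so that unlabeled superpixels also have a non-zero vertex degree, which the degree normalization in an HGNN layer requires.

```python
import numpy as np


def class_hyperedge_incidence(n_nodes, train_idx, train_labels, n_classes, add_self_loops=True):
    """Incidence matrix H (n_nodes x n_edges) with one hyperedge per class.

    train_idx: indices of labeled (training) superpixels.
    train_labels: their class ids in [0, n_classes).
    """
    H = np.zeros((n_nodes, n_classes))
    H[np.asarray(train_idx), np.asarray(train_labels)] = 1.0   # class hyperedges
    if add_self_loops:
        # Assumption: one singleton hyperedge per node so every vertex degree is non-zero.
        H = np.hstack([H, np.eye(n_nodes)])
    return H


# Five superpixels; nodes 0 and 3 are labeled class 0, node 1 is labeled class 1.
H = class_hyperedge_incidence(5, [0, 1, 3], [0, 1, 0], n_classes=2)
```

A single hyperedge links all same-category superpixels at once, however far apart they are, whereas a pairwise graph would need an edge between every pair of them.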

To verify the effectiveness of the object-oriented HGNN, four types of superpixel scattering features, extracted under different numbers of seed points, were compared in this study.

The classification accuracies of the four superpixel scattering features obtained with the HGNN are shown in Table 1. In general, the classification accuracy of each feature first increases and then levels off as the number of seed points increases. Specifically, when the number of seed points was 1000, the classification accuracy of , and was relatively low, and the classification accuracy of was relatively high. When the number of seed points was increased to 2500, the classification accuracies of the four superpixel scattering features , , and reached 85.08%, 90.15%, 85.40%, and 92.07%, respectively. Increasing the number of seed points further, from 2500 to 3500, brought only marginal improvements in accuracy, which tended to stabilize, while the computational cost of superpixel segmentation rose significantly. A setting of 2500 seed points therefore controls the computational cost while ensuring high accuracy, achieving the best trade-off between precision and efficiency; in this experiment, the overall classification performance is considered optimal with this setting. A comparison of the four scattering features shows that performs better than the other three across the different seed-point settings. The optimal classification performance at 2500 seed points is consistent with the superpixel segmentation results in Section 3.2.1.

Table 1.

Classification accuracy of the HGNN under different superpixel settings.

Next, the effectiveness of multi-temporal superpixel classification was verified; the results are shown in Table 2. They indicate that integrating multi-temporal data effectively overcomes the limitations of single-date data. By exploiting complementary information along the temporal dimension, this approach reduces random errors and the influence of environmental factors, characterizes crop changes more comprehensively, and substantially improves classification accuracy.

Table 2.

Comparison of single-temporal and multi-temporal superpixel classification results.

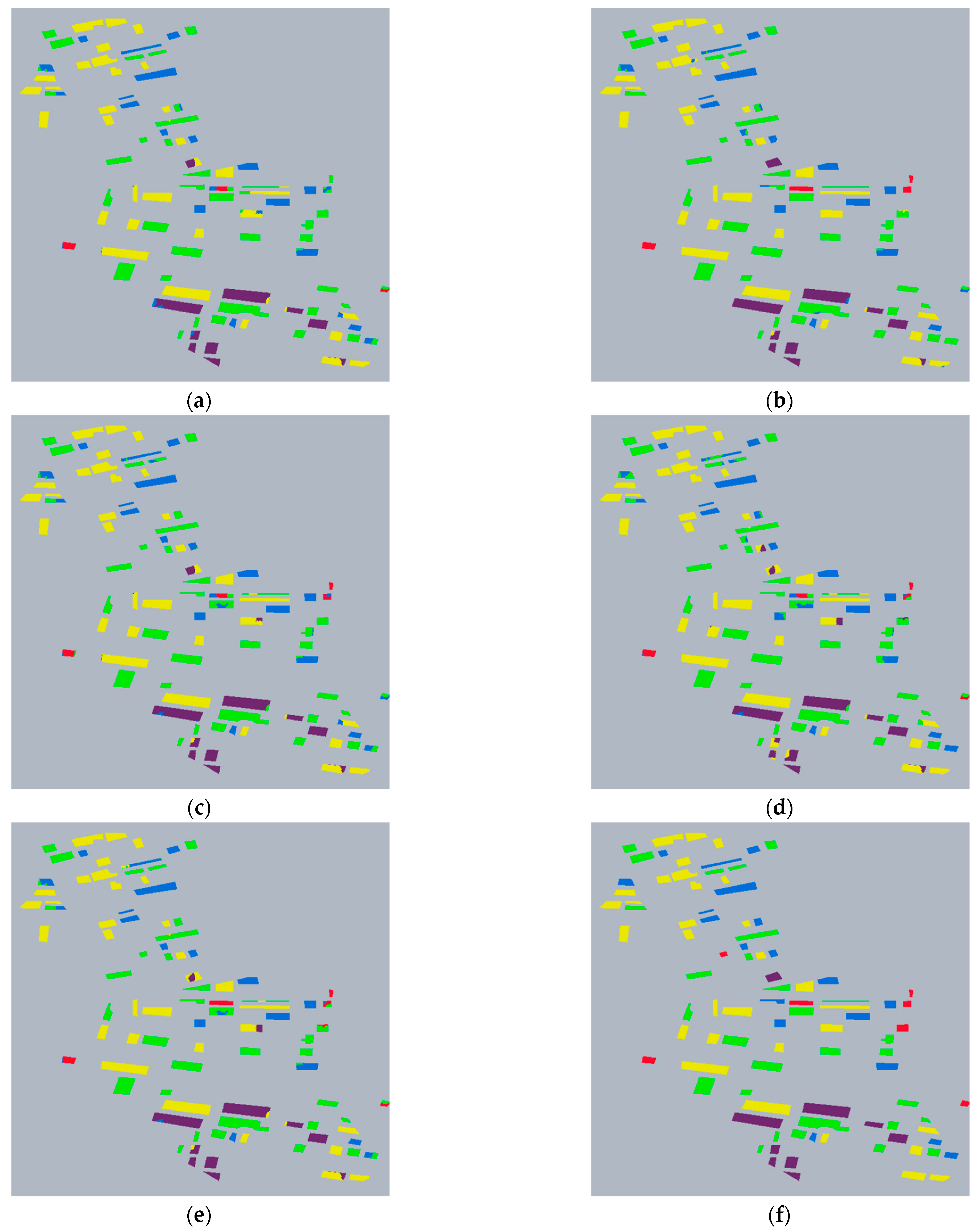

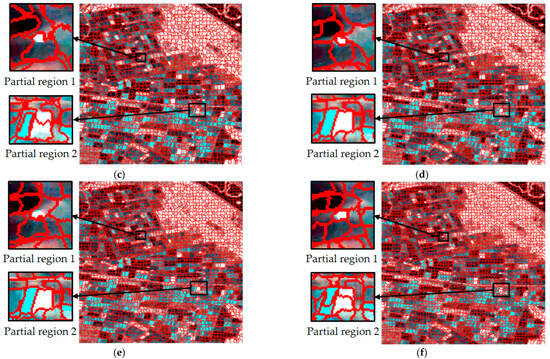

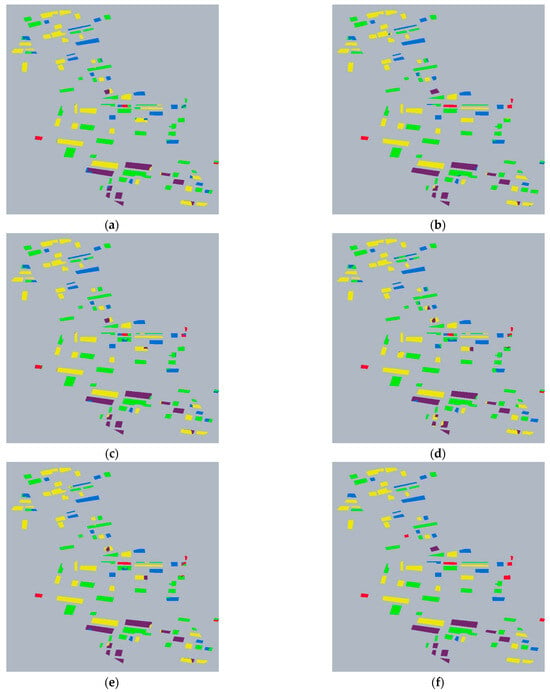

Building on the single-feature classification, we further evaluated combinations of multiple features under the optimal setting of 2500 seed points identified in the previous experiments.

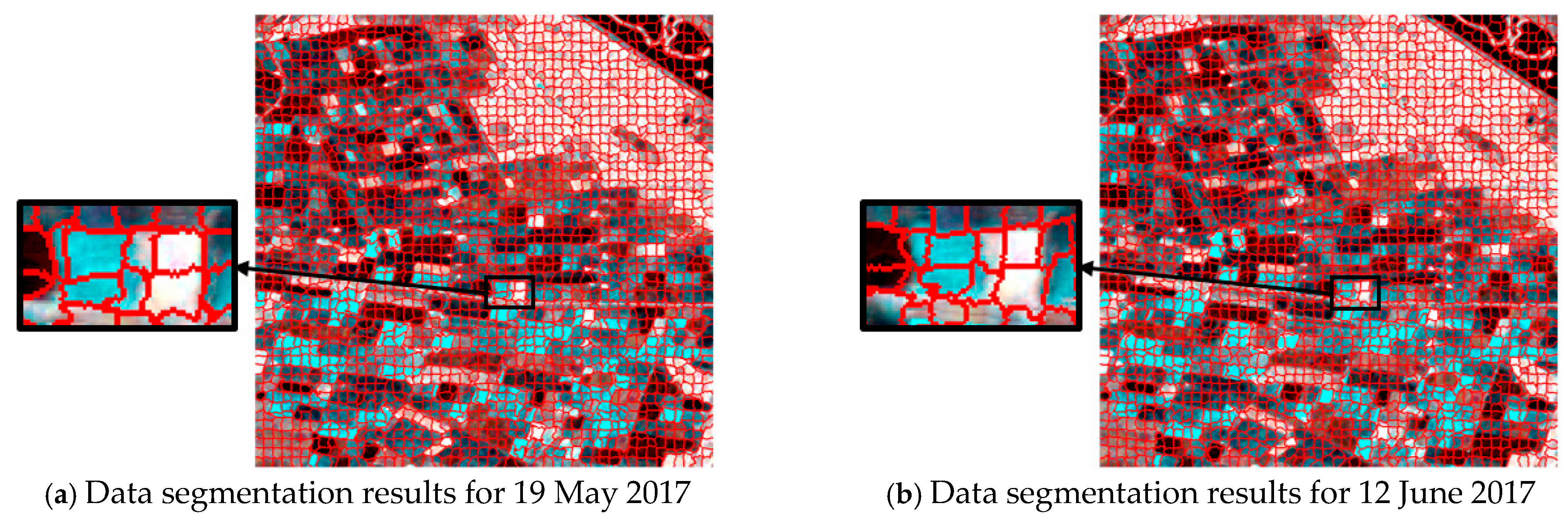

Table 3 compares the overall classification performance of different superpixel feature combinations. The results show that feature combinations with improve the classification performance. The classification accuracy of is 95.74%, and its classification result is shown in Figure 8b. Notably, when the feature combination is , its classification accuracy is lower than that of . This degradation is likely because does not contribute new, useful scattering information: as shown in Table 1, performs poorly on its own, and the scattering mechanism information it provides lacks sufficient discriminative power for the crop types in this study. During the growth stages, the scattering of most of these crops is dominated by volume scattering, so their values are concentrated within approximately 45° to 90° and cannot provide the distinctive information needed to differentiate among these categories.

Table 3.

Classification results of HGNN with different superpixel scattering feature combinations.

Figure 8.

Classification results of HGNN. (a) Classification Result Image of . (b) Classification Result Image of . (c) Classification Result Image of . (d) Classification Result Image of . (e) Classification Result Image of . (f) Truth Label Image.

To further evaluate the model’s classification performance under the optimal parameter settings (2500 seed points and the feature combination), we analyze the classification accuracy of each crop class in detail. Table 4 presents the classification confusion matrix, and Table 5 lists the Producer’s Accuracy (PA) and User’s Accuracy (UA) for each category.

Table 4.

Confusion matrix for the classification using the feature combination.

Table 5.

PA and UA for each crop using the feature combination.

The model shows strong discriminative ability for wheat, beet, potato, and grass, but comparatively poor performance for corn. Notably, the PA for corn reaches a perfect 1.0000, whereas its UA is only 0.6146; that is, all reference corn samples are correctly identified, but only about 61% of the samples predicted as corn are actually corn. Our analysis attributes this discrepancy not to the model’s algorithmic design, architecture, or training optimization, but to the very low proportion of corn samples in the dataset. Of the 32,922 labeled pixels in the entire dataset, only 1248 (3.79%) are labeled as corn, and after superpixel segmentation the number of corn superpixels becomes even smaller. As a result, the model learns corn features from an extremely limited set of examples during training and becomes highly proficient at identifying these few specific samples, which leads to the high PA. However, in practical scenarios where the sample distribution is more diverse and complex, the model lacks exposure to a sufficient variety of corn samples and struggles to distinguish other classes from corn, so a large number of non-corn samples are misclassified as corn, which substantially lowers the UA.
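The contrast between PA and UA can be made concrete with a small calculation. The sketch assumes the common convention that rows of the confusion matrix are reference classes and columns are predicted classes, so PA divides the diagonal by row sums (omission view) and UA divides it by column sums (commission view). The 2 × 2 matrix is a hypothetical toy example, not the values of Table 4; only the corn pixel counts come from the text.

```python
import numpy as np


def producer_user_accuracy(cm):
    """cm[i, j] = number of samples of reference class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    correct = np.diag(cm)
    pa = correct / cm.sum(axis=1)      # producer's accuracy: per reference class
    ua = correct / cm.sum(axis=0)      # user's accuracy: per predicted class
    oa = correct.sum() / cm.sum()      # overall accuracy
    return pa, ua, oa


# Hypothetical: a rare class 0 is never missed, but 10 samples of class 1 are labeled as class 0.
pa, ua, oa = producer_user_accuracy([[50, 0],
                                     [10, 40]])

corn_share = 100 * 1248 / 32922       # corn pixels as a percentage of all labeled pixels
```

In this toy case class 0 has PA = 1.0 but UA ≈ 0.83: commission errors from a larger class lower UA without affecting PA, which is the pattern reported for corn.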

3.2.3. Comparative Performance Analysis of HGNN and Other Classification Models

To evaluate the effectiveness of the HGNN, its classification performance was compared with that of two other typical classification models, the random forest (RF) and the graph neural network (GNN), using different scattering features. All experiments used 2500 seed points and the same superpixel features as input. As Table 6 shows, the models differ markedly. The RF classifier achieves lower accuracy than the other two models, ranging only from about 74.54% to 80.45%. This can be attributed to its structure as an ensemble of decision trees, which has limited capacity for complex feature information and does not consider spatial relationships, making it difficult to exploit the latent relationships in the data. The GNN clearly outperforms the RF, with accuracy ranging from 82.45% to 93.17%, owing to its ability to capture the graph structure of the data and exploit the correlations between samples. The HGNN performs best, with accuracy ranging from about 85% to 95.74%. Because a hyperedge can connect any number of superpixels, the HGNN models higher-order relationships and complex structured data, extracts the key information of the scattering features more accurately, and thus achieves the highest accuracy.

Table 6.

Comparison of classification accuracy among the three classification models.

3.3. Methodological Applicability, Limitations and Future Research

This method is suited to complex crop classification with multi-temporal dual polarimetric SAR data, especially the fine classification of crops with uneven spatial distribution, such as in fragmented farmland and mixed planting areas. By modifying SLIC superpixel segmentation with the joint multi-temporal dual polarimetric covariance matrix, it effectively resists interference from temporal differences and captures crop boundaries. Combined with the HGNN, which models higher-order spatial correlations among the resulting superpixels, it noticeably improves the classification accuracy of farmland parcels. However, its performance relies on high-quality, temporally continuous polarimetric SAR data.

In future work, the superpixel segmentation algorithm could be adapted in real time to the crop growth state, so that segmentation considers not only spatial characteristics but also temporal changes and crop physiological parameters, enabling accurate on-demand segmentation.

4. Conclusions

To address the insufficient use of information about the spatial distribution of crops in both near and far plots, a multi-temporal dual polarimetric SAR classification method based on superpixels and a HyperGraph is designed. First, exploiting the property that the polarimetric covariance matrix follows the Wishart distribution, superpixel segmentation is performed on the multi-temporal dual PolSAR data, so that the superpixels are consistent in shape and size across all acquisition dates and conform to the actual plots. Second, the HyperGraph adjacency matrix is constructed from the superpixels, which are then classified by the HGNN; the network learns the features of the same crop across different plots and effectively improves the classification accuracy.

The experimental results show that the HGNN effectively integrates the scattering features and spatial information of dual polarimetric SAR data across the time–polarization–space dimensions. Under the optimal parameter configuration, with 2500 seed points and the feature combination, the overall classification accuracy reaches 95.74%, outperforming both the GNN and RF methods. The experiments verify the effectiveness of dual polarimetric scattering feature extraction based on multi-temporal superpixels and of the HGNN as the classification network. The method also provides a new technical framework for crop classification in complex agricultural scenes.

Author Contributions

Conceptualization, Q.Y.; methodology, Y.D. and F.Z.; software, Y.D.; validation, F.L.; formal analysis, Y.Z.; writing—original draft preparation, Y.D.; writing—review and editing, Q.Y.; project administration, F.Z.; funding acquisition, Y.Z. and Q.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Shandong Province under Grant ZR2024ZD19, the National Natural Science Foundation of China under Grant 62302429, and the Open Foundation of National Key Laboratory of Microwave Imaging Technology (AIRZB76-2023-000573).

Data Availability Statement

The data presented in this study are openly available via Sentinel data access at https://dataspace.copernicus.eu/ for scientific research purposes.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, J.; Quan, S.; Xing, S.; Li, Y.; Wu, H.; Meng, W. PSO-Based Fine Polarimetric Decomposition for Ship Scattering Characterization. ISPRS J. Photogramm. Remote Sens. 2025, 220, 18–31.

- Yin, Q.; Gao, L.; Zhou, Y.; Li, Y.; Zhang, F.; López-Martínez, C.; Hong, W. Coherence Matrix Power Model for Scattering Variation Representation in Multi-Temporal PolSAR Crop Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 9797–9810.

- Ni, J.; López-Martínez, C.; Hu, Z.; Zhang, F. Multitemporal SAR and Polarimetric SAR Optimization and Classification: Reinterpreting Temporal Coherence. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Mestre-Quereda, A.; Lopez-Sanchez, J.M.; Vicente-Guijalba, F.; Jacob, A.W.; Engdahl, M.E. Time-Series of Sentinel-1 Interferometric Coherence and Backscatter for Crop-Type Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4070–4084.

- Salma, S.; Keerthana, N.; Dodamani, B.M. Target Decomposition Using Dual-Polarization Sentinel-1 SAR Data: Study on Crop Growth Analysis. Remote Sens. Appl. Soc. Environ. 2022, 28, 100854.

- Wang, M.; Wang, L.; Guo, Y.; Cui, Y.; Liu, J.; Chen, L.; Wang, T.; Li, H. A Comprehensive Evaluation of Dual-Polarimetric Sentinel-1 SAR Data for Monitoring Key Phenological Stages of Winter Wheat. Remote Sens. 2024, 16, 1659.

- Huang, X.; Wang, J.; Shang, J.; Liao, C.; Liu, J. Application of Polarization Signature to Land Cover Scattering Mechanism Analysis and Classification Using Multi-Temporal C-Band Polarimetric RADARSAT-2 Imagery. Remote Sens. Environ. 2017, 193, 11–28.

- Waske, B.; Braun, M. Classifier Ensembles for Land Cover Mapping Using Multitemporal SAR Imagery. ISPRS J. Photogramm. Remote Sens. 2009, 64, 450–457.

- Zhou, X.; Wang, J.; He, Y.; Shan, B. Crop Classification and Representative Crop Rotation Identifying Using Statistical Features of Time-Series Sentinel-1 GRD Data. Remote Sens. 2022, 14, 5116.

- Sonobe, R.; Tani, H.; Wang, X.; Kobayashi, N.; Shimamura, H. Discrimination of Crop Types with TerraSAR-X-Derived Information. Phys. Chem. Earth Parts A/B/C 2015, 83, 2–13.

- Mandal, D.; Kumar, V.; Rao, Y.S. An Assessment of Temporal RADARSAT-2 SAR Data for Crop Classification Using KPCA Based Support Vector Machine. Geocarto Int. 2022, 37, 1547–1559.

- Liu, R.; Wang, Z.; Gao, R. Application of Time-Series SAR Images in Land Use Classification of Arid Areas. Sci. Surv. Mapp. 2021, 46, 90–97.

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

- Xiao, X.; Lu, Y.; Huang, X.; Chen, T. Temporal Series Crop Classification Study in Rural China Based on Sentinel-1 SAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2769–2780.

- Xue, R.; Bai, X.; Zhou, F. Spatial–Temporal Ensemble Convolution for Sequence SAR Target Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1250–1262.

- Teimouri, N.; Dyrmann, M.; Jørgensen, R.N. A Novel Spatio-Temporal FCN-LSTM Network for Recognizing Various Crop Types Using Multi-Temporal Radar Images. Remote Sens. 2019, 11, 990.

- Wei, S.; Zhang, H.; Wang, C.; Wang, Y.; Xu, L. Multi-Temporal SAR Data Large-Scale Crop Mapping Based on U-Net Model. Remote Sens. 2019, 11, 68.

- Deng, J.; Wang, W.; Zhang, H.; Zhang, T.; Zhang, J. PolSAR Ship Detection Based on Superpixel-Level Contrast Enhancement. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Nogueira, F.E.A.; Marques, R.C.P.; Medeiros, F.N.S. SAR Image Segmentation Based on Unsupervised Classification of Log-Cumulants Estimates. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1287–1289.

- Zhao, X.; Wang, H.; Wu, J.; Peng, Z.; Li, X. A Gamma Distribution-Based Fuzzy Clustering Approach for Large Area SAR Image Segmentation. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1986–1990.

- Ma, F.; Zhang, F.; Xiang, D.; Yin, Q.; Zhou, Y. Fast Task-Specific Region Merging for SAR Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Ma, F.; Zhang, F.; Yin, Q.; Xiang, D.; Zhou, Y. Fast SAR Image Segmentation with Deep Task-Specific Superpixel Sampling and Soft Graph Convolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Clauss, K.; Ottinger, M.; Kuenzer, C. Mapping Rice Areas with Sentinel-1 Time Series and Superpixel Segmentation. Int. J. Remote Sens. 2018, 39, 1399–1420.

- Xiang, H.; Luo, H.; Liu, G.; Yang, R.; Lei, X.; Cheng, C.; Chen, J. Land Cover Classification in Mountain Areas Based on Sentinel-1A Polarimetric SAR Data and Object Oriented Method. J. Nat. Resour. 2017, 32, 2136–2148.

- Beriaux, E.; Jago, A.; Lucau-Danila, C.; Planchon, V.; Defourny, P. Sentinel-1 Time Series for Crop Identification in the Framework of the Future CAP Monitoring. Remote Sens. 2021, 13, 2785.

- Gao, H.; Wang, C.; Xiang, D.; Ye, J.; Wang, G. TSPol-ASLIC: Adaptive Superpixel Generation with Local Iterative Clustering for Time-Series Quad- and Dual-Polarization SAR Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Huang, C.; Xu, Z.; Zhang, C.; Li, H.; Liu, Q.; Yang, Z.; Liu, G. Extraction of Rice Planting Structure in Tropical Region Based on Sentinel-1 Temporal Features Integration. Trans. Chin. Soc. Agric. Eng. 2020, 36, 177–184.

- Liu, Y.; Wang, B.; Sheng, Q.; Li, J.; Zhao, H.; Wang, S.; Liu, X.; He, H. Dual-Polarization SAR Rice Growth Model: A Modeling Approach for Monitoring Plant Height by Combining Crop Growth Patterns with Spatiotemporal SAR Data. Comput. Electron. Agric. 2023, 215, 108358.

- Khabbazan, S.; Vermunt, P.; Steele-Dunne, S.; Ratering Arntz, L.; Marinetti, C.; van der Valk, D.; Iannini, L.; Molijn, R.; Westerdijk, K.; van der Sande, C. Crop Monitoring Using Sentinel-1 Data: A Case Study from The Netherlands. Remote Sens. 2019, 11, 1887.

- Shen, B.; Liu, T.; Gao, G.; Chen, H.; Yang, J. A Low-Cost Polarimetric Radar System Based on Mechanical Rotation and Its Signal Processing. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 4744–4765.

- Lee, J.-S.; Hoppel, K.W.; Mango, S.A.; Miller, A.R. Intensity and Phase Statistics of Multilook Polarimetric and Interferometric SAR Imagery. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1017–1028.

- Lee, J.S.; Grunes, M.R. Classification of Multi-Look Polarimetric SAR Data Based on Complex Wishart Distribution. Int. J. Remote Sens. 1994, 15, 2299–2311.

- Yin, J.; Wang, T.; Du, Y.; Liu, X.; Zhou, L.; Yang, J. SLIC Superpixel Segmentation for Polarimetric SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).