Abstract

Insufficient object detection accuracy in complex space remote sensing scenes remains a primary concern: traditional YOLO-series algorithms detect small objects poorly and are strongly affected by complex backgrounds. In this paper, a multi-scale feature fusion framework based on an improved YOLOv8_L is proposed. Combining a graph attention network (GAT) with a Dilated Encoder network significantly improves the detection and recognition performance for space remote sensing objects. The main modifications are as follows. The original Feature Pyramid Network (FPN) structure is abandoned and reconstructed with an adaptive fusion strategy based on multi-level backbone features, in which upsampling and feature stacking enhance the representation of multi-scale objects. The local features extracted by the convolutional neural network are mapped to graph-structured data, and the node attention mechanism of GAT captures the global topological associations of space objects, compensating for the limited weight allocation of convolution operations. The Dilated Encoder network covers targets of different scales through differentiated receptive fields, and a Convolutional Block Attention Module (CBAM) further optimizes feature weighting. Tailored to the characteristics of space missions, an annotated dataset of 8000 satellite and space station images is constructed, covering a variety of lighting conditions, attitudes and scales, and providing a benchmark for model training and validation. On this space object dataset, the improved algorithm achieves a mean average precision (mAP) of 97.2%, a 2.1% improvement over the original YOLOv8_L.
Comparative experiments with six other models show that the proposed algorithm outperforms them, and ablation studies validate the synergistic effect of the GAT and the Dilated Encoder. The model maintains high detection accuracy under challenging conditions, including strong light interference, multi-scale variations and low-light environments.

1. Introduction

With rapid advances in space technology, human space activities continue to expand, new uses of spacecraft are constantly being explored, and more and more spacecraft are being launched [1]. Meteorological, communications, reconnaissance, remote sensing, astronomy and other scientific satellites provide strong technical support for environmental protection, national defense, disaster prevention and resource exploitation. At the same time, the number of space objects increases every year. Consequently, space situational awareness plays an important role in ensuring the safety of spacecraft in orbit, and space object detection is the basis of space situational awareness [2]. Space missions such as the monitoring, avoidance and removal of space objects depend on early, real-time detection and surveillance of uncooperative space targets, which is critical for planning effective collision avoidance maneuvers and keeping satellites operating in orbit. Fast and precise detection and recognition of small targets, such as uncooperative spacecraft and space debris, is therefore of great significance for safeguarding space operations [3,4].

Driven by improvements in hardware performance and advances in deep learning algorithms, artificial intelligence technology is developing rapidly and offers new solutions to a wide range of demands [5]. Researchers worldwide have studied the acquisition and processing of space information extensively. Current research indicates that integrating artificial intelligence into space exploration, and combining traditional target detection methods with AI-driven approaches, is a promising strategy for addressing the key challenges in space target detection. In space target detection, artificial intelligence equips the system with autonomous operation, intelligent reasoning and decision-making capabilities, enabling functions beyond those of traditional detection methods [6,7].

Object detection algorithms based on artificial intelligence technology leverage deep neural networks to directly learn feature representations from large-scale labeled datasets, significantly enhancing detection accuracy and efficiency. These approaches can be broadly classified into two primary types: two-stage detectors, which first generate candidate regions of interest before performing classification and bounding box refinement, and single-stage detectors, which predict both object classes and bounding boxes in a single step, offering faster inference speeds [8,9].

The single-stage detection algorithm YOLO [10] achieves fast and efficient detection by reformulating detection as a single regression problem. Its high processing speed makes it well suited to real-time applications, and because it uses global image context during training, YOLO generalizes robustly to novel scenes. YOLO has since undergone several updates. YOLOv2 [11] introduced batch normalization and a high-resolution classifier, which improved detection accuracy and speed as well as generalization across object classes. YOLOv3 [12] improved small object detection through multi-scale prediction and a deep residual backbone. YOLOv4 [13] added more data augmentation techniques and recent CNN components, such as the Mish activation function, to improve accuracy and robustness. YOLOv5 [14] adopted the PyTorch framework to simplify use and deployment and improved detection efficiency through automatically learned anchor boxes. YOLOF [15] showed that the effectiveness of the FPN module stems primarily from its divide-and-conquer solution to the optimization problem, rather than from multi-scale feature fusion as is commonly assumed. Based on this finding, YOLOF adopts a simple structure without a complex FPN that achieves comparable accuracy using only single-scale features and offers very fast inference. YOLOv8 [16] replaced YOLOv5's C3 structure with the C2f structure, which provides richer gradient flow, used channel configurations tailored to each model scale and adopted a decoupled head that separates the classification and regression tasks.
Additionally, YOLOv8 switched from anchor-based to anchor-free detection, uses the Task Aligned Assigner for positive sample selection and introduces the Distribution Focal Loss. These iterative improvements have significantly advanced real-time object detection.

Because of its powerful feature representation ability, the Transformer is also widely used in computer vision. By processing image data with the self-attention mechanism, a vision Transformer captures global features and relies less on vision-specific inductive biases, which allows it to perform well in image classification, object detection and image segmentation. In object detection, Transformer models such as DETR [17] cast detection as a set prediction problem: feature sequences are processed by an encoder and a decoder to produce object bounding boxes and categories. Dosovitskiy et al. [18] observed that attention in earlier vision models was usually combined with convolutions or replaced only parts of convolutional networks. They therefore proposed ViT (Vision Transformer), an architecture based entirely on self-attention, and showed that it performs well in image classification without relying on CNNs. In DAB-DETR, Liu et al. [19] pointed out that the randomly initialized object queries of the original DETR lead to slow training and difficult convergence, and that positional prior information exists only in the feature map and is not involved in the decoder's object queries. To address this, they used dynamic anchor boxes as queries for the Transformer decoder and updated them layer by layer, providing better spatial priors for the cross-attention module.

Image-based deep learning object detection also benefits space situational awareness. Wu et al. [20] proposed T-SCNN, a two-stage convolutional neural network, to address the low efficiency of space target recognition and localization [21]. Building on YOLOv3, Wang et al. [22] improved feature extraction by integrating shallow and deep features, enhancing detection across object scales. Jia et al. [23] improved model generalization by integrating an enhanced ResNet-50 backbone and a feature pyramid network into the Faster R-CNN architecture. To improve space object localization and classification, AlDahoul et al. [24] introduced a model decision fusion approach that trains the EfficientDet model with EfficientNet-v2 and EfficientNet-B4 networks. Mahendrakar et al. [25] proposed SpaceYOLO, which, inspired by human decision-making, integrates shape and texture detectors with YOLOv5 to enhance security-related detection capabilities. Tang et al. [26] replaced the 3 × 3 convolutions of the ELAN backbone in YOLOv7 with RepPoint, improving the detection accuracy of space target components. Ai et al. [2] used three first-level feature maps in place of the feature pyramid structure, together with a decoupled head, to enhance the detection of space targets of different scales. Massimi et al. [27] combined a pulse Doppler radar system with YOLO to improve space debris detection. These methods classify and detect targets with distinctive features well.

To improve the detection of small space objects, Guo et al. [28] introduced a U-Net architecture with channel-wise spatial attention modules that exploit spatial image features to detect dim targets in single optical images. Jiang et al. [29] proposed a multi-channel histogram truncation module and central difference convolution for feature enhancement, and built SOD-YOLO to detect dim and small space targets.

Although these advances have improved space object detection, deep space images present unique challenges such as stray light and low signal-to-noise ratios. Current space object detection methods still struggle in complex backgrounds, particularly in extracting features of small objects, which often reduces detection accuracy. This paper therefore proposes a space object detection network based on the YOLOv8_L model that balances detection accuracy and model size. The specific contributions are as follows:

- We abandon the original Feature Pyramid Network (FPN) structure and propose an adaptive fusion strategy based on multi-level backbone features, which reconstructs the FPN and enhances the representation of multi-scale targets through upsampling and feature stacking.

- We introduce GAT to address the limitations of convolutional neural networks and propose a space image object detection algorithm that combines the strengths of both to enhance overall detection performance.

- We introduce the Dilated Encoder network to cover targets of different scales through differentiated receptive fields.

- We establish a new spacecraft image dataset designed to advance object detection in orbital environments. Through systematic experiments on this dataset, we quantify the contribution of each technical component to the overall performance.

The rest of this paper is organized as follows. Section 2 reviews the related literature. Section 3 describes the proposed method, including the technical framework and algorithm design. Sections 4 and 5 present the experimental results, including quantitative comparisons with existing approaches, to demonstrate the effectiveness of the proposed method. Finally, Section 6 summarizes the main contributions and outlines directions for future research.

2. Related Works

2.1. YOLOv8 Object Detection Algorithm

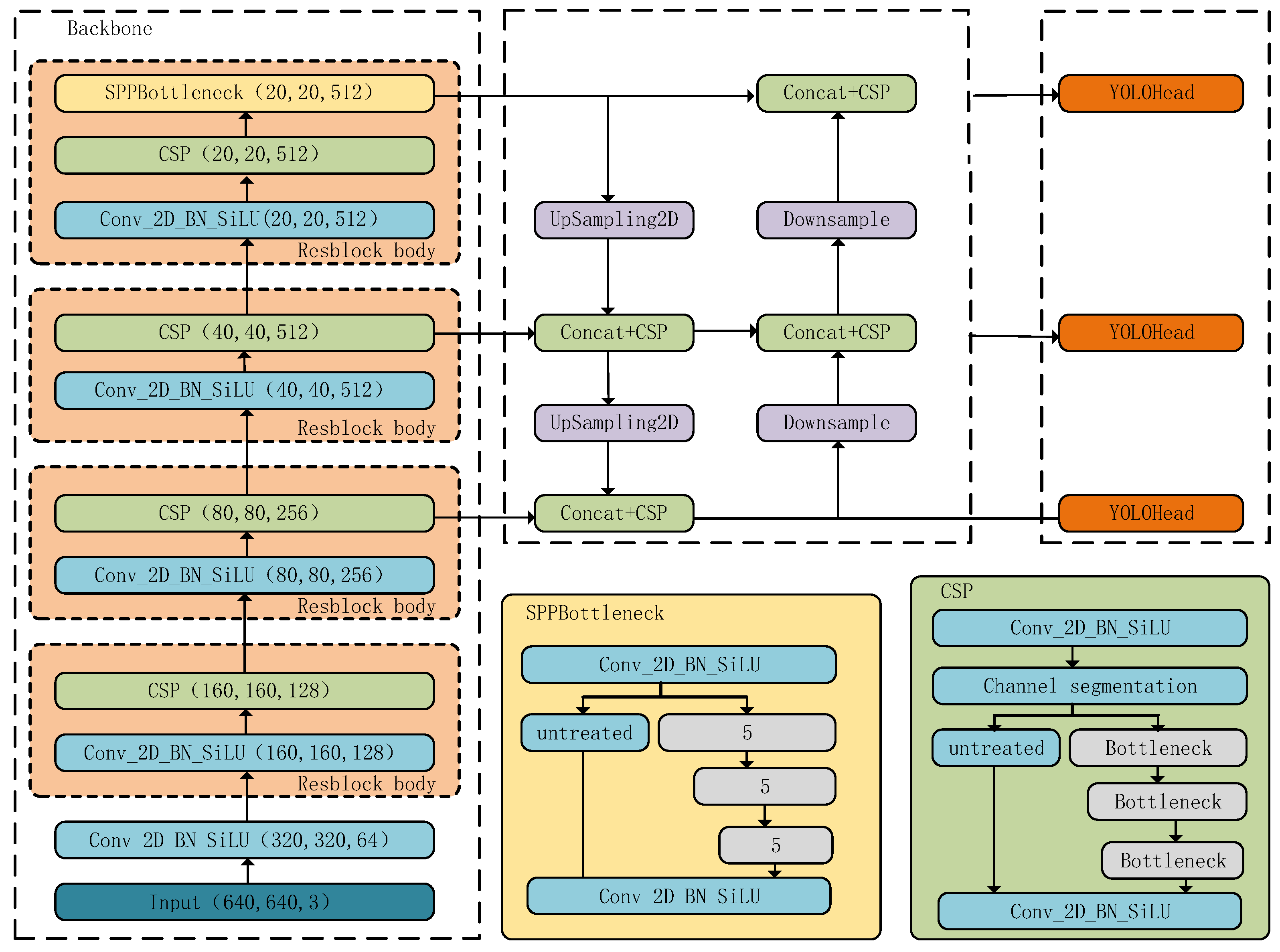

The Ultralytics team built YOLOv8 on the YOLOv5 network, replacing its C3 module with C2f, and provides the network in five scales, n, s, m, l and x, that differ in depth and width. As shown in Figure 1, YOLOv8's prediction approach is similar to that of earlier YOLO algorithms, and the network is still divided into three parts: the backbone, the FPN and the YOLO Head.

Figure 1. The structure of YOLOv8_L.

The backbone serves as YOLOv8’s primary feature extraction network. Input images first pass through this backbone network, where their essential features are extracted. These extracted features, referred to as feature layers, constitute the comprehensive feature representation of the input images.

The FPN is YOLOv8's enhanced feature extraction module, in which the three key feature layers extracted by the backbone are fused. This fusion combines multi-scale feature information to improve detection performance, and the fused feature maps are then passed on for further refinement and prediction. YOLOv8 adopts the PANet structure, which uses both upsampling and downsampling paths to fuse features thoroughly across scales.

In YOLOv8, the YOLOHead acts as both the classifier and the regressor. From the backbone and FPN it receives three enhanced feature layers, each with its own width, height and number of channels, which are critical for multi-scale object detection. Each feature layer can be viewed as a grid of feature points that take the place of traditional prior boxes: instead of fixed prior boxes, each feature point carries multiple channel-wise features. The YOLOHead evaluates these feature points to determine whether objects exist at their corresponding locations. YOLOv8 employs a decoupled head in which classification and regression are processed in separate branches, rather than being predicted together by a single 1 × 1 convolution layer.

In this paper, we focus on the application of object detection methodologies within the unique context of space environments, using YOLOv8_L as the fundamental architecture. In addition, we implement our proposed space object detection method on this foundation and demonstrate its effectiveness through experiments.

2.2. YOLOF Object Detection Algorithm

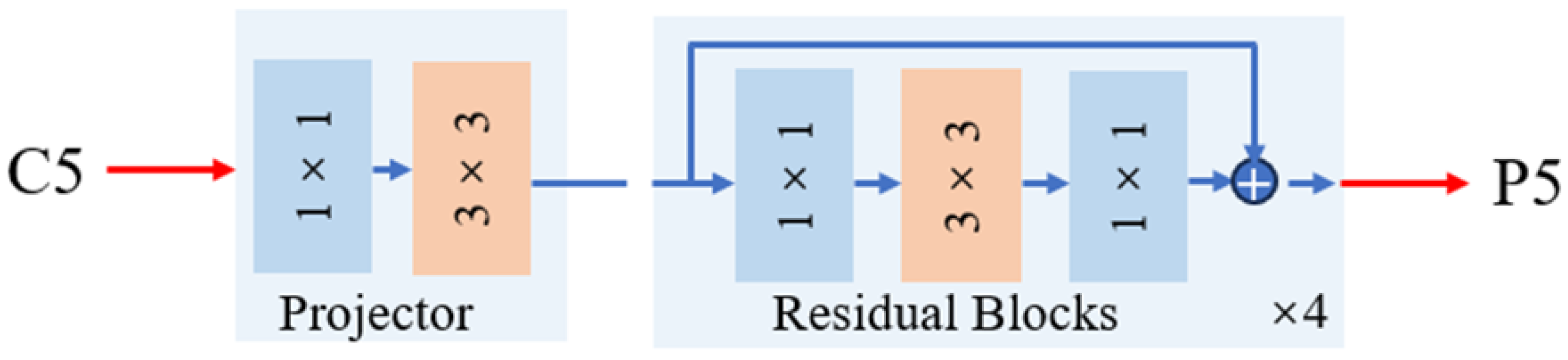

YOLOF proposes a simple object detection framework that uses only a single-level feature map and consists of three main parts: a backbone, an encoder and a decoder. The backbone uses the classic ResNet [30] and ResNeXt [31] networks and extracts the C5 feature map with 2048 channels at a downsampling rate of 32. The encoder consists of two key components: a projector and stacked residual blocks. The projector first reduces the channel dimension with a 1 × 1 convolution and then applies a 3 × 3 convolution to refine contextual semantics, similar to an FPN. It is followed by four residual blocks whose convolutions use different dilation rates, producing features with multiple receptive fields to accommodate objects of different sizes.

The decoder adopts a two-branch structure similar to RetinaNet [32], performing classification and bounding box regression in parallel. YOLOF's design differs in two main ways: (1) following the FFN in DETR [17], the regression branch uses four convolutional layers with batch normalization and ReLU activations, whereas the classification branch uses two; (2) an implicit objectness score (without direct supervision) is predicted for each regression anchor, and the final classification confidence is obtained by multiplying the class scores by this objectness score.
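The implicit objectness in point (2) can be checked numerically. The sketch below assumes scalar logits for one anchor and one class; the function names are hypothetical, and the fused-logit form is shown only as one algebraically equivalent way to write the product of the two scores, not as YOLOF's exact code.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def fused_logit(cls_logit: float, obj_logit: float) -> float:
    """Single logit z with sigmoid(z) == sigmoid(cls_logit) * sigmoid(obj_logit).

    Derivation: sigmoid(c) * sigmoid(o) = e^(c+o) / ((1 + e^c)(1 + e^o)),
    whose logit is c + o - log(1 + e^c + e^o). Very large logits would
    overflow math.exp here and would need clamping in practice.
    """
    return cls_logit + obj_logit - math.log1p(math.exp(cls_logit) + math.exp(obj_logit))


for c, o in [(2.0, -1.0), (-3.0, 0.5), (0.0, 0.0)]:
    product = sigmoid(c) * sigmoid(o)          # class score x implicit objectness
    via_logit = sigmoid(fused_logit(c, o))     # same confidence from one logit
    print(f"cls={c:+.1f} obj={o:+.1f} -> {product:.6f} {via_logit:.6f}")
```

Writing the combined confidence as one logit is convenient if it is to be fed to a single sigmoid-based classification loss; the objectness logit then receives gradients only through that product, which is why it needs no direct supervision.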

2.3. Graph Neural Network

Graph neural networks [33] combine graph computation with neural network methods to transform structural information into node embeddings. Graph computing models are good at capturing topological structures but cannot handle high-dimensional features, whereas traditional neural networks process Euclidean data and cannot be applied directly to non-Euclidean graph data. A new processing mechanism is therefore needed.

Message passing is a popular paradigm in graph neural networks. It consists of two steps, neighbor aggregation and node updating; repeating these steps allows a node to obtain information from its higher-order neighbors.
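A toy sketch of the two message-passing steps follows, assuming scalar node features, mean aggregation and a fixed 50/50 update rule (both hypothetical choices made only for illustration). On a path graph 0-1-2-3 with a signal only at node 3, node 1 is reached after two rounds and node 0 only after three, which is how stacked rounds reach higher-order neighbors.

```python
def message_passing(features, neighbors, rounds):
    """Toy message passing with scalar node features.

    Each round: (1) neighbor aggregation = mean of neighbor features,
    (2) node update = average of the node's own state and the aggregate.
    """
    h = list(features)
    for _ in range(rounds):
        agg = [sum(h[j] for j in neighbors[i]) / len(neighbors[i]) for i in range(len(h))]
        h = [0.5 * h[i] + 0.5 * agg[i] for i in range(len(h))]
    return h


# Path graph 0 - 1 - 2 - 3 with a signal only at node 3
neighbors = [[1], [0, 2], [1, 3], [2]]
print(message_passing([0, 0, 0, 1], neighbors, 1))  # [0.0, 0.0, 0.25, 0.5]
print(message_passing([0, 0, 0, 1], neighbors, 2))  # [0.0, 0.0625, 0.25, 0.375]
```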

Common graph neural network models include graph convolutional networks (GCNs) [34], graph attention networks (GATs) [35], gated graph neural networks (GGNNs) [36] and the autoencoder-based structural deep network embedding (SDNE) [37]. These models can suffer from insufficient memory and neighbor explosion when trained on large-scale data.

GCN variants aggregate neighbor information through convolution operations and are divided into spectral-domain methods (based on the eigenvalues of the graph Laplacian) and spatial-domain methods (based on message passing). GAT introduces an attention mechanism to process graph data and assigns a different weight to each neighboring node. GGNN processes graph-structured data with RNN-based gated node updates. SDNE uses an autoencoder to learn node representations that preserve the similarity between nodes.

2.4. Attention Mechanism

The attention mechanism has become a cornerstone of deep learning, with important applications in natural language processing, computer vision and speech recognition. Attention mechanisms estimate how important different spatial positions or channels of a feature map are, so that the network can allocate its resources to the most informative features and achieve better results [38].

Momenta proposed SE-Net [39], which introduced a channel attention mechanism. Its main idea is to compute, from the feature map, a weight for each channel that represents the importance of that channel. However, SE-Net reduces the channel dimension when computing these weights, and this dimensionality reduction adversely affects the performance of channel attention.

Wang et al. proposed ECA-Net [40] to avoid the side effects of dimensionality reduction in channel attention. ECA-Net uses a one-dimensional convolution to capture cross-channel interactions and obtain more accurate attention weights, adding very few parameters while achieving very good performance.
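To make the parameter comparison concrete, the sketch below counts the weights in SE-Net's two fully connected layers (assuming the commonly used reduction ratio r = 16 and ignoring biases) against an ECA-style one-dimensional kernel (assuming kernel size k = 3). It also shows the ECA-style weighting itself: a 1D convolution slides over the pooled channel descriptor without any dimensionality reduction. The function names are hypothetical.

```python
import math


def se_params(channels: int, reduction: int = 16) -> int:
    """Weights of SE-Net's two FC layers (C -> C/r -> C), biases ignored."""
    return 2 * channels * (channels // reduction)


def eca_params(kernel_size: int = 3) -> int:
    """An ECA-style 1D kernel is shared across all channels."""
    return kernel_size


def eca_weights(pooled, kernel):
    """Per-channel weights: 1D convolution over the pooled channel descriptor
    (zero padding keeps length C, no dimensionality reduction), then sigmoid."""
    k, C = len(kernel), len(pooled)
    pad = k // 2
    weights = []
    for c in range(C):
        s = sum(kernel[t] * pooled[c + t - pad] for t in range(k) if 0 <= c + t - pad < C)
        weights.append(1.0 / (1.0 + math.exp(-s)))
    return weights


print(se_params(512), eca_params(3))  # 32768 3
print(eca_weights([0.5, -0.2, 1.0, 0.3], [0.25, 0.5, 0.25]))
```

For a 512-channel feature map, the SE-style excitation therefore needs tens of thousands of weights, while the shared 1D kernel needs only k of them.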

Woo et al. proposed the Convolutional Block Attention Module (CBAM) [41]. CBAM holds that the feature maps of convolutional networks contain a large amount of attention information not only across channels but also across spatial positions, that is, the pixels within each channel. Earlier attention mechanisms focused only on channel attention and ignored the spatial dimension. CBAM shows that jointly considering both attention dimensions outperforms using either alone, establishing a new baseline for attention-based feature enhancement.

2.5. Space Object Dataset

The development of deep learning methods relies fundamentally on large training datasets, yet constructing reliable space object datasets poses unique challenges. Space object images are difficult to acquire, and their appearance varies widely with observation conditions. Key factors include (1) the limited availability of real space object observations and (2) significant variations caused by scale differences, attitude changes and observation parameters such as viewing angle, distance and lighting conditions [42,43].

To construct a space object dataset, Liu Zhenjiang et al. collected satellite and space debris images from online images and videos, expanded them with simulations in the Satellite Tool Kit (STK) [44] software, annotated them and built the SSAD dataset [45]. The non-cooperative objects in the collected images were far from the observing spacecraft, so most space objects in the dataset are small-scale targets. To enrich dataset diversity, STK simulation videos were used to simulate different satellite states, scales and backgrounds.

Because publicly available real images of space objects are scarce and difficult to acquire, Hoang et al. [46] developed a dedicated dataset for space object detection, segmentation and component recognition. They collected 3117 satellite images from real satellite imagery and spacecraft video archives and used a multi-source data fusion strategy to build a comprehensive collection covering space stations, satellites and their components. They also provided a benchmark test set with annotations for object detection and instance segmentation, standardizing evaluation for related research.

3. Methods

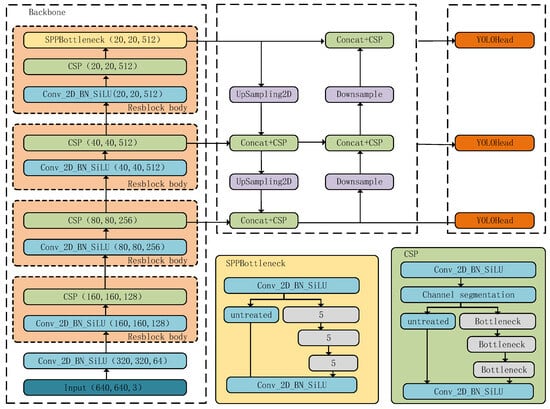

3.1. Framework Solution of Improved YOLOv8_L

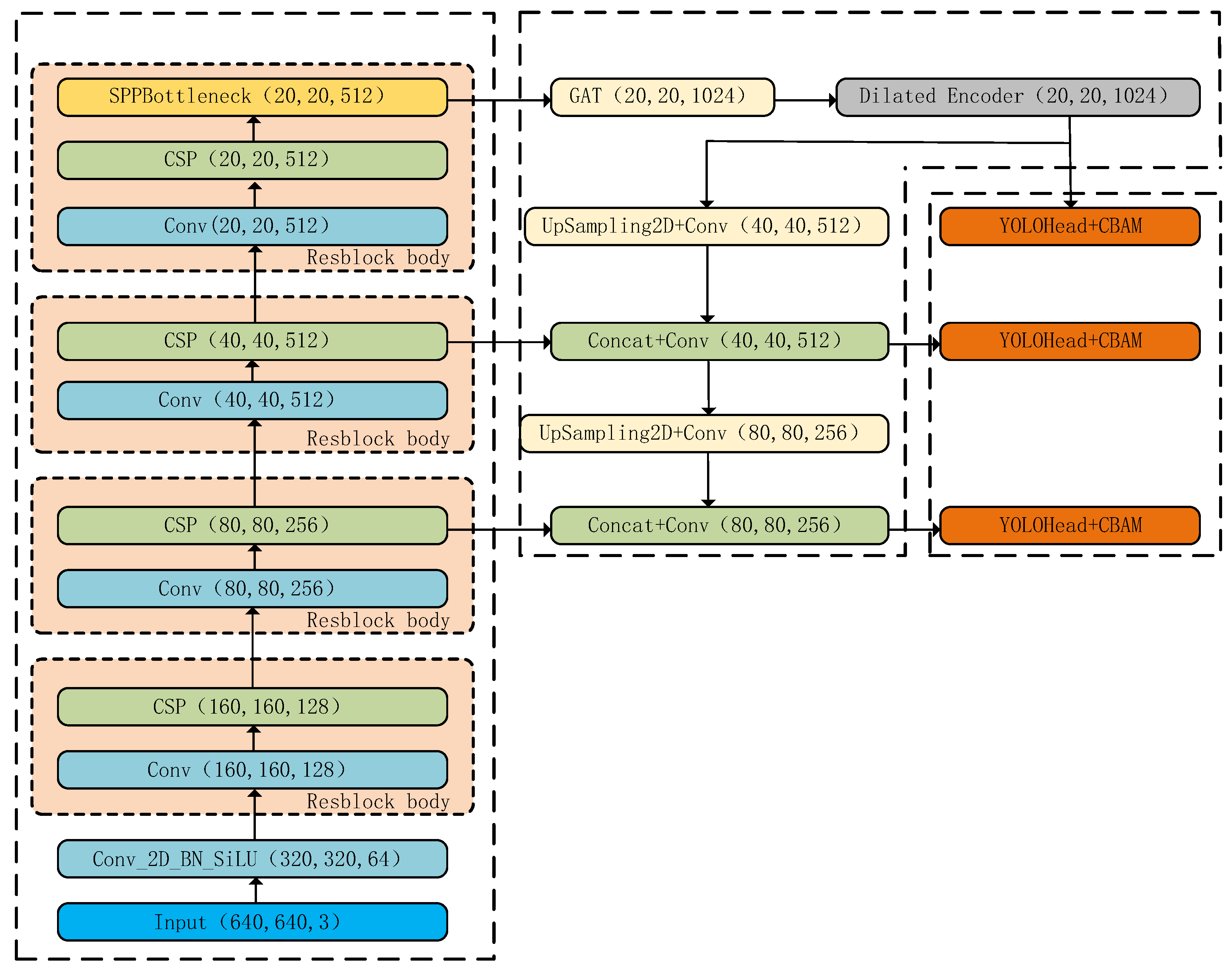

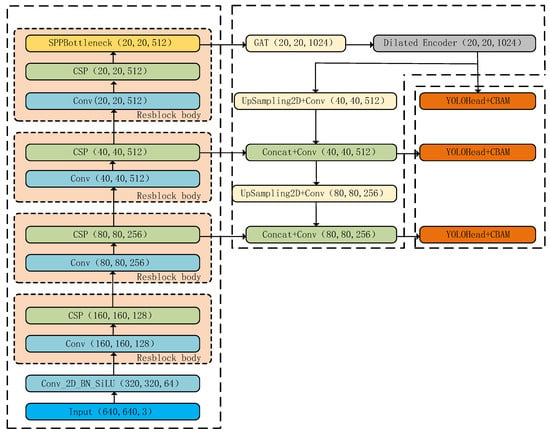

To address insufficient object detection accuracy in complex space scenes, we propose a multi-scale feature fusion framework based on an improved YOLOv8_L. Combining a graph attention network (GAT) with a Dilated Encoder network significantly improves the detection and recognition performance for space remote sensing objects. The structure of the improved YOLOv8_L is shown in Figure 2. Compared with the YOLOv8_L network in Figure 1, we keep the YOLOv8_L backbone and modify the FPN and YOLOHead networks, as detailed below.

Figure 2. The improved YOLOv8_L algorithm. We keep the backbone of YOLOv8_L and modify the FPN and YOLOHead networks.

Compared with the structure in Figure 1, we add the GAT module and the Dilated Encoder module to enhance feature expression. The downsampling path of the FPN is removed, so features are fused with those of the preceding layer only through upsampling. The CSP modules are also removed, which reduces the number of parameters to some extent; their role is taken over by the GAT and Dilated Encoder modules. Finally, CBAM is added to the YOLOHead module.

3.2. Graph Attention Network

This section describes how GAT is used in the proposed space object detection algorithm. By introducing an attention mechanism, GAT aggregates the features of neighboring nodes more effectively and describes the topology of the input well. A GAT is built by stacking multiple graph attention layers (GALs). A GAL learns the importance of each neighbor to a given node and extracts features weighted by this importance. The input to a single GAL is a set of node feature vectors:

$$\mathbf{h} = \{h_1, h_2, \ldots, h_N\}, \quad h_i \in \mathbb{R}^{F} \tag{1}$$

where $\mathbf{h}$ is the set of input node feature vectors, $N$ is the number of nodes in the node set, $F$ is the feature dimension and $h_i$ is the feature vector of node $i$. The output of a single GAL is a new set of node features:

$$\mathbf{h}' = \{h'_1, h'_2, \ldots, h'_N\}, \quad h'_i \in \mathbb{R}^{F'}$$

where $F'$ is the output node feature dimension.

To obtain sufficient representational capability, a GAL first applies a learnable weight matrix $\mathbf{W} \in \mathbb{R}^{F' \times F}$, shared by all nodes, to linearly transform the input node features into more descriptive features. A shared attention mechanism $a$ is then applied to pairs of nodes to compute attention coefficients:

$$e_{ij} = a\left(\mathbf{W} h_i, \mathbf{W} h_j\right) \tag{2}$$

where $e_{ij}$ is the attention coefficient of node $j$ with respect to node $i$ (the importance of node $j$'s features to node $i$) and $a$ is the attention function. In Formula (2), every node can attend to every other node, which ignores the topology of the graph. To take the topology into account, a GAL computes $e_{ij}$ only for $j \in \mathcal{N}_i$, where $\mathcal{N}_i$ is the set of first-order neighbors of node $i$. To make the coefficients comparable across nodes, they are normalized over the neighbors of each node with the softmax function:

$$\alpha_{ij} = \mathrm{softmax}_j\left(e_{ij}\right) = \frac{\exp\left(e_{ij}\right)}{\sum_{k \in \mathcal{N}_i} \exp\left(e_{ik}\right)} \tag{3}$$

where $\alpha_{ij}$ is the normalized attention coefficient. The GAL implements the attention mechanism $a$ as a single-layer feedforward neural network, so the complete computation is:

$$\alpha_{ij} = \frac{\exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[\mathbf{W} h_i \,\Vert\, \mathbf{W} h_j\right]\right)\right)}{\sum_{k \in \mathcal{N}_i} \exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[\mathbf{W} h_i \,\Vert\, \mathbf{W} h_k\right]\right)\right)} \tag{4}$$

where $\Vert$ denotes concatenation, LeakyReLU is the nonlinear activation function, $\mathbf{a} \in \mathbb{R}^{2F'}$ is the weight vector of the feedforward network that implements the attention mechanism and $\top$ denotes transposition. The resulting coefficients are asymmetric; that is, the coefficient $\alpha_{ij}$ of node $j$ with respect to node $i$ generally differs from $\alpha_{ji}$. Once the attention coefficients are obtained, the GAL computes each output feature as a linear combination of the transformed neighbor features, weighted by these coefficients:

$$h'_i = \sigma\left(\sum_{j \in \mathcal{N}_i} \alpha_{ij} \mathbf{W} h_j\right) \tag{5}$$

where $h'_i$ is the feature output by the GAL, $\sigma$ is a nonlinear activation function and $\mathbf{W}$ is the learnable weight matrix.

To improve the fitting ability of the model, a multi-head attention mechanism is introduced: each attention layer uses several independent learnable weights to compute attention coefficients and feature transformations, and then aggregates the results. When the results are aggregated by concatenation, multi-head attention is computed as:

$$h'_i = \Big\Vert_{k=1}^{K} \sigma\left(\sum_{j \in \mathcal{N}_i} \alpha_{ij}^{k} \mathbf{W}^{k} h_j\right) \tag{6}$$

where $\Vert$ denotes concatenation, $K$ is the number of attention heads, $\mathbf{W}^{k}$ is the learnable weight matrix of the $k$-th head and $\alpha_{ij}^{k}$ is the attention coefficient of node $j$ with respect to node $i$ computed with $\mathbf{W}^{k}$. Note that the output feature obtained by concatenation has dimension $KF'$.
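The computation of a single GAL and its multi-head extension can be sketched in plain Python. This is a minimal, unoptimized illustration under stated assumptions, not the authors' implementation: the attention function is a single-layer feedforward network followed by LeakyReLU (slope 0.2 assumed), the output activation σ is assumed to be ELU, each node's neighborhood is passed in explicitly (self-loops included by assumption), and the heads are concatenated so that each node ends up with K·F' features. All function names are hypothetical.

```python
import math
import random


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def elu(x: float) -> float:
    return x if x > 0 else math.exp(x) - 1.0


def matvec(M, v):
    """Matrix-vector product for nested lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def gal_head(h, neighbors, W, a, activation=elu):
    """One attention head of a GAL.

    h: N x F node features; neighbors[i]: indices j in N_i;
    W: F' x F shared weight matrix; a: attention vector of length 2F'.
    Returns (N x F' output features, per-node dict of alpha_ij).
    """
    Wh = [matvec(W, hi) for hi in h]              # shared linear transform W h_i
    out, alphas = [], []
    for i, nbrs in enumerate(neighbors):
        # e_ij = LeakyReLU(a^T [W h_i || W h_j]), computed only for j in N_i
        e = {j: leaky_relu(sum(ak * x for ak, x in zip(a, Wh[i] + Wh[j]))) for j in nbrs}
        m = max(e.values())                       # subtract max for a stable softmax
        z = sum(math.exp(v - m) for v in e.values())
        alpha = {j: math.exp(v - m) / z for j, v in e.items()}
        # h'_i = sigma(sum_j alpha_ij W h_j)
        agg = [sum(alpha[j] * Wh[j][f] for j in nbrs) for f in range(len(Wh[i]))]
        out.append([activation(x) for x in agg])
        alphas.append(alpha)
    return out, alphas


def multi_head_gal(h, neighbors, heads):
    """Run K heads (list of (W, a) pairs) and concatenate: K * F' features per node."""
    per_head = [gal_head(h, neighbors, W, a)[0] for W, a in heads]
    return [[x for head in per_head for x in head[i]] for i in range(len(h))]


# Toy usage: path graph 0-1-2 with self-loops (assumed), F = 3, F' = 2, K = 2
random.seed(0)
F, F_OUT, K = 3, 2, 2
h = [[random.uniform(-1, 1) for _ in range(F)] for _ in range(3)]
neighbors = [[0, 1], [0, 1, 2], [1, 2]]
heads = [([[random.uniform(-1, 1) for _ in range(F)] for _ in range(F_OUT)],
          [random.uniform(-1, 1) for _ in range(2 * F_OUT)])
         for _ in range(K)]
out = multi_head_gal(h, neighbors, heads)
print(len(out), len(out[0]))  # 3 4
```

Because `Wh[i] + Wh[j]` and `Wh[j] + Wh[i]` are different concatenations, `alphas[0][1]` and `alphas[1][0]` generally differ, which is the asymmetry noted above.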

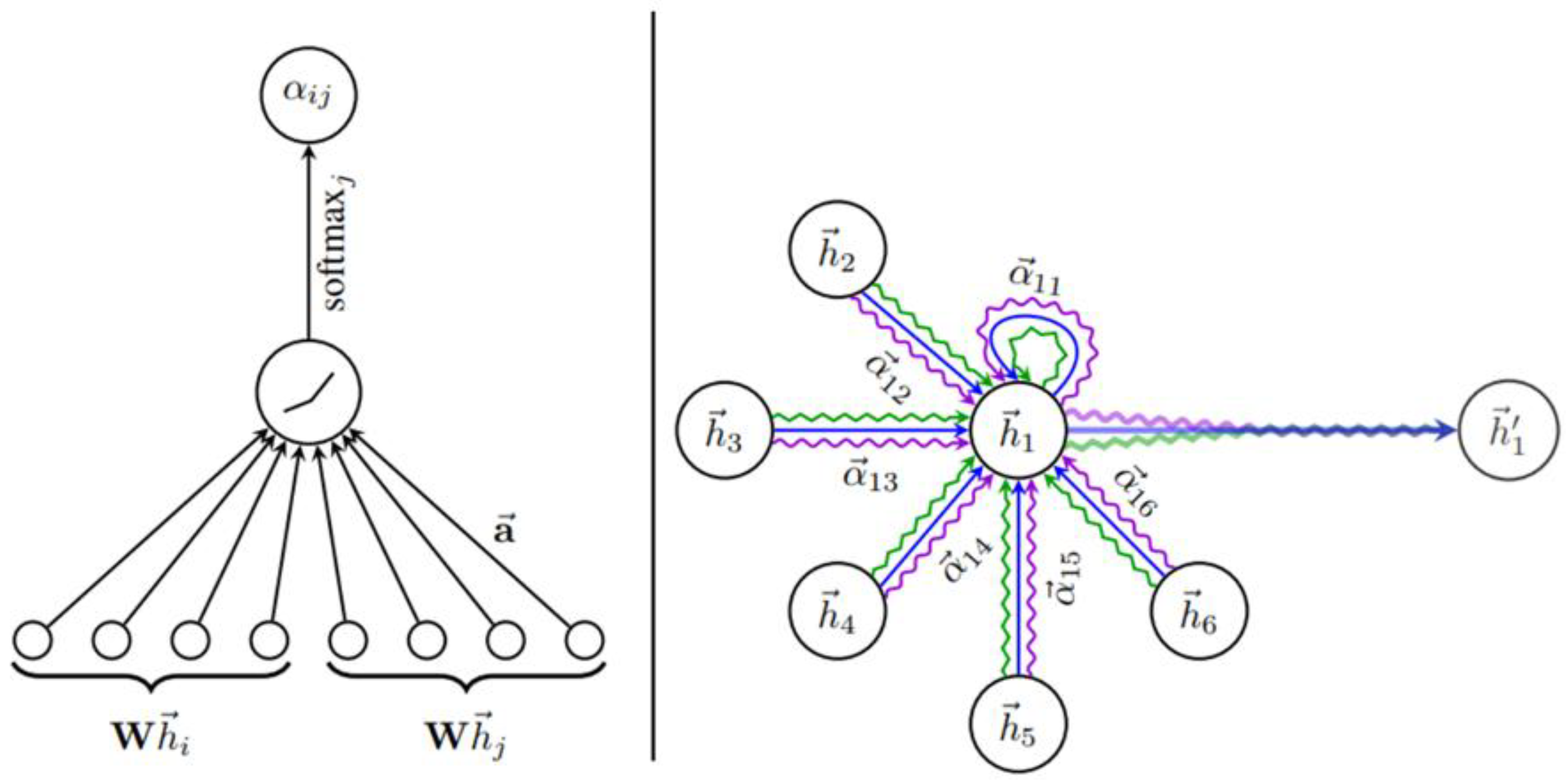

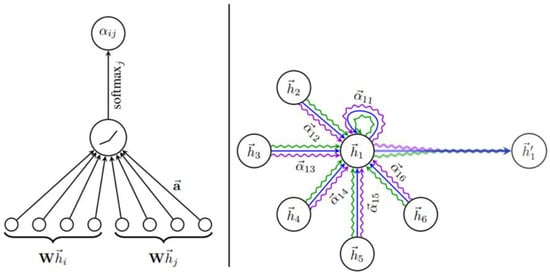

To sum up, the computation of a GAL is shown in Figure 3. The left panel shows how the attention coefficient $\alpha_{ij}$ between node $i$ and node $j$ is computed; the right panel is a schematic of multi-head attention. Different weights $\mathbf{W}^{k}$ are used to compute the attention coefficients of the first-order neighbors of node $i$, the node features are then computed, and the results are aggregated to obtain the final output features. Different arrows and colors in the figure represent attention computed with different weights $\mathbf{W}^{k}$.

Figure 3. Diagram of the process by which a GAL calculates attention.

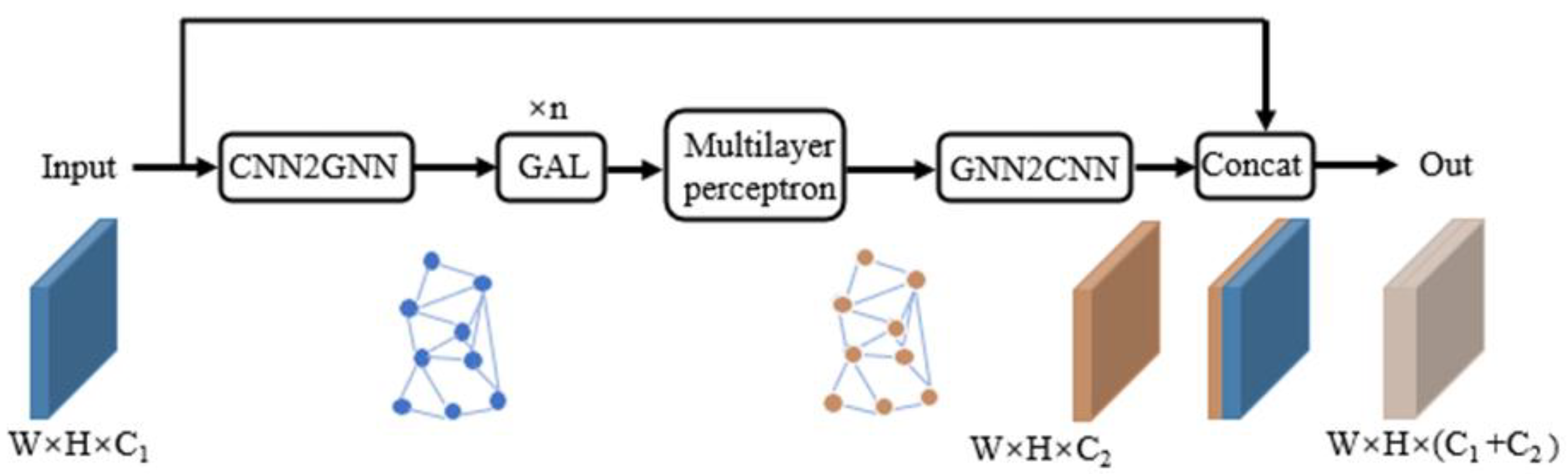

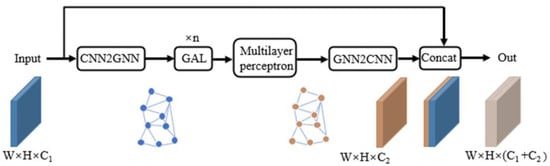

The GAT module is shown in Figure 4. First, the input feature map $X \in \mathbb{R}^{H \times W \times C}$ is converted into graph-structured data through feature mapping: each pixel of the feature map is treated as a graph node, and the relationships between pixels are treated as edges. The resulting graph has $N = H \times W$ nodes, each with a $C$-dimensional feature vector, and its adjacency matrix has size $N \times N$. The graph data is then fed into a graph attention network built from multiple GALs to extract topological features, and a multi-layer perceptron transforms the extracted features into a node feature matrix of size $N \times C'$, which feature mapping converts back into a feature map $X' \in \mathbb{R}^{H \times W \times C'}$. Finally, $X$ and $X'$ are concatenated into a new feature map, which is the output of the module.

Figure 4. Framework diagram of the GAT module.
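The wiring of the GAT module (feature map to graph, graph processing, graph back to feature map, then concatenation with the input) can be sketched as follows. The text does not specify how edges are defined, so this sketch assumes each pixel is connected to itself and its four spatial neighbors; `neighbour_mean` is a hypothetical stand-in for the stack of GALs and the MLP, used only to keep the example short. A dense N × N adjacency grows quickly: a 20 × 20 feature map already gives N = 400 nodes and 160,000 adjacency entries.

```python
def feature_map_to_graph(X):
    """Map an H x W x C feature map (nested lists) to graph data.

    Each pixel becomes a node (index y * W + x) with a C-dim feature vector.
    Assumption: edges join each pixel to itself and its 4 spatial neighbors.
    Returns (N x C node features, N x N 0/1 adjacency matrix), N = H * W.
    """
    H, W = len(X), len(X[0])
    nodes = [list(X[y][x]) for y in range(H) for x in range(W)]
    N = H * W
    adj = [[0] * N for _ in range(N)]
    for y in range(H):
        for x in range(W):
            for dy, dx in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
                yy, xx = y + dy, x + dx
                if 0 <= yy < H and 0 <= xx < W:
                    adj[y * W + x][yy * W + xx] = 1
    return nodes, adj


def graph_to_feature_map(nodes, H, W):
    """Inverse mapping: N x C' node matrix back to an H x W x C' feature map."""
    return [[nodes[y * W + x] for x in range(W)] for y in range(H)]


def neighbour_mean(nodes, adj):
    """Hypothetical stand-in for the GAL stack + MLP: unweighted neighbor average."""
    C = len(nodes[0])
    out = []
    for row in adj:
        nbrs = [j for j, edge in enumerate(row) if edge]
        out.append([sum(nodes[j][c] for j in nbrs) / len(nbrs) for c in range(C)])
    return out


def gat_module(X, graph_fn=neighbour_mean):
    """Feature map -> graph -> graph_fn -> feature map X', then splice X and X'."""
    H, W = len(X), len(X[0])
    nodes, adj = feature_map_to_graph(X)
    X_prime = graph_to_feature_map(graph_fn(nodes, adj), H, W)
    # Channel-wise concatenation: C + C' channels per pixel
    return [[X[y][x] + X_prime[y][x] for x in range(W)] for y in range(H)]


# Toy usage: a 3 x 4 feature map with 2 channels
X = [[[float(y), float(x)] for x in range(4)] for y in range(3)]
Y = gat_module(X)
print(len(Y), len(Y[0]), len(Y[0][0]))  # 3 4 4
```

In a real implementation, `graph_fn` would be replaced by the trained GAL stack and MLP, which is where the learned attention weights, rather than uniform averaging, come in.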

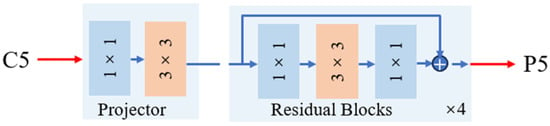

3.3. Dilated Encoder Network

This study adopts the Dilated Encoder network for space object detection, building on YOLOF, which revisited feature pyramid design by relying on single-scale features. YOLOF achieves detection accuracy comparable to FPN-based detectors with higher computational efficiency. The Dilated Encoder network (Figure 5) enhances features with two principal components: an Initial Convolutional Module and dilated residual blocks.

Figure 5. The Dilated Encoder network architecture.

We construct the Initial Convolutional Module as a two-stage feature transformation:

$$E_0 = \mathrm{Conv}_{3\times3}\left(\mathrm{Conv}_{1\times1}\left(C_5\right)\right)$$

where $C_5$ is the C5 feature map from the backbone, $\mathrm{Conv}_{1\times1}$ is a 1 × 1 convolution for channel dimensionality reduction and $\mathrm{Conv}_{3\times3}$ is the subsequent 3 × 3 convolution block with batch normalization and ReLU activation for contextual refinement. We then stack four consecutive dilated residual blocks to capture multi-scale contextual information:

$$E_i = E_{i-1} + \mathrm{Conv}^{(2)}_{1\times1}\left(\mathrm{DConv}^{(d_i)}_{3\times3}\left(\mathrm{Conv}^{(1)}_{1\times1}\left(E_{i-1}\right)\right)\right), \quad i = 1, 2, 3, 4$$

where $\mathrm{Conv}^{(1)}_{1\times1}$ and $\mathrm{Conv}^{(2)}_{1\times1}$ are two 1 × 1 convolutions that adjust the channel dimension and $\mathrm{DConv}^{(d_i)}_{3\times3}$ is a 3 × 3 dilated convolution whose dilation rate increases progressively, $(d_1, d_2, d_3, d_4) = (2, 4, 6, 8)$. Finally, the output of the Dilated Encoder network is $E_4$.
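Why dilation rates of (2, 4, 6, 8) help cover objects of different sizes can be seen from a simplified receptive-field count. The sketch below assumes stride-1 convolutions on the C5 grid and ignores the backbone's own receptive field. A 3 × 3 kernel with dilation d spans 2d + 1 cells, and because every residual block can be bypassed through its identity shortcut, each subset of blocks defines a path with its own receptive field. The function names are hypothetical.

```python
from itertools import combinations


def dilated_extent(kernel_size: int, dilation: int) -> int:
    """Cells spanned by one dilated kernel: k + (k - 1)(d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def encoder_receptive_fields(dilations=(2, 4, 6, 8), kernel_size=3):
    """Distinct receptive-field sizes (in C5 cells) over all residual paths.

    Starts from the projector's 3x3 conv (1x1 convs add nothing); each block on
    the path adds extent - 1 cells, and a skipped block adds nothing.
    """
    base = kernel_size
    sizes = set()
    for r in range(len(dilations) + 1):
        for subset in combinations(dilations, r):
            sizes.add(base + sum(dilated_extent(kernel_size, d) - 1 for d in subset))
    return sorted(sizes)


print(encoder_receptive_fields())                        # [3, 7, 11, 15, 19, 23, 27, 31, 35, 39, 43]
print(encoder_receptive_fields(dilations=(1, 1, 1, 1)))  # [3, 5, 7, 9, 11]
```

With dilation, the encoder output mixes eleven receptive-field sizes from 3 to 43 C5 cells; the same four blocks without dilation only reach 3 to 11 cells.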

3.4. Improvement of FPN in YOLOv8_L

The proposed space object detection algorithm based on YOLOv8_L abandons the original FPN structure and reconstructs it with an adaptive fusion strategy based on multi-level backbone features, using upsampling and feature stacking to enhance the representation of multi-scale objects. The local features extracted by the convolutional neural network are mapped to graph-structured data, and the node attention mechanism of GAT captures the global topological associations of space objects, compensating for the limited weight allocation of convolution operations. The Dilated Encoder network covers targets of different scales through differentiated receptive fields. The framework is shown in Figure 2.

The CSPDarknet backbone extracts three hierarchical feature maps: a high-resolution feature map feat1 of 80 × 80 × 256 (from a shallower stage), a medium-resolution feature map feat2 of 40 × 40 × 512 (from the middle stage) and a low-resolution feature map feat3 of 20 × 20 × 512 (from the deepest stage).

We apply the GAT and Dilated Encoder network operations to feat3 to obtain the enhanced feature layer P3 of size (20, 20, 1024). P3 is upsampled and stacked with feat2 to obtain the enhanced feature layer P2 of size (40, 40, 512). P2 is then upsampled and stacked with feat1 to obtain the enhanced feature layer P1 of size (80, 80, 256).

Finally, the triple-scale enhanced features (20 × 20 × 1024, 40 × 40 × 512, 80 × 80 × 256) are processed through YOLOHead to generate final detection predictions.
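The shape bookkeeping of the reconstructed FPN can be checked with a few lines of Python. This is a shape-only sketch: the text does not say how the stacked features (for example 40 × 40 × 1536 after stacking upsampled P3 with feat2) are reduced to the stated channel counts, so the 1 × 1 projection `project` is an assumption, and all function names are hypothetical.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def upsample2x(s: Shape) -> Shape:
    """2x upsampling (shape only): doubles height and width."""
    return (s[0] * 2, s[1] * 2, s[2])


def stack(a: Shape, b: Shape) -> Shape:
    """Channel-wise concatenation; spatial sizes must already agree."""
    if a[:2] != b[:2]:
        raise ValueError(f"cannot stack {a} with {b}")
    return (a[0], a[1], a[2] + b[2])


def project(s: Shape, out_channels: int) -> Shape:
    """Hypothetical 1x1 convolution that sets the channel count."""
    return (s[0], s[1], out_channels)


feat1, feat2, feat3 = (80, 80, 256), (40, 40, 512), (20, 20, 512)
p3 = (20, 20, 1024)  # GAT + Dilated Encoder output on feat3, as stated in the text
p2 = project(stack(upsample2x(p3), feat2), 512)
p1 = project(stack(upsample2x(p2), feat1), 256)
print(p3, p2, p1)  # (20, 20, 1024) (40, 40, 512) (80, 80, 256)
```

The explicit size check in `stack` mirrors the fact that each upsampling step must bring the deeper map to exactly the resolution of the backbone map it is stacked with.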

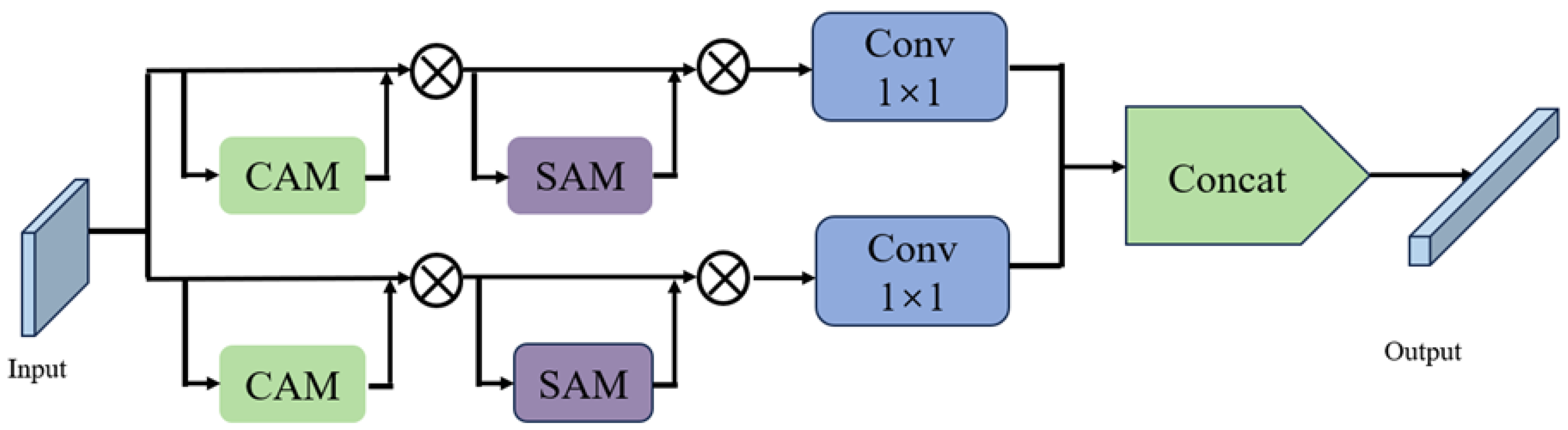

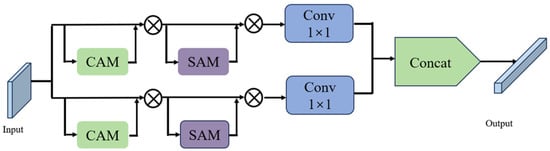

3.5. Improvement in YOLOHead Network in YOLOv8_L

As shown in Figure 6, CBAM is introduced at the end of the YOLOv8_L network to guide the detection algorithm to extract more effective features from complex backgrounds.

Figure 6.

The architecture of CBAM.

CBAM is based on the observation that the feature maps of a convolutional network carry attention information both across channels and across spatial positions (pixels). Earlier attention mechanisms focused only on channel attention and ignored the attention information available in the spatial dimension. CBAM shows that combining channel and spatial attention performs better than using either one alone. The specific formulation is as follows:

YOLOv8_L employs a decoupled head in which classification and regression are processed in separate branches. We therefore add the attention mechanism to the classification branch and to the regression branch separately, adjust the channel dimension with a 1 × 1 convolution, and finally combine the outputs with a Concat operation for object detection.
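The channel bookkeeping of this decoupled head can be sketched as follows. The sketch assumes the standard YOLOv8 layout, in which the regression branch predicts 4 × reg_max distribution bins with reg_max = 16 and the classification branch predicts one score per class, and it assumes two classes (satellite and solar panel) for the space object dataset. Because CBAM preserves tensor shape, only the final 1 × 1 convolutions determine the channel counts. The function name is illustrative.

```python
from typing import List, Tuple

REG_MAX = 16     # assumed: YOLOv8 default number of distribution bins per box side
NUM_CLASSES = 2  # assumed: satellite and solar panel


def head_output_shape(h: int, w: int, num_classes: int = NUM_CLASSES,
                      reg_max: int = REG_MAX) -> Tuple[int, int, int]:
    """Shape of one decoupled-head output after the final Concat.

    Regression branch: CBAM (shape-preserving) -> 1x1 conv to 4 * reg_max channels.
    Classification branch: CBAM (shape-preserving) -> 1x1 conv to num_classes channels.
    """
    reg_channels = 4 * reg_max
    cls_channels = num_classes
    return (h, w, reg_channels + cls_channels)


shapes: List[Tuple[int, int, int]] = [head_output_shape(s, s) for s in (80, 40, 20)]
print(shapes)                            # [(80, 80, 66), (40, 40, 66), (20, 20, 66)]
print(sum(h * w for h, w, _ in shapes))  # 8400 candidate locations
```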

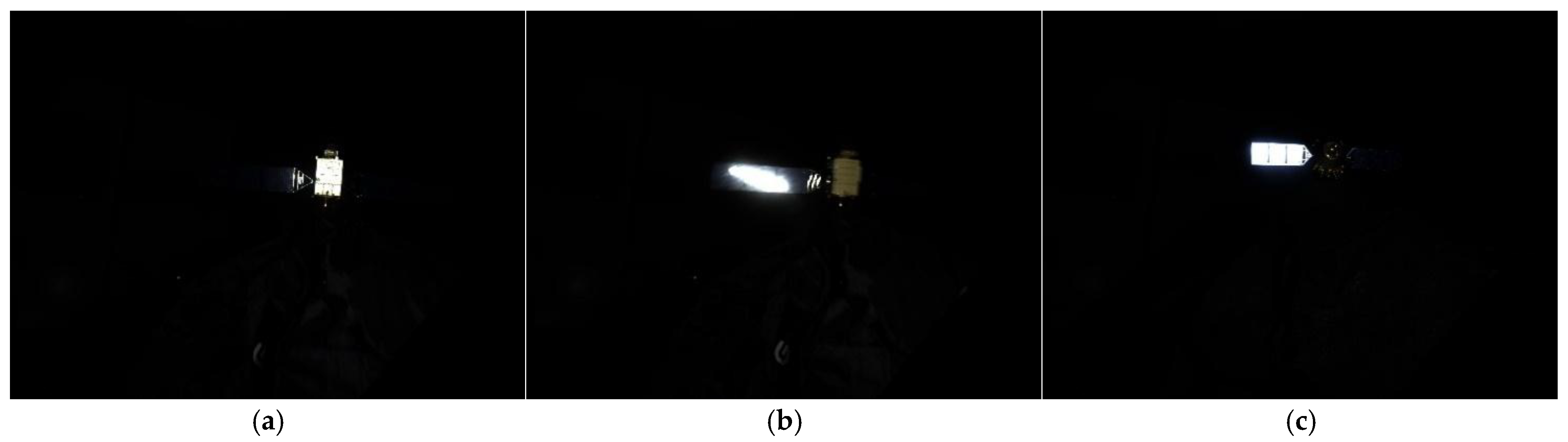

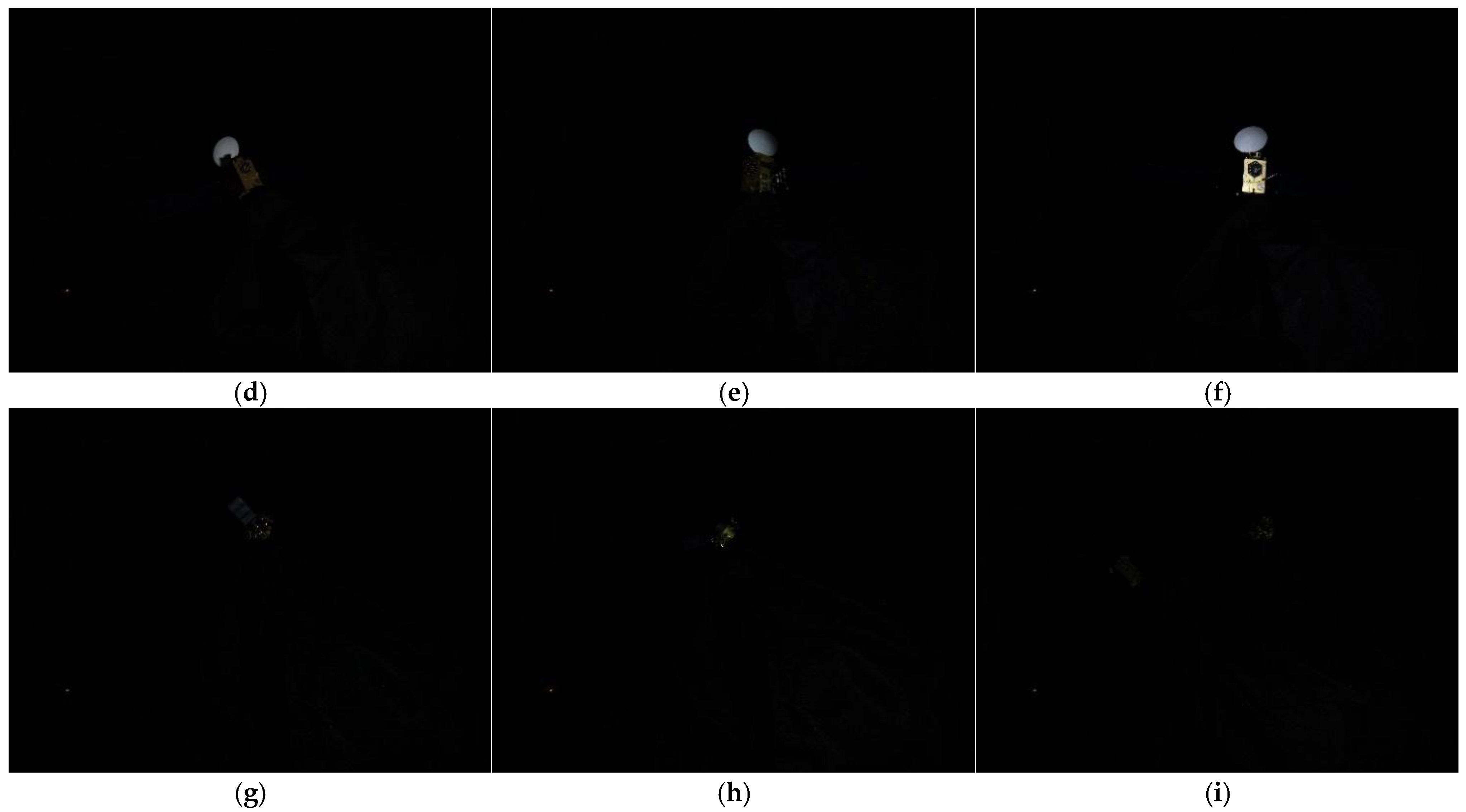

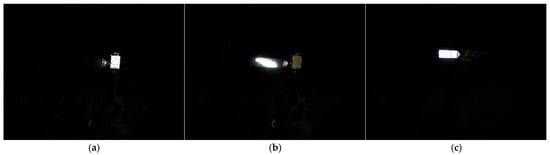

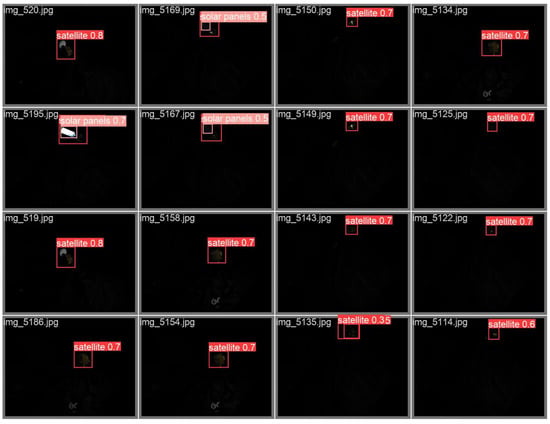
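For reference, the standard CBAM computation of Woo et al. [41] can be written out in plain Python. Channel attention applies a shared MLP to the average-pooled and max-pooled channel descriptors, adds the two results and passes them through a sigmoid; spatial attention pools across channels, convolves the two resulting maps with a k × k kernel and again applies a sigmoid. This is a minimal, framework-free sketch for illustration, not the authors' implementation: biases are omitted, the kernel size is a parameter (CBAM's default is 7), and the weights in the example are chosen by hand.

```python
import math
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # indexed as [channel][row][column]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Two-layer MLP W2 * ReLU(W1 * v), shared by both pooled descriptors."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]
    return [sum(a * b for a, b in zip(row, hidden)) for row in w2]


def channel_attention(f: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """Scale each channel by sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F)))."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]
    scale = [sigmoid(a + b) for a, b in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    return [[[scale[c] * x for x in row] for row in ch] for c, ch in enumerate(f)]


def spatial_attention(f: FeatureMap, kernel: List[Matrix]) -> FeatureMap:
    """Scale each position by sigmoid(conv_kxk([AvgPool_c(F); MaxPool_c(F)])).

    kernel[0] filters the channel-average map and kernel[1] the channel-max map;
    zero padding keeps the spatial size unchanged.
    """
    n_ch, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[c][i][j] for c in range(n_ch)) / n_ch for j in range(w)] for i in range(h)]
    mx = [[max(f[c][i][j] for c in range(n_ch)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    pad = k // 2

    def response(i: int, j: int) -> float:
        total = 0.0
        for plane, weights in ((avg, kernel[0]), (mx, kernel[1])):
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:  # zero padding outside the map
                        total += weights[di][dj] * plane[y][x]
        return total

    mask = [[sigmoid(response(i, j)) for j in range(w)] for i in range(h)]
    return [[[mask[i][j] * ch[i][j] for j in range(w)] for i in range(h)] for ch in f]


def cbam(f: FeatureMap, w1: Matrix, w2: Matrix, kernel: List[Matrix]) -> FeatureMap:
    """Channel attention first, then spatial attention, as in CBAM."""
    return spatial_attention(channel_attention(f, w1, w2), kernel)


# Two 2x2 channels; reduction C -> C/2 -> C; 3x3 spatial kernel; all weights zero,
# so both attention maps equal sigmoid(0) = 0.5 and the output is 0.25 * input.
f = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
w1, w2 = [[0.0, 0.0]], [[0.0], [0.0]]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
print(cbam(f, w1, w2, kernel)[0])  # [[0.25, 0.5], [0.75, 1.0]]
```

Because both attention maps lie strictly between 0 and 1, CBAM only re-weights features; in the head described above this re-weighting happens before the 1 × 1 convolutions that set the output channels.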

3.6. Construct Space Object Dataset

Existing space object datasets do not consider object detection in complex environments such as strong light interference and low-light conditions. We therefore construct a new space object dataset of 8000 images of satellites and solar panels, apply data enhancement and annotation, and use this dataset to verify the improved algorithm. Example images from the dataset are shown in Figure 7.

Figure 7.

Some pictures of the space object dataset.

The dataset was constructed to cover three scenarios:

- Satellite model images under strong light interference; some of the renderings are shown in Figure 7a–c.

- Satellite model images captured from different angles and at different scales; some of the renderings are shown in Figure 7d–f.

- Satellite model images in low-light environments, reflecting the imaging effect under dark conditions; some of the renderings are shown in Figure 7g–i.

4. Experiments

4.1. Datasets

The experiments in this paper use a two-dataset verification strategy. First, the generality of the algorithm is verified on the combined VOC2007 + 2012 dataset. Second, its performance on the target task is verified on the self-built dataset for space target detection. Each dataset was randomly split into training, validation and test sets at a ratio of 8:1:1.
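A reproducible 8:1:1 random split can be sketched as follows. The fixed seed, the integer rounding that assigns any remainder to the test set, and the file names are assumptions for illustration; the paper does not specify them.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_8_1_1(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into 80% / 10% / 10% parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 8 // 10
    n_val = len(shuffled) // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder (if any) goes to the test set
    return train, val, test


images = [f"space_{i:04d}.png" for i in range(8000)]  # hypothetical file names
train, val, test = split_8_1_1(images)
print(len(train), len(val), len(test))  # 6400 800 800
```

Using a dedicated `random.Random` instance keeps the split repeatable without touching the global random state used elsewhere in training.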

4.2. Experimental Parameter Configuration

The model is trained and validated on a PC-based experimental platform with the following configurations:

Hardware Environment:

- NVIDIA GeForce RTX 4090 GPU with CUDA 11.6.

Software Environment:

- OS: Windows 10 Pro 64-bit.

- Deep Learning Framework: PyTorch 1.13.1.

- Language: Python 3.10.

- IDE: Jupyter.

Training Protocol:

- Epochs: 100.

- Batch Size: 8.

- Learning Rate: 0.01.

- Optimizer: SGD.
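As a rough check on the training budget, the protocol above implies the following number of optimizer steps. The sketch assumes that the 8:1:1 split of the 8000-image space object dataset yields 6400 training images and that a final partial batch is kept; the function name is illustrative.

```python
import math


def optimizer_steps(num_train_images: int, batch_size: int, epochs: int,
                    drop_last: bool = False) -> int:
    """Number of SGD updates for a given dataset size, batch size and epoch count."""
    if drop_last:
        per_epoch = num_train_images // batch_size
    else:
        per_epoch = math.ceil(num_train_images / batch_size)
    return per_epoch * epochs


steps = optimizer_steps(num_train_images=6400, batch_size=8, epochs=100)
print(steps)  # 800 steps per epoch x 100 epochs = 80000
```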

4.3. Evaluation Indicators

In the experiments, the algorithm is evaluated using the following six indicators: (1) giga floating-point operations (GFLOPs), which reflect the computational complexity and computing-resource demand of the model; (2) mean average precision (mAP), the mean of the average precision (AP) over all categories in the dataset; (3) the number of parameters (Params), an important measure of model complexity that directly affects storage requirements, computational cost and training time; (4) frames per second (FPS), a key measure of real-time performance, where a higher FPS means that the model processes input images and returns detection results faster; and (5) Precision and Recall, where precision is the fraction of predicted detections that are correct and recall is the fraction of ground-truth objects that are detected.
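The accuracy-related indicators can be made concrete with a short sketch. It uses the common all-point interpolated definition of average precision (used by PASCAL VOC from 2010 onward); the paper does not state which AP variant its evaluation code uses, and the function names and example numbers are illustrative. For mAP50, a detection counts as a true positive when it matches a not-yet-matched ground-truth box with IoU ≥ 0.5.

```python
from typing import List, Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether that detection was
    matched to a ground-truth box (e.g. IoU >= 0.5 for mAP50).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing in recall
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    return sum(per_class_ap) / len(per_class_ap)


def frames_per_second(num_frames: int, elapsed_seconds: float) -> float:
    return num_frames / elapsed_seconds


ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```

In the example, the false positive at confidence 0.8 lowers precision only until the next true positive restores it to 2/3, which is why the interpolated AP is 0.5 × 1 + 0.5 × 2/3.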

5. Results and Discussion

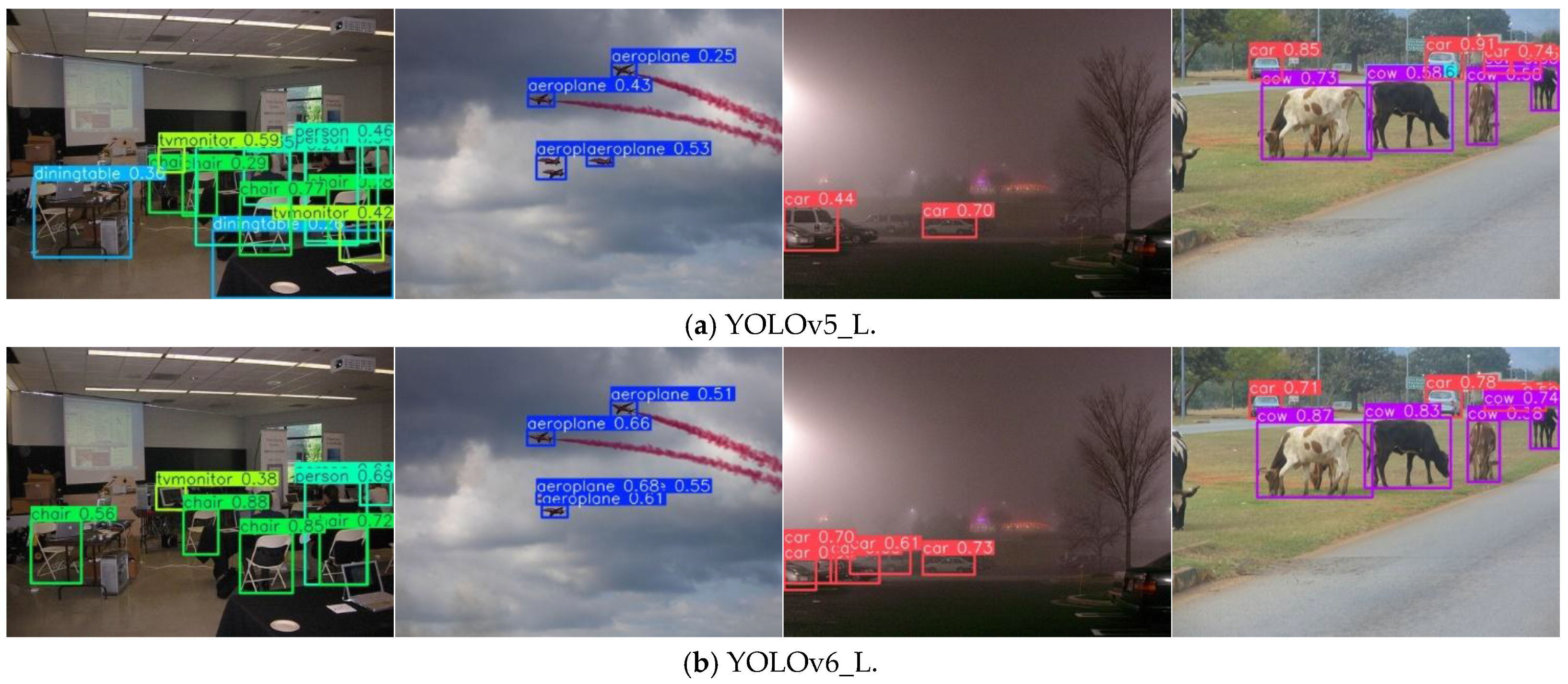

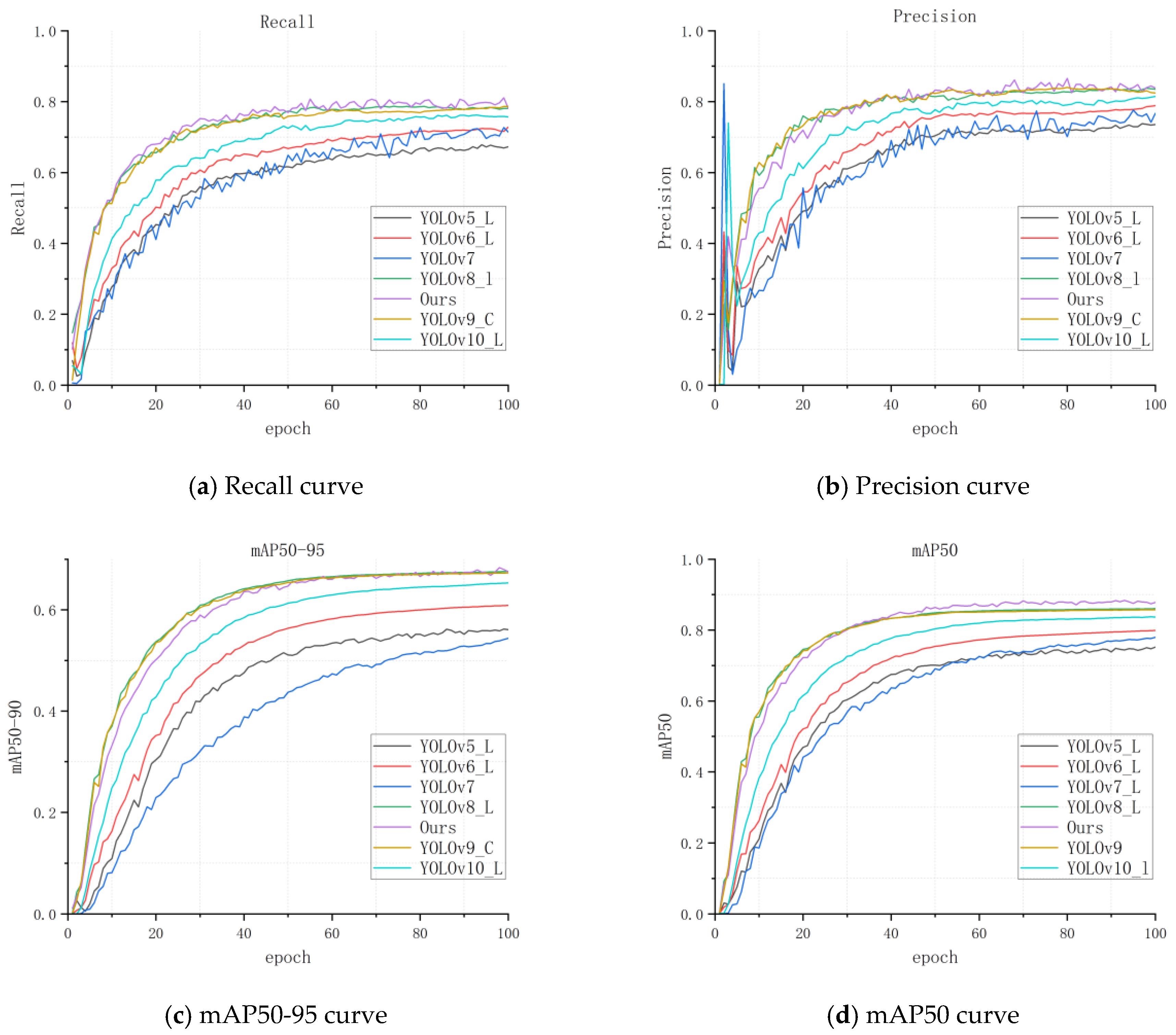

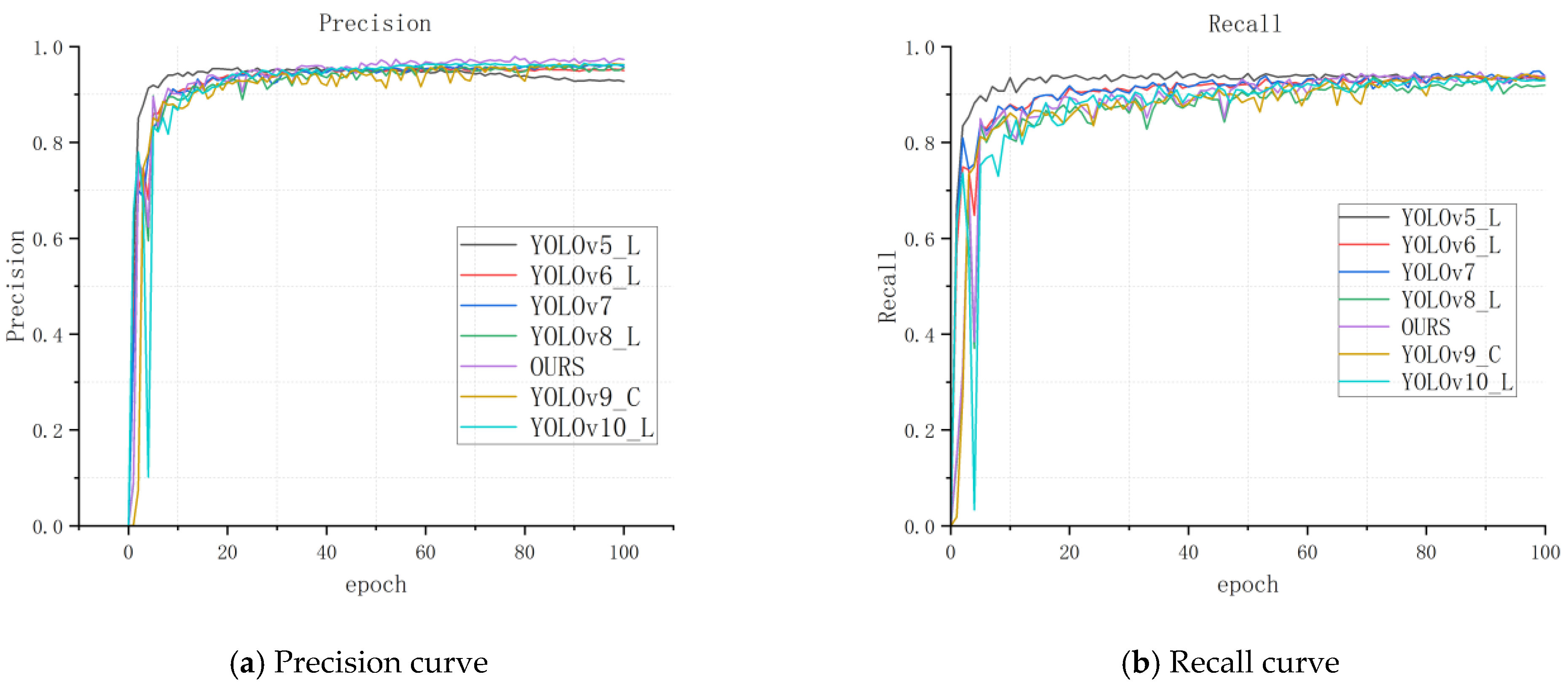

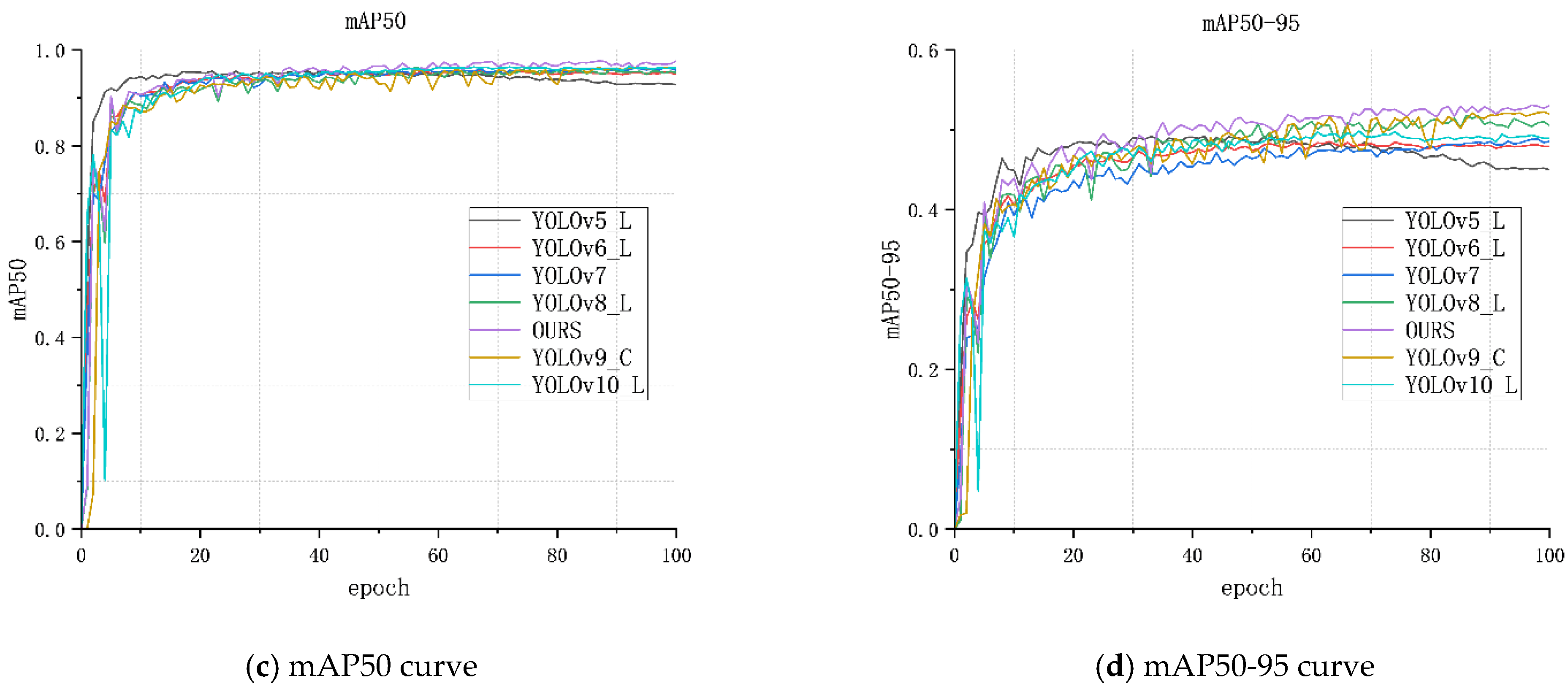

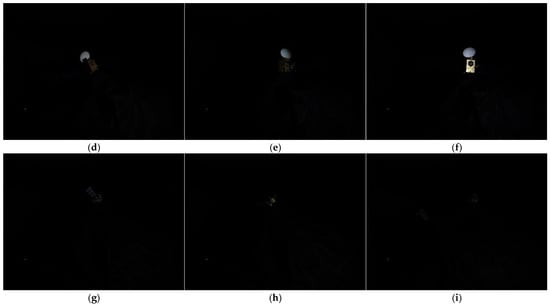

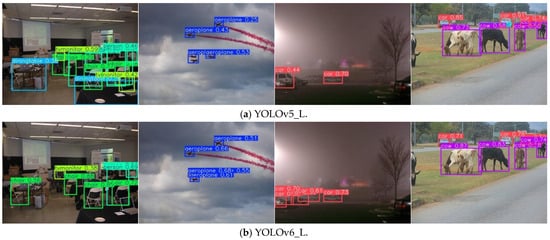

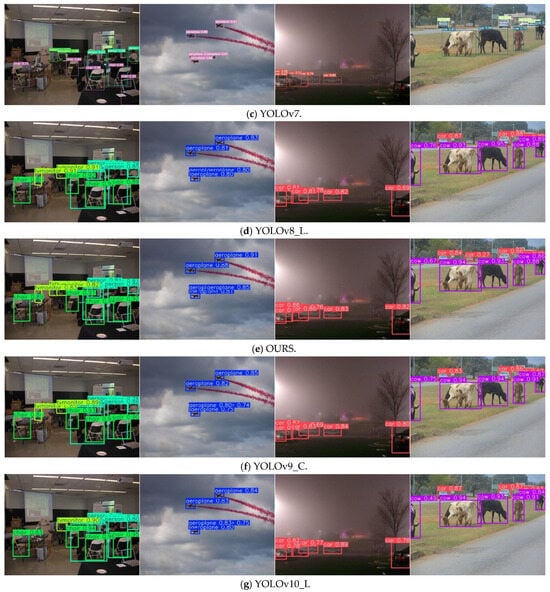

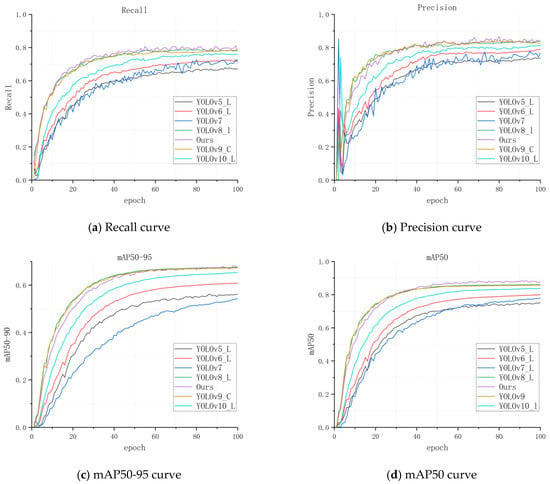

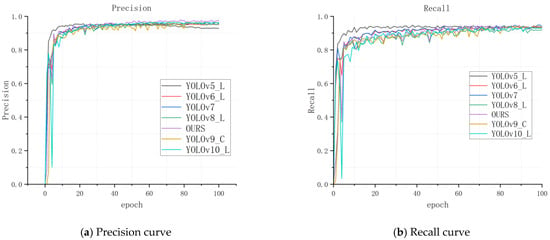

5.1. Contrastive Experiments Results on the VOC2007 + 2012 Dataset

We use the VOC2007 + 2012 dataset to verify the generality of our algorithm and compare it with strong recent algorithms: YOLOv5_L [14], YOLOv6_L [47], YOLOv7 [48], YOLOv8_L [16], YOLOv9_C [49] and YOLOv10_L [50]. The contrastive experimental results on the VOC2007 + 2012 dataset are shown in Table 1, Figure 8 and Figure 9.

Table 1.

Contrastive experimental results on the VOC2007 + 2012 dataset.

Figure 8.

Some image detection effects on the VOC2007 + 2012 dataset.

Figure 9.

Comparison of four evaluation indicators for seven models based on the VOC2007 + 2012 dataset.

Table 1 shows that our algorithm outperforms all other models in three evaluation metrics (Precision, Recall and mAP), but it does not outperform YOLOv9_C and YOLOv10_L in the other metrics (FPS, GFLOPs and Params).

Figure 8 compares the detection results of the seven algorithms (including ours) on four images. The other models show false detections and missed detections to varying degrees, whereas our algorithm produces neither. Moreover, where all compared algorithms detect the same target, our algorithm assigns it the highest confidence. These qualitative results are consistent with the quantitative results in Table 1 and support the effectiveness of our model for object detection tasks.

Figure 9 shows that our algorithm consistently outperforms the other models in the key accuracy metrics mAP, Precision and Recall, further confirming its high detection accuracy on the VOC2007 + 2012 dataset.

The comparisons above on the VOC2007 + 2012 dataset involve only YOLO-series algorithms, which are all single-stage detectors, so they are not comprehensive. We therefore also compare our algorithm with the two-stage object detection algorithms Cascade R-CNN [51] and RepPoints [52] and with the Transformer-based models DETR [17], Deformable DETR [53] and DN-DETR [54]. The experimental results, shown in Table 2, support the validity of the proposed method.

Table 2.

Additional contrastive experimental results on the VOC2007 + 2012 dataset.

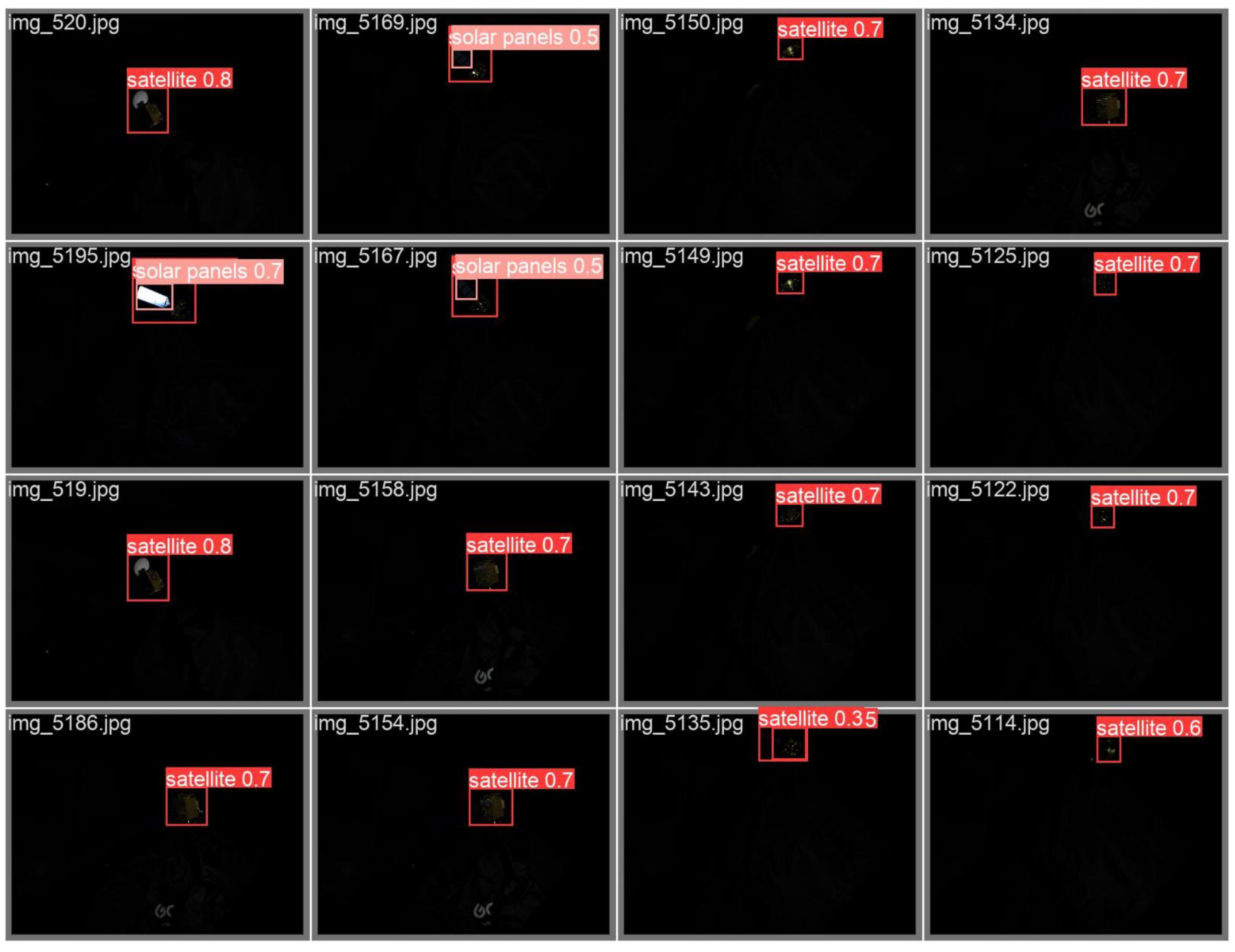

5.2. Visual Experiment Results on the Space Object Dataset

Figure 10 shows the detection results of our algorithm on sample images from the space object dataset. In complex environments, including strong light interference, different scales and low light, the algorithm correctly detects the satellites and solar panels.

Figure 10.

Some visual experiment results on the space object dataset.

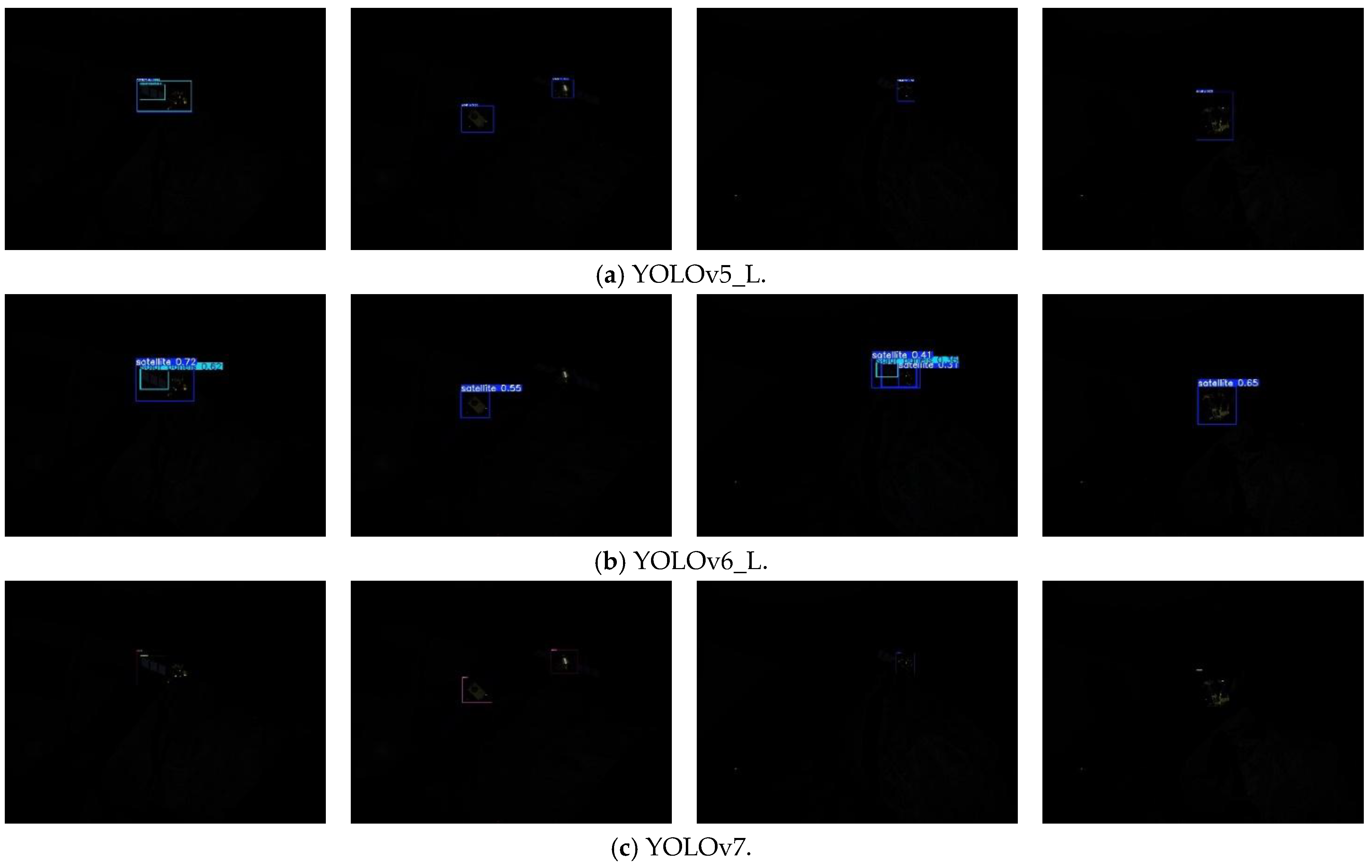

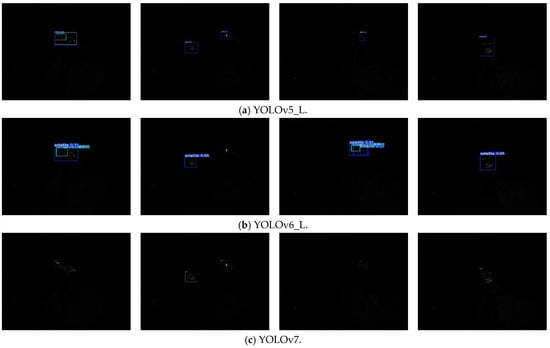

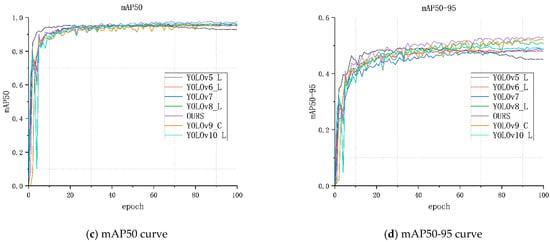

5.3. Contrastive Experiments Results on the Space Object Dataset

We use the space object dataset to evaluate the performance of the proposed algorithm on space targets and compare it with the same recent algorithms: YOLOv5_L [14], YOLOv6_L [47], YOLOv7 [48], YOLOv8_L [16], YOLOv9_C [49] and YOLOv10_L [50]. The contrastive experimental results on the space object dataset are shown in Table 3, Figure 11 and Figure 12.

Table 3 shows that our algorithm outperforms all other models in three evaluation metrics (Precision, Recall and mAP), but it does not outperform YOLOv9_C and YOLOv10_L in the other metrics (FPS, GFLOPs and Params).

Table 3.

Contrastive experimental results on the space object dataset.

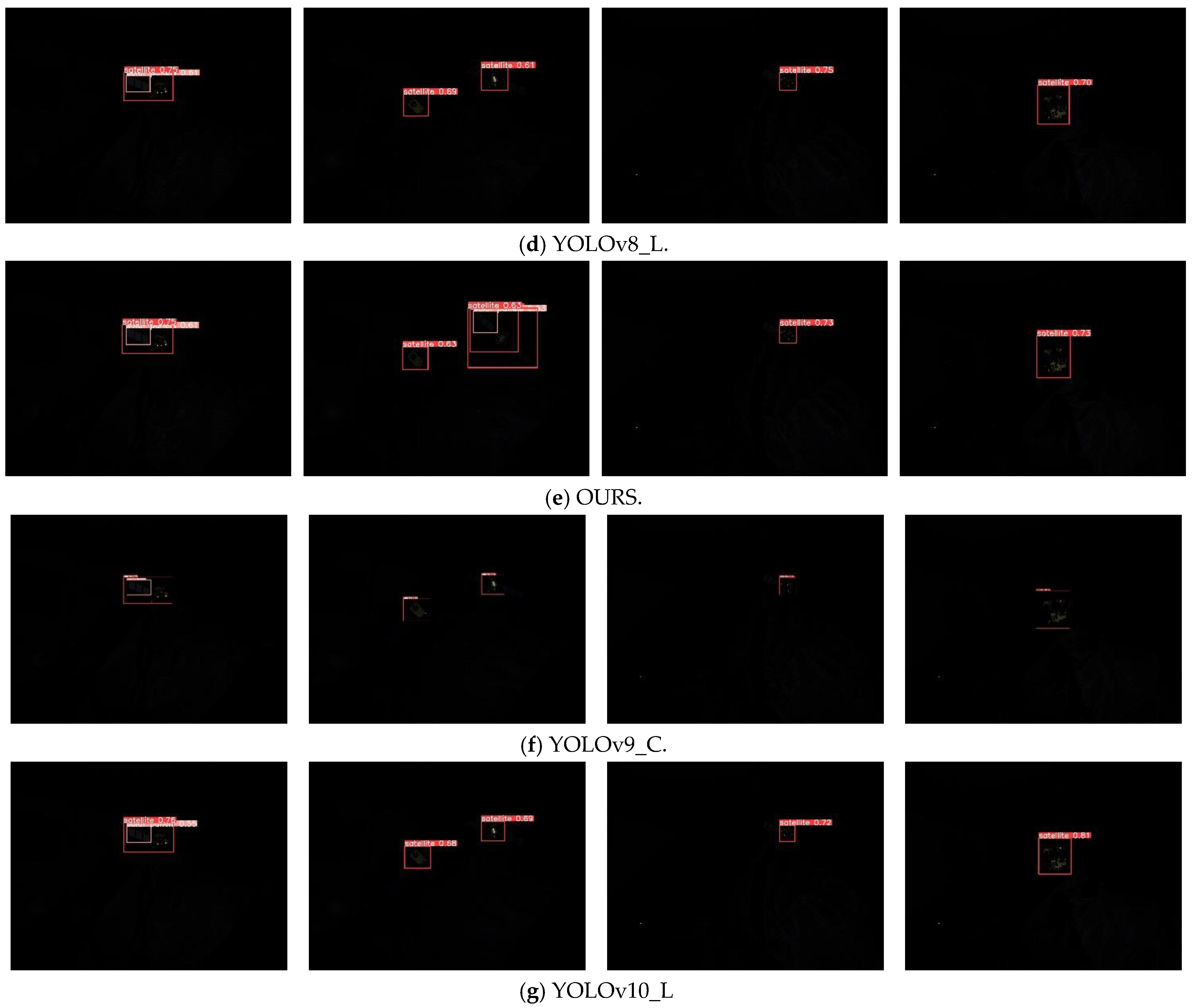

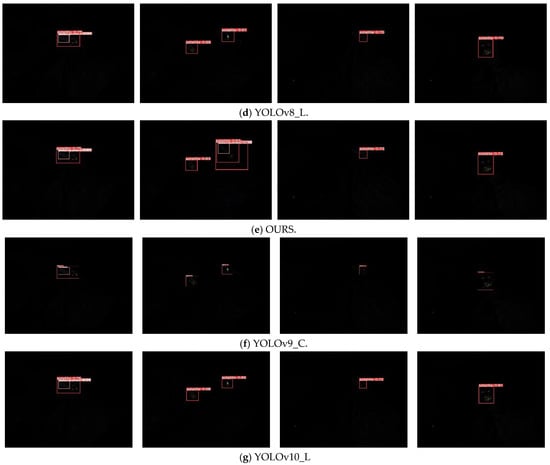

Figure 11 compares the detection results of the seven algorithms (including ours) on four images. The other models show false detections and missed detections to varying degrees, whereas our algorithm produces neither. Moreover, where all compared algorithms detect the same target, our algorithm assigns it the highest confidence. These results further support the effectiveness of our model for target detection tasks.

Figure 11.

Some image detection effects on the space object dataset.

Figure 12 shows that our algorithm consistently outperforms the other models in the key accuracy metrics mAP, Precision and Recall, further confirming its high detection accuracy on the space object dataset.

Figure 12.

Comparison of four evaluation indicators for seven models based on the space object dataset.

5.4. Ablation Experiment Results on the Space Object Dataset

To verify the contribution of each improved module to the space object detection task, we designed a series of ablation experiments based on the baseline algorithm (YOLOv8_L). In these experiments, we successively introduced four enhancements: GAT, the Dilated Encoder network, the improved FPN and an attention mechanism (CBAM). Together, these modules are intended to strengthen feature extraction, reduce computational complexity and make better use of spatial information.

Specifically, the local features extracted by the convolutional neural network are mapped to graph-structured data, and the node attention mechanism of GAT captures the global topological associations of space objects, compensating for the limited weight allocation of convolution operations. The Dilated Encoder network covers targets of different scales through differentiated receptive fields. The improved FPN uses an adaptive fusion strategy based on multi-level backbone features and enhances the representation of multi-scale objects through upsampling and feature stacking. CBAM combines channel and spatial attention so that the model focuses on key image regions, thereby improving detection accuracy.

To evaluate the influence of these modules on the detection results, we use key indicators such as mAP50, FPS and Params as evaluation criteria, since they reflect the ability of the algorithm in space object detection. The ablation experiments isolate the actual role of each module in the algorithm and provide guidance for subsequent optimization. The ablation experiment results for the space object dataset are shown in Table 4.

Table 4.

Ablation experiment results for the space object dataset.

First, applying GAT alone and the Dilated Encoder network alone to the YOLOv8_L feature layer feat3 = (20, 20, 512) increased mAP50 by 1.1% and 0.7%, respectively. Applying GAT and the Dilated Encoder network together increased mAP50 by 2.2%, more than the sum of the individual gains (1.8%), which indicates a synergistic effect between the two modules. Next, replacing the FPN structure of YOLOv8_L lowered mAP50 but reduced Params from 43.83 to 36.54. Finally, adding the attention mechanism increased mAP50 by 1.1%.
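The arithmetic behind these ablation statements can be checked directly. The sketch assumes that the reported mAP50 gains are absolute percentage points and uses only the values stated above; Params are in whatever unit Table 4 reports. The function names are illustrative.

```python
from typing import Tuple


def synergy(gain_a: float, gain_b: float, joint_gain: float) -> Tuple[float, float]:
    """Return (sum of the individual gains, joint gain minus that sum)."""
    additive = gain_a + gain_b
    return additive, joint_gain - additive


def relative_reduction(before: float, after: float) -> float:
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before


additive, extra = synergy(gain_a=1.1, gain_b=0.7, joint_gain=2.2)  # GAT, Dilated Encoder
print(f"{additive:.1f} {extra:.1f}")              # 1.8 0.4
print(f"{relative_reduction(43.83, 36.54):.1%}")  # 16.6%
```

Under this reading, the joint configuration gains about 0.4 points more than the two modules would add independently, and the improved FPN removes roughly one sixth of the parameters.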

5.5. Discussion

Continuous technical progress has produced a series of strong algorithms that have substantially advanced space object detection research. In this paper, carefully designed comparative and ablation experiments further verify the ability of the proposed algorithm to detect space objects. First, we compared six object detection algorithms with ours on the VOC2007 + 2012 dataset. The improved YOLOv8_L algorithm achieves high precision and recall across object categories and still detects objects well in complex scenes, even when they are partially occluded, which demonstrates its broad applicability and robustness. Second, to verify its ability in space object detection, we conducted experiments on the space object dataset. The results show that the algorithm can detect targets in complex scenarios, such as strong light interference, multi-scale variation and low-light environments, demonstrating its adaptability to different conditions. Third, a series of ablation experiments verified the effectiveness of each module: GAT, the Dilated Encoder and CBAM improve accuracy, while the improved FPN reduces the number of parameters. Finally, we compared our algorithm specifically with YOLOv9_C and YOLOv10_L because they are recent members of the YOLO family and perform strongly on both the VOC2007 + 2012 dataset and the space object dataset. Although YOLOv9_C and YOLOv10_L are superior to our algorithm in FPS, GFLOPs and Params, our algorithm achieves higher detection accuracy while still taking real-time performance into account, which better meets its original design goal.

Therefore, the proposed algorithm has some advantages in object detection. For space object detection, it can complete the detection task in complex space environments, showing strong performance, good applicability and good generalization ability, and it has practical value for applications involving space targets. Nevertheless, several limitations remain. First, YOLOv9_C and YOLOv10_L are superior to our algorithm in FPS, GFLOPs and Params, so there is still room for improvement in efficiency. Second, this study is limited to simple targets in the space environment. Many other targets could be detected, including spacecraft components such as antennas and sensors, and space objects such as communication satellites, navigation satellites, space stations, manned spacecraft, abandoned satellites, rocket debris and tiny particles. Third, although our dataset accounts for target angle, strong light interference and low-light environments, the complex space environment cannot be fully simulated on the ground. In summary, the proposed method offers a workable, though not necessarily optimal, solution to the problem of space target detection.

6. Conclusions

This paper proposes a multi-scale feature fusion framework based on an improved YOLOv8_L. The combination of the graph attention network (GAT) and the Dilated Encoder network significantly improves the detection and recognition of space remote sensing objects. This research provides a lightweight, high-precision detection framework for space situational awareness tasks and explores a new path for fusing graph neural networks with object detection. The proposed method is also applicable to remote sensing object detection. Future work will focus on two directions: first, the detection and optimization of very small objects (such as space debris) in space remote sensing, together with semi-supervised learning strategies; second, lightweight models that make the algorithm easier to deploy on space payloads.

Author Contributions

Conceptualization, D.L. and J.C.; methodology, H.Z. (Haifeng Zhang), H.A., D.X. and Z.H.; validation, C.M. and H.Z. (Haoran Zhu); data curation, Z.H.; visualization, H.Z. (Haoran Zhu); supervision, H.Z. (Haifeng Zhang); project administration, H.Z. (Haifeng Zhang); funding acquisition, H.Z. (Haifeng Zhang); writing—original draft preparation, H.Z. (Haifeng Zhang); writing—review and editing, H.Z. (Haifeng Zhang) and H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Shaanxi Province (S2023-YF-YBGY-1523).

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to privacy concerns.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, Z.P.; Deng, C.W.; Deng, Z.Y. A Diverse Space Target Dataset With Multidebris and Realistic On-Orbit Environment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9102–9114.

- Ai, H.; Zhang, H.F.; Ren, L.; Feng, J.; Geng, S.N. Detection and Recognition of Spatial Non-Cooperative Objects Based on Improved YOLOX_L. Electronics 2022, 11, 3433.

- Shan, M.H.; Guo, J.; Gill, E. Review and comparison of active space debris capturing and removal methods. Prog. Aerosp. Sci. 2016, 80, 18–32.

- Chen, Z.; Xu, Z.; Ai, X.; Wu, Q.; Liu, X.; Cheng, J. A Novel Method for PolISAR Interpretation of Space Target Structure Based on Component Decomposition and Coherent Feature Extraction. Remote Sens. 2025, 17, 1079.

- Su, H.; You, Y.; Liu, S. Multi-Oriented Enhancement Branch and Context-Aware Module for Few-Shot Oriented Object Detection in Remote Sensing Images. Remote Sens. 2023, 15, 3544.

- Wu, W.; Cui, S.; Zhang, P.; Dong, S.; He, Q. Development of intelligent processing technology of space remote sensing. Space Int. 2024, 59–64.

- Wang, Z.; Hu, Y.; Yang, J.; Zhou, G.; Liu, F.; Liu, Y. A Contrastive-Augmented Memory Network for Anti-UAV Tracking in TIR Videos. Remote Sens. 2024, 16, 4775.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.Q.; Han, J.W. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Yang, L.; Wang, H.; Zeng, Y.; Liu, W.; Wang, R.; Deng, B. Detection of Parabolic Antennas in Satellite Inverse Synthetic Aperture Radar Images Using Component Prior and Improved-YOLOv8 Network in Terahertz Regime. Remote Sens. 2025, 17, 604.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ultralytics/yolov5: v4.0—nn.SiLU Activations, Weights & Biases logging, PyTorch Hub integration. 2021. Available online: https://github.com/ultralytics/yolov5 (accessed on 10 January 2023).

- Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You Only Look One-level Feature. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 10 January 2023).

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhu, J.; Zhang, L. DAB-DETR: Dynamic Anchor Boxes are Better Queries for DETR. arXiv 2022, arXiv:2201.12329.

- Wu, T.; Yang, X.; Song, B.; Wang, N.; Yang, D. T-SCNN: A Two-Stage Convolutional Neural Network for Space Target Recognition. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Xiang, Y.; Xi, J.; Cong, M.; Yang, Y.; Ren, C.; Han, L. Space debris detection with fast grid-based learning. In Proceedings of the 2020 IEEE 3rd International Conference of Safe Production and Informatization (IICSPI), Chongqing, China, 28–30 November 2020; pp. 205–209.

- Wang, G.; Lei, N.; Liu, H. Improved-YOLOv3 network for object detection in simulated Space Solar Power Systems images. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020.

- Jia, P.; Liu, Q.; Sun, Y.Y. Detection and Classification of Astronomical Targets with Deep Neural Networks in Wide-field Small Aperture Telescopes. Astron. J. 2020, 159, 212.

- AlDahoul, N.; Karim, H.A.; De Castro, A.; Tan, M.J.T. Localization and classification of space objects using EfficientDet detector for space situational awareness. Sci. Rep. 2022, 12, 1–19.

- Mahendrakar, T.; White, R.T.; Wilde, M.; Tiwari, M. SpaceYOLO: A Human-Inspired Model for Real-time, On-board Spacecraft Feature Detection. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2023.

- Tang, Q.; Li, X.W.; Xie, M.L.; Zhen, J.L. Intelligent Space Object Detection Driven by Data from Space Objects. Appl. Sci. 2024, 14, 333.

- Massimi, F.; Ferrara, P.; Petrucci, R.; Benedetto, F. Deep learning-based space debris detection for space situational awareness: A feasibility study applied to the radar processing. IET Radar Sonar Navig. 2024, 18, 635–648.

- Guo, X.J.; Chen, T.; Liu, J.C.; Liu, Y.; An, Q.C. Dim Space Target Detection via Convolutional Neural Network in Single Optical Image. IEEE Access 2022, 10, 52306–52318.

- Jiang, Y.X.; Tang, Y.J.; Ying, C.C. Finding a Needle in a Haystack: Faint and Small Space Object Detection in 16-Bit Astronomical Images Using a Deep Learning-Based Approach. Electronics 2023, 12, 4820.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The Graph Neural Network Model. IEEE Trans. Neural Netw. 2009, 20, 61.

- Zhang, W.; Zhang, P.; Sun, W.; Xu, J.; Liao, L.; Cao, Y.; Han, Y. Improving plant miRNA-target prediction with self-supervised k-mer embedding and spectral graph convolutional neural network. PeerJ 2024, 12, e17396.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Tang, G.; Yang, L.; Zhang, L.; Cao, W.; Meng, L.; He, H.; Kuang, H.; Yang, F.; Wang, H. An attention-based automatic vulnerability detection approach with GGNN. Int. J. Mach. Learn. Cybern. 2023, 14, 3113–3127.

- Cai, H.; Wang, Y.; Liu, L.; Zhu, S.; Chen, M. DAGNN: Deep Autoencoder-Based Graph Neural Network for Local Anomaly Detection. In Proceedings of the 2024 6th International Conference on Communications, Information System and Computer Engineering (CISCE), Guangzhou, China, 10–12 May 2024.

- Wang, Y.; Xiao, H.; Ma, C.; Zhang, Z.; Cui, X.; Xu, A. On-board detection of rail corrugation using improved convolutional block attention mechanism. Eng. Appl. Artif. Intell. 2025, 146, 110349.

- Hu, J.; Shen, L.; Sun, G.; Albanie, S. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module; Springer: Cham, Switzerland, 2018.

- Zheng, S.; Jiang, L.; Yang, Q.; Zhao, Y.; Wang, Z. GS-orthogonalization OMP method for space target detection via bistatic space-based radar. Chin. J. Aeronaut. 2024, 37, 333–351.

- Zhao, H.; Sun, R.; Yu, S. Deep Neural Network closed-loop with raw data for optical resident space object detection. Res. Astron. Astrophys. 2024, 24, 95–103.

- Agrawal, S.; Sharma, A.; Bhatnagar, C.; Chauhan, D.S. Modelling and Analysis of Emitter Geolocation using Satellite Tool Kit. Def. Sci. J. 2020, 70, 440–447.

- Liu, Z.; Zhang, H.; Ji, M.; Lu, T.; Zhang, C.; Yu, Y. Lightweight multi-scale feature fusion enhancement algorithm for spatial noncooperative small target detection. J. Beijing Univ. Aeronaut. Astronaut. 2024, 1–12.

- Hoang, D.A.; Chen, B.; Chin, T.J. A Spacecraft Dataset for Detection, Segmentation and Parts Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.

- Li, F.; Zhang, H.; Zhang, N.L. DN-DETR: Accelerate DETR Training by Introducing Query DeNoising. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2239–2251.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).