1. Introduction

Spaceborne synthetic aperture radar (SAR) can provide high-resolution two-dimensional imaging of the Earth’s surface and is one of the most important approaches for high-resolution remote sensing of the Earth [1,2,3]. For an imaging radar, higher spatial resolution and a larger imaging swath have always been the primary goals of SAR development [4,5,6]. In terms of resolution, after decades of development, the imaging resolution of spaceborne SAR has gradually improved from tens of meters to the submeter level, and the next generation of spaceborne SAR is even expected to reach the centimeter level [7,8,9]. In terms of imaging swath, the scene width has continued to expand, from several kilometers in spotlight mode to tens of kilometers in stripmap mode, and then to hundreds of kilometers in scanning mode. Especially with the development of medium- and high-orbit SAR satellites in recent years, the imaging swath width may even reach the order of thousands of kilometers [10,11,12,13]. Higher resolution inherently demands a longer synthetic aperture time: submeter resolution currently requires integration times on the order of seconds, while centimeter-level resolution may require aperture times of tens of seconds. Over such a long synthetic aperture time, the assumption of uniform linear motion of the radar platform, on which traditional efficient imaging algorithms rely, no longer holds, and the orbital curvature effect must be considered [6,14,15]. In addition, over such a long synthetic aperture time, it is often necessary to consider the noncoplanar effect of the flight trajectory with respect to the illuminated scene due to Earth’s rotation. On the other hand, as the size of the illuminated scene continues to grow, the assumption of a flat ground surface, on which traditional efficient imaging algorithms are based, is no longer applicable, and accurate imaging must consider the spherical ground surface effect [10,13].

Current methods for handling nonlinear radar platform trajectories predominantly use polynomial approximations and model the range history by increasing the polynomial order [16,17]. These approaches have two main drawbacks. First, the achievable focusing accuracy is limited in ultra-high-resolution systems by the residual errors that any finite-order polynomial approximation leaves behind. Second, it is inherently difficult to establish a unified range-history model that remains accurate for all scatterers within the imaged scene, particularly under complex trajectory conditions. Regarding the spherical ground surface effect, conventional strategies typically treat it as a disadvantage and compensate for the additional phase error induced by the planar surface assumption through computationally intensive space-variant phase compensation filters. However, such implementations struggle to balance correction accuracy against computational efficiency.

Reference [18] proposes a fundamentally different approach, referred to as the Spherical Geometry Algorithm (SGA). This approach exploits the spherical surface effect to establish an accurate Fourier transform relationship between a single set of pulse-echo data and the target scattering function. It then proposes an image formation algorithm based on Fourier reconstruction, effectively solving the problem of accurate imaging of a spherical surface under a nonlinear radar trajectory. However, the original SGA was proposed only for focusing spotlight mode SAR data. Due to sampling ambiguity, it cannot be directly applied to other imaging modes, such as sliding spotlight and stripmap. This article first analyzes how the time–frequency relationship varies when the SGA processes sliding spotlight SAR data and shows the sampling ambiguity problems that arise during azimuth resampling and azimuth IFFT. Then, an effective solution is proposed to address both problems. To solve the undersampling problem during azimuth resampling, the variation of the instantaneous Doppler central frequency is removed through a phase compensation before azimuth resampling. This reduces the total bandwidth of the signal so that it satisfies the Nyquist sampling theorem, thereby ensuring that azimuth resampling yields a correct result. After azimuth resampling, the phase error induced by the phase compensation is eliminated through a phase correction. To solve the sampling ambiguity problem during azimuth IFFT, the sampling rate of the output signal is appropriately increased during azimuth resampling to avoid aliasing of scene edge targets in the focused image produced by the IFFT.

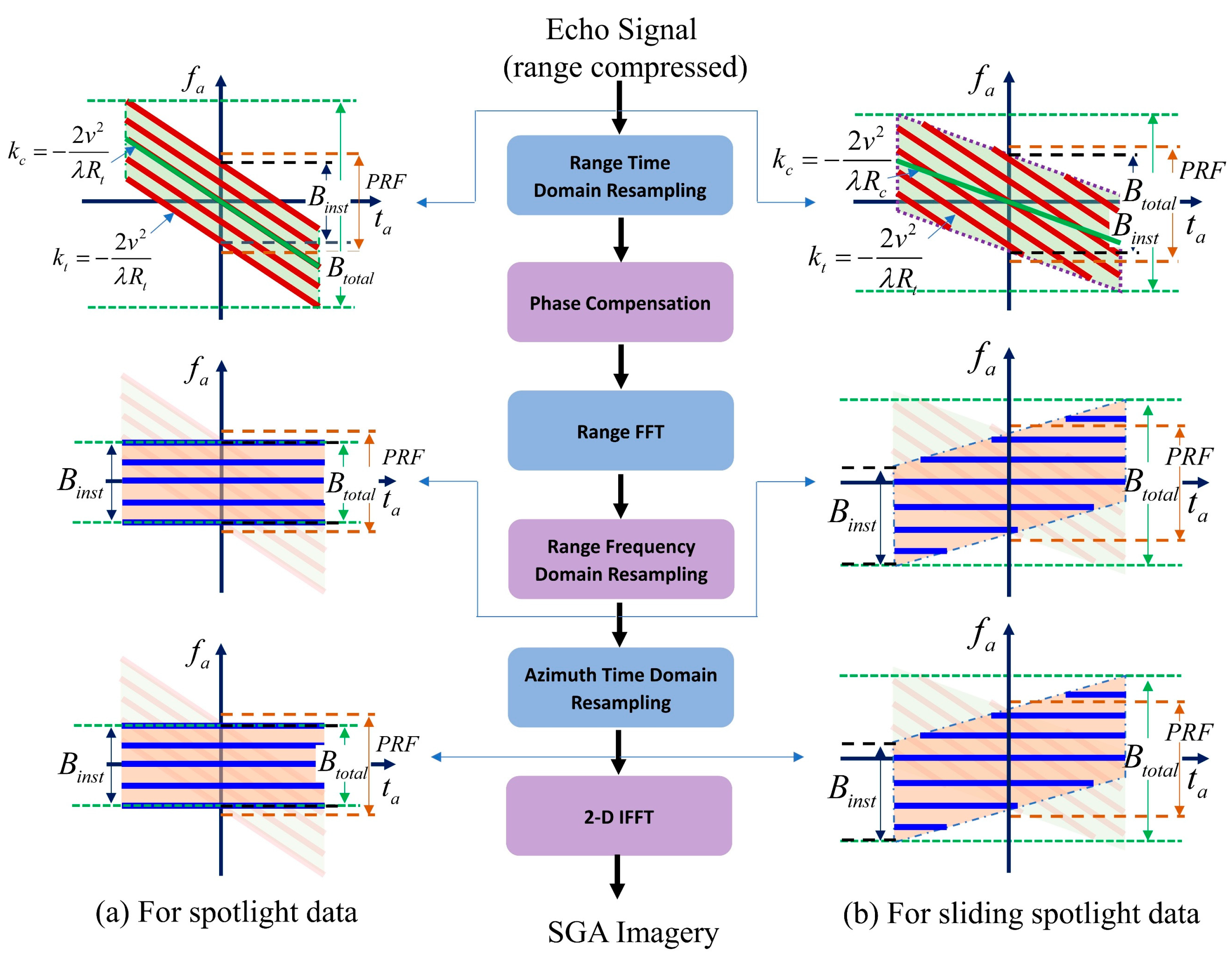

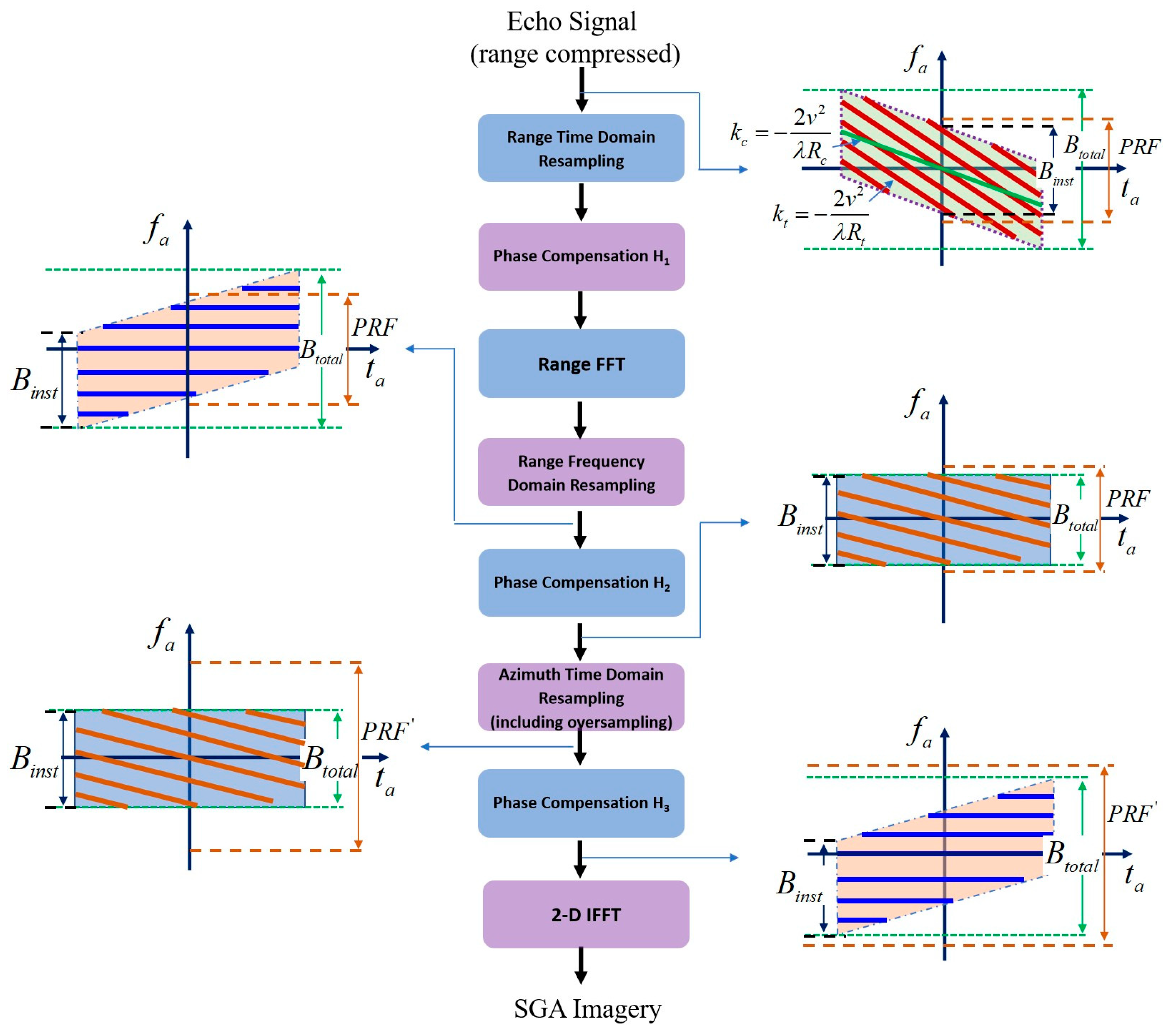

3. Modified Spherical Geometry Algorithm

To apply the SGA to sliding spotlight mode data, a modified SGA is proposed. Compared to the classical SGA, the modified algorithm differs mainly in the azimuth resampling and azimuth IFFT processing. The purpose of the modification is to prevent signal undersampling during these two steps. To solve the undersampling problem of the azimuth signal during azimuth resampling, the new algorithm adds a phase correction before the azimuth resampling to eliminate the variation of the instantaneous Doppler central frequency, so that the total signal bandwidth equals the instantaneous bandwidth and the PRF meets the sampling requirement. Azimuth resampling can then be performed correctly on the corrected data. However, after azimuth resampling, the azimuth signal is again an LFM signal because of the phase correction applied beforehand, so a direct IFFT cannot produce a focused image. To obtain a focused image, the induced phase error must be eliminated after azimuth resampling. This second correction has two opposing consequences. On the one hand, the signal is expected to become a single-frequency signal after the correction, so the following IFFT can produce a focused image. On the other hand, the instantaneous central frequency of the signal begins to vary linearly again and the total bandwidth increases, resulting in image folding after the IFFT for scatterers at the scene edge. To avoid image folding, the sampling rate can be appropriately increased during azimuth resampling to ensure that the signal satisfies the Nyquist sampling theorem. Based on the above solution, the processing flow of the proposed modified SGA is shown in Figure 3. The detailed analytical derivation of the algorithm is given below.
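The order of operations described above (remove the Doppler centroid variation, resample, then restore the phase on the denser output grid) can be illustrated with a small numerical experiment. The following is only a minimal sketch under stated assumptions, not the paper's processing chain: the toy signal model, all parameter values, and the helper names `fft_upsample` and `toy_signal` are hypothetical, and a plain uniform FFT upsampler stands in for the actual azimuth resampling.

```python
import numpy as np

def fft_upsample(x, factor):
    """Band-limited interpolation by zero-padding the centred spectrum."""
    n = x.size
    spectrum = np.fft.fftshift(np.fft.fft(x))
    left = (factor - 1) * n // 2
    right = (factor - 1) * n - left
    padded = np.pad(spectrum, (left, right))
    return np.fft.ifft(np.fft.ifftshift(padded)) * factor

def toy_signal(t, k, centers, tau):
    """Sum of tones; the tone of frequency k*tc is only illuminated around time tc."""
    return sum(np.exp(-0.5 * ((t - tc) / tau) ** 2) * np.exp(2j * np.pi * k * tc * t)
               for tc in centers)

# Hypothetical toy values (assumptions, not taken from the paper).
prf, n, factor = 1000.0, 2048, 2        # input sampling rate, samples, output oversampling
k = 1500.0                              # Doppler centroid rate in Hz/s
centers, tau = (-0.6, 0.0, 0.6), 0.05   # illumination centres and durations in s

t = (np.arange(n) - n // 2) / prf
t_fine = (np.arange(factor * n) - factor * n // 2) / (factor * prf)
s = toy_signal(t, k, centers, tau)           # total bandwidth exceeds the PRF
truth = toy_signal(t_fine, k, centers, tau)  # reference on the denser output grid

# Direct resampling: the edge tones (+-900 Hz) are aliased at PRF = 1000 Hz.
direct = fft_upsample(s, factor)

# Modified order: dechirp, resample, then restore the phase on the new grid.
dechirped = s * np.exp(-1j * np.pi * k * t ** 2)
restored = fft_upsample(dechirped, factor) * np.exp(1j * np.pi * k * t_fine ** 2)

def relative_error(estimate):
    return np.linalg.norm(estimate - truth) / np.linalg.norm(truth)

err_direct = relative_error(direct)
err_modified = relative_error(restored)
print(f"direct: {err_direct:.3f}, dechirp-resample-rechirp: {err_modified:.2e}")
```

In this toy setup the direct interpolation is dominated by the aliased edge tones, whereas the dechirped signal fits within the PRF and is interpolated accurately; the doubled output rate plays the role of the increased sampling rate that keeps the restored signal free of aliasing.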

The first step of modified SGA is also range preprocessing, including range resampling, phase compensation, and range Fourier transform. The range resampling is actually to achieve a change of variable in the range time domain as follows:

where

is the new time variable.

After range time domain resampling, the transformed signal can be represented as

where

,

.

The second step is phase correction, which multiplies the signal with a phase correction function:

where

.

After phase compensation, the signal becomes

Then, performing a range FFT results in

Inserting

into Equation (7), we obtain

Now, the signal is a 2-D discrete sample of the spectrum of the target function, but in polar format. In order to implement fast Fourier reconstruction using IFFT, it is necessary to convert polar format sampling data into rectangular format sampling data. The process of polar format conversion is actually a 2-D signal decoupling process, usually achieved through two 1-D resamplings in range and azimuth, respectively. The range resampling is essentially a scaling transformation of the range frequency as follows:

where

.

After range resampling, the signal becomes

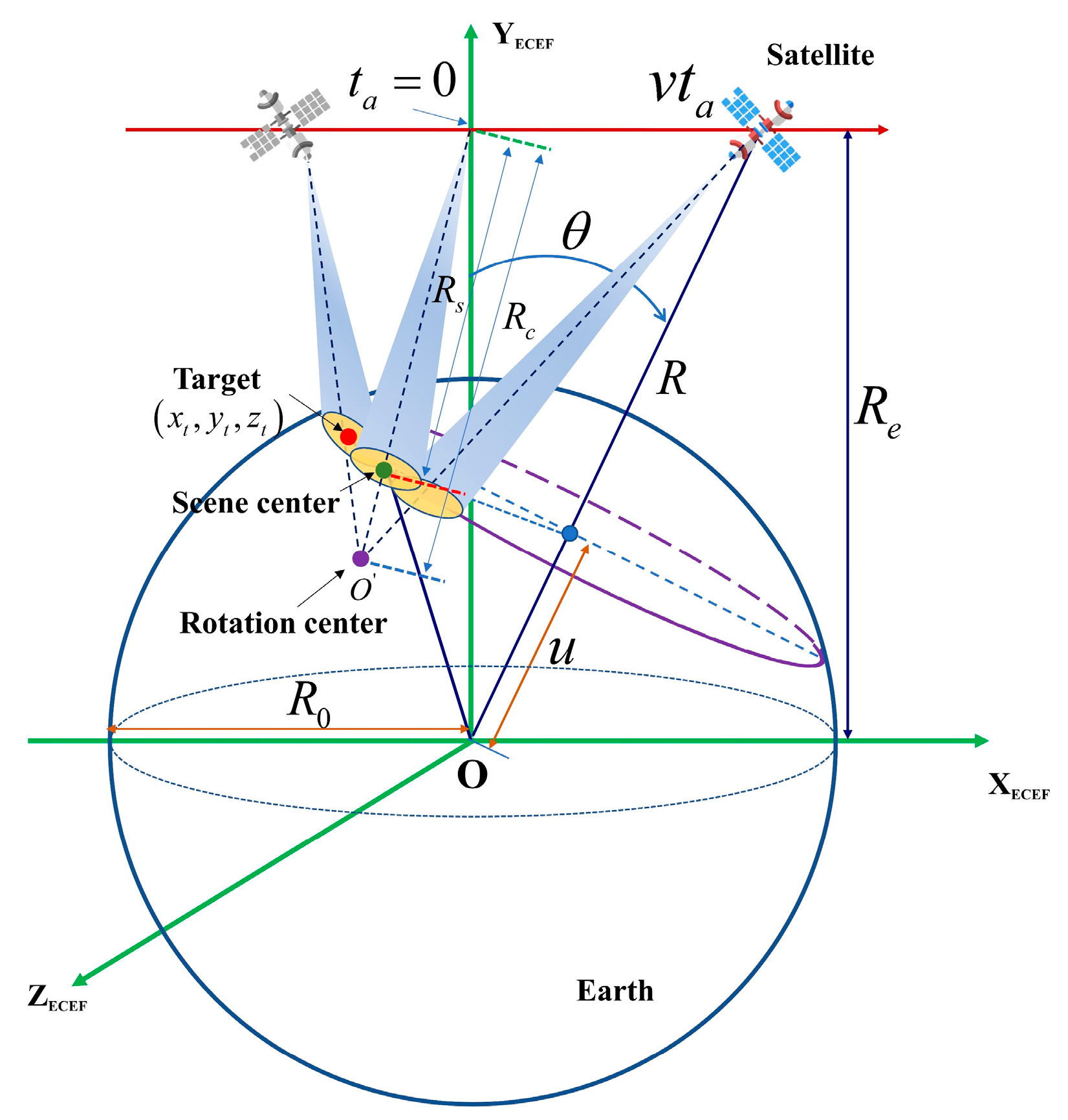

From the data collection geometry shown in Figure 1, it is easy to calculate that

. Therefore, Equation (10) can also be rewritten as

In standard SGA processing for spotlight mode data, the next step is an azimuth resampling. However, for the sliding spotlight mode, due to the residual variation of the instantaneous Doppler central frequency, the signal at this point often does not satisfy the Nyquist sampling theorem. In order to ensure that azimuthal resampling yields correct results, it is necessary to compensate for the variation of instantaneous Doppler central frequency, which can be implemented by multiplying the signal with the following phase correction function.

After this correction, the signal becomes

Now, the signal satisfies the Nyquist sampling theorem and can be resampled in the azimuth direction. The azimuth resampling is mathematically a keystone transform as follows:

Therefore, after azimuth resampling, the signal becomes

where

.
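As a generic illustration of what a keystone-type azimuth resampling does, the sketch below applies the textbook substitution t = f_c / (f_c + f_r) · τ to a toy signal whose azimuth frequency depends on the range frequency. This form of the mapping, the sinc interpolator, and all names and parameter values (`keystone`, `azimuth_frequency`, the carrier, the velocity) are assumptions made for illustration; the exact mapping used by the modified SGA is the one defined by the paper's own equation.

```python
import numpy as np

C = 3.0e8  # speed of light in m/s

def keystone(data, f_r, t_a, f_c):
    """Resample every range-frequency row so that t_a = f_c / (f_c + f_r) * tau."""
    dt = t_a[1] - t_a[0]
    out = np.empty_like(data)
    for i, f in enumerate(f_r):
        # Positions on the old time axis that correspond to the new grid tau = t_a.
        t_query = f_c / (f_c + f) * t_a
        # Band-limited (sinc) interpolation kernel, shape (n_query, n_samples).
        kernel = np.sinc((t_query[:, None] - t_a[None, :]) / dt)
        out[i] = kernel @ data[i]
    return out

def azimuth_frequency(rows, dt):
    """Pulse-pair frequency estimate per row, using the central half of the samples."""
    n = rows.shape[1]
    core = rows[:, n // 4: 3 * n // 4]
    pulse_pair = np.sum(core[:, 1:] * np.conj(core[:, :-1]), axis=1)
    return np.angle(pulse_pair) / (2 * np.pi * dt)

# Hypothetical toy values (assumptions, not taken from the paper).
f_c, prf, n_az = 10.0e9, 1000.0, 512
f_r = np.linspace(-1.0e9, 1.0e9, 9)         # range-frequency offsets in Hz
t_a = (np.arange(n_az) - n_az // 2) / prf   # azimuth time in s
v = 1.5                                     # radial velocity in m/s

# Scatterer with linear range walk: azimuth frequency scales with (f_c + f_r).
data = np.exp(-4j * np.pi * (f_c + f_r[:, None]) * v * t_a[None, :] / C)

before = azimuth_frequency(data, 1.0 / prf)
after = azimuth_frequency(keystone(data, f_r, t_a, f_c), 1.0 / prf)
spread_before = before.max() - before.min()
spread_after = after.max() - after.min()
print(f"azimuth frequency spread: {spread_before:.2f} Hz -> {spread_after:.4f} Hz")
```

After the resampling, every range-frequency row carries the same azimuth frequency, which is the decoupling that a subsequent IFFT relies on. The interpolation is only valid if each row is adequately sampled, which is why the preceding phase correction is needed in sliding spotlight mode.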

The multiplication of phase function

before azimuth resampling ensures the correct implementation of azimuth resampling on one hand, but also introduces an additional phase error on the other hand. To eliminate its adverse effect, it is necessary to multiply the resampled signal by the following correction function:

After this correction, the signal becomes

At this point, the signal is completely 2-D decoupled and becomes a sinusoidal signal in both dimensions, with frequency linearly related to the target’s position. Therefore, a final 2-D IFFT can produce focused imagery. However, from the time–frequency diagram at this point in Figure 3, it is clear that the total azimuth bandwidth is still greater than the instantaneous bandwidth. To avoid image folding, the sampling rate can be appropriately increased during azimuth resampling. The oversampling factor is determined by the ratio of the total bandwidth to the instantaneous bandwidth. Since each target at different azimuth positions corresponds to a distinct azimuth frequency, the total azimuth bandwidth depends on the entire scene width, while the instantaneous bandwidth is determined by the scene width illuminated by the instantaneous azimuth beamwidth. Consequently, the ratio of the total signal bandwidth to the instantaneous bandwidth equals the ratio of the total scene width to the instantaneous beam coverage width. For example, if the scene width covered by the instantaneous azimuth beamwidth is

, and the total scene width scanned by the beam during the coherent integration time is

, the oversampling factor for the azimuth interpolation is

.
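The ratio described above is simple to evaluate. The following sketch assumes hypothetical function names and example widths (none of them come from the paper), and it clamps the factor to at least 1 as an additional assumption for the case where the scene is no wider than the beam footprint.

```python
import math

def azimuth_oversampling_factor(total_scene_width, beam_coverage_width):
    """Ratio of total scene width to instantaneous beam coverage width."""
    if total_scene_width <= 0 or beam_coverage_width <= 0:
        raise ValueError("widths must be positive")
    # Assumption: never go below 1, i.e. never undersample relative to the input.
    return max(1.0, total_scene_width / beam_coverage_width)

def resampled_length(n_input, factor):
    """Number of azimuth output samples after applying the oversampling factor."""
    return math.ceil(n_input * factor)

# Hypothetical example: the beam covers 5 km, the scanned scene is 8 km wide.
example_factor = azimuth_oversampling_factor(8000.0, 5000.0)
example_length = resampled_length(4096, example_factor)
print(example_factor, example_length)
```

With these example numbers the azimuth interpolation would output about 1.6 times as many samples as it receives, which is the source of the extra computational load in the steps that follow it.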

Now, a 2-D IFFT can produce unfolded imagery. Firstly, by performing an azimuth IFFT on Equation (17), we obtain

Then, performing a range IFFT results in the following 2-D focused imagery:

If we define

,

, Equation (19) can also be expressed as

Now, the target is accurately focused at its true position in the orbit plane.

Next, we evaluate the computational performance of the modified algorithm. Both the standard SGA and the modified SGA involve only three types of operations: complex multiplication, interpolation, and FFT/IFFT. Specifically, the original SGA requires one complex multiplication, three interpolation operations, and three 1-D FFT/IFFT operations, while the modified algorithm requires three complex multiplications, three interpolation operations, and three 1-D FFT/IFFT operations. Consequently, the modified algorithm introduces two additional complex multiplications compared to the original algorithm. Furthermore, azimuth resampling requires a slightly higher output sampling rate, which enlarges the data volume and therefore increases the computational load of the azimuth interpolation and of the subsequent phase compensation and azimuth IFFT steps.

4. Experimental Results and Discussion

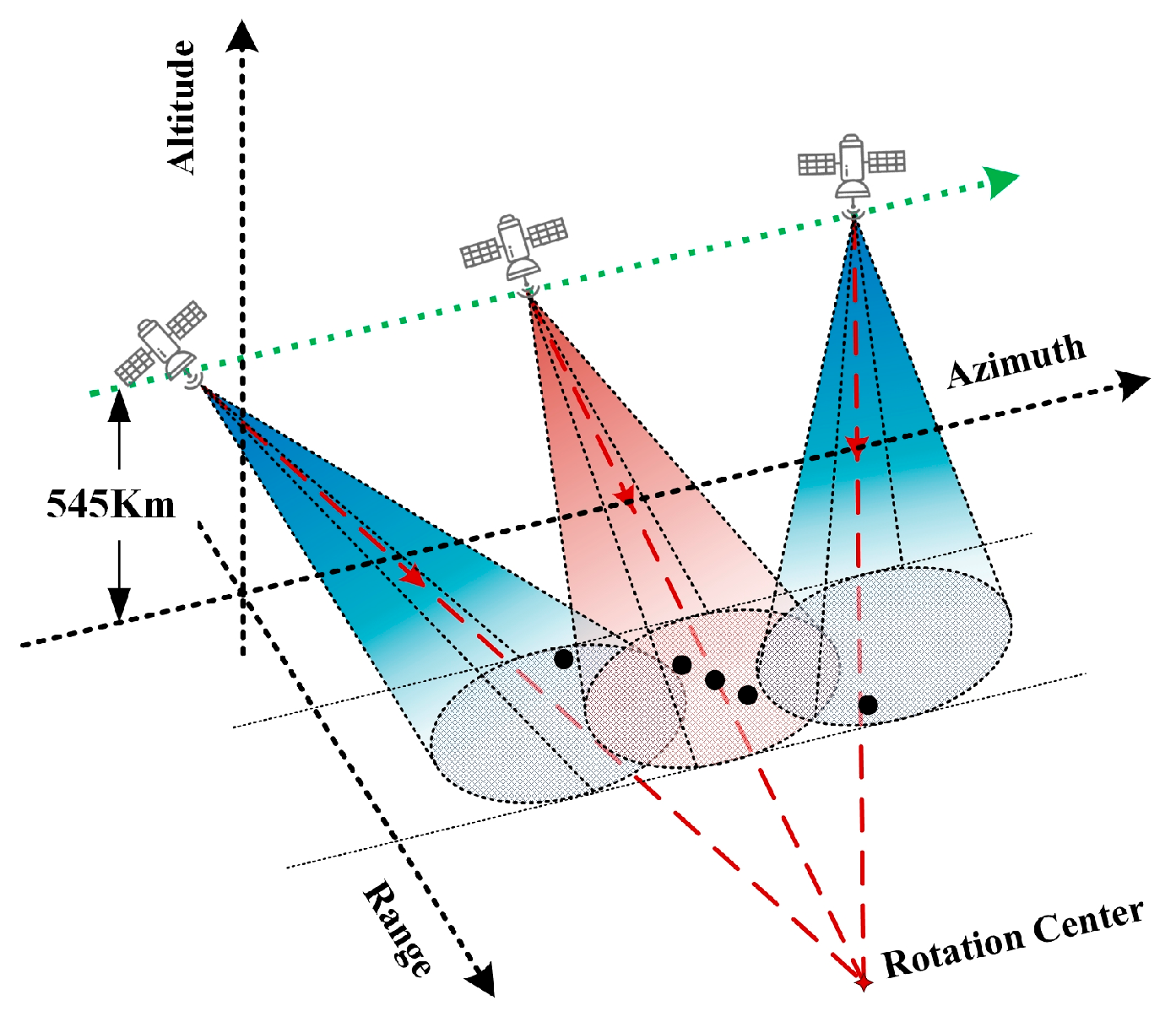

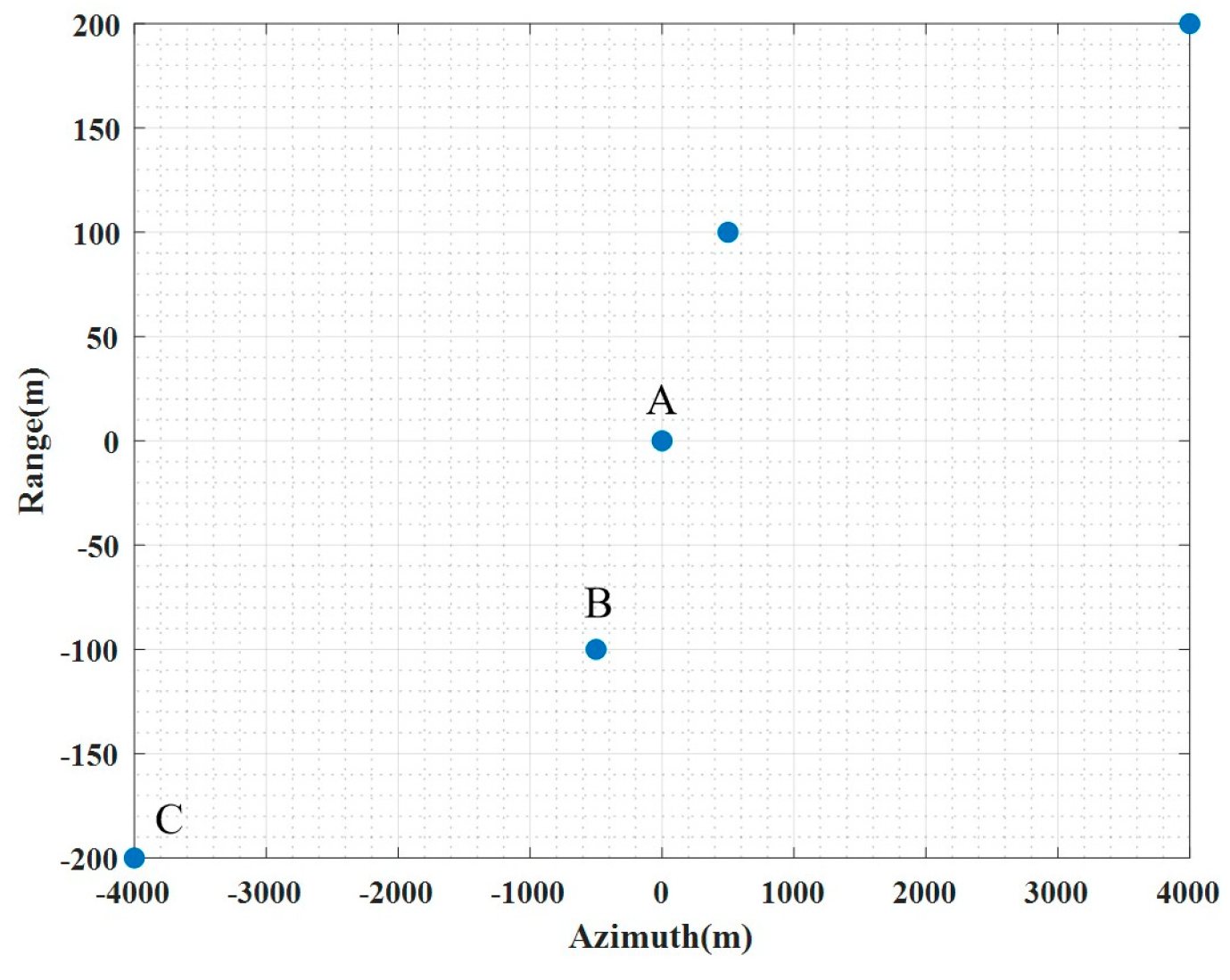

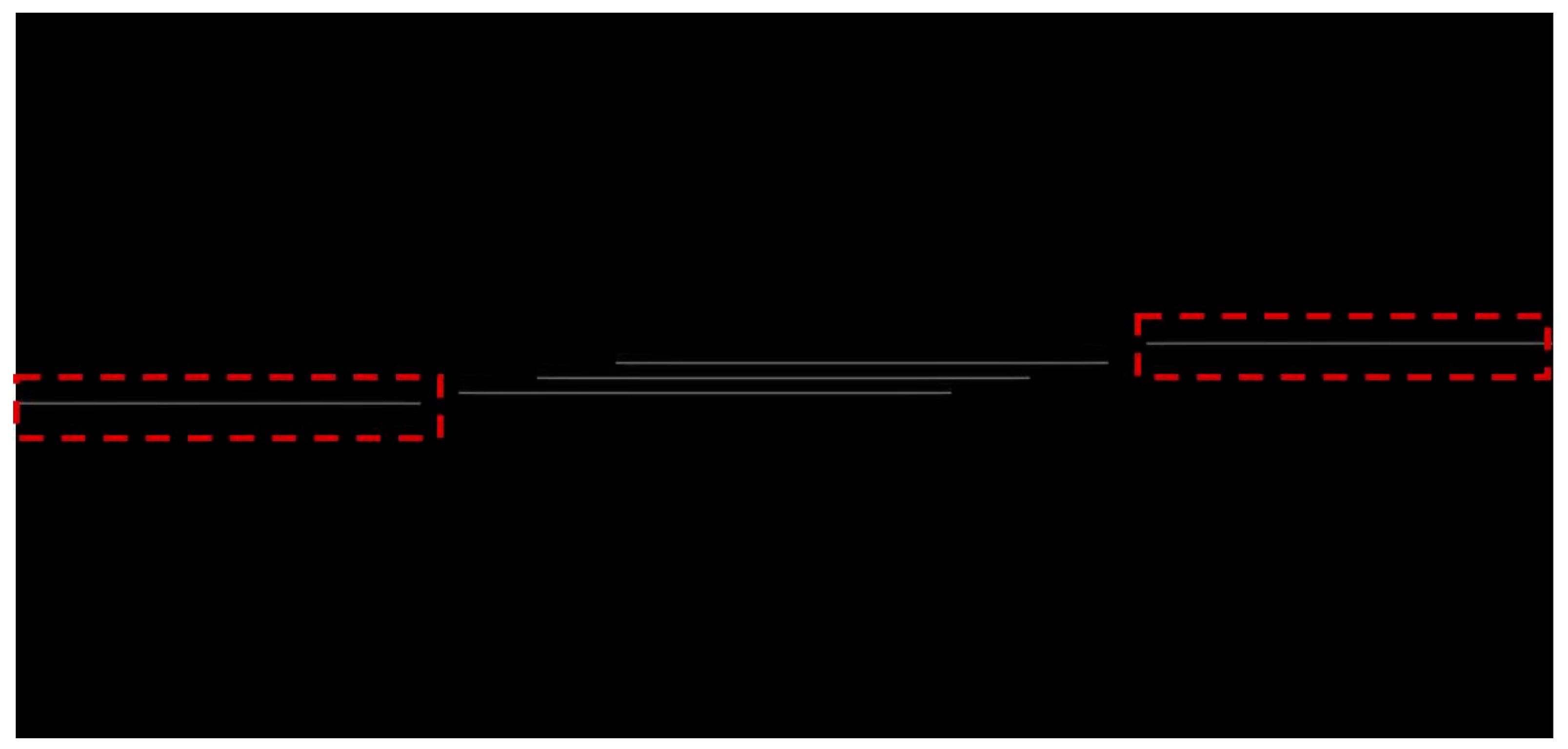

To validate the performance of the algorithm, both simulation and real data processing are carried out. In the simulation experiment, the imaging geometry is shown in Figure 4. The radar operates in sliding spotlight mode, with a scene center range of 600 km and a rotation center range of 900 km. The main radar parameters are listed in Table 1. Five point targets at different positions were placed within the imaging scene, as shown in Figure 5.

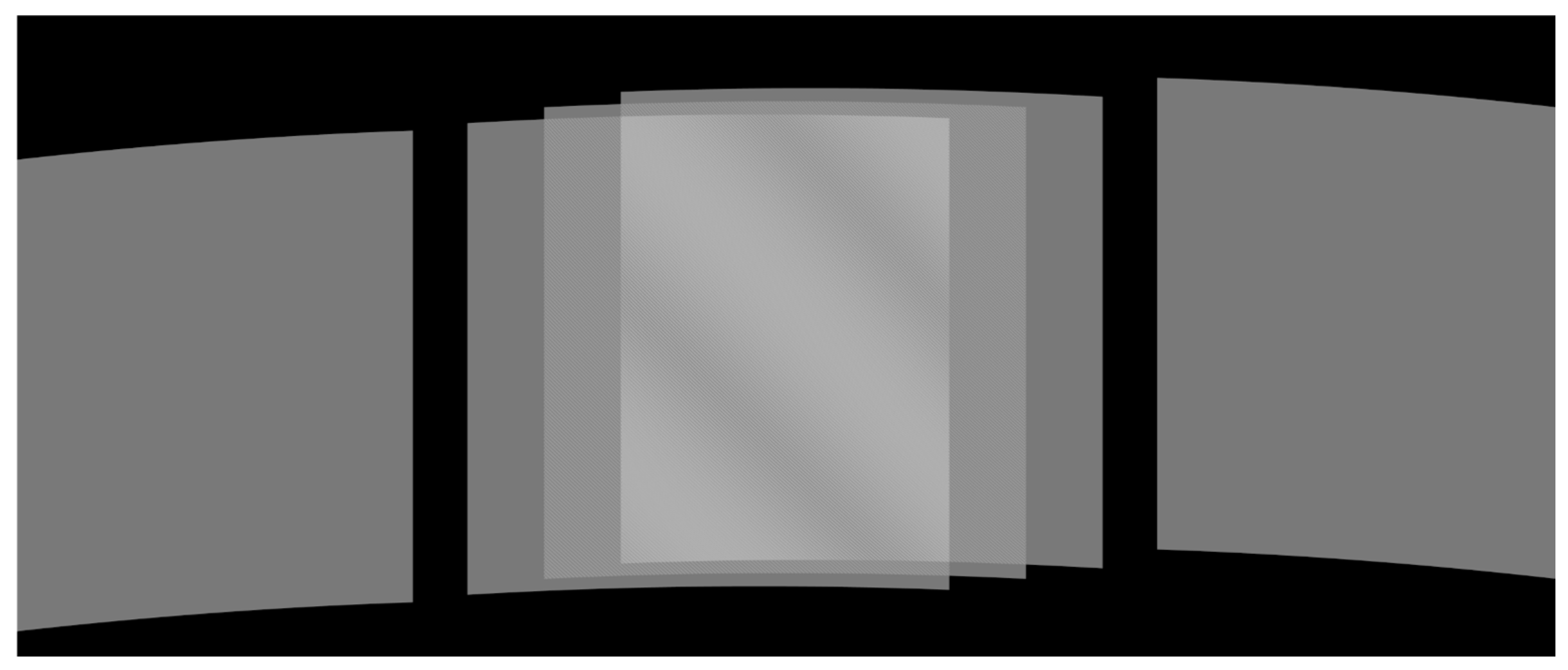

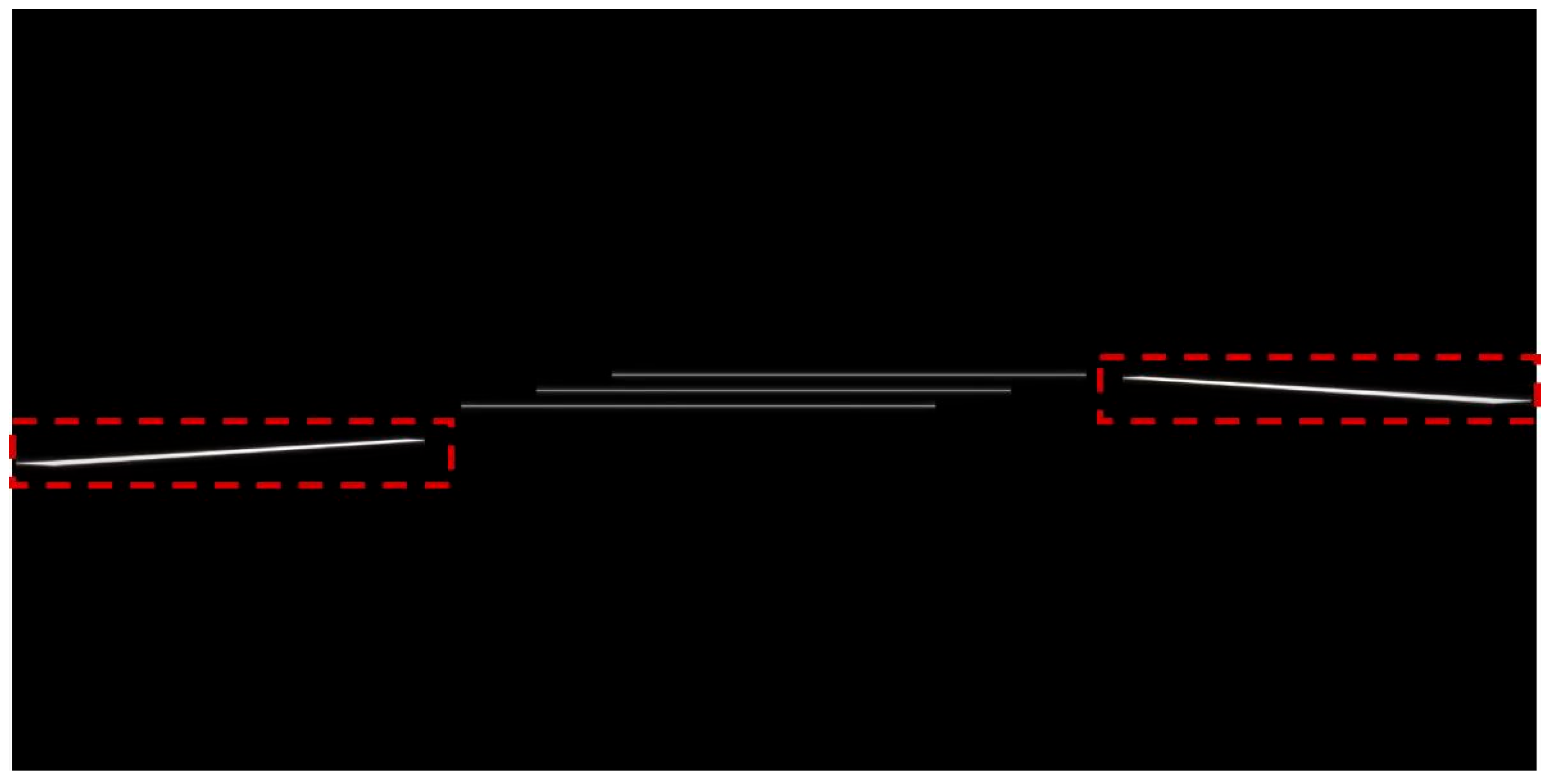

The simulated raw data are shown in Figure 6. The data are first processed by the standard SGA, and the resulting images are shown in Figure 7 and Figure 8, where Figure 7 is the range-compressed image and Figure 8 is the fully compressed image. As can be seen from the figures, only the targets near the center of the scene are accurately focused, while the targets at the azimuth edges of the scene are both severely defocused in two dimensions and aliased in position. This is because the signals of targets located at the azimuth edges of the scene are undersampled during both azimuth interpolation and azimuth IFFT processing. The undersampling during azimuth interpolation prevents complete correction of the range migration, resulting in 2-D defocusing in the final image, while the undersampling during azimuth IFFT causes aliasing of the target’s azimuth position.

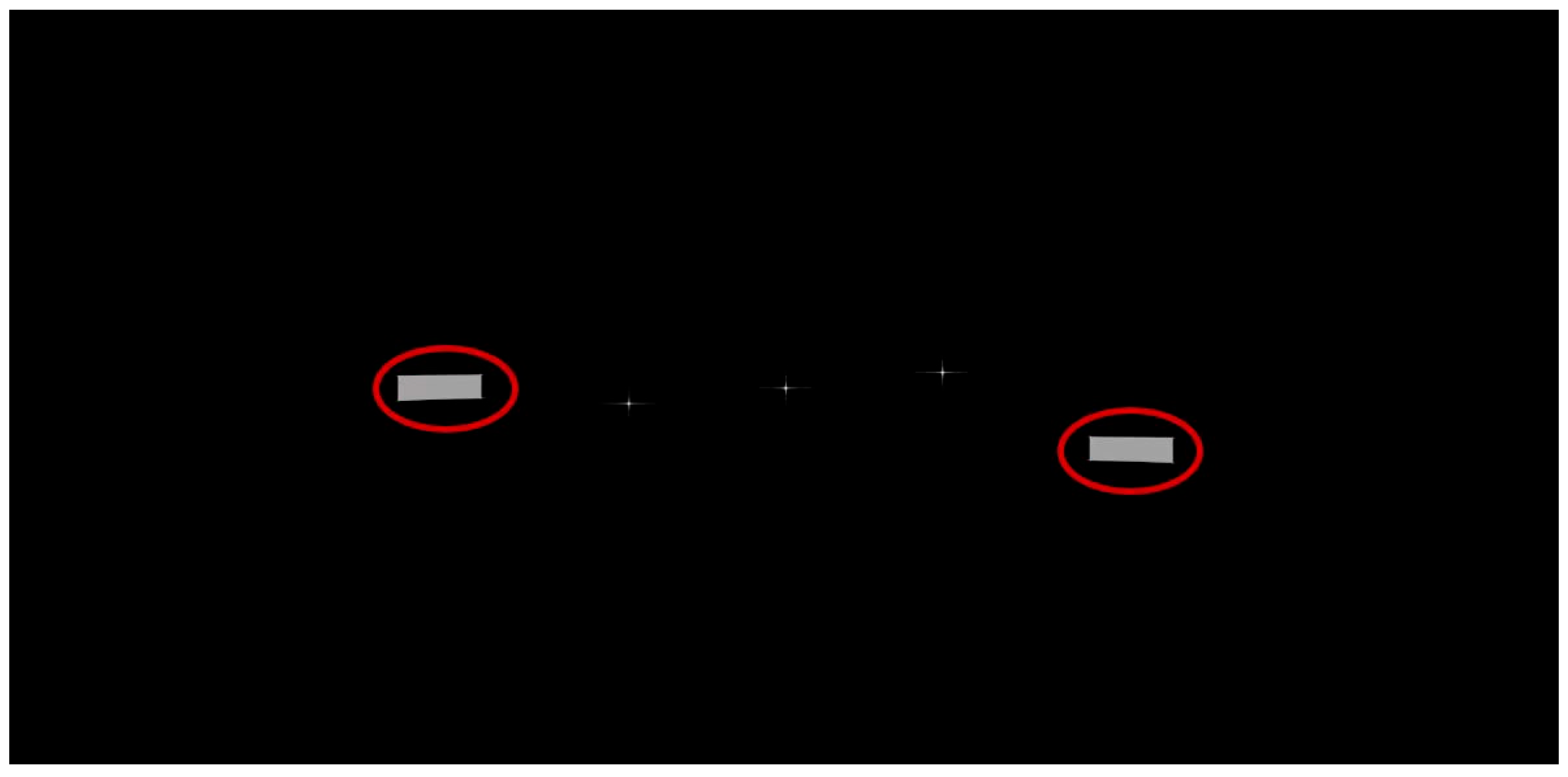

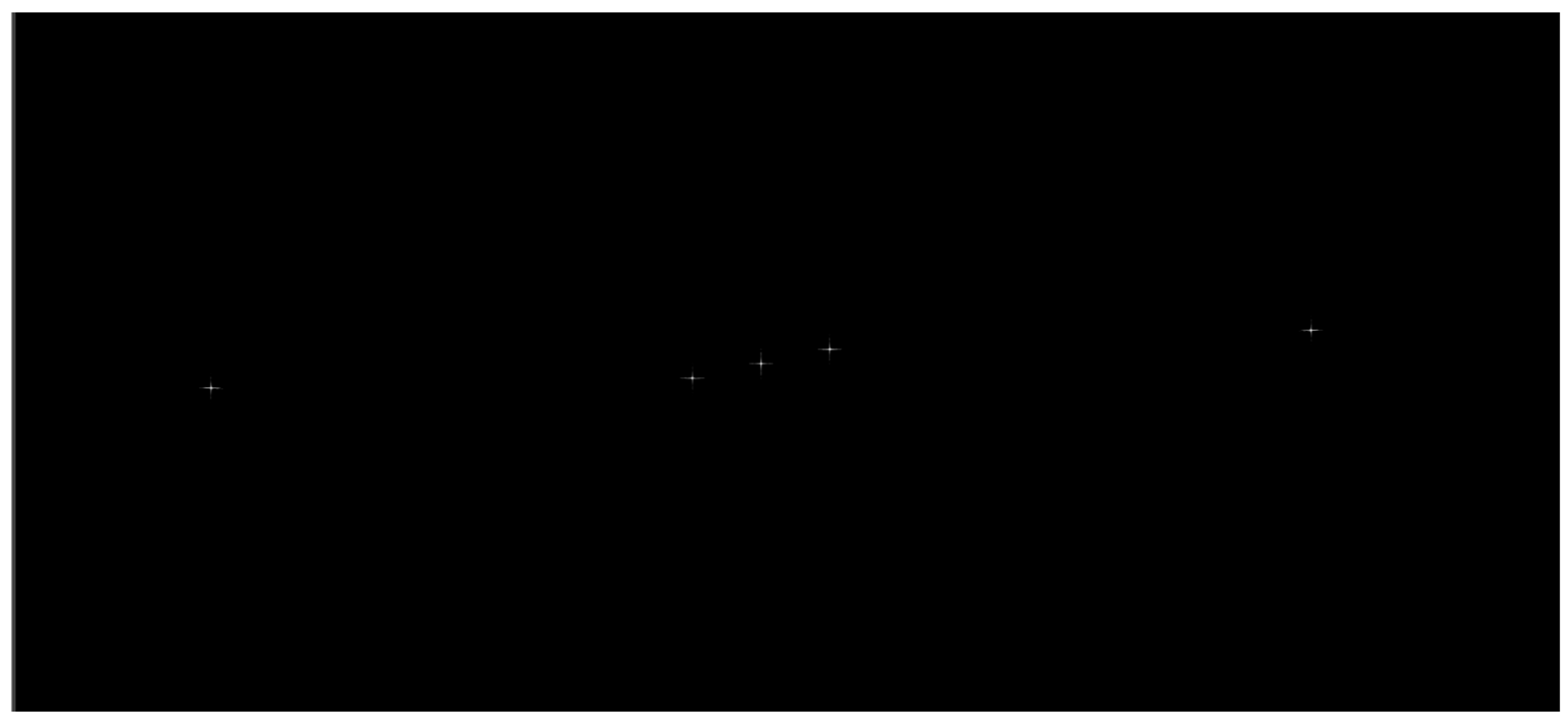

Finally, the data are processed using the modified SGA, and the results are shown in Figure 9 and Figure 10, where Figure 9 is the range-compressed image and Figure 10 is the fully compressed image. Figure 9 shows that the range migration of the scatterers located at the scene edge is now completely eliminated. As a result, these point targets are also well focused, as shown in Figure 10.

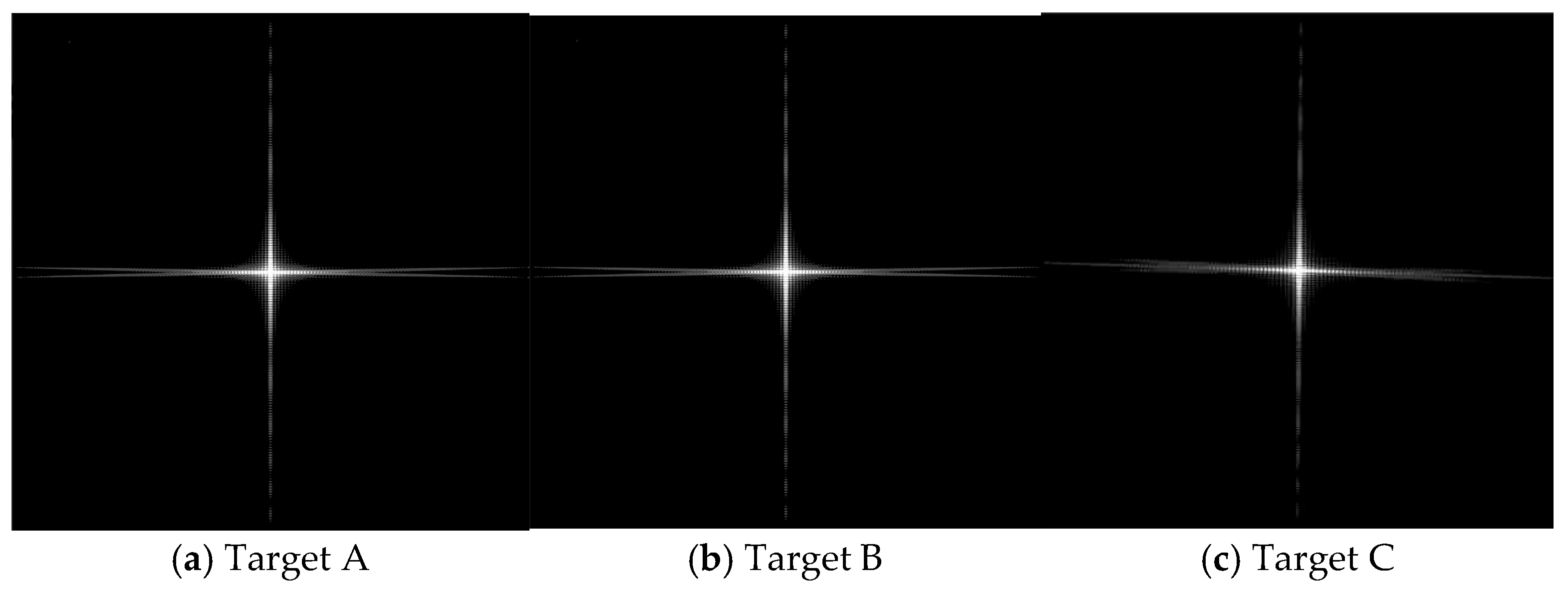

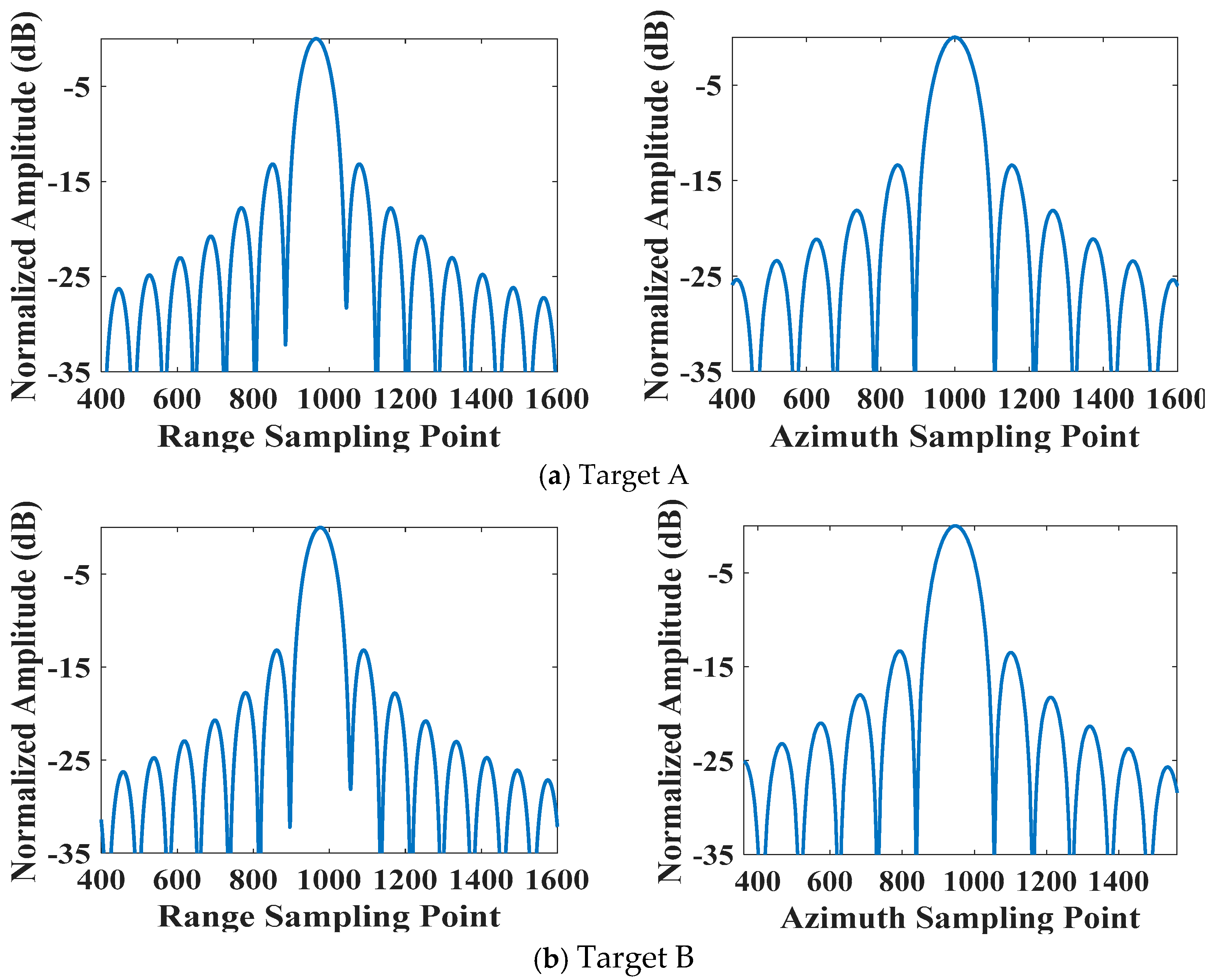

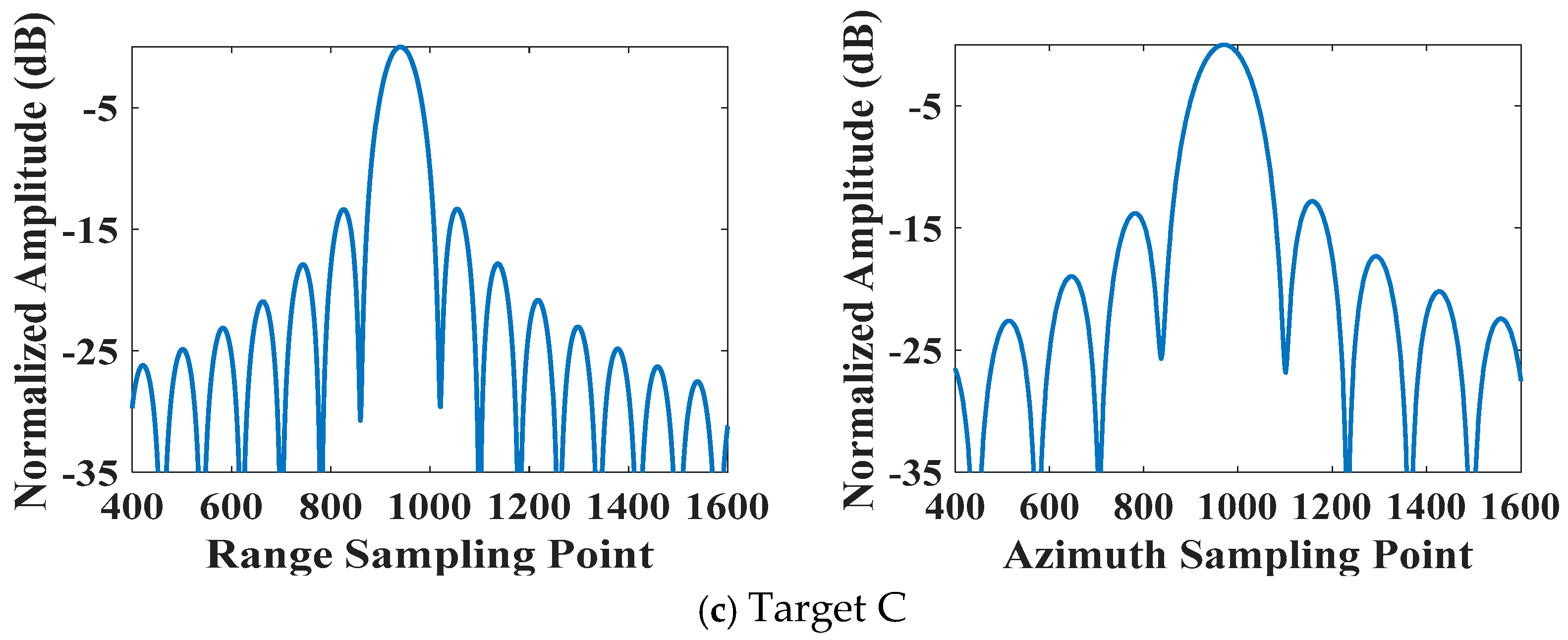

To evaluate the focusing accuracy, point target analysis is performed for the three targets A, B, and C in the modified SGA image. The 2-D impulse response functions (IRFs) are shown in Figure 11, and the range and azimuth profiles are given in Figure 12. To quantitatively evaluate the focusing performance, the image quality parameters of these IRFs, including the impulse response width (IRW), peak sidelobe ratio (PSLR), and integrated sidelobe ratio (ISLR), are listed in Table 2. These metrics demonstrate that all targets have been accurately focused. The resolution of Target C is slightly inferior to that of the other targets, which is attributed to its relatively shorter illumination time.
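For readers who want to reproduce this kind of point target analysis, the sketch below measures IRW, PSLR, and ISLR on a 1-D profile. It is a minimal illustration under common conventions that are assumed here rather than taken from the paper: the IRW is the -3 dB width of the power profile, the main lobe extends from null to null, and the function name `point_target_metrics` is hypothetical. The profile is expected to be oversampled and to contain sidelobes on both sides of the peak.

```python
import numpy as np

def point_target_metrics(profile, sample_spacing=1.0):
    """Return (IRW, PSLR in dB, ISLR in dB) of an oversampled 1-D impulse response."""
    p = np.abs(np.asarray(profile)) ** 2
    k = int(np.argmax(p))

    # Walk outwards from the peak to the first nulls (local minima).
    left = k
    while left > 0 and p[left - 1] < p[left]:
        left -= 1
    right = k
    while right < p.size - 1 and p[right + 1] < p[right]:
        right += 1
    if left == 0 or right == p.size - 1:
        raise ValueError("profile must contain sidelobes on both sides of the peak")

    main_energy = p[left:right + 1].sum()
    side_energy = p.sum() - main_energy
    peak_sidelobe = max(p[:left].max(), p[right + 1:].max())
    pslr_db = 10.0 * np.log10(peak_sidelobe / p[k])
    islr_db = 10.0 * np.log10(side_energy / main_energy)

    # -3 dB width from linearly interpolated half-power crossings.
    half = 0.5 * p[k]
    i = k
    while p[i] > half:
        i -= 1
    x_left = i + (half - p[i]) / (p[i + 1] - p[i])
    j = k
    while p[j] > half:
        j += 1
    x_right = j - (half - p[j]) / (p[j - 1] - p[j])
    irw = (x_right - x_left) * sample_spacing
    return irw, pslr_db, islr_db

# Check against an ideal, unweighted sinc response sampled 16 times per resolution cell.
x = np.arange(-64, 64, 1 / 16)
irw, pslr_db, islr_db = point_target_metrics(np.sinc(x), sample_spacing=1 / 16)
print(f"IRW = {irw:.3f} cells, PSLR = {pslr_db:.2f} dB, ISLR = {islr_db:.2f} dB")
```

For an unweighted sinc response the expected values are an IRW of about 0.886 resolution cells and a PSLR of about -13.26 dB, which gives a reference point when judging measured values. ISLR definitions differ between authors in how the main lobe is delimited, so absolute ISLR numbers are only comparable under the same convention.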

The proposed algorithm is also validated using measured spaceborne SAR data. The data were collected by a LEO SAR satellite with an orbit height of 540 km. During this data collection, the radar operated in sliding spotlight mode. The main orbit and radar parameters are shown in Table 3.

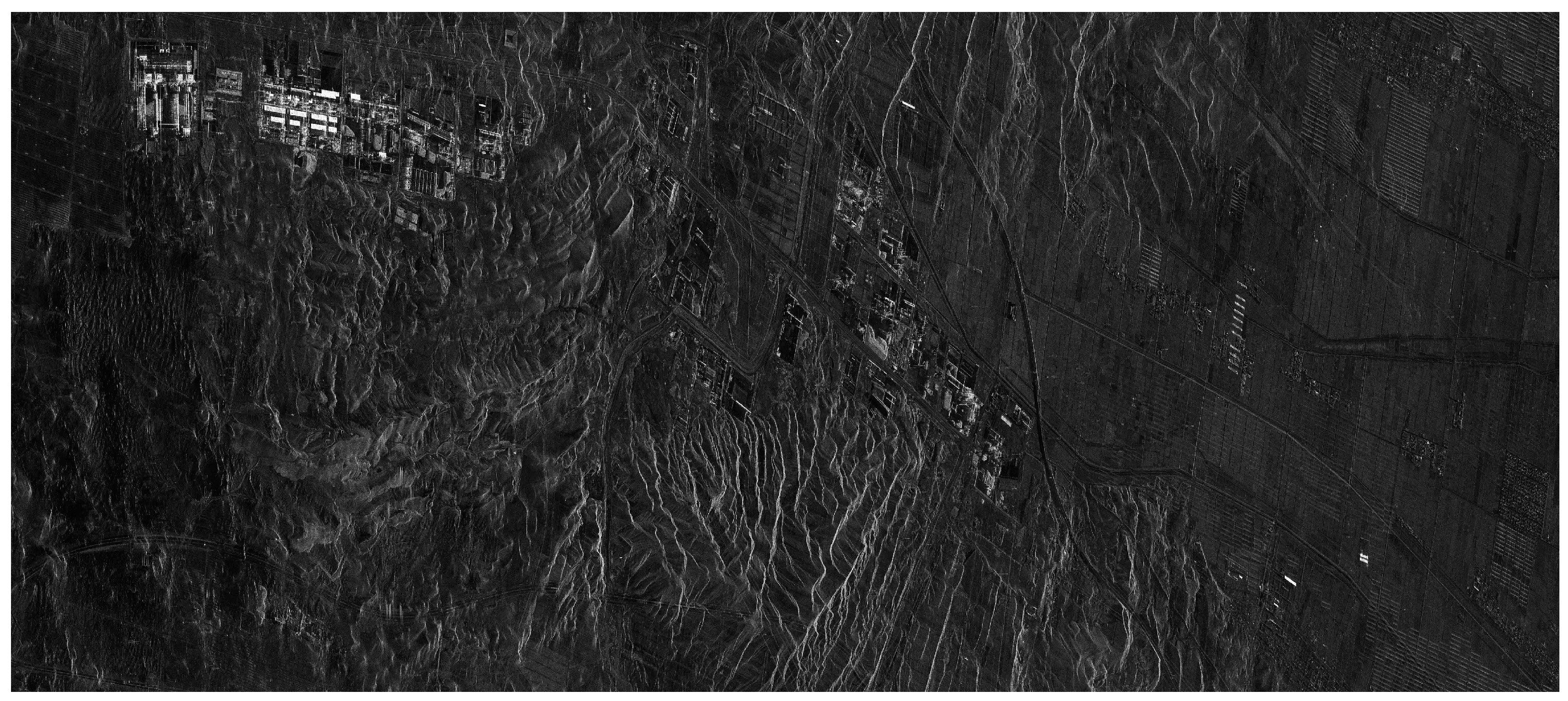

First, the data are processed by the classical SGA, and the result is presented in Figure 13. As can be seen from the figure, the scatterers near the scene center are all well focused and accurately located at their true positions. The scatterers at the azimuth edges of the scene, however, are aliased in the produced image and also suffer from defocus due to residual linear range migration, which is not corrected during azimuth resampling.

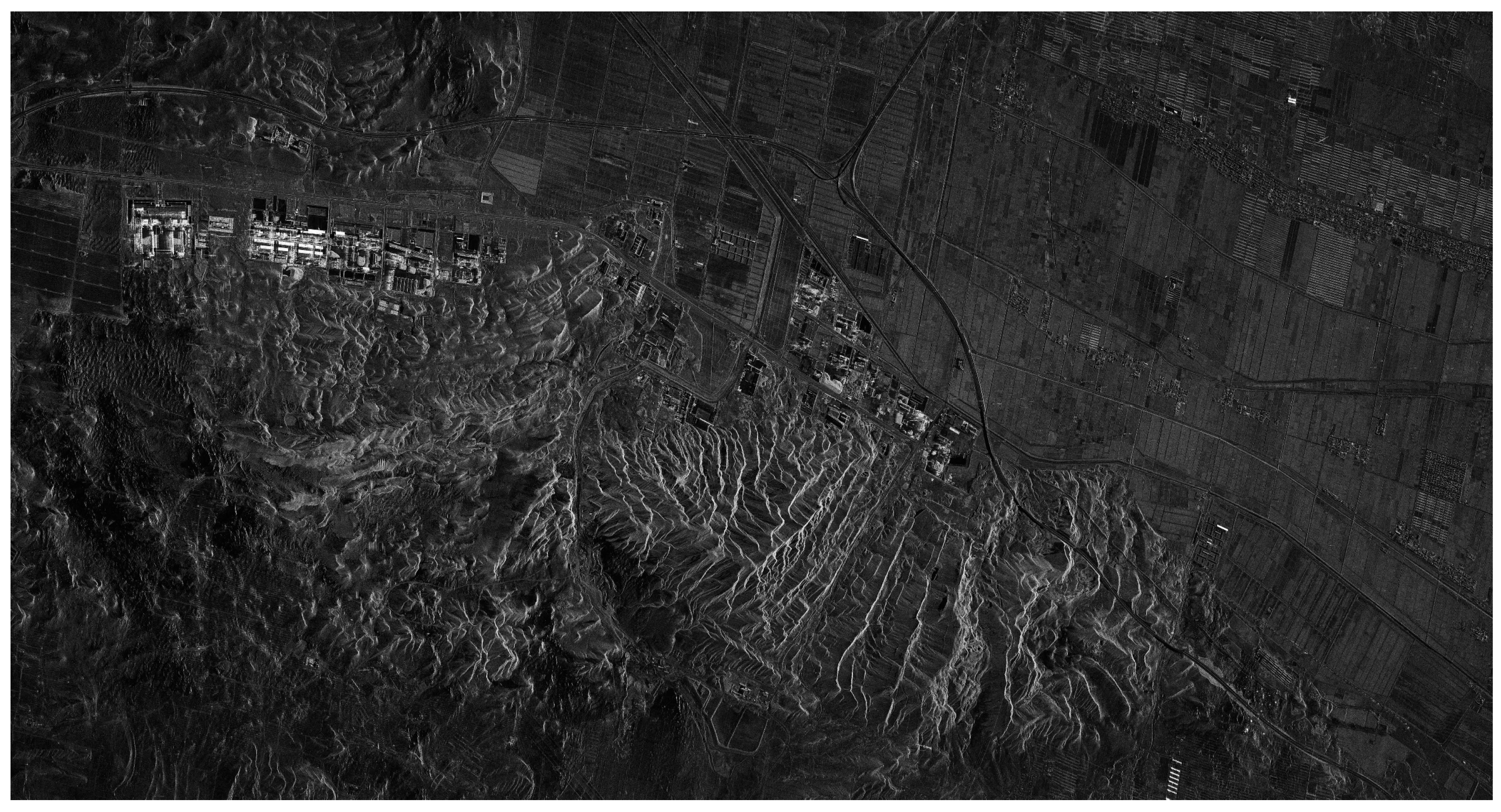

Then, the data are also processed by the proposed algorithm, and the result is shown in Figure 14. It can be seen that all scatterers in the scene have been accurately focused and located at their true positions.

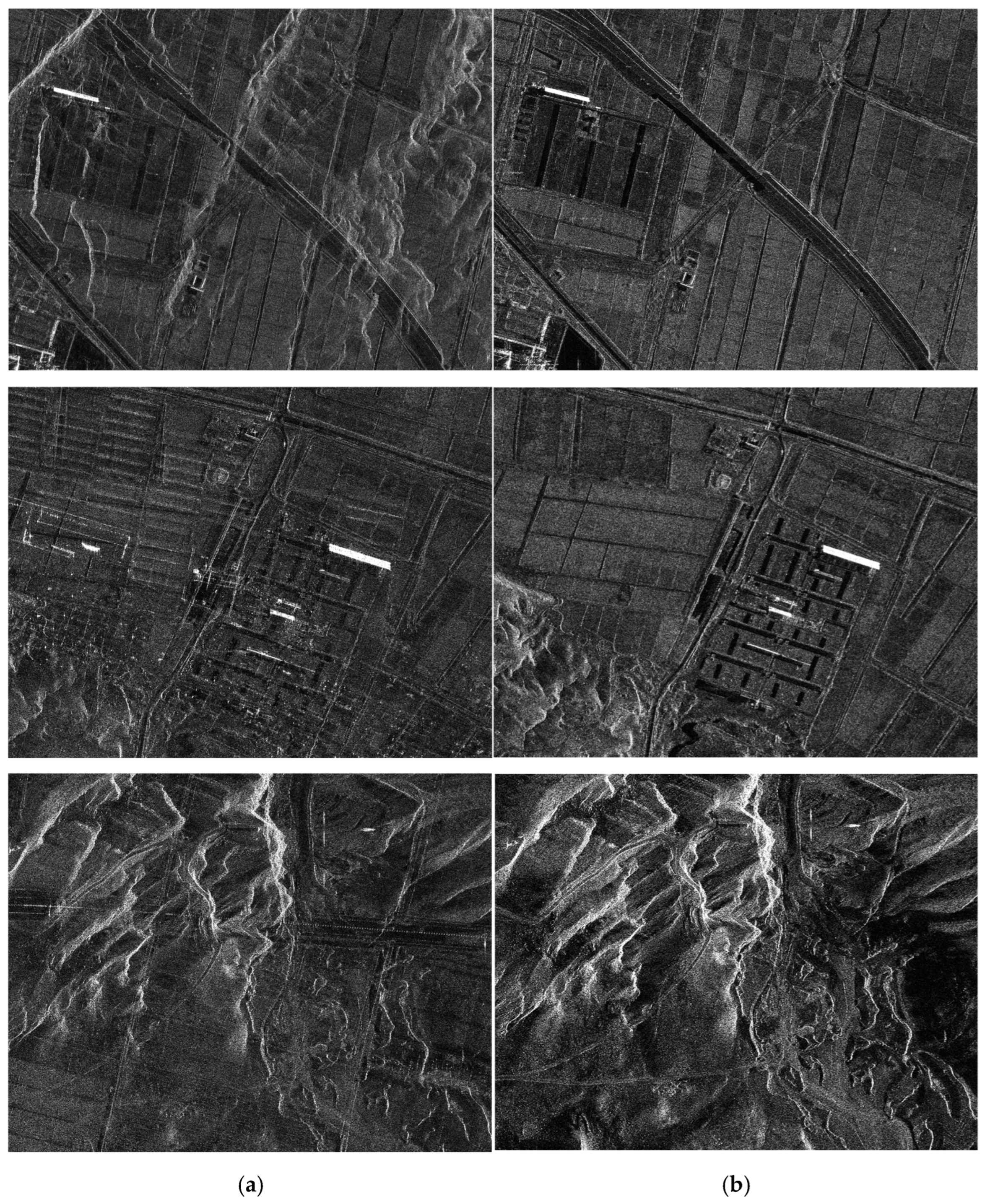

To show the performance improvement more clearly, local subimages of the same area in both figures are presented in Figure 15, where Figure 15a shows the subimages from Figure 13, and Figure 15b shows the corresponding subimages from Figure 14. The comparison clearly shows that the aliasing present in the standard SGA image is completely eliminated in the modified SGA image.

To quantitatively assess the focusing performance of both algorithms, the measured image contrast and image entropy of Figure 13 and Figure 14 are shown in Table 4. The imagery produced by the proposed approach has a higher contrast and a lower entropy, which indicates that the modified SGA produces imagery with better focus quality.
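The two measures can be computed in a few lines. The source does not state which definitions it uses, so the sketch below assumes two widely used ones: contrast as the standard deviation of the pixel intensity divided by its mean, and entropy as the Shannon entropy of the normalized intensity. The function names and the synthetic test images are hypothetical.

```python
import numpy as np

def image_contrast(image):
    """Standard deviation of pixel intensity divided by its mean (assumed definition)."""
    intensity = np.abs(image) ** 2
    return float(intensity.std() / intensity.mean())

def image_entropy(image):
    """Shannon entropy of the normalized pixel intensity (assumed definition)."""
    intensity = np.abs(image) ** 2
    p = intensity / intensity.sum()
    p = p[p > 0]  # skip empty pixels, since 0 * log(0) is taken as 0
    return float(-(p * np.log(p)).sum())

# Synthetic example: one point scatterer, sharply focused versus smeared over 5 x 5 pixels.
focused = np.zeros((32, 32), dtype=complex)
focused[16, 16] = 1.0
defocused = np.zeros((32, 32), dtype=complex)
defocused[14:19, 14:19] = 1.0 / 5.0  # same total energy spread over 25 pixels

contrast_focused, contrast_defocused = image_contrast(focused), image_contrast(defocused)
entropy_focused, entropy_defocused = image_entropy(focused), image_entropy(defocused)
print(contrast_focused, contrast_defocused, entropy_focused, entropy_defocused)
```

Under these definitions, concentrating the same energy into fewer pixels raises the contrast and lowers the entropy, which is why a higher contrast and a lower entropy are read as better focus.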