Abstract

This paper addresses the limitations of conventional remote sensing data dissemination algorithms, particularly their inability to model fine-grained correlations among heterogeneous multi-modal features and to adapt to dynamic network topologies under resource constraints. We propose multi-modal-MAPPO, a novel multi-modal deep reinforcement learning (MDRL) framework designed for proactive data push in large-scale integrated satellite–terrestrial networks (ISTNs). By integrating satellite cache states, user cache states, and multi-modal data attributes (including imagery, metadata, and temporal request patterns) into a unified Markov decision process (MDP), our approach pioneers the application of the multi-agent proximal policy optimization (MAPPO) algorithm to ISTN push tasks. Central to this framework is a dual-branch actor network architecture that dynamically fuses heterogeneous modalities: a lightweight MobileNet-v3-small backbone extracts semantic features from remote sensing imagery, while two parallel branches, a multi-layer perceptron (MLP) for static attributes (e.g., payload specifications, geolocation tags) and a long short-term memory (LSTM) network for temporal user cache patterns, jointly model contextual and historical dependencies. A dynamically weighted attention mechanism further adapts modality-specific contributions to enhance cross-modal correlation modeling in complex, time-varying scenarios. To mitigate the curse of dimensionality in high-dimensional action spaces, we introduce a multi-dimensional discretization strategy that decomposes decisions into hierarchical sub-policies, balancing computational efficiency and decision granularity. Comprehensive experiments against state-of-the-art baselines (MAPPO, MAAC) demonstrate that multi-modal-MAPPO reduces the average content delivery latency by 53.55% and 29.55%, respectively, while improving push hit rates by 0.1718 and 0.4248. These results establish the framework as a scalable and adaptive solution for real-time intelligent data services in next-generation ISTNs, addressing critical challenges in resource-constrained, dynamic satellite–terrestrial environments.

1. Introduction

The escalating global demand for seamless communication and the proliferation of Internet of Things (IoT) applications have rendered terrestrial networks inadequate to fulfill the stringent real-time requirements of users, especially in remote environments such as deserts, oceans, and disaster-stricken areas where terrestrial infrastructure is either sparse or entirely absent [1,2]. These scenarios underscore the critical need for next-generation wireless networks that prioritize ultra-low latency and ultra-high reliability to support mission-critical IoT deployments [3]. In this context, the integration of satellite networks—particularly low-Earth orbit (LEO) constellations—into terrestrial ecosystems emerges as a transformative strategy to achieve ubiquitous connectivity, enabling continuous data acquisition and dissemination across underserved regions [4]. By leveraging the unique attributes of LEO satellites, including their low propagation delays, wide coverage footprints, and inherent resilience against ground-based disruptions, integrated satellite–terrestrial networks (ISTNs) can effectively bridge the digital divide, ensuring robust IoT services even in the most challenging operational theaters.

The rapid expansion of remote sensing satellite systems has transformed them into a multifaceted ecosystem encompassing communication, navigation, Earth observation, and experimental satellites, enabling unprecedented integration with cutting-edge technologies like artificial intelligence (AI), advanced networking, and IoT. This convergence has dramatically expanded the utility of remote sensing data, rendering it indispensable across critical domains such as land resource management, economic development, disaster mitigation, meteorology, and environmental monitoring. As these fields increasingly rely on real-time, context-aware insights, the demand for remote sensing resources has undergone a paradigm shift, necessitating a departure from conventional query-based and subscription-based service models [5]. Existing paradigms often falter in addressing IoT-driven requirements due to their high technical barriers, limited contextual relevance, and inflexibility in dynamic scenarios, impeding efficient access to high-value, domain-specific data. This gap highlights the urgent need for innovative, proactive data acquisition strategies capable of delivering timely, tailored remote sensing resources to meet the evolving demands of smart applications, particularly within ISTNs where seamless cross-modal data fusion and adaptive decision making are paramount.

To address this critical need, there is a pressing demand for a remote sensing information service that prioritizes speed, accuracy, and adaptability, particularly for IoT applications requiring dynamic real-time data to enhance situational awareness and enable informed decision making. Low-orbit remote sensing satellite networks have emerged as the preferred infrastructure for real-time data acquisition and dissemination, leveraging their inherent strengths in global coverage, cost-effective deployment, and minimal propagation delays [6,7]. These networks are pivotal for IoT-centric applications such as weather forecasting, disaster monitoring, and remote sensing mapping, where rapid access to high-resolution spatial data is non-negotiable. Recent advancements have yielded successful implementations of low-orbit satellite networks, demonstrating their potential to revolutionize data delivery [8,9,10]. However, traditional low-Earth-orbit (LEO) satellite systems often face constraints due to the limited onboard processing capabilities. Fortunately, breakthroughs in onboard hardware technology have introduced a new generation of processors that deliver high performance with minimal power consumption, enabling efficient onboard compression and the slicing of remote sensing data [11]. Additionally, the adoption of relay-assisted access strategies within the space–air–ground integrated network (SAGIN) framework enhances connectivity for diverse IoT devices, ensuring seamless data flow across heterogeneous network infrastructures [12,13]. Looking ahead, intelligent remote sensing satellites are poised to function as dynamic content service providers within the emerging satellite Internet ecosystem. 
By seamlessly integrating remote sensing capabilities with communication satellite networks, it becomes feasible to establish real-time communication channels between satellites and IoT users, thereby optimizing resource allocation and significantly improving responsiveness to evolving user demands [14].

As user applications and data demands expand, the volume and diversity of data generated by remote sensing satellites have surged, outstripping the load capacity of existing data transmission systems [15]. This disparity has made it increasingly challenging for users to promptly access relevant remote sensing resources from the vast datasets available, thereby underscoring the need for real-time data transmission and intelligent push capabilities. To tackle this issue, an intelligent push strategy that proactively delivers potentially relevant remote sensing data from satellite networks to IoT users can significantly reduce content request delays. Such a strategy is crucial for maximizing the utilization of satellite and user cache resources and enhancing the overall performance of integrated satellite–terrestrial IoT networks. Deep reinforcement learning (DRL) has proven effective in addressing optimization challenges within dynamic network environments, as it leverages its perception and decision-making capabilities to discover optimal solutions through agent–environment interactions. Recently, several studies have applied DRL to optimize resource allocation in satellite networks [16,17].

In this work, we focus on the integration of large-scale remote sensing constellations with communication satellite networks and propose a multi-modal multi-agent proximal policy optimization (MAPPO) algorithm, termed multi-modal-MAPPO, to achieve the real-time intelligent push of multi-modal remote sensing data. The primary contributions of this paper are as follows:

- Firstly, to address the distinctive challenges of link intermittency and resource heterogeneity in satellite–terrestrial integrated networks, we introduce a link state-aware proactive delivery algorithm tailored for satellite networks. This algorithm innovatively constructs a multi-granularity network state monitoring model to dynamically capture the real-time fluctuations and outage characteristics of both satellite/user cache resources and link states. By framing the dynamic delivery problem as a Markov decision process (MDP), we provide a robust theoretical foundation for intelligent decision making.

- Secondly, our research comprehensively incorporates the spatio-temporal characteristics of remote sensing data that are vital for IoT applications. These characteristics encompass content generation time, geographic parameters (such as longitude and latitude ranges), payload type, resolution, and product level. To the best of our knowledge, this study represents the first application of multi-agent DRL to optimize an in-orbit proactive push for remote sensing data within a large-scale integrated satellite–terrestrial IoT network. This novel approach enhances data availability and access efficiency for a wide range of satellite applications.

- Thirdly, to achieve the active delivery of multi-modal remote sensing data in ISTNs, we propose a multi-modal collaborative multi-agent deep reinforcement learning framework. This framework is the first application of MAPPO to in-orbit remote sensing data delivery scenarios. It adopts a two-branch architecture: the pre-trained MobileNet-v3-small model extracts deep features from remote sensing images and generates semantic feature vectors representing the image content, while the heterogeneous feature processing channel integrates an MLP branch (which encodes attribute features, such as the content generation time, geographic location range, and payload type, as well as the satellite cache state) and an LSTM branch (which models the temporal evolution of the user-cached content). On this basis, a dynamically weighted attention mechanism adaptively fuses the outputs of the two branches with the image feature vectors to build a multi-modal correlation model that connects the image content, attribute data, and user requirements.

- Finally, simulation experiments demonstrate that the proposed multi-modal-MAPPO framework significantly enhances data access efficiency and delivery timeliness. This is achieved through the joint optimization of multi-modal features and agent collaboration mechanisms, which exhibit robust adaptive capabilities and scalability in dynamic topological environments. Consequently, our work establishes a novel paradigm for satellite–terrestrial collaborative services.

The rest of this article is structured as follows. Section 2 discusses current research on remote sensing data push technology in ISTNs. Section 3 models the network scenario and formulates the optimization problem. Section 4 presents the proposed multi-modal-MAPPO intelligent push strategy. Section 5 shows the experimental simulation results. Finally, we conclude this article in Section 6.

2. Related Work

As satellite remote sensing technology advances, various satellite systems are being developed, significantly enhancing the comprehensive observation capabilities of Earth. The systems for acquiring remote sensing data are evolving steadily, leading to an exponential increase in the quantity and diversity of data, along with improvements in technical parameters like spatial, temporal, and spectral resolution [18]. At the same time, the frequency of updates for remote sensing data is increasing, enhancing their timeliness. Hence, it is urgent to provide users with intelligent and real-time remote sensing data services [19].

At present, the majority of existing remote sensing information services operate on query-based and subscription-based service models. In query-based services, users typically input criteria such as their area of interest and time to perform a search. The system subsequently returns retrieval results, enabling users to download the desired data. Conversely, in subscription-based services, users encapsulate their query conditions into resource orders and submit these to the remote sensing information system. When relevant remote sensing resources become available, the system automatically pushes them to the users. Although these two data service models facilitate the discovery of remote sensing resources to some extent, they are not without limitations, including passivity, latency, and a reliance on specialized knowledge. Moreover, there are increasing demands for enhanced precision and timeliness from various entities, including national agencies, industry-specific clients, and personalized users. Consequently, satellite information services are transitioning from reliance on large ground stations to vehicle-mounted, shipborne, and handheld terminals for data reception and transformation. It is evident that traditional processing and application models for satellite remote sensing data are insufficient to meet the current requirements for high timeliness, personalized data collection, and the delivery of information products.

In recent years, research on intelligent distribution services for spatial information has made some progress. To fully explore users' potential demand for remote sensing images, the authors in [20] divided the remote sensing image coverage area into grid cells based on a unified grid system and proposed a spatio-temporal recommendation method for remote sensing data based on a probabilistic latent topic model, the spatio-temporal periodic task model (STPT). In this model, user retrieval behaviors for remote sensing images are represented as a mix of latent tasks, which act as a link between the user and the images, and each task is associated with a joint probability distribution of spatial, temporal, and image features. Because the model adopts the minimum bounding rectangle as the filtering condition for spatial features and assumes that all user acquisitions of images are periodic, it tends to return data that conform to the periodicity rule, which makes it less accurate and unsuitable for diverse users. The authors in [5] proposed the spatio-temporal embedded topic model (STET), which adopts the latent Dirichlet allocation (LDA) model to transform all users' data records into documents through spatial embedding, temporal embedding, and content embedding, and constructs a document topic model with spatio-temporal embedding to simulate user preferences, so as to fully utilize spatial and temporal continuity features when recommending appropriate remote sensing images.
The authors in [18] proposed a multi-modal knowledge graph-aware deep graph attention network built on graph convolutional networks. The method first constructs a multi-modal knowledge graph of remote sensing images that integrates their various attributes and visual information, then performs information aggregation based on deep relational attention, and enriches the node representations of remote sensing images with multi-modal information and higher-order collaborative signals, significantly improving the accuracy of remote sensing image recommendation.

The above studies conducted in-depth research on users' potential interests, which resulted in a significant improvement in the accuracy of remote sensing image recommendation. However, they did not consider the real-time network environment of end users, and the time cost for users to acquire remote sensing images remains high, which cannot meet real-time demand. To reduce this time cost, the authors in [21] combined stream push technology with a recommendation algorithm based on attention and multi-view fusion. The algorithm constructs a value assessment function from multiple perspectives, such as remote sensing users, data, and services, defines multi-view heuristic strategies to support resource discovery, and fuses these strategies through an attention network to realize the active and precise push of massive multi-source remote sensing resources. The authors in [22] proposed a remote sensing resource service recommendation model that combines a time-aware bidirectional long short-term memory (LSTM) neural network and similarity graph learning with stream push technology. First, a sequence of historical interaction behaviors is constructed from the user's resource search history. Then, a graph of category similarity relationships between remote sensing resource categories is established based on the cosine similarity matrix. An LSTM represents the history sequence, a graph convolutional network represents the graph structure, and the history sequence is combined with the graph to construct a sequence of similarity relationships, whose precise similarity relationships are then explored using the LSTM.
In [19], the authors proposed an efficient remote sensing image data retrieval and distribution scheme based on spatio-temporal features. The scheme uses the spatio-temporal attributes of remote sensing images as content markers, introduces an in-orbit remote sensing image data segmentation algorithm driven by user needs, and adopts multi-path transmission to cope with limited transmission resources, such as link capacity and connection duration, so as to realize the rapid retrieval and distribution of in-orbit remote sensing image data.

With the development of in-orbit processing and artificial intelligence technologies, instant in-orbit information push by future intelligent remote sensing satellite systems is set to become a popular research direction in the field of remote sensing information recommendation. In addition to the characteristics of remote sensing data, such systems will inevitably need to take into account satellite topology changes, network performance, satellite service resources, user cache resources, user location, and other information in order to realize the proactive, real-time, intelligent in-orbit push of remote sensing data.

In recent years, in order to improve the quality of service (QoS) for users in ISTNs, there have been some studies on network resource optimization problems [23,24,25], but active content distribution in ISTNs is still in the preliminary exploration stage, mainly focusing on utilizing in-network caching techniques to improve the efficiency of content distribution in satellite networks. In the study of collaborative caching algorithms for dynamic network environments, multi-agent deep reinforcement learning (MARL) has demonstrated significant potential. The authors in [26] proposed the C-MAAC algorithm based on the multi-agent actor–critic (MAAC) framework, which innovatively formulates the collaborative caching problem as a multi-agent Markov decision process (MDP). By adopting a centralized training with decentralized execution (CTDE) framework, this method effectively addresses the instability in training environments caused by decentralized multi-agent decision making. The introduction of a Gumbel-Softmax sampler to generate differentiable action spaces significantly enhances the stability of the training process. Experimental results demonstrate that, under dynamic content popularity scenarios, C-MAAC achieves notable improvements in cache hit rates compared to traditional methods (LFU, LRU) and deep reinforcement learning benchmarks (I-DDPG and D-DQN). In contrast, the authors in [27] approached the problem from the perspective of partially observable environments, proposing a multi-agent deep reinforcement learning framework based on partially observable Markov decision process (POMDP) modeling. Their algorithm integrates a communication module to enable the interactive sharing of state-action information among base stations, employs variational recurrent neural networks for content request state estimation, and innovatively incorporates LSTM to model the temporal characteristics of user access patterns.
Experimental results indicate that, compared to existing methods such as LRU and MAAC, this algorithm achieves significant enhancements in both caching rewards and hit rates. The work in [28] addresses the collaborative edge caching problem in LEO satellite networks, aiming to reduce traffic congestion and request latency by caching popular content. It proposes the SEC-MAPPO method, which is based on the multi-agent proximal policy optimization (MAPPO) algorithm and models the problem as a POMDP to tackle the challenges of brief satellite overhead time and dynamic network topology. The experimental results indicate that SEC-MAPPO significantly outperforms baseline algorithms such as RANDOM, LFU, LRU, and the deep Q-learning network (DQN) in reducing the average video request latency, demonstrating its effectiveness in practical LEO satellite network scenarios.

Current research on the remote sensing data push in satellite–terrestrial integrated networks centers on three pivotal dimensions: user demand modeling, dynamic network adaptation, and collaborative distribution mechanisms. While significant progress has been made in each area, critical challenges persist. In user demand modeling, innovative approaches such as multi-attribute decision frameworks leveraging quadruple user profiling, spatio-temporal topic models like STPT and STET, and advanced deep learning architectures including multi-modal knowledge graphs and attention fusion networks have demonstrably enhanced demand prediction accuracy [29,30]. However, these methodologies exhibit notable limitations in capturing the intricate heterogeneity of spatio-temporal correlations and cross-modal feature interactions inherent in remote sensing data, often relying on overly coarse spatial representations and static feature weighting schemes that fail to adequately resolve complex semantic patterns. Transitioning to dynamic network adaptation, existing strategies employing density-based partitioning and spatio-temporal feature tagging attempt to mitigate the challenges posed by intermittent connectivity and resource constraints in hybrid networks. Many of these solutions remain anchored to static network assumptions or rely on approximation algorithms, rendering them ill-equipped to handle the high-frequency topological variations characteristic of large-scale LEO constellations. Furthermore, the precision of coupling models linking remote sensing data semantics with dynamic network states requires substantial refinement. In the realm of collaborative distribution mechanisms, while cache optimization and multi-path transmission techniques have improved distribution efficiency, the absence of global joint scheduling frameworks for computational, storage, and communication resources across satellite–terrestrial nodes represents a critical gap. 
Centralized decision-making paradigms dominate current implementations, introducing scalability limitations and compromising real-time responsiveness in dynamic environments. Collectively, these challenges underscore the need for more sophisticated models that can unify fine-grained demand understanding with adaptive network management and distributed resource orchestration to achieve truly efficient and intelligent remote sensing data dissemination in next-generation integrated networks.

3. System Model Description

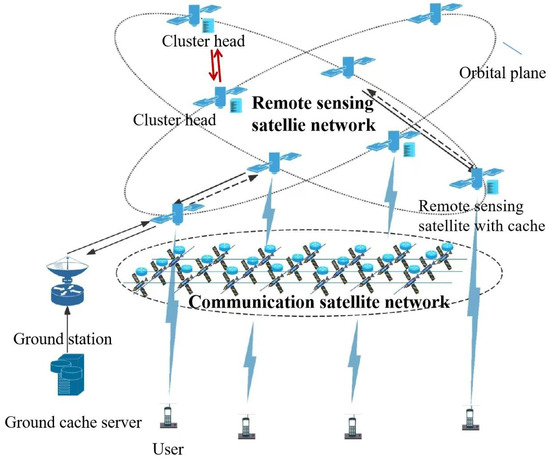

Figure 1 illustrates the integrated satellite–terrestrial remote sensing satellite network architecture, containing N satellites, M users, and a ground station. Among them, the ground station is denoted by G, the set of users is denoted by , the location of the user i is denoted as , the remote sensing satellite set S is denoted by , the set of communication satellites B is denoted as , and . The remote sensing satellite set is divided into K clusters, with the satellite set of the k-th cluster denoted as , and represents the number of remote sensing satellites in the k-th cluster. Remote sensing satellites generate real-time remote sensing data with spatio-temporal characteristics. We consider remote sensing data that include both imagery and attribute data, where the attribute data format is (shooting time, latitude starting point, latitude ending point, longitude starting point, longitude ending point, payload type, resolution level, product level). Among them, P remote sensing satellites are equipped with caching modules, while communication satellites are solely responsible for data transmission. Users directly communicate with satellites via satellite terminals to access data, ensuring visibility to at least one communication satellite at any given time. Satellites can relay content to ground stations via feeder links; however, ground stations are typically located in remote areas, incurring high transmission costs and significant service delays. Therefore, proactively pushing content of potential interest to users can substantially reduce request latency. On the other hand, limited user cache resources necessitate intelligent push strategies to conserve cache usage. Thus, the core challenge lies in designing an intelligent delivery strategy to dynamically push newly generated remote sensing data to relevant users, aiming to minimize the average content delivery latency. The mathematical model of this system can be described as follows.

Figure 1. Architecture diagram of integrated satellite–terrestrial remote sensing satellite networks.
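As a minimal sketch of the attribute record listed in the system model (shooting time, latitude and longitude ranges, payload type, resolution level, product level), the fields could be grouped in a small data structure. The class name, field names, field types, and the `covers` helper are assumptions made for illustration and are not taken from the paper.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class RemoteSensingAttributes:
    """Attribute record for one remote sensing data item (names are illustrative)."""
    shooting_time: datetime
    lat_start: float
    lat_end: float
    lon_start: float
    lon_end: float
    payload_type: str
    resolution_level: int
    product_level: int

    def covers(self, lat: float, lon: float) -> bool:
        """Return True if (lat, lon) lies inside the item's latitude/longitude range.

        Assumption: the range does not cross the antimeridian.
        """
        lat_lo, lat_hi = sorted((self.lat_start, self.lat_end))
        lon_lo, lon_hi = sorted((self.lon_start, self.lon_end))
        return lat_lo <= lat <= lat_hi and lon_lo <= lon <= lon_hi


# Hypothetical example item.
item = RemoteSensingAttributes(
    shooting_time=datetime(2024, 1, 1, 8, 30),
    lat_start=30.0, lat_end=31.0,
    lon_start=114.0, lon_end=115.0,
    payload_type="optical", resolution_level=2, product_level=1,
)
```

A coverage check of this kind is one simple way to relate a user location to a newly generated item before any learned push policy is applied.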

3.1. Channel Model

Satellite-to-user link: The channel model between satellites and users is represented by the Weibull channel model, mainly considering rainfall attenuation, which has been utilized in numerous existing works [31,32]. The distance between them can be regarded as the height of the LEO satellite. Due to channel attenuation, the power attenuation of the satellite to user link is expressed as

where is the wavelength of the carrier wave, and denote the antenna gains of LEO satellite and user satellite terminals, and represents the rainfall attenuation following the Weibull distribution [33]. Based on the power attenuation of the channel, it is easy to use the Shannon equation to calculate the maximum transmission rate between LEO satellites and users as

where is the transmission power of the LEO satellite, is the bandwidth allocated to LEO satellites, and denotes the background noise power.
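The satellite-to-user rate computation described above can be sketched as follows. This is a hedged illustration that assumes the commonly used free-space form of the attenuation, (wavelength / (4π · distance))² multiplied by the two antenna gains and by a rain factor 10^(−F/10) with F in dB, followed by the Shannon capacity. The function names, argument names, and the Weibull scale and shape parameters are assumptions, not values from the paper.

```python
import math
import random


def satellite_user_attenuation(wavelength_m, distance_m, gain_sat, gain_user, rain_db):
    """Power attenuation of the satellite-to-user link (linear scale).

    Assumed form: free-space loss times antenna gains times rain attenuation,
    where rain_db is the rain attenuation in dB.
    """
    free_space = (wavelength_m / (4 * math.pi * distance_m)) ** 2
    return free_space * gain_sat * gain_user * 10 ** (-rain_db / 10)


def shannon_rate(bandwidth_hz, tx_power_w, attenuation, noise_power_w):
    """Maximum transmission rate in bit/s from the Shannon equation."""
    return bandwidth_hz * math.log2(1 + tx_power_w * attenuation / noise_power_w)


def sample_rain_attenuation_db(rng, scale_db, shape):
    """Draw a Weibull-distributed rain attenuation (parameters are hypothetical)."""
    return rng.weibullvariate(scale_db, shape)


# Hypothetical link: 20 GHz carrier (about 1.5 cm wavelength), 600 km altitude.
clear = satellite_user_attenuation(0.015, 600e3, 1000.0, 10.0, 0.0)
rainy = satellite_user_attenuation(0.015, 600e3, 1000.0, 10.0, 10.0)
rate_clear = shannon_rate(20e6, 10.0, clear, 1e-13)
rate_rainy = shannon_rate(20e6, 10.0, rainy, 1e-13)
```

Under these assumptions, heavier rain lowers the attenuation factor and therefore the achievable rate, which is the effect the Weibull term is meant to capture.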

Inter-satellite link: Because there is little scattering in the space environment, the large-scale fading of electromagnetic waves during propagation is mainly caused by the free-space propagation effect [34]. Hence, the power attenuation of the link between satellites can be expressed as

where and are the transmitter gain and receiver gain of the LEO satellite, respectively; is the optics efficiency of the transmitter, and is the optics efficiency of the receiver. Utilizing the power attenuation of the channel, the maximum transmission rate between LEO satellites can be calculated as follows

It is assumed that the link rates of the satellite-to-user and inter-satellite links are time-varied functions denoted by and , respectively, which are associated with Equations (2) and (4). The link rates and are randomly generated within the ranges and , respectively. In addition, temporary interruptions may occur on each link, at which time the link rate is 0.
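The time-varying link rates with temporary interruptions can be sketched as a per-slot sampler. This is an illustrative sketch that assumes a uniform draw within the stated range and an independent outage probability per link and slot; neither the distribution nor the `outage_prob` parameter is specified in the text, and the numeric range below is hypothetical.

```python
import random


def sample_link_rate(rng, rate_min_bps, rate_max_bps, outage_prob):
    """Sample one link rate for one time slot.

    With probability outage_prob the link is interrupted and the rate is 0;
    otherwise the rate is drawn uniformly from [rate_min_bps, rate_max_bps].
    """
    if rng.random() < outage_prob:
        return 0.0
    return rng.uniform(rate_min_bps, rate_max_bps)


# Hypothetical example: rates for one link over five time slots.
rng = random.Random(42)
rates = [sample_link_rate(rng, 50e6, 100e6, 0.1) for _ in range(5)]
```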

3.2. Push Model

To address the challenge of minimizing user-perceived latency in accessing time-sensitive remote sensing data, we propose a dynamic intelligent dissemination framework that leverages real-time contextual awareness and adaptive network resource allocation. Recognizing that conventional batch-processing methodologies inherently introduce delays through periodic data aggregation and post-acquisition analysis, our strategy emphasizes proactive data delivery by continuously monitoring evolving user requirements and operational environments. By capitalizing on the temporal dynamics of satellite-derived data streams, the system performs in-transit analytics to predict user needs and pre-emptively routes relevant information across dynamically reconfigurable network topologies. This approach ensures the optimal utilization of intermittent connectivity windows while maintaining service continuity across time-slot transitions, where network configurations can be instantaneously adjusted based on prevailing conditions.

The core innovation lies in synchronizing data generation cycles with user decision workflows through the predictive modeling of demand patterns and network state variables, thereby transforming reactive data retrieval into an anticipatory service paradigm that significantly reduces the latency without compromising data integrity or relevance. It is assumed that the network topology is fixed during each time slot, but can be changed instantaneously during time slot transitions. The set of time slots is defined as , and each time slot will have multiple remote sensing data randomly generated in remote sensing satellite network using to represent all remote sensing data generated by the remote sensing satellite network. The data-generating satellite transmits content summary information to the cluster head node, which then performs intelligent analysis to determine the optimal caching and dissemination strategy. Instead of random caching, the cluster head dynamically selects appropriate remote sensing satellites within the cluster to store the content based on real-time demand forecasts and network conditions. Simultaneously, it employs predictive algorithms to match content relevance with user profiles, scheduling proactive pushes during low-traffic time slots to ensure the timely delivery of high-priority data while maintaining the efficient utilization of user terminal cache resources through coordinated content rotation and priority management.

We establish a unified framework where all remote sensing data items have equal size . Cache-enabled satellites and user terminals possess storage capacities of and , respectively, with both significantly smaller than the total data size (, ). This configuration allows each satellite to store data items, while user devices can hold contents. When storage reaches capacity, the first-in–first-out (FIFO) policy is implemented for cache replacement. The content dissemination decision making process is centralized at the cluster head corresponding to the originating satellite’s cluster. For cluster k, the binary decision variable determines whether content generated by cluster k is pushed to the j-th user at time slot l, if , the content is not pushed to the j-th user. To enforce efficient cache utilization, each content item can only be actively pushed once across all time slots, governed by the constraint
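One way to read the caching and push rules in this subsection is sketched below: equal-size items, a capacity expressed as a number of items, FIFO replacement, and a ledger that refuses a second push. The class and function names are hypothetical, and the sketch assumes the push-once rule applies per (content, user) pair, which is one interpretation of the constraint.

```python
from collections import deque


def capacity_in_items(storage_size, item_size):
    """Number of equal-size items that fit in a cache."""
    return storage_size // item_size


class FifoCache:
    """Fixed-capacity cache with first-in-first-out replacement."""

    def __init__(self, capacity_items):
        self.capacity = capacity_items
        self._items = deque()

    def add(self, content_id):
        """Insert content_id; return the evicted id, or None if nothing was evicted."""
        if content_id in self._items:
            return None
        evicted = None
        if len(self._items) >= self.capacity:
            evicted = self._items.popleft()  # oldest item leaves first
        self._items.append(content_id)
        return evicted

    def __contains__(self, content_id):
        return content_id in self._items


class PushLedger:
    """Records pushes so each content item reaches a given user at most once."""

    def __init__(self):
        self._pushed = set()

    def try_push(self, content_id, user_id, user_cache):
        key = (content_id, user_id)
        if key in self._pushed:
            return False  # already pushed in an earlier time slot
        self._pushed.add(key)
        user_cache.add(content_id)
        return True


user_cache = FifoCache(capacity_in_items(storage_size=200, item_size=100))
ledger = PushLedger()
first = ledger.try_push("f1", "u1", user_cache)
second = ledger.try_push("f1", "u1", user_cache)
```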

3.3. Content Delivery Model

In the satellite network system, user-requested content can be served directly at the user's terminal, by a remote sensing satellite, or by a ground station, depending on whether the content has been actively pushed and where it is cached. Because queuing and processing delays are short, this article considers only propagation and transmission delays as the content delivery delay.

Direct delivery mode: If the requested content has been cached in the user terminal, the user terminal responds directly, and the delay for user to obtain content f is 0.

Access delivery mode: If the requested content has been cached in access remote sensing satellite , the content is directly transmitted to the user terminal. The delay for user to obtain content f can be calculated as follows

where represents the Euclidean distance from user to access satellite , is the propagation rate of the satellite–ground link, and is the transmission rate between access satellite and at time slot t.

Collaborative delivery mode: If the requested content is not cached in the access remote sensing satellite , but another remote sensing satellite has cached the content, then the user’s request is forwarded to through the communication satellite network, and the content is delivered from over inter-satellite links. Because link rates vary dynamically, the content delivery delay differs across the multiple transmission paths between and . We use the Dijkstra algorithm to find the path with the minimum content delivery delay.
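As an illustration of this path selection step, the following minimal Python sketch runs Dijkstra’s algorithm on a small inter-satellite graph whose edge weights are per-link delays. The node names, the example delay values, and the helper `link_delay` (one propagation term plus one transmission term per hop) are illustrative assumptions and are not taken from the simulation setup.

```python
import heapq


def link_delay(distance_m, content_bits, prop_speed_mps, rate_bps):
    """Delay of one hop: propagation time plus transmission time (seconds)."""
    return distance_m / prop_speed_mps + content_bits / rate_bps


def min_delay_path(links, source, target):
    """Dijkstra search over non-negative link delays.

    links maps a node to a list of (neighbor, delay_seconds) pairs.
    Returns (total_delay, path); (inf, []) if the target is unreachable.
    """
    best = {source: 0.0}   # smallest known delay to each node
    prev = {}              # predecessor on the best known path
    heap = [(0.0, source)]
    done = set()
    while heap:
        delay, node = heapq.heappop(heap)
        if node in done:
            continue       # stale heap entry
        done.add(node)
        if node == target:
            break
        for neighbor, hop in links.get(node, []):
            candidate = delay + hop
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if target not in best:
        return float("inf"), []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return best[target], path[::-1]


# Hypothetical topology: access satellite "s_a", caching satellite "s_m".
example_links = {
    "s_a": [("s_1", 0.020), ("s_2", 0.050)],
    "s_1": [("s_m", 0.040)],
    "s_2": [("s_m", 0.005)],
}
example_delay, example_path = min_delay_path(example_links, "s_a", "s_m")
```

Because link rates change between time slots, the edge weights in such a graph would need to be recomputed for each slot before the search is run.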

The propagation delay for user to obtain content f is denoted by , which can be calculated as follows

where denotes the content delivery path from access satellite to satellite , , and is the inter-satellite link propagation rate. The content transmission delay of user to obtain content f is denoted by , which can be calculated as

where is the transmission rate between satellite and at time slot t. Then, the delay for user to obtain content f is

Ground station delivery mode: If the requested content is not cached anywhere in the remote sensing satellite network, it is delivered from the ground station through the inter-satellite links. Because of the distance between the ground station and the user, the content must be relayed through the satellite network, which incurs a significant delay. This paper uses a large constant to represent the delay for users requesting content from ground stations.
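The four delivery modes form a simple priority order, which can be sketched as follows. This is a minimal illustration rather than the simulator used in the experiments: the function name, the argument layout, and the value of `GROUND_STATION_DELAY` are hypothetical, and the collaborative delay is assumed to be precomputed (for example, by a shortest-path search).

```python
GROUND_STATION_DELAY = 5.0  # hypothetical large constant, in seconds


def delivery_delay(content, user_cache, access_cache, collaborative_delay, access_delay):
    """Return (mode, delay_seconds) for one request.

    user_cache / access_cache: sets of content ids cached at the user
    terminal and at the access satellite.
    collaborative_delay: dict mapping content ids cached on other satellites
    to their end-to-end delay (assumed to be precomputed).
    access_delay: delay of delivery from the access satellite.
    """
    if content in user_cache:
        return "direct", 0.0                      # served locally, zero delay
    if content in access_cache:
        return "access", access_delay
    if content in collaborative_delay:
        return "collaborative", collaborative_delay[content]
    return "ground_station", GROUND_STATION_DELAY  # not cached in the constellation


example_modes = [
    delivery_delay(c, {"f1"}, {"f2"}, {"f3": 0.12}, 0.03)
    for c in ("f1", "f2", "f3", "f4")
]
```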

4. Problem Formulation

The optimization objective of the problem is to minimize the average content delivery delay for long-term user requests. denotes the average delivery delay of user requests during T, and it can be expressed as follows

where , and means that user requested content f at time slot t; otherwise, user did not request content f at time slot t. The optimization problem P can then be defined as follows

5. Algorithm Design

Targeting large-scale satellite–terrestrial integrated network scenarios, we propose a multi-modal deep reinforcement learning-based proactive remote sensing data delivery algorithm (Multi-modal-MAPPO). By integrating multi-modal data—including remote sensing imagery, attribute data, and user/satellite network cache states—and combining multi-agent collaboration mechanisms, the algorithm achieves high-precision and low-latency data distribution in dynamic network environments. The core design is elaborated through three key aspects: multi-modal feature modeling, cross-modal dynamic fusion, and collaborative decision optimization.

5.1. Multi-Modal Feature Modeling

We address the proactive push task for remote sensing data by categorizing input data into two modalities—image and attributes—for multi-modal feature modeling. For remote sensing image data, we consider visible-light images and SAR images. To address the heterogeneity of multi-source remote sensing data and onboard computing constraints in the integrated satellite–terrestrial networks, we propose dual-modal adaptation optimizations based on the lightweight MobileNetV3-Small architecture:

Heterogeneous input layer adaptation: To address the data discrepancy between single-channel SAR images and three-channel visible-light images, the input layer’s convolution kernel dimensions are restructured—adjusting the original 3 × 3 × 3 kernel to a 3 × 3 × 1 configuration. This reduces the number of parameters in the initial layer by 66.7% while retaining the lightweight characteristics of depthwise separable convolutions, ensuring channel compatibility for SAR data feature extraction.

Pre-training strategies: Distinct approaches are adopted for the visible-light and SAR modalities. For visible-light image processing, ImageNet pre-trained weights are loaded directly and the first three convolutional layers are frozen, so that general visual semantic representations are inherited through transfer learning, while only the deep feature extraction layers (Layers 4–16) and the classification head are fine-tuned to accelerate convergence. For single-channel SAR images, a three-stage adaptation is implemented: (1) the input layer adaptation averages the weights of the original three-channel convolution kernel along the channel dimension to align with the SAR’s single-channel input; (2) the intermediate layers reuse pre-trained weights, exploiting the cross-modal generalization capability of depthwise separable convolutions; and (3) Kaiming normal initialization is applied to the classification head to mitigate the channel dimension mismatch and gradient vanishing issues. This strategy ensures cross-modal compatibility while preserving computational efficiency.
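The input layer adaptation can be illustrated with plain nested lists. The sketch below averages a three-channel kernel along the channel dimension and checks the resulting parameter reduction; the filter count of 16 and the kernel values are illustrative assumptions, and a real implementation would operate on the framework’s weight tensors instead.

```python
def average_input_channels(kernel):
    """Collapse [out][in][kh][kw] weights to [out][1][kh][kw] by channel-wise mean."""
    adapted = []
    for filt in kernel:
        n_in = len(filt)
        kh, kw = len(filt[0]), len(filt[0][0])
        mean_plane = [
            [sum(filt[c][i][j] for c in range(n_in)) / n_in for j in range(kw)]
            for i in range(kh)
        ]
        adapted.append([mean_plane])
    return adapted


def count_weights(kernel):
    """Number of scalar weights in an [out][in][kh][kw] kernel."""
    return sum(len(filt) * len(filt[0]) * len(filt[0][0]) for filt in kernel)


# Assumed first layer: 16 filters of size 3 x 3 x 3, channel c filled with c + 1.
rgb_kernel = [
    [[[float(c + 1)] * 3 for _ in range(3)] for c in range(3)]
    for _ in range(16)
]
sar_kernel = average_input_channels(rgb_kernel)
reduction = 1.0 - count_weights(sar_kernel) / count_weights(rgb_kernel)
```

With these shapes the weight count drops from 432 to 144, which matches the 66.7% reduction stated for the 3 × 3 × 3 to 3 × 3 × 1 change.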

The attribute data include remote sensing attribute metadata, satellite cache states, and user cache states from the previous time slots. We employ a three-layer MLP to jointly encode the remote sensing attribute data and satellite cache states:

where denotes the remote sensing attribute data, represents the satellite cache state, and ,, are the weight matrices of each layer, with , , being the corresponding bias terms.

An LSTM module is employed to model the temporal evolution of user cache states over the previous T time slots

where contains the hidden states across T time steps, and represent the final hidden and cell states. The last time-step hidden state is extracted as the temporal feature of user cache dynamics. The MLP-extracted attribute features and LSTM temporal features are fused via the residual addition

5.2. Multi-Modal Fusion

To enable the fine-grained interaction between the image and attribute modalities, a gated attention mechanism is designed to dynamically allocate modality weights. The image feature and fused attribute feature are concatenated and processed through a fully-connected network to generate attention weights

where and are the hidden-layer and output-layer weight matrices, respectively, with and as biases. The attention weights satisfy . The weighted fusion of the two modalities is then performed based on the attention weights:

This mechanism adaptively prioritizes the dominant modality while preserving complementary information for robust decision making.
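A minimal sketch of this gated fusion is given below, assuming that both feature vectors have the same dimension, that the hidden layer uses a ReLU activation, and that the two attention weights come from a softmax. The function names and the toy weights are hypothetical and only serve to show the data flow.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias, relu=False):
    """Fully connected layer; weights holds one row per output unit."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in out] if relu else out


def gated_fusion(f_img, f_attr, w1, b1, w2, b2):
    """Concatenate both features, predict two modality weights, and mix."""
    hidden = dense(f_img + f_attr, w1, b1, relu=True)
    alpha_img, alpha_attr = softmax(dense(hidden, w2, b2))
    fused = [alpha_img * a + alpha_attr * b for a, b in zip(f_img, f_attr)]
    return fused, (alpha_img, alpha_attr)


# Toy parameters: zero weights, so the output bias alone sets the gate.
fused, alphas = gated_fusion(
    [1.0, 0.0], [0.0, 1.0],
    w1=[[0.0] * 4 for _ in range(2)], b1=[0.0, 0.0],
    w2=[[0.0, 0.0], [0.0, 0.0]], b2=[math.log(3.0), 0.0],
)
```

In this toy case the gate assigns roughly three quarters of the weight to the image feature; in the trained network the weights would instead depend on the input features.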

5.3. Multi-Agent Collaborative Decision-Making Optimization

The major advantage of DRL is that, through continuous interaction between the agents and the wireless environment, the dynamics of the network, such as satellite mobility and link status, can be learned and accounted for; this holds especially for multi-agent systems, in which agents collaborate to solve problems through a shared environment [35].

Markov Decision Process

This subsection formulates the push problem as a Markov decision process (MDP). An MDP formalizes sequential decision-making problems in dynamic environments through states, actions, rewards, and state transition probabilities, which gives an agent a structured model for optimizing long-term cumulative return. At the same time, the Markov property (the next state depends only on the current state and action) greatly simplifies policy learning in complex environments, enabling reinforcement learning methods to solve for optimal decision-making policies efficiently. The MDP is defined as follows

State space: At time slot t, we define the state of the k-th agent as

where represents the attribute characteristics of the contents, , represents the cache status of remote sensing satellites with cache capabilities; for remote sensing satellite i, its cache status is denoted as ; represents the cache status of users in the previous time slot and current time slot, respectively; represents the number of users involved in a single decision; for user i, its cache status in the previous time slot is denoted as

Action space: We define the multi-dimensional discrete action space to represent the push decision actions, and the action vector can be represented as follows

where , , with indicating that the content is pushed to user j at time slot l, otherwise . If , the content is not pushed to user j.

Reward function: To minimize the total content delivery delay, we define the following reward function.

with

where denotes the actual latency generated by the content distribution within time slot t.

5.4. Proposed Multi-Modal-MAPPO Algorithm

PPO is a robust policy gradient algorithm that addresses the stability and sample-efficiency problems of traditional policy gradient methods by clipping policy updates and optimizing a surrogate objective function. It has shown excellent performance in various reinforcement learning tasks [36]. Beyond single-agent DRL methods, MAPPO [37] significantly expands the applicability of PPO. It excels in scenarios where multiple agents must interact and make decisions autonomously, demonstrating superior performance in environments that require stability and sample efficiency within discrete action spaces. Its robust handling of discrete action spaces and overall resilience in multi-agent systems make MAPPO well suited to the dynamic active push problem explored in this study. The MAPPO algorithm employs a centralized training with decentralized execution (CTDE) framework, which suits the fully cooperative relationship in satellite collaborative decision-making scenarios. In this paper, at time slot t, agent i can observe the global state of the environment through communication between cluster heads, represented as , and then, based on its own actor network , take action . Therefore, the actions of all agents can be represented as . Because the agents in this work are fully cooperative, all agents share the same reward . The critic network then uses the collected global and to generate a Q-value function to maximize the reward function. The Q-value function is defined as follows

where . Therefore, the transition buffer can be expressed as follows

where is an advantage function that evaluates whether the behavior of the new policy is better than that of the old policy, and it can be defined as follows:

where is the state-value function output of the value network of each agent i. Therefore, the loss function of the policy network can be expressed as follows:

where represents the clipping function that limits the change between the old and new policy to the range . Then, the parameter is updated using a gradient ascent method, i.e., , where is the learning rate of the new policy network. The loss function of the value network is a mean square error function, defined as

The parameter is updated by gradient descent, i.e., . To address the challenge of high-dimensional decision making in this data push scenario, we redesign the actor network of MAPPO by adopting a multidimensional discrete action space. Specifically, the original action space for recommending 15 content items across 3 users (with 19 candidate time slots each) would naively require a single softmax output of size , leading to an intractable action space of dimensions. Instead, we decompose the action space into independent sub-policies, each implemented as a softmax head with 19 dimensions. This multidimensional discretization reduces the effective action space to dimensions, mitigating the "curse of dimensionality" while preserving decision granularity. Hence, the gradient of the actor network is expressed by

Moreover, we add a policy entropy term to the actor’s loss L(), multiplied by a coefficient , to keep the agent from getting trapped in a sub-optimal policy. Consequently, the final loss function for the updated policy network can be expressed as follows

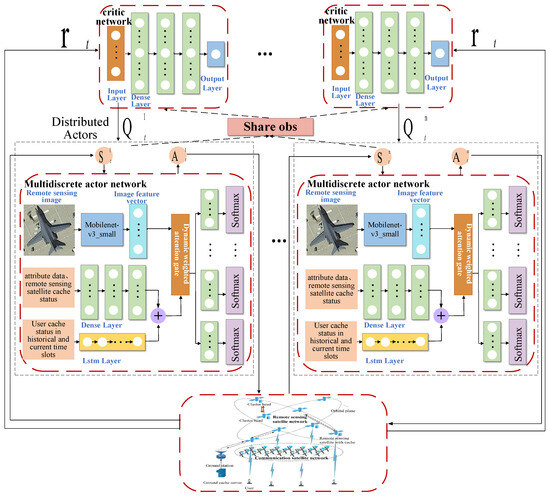
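To make the effect of the multidimensional discretization concrete, the sketch below compares the output size of a single flat softmax with that of independent 19-way heads for the example of 15 content items, 3 users, and 19 candidate time slots. It assumes one head per (content, user) pair, so the resulting counts are derived under that assumption rather than quoted from the text; all names are hypothetical.

```python
import math
import random

N_CONTENT, N_USERS, N_CHOICES = 15, 3, 19
N_HEADS = N_CONTENT * N_USERS          # assumed: one head per (content, user) pair

joint_size = N_CHOICES ** N_HEADS      # outputs of a single flat softmax
factored_size = N_HEADS * N_CHOICES    # outputs summed over all heads


def sample_action(head_probs, rng):
    """Draw one index per head; the heads are sampled independently."""
    return [rng.choices(range(len(p)), weights=p, k=1)[0] for p in head_probs]


def joint_log_prob(head_probs, action):
    """Log-probability of the full action vector: sum of per-head log-probabilities."""
    return sum(math.log(p[a]) for p, a in zip(head_probs, action))


# Uniform heads stand in for the actor's softmax outputs.
uniform_heads = [[1.0 / N_CHOICES] * N_CHOICES for _ in range(N_HEADS)]
example_action = sample_action(uniform_heads, random.Random(0))
example_log_prob = joint_log_prob(uniform_heads, example_action)
```

Because the heads are treated as independent, the log-probability needed for the policy ratio is a plain sum over heads, which keeps the actor’s output layer linear in the number of decisions.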

LSTM [38] is a specialized type of recurrent neural network created to address issues related to long-term dependencies. Since the data push decision problem in this paper is inherently a long-term dynamic decision challenge, we incorporate an LSTM layer into the actor network to better capture the correlation of the state information of different agents in the time domain. The sequence of the shared state space within a range of timesteps starting from time t is defined as . Figure 2 shows the multi-modal-MAPPO algorithm framework, and the training process pseudo-code for the proposed multi-modal-MAPPO is summarized in Algorithm 1.

Figure 2.

Architecture diagram of multi-modal-MAPPO.

| Algorithm 1 Proposed Multi-modal-MAPPO Algorithm | |
| --- | --- |
| 1: | Initialize the parameters , , D and ; |
| 2: | for do |
| 3: | Initialize the state of all agents; |
| 4: | for each do |
| 5: | for each do |
| 6: | Each agent obtains the local action , reward and next state ; |
| 7: | end for |
| 8: | end for |
| 9: | Collect the sample trajectory of each agent ; |
| 10: | Compute the and ; |
| 11: | Store sample trajectory to transition buffer D; |
| 12: | for do |
| 13: | Reshuffle the data order and reorder the sequence; |
| 14: | Randomly choose data from D; |
| 15: | Update and ; |
| 16: | end for |
| 17: | Clear the transition buffer D; |
| 18: | end for |
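The update in step 15 of Algorithm 1 can be sketched with the standard PPO quantities: a clipped surrogate objective with an entropy bonus for the actor and a mean square error for the critic. The clipping range of 0.2 and the entropy coefficient of 0.01 are common defaults used here as placeholders, not the values in Table 2, and the function names are hypothetical.

```python
import math


def clipped_surrogate(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a batch."""
    total = 0.0
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)                       # new policy / old policy
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


def entropy(probs):
    """Shannon entropy of one categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def actor_objective(new_logp, old_logp, advantages, action_probs,
                    clip_eps=0.2, entropy_coef=0.01):
    """Quantity maximized by gradient ascent: surrogate plus entropy bonus."""
    mean_entropy = sum(entropy(p) for p in action_probs) / len(action_probs)
    surrogate = clipped_surrogate(new_logp, old_logp, advantages, clip_eps)
    return surrogate + entropy_coef * mean_entropy


def value_loss(values, returns):
    """Mean square error between critic outputs and return targets."""
    return sum((v - r) ** 2 for v, r in zip(values, returns)) / len(values)


# Two samples: ratio 1.5 with positive advantage, ratio 0.5 with negative advantage.
batch_new = [math.log(1.5), math.log(0.5)]
batch_old = [0.0, 0.0]
batch_adv = [1.0, -1.0]
example_surrogate = clipped_surrogate(batch_new, batch_old, batch_adv)
```

In both samples the clipped term is the one selected, which shows how the objective stops rewarding policy changes beyond the clipping range.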

5.5. Complexity Analysis

The training of models is usually performed offline, while the computational complexity of each agent during execution depends on the combination of neural network forward computations. The proposed multi-modal-MAPPO framework involves multiple neural networks. For the image processing branch, the computational complexity of each CNN unit is denoted as , where and represent the dimensions of the output feature map and convolutional kernel size, and and denote the number of input and output channels, respectively. For the attribute processing branch, the three-layer MLP used for encoding attribute features has a computational complexity of for each fully connected layer, where and are the input and output sizes of the layer. The LSTM model used to capture temporal patterns in user cache states has a computational complexity of , where is the input feature dimension and is the hidden state dimension. The attention mechanism involves matrix operations to compute attention weights. For input vectors of dimension , the complexity of the fully connected layers in the attention mechanism is , and the softmax operation adds complexity. Overall, the attention mechanism has a complexity of . The overall complexity of the MAPPO algorithm depends on the number of agents K and the complexity of the actor and critic networks. The critic network is a three-layer MLP, with each fully connected layer having a complexity of , where and are the input and output sizes of the layer. Therefore, the overall computational complexity of the proposed multi-modal-MAPPO framework can be summarized as

where V is the number of layers in the CNN networks, and Y and Z are the number of fully connected networks in the actor network and critic network, respectively.
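For a rough sense of scale, the per-layer terms can be evaluated with the usual multiply-accumulate estimates for convolutional, fully connected, and LSTM layers. The sketch below uses these textbook estimates, which may differ from the exact expressions of this section by constant factors; the layer sizes in the example are illustrative assumptions.

```python
def conv_macs(out_h, out_w, kernel, c_in, c_out):
    """Standard convolution: one kernel x kernel x c_in dot product per output value."""
    return out_h * out_w * kernel * kernel * c_in * c_out


def fc_macs(n_in, n_out):
    """Fully connected layer: one multiply-accumulate per weight."""
    return n_in * n_out


def lstm_macs_per_step(d_in, d_hidden):
    """Four gates, each with an input projection and a recurrent projection."""
    return 4 * d_hidden * (d_in + d_hidden)


# Assumed first convolution on a 112 x 112 output map with 16 filters.
rgb_first_layer = conv_macs(112, 112, 3, 3, 16)
sar_first_layer = conv_macs(112, 112, 3, 1, 16)

# Assumed attribute branch: three-layer MLP plus an LSTM unrolled over 5 slots.
attribute_branch = (
    fc_macs(64, 128) + fc_macs(128, 128) + fc_macs(128, 64)
    + 5 * lstm_macs_per_step(32, 64)
)
```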

6. Results

6.1. Simulation Setup

A satellite network simulation scenario was developed using Systems Tool Kit (STK) to model an intelligent remote sensing constellation inspired by the Jilin-1 architecture, comprising 300 satellites distributed across 20 distinct sun-synchronous orbits. These orbits were configured with a nominal altitude of 656 km and an inclination angle of 98.04°, optimizing coverage for high-latitude regions while maintaining consistent solar illumination patterns. Concurrently, a communication satellite constellation was designed based on the Starlink topology, deploying 650 satellites across 25 Walker orbit planes at an altitude of 550 km with a 53° inclination angle to ensure global coverage and low-latency communication. Both constellations were integrated within the STK environment to evaluate the inter-satellite link performance, coverage patterns, and network resilience under dynamic orbital mechanics. The simulation parameters (including ephemeris data, sensor specifications, and communication protocols) are documented in Table 1, while the hyperparameter configurations are presented in Table 2.

Table 1.

Simulation parameters.

Table 2.

Hyperparameters in multi-modal-MAPPO.

6.2. Datasets

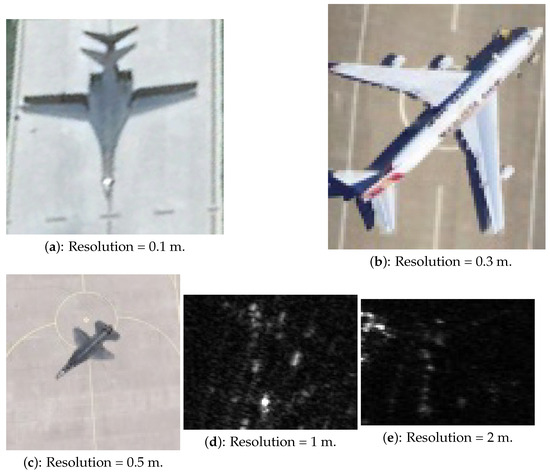

The visible-light images in this study are sourced from a subset of the MTARSI dataset [39]. MTARSI, a fine-grained remote sensing aircraft dataset from Hefei University, contains 9385 remote sensing images covering 20 aircraft types, such as A-10, B-1, B-2, and C-130. Annotated by seven remote sensing domain experts, the dataset aggregates data from 36 airports across countries such as the United States, United Kingdom, Japan, Russia, and China, collected under varying time, seasonal, and imaging conditions. For SAR images, the publicly available SAR-ACD dataset is adopted [40]. SAR-ACD includes six categories of civilian aircraft (A220, A320/321, A330, ARJ21, Boeing 737, and Boeing 787) and 14 categories of military/special-purpose aircraft, with a spatial resolution of 1 m [41]. Constructed from time-series SAR images using HH polarization, the dataset covers multiple aviation hubs such as Shanghai Hongqiao International Airport. Annotation was performed manually through expert visual interpretation combined with corresponding optical imagery. After data augmentation, the public SAR-ACD subset contains 3032 images of civilian aircraft (approximately 500 per category), featuring high-quality labels for radar echo characteristics across multiple phases (e.g., takeoff, taxiing, and parking). By performing multi-scale reconstruction on both datasets, a cross-modal image set with five resolution levels (0.1 m, 0.3 m, 0.5 m, 1 m, and 2 m) is generated. After sample balancing, a final dataset of 280 images is created, with representative examples shown in Figure 3.

Figure 3.

Sample images with different resolutions from the experimental dataset.

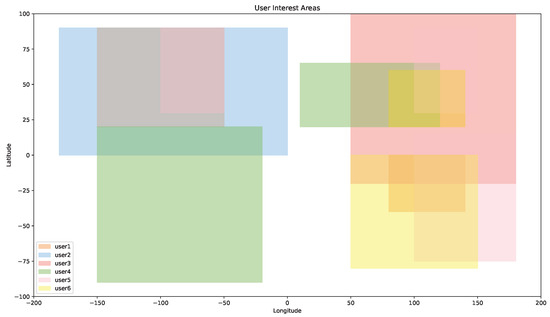

In this study, remote sensing content is generated based on the latitude and longitude positions of remote sensing satellites at different time intervals. Specifically, a square area is generated centered on the satellite’s latitude and longitude coordinates , with the top–left corner at and the bottom–right corner at . The study involves six users, each having differentiated requirements, where all users only receive remote sensing content within their regions of interest (ROIs, as shown in Figure 4). During the simulation period, a total of 280 remote sensing content items and 547 user requests are generated.
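The footprint construction and the region-of-interest filter can be sketched as follows. The half side length, the north-up coordinate convention, and the use of an overlap test (rather than full containment) are assumptions made for illustration, and the sketch ignores wrap-around at the antimeridian and the poles.

```python
def square_footprint(lat, lon, half_side_deg):
    """Return (top_left, bottom_right) corners as (lat, lon) pairs."""
    top_left = (lat + half_side_deg, lon - half_side_deg)
    bottom_right = (lat - half_side_deg, lon + half_side_deg)
    return top_left, bottom_right


def boxes_overlap(box_a, box_b):
    """True if two (top_left, bottom_right) boxes share any area or edge."""
    (a_top, a_left), (a_bottom, a_right) = box_a
    (b_top, b_left), (b_bottom, b_right) = box_b
    return (a_left <= b_right and b_left <= a_right
            and a_bottom <= b_top and b_bottom <= a_top)


def interested_users(footprint, user_rois):
    """Users whose region of interest intersects the content footprint."""
    return [user for user, roi in user_rois.items() if boxes_overlap(footprint, roi)]


# Hypothetical satellite position and two hypothetical user regions.
footprint = square_footprint(30.0, 110.0, 0.5)
rois = {
    "u1": ((31.0, 109.0), (30.0, 110.0)),
    "u2": ((10.0, 20.0), (5.0, 25.0)),
}
candidates = interested_users(footprint, rois)
```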

Figure 4.

Interest area for all users.

6.3. Baseline Algorithms and Evaluation Metrics

To demonstrate the convergence performance and reliability of the proposed algorithm, this paper compares the proposed multi-modal-MAPPO with two baseline algorithms: MAPPO and MAAC. MAPPO is a collaborative decision-making algorithm based on distributed reinforcement learning. By decoupling the policy optimization of agents from the global state value evaluation, it ensures training stability while achieving efficient adaptive solutions for multi-node collaborative tasks. MAAC is a multi-agent reinforcement learning algorithm based on the actor–critic framework, incorporating mechanisms from MADDPG, COMA, VDN, and attention mechanisms. In addition, to quantitatively evaluate the performance of the proposed algorithm, the following metrics are employed:

- Hit ratio: Due to the limited user cache capacity, proactively pushed content may be evicted by the cache replacement strategy before the user issues a request, leading to a cache miss. To measure the sustained effectiveness of the push strategy, the hit ratio is defined as the fraction of content requests served from the user’s cache, where represents the number of content requests that are still accessible in the user’s cache during the t-th time slot, and represents the total number of content requests issued by the user node during the t-th time slot.

- Average delivery delay : The average latency for all content requests over the period T.
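Both metrics can be computed from a log of push and request events. The sketch below replays such a log against a FIFO user cache, counting a request as a hit only if the pushed content has not yet been evicted. The event format, the class and function names, and the single fixed delay charged on a miss are simplifying assumptions for illustration.

```python
from collections import deque


class FifoCache:
    """Fixed-capacity cache that evicts the oldest item first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = deque()

    def push(self, content_id):
        if content_id in self._items:
            return                      # already cached, keep its position
        if len(self._items) >= self.capacity:
            self._items.popleft()       # evict the oldest entry
        self._items.append(content_id)

    def __contains__(self, content_id):
        return content_id in self._items


def evaluate(events, capacity, miss_delay):
    """Replay ("push" | "request", content_id) events; return (hit_ratio, avg_delay)."""
    cache = FifoCache(capacity)
    hits = requests = 0
    total_delay = 0.0
    for kind, content_id in events:
        if kind == "push":
            cache.push(content_id)
            continue
        requests += 1
        if content_id in cache:
            hits += 1                   # direct delivery, zero delay
        else:
            total_delay += miss_delay   # served from elsewhere in the network
    if requests == 0:
        return 0.0, 0.0
    return hits / requests, total_delay / requests


example_events = [
    ("push", "a"), ("push", "b"), ("request", "a"),
    ("push", "c"), ("request", "a"), ("request", "c"),
]
example_hit_ratio, example_avg_delay = evaluate(example_events, capacity=2, miss_delay=0.2)
```

In this example the second request for item "a" misses because pushing "c" evicted it, which is the effect the hit ratio is meant to capture.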

7. Analysis

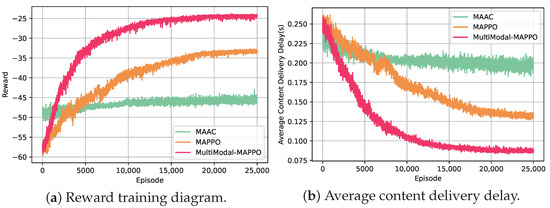

To validate the advantages of the multi-modal-MAPPO algorithm in multi-modal dynamic environments, this section analyzes its convergence and final performance through comparative experiments. First, Figure 5a presents the reward training curves of the three algorithms, showing that their rewards gradually converge as training progresses. The multi-modal-MAPPO algorithm achieves the highest reward, while MAPPO attains suboptimal performance, demonstrating that the agents can effectively learn and perceive changes in the dynamic system. Compared to MAPPO, multi-modal-MAPPO not only achieves higher rewards but also exhibits faster convergence speed and lower standard deviation, indicating a more stable training process. This validates that the introduced multi-modal dynamic attention mechanism enables the refined fusion of image-attribute features, capturing strong correlations between user demands and network states to generate high-value decisions. In contrast, MAPPO’s lack of multi-modal feature interaction limits its adaptability to partially observable scenarios, resulting in constrained long-term gains.

Figure 5.

Training reward values and delay representations.

The evaluation results presented in Figure 5b demonstrate the superiority of the intelligent push algorithm framework in reducing content delivery delays. By leveraging predictive capabilities for user demands and network states, both multi-modal-MAPPO and MAPPO achieve significant latency improvements over the MAAC baseline. Specifically, multi-modal-MAPPO achieves the lowest average delay of approximately 0.086 s, a 53.55% reduction compared to MAAC, while MAPPO achieves 0.1321 s, a 29.55% reduction compared to MAAC. These findings underscore the effectiveness of the proposed multi-modal architecture in optimizing proactive content caching strategies.

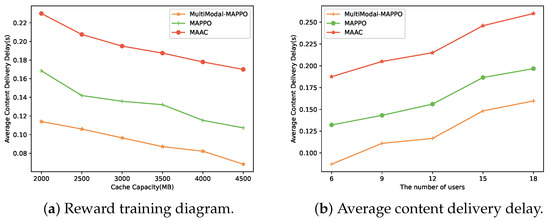

To analyze system adaptability under varying operational conditions, we conducted parametric studies on cache capacities and user loads. As visualized in Figure 6a, increasing cache sizes from 2000 MB to 4500 MB universally reduces delivery delays due to improved content availability. Notably, multi-modal-MAPPO maintains consistently superior performance, achieving sub-0.1 s latency across all configurations. At the minimum cache capacity of 2000 MB, it outperforms MAPPO by 0.053 s and MAAC by 0.116 s. The scalability of the proposed algorithm is further validated in Figure 6b, where multi-modal-MAPPO achieves the lowest delays under all tested user counts (up to 18 users), demonstrating 23.17% and 38.58% improvements over MAPPO and MAAC, respectively. These results confirm the algorithm’s robustness in dynamic environments. Real-time implementation feasibility is verified through Table 3, which shows the decision-making overhead of multi-modal-MAPPO (0.08654 s per decision) meets operational requirements for remote satellite caching systems.

Figure 6.

The effects of different parameters.

Table 3.

Average time overhead of decision making.

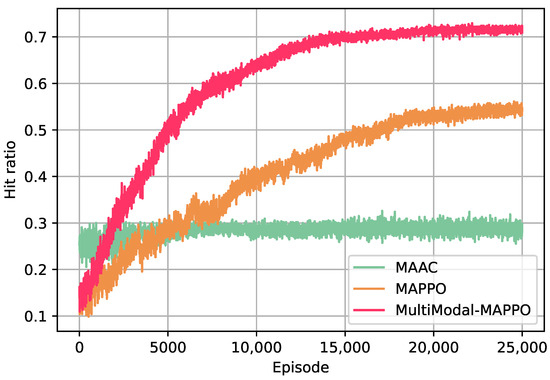

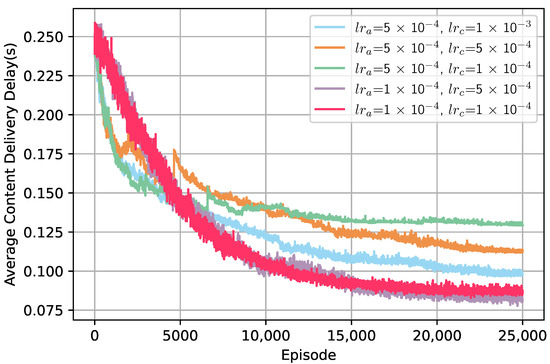

Proactive caching effectiveness is further evaluated using the push hit rates in Figure 7. Multi-modal-MAPPO achieves the highest hit rate of 0.7148, surpassing MAPPO (0.543) by 0.1718 and MAAC (0.29) by 0.4248. This substantial improvement highlights the algorithm’s enhanced ability to accurately predict user demands. The learning dynamics analysis in Figure 8 reveals critical insights into parameter optimization. While a high critic learning rate (e.g., ) accelerates the initial convergence, it risks suboptimal final performance. Conversely, dual low learning rates (, ) yield slower initial progress but achieve better ultimate delays. Notably, maintaining > improves the convergence efficiency, as can be seen in the , configuration. Inverting this ratio (e.g., , ) causes training instability and degraded performance. These findings provide valuable guidelines for balancing exploration–exploitation trade-offs in reinforcement learning-based caching systems.

Figure 7.

Hit ratio of the three algorithms.

Figure 8.

Different learning rate settings.

8. Conclusions

This paper addresses the integration of large-scale remote sensing constellations with communication satellite networks and proposes multi-modal-MAPPO, a multi-modal deep reinforcement learning-driven proactive remote sensing data push algorithm for collaborative decision-making over multi-modal heterogeneous data in large-scale dynamic network environments. The algorithm integrates satellite cache states, user cache states, and multi-modal data features into a Markov decision process (MDP) and introduces the MAPPO framework to satellite–terrestrial network push tasks. At the architectural level, it uses a dual-branch actor network to fuse the image and attribute modalities dynamically: a lightweight MobileNet-v3-small extracts semantic features from remote sensing images, an MLP encodes static attributes (e.g., payload type, geographical location), and an LSTM captures temporal patterns in user cache evolution. A dynamically weighted attention mechanism enhances semantic correlation modeling in complex scenarios by adaptively weighting each modality. To tackle the high-dimensional action space, a multi-dimensional discretized policy decomposition mechanism is proposed to reduce the computational complexity. Simulations demonstrate that, in large-scale satellite–terrestrial networks, multi-modal-MAPPO reduces the average content delivery delay by 53.55% relative to MAAC (single-modal MAPPO achieves a 29.55% reduction against the same baseline) and improves the push hit rate by 0.1718 and 0.4248 compared to MAPPO and MAAC, respectively. This provides a scalable and adaptive framework for real-time intelligent services in large-scale satellite–terrestrial integrated networks.

Author Contributions

Methodology, R.P.; Software, C.B.; Formal analysis, S.C.; Resources, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Pre-Research Project on Civil Aerospace Technologies (D030312), in part by the National Science Foundation of China under Grant 62001517 and Grant 62071202, in part by the “Double First-Class” Discipline Creation Project of Surveying Science and Technology under Grant CHXKYXBS03 and Grant GCCRC202306, and in part by the Fundamental Research Funds for the Universities of Henan Province under Grant NSFRF240202.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jia, Z.; Sheng, M.; Li, J.; Niyato, D.; Han, Z. LEO-satellite-assisted UAV: Joint trajectory and data collection for Internet of Remote Things in 6G aerial access networks. IEEE Internet Things J. 2021, 8, 9814–9826. [Google Scholar] [CrossRef]

- Ling, Q.; Chen, Y.; Feng, Z.; Pei, H.; Wang, C.; Yin, Z.; Qiu, Z. Monitoring Canopy Height in the Hainan Tropical Rainforest Using Machine Learning and Multi-Modal Data Fusion. Remote Sens. 2025, 17, 966. [Google Scholar] [CrossRef]

- Zhen, L.; Bashir, A.K.; Yu, K.; Al-Otaibi, Y.D.; Foh, C.H.; Xiao, P. Energy-efficient random access for LEO satellite-assisted 6G Internet of Remote Things. IEEE Internet Things J. 2021, 8, 142–149. [Google Scholar] [CrossRef]

- Chien, W.; Lai, C.; Hossain, M.; Muhammad, G. Heterogeneous space and terrestrial integrated networks for IoT: Architecture and challenges. IEEE Netw. 2019, 33, 15–21. [Google Scholar] [CrossRef]

- Chen, X.; Liu, Y.; Li, F.; Li, X.; Jia, X. Remote sensing image recommendation based on spatial-temporal embedding topic model. Comput. Geosci. 2021, 157, 104935. [Google Scholar] [CrossRef]

- Kodheli, O.; Lagunas, E.; Maturo, N.; Sharma, S.K.; Shankar, B.; Montoya, J.F.M.; Duncan, J.C.M.; Spano, D.; Chatzinotas, S.; Kisseleff, S.; et al. Satellite communications in the new space era: A survey and future challenges. IEEE Commun. Survey Tuts. 2021, 23, 70–109. [Google Scholar] [CrossRef]

- Su, Y.; Liu, Y.; Zhou, Y.; Yuan, J.; Cao, H.; Shi, J. Broadband LEO satellite communications: Architectures and key technologies. IEEE Wirel. Commun. 2019, 26, 55–61. [Google Scholar] [CrossRef]

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, J.; Ma, J.; Jia, X. SC-PNN: Saliency cascade convolutional neural network for pansharpening. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9697–9715. [Google Scholar] [CrossRef]

- Li, D.; Wang, M.; Jiang, J. China’s high-resolution optical remote sensing satellites and their mapping applications. Geo-Spat. Inf. Sci. 2021, 24, 85–94. [Google Scholar] [CrossRef]

- Tsigkanos, A.; Kranitis, N.; Theodorou, G.; Paschalis, A. A 3.3 Gbps CCSDS 123.0-B-1 multispectral & hyperspectral image compression hardware accelerator on a space-grade SRAM FPGA. IEEE Trans. Emerg. Top. Comput. 2018, 9, 90–103.

- Bai, L.; Han, R.; Liu, J.; Choi, J.; Zhang, W. Relay-aided random access in space-air-ground integrated networks. IEEE Wirel. Commun. 2020, 27, 37–43.

- Qu, L.; Xu, G.; Zeng, Z.; Zhang, N.; Zhang, Q. UAV-assisted RF/FSO relay system for space-air-ground integrated network: A performance analysis. IEEE Trans. Wirel. Commun. 2022, 21, 6211–6225.

- Li, Y.; Su, H.; Chen, J.; Wang, W.; Wang, Y.; Duan, C.; Chen, A. A Contrast-Enhanced Approach for Aerial Moving Target Detection Based on Distributed Satellites. Remote Sens. 2025, 17, 880.

- Yang, J.; Li, D.; Jiang, X.; Chen, S.; Hanzo, L. Enhancing the resilience of low earth orbit remote sensing satellite networks. IEEE Netw. 2020, 34, 304–311.

- Garg, N.; Sellathurai, M.; Ratnarajah, T. In-network caching for hybrid satellite-terrestrial networks using deep reinforcement learning. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 8797–8801.

- Singh, P.; Hazarika, B.; Singh, K.; Pan, C.; Huang, W.; Li, C. DRL-Based Federated Learning for Efficient Vehicular Caching Management. IEEE Internet Things J. 2024, 11, 34156–34171.

- Wang, F.; Zhu, X.; Cheng, X.; Zhang, Y.; Li, Y. MMKDGAT: Multi-modal Knowledge graph-aware Deep Graph Attention Network for remote sensing image recommendation. Expert Syst. Appl. 2024, 235, 121278.

- Chen, J.; Di, X.; Xu, R.; Luo, H.; Qi, H.; Zhan, P.; Jiang, Y. An efficient scheme for in-orbit remote sensing image data retrieval. Future Gener. Comput. Syst. 2024, 150, 103–114.

- Zhang, X.; Chen, D.; Liu, J. A space-time periodic task model for recommendation of remote sensing images. ISPRS Int. J. Geo-Inf. 2018, 7, 40.

- Zhu, L.; Wu, F.; Fu, K.; Hu, Y.; Wang, Y.; Tian, X.; Huang, K. An Active Service Recommendation Model for Multi-Source Remote Sensing Information Using Fusion of Attention and Multi-Perspective. Remote Sens. 2023, 15, 2564.

- Zhang, J.; Ma, W.; Zhang, E.; Xia, X. Time-Aware Dual LSTM Neural Network with Similarity Graph Learning for Remote Sensing Service Recommendation. Sensors 2024, 24, 1185.

- Wang, C.; Liu, L.; Jiang, C.; Wang, S.; Zhang, P.; Shen, S. Incorporating distributed DRL into storage resource optimization of space-air-ground integrated wireless communication network. IEEE J. Sel. Top. Signal Process. 2021, 16, 434–446.

- Qiu, C.; Yao, H.; Yu, F.R.; Xu, F.; Zhao, C. Deep Q-learning aided networking, caching, and computing resources allocation in software-defined satellite-terrestrial networks. IEEE Trans. Veh. Technol. 2019, 68, 5871–5883.

- Zhao, R.; Ran, Y.; Luo, J.; Chen, S. Towards coverage-aware cooperative video caching in LEO satellite networks. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 1893–1898.

- Yang, M.; Gao, D.; Foh, C.H.; Quan, W.; Leung, V.C. Multi-agent reinforcement learning-based joint caching and routing in heterogeneous networks. IEEE Trans. Cogn. Commun. Netw. 2024, 10, 1959–1974.

- Chen, S.; Yao, Z.; Jiang, X.; Yang, J.; Hanzo, L. Multi-agent deep reinforcement learning-based cooperative edge caching for ultra-dense next-generation networks. IEEE Trans. Commun. 2020, 69, 2441–2456.

- Wu, M.; Dai, S.; Hu, H.; Wang, Z. Collaborative Edge Caching in LEO Satellites Networks: A MAPPO Based Approach. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–6.

- Stephenson, T.P.; DeCleene, B.T.; Speckert, G.; Voorhees, H.L. BADD phase II: DDS information management architecture. In Digitization Battlefield II; SPIE: Bellingham, WA, USA, 1997; pp. 49–58.

- Yu, X. Towards intelligent spatial information dissemination based on user profile model. J. Tongji Univ. 2008, 13, 401–410.

- Chen, Z.; Gu, S.; Zhang, Q.; Zhang, N.; Xiang, W. Energy-Efficient Coded Content Placement for Satellite-Terrestrial Cooperative Caching Network. In Proceedings of the 2022 14th International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 1–3 November 2022; pp. 871–876.

- Gu, S.; Sun, X.; Yang, Z.; Huang, T.; Xiang, W.; Yu, K. Energy-aware coded caching strategy design with resource optimization for satellite-UAV-vehicle-integrated networks. IEEE Internet Things J. 2021, 9, 5799–5811.

- Ji, M.; Tulino, A.M.; Llorca, J.; Caire, G. Order-optimal rate of caching and coded multicasting with random demands. IEEE Trans. Inf. Theory 2017, 63, 3923–3949.

- Liang, J.; Chaudhry, A.U.; Erdogan, E.; Yanikomeroglu, H. Link Budget Analysis for Free-Space Optical Satellite Networks. In Proceedings of the 2022 IEEE 23rd International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Belfast, UK, 14–17 June 2022; pp. 471–476.

- Zhao, F.; Chen, W.; Liu, Z.; Li, J.; Wu, Q. Deep Reinforcement Learning Based Intelligent Reflecting Surface Optimization for TDD Multi-User MIMO Systems. IEEE Wirel. Commun. Lett. 2023, 12, 1951–1955.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Peng, R.; Chen, S.; Xue, C. Collaborative Content Caching Algorithm for Large-Scale ISTNs Based on MAPPO. IEEE Wirel. Commun. Lett. 2024, 13, 3069–3073.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Wu, Z.Z.; Wan, S.H.; Wang, X.F.; Tan, M.; Zou, L.; Li, X.L.; Chen, Y. A benchmark data set for aircraft type recognition from remote sensing images. Appl. Soft Comput. 2020, 89, 106132.

- Sun, X.; Lv, Y.; Wang, Z.; Fu, K. SCAN: Scattering characteristics analysis network for few-shot aircraft classification in high-resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Wu, M.; Guo, K.; Li, X.; Lin, Z.; Wu, Y.; Tsiftsis, T.A.; Song, H. Deep reinforcement learning-based energy efficiency optimization for RIS-aided integrated satellite-aerial-terrestrial relay Networks. IEEE Trans. Commun. 2024, 72, 4163–4178.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).