A Multi-Branch Attention Fusion Method for Semantic Segmentation of Remote Sensing Images

Abstract

1. Introduction

- (1)

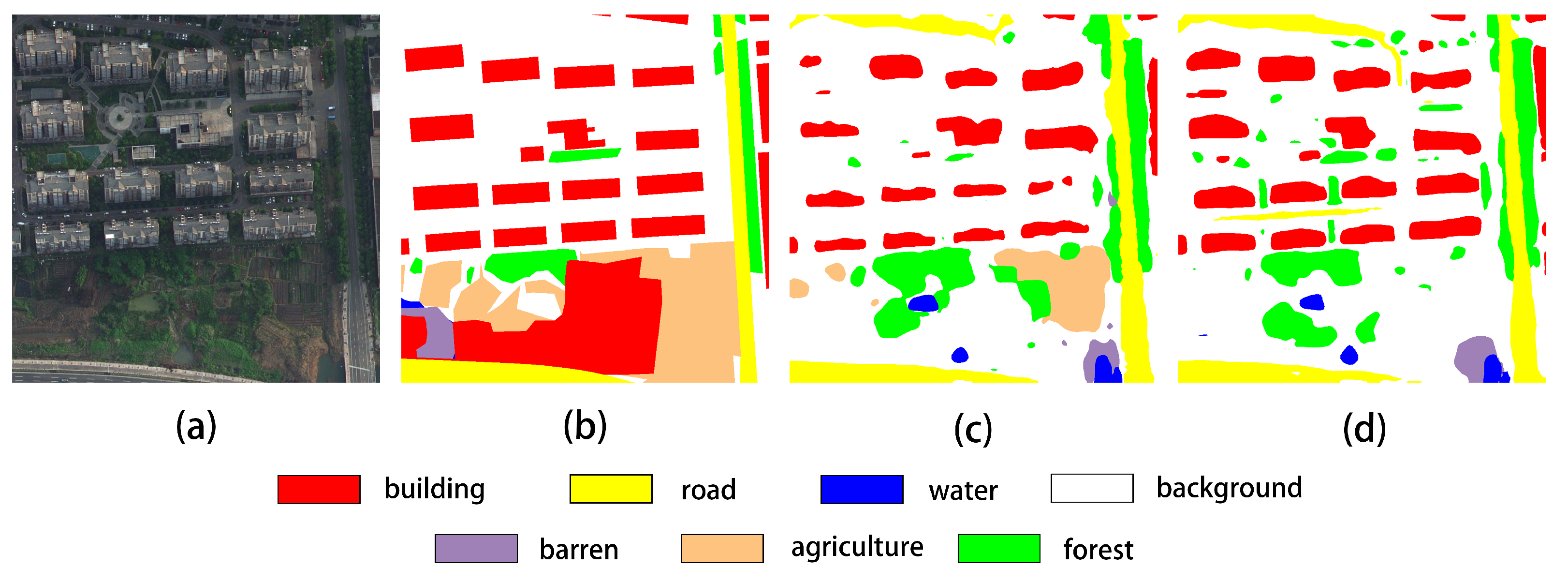

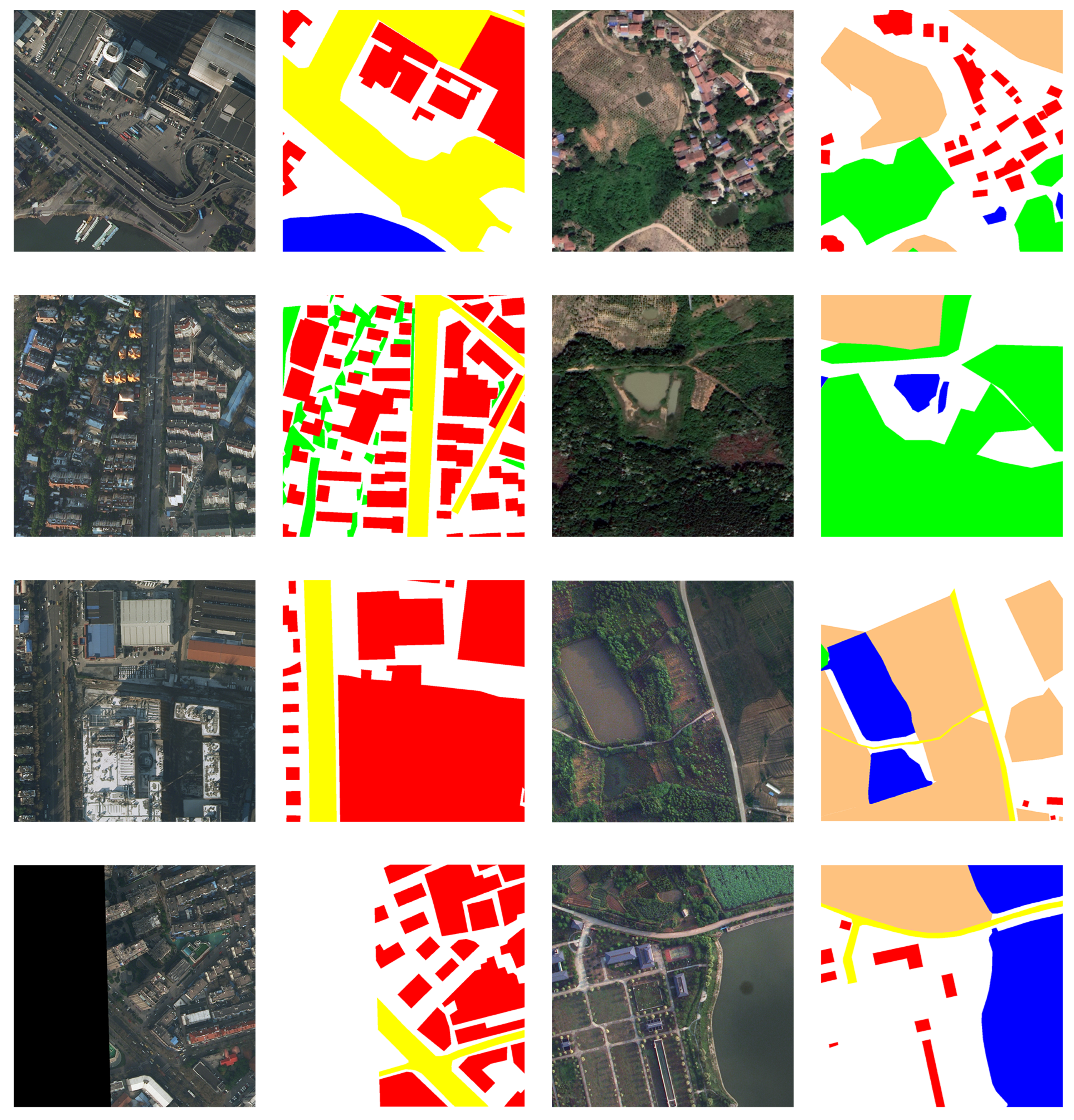

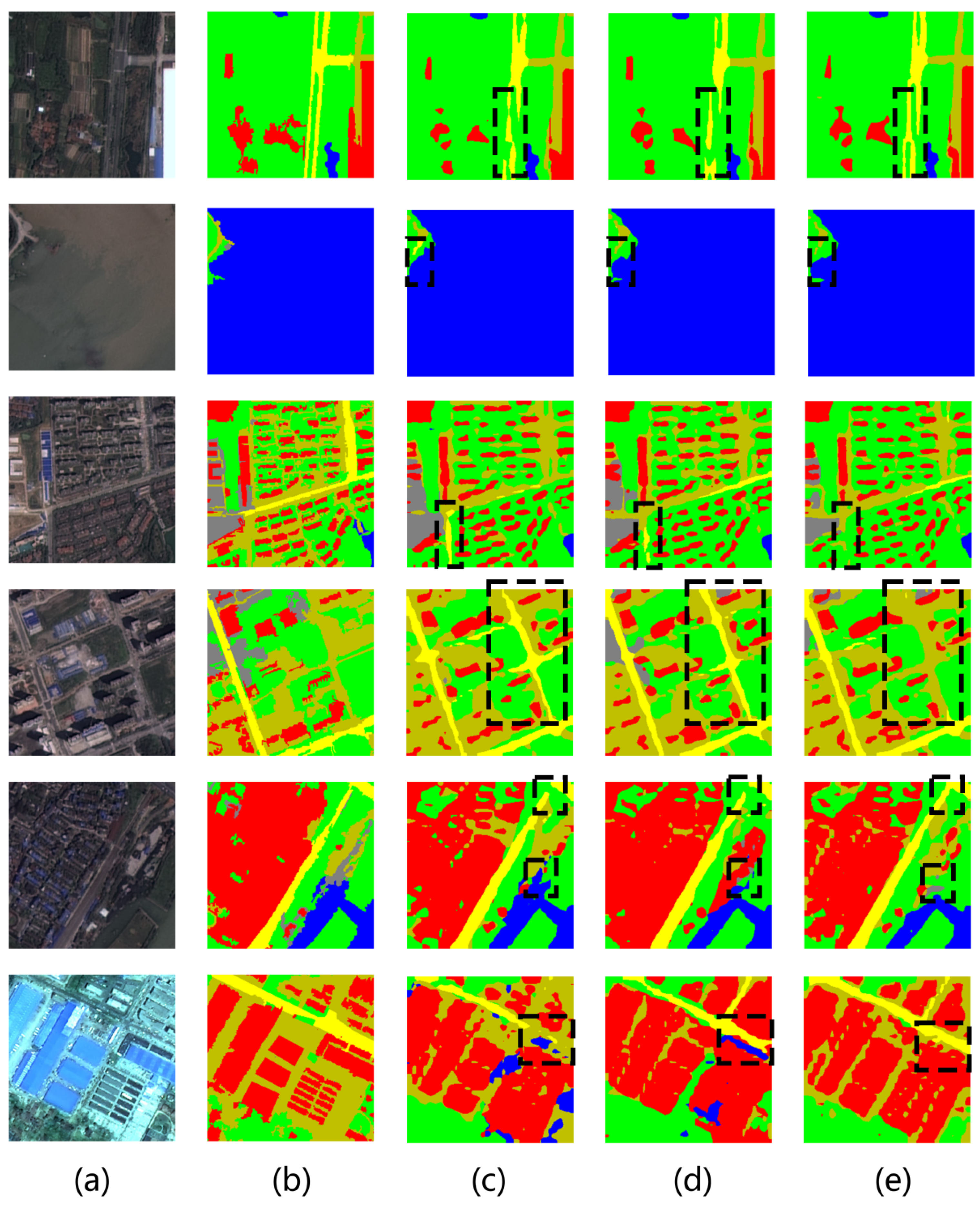

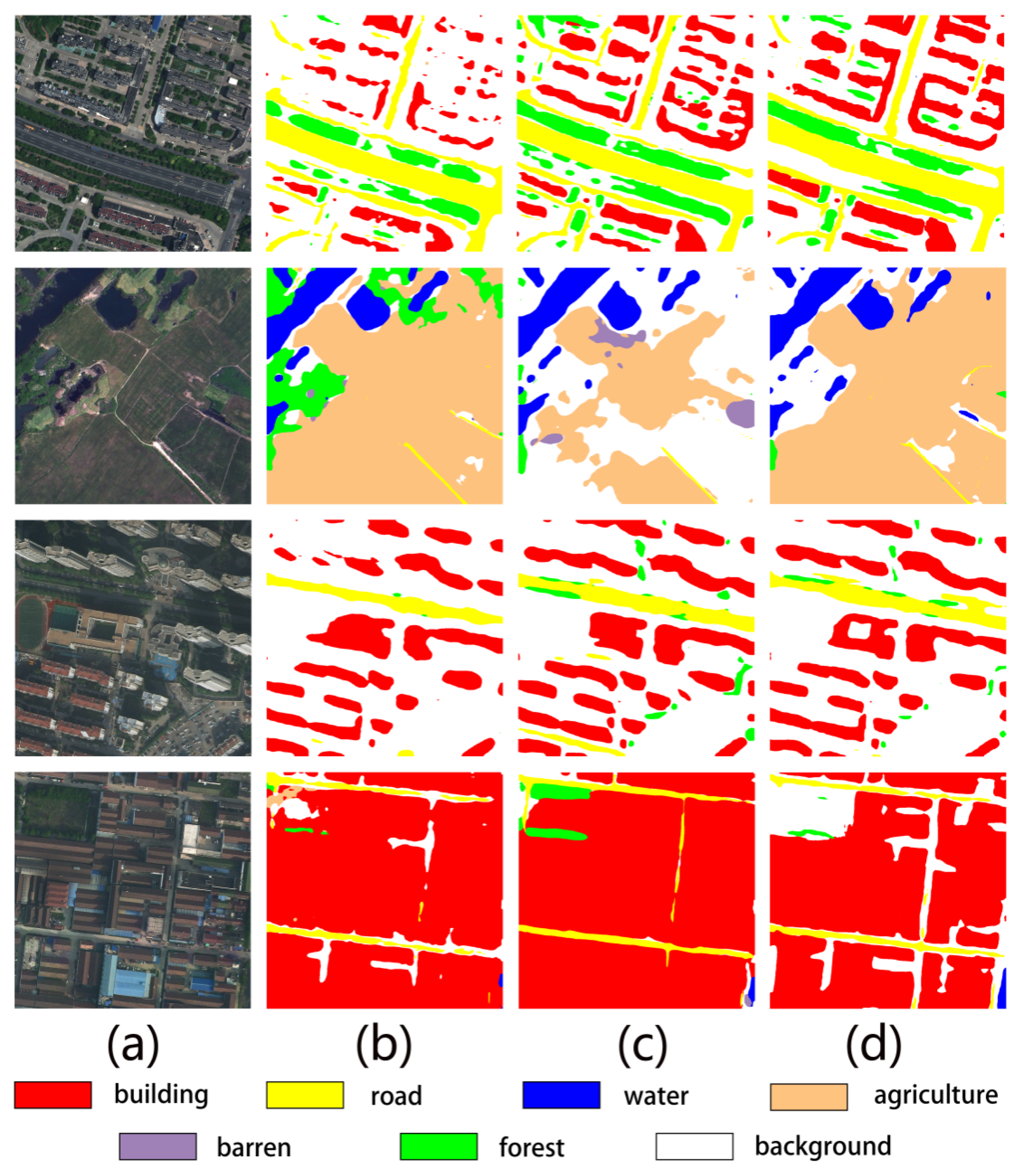

- We designed and proposed a multi-branch attention fusion method based on shallow and deep semantic information, specifically tailored for remote sensing data and exhibiting high generalization capability. Compared to the attention mechanisms employed in baseline methods, our approach demonstrates superior effectiveness while maintaining competitive inference speed. As illustrated in Figure 1, our method produces more intuitive results, with building regions exhibiting more precise boundaries and road regions showing enhanced connectivity.

- (2)

- We conducted comprehensive tests across multiple datasets, comparing the effects of applying our proposed attention mechanism to both natural image datasets and remote sensing datasets. The results reveal that this multi-branch attention mechanism exhibits superior performance on remote sensing imagery, achieving enhanced overall performance.

2. Related Work

2.1. Fully Convolutional Network (FCN)-Based Semantic Segmentation

2.2. PP-LiteSeg
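PP-LiteSeg's Flexible and Lightweight Decoder (FLD) fuses each upsampled deep feature map with the shallow feature map of the same resolution through the Unified Attention Fusion Module (UAFM). As described in the PP-LiteSeg paper (Peng et al., 2022), the spatial-attention variant builds a descriptor from the channel-wise mean and max of both inputs, maps it to a weight α with a convolution and a sigmoid, and outputs F_up · α + F_low · (1 − α). The NumPy sketch below illustrates only that formula. It assumes (C, H, W) inputs of equal shape, replaces the learned 3 × 3 convolution with a hypothetical 1 × 1 weight vector `w`, and the function name `uafm_spatial_fusion` is our own.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def uafm_spatial_fusion(f_up: np.ndarray, f_low: np.ndarray,
                        w: np.ndarray, b: float = 0.0) -> np.ndarray:
    """UAFM-style spatial attention fusion of two (C, H, W) feature maps.

    f_up:  upsampled deep (high-level) features
    f_low: shallow (low-level) features, same shape as f_up
    w:     4 weights of a hypothetical 1x1 conv standing in for the
           learned 3x3 conv of PP-LiteSeg
    """
    if f_up.shape != f_low.shape:
        raise ValueError("f_up and f_low must have the same shape")
    # Channel-wise mean and max of both inputs -> (4, H, W) descriptor
    desc = np.stack([
        f_up.mean(axis=0), f_up.max(axis=0),
        f_low.mean(axis=0), f_low.max(axis=0),
    ])
    # 1x1 conv over the 4 descriptor maps, then sigmoid -> alpha in (0, 1), shape (H, W)
    alpha = sigmoid(np.tensordot(w, desc, axes=1) + b)
    # alpha broadcasts over channels: per-pixel convex combination of the inputs
    return f_up * alpha + f_low * (1.0 - alpha)


rng = np.random.default_rng(0)
f_up = rng.standard_normal((8, 16, 16))
f_low = rng.standard_normal((8, 16, 16))
out = uafm_spatial_fusion(f_up, f_low, w=np.array([0.5, 0.5, -0.5, -0.5]))
print(out.shape)  # (8, 16, 16)
```

Because α lies in (0, 1), every output value is a per-pixel convex combination of the deep and shallow features, so the module learns where to trust each source rather than simply adding them.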

2.3. Multi-Branch Feature Fusion Techniques
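For illustration only, one generic way to build a multi-branch attention fusion is to compute a spatial weight and a channel weight in separate branches and blend them before fusing shallow and deep features. The NumPy sketch below assumes (C, H, W) inputs and uses 1 × 1 "convolutions" as plain weight vectors; the branch design, the blending factor `beta`, and all function names are hypothetical. It is not the MAFM proposed in this paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def spatial_weight(f_up, f_low, w_s):
    # Spatial branch: channel-wise mean/max of both inputs -> (4, H, W)
    desc = np.stack([f_up.mean(0), f_up.max(0), f_low.mean(0), f_low.max(0)])
    return sigmoid(np.tensordot(w_s, desc, axes=1))[None, :, :]  # (1, H, W)


def channel_weight(f_up, f_low, w_c):
    # Channel branch: global avg/max pooling of both inputs -> (C, 4)
    desc = np.stack([f_up.mean((1, 2)), f_up.max((1, 2)),
                     f_low.mean((1, 2)), f_low.max((1, 2))], axis=1)
    return sigmoid(desc @ w_c)[:, None, None]  # (C, 1, 1)


def multi_branch_fusion(f_up, f_low, w_s, w_c, beta=0.5):
    """Hypothetical two-branch fusion: blend both weights, then fuse as in UAFM."""
    alpha = (beta * spatial_weight(f_up, f_low, w_s)
             + (1.0 - beta) * channel_weight(f_up, f_low, w_c))  # (C, H, W)
    return f_up * alpha + f_low * (1.0 - alpha)


rng = np.random.default_rng(0)
deep, shallow = rng.standard_normal((2, 8, 16, 16))
fused = multi_branch_fusion(deep, shallow, w_s=np.ones(4), w_c=np.ones(4))
print(fused.shape)  # (8, 16, 16)
```

Setting `beta=1.0` reduces the sketch to a purely spatial attention weight, which makes the extra channel branch easy to ablate.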

3. Methods

4. Experiments

4.1. Datasets

4.1.1. Cityscapes

- Training set: 2975 images (59.5%)

- Validation set: 500 images (10%)

- Test set: 1525 images (30.5%)

- Evaluation categories: 19 urban scene classes

- Resolution: 2048 × 1024 pixels
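The split percentages above follow from the 5000 finely annotated Cityscapes images. A quick check (the helper name `split_fractions` is our own):

```python
def split_fractions(counts: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the total number of images, in percent."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


cityscapes = {"train": 2975, "val": 500, "test": 1525}
print(sum(cityscapes.values()))     # 5000
print(split_fractions(cityscapes))  # {'train': 59.5, 'val': 10.0, 'test': 30.5}
```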

4.1.2. LoveDA

- Urban subset:
  - Training images: 1156;
  - Validation images: 677;
  - Total: 1833 samples;
  - Spatial resolution: 0.3 m;
  - Spectral channels: RGB.

- Rural subset:
  - Training images: 1366;
  - Validation images: 992;
  - Total: 2358 samples;
  - Spatial resolution: 0.3 m;
  - Spectral channels: RGB.

4.1.3. WHDLD

- Source: Wuhan metropolitan area, China;

- Total samples: 4,940 RGB images;

- Image dimensions: 256 × 256 pixels;

- Spatial resolution: 2 m;

- Land cover classes: 6 categories (buildings, roads, pavements, vegetation, bare soil, water).

- Compared with LoveDA, WHDLD has:
  - Smaller images (256 × 256 pixels, versus 1024 × 1024 pixels in LoveDA);
  - Lower spatial resolution (2 m versus 0.3 m);
  - Higher class imbalance.
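To make "higher class imbalance" measurable, one can count the pixels of each class across the label masks and compare the most and least frequent classes. The NumPy sketch below assumes masks are non-negative integer arrays with class indices 0 to `num_classes` − 1 (larger values, such as an ignore index, are dropped); the function names and the max/min frequency ratio as the imbalance measure are our own choices, not necessarily what the authors used.

```python
import numpy as np


def class_pixel_frequencies(masks, num_classes):
    """Fraction of labelled pixels that belong to each class, over all masks."""
    counts = np.zeros(num_classes, dtype=np.int64)
    for m in masks:
        # bincount grows past num_classes if an ignore label is present; slice it off
        counts += np.bincount(m.ravel(), minlength=num_classes)[:num_classes]
    return counts / counts.sum()


def imbalance_ratio(freq):
    """Share of the most frequent class divided by that of the least frequent present class."""
    present = freq[freq > 0]
    return present.max() / present.min()


toy_masks = [np.array([[0, 0, 0, 1],
                       [0, 0, 2, 1]])]
freq = class_pixel_frequencies(toy_masks, num_classes=3)  # 5/8, 2/8, 1/8 of the pixels
print(imbalance_ratio(freq))  # 5.0
```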

4.2. Data Augmentation

4.3. Evaluation Metrics
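The result tables report per-class IoU and mIoU. Under the standard definitions, IoU_c = TP_c / (TP_c + FP_c + FN_c) for each class c, and mIoU is the unweighted mean of IoU_c over the classes. A minimal NumPy sketch that computes both from a confusion matrix is shown below; it assumes the common practice of accumulating one confusion matrix over the whole evaluation set, and the ignore index 255 and the function names are assumptions.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes, ignore_index=255):
    """Rows = ground-truth class, columns = predicted class."""
    valid = target != ignore_index
    idx = num_classes * target[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def iou_per_class(cm):
    tp = np.diag(cm).astype(np.float64)
    fp = cm.sum(axis=0) - tp  # predicted as class c, but labelled otherwise
    fn = cm.sum(axis=1) - tp  # labelled as class c, but predicted otherwise
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)  # NaN for classes never seen


def mean_iou(cm):
    return float(np.nanmean(iou_per_class(cm)))


target = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
cm = confusion_matrix(pred, target, num_classes=3)
print(cm.tolist())             # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(round(mean_iou(cm), 4))  # 0.5
```

In this toy example the per-class IoUs are 1/3, 2/3 and 1/2, so the mIoU is 0.5.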

4.4. Experiments on Non-Remote Sensing Datasets

Application Experiment on Cityscapes Dataset

4.5. Experiments on Remote Sensing Datasets

4.5.1. Performance Comparison on WHDLD Dataset

4.5.2. Performance Comparison on LoveDA Dataset

4.5.3. Comparative Experiments with Other Networks

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BA | Boundary-Aware |
| RSIR | Remote Sensing Image Retrieval |
| HBC | Hybrid Basic Convolution |
| FCN | Fully Convolutional Networks |
| SCFSE | Spatial-Channel Fusion Squeeze-and-Excitation |
| FLD | Flexible and Lightweight Decoder |
| SPPM | Simple Pyramid Pooling Module |
| UAFM | Unified Attention Fusion Module |
| MAFM | Multi-Branch Attention Fusion Mechanism |
| mIoU | Mean Intersection over Union |

References

1. Zhu, L.; Suomalainen, J.; Liu, J.; Hyyppä, J.; Kaartinen, H.; Haggren, H. A Review: Remote Sensing Sensors. In Multi-Purposeful Application of Geospatial Data; Springer: Cham, Switzerland, 2018; Volume 19, pp. 1–19.

2. Verstraete, M.M.; Pinty, B.; Myneni, R.B. Potential and Limitations of Information Extraction on the Terrestrial Biosphere from Satellite Remote Sensing. Remote Sens. Environ. 1996, 58, 201–214.

3. Salamí, E.; Barrado, C.; Pastor, E. UAV Flight Experiments Applied to the Remote Sensing of Vegetated Areas. Remote Sens. 2014, 6, 11051–11081.

4. Jin, W.; Ge, H.L.; Du, H.Q.; Xu, X.J. A Review on Unmanned Aerial Vehicle Remote Sensing and Its Application. Remote Sens. Inf. 2009, 1, 88–92.

5. Feroz, S.; Abu Dabous, S. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

6. Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV Remote Sensing Applications in Marine Monitoring: Knowledge Visualization and Review. Sci. Total Environ. 2022, 838, 155939.

7. Holmgren, P.; Thuresson, T. Satellite Remote Sensing for Forestry Planning—A Review. Scand. J. For. Res. 1998, 13, 90–110.

8. Feng, Q.; Liu, J.; Gong, J. UAV Remote Sensing for Urban Vegetation Mapping Using Random Forest and Texture Analysis. Remote Sens. 2015, 7, 1074–1094.

9. Wang, X.; Zhang, C.; Qiang, Z.; Xu, W.; Fan, J. A New Forest Growing Stock Volume Estimation Model Based on AdaBoost and Random Forest Model. Forests 2024, 15, 260.

10. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.; Zhang, L.; Xu, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

11. Soille, P.; Pesaresi, M. Advances in Mathematical Morphology Applied to Geoscience and Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2042–2055.

12. Richards, J.A. Remote Sensing Digital Image Analysis, 6th ed.; Springer: Berlin/Heidelberg, Germany, 2022.

13. Atkinson, P.M.; Tatnall, A.R.L. Introduction: Neural Networks in Remote Sensing. Int. J. Remote Sens. 1997, 18, 699–709.

14. Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of Machine-Learning Classification in Remote Sensing: An Applied Review. Int. J. Remote Sens. 2018, 39, 2784–2817.

15. Lin, H.; Tse, R.; Tang, S.K.; Qiang, Z.P.; Pau, G. The Positive Effect of Attention Module in Few-Shot Learning for Plant Disease Recognition. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19–21 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 114–120.

16. Lin, H.; Chen, Z.; Qiang, Z.; Tang, S.-K.; Liu, L.; Pau, G. Automated Counting of Tobacco Plants Using Multispectral UAV Data. Agronomy 2023, 13, 2861.

17. Xu, Z.; Zhang, W.; Zhang, T.; Li, J. HRCNet: High-Resolution Context Extraction Network for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2020, 13, 71.

18. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A Deep Learning Framework for Semantic Segmentation of Remotely Sensed Data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

19. Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for Small Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611215.

20. Liu, R.; Mi, L.; Chen, Z. AFNet: Adaptive Fusion Network for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2020, 59, 7871–7886.

21. Ding, L.; Tang, H.; Bruzzone, L. LANet: Local Attention Embedding to Improve the Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 426–435.

22. Zhang, J.; Qiang, Z.; Lin, H.; Chen, Z.; Li, K.; Zhang, S. Research on Tobacco Field Semantic Segmentation Method Based on Multispectral Unmanned Aerial Vehicle Data and Improved PP-LiteSeg Model. Agronomy 2024, 14, 1502.

23. Lin, H.; Qiang, Z.; Tse, R.; Tang, S.-K.; Pau, G. A Few-Shot Learning Method for Tobacco Abnormality Identification. Front. Plant Sci. 2024, 15, 1333236.

24. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

25. Chen, G.; Tan, X.; Guo, B.; Zhu, K.; Liao, P.; Wang, T.; Wang, Q.; Zhang, X. SDFCNv2: An Improved FCN Framework for Remote Sensing Images Semantic Segmentation. Remote Sens. 2021, 13, 4902.

26. Mou, L.; Zhu, X.X. RiFCN: Recurrent Network in Fully Convolutional Network for Semantic Segmentation of High Resolution Remote Sensing Images. arXiv 2018, arXiv:1805.02091.

27. Peng, J.; Liu, Y.; Tang, S.; Hao, Y.; Chu, L.; Chen, G.; Wu, Z.; Chen, Z.; Yu, Z.; Du, Y.; et al. PP-LiteSeg: A Superior Real-Time Semantic Segmentation Model. arXiv 2022, arXiv:2204.02681.

28. Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J. Rethinking BiSeNet for Real-Time Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9716–9725.

29. Fan, C.M.; Liu, T.J.; Liu, K.H. Compound Multi-Branch Feature Fusion for Real Image Restoration. arXiv 2022, arXiv:2206.02748.

30. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

31. Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation. arXiv 2021, arXiv:2110.08733.

32. Shao, Z.; Yang, K.; Zhou, W. Performance Evaluation of Single-Label and Multi-Label Remote Sensing Image Retrieval Using a Dense Labeling Dataset. Remote Sens. 2018, 10, 964.

33. Shao, Z.; Zhou, W.; Deng, X.; Zhang, M.; Cheng, Q. Multilabel Remote Sensing Image Retrieval Based on Fully Convolutional Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 318–328.

34. Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-Resolution Representations for Labeling Pixels and Regions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

35. Zhu, W.; Jia, Y. High-Resolution Remote Sensing Image Land Cover Classification Based on EAR-HRNetV2. J. Phys. Conf. Ser. 2023, 2593, 012002.

36. Yao, M.; Zhang, Y.; Liu, G.; Pang, D. SSNet: A Novel Transformer and CNN Hybrid Network for Remote Sensing Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 3023–3037.

| Model | Encoder | Channels in Decoder |
|---|---|---|
| PP-LiteSeg-T | STDC1 | 32, 64, 128 |
| PP-LiteSeg-B | STDC2 | 64, 96, 128 |

| Model | Input Size | Val mIoU (%) | Test mIoU (%) |
|---|---|---|---|
| MobileNet V3-Large | - | - | 72.6 |
| STDC1-Seg50 | 512 × 1024 | 72.2 | 71.9 |
| LEDNet | 512 × 1024 | - | 70.6 |
| pp_liteseg_stdc1_V01 | 512 × 1024 | 75.84 | 73.37 |
| pp_liteseg_stdc1_Spa | 512 × 1024 | 73.10 | 72.00 |
| pp_liteseg_stdc2_V02 | 1024 × 1024 | 78.80 | 77.21 |
| pp_liteseg_stdc2_Spa | 1024 × 1024 | 78.20 | 77.50 |

| Model | Build | Road | Pavement | Vegetation | Bare Soil | Water | Param (M) | Val mIoU (%) | Test mIoU (%) |
|---|---|---|---|---|---|---|---|---|---|
| U-net | 55.32 | 43.14 | 28.16 | 84.68 | 34.85 | 83.19 | - | - | 54.89 |
| FactSeg | 55.31 | 48.35 | 29.46 | 85.27 | 34.21 | 84.43 | - | - | 56.17 |
| HRNetV2 [34] | 56.11 | 52.41 | 31.74 | 85.65 | 31.58 | 86.63 | - | - | 57.35 |
| EAR-HRNet [35] | 58.56 | 55.42 | 32.96 | 87.12 | 39.06 | 88.56 | - | - | 60.28 |
| SSNet [36] | 56.00 | 59.76 | 42.64 | 82.03 | 37.28 | 93.90 | - | 61.93 | 60.63 |
| pp-liteseg_stdc2_V01 | 55.98 | 60.25 | 40.70 | 79.05 | 37.37 | 93.71 | 15.0 | 62.63 | 61.17 |
| pp-liteseg_stdc2_V02 | 56.59 | 60.45 | 41.08 | 79.02 | 37.83 | 93.79 | 15.0 | 62.54 | 61.45 |
| pp-liteseg_stdc2_Spa | 55.09 | 60.30 | 40.75 | 78.92 | 37.62 | 93.55 | 12.38 | 62.51 | 61.03 |
| pp-liteseg_stdc1_V01 | 55.76 | 60.48 | 39.97 | 79.04 | 37.66 | 93.74 | 10.41 | 62.57 | 61.10 |
| pp-liteseg_stdc1_V02 | 55.52 | 59.87 | 40.31 | 78.69 | 36.58 | 93.79 | 10.41 | 62.34 | 60.77 |
| pp-liteseg_stdc1_Spa | 54.84 | 58.04 | 40.73 | 78.61 | 36.15 | 93.49 | 8.15 | 61.96 | 60.31 |
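As a worked check of how mIoU relates to the per-class columns, the Test mIoU reported for U-net on WHDLD is the unweighted mean of its six per-class IoU values:

```python
# Per-class IoU (%) of U-net on WHDLD, copied from the table above
unet_iou = {"Build": 55.32, "Road": 43.14, "Pavement": 28.16,
            "Vegetation": 84.68, "Bare Soil": 34.85, "Water": 83.19}

miou = sum(unet_iou.values()) / len(unet_iou)  # 329.34 / 6
print(f"{miou:.2f}")  # 54.89
```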

| Model | Bg | Build | Road | Water | Barren | Forest | Agri | Param (M) | Val mIoU (%) | Test mIoU (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| pp-liteseg_stdc1_V01 | 43.06 | 53.45 | 55.56 | 78.20 | 17.83 | 45.83 | 61.19 | 10.41 | 46.69 | 50.73 |
| pp-liteseg_stdc1_V02 | 43.63 | 55.52 | 53.69 | 78.11 | 17.06 | 43.04 | 58.80 | 10.41 | 45.64 | 49.98 |
| pp-liteseg_stdc1_Spa | 44.19 | 54.75 | 54.57 | 78.37 | 12.10 | 45.17 | 62.29 | 8.15 | 45.53 | 50.21 |
| pp-liteseg_stdc2_V01 | 41.72 | 54.05 | 51.52 | 77.62 | 16.91 | 44.62 | 58.16 | 15.0 | 51.63 | 49.23 |
| pp-liteseg_stdc2_V02 | 44.14 | 55.30 | 55.54 | 79.01 | 14.64 | 45.38 | 59.02 | 15.0 | 51.18 | 50.43 |
| pp-liteseg_stdc2_Spa | 43.79 | 51.30 | 54.24 | 77.29 | 15.88 | 45.19 | 61.53 | 12.38 | 50.26 | 49.89 |

| Model | Bg | Build | Road | Water | Barren | Forest | Agri | Param (M) | Val mIoU (%) |
|---|---|---|---|---|---|---|---|---|---|
| RS3Mamba | 39.72 | 58.75 | 57.92 | 61.00 | 37.24 | 39.67 | 33.98 | 43.32 | 46.90 |
| TransUNet | 35.13 | 58.90 | 57.57 | 67.61 | 25.93 | 37.01 | 20.85 | 105.32 | 43.29 |
| ABCNet | 35.15 | 46.37 | 48.61 | 44.31 | 28.43 | 37.58 | 11.10 | 13.67 | 35.94 |
| UNetformer | 37.61 | 52.78 | 51.89 | 63.47 | 39.84 | 34.23 | 11.44 | 11.69 | 41.61 |
| pp-liteseg_stdc2_V01 | 40.03 | 63.04 | 61.90 | 67.62 | 26.35 | 43.26 | 41.53 | 15.0 | 49.12 |
| pp-liteseg_stdc2_V02 | 41.13 | 62.32 | 59.29 | 67.81 | 28.41 | 45.45 | 44.50 | 15.0 | 49.84 |
| pp-liteseg_stdc2_Spa | 41.98 | 61.62 | 60.18 | 68.17 | 24.71 | 43.12 | 44.61 | 12.38 | 49.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, K.; Qiang, Z.; Lin, H.; Wang, X. A Multi-Branch Attention Fusion Method for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2025, 17, 1898. https://doi.org/10.3390/rs17111898


Chicago/Turabian Style: Li, Kaibo, Zhenping Qiang, Hong Lin, and Xiaorui Wang. 2025. "A Multi-Branch Attention Fusion Method for Semantic Segmentation of Remote Sensing Images" Remote Sensing 17, no. 11: 1898. https://doi.org/10.3390/rs17111898
