Terrace Extraction Method Based on Remote Sensing and a Novel Deep Learning Framework

Abstract

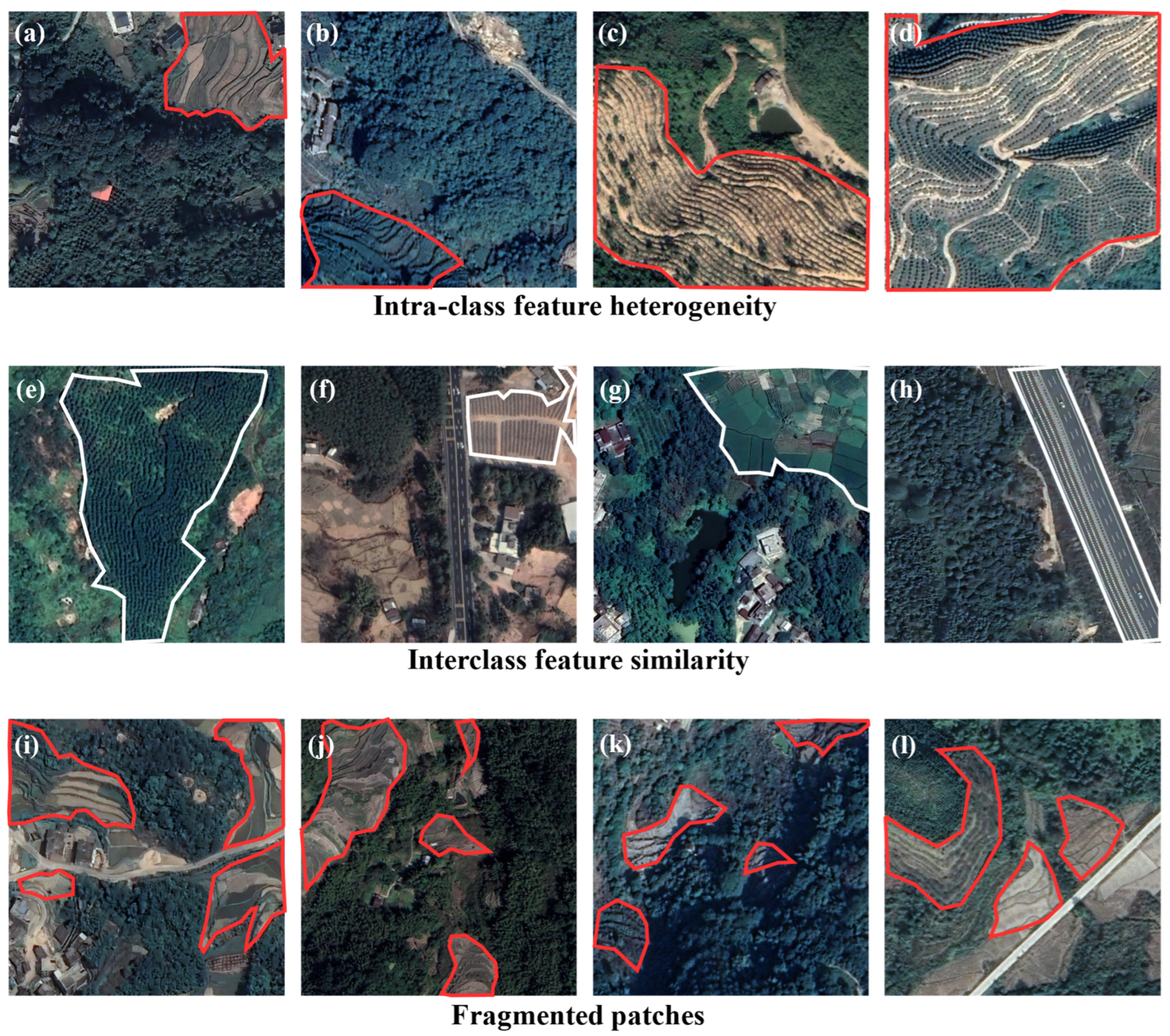

1. Introduction

2. Materials and Methods

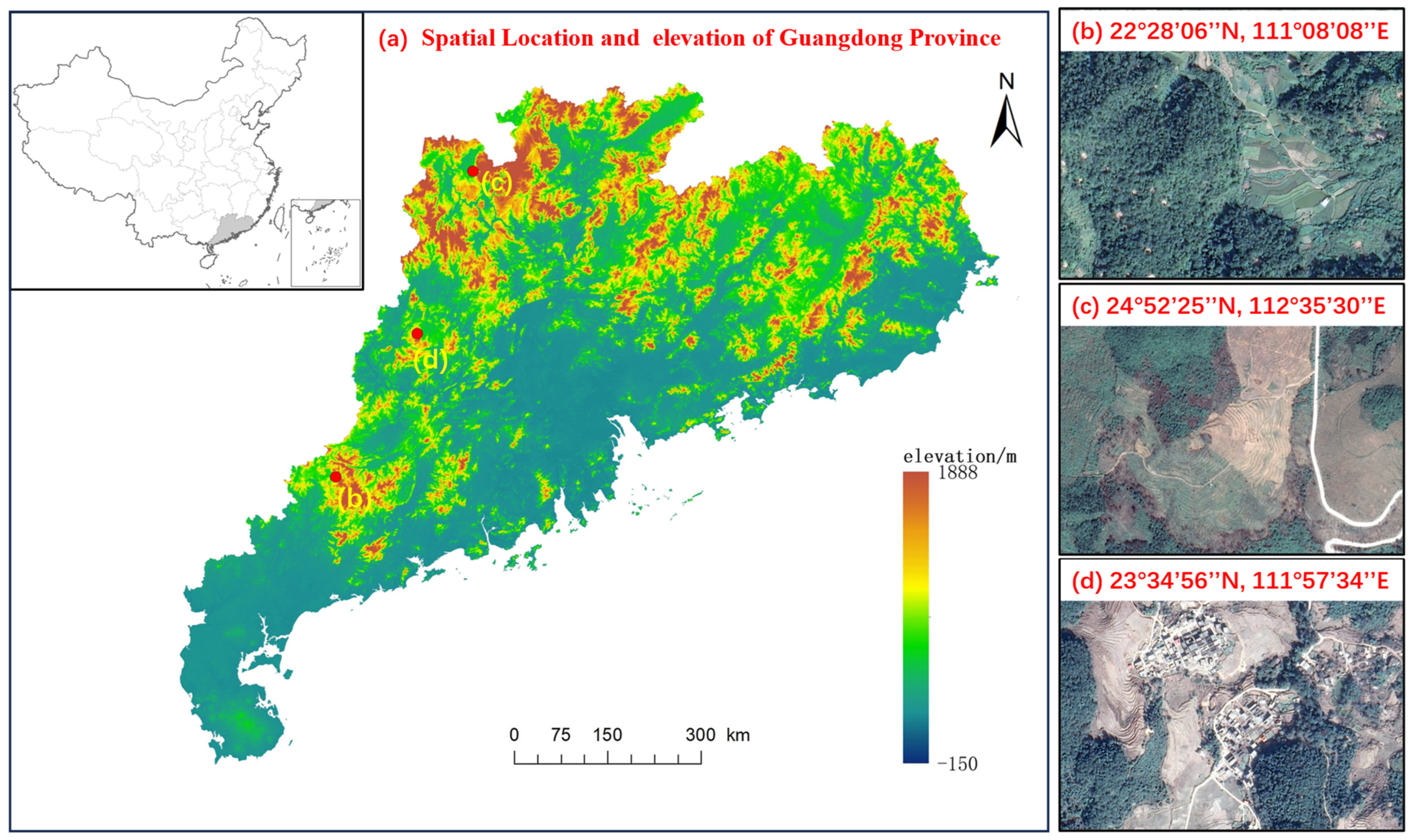

2.1. Study Area

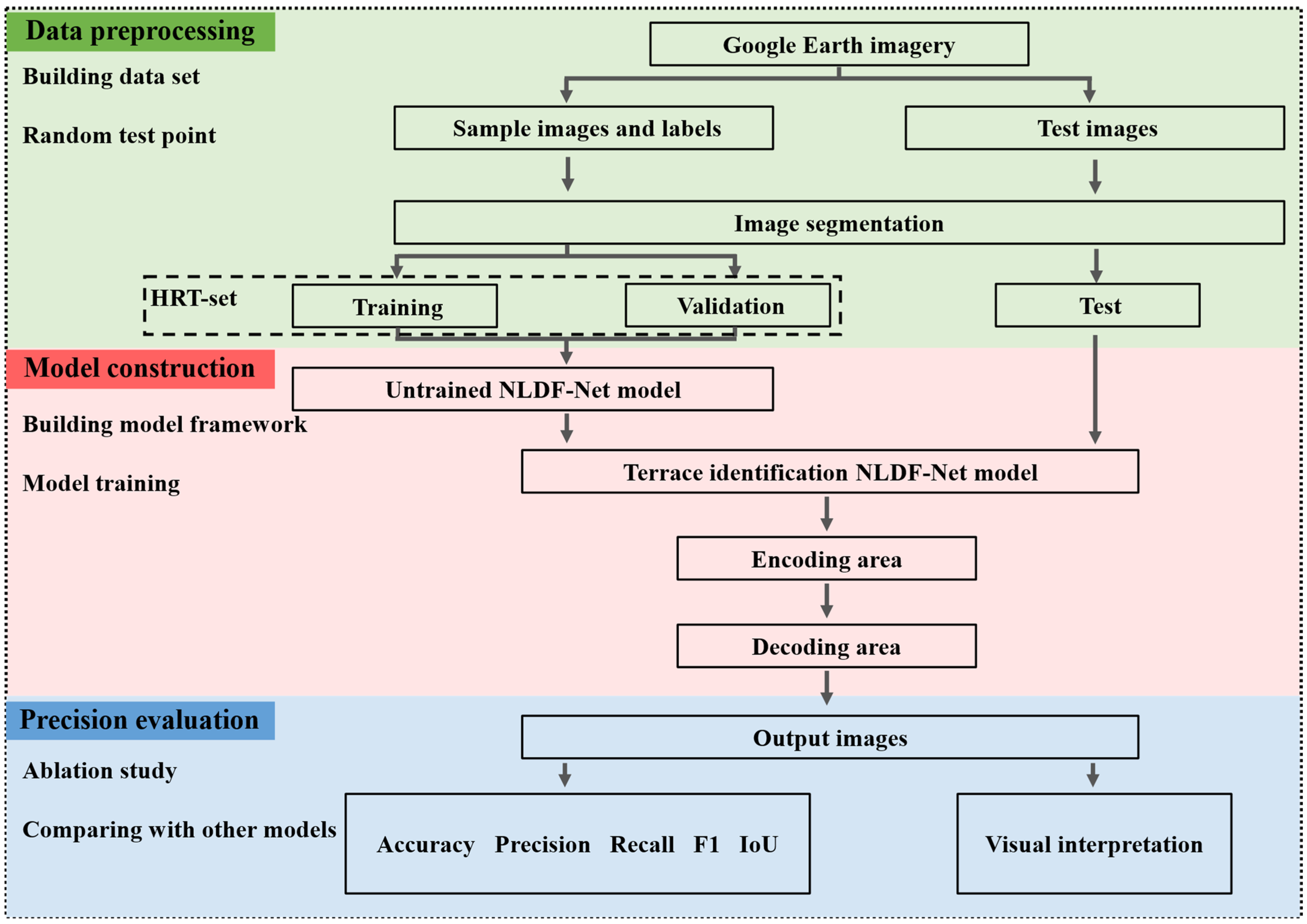

2.2. Technical Route

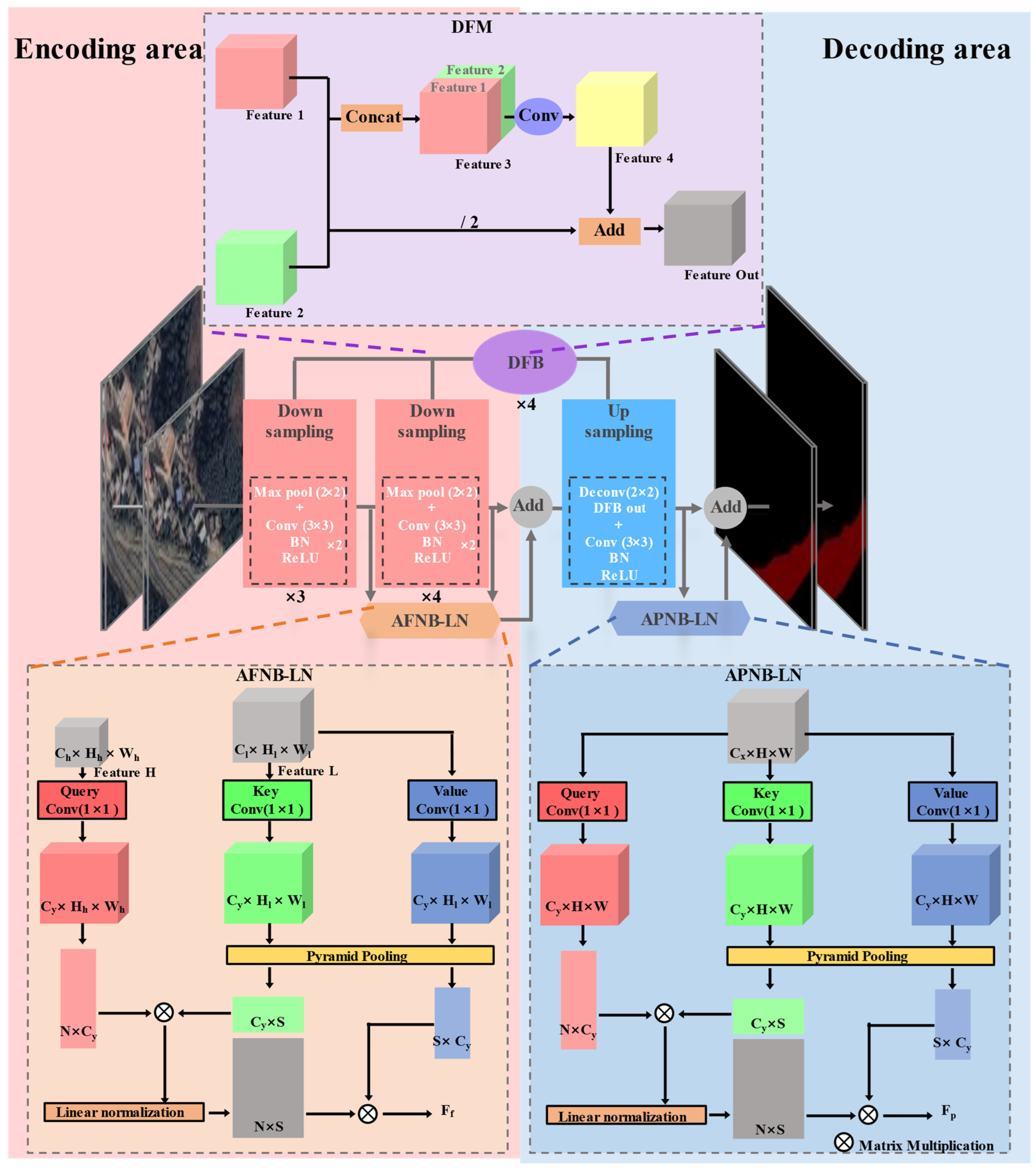

2.3. NLDF-Net Construction

2.3.1. Downsampling

2.3.2. Upsampling

2.3.3. ANB-LN

2.3.4. DFM

2.4. Experimental Methods

2.4.1. Comparisons with Different Module Combinations

2.4.2. Comparisons with Advanced Deep Learning Models

- U-Net: The U-shaped structure comprises two parts: encoding and decoding. Each layer of the model has many feature dimensions, enabling it to use diverse and comprehensive features. In addition, feature maps from different levels of the encoding stage are fused into the decoding stage by concatenation (Concat); therefore, accurate prediction results can be obtained with fewer training samples [26]. Although U-Net originated in medical image segmentation, it is widely used in remote sensing because of its excellent performance [51,52].

- IEU-Net: This model was designed for extracting terraces in the Loess Plateau region of China and is built on the U-Net framework. Specifically, a dropout layer with a probability of 0.5 is added after the fourth and fifth sets of convolutional operations [53]. In other words, during each training iteration, each neuron in these layers is dropped at random with a probability of 0.5 (about 50% of the neurons on average), which effectively prevents overfitting. Additionally, batch normalization (BN) is applied after each convolutional layer [54]; as previously mentioned, BN increases the training speed of the model. In a previous study, this model achieved high accuracy in extracting terraces from the Loess Plateau region of China [41].

- Pyramid Scene Parsing Network (PSP-Net): By introducing a pyramid pooling module, the model aggregates the context of different regions so that it can use global information to improve its accuracy [28]. In addition, an auxiliary loss function (AR) is introduced; that is, two loss functions are backpropagated together with different weights to jointly optimize the parameters, which helps the model converge rapidly. This model yielded excellent results in the ImageNet scene-parsing challenge.

- D-LinkNet: LinkNet is used as the backbone network, and additional dilated convolution layers are added to the central part of the network. These layers make full use of the information in the deepest feature map of the encoder while expanding the receptive field through convolutions with different dilation rates [55]. This method performed well in the DeepGlobe road extraction challenge based on remote sensing images and has therefore been widely used to extract other ground objects [56].
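
Three of the mechanisms mentioned in the model descriptions above (dropout with a probability of 0.5, a weighted auxiliary loss, and the growth of the receptive field under dilated convolution) can be illustrated with a minimal sketch in plain Python. This is not the implementation used by any of the compared models: the function names, the auxiliary weight of 0.4, and the dilation rates (1, 2, 4, 8) in the usage note are assumptions chosen for illustration only.

```python
import random


def inverted_dropout(activations, p_drop=0.5, rng=None):
    """Zero each activation with probability p_drop and rescale the rest.

    Survivors are divided by (1 - p_drop) so that the expected value of
    each activation is unchanged (the common "inverted dropout" form).
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if rng is None:
        rng = random.Random(0)  # fixed seed, only to make the sketch repeatable
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def joint_loss(main_loss, aux_loss, aux_weight=0.4):
    """Weighted sum of a main loss and an auxiliary loss.

    aux_weight=0.4 is an assumed value; the text above only states that
    the two losses are combined with different weights.
    """
    return main_loss + aux_weight * aux_loss


def receptive_field(kernel_size, dilations):
    """Receptive field (in pixels, along one axis) of stacked stride-1
    convolutions, one layer per entry in `dilations`."""
    rf = 1
    for d in dilations:
        # A k x k kernel with dilation d spans (k - 1) * d + 1 pixels.
        rf += (kernel_size - 1) * d
    return rf
```

Under these assumptions, `receptive_field(3, [1, 2, 4, 8])` evaluates to 31, whereas four undilated 3 × 3 layers, `receptive_field(3, [1, 1, 1, 1])`, reach only 9, which shows why dilation enlarges the context seen by the deepest feature map without adding layers.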

2.4.3. Precision Evaluation

2.4.4. Model Training Details

3. Results

3.1. HRT-Set Result

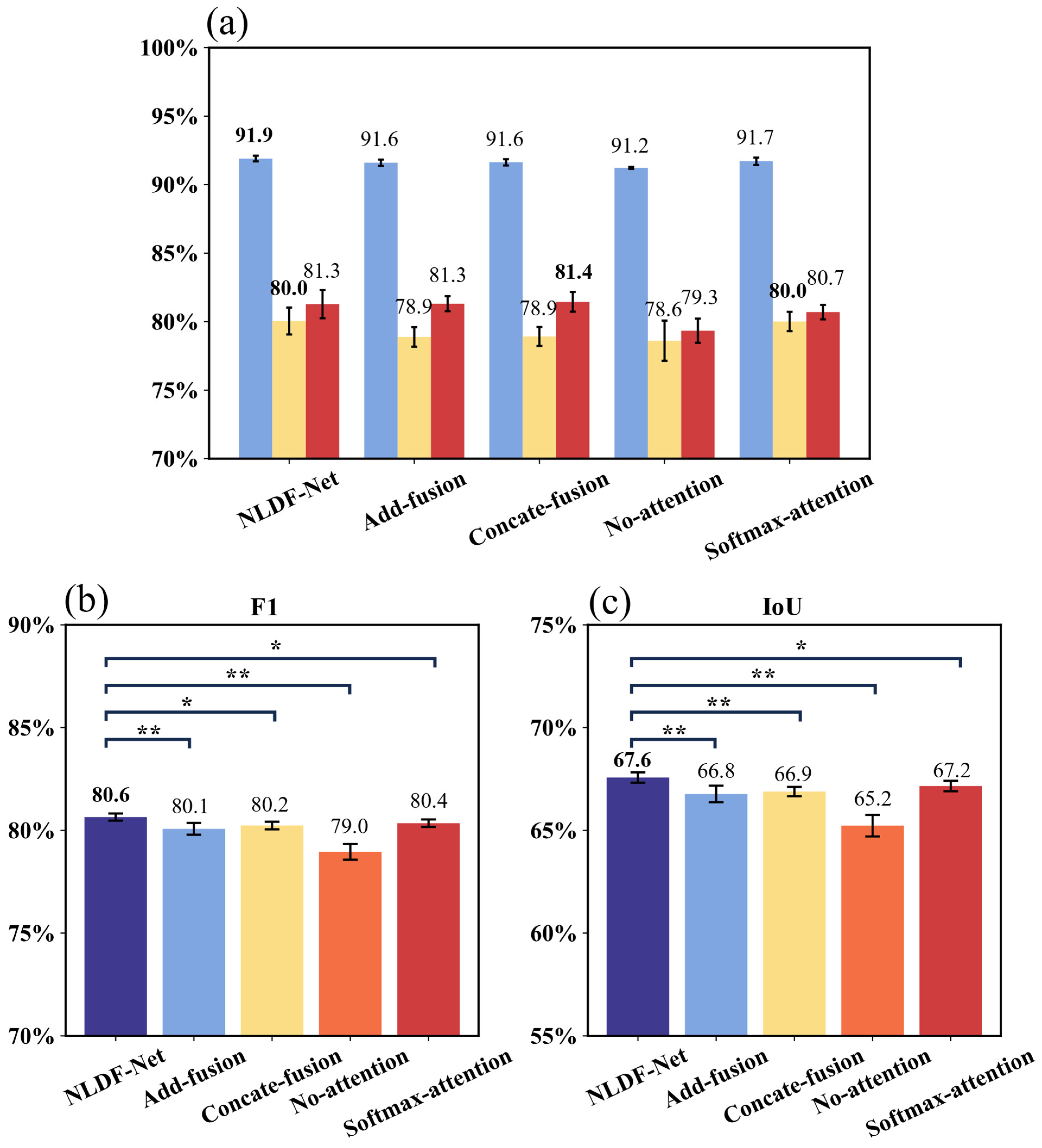

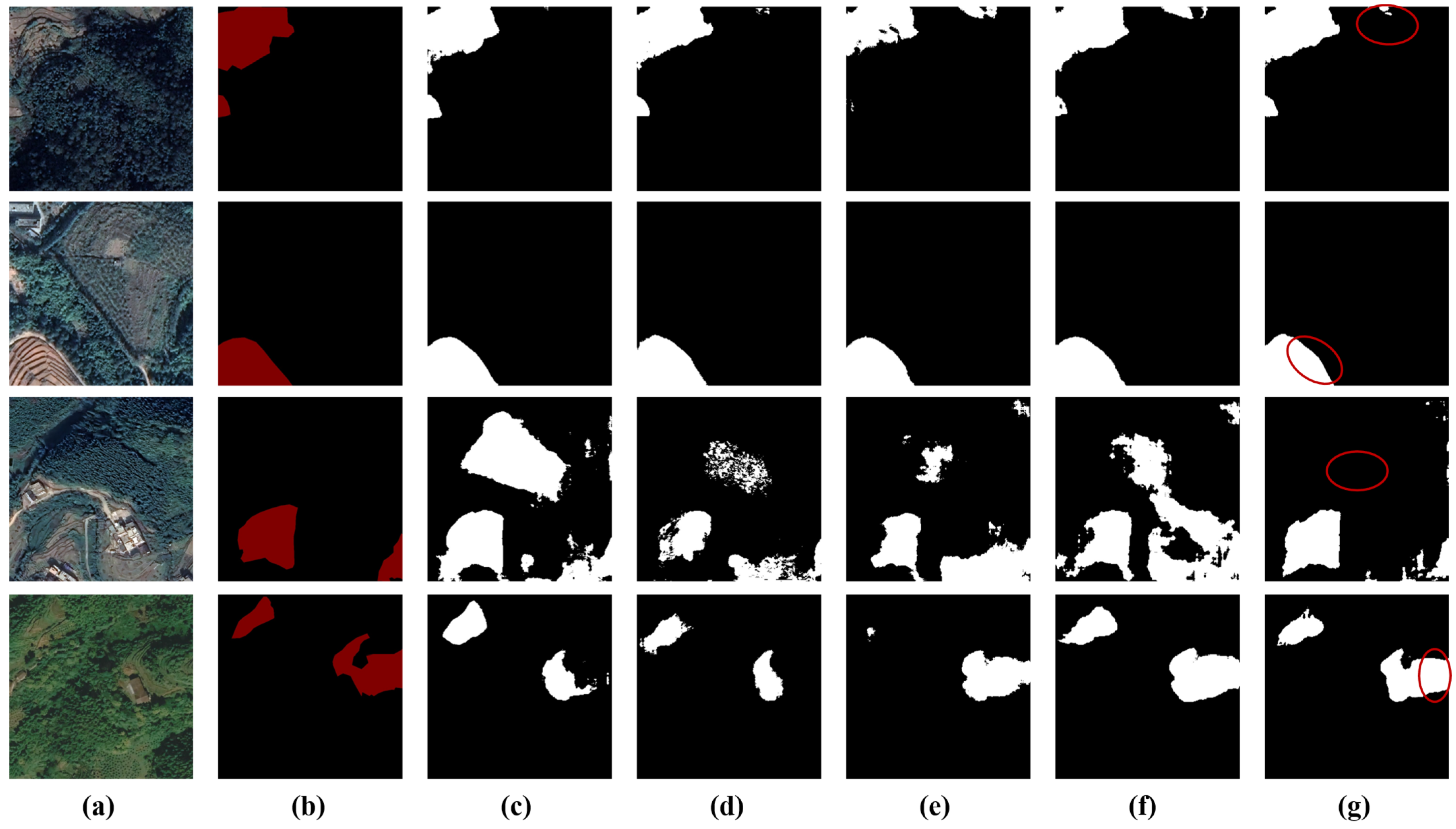

3.2. Comparisons with Different Module Combinations

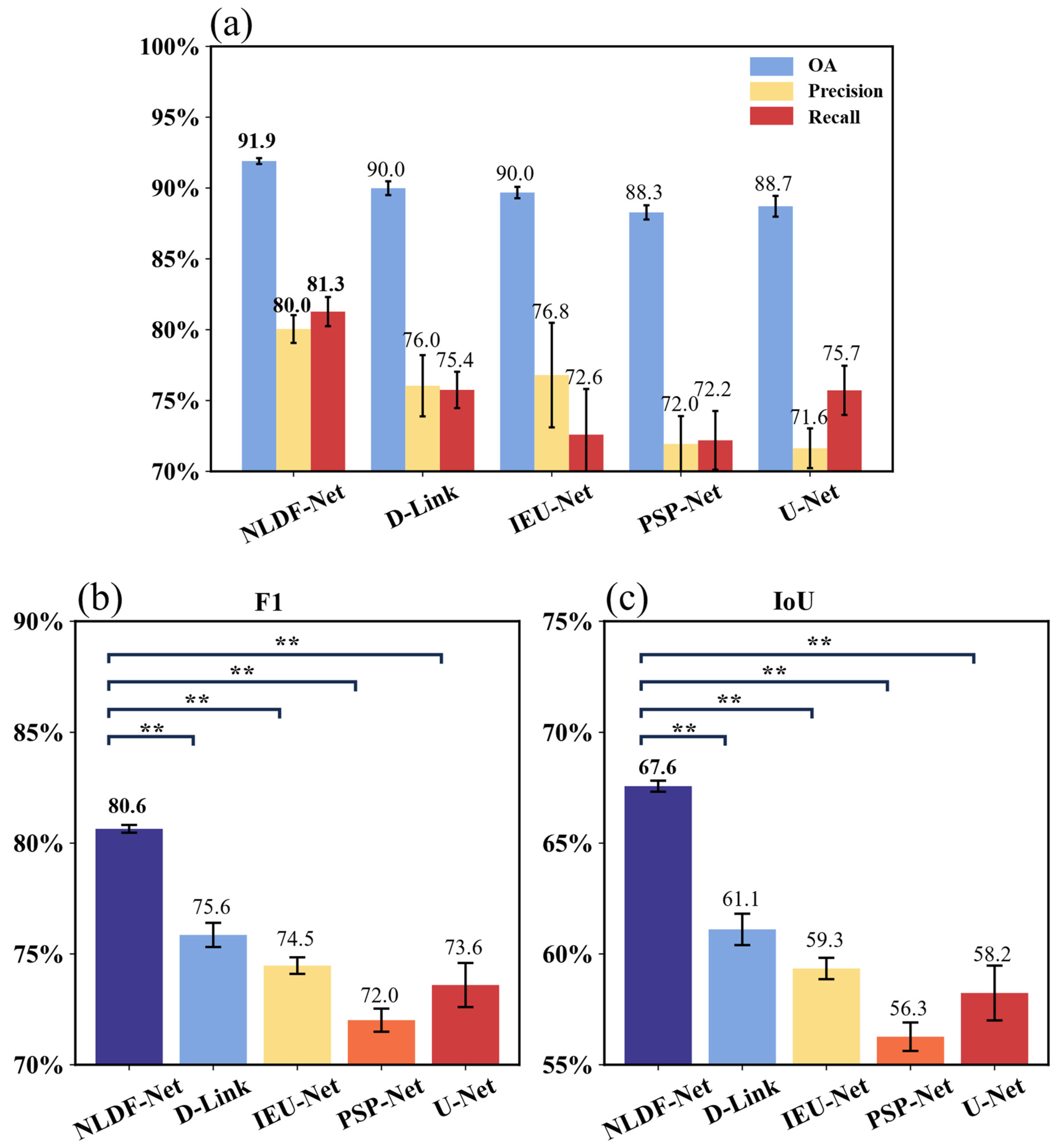

3.3. Comparisons with Advanced Deep Learning Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Petanidou, T.; Kizos, T.; Soulakellis, N. Socioeconomic Dimensions of Changes in the Agricultural Landscape of the Mediterranean Basin: A Case Study of the Abandonment of Cultivation Terraces on Nisyros Island, Greece. Environ. Manag. 2008, 41, 250–266.

- Price, S.; Nixon, L. Ancient Greek Agricultural Terraces: Evidence from Texts and Archaeological Survey. Am. J. Archaeol. 2005, 109, 665–694.

- Baryła, A.; Pierzgalski, E. Ridged Terraces—Functions, Construction and Use. J. Environ. Eng. Landsc. Manag. 2008, 16, 1–6.

- Cevasco, A.; Pepe, G.; Brandolini, P. The Influences of Geological and Land Use Settings on Shallow Landslides Triggered by an Intense Rainfall Event in a Coastal Terraced Environment. Bull. Eng. Geol. Environ. 2014, 73, 859–875.

- Dorren, L.; Rey, F. A Review of the Effect of Terracing on Erosion. In Briefing Papers of the 2nd Scape Workshop; Boix-Fayons, C., Imeson, A., Eds.; SCAPE: Cinque Terre, Italy, 2004; pp. 97–108.

- Arnáez, J.; Lana-Renault, N.; Lasanta, T.; Ruiz-Flaño, P.; Castroviejo, J. Effects of Farming Terraces on Hydrological and Geomorphological Processes. A Review. Catena 2015, 128, 122–134.

- Cao, Y.; Wu, Y.; Zhang, Y.; Tian, J. Landscape Pattern and Sustainability of a 1300-Year-Old Agricultural Landscape in Subtropical Mountain Areas, Southwestern China. Int. J. Sustain. Dev. World Ecol. 2013, 20, 349–357.

- Wei, W.; Chen, D.; Wang, L.; Daryanto, S.; Chen, L.; Yu, Y.; Lu, Y.; Sun, G.; Feng, T. Global Synthesis of the Classifications, Distributions, Benefits and Issues of Terracing. Earth Sci. Rev. 2016, 159, 388–403.

- Posthumus, H.; De Graaff, J. Cost-Benefit Analysis of Bench Terraces, a Case Study in Peru. Land Degrad. Dev. 2005, 16, 1–11.

- Deng, C.; Zhang, G.; Liu, Y.; Nie, X.; Li, Z.; Liu, J.; Zhu, D. Advantages and Disadvantages of Terracing: A Comprehensive Review. Int. Soil Water Conserv. Res. 2021, 9, 344–359.

- Shimoda, S.; Koyanagi, T.F. Land Use Alters the Plant-Derived Carbon and Nitrogen Pools in Terraced Rice Paddies in a Mountain Village. Sustainability 2017, 9, 1973.

- Chen, D.; Wei, W.; Chen, L. How Can Terracing Impact on Soil Moisture Variation in China? A Meta-Analysis. Agric. Water Manag. 2020, 227, 105849.

- Chen, D.; Wei, W.; Daryanto, S.; Tarolli, P. Does Terracing Enhance Soil Organic Carbon Sequestration? A National-Scale Data Analysis in China. Sci. Total Environ. 2020, 721, 137751.

- Wen, Y.; Kasielke, T.; Li, H.; Zhang, B.; Zepp, H. May Agricultural Terraces Induce Gully Erosion? A Case Study from the Black Soil Region of Northeast China. Sci. Total Environ. 2021, 750, 141715.

- Ackermann, O.; Maeir, A.M.; Frumin, S.S.; Svoray, T.; Weiss, E.; Zhevelev, H.M.; Horwitz, L.K. The Paleo-Anthropocene and the Genesis of the Current Landscape of Israel. J. Landsc. Ecol. 2017, 10, 109–140.

- Schönbrodt-Stitt, S.; Behrens, T.; Schmidt, K.; Shi, X.; Scholten, T. Degradation of Cultivated Bench Terraces in the Three Gorges Area: Field Mapping and Data Mining. Ecol. Indic. 2013, 34, 478–493.

- Gao, X.; Roder, G.; Jiao, Y.; Ding, Y.; Liu, Z.; Tarolli, P. Farmers’ Landslide Risk Perceptions and Willingness for Restoration and Conservation of World Heritage Site of Honghe Hani Rice Terraces, China. Landslides 2020, 17, 1915–1924.

- Agnoletti, M.; Cargnello, G.; Gardin, L.; Santoro, A.; Bazzoffi, P.; Sansone, L.; Pezza, L.; Belfiore, N. Traditional Landscape and Rural Development: Comparative Study in Three Terraced Areas in Northern, Central and Southern Italy to Evaluate the Efficacy of GAEC Standard 4.4 of Cross Compliance. Ital. J. Agron. 2011, 6, e16.

- Jiao, Y.; Li, X.; Liang, L.; Takeuchi, K.; Okuro, T.; Zhang, D.; Sun, L. Indigenous Ecological Knowledge and Natural Resource Management in the Cultural Landscape of China’s Hani Terraces. Environ. Res. 2012, 27, 247–263.

- Liu, X.Y.; Yang, S.T.; Wang, F.G.; He, X.Z.; Ma, H.B.; Luo, Y. Analysis on Sediment Yield Reduced by Current Terrace and Shrubs-Herbs-Arbor Vegetation in the Loess Plateau. J. Hydraul. Eng. 2014, 45, 1293–1300.

- Faulkner, H.; Ruiz, J.; Zukowskyj, P.; Downward, S. Erosion Risk Associated with Rapid and Extensive Agricultural Clearances on Dispersive Materials in Southeast Spain. Environ. Sci. Policy 2003, 6, 115–127.

- Siyuan, W.; Jiyuan, L.; Zengxiang, Z.; Quanbin, Z.; Xiaoli, Z. Analysis on Spatial-Temporal Features of Land Use in China. Acta Geogr. Sin. 2001, 56, 631–639.

- Liu, J.; Tian, H.; Liu, M.; Zhuang, D.; Melillo, J.M.; Zhang, Z. China’s Changing Landscape during the 1990s: Large-Scale Land Transformations Estimated with Satellite Data. Geophys. Res. Lett. 2005, 32, L02405.

- Martínez-Casasnovas, J.A.; Ramos, M.C.; Cots-Folch, R. Influence of the EU CAP on Terrain Morphology and Vineyard Cultivation in the Priorat Region of NE Spain. Land Use Policy 2010, 27, 11–21.

- Zhao, B.Y.; Ma, N.; Yang, J.; Li, Z.H.; Wang, Q.X. Extracting Features of Soil and Water Conservation Measures from Remote Sensing Images of Different Resolution Levels: Accuracy Analysis. Bull. Soil Water Conserv. 2012, 32, 154–157.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241. ISBN 978-3-319-24573-7.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Wang, K.; Xu, C.; Li, G.; Zhang, Y.; Zheng, Y.; Sun, C. Combining Convolutional Neural Networks and Self-Attention for Fundus Diseases Identification. Sci. Rep. 2023, 13, 76.

- Zhao, F.; Xiong, L.-Y.; Wang, C.; Wang, H.-R.; Wei, H.; Tang, G.-A. Terraces Mapping by Using Deep Learning Approach from Remote Sensing Images and Digital Elevation Models. Trans. GIS 2021, 25, 2438–2454.

- Lu, Y.; Li, X.; Xin, L.; Song, H.; Wang, X. Mapping the Terraces on the Loess Plateau Based on a Deep Learning-Based Model at 1.89 m Resolution. Sci. Data 2023, 10, 115.

- Ding, H.; Na, J.; Jiang, S.; Zhu, J.; Liu, K.; Fu, Y.; Li, F. Evaluation of Three Different Machine Learning Methods for Object-Based Artificial Terrace Mapping—A Case Study of the Loess Plateau, China. Remote Sens. 2021, 13, 1021.

- Cao, B.; Yu, L.; Naipal, V.; Ciais, P.; Li, W.; Zhao, Y.; Wei, W.; Chen, D.; Liu, Z.; Gong, P. A 30 m Terrace Mapping in China Using Landsat 8 Imagery and Digital Elevation Model Based on the Google Earth Engine. Earth Syst. Sci. Data 2021, 13, 2437–2456.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Ouyang, W.; Zeng, X.; Wang, X.; Qiu, S.; Luo, P.; Tian, Y.; Li, H.; Yang, S.; Wang, Z.; Li, H. DeepID-Net: Object Detection with Deformable Part Based Convolutional Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1320–1334.

- Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1520–1528.

- Hu, K.; Zhang, D.; Xia, M. CDUNet: Cloud Detection UNet for Remote Sensing Imagery. Remote Sens. 2021, 13, 4533.

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Zhao, X.; Yuan, Y.; Song, M.; Ding, Y.; Lin, F.; Liang, D.; Zhang, D. Use of Unmanned Aerial Vehicle Imagery and Deep Learning UNet to Extract Rice Lodging. Sensors 2019, 19, 3859.

- Yu, M.; Rui, X.; Xie, W.; Xu, X.; Wei, W. Research on Automatic Identification Method of Terraces on the Loess Plateau Based on Deep Transfer Learning. Remote Sens. 2022, 14, 2446.

- Luo, L.; Li, F.; Dai, Z.; Yang, X.; Liu, W.; Fang, X. Terrace Extraction Based on Remote Sensing Images and Digital Elevation Model in the Loess Plateau, China. Earth Sci. Inform. 2020, 13, 433–446.

- Zhu, P.; Xu, H.; Zhou, L.; Yu, P.; Zhang, L.; Liu, S. Automatic Mapping of Gully from Satellite Images Using Asymmetric Non-Local LinkNet: A Case Study in Northeast China. Int. Soil Water Conserv. Res. 2024, 12, 365–378.

- Wang, Z.; Xin, Z.; Liao, G.; Huang, P.; Xuan, J.; Sun, Y.; Tai, Y. Land-Sea Target Detection and Recognition in SAR Image Based on Non-Local Channel Attention Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric Non-Local Neural Networks for Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 593–602.

- Liang, X.; Wang, X.; Lei, Z.; Liao, S.; Li, S.Z. Soft-Margin Softmax for Deep Classification. In Neural Information Processing; Liu, D., Xie, S., Li, Y., Zhao, D., El-Alfy, E.-S.M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 413–421.

- Zhang, H.; Xu, H.; Tian, X.; Jiang, J.; Ma, J. Image Fusion Meets Deep Learning: A Survey and Perspective. Inf. Fusion 2021, 76, 323–336.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Potere, D. Horizontal Positional Accuracy of Google Earth’s High-Resolution Imagery Archive. Sensors 2008, 8, 7973–7981.

- Gafurov, A.M.; Yermolayev, O.P. Automatic Gully Detection: Neural Networks and Computer Vision. Remote Sens. 2020, 12, 1743.

- Samarin, M.; Zweifel, L.; Roth, V.; Alewell, C. Identifying Soil Erosion Processes in Alpine Grasslands on Aerial Imagery with a U-Net Convolutional Neural Network. Remote Sens. 2020, 12, 4149.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 182–186.

- Wu, K.; Cai, F. Dual Attention D-LinkNet for Road Segmentation in Remote Sensing Images. In Proceedings of the 2022 IEEE 14th International Conference on Advanced Infocomm Technology (ICAIT), Chongqing, China, 8–11 July 2022; pp. 304–307.

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good Practices for Estimating Area and Assessing Accuracy of Land Change. Remote Sens. Environ. 2014, 148, 42–57.

- Olofsson, P.; Foody, G.M.; Stehman, S.V.; Woodcock, C.E. Making Better Use of Accuracy Data in Land Change Studies: Estimating Accuracy and Area and Quantifying Uncertainty Using Stratified Estimation. Remote Sens. Environ. 2013, 129, 122–131.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Y.; Zou, J.; Liu, S.; Xie, Y. Terrace Extraction Method Based on Remote Sensing and a Novel Deep Learning Framework. Remote Sens. 2024, 16, 1649. https://doi.org/10.3390/rs16091649
