Innovative Decision Fusion for Accurate Crop/Vegetation Classification with Multiple Classifiers and Multisource Remote Sensing Data

Abstract

1. Introduction

2. Materials and Methods

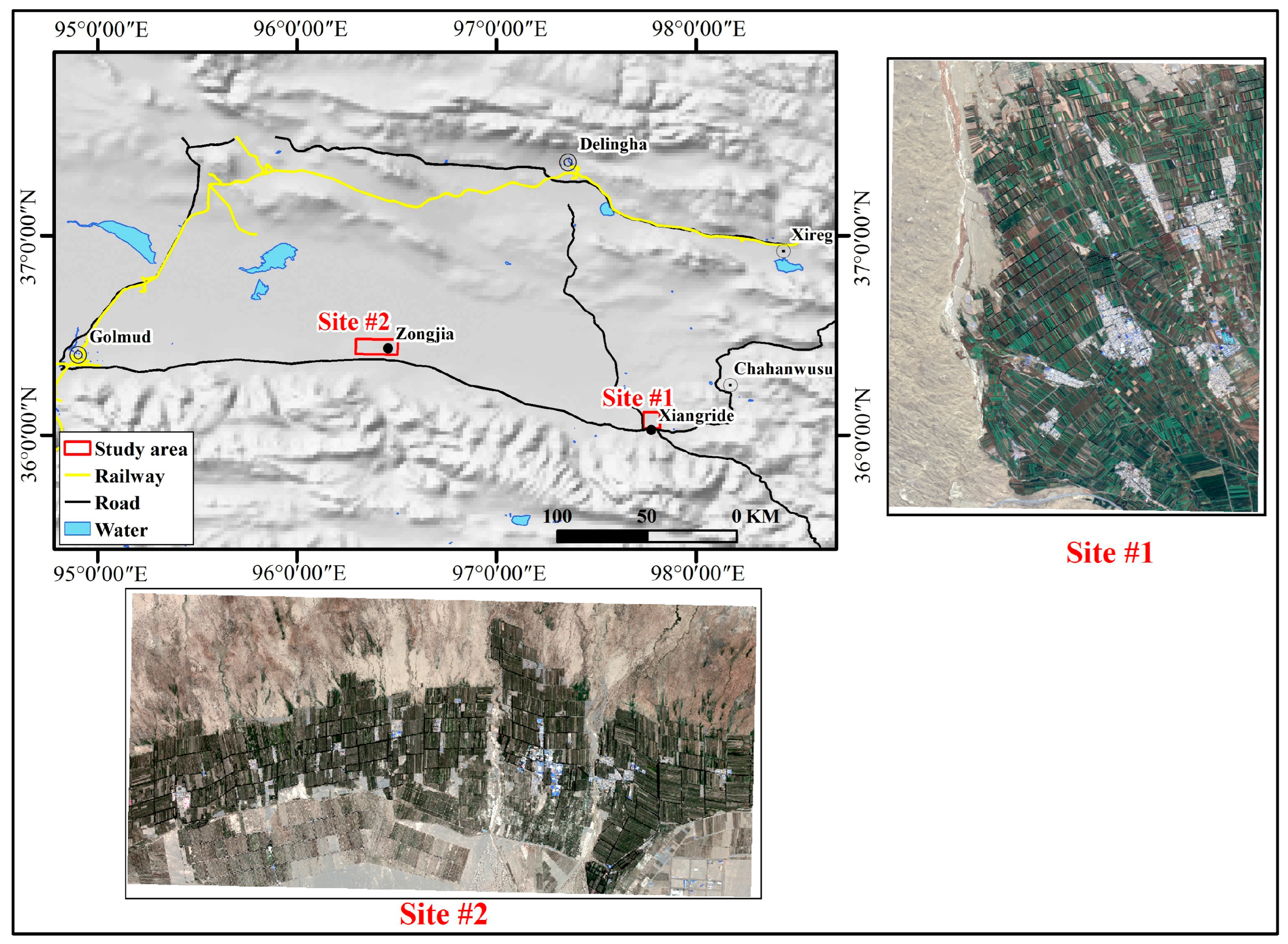

2.1. Study Area

2.2. Multisource Remote Sensing Data and Data Processing

2.3. Methods

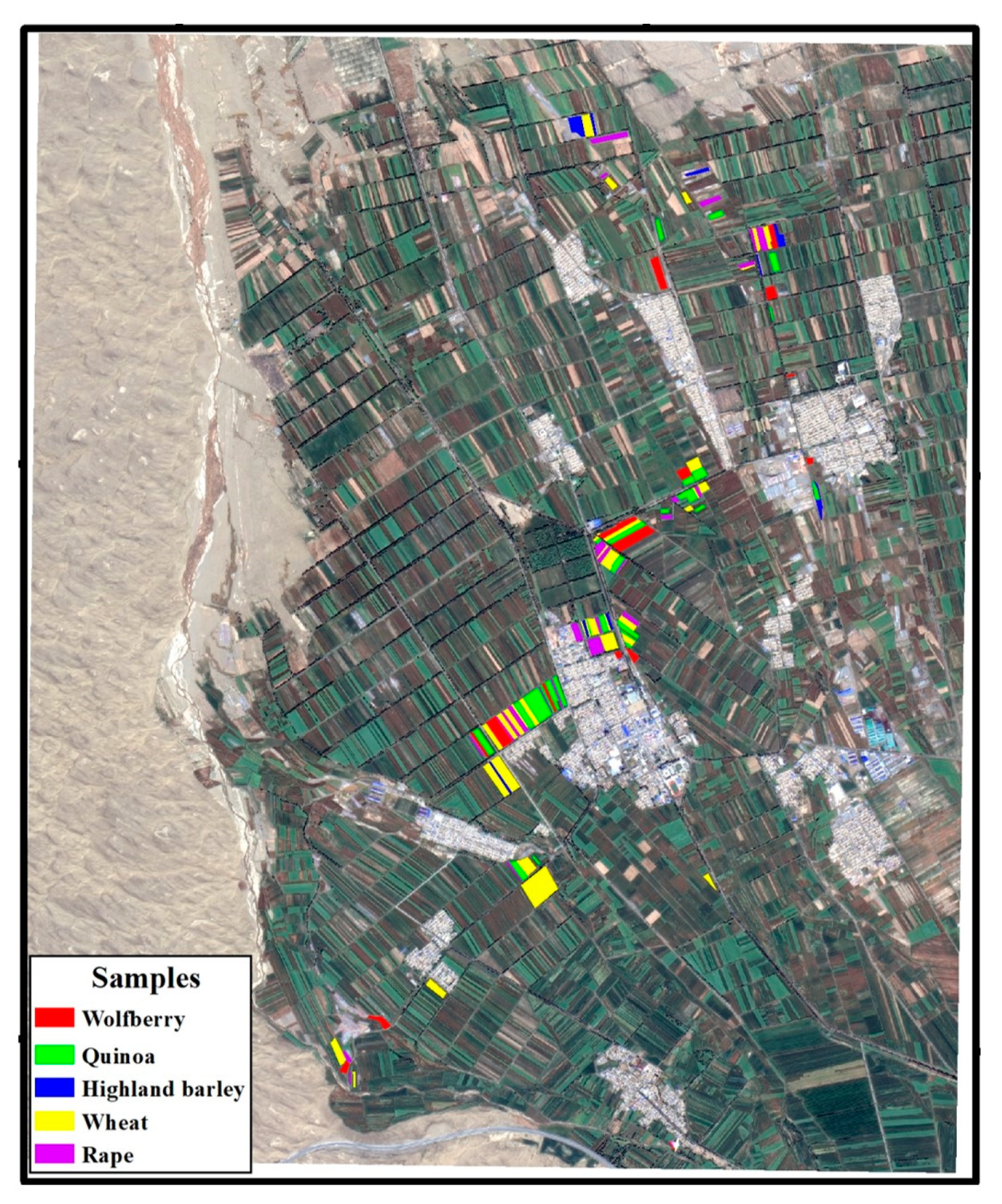

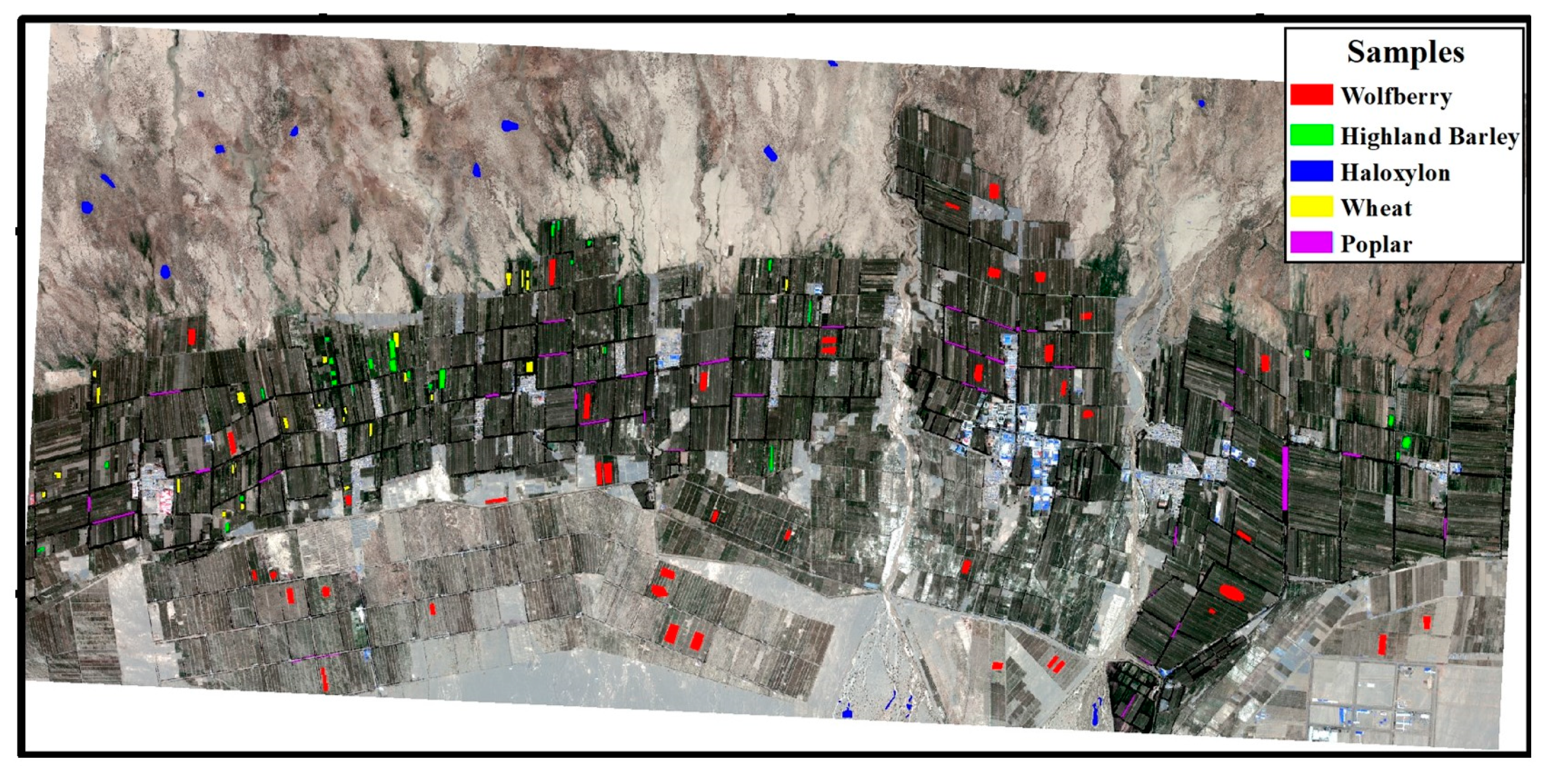

2.3.1. Field Survey and Sample Preparation

2.3.2. Multisource Remote Sensing Features

- Vegetation indices (VI)

- Biophysical variables (BP)
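The VI feature set used in the scenarios consists of NDVI, RVI and SAVI. A minimal sketch of these three indices follows, using their standard definitions. It assumes surface-reflectance inputs from a near-infrared band and a red band (for Sentinel-2, B8 and B4 are a common choice, but that band selection is an assumption here), and it assumes the usual soil-adjustment factor L = 0.5 for SAVI. `vi_stack` is a hypothetical helper.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    return (nir - red) / (nir + red)


def rvi(nir: float, red: float) -> float:
    """Ratio Vegetation Index: NIR / Red."""
    return nir / red


def savi(nir: float, red: float, soil_factor: float = 0.5) -> float:
    """Soil-Adjusted Vegetation Index: (1 + L)(NIR - Red) / (NIR + Red + L).

    soil_factor (L) = 0.5 is the commonly used default and is assumed here.
    """
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def vi_stack(nir_series, red_series):
    """Per-date [NDVI, RVI, SAVI] for one pixel's reflectance time series (hypothetical helper)."""
    return [[ndvi(n, r), rvi(n, r), savi(n, r)] for n, r in zip(nir_series, red_series)]


# Example: two acquisition dates for one pixel (illustrative reflectances, not study data).
print(vi_stack([0.45, 0.30], [0.08, 0.10]))
```

The functions assume strictly positive reflectances; masked or zero-valued pixels would need a guard before division.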

2.3.3. Feature Fusion
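The fused feature set is written as SAR + GF + VI + BP in the scenario table. Assuming that feature fusion here means per-pixel layer stacking of co-registered features (an assumption; resampling and any normalization are not shown), a minimal sketch is given below. `FUSION_ORDER` and `stack_features` are hypothetical names.

```python
from typing import Dict, List, Sequence

# Hypothetical fixed stacking order, following the "SAR + GF + VI + BP" notation.
FUSION_ORDER = ("SAR", "GF", "VI", "BP")


def stack_features(sources: Dict[str, Sequence[Sequence[float]]]) -> List[List[float]]:
    """Concatenate per-pixel feature vectors from several sources (layer stacking).

    sources[name][i] is the feature vector of pixel i from that source. All sources
    must cover the same pixels in the same order (co-registered on one grid).
    """
    counts = {name: len(sources[name]) for name in FUSION_ORDER}
    if len(set(counts.values())) != 1:
        raise ValueError(f"sources cover different pixel counts: {counts}")
    n_pixels = counts[FUSION_ORDER[0]]
    return [
        [value for name in FUSION_ORDER for value in sources[name][i]]
        for i in range(n_pixels)
    ]
```

Stacking keeps every source's features side by side, so a single classifier sees SAR backscatter, GF-6 spectra, indices and biophysical variables at once.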

2.3.4. Classifiers

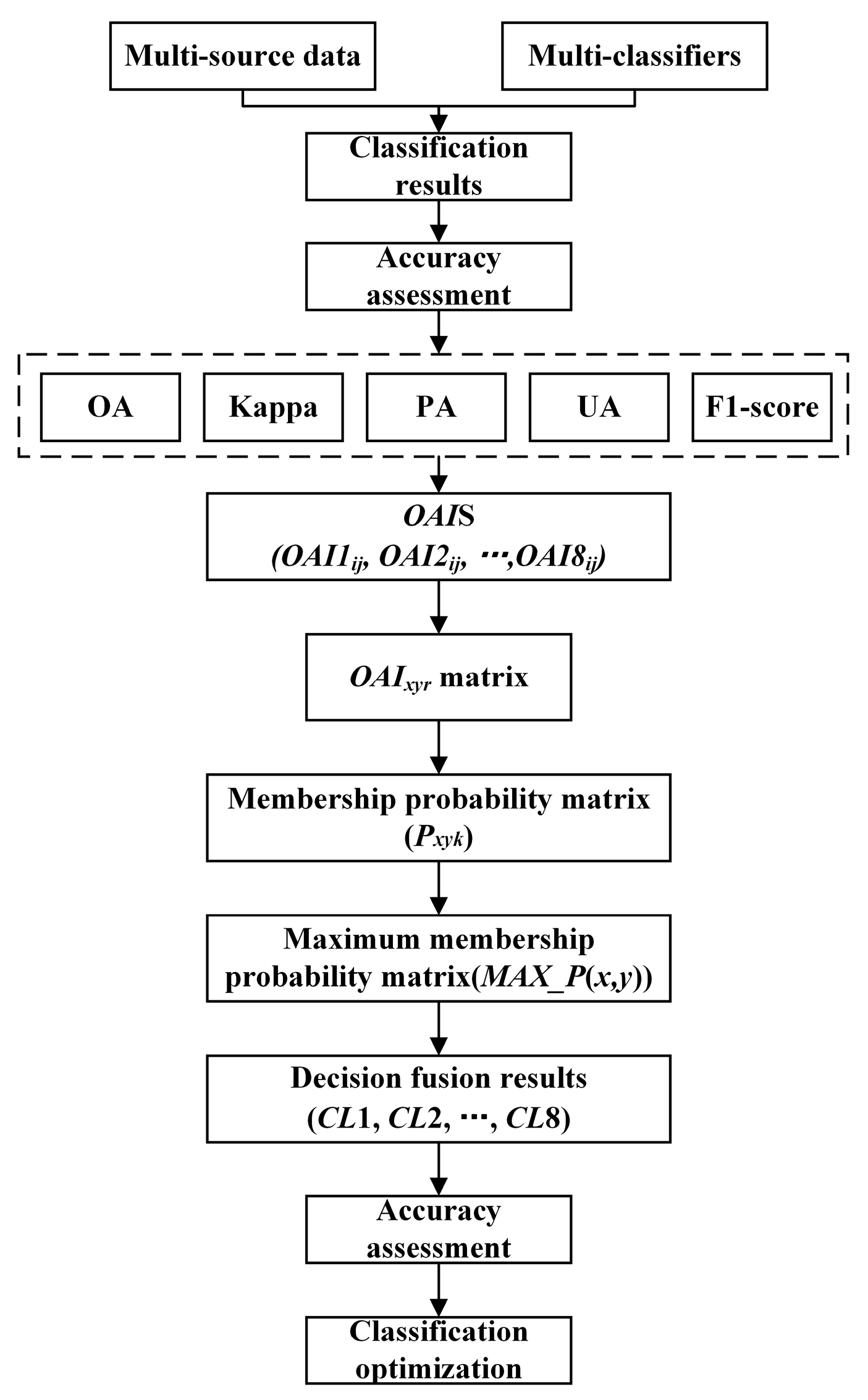
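The scenarios pair each feature set with four classifiers: ML, RF, SVM and U-Net. Assuming ML denotes the Gaussian maximum likelihood classifier, as is usual in remote-sensing image classification, a minimal NumPy sketch follows. Equal class priors and the small covariance ridge (`1e-6`) are assumptions added for illustration, and the class name `GaussianMaxLikelihood` is hypothetical.

```python
import numpy as np


class GaussianMaxLikelihood:
    """Gaussian maximum likelihood classifier (per-class mean and full covariance)."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "GaussianMaxLikelihood":
        self.classes_ = np.unique(y)
        self.means_, self.inv_covs_, self.log_dets_ = [], [], []
        for c in self.classes_:
            Xc = X[y == c]
            # Small ridge keeps the covariance invertible when features are highly correlated.
            cov = np.cov(Xc, rowvar=False) + 1e-6 * np.eye(X.shape[1])
            _, log_det = np.linalg.slogdet(cov)
            self.means_.append(Xc.mean(axis=0))
            self.inv_covs_.append(np.linalg.inv(cov))
            self.log_dets_.append(log_det)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        scores = []
        for mean, inv_cov, log_det in zip(self.means_, self.inv_covs_, self.log_dets_):
            d = X - mean
            # Squared Mahalanobis distance d_i^T Sigma^-1 d_i for every pixel i.
            maha = np.einsum("ij,jk,ik->i", d, inv_cov, d)
            # Gaussian log-likelihood up to a constant, with equal priors assumed.
            scores.append(-0.5 * (log_det + maha))
        return self.classes_[np.argmax(np.stack(scores, axis=1), axis=1)]
```

RF and SVM are available off the shelf in common libraries, and U-Net is a convolutional segmentation network, so they are not sketched here.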

2.3.5. Decision Fusion Strategies

- Majority voting (MV)

- Enhanced Overall Accuracy Index (E-OAI) voting strategy

- Overall Accuracy Index based Majority Voting (OAI-MV)
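Of the three decision fusion strategies listed above, plain majority voting follows a standard rule; the E-OAI and OAI-MV weighting schemes are not defined in this text and are not reproduced here. A minimal pixel-wise majority-voting sketch follows. The tie-breaking rule (favour the label of the earliest classifier in the input order) is an assumption.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def majority_vote(predictions: Sequence[Sequence[Hashable]]) -> List[Hashable]:
    """Pixel-wise majority voting over several classifiers' label maps.

    predictions[k][i] is the label that classifier k assigns to pixel i.
    Ties go to the label predicted by the earliest classifier (assumed tie rule).
    """
    n_pixels = len(predictions[0])
    fused = []
    for i in range(n_pixels):
        votes = [labels[i] for labels in predictions]
        counts = Counter(votes)
        top = max(counts.values())
        # First label, in classifier order, that reaches the highest vote count.
        fused.append(next(v for v in votes if counts[v] == top))
    return fused
```

With four inputs (for example, the ML, RF, SVM and U-Net maps of one feature set), a 2:2 split can occur, which is why some tie rule is needed.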

2.3.6. Classification Scenarios

2.3.7. Accuracy Assessment
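The result tables report producer's accuracy (PA), user's accuracy (UA) and F1 for each class, plus overall accuracy (OA). A minimal sketch of these standard confusion-matrix metrics follows; the row/column orientation of the matrix is an assumption stated in the code, and the function names are hypothetical.

```python
from typing import Dict, Sequence


def f1_score(pa: float, ua: float) -> float:
    """F1 as the harmonic mean of producer's and user's accuracy."""
    return 2 * pa * ua / (pa + ua) if pa + ua else 0.0


def accuracy_report(cm: Sequence[Sequence[int]], labels: Sequence[str]) -> Dict[str, object]:
    """OA plus per-class PA, UA and F1, all in percent, from a confusion matrix.

    Assumed convention: cm[i][j] counts validation pixels whose reference class is i
    and whose mapped (predicted) class is j, i.e. rows = reference, columns = map.
    """
    n = len(labels)
    total = sum(sum(row) for row in cm)
    oa = 100.0 * sum(cm[i][i] for i in range(n)) / total
    per_class = {}
    for i, name in enumerate(labels):
        ref_total = sum(cm[i])                       # row sum: reference pixels of class i
        map_total = sum(cm[r][i] for r in range(n))  # column sum: pixels mapped to class i
        pa = 100.0 * cm[i][i] / ref_total if ref_total else 0.0  # producer's accuracy (recall)
        ua = 100.0 * cm[i][i] / map_total if map_total else 0.0  # user's accuracy (precision)
        per_class[name] = {"PA": pa, "UA": ua, "F1": f1_score(pa, ua)}
    return {"OA": oa, "classes": per_class}
```

As a consistency check against the tables, scenario S1 at Site #1 reports wolfberry PA = 54.3 and UA = 51.3, which gives F1 ≈ 52.8, matching the tabulated value.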

3. Results

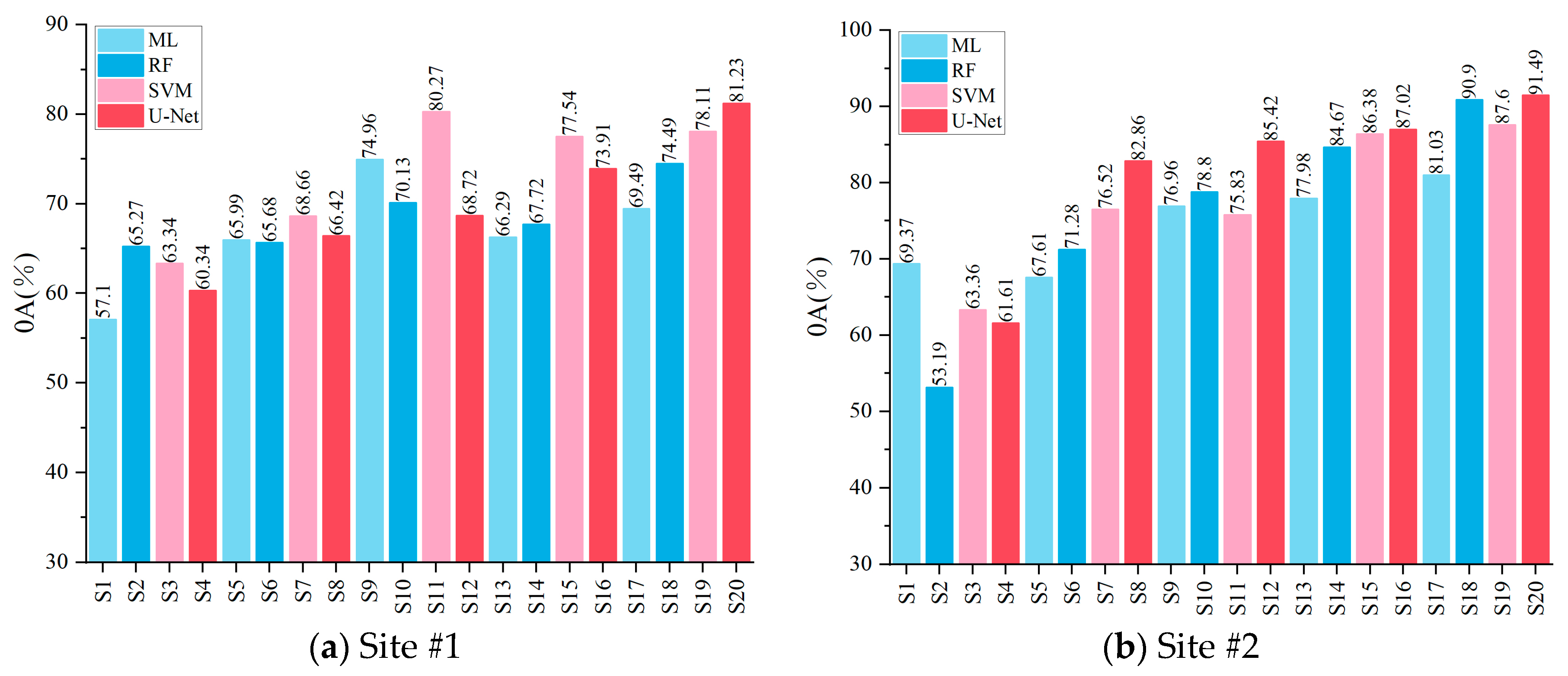

3.1. Crop/Vegetation Classification of Different Feature Sets with a Single Classifier

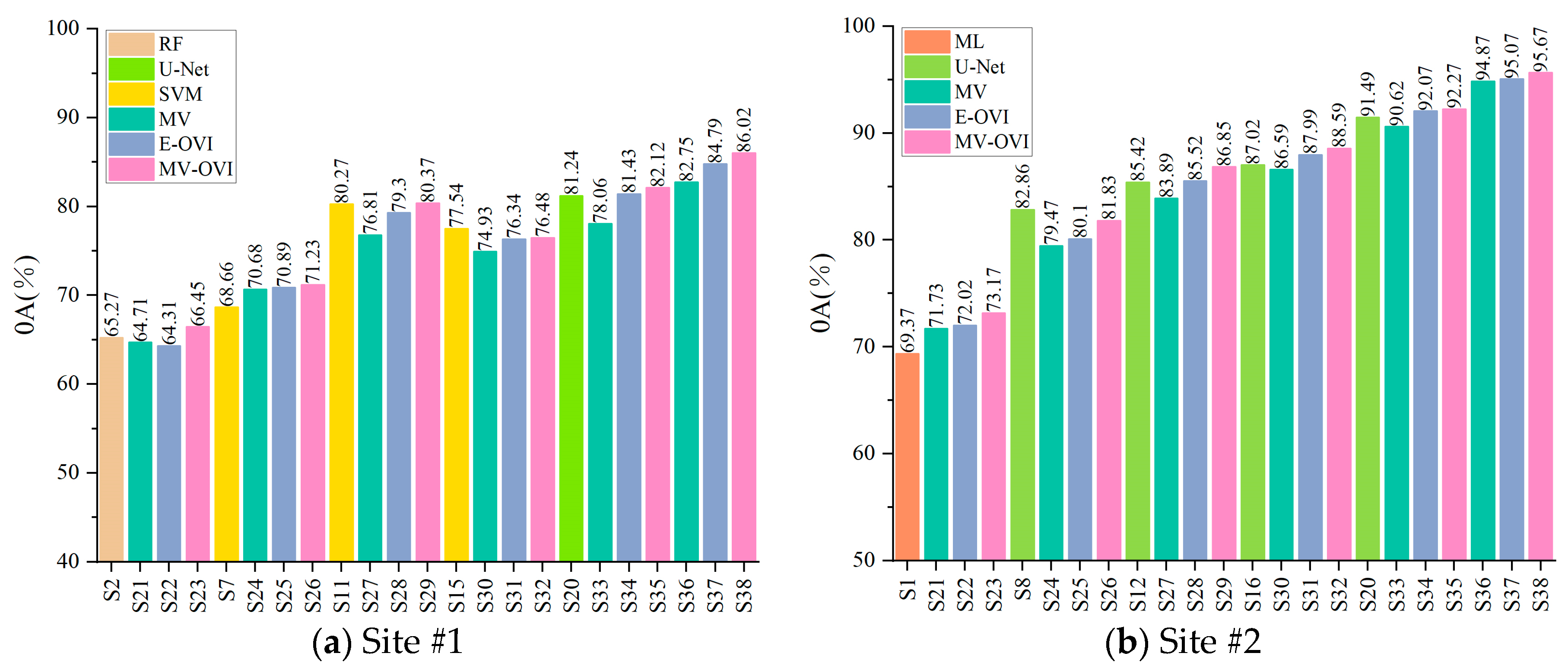

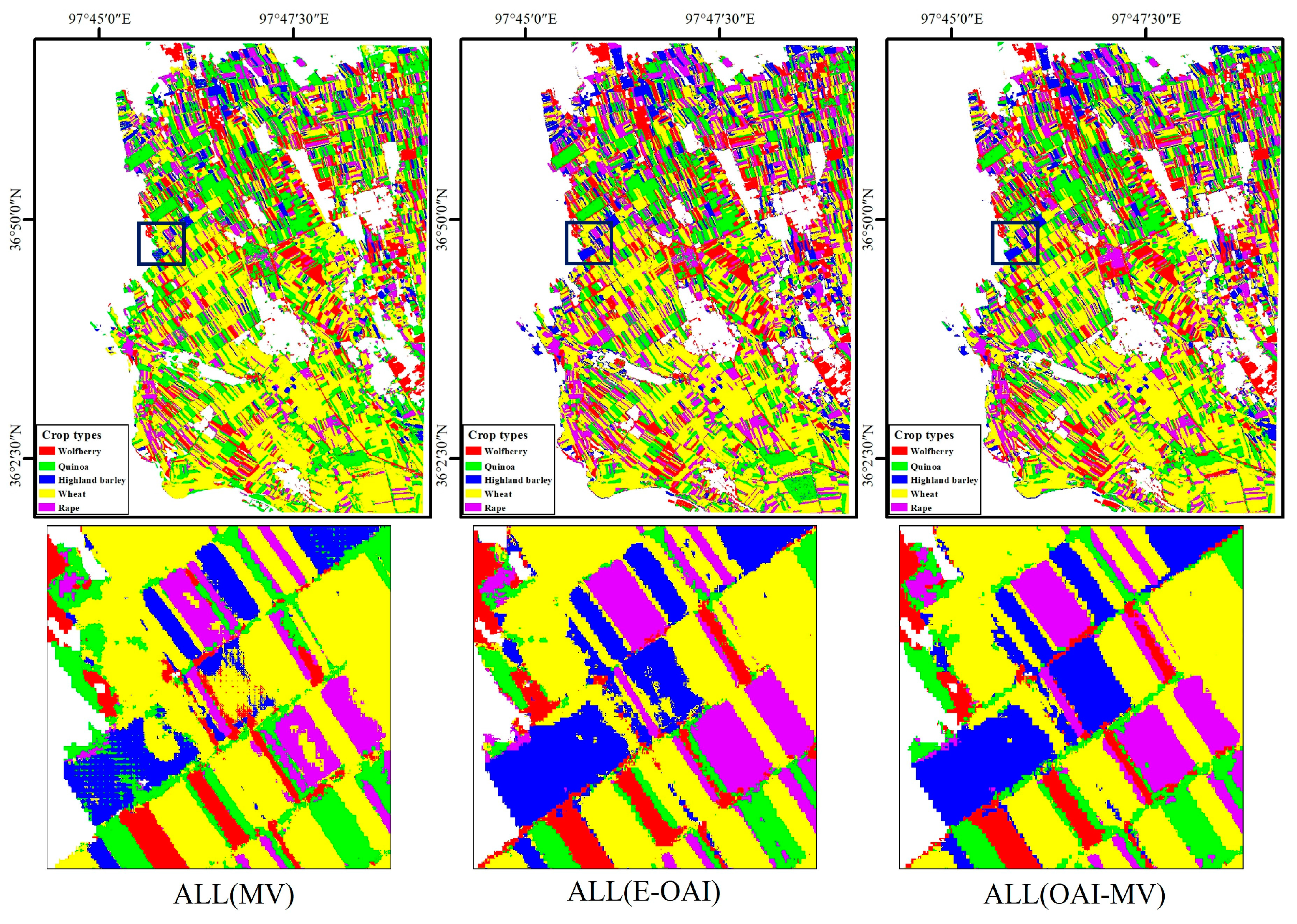

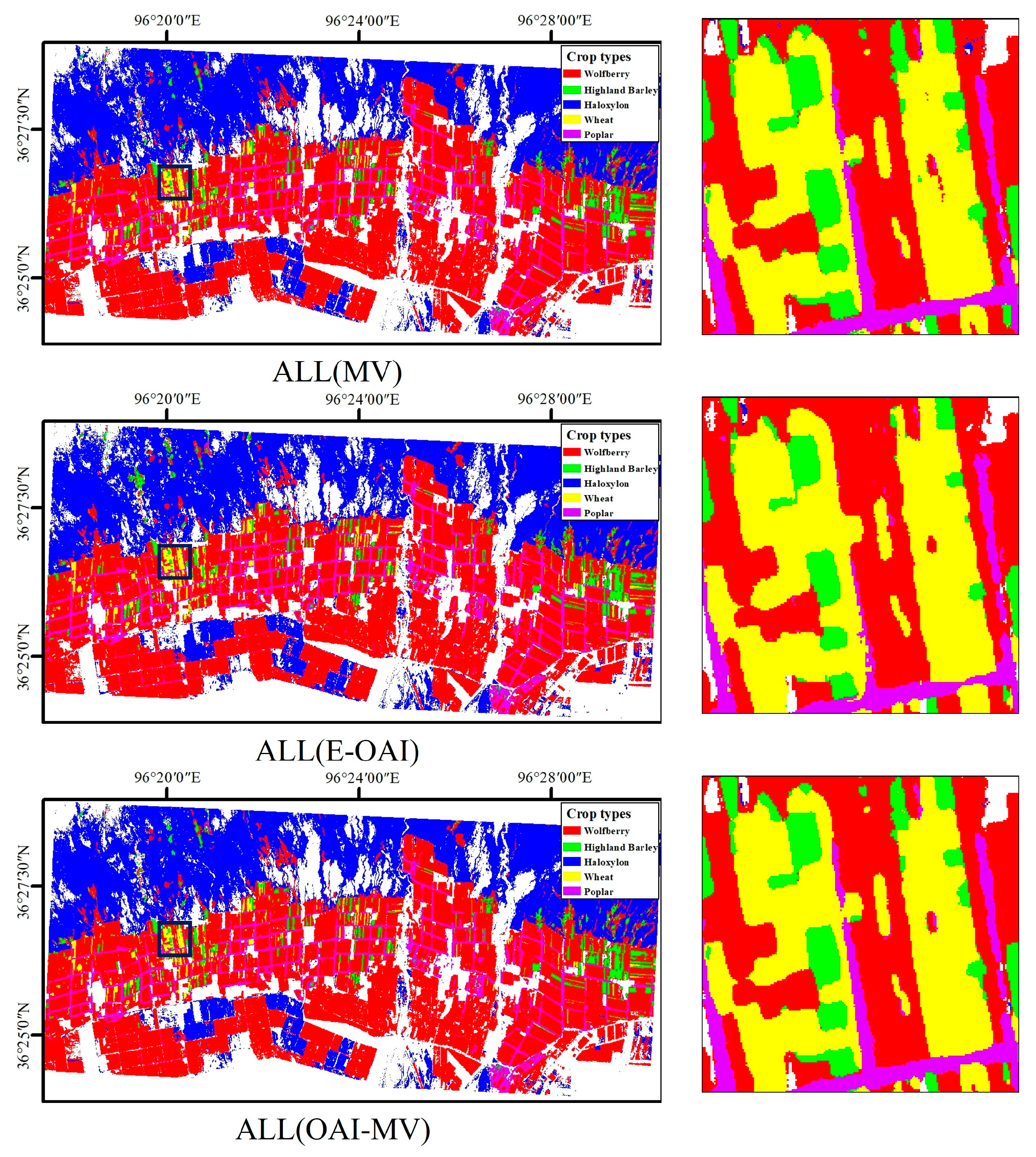

3.2. Crop/Vegetation Classification of Decision-Level Fusion

4. Discussion

4.1. Comparison of Crop/Vegetation Classification Performance with Different Feature Sets and Classifiers

4.2. Crop/Vegetation Classification Performance of Different Decision Fusion Strategies

4.3. Impact of Different OAIs on Classification Accuracy of OAI Strategy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Study Areas | Sensors | Temporal Phase | Growing Period of Crops |
|---|---|---|---|
| Site #1 | Sentinel-1 | 12 periods: 13 February 2021, 1 June 2021, 25 June 2021, 1 July 2021, 19 July 2021, 31 July 2021, 24 August 2021, 5 September 2021, 23 September 2021, 29 September 2021, 11 October 2021, 17 October 2021 | Wheat: early April to mid/late September; quinoa: April to October; highland barley: April to October; rape: April to September |
| | Sentinel-2 | 12 periods: 9 February 2021, 4 June 2021, 29 June 2021, 2 July 2021, 22 July 2021, 29 July 2021, 26 August 2021, 7 September 2021, 22 September 2021, 30 September 2021, 12 October 2021, 17 October 2021 | |
| | GF-6 | 22 August 2021 | |
| Site #2 | Sentinel-1 | 12 periods: 19 March 2020, 24 April 2020, 30 May 2020, 11 June 2020, 5 July 2020, 29 July 2020, 22 August 2020, 3 September 2020, 27 September 2020, 9 October 2020, 21 October 2020, 2 November 2020 | |
| | Sentinel-2 | 12 periods: 19 March 2020, 18 April 2020, 2 June 2020, 17 June 2020, 2 July 2020, 1 August 2020, 26 August 2020, 5 September 2020, 25 September 2020, 30 September 2020, 15 October 2020, 25 October 2020 | |
| | GF-6 | 26 July 2020 | |

| Study Area | Crop Type | Training Samples | Validation Samples |
|---|---|---|---|
| Site #1 | wolfberry | 8 regions/599 pixels | 7 regions/725 pixels |
| | quinoa | 14 regions/1135 pixels | 13 regions/1061 pixels |
| | highland barley | 5 regions/484 pixels | 4 regions/416 pixels |
| | wheat | 18 regions/1541 pixels | 18 regions/1234 pixels |
| | rape | 13 regions/687 pixels | 12 regions/594 pixels |
| Site #2 | wolfberry | 22 regions/1808 pixels | 21 regions/1685 pixels |
| | quinoa | 15 regions/692 pixels | 14 regions/665 pixels |
| | haloxylon | 11 regions/995 pixels | 11 regions/955 pixels |
| | wheat | 14 regions/559 pixels | 13 regions/620 pixels |
| | poplar | 20 regions/1262 pixels | 20 regions/1118 pixels |

| Scenario Notations | Features | Methods | Scenario Notations | Features | Methods |
|---|---|---|---|---|---|
| S1 | SAR (VV + VH) | ML | S20 | SAR + GF + VI + BP | U-Net |
| S2 | SAR (VV + VH) | RF | S21 | Results of S1–S4 | MV |
| S3 | SAR (VV + VH) | SVM | S22 | Results of S1–S4 | E-OAI |
| S4 | SAR (VV + VH) | U-Net | S23 | Results of S1–S4 | OAI-MV |
| S5 | GF | ML | S24 | Results of S5–S8 | MV |
| S6 | GF | RF | S25 | Results of S5–S8 | E-OAI |
| S7 | GF | SVM | S26 | Results of S5–S8 | OAI-MV |
| S8 | GF | U-Net | S27 | Results of S9–S12 | MV |
| S9 | VI (NDVI + RVI + SAVI) | ML | S28 | Results of S9–S12 | E-OAI |
| S10 | VI (NDVI + RVI + SAVI) | RF | S29 | Results of S9–S12 | OAI-MV |
| S11 | VI (NDVI + RVI + SAVI) | SVM | S30 | Results of S13–S16 | MV |
| S12 | VI (NDVI + RVI + SAVI) | U-Net | S31 | Results of S13–S16 | E-OAI |
| S13 | BP (LAI + Cab + CWC + FAPAR + FVC) | ML | S32 | Results of S13–S16 | OAI-MV |
| S14 | BP (LAI + Cab + CWC + FAPAR + FVC) | RF | S33 | Results of S17–S20 | MV |
| S15 | BP (LAI + Cab + CWC + FAPAR + FVC) | SVM | S34 | Results of S17–S20 | E-OAI |
| S16 | BP (LAI + Cab + CWC + FAPAR + FVC) | U-Net | S35 | Results of S17–S20 | OAI-MV |
| S17 | SAR + GF + VI + BP | ML | S36 | Results of S1–S20 | MV |
| S18 | SAR + GF + VI + BP | RF | S37 | Results of S1–S20 | E-OAI |
| S19 | SAR + GF + VI + BP | SVM | S38 | Results of S1–S20 | OAI-MV |
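The 38 scenarios in the table follow a regular pattern: five feature sets crossed with four classifiers give S1 to S20, and three fusion rules applied to each block of four single-classifier results, and then to all twenty, give S21 to S38. The sketch below regenerates that numbering only; it runs no classification, and `build_scenarios` is a hypothetical name.

```python
FEATURE_SETS = [
    "SAR (VV + VH)",
    "GF",
    "VI (NDVI + RVI + SAVI)",
    "BP (LAI + Cab + CWC + FAPAR + FVC)",
    "SAR + GF + VI + BP",
]
CLASSIFIERS = ["ML", "RF", "SVM", "U-Net"]
FUSION_RULES = ["MV", "E-OAI", "OAI-MV"]


def build_scenarios() -> dict:
    """Map scenario IDs (S1..S38) to (features or inputs, method)."""
    scenarios = {}
    n = 0
    # Single-classifier scenarios: every feature set with every classifier.
    for features in FEATURE_SETS:
        for clf in CLASSIFIERS:
            n += 1
            scenarios[f"S{n}"] = (features, clf)
    k = len(CLASSIFIERS)
    n_single = len(FEATURE_SETS) * k
    # Fusion inputs: each block of k single-classifier results, then all of them.
    groups = [(1 + b * k, (b + 1) * k) for b in range(len(FEATURE_SETS))] + [(1, n_single)]
    for first, last in groups:
        for rule in FUSION_RULES:
            n += 1
            scenarios[f"S{n}"] = (f"Results of S{first}-S{last}", rule)
    return scenarios


print(len(build_scenarios()))  # 5 * 4 + 6 * 3 = 38
```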

| Site #1 (%) | Wolfberry PA | Wolfberry UA | Wolfberry F1 | Quinoa PA | Quinoa UA | Quinoa F1 | Highland Barley PA | Highland Barley UA | Highland Barley F1 | Wheat PA | Wheat UA | Wheat F1 | Rape PA | Rape UA | Rape F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 54.3 | 51.3 | 52.8 | 55.5 | 61.4 | 58.3 | 35.7 | 22.2 | 27.3 | 58.3 | 71.1 | 64.1 | 65.5 | 52.2 | 58.1 |
| S2 | 65.3 | 71.5 | 68.2 | 61.2 | 63.8 | 62.5 | 16.0 | 37.4 | 22.4 | 72.4 | 67.9 | 70.1 | 68.5 | 59.0 | 63.4 |
| S3 | 56.3 | 56.7 | 56.5 | 61.5 | 62.5 | 62.0 | 28.7 | 32.3 | 30.4 | 67.2 | 73.0 | 70.0 | 73.8 | 60.9 | 66.8 |
| S4 | 61.1 | 54.0 | 57.3 | 55.5 | 69.5 | 61.7 | 17.4 | 18.6 | 18.0 | 70.0 | 65.8 | 67.9 | 56.8 | 58.5 | 57.6 |
| S5 | 55.6 | 58.7 | 57.1 | 70.3 | 65.8 | 68.0 | 93.8 | 37.7 | 53.8 | 54.3 | 81.0 | 65.0 | 88.9 | 70.9 | 78.9 |
| S6 | 50.5 | 71.9 | 59.3 | 53.4 | 66.7 | 59.3 | 86.0 | 38.7 | 53.3 | 67.7 | 71.5 | 69.5 | 88.2 | 64.7 | 74.6 |
| S7 | 48.1 | 74.1 | 58.3 | 71.7 | 67.8 | 69.7 | 87.8 | 41.1 | 56.0 | 66.6 | 70.2 | 68.4 | 88.2 | 76.8 | 82.1 |
| S8 | 60.2 | 67.9 | 63.8 | 49.7 | 72.1 | 58.8 | 82.4 | 44.8 | 58.1 | 68.9 | 66.2 | 67.5 | 84.7 | 70.0 | 76.7 |
| S9 | 69.9 | 83.6 | 76.1 | 77.7 | 73.5 | 75.5 | 73.8 | 64.9 | 69.1 | 78.7 | 80.1 | 79.4 | 70.2 | 62.4 | 66.1 |
| S10 | 81.1 | 77.1 | 79.0 | 55.3 | 80.5 | 65.6 | 78.9 | 52.4 | 63.0 | 76.5 | 72.5 | 74.4 | 61.1 | 56.3 | 58.6 |
| S11 | 85.4 | 83.0 | 84.2 | 76.0 | 84.1 | 79.8 | 83.6 | 75.8 | 79.5 | 83.7 | 81.7 | 82.7 | 72.1 | 71.2 | 71.6 |
| S12 | 77.5 | 77.0 | 77.2 | 45.2 | 78.7 | 57.4 | 85.5 | 38.4 | 53.0 | 80.3 | 69.8 | 74.7 | 59.9 | 67.8 | 63.6 |
| S13 | 64.0 | 82.6 | 72.1 | 66.5 | 70.9 | 68.6 | 21.4 | 43.4 | 28.7 | 76.0 | 70.7 | 73.3 | 61.3 | 45.7 | 52.4 |
| S14 | 80.8 | 82.0 | 81.4 | 57.0 | 83.6 | 67.8 | 72.9 | 49.8 | 59.2 | 79.0 | 66.5 | 72.2 | 41.3 | 45.6 | 43.3 |
| S15 | 83.1 | 86.4 | 84.7 | 78.7 | 85.3 | 81.9 | 77.8 | 54.8 | 64.3 | 82.6 | 77.5 | 80.0 | 58.9 | 66.2 | 62.3 |
| S16 | 80.9 | 84.0 | 82.4 | 70.3 | 84.0 | 76.5 | 76.1 | 44.6 | 56.2 | 77.8 | 73.5 | 75.6 | 61.8 | 66.3 | 63.9 |
| S17 | 65.4 | 82.9 | 73.1 | 66.7 | 76.3 | 71.2 | 65.9 | 65.0 | 65.4 | 77.7 | 72.6 | 75.1 | 61.9 | 49.2 | 54.8 |
| S18 | 79.7 | 79.4 | 79.6 | 69.7 | 84.2 | 76.2 | 86.9 | 45.4 | 59.6 | 76.2 | 79.3 | 77.7 | 69.3 | 66.0 | 67.6 |
| S19 | 80.4 | 74.8 | 77.5 | 70.3 | 81.9 | 75.7 | 91.5 | 54.0 | 67.9 | 80.0 | 85.2 | 82.5 | 77.8 | 75.3 | 76.5 |
| S20 | 82.7 | 86.6 | 84.6 | 86.5 | 80.1 | 83.2 | 82.3 | 85.7 | 84.0 | 80.9 | 80.2 | 80.5 | 73.7 | 77.7 | 75.6 |

| Site #2 (%) | Wolfberry PA | Wolfberry UA | Wolfberry F1 | Highland Barley PA | Highland Barley UA | Highland Barley F1 | Haloxylon PA | Haloxylon UA | Haloxylon F1 | Wheat PA | Wheat UA | Wheat F1 | Poplar PA | Poplar UA | Poplar F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 70.9 | 82.3 | 76.1 | 12.2 | 25.5 | 16.5 | 76.1 | 60.5 | 67.4 | 69.6 | 31.1 | 43.0 | 86.3 | 62.1 | 72.3 |
| S2 | 46.9 | 74.6 | 57.6 | 7.5 | 6.8 | 7.2 | 70.6 | 38.4 | 49.8 | 50.5 | 19.3 | 27.9 | 61.4 | 54.4 | 57.7 |
| S3 | 68.8 | 81.2 | 74.5 | 8.8 | 16.7 | 11.5 | 74.8 | 42.2 | 53.9 | 64.8 | 36.3 | 46.5 | 73.7 | 73.9 | 73.8 |
| S4 | 89.0 | 68.3 | 77.3 | 5.5 | 22.7 | 8.9 | 46.2 | 58.0 | 51.5 | 4.5 | 17.2 | 7.1 | 10.1 | 27.8 | 14.8 |
| S5 | 53.3 | 87.8 | 66.4 | 37.6 | 42.3 | 39.8 | 83.6 | 50.3 | 62.8 | 66.2 | 36.0 | 46.7 | 87.6 | 88.6 | 88.1 |
| S6 | 70.3 | 81.7 | 75.6 | 46.8 | 54.6 | 50.4 | 69.8 | 62.3 | 65.8 | 52.8 | 41.0 | 46.2 | 90.6 | 83.3 | 86.8 |
| S7 | 81.3 | 82.4 | 81.8 | 44.0 | 56.4 | 49.4 | 71.7 | 78.8 | 75.1 | 44.6 | 39.6 | 41.9 | 91.8 | 83.4 | 87.4 |
| S8 | 85.5 | 88.3 | 86.8 | 45.7 | 64.9 | 53.6 | 74.7 | 79.8 | 77.2 | 66.7 | 44.3 | 53.3 | 86.7 | 94.6 | 90.5 |
| S9 | 88.6 | 81.2 | 84.7 | 72.2 | 85.2 | 78.2 | 52.2 | 89.6 | 65.9 | 89.2 | 88.2 | 88.7 | 94.1 | 73.0 | 82.2 |
| S10 | 84.9 | 87.9 | 86.4 | 73.7 | 86.2 | 79.4 | 60.8 | 89.3 | 72.3 | 88.0 | 87.4 | 87.7 | 96.5 | 88.8 | 92.5 |
| S11 | 87.0 | 94.0 | 90.4 | 74.8 | 93.4 | 83.1 | 78.1 | 80.8 | 79.4 | 94.4 | 92.4 | 93.4 | 95.5 | 89.0 | 92.1 |
| S12 | 89.9 | 93.0 | 91.5 | 73.0 | 84.4 | 78.3 | 83.2 | 84.1 | 83.6 | 92.4 | 92.1 | 92.3 | 94.5 | 95.8 | 95.2 |
| S13 | 73.5 | 83.0 | 78.0 | 79.4 | 76.6 | 78.0 | 34.6 | 99.4 | 51.4 | 89.2 | 94.9 | 92.0 | 98.7 | 76.3 | 86.0 |
| S14 | 88.2 | 92.6 | 90.3 | 76.6 | 84.0 | 80.1 | 59.7 | 92.6 | 72.6 | 91.4 | 92.7 | 92.1 | 99.4 | 84.1 | 91.1 |
| S15 | 92.5 | 95.6 | 94.0 | 66.8 | 87.7 | 75.8 | 83.3 | 88.4 | 85.8 | 92.1 | 86.8 | 89.4 | 99.7 | 88.6 | 93.8 |
| S16 | 89.4 | 91.5 | 90.4 | 75.2 | 89.2 | 81.6 | 79.5 | 85.6 | 82.5 | 94.8 | 87.3 | 90.9 | 96.2 | 90.3 | 93.2 |
| S17 | 83.7 | 83.8 | 83.7 | 77.9 | 87.3 | 82.3 | 33.0 | 99.8 | 49.6 | 87.7 | 95.0 | 91.2 | 98.4 | 69.2 | 81.2 |
| S18 | 92.7 | 92.7 | 92.7 | 78.4 | 86.6 | 82.3 | 77.2 | 90.1 | 83.1 | 91.9 | 92.1 | 92.0 | 99.3 | 94.2 | 96.7 |
| S19 | 93.6 | 94.0 | 93.8 | 71.6 | 87.7 | 78.8 | 81.6 | 90.5 | 85.8 | 92.4 | 91.5 | 91.9 | 99.4 | 89.8 | 94.3 |
| S20 | 97.0 | 91.5 | 94.2 | 83.5 | 89.1 | 86.2 | 75.6 | 99.1 | 85.7 | 96.7 | 95.2 | 95.9 | 92.0 | 97.2 | 94.5 |

| Site #1 (%) | Wolfberry PA | Wolfberry UA | Wolfberry F1 | Quinoa PA | Quinoa UA | Quinoa F1 | Highland Barley PA | Highland Barley UA | Highland Barley F1 | Wheat PA | Wheat UA | Wheat F1 | Rape PA | Rape UA | Rape F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S21 | 71.7 | 61.1 | 66.0 | 63.6 | 69.0 | 66.2 | 25.0 | 26.9 | 25.9 | 65.7 | 71.5 | 68.5 | 66.9 | 61.8 | 64.2 |
| S22 | 55.3 | 77.3 | 64.5 | 53.3 | 67.9 | 59.7 | 12.3 | 69.7 | 20.9 | 78.2 | 64.1 | 70.4 | 73.4 | 54.6 | 62.6 |
| S23 | 63.1 | 66.8 | 64.9 | 63.6 | 68.0 | 65.7 | 13.3 | 55.3 | 21.5 | 74.3 | 69.6 | 71.9 | 71.9 | 59.6 | 65.2 |
| S24 | 62.8 | 72.3 | 67.2 | 71.1 | 68.6 | 69.8 | 93.1 | 43.9 | 59.7 | 63.3 | 78.1 | 69.9 | 88.3 | 73.7 | 80.3 |
| S25 | 51.6 | 81.7 | 63.2 | 68.0 | 73.1 | 70.5 | 71.3 | 75.7 | 73.5 | 72.5 | 67.4 | 69.9 | 92.2 | 68.1 | 78.4 |
| S26 | 58.1 | 78.3 | 66.7 | 65.1 | 71.9 | 68.3 | 89.0 | 50.2 | 64.2 | 70.7 | 73.2 | 71.9 | 90.1 | 70.6 | 79.2 |
| S27 | 85.2 | 78.9 | 81.9 | 70.4 | 82.8 | 76.1 | 86.1 | 62.5 | 72.4 | 81.2 | 77.9 | 79.5 | 63.8 | 70.5 | 67.0 |
| S28 | 83.9 | 81.8 | 82.9 | 74.0 | 84.0 | 78.7 | 82.4 | 73.9 | 77.9 | 84.3 | 79.9 | 82.0 | 69.7 | 71.3 | 70.5 |
| S29 | 86.0 | 83.0 | 84.5 | 76.0 | 84.1 | 79.8 | 83.3 | 77.6 | 80.4 | 83.7 | 81.7 | 82.7 | 72.1 | 71.2 | 71.6 |
| S30 | 83.4 | 84.0 | 83.7 | 75.6 | 83.9 | 79.5 | 78.1 | 59.5 | 67.5 | 81.6 | 72.9 | 77.0 | 49.5 | 61.8 | 54.9 |
| S31 | 86.9 | 87.3 | 87.1 | 83.8 | 79.4 | 81.5 | 61.7 | 65.8 | 63.7 | 83.1 | 72.8 | 77.6 | 44.4 | 67.7 | 53.7 |
| S32 | 84.2 | 85.5 | 84.8 | 78.7 | 84.3 | 81.4 | 69.1 | 68.7 | 68.9 | 84.9 | 72.0 | 77.9 | 49.3 | 67.7 | 57.1 |
| S33 | 81.6 | 78.9 | 80.2 | 77.4 | 82.5 | 79.9 | 91.5 | 57.7 | 70.8 | 78.8 | 81.8 | 80.3 | 69.9 | 72.9 | 71.3 |
| S34 | 82.7 | 87.8 | 85.1 | 83.7 | 82.0 | 82.8 | 82.2 | 80.4 | 81.3 | 84.1 | 81.1 | 82.6 | 71.7 | 74.6 | 73.1 |
| S35 | 82.6 | 86.9 | 84.7 | 91.0 | 79.5 | 84.8 | 83.6 | 85.3 | 84.4 | 80.3 | 82.6 | 81.4 | 74.3 | 78.8 | 76.5 |
| S36 | 87.1 | 84.0 | 85.5 | 74.6 | 88.9 | 81.1 | 89.1 | 73.2 | 80.4 | 87.2 | 82.4 | 84.7 | 77.8 | 78.8 | 78.3 |
| S37 | 90.8 | 88.2 | 89.5 | 83.5 | 86.8 | 85.1 | 84.6 | 76.2 | 80.2 | 89.2 | 83.9 | 86.4 | 71.5 | 82.6 | 76.6 |
| S38 | 91.3 | 91.2 | 91.3 | 91.2 | 84.8 | 87.8 | 86.7 | 89.9 | 88.3 | 89.7 | 82.5 | 85.9 | 66.7 | 89.8 | 76.5 |

| Site #2 (%) | Wolfberry PA | Wolfberry UA | Wolfberry F1 | Highland Barley PA | Highland Barley UA | Highland Barley F1 | Haloxylon PA | Haloxylon UA | Haloxylon F1 | Wheat PA | Wheat UA | Wheat F1 | Poplar PA | Poplar UA | Poplar F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S21 | 80.8 | 77.8 | 79.3 | 7.2 | 27.8 | 11.4 | 71.9 | 54.1 | 61.7 | 57.1 | 43.1 | 49.1 | 72.4 | 75.2 | 73.8 |
| S22 | 87.7 | 72.7 | 79.5 | 4.2 | 58.2 | 7.9 | 77.4 | 57.3 | 65.8 | 15.0 | 67.4 | 24.5 | 75.8 | 70.8 | 73.2 |
| S23 | 85.7 | 85.7 | 85.7 | 4.2 | 58.4 | 7.9 | 70.1 | 60.6 | 65.0 | 42.8 | 52.4 | 47.1 | 83.2 | 67.8 | 74.7 |
| S24 | 83.5 | 82.3 | 82.9 | 42.6 | 60.2 | 49.9 | 73.9 | 79.3 | 76.5 | 56.7 | 44.5 | 49.8 | 85.7 | 91.0 | 88.2 |
| S25 | 86.4 | 82.2 | 84.2 | 36.6 | 74.5 | 49.1 | 73.6 | 81.4 | 77.3 | 44.9 | 41.7 | 43.2 | 88.8 | 83.7 | 86.1 |
| S26 | 88.5 | 83.5 | 85.9 | 51.9 | 67.9 | 58.8 | 74.8 | 79.7 | 77.2 | 31.6 | 43.8 | 36.7 | 84.6 | 82.7 | 83.6 |
| S27 | 92.3 | 89.4 | 90.8 | 76.0 | 90.5 | 82.6 | 71.1 | 89.3 | 79.2 | 92.1 | 93.3 | 92.7 | 95.1 | 92.2 | 93.6 |
| S28 | 91.8 | 92.4 | 92.1 | 77.4 | 88.4 | 82.5 | 83.2 | 85.7 | 84.4 | 92.6 | 92.8 | 92.7 | 95.5 | 92.1 | 93.8 |
| S29 | 97.2 | 89.5 | 93.2 | 60.2 | 93.7 | 73.3 | 82.9 | 85.3 | 84.1 | 95.6 | 82.7 | 88.7 | 97.5 | 95.1 | 96.3 |
| S30 | 92.3 | 91.4 | 91.9 | 77.7 | 87.3 | 82.2 | 61.8 | 90.9 | 73.5 | 92.1 | 95.2 | 93.6 | 99.1 | 88.1 | 93.3 |
| S31 | 94.5 | 90.4 | 92.4 | 59.9 | 90.3 | 72.0 | 78.7 | 86.4 | 82.4 | 94.2 | 85.5 | 89.6 | 99.9 | 88.3 | 93.7 |
| S32 | 92.1 | 92.5 | 92.3 | 75.2 | 87.0 | 80.7 | 76.5 | 90.7 | 83.0 | 92.8 | 92.8 | 92.8 | 99.7 | 87.7 | 93.3 |
| S33 | 96.0 | 90.8 | 93.3 | 78.9 | 88.4 | 83.4 | 70.6 | 94.9 | 81.0 | 92.0 | 95.0 | 93.4 | 98.5 | 94.4 | 96.4 |
| S34 | 95.8 | 92.6 | 94.1 | 78.8 | 87.6 | 83.0 | 79.3 | 94.3 | 86.2 | 92.5 | 93.2 | 92.8 | 99.0 | 94.4 | 96.7 |
| S35 | 95.7 | 92.5 | 94.1 | 75.4 | 89.4 | 81.8 | 82.0 | 97.0 | 88.9 | 96.7 | 91.9 | 94.2 | 99.3 | 92.4 | 95.7 |
| S36 | 96.8 | 95.6 | 96.2 | 75.7 | 97.5 | 85.2 | 88.7 | 99.5 | 93.8 | 99.4 | 92.5 | 95.8 | 97.6 | 94.7 | 96.1 |
| S37 | 96.6 | 95.8 | 96.2 | 76.7 | 96.9 | 85.6 | 90.4 | 99.6 | 94.8 | 99.6 | 93.2 | 96.3 | 97.7 | 94.1 | 95.9 |
| S38 | 98.6 | 95.0 | 96.8 | 79.7 | 95.3 | 86.8 | 92.4 | 98.1 | 95.2 | 99.4 | 95.6 | 97.4 | 94.5 | 99.4 | 96.9 |

| Study Area | Feature Set | SAR | GF | VI | BP | SAR + GF + BP + VI | ALL |
|---|---|---|---|---|---|---|---|
| Site #1 | Range of OA (%) | 61.15–64.43 | 64.19–70.89 | 76.78–79.30 | 73.68–76.34 | 77.27–81.43 | 79.86–84.79 |
| | OAI of highest OA | OAI4 | OAI5 | OAI3 | OAI2 | OAI2 | OAI2 |
| | OA of OAI1 (%) | 63.23 | 64.87 | 78.97 | 74.63 | 80.46 | 82.36 |
| Site #2 | Range of OA (%) | 68.97–72.02 | 78.83–80.10 | 82.04–85.52 | 80.93–87.99 | 86.86–92.07 | 88.94–95.07 |
| | OAI of highest OA | OAI2 | OAI5 | OAI6 | OAI6 | OAI2 | OAI2 |
| | OA of OAI1 (%) | 69.02 | 79.78 | 85.35 | 81.71 | 90.01 | 90.87 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shuai, S.; Zhang, Z.; Zhang, T.; Luo, W.; Tan, L.; Duan, X.; Wu, J. Innovative Decision Fusion for Accurate Crop/Vegetation Classification with Multiple Classifiers and Multisource Remote Sensing Data. Remote Sens. 2024, 16, 1579. https://doi.org/10.3390/rs16091579
