Evaluating the Point Cloud of Individual Trees Generated from Images Based on Neural Radiance Fields (NeRF) Method

Abstract

1. Introduction

2. Materials and Methods

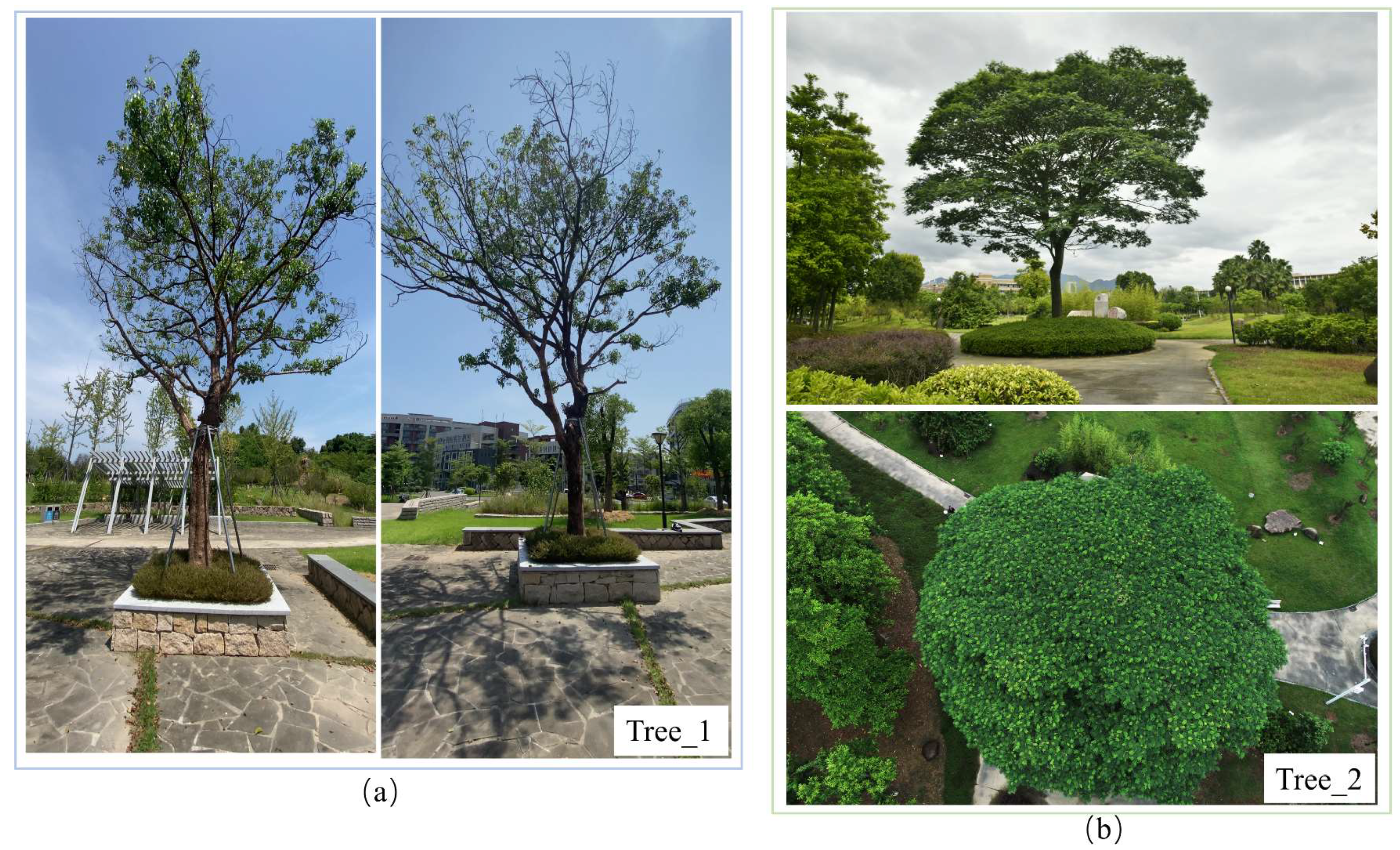

2.1. Study Area

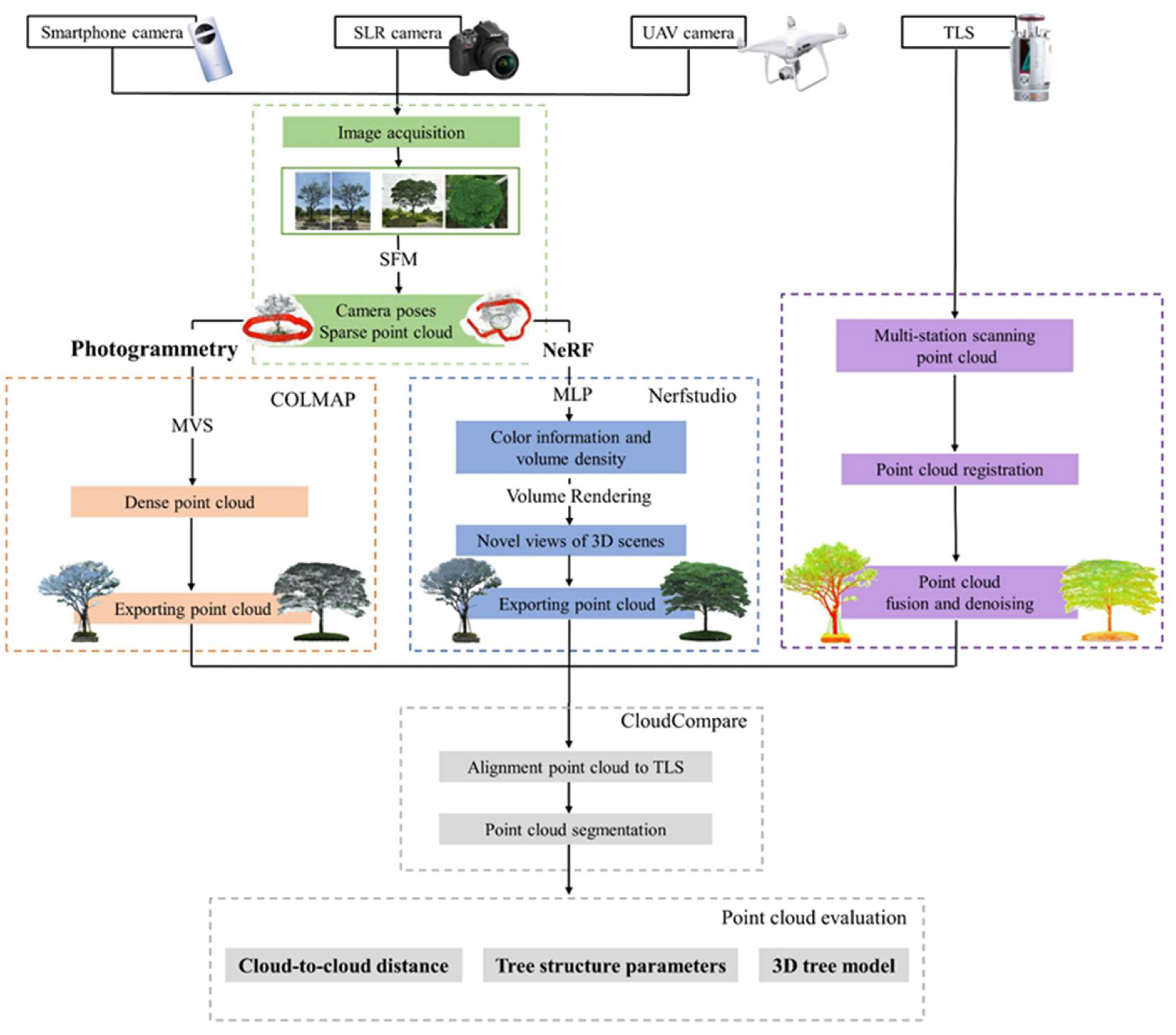

2.2. Research Methods

2.2.1. Traditional Photogrammetric Reconstruction

2.2.2. Neural Radiance Fields (NeRF) Reconstruction

2.3. Data Acquisition and Processing

2.3.1. Data Acquisition

2.3.2. Data Processing

3. Results

3.1. Reconstruction Efficiency Comparison

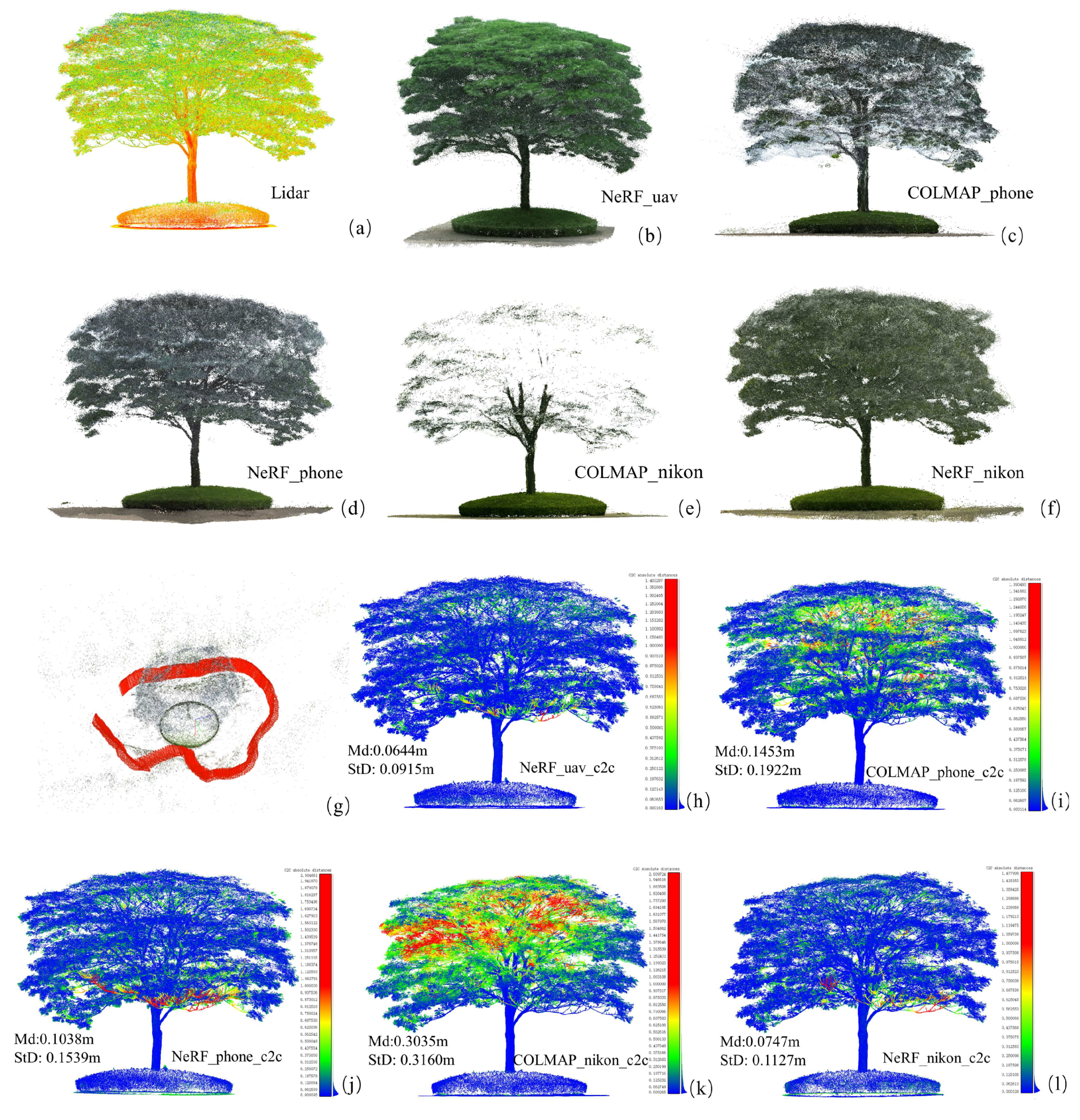

3.2. Point Cloud Direct Comparison

3.3. Extraction of Structural Parameters from Tree Point Cloud

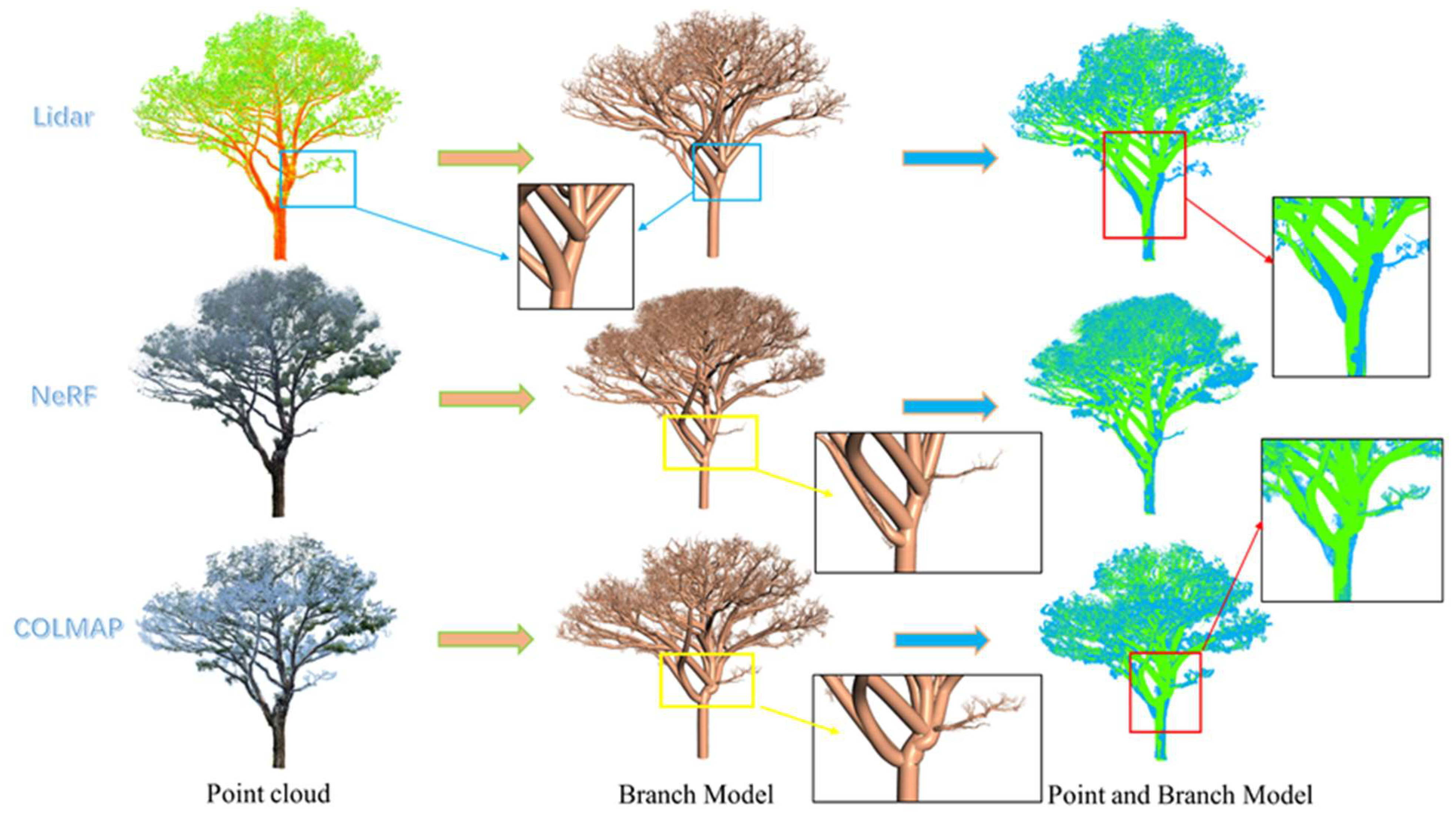

3.4. Comparison of 3D Tree Models Generated from Point Cloud

4. Discussion

5. Conclusions

- (1) The processing efficiency of the NeRF method is much higher than that of the traditional photogrammetric densification method (MVS), and NeRF also has less stringent requirements for image overlap.

- (2) For trees with sparse foliage or few leaves, both methods can reconstruct accurate 3D tree models; for trees with dense foliage, the reconstruction quality of NeRF is better, especially in the tree crown area. NeRF models tend to be noisy, however.

- (3) The traditional photogrammetric method is still more accurate than the NeRF method in extracting single-tree structural parameters, namely tree height and DBH; NeRF models are likely to overestimate tree height and underestimate DBH. However, canopy metrics (canopy width, height, area, volume, and so on) derived from the NeRF model are more accurate than those derived from the photogrammetric model.

- (4) The image acquisition method, the quality of the images (resolution and quantity), and the photographing environment all affect the accuracy and completeness of both NeRF and photogrammetric reconstruction results. Further research is needed to determine best practices.

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image Dataset | Number of Images | Image Resolution | Total Pixels (Millions) |
|---|---|---|---|
| Tree_1_phone | 118 | 2160 × 3840 | 979 |
| Tree_2_phone | 237 | 1080 × 1920 | 491 |
| Tree_2_nikon | 107/66 | 8598 × 5597 | 5149 |
| Tree_2_uav | 374 | 5472 × 3648 | 7466 |
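
The total pixel column in the table above can be reproduced as number of images × image width × image height. The short Python sketch below checks that arithmetic. The dictionary and function names are illustrative and not from the original study, and the use of 107 images for Tree_2_nikon (the table lists "107/66") is an assumption, chosen because it matches the tabulated total.

```python
# Image datasets from the table above: (number of images, width, height).
# Assumption: for Tree_2_nikon the table lists "107/66"; 107 is used here
# because it reproduces the tabulated total pixel count.
DATASETS = {
    "Tree_1_phone": (118, 2160, 3840),
    "Tree_2_phone": (237, 1080, 1920),
    "Tree_2_nikon": (107, 8598, 5597),
    "Tree_2_uav": (374, 5472, 3648),
}


def total_megapixels(n_images, width, height):
    """Return the total pixel count of a dataset in millions of pixels."""
    return n_images * width * height / 1e6


for name, (n_images, width, height) in DATASETS.items():
    total = total_megapixels(n_images, width, height)
    print(f"{name}: {total:.0f} million pixels")
```

Rounded to whole millions, the results agree with the tabulated values for all four datasets.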

| Method | Tree_1_phone | Tree_2_phone | Tree_2_nikon | Tree_2_uav |
|---|---|---|---|---|
| COLMAP | 98.003 | 102.816 | 50.673 | failed |
| NeRF | 11.5 | 12.0 | 12.5 | 14.0 |
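
To put the efficiency gap in the table above into a single number per dataset, the sketch below divides the COLMAP processing time by the NeRF processing time. The ratio is unit-free, so it does not depend on the time unit, which the table itself does not state. The variable and function names are illustrative assumptions, and the failed COLMAP run on Tree_2_uav is represented as `None`.

```python
# Processing times copied from the table above (time unit as reported in
# the source; the ratio computed below is unit-free).
# None marks the COLMAP run that failed on the UAV dataset.
COLMAP_TIME = {
    "Tree_1_phone": 98.003,
    "Tree_2_phone": 102.816,
    "Tree_2_nikon": 50.673,
    "Tree_2_uav": None,
}
NERF_TIME = {
    "Tree_1_phone": 11.5,
    "Tree_2_phone": 12.0,
    "Tree_2_nikon": 12.5,
    "Tree_2_uav": 14.0,
}


def speedup(colmap_time, nerf_time):
    """Return how many times faster NeRF was, or None if COLMAP failed."""
    if colmap_time is None:
        return None
    return colmap_time / nerf_time


for name in COLMAP_TIME:
    ratio = speedup(COLMAP_TIME[name], NERF_TIME[name])
    if ratio is None:
        print(f"{name}: COLMAP failed, no ratio available")
    else:
        print(f"{name}: NeRF is about {ratio:.1f}x faster")
```

On these figures NeRF is roughly four to nine times faster on the three datasets where COLMAP completed.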

| Tree ID | Model ID | Number of Points |
|---|---|---|
| Tree_1 | Tree_1_Lidar | 990,265 |
| Tree_1 | Tree_1_COLMAP | 1,506,021 |
| Tree_1 | Tree_1_NeRF | 1,275,360 |
| Tree_2 | Tree_2_Lidar | 2,986,309 |
| Tree_2 | Tree_2_phone_COLMAP | 1,746,868 |
| Tree_2 | Tree_2_phone_NeRF | 1,075,874 |
| Tree_2 | Tree_2_nikon_COLMAP | 580,714 |
| Tree_2 | Tree_2_nikon_NeRF | 1,986,197 |
| Tree_2 | Tree_2_uav_NeRF | 1,765,165 |

| Models | TH (m) | DBH (m) | CD (m) | CA (m²) | CV (m³) |
|---|---|---|---|---|---|
| Tree1_Lidar | 8.2 | 0.345 | 7.1 | 39.5 | 137.0 |
| Tree1_NeRF | 8.4 | 0.318 | 7.1 | 39.2 | 139.7 |
| Tree1_COLMAP | 8.1 | 0.349 | 7.0 | 38.9 | 138.0 |
| Tree2_Lidar | 13.6 | 0.546 | 16.3 | 208.8 | 1549.2 |
| Tree2_uav_NeRF | 14.0 | 0.479 | 16.0 | 201.0 | 1531.9 |
| Tree2_nikon_NeRF | 14.7 | 0.461 | 16.5 | 214.6 | 1627.0 |
| Tree2_phone_NeRF | 14.3 | 0.469 | 17.0 | 225.7 | 1693.1 |
| Tree2_phone_COLMAP | 13.8 | 0.562 | 16.7 | 220.3 | 1657.4 |
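
The tendency of NeRF models to overestimate tree height (TH) and underestimate DBH can be checked directly against the table above by taking the LiDAR rows as the reference. The sketch below computes signed errors (image-derived value minus LiDAR value) for TH and DBH only. The data layout and function name are illustrative assumptions, not the authors' evaluation code.

```python
# Reference values from the LiDAR rows of the table above.
LIDAR = {
    "Tree1": {"TH": 8.2, "DBH": 0.345},
    "Tree2": {"TH": 13.6, "DBH": 0.546},
}

# Image-derived models: (model name, tree, method, TH in m, DBH in m).
IMAGE_MODELS = [
    ("Tree1_NeRF", "Tree1", "NeRF", 8.4, 0.318),
    ("Tree1_COLMAP", "Tree1", "COLMAP", 8.1, 0.349),
    ("Tree2_uav_NeRF", "Tree2", "NeRF", 14.0, 0.479),
    ("Tree2_nikon_NeRF", "Tree2", "NeRF", 14.7, 0.461),
    ("Tree2_phone_NeRF", "Tree2", "NeRF", 14.3, 0.469),
    ("Tree2_phone_COLMAP", "Tree2", "COLMAP", 13.8, 0.562),
]


def signed_errors(models, reference):
    """Return per-model signed errors (estimate minus LiDAR reference)."""
    rows = []
    for name, tree, method, th, dbh in models:
        ref = reference[tree]
        rows.append({
            "model": name,
            "method": method,
            "th_error": th - ref["TH"],     # positive means overestimated
            "dbh_error": dbh - ref["DBH"],  # negative means underestimated
        })
    return rows


for row in signed_errors(IMAGE_MODELS, LIDAR):
    print(f"{row['model']}: TH {row['th_error']:+.2f} m, "
          f"DBH {row['dbh_error']:+.3f} m")
```

For every NeRF row the TH error is positive and the DBH error is negative, while the COLMAP rows stay closer to the LiDAR values for both parameters.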

| Models | TH (m) | DBH (m) | TL (m) |
|---|---|---|---|
| Tree_1_Lidar | 8.16 | 0.347 | 8.07 |
| Tree_1_NeRF | 8.32 | 0.321 | 7.95 |
| Tree_1_COLMAP | 8.02 | 0.346 | 8.04 |
| Tree_2_Lidar | 13.35 | 0.548 | 17.20 |
| Tree_2_uav_NeRF | 13.75 | 0.476 | 17.19 |
| Tree_2_nikon_NeRF | 13.55 | 0.468 | 18.0 |
| Tree_2_phone_NeRF | 13.89 | 0.502 | 18.22 |
| Tree_2_phone_COLMAP | 13.86 | 0.557 | 17.64 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, H.; Tian, G.; Chen, C. Evaluating the Point Cloud of Individual Trees Generated from Images Based on Neural Radiance Fields (NeRF) Method. Remote Sens. 2024, 16, 967. https://doi.org/10.3390/rs16060967
