Abstract

PolSAR image classification has attracted extensive research in recent decades. To improve PolSAR classification performance in the presence of speckle noise, this paper proposes an active complex-valued convolutional-wavelet neural network that incorporates the dual-tree complex wavelet transform (DT-CWT) and a Markov random field (MRF). In this approach, DT-CWT is introduced into the complex-valued convolutional neural network to suppress the speckle noise of PolSAR images and maintain the structures of learned feature maps. In addition, by applying active learning (AL), we iteratively select the most informative unlabeled training samples of PolSAR datasets. Moreover, MRF is utilized to obtain spatial local correlation information, which has been proven to be effective in improving classification performance. The experimental results on three benchmark PolSAR datasets demonstrate that the proposed method achieves a significant gain in classification effectiveness and robustness over several state-of-the-art deep learning methods.

1. Introduction

Polarimetric synthetic aperture radar (PolSAR) [1,2] captures the polarimetric characteristics of ground targets in addition to their scattering echo amplitude, phase, and frequency characteristics; it is one of the most important remote sensing modalities and is more sophisticated than the traditional SAR system. PolSAR obtains the target polarization scattering matrix by sending and receiving electromagnetic waves with different polarization modes in order to measure the ground target’s polarization scattering properties [3]. PolSAR terrain classification, which assigns a meaningful category to each pixel vector according to PolSAR image contents, has wide application prospects, such as sea monitoring, geological resource exploration, agriculture status assessment, navigation safety, and topographic mapping [4,5,6,7]. However, terrain classification using PolSAR image data has become a very challenging interpretation task due to limited labeled samples, unexploited spatial information, and speckle noise.

In the last few decades, PolSAR classification has been carried out with a wide variety of machine learning methods when limited numbers of labeled samples are obtainable. Kong et al. [8] developed a Bayesian classification approach to terrain cover identification using polarimetric data. Tao et al. [9] incorporated spatial and feature information into the k-nearest neighbor (KNN) classifier for PolSAR classification. Lardeux et al. [10] successfully applied a support vector machine (SVM) to multifrequency PolSAR classification. Ersahin et al. [11] proposed a spectral graph clustering technique to improve performance in the classification of PolSAR images. Bi et al. [12] employed discriminative clustering for unsupervised PolSAR image classification. Antropov et al. [13] adopted a probabilistic neural network (PNN) for land cover mapping using spaceborne PolSAR image data. Compared with the polarimetric decomposition technique, machine learning-based PolSAR classification is usually more robust and accurate.

Recently, a group of deep learning methods for PolSAR classification have been discussed in the literature and demonstrated to be powerful for acquiring deep representations and discriminative features from PolSAR data. Zhou et al. [14] used a deep convolutional neural network for supervised multilooked PolSAR classification. Liu et al. [15] suggested a Wishart deep belief network (W-DBN) for PolSAR classification by making full use of local spatial information. Jiao and Liu [16] designed a Wishart deep stacking network (W-DSN) for fast PolSAR classification. Zhang et al. [17] presented a complex-valued CNN (CV-CNN) for PolSAR classification by employing both amplitude and phase information. Bi et al. [18] proposed a graph-based semisupervised deep neural network for PolSAR image classification. However, the success of these deep learning methods for PolSAR classification depends on massive amounts of labeled samples, whereas annotated PolSAR data are difficult to obtain in practice because labeling samples is time-consuming and labor-intensive. Additionally, speckle noise can easily degrade the classification performance on PolSAR image data. Therefore, it is essential to develop a robust PolSAR classification technique that overcomes limited labeled samples and speckle noise by integrating phase and spatial information.

To tackle the problem of limited labeled samples, active learning (AL) is a powerful strategy for PolSAR classification since it can choose the most valuable samples of unlabeled data for annotation. AL has been widely applied in PolSAR and hyperspectral remote sensing images. Tuia et al. [19] proposed two AL methods for the semiautomatic definition of training samples for remote sensing image classification and applied them and SVM to the classification of VHR optical imagery and hyperspectral data. Tuia et al. [20] introduced a survey of AL for solving supervised remote sensing image classification tasks. In the literature, AL approaches concentrate on establishing new training sets by iteratively enhancing classification performance through sampling. Samat et al. [21] presented an AL ELM algorithm with fast operation and strong generalization for quad-polarimetric SAR classification. Liu et al. [22] proposed an active object-based classification algorithm with random forest for PolSAR imagery to enhance classification performance. Bi et al. [23] integrated AL and a fine-tuned CNN to improve the performance of supervised PolSAR image classification. Additionally, Li et al. [24] applied AL and loopy belief propagation to exploit the spectral and the spatial information of hyperspectral image data. Cao et al. [25] adopted deep learning and AL methods in a unified framework for hyperspectral image classification. Thus, AL is an effective method to decrease the cost of obtaining large labeled training samples for PolSAR image classification using deep learning methods.

As for speckle noise, CNN-based and CV-CNN-based methods for PolSAR image classification cannot effectively balance classification efficiency and classification performance. The wavelet transform- and complex wavelet transform-constrained pooling layers in CNN and CV-CNN can remove the speckle noise and have a better performance in PolSAR image classification. Additionally, wavelet transform domain-based CNN and CV-CNN can preserve PolSAR image edges, sharpness, and some other structures, while CNN and CV-CNN with conventional pooling have not yet taken into consideration the structure of the previous layer. Consequently, a rising number of wavelet transform domain-based deep learning algorithms have been developed to address various computer vision issues, including object categorization, picture super-resolution, etc. De et al. [26] developed two wavelet-based edge feature enhancements in the input layer to enhance the classification performance of CNN. Liu et al. [27] adapted a multi-level wavelet CNN algorithm and applied it to image restoration, including single-image super-resolution, image denoising, and object classification. Sahito et al. [28] applied scale-invariant deep learning based on stationary wavelet transform to single-image super-resolution and captured more information on the images. Therefore, using wavelet transform domain-based CNN and CV-CNN for PolSAR image classification is probably an effective way to preserve PolSAR image edges and sharpness while suppressing speckle noise.

Motivated by the discussions above, in this paper, we investigate the application of an active complex-valued convolutional-wavelet neural network and Markov random fields (ACV-CWNN-MRFs) in PolSAR image classification. Firstly, we incorporate the dual-tree complex wavelet transform into CV-CNN (CV-CWNN) for PolSAR image classification to decrease the level of speckle noise. The CV-CWNN can preserve texture and structural details and effectively enhance the classification performance of PolSAR images. Secondly, to address the issue of limited labeled samples, we take advantage of the potential of CV-CWNN in an active learning scheme (ACV-CWNN). Finally, we refine the PolSAR classification maps of ACV-CWNN via Markov random fields (MRFs), which utilize spatial contextual information to characterize the local correlation and implement the smooth alignment of PolSAR image edges.

The primary contributions of this research to the PolSAR classification literature are threefold:

- We apply a dual-tree complex wavelet transform-constrained pooling layer to CV-CNN classification from PolSAR images to decrease the level of speckle noise and preserve some structure features (e.g., edges, sharpness) at the pooling layer.

- We integrate a combination of AL and an MRF model-based framework into CV-CWNN for the PolSAR image classification task, which not only obtains the most informative unlabeled training samples based on the output of CV-CWNN, but also achieves spatial local correlation information to improve the classification performance.

- The experimental results on several PolSAR benchmark datasets demonstrate that our proposed ACV-CWNN-MRF PolSAR classification algorithm outperforms other state-of-the-art CNN-based and CV-CNN-based PolSAR algorithms with fewer labeled samples, especially with speckle noise backgrounds.

The rest of this paper is organized as follows. In Section 2, we describe in detail the development of ACV-CWNN-MRF for PolSAR classification. In Section 3, we present a six-dimensional complex matrix representation of PolSAR data. In Section 4, we report and analyze the PolSAR image classification experiments and results on three benchmark datasets. A comparison of the results for some state-of-the-art deep learning methods is discussed in Section 5. The conclusions are provided in Section 6.

2. Methods

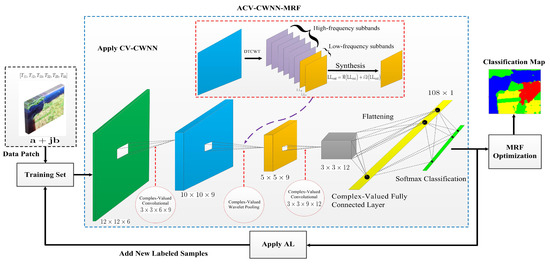

In this section, we first establish the basic theory of CV-CWNN by integrating the dual-tree complex wavelet transform (DT-CWT) for the PolSAR image classification task. We then incorporate CV-CWNN into the active learning (AL) and Markov random field (MRF) frameworks, yielding ACV-CWNN-MRF. The configuration of the ACV-CWNN-MRF architecture for PolSAR image classification is depicted in Figure 1.

Figure 1.

The configuration of ACV-CWNN-MRF architecture for PolSAR image classification.

2.1. The Architecture of CV-CWNN

Complex-valued convolutional-wavelet neural network (CV-CWNN) is a variant of the real-valued convolutional neural network (RV-CNN) that uses signals or image amplitude and phase information. The framework of CV-CWNN consists of five main processes: the complex-valued convolutional layer, complex-valued activation layer, complex-valued wavelet pooling layer, complex-valued fully connected layer, and complex-valued output layer. CV-CWNN is designed to deal with high-dimensional complex-valued data, and has superiority in preserving the structure of the previous layer and suppressing speckle noise at the same time over traditional CV-CNN [29,30] and RV-CNN.

Assume that and denote the PolSAR image dataset and corresponding ground-truth mask, respectively, where and are the CV and RV domains, respectively, P and Q are the range and azimuth dimensions of the PolSAR image, and R is the number of band channels. As depicted in Figure 1, the input of CV-CWNN or ACV-CWNN-MRF is the CV data patches obtained by clipping the PolSAR dataset , where the nth input CV patch and is the number of CV patches. The patch size, which affects the risk of overfitting and computational efficiency, plays an important role. We select the input patch dataset with a size of . The label corresponding to the nth input CV patch is , where C is the number of PolSAR target classes.

(1) Complex-Valued Convolutional Layer: In the CV convolutional layer, the lth () CV-CWNN convolutional layer’s output feature maps () are calculated by applying the CV convolutional operation to the (l−1)th layer’s output feature maps (), where i and j are the number of feature maps in the lth and (l−1)th layers, respectively. The CV convolution with a bank of CV filters and a CV bias is expressed as

where and are, respectively, the real and imaginary parts of a CV number · and ∗ denotes the CV convolution operation. The parameters and of CV-CWNN in the CV convolutional layer will be trained. For a CV convolutional operation with a stride size of S and a zero-padding size of P, the sizes of the CV output feature maps of lth CV-CWNN convolutional layer are represented as and .
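As a rough illustration of the CV convolution described above, the following sketch (plain Python, not the authors' implementation) assembles a complex-valued convolution from four real-valued ones, using the identity $(w_r + j w_i) \ast (x_r + j x_i) = (w_r \ast x_r - w_i \ast x_i) + j\,(w_r \ast x_i + w_i \ast x_r)$. All function names, the kernel-size argument `k`, and the output-size helper are assumptions introduced here for illustration; the size rule is the usual one for strided, zero-padded convolutions and is only assumed to match the layer sizes stated in the text.

```python
def correlate2d_valid(x, w, stride=1):
    """'Valid' sliding-window product-sum, as used by CNN layers (no kernel flip)."""
    kh, kw = len(w), len(w[0])
    out_h = (len(x) - kh) // stride + 1
    out_w = (len(x[0]) - kw) // stride + 1
    return [[sum(w[a][b] * x[i * stride + a][j * stride + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def real_imag(m):
    """Split a complex-valued map into its real and imaginary maps."""
    return ([[v.real for v in row] for row in m],
            [[v.imag for v in row] for row in m])


def complex_conv2d(x, w, bias=0j, stride=1):
    """Complex convolution assembled from four real-valued convolutions."""
    xr, xi = real_imag(x)
    wr, wi = real_imag(w)
    rr = correlate2d_valid(xr, wr, stride)  # w_r * x_r
    ii = correlate2d_valid(xi, wi, stride)  # w_i * x_i
    ri = correlate2d_valid(xi, wr, stride)  # w_r * x_i
    ir = correlate2d_valid(xr, wi, stride)  # w_i * x_r
    return [[complex(rr[i][j] - ii[i][j], ri[i][j] + ir[i][j]) + bias
             for j in range(len(rr[0]))]
            for i in range(len(rr))]


def conv_output_size(n, k, stride=1, pad=0):
    """Output length along one axis for input n, kernel k, stride and zero-padding."""
    return (n - k + 2 * pad) // stride + 1


# Hypothetical 3x3 complex patch and 2x2 complex filter.
x = [[1 + 2j, -1j, 2 + 0j],
     [3 - 1j, 1 + 1j, -2j],
     [0j, 2 + 2j, 1 - 1j]]
w = [[1 - 1j, 2j],
     [-1 + 0j, 1 + 1j]]
print(complex_conv2d(x, w, bias=0.5 + 0.5j))
print(conv_output_size(12, 3, stride=1, pad=0))
```

Splitting the operation this way is what lets a complex-valued layer be built from ordinary real-valued convolution routines; the result agrees with applying the sliding product-sum directly to complex numbers.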

(2) Complex-Valued Activation Layer: The linear CV features can be extracted by each CV convolutional layer. To achieve improved performance and robustness of CV-CWNN or ACV-CWNN-MRF, we must apply a nonlinear transformation to these linear CV features. In CV deep learning, the most popular CV activation functions are the CV sigmoid function (Sig) and the CV rectified linear unit (RLU) [31,32]. The output () of the CV activation layer can be calculated as

or

Note that the CV activation functions (e.g., RLU and Sig) are applied separately to the real and imaginary parts of the input, which greatly simplifies the complex-valued back-propagation of CV-CWNN described in Section 2.2.
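The split form of the activation can be sketched in a few lines. This is a minimal illustration under the assumption that the real-valued sigmoid and rectifier are applied independently to the real and imaginary parts, as the text describes; the function names are hypothetical.

```python
import math


def sigmoid(t):
    """Numerically stable real-valued sigmoid."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def relu(t):
    """Real-valued rectified linear unit."""
    return max(0.0, t)


def split_activation(z, f):
    """Apply the real function f separately to Re(z) and Im(z)."""
    return complex(f(z.real), f(z.imag))


def activate_map(feature_map, f):
    """Apply a split activation to every entry of a complex feature map."""
    return [[split_activation(z, f) for z in row] for row in feature_map]


print(activate_map([[0.5 - 2j, -1 + 3j]], relu))
print(split_activation(1 - 1j, sigmoid))
```

Because each part passes through an ordinary real function, the derivative needed in back-propagation is just the real derivative of that function evaluated on each part.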

(3) Complex-Valued Wavelet Pooling Layer: A CV pooling operator in CV-CNN reduces the width and height of the input features without changing the number of band channels. The CV average and the CV maximum of a rectangular CV neighborhood, computed by down-sampling the real and imaginary parts, have become the two recommended pooling functions [33]. The CV convolutional and activation layers extract some pivotal structures (e.g., edges, sharpness). However, the conventional CV average and CV maximum pooling in CV-CNN do not take the pivotal structures of the previous layer into consideration. Additionally, the intrinsic speckle noise of the PolSAR images affects the CV-CNN’s classification performance. Thus, it is necessary to preserve more pivotal structures at the CV pooling layer while suppressing the speckle noise.

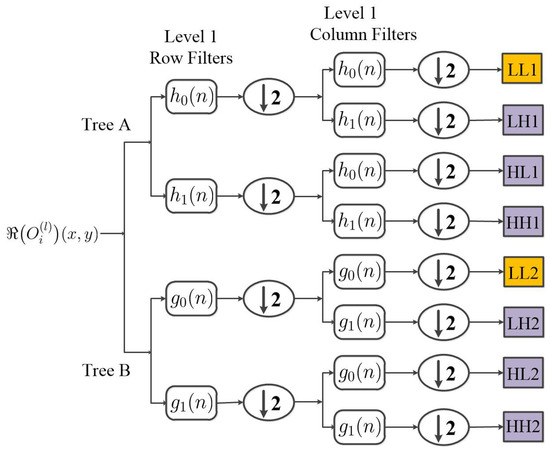

To address the above-mentioned issue, we utilize a dual-tree complex wavelet transform (DTCWT) [34,35]-constrained pooling operator to replace the CV average and CV maximum pooling operators. By means of the CV wavelet pooling operator based on DTCWT, the pivotal structures can be preserved and the speckle noise can be suppressed at the same time. The DTCWT-constrained pooling operator in CV-CWNN is performed with the real part of the output of the CV activation layers. From a multi-resolution analysis perspective, can be perfectly reconstructed from a linear combination of a CV scaling function with scale and six CV wavelet functions oriented at angle , which is expressed as

where denotes the pixel coordinate in the real part of feature map , and and denote the scaling coefficients and wavelet coefficients, respectively. Moreover, DTCWT is designed to have five desired properties in perfect reconstruction, approximate shift invariance, good directional selectivity, efficient order-N computation, and limited redundancy [36]. DTCWT with tree A (odd-length filters) and tree B (even-length filters) for pooling layers in CV-CWNN is illustrated in Figure 2. The level 1 filter pairs, and , , and , in Figure 2 are low-pass/high-pass and conjugate orthogonality filter pairs. Thus, for the real part of each input feature map , DTCWT can produce eight complex bandpass subimages:

where and are two complex low-frequency bandpasses subimages, and , , , , , and are six complex high-frequency bandpass subimages. Then, the average of the real and imaginary parts of the low-frequency bandpass subimages and is calculated as the real part of the output feature of the CV wavelet pooling layer in CV-CWNN, which is expressed as

where is the real part of the output feature of the CV wavelet pooling layer. For the same operation of , we can obtain , which is the imaginary part of the output feature of the CV wavelet pooling layer. In fact, the low-frequency bandpass subimages in CV-CWNN are retained in order to preserve some pivotal structures of the input, and the high-frequency bandpass subimages are discarded in order to suppress the speckle noise.
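The principle of retaining the low-frequency subband and discarding the high-frequency ones can be illustrated with a much simpler transform. The sketch below uses a one-level 2-D Haar decomposition as a deliberately simple stand-in for the DT-CWT filter banks, so it is not the DT-CWT itself: it produces one low-pass and three detail subbands rather than two low-pass and six oriented subbands. All names are hypothetical, and input maps are assumed to have even height and width.

```python
def haar_subbands(x):
    """One-level 2-D Haar analysis of a real map: returns (low, (d1, d2, d3))."""
    h, w = len(x) // 2, len(x[0]) // 2
    low = [[0.0] * w for _ in range(h)]
    d1 = [[0.0] * w for _ in range(h)]
    d2 = [[0.0] * w for _ in range(h)]
    d3 = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = x[2 * i][2 * j], x[2 * i][2 * j + 1]
            c, d = x[2 * i + 1][2 * j], x[2 * i + 1][2 * j + 1]
            low[i][j] = (a + b + c + d) / 2  # low-pass in both directions
            d1[i][j] = (a - b + c - d) / 2   # detail across columns
            d2[i][j] = (a + b - c - d) / 2   # detail across rows
            d3[i][j] = (a - b - c + d) / 2   # diagonal detail
    return low, (d1, d2, d3)


def haar_reconstruct(low, details):
    """Inverse of haar_subbands, showing that no information is lost before pooling."""
    d1, d2, d3 = details
    h, w = len(low), len(low[0])
    x = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, p, q, r = low[i][j], d1[i][j], d2[i][j], d3[i][j]
            x[2 * i][2 * j] = (s + p + q + r) / 2
            x[2 * i][2 * j + 1] = (s - p + q - r) / 2
            x[2 * i + 1][2 * j] = (s + p - q - r) / 2
            x[2 * i + 1][2 * j + 1] = (s - p - q + r) / 2
    return x


def wavelet_pool(z):
    """Pool a complex map: keep the low-pass subband of Re and Im, drop the details."""
    low_re, _ = haar_subbands([[v.real for v in row] for row in z])
    low_im, _ = haar_subbands([[v.imag for v in row] for row in z])
    return [[complex(r, m) for r, m in zip(row_re, row_im)]
            for row_re, row_im in zip(low_re, low_im)]


feature_map = [[1 + 2j, 1 + 2j, 3 - 1j, 5 - 1j],
               [1 + 2j, 1 + 2j, 3 - 1j, 5 - 1j]]
print(wavelet_pool(feature_map))
```

As with the pooling layer described in the text, the output has half the height and width of the input, and the real and imaginary parts are handled by the same operation.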

Figure 2.

Flowchart of 2D DTCWT for level .

(4) Complex-Valued Fully Connected Layer: The CV fully connected layer converts the CV data stream into 1-dimensional data to perform category determination. On top of several combinations of CV convolutional layers, CV activation layers, and CV wavelet pooling layers, one or two CV fully connected layers are employed in ACV-CWNN, which is considered as a special case of a CV convolutional layer. Each output neuron in the CV fully connected layer is in connection with the input neurons in the previous layer. Moreover, the number of output neurons is not fixed. The output CV feature vector of the CV fully connected layer is calculated with the output feature map of the previous layer, which is expressed as

where f is the Sig or RLU function, and K is the number of input neurons in the Lth CV fully connected layer.

(5) Complex-Valued Output Layer: A CV softmax operation is applied to the CV output layer of CV-CWNN for PolSAR images classification. The output of the CV softmax operation is a C-dimensional CV one-hot vector, while C is the number of PolSAR target classes. Each element of the CV one-hot vector varies in the range , and all the elements sum to , which satisfies the probability-like property. The input of the CV softmax operation is the th CV fully connected layer’s output feature map . Then, the CV softmax operation for the ith element of the CV one-hot vector can be represented as

where and the output denotes the CV probability belonging to class i for one CV training sample.

2.2. The Complex-Valued Back-Propagation of CV-CWNN

In this paper, we utilize the Sig function in the CV activation layer and CV fully connected layer. The error between the Lth CV output layer’s output and the label of the nth single CV patch can be directly measured as

The MSE loss function is widely used in RV-CNN and CV-CNN algorithms. However, CV data patches may be contaminated by non-Gaussian noise or outliers. For PolSAR classification with non-Gaussian noise and outliers, the cross-entropy loss function might perform better than the MSE loss function, since the MSE loss function is optimal only for samples with Gaussian noise and introduces the vanishing-gradient problem. Therefore, the CV cross-entropy loss function is chosen to train the CV-CWNN network, which is given by

In the process of the complex-valued back-propagation [37,38] of CV-CWNN, the CV intermediate quantity with the output of the previous layer, also called the CV backward error term, is defined as follows

Thus, the parameters and in CV-CWNN can be adjusted iteratively by J and the learning rate in terms of

and

where t is the training time, and is the complex conjugate. The parameters are adjusted until the value of loss function no longer decreases. Mathematically, can be calculated for each layer in the following subsection.

(1) CV Output Layer: There are no parameters to be learned in the CV output layer. Combining (2) and (3), the CV backward error term of the CV output layer can be calculated as

(2) CV Fully Connected Layer: Combining (1) and (3), the CV backward error term of the th CV fully connected layer can be expressed as

In the previous layer, is affected by the backward error term through all the units in . Considering (6), the CV recurrence relation from th layer to lth layers can be formulated as

(3) CV Convolutional Layer and Activation Layer: The CV backward error term of lth CV convolutional layer is related to the CV backward error term of the CV wavelet pooling layer and the CV wavelet pooling weight . The weight in a pooling layer map is calculated as

where and are the CV scaling functions of tree A and tree B respectively.

To keep the same size as the CV convolutional layer’s map, we upsample by repeating each pixel 2 times in horizontal and vertical directions, denoted by . Similar to the derivation (7) of the hidden layer in the CV fully connected layer, the CV backward error term of the CV convolutional layer and activation layer can be expressed as

(4) CV Wavelet Pooling Layer: There are no parameters to be learned in the CV wavelet pooling layer. According to (7), the CV backward error term of the CV wavelet pooling layer can be calculated as

2.3. AL and MRF-Based CV-CWNN PolSAR Classification

In this subsection, we briefly introduce the core theory of AL and MRF for PolSAR classification using CV-CWNN.

(1) BvSB-Based AL: The basic idea of AL is to enlarge the training dataset iteratively by selecting the most informative unlabeled samples. Many effective AL criteria have been proposed to measure the informativeness of candidate sets to query for annotations, such as random selection (RS), mutual information (MI)-based criteria [39], the breaking ties (BT) algorithm [40], the entropy measure (EP) [41], and the best-versus-second-best (BvSB) measure [42]. The EP and BvSB measures are computed from the probability estimates of candidate pixels. Because our CV-CWNN model supplies pixel-wise class probabilities and performs the multiclass PolSAR classification task, it is beneficial to adopt the BvSB measure as the AL strategy in our CV-CWNN (i.e., ACV-CWNN). Specifically, the BvSB measure can be defined as

where , and denote the best and second best class membership probability outputs from the CV-CWNN of the CV data patch , respectively, and is the candidate pool.

Adopting the BvSB-based AL strategy in CV-CWNN is essential because the PolSAR classification task lacks sufficient labeled training samples. Additionally, it should be emphasized that (10) assumes that only one sample is annotated in each iteration. In practice, however, more than one candidate is annotated per iteration.
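A minimal sketch of BvSB selection follows, assuming each candidate in the pool comes with a vector of class membership probabilities from the classifier. The function names and the batch size parameter `k` are hypothetical; `k` reflects the remark that several candidates are annotated per iteration.

```python
def bvsb_margin(probs):
    """Gap between the best and second-best class probabilities of one candidate."""
    ranked = sorted(probs, reverse=True)
    return ranked[0] - ranked[1]


def select_bvsb(pool_probs, k=1):
    """Indices of the k candidates with the smallest margin (the most ambiguous ones)."""
    order = sorted(range(len(pool_probs)),
                   key=lambda i: bvsb_margin(pool_probs[i]))
    return order[:k]


# Hypothetical class probabilities for three unlabeled patches.
pool = [[0.90, 0.05, 0.05],
        [0.40, 0.35, 0.25],
        [0.50, 0.10, 0.40]]
print(select_bvsb(pool, k=2))
```

A small margin means the classifier hesitates between two classes, so annotating that sample is expected to be more informative than annotating one it already classifies with confidence.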

(2) MRF-Based Optimization: In order to improve the classification performance of ACV-CWNN, we utilize MRF [12,43] to model the spatial correlation of PolSAR neighboring pixels. The MAP-MRF framework makes the interpixel class dependence assumption that spatially neighboring pixels are likely to belong to the same class. According to the Hammersley–Clifford theorem, the final MRF classification model can thus be formulated as

where is a label set for C classes, is a class label set for CV data patches , is the Kronecker delta function (i.e., if , and otherwise) to count the support from the neighboring pixels if the current pixel is labeled as , is the neighbor of pixel , and () is a constant that controls the importance of the neighboring pixels.

Solving the MRF model (11) is a combinatorial optimization problem, which is NP-hard. Several approximate algorithms, such as graph cut [44], message passing [45], and loopy belief propagation [46], have been proposed to obtain near-optimal solutions. In this paper, we adopt loopy belief propagation since it generally converges in fewer than ten iterations.
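To make the role of the neighborhood term concrete, the sketch below refines a label map by greedily maximizing, at each pixel, the log class probability plus a weight times the number of neighbors sharing the label. Two assumptions are made loudly here: the objective is taken to have this log-probability-plus-Kronecker-delta form, and iterated conditional modes (ICM) is used as a simple stand-in solver instead of the loopy belief propagation adopted in the paper. The names `icm_refine` and `beta` and the 4-connected neighborhood are illustrative choices.

```python
import math


def neighbors4(i, j, h, w):
    """Yield the 4-connected neighbours of pixel (i, j) inside an h x w grid."""
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ni, nj = i + di, j + dj
        if 0 <= ni < h and 0 <= nj < w:
            yield ni, nj


def local_score(probs, labels, i, j, c, beta):
    """log p(c | x_ij) plus beta times the number of neighbours labelled c."""
    unary = math.log(probs[i][j][c] + 1e-12)
    support = sum(1 for ni, nj in neighbors4(i, j, len(labels), len(labels[0]))
                  if labels[ni][nj] == c)
    return unary + beta * support


def icm_refine(probs, beta=1.0, max_iters=10):
    """Greedy pixel-wise refinement of the maximum-probability label map."""
    h, w, n_classes = len(probs), len(probs[0]), len(probs[0][0])
    labels = [[max(range(n_classes), key=probs[i][j].__getitem__)
               for j in range(w)] for i in range(h)]
    for _ in range(max_iters):
        changed = False
        for i in range(h):
            for j in range(w):
                best = max(range(n_classes),
                           key=lambda c: local_score(probs, labels, i, j, c, beta))
                if best != labels[i][j]:
                    labels[i][j], changed = best, True
        if not changed:
            break
    return labels


# Hypothetical 3x3 probability map: one weakly misclassified pixel in the centre.
probs = [[[0.9, 0.1] for _ in range(3)] for _ in range(3)]
probs[1][1] = [0.45, 0.55]
print(icm_refine(probs, beta=1.0))
```

With `beta = 0` the neighborhood term vanishes and the pixel-wise decisions are returned unchanged; larger values let the surrounding labels override weak pixel-wise evidence, which is the smoothing effect attributed to the MRF step.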

3. PolSAR Data Processing

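For PolSAR images, the complex scattering matrix $\mathbf{S}$ is usually used to capture the scattering characteristics of a single target through HH, HV, VH, and VV polarization channels, which can be expressed as

$$\mathbf{S} = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix},$$

where “H” and “V” denote the orthogonal horizontal and vertical polarization directions, respectively, $S_{HV}$ is the complex scattering coefficient of horizontal transmitting and vertical receiving polarization, and the other coefficients are similarly defined. In the case of reciprocal backscattering, $S_{HV} = S_{VH}$, and $\mathbf{S}$ based on the Pauli basis [47] can be represented by a complex scattering vector $\mathbf{k}$,

$$\mathbf{k} = \frac{1}{\sqrt{2}} \left[ S_{HH} + S_{VV},\; S_{HH} - S_{VV},\; 2 S_{HV} \right]^{\mathrm{T}},$$

where superscript $\mathrm{T}$ denotes the matrix transpose.

Thus, the coherency matrix $\mathbf{T}$ of PolSAR data can be obtained as follows:

$$\mathbf{T} = \left\langle \mathbf{k} \mathbf{k}^{\mathrm{H}} \right\rangle = \begin{bmatrix} T_{11} & T_{12} & T_{13} \\ T_{21} & T_{22} & T_{23} \\ T_{31} & T_{32} & T_{33} \end{bmatrix}, \qquad T_{mn} = \left\langle k_m k_n^{*} \right\rangle,$$

where superscripts $\mathrm{H}$ and $*$ denote the conjugate transpose and conjugate operation, respectively, and $\langle \cdot \rangle$ denotes averaging over looks. Therefore, the upper triangular elements ($T_{11}$, $T_{12}$, $T_{13}$, $T_{22}$, $T_{23}$, $T_{33}$) of the coherency matrix are used as the input dataset of ACV-CWNN-MRF, and $R = 6$.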
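The construction of the six input channels can be sketched as follows, assuming the standard Pauli scattering vector $\frac{1}{\sqrt{2}}[S_{HH}+S_{VV},\, S_{HH}-S_{VV},\, 2S_{HV}]^{\mathrm{T}}$ under reciprocity and a coherency matrix formed by averaging outer products over looks. The function names and the sample scattering coefficients are hypothetical.

```python
import math


def pauli_vector(s_hh, s_hv, s_vv):
    """Pauli scattering vector under reciprocity (S_HV = S_VH)."""
    c = 1.0 / math.sqrt(2.0)
    return [c * (s_hh + s_vv), c * (s_hh - s_vv), c * 2.0 * s_hv]


def coherency_matrix(vectors):
    """Average of the outer products k k^H over a list of Pauli vectors (looks)."""
    n = len(vectors)
    return [[sum(k[m] * k[q].conjugate() for k in vectors) / n
             for q in range(3)]
            for m in range(3)]


def upper_triangle(t):
    """The six entries T11, T12, T13, T22, T23, T33 used as input channels."""
    return [t[0][0], t[0][1], t[0][2], t[1][1], t[1][2], t[2][2]]


# Two hypothetical looks of the same resolution cell.
looks = [pauli_vector(1 + 1j, 0.5j, 2 - 1j),
         pauli_vector(0.5 + 0j, 1 - 1j, -1j)]
t = coherency_matrix(looks)
print(upper_triangle(t))
```

The matrix is Hermitian, so the lower triangle carries no extra information; this is why the upper triangular elements suffice, with real diagonal entries and complex off-diagonal ones.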

After the preprocessing of the input datasets, such as expansion operation, data augmentation (DA), and batch normalization (BN), we can obtain a higher classification accuracy for PolSAR images.

4. Results

In order to verify the effectiveness of the proposed ACV-CWNN-MRF approach, a series of experiments on three real PolSAR datasets are analyzed and compared with some state-of-the-art deep learning-based methods. The overall accuracy (OA), average accuracy (AA), Kappa coefficient (κ), and the confusion matrix are used to evaluate the performance of the proposed ACV-CWNN-MRF method. We randomly select some samples as the training dataset and use the remaining samples as the testing dataset. Details about the three benchmark PolSAR datasets are given below.
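For reference, the three scalar metrics can all be derived from the confusion matrix. The sketch below uses their usual definitions and assumes rows index the true class and columns the predicted class, and that every class has at least one test sample; the function name and the example matrix are hypothetical.

```python
def classification_metrics(cm):
    """Return (OA, AA, kappa) from a square confusion matrix cm[true][predicted]."""
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n_classes))
    oa = correct / total
    # AA: mean of the per-class accuracies (recall of each class).
    aa = sum(cm[i][i] / sum(cm[i]) for i in range(n_classes)) / n_classes
    # Expected chance agreement from the row and column marginals.
    pe = sum(sum(cm[i]) * sum(row[i] for row in cm)
             for i in range(n_classes)) / (total * total)
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Hypothetical two-class confusion matrix.
cm = [[40, 10],
      [5, 45]]
print(classification_metrics(cm))
```

OA weights every test sample equally, whereas AA weights every class equally, so the two diverge when class sizes are unbalanced; the Kappa coefficient discounts the agreement expected by chance.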

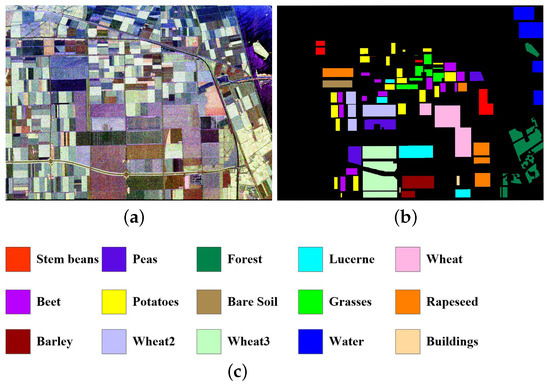

(1) Flevoland Dataset: This dataset was acquired by the NASA/JPL AIRSAR platform over an agricultural area of the Netherlands in August 1989, and is a subset of an L-band, full PolSAR image with a range resolution of m and an azimuth resolution of m. There are, in total, 15 identified categories, including stem beans, beet, barley, peas, potatoes, three types of wheat, forest, bare soil, lucerne, grasses, water, rapeseed, and buildings. The Pauli RGB image of the Flevoland dataset is shown in Figure 3a, whose size is pixels. A ground-truth map of the 15 categories and a corresponding color legend of the ground-truth map are shown in Figure 3b,c, respectively.

Figure 3.

Flevoland dataset. (a) Pauli RGB image of the target scene. (b) Ground-truth map of the 15 categories. (c) Color legend of the ground-truth map.

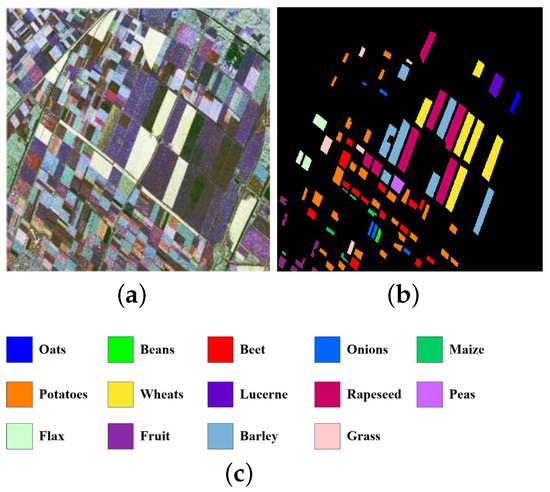

(2) Benchmark Dataset: The Benchmark PolSAR dataset, another L-band full PolSAR image, was acquired over the Flevoland area in 1991 and is widely used for PolSAR image classification research. The size of the PolSAR image is pixels. There are, in total, 14 identified categories, including oats, potatoes, flax, beans, wheat, fruit, beet, lucerne, barley, onions, rapeseed, grass, maize, and peas. The Pauli RGB image of the Benchmark dataset, a ground-truth map of the 14 categories, and a corresponding color legend of the ground-truth map are shown in Figure 4a–c, respectively.

Figure 4.

Benchmark dataset. (a) Pauli RGB image of the target scene. (b) Ground-truth map of the 14 categories. (c) Color legend of the ground-truth map.

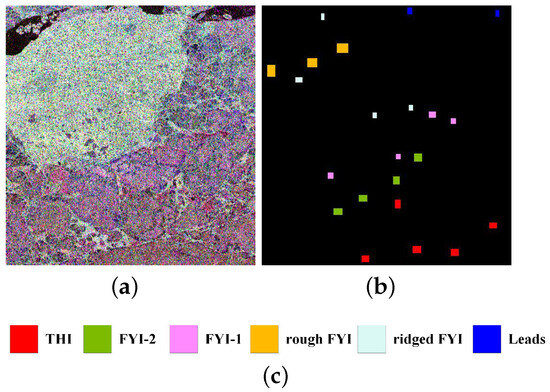

(3) CONVAIR Ice Dataset: The third dataset comprises multilook CONVAIR C-band full PolSAR data obtained over an ice area in Canada in 2001. The original PolSAR image has dimensions of pixels with a spatial resolution of 4 m × 0.43 m. This Pauli RGB image with pixels in Figure 5a was produced by using 10x Azimuth MultiLooking during the CONVAIR data extraction and conversion process. There are, in total, five identified categories, including leads, ThI (thin ice, new forming ice), rough FYI (rough First-Year Ice), ridged FYI (ridged First-Year Ice), FYI-1 (floes, far range), and FYI-2 (floes, near range). A ground-truth map of the five categories and a corresponding color legend of the ground-truth map are shown in Figure 5b,c, respectively.

Figure 5.

CONVAIR Ice dataset. (a) Pauli RGB image of the target scene. (b) Ground-truth map of the 5 categories. (c) Color legend of the ground-truth map.

4.1. Parameter Setting

In this subsection, we conduct an experiment to evaluate the effectiveness of our proposed ACV-CWNN-MRF method for PolSAR image classification incorporating four key strategies: (1) batch normalization (BN); (2) data augmentation (DA); (3) active learning (AL); (4) MRF optimization. In this experiment, the baseline method is CV-CWNN. To discuss the effectiveness of these strategies, we establish four comparison methods which gradually add each of the other techniques.

- (1) CV-CWNN: The method that is introduced in Section 2.1.

- (2) CV-CWNN + BN (CV-CWNN-B): A method that combines the CV-CWNN method and BN strategy.

- (3) CV-CWNN + BN + DA (CV-CWNN-BD): A method that incorporates the CV-CWNN-B method with the DA strategy.

- (4) CV-CWNN + BN + DA + AL (ACV-CWNN-BD): A method that integrates the AL strategy with the CV-CWNN-BD method.

- (5) CV-CWNN + BN + DA + AL + MRF (ACV-CWNN-MRF): A method that integrates the MRF optimization strategy with the ACV-CWNN-BD method.

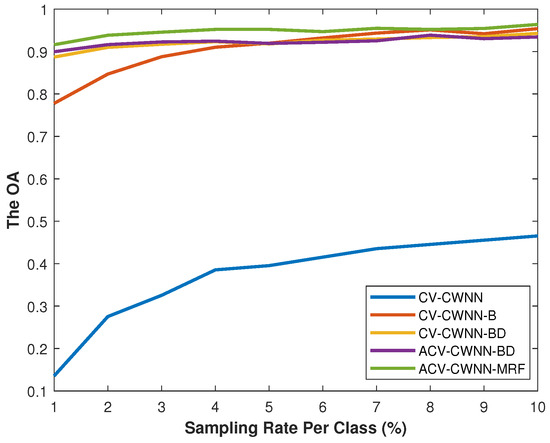

We take the Flevoland dataset to construct the experiment, and the relevant experimental parameters for the CV-CWNN-based methods are set as follows. The learning rate is set to 0.6. The batch size is empirically set to 128. For all the methods, we conduct two rounds of training and randomly select different sampling rates of the available labeled samples for training. For non-AL-based methods, including CV-CWNN, CV-CWNN-B, and CV-CWNN-BD, each round contains 40 training epochs until the training model converges. For AL-based methods, including ACV-CWNN-BD and ACV-CWNN-MRF, the first round contains 15 training epochs, while the second round contains 20 training epochs. Specifically, for the first round, we randomly choose 500 labeled samples as the training dataset and use the remaining samples as the testing dataset. For the second round, we randomly add 50 samples to the training set for the non-AL-based methods and actively select 50 samples to enlarge the training set for the AL-based methods. We compute the average OA and the standard deviation of the CV-CWNN-based methods over 5 independent runs. The experimental results are recorded in Table 1.

Table 1.

The average OA and the standard deviation (%) of CV-CWNN-based methods with different strategies for PolSAR classification.

From Table 1, we can observe by column that each method performs better in the second round, because samples are added to the training dataset after the first round. From the results by row, we observe that each strategy can improve classification and recognition performance. Specifically, CV-CWNN-B first obtains a classification OA improvement of up to compared with CV-CWNN. Second, the DA strategy achieves an increase of approximately in OA, as shown by comparing CV-CWNN-BD and CV-CWNN-B. Next, compared with CV-CWNN-B, ACV-CWNN-BD with the AL strategy increases OA by about . Finally, the MRF strategy further improves the classification OA by about . Therefore, each strategy used in the CV-CWNN-based methods improves the overall classification performance.

Second, in order to evaluate the performance of the CV-CWNN based methods and investigate an optimal sampling rate, we carry out the contrasting experiments on the overall accuracy (OA) versus different sampling rate per class. As can be seen from Figure 6, when the sampling rate is , the OA of ACV-CWNN-MRF is approximately , and as the sampling rate approaches , the accuracy quickly rises to . It follows that for the Flevoland data set, a sample rate is sufficient for ACV-CWNN-MRF. Indeed, it is observable from Figure 6 that as the number of training samples increases, the accuracy of all CV-CWNN based classification methods improves. Additionally, it is evident that each technique is capable of enhancing the classification performance of the CV-CWNN based model to a certain extent.

Figure 6.

The overall accuracy (OA) obtained by all CV-CWNN based methods with different numbers of training samples on the Flevoland dataset.

4.2. Effectiveness Verification

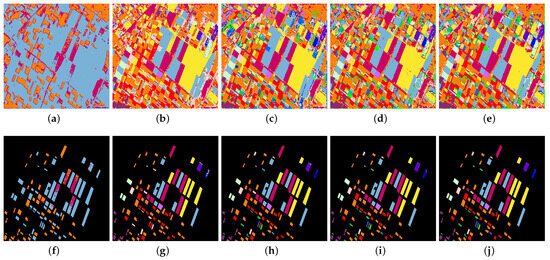

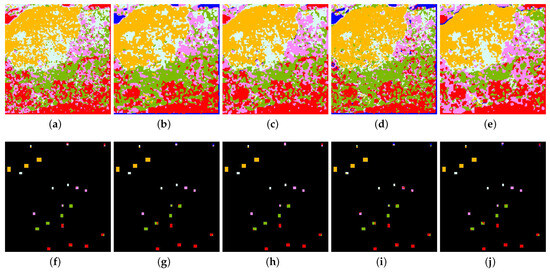

We present the performance and analysis of our proposed method on the Benchmark dataset. Following the above discussion, we randomly select a fraction of the available labeled samples from each class for training. For the AL based methods, the first round contains 15 training epochs and the second round contains 20 training epochs. In the second round, we randomly add 50 samples to the training set for the non-AL based methods and actively select 50 samples to enlarge the training set for the AL based methods. The size of a local patch is 12. The classification maps achieved by the CV-CWNN based methods are shown in Figure 7, and the classification accuracies are reported in Table 2. Table 2 shows that the proposed ACV-CWNN-MRF method performs best among all compared approaches in terms of the AA and OA metrics. Comparing the CAs of all the CV-CWNN based methods, the proposed method has the best classification accuracy in the majority of the classes. Furthermore, Figure 7 shows that the proposed ACV-CWNN-MRF method can produce smoother and more accurate classification maps.
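For readers who want to reproduce the CA, OA, and AA metrics used in this comparison, the following sketch computes them from a confusion matrix. It uses the standard definitions of these metrics; the function names are hypothetical and the labels in the example are placeholders, not data from the Benchmark dataset.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts test pixels of reference class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def class_accuracies(cm):
    """CA per class: correctly classified pixels / reference pixels of that class."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def oa_from_cm(cm):
    """OA: correctly classified pixels / all test pixels."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def aa_from_cm(cm):
    """AA: unweighted mean of the per-class accuracies."""
    cas = class_accuracies(cm)
    return sum(cas) / len(cas)


if __name__ == "__main__":
    # Placeholder labels for a two-class toy problem.
    cm = confusion_matrix([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 0], 2)
    print(cm, class_accuracies(cm), oa_from_cm(cm), aa_from_cm(cm))
```

Because AA weights every class equally while OA weights every pixel equally, the two can diverge when class sizes are unbalanced, which is why both are reported.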

Figure 7.

Classification maps for the Benchmark dataset obtained by different methods, with 15 training epochs in the first round and 20 training epochs in the second round. (a,f) CV-CWNN classified map. (b,g) CV-CWNN-B classified map. (c,h) CV-CWNN-BD classified map. (d,i) ACV-CWNN-BD classified map. (e,j) ACV-CWNN-MRF classified map.

Table 2.

Classification accuracies (%) for the Benchmark dataset obtained by different methods, with 15 training epochs in the first round and 20 training epochs in the second round.

5. Discussion

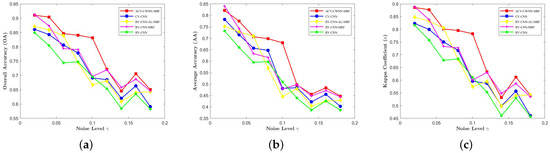

In this section, we compare the ACV-CWNN-MRF method with some other state-of-the-art PolSAR classification methods on the CONVAIR Ice dataset with speckle noise. The compared methods include real-valued CNN (RV-CNN) [14], real-valued CNN-MRF (RV-CNN-MRF) [48], complex-valued CNN (CV-CNN) [17], and real-valued CNN-AL-MRF (RV-CNN-AL-MRF) [25]. The experimental settings for the CONVAIR Ice dataset are as follows. For all methods, we choose a fraction of the CONVAIR Ice dataset at random from each class as the training set, which gives 238 training samples altogether. The learning rate is set as . The batch size is empirically set at 100. For RV-CNN, RV-CNN-MRF, and CV-CNN, which do not employ the AL strategy, each round contains 50 epochs, while for RV-CNN-AL-MRF and ACV-CWNN-MRF, the first round contains 25 epochs and the second round contains 50 epochs. In the second round, we actively select 50 samples to enlarge the training set for the AL-based methods.
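Drawing the training set at random from each class can be sketched as below. This is an illustration under stated assumptions: the function name, the rounding rule (round up, at least one sample per class), and the seed handling are hypothetical choices, and the sampling rate is passed in as a parameter rather than taken from the text.

```python
import math
import random
from collections import defaultdict


def stratified_split(labels, rate, seed=0):
    """Split sample indices into (train, test) by drawing `rate` of each class.

    Assumptions: counts are rounded up and every class contributes at
    least one training sample.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    train = []
    for lab in sorted(by_class):
        idxs = by_class[lab]
        n_train = max(1, math.ceil(rate * len(idxs)))
        train.extend(rng.sample(idxs, n_train))

    train_set = set(train)
    test = [i for i in range(len(labels)) if i not in train_set]
    return sorted(train), test


if __name__ == "__main__":
    # Placeholder label vector with two classes of different size.
    labels = [0] * 100 + [1] * 50
    train, test = stratified_split(labels, rate=0.5)
    print(len(train), len(test))
```

Sampling per class, rather than over the whole image, keeps small classes represented in the training set.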

The speckle noise in the HH, HV, VH, and VV polarization channels of a PolSAR image is modeled in multiplicative form, with a white Gaussian noise term. This multiplicative speckle model can be converted to an additive one by a logarithmic operation. Therefore, for mathematical tractability, the log-transformed speckle model of PolSAR images can be regarded as an additive white Gaussian noise model with zero mean and a given variance [49]. To train the compared models and the proposed model for PolSAR classification, we consider a range of speckle noise levels and a single local patch size.
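The multiplicative-to-additive conversion described above can be written out as follows. The symbols are assumptions introduced here for illustration and may differ from the authors' notation: $y$ is the observed value in one polarization channel, $x$ the noise-free value, $n$ the speckle term, and $\sigma^{2}$ the variance of the log-transformed noise. The Gaussian form of the last line is the approximation the text adopts from [49], not an exact property of speckle.

```latex
\begin{align}
  y &= x \cdot n, \\
  \log y &= \log x + \log n = \log x + \tilde{n}, \\
  \tilde{n} &\sim \mathcal{N}\left(0, \sigma^{2}\right).
\end{align}
```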

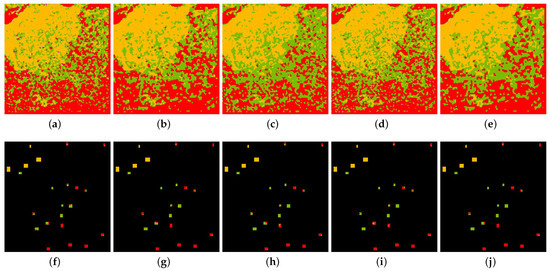

Finally, the overall accuracies (OAs), the average accuracies (AAs), and the Kappa coefficients of all considered methods at different speckle noise levels are reported in Figure 8. Figure 8 shows that the PolSAR image classification performance of all considered methods decreases as the level of speckle noise increases, and that beyond a certain noise level it drops sharply. However, in the presence of PolSAR speckle noise, our ACV-CWNN-MRF method achieves the best OA, AA, and Kappa coefficient on the CONVAIR Ice dataset compared with the other four methods. This superior classification performance can be explained by the simultaneous use of the CWNN and MRF strategies: the complex-valued wavelet pooling layer of the CWNN not only removes some PolSAR speckle noise but also maintains the structures of the feature maps in accordance with the specified rules. Additionally, classification maps of the CONVAIR Ice dataset at two noise levels, obtained with the different methods, are presented in Figure 9 and Figure 10, respectively. Figure 9 and Figure 10 show that our ACV-CWNN-MRF method for despeckled PolSAR classification achieves a smoother classification map than RV-CNN, RV-CNN-MRF, RV-CNN-AL-MRF, and CV-CNN. According to the above discussion, our ACV-CWNN-MRF method can suppress the influence of speckle noise and obtain more accurate, smooth, and coherent classification results.
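The Kappa coefficient reported alongside OA and AA can be computed from a confusion matrix as sketched below. This uses the standard definition of Cohen's kappa; the function name is hypothetical and the matrix in the example is a placeholder, not data from the CONVAIR Ice experiments.

```python
def kappa_coefficient(cm):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e).

    p_o is the observed agreement (the overall accuracy) and p_e is the
    agreement expected by chance from the row and column totals.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(n)) / total
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[r][c] for r in range(n)) for c in range(n)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Placeholder 2x2 confusion matrix (rows: reference, columns: prediction).
    print(kappa_coefficient([[3, 1], [1, 1]]))
```

Unlike OA, kappa discounts the agreement that would occur by chance, so it is a stricter measure when one class dominates the scene.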

Figure 8.

The OA, AA, and Kappa coefficient versus the level of speckle noise using different classification methods on the CONVAIR Ice dataset. (a) OA versus noise level. (b) AA versus noise level. (c) Kappa coefficient versus noise level.

Figure 9.

Classification maps of the CONVAIR Ice dataset with noise level using different methods. (Top row) Results of the whole map classification. (Bottom row) Result overlaid with the ground-truth map. (a,f) RV-CNN-classified map. (b,g) RV-CNN-MRF-classified map. (c,h) RV-CNN-AL-MRF-classified map. (d,i) CV-CNN-classified map. (e,j) ACV-CWNN-MRF-classified map.

Figure 10.

Classification maps of the CONVAIR Ice dataset with noise level using different methods. (Top row) Results of the whole map classification. (Bottom row) Result overlaid with the ground-truth map. (a,f) RV-CNN-classified map. (b,g) RV-CNN-MRF-classified map. (c,h) RV-CNN-AL-MRF-classified map. (d,i) CV-CNN-classified map. (e,j) ACV-CWNN-MRF-classified map.

6. Conclusions

In this paper, we have proposed a novel PolSAR image classification method based on an active complex-valued convolutional-wavelet neural network framework that incorporates the dual-tree complex wavelet transform and a Markov random field. Our proposed ACV-CWNN-MRF method has the following advantages. Firstly, we introduce a DT-CWT-constrained pooling layer into CV-CNN-based PolSAR classification to suppress speckle noise in a PolSAR image and maintain the SAR image structure information. Secondly, we combine the AL and MRF strategies with CV-CWNN to select the most informative unlabeled training samples and to exploit the local correlation of neighboring pixels. Finally, experiments on three benchmark PolSAR datasets show that our proposed ACV-CWNN-MRF method can outperform some other state-of-the-art deep learning-based PolSAR image classification methods. We conclude that our fine-tuned ACV-CWNN-MRF method is an effective alternative for PolSAR classification. In future work, we will continue to study how to better perform PolSAR classification on our own datasets.

Author Contributions

Conceptualization, L.L.; methodology, L.L.; software, L.L.; validation, L.L.; formal analysis, L.L.; investigation, L.L.; resources, L.L. and Y.L.; data curation, L.L.; writing—original draft preparation, L.L.; writing—review and editing, L.L. and Y.L.; visualization, L.L.; supervision, L.L.; project administration, L.L.; funding acquisition, L.L. All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by National Natural Science Foundation of China under grant 42074225.

Data Availability Statement

Our experiments employ the open-source datasets introduced in [17].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cloude, S. Polarisation: Applications in Remote Sensing; OUP Oxford: Oxford, UK, 2009.

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Hänsch, R.; Hellwich, O. Skipping the real world: Classification of PolSAR images without explicit feature extraction. ISPRS J. Photogramm. Remote Sens. 2018, 140, 122–132.

- Durieux, L.; Lagabrielle, E.; Nelson, A. A method for monitoring building construction in urban sprawl areas using object-based analysis of Spot 5 images and existing GIS data. ISPRS J. Photogramm. Remote Sens. 2008, 63, 399–408.

- Zhong, P.; Wang, R. A multiple conditional random fields ensemble model for urban area detection in remote sensing optical images. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3978–3988.

- Besic, N.; Vasile, G.; Chanussot, J.; Stankovic, S. Polarimetric incoherent target decomposition by means of independent component analysis. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1236–1247.

- Doulgeris, A.P.; Anfinsen, S.N.; Eltoft, T. Classification with a non-Gaussian model for PolSAR data. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2999–3009.

- Kong, J.; Swartz, A.; Yueh, H.; Novak, L.; Shin, R. Identification of terrain cover using the optimum polarimetric classifier. J. Electromagn. Waves Appl. 1988, 2, 171–194.

- Tao, M.; Zhou, F.; Liu, Y.; Zhang, Z. Tensorial independent component analysis-based feature extraction for polarimetric SAR data classification. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2481–2495.

- Lardeux, C.; Frison, P.L.; Tison, C.; Souyris, J.C.; Stoll, B.; Fruneau, B.; Rudant, J.P. Support vector machine for multifrequency SAR polarimetric data classification. IEEE Trans. Geosci. Remote Sens. 2009, 47, 4143–4152.

- Ersahin, K.; Cumming, I.G.; Ward, R.K. Segmentation and classification of polarimetric SAR data using spectral graph partitioning. IEEE Trans. Geosci. Remote Sens. 2009, 48, 164–174.

- Bi, H.; Sun, J.; Xu, Z. Unsupervised PolSAR image classification using discriminative clustering. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3531–3544.

- Antropov, O.; Rauste, Y.; Astola, H.; Praks, J.; Häme, T.; Hallikainen, M.T. Land cover and soil type mapping from spaceborne PolSAR data at L-band with probabilistic neural network. IEEE Trans. Geosci. Remote Sens. 2013, 52, 5256–5270.

- Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

- Liu, F.; Jiao, L.; Hou, B.; Yang, S. POL-SAR image classification based on Wishart DBN and local spatial information. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3292–3308.

- Jiao, L.; Liu, F. Wishart deep stacking network for fast POLSAR image classification. IEEE Trans. Image Process. 2016, 25, 3273–3286.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

- Bi, H.; Sun, J.; Xu, Z. A graph-based semisupervised deep learning model for PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2116–2132.

- Tuia, D.; Ratle, F.; Pacifici, F.; Kanevski, M.F.; Emery, W.J. Active learning methods for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2218–2232.

- Tuia, D.; Volpi, M.; Copa, L.; Kanevski, M.; Munoz-Mari, J. A survey of active learning algorithms for supervised remote sensing image classification. IEEE J. Sel. Top. Signal Process. 2011, 5, 606–617.

- Samat, A.; Gamba, P.; Du, P.; Luo, J. Active extreme learning machines for quad-polarimetric SAR imagery classification. Int. J. Appl. Earth Obs. Geoinf. 2015, 35, 305–319.

- Liu, W.; Yang, J.; Li, P.; Han, Y.; Zhao, J.; Shi, H. A novel object-based supervised classification method with active learning and random forest for PolSAR imagery. Remote Sens. 2018, 10, 1092.

- Bi, H.; Xu, F.; Wei, Z.; Xue, Y.; Xu, Z. An active deep learning approach for minimally supervised PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9378–9395.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Spectral–spatial classification of hyperspectral data using loopy belief propagation and active learning. IEEE Trans. Geosci. Remote Sens. 2012, 51, 844–856.

- Cao, X.; Yao, J.; Xu, Z.; Meng, D. Hyperspectral image classification with convolutional neural network and active learning. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4604–4616.

- De Silva, D.; Fernando, S.; Piyatilake, I.; Karunarathne, A. Wavelet based edge feature enhancement for convolutional neural networks. In Proceedings of the Eleventh International Conference on Machine Vision (ICMV 2018), Munich, Germany, 1–3 November 2018; International Society for Optics and Photonics: Bellingham, WA, USA, 2019; Volume 11041, p. 110412R.

- Liu, P.; Zhang, H.; Lian, W.; Zuo, W. Multi-level wavelet convolutional neural networks. IEEE Access 2019, 7, 74973–74985.

- Sahito, F.; Zhiwen, P.; Ahmed, J.; Memon, R.A. Wavelet-integrated deep networks for single image super-resolution. Electronics 2019, 8, 553.

- Guberman, N. On complex valued convolutional neural networks. arXiv 2016, arXiv:1602.09046.

- Tygert, M.; Bruna, J.; Chintala, S.; LeCun, Y.; Piantino, S.; Szlam, A. A mathematical motivation for complex-valued convolutional networks. Neural Comput. 2016, 28, 815–825.

- Trabelsi, C.; Bilaniuk, O.; Zhang, Y.; Serdyuk, D.; Subramanian, S.; Santos, J.; Mehri, S.; Rostamzadeh, N.; Bengio, Y.; Pal, C. Deep Complex Networks. arXiv 2018, arXiv:1705.09792.

- Benvenuto, N.; Piazza, F. On the complex backpropagation algorithm. IEEE Trans. Signal Process. 1992, 40, 967–969.

- Lau, M.M.; Lim, K.H.; Gopalai, A.A. Malaysia traffic sign recognition with convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1006–1010.

- Kingsbury, N. Complex wavelets for shift invariant analysis and filtering of signals. Appl. Comput. Harmon. Anal. 2001, 10, 234–253.

- Baraniuk, R.; Kingsbury, N.; Selesnick, I. The dual-tree complex wavelet transform-a coherent framework for multiscale signal and image processing. IEEE Signal Process. Mag. 2005, 22, 123–151.

- Kingsbury, N. Image processing with complex wavelets. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1999, 357, 2543–2560.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Bengio, Y. Practical recommendations for gradient-based training of deep architectures. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 437–478.

- MacKay, D.J. Information-based objective functions for active data selection. Neural Comput. 1992, 4, 590–604.

- Luo, T.; Kramer, K.; Goldgof, D.B.; Hall, L.O.; Samson, S.; Remsen, A.; Hopkins, T.; Cohn, D. Active learning to recognize multiple types of plankton. J. Mach. Learn. Res. 2005, 6, 589–613.

- Settles, B. Active learning. Synth. Lect. Artif. Intell. Mach. Learn. 2012, 6, 1–114.

- Joshi, A.J.; Porikli, F.; Papanikolopoulos, N. Multi-class active learning for image classification. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2372–2379.

- Farag, A.A.; Mohamed, R.M.; El-Baz, A. A unified framework for map estimation in remote sensing image segmentation. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1617–1634.

- Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

- Kolmogorov, V. Convergent tree-reweighted message passing for energy minimization. In Proceedings of the International Workshop on Artificial Intelligence and Statistics, Bridgetown, Barbados, 6–8 January 2005; pp. 182–189.

- Yedidia, J.S.; Freeman, W.T.; Weiss, Y. Constructing free-energy approximations and generalized belief propagation algorithms. IEEE Trans. Inf. Theory 2005, 51, 2282–2312.

- Cloude, S.R.; Pottier, E. A review of target decomposition theorems in radar polarimetry. IEEE Trans. Geosci. Remote Sens. 1996, 34, 498–518.

- Cao, X.; Zhou, F.; Xu, L.; Meng, D.; Xu, Z.; Paisley, J. Hyperspectral image classification with Markov random fields and a convolutional neural network. IEEE Trans. Image Process. 2018, 27, 2354–2367.

- Xie, H.; Pierce, L.E.; Ulaby, F.T. Statistical properties of logarithmically transformed speckle. IEEE Trans. Geosci. Remote Sens. 2002, 40, 721–727.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).