Ensemble Learning for Oat Yield Prediction Using Multi-Growth Stage UAV Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Field Trial Design

2.2. UAV Image Processing

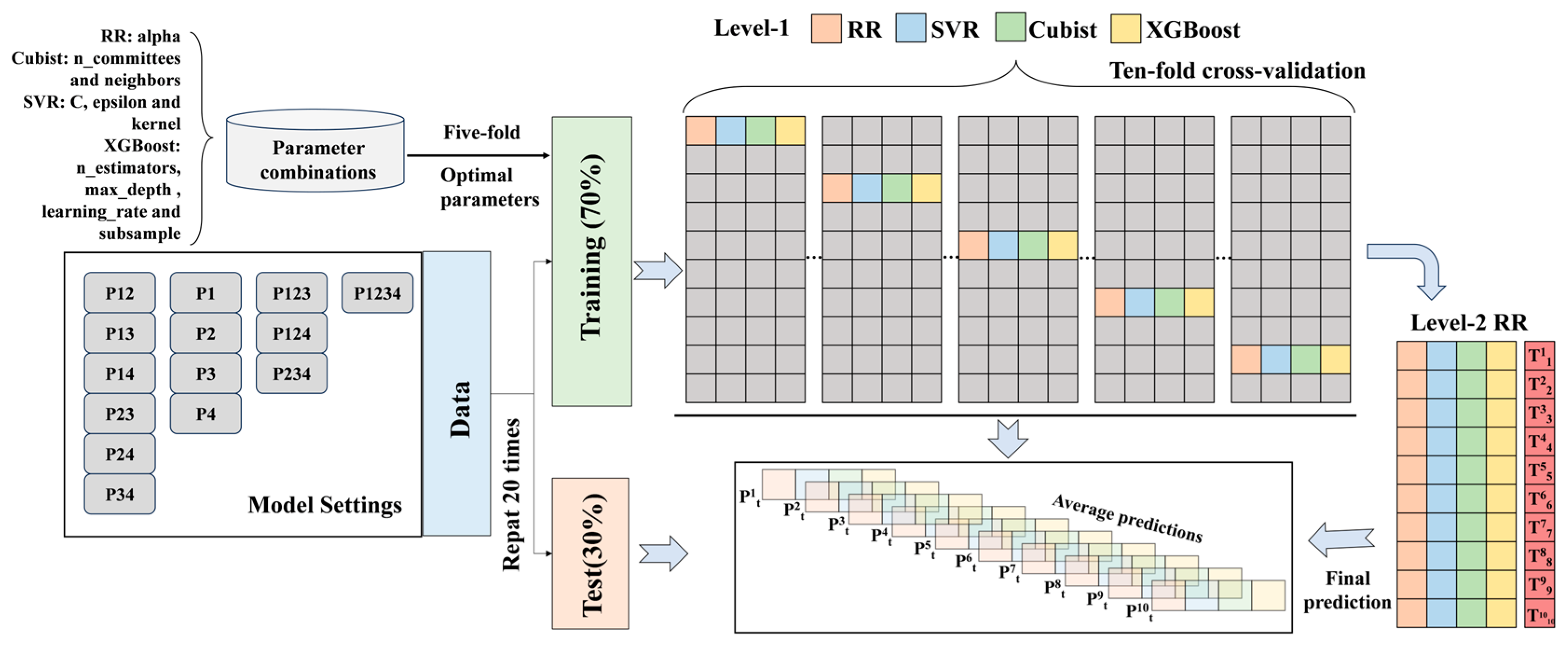

2.3. Ensemble Learning Framework for Oat Yield Prediction

2.4. Model Evaluation

3. Results

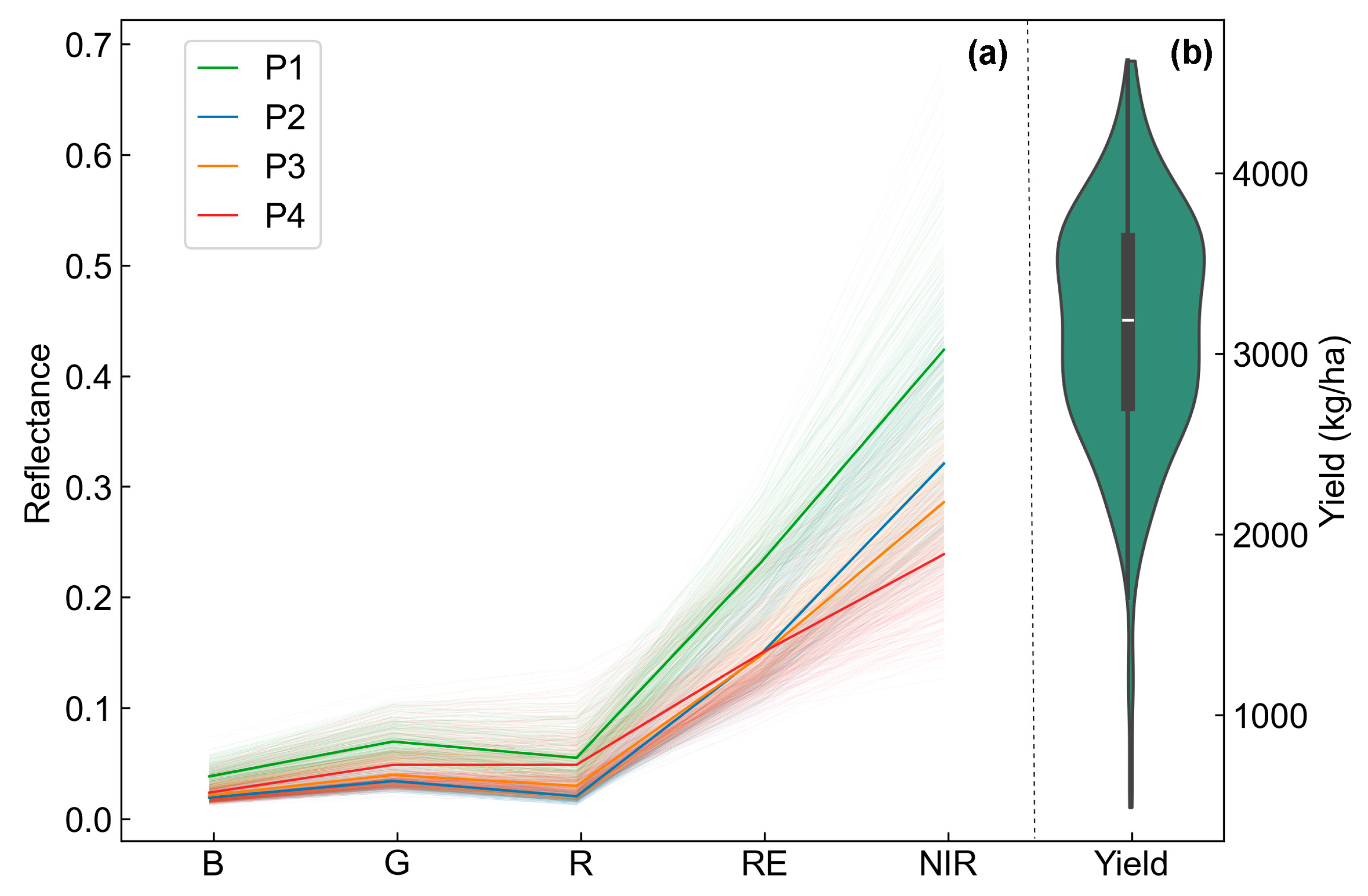

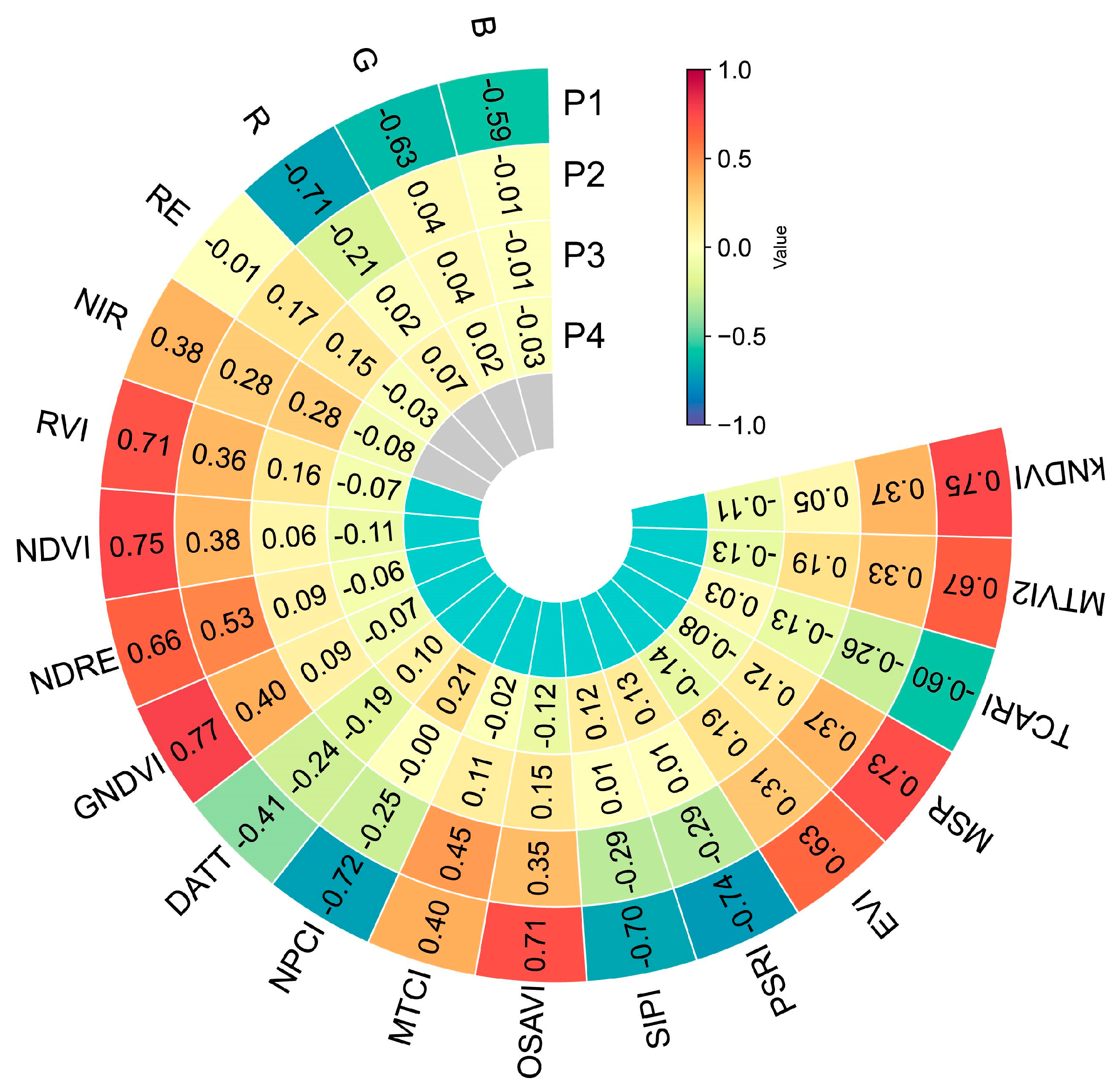

3.1. Statistical Analysis of Yield and UAV Spectral Data

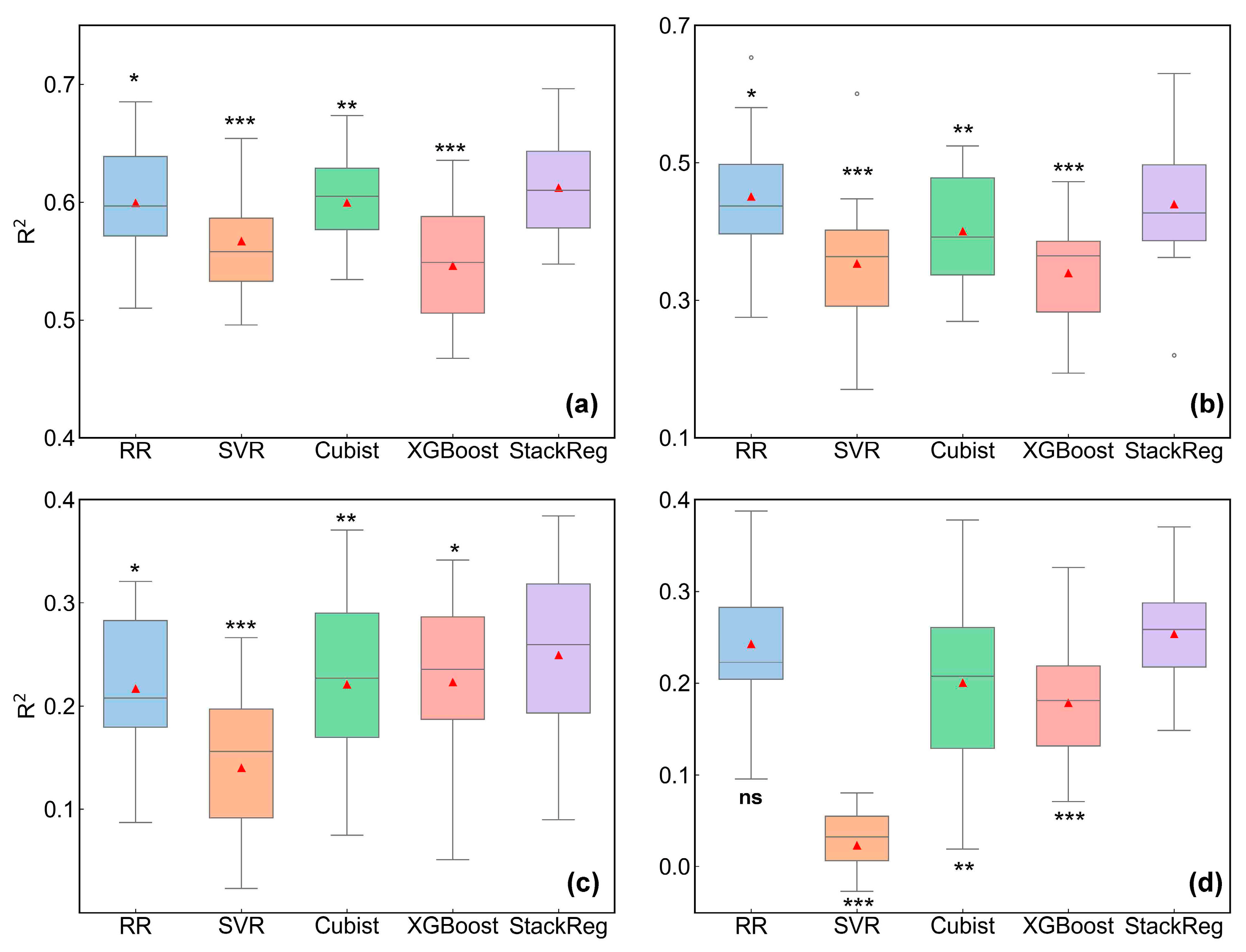

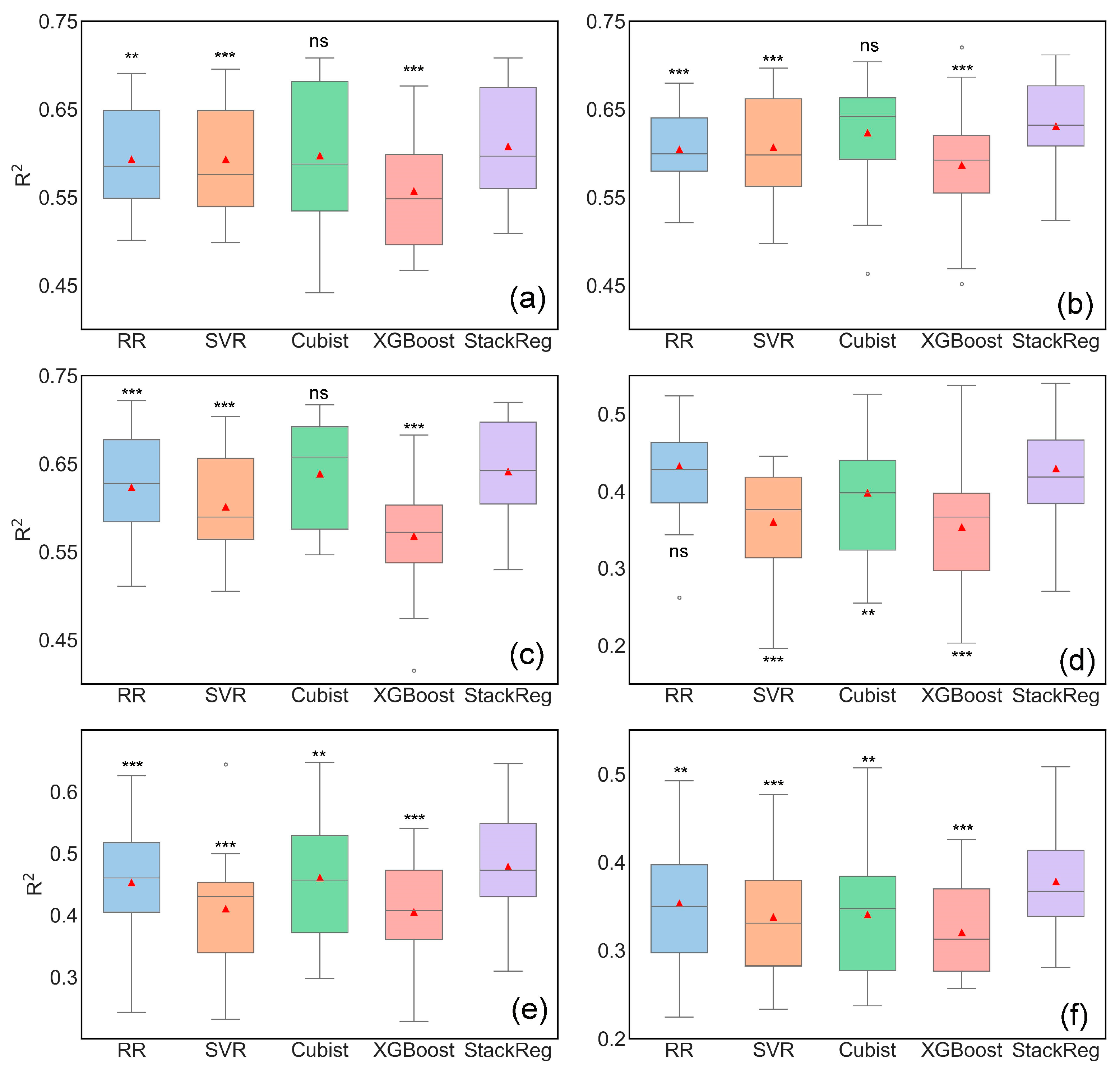

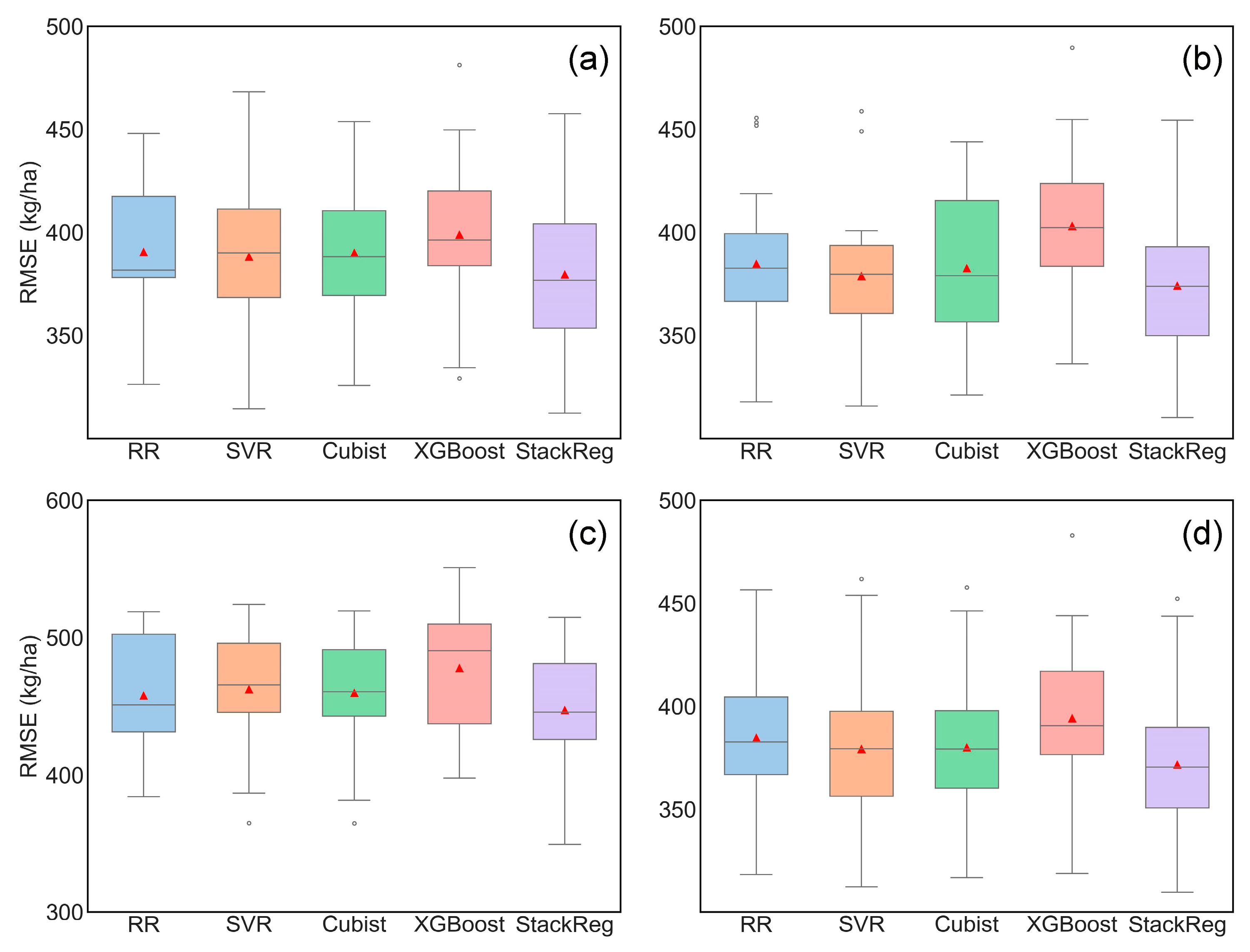

3.2. Evaluation of Oat Yield Prediction Models Based on Single-Stage UAV Imagery

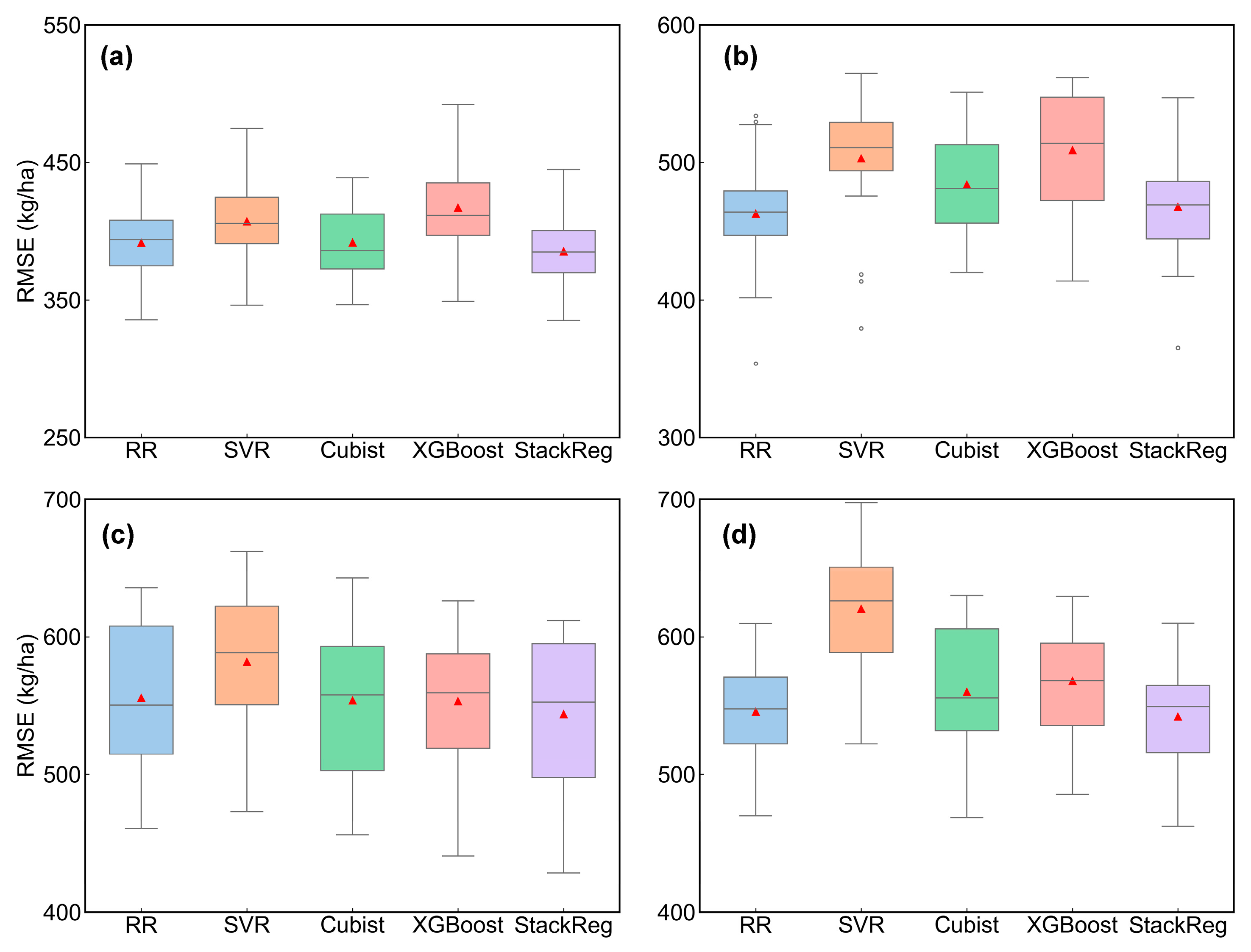

3.3. Evaluation of Oat Yield Prediction Models Based on Dual-Stage UAV Imagery

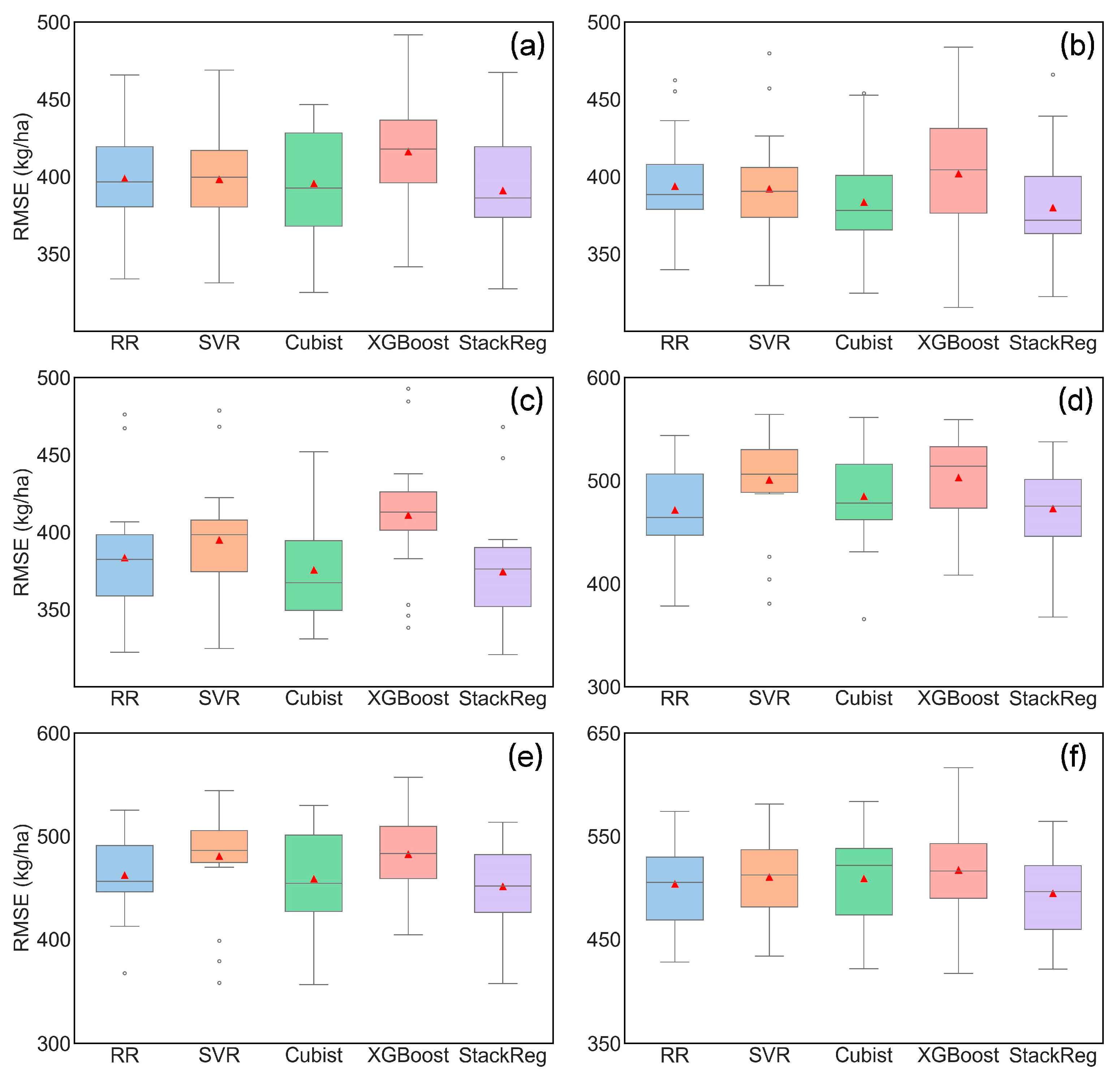

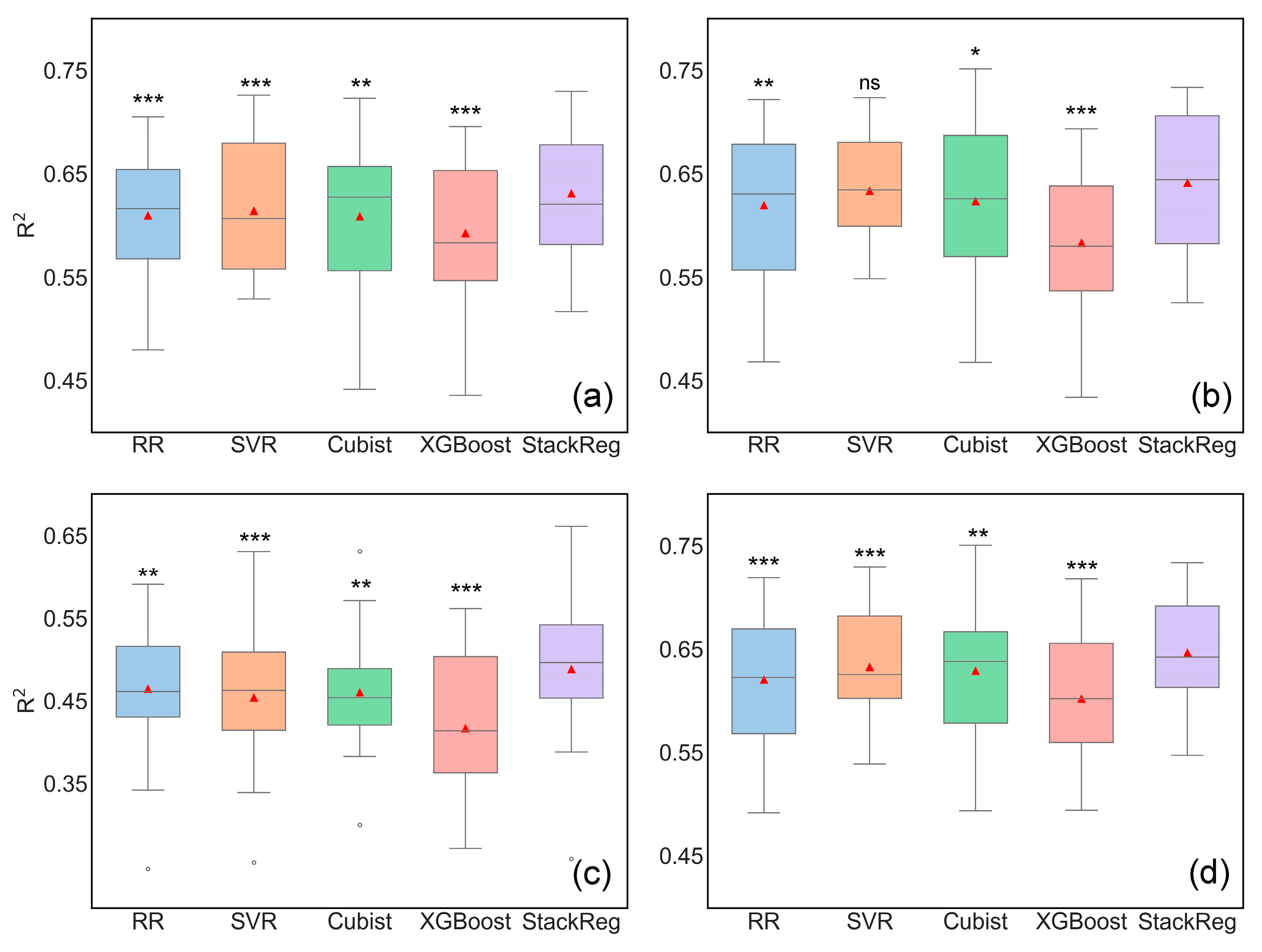

3.4. Evaluation of Oat Yield Prediction Models Based on Multi-Stage UAV Imagery

4. Discussion

4.1. Integrating Multiple Growth Stages for Oat Yield Prediction

4.2. Potential of Ensemble Learning in Oat Yield Prediction

4.3. Implications for Future Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Butt, M.S.; Tahir-Nadeem, M.; Khan, M.K.I.; Shabir, R.; Butt, M.S. Oat: Unique among the cereals. Eur. J. Nutr. 2008, 47, 68–79.

2. Rispail, N.; Montilla-Bascón, G.; Sánchez-Martín, J.; Flores, F.; Howarth, C.; Langdon, T.; Rubiales, D.; Prats, E. Multi-Environmental Trials Reveal Genetic Plasticity of Oat Agronomic Traits Associated With Climate Variable Changes. Front. Plant Sci. 2018, 9, 1358.

3. Wang, Z.; Zhang, C.; Gao, L.; Fan, C.; Xu, X.; Zhang, F.; Zhou, Y.; Niu, F.; Li, Z. Time Phase Selection and Accuracy Analysis for Predicting Winter Wheat Yield Based on Time Series Vegetation Index. Remote Sens. 2024, 16, 1995.

4. Chen, P.; Li, Y.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Improving yield prediction based on spatio-temporal deep learning approaches for winter wheat: A case study in Jiangsu Province, China. Comput. Electron. Agric. 2023, 213, 108201.

5. Laurila, H.; Karjalainen, M.; Kleemola, J.; Hyyppä, J. Cereal Yield Modeling in Finland Using Optical and Radar Remote Sensing. Remote Sens. 2010, 2, 2185–2239.

6. Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffé, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22, 601.

7. Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

8. Zhang, C.; Marzougui, A.; Sankaran, S. High-resolution satellite imagery applications in crop phenotyping: An overview. Comput. Electron. Agric. 2020, 175, 105584.

9. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2023, 24, 187–212.

10. Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111.

11. Camps-Valls, G.; Campos-Taberner, M.; Moreno-Martínez, Á.; Walther, S.; Duveiller, G.; Cescatti, A.; Mahecha, M.D.; Muñoz-Marí, J.; García-Haro, F.J.; Guanter, L.; et al. A unified vegetation index for quantifying the terrestrial biosphere. Sci. Adv. 2021, 7, eabc7447.

12. Wang, Q.; Moreno-Martínez, Á.; Muñoz-Marí, J.; Campos-Taberner, M.; Camps-Valls, G. Estimation of vegetation traits with kernel NDVI. ISPRS J. Photogramm. Remote Sens. 2023, 195, 408–417.

13. van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Comput. Electron. Agric. 2020, 177, 105709.

14. Li, Z.; Zhou, X.; Cheng, Q.; Fei, S.; Chen, Z. A Machine-Learning Model Based on the Fusion of Spectral and Textural Features from UAV Multi-Sensors to Analyse the Total Nitrogen Content in Winter Wheat. Remote Sens. 2023, 15, 2152.

15. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

16. Nevavuori, P.; Narra, N.; Lipping, T. Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 2019, 163, 104859.

17. Canicattì, M.; Vallone, M. Drones in vegetable crops: A systematic literature review. Smart Agric. Technol. 2024, 7, 100396.

18. Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69.

19. Marques Ramos, A.P.; Prado Osco, L.; Elis Garcia Furuya, D.; Nunes Gonçalves, W.; Cordeiro Santana, D.; Pereira Ribeiro Teodoro, L.; Antonio da Silva Junior, C.; Fernando Capristo-Silva, G.; Li, J.; Henrique Rojo Baio, F.; et al. A random forest ranking approach to predict yield in maize with UAV-based vegetation spectral indices. Comput. Electron. Agric. 2020, 178, 105791.

20. Ren, P.; Li, H.; Han, S.; Chen, R.; Yang, G.; Yang, H.; Feng, H.; Zhao, C. Estimation of Soybean Yield by Combining Maturity Group Information and Unmanned Aerial Vehicle Multi-Sensor Data Using Machine Learning. Remote Sens. 2023, 15, 4286.

21. Bian, C.; Shi, H.; Wu, S.; Zhang, K.; Wei, M.; Zhao, Y.; Sun, Y.; Zhuang, H.; Zhang, X.; Chen, S. Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data. Remote Sens. 2022, 14, 1474.

22. Ji, Y.; Chen, Z.; Cheng, Q.; Liu, R.; Li, M.; Yan, X.; Li, G.; Wang, D.; Fu, L.; Ma, Y.; et al. Estimation of plant height and yield based on UAV imagery in faba bean (Vicia faba L.). Plant Methods 2022, 18, 26.

23. Yang, Q.; Shi, L.; Han, J.; Zha, Y.; Zhu, P. Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images. Field Crops Res. 2019, 235, 142–153.

24. Zhang, Y.; Liu, J.; Shen, W. A Review of Ensemble Learning Algorithms Used in Remote Sensing Applications. Appl. Sci. 2022, 12, 8654.

25. Fei, S.; Hassan, M.A.; He, Z.; Chen, Z.; Shu, M.; Wang, J.; Li, C.; Xiao, Y. Assessment of Ensemble Learning to Predict Wheat Grain Yield Based on UAV-Multispectral Reflectance. Remote Sens. 2021, 13, 2338.

26. Sarkar, T.K.; Roy, D.K.; Kang, Y.S.; Jun, S.R.; Park, J.W.; Ryu, C.S. Ensemble of Machine Learning Algorithms for Rice Grain Yield Prediction Using UAV-Based Remote Sensing. J. Biosyst. Eng. 2024, 49, 1–19.

27. Liu, Z.; Ji, Y.; Ya, X.; Liu, R.; Liu, Z.; Zong, X.; Yang, T. Ensemble Learning for Pea Yield Estimation Using Unmanned Aerial Vehicles, Red Green Blue, and Multispectral Imagery. Drones 2024, 8, 227.

28. Feng, L.; Zhang, Z.; Ma, Y.; Du, Q.; Williams, P.; Drewry, J.; Luck, B. Alfalfa Yield Prediction Using UAV-Based Hyperspectral Imagery and Ensemble Learning. Remote Sens. 2020, 12, 2028.

29. Peng, J.; Wang, D.; Zhu, W.; Yang, T.; Liu, Z.; Eyshi Rezaei, E.; Li, J.; Sun, Z.; Xin, X. Combination of UAV and deep learning to estimate wheat yield at ripening stage: The potential of phenotypic features. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103494.

30. Nevavuori, P.; Narra, N.; Linna, P.; Lipping, T. Crop Yield Prediction Using Multitemporal UAV Data and Spatio-Temporal Deep Learning Models. Remote Sens. 2020, 12, 4000.

31. El-Hendawy, S.; Mohammed, N.; Al-Suhaibani, N. Enhancing Wheat Growth, Physiology, Yield, and Water Use Efficiency under Deficit Irrigation by Integrating Foliar Application of Salicylic Acid and Nutrients at Critical Growth Stages. Plants 2024, 13, 1490.

32. Hassan, M.A.; Fei, S.; Li, L.; Jin, Y.; Liu, P.; Rasheed, A.; Shawai, R.S.; Zhang, L.; Ma, A.; Xiao, Y.; et al. Stacking of Canopy Spectral Reflectance from Multiple Growth Stages Improves Grain Yield Prediction under Full and Limited Irrigation in Wheat. Remote Sens. 2022, 14, 4318.

33. Pearson, R.L.; Miller, L.D. Remote mapping of standing crop biomass for estimation of the productivity of the shortgrass prairie. In Proceedings of the Eighth International Symposium on Remote Sensing of Environment, Ann Arbor, MI, USA, 2–6 October 1972; p. 1355.

34. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

35. Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crops Res. 2014, 157, 111–123.

36. Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 1998, 22, 689–692.

37. Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a + b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121.

38. Peñuelas, J.; Gamon, J.; Fredeen, A.; Merino, J.; Field, C. Reflectance indices associated with physiological changes in nitrogen- and water-limited sunflower leaves. Remote Sens. Environ. 1994, 48, 135–146.

39. Wu, C.; Niu, Z.; Tang, Q.; Huang, W.; Rivard, B.; Feng, J. Remote estimation of gross primary production in wheat using chlorophyll-related vegetation indices. Agric. For. Meteorol. 2009, 149, 1015–1021.

40. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

41. Peñuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

42. Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

43. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

44. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

45. Devadas, R.; Lamb, D.; Simpfendorfer, S.; Backhouse, D. Evaluating ten spectral vegetation indices for identifying rust infection in individual wheat leaves. Precis. Agric. 2009, 10, 459–470.

46. Wang, T.; Gao, M.; Cao, C.; You, J.; Zhang, X.; Shen, L. Winter wheat chlorophyll content retrieval based on machine learning using in situ hyperspectral data. Comput. Electron. Agric. 2022, 193, 106728.

47. Li, D.; Miao, Y.; Gupta, S.K.; Rosen, C.J.; Yuan, F.; Wang, C.; Wang, L.; Huang, Y. Improving Potato Yield Prediction by Combining Cultivar Information and UAV Remote Sensing Data Using Machine Learning. Remote Sens. 2021, 13, 3322.

48. Zhao, D.; Zhen, J.; Zhang, Y.; Miao, J.; Shen, Z.; Jiang, X.; Wang, J.; Jiang, J.; Tang, Y.; Wu, G. Mapping mangrove leaf area index (LAI) by combining remote sensing images with PROSAIL-D and XGBoost methods. Remote Sens. Ecol. Conserv. 2023, 9, 370–389.

49. Yang, S.; Li, L.; Fei, S.; Yang, M.; Tao, Z.; Meng, Y.; Xiao, Y. Wheat Yield Prediction Using Machine Learning Method Based on UAV Remote Sensing Data. Drones 2024, 8, 284.

50. Sadeghi-Tehran, P.; Virlet, N.; Ampe, E.M.; Reyns, P.; Hawkesford, M.J. DeepCount: In-Field Automatic Quantification of Wheat Spikes Using Simple Linear Iterative Clustering and Deep Convolutional Neural Networks. Front. Plant Sci. 2019, 10, 1176.

51. Peltonen-Sainio, P.; Rajala, A. Duration of vegetative and generative development phases in oat cultivars released since 1921. Field Crops Res. 2007, 101, 72–79.

52. Guan, K.; Wu, J.; Kimball, J.S.; Anderson, M.C.; Frolking, S.; Li, B.; Hain, C.R.; Lobell, D.B. The shared and unique values of optical, fluorescence, thermal and microwave satellite data for estimating large-scale crop yields. Remote Sens. Environ. 2017, 199, 333–349.

53. Deng, Q.; Wu, M.; Zhang, H.; Cui, Y.; Li, M.; Zhang, Y. Winter Wheat Yield Estimation Based on Optimal Weighted Vegetation Index and BHT-ARIMA Model. Remote Sens. 2022, 14, 1994.

54. Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

55. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708.

56. Wang, F.; Yi, Q.; Hu, J.; Xie, L.; Yao, X.; Xu, T.; Zheng, J. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397.

57. Zhang, P.-P.; Zhou, X.-X.; Wang, Z.-X.; Mao, W.; Li, W.-X.; Yun, F.; Guo, W.-S.; Tan, C.-W. Using HJ-CCD image and PLS algorithm to estimate the yield of field-grown winter wheat. Sci. Rep. 2020, 10, 5173.

58. Hassan, M.A.; Yang, M.; Rasheed, A.; Yang, G.; Reynolds, M.; Xia, X.; Xiao, Y.; He, Z. A rapid monitoring of NDVI across the wheat growth cycle for grain yield prediction using a multi-spectral UAV platform. Plant Sci. 2019, 282, 95–103.

59. Zhang, S.; Qi, X.; Duan, J.; Yuan, X.; Zhang, H.; Feng, W.; Guo, T.; He, L. Comparison of Attention Mechanism-Based Deep Learning and Transfer Strategies for Wheat Yield Estimation Using Multisource Temporal Drone Imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4407723.

60. Wang, L.; Tian, Y.; Yao, X.; Zhu, Y.; Cao, W. Predicting grain yield and protein content in wheat by fusing multi-sensor and multi-temporal remote-sensing images. Field Crops Res. 2014, 164, 178–188.

61. Holloway, J.; Mengersen, K. Statistical Machine Learning Methods and Remote Sensing for Sustainable Development Goals: A Review. Remote Sens. 2018, 10, 1365.

62. Shafiee, S.; Lied, L.M.; Burud, I.; Dieseth, J.A.; Alsheikh, M.; Lillemo, M. Sequential forward selection and support vector regression in comparison to LASSO regression for spring wheat yield prediction based on UAV imagery. Comput. Electron. Agric. 2021, 183, 106036.

63. Shahhosseini, M.; Hu, G.; Archontoulis, S.V. Forecasting corn yield with machine learning ensembles. Front. Plant Sci. 2020, 11, 1120.

64. Yin, J.; Medellín-Azuara, J.; Escriva-Bou, A.; Liu, Z. Bayesian machine learning ensemble approach to quantify model uncertainty in predicting groundwater storage change. Sci. Total Environ. 2021, 769, 144715.

65. Useya, J.; Chen, S. Comparative Performance Evaluation of Pixel-Level and Decision-Level Data Fusion of Landsat 8 OLI, Landsat 7 ETM+ and Sentinel-2 MSI for Crop Ensemble Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4441–4451.

66. Liu, Z.; Jin, S.; Liu, X.; Yang, Q.; Li, Q.; Zang, J.; Li, Z.; Hu, T.; Guo, Z.; Wu, J.; et al. Extraction of Wheat Spike Phenotypes From Field-Collected Lidar Data and Exploration of Their Relationships With Wheat Yield. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–13.

67. Jin, N.; Tao, B.; Ren, W.; He, L.; Zhang, D.; Wang, D.; Yu, Q. Assimilating remote sensing data into a crop model improves winter wheat yield estimation based on regional irrigation data. Agric. Water Manag. 2022, 266, 107583.

68. Ishaq, R.A.F.; Zhou, G.; Tian, C.; Tan, Y.; Jing, G.; Jiang, H.; Obaid-ur-Rehman. A Systematic Review of Radiative Transfer Models for Crop Yield Prediction and Crop Traits Retrieval. Remote Sens. 2024, 16, 121.

69. Sun, G.; Jiao, Z.; Zhang, A.; Li, F.; Fu, H.; Li, Z. Hyperspectral image-based vegetation index (HSVI): A new vegetation index for urban ecological research. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102529.

70. Wang, N.; Suomalainen, J.; Bartholomeus, H.; Kooistra, L.; Masiliūnas, D.; Clevers, J.G.P.W. Diurnal variation of sun-induced chlorophyll fluorescence of agricultural crops observed from a point-based spectrometer on a UAV. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102276.

71. Feng, Z.; Cheng, Z.; Ren, L.; Liu, B.; Zhang, C.; Zhao, D.; Sun, H.; Feng, H.; Long, H.; Xu, B.; et al. Real-time monitoring of maize phenology with the VI-RGS composite index using time-series UAV remote sensing images and meteorological data. Comput. Electron. Agric. 2024, 224, 109212.

72. Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sens. 2020, 12, 3136.

73. Liu, Y.; Wang, S.; Chen, J.; Chen, B.; Wang, X.; Hao, D.; Sun, L. Rice Yield Prediction and Model Interpretation Based on Satellite and Climatic Indicators Using a Transformer Method. Remote Sens. 2022, 14, 5045.

74. Yang, F.; Zhang, D.; Zhang, Y.; Zhang, Y.; Han, Y.; Zhang, Q.; Zhang, Q.; Zhang, C.; Liu, Z.; Wang, K. Prediction of corn variety yield with attribute-missing data via graph neural network. Comput. Electron. Agric. 2023, 211, 108046.

| Spectral Bands | Central Wavelength (nm) |
|---|---|
| Blue | 450 ± 16 |
| Green | 560 ± 16 |
| Red | 650 ± 16 |
| Red edge | 730 ± 16 |
| Near-infrared | 840 ± 26 |

| Feature | Formulation | References |
|---|---|---|
| Ratio Vegetation Index (RVI) | NIR/R | [33] |
| Normalized Difference Vegetation Index (NDVI) | (NIR − R)/(NIR + R) | [34] |
| Normalized Difference Red Edge (NDRE) | (NIR − RE)/(NIR + RE) | [35] |
| Green Normalized Difference Vegetation Index (GNDVI) | (NIR − G)/(NIR + G) | [36] |
| Datt’s Chlorophyll Content (DATT) | R/(G × RE) | [37] |
| Normalized Pigment Chlorophyll Ratio Index (NPCI) | (R − B)/(R + B) | [38] |
| MERIS Terrestrial Chlorophyll Index (MTCI) | (NIR − RE)/(NIR − R) | [39] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | | [40] |
| Structure Insensitive Pigment Index (SIPI) | (NIR − B)/(NIR − R) | [41] |
| Plant Senescence Reflectance Index (PSRI) | (R − B)/NIR | [42] |
| Enhanced Vegetation Index (EVI) | | [43] |
| Modified Simple Ratio (MSR) | | [44] |
| Transformed Chlorophyll Absorption Reflectance Index (TCARI) | 3 × ((RE − R) − 0.2 × (RE − G) × (RE/R)) | [45] |
| Modified Triangular Vegetation Index 2 (MTVI2) | | [44] |
| Kernel Normalized Difference Vegetation Index (kNDVI) | | [12] |
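As a rough illustration of how the band-ratio indices in this table could be computed from plot-level reflectance, the sketch below covers only the rows whose formulation is printed in the table, transcribed as printed. NDRE is written in its standard normalized-difference form, (NIR − RE)/(NIR + RE). The `Reflectance` container, the function name, and the sample reflectance values are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reflectance:
    """Mean plot-level reflectance per band (unitless). Hypothetical container."""
    blue: float
    green: float
    red: float
    red_edge: float
    nir: float


def vegetation_indices(r: Reflectance) -> dict[str, float]:
    """Compute the indices whose formulation is given in the table."""
    b, g, red, re, nir = r.blue, r.green, r.red, r.red_edge, r.nir
    return {
        "RVI": nir / red,
        "NDVI": (nir - red) / (nir + red),
        "NDRE": (nir - re) / (nir + re),  # standard normalized-difference form
        "GNDVI": (nir - g) / (nir + g),
        "DATT": red / (g * re),
        "NPCI": (red - b) / (red + b),
        "MTCI": (nir - re) / (nir - red),  # as printed in the table
        "SIPI": (nir - b) / (nir - red),
        "PSRI": (red - b) / nir,
        "TCARI": 3 * ((re - red) - 0.2 * (re - g) * (re / red)),
    }


# Illustrative reflectance values for a green canopy (assumed, not measured).
sample = Reflectance(blue=0.03, green=0.08, red=0.05, red_edge=0.25, nir=0.45)
indices = vegetation_indices(sample)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In practice the same expressions would be applied per pixel or per plot mean for each growth stage, with a guard against zero denominators over bare soil or shadow.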

| Models | Hyperparameters |
|---|---|
| RR | Alpha: Regularization strength was logarithmically spaced across ten values between 0.01 and 100, allowing for fine-tuning of the model’s regularization effect. |
| SVR | C: The regularization parameter was explored across five logarithmic steps between 0.1 and 10 (i.e., 0.1, 0.32, 1, 3.16, and 10), balancing margin flexibility and generalization. Epsilon: The epsilon parameter, determining the margin of tolerance, was tested with values 0.01, 0.1, and 0.2. Kernel: Both the linear and radial basis function (RBF) kernels were tested to model different relationships between input features and yield. |
| Cubist | Committees: The number of committees was varied from 5 to 30, in increments of 5, to control the ensemble size and complexity. Neighbors: The number of neighbors used for local adjustments was tested from 1 to 9, in unit increments, to balance local and global predictions. |
| XGBoost | Number of Estimators: The number of boosting rounds was varied from 100 to 600, in steps of 100, to balance the model complexity and overfitting risk. Max Depth: The maximum tree depth was evaluated at three levels (1, 3, and 5), affecting the model’s complexity. Learning Rate: The learning rate was tested with values 0.01, 0.1, and 0.2, controlling the contribution of each tree. Subsample: The subsample ratio was varied from 0.7 to 0.9, in increments of 0.1, to introduce randomness and reduce overfitting risk. |
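The search spaces described in this table can be written out explicitly. The following is a minimal sketch using only the Python standard library; the dictionary key names are illustrative (they loosely follow common library conventions), and the candidate counts assume an exhaustive grid over every combination, which the table itself does not state.

```python
from itertools import product


def logspace(start_exp: float, stop_exp: float, num: int) -> list[float]:
    """Return `num` values evenly spaced on a log10 scale, endpoints included."""
    step = (stop_exp - start_exp) / (num - 1)
    return [10 ** (start_exp + i * step) for i in range(num)]


# Key names are illustrative; the value ranges follow the table.
grids = {
    "RR": {"alpha": logspace(-2, 2, 10)},  # ten values from 0.01 to 100
    "SVR": {
        "C": logspace(-1, 1, 5),  # 0.1, 0.32, 1, 3.16, 10 after rounding
        "epsilon": [0.01, 0.1, 0.2],
        "kernel": ["linear", "rbf"],
    },
    "Cubist": {
        "committees": list(range(5, 31, 5)),  # 5, 10, ..., 30
        "neighbors": list(range(1, 10)),  # 1, 2, ..., 9
    },
    "XGBoost": {
        "n_estimators": list(range(100, 601, 100)),  # 100, 200, ..., 600
        "max_depth": [1, 3, 5],
        "learning_rate": [0.01, 0.1, 0.2],
        "subsample": [0.7, 0.8, 0.9],
    },
}


def candidates(grid: dict) -> list[dict]:
    """Expand a grid into every hyperparameter combination."""
    keys = list(grid)
    return [dict(zip(keys, combo)) for combo in product(*(grid[k] for k in keys))]


for model, grid in grids.items():
    print(model, len(candidates(grid)))
```

Under the exhaustive-grid assumption, the number of candidate settings per model is the product of the list lengths, so the XGBoost grid is by far the largest of the four.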

| Sampling Year | 2022 | 2023 | Total |
|---|---|---|---|
| Measured number | 141 | 197 | 338 |
| Mean (kg/ha) | 3154.30 | 3168.69 | 3162.69 |
| Maximum (kg/ha) | 4517.20 | 4639.92 | 4639.92 |
| Minimum (kg/ha) | 510.58 | 1804.53 | 510.58 |
| Standard deviation (kg/ha) | 719.82 | 583.78 | 643.05 |
| Coefficient of variation (%) | 22.82 | 18.42 | 20.33 |
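The derived rows of this table can be cross-checked from the others: the coefficient of variation is the standard deviation divided by the mean, and the overall mean is the sample-size-weighted average of the two yearly means. The short sketch below recomputes both from the tabulated values; the variable and function names are illustrative.

```python
# Values copied from the yield statistics table.
n = {"2022": 141, "2023": 197}
mean = {"2022": 3154.30, "2023": 3168.69}  # kg/ha
sd = {"2022": 719.82, "2023": 583.78}  # kg/ha


def cv_percent(std_dev: float, avg: float) -> float:
    """Coefficient of variation as a percentage of the mean."""
    return 100.0 * std_dev / avg


# Overall mean as the sample-size-weighted average of the yearly means.
pooled_mean = sum(n[y] * mean[y] for y in n) / sum(n.values())

cv = {year: cv_percent(sd[year], mean[year]) for year in n}
cv["Total"] = cv_percent(643.05, 3162.69)

print(f"pooled mean: {pooled_mean:.2f} kg/ha")
for label, value in cv.items():
    print(f"CV {label}: {value:.2f} %")
```

The recomputed values agree with the table to two decimal places. The lower coefficient of variation in 2023 goes together with the much higher minimum yield that year (1804.53 versus 510.58 kg/ha).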

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, P.; Lu, B.; Shang, J.; Wang, X.; Hou, Z.; Jin, S.; Yang, Y.; Zang, H.; Ge, J.; Zeng, Z. Ensemble Learning for Oat Yield Prediction Using Multi-Growth Stage UAV Images. Remote Sens. 2024, 16, 4575. https://doi.org/10.3390/rs16234575
