A Multi-Scale Feature Fusion Deep Learning Network for the Extraction of Cropland Based on Landsat Data

Abstract

1. Introduction

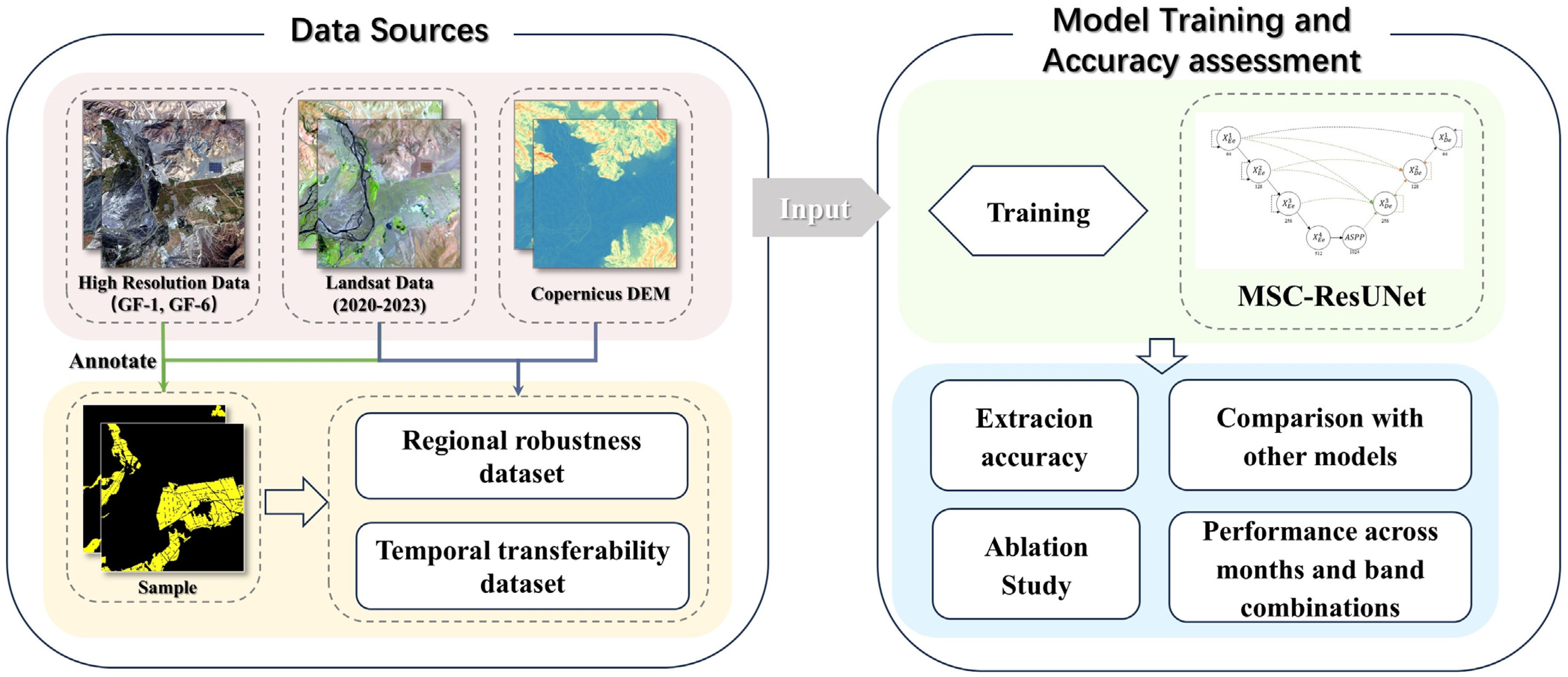

2. Materials and Methods

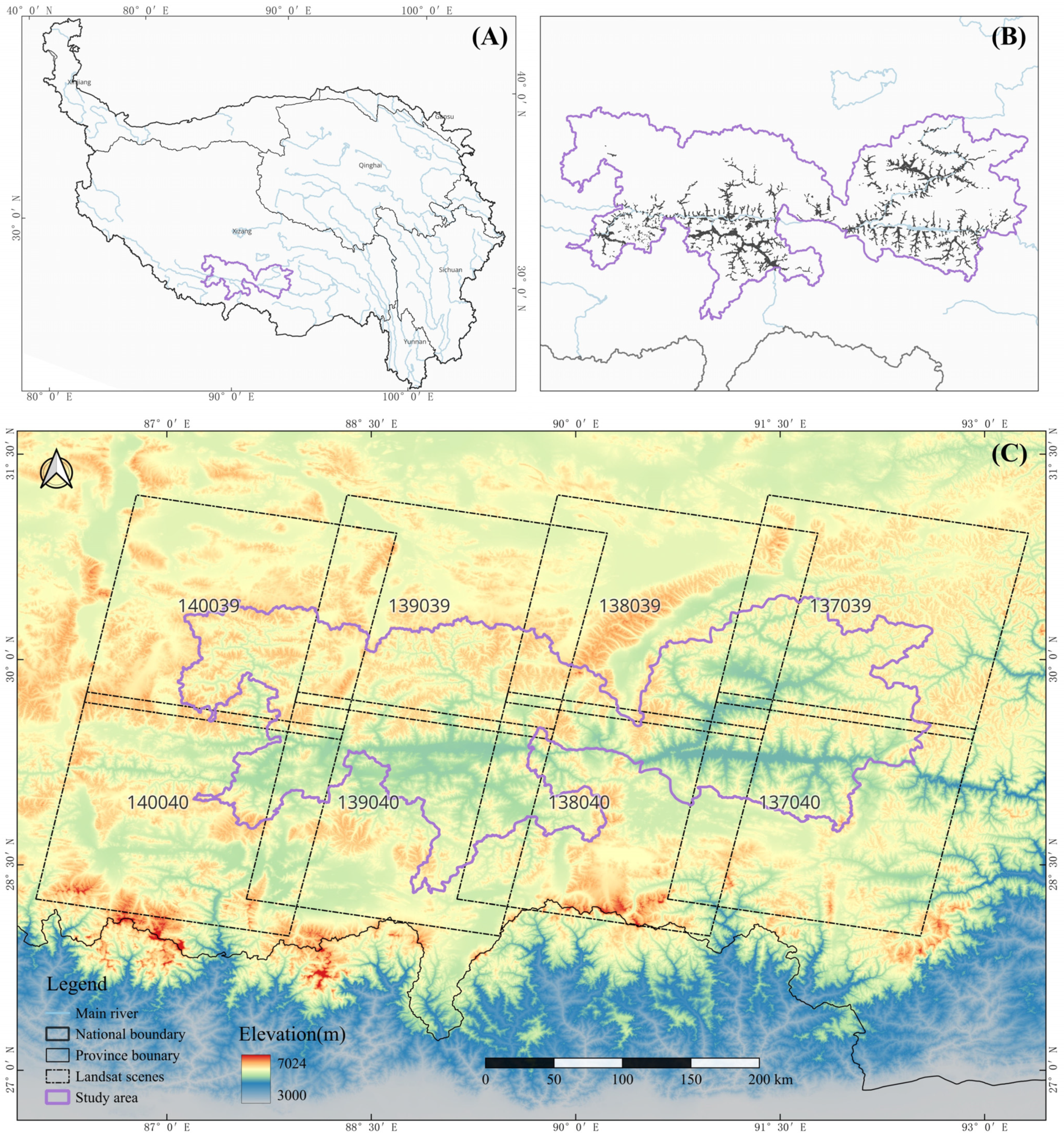

2.1. Study Area

2.2. Dataset

2.2.1. Landsat Data

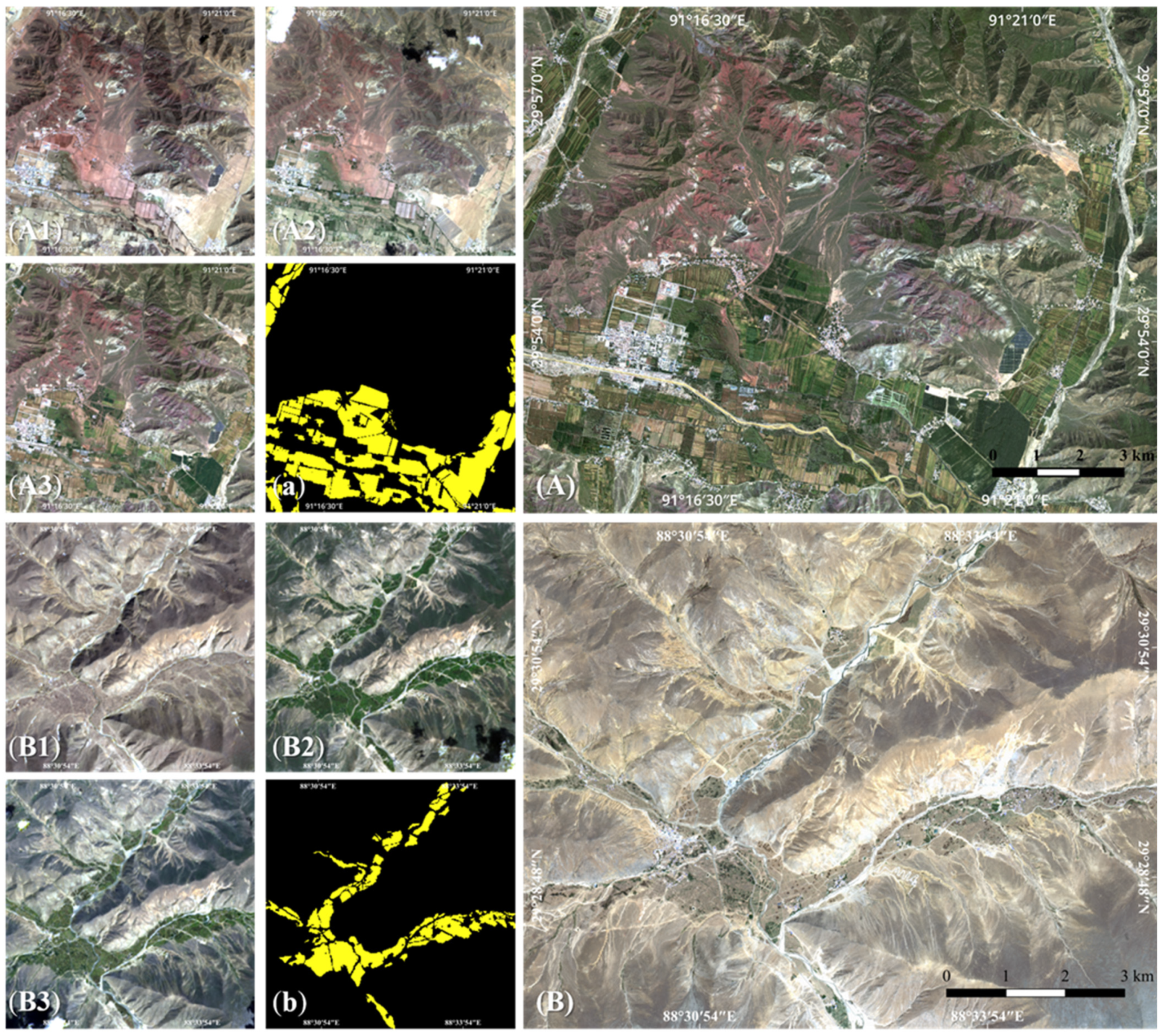

2.2.2. Annotation of Cropland

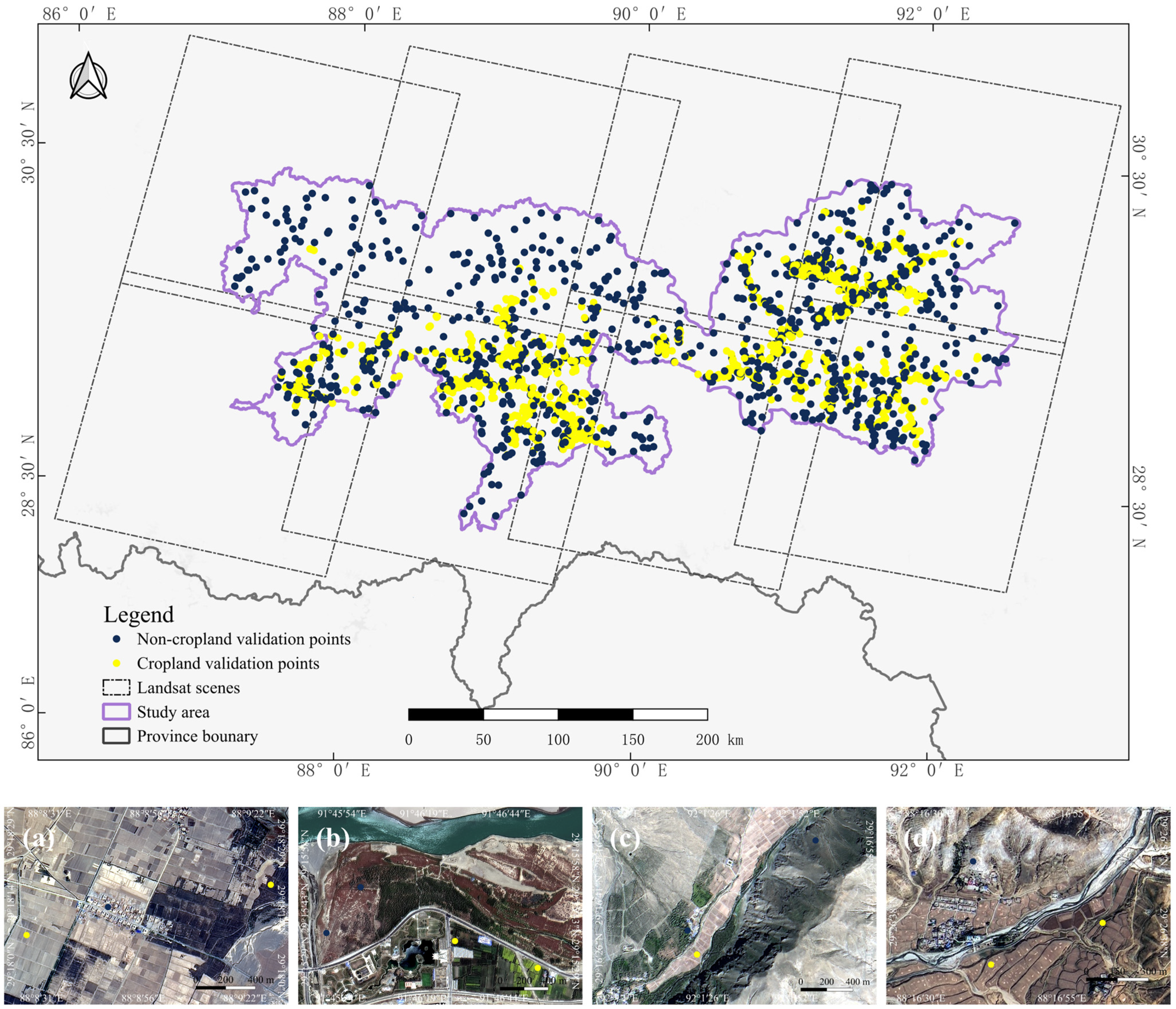

2.2.3. Construction of Dataset

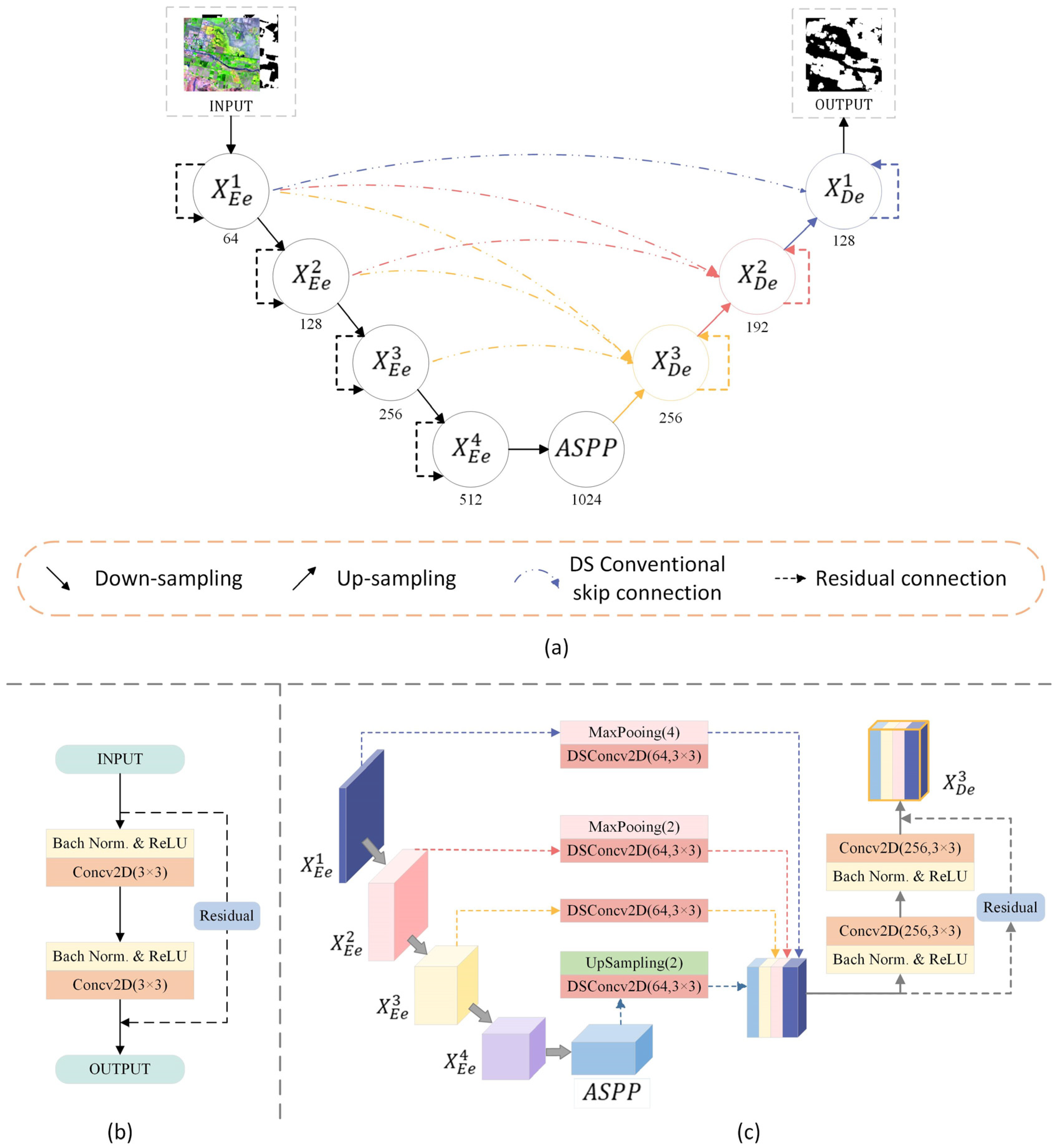

2.3. Network Architecture

2.3.1. Residual Blocks

2.3.2. Depthwise Separable Convolution
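
As a rough illustration of why depthwise separable convolution reduces model size, the sketch below compares weight counts for a standard convolution and its depthwise separable counterpart. The channel sizes (256 in, 256 out), the 3 × 3 kernel, and the function names are illustrative assumptions, not values taken from the network described here; bias terms are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 256 -> 256 channels with a 3 x 3 kernel
standard = standard_conv_params(256, 256, 3)
separable = depthwise_separable_params(256, 256, 3)
print(standard, separable, round(standard / separable, 2))  # 589824 67840 8.69
```

For this assumed layer the separable form needs roughly one ninth of the weights, which is the usual motivation for using it in convolution-heavy modules.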

2.3.3. Multi-Scale Skip Connections

2.3.4. Dice Loss
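
A minimal sketch of the standard soft Dice loss for binary segmentation (cropland versus non-cropland) is given below. It assumes flat sequences of predicted probabilities and 0/1 labels; the smoothing term `eps` and the function name are assumptions made for illustration and may differ from the formulation used for this network.

```python
from typing import Sequence


def dice_loss(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-6) -> float:
    """Soft Dice loss: 1 - 2*|P ∩ T| / (|P| + |T|), smoothed by eps (an assumption)."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


# Perfect overlap gives a loss near 0; no overlap gives a loss near 1.
print(dice_loss([1.0, 1.0, 0.0, 0.0], [1, 1, 0, 0]))
print(dice_loss([1.0, 0.0], [0, 1]))
```

Because the loss is driven by overlap rather than per-pixel accuracy, it is commonly chosen when the target class covers a small share of the image.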

2.3.5. Evaluation Metrics

3. Results

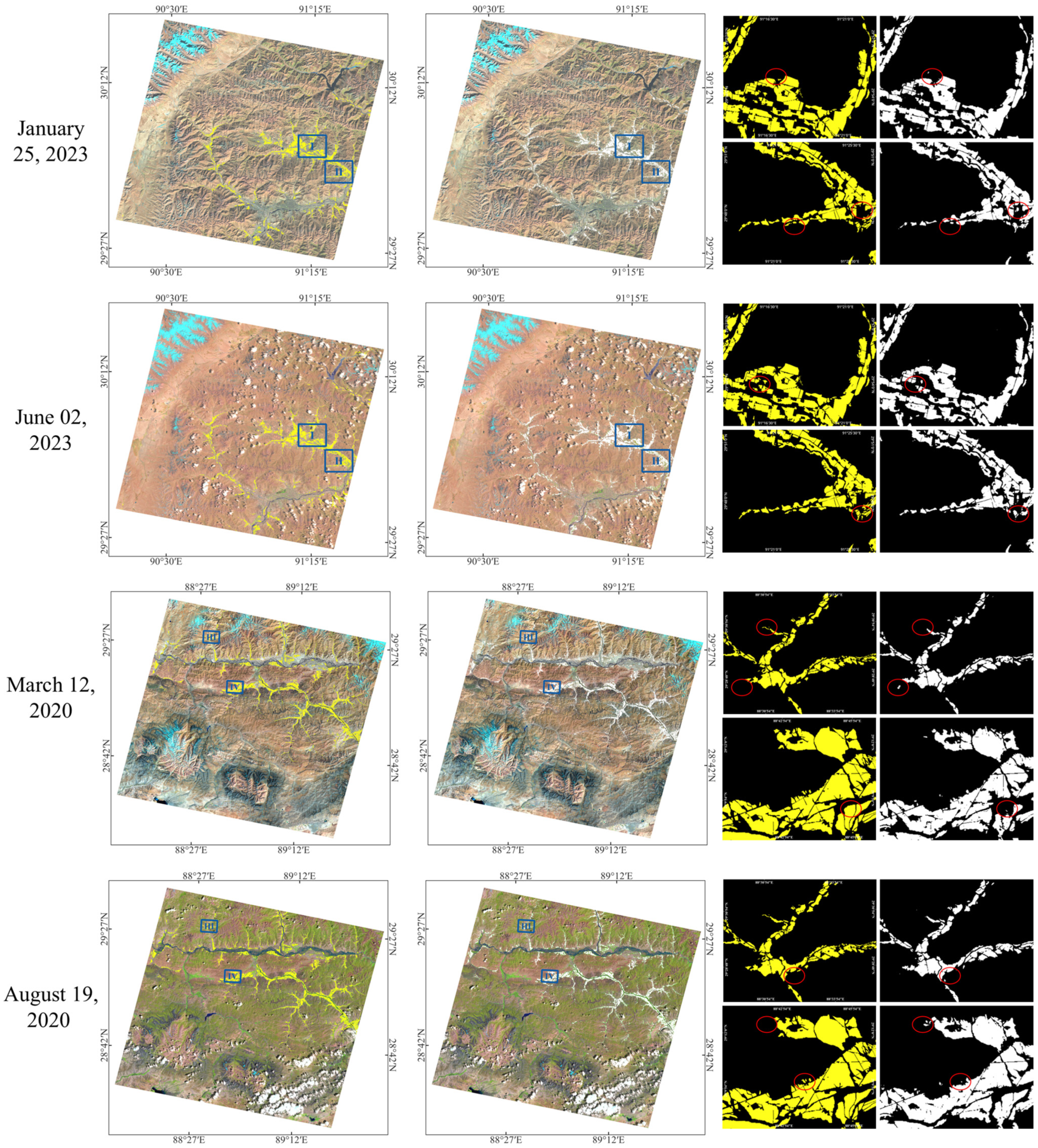

3.1. Performance of MSC-ResUNet

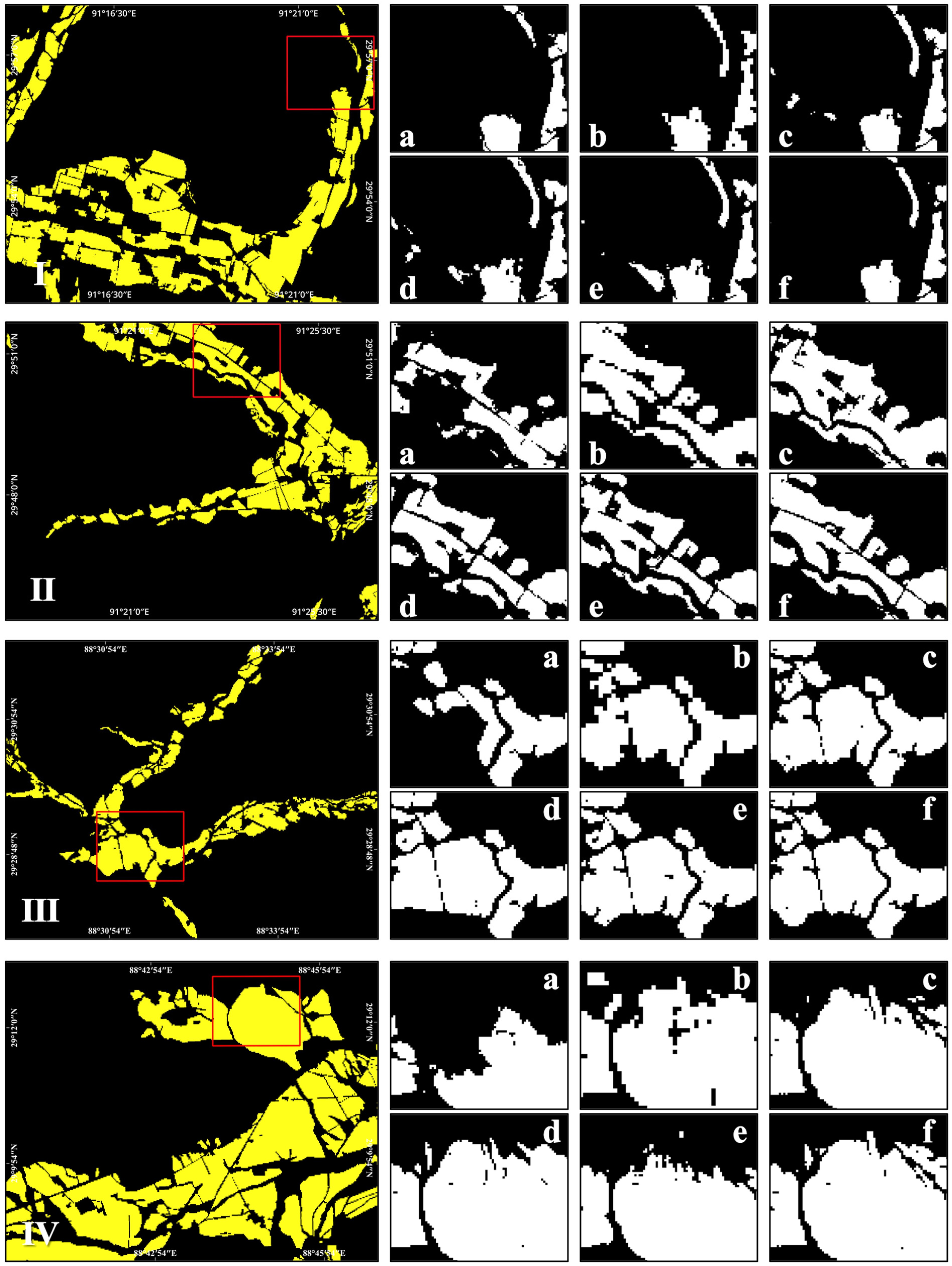

3.2. Comparison with Other Models

3.2.1. Performance Comparisons

3.2.2. Model Parameters and Operational Efficiency

4. Discussion

4.1. Ablation Study

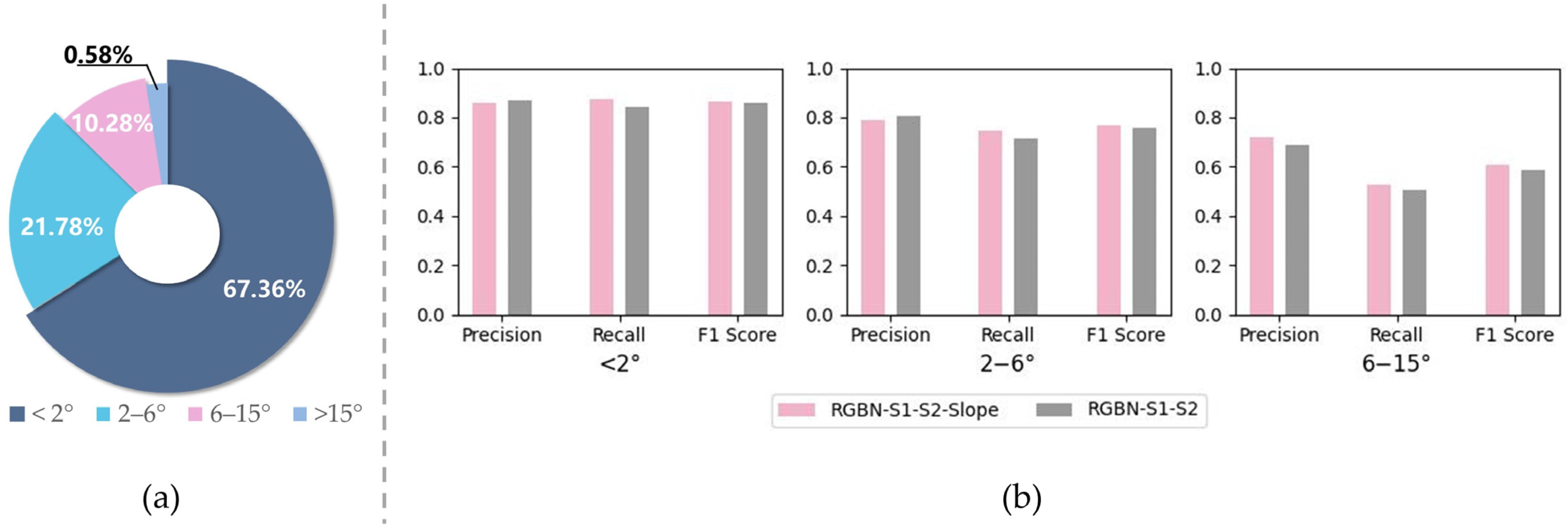

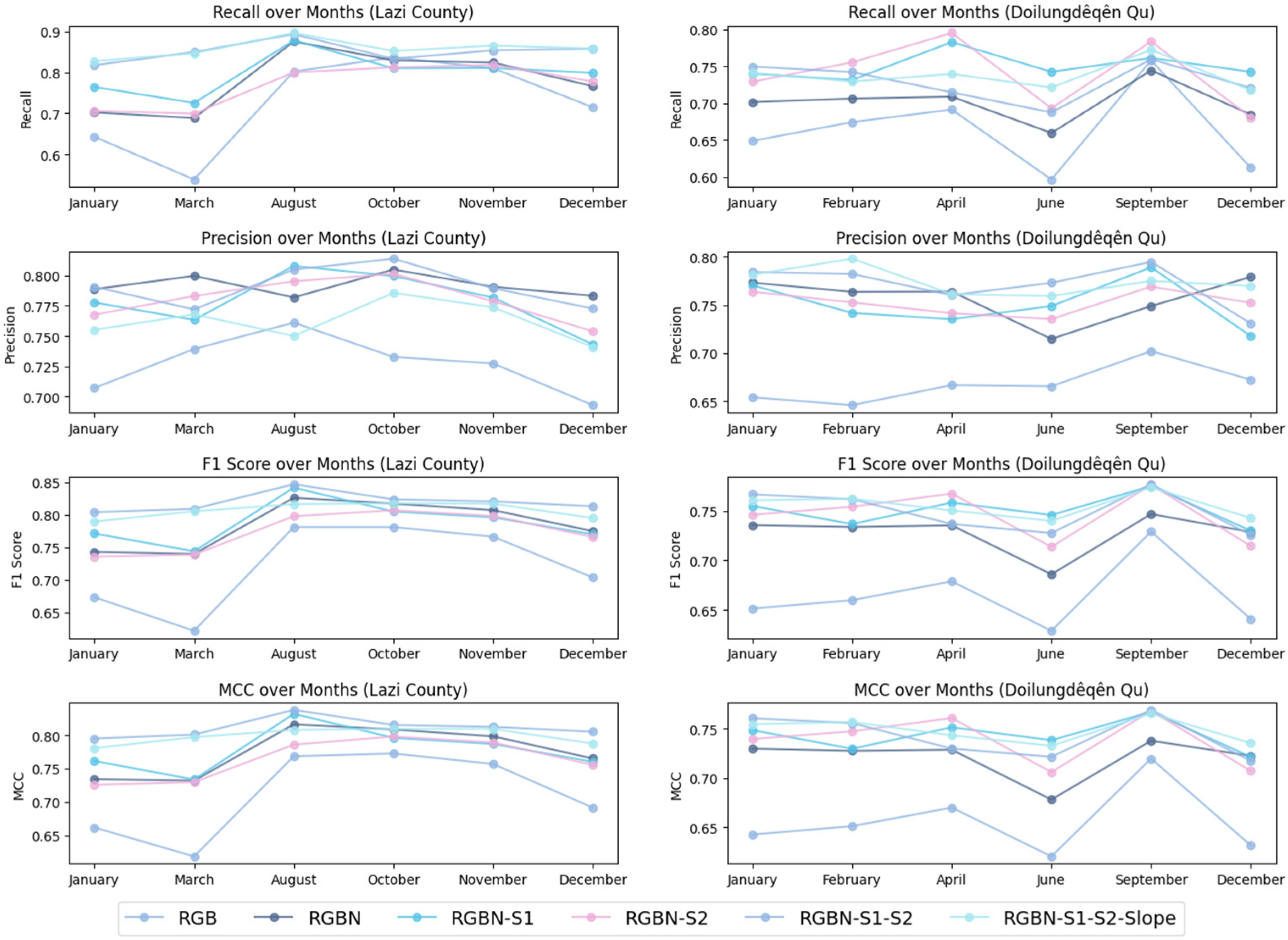

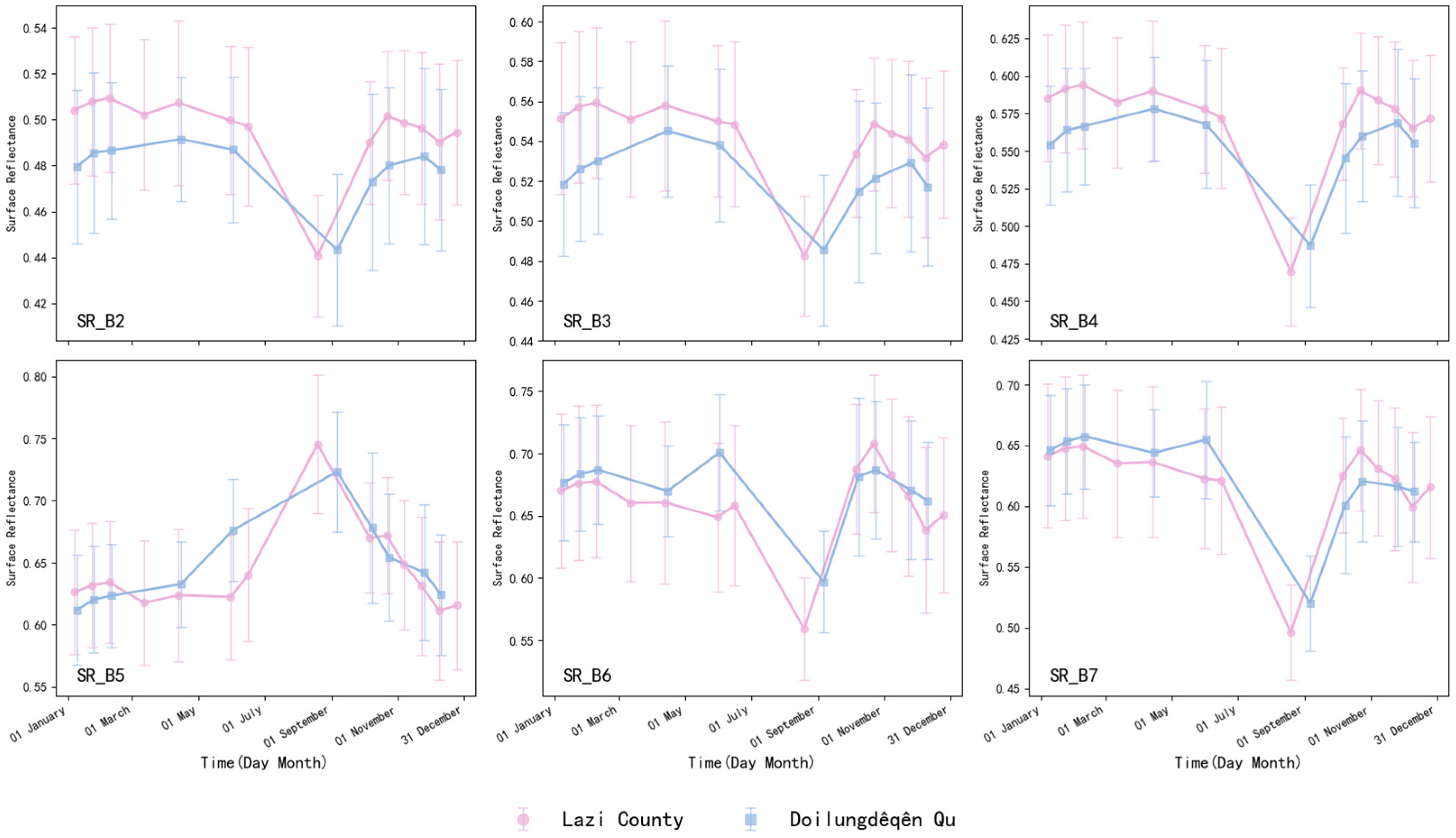

4.2. The Adaptability of the Model to Different Band Combinations

4.3. The Adaptability of the Model to Different Time Phases of Satellite Data

4.4. Advantages and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | | Truth | | | |
|---|---|---|---|---|---|
| | | Cropland | Non-Cropland | Total | Precision |
| Prediction | Cropland | 1289 | 20 | 1309 | 0.985 |
| | Non-Cropland | 34 | 1165 | 1199 | 0.972 |
| Total | | 1323 | 1185 | | |
| Recall | | 0.974 | 0.983 | | |
| MCC | | 0.9795 | | | |
| Overall Accuracy | | 0.9784 | | | |

| | | Truth | | | |
|---|---|---|---|---|---|
| | | Cropland | Non-Cropland | Total | Precision |
| Prediction | Cropland | 2,813,164 | 655,573 | 3,468,737 | 0.811 |
| | Non-Cropland | 530,335 | 55,507,616 | 56,037,951 | 0.991 |
| Total | | 3,343,499 | 56,163,189 | | |
| Recall | | 0.841 | 0.988 | | |
| MCC | | 0.8155 | | | |
| F1 | | 0.8259 | | | |
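
The summary metrics in this confusion matrix can be recomputed from the four pixel counts. The sketch below uses the standard definitions of precision, recall, F1, and the Matthews correlation coefficient (MCC) for the cropland class; the function name and the returned dictionary layout are illustrative choices.

```python
import math


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, F1 and MCC for the positive (cropland) class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}


# Pixel counts read from the confusion matrix above
metrics = binary_metrics(tp=2_813_164, fp=655_573, fn=530_335, tn=55_507_616)
print({name: round(value, 4) for name, value in metrics.items()})
# f1 rounds to 0.8259 and mcc to 0.8155, matching the table
```

Working from raw counts makes it easy to check reported figures and to see how the large non-cropland majority affects each metric.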

| | | Truth | | | |
|---|---|---|---|---|---|
| | | Cropland | Non-Cropland | Total | Precision |
| Prediction | Cropland | 3,714,676 | 678,438 | 4,393,115 | 0.846 |
| | Non-Cropland | 570,082 | 67,978,372 | 68,548,453 | 0.992 |
| Total | | 4,284,758 | 68,656,810 | | |
| Recall | | 0.867 | 0.990 | | |
| MCC | | 0.8471 | | | |
| F1 | | 0.8561 | | | |

| Models | Regional Robustness Dataset | | | | Temporal Transferability Dataset | | | |
|---|---|---|---|---|---|---|---|---|
| | Precision | Recall | F1 | MCC | Precision | Recall | F1 | MCC |
| DeepLabv3+ | 0.727 | 0.676 | 0.701 | 0.684 | 0.812 | 0.763 | 0.786 | 0.774 |
| UNet | 0.668 | 0.847 | 0.747 | 0.736 | 0.842 | 0.778 | 0.809 | 0.798 |
| ResUNet++ | 0.757 | 0.803 | 0.779 | 0.766 | 0.829 | 0.808 | 0.818 | 0.807 |
| MACU-Net | 0.735 | 0.792 | 0.763 | 0.749 | 0.782 | 0.850 | 0.815 | 0.804 |
| HRNet | 0.792 | 0.814 | 0.803 | 0.791 | 0.837 | 0.864 | 0.850 | 0.841 |
| OURS | 0.811 | 0.841 | 0.826 | 0.816 | 0.846 | 0.867 | 0.856 | 0.847 |

| Model | Number of Parameters | FLOPs | Training (ms/Batch) | Prediction (ms/Batch) |
|---|---|---|---|---|
| DeepLabv3+ | 17,882,241 (68.22 MB) | 5.82 G | 198 | 105 |
| UNet | 31,058,693 (118.48 MB) | 10.97 G | 236 | 88 |
| ResUNet++ | 101,994,116 (389.08 MB) | 15.90 G | 1015 | 111 |
| MACU-Net | 20,591,425 (78.55 MB) | 13.49 G | 299 | 93 |
| HRNet | 66,864,449 (255.07 MB) | 16.76 G | 508 | 181 |
| OURS | 35,261,697 (134.51 MB) | 22.42 G | 405 | 99 |
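
The sizes in parentheses appear consistent with storing each parameter as a 32-bit float (4 bytes) and taking 1 MB as 2^20 bytes. That reading is inferred from the numbers rather than stated in the table, so the sketch below treats both values as assumptions; the function name is illustrative.

```python
def params_to_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Model size in MB, assuming float32 weights and 1 MB = 2**20 bytes."""
    return num_params * bytes_per_param / 2**20


# Parameter counts copied from the table above
param_counts = {
    "DeepLabv3+": 17_882_241,
    "UNet": 31_058_693,
    "ResUNet++": 101_994_116,
    "MACU-Net": 20_591_425,
    "HRNet": 66_864_449,
    "OURS": 35_261_697,
}

for model, count in param_counts.items():
    print(f"{model}: {params_to_mb(count):.2f} MB")
```

Under these assumptions the computed sizes agree with the table to two decimal places, for example 68.22 MB for DeepLabv3+ and 134.51 MB for the proposed model.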

| No. | Main Model | ASPP (Conv / DS Conv), MSC | Precision | Recall | F1 | MCC |
|---|---|---|---|---|---|---|
| 1 | UNet | | 0.7924 | 0.8250 | 0.8084 | 0.7969 |
| 2 | UNet | | 0.7917 | 0.8265 | 0.8087 | 0.7973 |
| 3 | UNet | ✓ ✓ | 0.7891 | 0.8350 | 0.8114 | 0.8002 |
| 4 | ResUNet | | 0.7752 | 0.8247 | 0.7992 | 0.7873 |
| 5 | ResUNet | ✓ | 0.8062 | 0.8386 | 0.8221 | 0.8114 |
| 6 | ResUNet | ✓ ✓ | 0.7807 | 0.7976 | 0.7891 | 0.7764 |
| 7 | ResUNet | ✓ ✓ | 0.8110 | 0.8414 | 0.8259 | 0.8155 |

| Band Combinations | Used Bands |
|---|---|
| RGB | True-color channels (OLI bands 2–4) |
| RGBN | True-color and near-infrared channels (OLI bands 2–5) |
| RGBN-S1 | True-color, near-infrared, and short-wave infrared 1 channels (OLI bands 2–6) |
| RGBN-S2 | True-color, near-infrared, and short-wave infrared 2 channels (OLI bands 2–5 and 7) |
| RGBN-S1-S2 | True-color, near-infrared, short-wave infrared 1, and short-wave infrared 2 channels (OLI bands 2–7) |
| RGBN-S1-S2-Slope | True-color, near-infrared, short-wave infrared 1, and short-wave infrared 2 channels, plus slope |
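
The band combinations in this table can be written as a small lookup that maps each name to its input layers. In the sketch below, the dictionary layout, the `"slope"` key, and the helper names are illustrative assumptions; the OLI band numbers for the last combination are inferred from the RGBN-S1-S2 row, since the table does not list them explicitly.

```python
# OLI band numbers per combination; "slope" stands for the terrain slope layer.
BAND_COMBINATIONS = {
    "RGB": [2, 3, 4],
    "RGBN": [2, 3, 4, 5],
    "RGBN-S1": [2, 3, 4, 5, 6],
    "RGBN-S2": [2, 3, 4, 5, 7],
    "RGBN-S1-S2": [2, 3, 4, 5, 6, 7],
    "RGBN-S1-S2-Slope": [2, 3, 4, 5, 6, 7, "slope"],  # bands inferred, see above
}


def num_input_channels(combination: str) -> int:
    """Number of input channels a model would need for this combination."""
    return len(BAND_COMBINATIONS[combination])


def select_layers(layers: dict, combination: str) -> list:
    """Pick the layers for one combination from a {band_key: data} mapping."""
    return [layers[key] for key in BAND_COMBINATIONS[combination]]


# Hypothetical per-band data, here just placeholder strings
layers = {key: f"layer_{key}" for key in [2, 3, 4, 5, 6, 7, "slope"]}
print(num_input_channels("RGBN-S1-S2-Slope"))
print(select_layers(layers, "RGBN-S2"))
```

Keeping the combinations in one mapping makes it straightforward to loop over them when comparing model inputs.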

| Band Combinations | Precision | Recall | F1 | MCC |
|---|---|---|---|---|
| RGB | 0.732 | 0.730 | 0.7314 | 0.7155 |
| RGBN | 0.798 | 0.755 | 0.7757 | 0.7630 |
| RGBN-S1 | 0.796 | 0.809 | 0.8023 | 0.7904 |
| RGBN-S2 | 0.805 | 0.779 | 0.7920 | 0.7799 |
| RGBN-S1-S2 | 0.814 | 0.810 | 0.8120 | 0.8009 |
| RGBN-S1-S2-Slope | 0.811 | 0.841 | 0.8259 | 0.8155 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, H.; He, G.; Peng, X.; Wang, G.; Yin, R. A Multi-Scale Feature Fusion Deep Learning Network for the Extraction of Cropland Based on Landsat Data. Remote Sens. 2024, 16, 4071. https://doi.org/10.3390/rs16214071
