MPG-Net: A Semantic Segmentation Model for Extracting Aquaculture Ponds in Coastal Areas from Sentinel-2 MSI and Planet SuperDove Images

Abstract

1. Introduction

2. Materials

2.1. Study Area

2.2. Data

3. Methodology

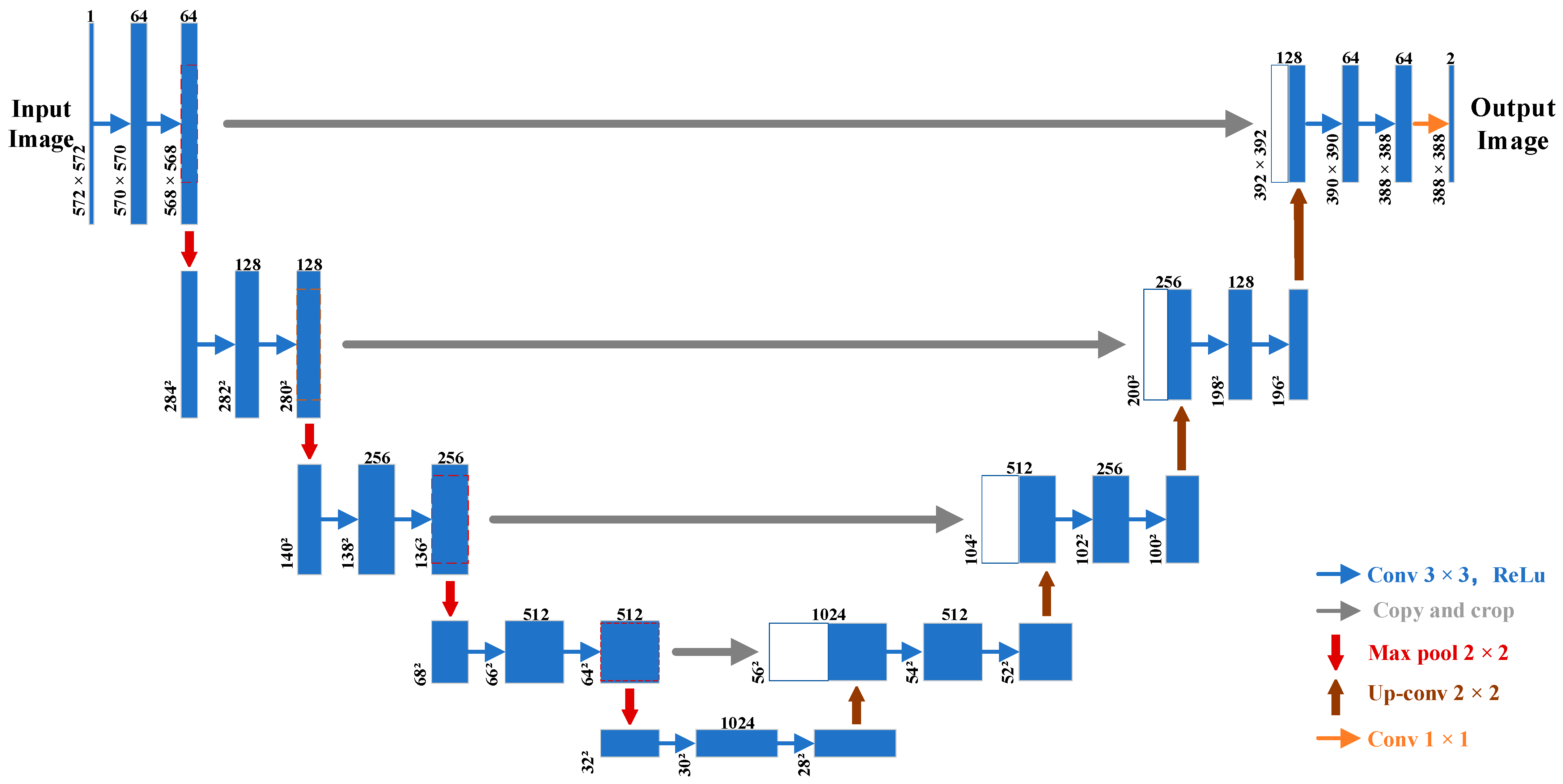

3.1. MPG-Net Model

3.2. The MS Structure

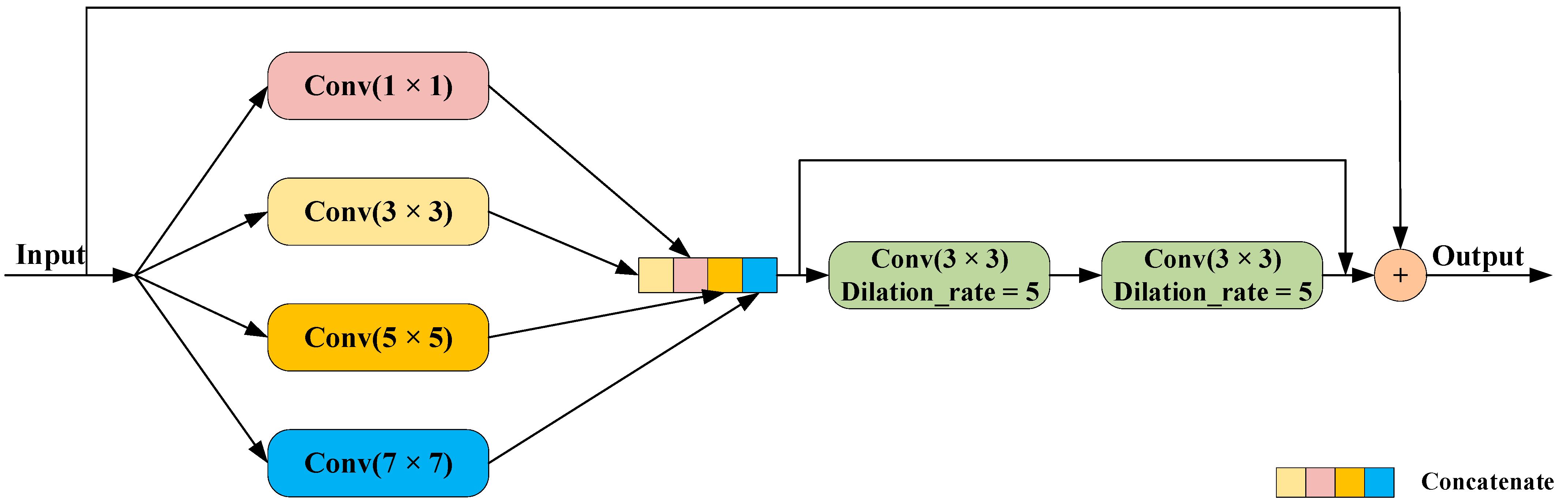

3.3. The PGC Structure

3.4. Experimental Setup

3.4.1. Parameter Settings

3.4.2. The Construction Process of the Aquaculture Pond Extraction Model

3.4.3. Accuracy Assessment

4. Results and Discussion

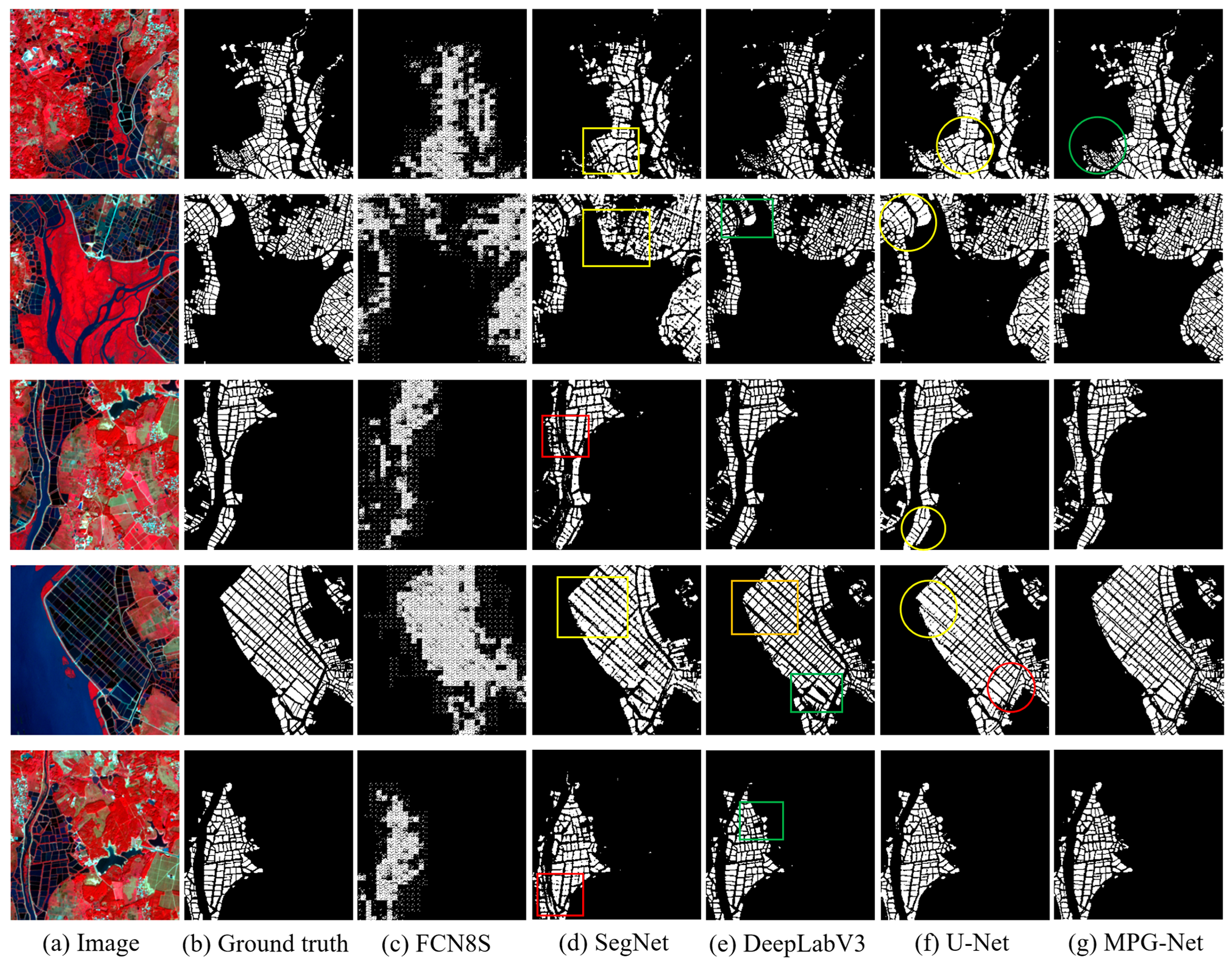

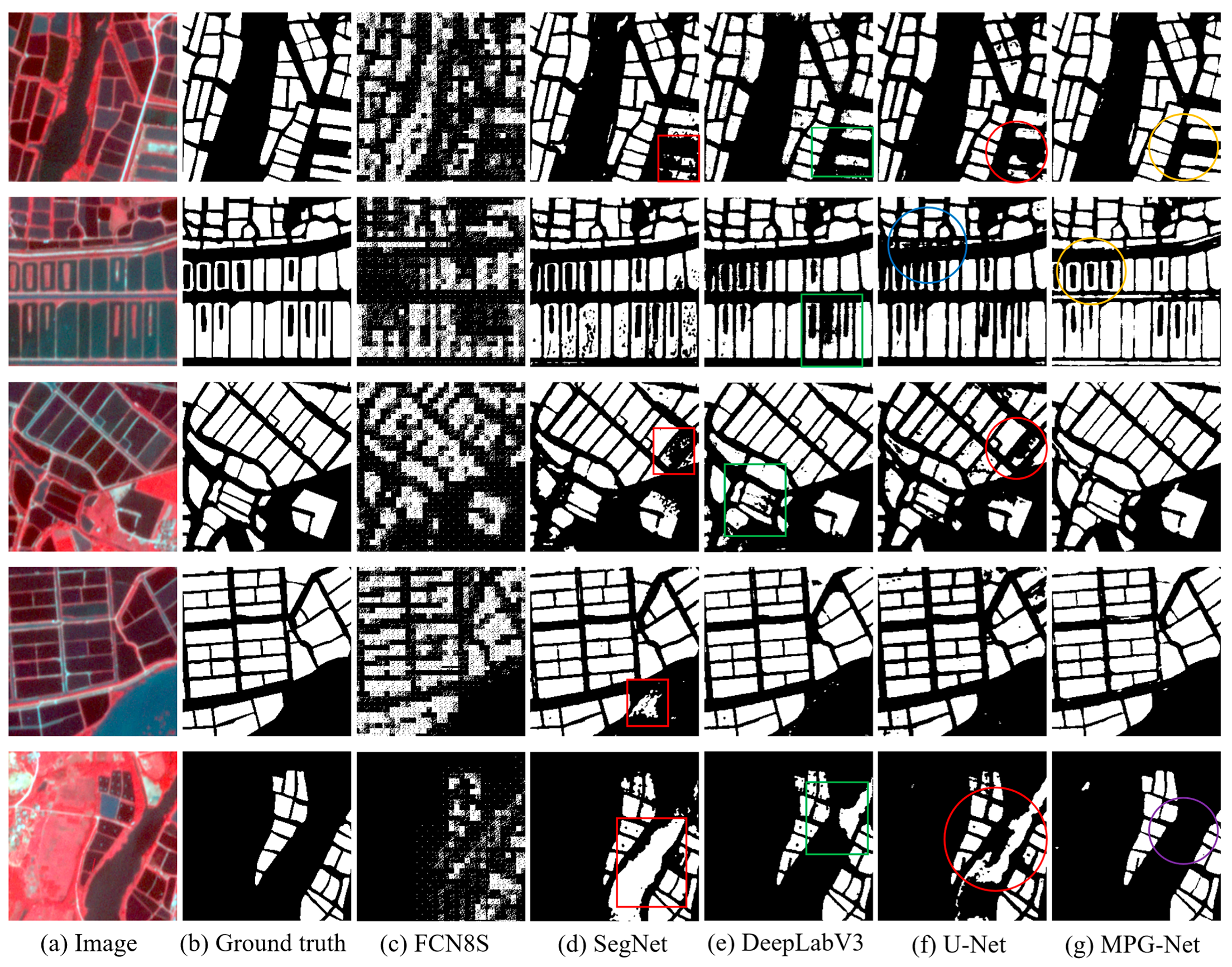

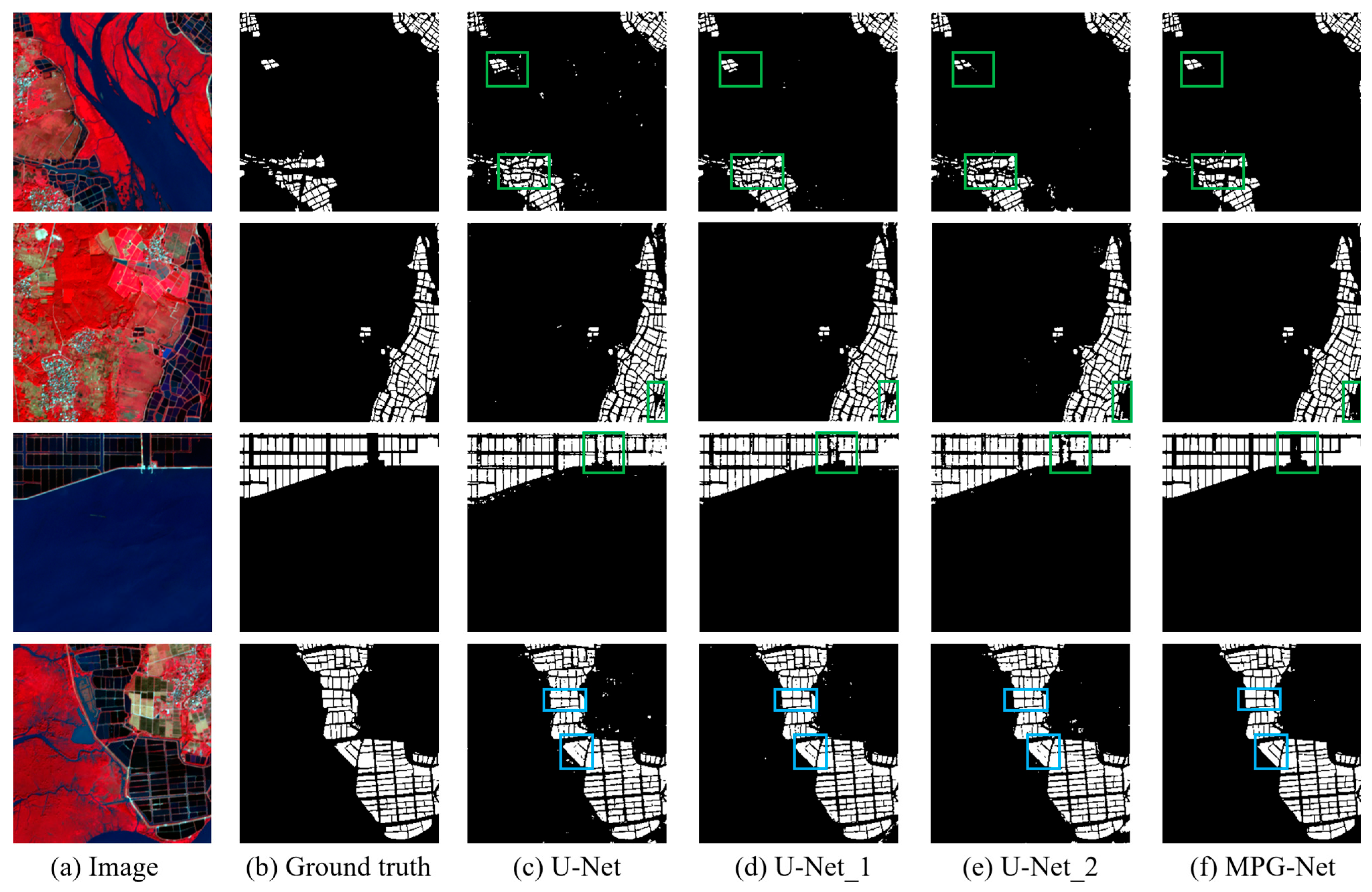

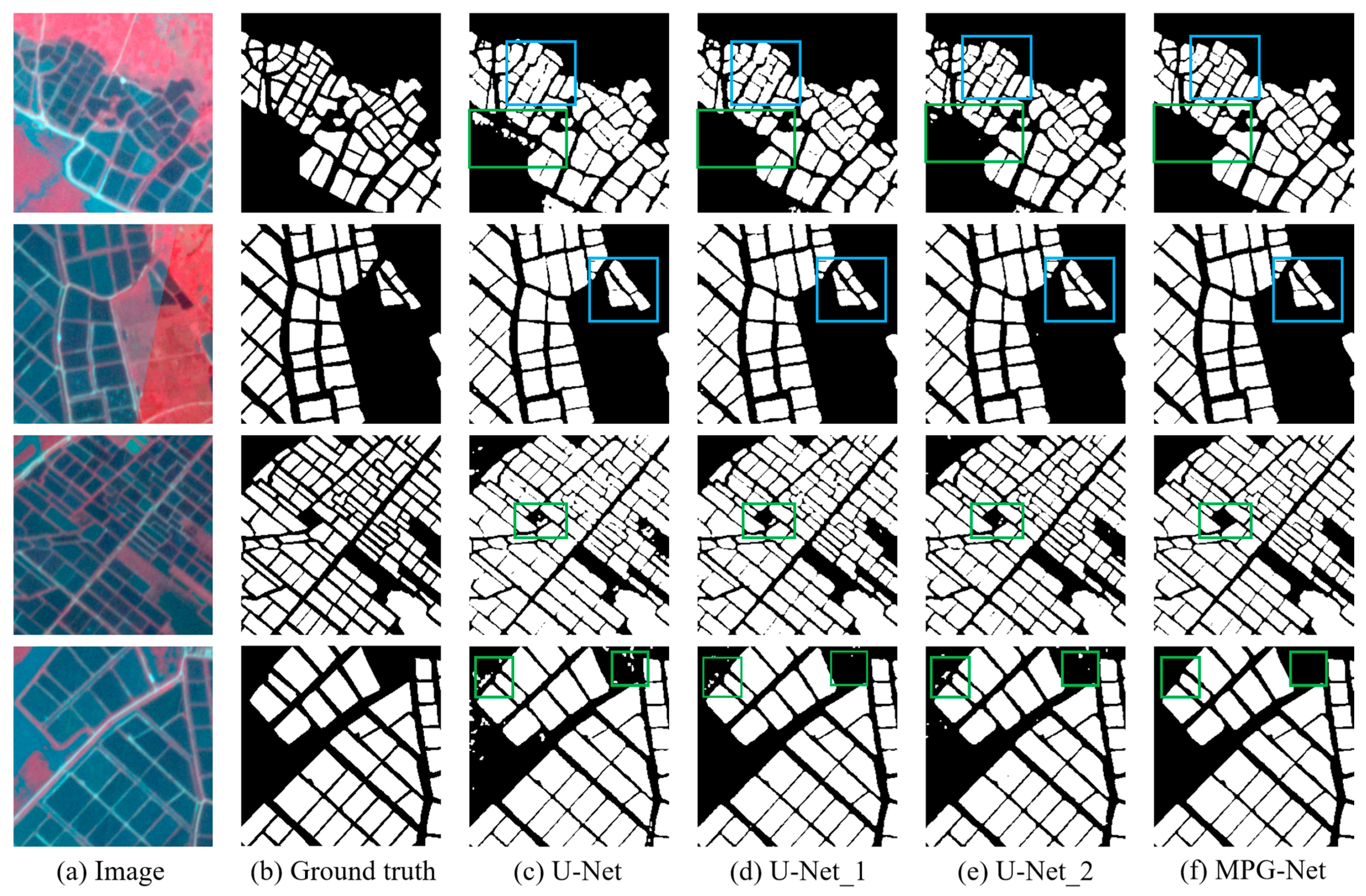

4.1. Comparison Experiments

4.2. Ablation Experiments

4.3. Applicability of Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Band | Wavelength (nm) | Spatial Resolution (m) |
|---|---|---|---|
| Sentinel-2A MSI | Band2 (Blue) | 458–523 | 10 |
| | Band3 (Green) | 543–578 | 10 |
| | Band4 (Red) | 650–680 | 10 |
| | Band8 (NIR) | 785–900 | 10 |
| Planet SuperDove | Band2 (Blue) | 465–515 | 3 |
| | Band3 (Green) | 513–549 | 3 |
| | Band6 (Red) | 650–680 | 3 |
| | Band8 (NIR) | 845–885 | 3 |
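Both sensors contribute the same four spectral channels (Blue, Green, Red, NIR), but under different band numbers. The sketch below picks those channels out of a scene in a fixed order. The sensor keys, the helper `select_bands` and the storage layout (band *k* stored at position *k* − 1) are all hypothetical assumptions, not taken from the paper.

```python
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")

# Hypothetical sensor keys; band numbers follow the table (Blue, Green, Red, NIR).
BAND_NUMBERS: Dict[str, List[int]] = {
    "sentinel2_msi": [2, 3, 4, 8],     # B2, B3, B4, B8 at 10 m
    "planet_superdove": [2, 3, 6, 8],  # Blue, Green, Red, NIR at 3 m
}


def select_bands(bands: Sequence[T], sensor: str) -> List[T]:
    """Return the Blue, Green, Red and NIR layers of a scene, in that order.

    Assumes bands[k - 1] holds band number k (a 1-based, gap-free stack);
    real product layouts may differ and would need their own index map.
    """
    if sensor not in BAND_NUMBERS:
        raise ValueError(f"unknown sensor: {sensor!r}")
    return [bands[b - 1] for b in BAND_NUMBERS[sensor]]
```

With this fixed channel order, Sentinel-2 and SuperDove tiles can share one 4-channel network input, even though their pixel sizes (10 m vs. 3 m) differ.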

| Model Parameters | Optimal Parameters |
|---|---|
| Loss function | binary_crossentropy |
| Optimizer | Adam |
| Activation | Sigmoid |
| Initial learning rate | 0.0001 |
| Epoch | 100 |
| Batch size | 8 |
| Dropout | 0.3 |
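The parameter table pairs a sigmoid output with binary cross-entropy, which is the usual setup for a two-class (pond vs. background) pixel map. The sketch below computes that per-pixel loss in plain Python and records the tabulated hyperparameters in a dictionary. The function names and the `CONFIG` layout are illustrative; the training framework used by the authors is not stated here.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid (the output activation in the table)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def binary_crossentropy(y_true: Sequence[int], logits: Sequence[float], eps: float = 1e-7) -> float:
    """Mean per-pixel binary cross-entropy on sigmoid outputs.

    y_true holds 1 for pond pixels and 0 for background; eps keeps the
    predicted probability away from 0 and 1 so that log() stays finite.
    """
    total = 0.0
    for y, z in zip(y_true, logits):
        p = min(max(sigmoid(z), eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hyperparameters transcribed from the table; the dictionary itself is illustrative.
CONFIG = {"optimizer": "Adam", "learning_rate": 1e-4, "epochs": 100, "batch_size": 8, "dropout": 0.3}
```

An undecided prediction (logit 0, probability 0.5) costs ln 2 ≈ 0.693 per pixel whatever the label is. Confident correct predictions push the loss toward zero.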

| Real Situation | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive | TP | FN |
| Negative | FP | TN |
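The accuracy assessment builds on this confusion matrix. The sketch below assumes the standard pixel-level definitions: precision = TP/(TP+FP), recall = TP/(TP+FN), IoU = TP/(TP+FP+FN), and F1 as the harmonic mean of precision and recall. It counts TP, FN, FP and TN over two flattened binary masks and derives the four metrics. The helpers `confusion_counts` and `segmentation_metrics` are hypothetical names.

```python
from typing import Dict, Sequence


def confusion_counts(truth: Sequence[int], pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, FN, FP and TN over flattened binary masks (1 = pond)."""
    if len(truth) != len(pred):
        raise ValueError("masks must have the same number of pixels")
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for t, p in zip(truth, pred):
        if t and p:
            counts["TP"] += 1
        elif t:
            counts["FN"] += 1  # pond pixel missed by the model
        elif p:
            counts["FP"] += 1  # background pixel labelled as pond
        else:
            counts["TN"] += 1
    return counts


def segmentation_metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Precision, recall, IoU and F1 for the positive (pond) class."""
    tp, fp, fn = c["TP"], c["FP"], c["FN"]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "iou": iou, "f1": f1}
```

For example, take the masks `[1, 1, 1, 0, 0, 0, 1, 0]` (truth) and `[1, 1, 0, 0, 1, 0, 1, 0]` (prediction). They give TP = 3, FN = 1, FP = 1 and TN = 3, so precision = recall = F1 = 0.75 and IoU = 0.6.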

| Experimental Details | Dataset | FCN8S | SegNet | DeepLabV3 | U-Net | MPG-Net |
|---|---|---|---|---|---|---|
| Number of parameters | | 134M | 29.5M | 44M | 31M | 10M |
| Training time | Sentinel-2 training set | 480.2 min | 275.9 min | 313.7 min | 276.4 min | 269.7 min |
| | Planet training set | 495.6 min | 280.5 min | 319.4 min | 282.2 min | 273.6 min |
| Average testing time | Sentinel-2 testing set | 78.3 ms | 31.6 ms | 37.6 ms | 32.1 ms | 30.3 ms |
| | Planet testing set | 78.0 ms | 31.8 ms | 37.5 ms | 32.3 ms | 30.0 ms |
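The efficiency table is easier to read as relative savings. The sketch below transcribes the parameter counts and Sentinel-2 training times, then expresses MPG-Net's figures as a fractional reduction against each baseline. The dictionary names and the helper `relative_reduction` are illustrative, not from the paper.

```python
# Values transcribed from the efficiency table: parameters in millions, training time in minutes.
PARAMS_M = {"FCN8S": 134, "SegNet": 29.5, "DeepLabV3": 44, "U-Net": 31, "MPG-Net": 10}
TRAIN_MIN_S2 = {"FCN8S": 480.2, "SegNet": 275.9, "DeepLabV3": 313.7, "U-Net": 276.4, "MPG-Net": 269.7}


def relative_reduction(baseline: float, model: float) -> float:
    """Fractional reduction of `model` relative to `baseline` (0.25 means 25% smaller)."""
    return (baseline - model) / baseline


for name in ("FCN8S", "SegNet", "DeepLabV3", "U-Net"):
    fewer_params = relative_reduction(PARAMS_M[name], PARAMS_M["MPG-Net"])
    less_time = relative_reduction(TRAIN_MIN_S2[name], TRAIN_MIN_S2["MPG-Net"])
    print(f"{name}: {fewer_params:.1%} fewer parameters, {less_time:.1%} less Sentinel-2 training time")
```

Against U-Net, MPG-Net has about 67.7% fewer parameters but trains only about 2.4% faster on the Sentinel-2 set. Parameter count therefore does not track training time closely for these models.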

| Model | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) |
|---|---|---|---|---|
| FCN8S | 60.13 | 63.44 | 44.66 | 61.74 |
| SegNet | 87.61 | 89.49 | 79.15 | 88.54 |
| U-Net | 92.48 | 86.72 | 81.01 | 89.51 |
| DeepLabV3 | 93.37 | 90.38 | 84.93 | 91.85 |
| MPG-Net | 94.57 | 92.73 | 88.04 | 93.64 |
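All four metrics come from the same TP, FP and FN counts, so IoU and F1 can be recovered from precision and recall alone. This gives a quick consistency check on any table row. The identity 1/IoU = 1/P + 1/R − 1 follows directly from the definitions. It holds exactly only when the metrics are computed from pooled pixel counts; metrics averaged per image need not satisfy it. The helper names below are illustrative.

```python
def f1_from_pr(p: float, r: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def iou_from_pr(p: float, r: float) -> float:
    """IoU implied by precision and recall computed from the same TP/FP/FN counts.

    From 1/IoU = (TP + FP + FN) / TP = 1/P + 1/R - 1.
    """
    return p * r / (p + r - p * r)


# MPG-Net row of the comparison table above, as fractions rather than percent.
p, r = 0.9457, 0.9273
print(f"F1 = {f1_from_pr(p, r):.2%}, IoU = {iou_from_pr(p, r):.2%}")  # → F1 = 93.64%, IoU = 88.04%
```

The recomputed values match the reported F1-score and IoU for MPG-Net to two decimals.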

| Model | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) |
|---|---|---|---|---|
| U-Net | 92.48 | 86.72 | 81.01 | 89.51 |
| U-Net_1 | 93.96 | 89.34 | 84.49 | 91.59 |
| U-Net_2 | 93.65 | 91.61 | 86.25 | 92.62 |
| MPG-Net | 94.57 | 92.73 | 88.04 | 93.64 |

| Model | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) |
|---|---|---|---|---|
| U-Net | 90.73 | 92.48 | 84.51 | 91.60 |
| U-Net_1 | 93.87 | 92.60 | 87.32 | 93.23 |
| U-Net_2 | 94.21 | 92.77 | 87.77 | 93.48 |
| MPG-Net | 95.32 | 93.16 | 89.09 | 94.23 |
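The ablation tables compare U-Net, two intermediate variants (U-Net_1 and U-Net_2) and MPG-Net. What distinguishes U-Net_1 from U-Net_2 is not stated in this extract. The sketch below therefore only computes each model's change in percentage points relative to the U-Net baseline. It keeps the two tables in the order they appear and leaves their datasets unlabelled. `ABLATION` and `gains` are illustrative names.

```python
from typing import Dict, Tuple

METRICS = ("Precision", "Recall", "IoU", "F1")
Row = Tuple[float, ...]

# The two ablation tables (percent), in the order they appear above.
ABLATION = [
    {"U-Net": (92.48, 86.72, 81.01, 89.51), "U-Net_1": (93.96, 89.34, 84.49, 91.59),
     "U-Net_2": (93.65, 91.61, 86.25, 92.62), "MPG-Net": (94.57, 92.73, 88.04, 93.64)},
    {"U-Net": (90.73, 92.48, 84.51, 91.60), "U-Net_1": (93.87, 92.60, 87.32, 93.23),
     "U-Net_2": (94.21, 92.77, 87.77, 93.48), "MPG-Net": (95.32, 93.16, 89.09, 94.23)},
]


def gains(table: Dict[str, Row], baseline: str = "U-Net") -> Dict[str, Row]:
    """Percentage-point change of every model relative to the baseline row."""
    base = table[baseline]
    return {name: tuple(round(v - b, 2) for v, b in zip(vals, base))
            for name, vals in table.items() if name != baseline}


for i, table in enumerate(ABLATION, start=1):
    for name, delta in gains(table).items():
        print(f"table {i}, {name} vs U-Net: " + ", ".join(f"{m} {d:+.2f}" for m, d in zip(METRICS, delta)))
```

In both tables each successive variant raises IoU and F1 above the previous one. The full MPG-Net gains 7.03 and 4.58 IoU points over U-Net in the first and second table, respectively.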

| | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) | Area (km²) |
|---|---|---|---|---|---|
| Ground Truth | 100 | 100 | 100 | 100 | 9.27 |
| Planet Image | 93.72 | 91.41 | 86.13 | 92.55 | 9.07 |
| Sentinel-2 Image | 90.24 | 89.92 | 81.95 | 90.08 | 8.84 |

| | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) | Area (km²) |
|---|---|---|---|---|---|
| Ground Truth | 100 | 100 | 100 | 100 | 16.48 |
| Planet Image | 92.68 | 91.98 | 86.01 | 92.48 | 16.23 |
| Sentinel-2 Image | 91.05 | 90.03 | 82.71 | 90.53 | 16.88 |
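The two area tables compare extracted pond area with ground truth. Suppose area is obtained by multiplying the number of pond pixels by the pixel footprint: 100 m² for a 10 m Sentinel-2 pixel and 9 m² for a 3 m SuperDove pixel. The sketch below shows that conversion with the hypothetical helper `area_km2`, then computes the signed relative error of each reported area against ground truth.

```python
def area_km2(pond_pixels: int, resolution_m: float) -> float:
    """Area covered by `pond_pixels` square pixels with side length `resolution_m` metres."""
    return pond_pixels * resolution_m ** 2 / 1e6


def relative_error(estimate: float, reference: float) -> float:
    """Signed error of `estimate` as a fraction of `reference`."""
    return (estimate - reference) / reference


# Areas (km²) transcribed from the two area tables, in the order they appear.
AREAS = [
    {"Ground Truth": 9.27, "Planet": 9.07, "Sentinel-2": 8.84},
    {"Ground Truth": 16.48, "Planet": 16.23, "Sentinel-2": 16.88},
]

for i, row in enumerate(AREAS, start=1):
    for sensor in ("Planet", "Sentinel-2"):
        err = relative_error(row[sensor], row["Ground Truth"])
        print(f"area {i}, {sensor}: {err:+.2%}")
```

For the reported values this gives -2.16% (Planet) and -4.64% (Sentinel-2) for the first area, and -1.52% and +2.43% for the second.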

| Model | Precision (%) | Recall (%) | IoU (%) | F1-Score (%) |
|---|---|---|---|---|
| FCN8S | 73.68 | 83.15 | 64.12 | 78.13 |
| SegNet | 88.29 | 91.03 | 81.22 | 89.64 |
| U-Net | 90.73 | 92.48 | 84.51 | 91.60 |
| DeepLabV3 | 91.58 | 93.02 | 85.70 | 92.29 |
| MPG-Net | 95.32 | 93.16 | 89.09 | 94.23 |

| Model | Mean Precision (%) | Mean Recall (%) | Mean IoU (%) | Mean F1-Score (%) |
|---|---|---|---|---|
| FCN8S | 66.91 | 73.30 | 54.39 | 69.94 |
| SegNet | 87.95 | 90.26 | 80.19 | 89.09 |
| U-Net | 91.61 | 89.60 | 82.76 | 90.56 |
| DeepLabV3 | 92.48 | 91.70 | 85.32 | 92.07 |
| MPG-Net | 94.95 | 92.95 | 88.57 | 93.94 |
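The mean table appears to be the element-wise average of the two per-dataset comparison tables. The sketch below recomputes those averages from the transcribed rows and reports the largest deviation per model. If the assumption holds, no deviation should exceed the 0.005-point rounding margin. The names `FIRST`, `SECOND`, `MEAN_TABLE` and `mean_rows` are illustrative.

```python
from typing import Dict, Tuple

Row = Tuple[float, ...]  # Precision, Recall, IoU, F1 (percent)

# Rows of the two per-dataset comparison tables, in the order they appear.
FIRST: Dict[str, Row] = {
    "FCN8S": (60.13, 63.44, 44.66, 61.74), "SegNet": (87.61, 89.49, 79.15, 88.54),
    "U-Net": (92.48, 86.72, 81.01, 89.51), "DeepLabV3": (93.37, 90.38, 84.93, 91.85),
    "MPG-Net": (94.57, 92.73, 88.04, 93.64),
}
SECOND: Dict[str, Row] = {
    "FCN8S": (73.68, 83.15, 64.12, 78.13), "SegNet": (88.29, 91.03, 81.22, 89.64),
    "U-Net": (90.73, 92.48, 84.51, 91.60), "DeepLabV3": (91.58, 93.02, 85.70, 92.29),
    "MPG-Net": (95.32, 93.16, 89.09, 94.23),
}
# Rows of the mean table, for comparison.
MEAN_TABLE: Dict[str, Row] = {
    "FCN8S": (66.91, 73.30, 54.39, 69.94), "SegNet": (87.95, 90.26, 80.19, 89.09),
    "U-Net": (91.61, 89.60, 82.76, 90.56), "DeepLabV3": (92.48, 91.70, 85.32, 92.07),
    "MPG-Net": (94.95, 92.95, 88.57, 93.94),
}


def mean_rows(a: Dict[str, Row], b: Dict[str, Row]) -> Dict[str, Row]:
    """Element-wise average of two metric tables keyed by model name."""
    return {m: tuple((x + y) / 2 for x, y in zip(a[m], b[m])) for m in a}


for model, row in mean_rows(FIRST, SECOND).items():
    worst = max(abs(x - y) for x, y in zip(row, MEAN_TABLE[model]))
    print(f"{model}: max deviation from mean table = {worst:.3f} points")
```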

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Zhang, L.; Chen, B.; Zuo, J.; Hu, Y. MPG-Net: A Semantic Segmentation Model for Extracting Aquaculture Ponds in Coastal Areas from Sentinel-2 MSI and Planet SuperDove Images. Remote Sens. 2024, 16, 3760. https://doi.org/10.3390/rs16203760
