Enhanced Precipitation Nowcasting via Temporal Correlation Attention Mechanism and Innovative Jump Connection Strategy

Abstract

1. Introduction

2. Related Work

2.1. PredRNN Network

2.2. Scaled Dot-Product Attention (SDPA)
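
For reference, scaled dot-product attention in its standard form computes $\mathrm{softmax}(QK^{\top}/\sqrt{d_k})\,V$, where $Q$, $K$, and $V$ are the query, key, and value matrices and $d_k$ is the key dimension. The NumPy sketch below illustrates only that generic operation. The function name `scaled_dot_product_attention` and the array shapes are assumptions made for illustration, and the sketch is not the attention module used in this paper.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v):
    """Generic SDPA sketch: softmax(q k^T / sqrt(d_k)) v.

    q: (..., n_q, d_k), k: (..., n_k, d_k), v: (..., n_k, d_v).
    Returns the attended values and the attention weights.
    """
    q = np.asarray(q, dtype=np.float64)
    k = np.asarray(k, dtype=np.float64)
    v = np.asarray(v, dtype=np.float64)

    d_k = q.shape[-1]
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    # Subtract the row maximum for a numerically stable softmax.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Each row of the returned weights sums to 1, so every output row is a convex combination of the rows of `v`.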

2.3. Jump Connection Strategy

3. Materials and Methods

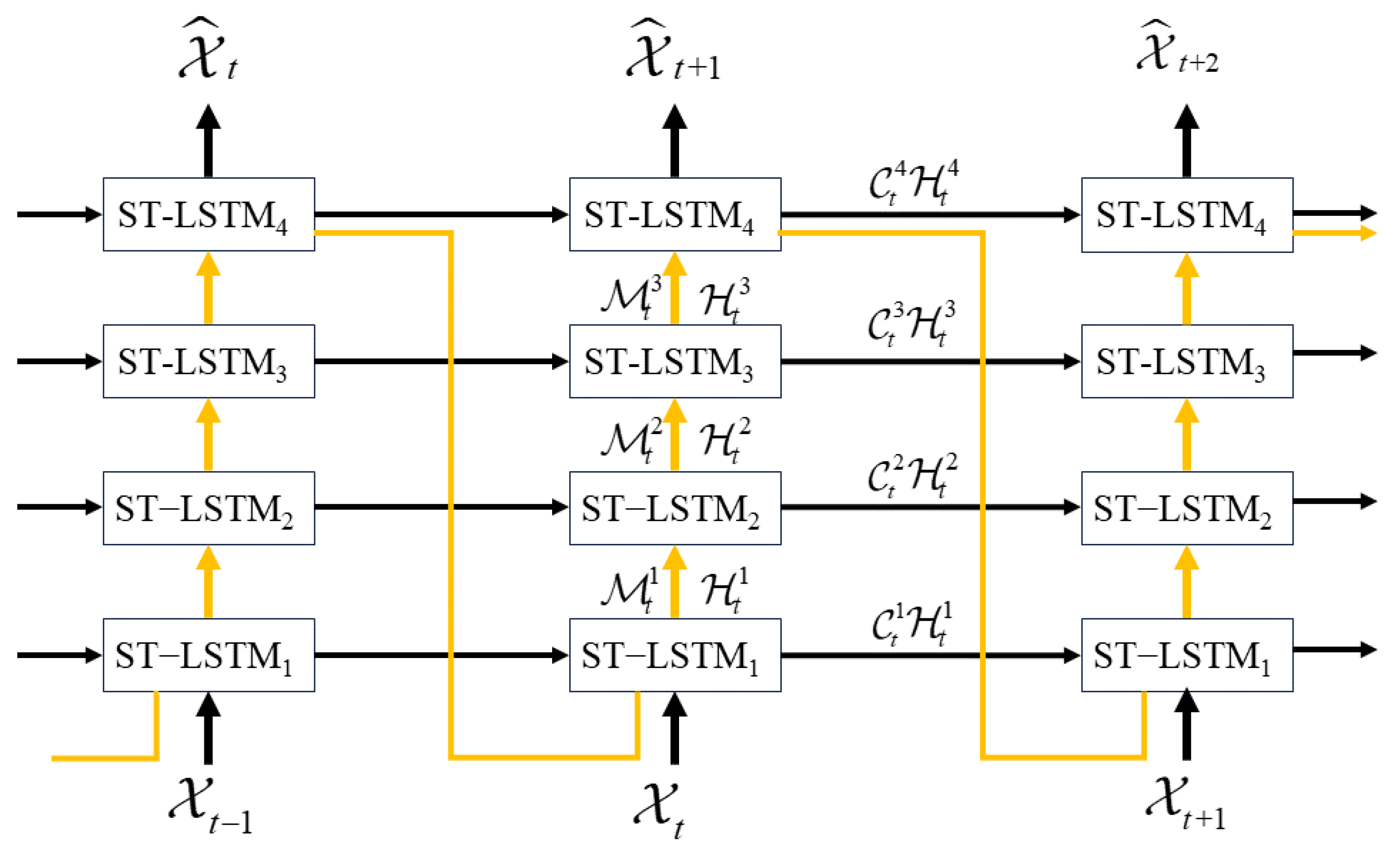

3.1. Application of Jump Connection Strategy in the PredRNN Model

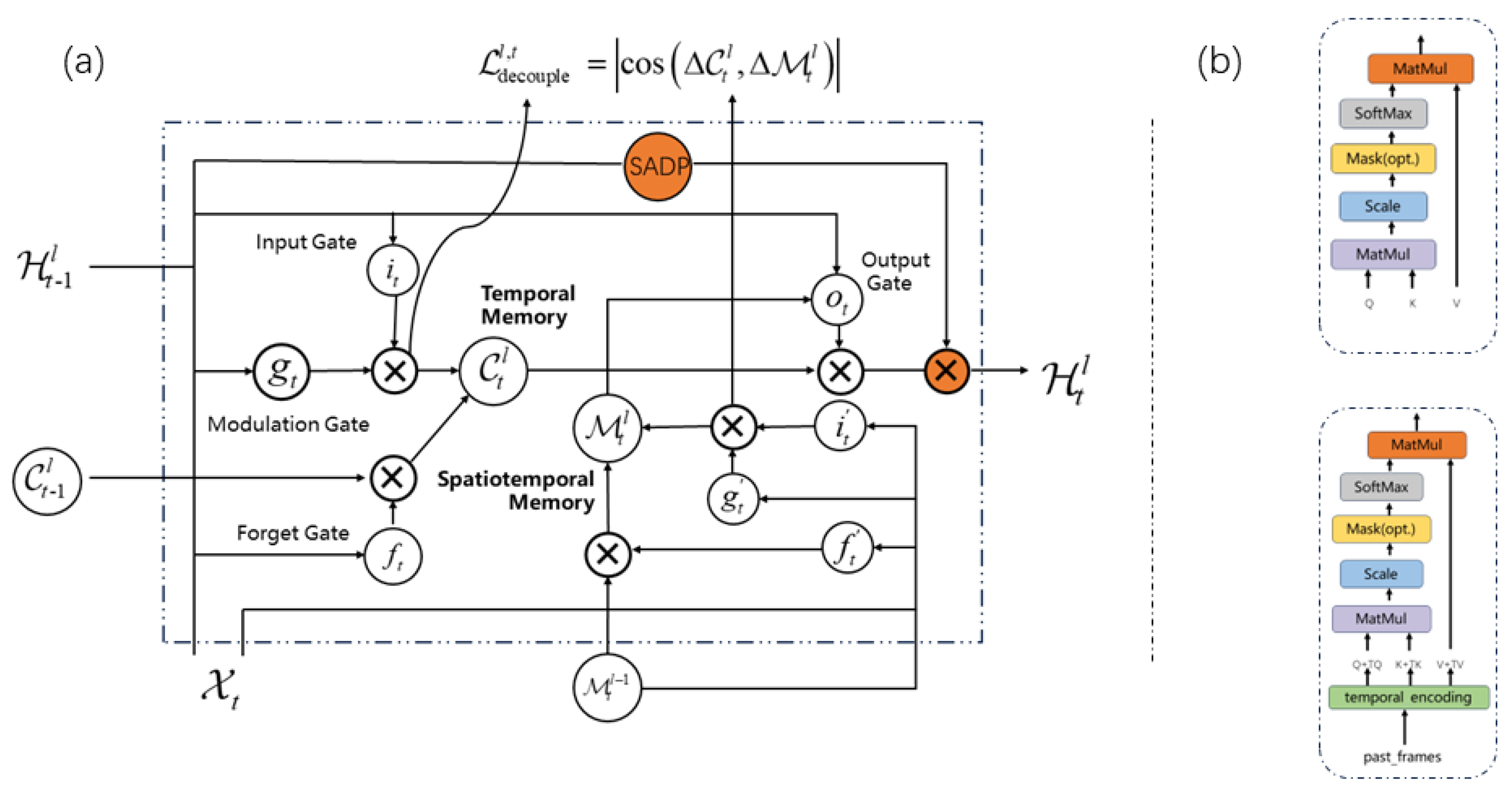

3.2. Temporal Correlation Attention

4. Experiments

4.1. Dataset

4.2. Evaluation Methodology

- Mean Squared Error (MSE): measures the average of the squared differences between observed and predicted values. Its formula is $$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2,$$ where $n$ is the total number of pixels, and $y_i$ and $\hat{y}_i$ are the values of the $i$th pixel in the observation and the prediction, respectively. A lower value indicates a smaller overall prediction error.

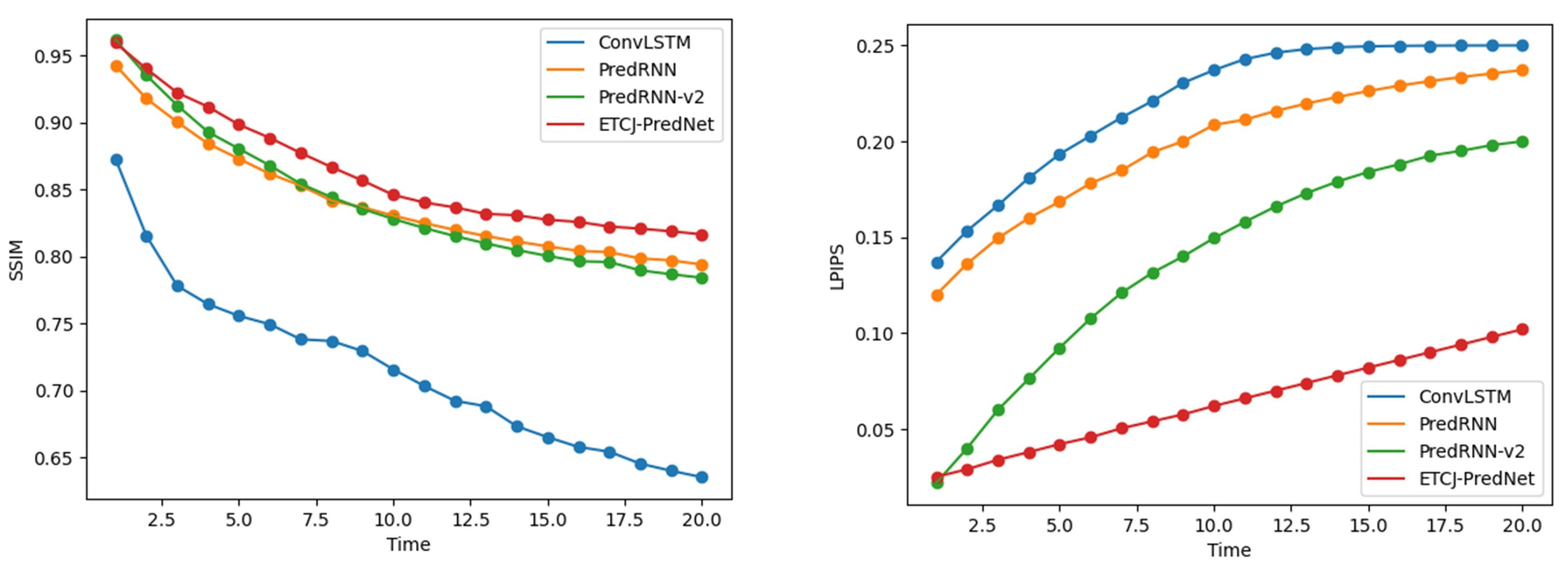

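- Structural Similarity Index Measure (SSIM): evaluates errors by comparing the structural similarity between predicted and observed results. In its standard form, the formula is $$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},$$ where $\mu_x$, $\mu_y$ are the mean values of images $x$, $y$, $\sigma_x^2$, $\sigma_y^2$ are their variances, $\sigma_{xy}$ is their covariance, and $C_1$, $C_2$ are the small constants that stabilize the division in the standard definition. The value of SSIM ranges from −1 to 1, with values closer to 1 indicating greater similarity between the two images.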

- Peak Signal-to-Noise Ratio (PSNR): PSNR is a widely used metric for assessing image quality. It describes the ratio between the maximum possible power of a signal and the power of the corrupting noise that degrades it. The formula for PSNR is $$\mathrm{PSNR} = 10\log_{10}\!\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$ where $\mathrm{MAX}_I$ is the maximum possible pixel value of the image. A higher value indicates better image quality.

- Learned Perceptual Image Patch Similarity (LPIPS): LPIPS assesses the perceptual similarity between the predicted and the actual images. It compares patches of the two images in the feature space of pretrained deep networks, so it is computed from network activations and has no simple closed-form expression. A lower value indicates greater perceptual similarity.

- Critical Success Index (CSI): CSI measures the proportion of correct predictions, excluding the correct negatives. It is calculated using the formula $$\mathrm{CSI} = \frac{TP}{TP + FP + FN}.$$ Here, $TP$ denotes true positives, $FP$ is false positives, and $FN$ represents false negatives.

- Heidke Skill Score (HSS): HSS assesses the accuracy of predictions beyond what is expected by chance. It is expressed as $$\mathrm{HSS} = \frac{2\,(TP \cdot TN - FN \cdot FP)}{(TP + FN)(FN + TN) + (TP + FP)(FP + TN)},$$ where $TN$ stands for true negatives.

- Probability of Detection (POD): POD focuses on the accuracy of detecting positive events and is defined as $$\mathrm{POD} = \frac{TP}{TP + FN}.$$ This metric emphasizes the model’s sensitivity to detecting events correctly.

- False Alarm Ratio (FAR): FAR indicates the proportion of false positives out of all positive forecasts and is calculated as $$\mathrm{FAR} = \frac{FP}{TP + FP}.$$ A lower FAR value is preferable as it indicates fewer false alarms, thus enhancing the reliability of the model’s predictions.
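
The pixel-wise and contingency-table metrics listed above can be computed along the following lines. This is a minimal NumPy sketch rather than the evaluation code used in the experiments. The function names `mse_psnr` and `categorical_scores`, the default `max_value=255.0`, the convention that an event is a value greater than or equal to `threshold`, and the choice to return NaN when a denominator is zero are all assumptions made for illustration.

```python
import numpy as np


def mse_psnr(obs, pred, max_value=255.0):
    """Return (MSE, PSNR in dB) for two arrays of the same shape."""
    obs = np.asarray(obs, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)
    mse = float(np.mean((obs - pred) ** 2))
    # PSNR is unbounded when the two fields are identical.
    if mse == 0.0:
        return mse, float("inf")
    return mse, float(10.0 * np.log10(max_value ** 2 / mse))


def categorical_scores(obs, pred, threshold):
    """Return CSI, HSS, POD and FAR after binarizing both fields.

    Assumption: a pixel counts as an event when its value >= threshold.
    """
    obs_event = np.asarray(obs) >= threshold
    pred_event = np.asarray(pred) >= threshold

    tp = float(np.sum(obs_event & pred_event))    # true positives (hits)
    fp = float(np.sum(~obs_event & pred_event))   # false positives
    fn = float(np.sum(obs_event & ~pred_event))   # false negatives (misses)
    tn = float(np.sum(~obs_event & ~pred_event))  # true negatives

    def ratio(num, den):
        # Assumed convention: undefined scores are reported as NaN.
        return num / den if den > 0 else float("nan")

    return {
        "CSI": ratio(tp, tp + fp + fn),
        "HSS": ratio(2.0 * (tp * tn - fn * fp),
                     (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)),
        "POD": ratio(tp, tp + fn),
        "FAR": ratio(fp, tp + fp),
    }


# Toy example with made-up values (not data from the paper).
obs = np.array([[0, 10, 30], [40, 5, 35]])
pred = np.array([[0, 25, 30], [10, 5, 45]])
print(mse_psnr(obs, pred))
print(categorical_scores(obs, pred, threshold=20))
```

With the toy arrays and a threshold of 20, the contingency counts are two hits, one false alarm, one miss, and two correct negatives, so CSI is 0.5, POD is 2/3, and FAR and HSS are both 1/3.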

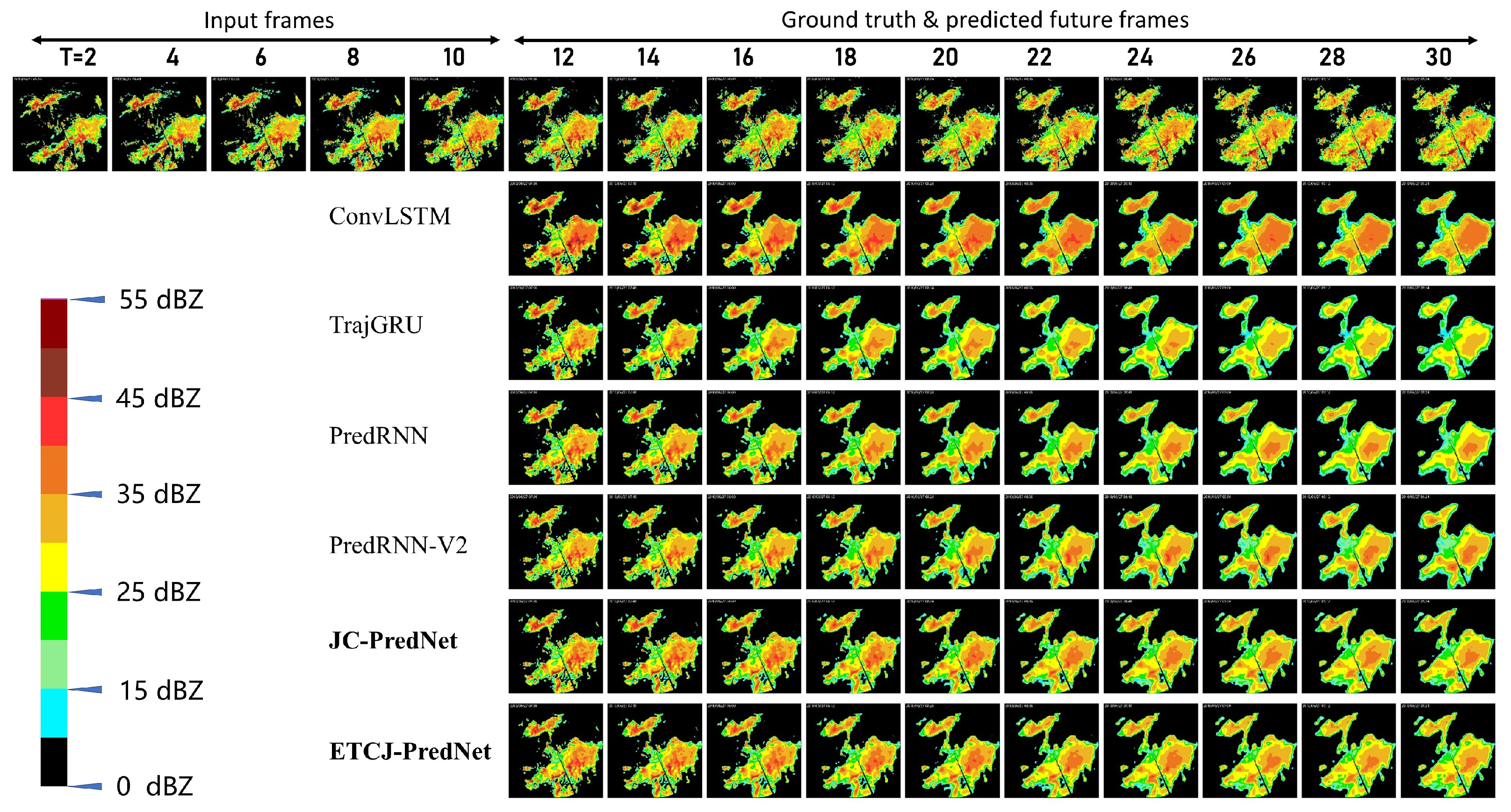

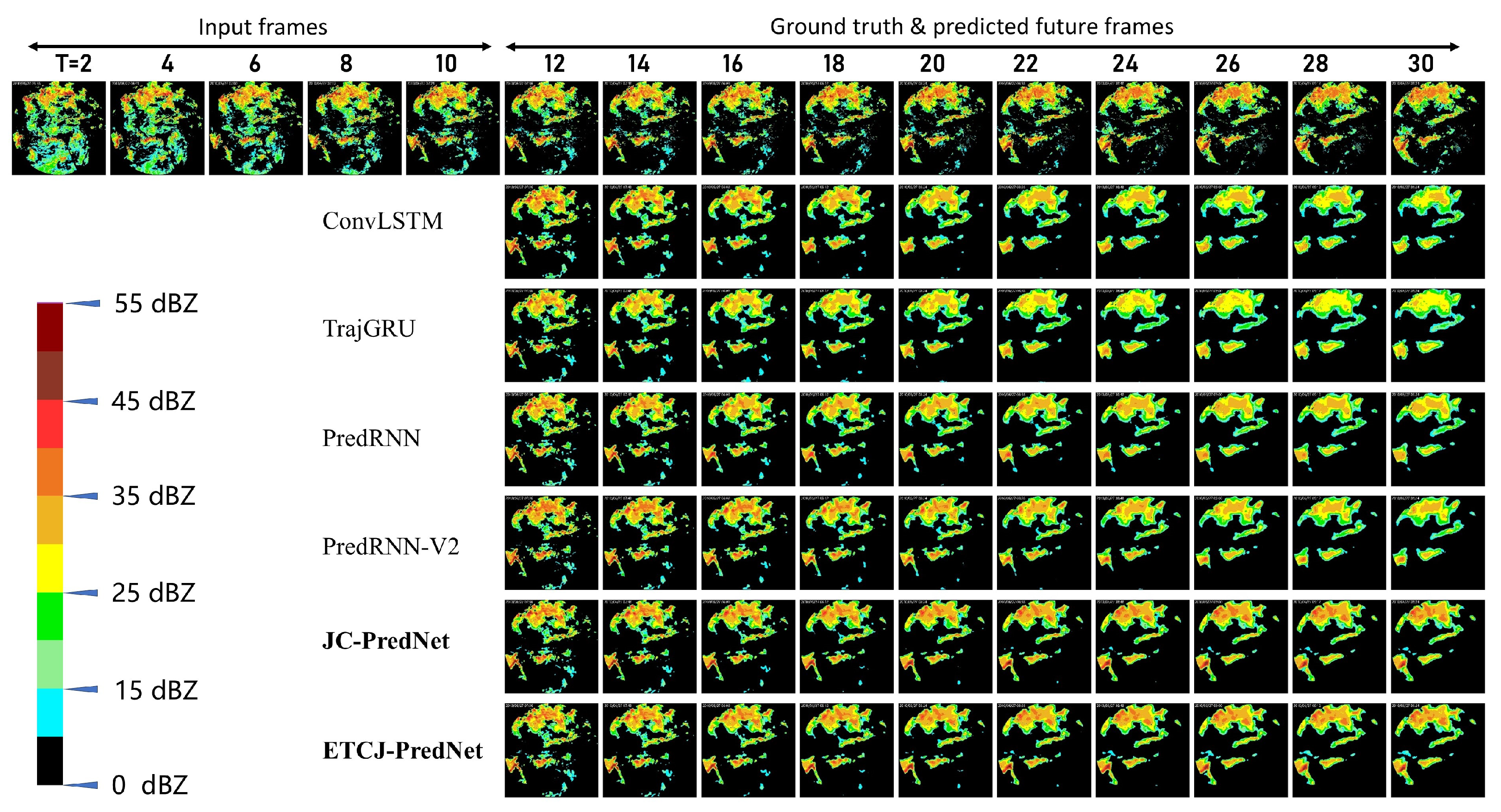

4.3. Result Comparison and Analysis

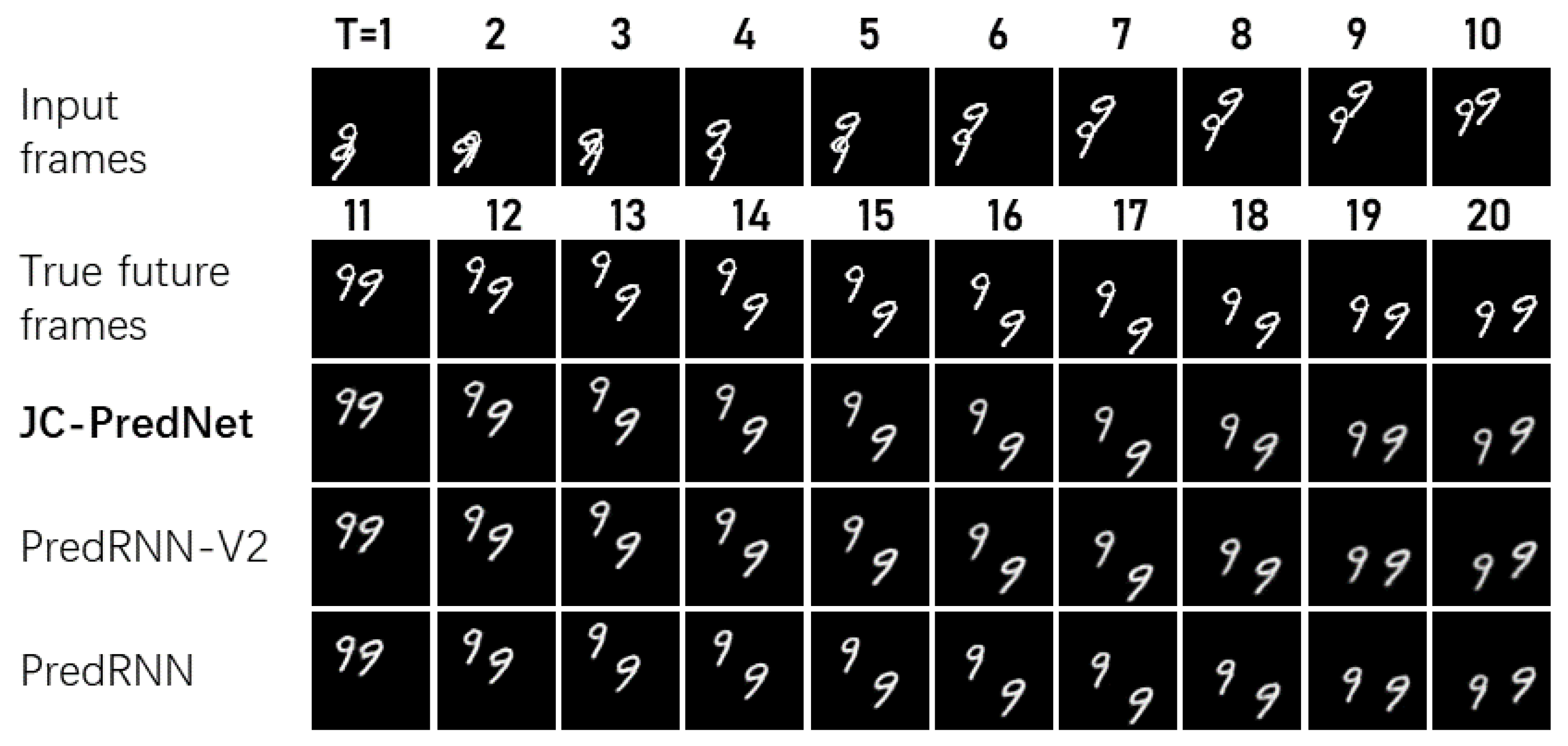

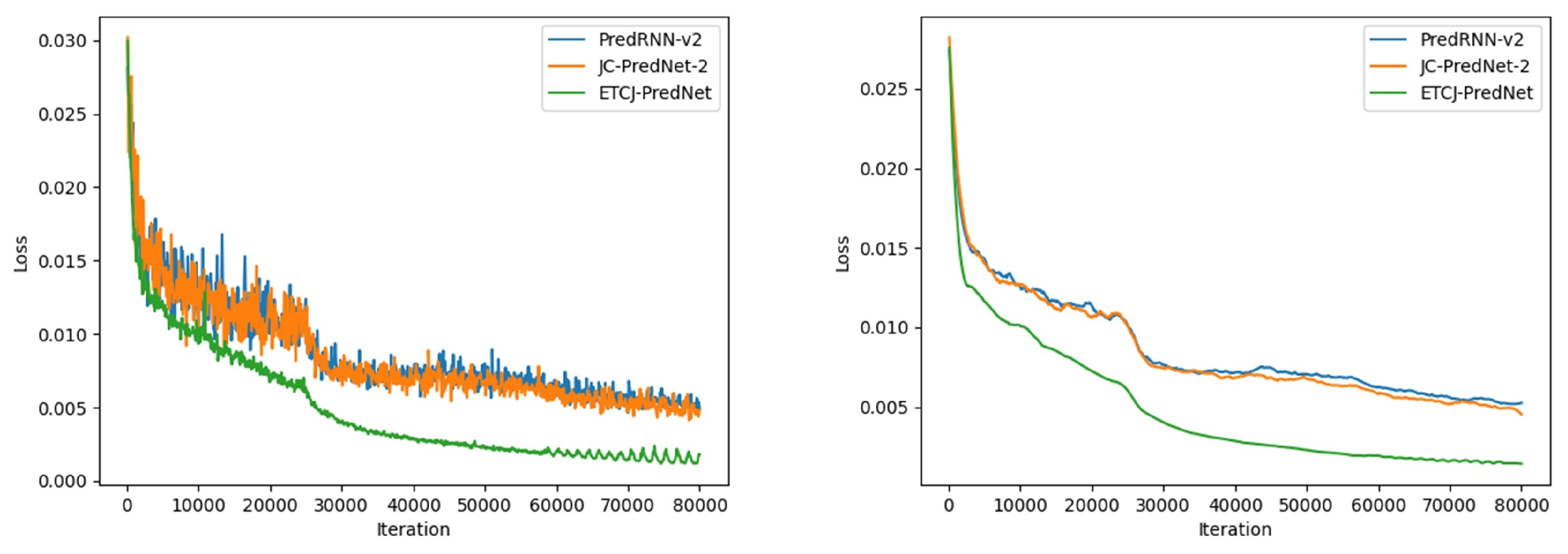

4.3.1. Comparative Experiment

4.3.2. Ablation Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Charney, J.G. Progress in dynamic meteorology. Bull. Am. Meteorol. Soc. 1950, 31, 231–236.

2. Tolstykh, M.A. Vorticity-divergence semi-Lagrangian shallow-water model of the sphere based on compact finite differences. J. Comput. Phys. 2002, 179, 180–200.

3. Turner, B.J.; Zawadzki, I.; Germann, U. Predictability of Precipitation from Continental Radar Images. Part III: Operational Nowcasting Implementation (MAPLE). J. Appl. Meteorol. 2004, 43, 231–248.

4. Li, L.; Schmid, W.; Joss, J. Nowcasting of motion and growth of precipitation with radar over a complex orography. J. Appl. Meteorol. 1995, 34, 1286–1300.

5. Rinehart, R.E.; Garvey, E.T. Three-dimensional storm motion detection by conventional weather radar. Nature 1978, 273, 287–289.

6. Li, Y.J.; Han, L. Storm tracking algorithm development based on the three-dimensional radar image data. J. Comput. Appl. 2008, 28, 1078–1080.

7. Liang, Q.Q.; Feng, Y.R.; Deng, W.J. A composite approach of radar echo extrapolation based on TREC vectors in combination with model-predicted winds. Adv. Atmos. Sci. 2010, 27, 1119–1130.

8. Fletcher, T.D.; Andrieu, H.; Hamel, P. Understanding, management and modelling of urban hydrology and its consequences for receiving waters: A state of the art. Adv. Water Resour. 2013, 51, 261–279.

9. Zhang, Y.P.; Cheng, M.H.; Xia, W.M. Estimation of weather radar echo motion field and its application to precipitation nowcasting. Acta Meteor Sin. 2006, 64, 631–646.

10. Medsker, L.R.; Jain, L.C. Recurrent neural networks. Des. Appl. 2001, 5, 2.

11. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28.

12. Zeyer, A.; Doetsch, P.; Voigtlaender, P.; Schlüter, R.; Ney, H. A comprehensive study of deep bidirectional LSTM RNNs for acoustic modeling in speech recognition. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

13. Sundermeyer, M.; Schlüter, R.; Ney, H. LSTM neural networks for language modeling. In Proceedings of the Thirteenth Annual Conference of the International Speech Communication Association, Portland, OR, USA, 9–13 September 2012.

14. Ma, S.; Han, Y. Describing images by feeding LSTM with structural words. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016.

15. Liu, Y.; Zheng, H.; Feng, X.; Chen, Z. Short-term traffic flow prediction with Conv-LSTM. In Proceedings of the 2017 9th International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 11–13 October 2017.

16. Zheng, H.; Lin, F.; Feng, X.; Chen, Y. A hybrid deep learning model with attention-based conv-LSTM networks for short-term traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2020, 22, 6910–6920.

17. Shi, X.; Gao, Z.; Lausen, L.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Deep learning for precipitation nowcasting: A benchmark and a new model. Adv. Neural Inf. Process. Syst. 2017, 30.

18. Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent neural networks for predictive learning using spatiotemporal LSTMs. Adv. Neural Inf. Process. Syst. 2017, 30.

19. Wang, Y.; Gao, Z.; Long, M. PredRNN++: Towards a Resolution of the Deep-in-Time Dilemma in Spatiotemporal Predictive Learning. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

20. Wang, Y.; Wu, H.; Zhang, J.; Gao, Z.; Wang, J.; Yu, P.S.; Long, M. PredRNN: A recurrent neural network for spatiotemporal predictive learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2208–2225.

21. Ravuri, S.; Lenc, K.; Willson, M.; Kangin, D.; Lam, R.; Mirowski, P.; Arribas, A.; Clancy, E.; Robinson, N.; Mohamed, S.; et al. Skilful precipitation nowcasting using deep generative models of radar. Nature 2021, 597, 672–677.

22. Xue, W.; Zhou, T.; Wen, Q.; Gao, J.; Ding, B.; Jin, R. Make Transformer Great again for Time Series Forecasting: Channel Aligned Robust Dual Transformer. arXiv 2023, arXiv:2305.12095.

23. Zhang, Y.; Yan, J. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In Proceedings of the Eleventh International Conference on Learning Representations, Virtual, 25 April 2022.

24. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tao, D.; Xu, Y.; Xu, C.; Yang, Z.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

25. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

26. Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28.

27. Gao, Z.; Shi, X.; Wang, H.; Zhu, Y.; Wang, Y.B.; Li, M.; Yeung, D.Y. Earthformer: Exploring space-time transformers for earth system forecasting. Adv. Neural Inf. Process. Syst. 2022, 35, 25390–25403.

28. Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430.

29. Liang, Y.; Xia, Y.; Ke, S.; Wang, Y.; Wen, Q.; Zhang, J.; Zheng, Y.; Zimmermann, R. Airformer: Predicting nationwide air quality in China with transformers. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37.

30. Wang, L.; Zeng, L.; Li, J. AEC-GAN: Adversarial Error Correction GANs for Auto-regressive Long Time-series Generation. Proc. AAAI Conf. Artif. Intell. 2023, 37, 10140–10148.

31. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y.; Courville, A. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27.

32. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 214–223.

33. Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Smolley, S.P. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802.

34. Esteban, C.; Hyland, S.L.; Rätsch, G. Real-valued (medical) time series generation with recurrent conditional GANs. arXiv 2017, arXiv:1706.02633.

35. Yoon, J.; Jarrett, D.; Van der Schaar, M. Time-series generative adversarial networks. Adv. Neural Inf. Process. Syst. 2019, 32.

36. Liao, S.; Ni, H.; Sabate-Vidales, M.; Szpruch, L.; Wiese, M.; Xiao, B. Sig-Wasserstein GANs for conditional time series generation. Math. Financ. 2024, 34, 622–670.

37. Zhang, Y.; Long, M.; Chen, K.; Xing, L.; Jin, R.; Jordan, M.I.; Wang, J. Skilful nowcasting of extreme precipitation with NowcastNet. Nature 2023, 619, 526–532.

38. Xie, P.; Li, X.; Ji, X.; Chen, X.; Chen, Y.; Liu, J.; Ye, Y. An energy-based generative adversarial forecaster for radar echo map extrapolation. IEEE Geosci. Remote. Sens. Lett. 2020, 19, 3500505.

39. Jarrett, D.; Bica, I.; van der Schaar, M. Time-series generation by contrastive imitation. Adv. Neural Inf. Process. Syst. 2021, 34, 28968–28982.

40. Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate medium-range global weather forecasting with 3D neural networks. Nature 2023, 619, 533–538.

| Model | MSE ↓ | SSIM ↑ | LPIPS ↓ | FLOPs (G) |
|---|---|---|---|---|
| ConvLSTM [11] | 103.3 | 0.707 | 0.156 | 80.7 |
| TrajGRU [17] | 100.1 | 0.762 | 0.110 | - |
| PredRNN [18] | 62.9 | 0.878 | 0.063 | - |
| PredRNN-V2 [20] | 51.4 | 0.890 | 0.066 | - |
| JC-PredNet | 48.7 | 0.895 | 0.060 | - |

| Model | CSI ↑ | HSS ↑ | POD ↑ | FAR ↓ |
|---|---|---|---|---|
| ConvLSTM | 0.594 | 0.534 | 0.785 | 0.290 |
| TrajGRU | 0.603 | 0.547 | 0.790 | 0.281 |
| PredRNN | 0.651 | 0.608 | 0.830 | 0.249 |
| PredRNN-V2 | 0.691 | 0.658 | 0.850 | 0.213 |
| ETCJ-PredNet (ours) | 0.725 | 0.699 | 0.870 | 0.187 |

| Model | CSI ↑ | HSS ↑ | POD ↑ | FAR ↓ |
|---|---|---|---|---|
| ConvLSTM | 0.431 | 0.321 | 0.601 | 0.396 |
| TrajGRU | 0.484 | 0.394 | 0.648 | 0.344 |
| PredRNN | 0.504 | 0.416 | 0.672 | 0.331 |
| PredRNN-V2 | 0.543 | 0.464 | 0.695 | 0.287 |
| ETCJ-PredNet (ours) | 0.577 | 0.506 | 0.717 | 0.252 |

| Model | CSI ↑ | HSS ↑ | POD ↑ | FAR ↓ |
|---|---|---|---|---|
| ConvLSTM | 0.211 | 0.255 | 0.373 | 0.672 |
| TrajGRU | 0.234 | 0.264 | 0.394 | 0.634 |
| PredRNN | 0.248 | 0.279 | 0.416 | 0.618 |
| PredRNN-V2 | 0.271 | 0.296 | 0.441 | 0.587 |
| ETCJ-PredNet (ours) | 0.331 | 0.353 | 0.514 | 0.518 |

| Model | CSI ↑ | HSS ↑ | POD ↑ | FAR ↓ |
|---|---|---|---|---|
| w/o Jump Connection Strategy | 0.691 | 0.658 | 0.850 | 0.213 |
| w/ Jump Connection Strategy | 0.707 | 0.678 | 0.861 | 0.201 |

| Model | CSI ↑ | HSS ↑ | POD ↑ | FAR ↓ |
|---|---|---|---|---|
| w/o Temporal Correlation | 0.691 | 0.658 | 0.850 | 0.213 |
| w/ Temporal Correlation | 0.714 | 0.686 | 0.863 | 0.195 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, W.; Fu, D.; Zhang, C.; Chen, Y.; Liu, A.X.; An, J. Enhanced Precipitation Nowcasting via Temporal Correlation Attention Mechanism and Innovative Jump Connection Strategy. Remote Sens. 2024, 16, 3757. https://doi.org/10.3390/rs16203757
