A Multi-Scale Feature Fusion Based Lightweight Vehicle Target Detection Network on Aerial Optical Images

Abstract

1. Introduction

- Vehicle targets exhibit a dense, multi-angle distribution: Remote sensing images of vehicles present arbitrary angles, and their dense distribution often leads to overlapping bounding boxes, using horizontal box methods for vehicle detection.

- A large number of model Params will impair real-time detection: It has become routine to enhance the width and depth of a network to improve its precision; however, this also results in a higher number of Params, consumes greater computational resources and is not conducive to practical deployment.

- Large vehicles with diverse features and low detection accuracy: The actual remote sensing capture process requires the imaging of a large range of scenes, with large variations in the angle of capture and imaging height. Large vehicles are less common, making it challenging to identify general features by which to distinguish them.

- Significant imbalance in the distribution of vehicle types and numbers: In the vehicle dataset, small private cars are numerous, making up the majority. Large vehicles are fewer in number, leading to an unbalanced distribution of different vehicle types and resulting in lower accuracy and significant overfitting.

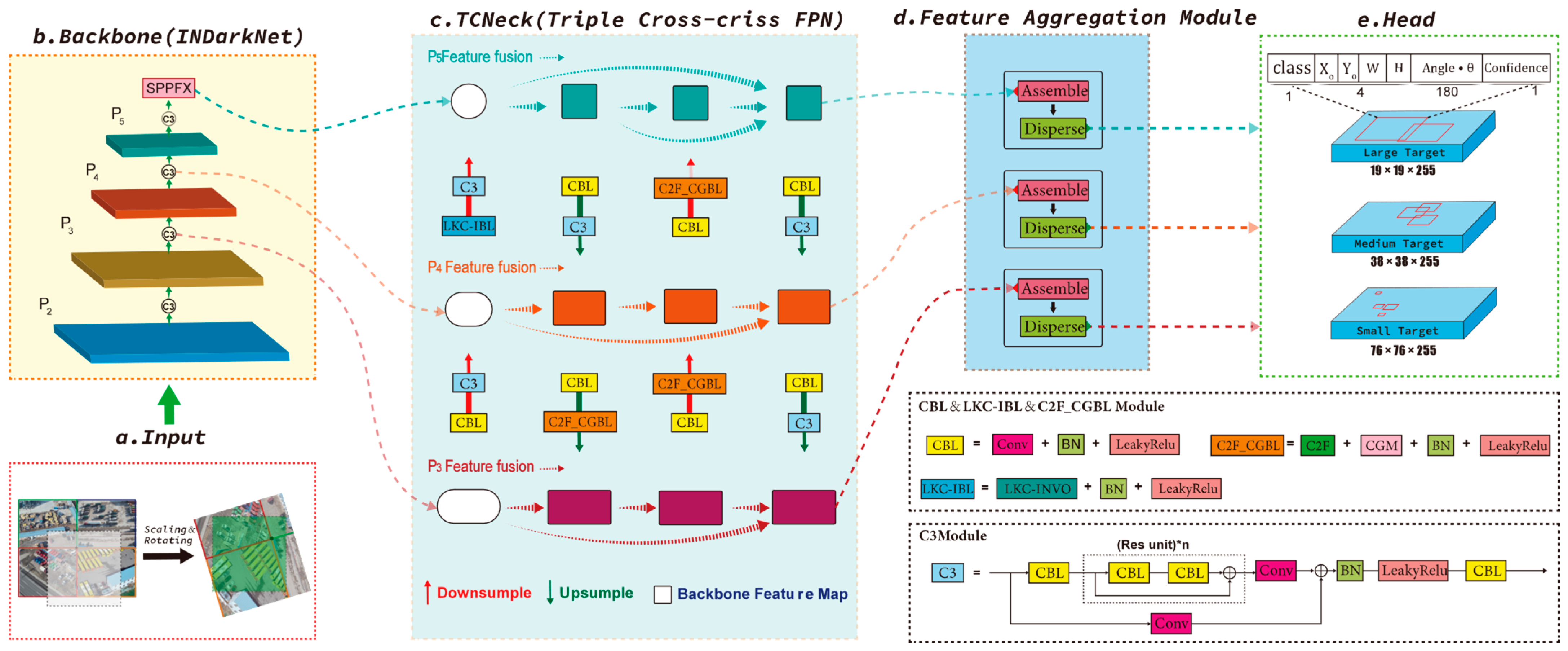

- The development of a new neck structure, TCFPN, which allows the ML-Det network to efficiently fuse three feature maps of different scales, thereby extracting more vehicle target features and improving the precision of detection.

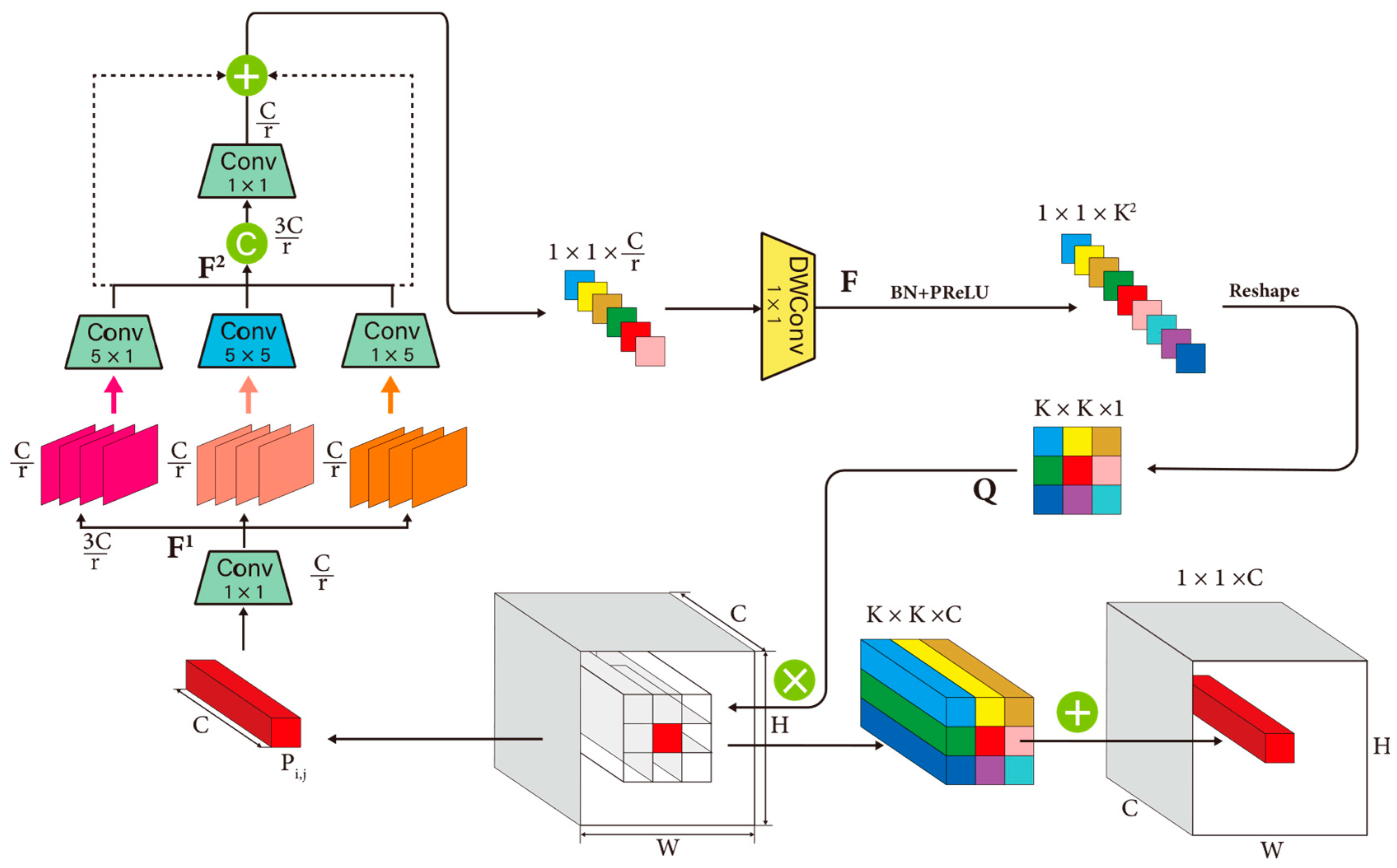

- The introduction of a large kernel coupling built-in involution called LKC–INVO, which employs a large kernel multi-branch coupling structure to expand the model’s receptive field, enhance its ability to extract high-level information, and maintain precision while being lightweight.

- The proposal of a C2F_ContextGuided module by adding a ContextGuided interaction module to the C2F module, so as to combine context information to improve the detection precision and to reduce model Params.

- The creation of an assemble–disperse attention module to aggregate more local feature information.

- The construction of a small dataset with a balanced distribution, MiVehicle, with balanced vehicle numbers to train a more efficient model.

2. Relevant Research

2.1. Remote Sensing Vehicle Target Detection

2.2. Attention Mechanism in Target Detection

3. Methods

3.1. Model

3.2. Innovation Module

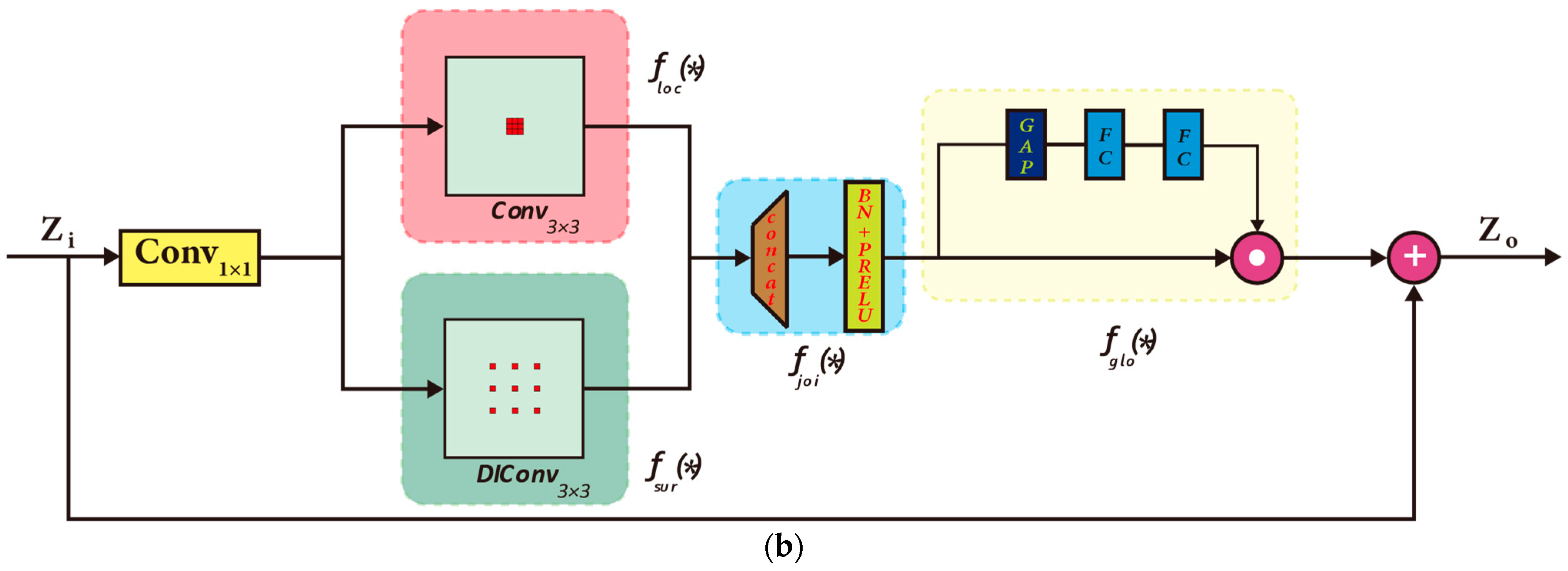

3.2.1. Large Kernel Coupling Built-In Involution (LKC–INVO Convolution)

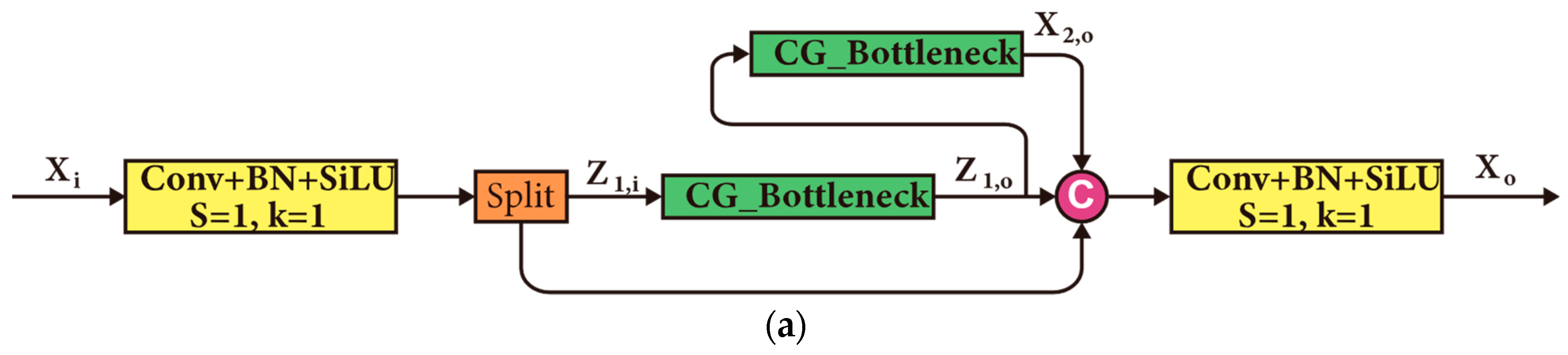

3.2.2. C2F_ContextGuided Context-Perception Module

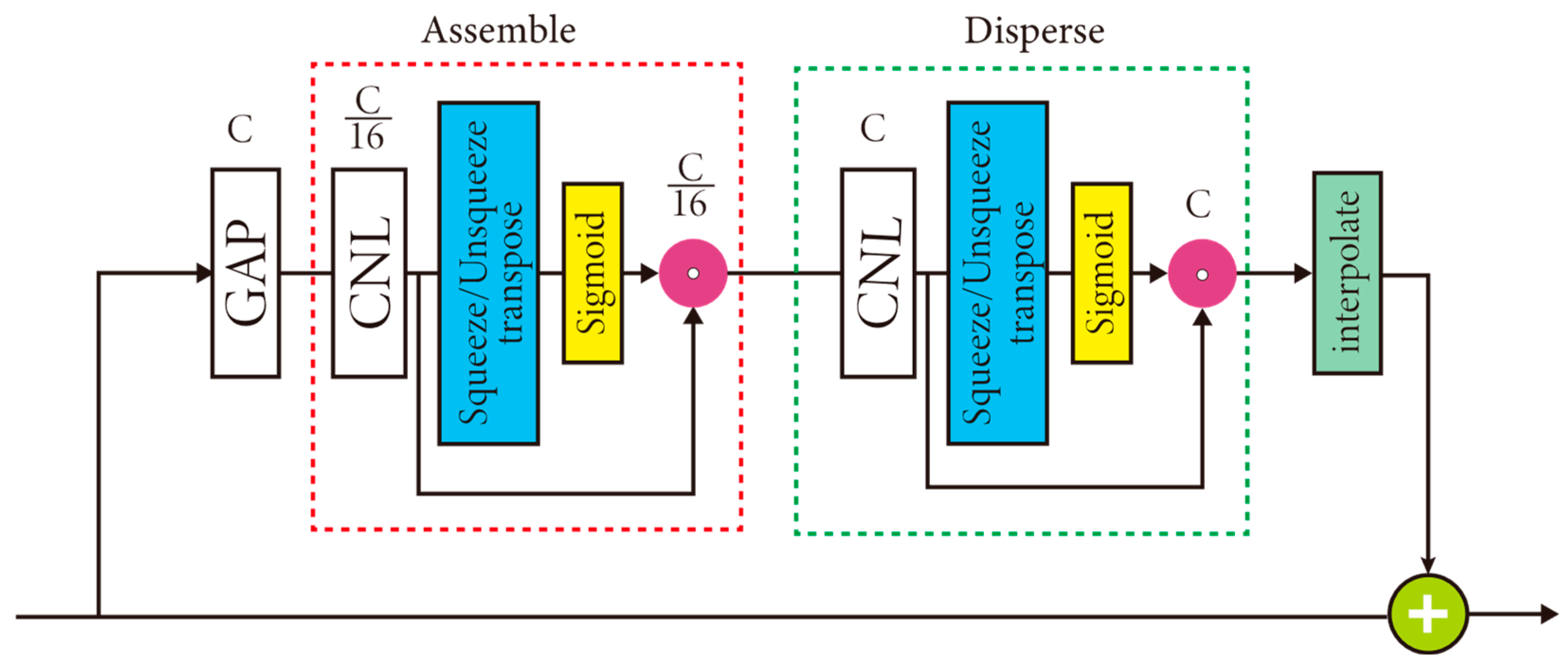

3.2.3. Assemble–Disperse Attention Module

4. Experiment

4.1. Param Setting

4.2. Evaluation Indicators

4.3. Dataset

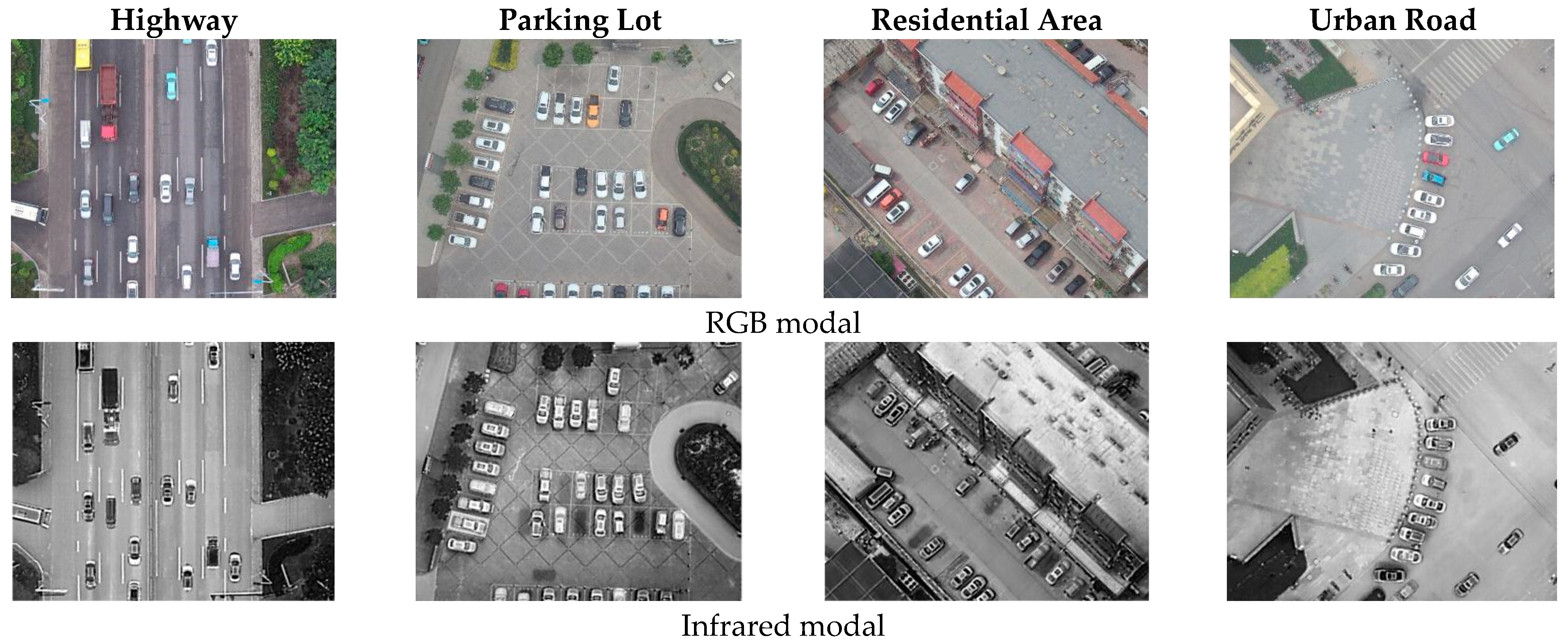

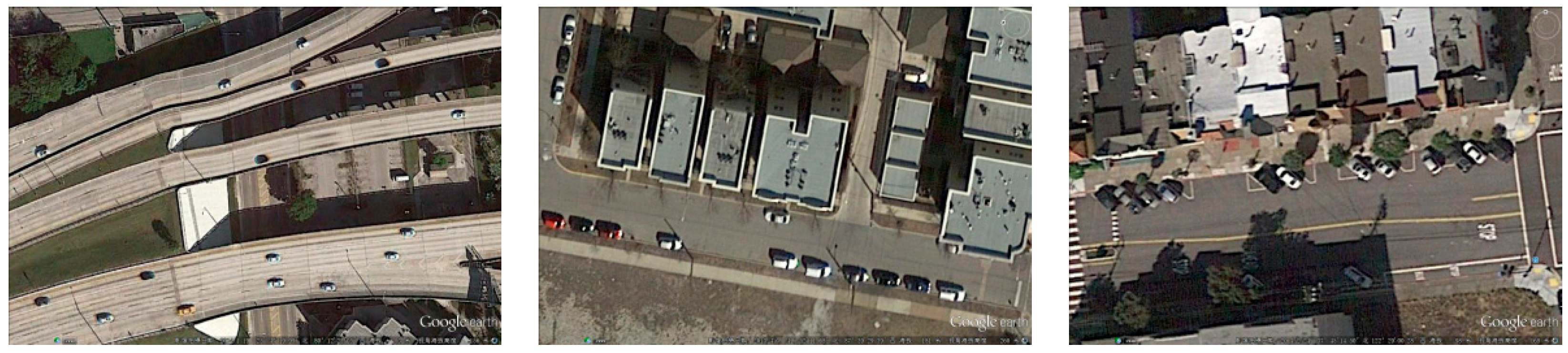

4.3.1. MiVehicle Dataset

4.3.2. UCAS_AOD Dataset

4.3.3. DOTA Dataset

4.4. Ablation Experiment

4.5. Results on Dataset

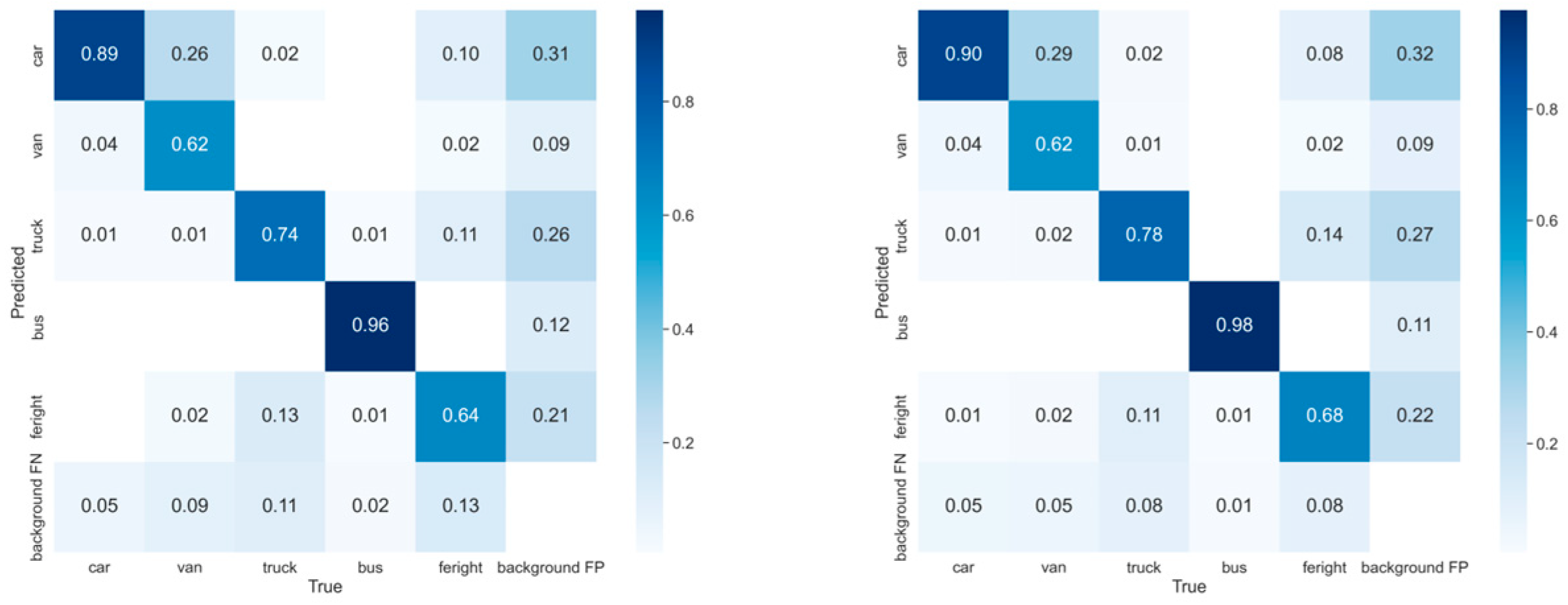

4.5.1. Results on MiVehicle Dataset

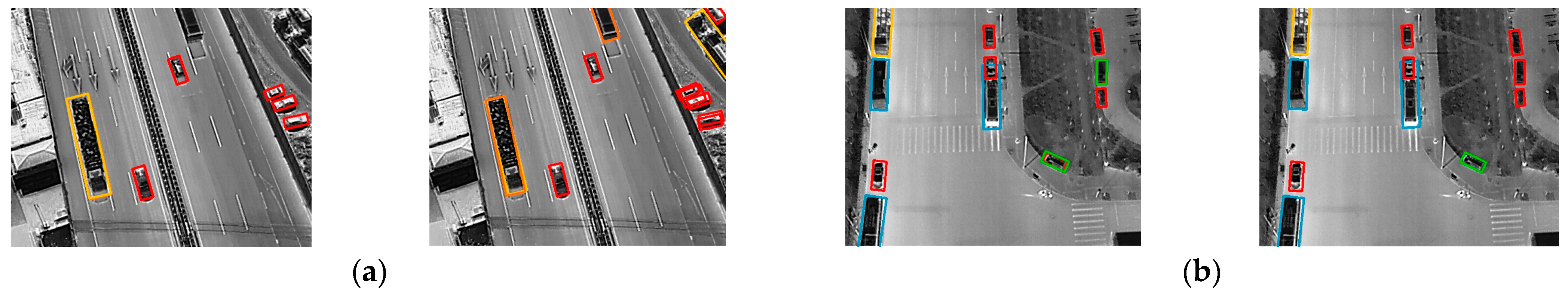

4.5.2. Results on UCAS_AOD Dataset

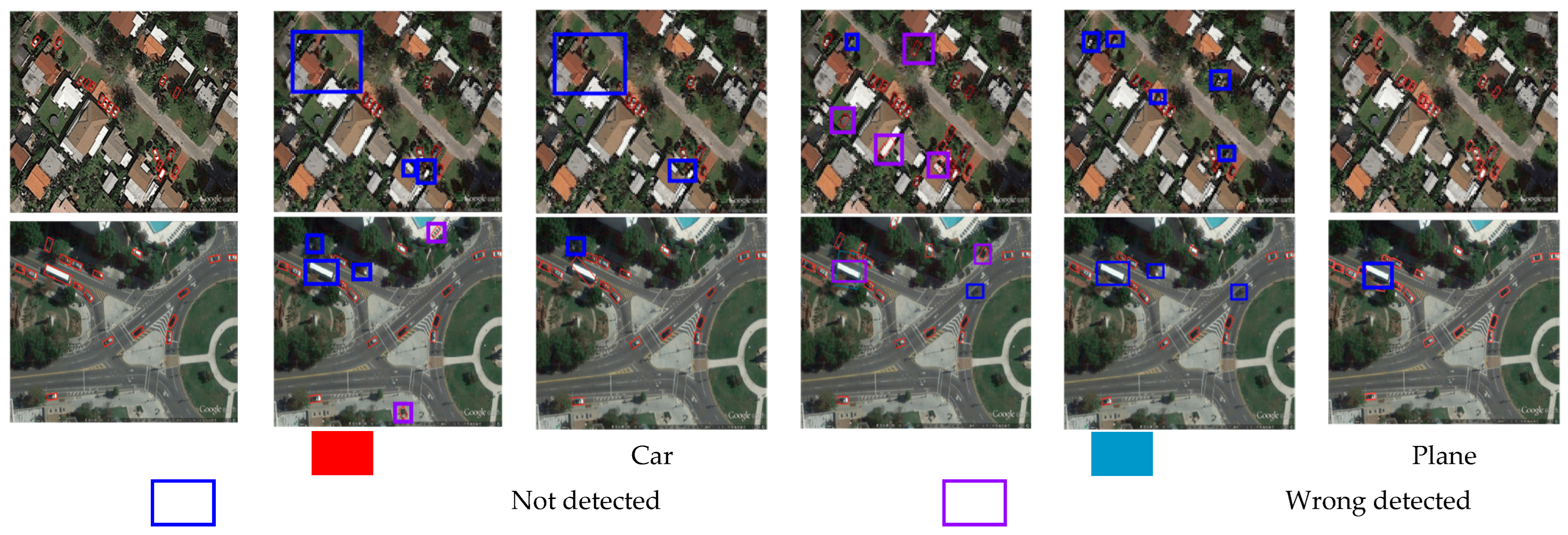

4.5.3. Results on DOTA Dataset

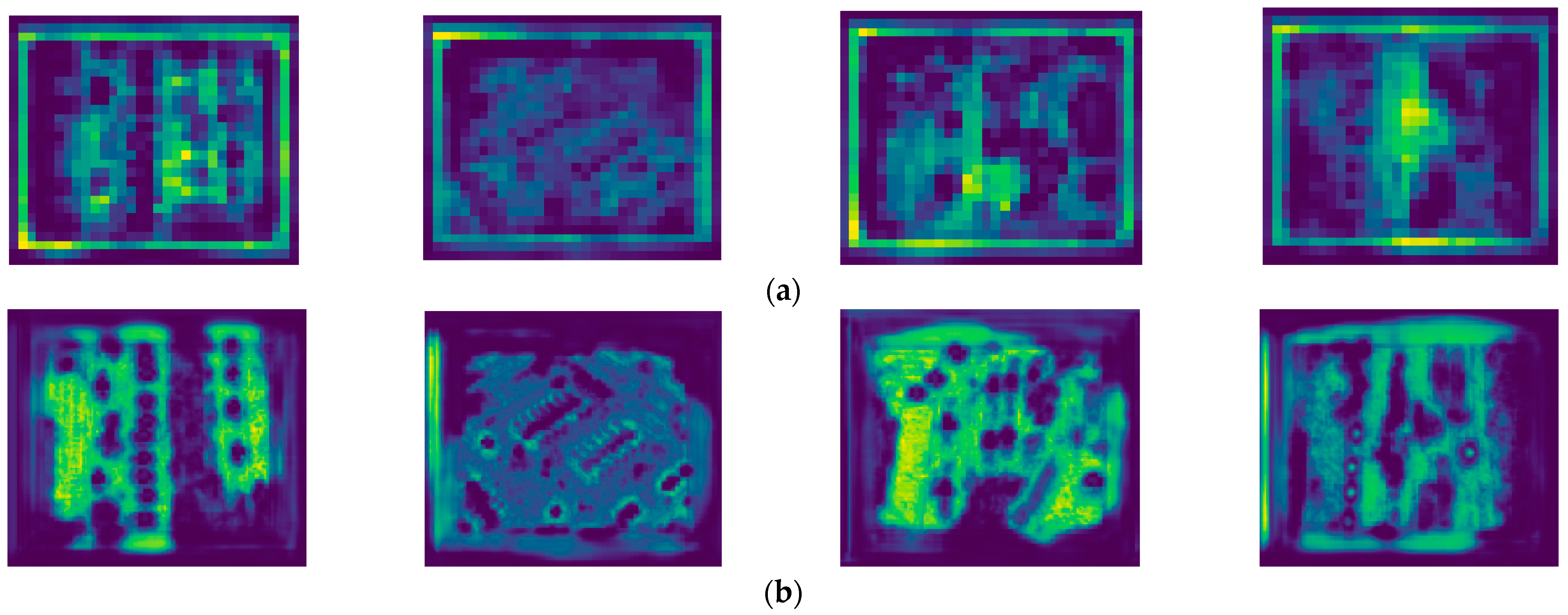

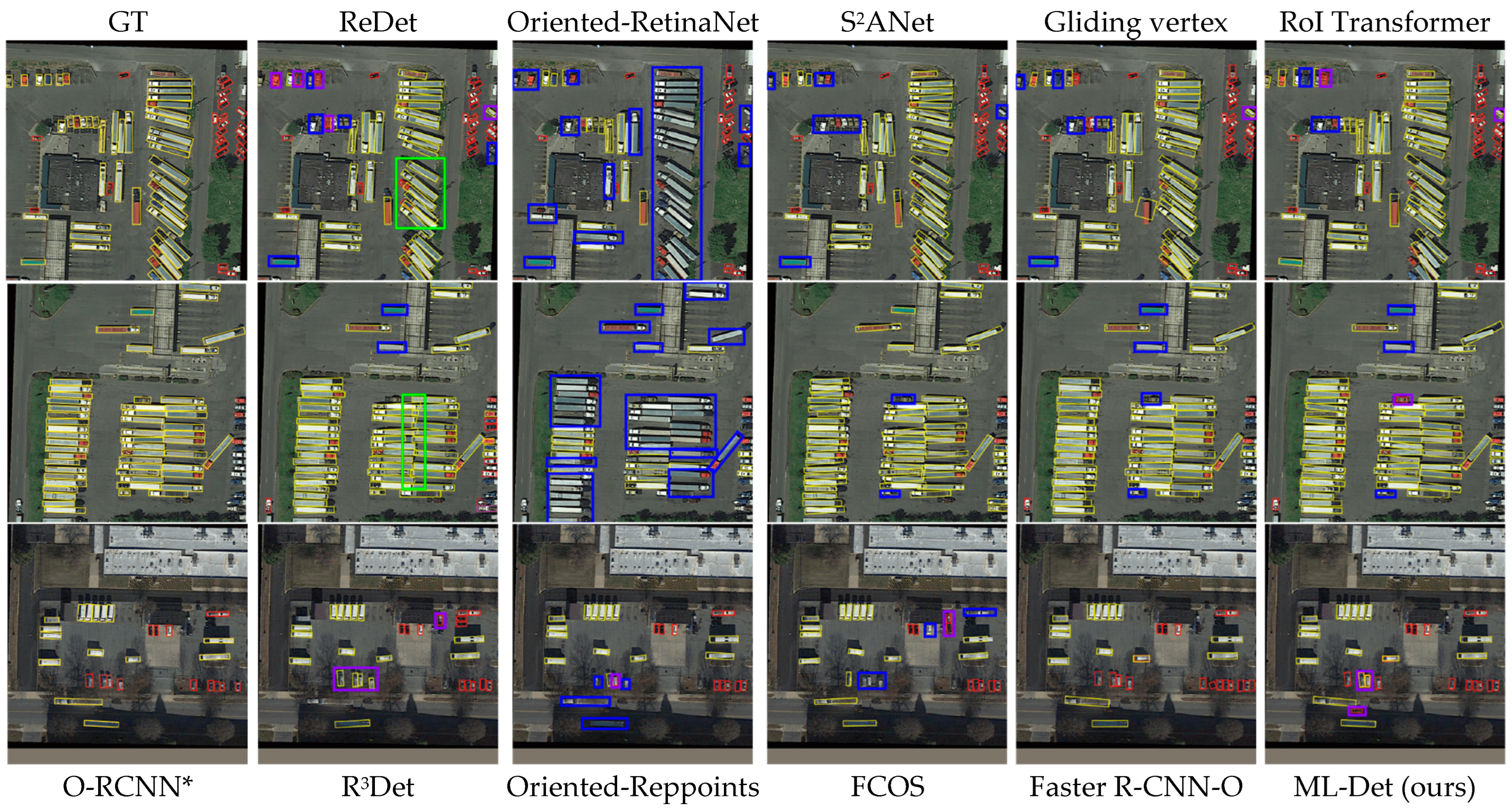

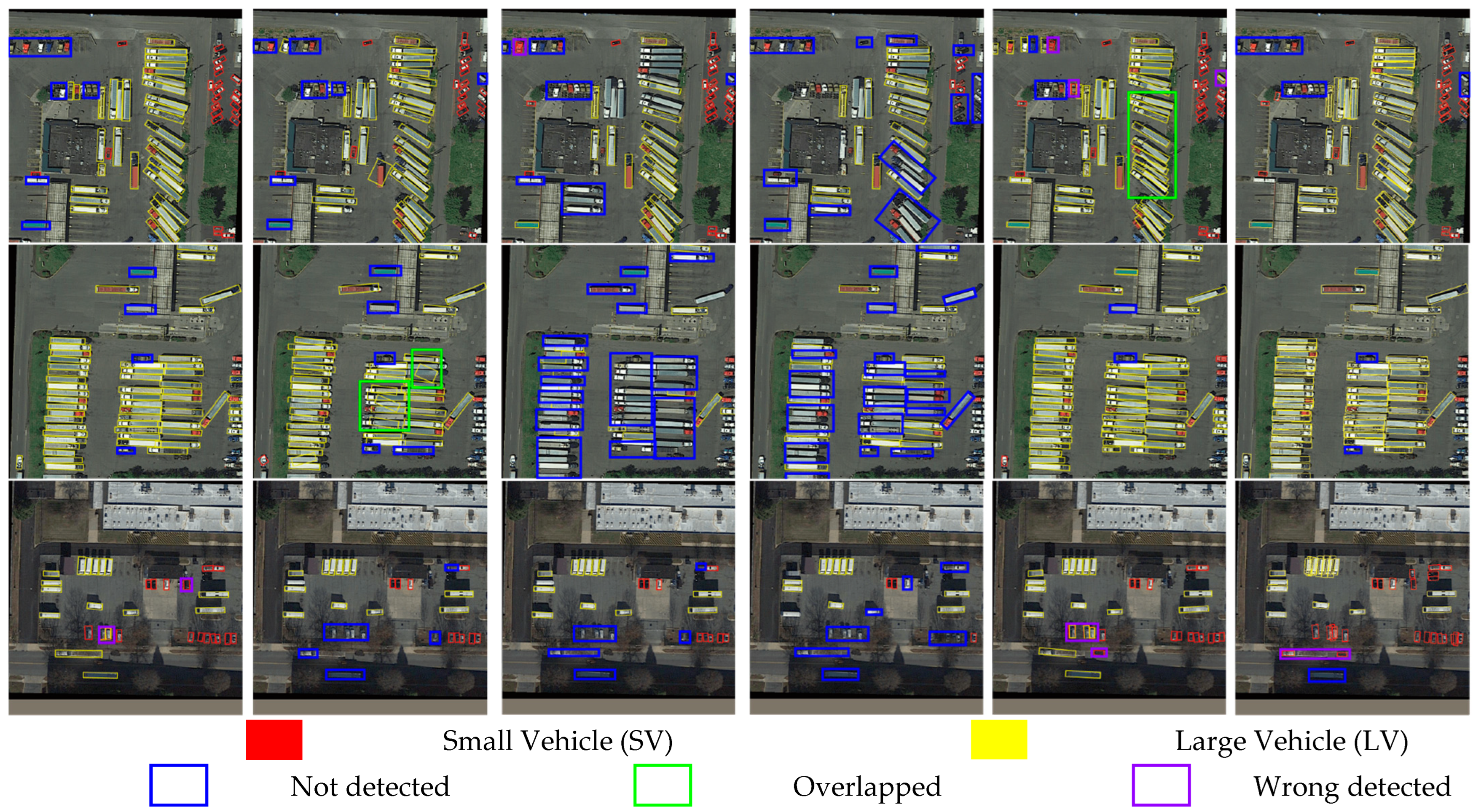

4.5.4. Detection Performance Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| From | Number | Type | Arguments | ||

|---|---|---|---|---|---|

| 0 | −1 | 1 | Convolution | [3, 32, 6, 2, 2] | |

| 1 | −1 | 1 | Convolution | [32, 64, 3, 2, 1] | |

| 2 | −1 | 1 | BottleneckCSP | [64, 64] | |

| 3 | −1 | 1 | Convolution | [64, 128, 3, 2, 1] | |

| 4 | −1 | 2 | BottleneckCSP | [128, 128] | |

| 5 | −1 | 1 | Convolution | [128, 256, 3, 2, 1] | |

| 6 | −1 | 3 | BottleneckCSP | [256, 256] | |

| 7 | −1 | 1 | LKC–INVO | [256, 256] | |

| 8 | −1 | 1 | BottleneckCSP | [256, 512, 1, 1, 0] | |

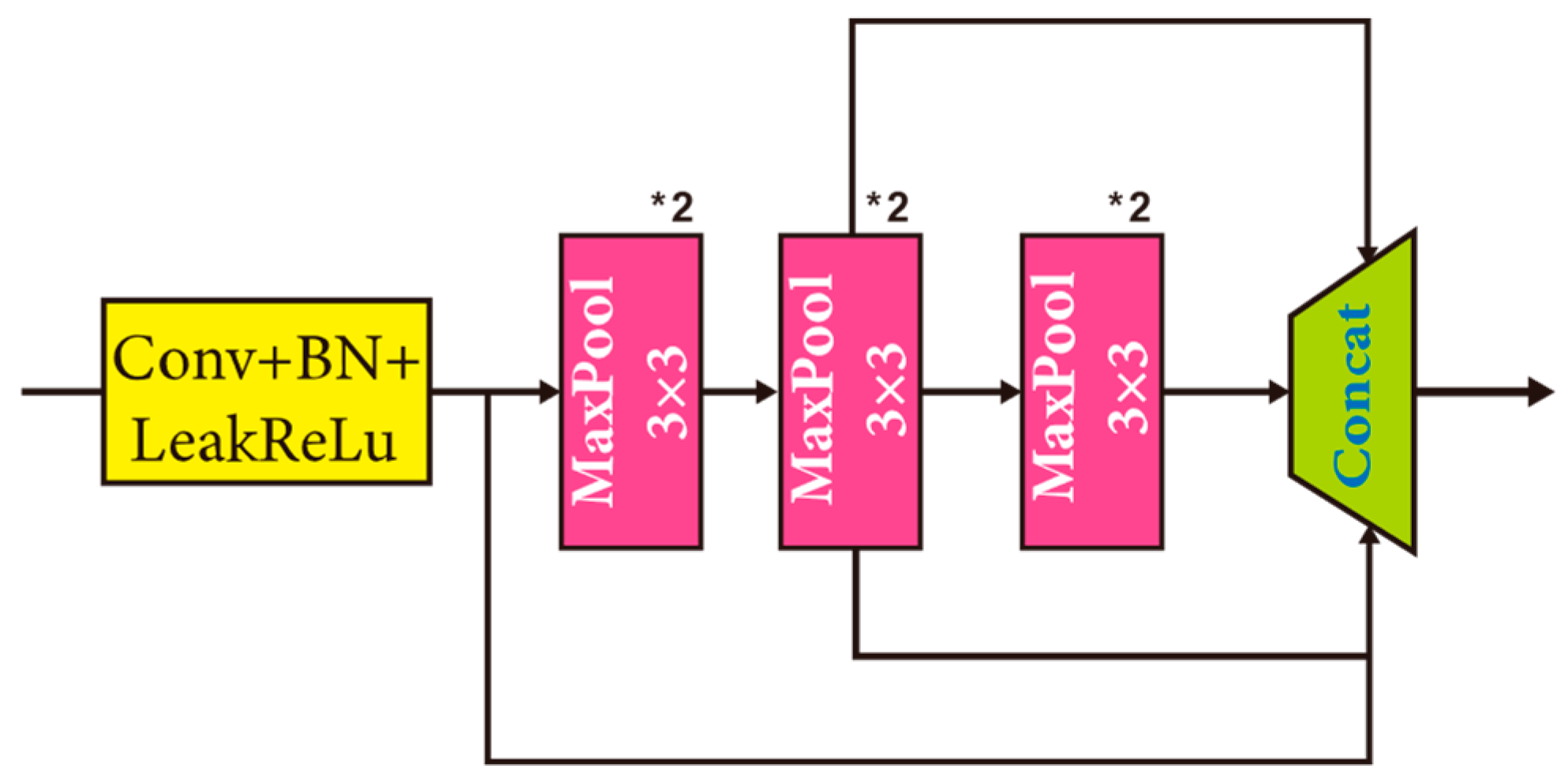

| 9 | −1 | 1 | SPPFX | [512, 512] | |

| 10 | −1 | 1 | Convolution | [512, 256, 1, 1, 0] | |

| 11 | −1 | 1 | Up-sampling | “nearest” | |

| 12 | [−1, 6] | 1 | Concat | - | |

| 13 | −1 | 1 | BottleneckCSP | [512, 256] | |

| 14 | −1 | 1 | Convolution | [256, 128, 1, 1, 0] | |

| 15 | −1 | 1 | Up-sampling | “nearest” | |

| 16 | [−1, 4] | 1 | Concat | - | |

| 17 | −1 | 1 | C2F_ContextGuided | [256, 128] | |

| 18 | −1 | 1 | Convolution | [128, 128, 3, 2, 1] | |

| 19 | [−1, 14] | 1 | Concat | - | |

| 20 | −1 | 1 | C2F_ContextGuided | [256, 256] | |

| 21 | −1 | 1 | Convolution | [256, 256, 5, 2, 1] | |

| 22 | [−1, 10, 8] | 1 | Concat | - | |

| 23 | −1 | 1 | C2F_ContextGuided | [1024, 512] | |

| 24 | −1 | 1 | Assemble–disperse | - | |

| 25 | −1 | 1 | Convolution | [512, 512, 5, 1, 2] | |

| 26 | −1 | 1 | Up-sampling | “nearest” | |

| 27 | [−1, 20, 14] | 1 | Concat | - | |

| 28 | −1 | 1 | BottleneckCSP | [896, 256] | |

| 29 | −1 | 1 | Assemble-Disperse | - | |

| 30 | −1 | 1 | BottleneckCSP | [256, 256, 3, 1, 1] | |

| 31 | −1 | 1 | Up-sampling | “nearest” | |

| 32 | [−1, 17, 4] | 1 | Concat | - | |

| 33 | −1 | 1 | BottleneckCSP | [384, 128] | |

| 34 | −1 | 1 | Assemble–disperse | - | |

| 35 | [34, 29, 24] | 1 | Detect | - | |

| 315 layers | 7.91 × 106 gradients | 20.1 GFLOPS | 7.91 × 106 Params | ||

References

- Tarolli, P.; Mudd, S.M. Remote Sensing of Geomorphology; Elsevier: Amsterdam, The Netherlands, 2020; Volume 23, ISBN 0-444-64177-7. [Google Scholar]

- Yin, L.; Wang, L.; Li, J.; Lu, S.; Tian, J.; Yin, Z.; Liu, S.; Zheng, W. YOLOV4_CSPBi: Enhanced Land Target Detection Model. Land 2023, 12, 1813. [Google Scholar] [CrossRef]

- Zhang, Y.; Guo, Z.; Wu, J.; Tian, Y.; Tang, H.; Guo, X. Real-Time Vehicle Detection Based on Improved Yolo V5. Sustainability 2022, 14, 12274. [Google Scholar] [CrossRef]

- Carrasco, D.P.; Rashwan, H.A.; García, M.Á.; Puig, D. T-YOLO: Tiny Vehicle Detection Based on YOLO and Multi-Scale Convolutional Neural Networks. IEEE Access 2021, 11, 22430–22440. [Google Scholar] [CrossRef]

- Xia, G.-S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983. [Google Scholar]

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307. [Google Scholar] [CrossRef]

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. Drone-Based RGB-Infrared Cross-Modality Vehicle Detection via Uncertainty-Aware Learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6700–6713. [Google Scholar] [CrossRef]

- Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation Robust Object Detection in Aerial Images Using Deep Convolutional Neural Network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739. [Google Scholar]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Ghiasi, G.; Lin, T.-Y.; Le, Q.V. Nas-Fpn: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790. [Google Scholar]

- Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y. Accurate Leukocyte Detection Based on Deformable-DETR and Multi-Level Feature Fusion for Aiding Diagnosis of Blood Diseases. Comput. Biol. Med. 2024, 170, 107917. [Google Scholar] [CrossRef]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722. [Google Scholar]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-Level Accuracy with 50× Fewer Parameters and <0.5 MB Model Size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for Mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324. [Google Scholar]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856. [Google Scholar]

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. Shufflenet v2: Practical Guidelines for Efficient Cnn Architecture Design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131. [Google Scholar]

- Koonce, B.; Koonce, B. EfficientNet. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Apress: Berkeley, CA, USA, 2021; pp. 109–123. [Google Scholar] [CrossRef]

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More Features from Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589. [Google Scholar]

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making Vgg-Style Convnets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742. [Google Scholar]

- Yu, C.; Jiang, X.; Wu, F.; Fu, Y.; Zhang, Y.; Li, X.; Fu, T.; Pei, J. Research on Vehicle Detection in Infrared Aerial Images in Complex Urban and Road Backgrounds. Electronics 2024, 13, 319. [Google Scholar] [CrossRef]

- Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep Learning Approach for Car Detection in UAV Imagery. Remote Sens. 2017, 9, 312. [Google Scholar] [CrossRef]

- Ghasemi Darehnaei, Z.; Rastegar Fatemi, S.M.J.; Mirhassani, S.M.; Fouladian, M. Ensemble Deep Learning Using Faster R-Cnn and Genetic Algorithm for Vehicle Detection in Uav Images. IETE J. Res. 2023, 69, 5102–5111. [Google Scholar] [CrossRef]

- Ma, B.; Liu, Z.; Jiang, F.; Yan, Y.; Yuan, J.; Bu, S. Vehicle Detection in Aerial Images Using Rotation-Invariant Cascaded Forest. IEEE Access 2019, 7, 59613–59623. [Google Scholar] [CrossRef]

- Li, Q.; Mou, L.; Xu, Q.; Zhang, Y.; Zhu, X.X. R3-Net: A Deep Network for Multi-Oriented Vehicle Detection in Aerial Images and Videos. arXiv 2018, arXiv:1808.05560. [Google Scholar]

- Li, X.; Men, F.; Lv, S.; Jiang, X.; Pan, M.; Ma, Q.; Yu, H. Vehicle Detection in Very-High-Resolution Remote Sensing Images Based on an Anchor-Free Detection Model with a More Precise Foveal Area. ISPRS Int. J. Geo-Inf. 2021, 10, 549. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Lee, Y.; Park, J. Centermask: Real-Time Anchor-Free Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13906–13915. [Google Scholar]

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. A^ 2-Nets: Double Attention Networks. arXiv 2018, arXiv:1810.11579. [Google Scholar]

- Zhang Yu-Bin Yang, Q.-L. SA-Net: Shuffle Attention for Deep Convolutional Neural Networks. arXiv 2021, arXiv:2102.00240. [Google Scholar]

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-Excite: Exploiting Feature Context in Convolutional Neural Networks. arXiv 2018, arXiv:1810.12348. [Google Scholar]

- Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-Based Attention Module. arXiv 2021, arXiv:2111.12419. [Google Scholar]

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. Simam: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 11863–11874. [Google Scholar]

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. Gcnet: Non-Local Networks Meet Squeeze-Excitation Networks and Beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–10. [Google Scholar]

- Li, X.; Hu, X.; Yang, J. Spatial Group-Wise Enhance: Improving Semantic Feature Learning in Convolutional Networks. arXiv 2019, arXiv:1905.09646. [Google Scholar]

- Yang, X.; Yan, J. Arbitrary-Oriented Object Detection with Circular Smooth Label; Springer: Berlin/Heidelberg, Germany, 2020; pp. 677–694. [Google Scholar]

- Li, D.; Hu, J.; Wang, C.; Li, X.; She, Q.; Zhu, L.; Zhang, T.; Chen, Q. Involution: Inverting the Inherence of Convolution for Visual Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12321–12330. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Lee, S.; Lee, S.; Song, B.C. Cfa: Coupled-Hypersphere-Based Feature Adaptation for Target-Oriented Anomaly Localization. IEEE Access 2022, 10, 78446–78454. [Google Scholar] [CrossRef]

- Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.-S.; Bai, X. Gliding Vertex on the Horizontal Bounding Box for Multi-Oriented Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459. [Google Scholar] [CrossRef] [PubMed]

- Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented Reppoints for Aerial Object Detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1829–1838. [Google Scholar]

- Han, J.; Ding, J.; Xue, N.; Xia, G.-S. Redet: A Rotation-Equivariant Detector for Aerial Object Detection. arXiv 2021, arXiv:2103.07733. [Google Scholar]

- Han, J.; Ding, J.; Li, J.; Xia, G.-S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11. [Google Scholar] [CrossRef]

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv 2017, arXiv:1706.09579. [Google Scholar]

- Ding, J.; Xue, N.; Long, Y.; Xia, G.-S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858. [Google Scholar]

- Yang, S.; Pei, Z.; Zhou, F.; Wang, G. Rotated Faster R-CNN for Oriented Object Detection in Aerial Images. In Proceedings of the 2020 3rd International Conference on Robot Systems and Applications, Chengdu, China, 14–16 June 2020; pp. 35–39. [Google Scholar]

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An Empirical Study of Designing Real-Time Object Detectors. arXiv 2022, arXiv:2212.07784. [Google Scholar]

- Hou, Y.; Shi, G.; Zhao, Y.; Wang, F.; Jiang, X.; Zhuang, R.; Mei, Y.; Ma, X. R-YOLO: A YOLO-Based Method for Arbitrary-Oriented Target Detection in High-Resolution Remote Sensing Images. Sensors 2022, 22, 5716. [Google Scholar] [CrossRef]

- Qing, H.U.; Li, R.; Pan, C.; Gao, O. Remote Sensing Image Object Detection Based on Oriented Bounding Box and Yolov5. In Proceedings of the 2022 IEEE 10th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 17–19 June 2022; Volume 10, pp. 657–661. [Google Scholar]

- Socher, R.; Ganjoo, M.; Manning, C.D.; Ng, A. Zero-Shot Learning through Cross-Modal Transfer. Adv. Neural Inf. Process. Syst. 2013, 26, 1–7. [Google Scholar]

| Environmental Types | Environmental Parameters | |

|---|---|---|

| Hardware | CPU | Intel(R) Xeon(R) Gold 5218 |

| Memory | 32G | |

| GPU | NVIDIA 4090 | |

| Video memory | 24G | |

| Software | System | Win10 |

| Graphics card driver | CUDA 11.3, CUDNN 8.2 | |

| Deep learning framework | Pytorch 1.10, python 3.8, VS (2019), Opencv, MMRotate | |

| Training Params | Param Values |

|---|---|

| Batch size | 8 |

| Weight decay | 0.0005 |

| Momentum | 0.9 |

| Non-Maximum Suppression (NMS) | 0.5 |

| Learning rate | 0.01 |

| Data augmentation method | Mosaic |

| Optimizer | SGD |

| Actual | |||

| Positive | Negative | ||

| Prediction | Positive | TP | FP |

| Negative | FN | TN | |

| Size | #Images | Modality | #Labels/Categories | Oriented BB | Year |

|---|---|---|---|---|---|

| 840 × 712 | 56,878 | R + I | 190.6 k | √ | 2021 |

| Categories | Car (R/I) | Truck (R/I) | Bus (R/I) | Van (R/I) | Freight Car (R/I) |

| 5 | 389,779/428,086 | 22,123/25,960 | 15,333/16,590 | 11,935/12,708 | 13,400/17,173 |

| Size | #Images | Modality | #Labels/Categories | Oriented BB | Year |

|---|---|---|---|---|---|

| 12,029 × 5014 | 2806 | R | 12.5 k | √ | 2018 |

| Categories | Plane (PL) | Ship (SP) | Storage tank (ST) | Baseball diamond (BD) | Tennis court (TC) |

| 15 | Swimming pool (SP) | Athletic field (GTF) | Harbor (HA) | Bridge (BR) | Large vehicle (LV) |

| Small vehicle (SV) | Helicopter (HC) | Roundabout (RA) | Soccer ball field (SBF) | Basketball court (BC) |

| Methods | Baseline | Impro1 | Impro2 | Impro3 | ML-Det |

|---|---|---|---|---|---|

| TCFPN | - | √ | √ | √ | √ |

| LKC-INVO | - | - | √ | √ | √ |

| C2F_ContextGuided | - | - | - | √ | √ |

| Assemble–disperse | - | - | - | - | √ |

| APCar (%) | 87.44 | 87.80 | 88.45 | 88.31 | 88.02 |

| APVan (%) | 60.35 | 63.61 | 62.07 | 64.16 | 64.10 |

| APTruck (%) | 74.49 | 76.42 | 78.56 | 76.68 | 77.72 |

| APBus (%) | 95.91 | 97.50 | 97.15 | 97.35 | 97.80 |

| APFreight car (%) | 61.29 | 64.03 | 67.57 | 66.70 | 67.96 |

| mAP (%) | 75.90 | 77.87 | 78.76 | 78.64 | 79.12 |

| Param (M) | 7.78 | 9.76 | 8.50 | 7.87 | 7.91 |

| GFLOPS | 17.7 | 22.3 | 21.3 | 20.1 | 20.1 |

| Methods | Modality | AP (%) | mAP (%) | ||||

|---|---|---|---|---|---|---|---|

| Car | Van | Truck | Bus | Freight Car | |||

| CFA | R | 80.6 | 63.5 | 59.4 | 91.7 | 52.6 | 69.56 |

| I | 86.7 | 63.9 | 70.7 | 95.4 | 62.4 | 75.82 | |

| Gliding vertex | R | 80.0 | 58.3 | 55.0 | 90.7 | 46.0 | 66.02 |

| I | 86.6 | 60.7 | 73.0 | 95.0 | 56.1 | 74.27 | |

| Oriented-Reppoints | R | 82.2 | 62.7 | 60.4 | 92.6 | 55.5 | 70.68 |

| I | 88.7 | 67.1 | 77.6 | 97.3 | 64.5 | 79.05 | |

| ReDet | R | 76.6 | 52.4 | 49.1 | 87.6 | 39.5 | 61.03 |

| I | 86.5 | 61.2 | 69.2 | 94.9 | 58.8 | 74.10 | |

| S2Anet | R | 80.9 | 61.6 | 58.9 | 93.7 | 53.3 | 69.67 |

| I | 88.0 | 64.9 | 72.3 | 97.1 | 65.4 | 77.53 | |

| O-RCNN | R | 82.0 | 62.5 | 58.8 | 95.1 | 52.1 | 70.09 |

| I | 87.9 | 64.1 | 77.5 | 97.6 | 64.5 | 78.34 | |

| RoI Transformer | R | 81.8 | 59.6 | 59.9 | 93.6 | 48.0 | 68.58 |

| I | 86.6 | 62.1 | 74.3 | 97.0 | 61.9 | 76.38 | |

| Faster R-CNN-O | R | 81.0 | 58.3 | 54.4 | 88.5 | 48.4 | 66.09 |

| I | 86.7 | 67.0 | 69.2 | 94.1 | 56.8 | 74.76 | |

| RTMDet-S | R | 80.5 | 58.1 | 67.4 | 94.8 | 58.1 | 70.97 |

| I | 87.3 | 65.8 | 77.5 | 98.4 | 68.0 | 79.43 | |

| YOLOv5s-obb | R | 80.6 | 54.2 | 63.3 | 94.3 | 50.4 | 68.70 |

| I | 87.4 | 60.4 | 74.5 | 96.0 | 61.3 | 75.90 | |

| ML-Det (ours) | R | 82.3 | 57.8 | 61.0 | 95.3 | 51.4 | 70.44 |

| I | 88.0 | 64.1 | 77.7 | 97.8 | 68.0 | 79.12 | |

| Methods | Backbone | AP (%) | (%) | Params (M) | |

|---|---|---|---|---|---|

| Car | Plane | ||||

| Oriented Reppoints | R50-FPN | 88.7 | 97.9 | 93.29 | 36.60 |

| Rotated RetinaNet | R50-FPN | 84.2 | 93.8 | 89.02 | 36.15 |

| Gliding vertex | R50-FPN | 88.9 | 94.7 | 91.79 | 41.13 |

| YOLOv5s-obb | CSPDarkNet-PAFPN | 89.0 | 98.9 | 93.96 | 7.78 |

| S2ANet | R50-FPN | 90.9 | 95.3 | 93.00 | 38.54 |

| O-RCNN | R50-FPN | 89.8 | 96.6 | 93.23 | 41.13 |

| ReDet | R50-ReFPN | 88.3 | 96.2 | 92.25 | 31.55 |

| RoI Transformer | Swin tiny-FPN | 90.0 | 97.1 | 93.51 | 58.66 |

| RTMDet-S | CSPNeXt-PAFPN | 91.1 | 97.8 | 94.45 | 8.86 |

| Rotated FCOS | R50-FPN | 87.2 | 98.2 | 92.69 | 31.89 |

| Faster R-CNN-O | R50-FPN | 89.5 | 95.1 | 92.27 | 41.12 |

| ML-Det (ours) | INDarkNet-TCFPN | 91.3 | 99.3 | 95.25 | 7.91 |

| Methods | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | ||

| CFA | 88.0 | 82.1 | 53.9 | 73.7 | 79.9 | 78.9 | 87.2 | 90.8 | 91.9 | 85.6 | 56.1 | 64.4 | 70.2 | 70.6 | 38.1 | 73.44 |

| Rotated-FCOS | 89.0 | 74.3 | 48.0 | 58.6 | 79.3 | 74.0 | 86.8 | 90.9 | 83.3 | 83.8 | 55.9 | 62.9 | 64.1 | 67.6 | 47.7 | 71.08 |

| Oriented-Reppoints | 88.2 | 78.1 | 51.3 | 73.0 | 79.2 | 76.9 | 87.5 | 90.9 | 83.4 | 84.6 | 64.0 | 64.9 | 66.0 | 69.6 | 48.5 | 73.73 |

| ReDet | 89.2 | 83.8 | 52.2 | 71.1 | 78.1 | 82.5 | 88.2 | 90.9 | 87.2 | 86.0 | 65.5 | 62.9 | 75.9 | 70.0 | 66.7 | 76.67 |

| YOLOv5s-obb | 89.3 | 84.5 | 51.4 | 60.7 | 80.7 | 84.7 | 88.4 | 90.7 | 85.9 | 87.5 | 59.6 | 65.2 | 74.5 | 81.7 | 66.9 | 76.79 |

| Gliding vertex | 89.0 | 77.3 | 47.8 | 68.5 | 74.0 | 74.9 | 85.9 | 90.8 | 84.9 | 84.8 | 53.6 | 64.9 | 64.8 | 69.3 | 57.7 | 72.56 |

| RoI Transformer | 89.2 | 84.2 | 51.5 | 72.2 | 78.3 | 77.4 | 87.5 | 90.9 | 86.3 | 85.6 | 63.2 | 66.5 | 68.2 | 71.9 | 60.4 | 75.56 |

| RoI Transformer * | 89.4 | 83.8 | 52.8 | 74.2 | 78.7 | 83.1 | 88.0 | 91.0 | 86.2 | 87.0 | 61.7 | 62.6 | 74.1 | 71.0 | 63.5 | 76.48 |

| R3Det | 89.4 | 76.9 | 45.8 | 71.5 | 76.9 | 74.6 | 82.4 | 90.8 | 78.1 | 84.0 | 60.1 | 63.7 | 62.1 | 66.1 | 39.2 | 70.77 |

| O-RCNN * | 89.5 | 82.8 | 54.2 | 75.2 | 78.6 | 84.9 | 88.1 | 90.9 | 88.1 | 86.6 | 66.9 | 66.7 | 75.4 | 71.6 | 64.0 | 77.56 |

| S2Anet | 88.3 | 79.8 | 46.3 | 71.2 | 77.0 | 73.5 | 79.8 | 90.8 | 82.9 | 82.8 | 55.4 | 62.1 | 61.9 | 69.0 | 51.5 | 71.49 |

| Oriented-RetinaNet | 89.4 | 80.1 | 39.7 | 69.5 | 77.7 | 61.8 | 77.0 | 90.7 | 82.4 | 80.7 | 56.5 | 64.8 | 55.3 | 64.8 | 43.1 | 68.91 |

| Faster R-CNN-O | 89.3 | 82.7 | 49.0 | 69.8 | 74.0 | 72.5 | 85.4 | 90.9 | 83.9 | 84.6 | 54.7 | 65.2 | 65.3 | 68.8 | 57.6 | 72.92 |

| R-YOLO [53] | 90.2 | 84.5 | 54.3 | 68.5 | 78.9 | 87.0 | 89.3 | 90.8 | 74.3 | 89.1 | 66.8 | 67.8 | 74.5 | 74.2 | 65.1 | 77.01 |

| Oriented-YOLOv5 [54] | 93.4 | 83.4 | 57.9 | 68.5 | 78.0 | 87.0 | 90.1 | 94.1 | 82.2 | 80.6 | 60.6 | 68.8 | 76.9 | 67.2 | 68.6 | 76.17 |

| ML-Det (ours) | 89.4 | 85.1 | 51.4 | 72.5 | 80.5 | 85.6 | 85.7 | 90.5 | 88.3 | 85.6 | 62.0 | 65.0 | 76.8 | 83.2 | 64.5 | 77.74 |

| Methods | GFLOPS | FPS | Params (M) |

|---|---|---|---|

| CFA | 117.94 | 29.9 | 36.60 |

| Gliding vertex | 120.73 | 29.3 | 41.13 |

| Oriented-Reppoints | 117.94 | 10.7 | 36.60 |

| ReDet | 33.80 | 16.2 | 31.57 |

| S2ANet | 119.36 | 26.7 | 38.55 |

| O-RCNN | 120.81 | 22.3 | 41.13 |

| RoI Transformer | 121.77 | 19.0 | 55.06 |

| Faster R-CNN-O | 120.73 | 23.5 | 41.13 |

| RTMDet-S | 22.79 | 52.21 | 8.86 |

| Yolov5s-obb | 17.70 | 81.3 | 7.78 |

| ML-Det | 20.10 | 78.8 | 7.91 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, C.; Jiang, X.; Wu, F.; Fu, Y.; Pei, J.; Zhang, Y.; Li, X.; Fu, T. A Multi-Scale Feature Fusion Based Lightweight Vehicle Target Detection Network on Aerial Optical Images. Remote Sens. 2024, 16, 3637. https://doi.org/10.3390/rs16193637

Yu C, Jiang X, Wu F, Fu Y, Pei J, Zhang Y, Li X, Fu T. A Multi-Scale Feature Fusion Based Lightweight Vehicle Target Detection Network on Aerial Optical Images. Remote Sensing. 2024; 16(19):3637. https://doi.org/10.3390/rs16193637

Chicago/Turabian StyleYu, Chengrui, Xiaonan Jiang, Fanlu Wu, Yao Fu, Junyan Pei, Yu Zhang, Xiangzhi Li, and Tianjiao Fu. 2024. "A Multi-Scale Feature Fusion Based Lightweight Vehicle Target Detection Network on Aerial Optical Images" Remote Sensing 16, no. 19: 3637. https://doi.org/10.3390/rs16193637

APA StyleYu, C., Jiang, X., Wu, F., Fu, Y., Pei, J., Zhang, Y., Li, X., & Fu, T. (2024). A Multi-Scale Feature Fusion Based Lightweight Vehicle Target Detection Network on Aerial Optical Images. Remote Sensing, 16(19), 3637. https://doi.org/10.3390/rs16193637