From Do-It-Yourself Design to Discovery: A Comprehensive Approach to Hyperspectral Imaging from Drones

Abstract

1. Introduction

1.1. Remote Sensing

1.2. Contribution—Holistic Imaging Spectrometer Payload and Image Processing Pipeline

1.3. Paper Overview

2. Payload Description

2.1. Optical Sensors

2.2. Sensors for State-Estimation and Navigation

2.3. Data Processing, Synchronization, and Storage

2.4. UAV Platform

3. Payload Operation and Operational Considerations

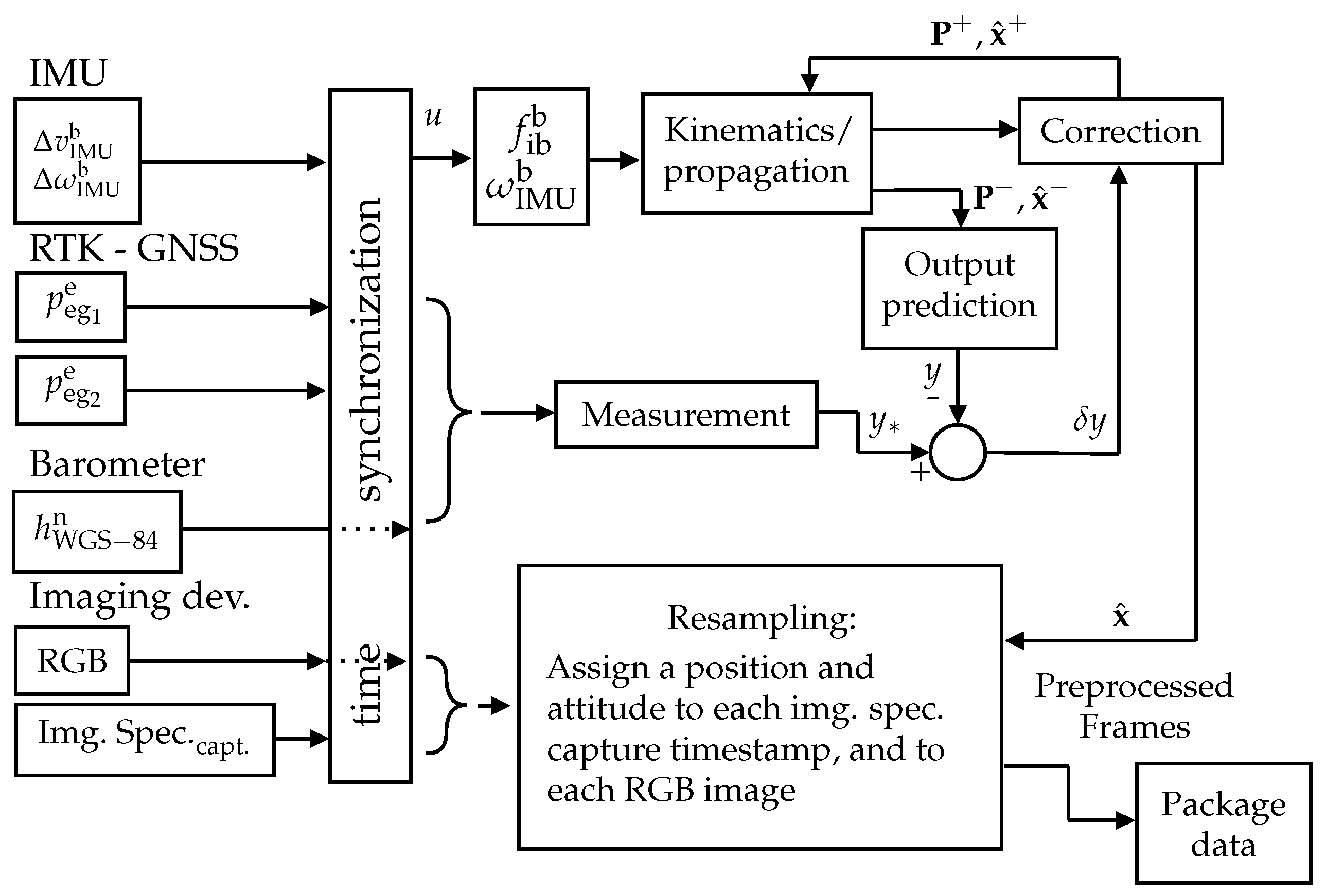

4. Processing of Payload Data

4.1. Processing of Navigation Sensors

4.2. Image Spectrometer Data Processing

4.3. RGB Data Processing

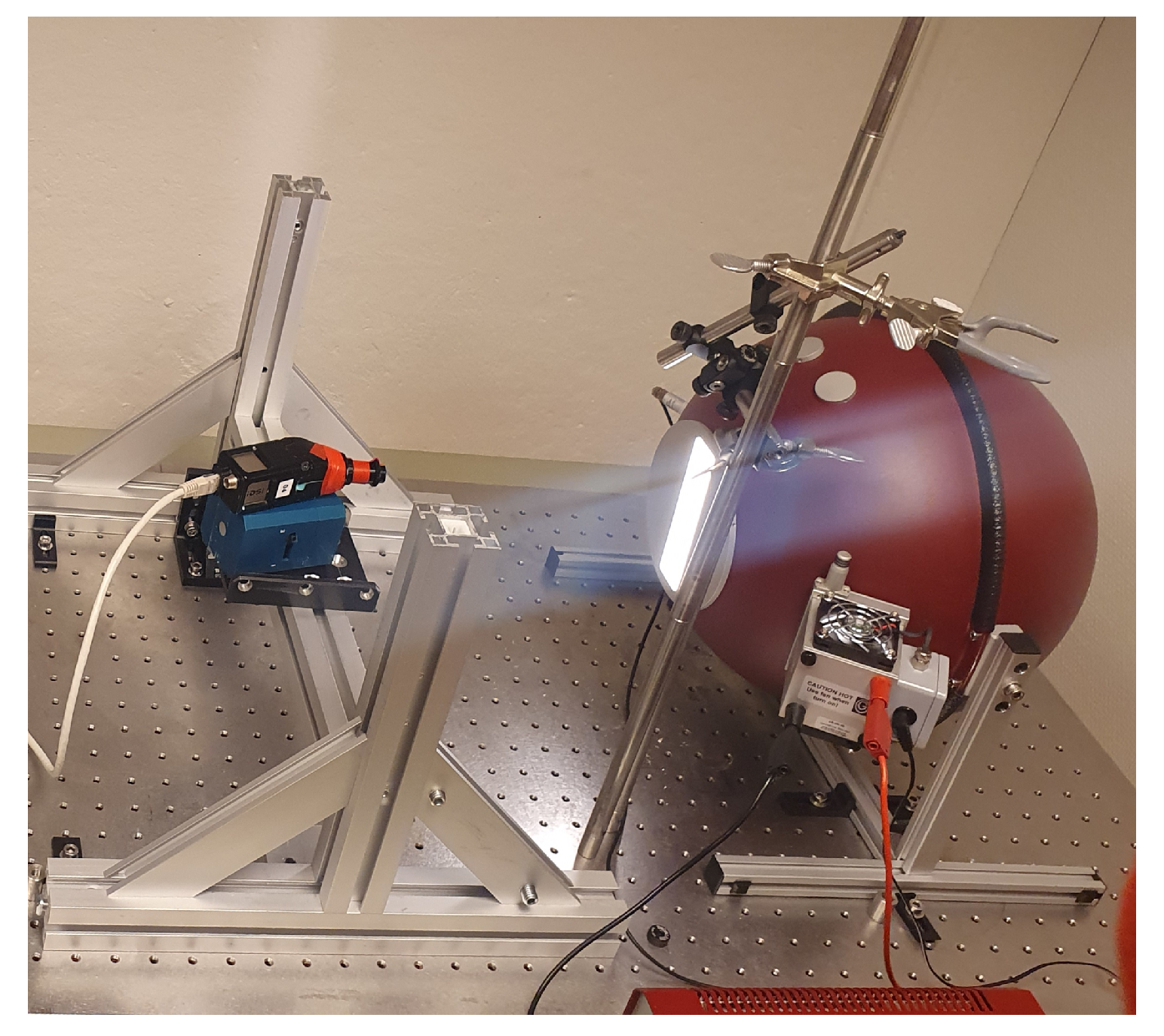

5. Calibration

5.1. Preliminaries: Push-Broom Imaging Spectrometer

5.2. Imaging Spectrometer Calibration

5.2.1. Spectral Calibration
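Spectral calibration of a push-broom imager is commonly expressed as a low-order polynomial that maps the detector's spectral pixel index to wavelength. The polynomial is fitted by least squares to the pixel positions of known emission lines from a calibration lamp. The sketch below illustrates only that idea. The quadratic order, the pixel positions, the "true" coefficients and the 500-pixel axis are synthetic placeholders, not the instrument's calibration.

```python
import numpy as np

def fit_spectral_calibration(pixel_pos, wavelengths_nm, order=2):
    """Least-squares polynomial mapping pixel index -> wavelength [nm].

    Coefficients are returned highest power first (numpy.polyfit convention).
    """
    return np.polyfit(pixel_pos, wavelengths_nm, order)

def pixel_to_wavelength(coeffs, pixels):
    """Evaluate the calibration polynomial at arbitrary pixel indices."""
    return np.polyval(coeffs, pixels)

# Synthetic example (hypothetical numbers): a slightly nonlinear dispersion
true_coeffs = np.array([1e-5, 0.4, 380.0])                  # lambda = 1e-5 p^2 + 0.4 p + 380
line_pixels = np.array([60.0, 140.0, 400.0, 470.0, 475.0])  # where lamp lines would fall
line_wavelengths = np.polyval(true_coeffs, line_pixels)

coeffs = fit_spectral_calibration(line_pixels, line_wavelengths)
wavelength_axis = pixel_to_wavelength(coeffs, np.arange(500))  # hypothetical 500 spectral pixels
residuals_nm = line_wavelengths - pixel_to_wavelength(coeffs, line_pixels)
```

With real lamp data, the residuals at the line positions are a quick check on whether the chosen polynomial order is adequate.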

5.2.2. Radiometric Calibration

5.2.3. Spectral Smile Correction

5.3. Remote Sensing Reflectance and Atmospheric Corrections

5.3.1. Calculation of the Atmospheric Absorption

5.3.2. Indirect Illumination

- Downwelling light reflected into the atmosphere by ArcLight's surroundings, which the atmosphere then backscatters into the ArcLight sensor. The effect is more pronounced when ArcLight is surrounded by a high-albedo surface such as snow.

- Rayleigh and forward scattering. This effect can raise the measured signal, especially at low sun elevations [48]. Unlike the first effect, it also occurs over the ocean surface.

5.3.3. Remote Sensing Reflectance
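Remote sensing reflectance is conventionally defined as the water-leaving radiance divided by the downwelling irradiance just above the surface, R_rs = L_w/E_d, in sr⁻¹. For an above-water radiance L_t, L_w is often approximated by removing surface-reflected skylight: L_w ≈ L_t − ρ·L_sky. The sketch below assumes that formulation. The sky-radiance term and the value ρ = 0.028, a frequently used default, are assumptions. E_d is assumed to be the downwelling irradiance from an upward-looking sensor such as ArcLight, resampled to the imager's bands.

```python
def remote_sensing_reflectance(L_t, L_sky, E_d, rho=0.028):
    """Per-band R_rs [1/sr] = (L_t - rho * L_sky) / E_d.

    L_t   : total upwelling radiance seen by the imager [W m^-2 sr^-1 nm^-1]
    L_sky : sky radiance in the specular direction, same units (assumed available)
    E_d   : downwelling plane irradiance [W m^-2 nm^-1]
    rho   : air-water surface reflectance factor (assumed constant)
    """
    if not (len(L_t) == len(L_sky) == len(E_d)):
        raise ValueError("all spectra must have the same number of bands")
    return [(lt - rho * ls) / ed for lt, ls, ed in zip(L_t, L_sky, E_d)]

# Hypothetical three-band example
rrs = remote_sensing_reflectance([0.012, 0.010, 0.004], [0.05, 0.04, 0.02], [1.2, 1.1, 0.9])
```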

6. State Estimation

6.1. State-Estimation Preliminaries

6.1.1. Coordinate Systems

6.1.2. Attitude Representations and Relationships

6.1.3. Inertial Measurement Unit

6.1.4. Kinematics—Strapdown Equations

6.1.5. Time Conventions
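GNSS receivers such as the u-blox modules can time-stamp data in GPS time, given as a week number and seconds of week, whereas many logs and external data sets use UTC. GPS time does not include leap seconds, so the two scales differ by an integer number of seconds. Per the IERS leap-second record, the offset has been 18 s since 1 January 2017, which covers the 2022 flights. The following is a minimal conversion sketch. It assumes that no leap second occurs within the interval of interest and that the week number is the full, unrolled GPS week.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)  # start of GPS week 0
GPS_MINUS_UTC_S = 18  # leap-second offset valid from 1 January 2017 onwards

def gps_to_utc(week, seconds_of_week, leap_seconds=GPS_MINUS_UTC_S):
    """Convert a full GPS week number and seconds-of-week to a UTC datetime."""
    gps_time = GPS_EPOCH + timedelta(weeks=week, seconds=seconds_of_week)
    return gps_time - timedelta(seconds=leap_seconds)

def utc_to_gps(utc, leap_seconds=GPS_MINUS_UTC_S):
    """Inverse of gps_to_utc: returns (week, seconds_of_week)."""
    elapsed = (utc - GPS_EPOCH).total_seconds() + leap_seconds
    week = int(elapsed // (7 * 86400))
    return week, elapsed - week * 7 * 86400
```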

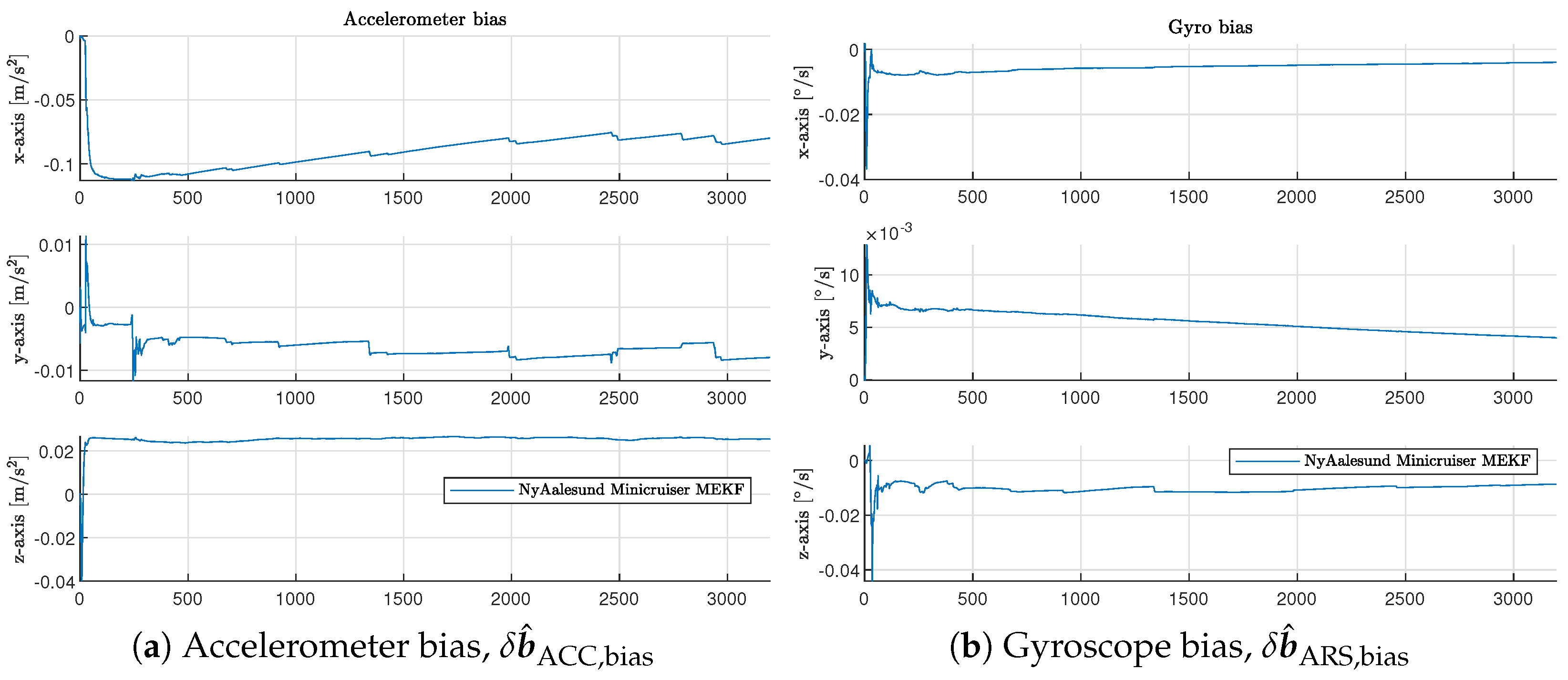

6.2. Multiplicative Error-State Kalman Filter—Prediction
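In a multiplicative error-state Kalman filter, the full attitude is carried as a unit quaternion in the nominal state, while the filter estimates a small three-component attitude error. During prediction, the nominal quaternion is integrated with the bias-compensated gyroscope rate. The sketch below follows the common Hamilton convention, with quaternions stored as (w, x, y, z) as in Solà's note listed in the references. It assumes a constant angular rate over each IMU interval and omits covariance propagation, Earth rotation and the paper's specific frame conventions.

```python
import math

def quat_normalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)

def quat_mul(p, q):
    """Hamilton product p ⊗ q for quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )

def quat_exp(rotvec):
    """Unit quaternion for a rotation vector (axis times angle, in rad)."""
    angle = math.sqrt(sum(c * c for c in rotvec))
    if angle < 1e-12:  # first-order approximation for a negligible rotation
        return quat_normalize((1.0, *(0.5 * c for c in rotvec)))
    s = math.sin(0.5 * angle) / angle
    return (math.cos(0.5 * angle), *(s * c for c in rotvec))

def propagate_attitude(q, gyro, gyro_bias, dt):
    """Nominal attitude step q_{k+1} = q_k ⊗ Exp((omega_m - b_g) * dt).

    q is the body-to-navigation rotation; gyro and gyro_bias are body-frame
    angular rates [rad/s]. Earth rotation and transport rate are neglected.
    """
    omega = [w - b for w, b in zip(gyro, gyro_bias)]
    dq = quat_exp([w * dt for w in omega])
    return quat_normalize(quat_mul(q, dq))

# Example: 1 s of pure yaw at pi/2 rad/s, integrated in 100 IMU steps
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(100):
    q = propagate_attitude(q, (0.0, 0.0, math.pi / 2), (0.0, 0.0, 0.0), 0.01)
```

After the loop, q represents a 90° rotation about the body z-axis, that is (cos 45°, 0, 0, sin 45°).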

6.3. Multiplicative Error-State Kalman Filter—Update

6.3.1. Position Correction Using GNSS

6.3.2. Position Correction Using Barometer

6.4. Multiplicative Error-State Kalman Filter—Tuning and Performance

6.5. Time Synchronization between Sensor Logs and MEKF Output

7. Georeferencing

7.1. State-Estimation Data—Time Interpolation and Pose Conversions

7.2. Push-Broom Camera Model

7.3. Digital Elevation Model

7.4. Ray Tracing Methodology for 3D Mesh Intersection
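Direct georeferencing intersects the viewing ray of every pixel with the terrain or sea-surface mesh. A standard building block for this is the Möller–Trumbore ray/triangle test, which solves directly for the ray parameter t and the barycentric coordinates (u, v) of the hit point. The pure-Python sketch below is illustrative only. It loops over all triangles, whereas practical tools use acceleration structures, and it is not claimed to be the routine used by gref4hsi. The helper names are hypothetical.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle_intersect(origin, direction, v0, v1, v2, eps=1e-12):
    """Möller–Trumbore test. Returns the ray parameter t of the hit
    (point = origin + t * direction), or None if the ray misses."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:                 # ray (nearly) parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det           # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det   # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None      # ignore hits behind the sensor

def first_hit(origin, direction, triangles):
    """Closest intersection over a list of (v0, v1, v2) triangles, or None."""
    hits = []
    for tri in triangles:
        t = ray_triangle_intersect(origin, direction, *tri)
        if t is not None:
            hits.append(t)
    return min(hits) if hits else None
```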

7.5. Orthorectification

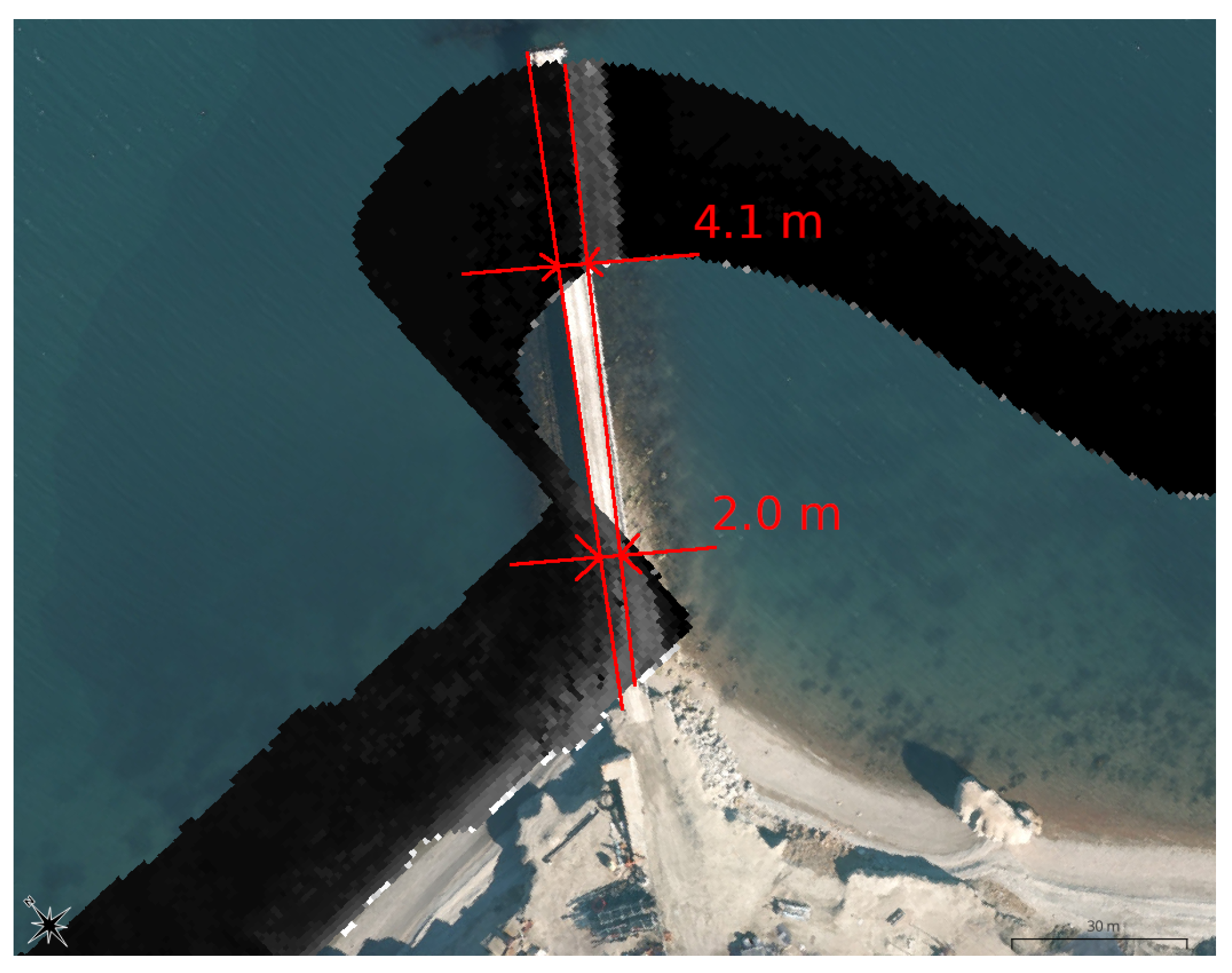

7.6. Direct Georeferencing Accuracy

8. Ocean Color Estimation

8.1. Sun-Glint Removal
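A widely used sun-glint correction is that of Hedley et al. (2005), listed in the references. Each visible band is regressed against a NIR band over a sample of optically deep pixels that span a range of glint. The glint contribution is then removed as R′ᵢ = Rᵢ − bᵢ(R_NIR − min R_NIR), where bᵢ is the regression slope and the minimum is taken over the sample. The sketch below implements that method for one band. Whether the paper uses exactly this variant, and which NIR band it uses, is not specified here.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx

def hedley_deglint(band, nir, sample_idx):
    """Deglint one visible band following Hedley et al. (2005).

    band, nir  : per-pixel values of one visible band and of the NIR band
    sample_idx : indices of deep-water pixels spanning a range of glint
    """
    xs = [nir[i] for i in sample_idx]
    ys = [band[i] for i in sample_idx]
    slope = ols_slope(xs, ys)
    min_nir = min(xs)
    return [r - slope * (n - min_nir) for r, n in zip(band, nir)]
```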

8.2. Extraction and Recombination of Imaging Spectroscopy Data

8.3. Exploratory Data Analysis Approaches

8.3.1. Spectral Angle Mapper
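The Spectral Angle Mapper treats each spectrum as a vector with one component per band and measures similarity as the angle between a pixel spectrum and a reference spectrum, α = arccos(x·r / (‖x‖‖r‖)). Because the angle ignores vector length, it is insensitive to uniform brightness scaling, for example from illumination changes. The following dependency-free sketch includes a hypothetical threshold-based classifier.

```python
import math

def spectral_angle(pixel, reference):
    """Angle [rad] between two spectra treated as vectors."""
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm_p = math.sqrt(sum(p * p for p in pixel))
    norm_r = math.sqrt(sum(r * r for r in reference))
    cos_a = dot / (norm_p * norm_r)
    return math.acos(max(-1.0, min(1.0, cos_a)))  # clamp against rounding

def sam_classify(pixel, references, max_angle):
    """Index of the best-matching reference, or None if every angle exceeds max_angle."""
    angles = [spectral_angle(pixel, r) for r in references]
    best = min(range(len(angles)), key=angles.__getitem__)
    return best if angles[best] <= max_angle else None
```

The threshold max_angle is a free parameter; in exploratory analysis it is often more useful to map the angles themselves than a hard classification.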

8.3.2. Nonlinear Spectral Unmixing and Data Reduction

8.4. Satellite-Based Imaging Spectroscopy

8.5. In Situ Measurements

9. Summary and Conclusions

9.1. Conclusions

9.2. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Tuning of the IMU Position in the UAV Reference Frame

| Roll [°] | Pitch [°] | Yaw [°] |
|---|---|---|

Appendix A.2. Pixel Length and Pixel Overlap

Appendix B

Appendix B.1. RADTRANX Simulation

Appendix B.2. Light Absorption

- The molar absorption coefficient

- The concentration C. Assuming a well-mixed atmosphere, C depends only on the local air pressure.

- The path length h traveled by the light beam.
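The three quantities above enter the Beer–Lambert law, which gives the fraction of light transmitted along the path as T = exp(−ε·C·h) in its Napierian form. With a decadic molar absorption coefficient, the base is 10. Because the text assumes a well-mixed atmosphere, the sketch below scales a reference concentration linearly with pressure, which additionally assumes constant temperature. The function names and the reference pressure are illustrative choices.

```python
import math

def transmittance(epsilon, concentration, path_length, decadic=False):
    """Beer–Lambert transmittance I/I0 along a path of length h.

    Napierian: I/I0 = exp(-epsilon * C * h); decadic: I/I0 = 10**(-epsilon * C * h).
    Units must be consistent, e.g. epsilon [m^2/mol], C [mol/m^3], h [m].
    """
    optical_depth = epsilon * concentration * path_length
    return 10.0 ** (-optical_depth) if decadic else math.exp(-optical_depth)

def concentration_at_pressure(c_ref, pressure_pa, p_ref_pa=101325.0):
    """Well-mixed gas at fixed temperature: C is proportional to pressure."""
    return c_ref * pressure_pa / p_ref_pa
```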

Appendix C

Appendix C.1. Supplements to MEKF

Appendix C.2. Barometer Calibration

| Scaling | | |
|---|---|---|
| Flight 1 | | |
| Flight 5 | | |
| Flight 8 | | |
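A barometer measures static pressure, which is commonly converted to height with the International Standard Atmosphere relation h = (T₀/L)·[1 − (p/p₀)^(R·L/(g₀·M))]. Here p₀ is the current sea-level pressure, for instance from a nearby weather station. The sketch assumes the standard-atmosphere temperature and lapse rate. Deviations from these produce a height scale error, which may be one reason a per-flight scaling is needed. The paper's exact barometer model is not reproduced here.

```python
# International Standard Atmosphere (troposphere) constants
T0_K = 288.15            # standard sea-level temperature [K]
LAPSE_K_PER_M = 0.0065   # temperature lapse rate [K/m]
R_J_PER_MOL_K = 8.31446  # universal gas constant [J/(mol K)]
G0_M_PER_S2 = 9.80665    # standard gravity [m/s^2]
M_AIR_KG_PER_MOL = 0.0289644  # molar mass of dry air [kg/mol]
EXPONENT = R_J_PER_MOL_K * LAPSE_K_PER_M / (G0_M_PER_S2 * M_AIR_KG_PER_MOL)  # about 0.190

def pressure_altitude(p_pa, p_sea_level_pa=101325.0):
    """Height [m] above the sea-level reference implied by pressure p_pa."""
    return (T0_K / LAPSE_K_PER_M) * (1.0 - (p_pa / p_sea_level_pa) ** EXPONENT)

def pressure_at_altitude(h_m, p_sea_level_pa=101325.0):
    """Inverse of pressure_altitude."""
    return p_sea_level_pa * (1.0 - LAPSE_K_PER_M * h_m / T0_K) ** (1.0 / EXPONENT)
```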

Calculation of Earth Eccentricity
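This sketch assumes the WGS-84 ellipsoid, which is the datum of EPSG:4326 used for the mission coordinates. Under that assumption, the first eccentricity follows from the flattening f as e² = f(2 − f), giving e ≈ 0.0818. The same quantity appears in the conversion from geodetic to Earth-centred, Earth-fixed (ECEF) coordinates, which the code below implements with the standard WGS-84 defining constants.

```python
import math

A_WGS84 = 6378137.0            # semi-major axis [m]
F_WGS84 = 1.0 / 298.257223563  # flattening [-]

def first_eccentricity(f=F_WGS84):
    """First eccentricity e from the flattening: e^2 = f (2 - f)."""
    return math.sqrt(f * (2.0 - f))

def geodetic_to_ecef(lat_deg, lon_deg, h_m, a=A_WGS84, f=F_WGS84):
    """Geodetic latitude/longitude [deg] and ellipsoidal height [m] -> ECEF [m]."""
    e2 = f * (2.0 - f)
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = a / math.sqrt(1.0 - e2 * math.sin(lat) ** 2)  # prime-vertical radius of curvature
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - e2) + h_m) * math.sin(lat)
    return x, y, z
```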

Appendix D

| Value | |
|---|---|
| 0.0741879177 | |
| −0.0741879177 | |
| 0 | |

Appendix E

Appendix F

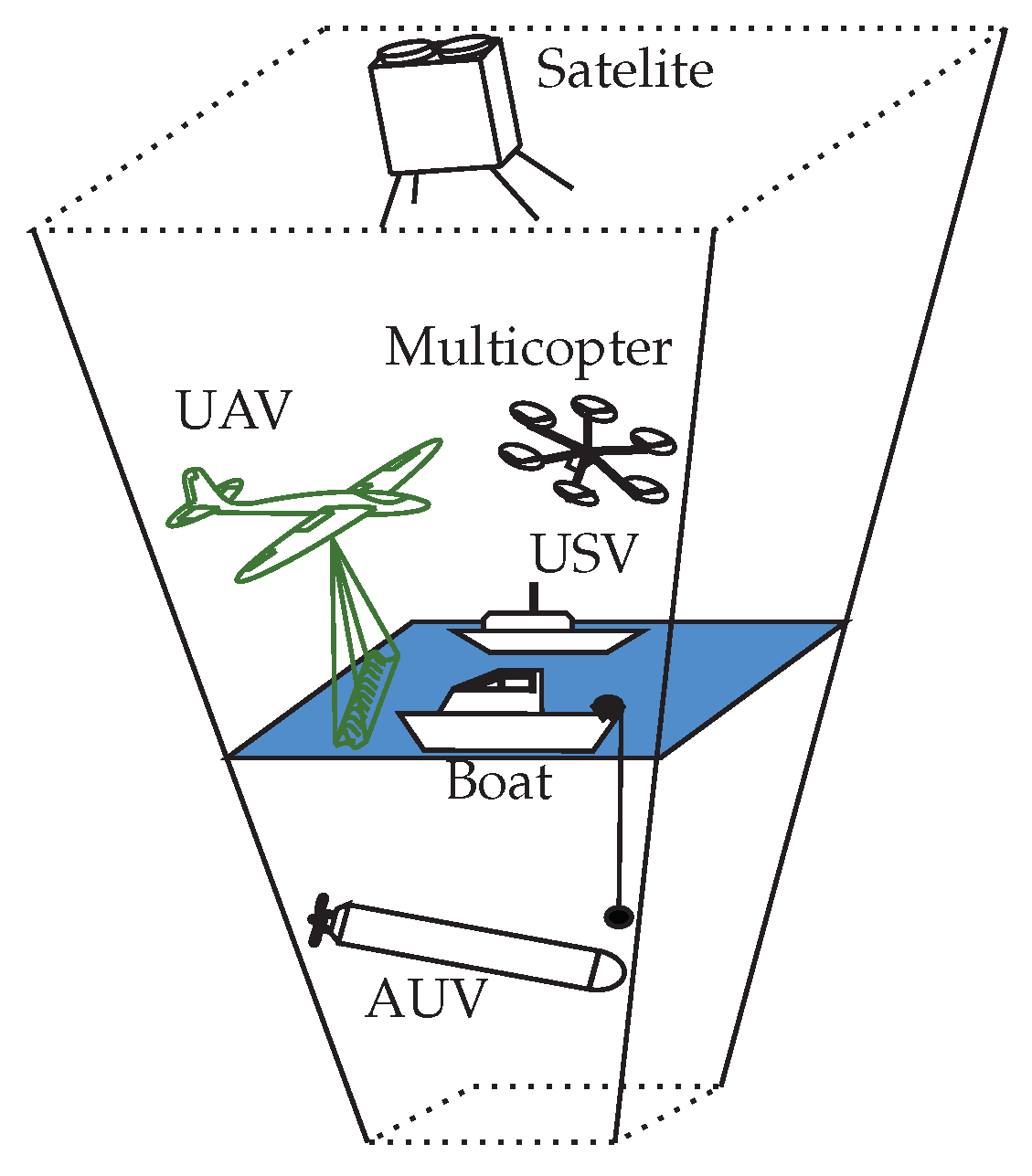

Appendix F.1. The Overarching Mission—Observation Pyramid

| Point | Latitude (EPSG:4326, WGS-84) | Longitude (EPSG:4326, WGS-84) | Northing [m] (EPSG:32633, WGS-84/UTM Zone 33N) | Easting [m] (EPSG:32633, WGS-84/UTM Zone 33N) |
|---|---|---|---|---|
| 1 | 78°57′41.888″ | 11°57′34.84″ | 9,261,245.442 | 2,877,896.203 |
| 2 | 78°56′10.691″ | 11°57′34.84″ | 9,255,900.023 | 2,875,579.055 |
| 3 | 78°56′56.264″ | 12°1′32.665″ | 9,252,422.498 | 2,890,882.088 |
| 4 | 78°56′56.264″ | 11°53′37.015″ | 9,264,696.813 | 2,862,566.410 |
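The mission corner points are given in degrees, minutes and seconds, and are usually converted to decimal degrees for computation. The helper below parses the notation used in the table (°, ′, ″). It assumes northern and eastern coordinates, so no sign handling is included. A projection to UTM zone 33N (EPSG:32633) could then be done with a library such as pyproj, e.g. `Transformer.from_crs("EPSG:4326", "EPSG:32633", always_xy=True)`. That step is left out to keep the example dependency-free.

```python
import re

DMS_PATTERN = re.compile(r"(\d+)°(\d+)′([\d.]+)″")

def dms_to_decimal(dms: str) -> float:
    """Convert a string such as 78°57′41.888″ to decimal degrees (N/E positive)."""
    match = DMS_PATTERN.fullmatch(dms.strip())
    if match is None:
        raise ValueError(f"unrecognized DMS string: {dms!r}")
    deg, minutes, seconds = match.groups()
    return int(deg) + int(minutes) / 60.0 + float(seconds) / 3600.0
```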

References

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern Trends in Hyperspectral Image Analysis: A Review. IEEE Access 2018, 6, 14118–14129.

- Xiang, T.Z.; Xia, G.S.; Zhang, L. Mini-Unmanned Aerial Vehicle-Based Remote Sensing: Techniques, applications, and prospects. IEEE Geosci. Remote Sens. Mag. 2019, 7, 29–63.

- Bhardwaj, A.; Sam, L.; Akanksha; Martín-Torres, F.J.; Kumar, R. UAVs as remote sensing platform in glaciology: Present applications and future prospects. Remote Sens. Environ. 2016, 175, 196–204.

- Zolich, A.; Johansen, T.A.; Cisek, K.; Klausen, K. Unmanned aerial system architecture for maritime missions design & hardware description. In Proceedings of the 2015 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Cancun, Mexico, 23–25 November 2015; pp. 342–350.

- Whittaker, W. Field Robots for the Next Century. IFAC Proc. Vol. 1992, 25, 41–48.

- Sigernes, F.; Syrjäsuo, M.; Storvold, R.; Fortuna, J.; Grøtte, M.E.; Johansen, T.A. Do it yourself hyperspectral imager for handheld to airborne operations. Opt. Express 2018, 26, 6021–6035.

- Hasler, O.K.; Winter, A.; Langer, D.D.; Bryne, T.H.; Johansen, T.A. Lightweight UAV Payload for Image Spectroscopy and Atmospheric Irradiance Measurements. In Proceedings of IGARSS 2023, Pasadena, CA, USA, 16–21 July 2023.

- Eismann, M.T. Hyperspectral Remote Sensing; SPIE Press: Bellingham, WA, USA, 2012.

- Riihiaho, K.A.; Eskelinen, M.A.; Pölönen, I. A Do-It-Yourself Hyperspectral Imager Brought to Practice with Open-Source Python. Sensors 2021, 21, 1072.

- Salazar-Vazquez, J.; Mendez-Vazquez, A. A plug-and-play Hyperspectral Imaging Sensor using low-cost equipment. HardwareX 2020, 7, e00087.

- Stuart, M.B.; Davies, M.; Hobbs, M.J.; Pering, T.D.; McGonigle, A.J.S.; Willmott, J.R. High-Resolution Hyperspectral Imaging Using Low-Cost Components: Application within Environmental Monitoring Scenarios. Sensors 2022, 22, 4652.

- Henriksen, M.B.; Prentice, E.F.; van Hazendonk, C.M.; Sigernes, F.; Johansen, T.A. A do-it-yourself VIS/NIR pushbroom hyperspectral imager with C-mount optics. Opt. Contin. 2022, 1, 427–441.

- Fortuna, J.; Johansen, T.A. A Lightweight Payload for Hyperspectral Remote Sensing using Small UAVS. In Proceedings of the 2018 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 23–26 September 2018; pp. 1–5.

- Hasler, O.; Løvås, H.; Bryne, T.H.; Johansen, T.A. Direct georeferencing for Hyperspectral Imaging of ocean surface. In Proceedings of the 2023 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2023; pp. 1–19.

- Burkart, A.; Cogliati, S.; Schickling, A. A Novel UAV-Based Ultra-Light Weight Spectrometer for Field Spectroscopy. IEEE Sens. J. 2014, 14, 62–67.

- Mao, Y.; Betters, C.H.; Evans, B.; Artlett, C.P.; Leon-Saval, S.G.; Garske, S.; Cairns, I.H.; Cocks, T.; Winter, R.; Dell, T. OpenHSI: A Complete Open-Source Hyperspectral Imaging Solution for Everyone. Remote Sens. 2022, 14, 2244.

- Langer, D.D.; Prentice, E.F.; Johansen, T.A.; Sørensen, A.J. Validation of Hyperspectral Camera Operation with an Experimental Aircraft. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 7256–7259.

- Saari, H.; Aallos, V.V.; Akujärvi, A.; Antila, T.; Holmlund, C.; Kantojärvi, U.; Mäkynen, J.; Ollila, J. Novel Miniaturized Hyperspectral Sensor for UAV and Space Applications. In Proceedings of the SPIE Remote Sensing, Berlin, Germany, 31 August–3 September 2009; Volume 7474.

- Specim. Specim AFX Series. 2022. Available online: https://www.specim.fi/afx/ (accessed on 14 August 2024).

- Norsk Elektro Optikk. HySpex Hyperspectral Cameras. 2022. Available online: https://www.hyspex.com/hyspex-turnkey-solutions/uav/ (accessed on 14 August 2024).

- Resonon Hyperspectral Imaging Solutions. Pika L. 2022. Available online: https://resonon.com/Pika-L (accessed on 14 August 2024).

- Lynch, K.; Hill, S. Miniaturized Hyperspectral Sensor for UAV Applications; Headwall Photonics, Inc.: Bolton, MA, USA, 2014.

- Kim, J.I.; Chi, J.; Masjedi, A.; Flatt, J.E.; Crawford, M.; Habib, A.F.; Lee, J.; Kim, H.C. High-resolution hyperspectral imagery from pushbroom scanners on unmanned aerial systems. Geosci. Data J. 2022, 9, 221–234.

- Ravi, R.; Hasheminasab, S.M.; Zhou, T.; Masjedi, A.; Quijano, K.; Flatt, J.E.; Crawford, M.; Habib, A. UAV-based multi-sensor multi-platform integration for high throughput phenotyping. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV; Thomasson, J.A., McKee, M., Moorhead, R.J., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2019; Volume 11008, p. 110080E.

- Habib, A.; Zhou, T.; Masjedi, A.; Zhang, Z.; Evan Flatt, J.; Crawford, M. Boresight Calibration of GNSS/INS-Assisted Push-Broom Hyperspectral Scanners on UAV Platforms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1734–1749.

- LaForest, L.; Hasheminasab, S.M.; Zhou, T.; Flatt, J.E.; Habib, A. New Strategies for Time Delay Estimation during System Calibration for UAV-Based GNSS/INS-Assisted Imaging Systems. Remote Sens. 2019, 11, 1811.

- Garrett, J.L.; Bakken, S.; Prentice, E.F.; Langer, D.; Leira, F.S.; Honoré-Livermore, E.; Birkeland, R.; Grøtte, M.E.; Johansen, T.A.; Orlandić, M. Hyperspectral Image Processing Pipelines on Multiple Platforms for Coordinated Oceanographic Observation. In Proceedings of the 2021 11th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 March 2021; pp. 1–5.

- Langer, D.D.; Orlandić, M.; Bakken, S.; Birkeland, R.; Garrett, J.L.; Johansen, T.A.; Sørensen, A.J. Robust and Reconfigurable On-Board Processing for a Hyperspectral Imaging Small Satellite. Remote Sens. 2023, 15, 3756.

- Oudijk, A.E.; Hasler, O.; Øveraas, H.; Marty, S.; Williamson, D.R.; Svendsen, T.; Garrett, J.L. Campaign For Hyperspectral Data Validation In North Atlantic Coastal Waters. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022; pp. 1–5.

- Mobley, C.D. The Oceanic Optics Book; International Ocean Colour Coordinating Group: Dartmouth, Canada, 2022.

- Datasheet: OS08A1 8MP HDR Camera. 2023. Available online: https://www.khadas.com/post/os08a10-8mp-hdr-camera (accessed on 14 August 2024).

- Datasheet: Mini-spectrometer Hamamatsu C12880MA. 2022. Available online: https://www.hamamatsu.com/eu/en/product/optical-sensors/spectrometers/mini-spectrometer/C12880MA.html (accessed on 14 August 2024).

- Grant, S.; Johnsen, G.; McKee, D.; Zolich, A.; Cohen, J.H. Spectral and RGB analysis of the light climate and its ecological impacts using an all-sky camera system in the Arctic. Appl. Opt. 2023, 62, 5139–5150.

- Datasheet: Sensonor STIM300. Available online: https://safran-navigation-timing.b-cdn.net/wp-content/uploads/2022/12/STIM300-Datasheet.pdf (accessed on 14 August 2024).

- Datasheet: GNSS Receivers, Neo-M8 and ZED-F9P. Available online: https://www.u-blox.com/en (accessed on 14 August 2024).

- Groves, P. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems, 2nd ed.; Artech House: Norwood, MA, USA, 2013.

- Datasheet: Khadas Vim3. 2023. Available online: https://www.khadas.com/vim3 (accessed on 14 August 2024).

- LSTS-DUNE: Unified Navigation Environment [Software]. Available online: https://github.com/LSTS/dune.git (accessed on 14 August 2024).

- Albrektsen, S.M.; Johansen, T.A. User-Configurable Timing and Navigation for UAVs. Sensors 2018, 18, 2468.

- Mini Cruiser. 2023. Available online: https://www.etair-norway.com/ (accessed on 14 August 2024).

- RTKLIB Demo5 [Software]. Available online: https://github.com/rtklibexplorer/RTKLIB.git (accessed on 1 May 2024).

- LSTS-NEPTUS: Unified Navigation Environment [Software]. Available online: https://www.lsts.pt/index.php/software/54/ (accessed on 14 August 2024).

- Open Drone Map [Software]. 2022. Available online: https://github.com/OpenDroneMap/ODM.git (accessed on 14 August 2024).

- Henriksen, M.B.; Prentice, E.F.; Johansen, T.A.; Sigernes, F. Pre-Launch Calibration of the HYPSO-1 Cubesat Hyperspectral Imager. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–9.

- Henriksen, M.B. On the Calibration and Optical Performance of a Hyperspectral Imager for Drones and Small Satellites. Ph.D. Thesis, NTNU, Trondheim, Norway, 2023.

- Polifke, W.; Kopitz, J. Wärmeübertragung, Grundlagen, Analytische und Numerische Methoden; Pearson: London, UK, 2009.

- Norsk Klima Service Senter. 2024. Available online: https://seklima.met.no/observations/ (accessed on 14 August 2024).

- Wallace, J.; Hobbs, P. Atmospheric Science: An Introductory Survey; International Geophysics Series; Elsevier Academic Press: Amsterdam, The Netherlands, 2006.

- Gregg, W.W.; Carder, K.L. A simple spectral solar irradiance model for cloudless maritime atmospheres. Limnol. Oceanogr. 1990, 35, 1657–1675.

- Farrell, J. Aided Navigation: GPS with High Rate Sensors, 1st ed.; McGraw-Hill, Inc.: New York, NY, USA, 2008.

- Solà, J. Quaternion kinematics for the error-state Kalman filter. arXiv 2017, arXiv:1711.02508.

- Markley, F.L. Attitude Error Representations for Kalman Filtering. J. Guid. Control. Dyn. 2003, 26, 311–317.

- Interface Description: U-Blox F9 High Precision GNSS Receiver. Available online: https://content.u-blox.com/sites/default/files/documents/u-blox-F9-HPG-1.32_InterfaceDescription_UBX-22008968.pdf (accessed on 14 August 2024).

- ESA Navipedia. 2023. Available online: https://gssc.esa.int/navipedia/index.php/Main_Page (accessed on 14 August 2024).

- International Earth Rotation and Reference Systems Service. Available online: https://www.iers.org/IERS/EN/Service/Glossary/leapSecond.html?nn=14894 (accessed on 14 August 2024).

- Pavlis, N.K.; Holmes, S.A.; Kenyon, S.C.; Factor, J.K. The development and evaluation of the Earth Gravitational Model 2008 (EGM2008). J. Geophys. Res. Solid Earth 2012, 117.

- Vermeille, H. Computing geodetic coordinates from geocentric coordinates. J. Geod. 2004, 78, 94–95.

- Datasheet: Barometer-MS5611-01BA03-AMSYS. 2017. Available online: https://www.amsys-sensor.com/ (accessed on 14 August 2024).

- Georeferencing for Hyperspectral Images. 2024. Available online: https://pypi.org/project/gref4hsi/ (accessed on 14 August 2024).

- Løvås, H.S.; Mogstad, A.A.; Sørensen, A.J.; Johnsen, G. A Methodology for Consistent Georegistration in Underwater Hyperspectral Imaging. IEEE J. Ocean. Eng. 2022, 47, 331–349.

- Adam Chlus, Zhiwei Ye, P.T. EnSpec/Hytools. 2024. Available online: https://github.com/EnSpec/hytools.git (accessed on 14 August 2024).

- Hedley, J.; Harborne, A.; Mumby, P. Technical note: Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 2005, 26, 2107–2112.

- Oshigami, S.; Yamaguchi, Y.; Mitsuishi, M.; Momose, A.; Yajima, T. An Advanced Method for Mineral Mapping Applicable to Hyperspectral Images: The Composite MSAM. Remote Sens. Lett. 2015, 6, 499–508.

- The Spectral Python (SPy) Package: Version 0.21. 2024. Available online: www.spectralpython.net (accessed on 14 August 2024).

- EcoLight-S. 2024. Available online: https://www.sequoiasci.com/product/ecolight-s/ (accessed on 14 August 2024).

- Bricaud, A.; Claustre, H.; Ras, J.; Oubelkheir, K. Natural variability of phytoplanktonic absorption in oceanic waters: Influence of the size structure of algal populations. J. Geophys. Res. Ocean. 2004, 109.

- Zhang, H.; Bai, X.; Wang, K. Response of the Arctic sea ice–ocean system to meltwater perturbations based on a one-dimensional model study. Ocean. Sci. 2023, 19, 1649–1668.

- Berge, J.; Johnsen, G.; Cohen, J.H. Polar Night Marine Ecology; Springer: Cham, Switzerland, 2021.

- Pandey, P.C.; Srivastava, P.K.; Balzter, H.; Bhattacharya, B.; Petropoulos, G.P. Hyperspectral Remote Sensing: Theory and Application; Earth Observation Series; Elsevier: Amsterdam, The Netherlands, 2020.

- Feng, X.R.; Li, H.C.; Wang, R.; Du, Q.; Jia, X.; Plaza, A. Hyperspectral Unmixing Based on Nonnegative Matrix Factorization: A Comprehensive Review. arXiv 2022, arXiv:2205.09933.

- Lupu, D.; Garrett, J.L.; Johansen, T.A.; Orlandic, M.; Necoara, I. Quick unsupervised hyperspectral dimensionality reduction for earth observation: A comparison. arXiv 2024, arXiv:2402.16566.

- Bakken, S.; Henriksen, M.B.; Birkeland, R.; Langer, D.D.; Oudijk, A.E.; Berg, S.; Johansen, T.A. HYPSO-1 CubeSat: First Images and In-Orbit Characterization. Remote Sens. 2023, 15, 755.

- HYPSO Package, 2024. NTNU Smallsat Lab. Available online: https://github.com/NTNU-SmallSat-Lab/hypso-package (accessed on 14 August 2024).

- Vermote, E.; Tanre, D.; Deuze, J.; Herman, M.; Morcrette, J.J. Second Simulation of a Satellite Signal in the Solar Spectrum-Vector (6SV). 2006. Available online: https://ltdri.org/6spage.html (accessed on 14 August 2024).

- Marquardt, M.; Goraguer, L.; Assmy, P.; Bluhm, B.A.; Aaboe, S.; Down, E.; Patrohay, E.; Edvardsen, B.; Tatarek, A.; Smoła, Z.; et al. Seasonal dynamics of sea-ice protist and meiofauna in the northwestern Barents Sea. Prog. Oceanogr. 2023, 218, 103128.

- Fragoso, G.M.; Johnsen, G.; Chauton, M.S.; Cottier, F.; Ellingsen, I. Phytoplankton community succession and dynamics using optical approaches. Cont. Shelf Res. 2021, 213, 104322.

- Ornaf, R.M.; Dong, M.W. Key Concepts of HPLC in Pharmaceutical Analysis. In Handbook of Pharmaceutical Analysis by HPLC; Ahuja, S., Dong, M.W., Eds.; Academic Press: Cambridge, MA, USA, 2005; Volume 6, pp. 19–45.

- O’Reilly, J.E.; Werdell, P.J. Chlorophyll algorithms for ocean color sensors—OC4, OC5 & OC6. Remote Sens. Environ. 2019, 229, 32–47.

- Zhou, Y.; Rangarajan, A.; Gader, P.D. An Integrated Approach to Registration and Fusion of Hyperspectral and Multispectral Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3020–3033.

- Stamnes, K.; Thomas, E.G.; Stamnes, J.J. Radiative Transfer in the Atmosphere and Ocean; Cambridge University Press: Cambridge, UK, 2017.

| Sensor | Used Spectral Range [nm] | Spectral Resolution (FWHM) [nm] |
|---|---|---|
| HSI-v4 | 400.0–800.0 | 3.6 |
| Hamamatsu C12880MA | 310.0–879.0 | 12.0 |
| Light Observ. [33] | 378.9–943.7 | 3.29 |

| Parameter | Img. Spec. HSI-v4 | RGB Camera |
|---|---|---|
| Focal length f [mm] | 16 | 3.47 |
| Sensor pixel height sh [px] | 1936 | 3840 |
| Sensor pixel width sw [px] | 1216 | 2160 |
| Across-track FOV [°] | 10.61 | 160 |
| Along-track FOV [°] | 0.18 | 160 |
| Slit dimensions [mm × μm] | 3 × 50 | — |
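The tabulated fields of view are consistent with simple pinhole geometry, FOV = 2·arctan(s/(2f)). If the slit dimensions are read as 3 mm × 50 μm, then with f = 16 mm a 50 μm slit width gives about 0.18°, matching the along-track value. A 3 mm slit length gives about 10.7°, close to the tabulated 10.61°. The sketch below makes these relations explicit and derives the ground footprint and along-track overlap. It assumes nadir viewing over flat terrain, the 300 m altitude from the flight table and a hypothetical ground speed of 20 m/s, which is not given in this section.

```python
import math

def fov_deg(aperture_mm, focal_length_mm):
    """Full angle [deg] subtended by an aperture (e.g. the slit) at focal length f."""
    return math.degrees(2.0 * math.atan(aperture_mm / (2.0 * focal_length_mm)))

def ground_footprint_m(fov_degrees, altitude_m):
    """Ground extent [m] of a field of view viewed at nadir over flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_degrees) / 2.0)

def along_track_overlap(footprint_m, ground_speed_mps, fps):
    """Along-track footprint divided by the ground distance flown per frame.

    Values above 1 mean consecutive lines overlap; below 1 leaves gaps.
    """
    return footprint_m / (ground_speed_mps / fps)

ALTITUDE_M = 300.0   # from the flight table
SPEED_MPS = 20.0     # hypothetical ground speed, not given in the source
across = ground_footprint_m(10.61, ALTITUDE_M)  # swath width, roughly 55.7 m
along = ground_footprint_m(0.18, ALTITUDE_M)    # line footprint, roughly 0.94 m
overlap = along_track_overlap(along, SPEED_MPS, fps=40.0)
```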

| Flight | HSI Exposure Time e [ms] | HSI fps [s⁻¹] | HSI Startup Delay [s] | RGB fps [s⁻¹] |
|---|---|---|---|---|
| Flight 1 | 25.0 | 18.27 | 15.93 | 1.0 |
| Flight 5 | 25.0 | 40.0 | 0.0 | 1.0 |
| Flight 8 | 25.0 | 40.0 | 6.38 | 1.0 |

| Spectral Coefficients | | | |
|---|---|---|---|

| Date/Time [DD:MM:YY hh:mm] | Sun Elev. [°] | Sun Azim. [°] | Pressure at Sea Level [Pa] |
|---|---|---|---|
| 22:05:22 12:00 | 31.22 | 193.98 | |
| 22:05:22 13:11 | 29.87 | 213.24 | |
| 26:05:22 10:19 | 31.214 | 166.270 | |
| 26:05:22 12:00 | 32.125 | 193.899 | |
| 27:05:22 12:00 | 32.126 | 193.899 | |
| 27:05:22 17:30 | 19.94 | 279.52 | |

| Description | Symbol | Value | Unit |
|---|---|---|---|
| Sun surface temperature | | 5778 | K |
| Speed of light in vacuum | c | | |
| Planck's constant | h | | |
| Boltzmann constant | | | |
| Sun diameter | | | |
| Sun–Earth distance | | | |

| Parameter | PPK-GNSS | Barometer |
|---|---|---|
| [] | 0.2 | — |
| [] | 0.2 | — |
| [] | 0.9 | 1 |

| Variable | Unit | Value |
|---|---|---|
| [2/] | | |
| [2/] | | |
| [2/5] | | |
| [2/3] | | |

| Date/Time [dd Month yyyy HH:mm] | Chl-a Concentration [μg/L] | Depth [m] | Repetition [-] |
|---|---|---|---|
| 22 May 2022 | 1.52569 | 0.0 | 1 |
| 22 May 2022 | 1.91727 | 0.0 | 2 |
| 22 May 2022 | 1.81959 | 0.0 | 3 |
| 26 May 2022 | 0.09681 | 0.0 | 1 |
| 26 May 2022 | 0.11151 | 0.0 | 2 |
| 26 May 2022 | 0.08817 | 0.0 | 3 |
| 27 May 2022 18:45 | 0.57906 | 0.0 | 1 |
| 27 May 2022 18:45 | 0.60344 | 0.0 | 2 |
| 27 May 2022 18:45 | 0.69489 | 7.0 | 1 |
| 27 May 2022 18:45 | 0.70043 | 7.0 | 2 |
| 27 May 2022 18:45 | 0.49050 | 7.0 | 3 |

| Flight No. | Date [dd Month yyyy] | Take-Off Time (Local) [HH:mm] | Flight Duration [min] | Altitude (Harbour) [m a.s.l.] |
|---|---|---|---|---|
| 1 | 22 May 2022 | 13:11 | 32.31 | 300 (200) |
| 2 | 23 May 2022 | 09:44 | 61.06 | 300 (150) |
| 3 | 24 May 2022 | 18:02 | 52.08 | 300 (200) |
| 4 | 24 May 2022 | 19:19 | 32.40 | 300 (200) |
| 5 | 26 May 2022 | 10:19 | 48.37 | 300 (200) |
| 6 | 26 May 2022 | 17:22 | 58.96 | 300 (200) |
| 7 | 27 May 2022 | 10:51 | 55.95 | 300 (200) |
| 8 | 27 May 2022 | 17:31 | 59.71 | 300 (200) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hasler, O.; Løvås, H.S.; Oudijk, A.E.; Bryne, T.H.; Johansen, T.A. From Do-It-Yourself Design to Discovery: A Comprehensive Approach to Hyperspectral Imaging from Drones. Remote Sens. 2024, 16, 3202. https://doi.org/10.3390/rs16173202


Hasler, Oliver, Håvard S. Løvås, Adriënne E. Oudijk, Torleiv H. Bryne, and Tor Arne Johansen. 2024. "From Do-It-Yourself Design to Discovery: A Comprehensive Approach to Hyperspectral Imaging from Drones" Remote Sensing 16, no. 17: 3202. https://doi.org/10.3390/rs16173202
