TC–Radar: Transformer–CNN Hybrid Network for Millimeter-Wave Radar Object Detection

Abstract

1. Introduction

- A Transformer was integrated with a CNN to build the TC–Radar model, improving radar detection accuracy. Experiments on the CRUW and CARRADA datasets showed that TC–Radar outperformed existing methods in detection accuracy and robustness.

- The cross-attention (CA) and dense information fusion block (DIFB) modules established an effective feature fusion mechanism between the encoder and decoder stages.

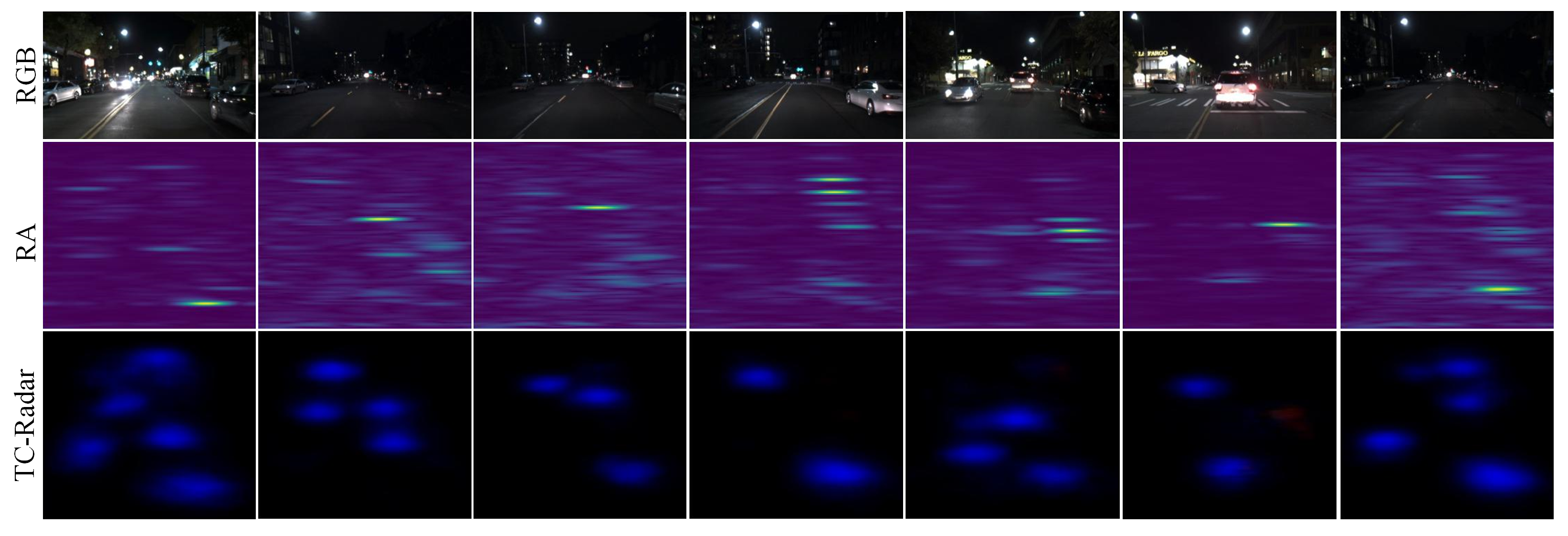

- TC–Radar proved practical under low-light conditions, compensating for information lost in assisted driving systems and exhibiting robust perception.

2. Background and Related Work

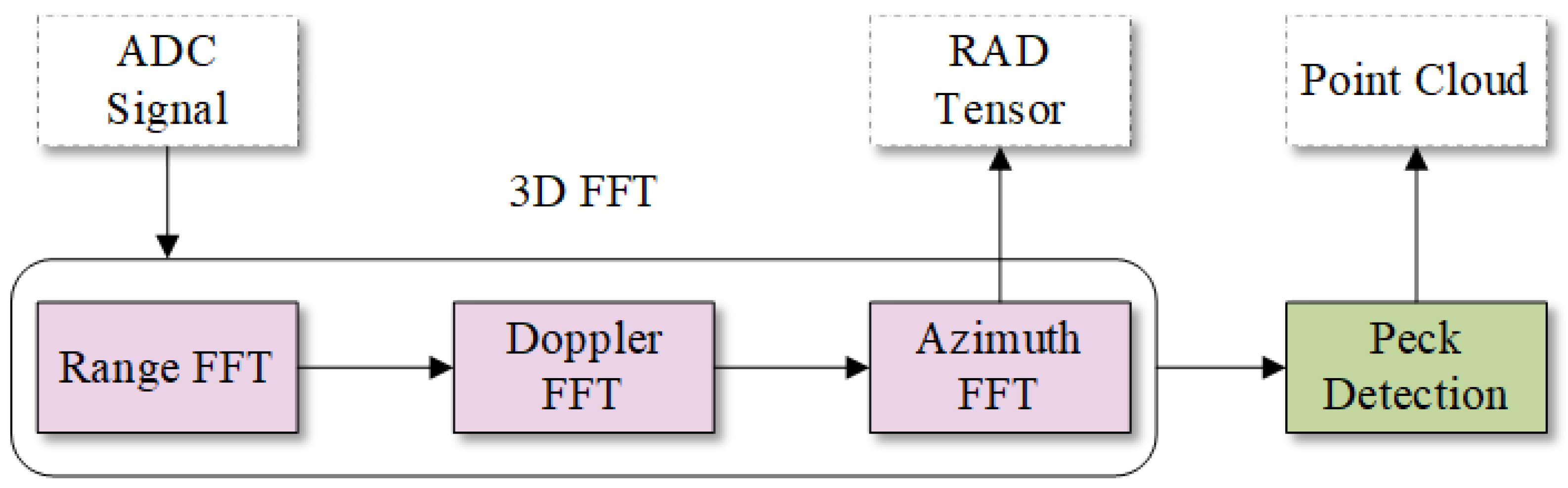

2.1. Radar Signal Processing Chain

2.2. Problem Formulation

2.3. Radar Semantic Segmentation and Object Detection

2.4. Vision Transformer (ViT)-Based Perception Tasks

3. Methodology

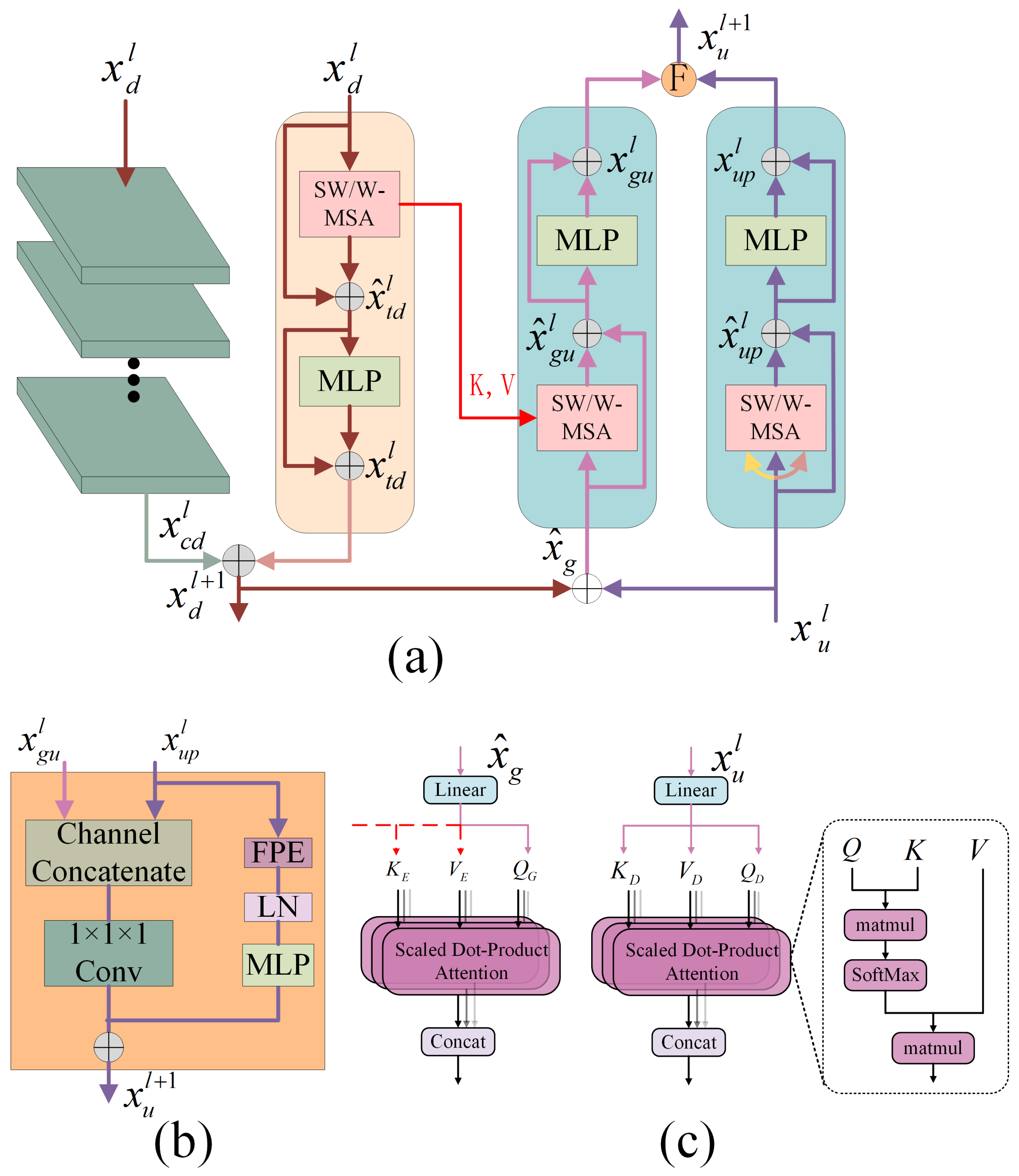

3.1. Overall Architecture

3.1.1. Encode

3.1.2. Decode

3.2. Dense Information Fusion Block (DIFB)

3.3. Cross-Attention (CA)

3.4. Loss Function

4. Experiments

4.1. Dataset

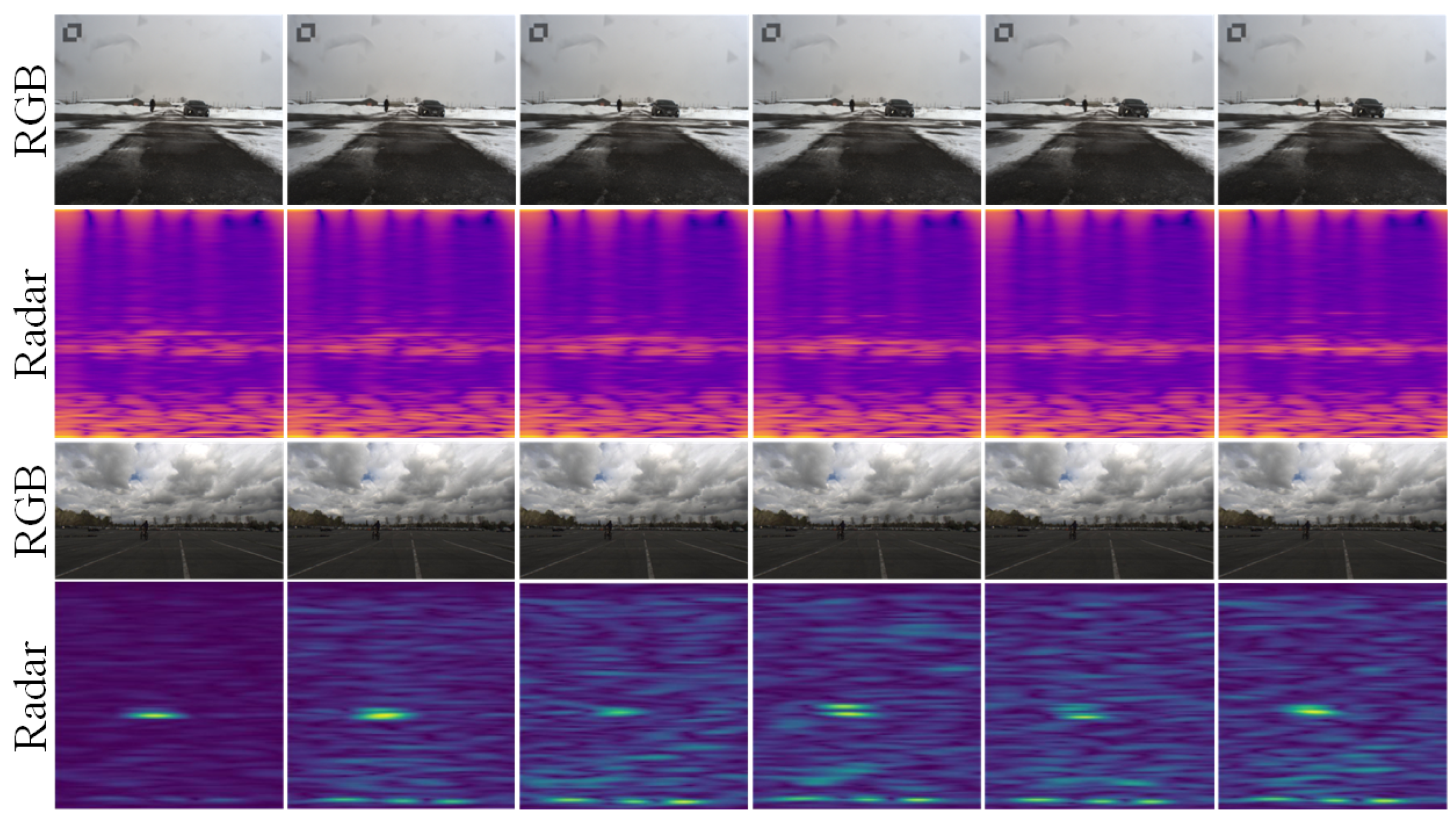

- Object Detection on CRUW: The sensor suite comprises a camera and a 77 GHz FMCW millimeter-wave radar. A total of 3.5 h of driving data was captured at 30 frames per second across street, campus, highway, and parking lot scenarios, amounting to roughly 400 K frames. The dataset also covers nighttime and glare conditions, which serve to evaluate the model’s comprehensive perception abilities. Radar annotations in the RA perspective are generated automatically through cross-modal supervision and cover three classes: pedestrians, bicycles, and vehicles. In contrast to the commonly used intersection over union (IoU) evaluation metric, CRUW employs an anchor-free measure known as object localization similarity (OLS) to gauge classification confidence. OLS is defined as

  $$\mathrm{OLS} = \exp\left(-\frac{d^{2}}{2\,(S\,\kappa_{cls})^{2}}\right),$$

  where $d$, $S$, and $\kappa_{cls}$ denote the distance between two points in the RA image, the radial distance from the target to the radar, and the category-specific tolerance constant (defined by the mean object size within each class), respectively. The evaluation metrics are average precision (AP) and average recall (AR), assessed at OLS thresholds from 0.5 to 0.9 in increments of 0.05.
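
The OLS score and its threshold sweep can be sketched in a few lines. This is a minimal illustration, assuming the Gaussian form exp(-d² / (2 (S·κ)²)) used by the CRUW benchmark; the function names and the numeric value of `kappa` are hypothetical and are not taken from the paper.

```python
import math

def ols(d: float, s: float, kappa: float) -> float:
    """Object localization similarity between two points in the RA plane.

    d     : distance between the detection and the ground-truth point
    s     : radial distance from the target to the radar
    kappa : per-class tolerance constant (value below is a placeholder)
    """
    return math.exp(-(d ** 2) / (2.0 * (s * kappa) ** 2))

# OLS thresholds 0.50, 0.55, ..., 0.90 as described in the text.
OLS_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(9)]

def matched_thresholds(score: float) -> list[float]:
    """Return the thresholds at which a detection with this OLS counts as a match."""
    return [t for t in OLS_THRESHOLDS if score >= t]

if __name__ == "__main__":
    # Hypothetical example: 1 m localization error at 10 m range, kappa = 0.1.
    score = ols(d=1.0, s=10.0, kappa=0.1)
    print(f"OLS = {score:.4f}")
    print("matched at thresholds:", matched_thresholds(score))
```

Because the tolerance scales with the range `s`, the same localization error is penalized less for distant targets than for near ones.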

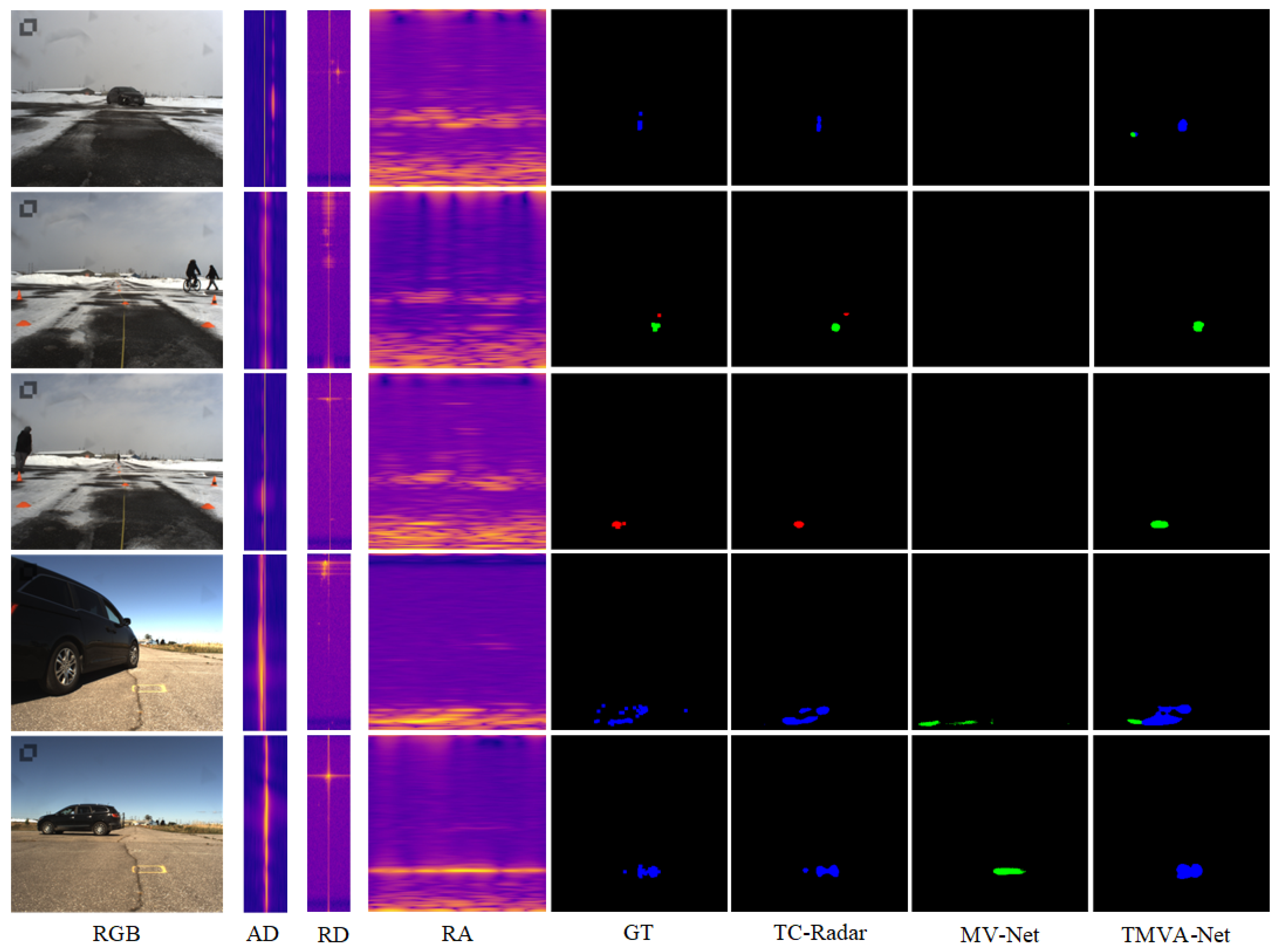

- Semantic Segmentation on CARRADA: The CARRADA dataset comprises 12,666 frames, annotated via a semi-automated process. Relative to CRUW, CARRADA offers less variety in scene composition but provides complete RAD data. The data are split into RA, Range–Doppler (RD), and Azimuth–Doppler (AD) 2D tensors, providing real data for further multi-perspective information fusion research. Its 30 sequences cover four classes (pedestrians, cyclists, vehicles, and the background), with detailed mask annotations available in both the RA and RD views. In contrast to the OLS evaluation used for CRUW, this dataset employs IoU and Dice as assessment metrics, defined as

  $$\mathrm{IoU} = \frac{|G \cap P|}{|G \cup P|}, \qquad \mathrm{Dice} = \frac{2\,|G \cap P|}{|G| + |P|},$$

  where $G$ and $P$ represent the GT and the predicted values, respectively.
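
As a small worked example of the two segmentation metrics, the sketch below computes per-class IoU and Dice from label maps. It assumes the standard set-based definitions (intersection over union, and twice the intersection over the sum of the two region sizes); the function name and the toy masks are illustrative only.

```python
def iou_and_dice(gt, pred, cls):
    """Per-class IoU and Dice for two label maps given as nested lists.

    Returns (None, None) when the class is absent from both maps,
    since both ratios are undefined in that case.
    """
    g = [label == cls for row in gt for label in row]
    p = [label == cls for row in pred for label in row]
    inter = sum(a and b for a, b in zip(g, p))
    union = sum(a or b for a, b in zip(g, p))
    if union == 0:
        return None, None
    iou = inter / union
    dice = 2 * inter / (sum(g) + sum(p))
    return iou, dice

if __name__ == "__main__":
    # Toy 2x2 masks: class 1 covers three GT pixels and two predicted pixels.
    gt = [[0, 1],
          [1, 1]]
    pred = [[0, 1],
            [0, 1]]
    iou, dice = iou_and_dice(gt, pred, cls=1)
    print(f"IoU = {iou:.3f}, Dice = {dice:.3f}")
```

For a single class the two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so Dice is never smaller than IoU; the per-class columns in the results tables follow this pattern.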

4.2. Implementation Details

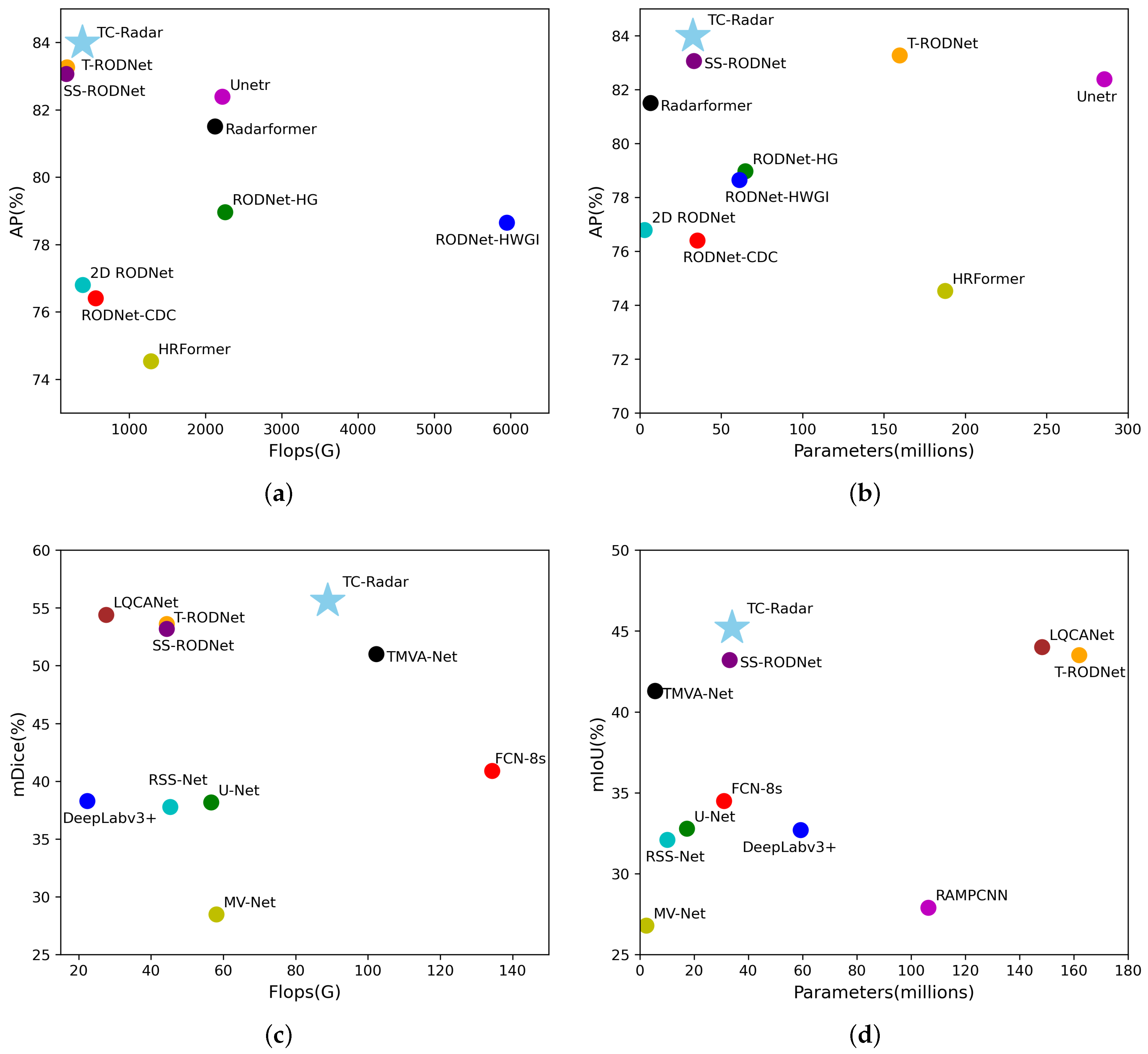

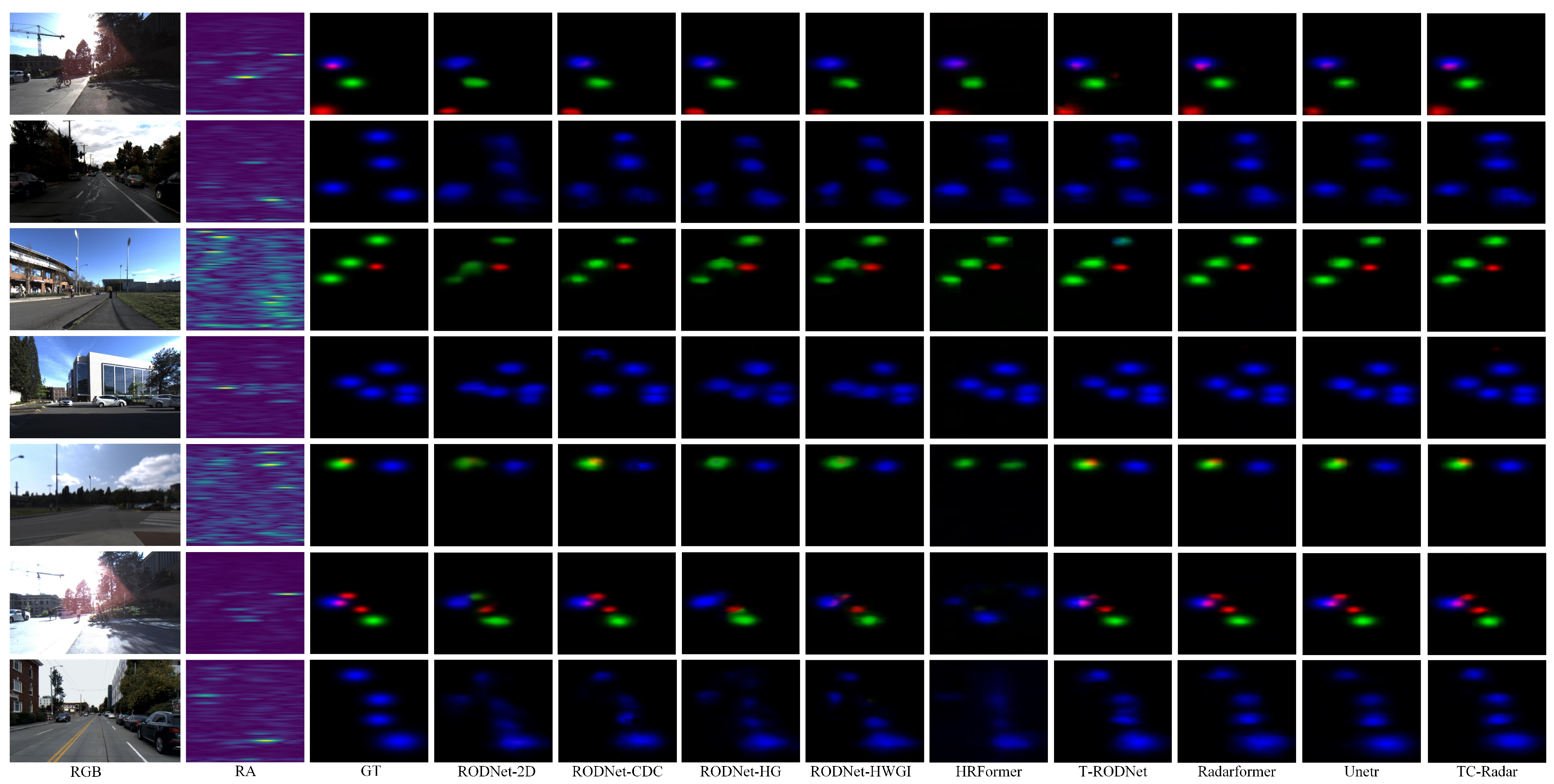

4.3. Comparison with SOTAs

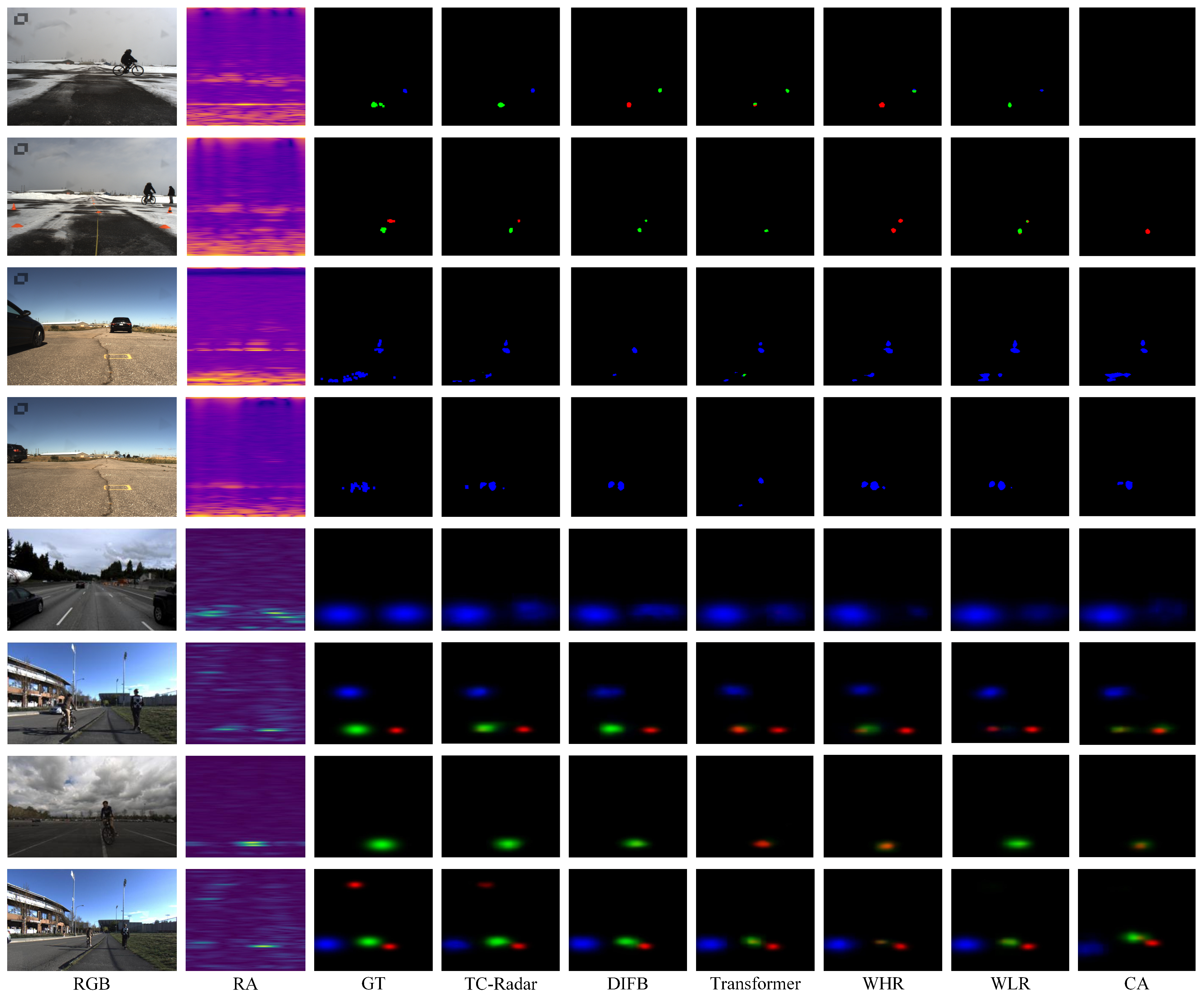

4.4. Ablation Study

4.5. Super Test

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

[1] Xiang, C.; Feng, C.; Xie, X.; Shi, B.; Lu, H.; Lv, Y.; Yang, M.; Niu, Z. Multi-Sensor Fusion and Cooperative Perception for Autonomous Driving: A Review. IEEE Intell. Transp. Syst. Mag. 2023, 15, 36–58.

[2] Fernandes, D.; Silva, A.; Névoa, R.; Simões, C.; Gonzalez, D.; Guevara, M.; Novais, P.; Monteiro, J.; Melo-Pinto, P. Point-cloud based 3D object detection and classification methods for self-driving applications: A survey and taxonomy. Inf. Fusion 2021, 68, 161–191.

[3] Venon, A.; Dupuis, Y.; Vasseur, P.; Merriaux, P. Millimeter Wave FMCW RADARs for Perception, Recognition and Localization in Automotive Applications: A Survey. IEEE Trans. Intell. Veh. 2022, 7, 533–555.

[4] Zhou, Y.; Liu, L.; Zhao, H.; López-Benítez, M.; Yu, L.; Yue, Y. Towards Deep Radar Perception for Autonomous Driving: Datasets, Methods, and Challenges. Sensors 2022, 22, 4208.

[5] Ignatious, H.A.; El-Sayed, H.; Kulkarni, P. Multilevel Data and Decision Fusion Using Heterogeneous Sensory Data for Autonomous Vehicles. Remote Sens. 2023, 15, 2256.

[6] Rohling, H. Radar CFAR Thresholding in Clutter and Multiple Target Situations. IEEE Trans. Aerosp. Electron. Syst. 1983, AES-19, 608–621.

[7] Ravindran, R.; Santora, M.J.; Jamali, M.M. Camera, LiDAR, and Radar Sensor Fusion Based on Bayesian Neural Network (CLR-BNN). IEEE Sens. J. 2022, 22, 6964–6974.

[8] Wang, Y.; Deng, J.; Li, Y.; Hu, J.; Liu, C.; Zhang, Y.; Ji, J.; Ouyang, W.; Zhang, Y. Bi-LRFusion: Bi-Directional LiDAR-Radar Fusion for 3D Dynamic Object Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13394–13403.

[9] Montañez, O.J.; Suarez, M.J.; Fernandez, E.A. Application of Data Sensor Fusion Using Extended Kalman Filter Algorithm for Identification and Tracking of Moving Targets from LiDAR–Radar Data. Remote Sens. 2023, 15, 3396.

[10] Wang, Z.; Miao, X.; Huang, Z.; Luo, H. Research of Target Detection and Classification Techniques Using Millimeter-Wave Radar and Vision Sensors. Remote Sens. 2021, 13, 1064.

[11] Yang, Y.; Wang, X.; Wu, X.; Lan, X.; Su, T.; Guo, Y. A Robust Target Detection Algorithm Based on the Fusion of Frequency-Modulated Continuous Wave Radar and a Monocular Camera. Remote Sens. 2024, 16, 2225.

[12] Zhang, A.; Nowruzi, F.E.; Laganiere, R. RADDet: Range-Azimuth-Doppler based Radar Object Detection for Dynamic Road Users. In Proceedings of the 2021 18th Conference on Robots and Vision (CRV), Burnaby, BC, Canada, 26–28 May 2021; pp. 95–102.

[13] Gao, X.; Xing, G.; Roy, S.; Liu, H. RAMP-CNN: A Novel Neural Network for Enhanced Automotive Radar Object Recognition. IEEE Sens. J. 2021, 21, 5119–5132.

[14] Zhang, R.; Cheng, L.; Wang, S.; Lou, Y.; Gao, Y.; Wu, W.; Ng, D.W.K. Integrated Sensing and Communication with Massive MIMO: A Unified Tensor Approach for Channel and Target Parameter Estimation. IEEE Trans. Wirel. Commun. 2024, 1.

[15] Jin, Y.; Hoffmann, M.; Deligiannis, A.; Fuentes-Michel, J.C.; Vossiek, M. Semantic Segmentation-Based Occupancy Grid Map Learning with Automotive Radar Raw Data. IEEE Trans. Intell. Veh. 2024, 9, 216–230.

[16] Xu, Y.; Li, W.; Yang, Y.; Ji, H.; Lang, Y. Superimposed Mask-Guided Contrastive Regularization for Multiple Targets Echo Separation on Range–Doppler Maps. IEEE Trans. Instrum. Meas. 2023, 72, 5028712.

[17] Tatarchenko, M.; Rambach, K. Histogram-based Deep Learning for Automotive Radar. In Proceedings of the 2023 IEEE Radar Conference (RadarConf23), San Antonio, TX, USA, 1–4 May 2023; pp. 1–6.

[18] Meng, C.; Duan, Y.; He, C.; Wang, D.; Fan, X.; Zhang, Y. mmPlace: Robust Place Recognition with Intermediate Frequency Signal of Low-Cost Single-Chip Millimeter Wave Radar. IEEE Robot. Autom. Lett. 2024, 9, 4878–4885.

[19] Ouaknine, A.; Newson, A.; Pérez, P.; Tupin, F.; Rebut, J. Multi-View Radar Semantic Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 15651–15660.

[20] Zou, H.; Xie, Z.; Ou, J.; Gao, Y. TransRSS: Transformer-based Radar Semantic Segmentation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 6965–6972.

[21] Wang, Y.; Jiang, Z.; Li, Y.; Hwang, J.N.; Xing, G.; Liu, H. RODNet: A Real-Time Radar Object Detection Network Cross-Supervised by Camera-Radar Fused Object 3D Localization. IEEE J. Sel. Top. Signal Process. 2021, 15, 954–967.

[22] Ju, B.; Yang, W.; Jia, J.; Ye, X.; Chen, Q.; Tan, X.; Sun, H.; Shi, Y.; Ding, E. DANet: Dimension Apart Network for Radar Object Detection. In Proceedings of the 2021 International Conference on Multimedia Retrieval, New York, NY, USA, 30 August 2021; ICMR ’21. pp. 533–539.

[23] Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

[24] Decourt, C.; VanRullen, R.; Salle, D.; Oberlin, T. A Recurrent CNN for Online Object Detection on Raw Radar Frames. IEEE Trans. Intell. Transp. Syst. 2024, 1–10.

[25] Jia, F.; Tan, J.; Lu, X.; Qian, J. Radar Timing Range–Doppler Spectral Target Detection Based on Attention ConvLSTM in Traffic Scenes. Remote Sens. 2023, 15, 4150.

[26] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Long Beach, CA, USA, 2017; Volume 30.

[27] Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-Transformer Network With Multiscale Context Aggregation for Fine-Grained Cropland Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2022, 15, 4297–4306.

[28] Gao, L.; Liu, H.; Yang, M.; Chen, L.; Wan, Y.; Xiao, Z.; Qian, Y. STransFuse: Fusing Swin Transformer and Convolutional Neural Network for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2021, 14, 10990–11003.

[29] Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual Attentive Fully Convolutional Siamese Networks for Change Detection in High-Resolution Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2021, 14, 1194–1206.

[30] Yang, C.; Kong, Y.; Wang, X.; Cheng, Y. Hyperspectral Image Classification Based on Adaptive Global–Local Feature Fusion. Remote Sens. 2024, 16, 1918.

[31] Jin, Y.; Deligiannis, A.; Fuentes-Michel, J.C.; Vossiek, M. Cross-Modal Supervision-Based Multitask Learning with Automotive Radar Raw Data. IEEE Trans. Intell. Veh. 2023, 8, 3012–3025.

[32] Orr, I.; Cohen, M.; Zalevsky, Z. High-resolution radar road segmentation using weakly supervised learning. Nat. Mach. Intell. 2021, 3, 239–246.

[33] Grimm, C.; Fei, T.; Warsitz, E.; Farhoud, R.; Breddermann, T.; Haeb-Umbach, R. Warping of Radar Data Into Camera Image for Cross-Modal Supervision in Automotive Applications. IEEE Trans. Veh. Technol. 2022, 71, 9435–9449.

[34] Zhuang, L.; Jiang, T.; Wang, J.; An, Q.; Xiao, K.; Wang, A. Effective mmWave Radar Object Detection Pretraining Based on Masked Image Modeling. IEEE Sens. J. 2024, 24, 3999–4010.

[35] Schumann, O.; Hahn, M.; Scheiner, N.; Weishaupt, F.; Tilly, J.F.; Dickmann, J.; Wöhler, C. RadarScenes: A Real-World Radar Point Cloud Data Set for Automotive Applications. In Proceedings of the 2021 IEEE 24th International Conference on Information Fusion (FUSION), Sun City, South Africa, 1–4 November 2021; pp. 1–8.

[36] Fang, S.; Zhu, H.; Bisla, D.; Choromanska, A.; Ravindran, S.; Ren, D.; Wu, R. ERASE-Net: Efficient Segmentation Networks for Automotive Radar Signals. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 9331–9337.

[37] Zhang, L.; Zhang, X.; Zhang, Y.; Guo, Y.; Chen, Y.; Huang, X.; Ma, Z. PeakConv: Learning Peak Receptive Field for Radar Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17577–17586.

[38] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

[39] Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 213–229.

[40] Zeller, M.; Behley, J.; Heidingsfeld, M.; Stachniss, C. Gaussian Radar Transformer for Semantic Segmentation in Noisy Radar Data. IEEE Robot. Autom. Lett. 2023, 8, 344–351.

[41] Kim, Y.; Kim, S.; Choi, J.W.; Kum, D. CRAFT: Camera-Radar 3D Object Detection with Spatio-Contextual Fusion Transformer. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 1160–1168.

[42] Hwang, J.J.; Kretzschmar, H.; Manela, J.; Rafferty, S.; Armstrong-Crews, N.; Chen, T.; Anguelov, D. CramNet: Camera-Radar Fusion with Ray-Constrained Cross-Attention for Robust 3D Object Detection. In Proceedings of the Computer Vision – ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 388–405.

[43] Lo, C.C.; Vandewalle, P. RCDPT: Radar-Camera Fusion Dense Prediction Transformer. In Proceedings of the ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

[44] Jiang, T.; Zhuang, L.; An, Q.; Wang, J.; Xiao, K.; Wang, A. T-RODNet: Transformer for Vehicular Millimeter-Wave Radar Object Detection. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

[45] Dalbah, Y.; Lahoud, J.; Cholakkal, H. RadarFormer: Lightweight and Accurate Real-Time Radar Object Detection Model. In Proceedings of the Image Analysis; Gade, R., Felsberg, M., Kämäräinen, J.K., Eds.; Springer: Cham, Switzerland, 2023; pp. 341–358.

[46] Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

[47] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

[48] Agarwal, A.; Arora, C. Attention Attention Everywhere: Monocular Depth Prediction with Skip Attention. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 5850–5859.

[49] Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. UNETR: Transformers for 3D Medical Image Segmentation. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2022; pp. 1748–1758.

[50] Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. HRFormer: High-Resolution Vision Transformer for Dense Predict. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Volume 34, pp. 7281–7293.

[51] Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

[52] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; pp. 234–241.

[53] Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 833–851.

[54] Kaul, P.; de Martini, D.; Gadd, M.; Newman, P. RSS-Net: Weakly-Supervised Multi-Class Semantic Segmentation with FMCW Radar. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 431–436.

[55] Zhuang, L.; Jiang, T.; Jiang, H.; Wang, A.; Huang, Z. LQCANet: Learnable-Query-Guided Multi-Scale Fusion Network Based on Cross-Attention for Radar Semantic Segmentation. IEEE Trans. Intell. Veh. 2024, 9, 3330–3344.

| Branch | Kernel | Padding | Dilation | OutChannels |
|---|---|---|---|---|
| Branch1 | (3, 3, 3) | (1, 1, 1) | (1, 1, 1) | 1/4 C |
| Branch2-1 | (3, 3, 3) | (1, 2, 2) | (1, 2, 2) | 1/4 C |
| Branch2-2 | (3, 5, 5) | (2, 4, 4) | (2, 2, 2) | 1/4 C |
| Branch3-1 | (3, 3, 3) | (1, 4, 4) | (1, 4, 4) | 1/4 C |
| Branch3-2 | (3, 5, 5) | (2, 8, 8) | (2, 4, 4) | 1/4 C |
| Branch4-1 | (3, 3, 3) | (1, 6, 6) | (1, 6, 6) | 1/4 C |
| Branch4-2 | (3, 5, 5) | (2, 12, 12) | (2, 6, 6) | 1/4 C |
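
The kernel, padding, and dilation triples in the branch table above can be checked for one property: whether each convolution preserves the input size. The sketch below assumes stride 1 on every axis and the usual convolution size rule, out = in + 2·padding − dilation·(kernel − 1); neither the stride nor the axis order is stated in this section, so both are assumptions, and the helper names are illustrative.

```python
# (kernel, padding, dilation) per branch, copied from the table above.
BRANCHES = {
    "Branch1":   ((3, 3, 3), (1, 1, 1), (1, 1, 1)),
    "Branch2-1": ((3, 3, 3), (1, 2, 2), (1, 2, 2)),
    "Branch2-2": ((3, 5, 5), (2, 4, 4), (2, 2, 2)),
    "Branch3-1": ((3, 3, 3), (1, 4, 4), (1, 4, 4)),
    "Branch3-2": ((3, 5, 5), (2, 8, 8), (2, 4, 4)),
    "Branch4-1": ((3, 3, 3), (1, 6, 6), (1, 6, 6)),
    "Branch4-2": ((3, 5, 5), (2, 12, 12), (2, 6, 6)),
}

def size_change(kernel, padding, dilation):
    """Output size minus input size per axis, assuming stride 1."""
    return tuple(2 * p - d * (k - 1) for k, p, d in zip(kernel, padding, dilation))

def effective_extent(kernel, dilation):
    """Span of input positions covered by one dilated kernel, per axis."""
    return tuple(d * (k - 1) + 1 for k, d in zip(kernel, dilation))

if __name__ == "__main__":
    for name, (k, p, d) in BRANCHES.items():
        print(name, "size change:", size_change(k, p, d),
              "extent:", effective_extent(k, d))
```

Under these assumptions every branch leaves the feature-map size unchanged, so branch outputs can be combined without resizing, while the area covered by a single kernel grows from 3 to 25 positions on the last two axes.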

| Model | FLOPs (G) | Params (M) | Total AP (%) | Total AR (%) | Pedestrian AP (%) | Pedestrian AR (%) | Cyclist AP (%) | Cyclist AR (%) | Car AP (%) | Car AR (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| RODNet-CDC [21] | 560.1 | 34.5 | 76.41 | 82.20 | 76.79 | 80.98 | 74.92 | 78.46 | 77.47 | 88.34 |
| RODNet-HG [21] | 2258.3 | 64.8 | 78.97 | 83.98 | 79.18 | 82.83 | 75.14 | 80.24 | 82.96 | 90.03 |
| RODNet-HWGI [21] | 5949.7 | 61.2 | 78.65 | 83.44 | 78.02 | 83.26 | 76.23 | 78.37 | 82.40 | 89.43 |
| RODNet-2D [45] | 389.5 | 2.8 | 76.80 | 83.50 | 74.31 | 81.46 | 80.21 | 81.89 | 77.03 | 88.62 |
| Unetr [49] | 2221.7 | 285.4 | 82.39 | 86.14 | 81.52 | 85.18 | 85.46 | 86.50 | 80.37 | 87.20 |
| HRFormer [50] | 1286.2 | 187.6 | 74.54 | 78.23 | 75.24 | 78.02 | 66.34 | 67.95 | 82.60 | 90.06 |
| Radarformer [45] | 2125.7 | 6.4 | 81.51 | 86.03 | 81.49 | 86.67 | 81.86 | 82.93 | 81.17 | 88.46 |
| T-RODNet [44] | 182.5 | 159.7 | 83.27 | 86.98 | 82.19 | 85.41 | 82.28 | 84.30 | 86.22 | 92.53 |
| SS-RODNet [34] | 172.8 | 33.1 | 83.07 | 86.43 | 81.37 | 84.61 | 83.34 | 84.34 | 85.55 | 90.86 |
| TC–Radar (Ours) | 384.6 | 32.6 | 83.99 | 88.02 | 85.19 | 88.52 | 82.74 | 84.42 | 83.46 | 91.25 |

| Model | FLOPs (G) | Params (M) | IoU Bkg (%) | IoU Ped (%) | IoU Cyc (%) | IoU Car (%) | mIoU (%) | Dice Bkg (%) | Dice Ped (%) | Dice Cyc (%) | Dice Car (%) | mDice (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FCN-8s [51] | 134.3 | 31.0 | 99.8 | 14.8 | 0.0 | 23.3 | 34.5 | 99.9 | 25.8 | 0.0 | 37.8 | 40.9 |
| U-Net [52] | 56.6 | 17.3 | 99.8 | 22.4 | 8.8 | 0.0 | 32.8 | 99.9 | 36.6 | 16.1 | 0.0 | 38.2 |
| DeepLabv3+ [53] | 22.4 | 59.3 | 99.9 | 3.4 | 5.9 | 21.8 | 32.7 | 99.9 | 6.5 | 11.1 | 35.7 | 38.3 |
| RSS-Net [54] | 45.3 | 10.1 | 99.5 | 7.3 | 5.6 | 15.8 | 32.1 | 99.8 | 13.7 | 10.5 | 27.4 | 37.8 |
| RAMP-CNN [13] | 420.4 | 106.4 | 99.8 | 1.7 | 2.6 | 7.2 | 27.9 | 99.9 | 3.4 | 5.1 | 13.5 | 30.5 |
| MV-Net [19] | 58.1 | 2.4 | 99.8 | 0.1 | 1.1 | 6.2 | 26.8 | 99.0 | 0.0 | 7.3 | 24.8 | 28.5 |
| TMVA-Net [19] | 102.3 | 5.6 | 99.8 | 26.0 | 8.6 | 30.7 | 41.3 | 99.9 | 41.3 | 15.9 | 47.0 | 51.0 |
| T-RODNet [44] | 44.3 | 162.0 | 99.9 | 25.4 | 9.5 | 39.4 | 43.5 | 99.9 | 40.5 | 17.4 | 56.6 | 53.6 |
| SS-RODNet [34] | 44.3 | 33.1 | 99.9 | 26.7 | 8.9 | 37.2 | 43.2 | 99.9 | 42.2 | 16.3 | 54.2 | 53.2 |
| LQCANet [55] | 27.6 | 148.3 | 99.9 | 25.3 | 11.3 | 39.5 | 44.0 | 99.9 | 40.4 | 20.5 | 56.6 | 54.4 |
| PeakConv [37] | ✗ | 6.3 | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 53.3 |
| TransRSS [20] | ✗ | ✗ | 99.6 | 24.9 | 13.6 | 33.9 | 43.0 | 99.7 | 37.4 | 15.9 | 47.0 | 51.0 |
| TC–Radar (ours) | 88.9 | 34.0 | 99.9 | 28.6 | 10.8 | 41.6 | 45.2 | 99.9 | 44.4 | 19.5 | 58.7 | 55.6 |

| DIFB | Transformer | CA | AP (%) | AR (%) |
|---|---|---|---|---|
| ✗ | ✓ | ✓ | 82.58 | 85.71 |
| ✓ | ✗ | ✓ | 81.88 | 85.87 |
| ✓ | ✓ | ✗ | 77.46 | 82.05 |
| ✓ | ✓ | WLR | 81.58 | 85.96 |
| ✓ | ✓ | WHR | 80.23 | 83.50 |
| ✓ | ✓ | ✓ | 83.99 | 88.02 |

| DIFB | Transformer | CA | mIoU (%) | mDice (%) |
|---|---|---|---|---|
| ✗ | ✓ | ✓ | 43.5 | 54.0 |
| ✓ | ✗ | ✓ | 42.7 | 52.3 |
| ✓ | ✓ | ✗ | 41.3 | 50.8 |
| ✓ | ✓ | WLR | 43.8 | 53.6 |
| ✓ | ✓ | WHR | 42.4 | 51.9 |
| ✓ | ✓ | ✓ | 45.2 | 55.6 |

| Dataset | Frames | Infer (ms) | AP (mIoU) (%) | AR (mDice) (%) |
|---|---|---|---|---|
| CRUW | 1 | 78.06 | 74.52 | 80.72 |
| CRUW | 16 | 117.12 | 83.99 | 88.02 |
| CARRADA | 1 | 34.09 | (40.6) | (49.0) |
| CARRADA | 4 | 36.06 | (45.2) | (55.6) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jia, F.; Li, C.; Bi, S.; Qian, J.; Wei, L.; Sun, G. TC–Radar: Transformer–CNN Hybrid Network for Millimeter-Wave Radar Object Detection. Remote Sens. 2024, 16, 2881. https://doi.org/10.3390/rs16162881
