Unmanned Aerial Vehicle Object Detection Based on Information-Preserving and Fine-Grained Feature Aggregation

Abstract

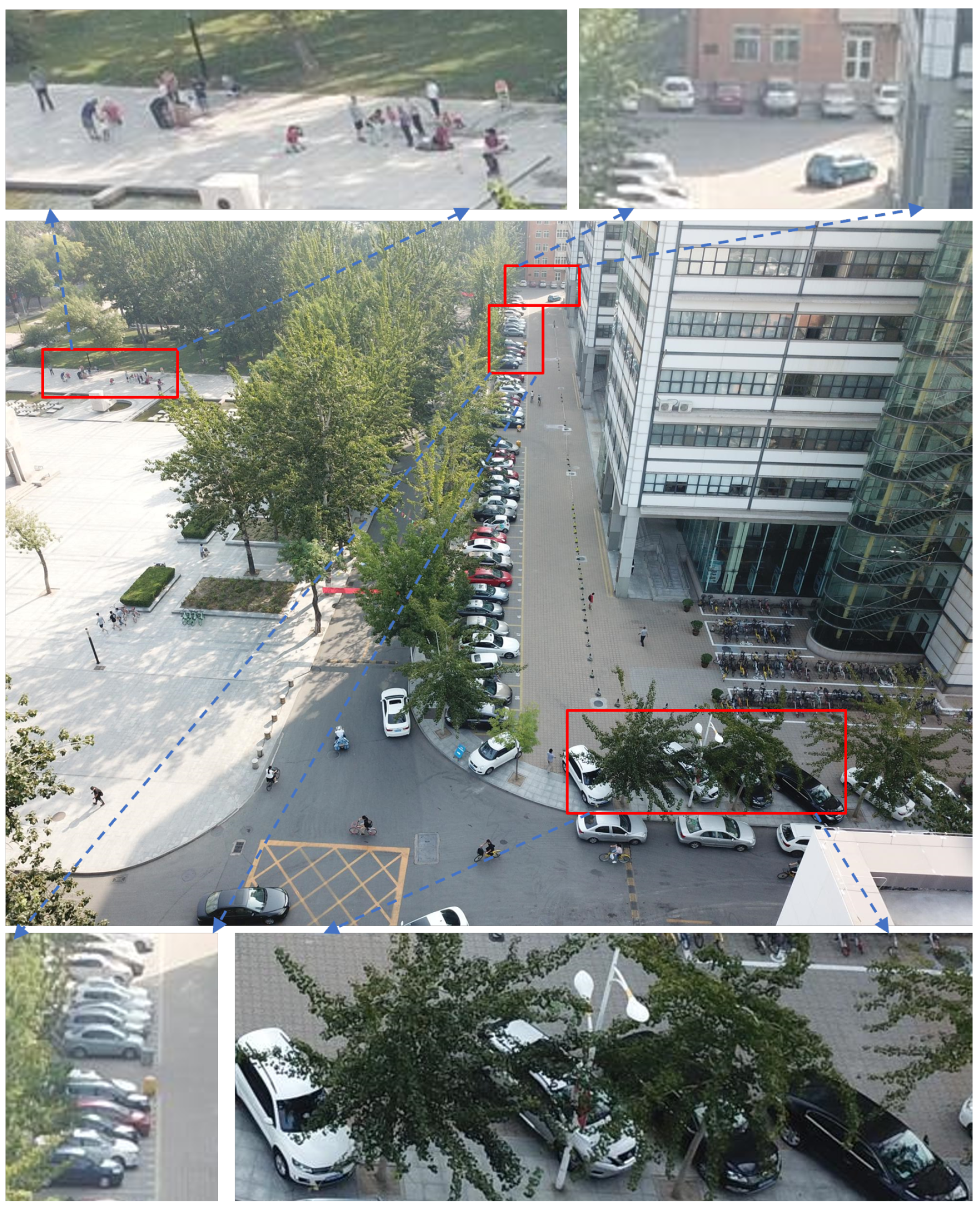

1. Introduction

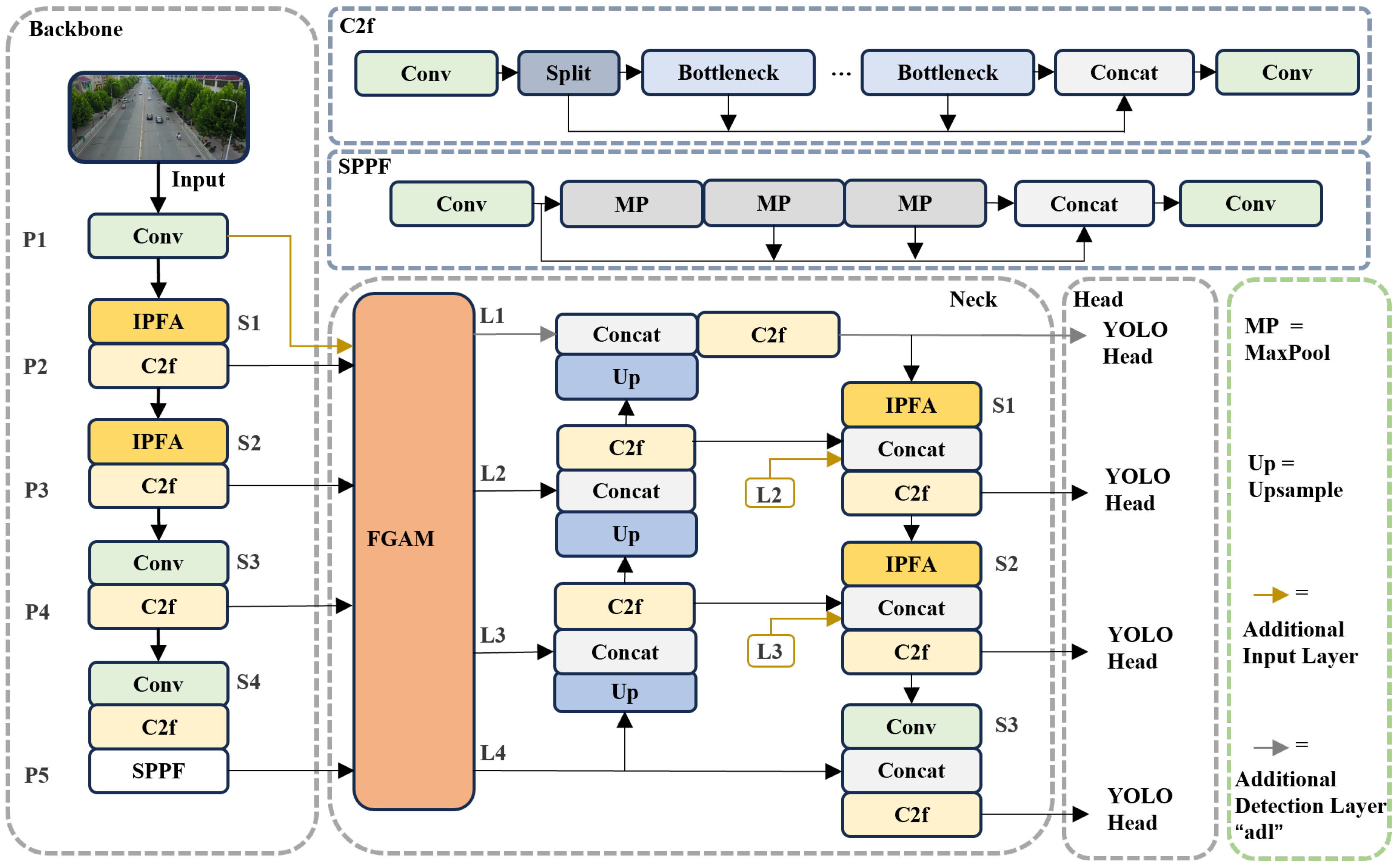

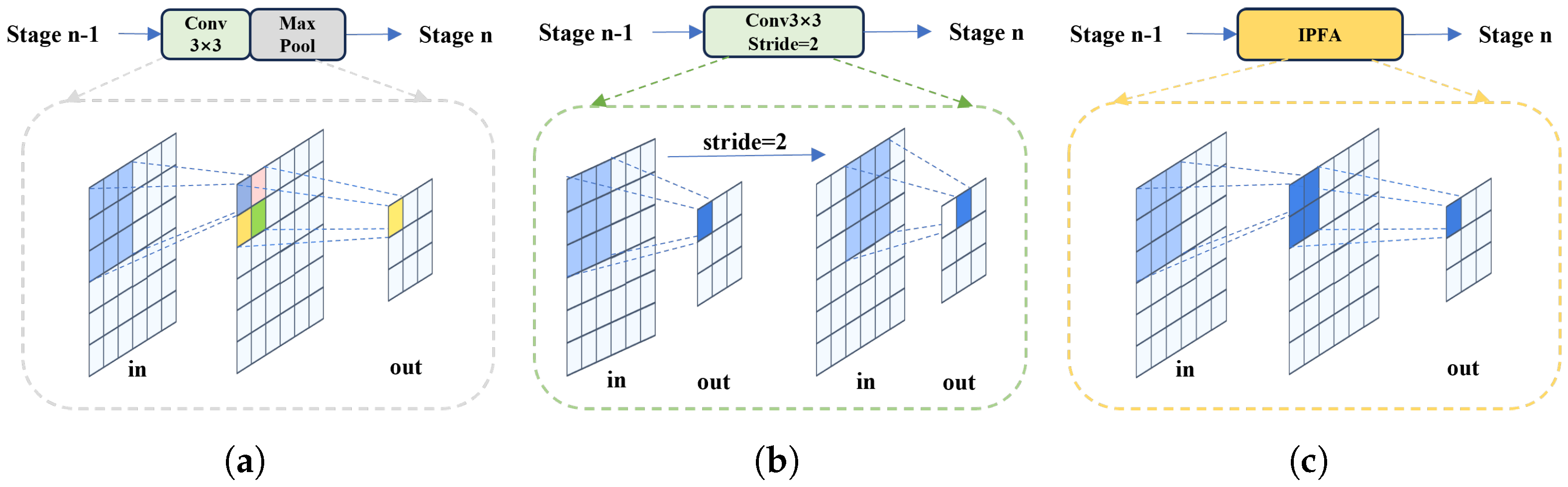

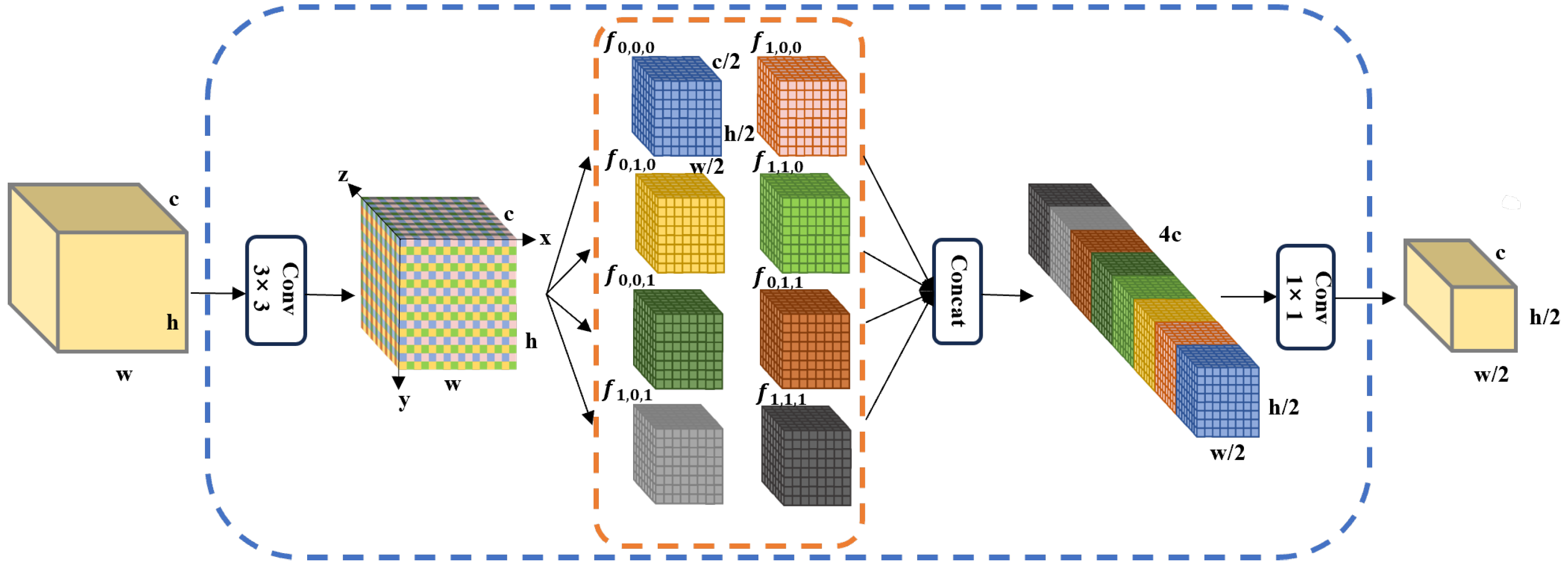

- This paper introduces an information-preserving feature aggregation (IPFA) module to address the loss of feature information in conventional aggregation methods such as strided convolution. By splitting features along multiple dimensions and reassembling them, primarily along the channel dimension, the IPFA module aggregates features while preserving the original information, thereby constructing abstract semantic representations.

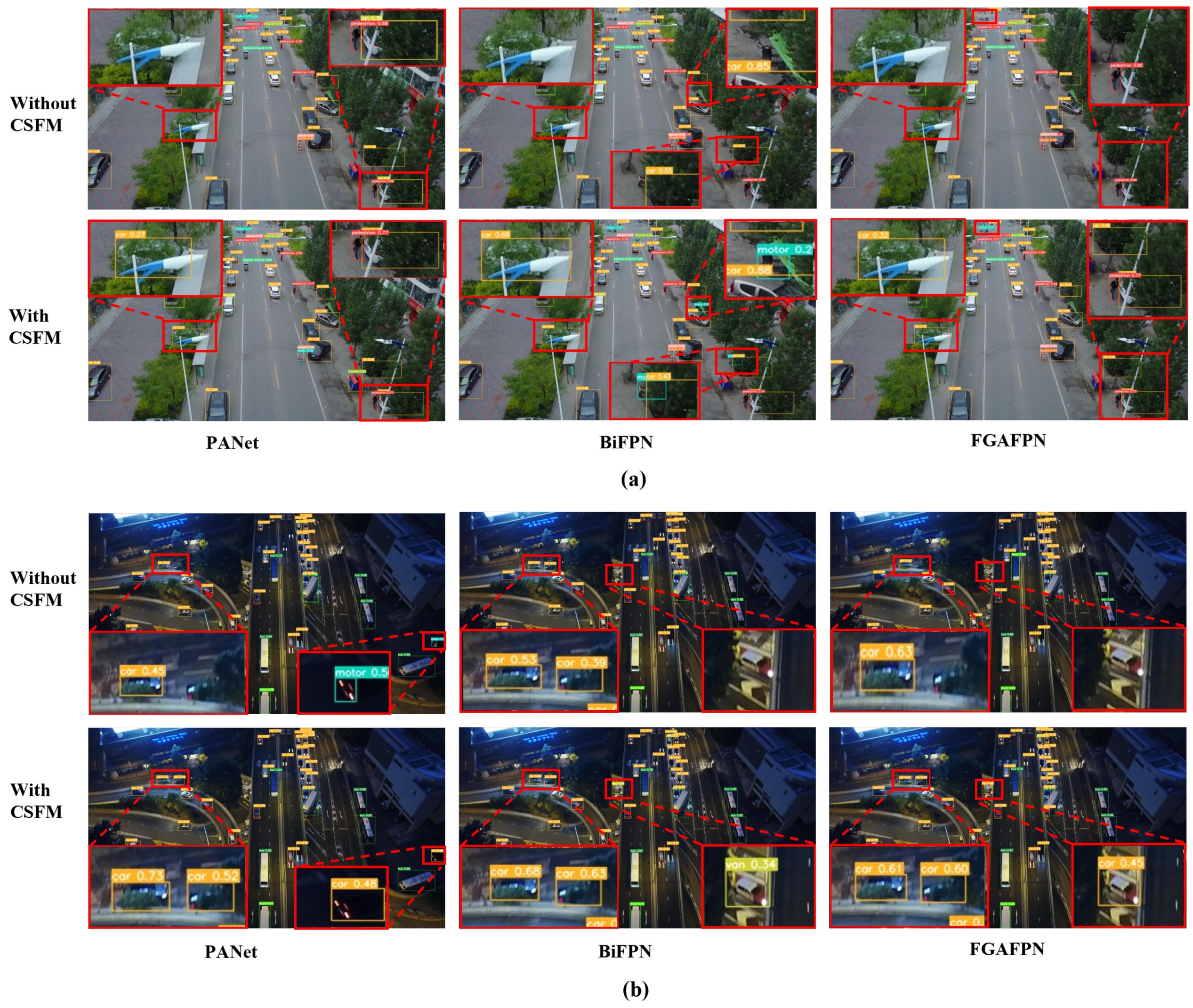

- A conflict information suppression feature fusion module (CSFM) is introduced to balance low-level spatial details and high-level semantic features during feature fusion. The CSFM combines channel and spatial attention mechanisms to filter out redundant and conflicting information, thereby improving fusion effectiveness.

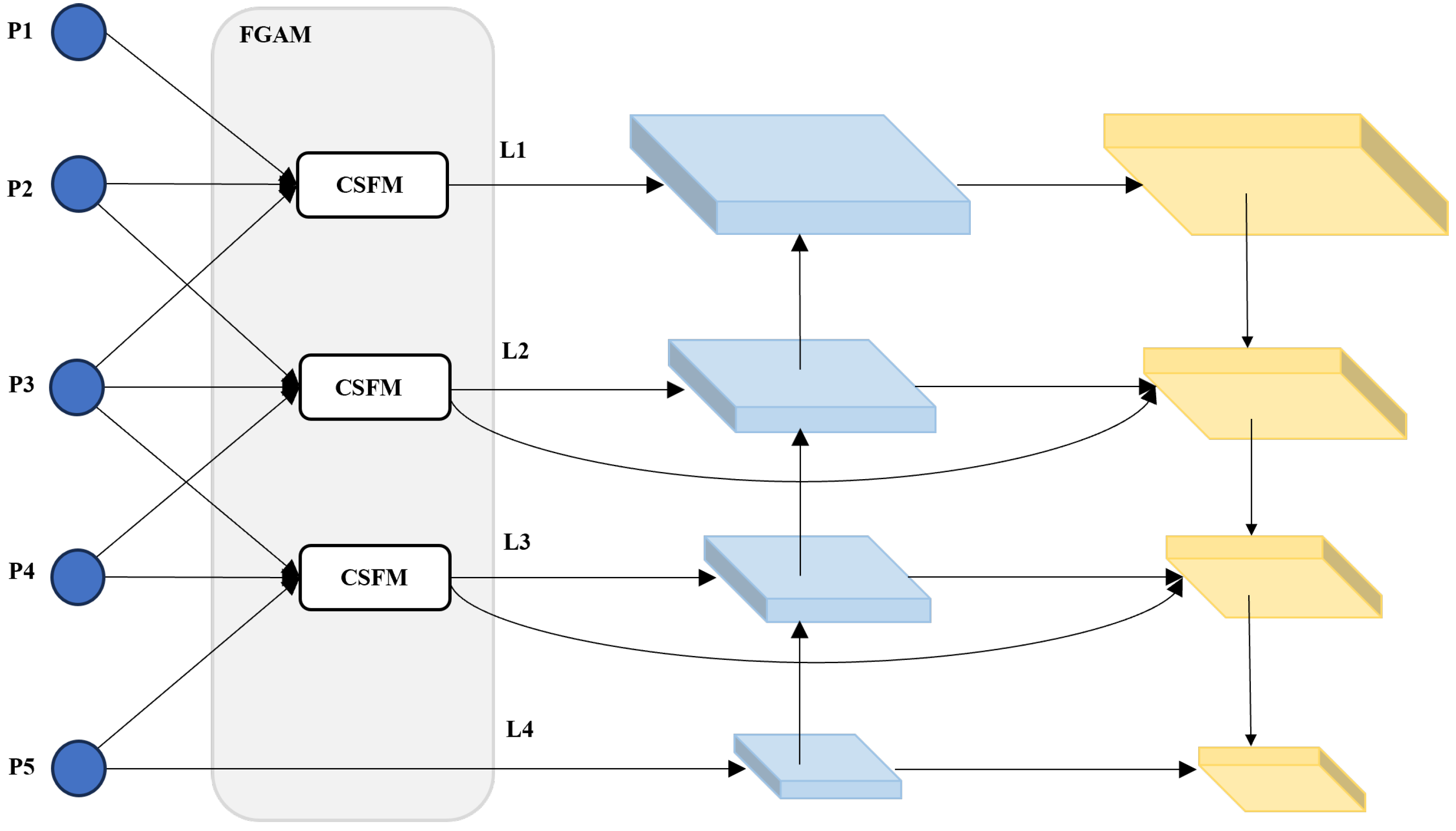

- This paper proposes a fine-grained aggregation feature pyramid network (FGAFPN) to fully exploit the advantages of deep and shallow feature maps. Through the CSFM and cross-level connections, FGAFPN balances semantic and spatial information in the output feature maps, reducing both the semantic differences between levels and the generation of conflicting information, thereby improving detection performance, especially in scenes with complex backgrounds.
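
The first contribution describes splitting a feature map and reassembling the pieces along the channel dimension instead of discarding values, as strided convolution does. The sketch below illustrates that idea with the generic space-to-depth rearrangement in NumPy. It is an assumption used for illustration, not the IPFA module itself, and the function names `space_to_depth` and `depth_to_space` are hypothetical.

```python
import numpy as np


def space_to_depth(x: np.ndarray, block: int = 2) -> np.ndarray:
    """Rearrange (C, H, W) into (C*block*block, H/block, W/block) without dropping values."""
    c, h, w = x.shape
    if h % block or w % block:
        raise ValueError("H and W must be divisible by block")
    # Split each spatial axis into (coarse position, offset inside the block).
    x = x.reshape(c, h // block, block, w // block, block)
    # Move the two intra-block offsets in front of the channel axis.
    x = x.transpose(2, 4, 0, 1, 3)
    return x.reshape(c * block * block, h // block, w // block)


def depth_to_space(y: np.ndarray, block: int = 2) -> np.ndarray:
    """Inverse of space_to_depth, showing that the rearrangement is lossless."""
    cb, h, w = y.shape
    c = cb // (block * block)
    y = y.reshape(block, block, c, h, w)
    # Restore the order (channel, coarse row, row offset, coarse col, col offset).
    y = y.transpose(2, 3, 0, 4, 1)
    return y.reshape(c, h * block, w * block)


feature = np.arange(2 * 4 * 4, dtype=float).reshape(2, 4, 4)
aggregated = space_to_depth(feature)      # shape (8, 2, 2): half resolution, 4x channels
subsampled = feature[:, ::2, ::2]         # stride-2 sampling keeps only 1 value in 4
restored = depth_to_space(aggregated)     # identical to `feature`
```

Here stride-2 sampling retains only the first two channels of `aggregated`, whereas the rearranged tensor can be inverted exactly. A subsequent convolution over the enlarged channel dimension would then see every original value.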

2. Related Work

2.1. Object Detection

2.2. UAV Image Object Detection

3. Methodology

3.1. Information-Preserving Feature Aggregation Module

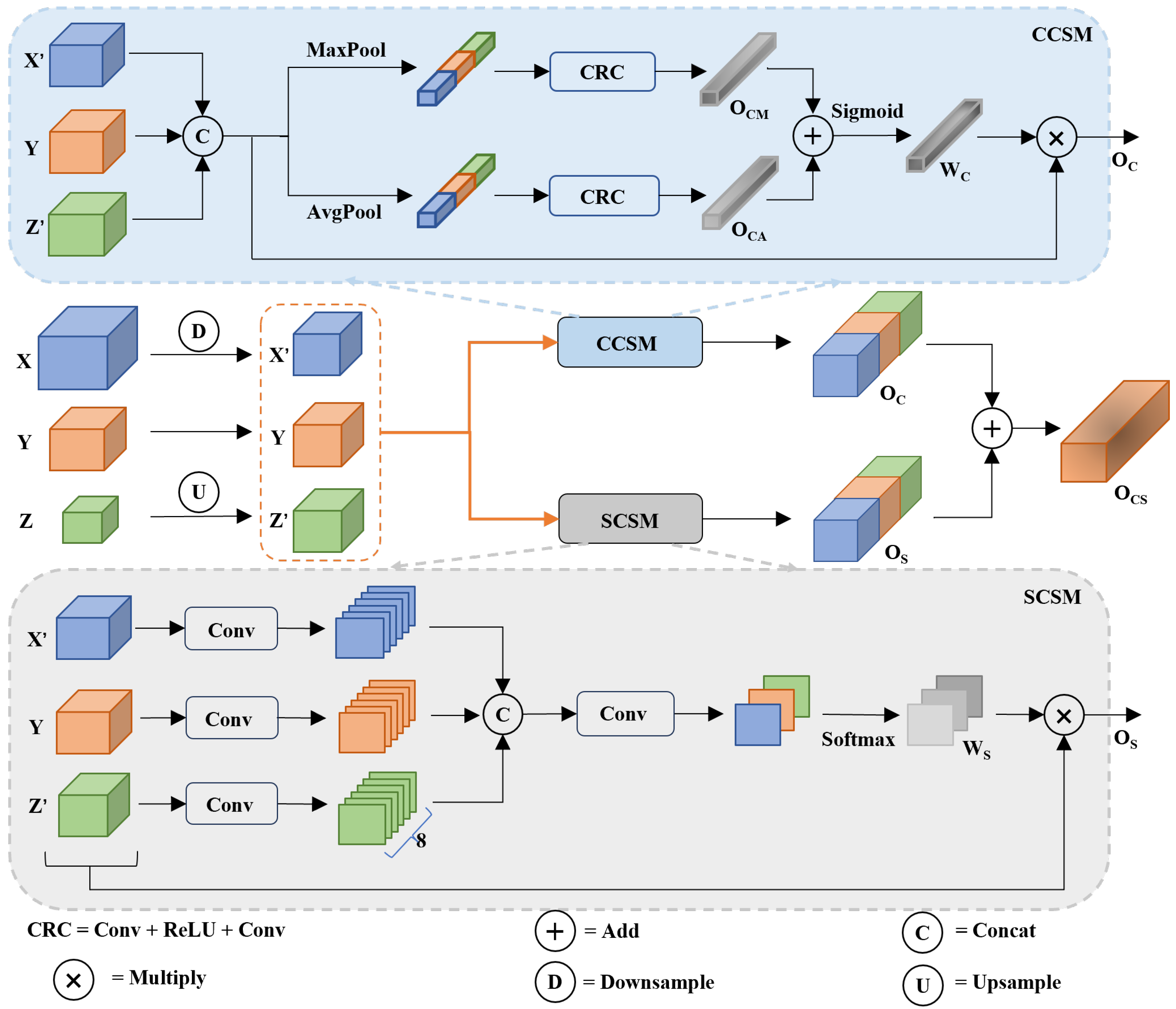

3.2. Conflict Information Suppression Feature Fusion Module
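
As a rough illustration of what combining channel and spatial attention during fusion can look like, the following NumPy sketch gates a shallow and a deep feature map before mixing them. It is not the CSFM architecture from this paper: the parameter-free gates and the names `channel_gate`, `spatial_gate`, and `gated_fusion` are assumptions made for illustration, and a trained module would use learned layers instead.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_gate(x: np.ndarray) -> np.ndarray:
    """One weight per channel, from global average pooling: (C, H, W) -> (C, 1, 1)."""
    return sigmoid(x.mean(axis=(1, 2), keepdims=True))


def spatial_gate(x: np.ndarray) -> np.ndarray:
    """One weight per location, from the channel-wise mean: (C, H, W) -> (1, H, W)."""
    return sigmoid(x.mean(axis=0, keepdims=True))


def gated_fusion(low: np.ndarray, high: np.ndarray) -> np.ndarray:
    """Blend a shallow map `low` and a deep map `high` of identical shape.

    The weight lies in (0, 1), so each output element is a convex
    combination of the two inputs at that position.
    """
    if low.shape != high.shape:
        raise ValueError("inputs must share the same (C, H, W) shape")
    summed = low + high
    weight = channel_gate(summed) * spatial_gate(summed)  # broadcasts to (C, H, W)
    return weight * low + (1.0 - weight) * high


rng = np.random.default_rng(0)
low_level = rng.normal(size=(4, 8, 8))    # stands in for a shallow, detail-rich map
high_level = rng.normal(size=(4, 8, 8))   # stands in for an upsampled deep map
fused = gated_fusion(low_level, high_level)
```

Because the weight is computed per channel and per location, the blend can favor spatial detail in some regions and semantic features in others, which is the balancing behavior the contribution list attributes to the CSFM.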

3.3. Fine-Grained Aggregation Feature Pyramid Network

4. Experiment

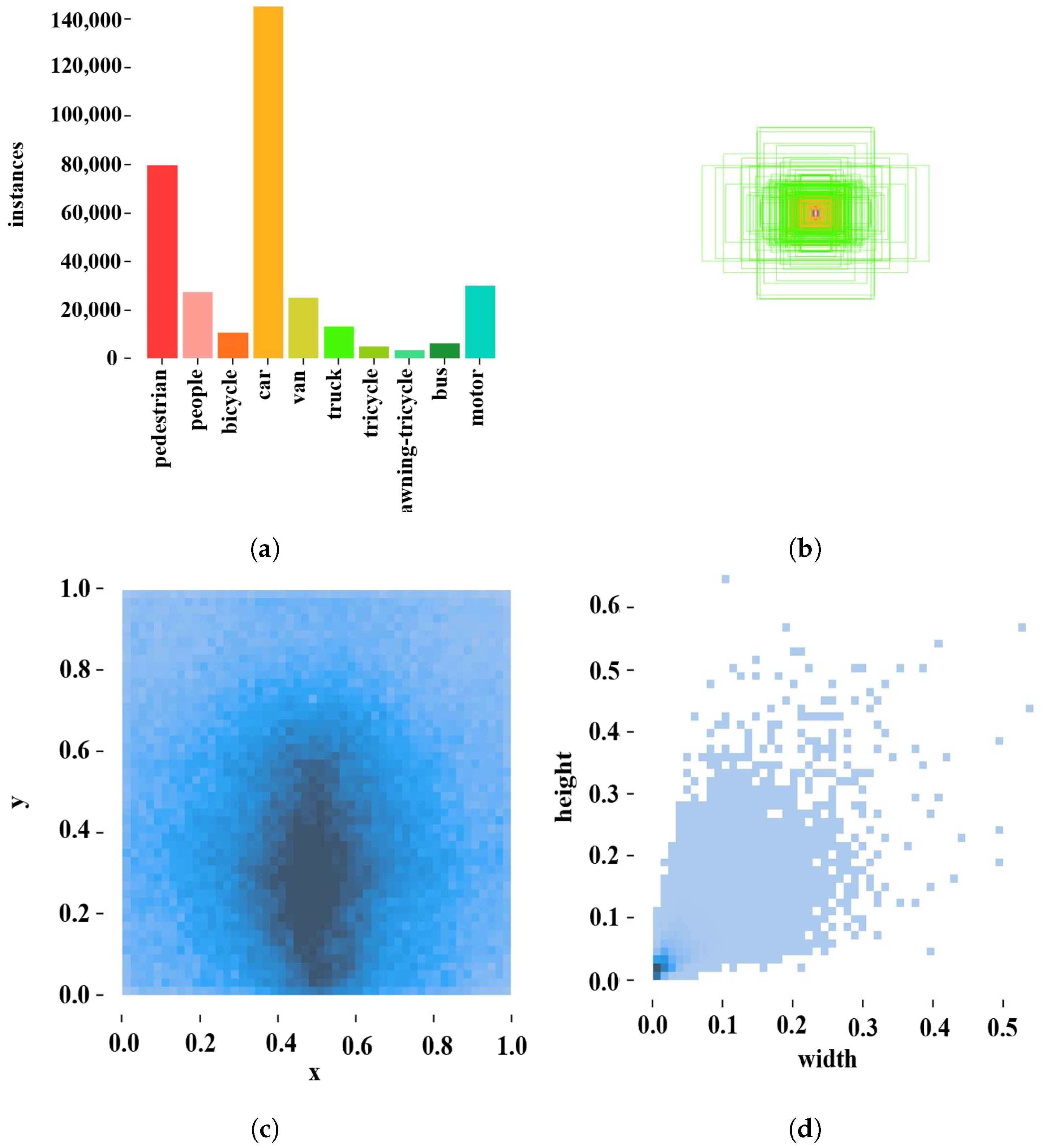

4.1. Datasets

4.2. Evaluation Metrics
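
The result tables report precision (P), recall (R), and mAP@0.5. Assuming the standard object detection definitions (the paper's own formulation is not reproduced here), with $TP$, $FP$, and $FN$ counted at an IoU threshold of 0.5 and $N$ the number of classes, these quantities are:

$$
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
AP_i = \int_0^1 P_i(R)\,\mathrm{d}R, \qquad
\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$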

4.3. Implementation Details

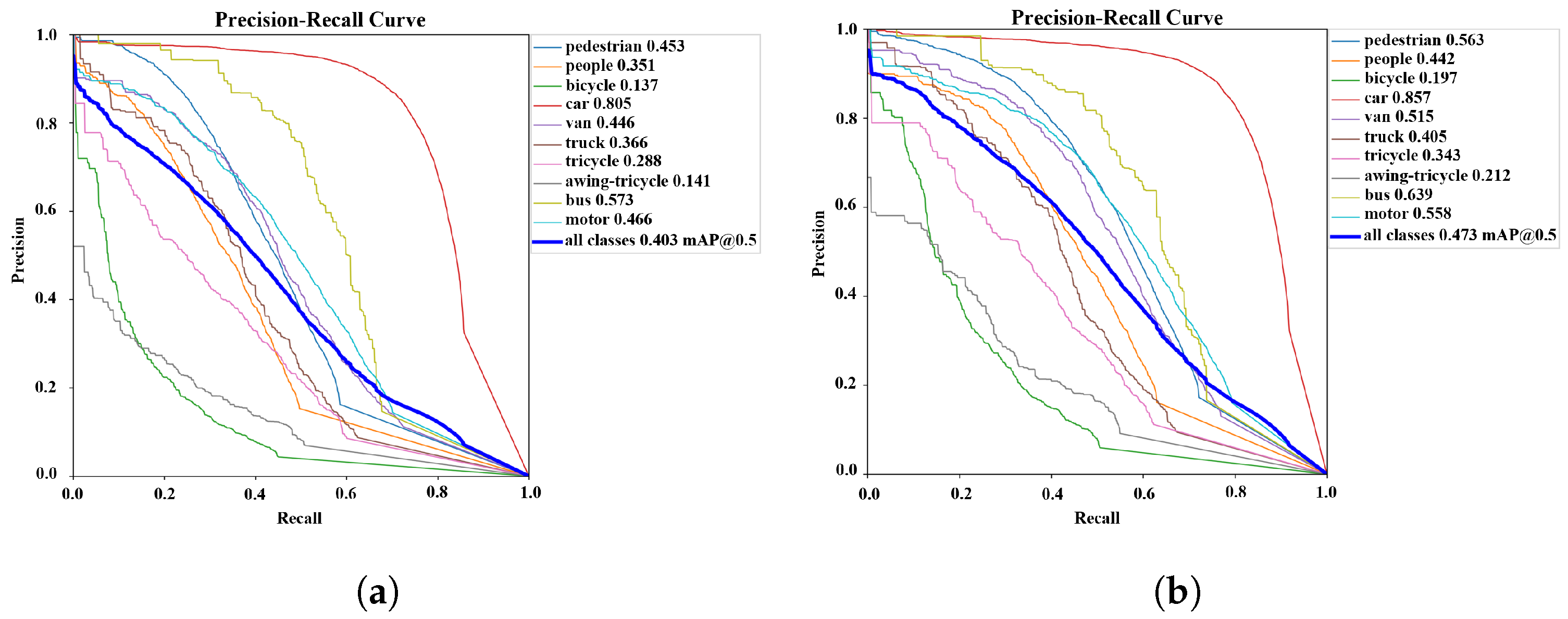

4.4. Analysis of Results

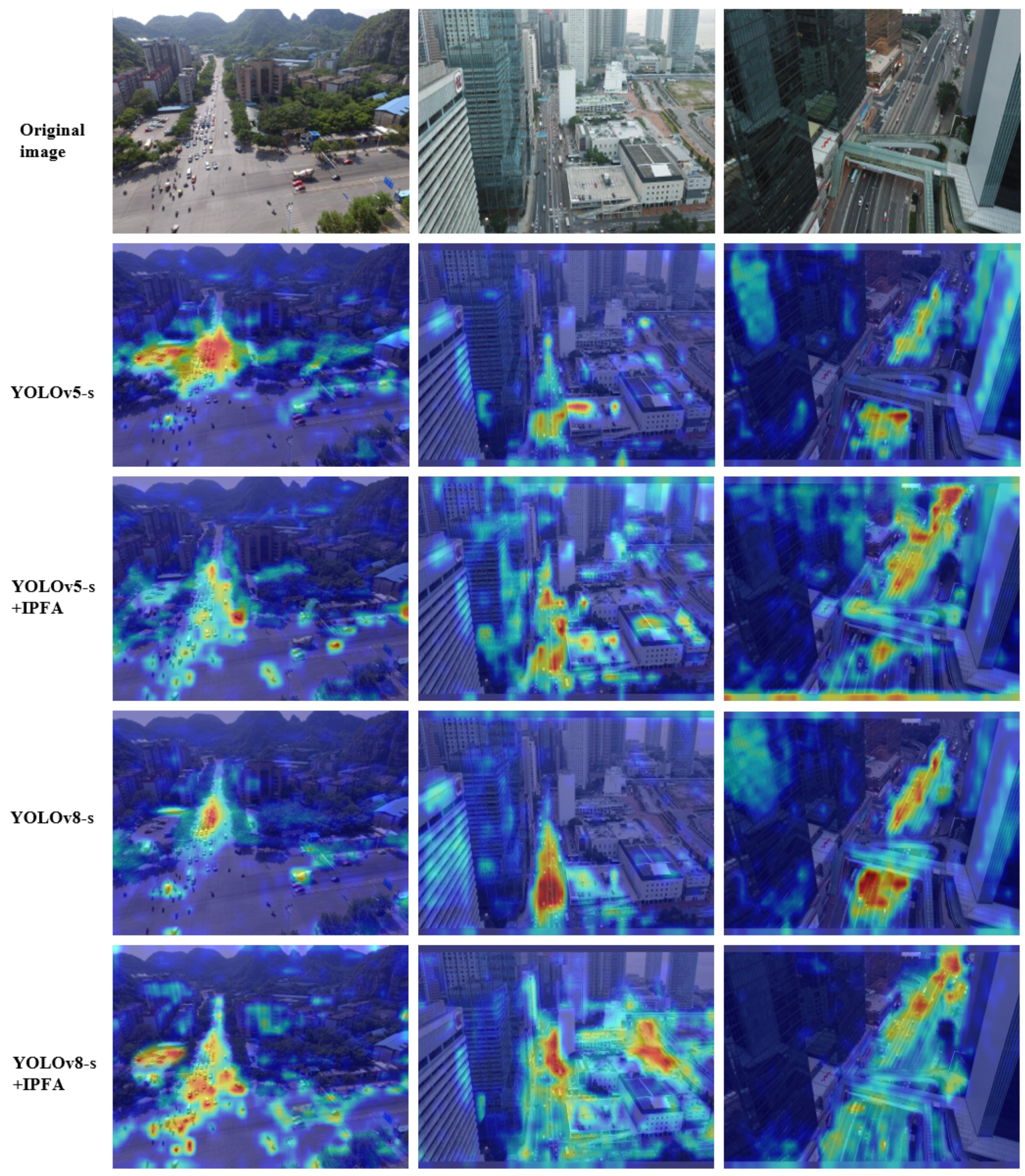

4.4.1. Effect of IPFA Module

4.4.2. Effect of CSFM

4.5. Sensitivity Analysis

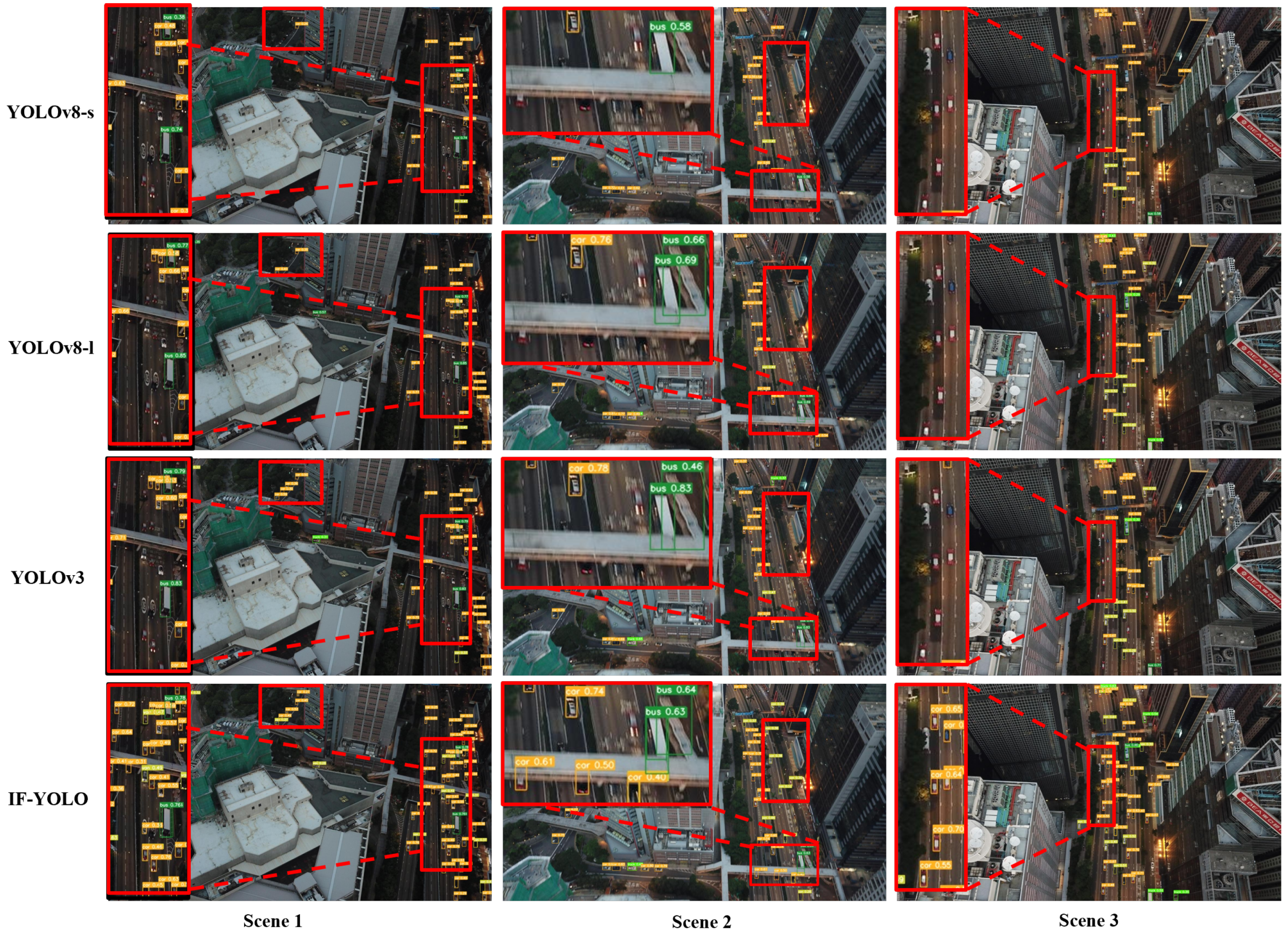

4.6. Comparison with Mainstream Models

4.7. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Audebert, N.; Le Saux, B.; Lefevre, S. Beyond RGB: Very High Resolution Urban Remote Sensing With Multimodal Deep Networks. ISPRS J. Photogramm. Remote Sens. 2018, 140, 20–32.

2. Gu, J.; Su, T.; Wang, Q.; Du, X.; Guizani, M. Multiple Moving Targets Surveillance Based on a Cooperative Network for Multi-UAV. IEEE Commun. Mag. 2018, 56, 82–89.

3. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

4. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

5. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2015, arXiv:1512.02325.

6. Lin, T.Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

7. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

8. Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. 2014. Available online: https://cocodataset.org/ (accessed on 11 July 2024).

9. Everingham, M.; Zisserman, A.; Williams, C.K.I.; Gool, L.V.; Allan, M.; Bishop, C.M.; Chapelle, O.; Dalal, N.; Deselaers, T.; Dorko, G.; et al. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. 2007. Available online: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/index.html (accessed on 11 July 2024).

10. Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. 2012. Available online: http://host.robots.ox.ac.uk/pascal/VOC/voc2012/index.html (accessed on 11 July 2024).

11. Liu, Y.; Sun, P.; Wergeles, N.; Shang, Y. A Survey and Performance Evaluation of Deep Learning Methods for Small Object Detection. Expert Syst. Appl. 2021, 172, 114602.

12. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

13. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

14. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

15. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

16. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv5: A State-of-the-Art Object Detection System. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 11 July 2024).

17. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

18. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

19. Viola, P.A.; Jones, M.J. Rapid Object Detection using a Boosted Cascade of Simple Features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001.

20. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

21. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

22. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

23. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

24. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. arXiv 2020, arXiv:2011.08036.

25. Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. arXiv 2019, arXiv:1904.11490.

26. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020.

27. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. arXiv 2018, arXiv:1803.01534.

28. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. Version 8.0.0. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 11 July 2024).

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

30. Huang, Y.; Chen, J.; Huang, D. UFPMP-Det: Toward Accurate and Efficient Object Detection on Drone Imagery. arXiv 2021, arXiv:2112.10415.

31. Lu, W.; Lan, C.; Niu, C.; Liu, W.; Lyu, L.; Shi, Q.; Wang, S. A CNN-Transformer Hybrid Model Based on CSWin Transformer for UAV Image Object Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1211–1231.

32. Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190.

33. Zhang, Y.; Wu, C.; Guo, W.; Zhang, T.; Li, W. CFANet: Efficient Detection of UAV Image Based on Cross-Layer Feature Aggregation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5608911.

34. Li, Y.; Fan, Q.; Huang, H.; Han, Z.; Gu, Q. A Modified YOLOv8 Detection Network for UAV Aerial Image Recognition. Drones 2023, 7, 304.

35. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

36. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

37. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. arXiv 2021, arXiv:2108.11539.

38. Zhang, Z. Drone-YOLO: An Efficient Neural Network Method for Target Detection in Drone Images. Drones 2023, 7, 526.

39. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

40. Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399.

| Method | Val P (%) | Val R (%) | Val mAP@0.5 (%) | Test P (%) | Test R (%) | Test mAP@0.5 (%) |
|---|---|---|---|---|---|---|
| YOLOv5-s | 50.8 | 37.1 | 38.6 | 43.8 | 33.4 | 31.7 |
| YOLOv5-s+IPFA | 53.0 (↑ 2.2) | 40.6 (↑ 3.5) | 41.9 (↑ 3.3) | 47.5 (↑ 3.7) | 36.5 (↑ 3.1) | 35.1 (↑ 3.4) |
| YOLOv8-s | 50.2 | 39.7 | 40.4 | 46.6 | 34.7 | 33.0 |
| YOLOv8-s+IPFA | 53.0 (↑ 2.8) | 40.8 (↑ 1.1) | 42.1 (↑ 1.8) | 47.9 (↑ 1.3) | 36.1 (↑ 1.4) | 34.7 (↑ 1.7) |

| Method | adl | Val P (%) | Val R (%) | Val mAP@0.5 (%) | Test P (%) | Test R (%) | Test mAP@0.5 (%) | Parameters (M) | GFLOPS |
|---|---|---|---|---|---|---|---|---|---|
| PANet | ✓ | 54.2 | 43.3 | 44.7 | 48.5 | 37.7 | 36.4 | 11.48 | 37.4 |
| PANet+CSFM | ✓ | 54.1 | 43.8 | 45.2 (↑ 0.5) | 47.7 | 38.4 | 36.7 (↑ 0.3) | 12.36 | 40.7 |
| BiFPN | ✓ | 57.2 | 45.2 | 47.6 | 49.4 | 39.2 | 38.2 | 8.96 | 54.9 |
| BiFPN+CSFM | ✓ | 56.8 | 46.5 | 48.3 (↑ 0.7) | 49.2 | 39.7 | 38.5 (↑ 0.3) | 9.12 | 58.6 |
| FGAFPN without CSFM | - | 54.5 | 43.1 | 44.8 | 47.5 | 37.7 | 36.1 | 11.56 | 37.1 |
| FGAFPN | - | 54.2 | 44.0 | 45.8 (↑ 1.0) | 47.8 | 38.1 | 36.4 (↑ 0.3) | 12.44 | 41.1 |

| Stages marked ✓ (Backbone S1–S4 / Neck S1–S3) | Val P (%) | Val R (%) | Val mAP@0.5 (%) | Test P (%) | Test R (%) | Test mAP@0.5 (%) | Parameters (M) | GFLOPS | Time (ms) |
|---|---|---|---|---|---|---|---|---|---|
| ✓ ✓ | 55.4 | 44.4 | 46.1 | 49.2 | 38.8 | 37.6 | 11.7 | 43.6 | 5.1 |
| ✓ ✓ | 55.5 | 44.3 | 46.2 | 49.0 | 39.1 | 37.7 | 11.75 | 43.6 | 5.1 |
| ✓ ✓ | 55.3 | 43.3 | 45.6 | 48.9 | 37.8 | 37.0 | 11.95 | 43.6 | 5.1 |
| ✓ ✓ | 55.8 | 42.9 | 45.5 | 48.0 | 38.7 | 37.3 | 12.0 | 43.6 | 5.1 |
| ✓ ✓ ✓ | 55.2 | 44.8 | 46.4 | 49.6 | 38.9 | 37.8 | 11.77 | 43.8 | 5.1 |
| ✓ ✓ ✓ | 53.9 | 45.3 | 46.4 | 50.7 | 38.9 | 38.4 | 11.77 | 47.2 | 5.3 |
| ✓ ✓ ✓ | 55.2 | 44.7 | 46.4 | 49.1 | 38.7 | 37.8 | 12.01 | 47.2 | 5.2 |
| ✓ ✓ ✓ ✓ | 56.5 | 45.3 | 47.3 | 50.2 | 39.1 | 38.6 | 11.83 | 47.5 | 5.2 |
| ✓ ✓ ✓ ✓ | 56.3 | 44.0 | 46.0 | 49.4 | 38.9 | 37.6 | 12.08 | 47.5 | 5.3 |
| ✓ ✓ ✓ ✓ | 56.2 | 45.1 | 47.3 | 50.6 | 39.2 | 39.0 | 12.03 | 50.9 | 5.5 |

| Method | Val P (%) | Val R (%) | Val mAP@0.5 (%) | Test P (%) | Test R (%) | Test mAP@0.5 (%) | Parameters (M) | GFLOPS |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | - | - | 19.6 | - | - | - | 41.2 | 118.8 |
| Cascade R-CNN | - | - | 18.9 | - | - | - | 69.0 | 146.6 |
| Li et al. [34] | - | - | 42.2 | - | - | - | 9.66 | - |
| Drone-YOLO-s | - | - | 44.3 | - | - | 35.6 | 10.9 | - |
| TPH-YOLOv5 | - | - | 46.4 | - | - | - | 60.43 | 145.7 |
| UAV-YOLOv8 | 54.4 | 45.6 | 47.0 | - | - | - | 10.3 | - |
| YOLOv3 | 57.8 | 43.3 | 45.7 | 50.1 | 38.8 | 37.3 | 103.67 | 282.5 |
| YOLOv5-s | 50.8 | 37.1 | 38.6 | 43.8 | 33.4 | 31.7 | 9.12 | 24.1 |
| YOLOv5-m | 53.1 | 41.5 | 42.6 | 47.4 | 36.0 | 34.7 | 25.05 | 64.0 |
| YOLOv5-l | 55.7 | 43.2 | 44.7 | 50.0 | 38.0 | 36.9 | 53.14 | 134.9 |
| YOLOv5-x | 56.6 | 44.8 | 46.3 | 50.8 | 38.9 | 38.4 | 97.16 | 246.0 |
| YOLOv8-s | 50.2 | 39.7 | 40.4 | 46.6 | 34.7 | 33.0 | 11.14 | 28.7 |
| YOLOv8-m | 54.0 | 42.7 | 43.6 | 49.9 | 36.6 | 36.0 | 25.86 | 79.1 |
| YOLOv8-l | 57.1 | 43.4 | 45.4 | 49.8 | 38.2 | 36.9 | 43.61 | 165.2 |
| YOLOv8-x | 58.1 | 45.0 | 46.6 | 50.4 | 38.8 | 38.2 | 68.13 | 257.4 |
| IF-YOLO | 56.5 | 45.3 | 47.3 | 50.2 | 39.1 | 38.6 | 11.83 | 47.5 |

| Method | P (%) | R (%) | mAP@0.5 (%) | Parameters (M) | GFLOPS |
|---|---|---|---|---|---|
| YOLOv8-s | 50.2 | 39.7 | 40.4 | 11.14 | 28.7 |
| YOLOv8-s+adl | 54.2 (↑ 4.0) | 43.3 (↑ 3.6) | 44.7 (↑ 4.3) | 11.48 | 37.4 |
| YOLOv8-s+FGAFPN | 54.2 (↑ 4.0) | 44.0 (↑ 4.3) | 45.8 (↑ 5.4) | 12.44 | 41.1 |
| YOLOv8-s+adl+IPFA | 56.5 (↑ 6.3) | 44.5 (↑ 4.8) | 46.6 (↑ 6.2) | 10.87 | 43.1 |
| YOLOv8-s+FGAFPN+IPFA | 56.5 (↑ 6.3) | 45.3 (↑ 5.6) | 47.3 (↑ 6.9) | 11.83 | 47.5 |
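
To make the accuracy and cost trade-off in the ablation table easier to read, the short script below recomputes the change of the full configuration against the YOLOv8-s baseline, both in absolute terms and as a percentage. The numbers are copied from the first and last rows of the table above; the helper name `deltas` and the dictionary keys are hypothetical.

```python
# Values copied from the ablation table: mAP@0.5 in %, parameters in M, GFLOPS.
baseline = {"map50": 40.4, "params_m": 11.14, "gflops": 28.7}   # YOLOv8-s
full = {"map50": 47.3, "params_m": 11.83, "gflops": 47.5}       # YOLOv8-s+FGAFPN+IPFA


def deltas(base: dict, variant: dict) -> dict:
    """Return (absolute change, relative change in %) for each quantity."""
    out = {}
    for key in base:
        diff = variant[key] - base[key]
        out[key] = (round(diff, 2), round(100.0 * diff / base[key], 1))
    return out


changes = deltas(baseline, full)
for key, (absolute, relative) in changes.items():
    print(f"{key}: {absolute:+.2f} ({relative:+.1f}%)")
```

On these figures, the 6.9-point gain in mAP@0.5 comes with 0.69 M additional parameters (about 6.2%) and 18.8 additional GFLOPS (about 65.5%), so the added cost is mainly computational.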

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Zhang, Y.; Shi, Z.; Zhang, Y.; Gao, R. Unmanned Aerial Vehicle Object Detection Based on Information-Preserving and Fine-Grained Feature Aggregation. Remote Sens. 2024, 16, 2590. https://doi.org/10.3390/rs16142590
