Abstract

Geological mapping involves the identification of elements such as rocks, soils, and surface water, which is a fundamental task in Geological Environment Remote Sensing (GERS) interpretation. High-precision intelligent interpretation technology can not only reduce labor requirements and significantly improve the efficiency of geological mapping but also assist geological disaster prevention assessment and resource exploration. However, the high interclass similarity, high intraclass variability, gradational boundaries, and complex distributional characteristics of GERS elements, coupled with the difficulty of manual labeling and the interference of imaging noise, all limit the accuracy of deep learning (DL)-based methods in wide-area GERS interpretation. We propose a Transformer-based multi-stage and multi-scale fusion network, RSWFormer (Rock–Soil–Water Network with Transformer), for geological mapping of spatially large areas. RSWFormer first uses a Multi-stage Geosemantic Hierarchical Sampling (MGHS) module to extract geological information and high-dimensional features at different scales from local to global, and then uses a Multi-scale Geological Context Enhancement (MGCE) module to fuse geological semantic information at different scales to enhance the understanding of contextual semantics. The cascade of the two modules is designed to enhance interpretation performance for GERS elements in geologically complex areas. The high mountainous and hilly areas of western China were selected as the research area. A multi-source geological remote sensing dataset containing diverse GERS feature categories and complex lithological characteristics, Multi-GL9, is constructed to fill the significant gaps in the datasets required for extensive GERS interpretation. Using overall accuracy as the evaluation index, RSWFormer achieves 92.15% and 80.23% on the Gaofen-2 and Landsat-8 datasets, respectively, surpassing existing methods. Experiments show that RSWFormer has excellent performance and wide applicability in geological mapping tasks.

1. Introduction

The geological environment is a complex environmental system mainly composed of the lithosphere, hydrosphere, and atmosphere on the earth’s surface, including rocks, soil, groundwater, and other geological bodies. In recent years, environmental problems such as geological disasters, mine pollution, and soil erosion have occurred frequently. Therefore, it is particularly important to carry out geological environment research and related work.

Research on the geological environment covers many aspects, such as geological survey mapping, geological structure analysis [1], mineral resource exploration [2], environmental management [3], and geological disaster prevention [4] (for example, landslides and debris flows). Such research effectively reduces the losses caused by natural disasters and is of great importance for promoting the sustainable development of humankind.

However, in areas with harsh environments, such as high-altitude snowy mountainous areas and arid uninhabited areas, traditional manual geological surveys face many challenges. Geological surveys in these areas are difficult, have limited coverage, and usually require a large investment of time and human resources. In addition, geological mapping errors in complex areas may lead to errors in resource assessment, which in turn may cause serious consequences in disaster management. For example, in soil erosion control and mine pollution control, mapping errors may lead to inefficient environmental restoration and increased restoration costs. In the field of mineral resource exploration, the accuracy of geological mapping is directly related to resource assessment and the formulation of mining strategies. If the error is large, it may waste resources, increase the cost of exploration and mining, and even cause development opportunities to be missed. Therefore, it is particularly important to adopt advanced techniques to carry out regional geological surveys.

With the rapid advancement of satellite sensor technology (for example, the Gaofen, Sentinel, and Landsat series), we can efficiently and accurately obtain high-precision images of the earth’s surface, which are then used to monitor, analyze, and interpret the geological environment, a process called Geological Environment Remote Sensing [5] (GERS). However, the complexity of the spatial distribution characteristics of GERS elements in remote sensing images still limits the possibility of high-precision interpretation. Specifically,

- High interclass similarity and intraclass variability in GERS elements. For example, diorite and granite often show similar texture and tone characteristics in remote sensing images, while arenaceous rock shows diverse spatial distribution patterns after experiencing long geological processes and changes.

- The boundaries of GERS elements such as rocks and soil are gradational. Interactions between different geological features make it difficult to accurately delineate boundaries.

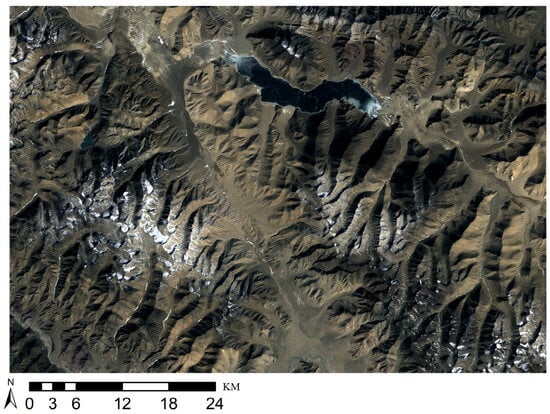

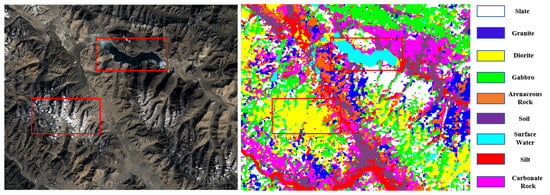

- Distribution complexity and noise interference. As shown in Figure 1, the spatial distribution of different GERS elements is complex and highly non-uniform. In addition, disturbances in atmospheric conditions such as clouds and fog also significantly reduce the accuracy and reliability of remote sensing image data.

Figure 1. Landsat-8 GERS image.


These factors [5,6,7] increase the difficulty of GERS interpretation.

In view of the complex characteristics of GERS elements and the intricacies of terrain, it is particularly important to develop effective GERS interpretation methods. GERS interpretation technology uses data from various remote sensing platforms, together with image processing and information extraction techniques, to build an accurate GERS interpretation model that interprets and analyzes the ground surface and geological information. It can carry out geological interpretation of spatially large areas at a low cost for various GERS elements such as rocks, soils, and surface water. To improve the extraction efficiency of GERS element features, a variety of machine learning (ML) algorithms (for example, SVM [8], KNN [9], and PCA [10]) are currently mainstream. For example, Fels et al. [11] compared the performance of six ML algorithms using ASTER spectral data, and Cracknell et al. [12] explored a variety of ML classifiers in supervised lithology classification tasks and pointed out that random forest (RF) [13] is easy to use and has stable performance. Zuo et al. [14] proposed a method to combine ML algorithms with important features of sample data to build a high-precision classification model for lunar geological unit identification. Rezaei et al. [15] used band ratio, SAM [16], and SVM methods to classify the main lithological units in the Sanggan area with a classification accuracy of nearly 79%.

However, ML algorithms have limitations in processing high-precision and spatially large area remote sensing data, resulting in limited interpretation accuracy. Deep learning (DL) has quickly become a leading technology in GERS interpretation [17] due to its ability to combine basic features into high-level features. DL outperforms traditional ML methods in tasks such as object detection [18], scene classification [19,20], and semantic segmentation [6,7]. Wang et al. [21] proposed a framework consisting of multi-source data fusion technology (enhanced feature learning) and FCN [22], which improved the classification accuracy of geological mapping and showed superior performance to traditional ML algorithms. Brandmeier et al. [23] combined U-Net [24] with ArcGIS for lithological mapping in the Mount Isa region (Australia) and found that the DL model outperformed RF and SVM in identifying rare lithology categories. Hajaj et al. [25] evaluated the importance of ML and DL techniques in lithological mapping and emphasized the effectiveness of using high-resolution hyperspectral data combined with advanced DL models to achieve accurate mapping results.

Despite the impressive performance of DL methods, they suffer from complex model structures, large numbers of parameters, and cumbersome calculations. In addition, DL faces difficulties in sample labeling and training: creating a GERS dataset for training ML and DL models requires professional knowledge as well as time and money. Currently, publicly available GERS datasets are relatively scarce, high-resolution GERS datasets in particular are almost nonexistent, and the existing remote sensing scene datasets have problems such as inconsistent spatial resolution and low diversity. To effectively realize intelligent interpretation of GERS elements in spatially large areas, this study constructed a multi-source GERS dataset called Multi-GL9. This dataset is mainly collected by the Landsat-8 and Gaofen-2 remote sensing satellites and covers nine major GERS elements with multiple categories, complex lithology, and high resolution.

GERS interpretation faces challenges such as the diversity of element changes and the complexity of their distribution. Considering that an attention mechanism can effectively capture the global dependencies of GERS images, we propose a Transformer-based regional GERS interpretation model called RSWFormer. RSWFormer captures the semantic information of remote sensing images from local to global through the Local To Global (LTG) block to obtain beneficial contextual information. In addition, a multi-stage downsampling method is used to capture high-level semantic features in remote sensing images, and a multi-scale fusion module integrates the low- and high-level features, which realizes the sharing of valuable semantic information. This design enhances the model’s understanding of the details and context of remote sensing images, thereby improving its ability to interpret complex GERS elements. We conduct extensive comparative experiments on the Multi-GL9 dataset, demonstrating that our model outperforms existing methods. We make the Multi-GL9 dataset publicly available for the first time at: https://github.com/WanZhan-lucky/Geological-remote-sensing-data-set (accessed on 24 June 2024). The contributions of this article include the following points:

- To the best of our knowledge, this study is the first to propose a meter-level high-resolution multi-source GERS dataset (Multi-GL9), which is used in scene classification tasks to address the lack of datasets of complex regional GERS elements, such as rocks, soils, and surface water. It provides rich regional and large-scale information on GERS elements and can further be used for the interpretation of complex geological environments.

- In order to meet the needs of regional GERS interpretation, a novel RSWFormer is proposed to capture local to global semantic information, extract high-dimensional feature information of GERS elements, and effectively fuse semantic features of different scales.

- Experiments were conducted on the Multi-GL9 dataset. Compared with existing scene classification models, RSWFormer achieved an overall accuracy improvement of 0.61% and 1.7% on the Gaofen-2 and Landsat-8 datasets, respectively.

2. Related Work

2.1. Datasets in the Field of GERS Interpretation

In the past decade, Earth observation technology has developed rapidly. Advanced remote sensing platforms, such as Landsat, Sentinel, SPOT, WorldView, Gaofen, and ZY-3, have not only provided high-definition surface structural data but have also enhanced the visual representation and spectral characteristics of GERS elements through significant improvements in spatial, temporal, and spectral resolution. They play an important role in GERS interpretation [26].

At present, there are few public remote sensing scene datasets that cover spatially large areas, such as NWPU-RESISC45 [27], which covers 45 scene classes, and AID, both of which are built from Google Earth imagery. However, there are relatively few datasets available for specialized interpretation of GERS elements. In recent years, the number of publications related to the GERS field has surged from 75 to 2510 [5], accompanied by the proposal of geologic remote sensing datasets. For example, Zhang et al. [28] used rock image datasets (granite, phyllite, and breccia) to establish a transfer learning model for lithology identification and classification. Kumar et al. [29] proposed a multi-sensor (ASTER, PALSAR, and Sentinel-1) dataset to optimize lithology classification. Li et al. [30] collected four basic single-type rock datasets of sandstone, shale, monzogranite, and tuff, and used data augmentation to generate a multi-type mixed rock lithology dataset for training and evaluation. Shi et al. [31] constructed a laboratory dataset of 160 kinds of lithology and a field dataset of 13 kinds of lithology for building and evaluating detection models.

However, the above GERS datasets are generally confined to limited lithology categories and lack diversity, and most neither contain high-resolution remote sensing images nor are publicly available. Therefore, we propose and publish for the first time a dataset called Multi-GL9, which combines multi-source data from Landsat-8 (15 m spatial resolution) and Gaofen-2 (1 m spatial resolution), covering nine categories of GERS elements.

Currently, the trend in GERS interpretation focuses on multi-source and multi-modal data fusion, small training samples, and expert knowledge integrated with DL models. Multi-modal, high-resolution datasets, which can better capture the complexity and diversity of GERS elements, are becoming a research hotspot. However, publicly available high-quality datasets are still scarce, especially those covering more lithology categories and subtle features. This study aims to fill this gap by constructing and publishing Multi-GL9, a high-resolution, multi-category GERS element dataset that supports precise research in GERS interpretation. It not only helps to improve the accuracy of current geological element interpretation but also provides a solid data foundation for future research.

2.2. Image Classification Based on Deep Learning (CNNs and Transformer)

DL methods such as CNNs and Transformers have demonstrated powerful capabilities in rapidly processing remote sensing big data and have been widely used in tasks [32,33] such as object detection [34] and image classification [35].

With the rapid development of DL technologies such as ResNet [36] and self-attention mechanisms [37], DL and remote sensing image processing can be combined to automatically perform GERS element classification tasks. For example, Latifovic et al. [38] used a CNN and combined aerial and satellite remote sensing data to predict geological categories and complete geological mapping. Sang et al. [39] used a CNN to identify image content, confirm lithology distribution, and produce high-resolution geological maps. Shirmard et al. [40] proposed a framework that combines CNNs and traditional ML methods to achieve high-precision lithology mapping using a variety of remote sensing data. Subsequently, researchers optimized CNN architectures, as in VGG [41], GoogLeNet [42], and DenseNet [43], to extract image features effectively through deeper convolution layers, which also improved the ability to identify GERS element features [44]. This technology has been widely used in geological mapping [45]. For example, Fu et al. [46] proposed ResNeSt-50 based on ResNet, combined with a channel-level attention mechanism and multi-path network, which significantly improved the accuracy of lithology classification. Huang et al. [47] proposed a rock image classification model based on EfficientNet and a triple attention mechanism. Through transfer learning and an optimized network structure, it achieved a test set accuracy of up to 93.2%, showing strong robustness and generalization capabilities. Although CNNs have performed well in the field of image classification, they still struggle to capture the global information of GERS images in GERS interpretation. It is necessary to combine them with methods such as attention mechanisms and optimized network structures to make up for this shortcoming.

Because the Transformer is not limited by the local receptive field, it allows flexible attention to various areas of the image, surpassing traditional CNNs, and has achieved some progress in the field of GERS. For example, Dong et al. [48] proposed the ViT-DGCN model, which enhances the feature extraction performance of remote sensing images by combining ViT [49]. The FaciesViT model proposed by Koeshidayatullah et al. [50] has shown significant performance improvement and repeatability in image-based lithology classification tasks. Jacinto et al. [51] proposed solving the rock stratigraphy prediction problem using a Transformer-based algorithm. The CNN-Transformer model proposed by Cheng et al. [52] can effectively capture local and global mineral characteristics and performs better than traditional CNN models. However, the above models have problems in GERS interpretation such as complex training, weak generalization ability, and high data requirements.

ViT often has difficulty in capturing local features when processing GERS images. In contrast, we consider the hierarchical feature extraction of the Swin Transformer [53], which has high computational efficiency and better fusion of local and global features. However, some cross-scale information in Swin is not effectively integrated, which may lead to the loss of specific details or features when processing complex GERS images. Based on this, we propose RSWFormer, which can effectively capture key features in GERS images by fusing multi-scale features and sharing high-dimensional and low-dimensional semantic information.

3. Methodology

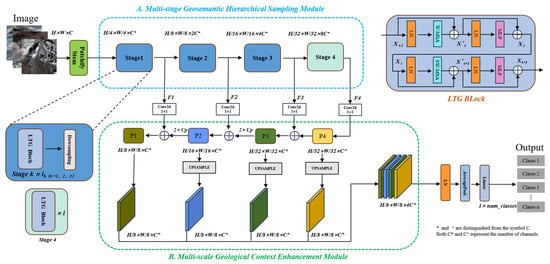

This section introduces the RSWFormer model designed specifically for GERS element interpretation in detail. RSWFormer consists of two main modules: the Multi-stage Geosemantic Hierarchical Sampling (MGHS) module and the Multi-scale Geological Context Enhancement (MGCE) module. The cascade of these two modules enables the RSWFormer to provide richer and more detailed geological information interpretation when handling GERS element interpretation tasks.

First, let us define the task. In GERS image classification, we are given a dataset consisting of N images, $\mathrm{Data} = \{x_1, x_2, \ldots, x_N\}$, where each input image is $x_i \in \mathbb{R}^{H \times W \times 3}$. The model is trained on a training set and its effectiveness is evaluated on a validation set, with the aim of classifying each $x_i$ into one of t categories $C = \{c_1, c_2, c_3, \ldots, c_t\}$ and making predictions in unknown GERS regions. We use the RSWFormer model to accomplish this task.

3.1. Overall Architecture and Processes

The overall architecture and data processing flow of RSWFormer is shown in Figure 2. It mainly includes MGHS and MGCE modules, whose functions are as follows:

Figure 2. Overall architecture of the proposed RSWFormer.

- The MGHS module aims to extract high-dimensional geological features, in which the LTG block effectively captures and analyzes geological data at each stage to achieve the extraction of feature information from local to global. More details are presented in Section 3.2.

- The MGCE module enhances the understanding of contextual semantics by fusing geological semantic information at different scales. Detailed information is introduced in Section 3.3.

The specific processing flow is as follows:

- The input image, of size $H \times W \times 3$, is first fed into a Patchify stem (a convolution with Inchannel = 3, Outchannel = embed_dim, Kernel_size = Stride = Patch_size). It uses a $4 \times 4$ convolution kernel (stride 4) to extract high-resolution features and outputs features with a channel count of 96, followed by a LayerNorm [54] (LN).

- In four consecutive stages (Stage 1 to Stage 4), the channel depth of the feature map is gradually increased and its spatial dimension is reduced at the same time, aiming to capture more abstract and advanced geological remote sensing image features. To maintain a high spatial resolution, effectively fuse features, and retain key feature information, downsampling is not performed in the final stage. The output feature maps are labeled $X_1$ to $X_4$.

- The stage outputs undergo a channel transformation through a convolution kernel that unifies them to a common channel dimension. Subsequently, the feature maps are upsampled by a factor of 2 one by one and added together. Parameter information at each stage is shown in Table 1.

- The resulting feature maps are upsampled to a common resolution through bilinear interpolation and concatenated in the channel dimension, finally forming a feature with rich GERS details that is used in the classification task.

Table 1. Parameter table at different stages.
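The processing flow above can be traced as a sequence of feature-map shapes. The following is a minimal sketch, not the authors' implementation: the 224 × 224 input size and the function name `trace_shapes` are assumptions for illustration, while the patch size of 4, the 96 output channels of the stem, and the absence of downsampling in the final stage follow the text.

```python
def trace_shapes(height=224, width=224, embed_dim=96, patch_size=4):
    """Return the (H, W, C) feature-map shape after the stem and each stage.

    Assumptions (not taken from the paper): a 224 x 224 input, and that
    stages 1-3 each end with one 2x downsampling step.
    """
    factor = patch_size * 8  # stem stride times three 2x downsampling steps
    if height % factor or width % factor:
        raise ValueError(f"height and width must be divisible by {factor}")

    # Patchify stem: kernel = stride = patch_size, 3 -> embed_dim channels.
    h, w, c = height // patch_size, width // patch_size, embed_dim
    shapes = {"stem": (h, w, c)}

    for stage in (1, 2, 3, 4):
        # The attention blocks keep resolution and channel count unchanged.
        if stage < 4:
            # Downsampling: halve H and W, double the channel count.
            h, w, c = h // 2, w // 2, c * 2
        shapes[f"stage{stage}"] = (h, w, c)
    return shapes


if __name__ == "__main__":
    for name, shape in trace_shapes().items():
        print(name, shape)
```

Under these assumptions the last two stages share the same resolution, which is what allows the final stage to refine features without losing further spatial detail.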

3.2. Multi-Stage Geosemantic Hierarchical Sampling Module (MGHS)

GERS elements usually show complex interactions, with diverse characteristics and uneven distributions. The traditional self-attention mechanism cannot fully consider the local characteristics of GERS images and has high computational complexity.

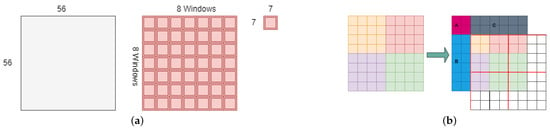

We adopt the hierarchical construction method of the Swin Transformer (ST) [53], in which window-based multi-head self-attention (W-MSA) reduces computational complexity and shifted-window multi-head self-attention (SW-MSA) provides cross-window communication. The alternate use of the two MSAs can capture local to global geological semantic information, and we call this combination the LTG (Local To Global) block. Specifically, this is as follows:

- As shown in Figure 3a, in W-MSA, the feature map is divided into non-overlapping windows of size $M \times M$ (M = 7), and multi-head self-attention is used within each window. For a feature map of size $h \times w$ with $C$ channels, the computational complexity of this method is $4hwC^2 + 2M^2hwC$, which is smaller than the complexity of $4hwC^2 + 2(hw)^2C$ when using the global MSA method.

- As shown in Figure 3b, in SW-MSA, the window position in W-MSA is offset by $\lfloor M/2 \rfloor$ in the x and y directions (for example, if Window_size = $4 \times 4$, the offset is 2 pixels). This is combined with the masked MSA method, and each offset window is calculated independently to improve the ability to capture global information.

Figure 3. Example operation diagram. (a) is W-MSA, (b) is SW-MSA.
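To make the complexity argument concrete, the sketch below evaluates the complexity expressions reported in the Swin Transformer paper [53] for global MSA and W-MSA, along with the SW-MSA window offset. The 56 × 56 × 96 feature map in the usage example is an assumed size chosen for illustration, and the function names are hypothetical.

```python
def msa_complexity(h, w, c):
    """Global MSA cost: 4*h*w*C^2 + 2*(h*w)^2*C (quadratic in h*w)."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def wmsa_complexity(h, w, c, m=7):
    """Window MSA cost: 4*h*w*C^2 + 2*M^2*h*w*C (linear in h*w)."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


def shift_size(m):
    """Window offset used by SW-MSA: floor(M / 2)."""
    return m // 2


if __name__ == "__main__":
    h, w, c = 56, 56, 96  # assumed feature-map size, for illustration only
    full = msa_complexity(h, w, c)
    windowed = wmsa_complexity(h, w, c, m=7)
    print(f"MSA: {full:,}  W-MSA: {windowed:,}  ratio: {full / windowed:.1f}")
    print("offset for M = 7:", shift_size(7))
```

The two expressions differ only in the second term, so the saving grows with the ratio of the feature-map area to the window area.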

In addition, during the downsampling process, the spatial size of the feature map is halved while the count of channels is doubled to enhance the feature representation of the model through dimensional reorganization.

The original image is processed by the Patchify stem to obtain $X$, and we set $X_0 = X$. The stage outputs $X_i$ are calculated as follows:

$$
X_i =
\begin{cases}
\mathrm{PM}\big(\mathrm{LTG}_i(X_{i-1})\big), & i = 1, 2, 3 \\
\mathrm{LTG}_i(X_{i-1}), & i = 4
\end{cases}
$$

where index i is used to identify the processing order of each stage, $\mathrm{LTG}_i$ denotes the LTG blocks of stage i, and $\mathrm{PM}$ denotes the patch merging (downsampling) layer. The details are as follows:

- When i takes the value of 1, the initial feature ($X_0$) first passes through the LTG blocks in Stage 1 (one characteristic of a Transformer is that it does not change the resolution and dimension of the input data).

- Secondly, when passing through the patch merging layer, the H and W of the feature map are halved, and a linear transformation weight reduces the merged patch channel dimension from four times the original to two times.

- Then, feature $X_1$ is generated, completing the conversion of doubling the number of channels and halving the spatial size.

- Finally, $X_1$ is used as new input data to further generate $X_2$, and then $X_2$ is used to generate $X_3$.

It is worth noting that in the process of generating $X_4$, the feature map is not downsampled, so the patch merging layer is removed, thereby retaining the original data dimension. The entire processing flow is shown in module A in Figure 2.
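The downsampling step described above (halving H and W, then reducing the merged channels from four times to two times the original) can be sketched in pure Python. This is an illustrative sketch that follows the patch merging layout of the Swin Transformer [53]; the function name, the nested-list representation, and the toy weight matrix are assumptions, and the normalization that Swin applies before the linear projection is omitted for brevity.

```python
def patch_merge(feature, weight):
    """Merge 2x2 neighbourhoods and project the channels.

    feature: H x W x C nested lists (H and W even).
    weight:  4C x 2C nested lists, a hypothetical linear projection.
    Returns an (H/2) x (W/2) x 2C nested list.
    """
    h, w, c = len(feature), len(feature[0]), len(feature[0][0])
    if h % 2 or w % 2:
        raise ValueError("H and W must be even")
    if len(weight) != 4 * c:
        raise ValueError("weight must have 4C rows")

    out_channels = len(weight[0])
    merged = []
    for i in range(0, h, 2):
        row = []
        for j in range(0, w, 2):
            # Concatenate the four pixels of the 2x2 block: C -> 4C channels.
            cat = (feature[i][j] + feature[i + 1][j]
                   + feature[i][j + 1] + feature[i + 1][j + 1])
            # Linear projection: 4C -> 2C channels.
            row.append([sum(cat[n] * weight[n][k] for n in range(4 * c))
                        for k in range(out_channels)])
        merged.append(row)
    return merged


if __name__ == "__main__":
    x = [[[1], [2]], [[3], [4]]]          # 2 x 2 x 1 toy feature map
    w = [[1, 0], [0, 1], [1, 0], [0, 1]]  # 4 x 2 toy projection
    print(patch_merge(x, w))
```

Because the four neighbours are stacked along the channel axis rather than averaged, no spatial information is discarded before the projection.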

Through this hierarchical and progressive processing method, we can more comprehensively and carefully mine the complex semantic information from local to global GERS images.

3.3. Multi-Scale Geological Context Enhancement Module (MGCE)

Traditional classification networks extract high-level features in images through multi-stage processing. Although high-level features possess richer semantic information, they have lower resolution and are therefore less capable of perceiving details. On the contrary, low-level features contain rich location and detail information and have higher resolution; at the same time, due to fewer convolutional layers, their semantic information is weaker and more susceptible to noise. In FPN [55], a top-down approach is used to gradually fuse high-level and low-level features. PANet [56] proposes a top-down and bottom-up two-way fusion path.

The above design optimizes the feature fusion and can significantly enhance the model’s understanding of GERS images. The specific process of MGCE is as follows:

- Firstly, following the idea of fusing features at different scales, we use a convolution to adjust the stage outputs to a common channel dimension.

- Then, the feature maps are upsampled by a factor of 2 one by one and added together to obtain the fused feature maps.

- Next, the fused feature maps are resized to the same width and height using the bilinear interpolation method.

- Finally, the feature maps are connected in the channel dimension using the concatenation function.

- Afterwards, the feature maps are normalized by the LN layer, reduced by Adaptive Average Pooling, and projected by a weight that changes the channel dimension to the t categories; the prediction is the most probable among the t categories.

In GERS images, this structure can not only capture the diversity of geological structures and features at different scales, but it can also retain the underlying detailed information and incorporate high-level semantic information.
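The final classification step of the MGCE module (pooling, channel projection, and selection of the most probable category) can be illustrated with a short pure-Python sketch. It is a simplified illustration under stated assumptions rather than the authors' code: the function name `classify`, the nested-list layout, and the toy weights are hypothetical, and the LN step is assumed to have been applied already.

```python
def classify(feature, weight):
    """Predict a category index from a fused feature map.

    feature: H x W x C nested lists (assumed already fused and normalised).
    weight:  C x t nested lists, a hypothetical projection to t categories.
    """
    h, w, c = len(feature), len(feature[0]), len(feature[0][0])
    t = len(weight[0])

    # Adaptive average pooling to 1 x 1: mean over all positions per channel.
    pooled = [sum(feature[i][j][k] for i in range(h) for j in range(w)) / (h * w)
              for k in range(c)]

    # Linear projection from C channels to t category scores.
    scores = [sum(pooled[k] * weight[k][n] for k in range(c)) for n in range(t)]

    # The prediction is the index of the highest score.
    return max(range(t), key=scores.__getitem__)


if __name__ == "__main__":
    fused = [[[1.0, 0.0], [3.0, 0.0]]]         # 1 x 2 x 2 toy feature map
    proj = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]  # 2 channels -> 3 categories
    print(classify(fused, proj))
```

Taking the highest score directly gives the same index as applying a softmax first, since softmax preserves the ordering of the scores.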

4. Experiments and Analysis

4.1. Study Area and Dataset

The study area is located in the high mountains and hilly area in western China with variable topography and extreme climatic conditions. The topography of the area includes steep slopes, areas covered with snow and ice, mudslides, and rain and snow erosion phenomena with sparse natural vegetation and a high degree of rock exposure. The study area contains rock types such as granite, gabbro, diorite, etc. The variability of lithology increases the complexity of GERS interpretation, which meets the needs of this study for the interpretation of geologic elements. The multi-source dataset (Multi-GL9) mainly consists of two remote sensing satellite images: Landsat-8 and Gaofen-2.

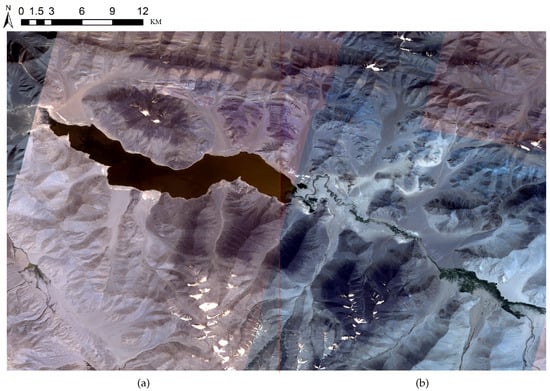

This study used the multispectral satellite Landsat-8, which carries the OLI and TIRS sensors and provides visible-band information for lithological mapping, including Band 2 (Blue, 0.452–0.512 μm; 30 m), Band 3 (Green, 0.533–0.590 μm; 30 m), Band 4 (Red, 0.636–0.673 μm; 30 m), and Band 8 (Panchromatic; 15 m). We chose Bands 4, 3, 2, and 8 to synthesize 15 m resolution images and removed the Band 8 information after synthesis. Gaofen-2 is a high-spatial-resolution remote sensing satellite with Band 4 (NIR, 0.77–0.89 μm; 3.2 m), Band 3 (Red, 0.63–0.69 μm; 3.2 m), Band 2 (Green, 0.52–0.59 μm; 3.2 m), and a Panchromatic band (0.45–0.90 μm; 0.8 m). We used Bands 4, 3, 2, and Panchromatic to synthesize a new image and then removed the panchromatic information, yielding an image with a spatial resolution of up to 1 m. For the Gaofen-2 study area shown in Figure 4, Figure 4a is used for manual labeling to construct the dataset and for model training and validation, while Figure 4b is used for model testing or prediction.

Figure 4. Example image of the Gaofen-2 research area. (a) has size 31,983 × 41,497 pixels for training and validation, (b) has size 42,054 × 30,991 pixels for testing or prediction.

In view of the complexity of GERS images, the interpretation of specific GERS elements usually relies on human intuitive judgment and understanding. The specific labeling process is as follows:

- Preprocessing of Figure 4a (stretching, sampling, and denoising).

- The uniqueness, diversity, and serious homogenization of GERS elements have led to a complex classification system and a lack of unified standards. Based on this, we first built on the theoretical foundation of constructing 9 and 13 types of GERS elements in [6,7] and then conducted in-depth research on a large number of geological survey reports (covering a wide area) based on field investigations and on-site data collection. Next, with the help of texture features captured by remote sensing satellites and the interaction between GERS elements, we analyzed the differences in tonal and texture features of the data in Figure 4a. Therefore, we finally divided the GERS elements into nine categories: slate, granite, gabbro, diorite, silt, surface water, soil, arenaceous rock, and carbonate rock.

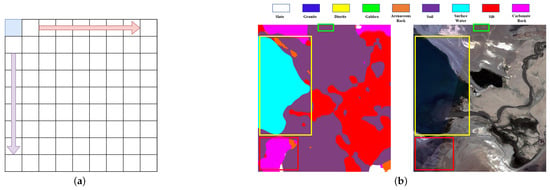

- As shown in Figure 5a, the GERS data were cropped with a non-overlapping sliding window moving from top to bottom and left to right. The cropping size for Gaofen-2 was chosen in consideration of its high spatial resolution, and a separate cropping size was set for the Landsat-8 data.

- When performing manual annotation, we assign the label category according to the principle of the largest area in the image block. Mixed transition zones are assigned to one type of element based on prior knowledge and the proportion of each geological element component; finely distributed and smaller areas are either assigned to existing categories or given redefined category names based on geological theoretical knowledge.

Figure 5. (a) shows the cropping method used to construct the dataset in Section 4.1, and (b) shows the small region in Figure 4b (used for testing) with its interpretation map and palette. The red, yellow and green boxes are displayed for contrast; see the text for details.
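The cropping and labeling steps above can be sketched as two small helpers. This is an illustrative sketch only: the tile size in the example is hypothetical, discarding incomplete edge tiles is an assumption, and the majority rule shown here does not reproduce the expert judgment applied to mixed transition zones.

```python
from collections import Counter


def tile_boxes(height, width, tile):
    """List (top, left, bottom, right) boxes for non-overlapping tiles.

    Tiles are ordered top to bottom, and left to right within each row.
    Assumption: incomplete tiles at the right and bottom edges are dropped.
    """
    return [(top, left, top + tile, left + tile)
            for top in range(0, height - tile + 1, tile)
            for left in range(0, width - tile + 1, tile)]


def majority_label(label_patch):
    """Return the class that covers the largest area of a 2-D label patch.

    Ties are resolved by first occurrence, which is an arbitrary choice.
    """
    counts = Counter(value for row in label_patch for value in row)
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    print(tile_boxes(5, 4, 2))
    print(majority_label([["soil", "soil"], ["silt", "soil"]]))
```

Keeping the pixel box of every tile also makes it possible to map each cropped image back to its geographic position in the source scene.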

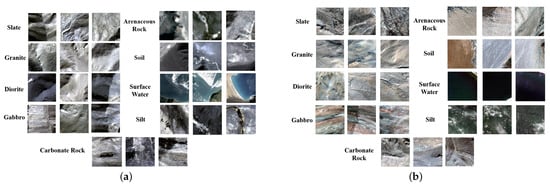

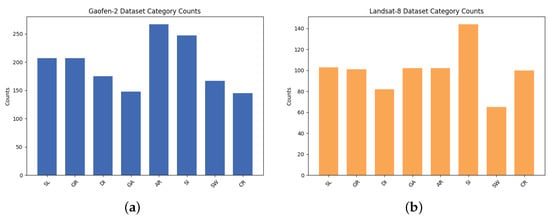

During the entire annotation process, geographical coordinates were used to ensure the consistency of the cropping position and the label data, thereby constructing the Gaofen-2 scene dataset containing geographical coordinates, with a total of 1668 images. Meanwhile, the same annotation process was followed for the GERS image in Figure 1 to produce the Landsat-8 scene dataset, totaling 899 images. Figure 6 presents a partial sample of the two datasets, while Table 2 details the number of images in each GERS element class. Figure 7 shows the distribution of the quantity of images in each category in the two datasets in the form of histograms.

Figure 6. (a) is a partial sample of the Gaofen-2, (b) is a partial sample of the Landsat-8.

Table 2. The number of images per category from Gaofen-2 and Landsat-8 datasets.

Figure 7. Histogram distribution of each GERS element category, (a) is Gaofen-2, (b) is Landsat-8.

4.2. Experimental Environment and Evaluation Metrics

Our research uses PyTorch to build the model and calculates the loss with a cross-entropy loss function, given in Equation (4):

$$
\mathrm{Loss} = -\sum_{i=1}^{t} p_i \log q_i \tag{4}
$$

where $p_i$ denotes the observed sample distribution, $q_i$ is the predictive distribution of the model, and t is the total number of categories. A decrease in the loss value indicates that the label predicted by the model is getting closer to the true label.
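As a small worked example of the cross-entropy loss, the sketch below evaluates it for a one-hot observed distribution and two candidate predictions. It is a plain-Python illustration rather than the PyTorch loss used in the experiments; the function name and the `eps` clamp that guards against log(0) are assumptions.

```python
import math


def cross_entropy(p, q, eps=1e-12):
    """Cross-entropy between an observed distribution p and a prediction q.

    Both arguments are sequences of length t (one entry per category).
    eps is an assumed numerical guard that avoids taking log(0).
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q))


if __name__ == "__main__":
    truth = [0.0, 1.0, 0.0]   # one-hot label for the second category
    rough = [0.2, 0.7, 0.1]   # less confident prediction
    sharp = [0.05, 0.9, 0.05]  # more confident prediction
    print(cross_entropy(truth, rough), cross_entropy(truth, sharp))
```

With a one-hot label the sum reduces to the negative log of the probability assigned to the true category, so a more confident correct prediction yields a smaller loss.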

We use the following training configuration: the AdamW algorithm with a preset learning rate, a batch size of 16, and a total of 100 iterations. The dataset is randomly divided into training and validation sets at an 8:2 ratio. Specifically, 1337 of the 1668 Gaofen-2 images are used for training and 331 for validation, and they are cropped and resized to the network input size, whereas the 899 Landsat-8 images are similarly divided and then up-sampled and resized to facilitate training. The hardware and software setup of the experimental environment is detailed in Table 3.

Table 3.

Experimental configuration.

We compared multiple CNN- and Transformer-based models (see Section 4.4) and report the classification results using overall accuracy (OA), defined in Equation (5), which is the proportion of samples in the entire dataset that the model classifies correctly. To provide insight into RSWFormer’s classification capabilities in the field of GERS, class-specific accuracies are also presented. Here, a true positive (TP) is a positive example predicted as positive, a true negative (TN) is a negative example predicted as negative, a false positive (FP) is a negative example predicted as positive, and a false negative (FN) is a positive example predicted as negative.

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$
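For a multi-class problem, these metrics are usually read off a confusion matrix: OA is the sum of the diagonal divided by the number of samples. The sketch below illustrates this on toy labels. It assumes that class-specific accuracy means the fraction of each true class that is classified correctly (per-class recall), which the text does not state explicitly; all function names and labels are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i][j] counts samples of true class i predicted as class j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for true_label, pred_label in zip(y_true, y_pred):
        cm[true_label][pred_label] += 1
    return cm

def overall_accuracy(cm):
    """Correctly classified samples (diagonal) over all samples."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total

def class_accuracy(cm):
    """Per-class accuracy: diagonal entry over the row sum (assumed definition)."""
    return [row[i] / sum(row) if sum(row) else float("nan")
            for i, row in enumerate(cm)]

# Toy example with three classes and ten samples.
y_true = [0, 0, 0, 1, 1, 2, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 2, 0, 2]

cm = confusion_matrix(y_true, y_pred, num_classes=3)
oa = overall_accuracy(cm)        # 8 of 10 samples are correct
per_class = class_accuracy(cm)   # one value per true class
```

Here eight of the ten samples lie on the diagonal, so OA is 0.8, while the per-class values differ because each class has a different number of samples and errors.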

4.3. Analysis of Results

On the Multi-GL9 dataset with nine GERS elements, we ran the proposed RSWFormer under the configuration in Section 4.2 and evaluated its classification performance on the different GERS elements using OA and class-specific accuracy (see Table 4). On the Gaofen-2 dataset, RSWFormer reaches an OA of 92.15%: the interpretation accuracies of gabbro and surface water are both 100%, and those of the other categories are all above 90%. On the Landsat-8 dataset, the OA is 80.23%, and the classification accuracies of surface water and silt also reach 100%. These results show that RSWFormer achieves high classification accuracy on high-resolution data and identifies geological features accurately.

Table 4.

RSWFormer’s OA under the Gaofen-2 and Landsat-8 datasets and the interpretation accuracy of each category (%).

Then, we used ArcGIS 10.2 to crop the image in Figure 4b and obtain a small area containing a variety of GERS features for a small-area interpretation test. The overall result is good: the water body contours and the soil category in the small area are predicted successfully, as shown in the yellow and green boxes in Figure 5b. The soil is widely distributed, which is consistent with its actual spatial distribution, and the spatial distribution of other categories, including silt and carbonate rock, is also largely consistent. However, some misclassifications remain visible, such as in the red box in Figure 5b, where carbonate rock is misclassified as silt and arenaceous rock. This may be caused by class imbalance arising from the small number of samples and by the overlap or similarity of geological characteristics.

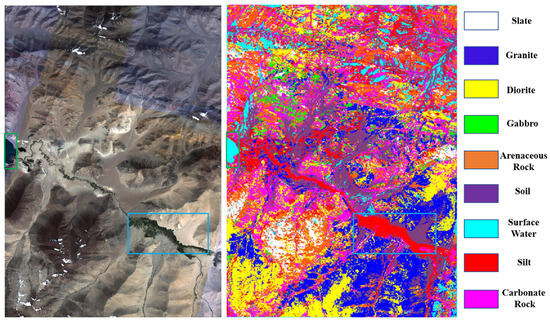

Next, to further validate the effectiveness and generalization of RSWFormer for regional lithology mapping, we adopted a sliding-window method that classifies overlapping windows from left to right and from top to bottom. Specifically, the image in Figure 4b was rescaled by factors of 0.5, 0.75, 1.0, 1.25, 1.5, and 1.75, with the window size set to and the stride set to 32. The prediction results of the six scales were accumulated to obtain the final classification values, and an interpretation map was generated using the same color palette as in the test. As shown in Figure 8, the distribution of soil and silt in the blue box is very accurate, the distribution of the various rock types (e.g., slate, granite, gabbro, and carbonate rock) is highly consistent with the actual terrain, and the surface water boundaries in the green box are largely interpreted correctly. This shows that RSWFormer can generalize to wide, previously unseen regions.
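The multi-scale sliding-window procedure described above can be sketched as follows. This is a simplified illustration and not the implementation used in this work: the image is a small 2-D grid, `toy_classifier` is a hypothetical stand-in for the trained model, rescaling uses nearest-neighbour sampling, and the window size, stride, and scales are toy values chosen so the example runs in plain Python.

```python
import math

def resize_nearest(image, scale):
    """Nearest-neighbour rescale of a 2-D grid (list of rows)."""
    h, w = len(image), len(image[0])
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    return [[image[min(h - 1, int(r / scale))][min(w - 1, int(c / scale))]
             for c in range(new_w)]
            for r in range(new_h)]

def window_origins(height, width, window, stride):
    """Top-left corners, scanned left to right and then top to bottom."""
    for top in range(0, max(height - window, 0) + 1, stride):
        for left in range(0, max(width - window, 0) + 1, stride):
            yield top, left

def multiscale_predict(image, classify, num_classes, scales, window, stride):
    """Accumulate per-class window scores over all scales, then take the argmax."""
    h, w = len(image), len(image[0])
    votes = [[[0.0] * num_classes for _ in range(w)] for _ in range(h)]
    for scale in scales:
        scaled = resize_nearest(image, scale)
        for top, left in window_origins(len(scaled), len(scaled[0]), window, stride):
            patch = [row[left:left + window] for row in scaled[top:top + window]]
            scores = classify(patch)  # one score per class for this window
            # Project the window back onto the original pixel grid.
            r0, r1 = int(top / scale), min(h, math.ceil((top + window) / scale))
            c0, c1 = int(left / scale), min(w, math.ceil((left + window) / scale))
            for r in range(r0, r1):
                for c in range(c0, c1):
                    for k in range(num_classes):
                        votes[r][c][k] += scores[k]
    # Pixels never covered by a window keep zero votes and default to class 0.
    return [[max(range(num_classes), key=lambda k: votes[r][c][k])
             for c in range(w)] for r in range(h)]

def toy_classifier(patch):
    """Hypothetical stand-in for the trained model: scores from mean intensity."""
    values = [v for row in patch for v in row]
    mean = sum(values) / len(values)
    return [1.0 - mean, mean]

# 8 x 8 toy scene: class 0 on the left half, class 1 on the right half.
toy_image = [[0] * 4 + [1] * 4 for _ in range(8)]
label_map = multiscale_predict(toy_image, toy_classifier, num_classes=2,
                               scales=(0.5, 1.0, 2.0), window=2, stride=2)
```

Accumulating scores before taking the argmax lets windows from different scales vote on the same pixel, so a label that is supported at several scales outweighs an isolated error at one scale.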

Figure 8.

Large-scale prediction area: On the left is the Gaofen-2 image, size 42,054 × 30,991 pixels, and on the right is the interpretation result of each category and the map of the corresponding palette. The blue and green boxes are used for contrast display, see the text for details.

Finally, we used the model weights that achieved OA = 80.23% and applied the same scaling ratios to predict the entire, wider Landsat-8 image. As shown in Figure 9, the outlines of each GERS element are identified accurately, and the boundaries of water bodies, the soil distribution patterns, and the spatial distribution of rocks such as diorite, gabbro, and granite are depicted successfully. However, as in Figure 8, a large amount of “salt and pepper” misclassification remains in the surface water interpretation.

Figure 9.

On the left, Landsat-8 large-scale image with dimensions of pixels. The right image shows the results of the interpretation of each category and its color palette. The two red boxes are used for comparison, see the text for details.

In summary, RSWFormer delivers strong overall interpretation results in the GERS element identification task, supporting its effectiveness and generalization in this field. Its predictions are highly consistent with the terrain characteristics and lithology distribution of the area, meet the needs of wide-area GERS element interpretation, and can assist human experts in visual interpretation.

4.4. Comparative Experiment of Multi-GL9 Dataset in the Same Area

For a comprehensive assessment of RSWFormer’s performance, we compared multiple models, including EfficientNet [57], RegNet [58], CeiT [59], DeiT [60], ViT [49], and PVT [61].

4.4.1. Comparative Experiments and Analysis of Gaofen-2 Dataset

According to Table 5, on the high-resolution dataset the recognition accuracy of surface water reaches 100%, followed closely by silt and soil, both above 95%, which may be related to their larger coverage area and relatively homogeneous geological features. For lithologies such as slate, granite, gabbro, diorite, and carbonate rock, the interpretation accuracy is generally lower because of the high similarity between them and their inherent complexity and diversity. Even so, RSWFormer keeps the interpretation accuracies of these lithologies above 90%, and that of gabbro reaches 100%.

Table 5.

OA and class-specific accuracy on Gaofen-2 dataset (%).

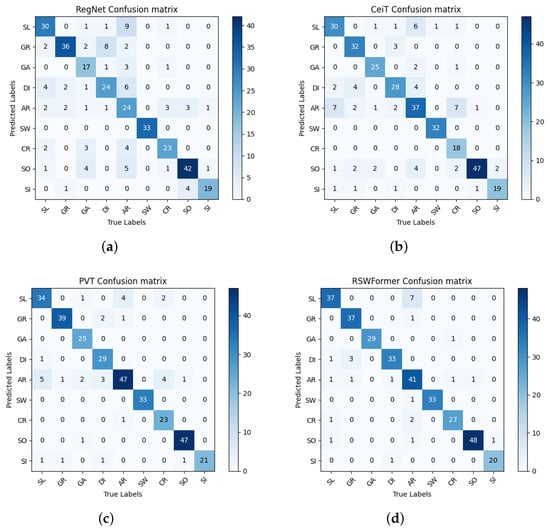

The data show that, compared with the CNNs, the Transformer models improve the average interpretation accuracy in the GERS interpretation task by about 10%. This improvement is mainly attributable to the attention mechanism, which enhances the model’s understanding of the interrelationships between GERS elements and allows it to identify transition areas between different lithologies and their geological characteristics more accurately. It also suggests that CNNs still have considerable room for improvement in the GERS interpretation task. Overall, RSWFormer achieves an OA of 92.15%, which is 0.61 percentage points higher than PVT, the best existing model, and 0.91 percentage points higher than ViT, demonstrating its recognition ability. Figure 10 shows the confusion matrices of RegNet, CeiT, PVT, and RSWFormer on the Gaofen-2 dataset.

Figure 10.

Confusion matrices on the Gaofen-2 dataset: (a) RegNet, OA = 75.83%; (b) CeiT, OA = 82.48%; (c) PVT, OA = 91.54%; (d) RSWFormer, OA = 92.15%.

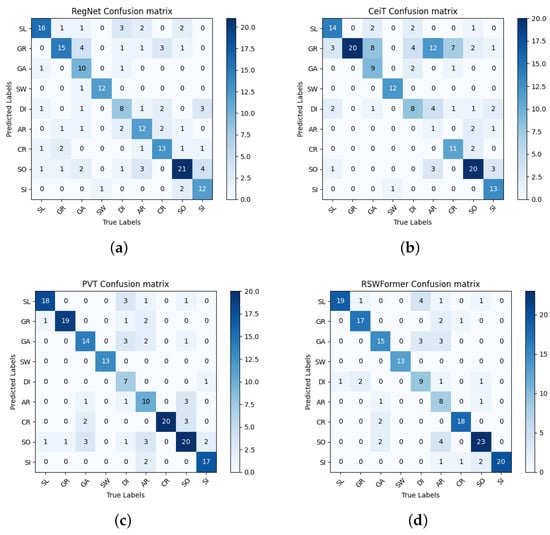

4.4.2. Comparative Experiments and Analysis of Landsat-8 Dataset

We performed the same comparison experiment on the Landsat-8 dataset, and the results are shown in Table 6. RSWFormer achieves 100% recognition accuracy on the surface water and silt categories, and its accuracy on the slate and carbonate rock categories also exceeds 90%. Overall, low-resolution GERS images increase the ambiguity between categories and carry less information in local areas, which weakens feature extraction and leads to relatively poor performance of the CNNs compared with the Transformer models. In contrast, RSWFormer improves OA by 1.7 percentage points relative to the best-performing baseline models, PVT and ViT.

Table 6.

OA and class-specific accuracy on Landsat-8 dataset (%).

In addition, the average interpretation accuracy of diorite and arenaceous rock is relatively low. A possible reason is that these two rock types vary more in their natural environment and that noise has a stronger effect in low-resolution images, making it difficult to capture their complex and variable features accurately. Figure 11 shows the confusion matrices of RSWFormer and the other models.

Figure 11.

Confusion matrices on the Landsat-8 dataset: (a) RegNet, OA = 67.23%; (b) CeiT, OA = 62.71%; (c) PVT, OA = 78.53%; (d) RSWFormer, OA = 80.23%.

Finally, in the comparative experiments on both datasets, we found that ViT outperformed CeiT and DeiT. This may be because the GERS features in the Multi-GL9 dataset are relatively complex, so that CeiT and DeiT are insufficiently sensitive to them and perform poorly. By contrast, ViT has strong global information processing capabilities and can capture and analyze the widely distributed geological structures and features in GERS images more effectively. Taken together, these results show that RSWFormer performs well in GERS interpretation, with relatively balanced and stable performance both on images with complex features and across diverse GERS element classification tasks.

5. Conclusions

This paper constructs a multi-source dataset, Multi-GL9, characterized by diverse categories, complex lithology, and variable distribution. It also proposes a Transformer-based fusion network, RSWFormer, which uses the MGHS module to extract multi-scale geological features and the MGCE module to fuse geological semantic information at different scales, addressing the problems of high inter-class similarity, high intra-class variability, gradational boundaries, and complex distribution of elements in the GERS interpretation task. Experiments show that this method has wide applicability and advantages in mapping tasks over spatially large areas.

In the future, we will consider coupling geological remote sensing knowledge graphs to correct misclassified GERS elements. We will also introduce radar, optical, and thermal infrared remote sensing data and combine advanced DL technology with geological expert knowledge to further improve the accuracy and robustness of GERS element interpretation. Our research not only provides a solid technical foundation for GERS interpretation but also offers useful inspiration for further research and innovation in areas such as building identification and crop classification in agricultural monitoring.

Author Contributions

Conceptualization, S.H. and J.D.; methodology, Z.W. and S.H.; investigation, C.Z.; formal analysis, X.L. and T.Z.; writing—original draft preparation, S.H. and Z.W.; writing—review and editing, J.Z. and J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was supported by the National Natural Science Foundation of China under Grant 42201415 and the Science and Technology Innovation Foundation of Command Center of Integrated Natural Resources Survey Center (KC20230006).

Data Availability Statement

The Multi-GL9 dataset is available at https://github.com/WanZhan-lucky/Geological-remote-sensing-data-set (accessed on 24 June 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Price, N.J.; Cosgrove, J.W. Analysis of Geological Structures; Cambridge University Press: Cambridge, UK, 1990.

- Sabins, F.F. Remote sensing for mineral exploration. Ore Geol. Rev. 1999, 14, 157–183.

- Gutiérrez, F.; Parise, M.; Waele, J.D.; Jourde, H. A review on natural and human-induced geohazards and impacts in karst. Earth-Sci. Rev. 2014, 138, 61–88.

- Goetz, A.F.; Rowan, L.C. Geologic remote sensing. Science 1981, 211, 781–791.

- Han, W.; Zhang, X.; Wang, Y.; Wang, L.; Huang, X.; Li, J.; Wang, S.; Chen, W.; Li, X.; Feng, R.; et al. A survey of machine learning and deep learning in remote sensing of geological environment: Challenges, advances, and opportunities. ISPRS J. Photogramm. Remote Sens. 2023, 202, 87–113.

- Han, W.; Li, J.; Wang, S.; Zhang, X.; Dong, Y.; Fan, R.; Zhang, X.; Wang, L. Geological remote sensing interpretation using deep learning feature and an adaptive multisource data fusion network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Wang, S.; Huang, X.; Han, W.; Li, J.; Zhang, X.; Wang, L. Lithological mapping of geological remote sensing via adversarial semi-supervised segmentation network. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103536.

- Hearst, M.; Dumais, S.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883, revision #137311.

- Shlens, J. A tutorial on principal component analysis. arXiv 2014, arXiv:1404.1100.

- Fels, A.E.A.E.; Ghorfi, M.E. Using remote sensing data for geological mapping in semi-arid environment: A machine learning approach. Earth Sci. Inform. 2022, 15, 485–496.

- Cracknell, M.J.; Reading, A.M. Geological mapping using remote sensing data: A comparison of five machine learning algorithms, their response to variations in the spatial distribution of training data and the use of explicit spatial information. Comput. Geosci. 2014, 63, 22–33.

- Rigatti, S.J. Random forest. J. Insur. Med. 2017, 47, 31–39.

- Zuo, W.; Zeng, X.; Gao, X.; Zhang, Z.; Liu, D.; Li, C. Machine learning fusion multi-source data features for classification prediction of lunar surface geological units. Remote Sens. 2022, 14, 5075.

- Rezaei, A.; Hassani, H.; Moarefvand, P.; Golmohammadi, A. Lithological mapping in Sangan region in northeast Iran using ASTER satellite data and image processing methods. Geol. Ecol. Landsc. 2020, 4, 59–70.

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.; Barloon, P.J.; Goetz, A.F.H. The Spectral Image Processing System (SIPS): Software for Integrated Analysis of AVIRIS Data. 1992. Available online: https://api.semanticscholar.org/CorpusID:60199064 (accessed on 24 June 2024).

- Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

- Han, W.; Chen, J.; Wang, L.; Feng, R.; Li, F.; Wu, L.; Tian, T.; Yan, J. Methods for small, weak object detection in optical high-resolution remote sensing images: A survey of advances and challenges. IEEE Geosci. Remote Sens. Mag. 2021, 9, 8–34.

- Han, W.; Feng, R.; Wang, L.; Cheng, Y. A semi-supervised generative framework with deep learning features for high-resolution remote sensing image scene classification. ISPRS J. Photogramm. Remote Sens. 2018, 145, 23–43.

- Zhang, W.; Tang, P.; Zhao, L. Remote sensing image scene classification using CNN-CapsNet. Remote Sens. 2019, 11, 494.

- Wang, Z.; Zuo, R.; Liu, H. Lithological mapping based on fully convolutional network and multi-source geological data. Remote Sens. 2021, 13, 4860.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Brandmeier, M.; Chen, Y. Lithological classification using multi-sensor data and convolutional neural networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 55–59.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Part III 18, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Hajaj, S.; Harti, A.E.; Jellouli, A.; Pour, A.B.; Himyari, S.M.; Hamzaoui, A.; Hashim, M. Evaluating the performance of machine learning and deep learning techniques to HyMap imagery for lithological mapping in a semi-arid region: Case study from western Anti-Atlas, Morocco. Minerals 2023, 13, 766.

- der Meer, F.D.V.; Werff, H.M.V.D.; Ruitenbeek, F.J.V.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; Meijde, M.V.D.; Carranza, E.J.M.; Smeth, J.B.; Woldai, T. Multi-and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Zhang, Y.; Li, M.; Han, S. Automatic identification and classification in lithology based on deep learning in rock images. Yanshi Xuebao/Acta Petrol. Sin. 2018, 34, 333–342.

- Kumar, C.; Chatterjee, S.; Oommen, T.; Guha, A.; Mukherjee, A. Multi-sensor datasets-based optimal integration of spectral, textural, and morphological characteristics of rocks for lithological classification using machine learning models. Geocarto Int. 2022, 37, 6004–6032.

- Li, D.; Zhao, J.; Liu, Z. A novel method of multitype hybrid rock lithology classification based on convolutional neural networks. Sensors 2022, 22, 1574.

- Shi, H.; Xu, Z.; Lin, P.; Ma, W. Refined lithology identification: Methodology, challenges and prospects. Geoenergy Sci. Eng. 2023, 231, 212382.

- Wang, S.; Han, W.; Zhang, X.; Li, J.; Wang, L. Geospatial remote sensing interpretation: From perception to cognition. Innov. Geosci. 2024, 2, 100056.

- Wang, S.; Han, W.; Huang, X.; Zhang, X.; Wang, L.; Li, J. Trustworthy remote sensing interpretation: Concepts, technologies, and applications. ISPRS J. Photogramm. Remote Sens. 2024, 209, 150–172.

- Han, W.; Li, J.; Wang, S.; Wang, Y.; Yan, J.; Fan, R.; Zhang, X.; Wang, L. A context-scale-aware detector and a new benchmark for remote sensing small weak object detection in unmanned aerial vehicle images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102966.

- Zhang, X.; Wang, L.; Li, J.; Han, W.; Fan, R.; Wang, S. Satellite-derived sediment distribution mapping using ICESat-2 and SuperDove. ISPRS J. Photogramm. Remote Sens. 2023, 202, 545–564.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Ser. NIPS’17. Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

- Latifovic, R.; Pouliot, D.; Campbell, J. Assessment of convolution neural networks for surficial geology mapping in the South Rae geological region, Northwest Territories, Canada. Remote Sens. 2018, 10, 307.

- Sang, X.; Xue, L.; Ran, X.; Li, X.; Liu, J.; Liu, Z. Intelligent high-resolution geological mapping based on SLIC-CNN. ISPRS Int. J. Geo-Inf. 2020, 9, 99.

- Shirmard, H.; Farahbakhsh, E.; Heidari, E.; Pour, A.B.; Pradhan, B.; Müller, D.; Chandra, R. A comparative study of convolutional neural networks and conventional machine learning models for lithological mapping using remote sensing data. Remote Sens. 2022, 14, 819.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Pascual, A.D.P.; Shu, L.; Szoke-Sieswerda, J.; McIsaac, K.; Osinski, G. Towards natural scene rock image classification with convolutional neural networks. In Proceedings of the 2019 IEEE Canadian Conference of Electrical and Computer Engineering (CCECE), Edmonton, AB, Canada, 5–8 May 2019; pp. 1–4.

- Lynda, N.O. Systematic survey of convolutional neural network in satellite image classification for geological mapping. In Proceedings of the 2019 15th International Conference on Electronics, Computer and Computation (ICECCO), Abuja, Nigeria, 10–12 December 2019; pp. 1–6.

- Fu, D.; Su, C.; Wang, W.; Yuan, R. Deep learning based lithology classification of drill core images. PLoS ONE 2022, 17, e0270826.

- Huang, Z.; Su, L.; Wu, J.; Chen, Y. Rock image classification based on EfficientNet and triplet attention mechanism. Appl. Sci. 2023, 13, 3180.

- Dong, Y.; Yang, Z.; Liu, Q.; Zuo, R.; Wang, Z. Fusion of Gaofen-5 and Sentinel-2B data for lithological mapping using vision transformer dynamic graph convolutional network. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103780.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Koeshidayatullah, A.; Al-Azani, S.; Baraboshkin, E.E.; Alfarraj, M. FaciesViT: Vision transformer for an improved core lithofacies prediction. Front. Earth Sci. 2022, 10, 992442.

- Jacinto, M.V.G.; Silva, M.A.; de Oliveira, L.H.L.; Medeiros, D.R.; de Medeiros, G.C.; Rodrigues, T.C.; de Montalvão, L.C.; de Almeida, R.V. Lithostratigraphy modeling with transformer-based deep learning and natural language processing techniques. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, Abu Dhabi, United Arab Emirates, 2–5 October 2023; p. D031S110R003.

- Cheng, L.; Keyan, X.; Li, S.; Rui, T.; Xuchao, D.; Baocheng, Q.; Dahong, X. CNN-transformers for mineral prospectivity mapping in the Maodeng–Baiyinchagan area, southern Great Xing’an Range. Ore Geol. Rev. 2024, 167, 106007.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 10012–10022.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; Volume 97, pp. 6105–6114.

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing network design spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 10428–10436.

- Yuan, K.; Guo, S.; Liu, Z.; Zhou, A.; Yu, F.; Wu, W. Incorporating convolution designs into visual transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 579–588.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; Volume 139, pp. 10347–10357.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 568–578.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).