Detection and Type Recognition of SAR Artificial Modulation Targets Based on Multi-Scale Amplitude-Phase Features

Abstract

1. Introduction

1.1. Relevant Background

1.2. Existing Problem

1.3. Our Work

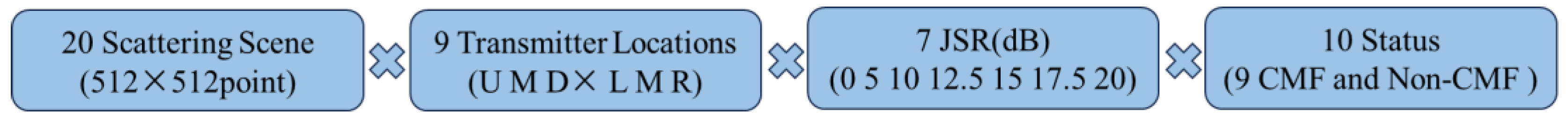

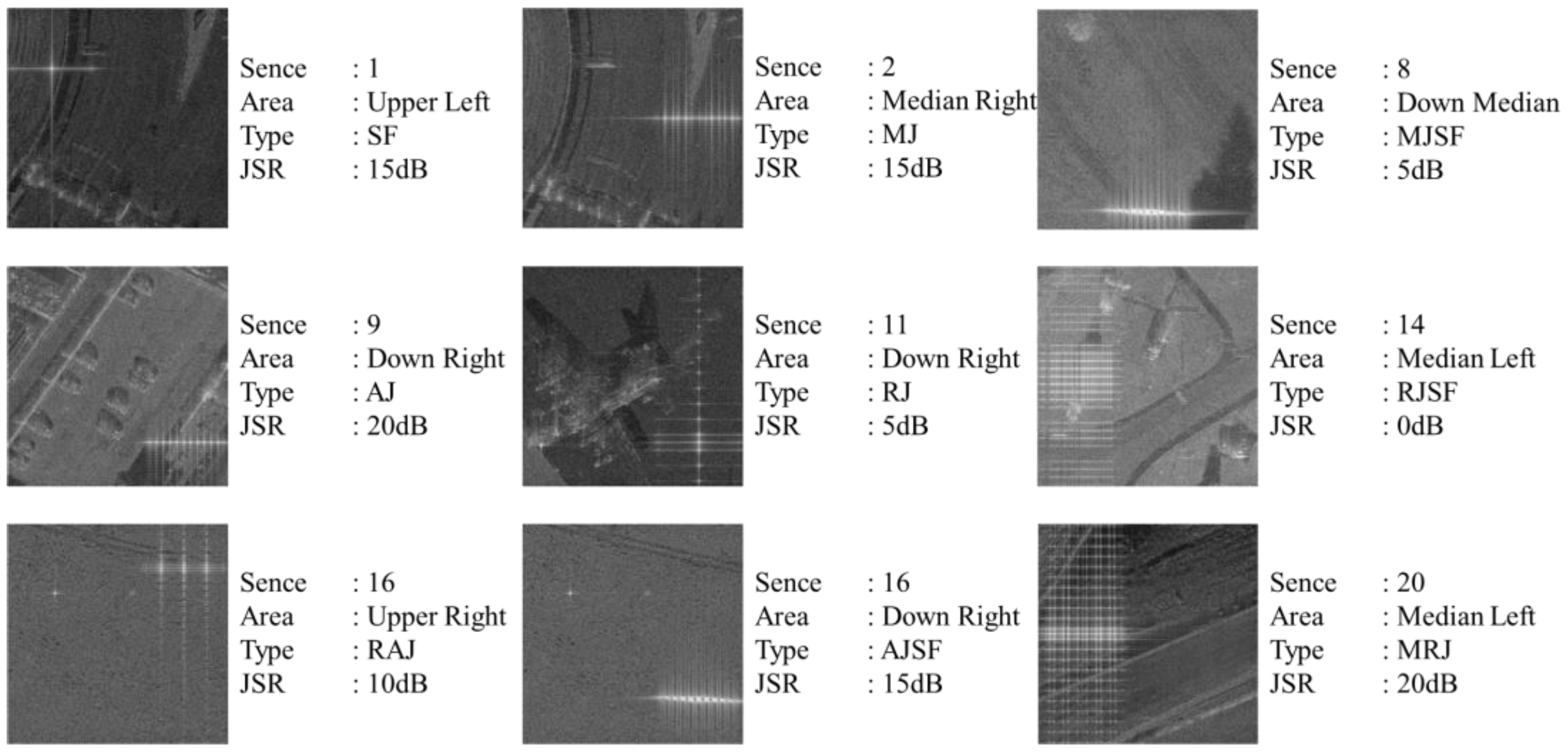

- A new dataset, the AMT Detection and Modulation Type Recognition Dataset (ADMTR Dataset), is established. It varies the jamming position, the Jamming-to-Signal Ratio (JSR), and the modulation parameters to improve generalization, so that it better reflects complex electronic countermeasure environments.

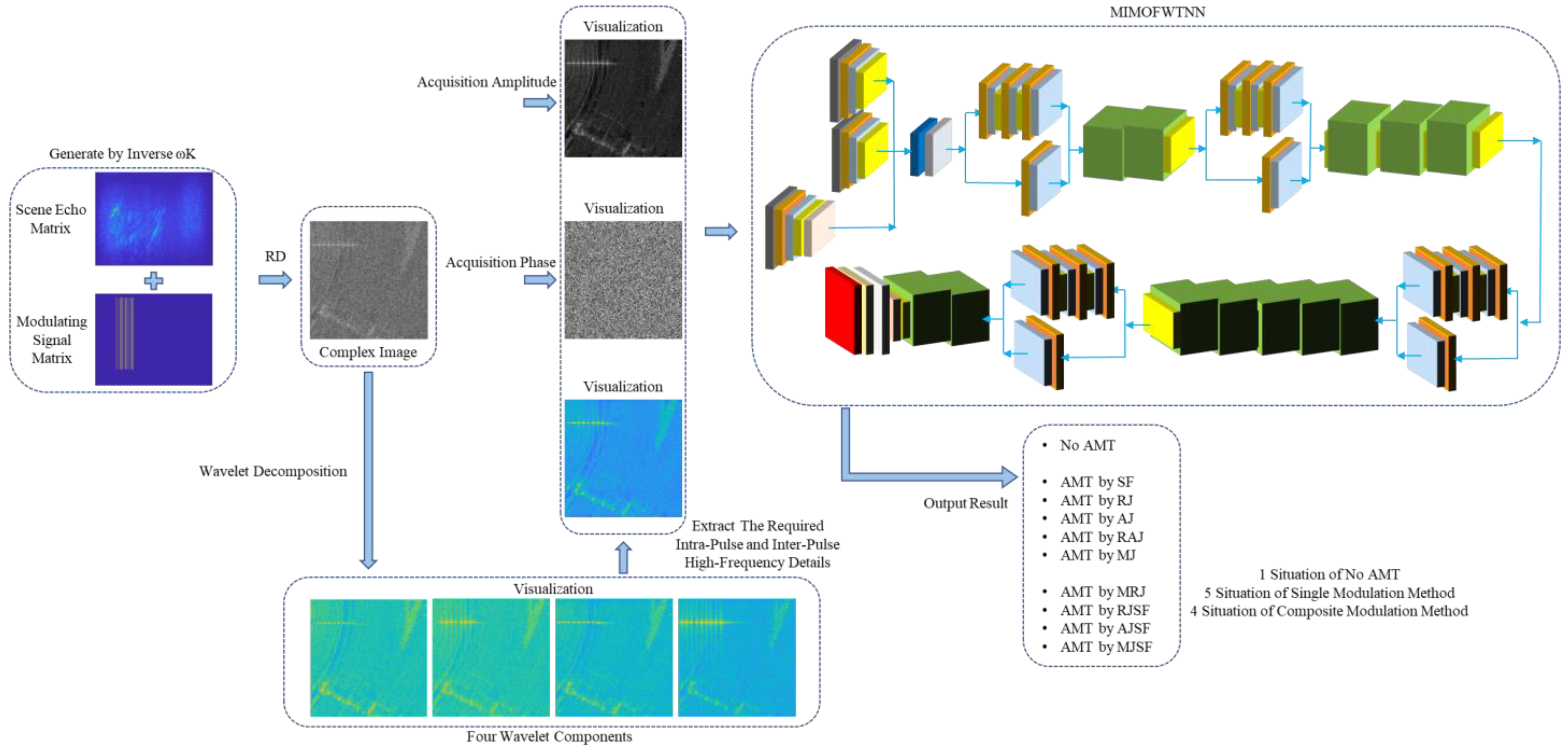

- A multi-scale amplitude-phase feature analysis method is proposed to detect and identify AMTs formed in echoes. It uses not only the amplitude information of the scene but also the phase and high-frequency information.

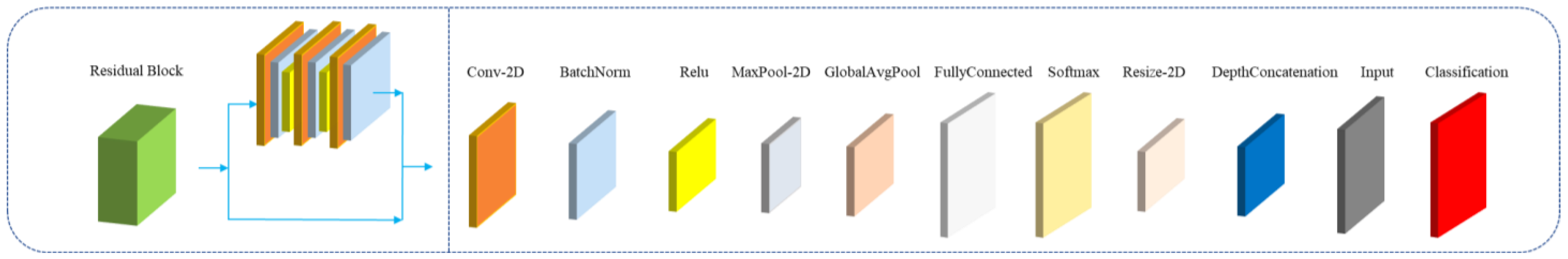

- A MIMOFWTNN network is designed to perform the detection and recognition task. The network structure is improved and optimized, particularly in the phase processing and multi-scale processing branches, and achieves higher accuracy on a completely independent test set than the state-of-the-art methods it is compared against.

- The focus of this work is the AMT, which is a specially modulated signal rather than a real echo or target as in SAR Automatic Target Recognition (ATR). To the best of our knowledge, this problem has rarely been studied, and the proposed network can both detect AMTs and recognize their modulation types.
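To make the idea of multi-scale amplitude-phase features concrete, the following is a minimal sketch, not the authors' implementation. It splits a complex-valued image into amplitude and wrapped phase, then applies a Haar-style averaging decomposition to the amplitude to separate low-frequency and high-frequency content at several scales. The function names, the choice of a Haar-style filter, and the number of levels are all assumptions made for illustration; this section does not specify the decomposition actually used.

```python
import numpy as np


def amplitude_phase(z):
    """Split a complex image into amplitude and wrapped phase (radians)."""
    return np.abs(z), np.angle(z)


def haar_level(x):
    """One level of a 2-D Haar-style (averaging) decomposition.

    Returns a half-size low-pass approximation and three half-size
    high-frequency bands: column differences, row differences, diagonal.
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 4.0
    col_diff = (a - b + c - d) / 4.0   # change between neighbouring columns
    row_diff = (a + b - c - d) / 4.0   # change between neighbouring rows
    diag = (a - b - c + d) / 4.0
    return approx, (col_diff, row_diff, diag)


def multiscale_features(z, levels=2):
    """Hypothetical feature extractor: amplitude, phase, and detail bands."""
    rows, cols = z.shape
    if rows % (2 ** levels) or cols % (2 ** levels):
        raise ValueError("image sides must be divisible by 2**levels")
    amp, phase = amplitude_phase(z)
    feats = {"amplitude": amp, "phase": phase, "details": []}
    approx = amp
    for _ in range(levels):
        approx, details = haar_level(approx)
        feats["details"].append(details)
    feats["approx"] = approx
    return feats


# Example on synthetic complex data (a stand-in for a SAR image chip).
rng = np.random.default_rng(0)
chip = rng.standard_normal((64, 64)) + 1j * rng.standard_normal((64, 64))
features = multiscale_features(chip, levels=2)
```

In this sketch the phase is kept at full resolution while only the amplitude is decomposed; whether the phase should also be decomposed is a design choice this section leaves open.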

2. Model Construction

2.1. SAR Model

2.2. AMT Modulation Signal Model

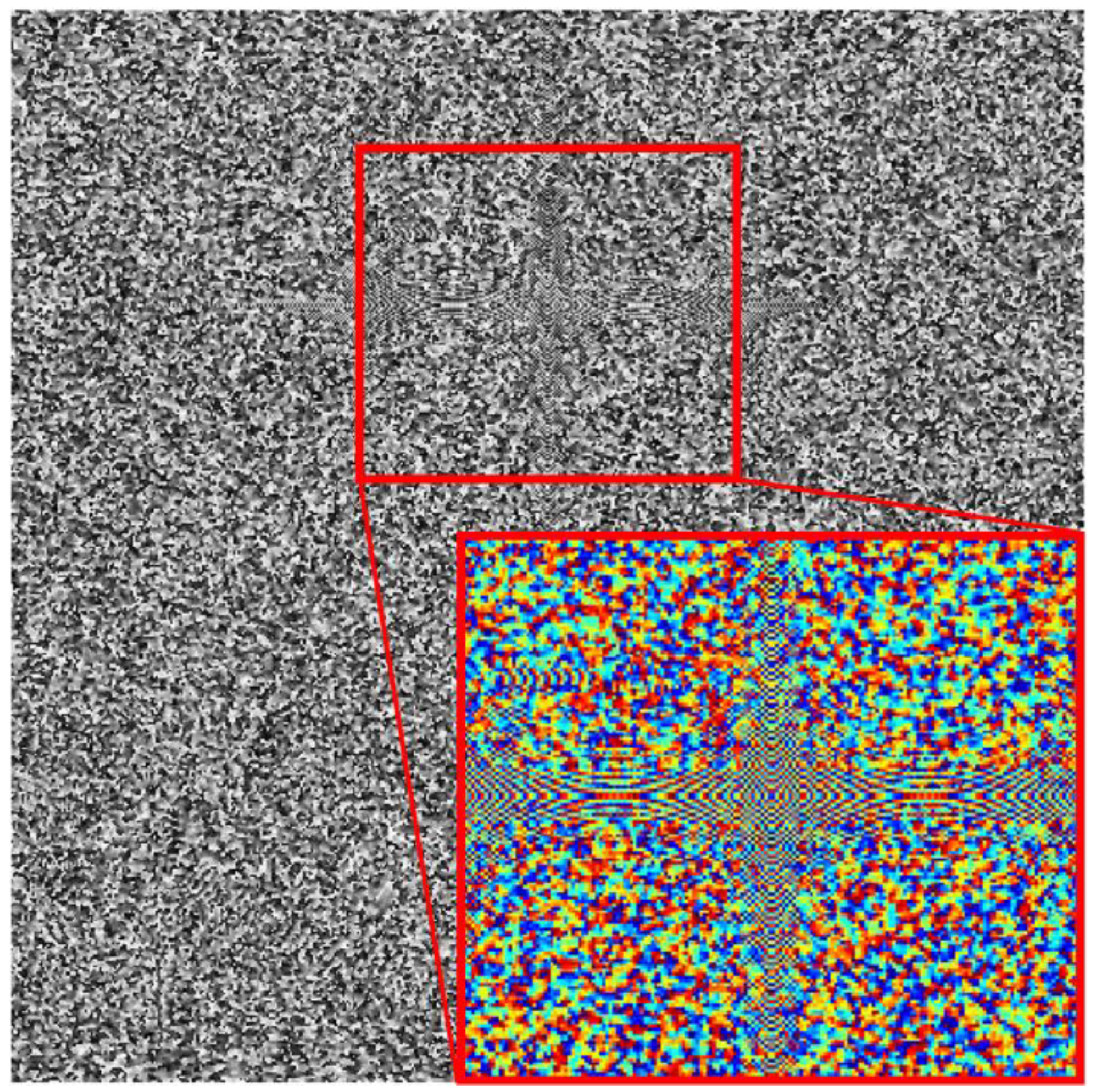

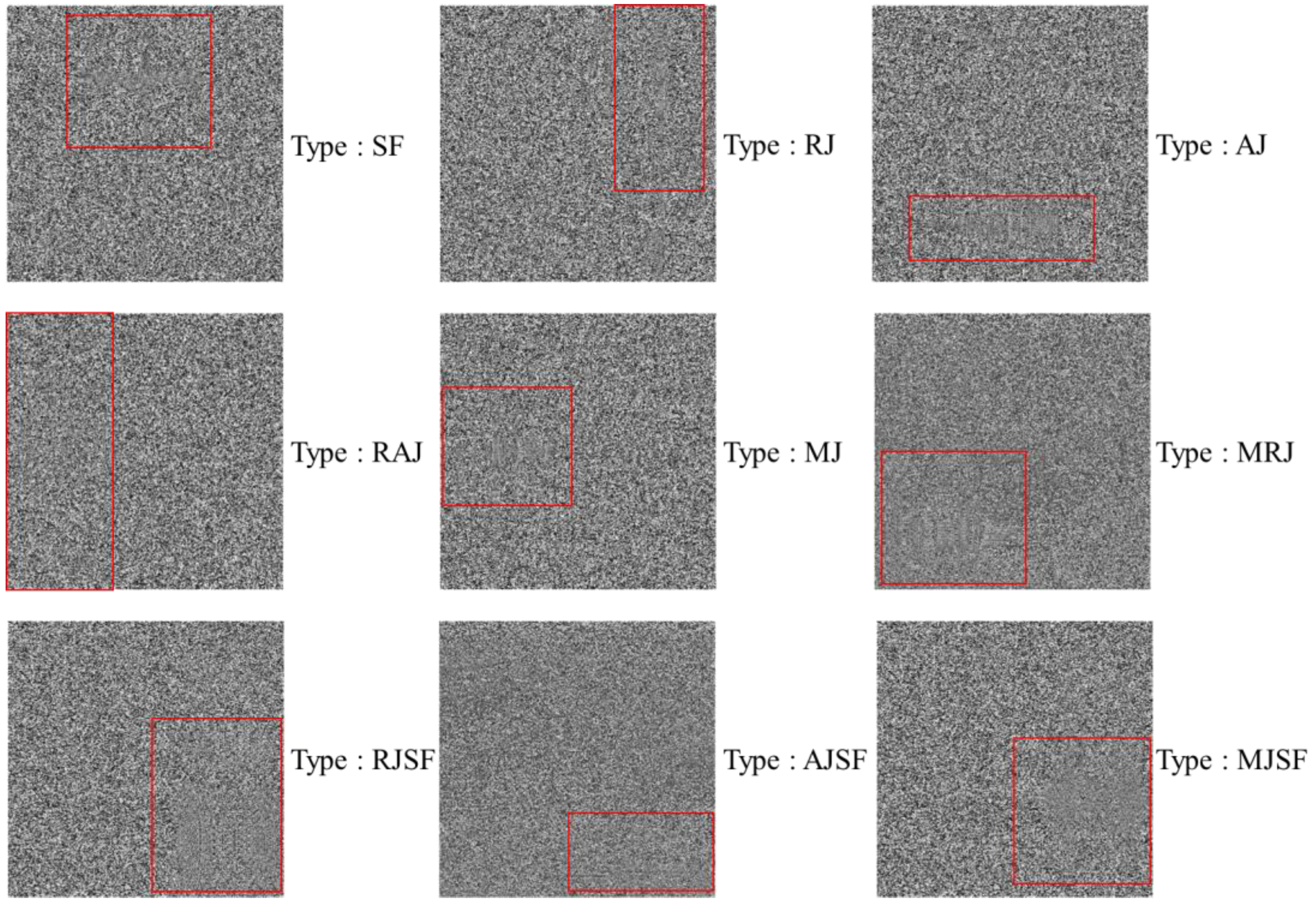

2.3. Establish Dataset

3. Method Design

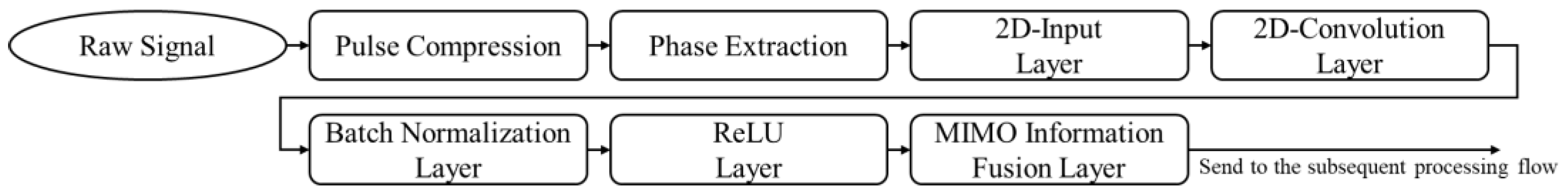

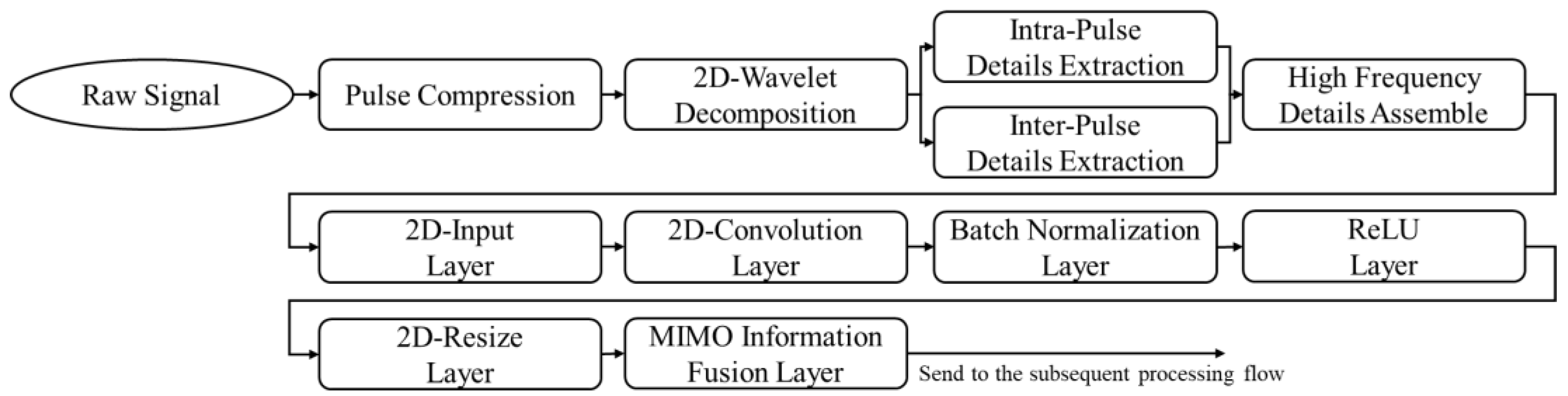

3.1. Network Structure

3.2. Phase Information Processing Branch

3.3. Multi-Scale Information Processing Branch

4. Experimental Verification

4.1. Training and Testing

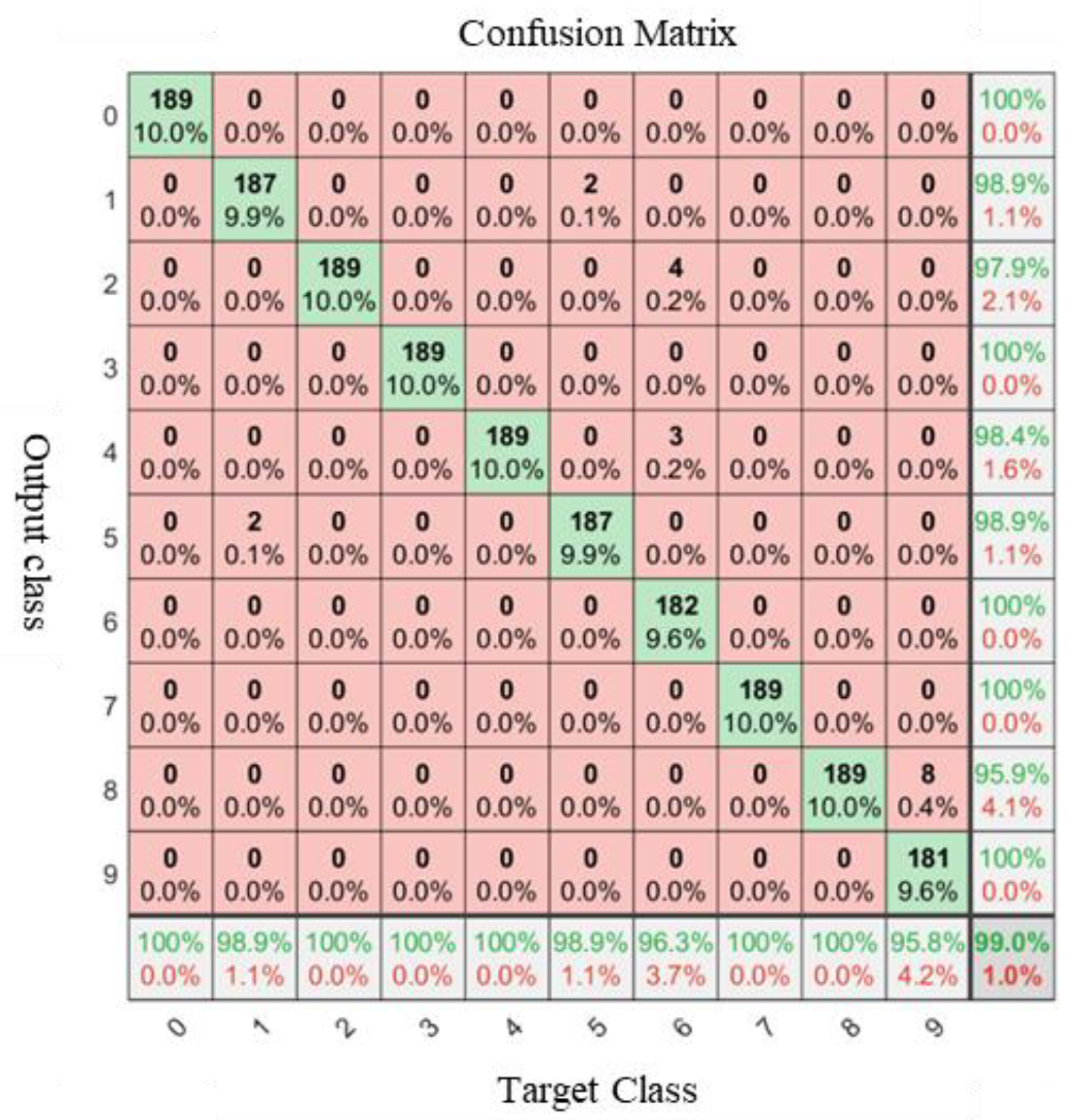

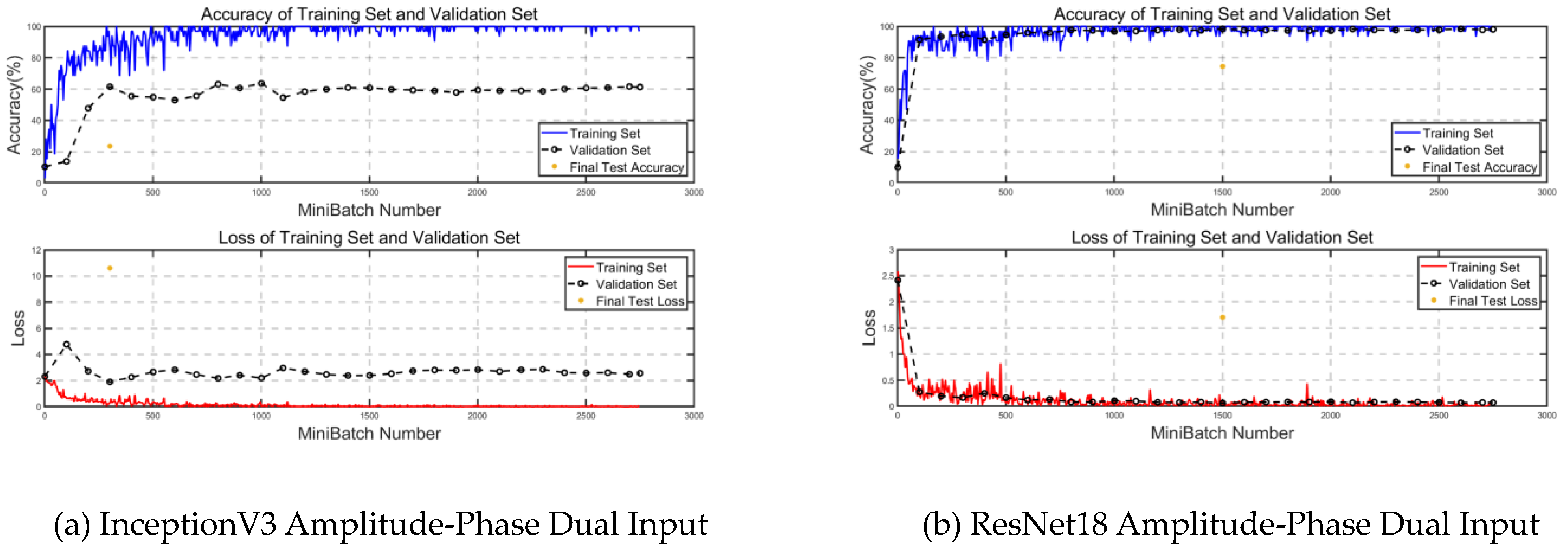

4.2. Comparison Experiment

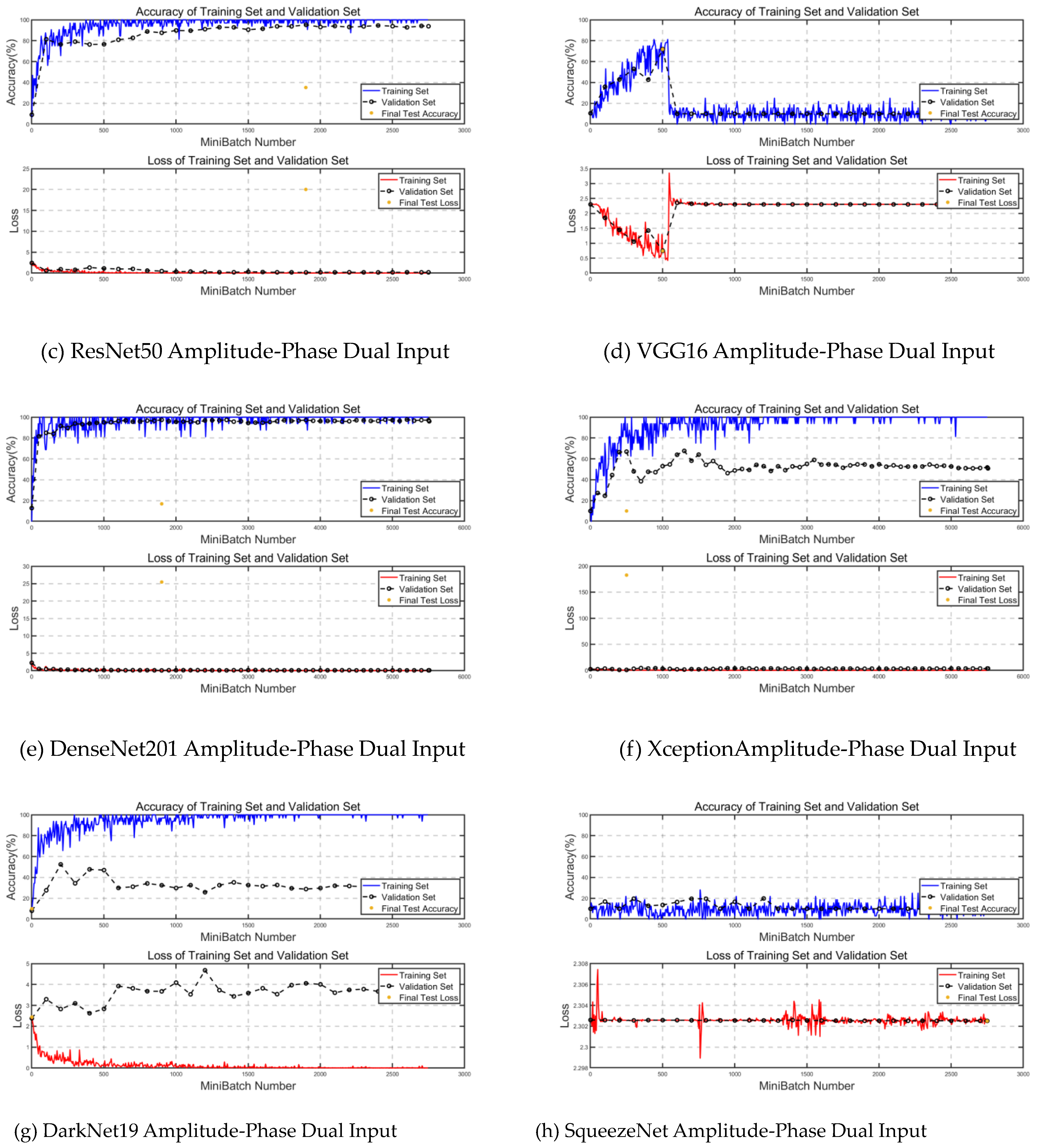

4.3. Ablation Experiment

5. Conclusions and Prospects

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Abbr. | Equations |
|---|---|---|
| Shift Frequency CMF | SF | |
| Range intermittent sampling Jamming CMF | RJ | |
| Azimuth intermittent sampling Jamming CMF | AJ | |
| Range-Azimuth intermittent sampling Jamming CMF | RAJ | |
| Micromotion frequency Jamming CMF | MJ | |
| Micromotion frequency and Range intermittent sampling Jamming CMF | MRJ | |
| Range intermittent sampling Jamming and Shift Frequency CMF | RJSF | |
| Azimuth intermittent sampling Jamming and Shift Frequency CMF | AJSF | |
| Micromotion frequency Jamming and Shift Frequency CMF | MJSF | |
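The equations for these modulation types are not reproduced in the table, so the sketch below does not attempt to restore them. It only illustrates, in one dimension and under textbook assumptions, two of the building blocks named in the table: a frequency shift (multiplication by a complex exponential) and intermittent sampling (gating by a rectangular pulse train), plus their combination. All function names, parameter names, and numeric values are hypothetical.

```python
import numpy as np


def shift_frequency(signal, t, f_shift):
    """Shift the spectrum by f_shift (Hz) via a complex exponential."""
    return signal * np.exp(2j * np.pi * f_shift * t)


def intermittent_sampling(signal, period_samples, on_samples):
    """Gate the signal with a rectangular pulse train.

    The gate is 1 for the first `on_samples` samples of every
    `period_samples`-long period and 0 for the rest.
    """
    index = np.arange(signal.shape[0])
    gate = (index % period_samples) < on_samples
    return signal * gate


# Illustrative values only: 1 kHz sampling, 1 s of a 50 Hz complex tone.
fs = 1000.0
t = np.arange(1000) / fs
tone = np.exp(2j * np.pi * 50.0 * t)

shifted = shift_frequency(tone, t, f_shift=100.0)                   # SF-like
gated = intermittent_sampling(tone, period_samples=100, on_samples=50)
combined = shift_frequency(gated, t, f_shift=100.0)                 # gated, then shifted
```

Gating in the time domain replicates the spectrum at multiples of the gating rate, which is why intermittent sampling tends to produce multiple false targets after matched filtering, while the frequency shift displaces them. The paper's range and azimuth variants would apply such operations along the corresponding dimension of the two-dimensional echo.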

| Feature | Value |
|---|---|
| Total Network Layers | 187 layers |
| Input Branch | 3 branches |
| Output Branch | 2 branches |
| Output Class | 10 classes |
| Parameter Scale | 23.6 million |
| Number of Residual Blocks | 16 blocks |

| Environment | Value | Parameter | Value |
|---|---|---|---|
| CPU | Intel i9-13900K | Optimizer | SGDM |
| GPU | Nvidia RTX 4090 | Initial Learning Rate | 0.01 |
| RAM | 64 GB @ 4000 MHz | Learning Rate Schedule | Piecewise decay |
| OS Version | Windows 11 | Decay Schedule | ×0.5 every 2 epochs |
| Environment Version | MATLAB R2023b | Max Epochs | 10 |
| GPU Driver Version | 546.33 | Mini-Batch Size | 32 |
| CUDA Version | 12.3 | Validation Frequency | Every 100 mini-batches |
| Training Option | GPU only | Output Network | Best Validation Loss |
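The learning-rate settings in the table (initial rate 0.01, multiplied by 0.5 every 2 epochs, 10 epochs in total) can be written out per epoch as in the sketch below. It assumes the drop is applied after every 2 completed epochs, which is how a step-wise ("piecewise") schedule is commonly defined. The function name and the 1-based epoch convention are choices made here, not taken from the paper.

```python
def piecewise_lr(epoch, initial_lr=0.01, drop_factor=0.5, drop_period=2):
    """Learning rate for a 1-based epoch under a step-wise schedule.

    The rate is multiplied by `drop_factor` once for every
    `drop_period` epochs that have been completed.
    """
    if epoch < 1:
        raise ValueError("epoch is 1-based")
    completed_drops = (epoch - 1) // drop_period
    return initial_lr * drop_factor ** completed_drops


# Learning rate for each of the 10 training epochs.
schedule = [piecewise_lr(epoch) for epoch in range(1, 11)]
for epoch, lr in enumerate(schedule, start=1):
    print(f"epoch {epoch:2d}: lr = {lr:.6f}")
```

Under these assumptions the rate is halved four times over the run, so the final two epochs train at one sixteenth of the initial rate.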

| Test Number | Training Duration | Validation Accuracy |
|---|---|---|
| 1 | 82 m 17 s | 98.68% |
| 2 | 66 m 19 s | 86.67% |
| 3 | 83 m 26 s | 99.37% |
| 4 | 81 m 17 s | 99.42% |
| 5 | 66 m 04 s | 98.20% |
| 6 | 65 m 54 s | 99.42% |
| 7 | 68 m 36 s | 99.15% |
| 8 | 66 m 16 s | 99.15% |
| 9 | 67 m 51 s | 99.37% |
| 10 | 65 m 54 s | 99.21% |
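For readers who want to summarize the ten runs themselves, the sketch below parses the durations and computes simple statistics from the rows exactly as listed in the table. The helper name and data layout are hypothetical. The summary is computed only from these ten rows and need not coincide with averages reported elsewhere in the paper, which may have been obtained differently.

```python
# (run number, training duration, accuracy in percent), copied from the table.
runs = [
    (1, "82 m 17 s", 98.68),
    (2, "66 m 19 s", 86.67),
    (3, "83 m 26 s", 99.37),
    (4, "81 m 17 s", 99.42),
    (5, "66 m 04 s", 98.20),
    (6, "65 m 54 s", 99.42),
    (7, "68 m 36 s", 99.15),
    (8, "66 m 16 s", 99.15),
    (9, "67 m 51 s", 99.37),
    (10, "65 m 54 s", 99.21),
]


def to_minutes(text):
    """Convert a duration such as '82 m 17 s' to minutes."""
    minutes, _, seconds, _ = text.split()
    return int(minutes) + int(seconds) / 60.0


accuracies = [acc for _, _, acc in runs]
durations = [to_minutes(duration) for _, duration, _ in runs]

mean_accuracy = sum(accuracies) / len(accuracies)
mean_duration = sum(durations) / len(durations)
worst_run = min(runs, key=lambda run: run[2])[0]

print(f"mean accuracy: {mean_accuracy:.2f}%")
print(f"mean duration: {mean_duration:.2f} min")
print(f"lowest-accuracy run: {worst_run}")
```

Reporting the lowest-accuracy run alongside the mean is useful here because a single run is clearly below the others and pulls the mean down.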

| Network | Average Validation Accuracy | Average Training Time | Test Set Accuracy | Average Storage File Size |
|---|---|---|---|---|
| MIMOFWTNN | 96.96% | 74.21 m | 99.0% | 85.8 MB |
| InceptionV3 | 17.84% | 38.04 m | 23.5% | 81.2 MB |
| ResNet18 | 38.46% | 10.11 m | 57.4% | 40.2 MB |
| ResNet50 | 95.46% | 32.70 m | 88.4% | 85.5 MB |
| VGG16 | 82.79% | 369.39 m | 71.9% | 1.94 GB |
| DenseNet201 | 26.59% | 242.57 m | 60.9% | 73.6 MB |
| Xception | 16.09% | 68.34 m | 82.8% | 76.5 MB |
| DarkNet19 | 9.61% | 28.22 m | 13.0% | 70.6 MB |
| SqueezeNet | 10.48% | 11.38 m | 10.0% | 3.34 MB |

| Network | Average Validation Accuracy | Average Training Time | Test Set Accuracy | Average Storage File Size |
|---|---|---|---|---|
| MIMOFWTNN | 96.96% | 74.21 m | 99.0% | 85.8 MB |
| Only Amplitude | 99.05% | 31.05 m | 83.7% | 85.5 MB |
| Only Phase | 11.69% | 31.64 m | 11.1% | 85.5 MB |
| Dual Addition | 74.21% | 52.64 m | 20.1% | 85.6 MB |
| Dual Depth | 92.50% | 64.70 m | 39.1% | 85.6 MB |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Meng, W.; Cai, Z.; Fang, F.; Feng, D.; Wang, J.; Xing, S.; Quan, S. Detection and Type Recognition of SAR Artificial Modulation Targets Based on Multi-Scale Amplitude-Phase Features. Remote Sens. 2024, 16, 2107. https://doi.org/10.3390/rs16122107
