Self-Paced Multi-Scale Joint Feature Mapper for Multi-Objective Change Detection in Heterogeneous Images

Abstract

1. Introduction

2. Background and Motivation

2.1. Problem Definition

2.2. Related Work

2.3. Evolutionary Multi-Objective Optimization

2.4. Self-Paced Learning

3. Methodology

Algorithm 1. Overall framework of SMJFM

Algorithm 2. MOPSO for change detection in remote sensing images

3.1. Multi-Scale Joint Feature Mapper

3.2. MJFM with Self-Paced Learning (SMJFM)

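- The self-paced regularizer $f$ is a convex function over the sample weight $v \in [0, 1]$, ensuring that the optimal weight $v^{*}$ is unique;

- With all parameters held constant except for $v$ and the sample loss $l$, $v^{*}$ decreases monotonically with $l$. Moreover, as $l$ approaches 0, $v^{*} \to 1$, and as $l$ tends to infinity, $v^{*} \to 0$;

- $v^{*}$ increases monotonically with respect to each of the three remaining parameters.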
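As a hedged illustration of the conditions listed above, the sketch below uses two closed-form weighting rules that are standard in the self-paced learning literature (the hard and the linear scheme). It is not the regularizer used by SMJFM; the function names `hard_weights` and `linear_weights`, the argument `losses`, and the single pace parameter `lam` are assumptions introduced here for illustration.

```python
import numpy as np


def hard_weights(losses, lam):
    """Hard scheme: a sample is kept (weight 1) only if its loss is below lam."""
    losses = np.asarray(losses, dtype=float)
    return (losses < lam).astype(float)


def linear_weights(losses, lam):
    """Linear scheme: weight falls linearly from 1 (loss 0) to 0 (loss >= lam).

    This is the minimizer over v in [0, 1] of v * loss + lam * (0.5 * v**2 - v).
    """
    losses = np.asarray(losses, dtype=float)
    return np.clip(1.0 - losses / lam, 0.0, 1.0)


if __name__ == "__main__":
    # Hypothetical per-sample losses, sorted from easy to hard.
    losses = np.array([0.0, 0.5, 1.0, 2.0, 10.0])
    for lam in (1.0, 4.0):
        print("lam =", lam)
        print("  hard  :", hard_weights(losses, lam))
        print("  linear:", linear_weights(losses, lam))
```

In the linear scheme the weight never increases as the loss grows, equals 1 at zero loss and 0 for large losses, and never decreases when `lam` is raised, which mirrors the monotonicity and limit behaviour stated in the list.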

3.3. Change Detection with Multi-Objective PSO

4. Experiment

4.1. Experimental Settings

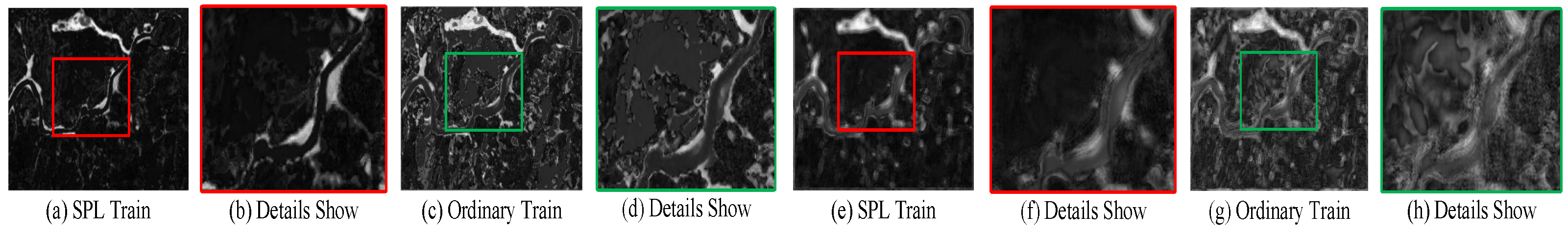

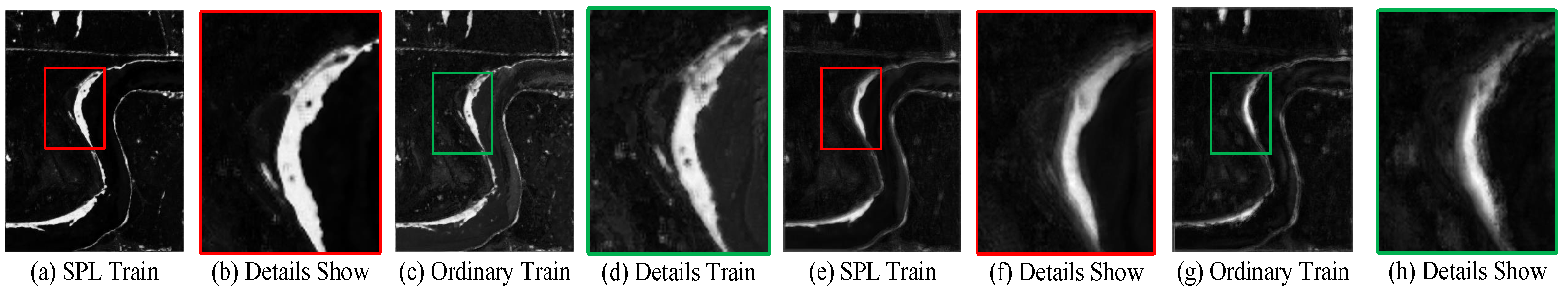

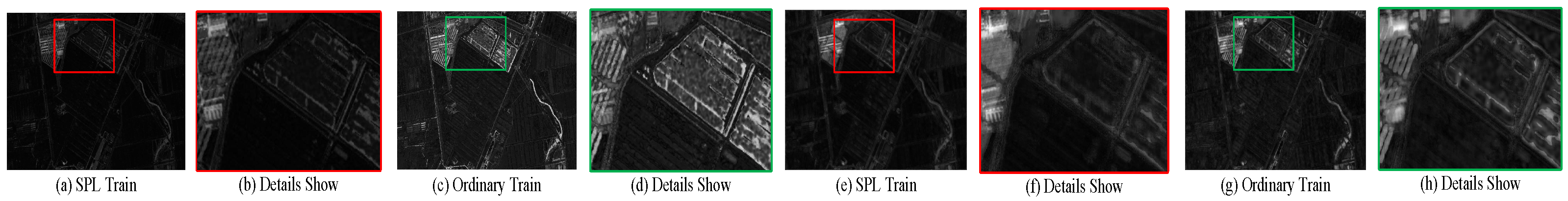

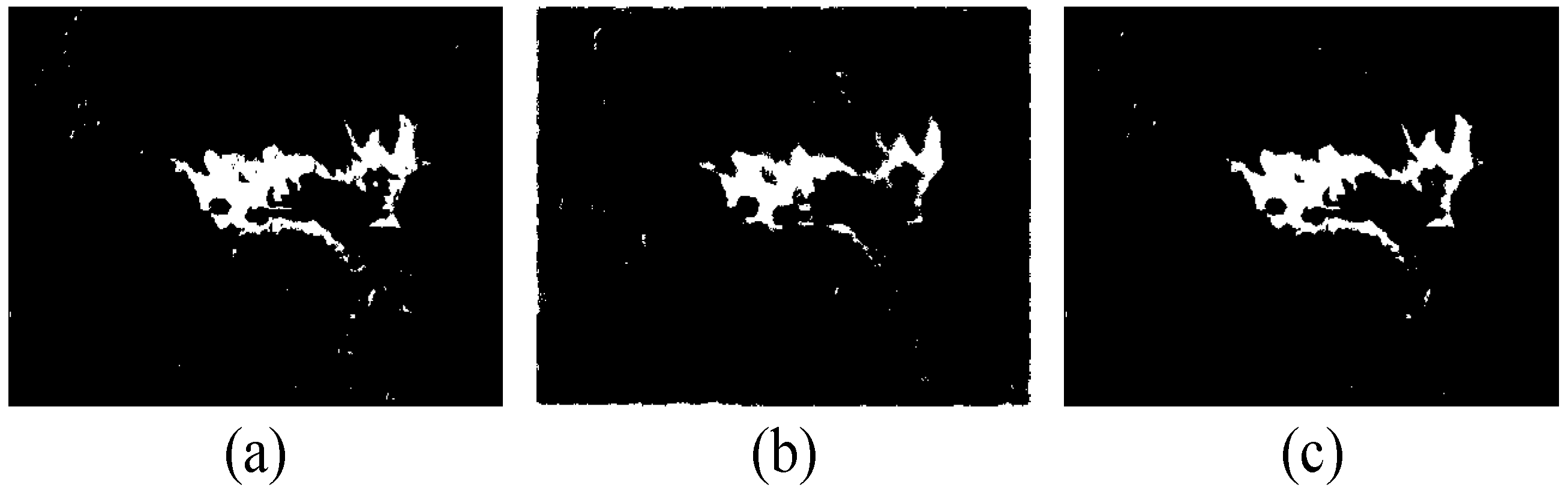

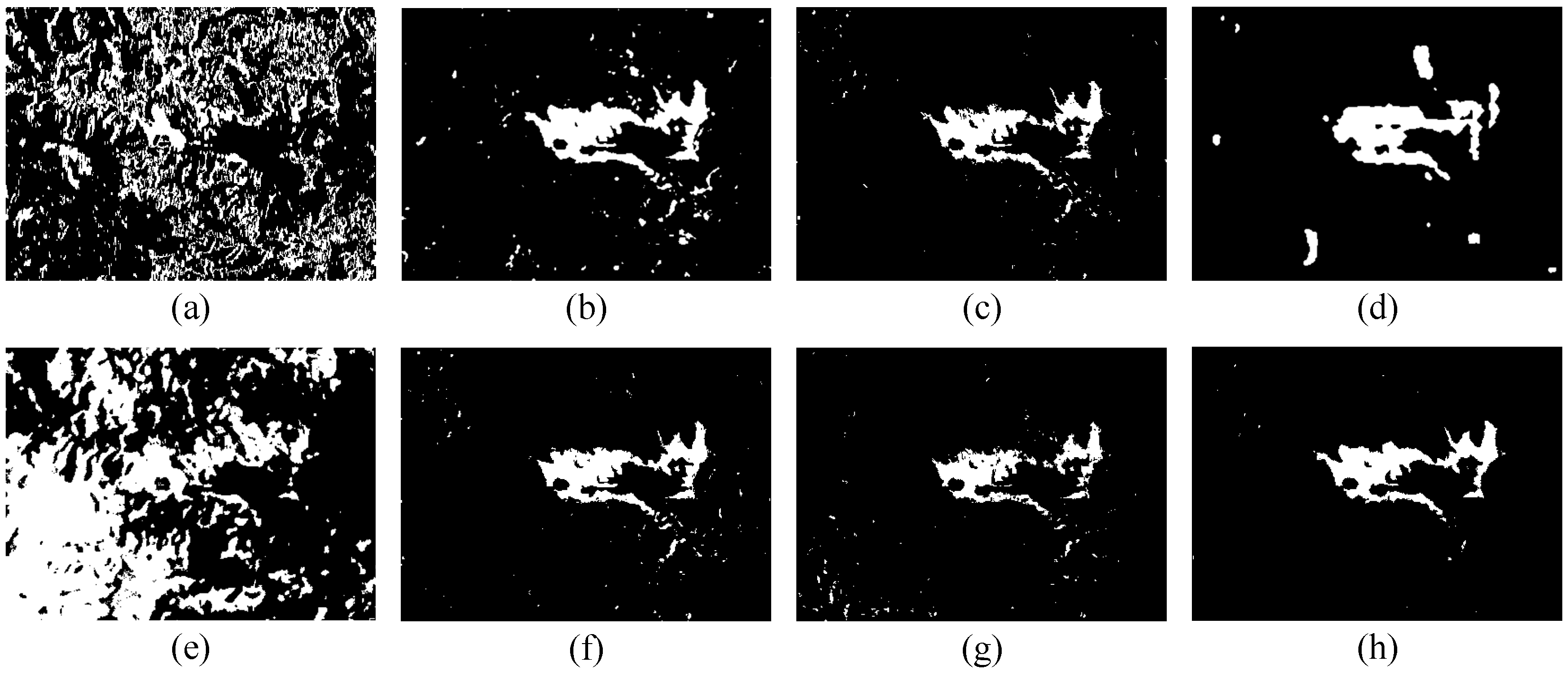

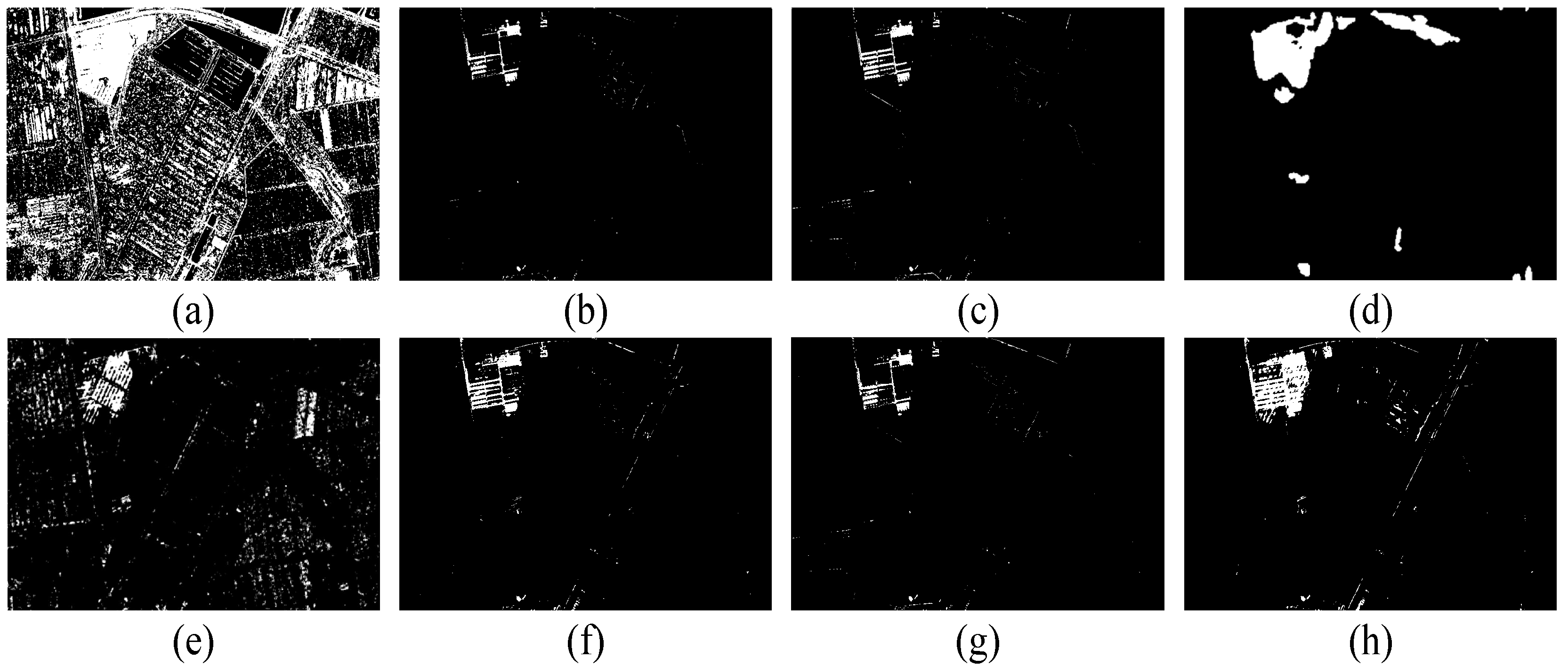

4.2. Test of Self-Paced Learning

4.3. Test of Self-Paced Learning Parameters

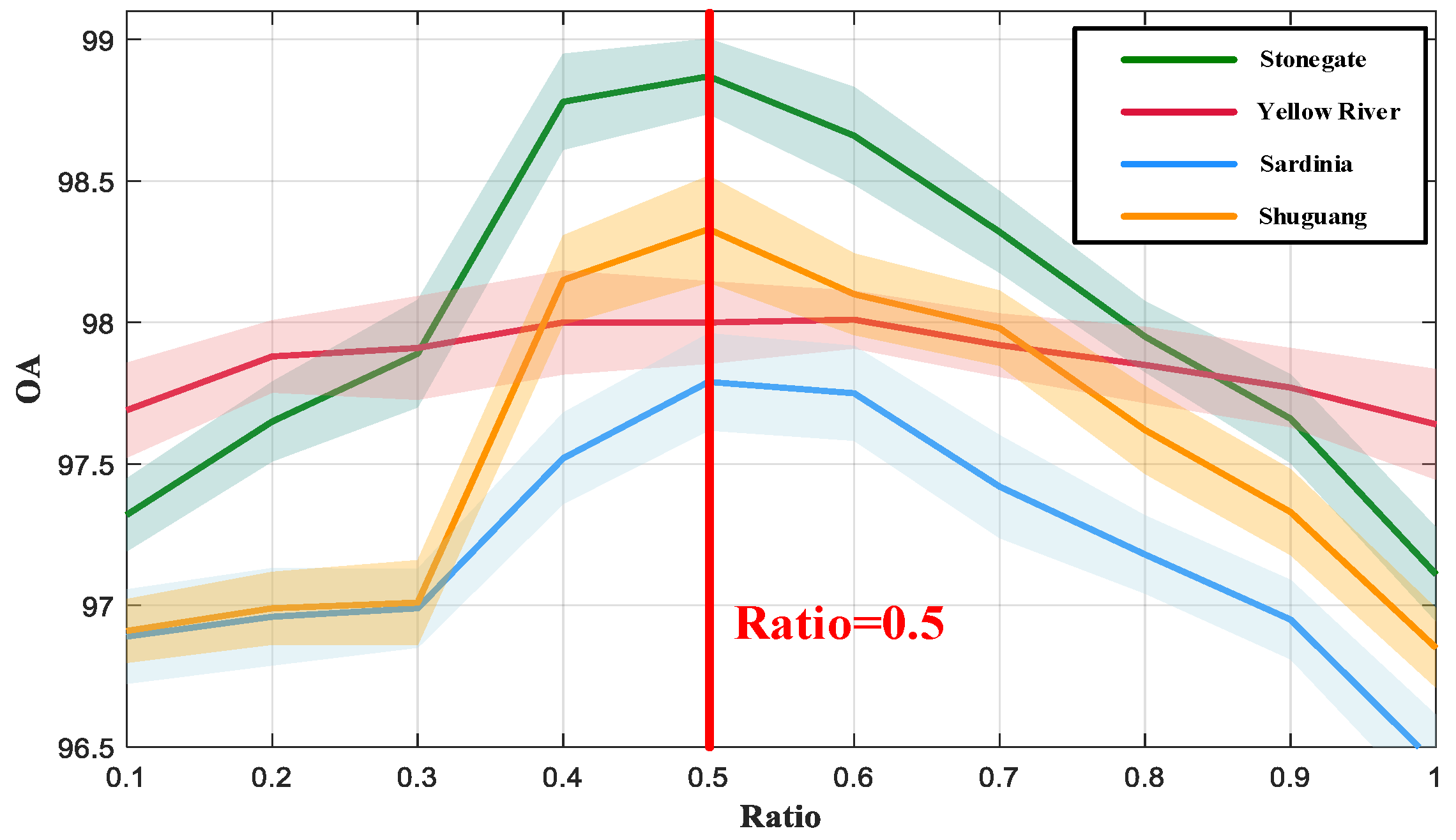

4.4. Test of Multi-Scale Learning

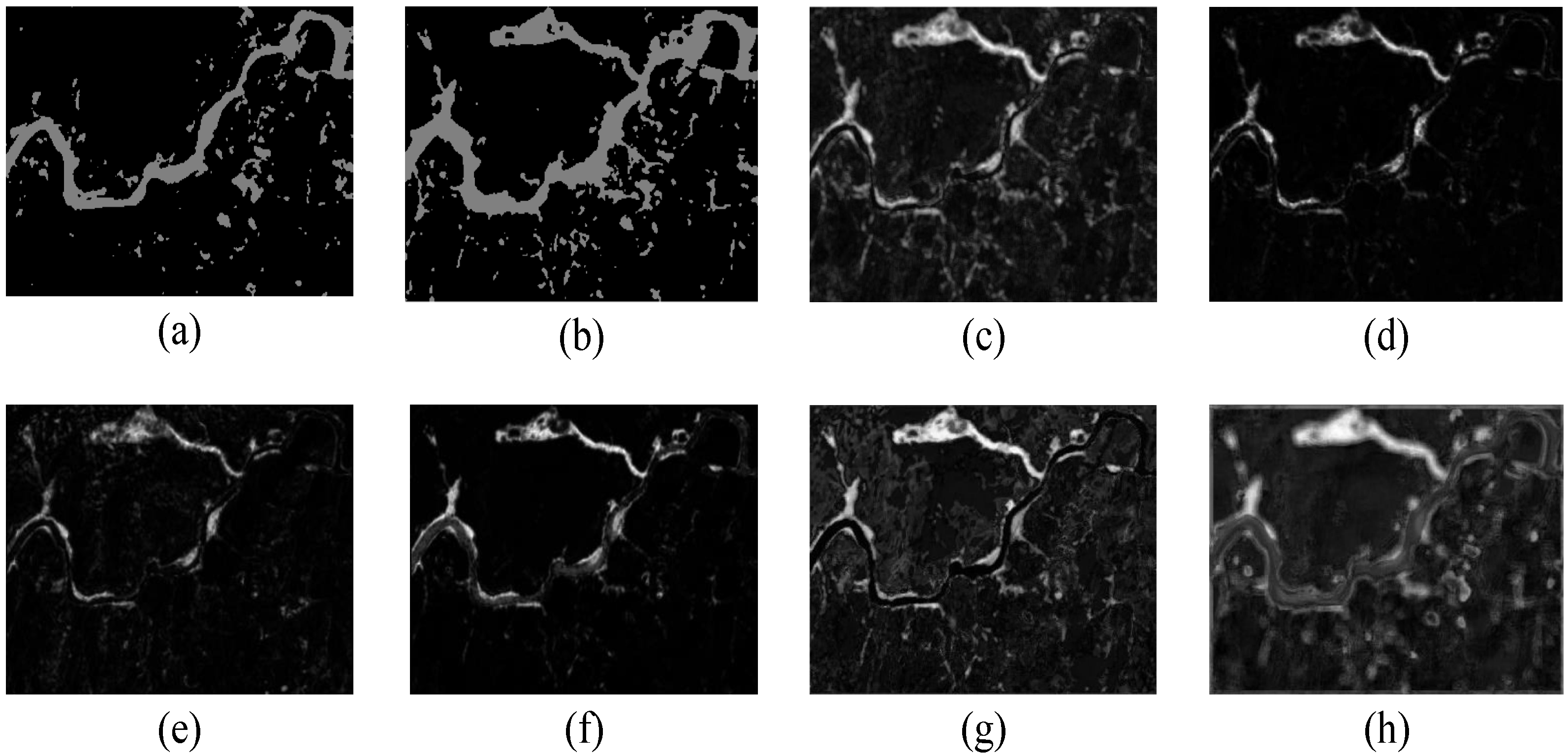

4.5. Test of MOPSO

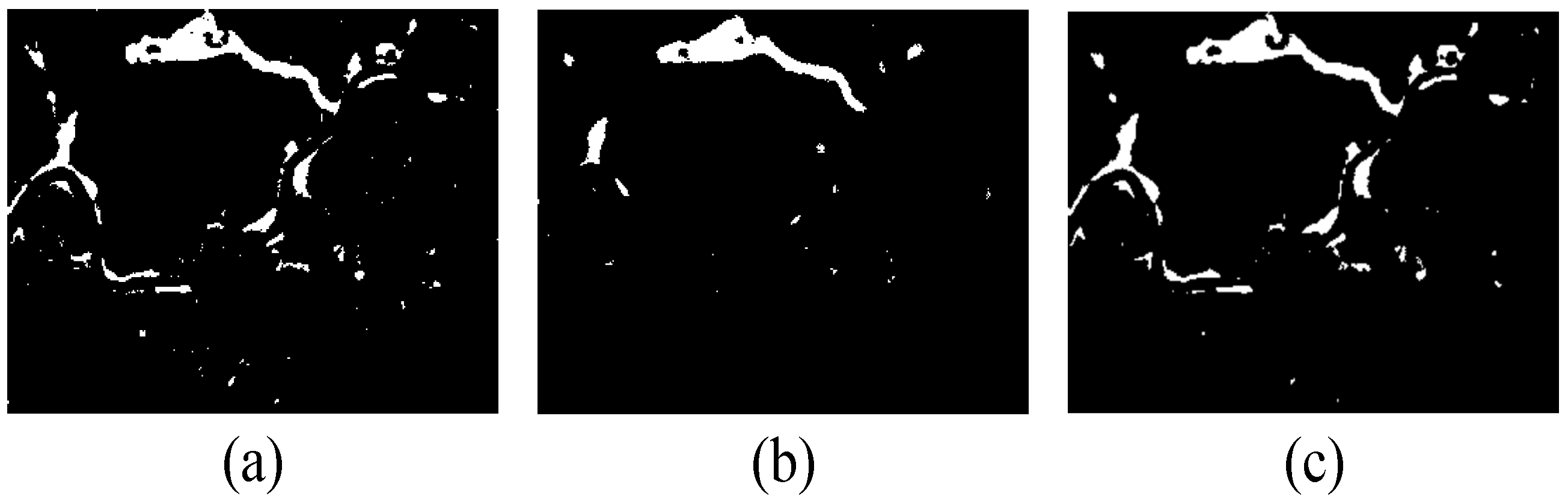

4.6. Test of Robust Evaluation of Change Map

4.7. Test of Patch Size

4.8. Comparison of SMJFM against Other Methods

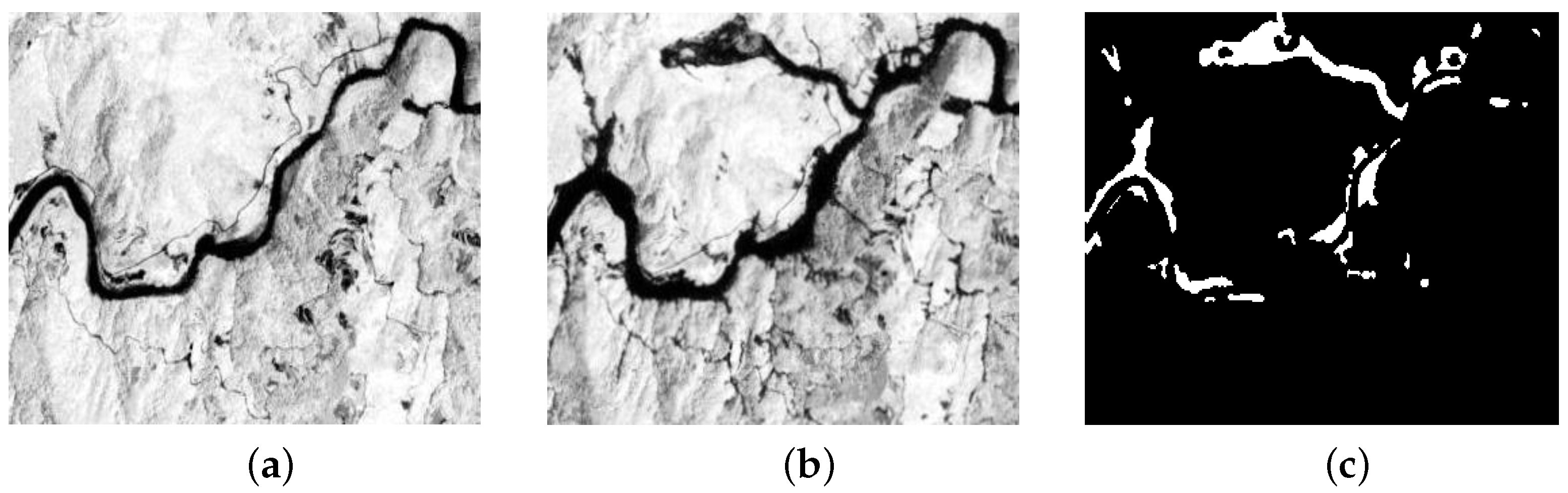

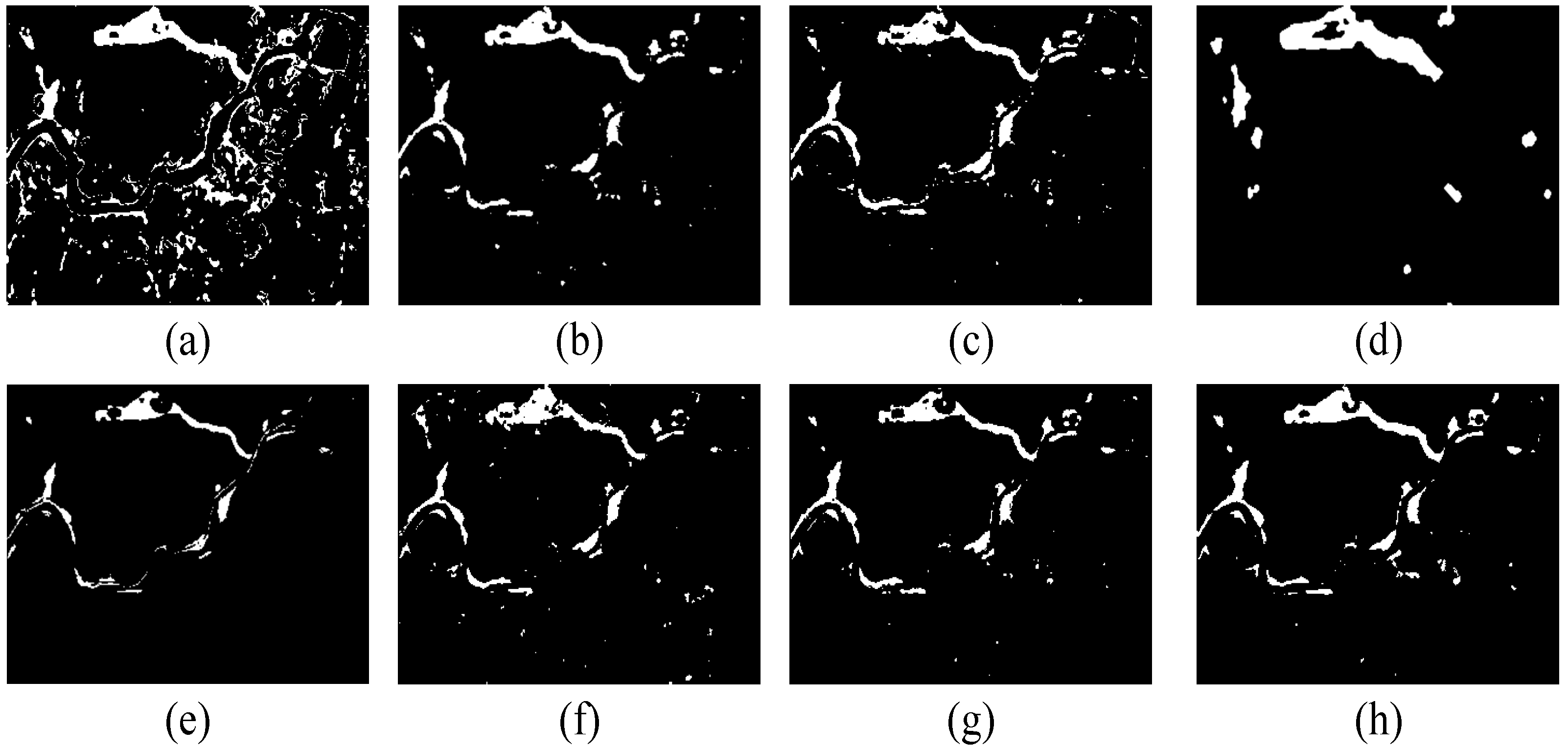

4.8.1. Experiments on the Stone-Gate Data Set

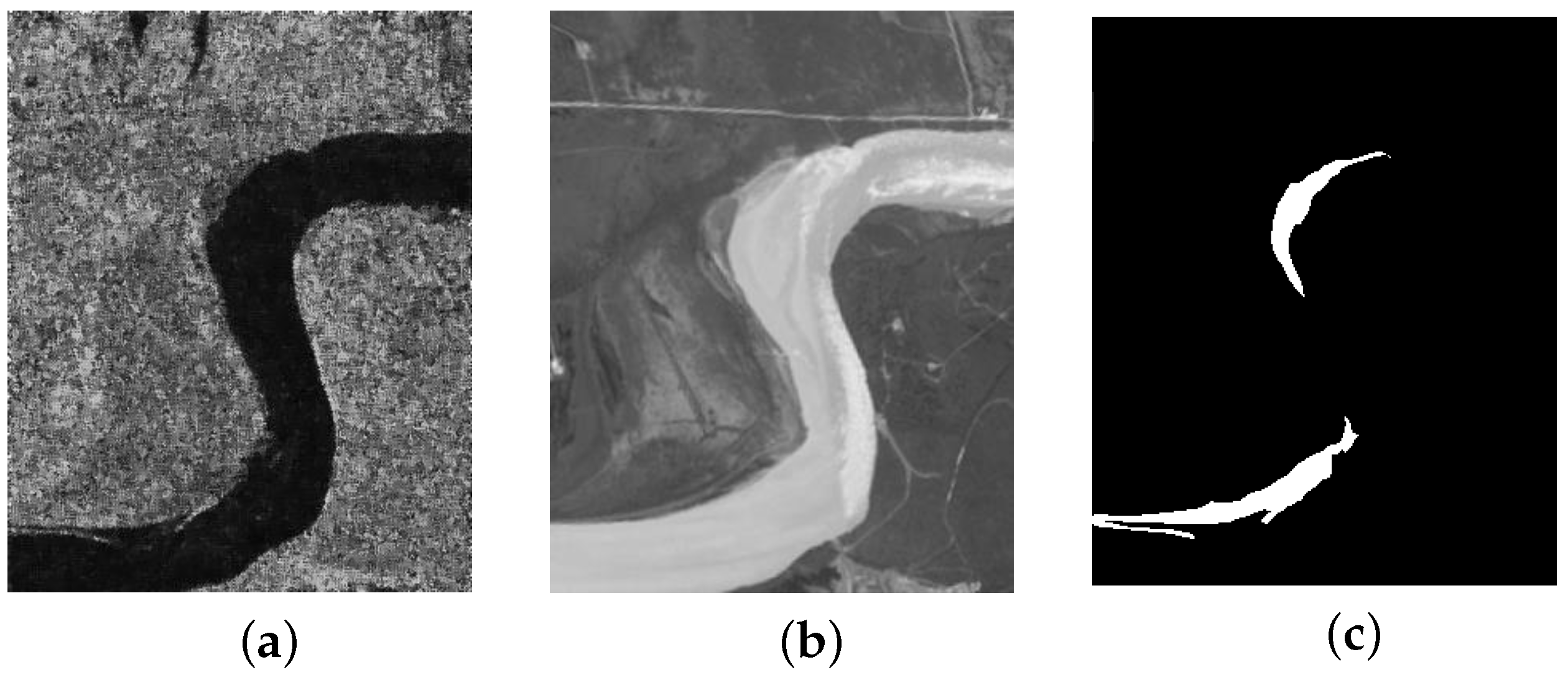

4.8.2. Experiments on the Yellow River Data Set

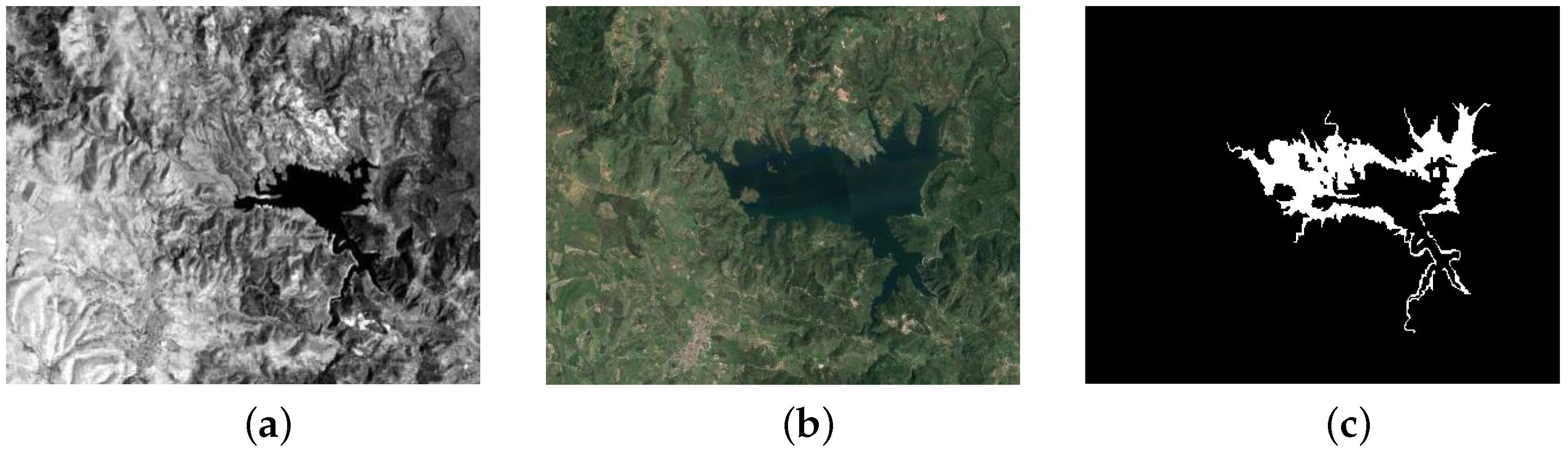

4.8.3. Experiments on the Sardinia Data Set

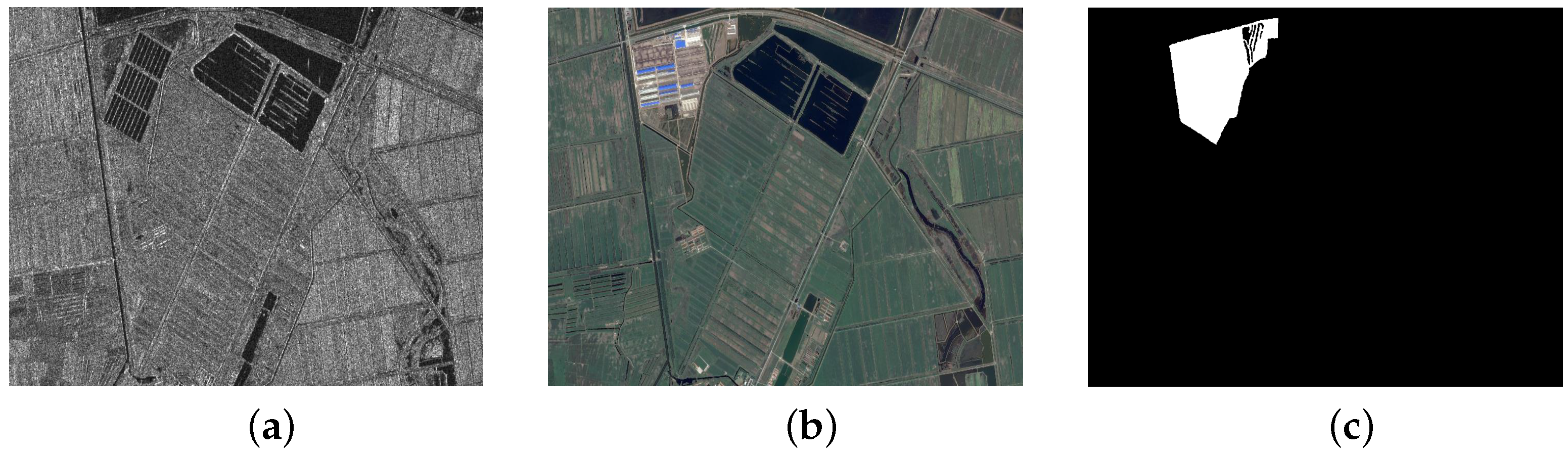

4.8.4. Experiments on the Shuguang Data Set

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Stone-Gate | SMJFM | 216 | 780 | 996 | 98.87 | 0.88 | 80.27 |
| Stone-Gate | JFM-S | 445 | 1009 | 1454 | 98.34 | 0.83 | 72.45 |
| Stone-Gate | JFM-L | 281 | 2785 | 3066 | 96.51 | 0.56 | 40.04 |
| Yellow River | SMJFM | 536 | 1465 | 2001 | 98.00 | 0.62 | 46.31 |
| Yellow River | JFM-S | 895 | 1514 | 2409 | 97.59 | 0.57 | 41.04 |
| Yellow River | JFM-L | 589 | 2031 | 2620 | 97.38 | 0.58 | 30.69 |
| Sardinia | SMJFM | 700 | 2129 | 2829 | 97.79 | 0.78 | 66.02 |
| Sardinia | JFM-S | 791 | 2170 | 2961 | 97.60 | 0.77 | 64.82 |
| Sardinia | JFM-L | 1065 | 3307 | 4372 | 96.46 | 0.64 | 49.70 |
| Shuguang | SMJFM | 1768 | 7358 | 9126 | 98.33 | 0.76 | 61.98 |
| Shuguang | JFM-S | 4050 | 13,010 | 17,060 | 96.88 | 0.50 | 35.09 |
| Shuguang | JFM-L | 2880 | 7886 | 10,766 | 98.03 | 0.72 | 57.13 |

| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Stone-Gate | SMJFM | 216 | 780 | 996 | 98.87 | 0.88 | 80.27 |
| Stone-Gate | CMJFM | 356 | 1101 | 1457 | 98.34 | 0.79 | 71.92 |
| Yellow River | SMJFM | 536 | 1465 | 2001 | 98.00 | 0.62 | 46.31 |
| Yellow River | CMJFM | 733 | 1528 | 2261 | 97.73 | 0.59 | 42.38 |
| Sardinia | SMJFM | 700 | 2129 | 2829 | 97.79 | 0.78 | 66.02 |
| Sardinia | CMJFM | 1328 | 2428 | 3756 | 96.96 | 0.71 | 58.05 |
| Shuguang | SMJFM | 1768 | 7358 | 9126 | 98.33 | 0.76 | 61.98 |
| Shuguang | CMJFM | 3828 | 8119 | 11,947 | 97.81 | 0.69 | 54.16 |

| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Stone-Gate | PCC | 5772 | 1467 | 7239 | 91.77 | 0.44 | 31.73 |
| Stone-Gate | SCCN | 1399 | 312 | 1711 | 98.05 | 0.82 | 72.54 |
| Stone-Gate | CACD | 451 | 1237 | 1688 | 98.08 | 0.80 | 68.05 |
| Stone-Gate | MRF | 2664 | 2904 | 5568 | 93.67 | 0.38 | 25.72 |
| Stone-Gate | CAN | 165 | 2544 | 2709 | 96.92 | 0.61 | 45.79 |
| Stone-Gate | CGCD | 725 | 1608 | 2333 | 97.35 | 0.72 | 58.02 |
| Stone-Gate | PMA | 277 | 1505 | 1782 | 97.97 | 0.78 | 65.12 |
| Stone-Gate | SMJFM | 216 | 780 | 996 | 98.87 | 0.88 | 80.27 |

| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Yellow River | PCC | 6488 | 1396 | 7884 | 92.10 | 0.27 | 18.55 |
| Yellow River | SCCN | 2825 | 481 | 3306 | 96.69 | 0.60 | 45.05 |
| Yellow River | CACD | 573 | 2302 | 2875 | 97.12 | 0.37 | 23.59 |
| Yellow River | MRF | 24,760 | 714 | 25,474 | 74.48 | 0.1120 | 8.86 |
| Yellow River | CAN | 20,958 | 2346 | 23,304 | 76.65 | 0.0125 | 3.50 |
| Yellow River | CGCD | 728 | 2192 | 2920 | 97.07 | 0.39 | 25.49 |
| Yellow River | PMA | 524 | 2328 | 2852 | 97.14 | 0.36 | 23.23 |
| Yellow River | SMJFM | 536 | 1465 | 2001 | 98.00 | 0.62 | 46.31 |

| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Sardinia | PCC | 27,200 | 3962 | 31,162 | 74.79 | 0.10 | 10.52 |
| Sardinia | SCCN | 3015 | 1406 | 4421 | 96.42 | 0.72 | 58.45 |
| Sardinia | CACD | 1284 | 2533 | 3817 | 96.91 | 0.71 | 57.16 |
| Sardinia | MRF | 3246 | 2587 | 5833 | 95.28 | 0.61 | 46.35 |
| Sardinia | CAN | 43,197 | 2616 | 45,813 | 62.93 | 0.08 | 9.86 |
| Sardinia | CGCD | 1561 | 2574 | 4135 | 96.65 | 0.69 | 54.99 |
| Sardinia | PMA | 1072 | 3408 | 4480 | 96.38 | 0.63 | 48.49 |
| Sardinia | SMJFM | 700 | 2129 | 2829 | 97.79 | 0.78 | 66.02 |

| Data Set | Method | FP | FN | OE | OA (%) | Kappa | IoU (%) |
|---|---|---|---|---|---|---|---|
| Shuguang | PCC | 158,414 | 723 | 159,137 | 70.86 | 0.15 | 11.91 |
| Shuguang | SCCN | 600 | 18,513 | 19,113 | 96.50 | 0.27 | 16.29 |
| Shuguang | CACD | 920 | 17,388 | 18,308 | 96.64 | 0.33 | 20.92 |
| Shuguang | MRF | 12,702 | 5174 | 17,876 | 96.73 | 0.64 | 48.83 |
| Shuguang | CAN | 14,173 | 14,242 | 28,415 | 94.80 | 0.33 | 21.95 |
| Shuguang | CGCD | 1499 | 15,664 | 17,163 | 96.86 | 0.42 | 27.68 |
| Shuguang | PMA | 911 | 18,287 | 19,198 | 96.48 | 0.28 | 17.05 |
| Shuguang | SMJFM | 1768 | 7358 | 9126 | 98.33 | 0.76 | 61.98 |
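For readers who want to reproduce the FP, FN, OE, OA, Kappa, and IoU columns reported in the tables, the following is a minimal sketch of how such scores are commonly derived from a binary confusion matrix. It assumes the usual definitions (OE as the sum of false positives and false negatives, Cohen's kappa, and IoU computed on the changed class); the function name `change_detection_metrics` and its arguments are hypothetical, and the article's exact formulas may differ.

```python
def change_detection_metrics(tp, tn, fp, fn):
    """Compute common change-detection scores from pixel counts.

    tp/tn/fp/fn are counts of true-positive, true-negative, false-positive,
    and false-negative pixels, with "changed" taken as the positive class.
    Assumes both classes occur, so that no denominator is zero.
    """
    n = tp + tn + fp + fn
    oe = fp + fn                      # overall error (pixel count)
    oa = (tp + tn) / n                # overall accuracy (fraction)
    # Agreement expected by chance, as used in Cohen's kappa.
    pe = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    kappa = (oa - pe) / (1.0 - pe)
    iou = tp / (tp + fp + fn)         # intersection over union, changed class
    return {"OE": oe, "OA": 100.0 * oa, "Kappa": kappa, "IoU": 100.0 * iou}


if __name__ == "__main__":
    # Synthetic counts chosen for illustration only, not taken from the article.
    scores = change_detection_metrics(tp=40, tn=900, fp=20, fn=40)
    for name, value in scores.items():
        print(name, round(value, 4))
```

The tables give only FP and FN, so the sketch takes all four counts as inputs rather than trying to recover TP and TN from the published scores. The OE column can be checked directly, for example 216 + 780 = 996 for SMJFM on Stone-Gate.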


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Dang, K.; Yang, R.; Song, Q.; Li, H.; Gong, M. Self-Paced Multi-Scale Joint Feature Mapper for Multi-Objective Change Detection in Heterogeneous Images. Remote Sens. 2024, 16, 1961. https://doi.org/10.3390/rs16111961
