DF-UHRNet: A Modified CNN-Based Deep Learning Method for Automatic Sea Ice Classification from Sentinel-1A/B SAR Images

Abstract

1. Introduction

- (1) We propose a U-shaped network architecture built on two fusion modules. Compared with either U-HRNet or HRNet, it requires fewer parameters, fuses semantic features from neighboring scales more effectively, and extracts global contextual information more extensively for summer sea ice classification.

- (2) We design a sea ice concentration extraction method based on the K-means clustering algorithm and a convolution operation. It extracts high-resolution sea ice concentration for the Arctic in summer directly from SAR images, and it is faster and yields finer resolution than traditional methods.

- (3) We propose an optimized deep learning-based workflow for the automatic classification of Arctic summer sea ice from SAR and ice chart data, enabling one-click extraction and identification of sea ice in the region.
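The idea behind the second contribution can be illustrated with a minimal sketch: cluster pixel intensities into two groups, treat one group as ice, and convolve the resulting binary mask with a uniform kernel to obtain a local ice fraction. Everything specific here is an assumption made for illustration, not the authors' implementation: the function names, the deterministic min/max seeding, the 3 × 3 window, the toy image, and the rule that the brighter cluster is ice (which does not always hold for SAR backscatter).

```python
def kmeans_two_clusters(values, iters=20):
    """1-D k-means with k=2, seeded deterministically at the min and max."""
    lo, hi = min(values), max(values)
    for _ in range(iters):
        low_group = [v for v in values if abs(v - lo) <= abs(v - hi)]
        high_group = [v for v in values if abs(v - lo) > abs(v - hi)]
        new_lo = sum(low_group) / len(low_group)
        new_hi = sum(high_group) / len(high_group) if high_group else hi
        if new_lo == lo and new_hi == hi:
            break  # centres stopped moving
        lo, hi = new_lo, new_hi
    return lo, hi


def ice_mask(image, lo, hi):
    """Label a pixel 1 (ice) if it is nearer the brighter centre, else 0.
    Assumption for this sketch only: the brighter cluster is ice."""
    return [[1 if abs(v - hi) < abs(v - lo) else 0 for v in row] for row in image]


def concentration(mask, window=3):
    """Convolve the binary mask with a uniform window x window kernel.
    Border pixels average over the part of the window inside the image."""
    h, w, r = len(mask), len(mask[0]), window // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            cells = [mask[y][x]
                     for y in range(max(0, i - r), min(h, i + r + 1))
                     for x in range(max(0, j - r), min(w, j + r + 1))]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out


# Toy "image": bright pixels on the left, dark pixels on the right.
image = [
    [0.9, 0.8, 0.1, 0.1],
    [0.9, 0.7, 0.2, 0.1],
    [0.8, 0.9, 0.1, 0.2],
    [0.9, 0.8, 0.2, 0.1],
]
lo, hi = kmeans_two_clusters([v for row in image for v in row])
mask = ice_mask(image, lo, hi)
sic = concentration(mask, window=3)
```

The direct window average is used only for readability; on full SAR scenes the same uniform-kernel convolution could be evaluated in the frequency domain, which is what an FFT-based "fast convolution" refers to.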

2. Materials and Methods

2.1. Ice Charts

2.2. SAR Imagery

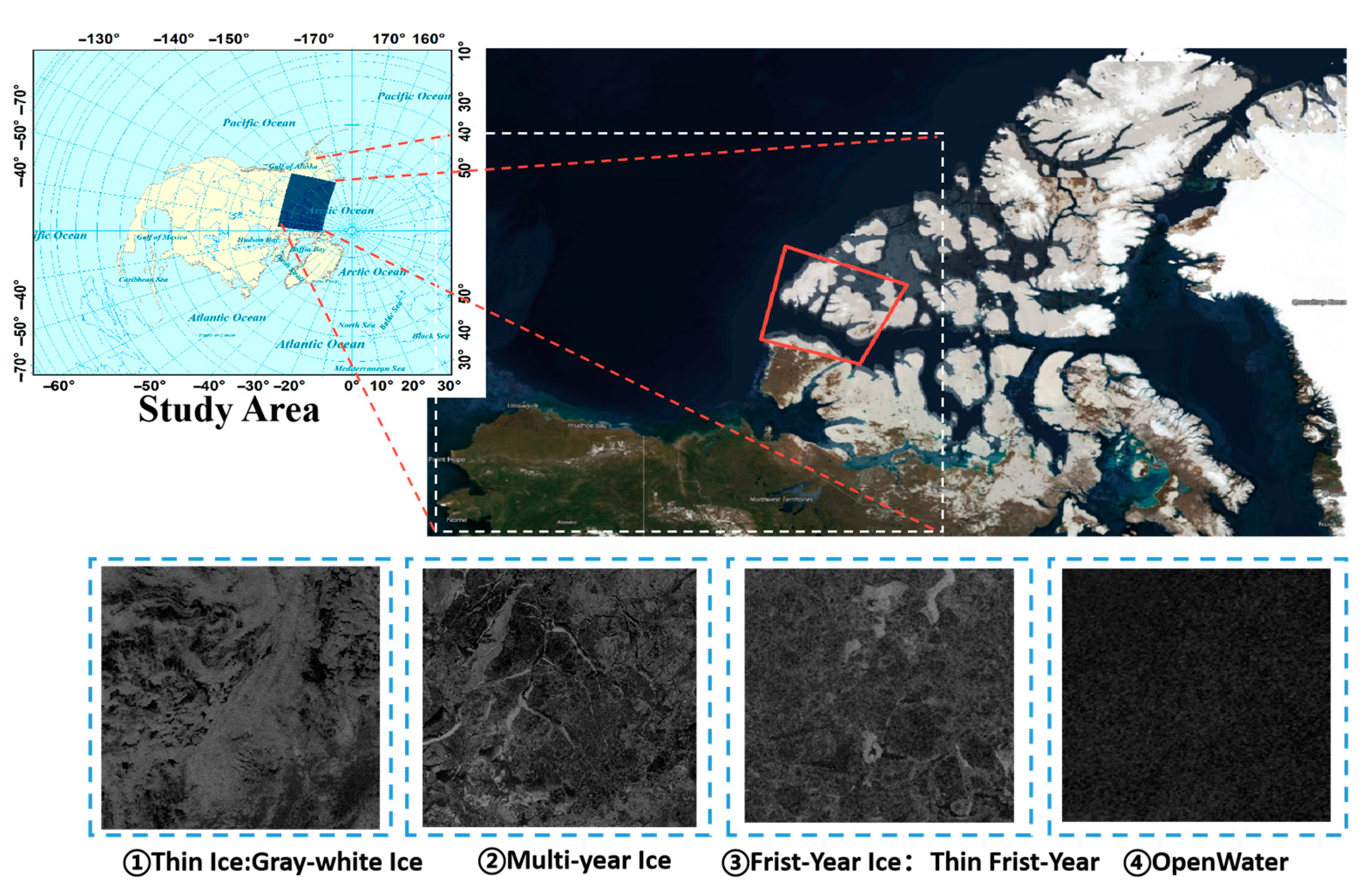

2.3. Description of the Study Area

2.4. Overall Process of Sea Ice Image Segmentation

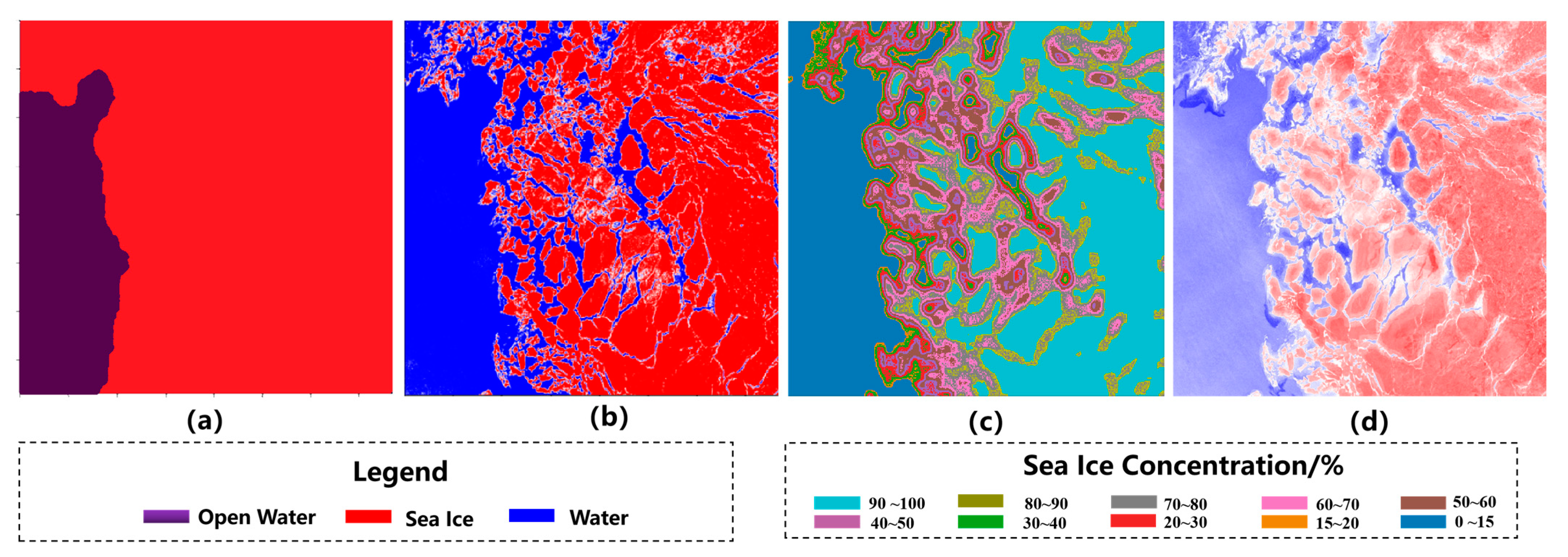

2.5. Extraction of High-Resolution Sea Ice Concentration

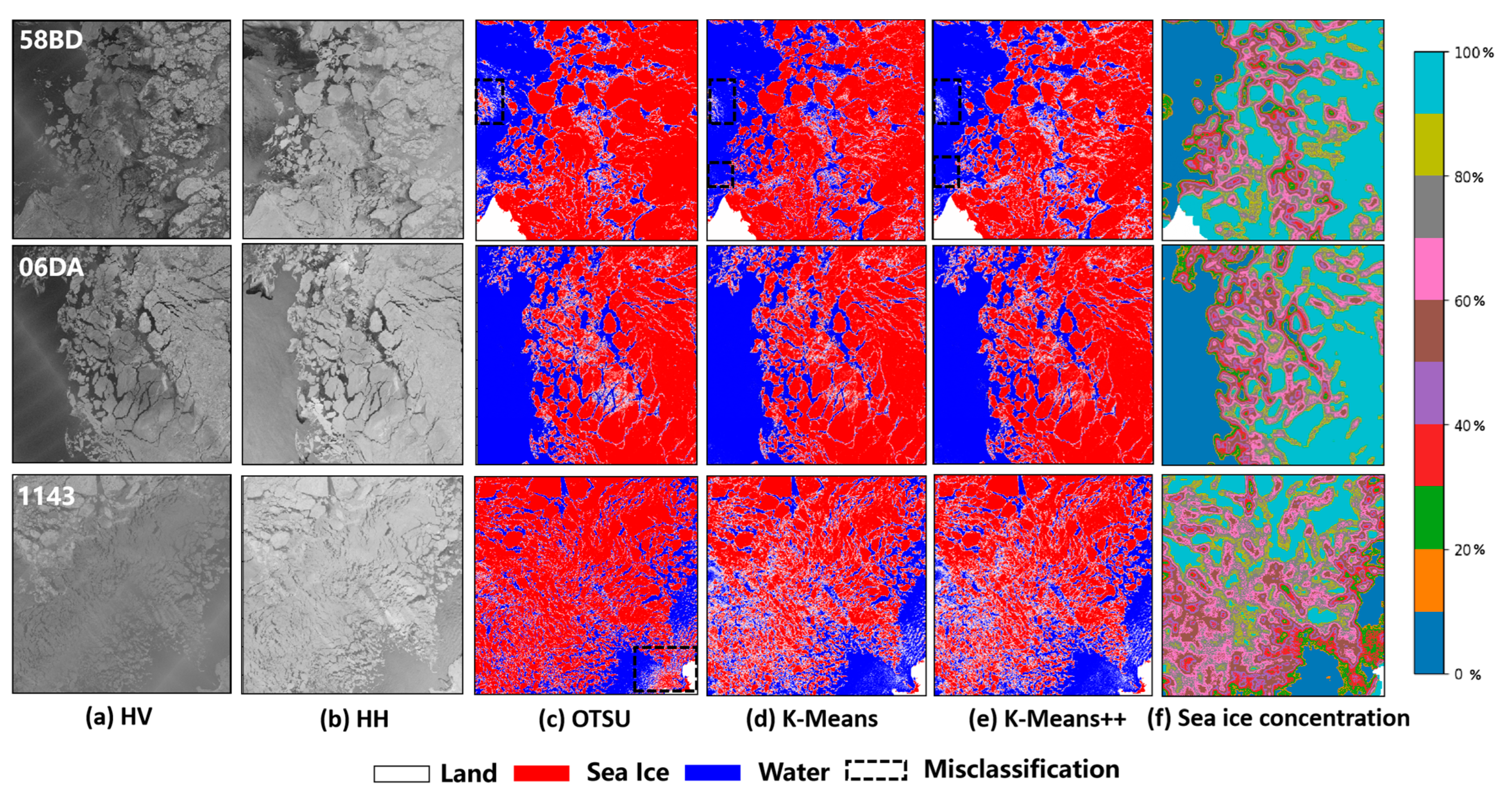

2.5.1. Classification of Ice and Water

2.5.2. Calculation Method of Sea Ice Concentration

2.6. Architecture of DF-UHRNet

2.6.1. Main Body

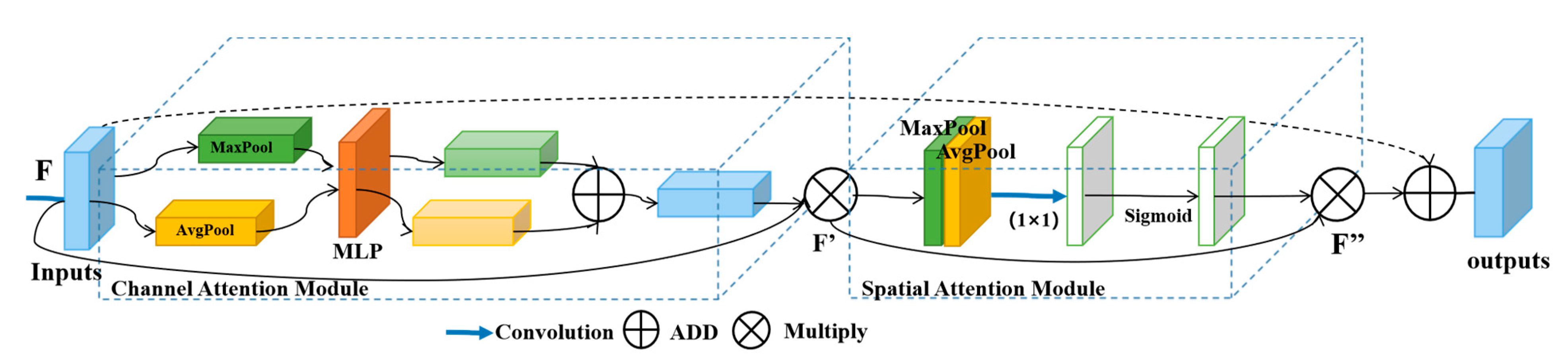

2.6.2. Attention Module

2.6.3. Low-Level and High-Level Fusion Module

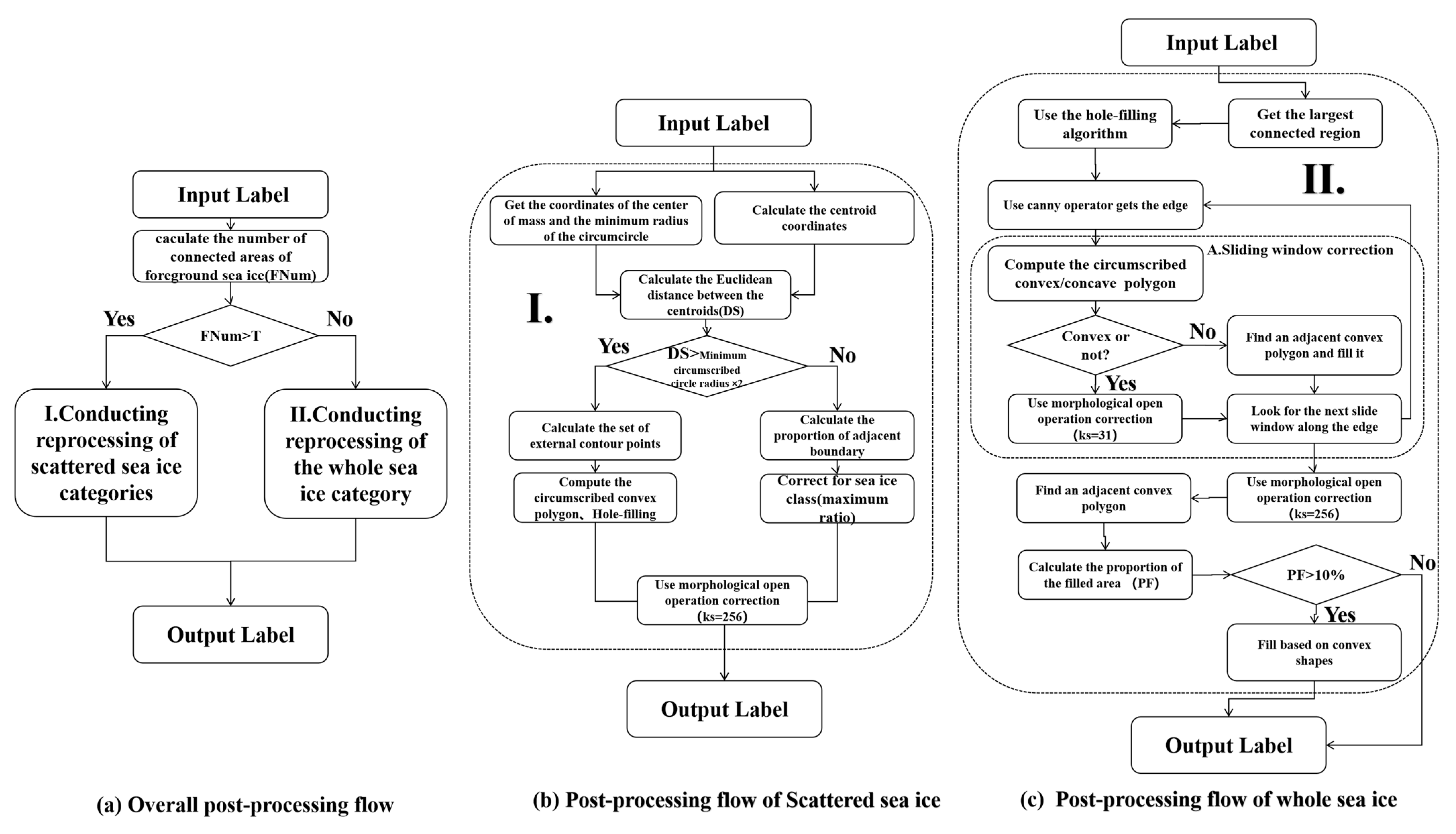

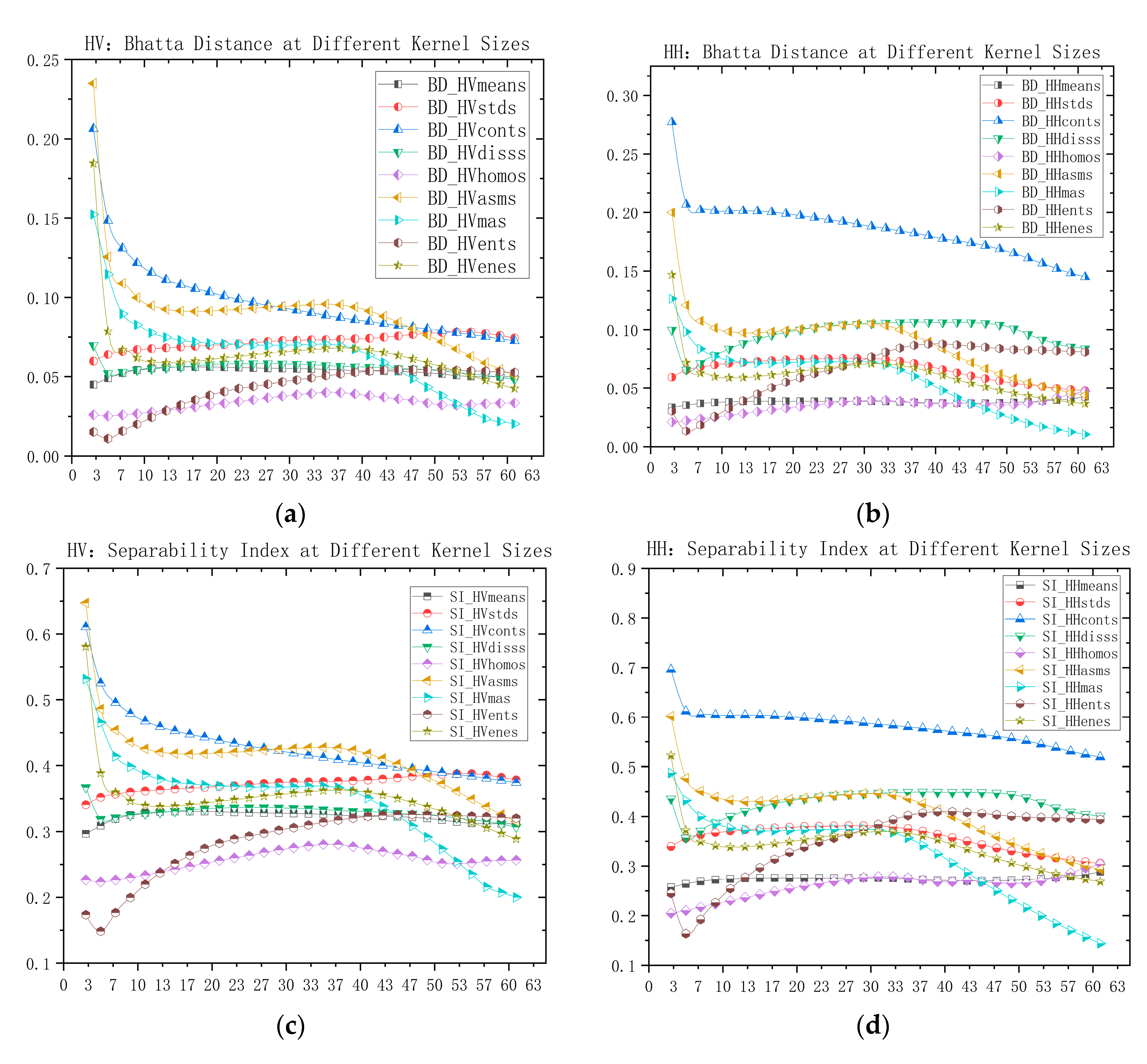

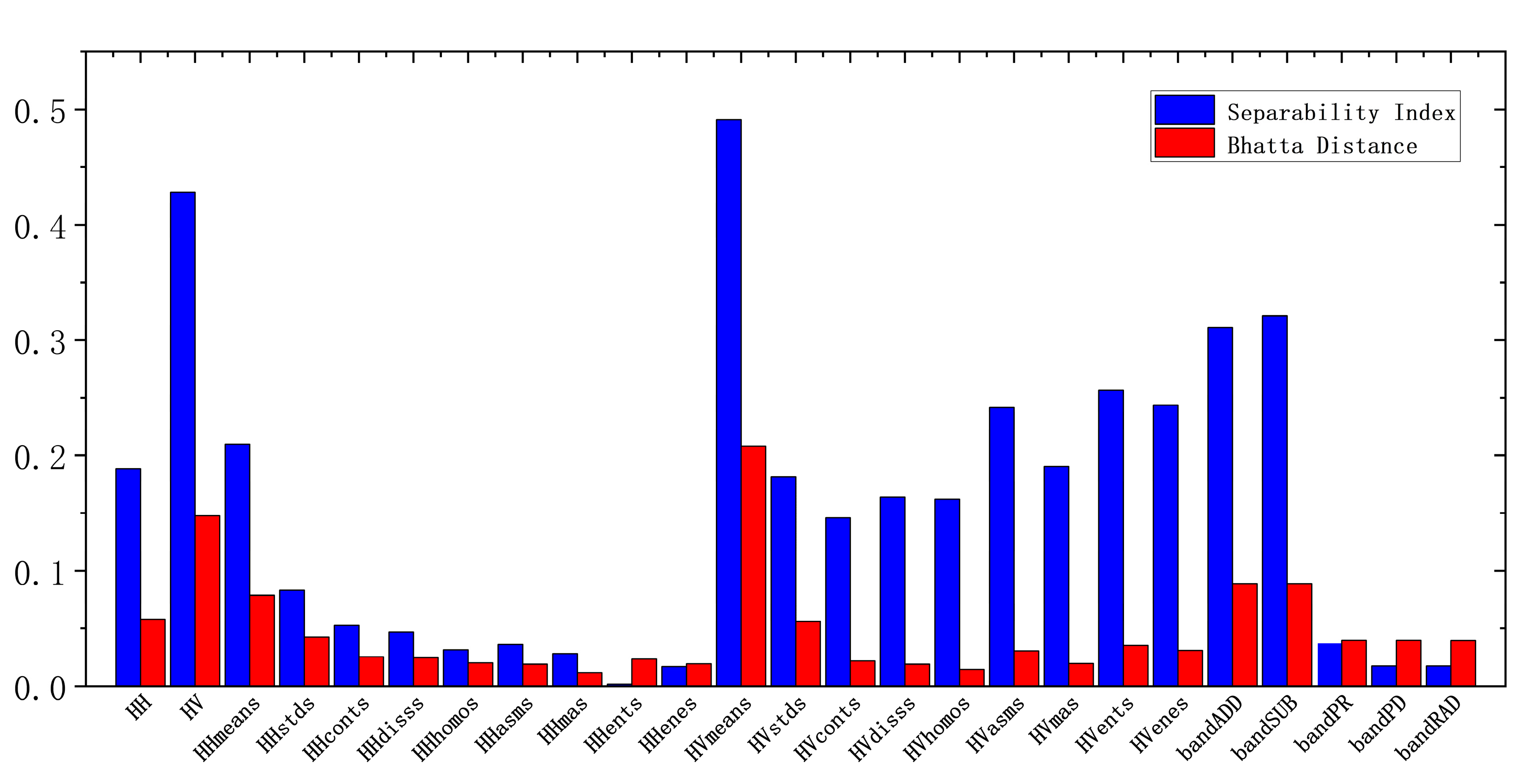

2.6.4. Post-Processing

2.7. Accuracy Metric

3. Experiments and Analysis

3.1. Data Selection and Usage

3.2. Experimental Design

3.3. Experimental Results

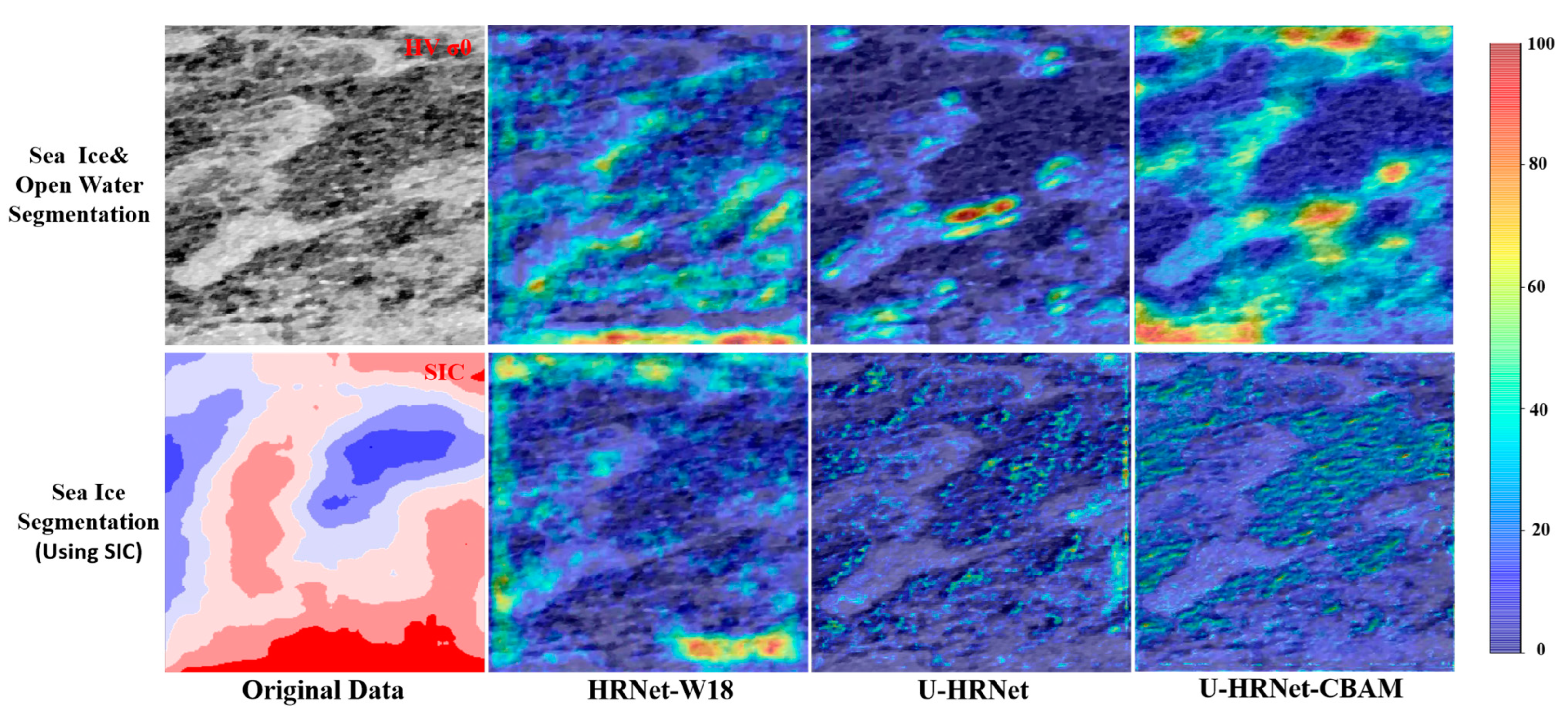

3.3.1. Ablation Study

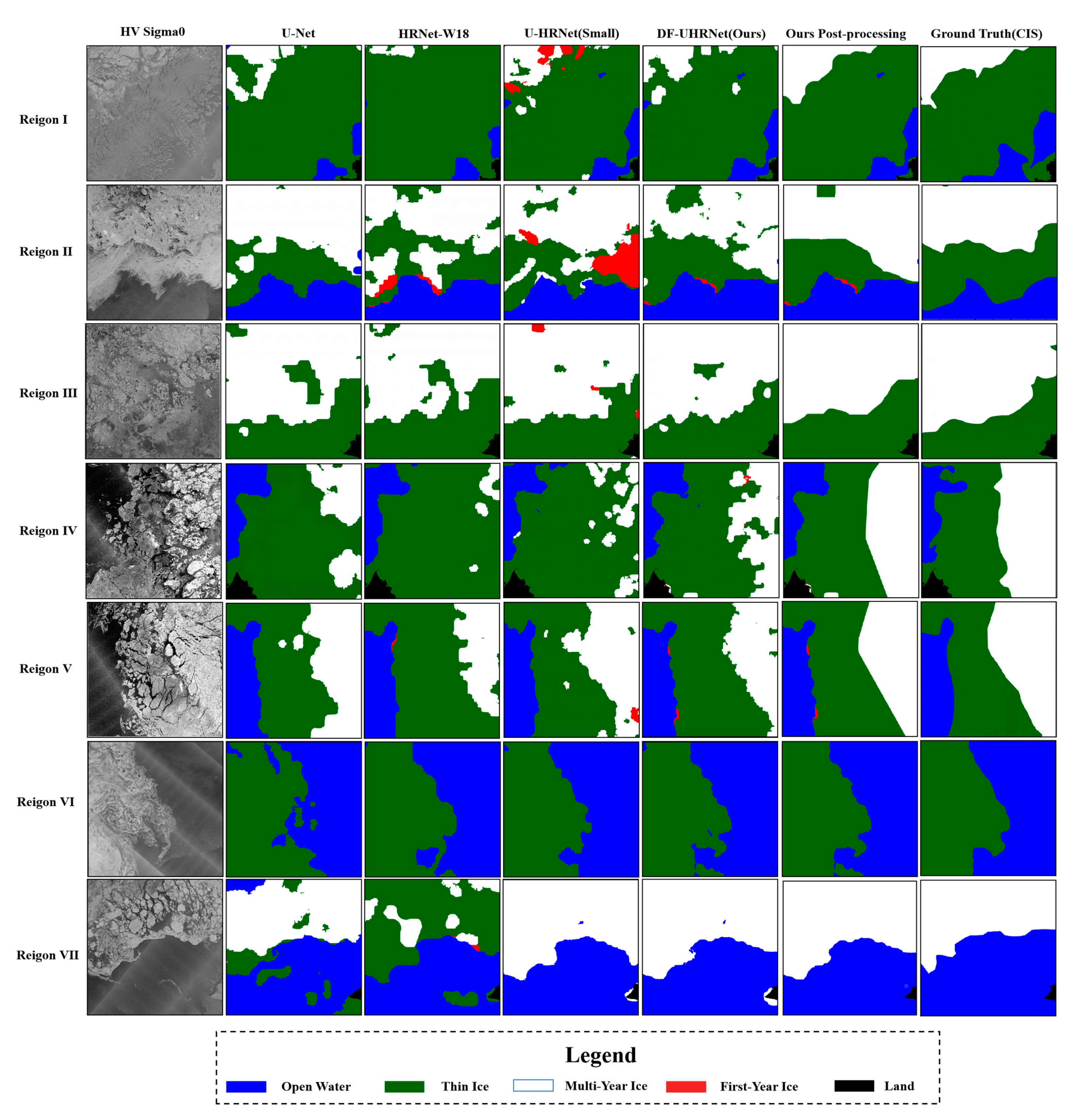

3.3.2. Comparison of Experimental Results

4. Conclusions

- (1) A method is proposed for extracting sea ice concentration using the K-means++ clustering algorithm and a fast convolution operation. Because the concentration is extracted directly from SAR images, quickly and accurately, it reflects the spatial distribution of sea ice very effectively. Compared with directly importing sea ice concentration products derived from radiometric observations, this method offers higher spatial resolution and is temporally matched to the SAR features.

- (2) A new fully convolutional neural network, DF-UHRNet, is proposed. Its dual-scale fusion modules fuse high-resolution, weakly semantic features (which capture sea ice edges) with low-resolution, strongly semantic features (which capture the abstract morphology of sea ice) more effectively. Because an atrous convolution pyramid module is added to the high-level fusion module, the receptive field of the convolution kernel is expanded without loss of resolution (no downsampling), so sea ice semantic features are extracted more effectively. The two fusion modules were designed both to fuse features from adjacent scales and to reduce the total number of model parameters.

- (3) The method achieves fully automated sea ice classification across the entire workflow. No step requires additional human intervention, and the fully convolutional neural network enables end-to-end semantic segmentation of sea ice. It thus contributes to the fully automated mapping of Arctic sea ice.
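The claim in conclusion (2) that atrous (dilated) convolution enlarges the receptive field without reducing resolution can be checked with a small one-dimensional sketch. A kernel of size k with dilation rate d spans k + (k − 1)(d − 1) input samples, while a stride of 1 with "same" padding keeps the output as long as the input. The function names, the three-tap kernel, and the dilation rates below are illustrative assumptions; the rates used in DF-UHRNet are not stated in this section.

```python
def effective_kernel_size(kernel_size, dilation):
    """Number of input samples spanned by one dilated kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, kernel, dilation=1):
    """'Same'-padded 1-D dilated convolution (cross-correlation, stride 1).
    Assumes an odd kernel length; samples outside the signal count as zero."""
    k = len(kernel)
    half_span = dilation * (k - 1) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for t, w in enumerate(kernel):
            j = i - half_span + t * dilation  # input index read by tap t
            if 0 <= j < n:
                acc += w * signal[j]
        out.append(acc)
    return out


# A unit impulse shows which output positions "see" the input at index 4.
impulse = [0.0] * 9
impulse[4] = 1.0
kernel = [1.0, 1.0, 1.0]

dense = dilated_conv1d(impulse, kernel, dilation=1)   # ordinary convolution
atrous = dilated_conv1d(impulse, kernel, dilation=2)  # dilated convolution

dense_hits = [i for i, v in enumerate(dense) if v != 0.0]
atrous_hits = [i for i, v in enumerate(atrous) if v != 0.0]
```

With the same three weights, the dilated kernel reaches inputs two samples away instead of one, and the output length is unchanged. A pyramid module of the ASPP kind runs several such branches with different rates in parallel and merges their outputs.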

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CIS | Canadian Ice Service |
| CBAM | Convolutional Block Attention Module |
| CNN | Convolutional Neural Network |
| ResNet | Residual Network |
| CRF | Conditional Random Field |
| AARI | Arctic and Antarctic Research Institute |
| HRNet | High-Resolution Network |
| U-HRNet | U-Shaped High-Resolution Network |
| U-Net | U-Shaped Convolutional Network |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| BD | Bhattacharyya Distance |
| SI | Separability Index |
| SIC | Sea Ice Concentration |
| ASPP | Atrous Spatial Pyramid Pooling |
| SAR | Synthetic Aperture Radar |
| HDC | Hybrid Dilated Convolution |
| IMO | International Maritime Organization |
| PCA | Principal Component Analysis |
| EW | Extra-Wide Swath |
| GLCM | Gray-Level Co-occurrence Matrix |
| SVM | Support Vector Machine |
| FFT | Fast Fourier Transform |


| Feature Name | SI | BD | Feature Name | SI | BD |
|---|---|---|---|---|---|
| | 0.18855 | 0.05806 | | 0.01155 | 0.03611 |
| | 0.14773 | 0.42784 | | 0.02339 | 0.02824 |
| | 0.03951 | 0.01751 | | 0.01925 | 0.00186 |
| | 0.08892 | 0.32102 | | 0.20775 | 0.01695 |
| | 0.08884 | 0.31103 | | 0.05596 | 0.49085 |
| | 0.03961 | 0.03763 | | 0.02176 | 0.18168 |
| | 0.03965 | 0.01751 | | 0.01894 | 0.14584 |
| 1 | 0.07868 | 0.20947 | | 0.01436 | 0.16384 |
| | 0.04251 | 0.08298 | | 0.03069 | 0.16254 |
| | 0.02537 | 0.05249 | | 0.01954 | 0.24148 |
| | 0.02459 | 0.04681 | | 0.03535 | 0.19041 |
| | 0.02007 | 0.03159 | | 0.03105 | 0.25662 |
| | 0.01896 | 0.03611 | | 0.01155 | 0.24384 |
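For readers unfamiliar with the BD column, the Bhattacharyya distance between two classes is commonly computed under a Gaussian assumption from the class means and variances of a single feature. The sketch below uses that standard univariate closed form. The function name and the toy samples are hypothetical, and the exact estimator used to produce the table (as well as the definition of SI) is not given in this section, so this should be read as background rather than as a reproduction.

```python
import math
from statistics import fmean, pvariance


def bhattacharyya_distance(a, b):
    """Bhattacharyya distance between two 1-D samples, each modelled as a
    Gaussian. Requires non-zero variance in both samples."""
    mu_a, mu_b = fmean(a), fmean(b)
    var_a, var_b = pvariance(a), pvariance(b)
    # Term driven by the separation of the class means.
    mean_term = 0.25 * (mu_a - mu_b) ** 2 / (var_a + var_b)
    # Term driven by the mismatch between the class variances.
    var_term = 0.5 * math.log((var_a + var_b) / (2.0 * math.sqrt(var_a * var_b)))
    return mean_term + var_term


# Hypothetical feature values for two classes.
class_a = [1.0, 2.0, 3.0]
class_b = [11.0, 12.0, 13.0]

bd_same = bhattacharyya_distance(class_a, class_a)  # identical distributions
bd_far = bhattacharyya_distance(class_a, class_b)   # well-separated means
```

A larger value indicates that the feature separates the two classes better; identical distributions give a distance of zero.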

| Method | Category Name | Original Samples | Augmented Samples | Final Samples |
|---|---|---|---|---|
| Open Water Segmentation | Open Water | 1690 | 4310 | 6000 |
| Open Water Segmentation | Sea Ice | 17,204 | 0 | 6000 |
| Sea Ice Classification | Multi-year Ice | 13,780 | 0 | 4000 |
| Sea Ice Classification | One-year Ice | 2388 | 1612 | 4000 |
| Sea Ice Classification | Thin Ice | 1029 | 2971 | 4000 |
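The sample counts in the table are consistent with a simple balancing rule: classes below the per-class target are topped up with augmented samples, and classes above it are subsampled. The sketch below reproduces the "Augmented Samples" column from the "Original Samples" and "Final Samples" columns. The function name is hypothetical, and the rule itself is inferred from the numbers; the augmentation technique is not described in this section.

```python
def balance_plan(original, target):
    """Return (augmented, kept_originals) needed to reach `target` samples."""
    if original >= target:
        return 0, target                # over-represented: subsample only
    return target - original, original  # under-represented: keep all, add the rest


# (original count, target count) taken from the table above.
classes = {
    "Open Water": (1690, 6000),
    "Sea Ice": (17204, 6000),
    "Multi-year Ice": (13780, 4000),
    "One-year Ice": (2388, 4000),
    "Thin Ice": (1029, 4000),
}

plan = {name: balance_plan(orig, target) for name, (orig, target) in classes.items()}
for name, (augmented, kept) in plan.items():
    print(f"{name}: keep {kept} originals, add {augmented} augmented")
```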

| Model | Parameters | OA (%) | MIoU (%) |
|---|---|---|---|
| HRNet-W18 | 9,671,835 | 82.5 | 72.8 |
| DF-UHRNet (L_1, H_2/without ASPP) | 6,128,391 | 82.5 | 73.6 |
| DF-UHRNet (without CBAM) | 5,077,411 | 91.5 | 85.3 |
| DF-UHRNet (without CRF) | 5,079,175 | 87.4 | 79.4 |
| DF-UHRNet (without SIC) | 5,079,175 | 88.7 | 81.7 |
| Our Model | 5,079,175 | 91.6 | 86.5 |
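The parameter savings implied by the ablation table can be made explicit with a short calculation. The snippet uses only parameter counts listed above; the constant and function names are illustrative.

```python
def percent_reduction(baseline, ours):
    """Relative parameter reduction of `ours` with respect to `baseline`, in %."""
    return 100.0 * (baseline - ours) / baseline


# Parameter counts copied from the ablation table.
HRNET_W18 = 9_671_835
FULL_MODEL = 5_079_175
WITHOUT_CBAM = 5_077_411

reduction_vs_hrnet = percent_reduction(HRNET_W18, FULL_MODEL)
cbam_parameters = FULL_MODEL - WITHOUT_CBAM  # parameters attributable to CBAM

print(f"Reduction vs. HRNet-W18: {reduction_vs_hrnet:.1f}%")
print(f"Parameters added by CBAM: {cbam_parameters}")
```

By these counts, the full model has a little under half as many parameters as HRNet-W18 has dropped from it, and the attention module accounts for only a very small share of the total.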

| Method | Params | Model Size (MB) | MIoU (%) | Accuracy (%) | F1 (%) | Kappa (%) |
|---|---|---|---|---|---|---|
| HRNet-W18 | 9,671,835 | 118.212 | 67.60 | 77.02 | 76.95 | 55.12 |
| U-Net | 10,158,707 | 119.571 | 74.14 | 83.18 | 83.43 | 67.18 |
| U-HRNet (Small) | 6,107,107 | 72.966 | 75.60 | 83.54 | 82.99 | 68.54 |
| DF-UHRNet (Ours) | 5,079,175 | 61.248 | 78.50 | 86.96 | 86.55 | 74.50 |
| Our Post-processing | 5,079,175 | 61.248 | 83.14 | 90.50 | 88.12 | 81.78 |
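The four accuracy columns can all be derived from a single confusion matrix. The sketch below uses the standard definitions of overall accuracy, mean intersection over union, F1, and Cohen's kappa. The function name and the 2 × 2 matrix are made up for illustration, and macro-averaging the per-class F1 is an assumption, since the averaging used in the paper is not stated in this section.

```python
def segmentation_metrics(cm):
    """Metrics from a square confusion matrix where cm[i][j] is the number
    of pixels of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[i]) for i in range(n)]                        # true counts
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]   # predicted counts
    tp = [cm[c][c] for c in range(n)]

    oa = sum(tp) / total
    # IoU_c = TP / (TP + FP + FN) = TP / (row + col - TP)
    iou = [tp[c] / (row_sums[c] + col_sums[c] - tp[c]) for c in range(n)]
    # F1_c = 2 TP / (2 TP + FP + FN) = 2 TP / (row + col)
    f1 = [2 * tp[c] / (row_sums[c] + col_sums[c]) for c in range(n)]
    # Chance agreement for Cohen's kappa.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)

    return {
        "accuracy": oa,
        "miou": sum(iou) / n,
        "macro_f1": sum(f1) / n,
        "kappa": kappa,
    }


# Hypothetical two-class confusion matrix (rows: truth, columns: prediction).
example_cm = [
    [50, 10],
    [5, 35],
]
metrics = segmentation_metrics(example_cm)
```

Kappa discounts the agreement expected by chance, which is why it can fall well below overall accuracy when classes are imbalanced, as in the HRNet-W18 row.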


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, R.; Wang, C.; Li, J.; Sui, Y. DF-UHRNet: A Modified CNN-Based Deep Learning Method for Automatic Sea Ice Classification from Sentinel-1A/B SAR Images. Remote Sens. 2023, 15, 2448. https://doi.org/10.3390/rs15092448
