1. Introduction

With many advantages of low loss, zero casualties, and high mobility, unmanned motion vehicles are widely used in military and civilian fields, such as reconnaissance and strike, search and rescue, environmental monitoring, and resource exploration [

1,

2,

3]. The characteristics and advantages of various unmanned vehicles are different. Fixed-wing UAVs have fast maneuverability, a wide field of view, and are not restricted by terrain [

4]. Rotary-wing UAVs have a simple structure, low cost, good concealment, and are easy to transport and deploy on a large scale [

5]. Unmanned ground vehicles (UGV) have the characteristics of considerable size and strong carrying capacity [

6]. The formation composed of UAVs and UGVs can cooperate in complex, unknown, and dynamic environments to accomplish tasks through multidimensional sensing, information interaction, and collaborative interoperability. At present, various countries are conducting research on cross-domain collaboration projects, including the SHERPA project proposed by the European Union, which aims to build a system for searching and rescuing people in mountainous areas using aerial and ground-based unmanned platforms [

7]. The ROBOSAMPLER project funded by Portugal aims to use rotary-wing UAVs and UGVs to build a hazardous substance sampling platform suitable for complex wild environments [

8]. In offensive swarm-enabled tactics, the United States uses a multi-platform unmanned swarm system composed of UGVs, fixed-wing, and rotary-wing UAVs to conduct reconnaissance on targets in a simulated urban environment [

9]. Heterogeneous air-ground vehicle formations have good development potential in various fields, among them, formation navigation and positioning technology is an important part of the formation control system. This paper proposes a cooperative navigation algorithm for air-ground vehicles to ensure the overall positioning performance of formations and takes into account the respective advantages and shortcomings of different unmanned vehicles in environmental perception and movement characteristics. We construct a heterogeneous air-ground cooperative system through aerial UAVs for wide-range high-altitude observation and UGVs for close reconnaissance, which has the advantages of distributed functions, high system survival rate, and high efficiency [

10,

11,

12]. The cooperative navigation algorithm can reduce the number of sensors carried by the vehicles and reduce the performance requirements of the navigation system on the on-board computing platform. Meanwhile, the use of distributed cooperative navigation architecture can overcome the problems of poor scalability and weak anti-destruction capability of traditional centralized navigation architecture and reduce the communication burden among vehicles.

Accurate positioning information is the critical factor affecting heterogeneous air-ground vehicle formations’ ability to execute various tasks. Satellite navigation is the primary method used by air-ground vehicle formations to achieve their respective positioning. Nevertheless, GNSS-challenged situations may occur in air-ground vehicle formations when executing missions in areas, such as buildings and jungles, caused by occlusion [

13,

14]. The GNSS system is easily interfered within the complex battlefield environment due to low signal power [

15]. The positioning accuracy of ordinary GNSS equipment cannot meet the intended requirements of the navigation system, and in some situations, requiring high accuracy need to be equipped with high precision satellite navigation equipment such as Real Time Kinematic (RTK). However, equipping each vehicle with such a device is too expensive and difficult to implement. Satellite independent navigation means currently include scene matching, terrain matching, astronomical navigation, visual navigation, and so on [

16,

17,

18,

19], but the above navigation sensors are no longer applicable under the high dynamic motion characteristics, lower computational performance, and complex environmental constraints of unmanned vehicles. Therefore, improving the positioning accuracy through cooperative navigation methods has become the current research hotspot for air-ground vehicle formations navigation [

20,

21,

22].

In order to improve the overall localization performance of motion vehicle formations, many scholars have researched multi-source fusion algorithms. Vetrella et al. proposed a cooperative navigation method that incorporates inertial, magnetometer, available satellite pseudorange, cooperative UAV position, and monocular camera information, effectively improving the navigation performance of UAV swarms in GPS-constrained situations [

23]. Indelman et al. proposed a method for distributed vision-aided cooperative localization and navigation of multiple inter-communicating autonomous vehicles based on three-view geometric constraints, allowing localization when different vehicles observe the same scene [

24]. GAO et al. proposed an on-board cooperative positioning scheme based on integrated ultra-wideband (UWB) and GNSS that can obtain better positioning accuracy than the decimeter level [

25]. Xiong et al. integrated the use of satellites, ground stations, inertial, inter-node ranging and speed measurement, and random signal sources to achieve cooperative positioning between vehicles [

26].

Under the computational performance constraint of the navigation platform, the positioning accuracy can be improved by the preferential selection of the available cooperative navigation information. Therefore, numerous scholars have conducted corresponding research on the influence of the position distribution of each vehicle in the cooperative navigation system on positioning accuracy. Chen et al. proposed a cooperative dilution of precise (C-DOP) calculation method combining ranging error, clock error, and position error of cooperative UAVs to analyze the positioning error of UAV swarm under different formations [

27]. Heng et al. proposed a generalized theory where lower bound on expectation of average geometric DOP (LB-E-AGDOP) can be used to quantify positioning accuracy and demonstrated a strong link between LB-E-AGDOP and best achievable accuracy [

28]. Huang et al. used the collaborative dilution of precision (CDOP) model to specify the effect of relative distance measurement accuracy, the number of users, and their distribution on localization [

29]. Causa et al. proposed a concept of the generalized accuracy factor and investigated the accuracy calculation method of cooperative configuration based on visual measurement. The experimental results showed that the UAV swarm could achieve meter-level positioning accuracy with the aid of visual measurement under the appropriate cooperative configuration [

30]. Sivaneri et al. used the UGV to assist another UAV for positioning, thus improving the positioning geometry of the UAV with a low number of satellites [

31]. Although there are numerous studies on cooperative navigation systems, they mainly focus on the acquisition and fusion methods of navigation information. There is no in-depth research on improving the cooperative navigation accuracy of air-ground vehicle formations through the configuration optimization of formations.

A new approach for cooperative navigation of heterogeneous air-ground vehicle formations is proposed in this paper. Firstly, we use the IMM algorithm to predict the motion state of UGVs, then construct a cost function based on the GDOP value of the whole air-ground vehicle formation. Then, it traverses the motion range of UAVs and selects the position where the minimum cost is located as the position where UAVs should arrive at the next moment. Finally, the UGVs localization calculation is completed by fusing the cooperative range values through the Kalman Filter. The simulation results show that the method proposed in this paper can achieve the effect of real-time optimization of configuration, reduce the error of cooperative navigation, and provide guidelines for the deployment and mission execution of heterogeneous air-ground vehicle formations.

2. Measurement Model

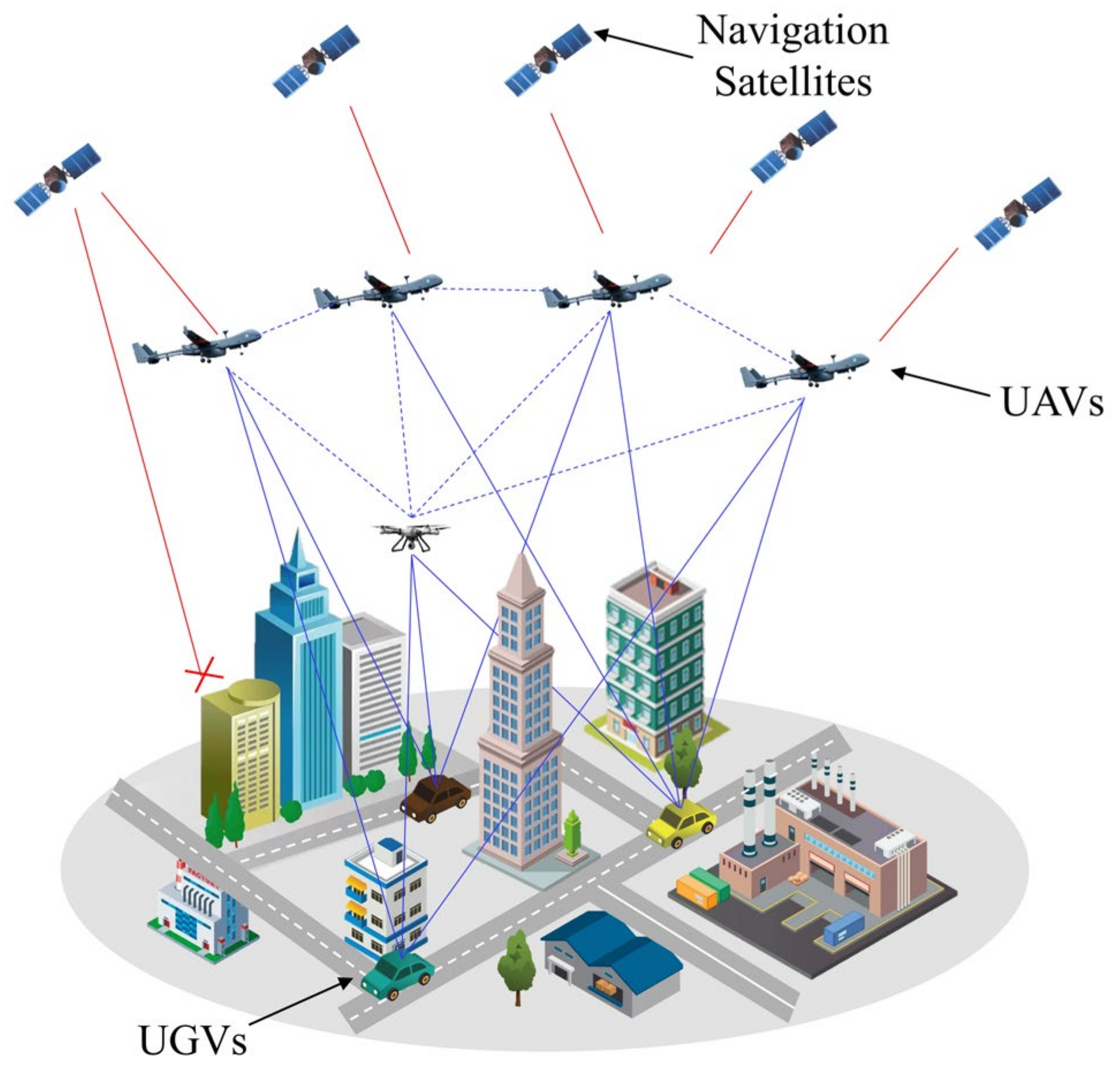

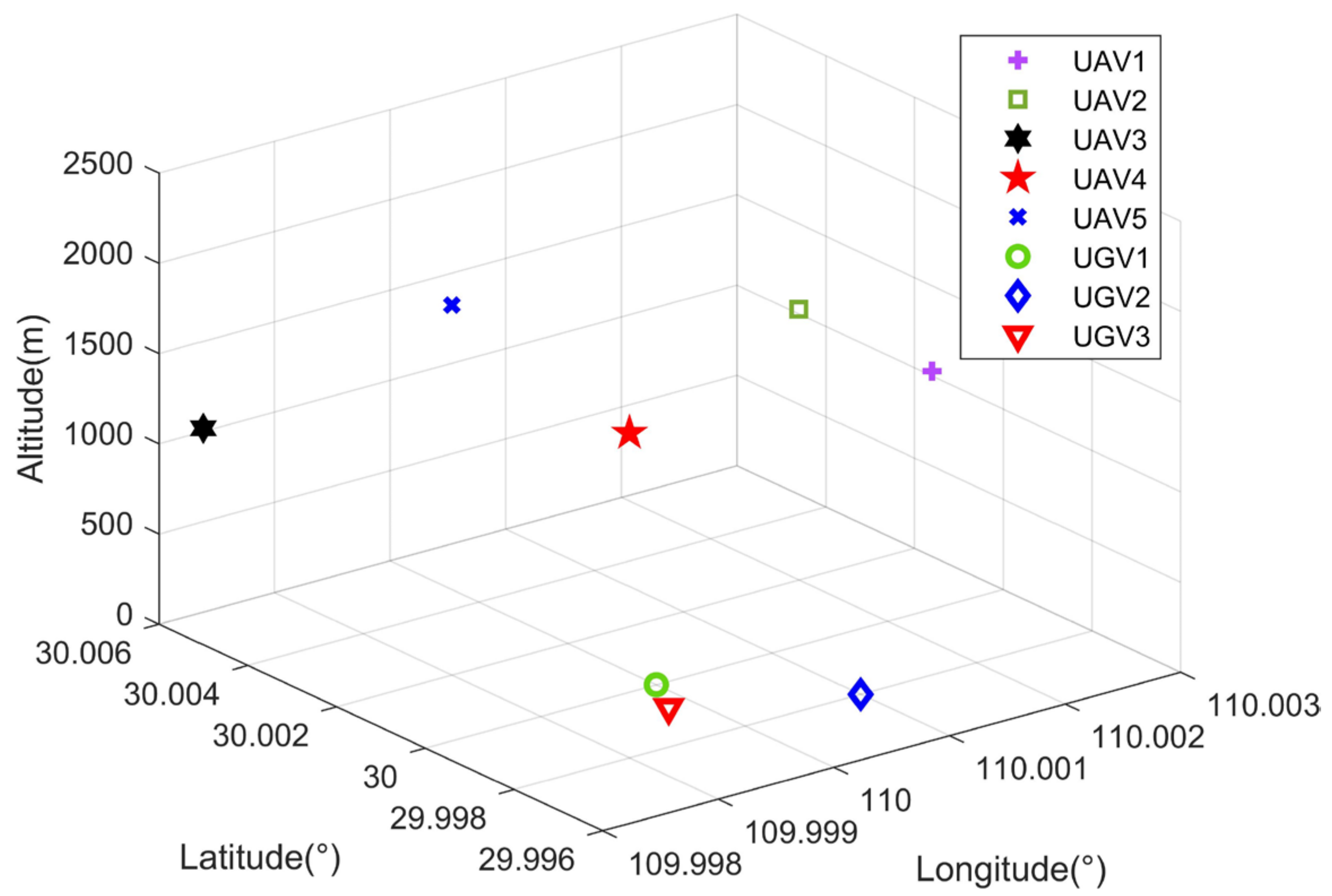

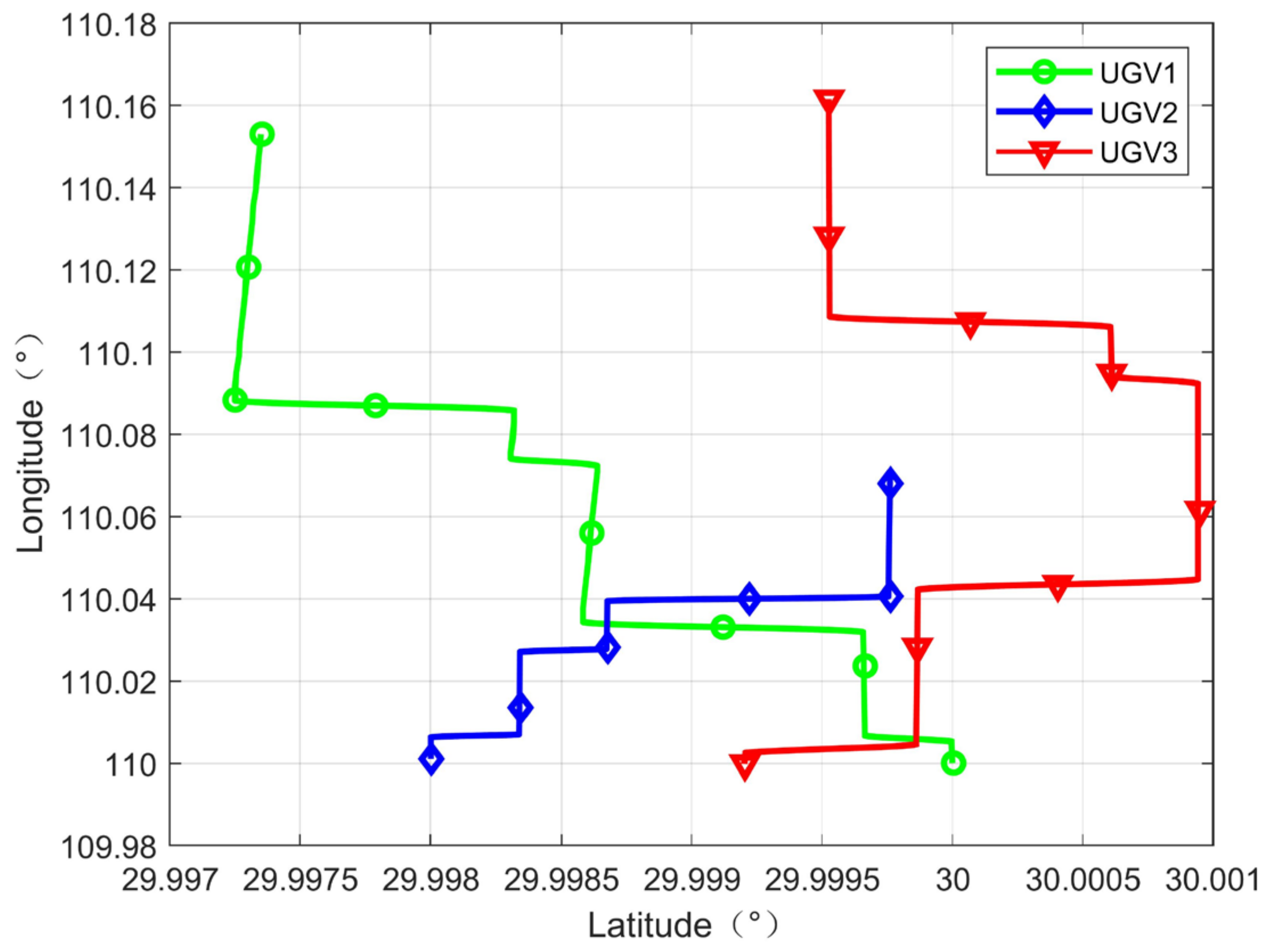

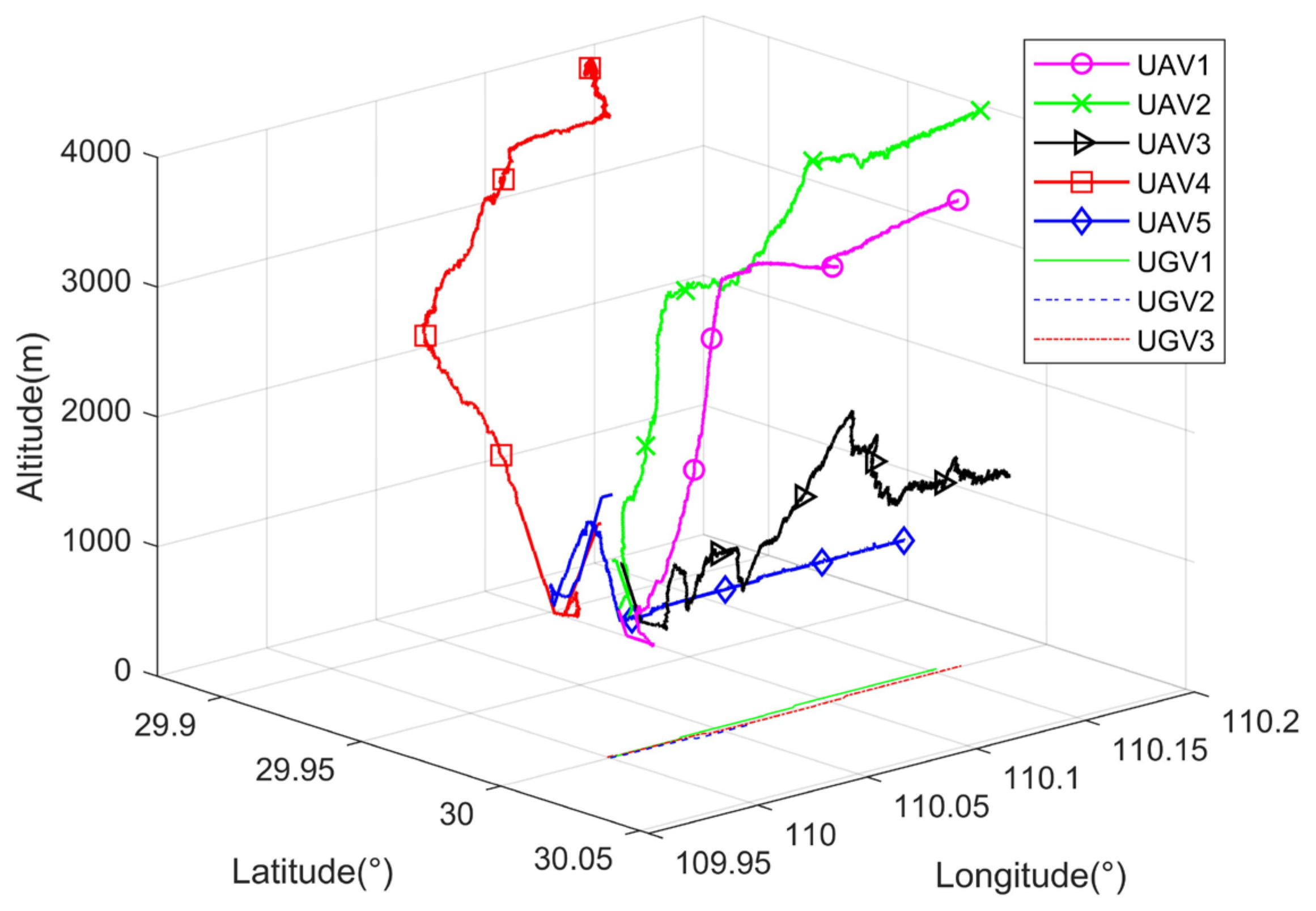

The following scenario is considered in this paper, as shown in

Figure 1, heterogeneous air-ground vehicle formations execute missions in complex scenarios (e.g., urban areas, forests, canyons, etc.). In the above scenario, the GNSS signal received by UGV is easily interrupted and deceived due to obstacle blockage and active jamming, so the regional navigation and positioning system is constructed by UAVs to provide positioning service for UGVs. The UGVs accept the absolute position information of the UAVs and the inter-range information broadcasted by the air reference, then complete their own positioning calculation through the spatial geometry constraint relationship. In terms of navigation sensor configuration, UAVs flying at higher altitude are equipped with high-precision navigation equipment, such as high-precision IMU, differential GPS, and altimeters. UGVs that execute missions in urban alleyways carry lower accuracy IMU and other navigation equipment. Navigation data and sensor data are shared between all vehicles via a wireless network.

For the cooperative navigation system shown in

Figure 1, we can introduce two navigation coordinate systems: Earth-Centered, Earth-Fixed (ECEF) and geographic coordinate system, denoted by

and

, respectively. All high-altitude UAVs are denoted by

; ground-based UGVs are denoted by

. The position parameters of vehicles

are denoted as

, where

. The speed parameters are denoted as

.

Wireless ranging exists now with many kinds of measurement methods, such as Time of Arrival (TOA), Time Difference of Arrival (TDOA), Received Signal Strength Indication (RSSI), and so on. For the distance measurement error, the RSSI measurement method distance measurement error is generally modeled as a log-normal distribution [

32]. Most of the TOA-based methods are modeled as zero-mean Gaussian random variables in the line-of-sight case [

33]. In the non-line-of-sight (NLOS) case, the ranging error is generally modeled as the superposition of the distance difference, measurement noise, and NLOS error due to clock error [

34].

Assuming a zero-mean Gaussian distribution for the range error and perfect clock synchronization for all the high-altitude UAVs, the range values in this paper are of the following form.

where

denotes the actual distance between vehicle

and vehicle

;

is the speed of light;

is the clock error;

is Additive White Gaussian Noise with mean zero and variance

; the superscript

indicates the ECEF coordinate system.

The problem of localization in NLOS environments is described in the literature [

35,

36] and is not analyzed in this paper.

3. Cooperative Navigation System

For the cooperative navigation scenario depicted in

Figure 1, high-altitude fixed-wing UAVs and rotary-wing UAVs can provide cooperative navigation information assistance to UGVs, and the accuracy of the final cooperative navigation is not only related to the accuracy of the navigation sensors but also to the flight configuration of the UAVs. Since different types of vehicles have different speeds and states during movement, UGVs need to adjust their driving state according to the actual environment, such as bypassing obstructions. The whole heterogeneous air-ground vehicle formations cannot execute the mission in a fixed configuration. UAVs need to adjust the flight configuration in real-time according to the position of the UGVs.

The merit of the configuration can be measured by Dilution of precision (DOP), which can correlate the configuration with the positioning accuracy, and the DOP based on inter-vehicles wireless range is calculated as follows.

Set the approximate position of the vehicle

as

and the approximate clock difference as

, after neglecting the measurement noise and NLOS error in Equation (1), the Taylor expansion at

and retaining the first-order term yields,

can be denoted as follows.

where

is the direction cosine of the vehicle

to vehicle

;

is the difference between the approximate position of the vehicle

and the actual position;

is the deviation between the accurate and approximate clock difference.

Equation (2) can be extended to the following form.

Based on Equation (3), the position and clock deviation vectors of the vehicle can be obtained as:

The error covariance of the deviation vector can be defined as:

GDOP is then defined as the square root of the trace of

. The GDOP of all UAVs with respect to the vehicle

is defined as:

For the whole cooperative navigation system, each UGV calculates its position by IMU and fuses the cooperative information from UAVs by the Kalman filter. At the moment, the UGVs need to predict their position at the moment by the IMM algorithm; so as to judge the position that UAVs should reach when the sum of GDOP corresponds to all UGVs at the moment is the smallest; so that the configuration of the whole cooperative navigation system can be adjusted in real-time to provide the UGVs with the optimal cooperative information. Therefore, the cooperative navigation algorithm proposed in this paper is divided into three main parts: position prediction of UGVs, cost function calculation and optimal position selection of the high-altitude UAVs, and inertial/co-ranging value fusion of UGVs.

3.1. Position Prediction of UGVs

In order to carry out the route planning of high-altitude UAVs, the position of UGVs at the moment needs to be estimated first. Ground unmanned vehicles have strong mobility on the ground, and the rapid switching of their movement modes leads to drastic changes in parameters, such as heading, velocity, and acceleration, etc. The traditional single-model filter has a slow convergence speed and poor stability in the accuracy of state estimation and prediction for targets in a highly dynamic motion state, and this paper introduces multi-model filters to predict the position of UGVs at the moment.

The core of the IMM algorithm lies in describing the target’s maneuver using a set of models and filters working in parallel, corresponding to different maneuver states, switching between models follows a known Markov process, and the final estimate is a weighted value of all model state estimates [

37,

38]. The commonly used models are mainly the uniform velocity model, uniform acceleration model, current statistical model, Singer model, and so on [

39,

40]. In order to effectively characterize the maneuvering characteristics of the unmanned vehicle in ground motion, and to improve the robustness of the IMM filter and reduce the computational effort of the system, the uniform velocity model and uniform acceleration model are used to describe the motion state of each ground vehicle in this paper. The specific steps of the IMM algorithm designed in this paper are as follows.

Step 1 Input interaction module. ,

A. Mixing Probability Calculation.

where

represents the transition probability of the state estimation of model

at the

moment to model

at the

moment,

denotes the model probability of model

at the

moment,

is the normalization factor,

is the transfer probability from model

to model

.

B. Mixing state estimation and Covariance matrix Calculation.

where

and

are the state estimation and the covariance matrix of model

at the

moment, respectively.

Step 2 Model filter estimation module.

A. one-step prediction for sub-model

where

are the state transfer matrix and the system noise matrix of the model, respectively, and the state equations of the uniform velocity model and the uniform acceleration model are shown in the literature [

41].

is the variance matrix of the system noise.

B. position prediction

where

is the estimated value of the position of UGV at the

moment.

Step 3 Update of model probability module.

A. Filtered residuals

where

is the position output obtained by fusing the IMU/co-ranging values of the vehicle at the

moment, as described in

Section 3.3, and

is the measurement matrix.

B. Kalman filter gain calculation

C. Sub-model measurement update

D. Mode Probability Update

First, the likelihood function is calculated with the following equation.

where

is the likelihood function,

denotes the Gaussian density function of

with zero mean and covariance

. The updated model probabilities are denoted as:

Step 4 Estimation fusion module.

The first two steps in the above steps are completed at the moment, thus predicting the position of the UGVs at the moment. The last two steps are performed after the completion of the vehicle IMU/co-ranging assistance at the moment to update the model data of the IMM algorithm.

3.2. Cost Functions and Path Planning Strategies

The positioning accuracy of the UAVs depend on the ranging error, the air-based reference position error, and the UAVs configuration distribution. The range error is mainly determined by the clock difference between the two vehicles, the air-based reference position error mainly depends on the GNSS positioning accuracy, and the above two points are determined by the sensor hardware performance, so the UAV needs to dynamically adjust the flight position to form different formation configurations to provide cooperative navigation services for the UGVs. After the predicted position of the UGVs at the

moment, the position prediction value needs to be used to plan the position of the UAVs to achieve the optimal configuration. Path planning algorithms are mainly classified into three categories, namely traditional path planning algorithms, sampling-based path planning algorithms, and intelligent bionic algorithms, among which the A* algorithm is the mainstream algorithm in the field of path planning at present [

42,

43]. The advantage of the A* algorithm is its rapid response to the environment and high computational efficiency. It is a heuristic search algorithm that allows the UAV to quickly plan a route and generate maneuver control commands with known starting and ending points [

44].

This paper combines the need of real-time configuration optimization and the idea of the sparse A* algorithm to propose a real-time memoryless path optimization method. Taking the current position of the UAV as the center and dividing the neighborhood space to establish a grid centered on the UAV, that is, the area to be extended, and the combination of all the UAVs’ areas to be extended, is called the search space. Traversing all the combinations of ways in the search space to find the grid position corresponding to the minimum cost, that is, the planning position of the UAV at the next moment. First, we establish the cost function on the voyage.

3.2.1. Cost Function Establishment

In the cooperative mission execution of heterogeneous air-ground vehicle formations, borrowing the A* algorithm for trajectory planning from the literature [

45], the cost can generally be divided into two parts, namely the route flight cost and the GDOP cost. The route flight cost mainly includes the distance flown, as it will determine the duration of the UAV, and the route flight cost can also include the distance from the target point if there is a target point for the mission. The GDOP cost is the sum of the GDOP values corresponding to each UGV during the execution of the mission. There is a coupling relationship between the GDOP cost and the route flight cost, so the two need to be considered together.

The cost function is defined as:

where

denotes the GDOP cost, and assuming that the number of ground vehicles is

,

can be designed as:

where

denotes the flight cost of the route, and assuming that the number of aerial vehicles is

,

is designed as:

In Equations (18) and (19), and are the weights of GDOP cost and route flight cost, respectively, which can be adjusted and used to choose whether to give priority to guaranteeing the GDOP value or reducing the flight distance. In this system, the fixed-wing UAVs are selected as the aerial benchmarks, and the movement speed is larger than that of the rotary-wing UAVs, which can reach the preset position quickly, so is set to 1/3 and is set to 2/3. denotes the distance flown by the UAV from the moment to the moment.

3.2.2. Path Planning Strategy

When establishing the area to be extended for UAVs, the constraints of UAVs need to be considered simultaneously, including the maximum movement step , minimum movement step , maximum pitch angle , minimum flight height , and minimum collision avoidance distance between two UAVs. According to the current position of the UAVs and the constraints, the path planning process is as follows.

A. Search space establishment

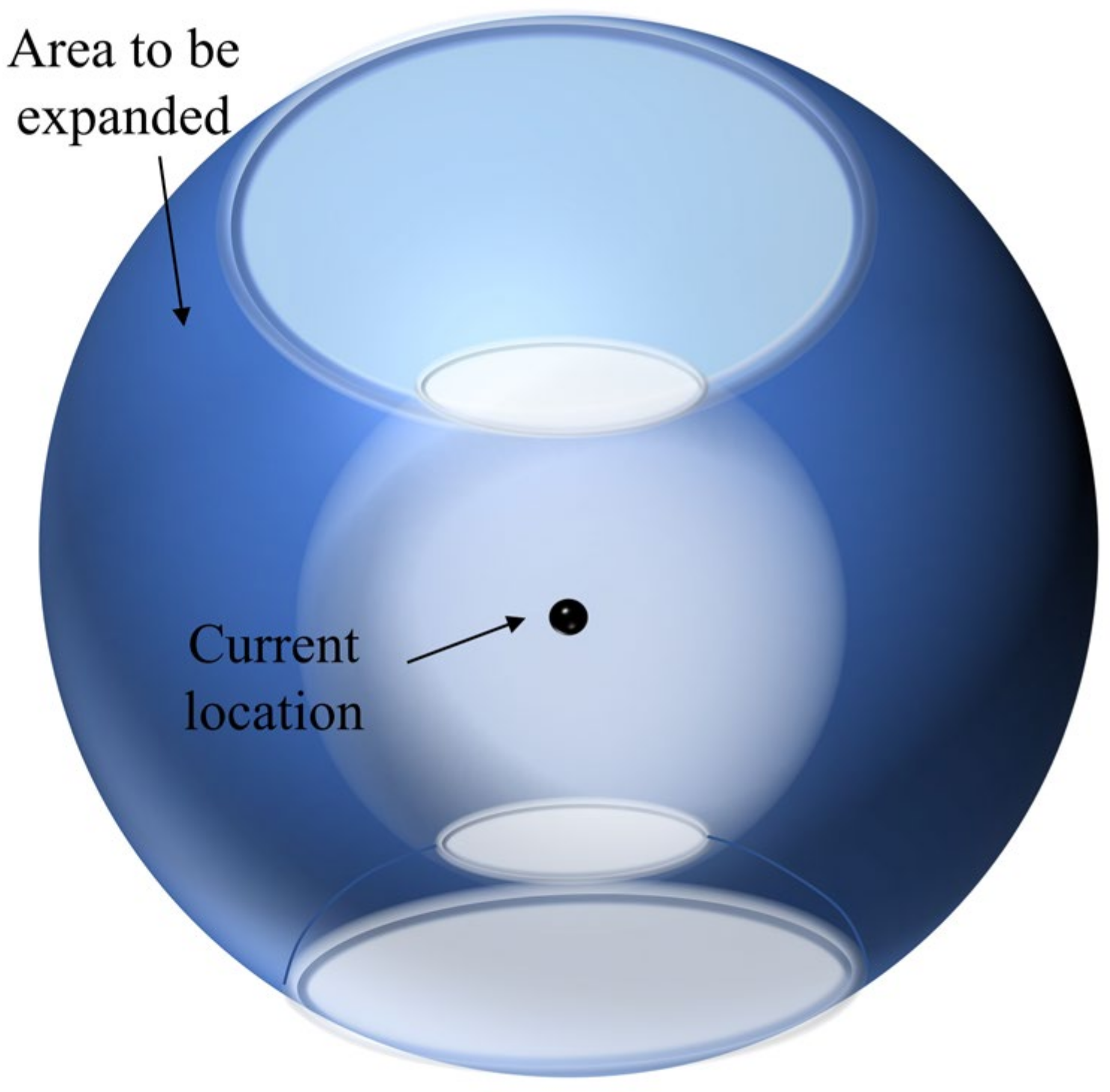

As shown in

Figure 2, the region to be extended for the high-altitude UAVs is established with the current position as the center and the constraints.

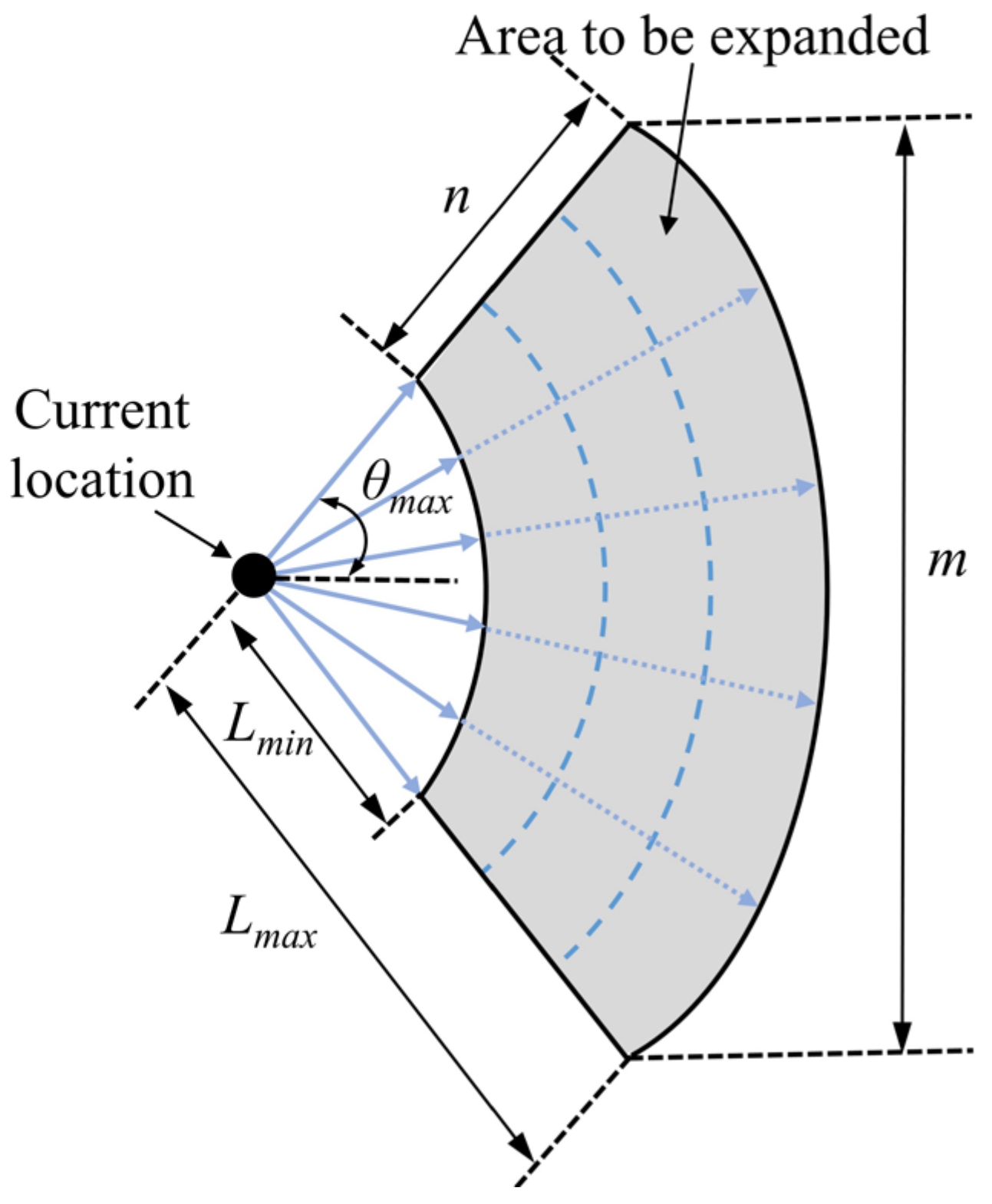

Split the area to be extended, as shown in

Figure 2. The horizontal profile of the area to be extended is a circle, as shown in

Figure 3, and the circle can be split into

parts, each of which has a vertical profile of a sector ring, as shown in

Figure 4.

Divide the sector ring into copies along the radius direction and copies along the arc direction, so that the area to be expanded is divided into expansion nodes. The combinations of extended nodes of airborne UAVs have ways, and the combinations that do not satisfy the height constraint and the minimum collision avoidance distance constraint are removed, and the remaining combinations will constitute the search space at the moments.

B. Calculation of the cost function

Based on the UGVs’ location predicted by Equation (10), all node combinations in the search space are traversed, and the corresponding generation value is calculated according to Equation (17) to find the least costly node combination as the location where the UAVs should arrive at the moment.

C. Path Planning

The positions obtained in step

B are only isolated coordinate points and need to be combined with the motion characteristics of the UAV to plan a motionable route, and the specific route planning algorithm can be found in the literature [

46].

3.3. Inertial/Co-Ranging Value Fusion Method for UGVs

When the UAVs establish the aerial benchmarks, the UGVs complete the cooperative information interaction and distance calculation through the data chain with the UAVs, and the Kalman filter needs to be used to complete the navigation information fusion. Under the Kalman filtering framework, the system state equation is constructed by using the position and attitude information obtained from the inertial sensors as state quantities; then the best state estimate of the previous moment is combined with the equation of motion to complete the one-step prediction; finally, the relative distance is used as the observed quantity to construct the measurement update equation, and the above operations are cycled to complete the cooperative navigation and positioning solution [

47]. Compared with the cooperative navigation technique based on factor graph theory, the Kalman filter has the advantages of high computational efficiency, good real-time performance, and low communication load requirement, which can be realized by engineering [

48].

3.3.1. State Equation

In the process of UAV route planning, it also continuously sends range signals and position information, and after receiving this information, the UGV can fuse it with its own inertial information to make effective corrections to the inertial information.

Using the geographic coordinate system as the navigation coordinate system, the state vector of the UGV

navigation system is defined as follows [

49].

where the superscript

represents the geographic coordinate system. where

and

are platform angle error and velocity error of east, north, and up directions, respectively.

are, respectively, latitude error, longitude error, and altitude error.

are the gyro constant drift errors and the first-order Markov drift errors, respectively;

are the accelerometer biases.

The state equation can be constructed according to the defined state vector

where

is the linearized INS error states matrix,

is the noise transfer matrix,

is the system process noise with multivariate mean normal distribution with variance

, whose value is determined by the accuracy of gyroscope and accelerometer.

3.3.2. Measurement Equation

The measurement equation of the filter can be defined as

where

is the observation vector related to the wireless ranging measurements,

is the observation matrix,

is the measurement noise of wireless ranging with

. The observation vector and observation matrix are defined as:

where

is the transformation matrix that converts

to

, which satisfies the following formula:

where

is the radius of curvature in prime vertical,

denotes the earth oblateness.

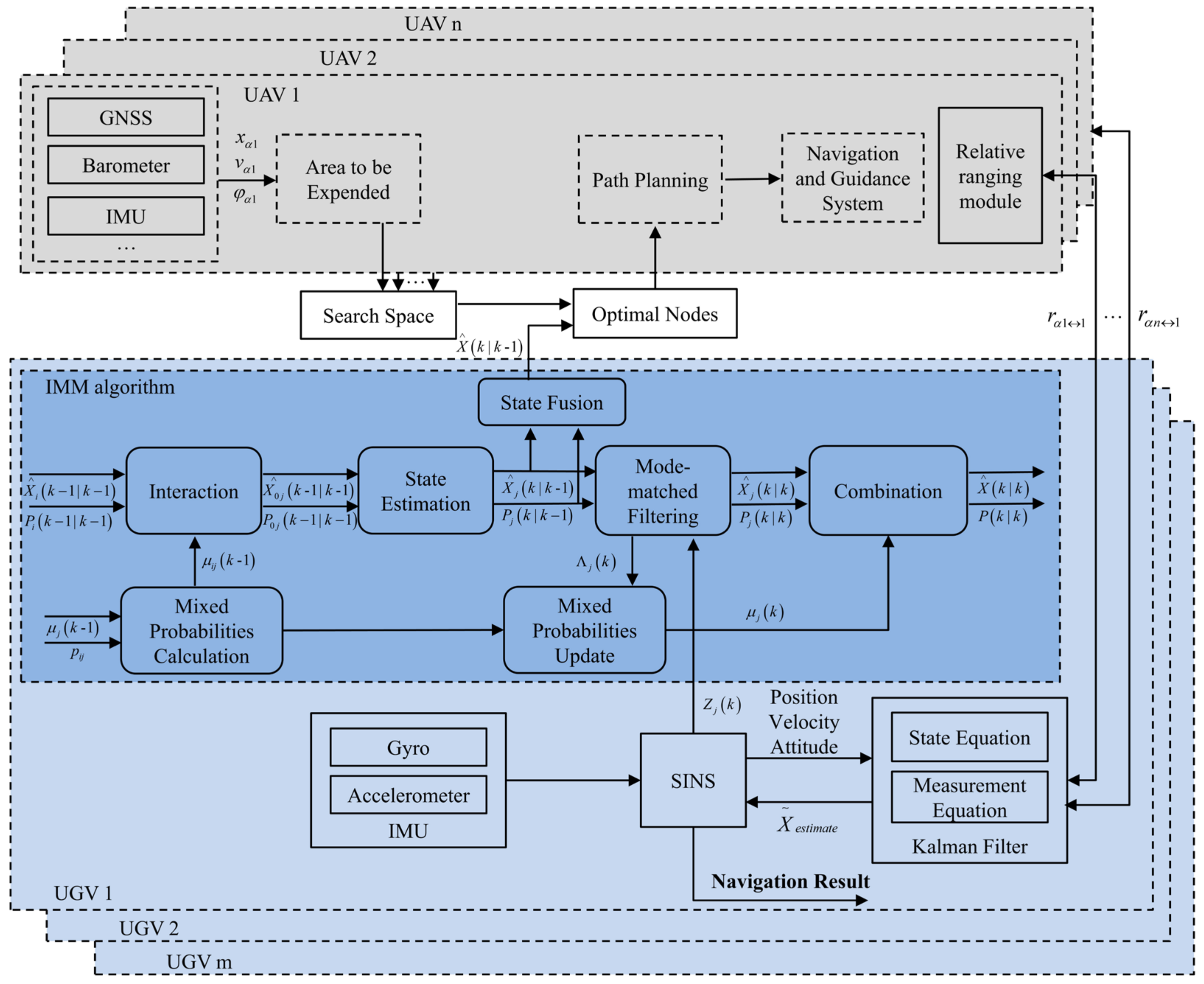

3.4. Description of the Cooperative Navigation Method

The structure of the heterogeneous air-ground vehicle formations cooperative navigation method proposed in this paper is given in

Figure 5. Combining the methods and strategies proposed in

Section 3.1,

Section 3.2 and

Section 3.3, the steps of the cooperative navigation method are as follows.

Step 1: Initialization

Initialization of the whole cooperative navigation system according to the constraints, such as motion characteristics and task requirements of the heterogeneous air-ground vehicle formations, including the establishment of the cost function, the initial model probability, and model transfer probability assignment in the IMM algorithm.

Step 2: Location estimation of all UGVs

At the current moment, using the two steps 1 and 2 described in

Section 3.1, the position estimates

of all UGVs are obtained.

Step 3: Establishment of UAVs’ search space and selection of the minimum cost combination

According to the position estimation value of the UGV and the current position of the UAV, the area to be extended is constructed by combining the relevant constraints, and the area to be extended of all UAVs is partitioned and combined into the search space. The generation value of all node combinations in the search space is calculated according to Equation (17), and the location combination with the lowest cost is selected as the target location of the UAV at the next moment. The UAV uses the target position for path planning and flight control.

Step 4: Inertial/co-ranging fusion filter of UGV

The UAVs continuously broadcasts range measurement signals and position information during flight. After receiving the signals and decoding the distance, the UGVs can fuse and filter them with their inertial guidance information according to Equations (21) and (22). It is worth noting that the filtering frequency need not be consistent with the frequency of the UAV path planning, and the frequency of the UAV path planning can be reduced in order to reduce the computation.

Step 5: IMM sub-filter filtering and fusion

After the position of the ground vehicle is corrected, the model parameters and model probabilities of the IMM algorithm are updated by using the corrected position and velocity as measurements and completing the two steps (3), (4) described in

Section 3.1.

Step 6: Return to Step 2.

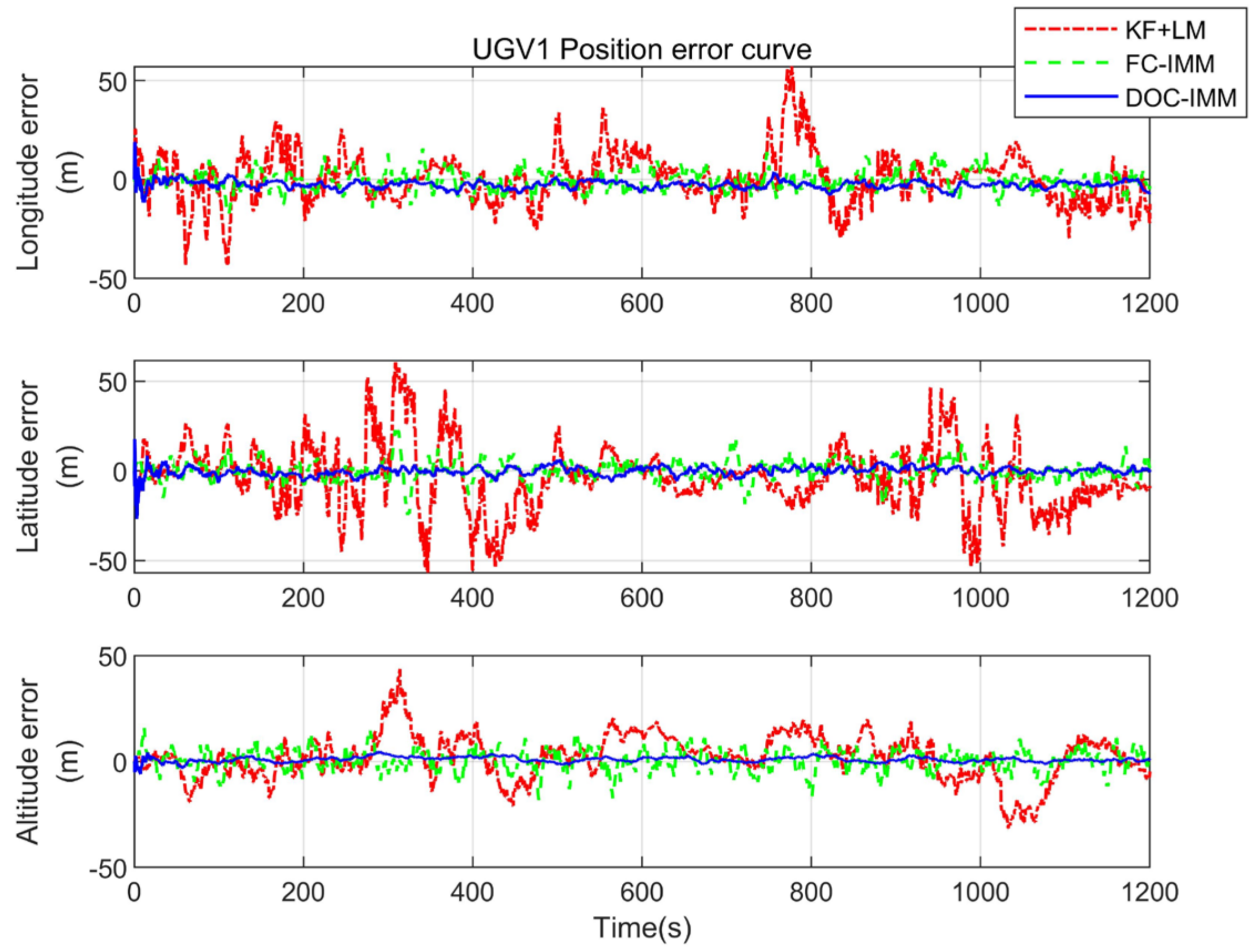

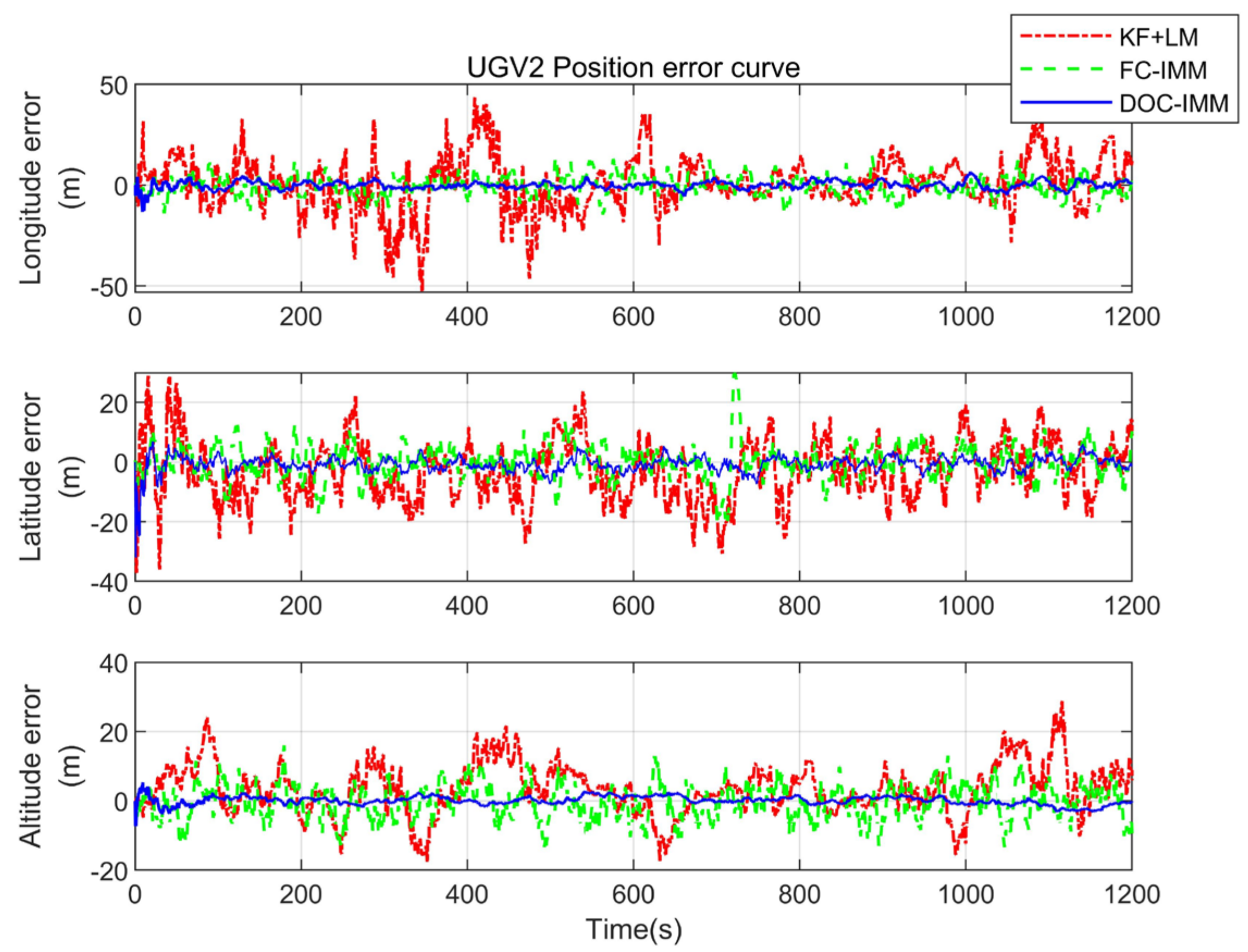

5. Conclusions

In this paper, for the situation of satellite navigation signal challenged in cities, hills, and valleys, we use the techniques of multi-dimensional sensing of navigation information, wireless ranging information interaction, and cooperative interoperability between heterogeneous air-ground vehicle formations to complete real-time navigation and positioning of UGVs. In this method, the DOC-CN algorithm is divided into three steps. First, the location of the UGV is predicted by the IMM algorithm. Then, aerial benchmarks are established by calculating cost functions and the path planning algorithm. Finally, the SINS solution platform is constructed to obtain the continuous position information of the UGVs.

The simulation shows that the DOC-CN algorithm proposed in this paper is significantly superior to that of traditional cooperative positioning methods such as TSLS and the FC-CN method. It can realize the navigation and positioning requirements of UGVs in a certain area under the GNSS-challenged environment and improve the overall formation’s positioning accuracy. Moreover, the next step is to embed the flight control program and navigation algorithm into the hardware platform and complete the practical validation.