1. Introduction

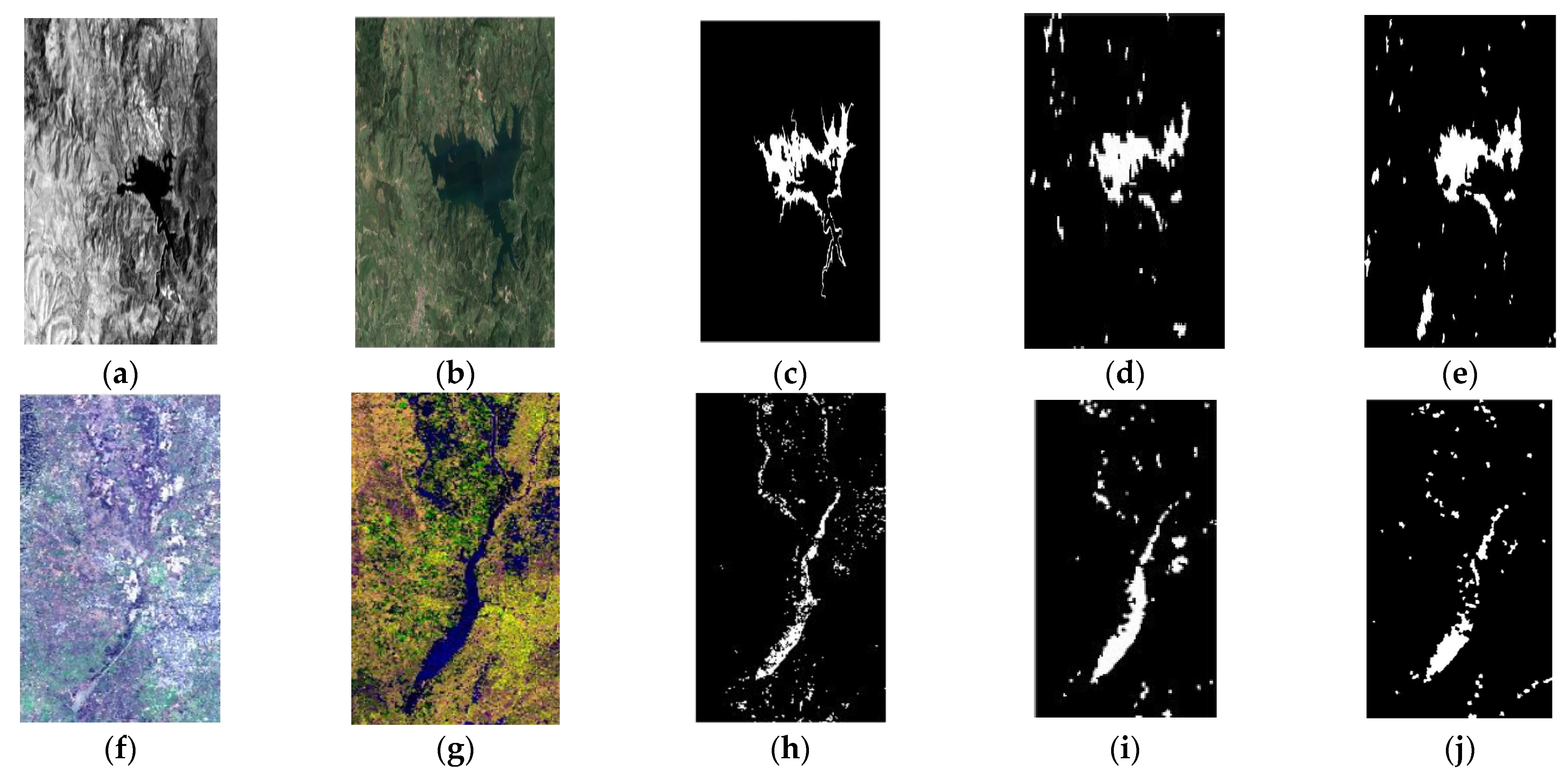

Recently, heterogeneous change detection has drawn increased attention. It aims to detect changes from images acquired by different types of sensors. Its advantages over homogeneous change detection are clear: it not only combines the characteristics of multiple types of data but also reduces the dependence on environmental conditions. Additionally, it enables timely change analysis, especially in the case of disasters. However, many issues related to heterogeneous change detection have not been addressed, such as false alarms. False alarms can lead researchers to misjudge and mispredict the development trends of events. Furthermore, they can cause researchers to waste resources when responding to changes. Therefore, in this paper, we discuss the issue of false alarm suppression in detail.

Existing heterogeneous change detection methods can be classified from different perspectives. According to the availability of labeled samples, they can be divided into supervised and unsupervised methods. Since it is difficult to obtain change samples and ground truth maps, unsupervised methods are preferable to supervised ones. According to the processing scale, there are patch-level, pixel-level and subpixel-level methods. Among them, subpixel-level methods are more prominent in improving accuracy [1]. In [2], fine spatial but coarse temporal resolution images and coarse spatial but fine temporal resolution images are combined to detect changes by using spectral unmixing to generate the abundance image. According to the principle of the algorithms, there are classification-based, parametric, non-parametric, similarity-based, and translation- and projection-based methods. Classification-based techniques compare the results of classifying the individual images. Parametric techniques use a set of multivariate distributions to model the joint statistics of different imaging modalities, while non-parametric ones do not explicitly assume a specific parametric distribution. Similarity-based methods rely on similarity measures that are modality-invariant. Translation- or projection-based methods map multimodal images into a common space in which homogeneous change detection methods can be applied [3].
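As a rough illustration of the translation-based idea described above, the following sketch maps a pre-event image into the domain of the post-event image and then compares the two in that common space. It is not taken from any of the cited works: the affine least-squares translation, the median/MAD threshold, and the function names `regression_difference_image` and `threshold_change_map` are all assumptions chosen for brevity.

```python
import numpy as np

def regression_difference_image(pre, post):
    """Translate `pre` into the domain of `post` with an affine least-squares
    fit (an illustrative assumption) and return the per-pixel residual norm.

    pre:  (H, W, C1) array, pre-event image
    post: (H, W, C2) array, post-event image
    """
    h, w, c1 = pre.shape
    x = pre.reshape(-1, c1)
    y = post.reshape(h * w, -1)
    # Append a constant column so the fit includes an offset term.
    x_aug = np.hstack([x, np.ones((x.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(x_aug, y, rcond=None)
    translated = x_aug @ coef
    # Large residuals mark pixels that the translation cannot explain.
    residual = np.linalg.norm(y - translated, axis=1)
    return residual.reshape(h, w)

def threshold_change_map(diff, k=3.0):
    """Flag pixels whose residual exceeds median + k * robust scale (MAD)."""
    med = np.median(diff)
    mad = np.median(np.abs(diff - med))
    return diff > med + k * 1.4826 * mad

# Synthetic usage: an affine relation between modalities plus a changed patch.
rng = np.random.default_rng(0)
pre = rng.random((20, 20, 1))
post = 3.0 * pre + 0.5
post[5:8, 5:8] += 5.0
change_map = threshold_change_map(regression_difference_image(pre, post))
```

Because the fit is estimated from all pixels, changed pixels contaminate the translation; this is one reason why practical methods use more robust or learned mappings.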

Among these methods, those based on translation and projection, which do not presuppose various conditions, have gained the greatest popularity. Both non-deep learning and deep learning methods are used to realize translation and projection. First, we consider the non-deep learning methods. Based on changes in smoothness, a decomposition method was proposed to decompose the post-event image into a regression image of the pre-event image and a changed image [4]. In [5], heterogeneous change detection was converted into a graph signal processing problem and structural differences were used to detect changes. In [6], a robust K-nearest neighbor graph was established and an iterative framework was put forward based on a combination of difference map generation and change map calculation. In [7], a self-expressive property was exploited and the difference image was obtained by measuring how much one image conformed to the similarity graph compared to the other image. Furthermore, considering the impact of noise, the fusion of forward and backward difference images was accomplished by statistical modeling in [8]. In [9], a new method called INLPG was developed by constructing a graph for the whole image and applying the discrete wavelet transform to fuse difference images. In [10], a probabilistic graph was constructed and image translation was based on a sparse-constrained image regression model.

Next, we turn to the deep learning methods. A conditional generative adversarial network was used to transform heterogeneous synthetic aperture radar (SAR) and optical images into the same space to form the difference image [11]. In [12], CycleGAN was adopted to translate the pre-event SAR image into an optical image, and the difference image was obtained by comparing the translated optical image with the post-event optical image. In [13], a self-supervised detection method was developed based on pseudo-training from affinity matrices and four kinds of regression methods, namely, Gaussian process regression, support vector regression, random forest regression, and homogeneous pixel transformation. In [14], two new convolutional neural networks were constructed with a loss function based on affinity priors to mitigate the impact of change pixels on the learning objective. In [15], a graph fusion framework for change detection was proposed under smoothness priors. In [16], an end-to-end graph convolutional network framework was constructed to increase localization accuracy in the vertex domain by exploiting intra-modality and cross-modality information.

Generally, the detection results contain many pseudo-changes, which stem from three sources. The first is the difference between the shapes and sizes of the same object in heterogeneous images, the second is the imbalance in sample categories in supervised and self-supervised methods, and the third is the inherent noise in the imaging process. A common way to address this problem is supervised classification, which requires some prior knowledge. In [17], a simple CNN was built to classify the feature difference maps with a few pixel-level training samples to suppress false alarms. In [18], a structural consistency loss based on the cross-modal distance between affinities and an adversarial loss were introduced to deal with pseudo-changes. In addition, a multitemporal segmentation combining the spectral, spatial, and temporal information of the heterogeneous images was introduced in the preprocessing stage to reduce false positives [19]. In [20], a feature difference network was built to reduce false detections by addressing the information loss and imbalance in feature fusion.

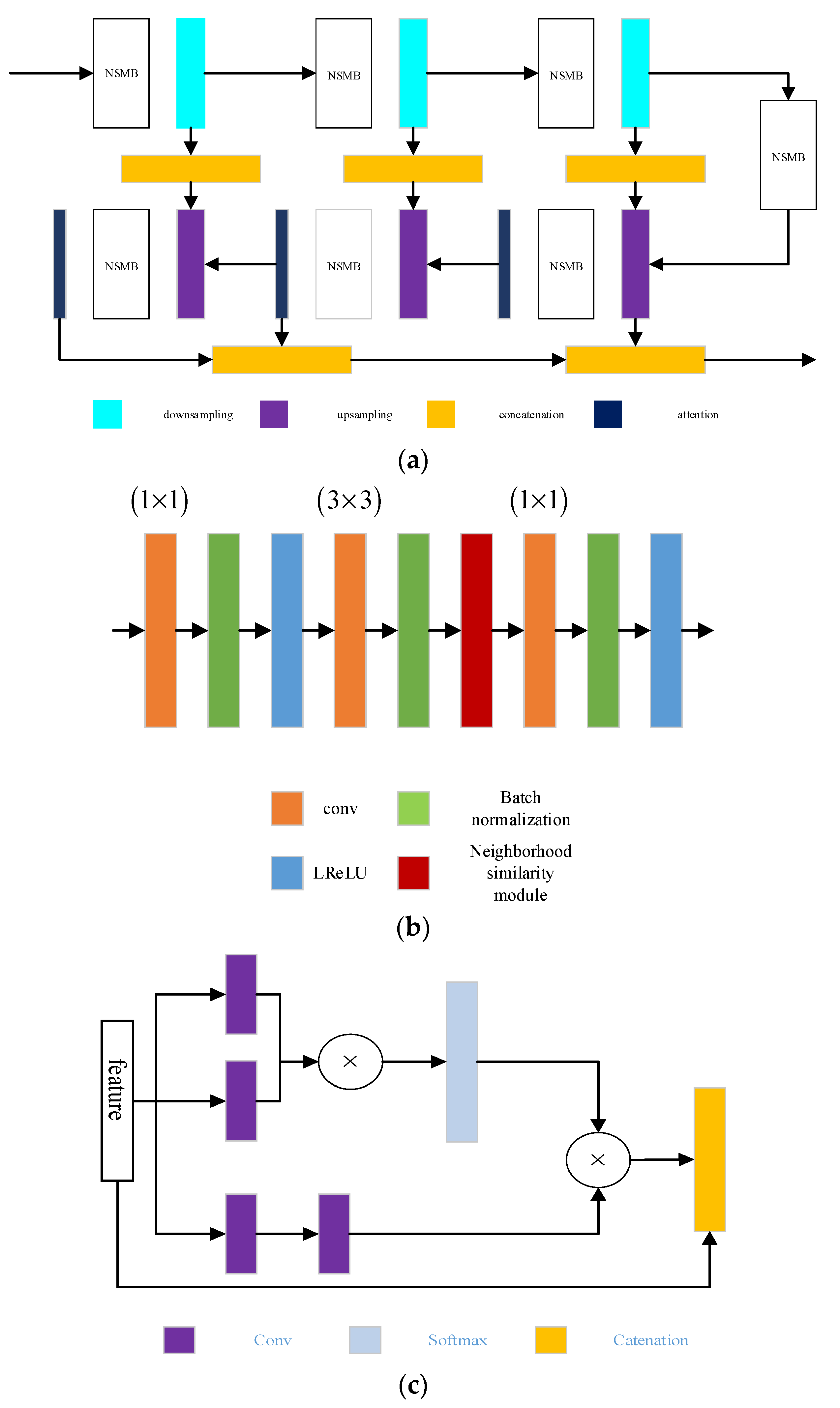

In this article, we propose a new method based on a combination of a CNN and a GCN to deal with false alarm suppression in heterogeneous change detection. The main contributions of our method are as follows. First, our method is unsupervised: the whole network is trained with generated pseudo-label samples. This helps to avoid the false alarms introduced by imbalances in training sample categories. Second, we exploit the inter-feature relationships between true targets and false ones, which can be represented by an adjacency matrix, to generate a change map. This facilitates the detection of the same object when it appears with different shapes and sizes in heterogeneous images. Third, a partially absorbing random walk convolution kernel is constructed and applied in the GCN. This new convolution kernel can enhance the features of individual vertices and mitigate the impact of noise to some extent, which is advantageous in suppressing noise-induced false alarms.

This paper is organized as follows: Section 2 presents our proposed method and the experimental results obtained in comparison with some state-of-the-art approaches. Our final conclusion is given in Section 3.
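To give an intuition for the partially absorbing random walk kernel named in the third contribution, the sketch below builds the standard absorption-probability matrix $A = (\Lambda + L)^{-1}\Lambda$, where $L = D - W$ is the graph Laplacian and $\Lambda$ is a diagonal matrix of absorption rates. This section does not specify the exact kernel used in the proposed method, so the choice $\Lambda = \alpha I$, the single-layer form $\mathrm{ReLU}(AXW)$, and the names `parw_kernel` and `parw_graph_conv` are assumptions made only for illustration.

```python
import numpy as np

def parw_kernel(adjacency, alpha=1.0):
    """Absorption probabilities A = (Lambda + L)^{-1} Lambda of a partially
    absorbing random walk, assuming Lambda = alpha * I and L = D - W."""
    w = np.asarray(adjacency, dtype=float)
    laplacian = np.diag(w.sum(axis=1)) - w
    lam = alpha * np.eye(w.shape[0])
    # Solve (Lambda + L) A = Lambda instead of forming an explicit inverse.
    return np.linalg.solve(lam + laplacian, lam)

def parw_graph_conv(features, adjacency, weight, alpha=1.0):
    """One hypothetical propagation step: ReLU(A @ X @ W)."""
    a = parw_kernel(adjacency, alpha)
    return np.maximum(a @ features @ weight, 0.0)

# Usage on a three-vertex path graph with one-hot vertex features.
adjacency = np.array([[0.0, 1.0, 0.0],
                      [1.0, 0.0, 1.0],
                      [0.0, 1.0, 0.0]])
kernel = parw_kernel(adjacency, alpha=1.0)
hidden = parw_graph_conv(np.eye(3), adjacency, np.ones((3, 2)))
```

Each row of `kernel` sums to one, so the propagation is a weighted average over vertices. A large `alpha` keeps the walk close to its starting vertex and thus preserves individual vertex features, while a small `alpha` averages more strongly over the graph, which smooths noise.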