Unsupervised Cross-Scene Aerial Image Segmentation via Spectral Space Transferring and Pseudo-Label Revising

Abstract

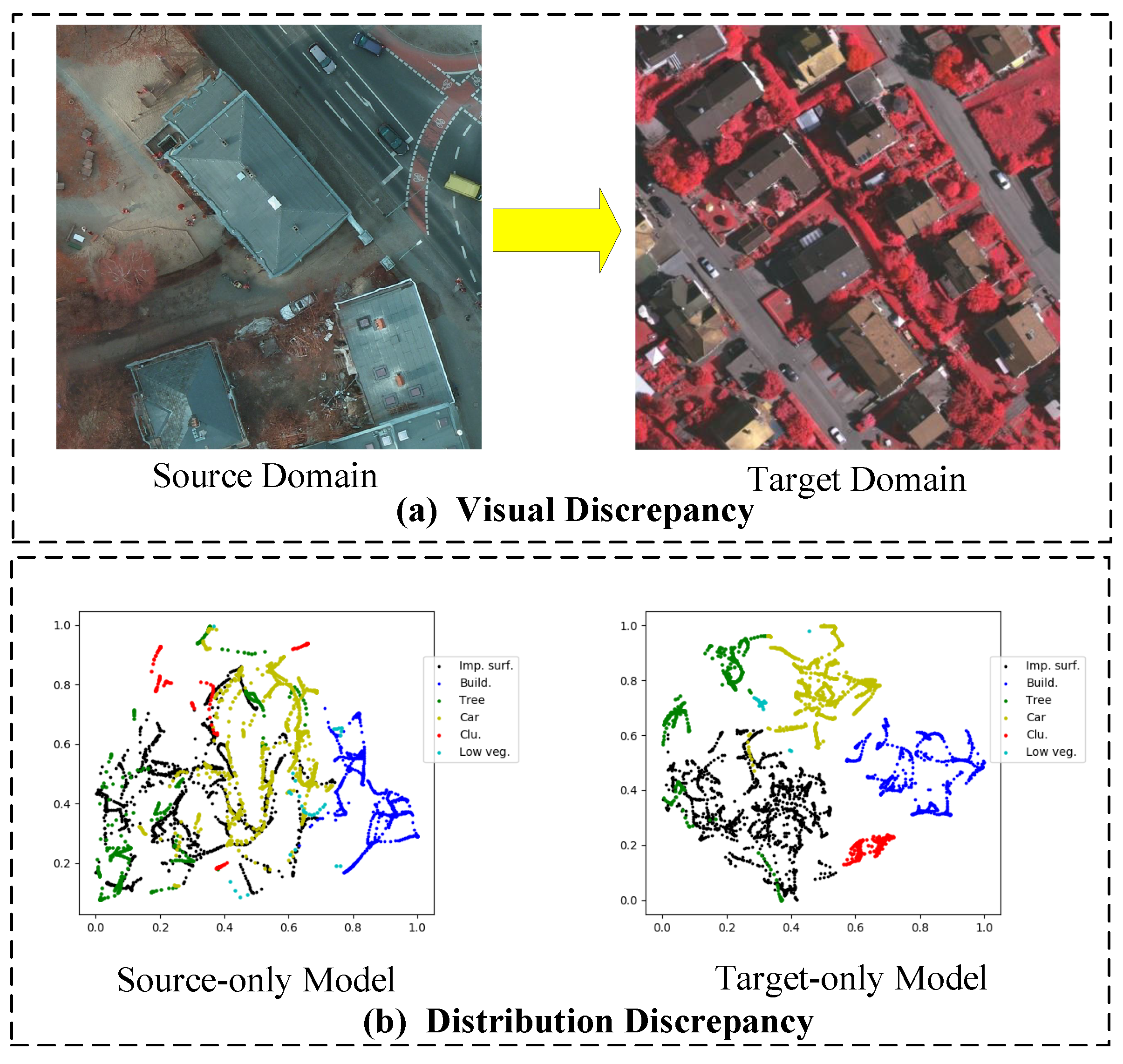

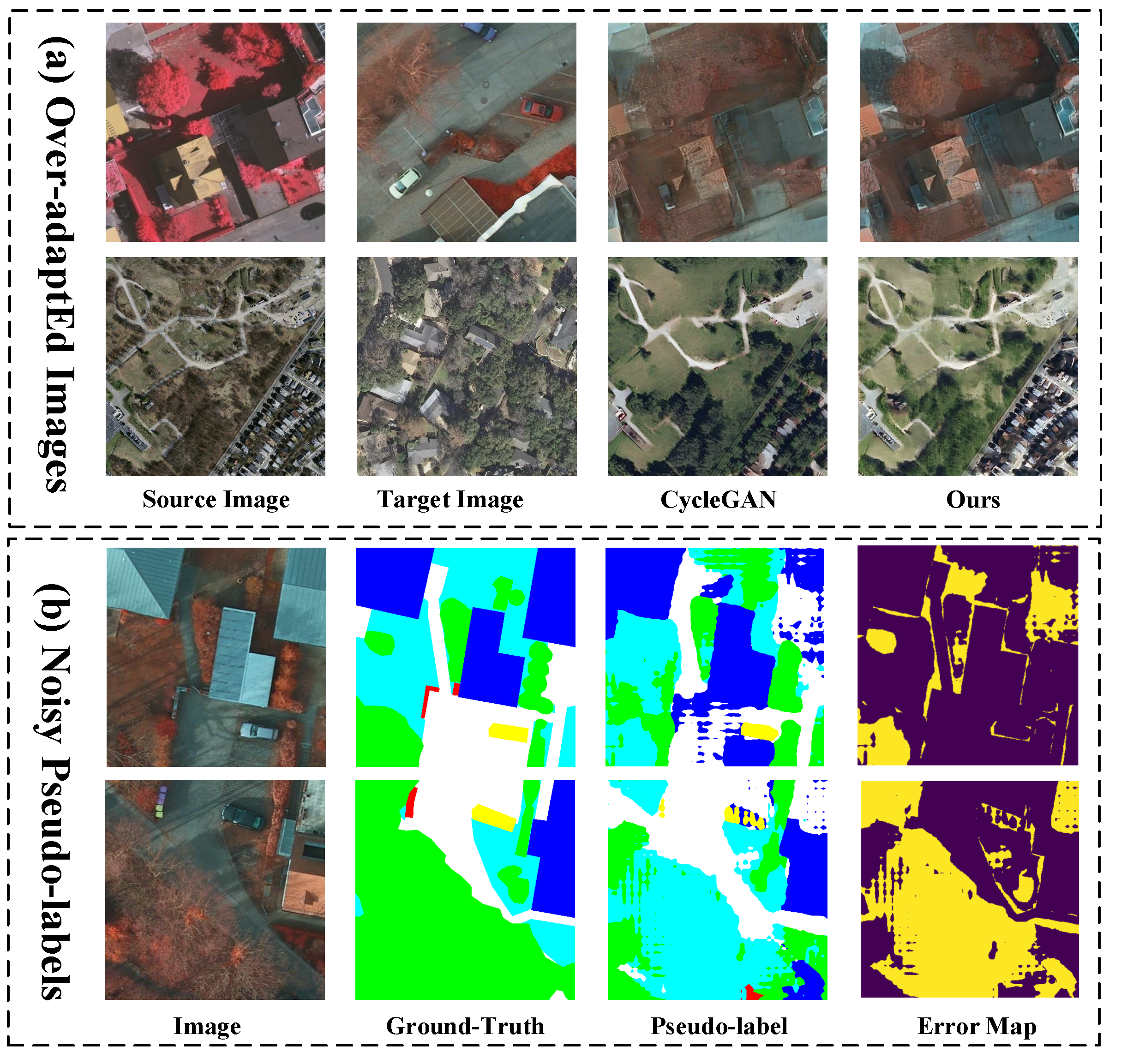

1. Introduction

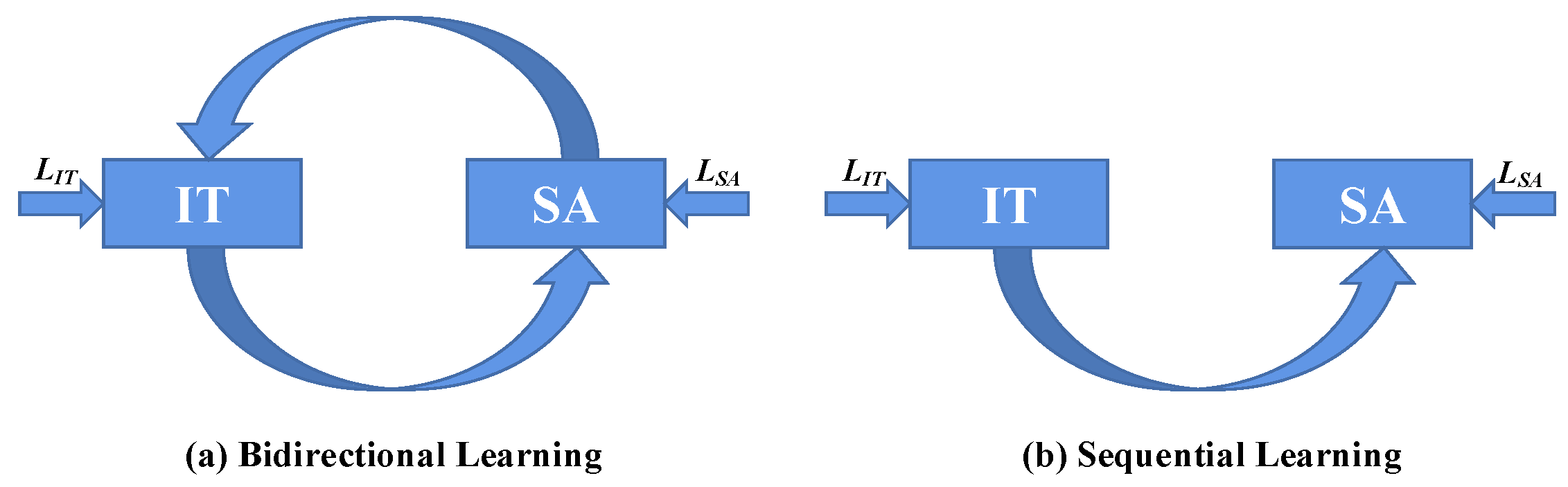

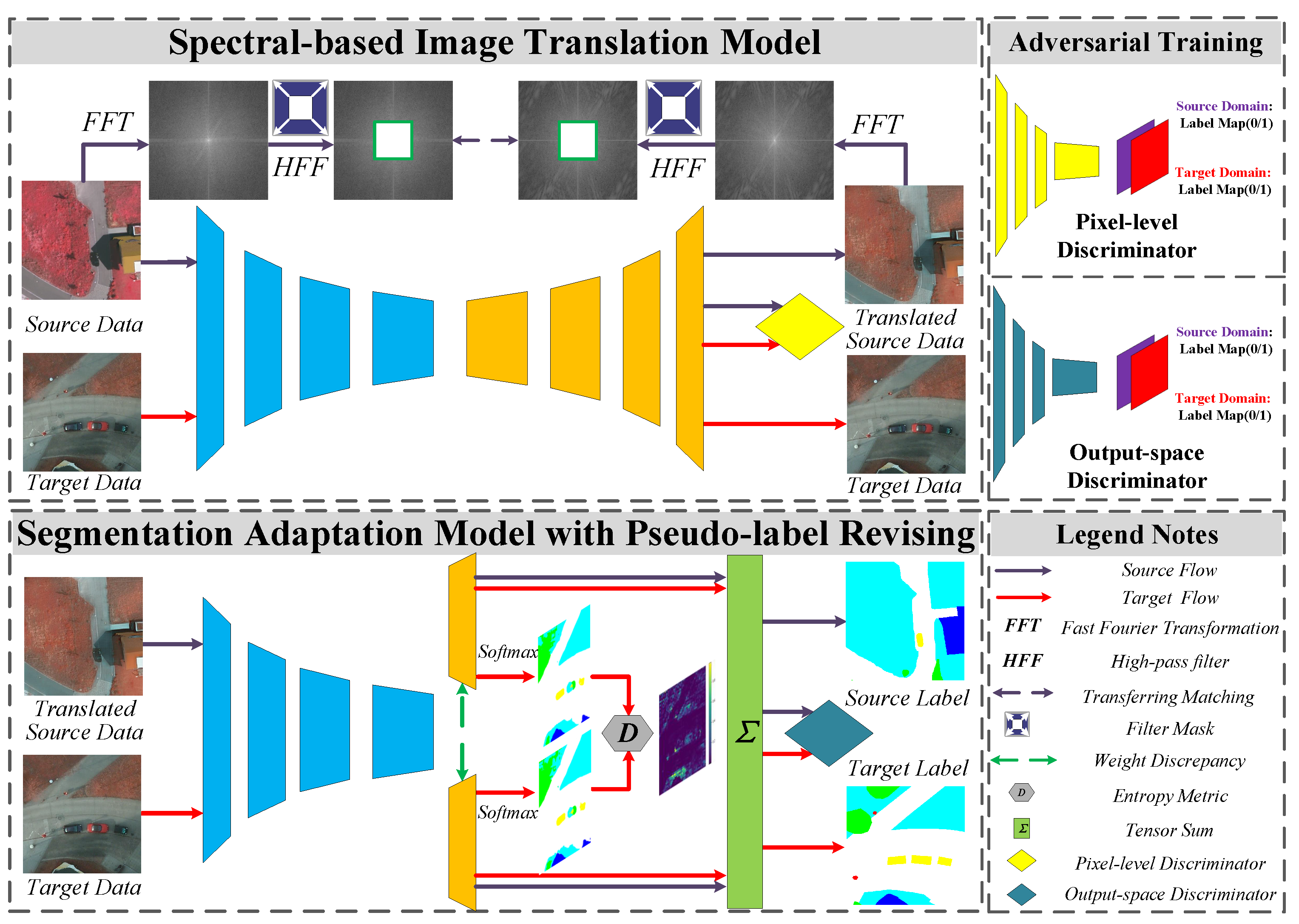

- Unlike most UDA segmentation methods, we propose a sequential learning system for unsupervised cross-scene aerial image segmentation. The system is decoupled into an image translation model and a segmentation adaptation model.

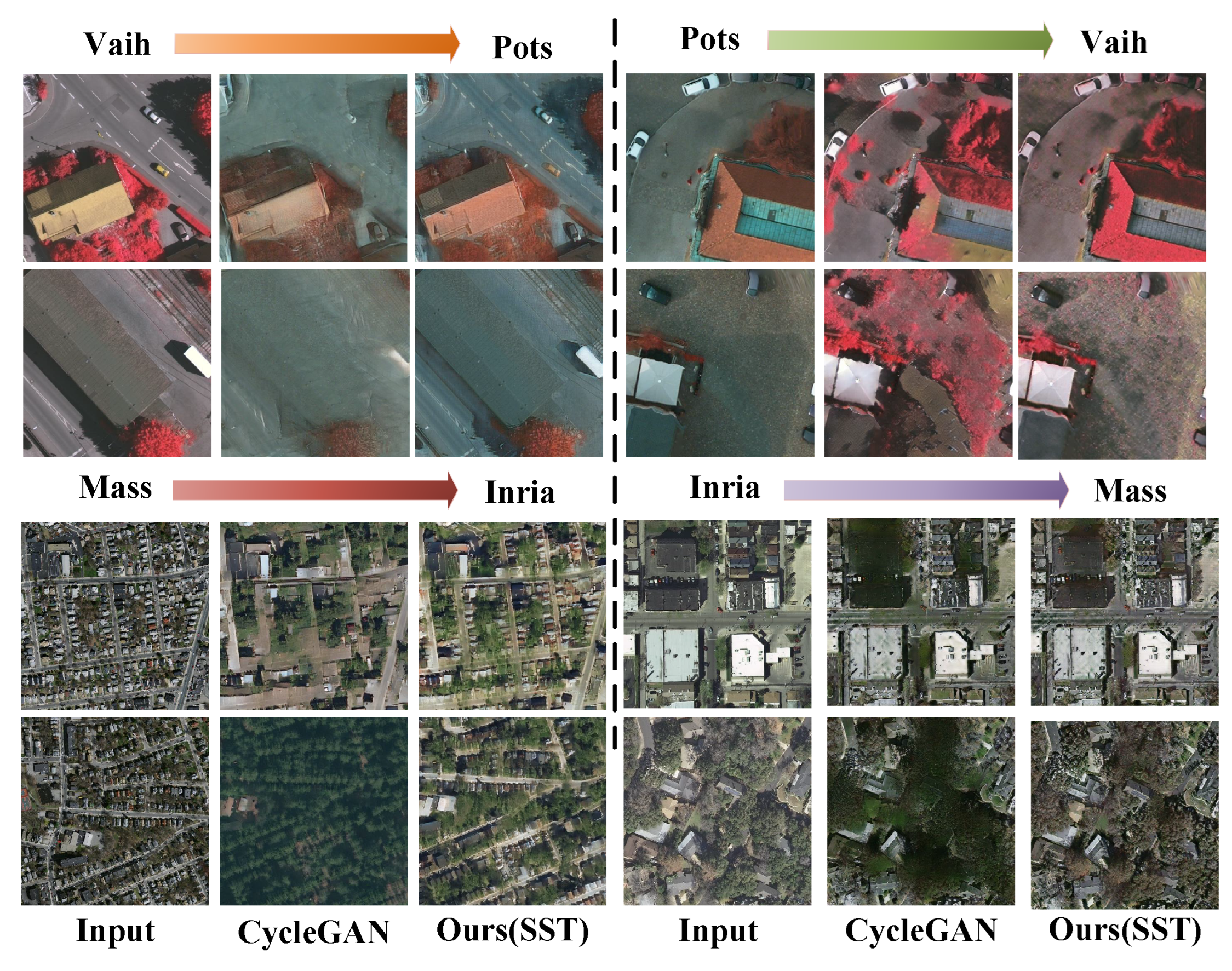

- A spectral space transferring (SST) module is proposed to transfer high-frequency components between the source image and the translated image, which better preserves identity and fine-grained details.

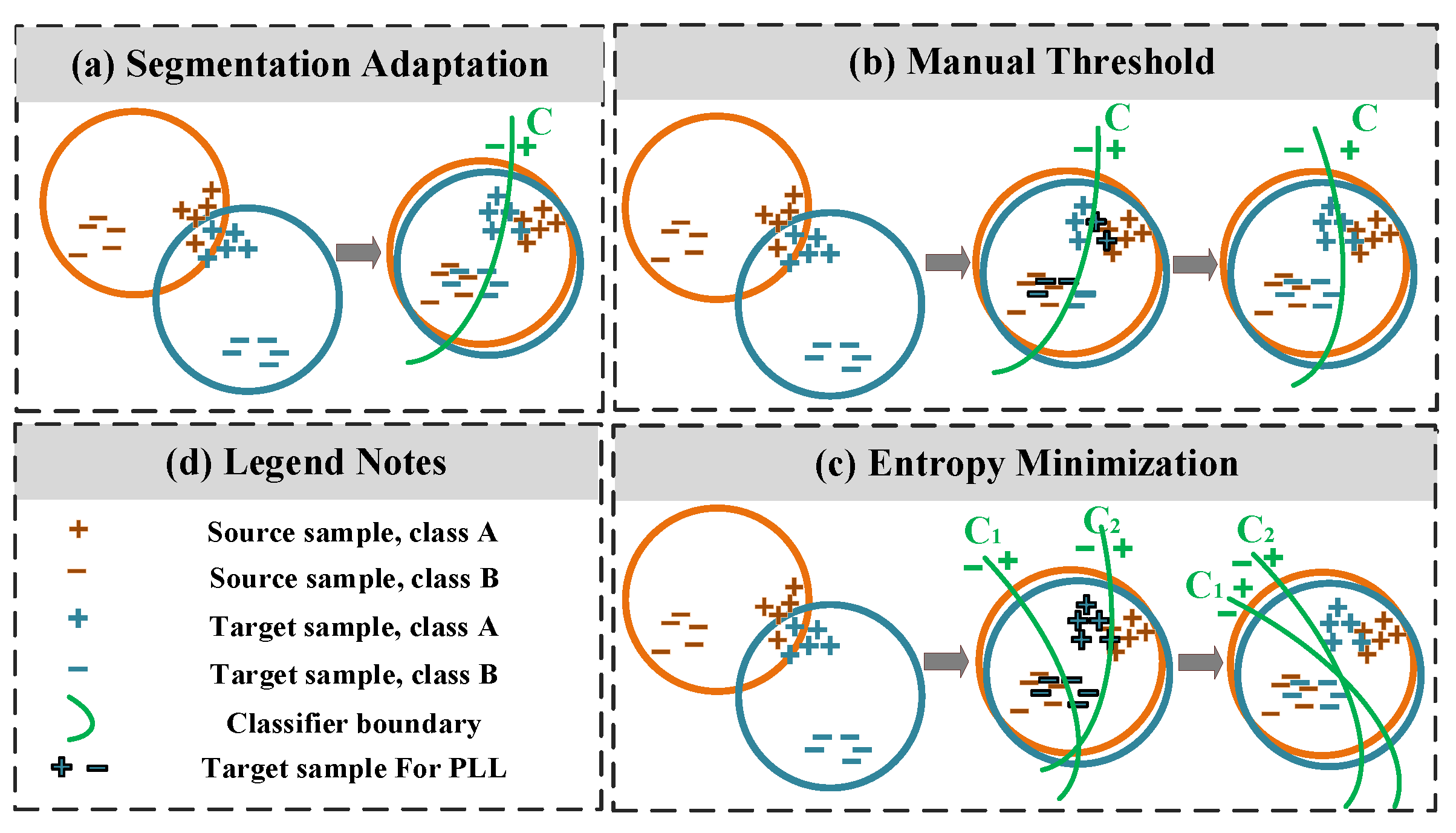

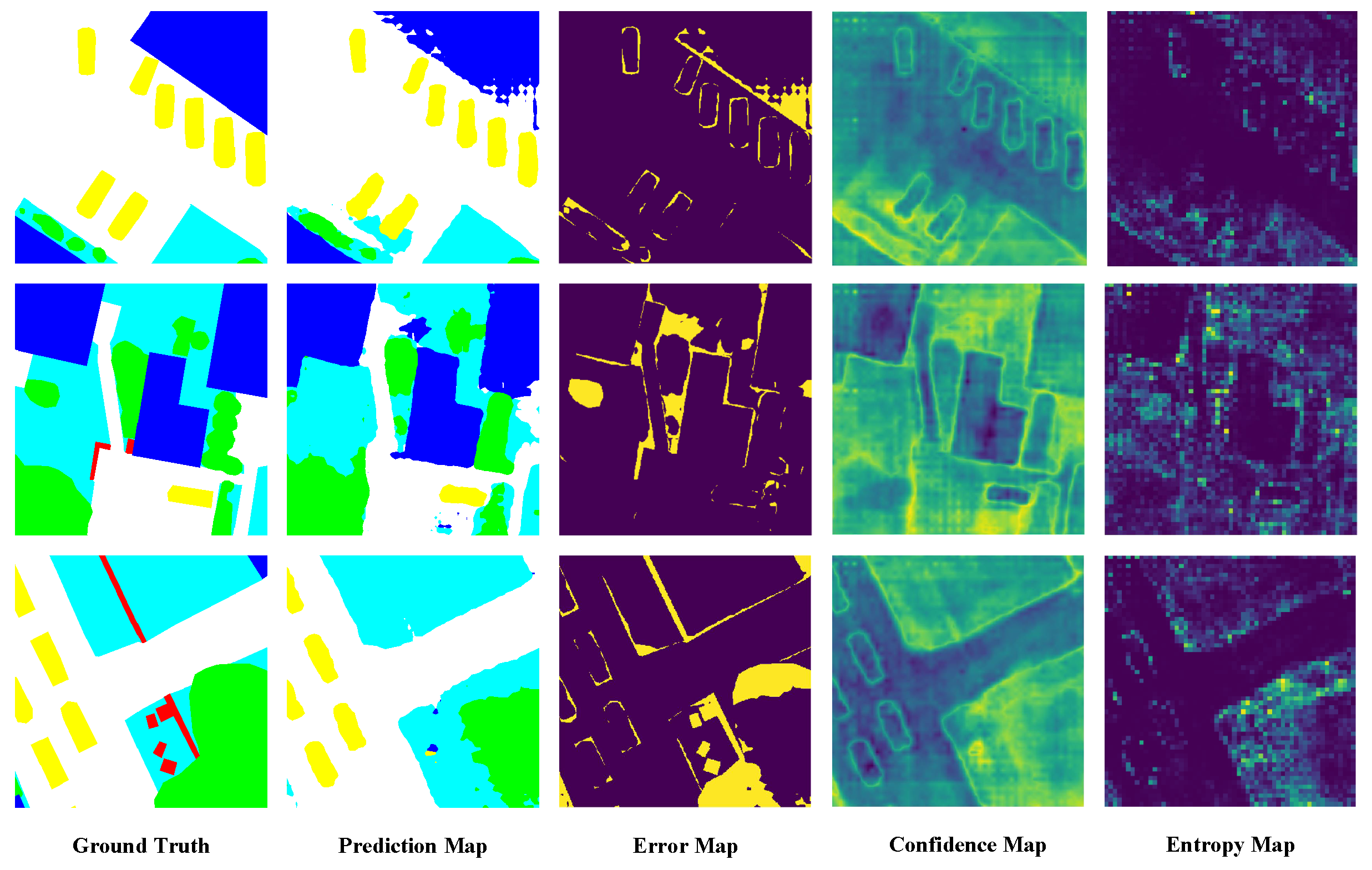

- To further alleviate the distribution discrepancy, an efficient pseudo-label revising (PLR) approach is developed to guide pseudo-label learning via entropy minimization. Without additional parameters, the entropy minimization acts as an adaptive threshold that continually revises the pseudo labels for the target domain.
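
The SST contribution above describes moving high-frequency components between the source image and the translated image. The following is a minimal sketch of one way such a transfer could be done with a 2D FFT, not the authors' implementation. The function names, the square low-frequency window, its relative size `beta`, and the direction of the transfer (source detail pasted into the translated image) are all assumptions made for illustration.

```python
import numpy as np


def high_frequency_mask(height, width, beta):
    """Boolean (H, W) mask: False on a centered low-frequency square, True elsewhere.

    `beta` (hypothetical parameter) is the side of the low-frequency square
    relative to the image size, applied to a spectrum centered with fftshift.
    """
    mask = np.ones((height, width), dtype=bool)
    half_h = int(np.floor(height * beta / 2))
    half_w = int(np.floor(width * beta / 2))
    cy, cx = height // 2, width // 2
    mask[cy - half_h: cy + half_h + 1, cx - half_w: cx + half_w + 1] = False
    return mask


def transfer_high_frequency(source, translated, beta=0.1):
    """Keep the low frequencies of `translated` and take the rest from `source`.

    Both inputs are float arrays of shape (H, W, C) with identical shapes.
    """
    if source.shape != translated.shape:
        raise ValueError("source and translated must have the same shape")
    height, width = source.shape[:2]
    mask = high_frequency_mask(height, width, beta)[..., None]

    # Centered spectra, computed per channel over the two spatial axes.
    src_spec = np.fft.fftshift(np.fft.fft2(source, axes=(0, 1)), axes=(0, 1))
    trn_spec = np.fft.fftshift(np.fft.fft2(translated, axes=(0, 1)), axes=(0, 1))

    # High frequencies from the source, low frequencies from the translation.
    mixed = np.where(mask, src_spec, trn_spec)
    restored = np.fft.ifft2(np.fft.ifftshift(mixed, axes=(0, 1)), axes=(0, 1))
    return restored.real
```

With `beta=1.0` the low-frequency window covers the whole spectrum and the translated image is returned unchanged; with `beta=0.0` only the per-channel mean of the translated image is kept and all remaining structure comes from the source.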

2. Related Works

2.1. Aerial Image Semantic Segmentation

2.2. UDA for Semantic Segmentation

3. Methodology

3.1. Overview

3.2. Spectral Space Transferring

3.3. Pseudo-Label Revising

3.4. Training Objective

Algorithm 1. Training process of the proposed method.

4. Experiments

4.1. Datasets

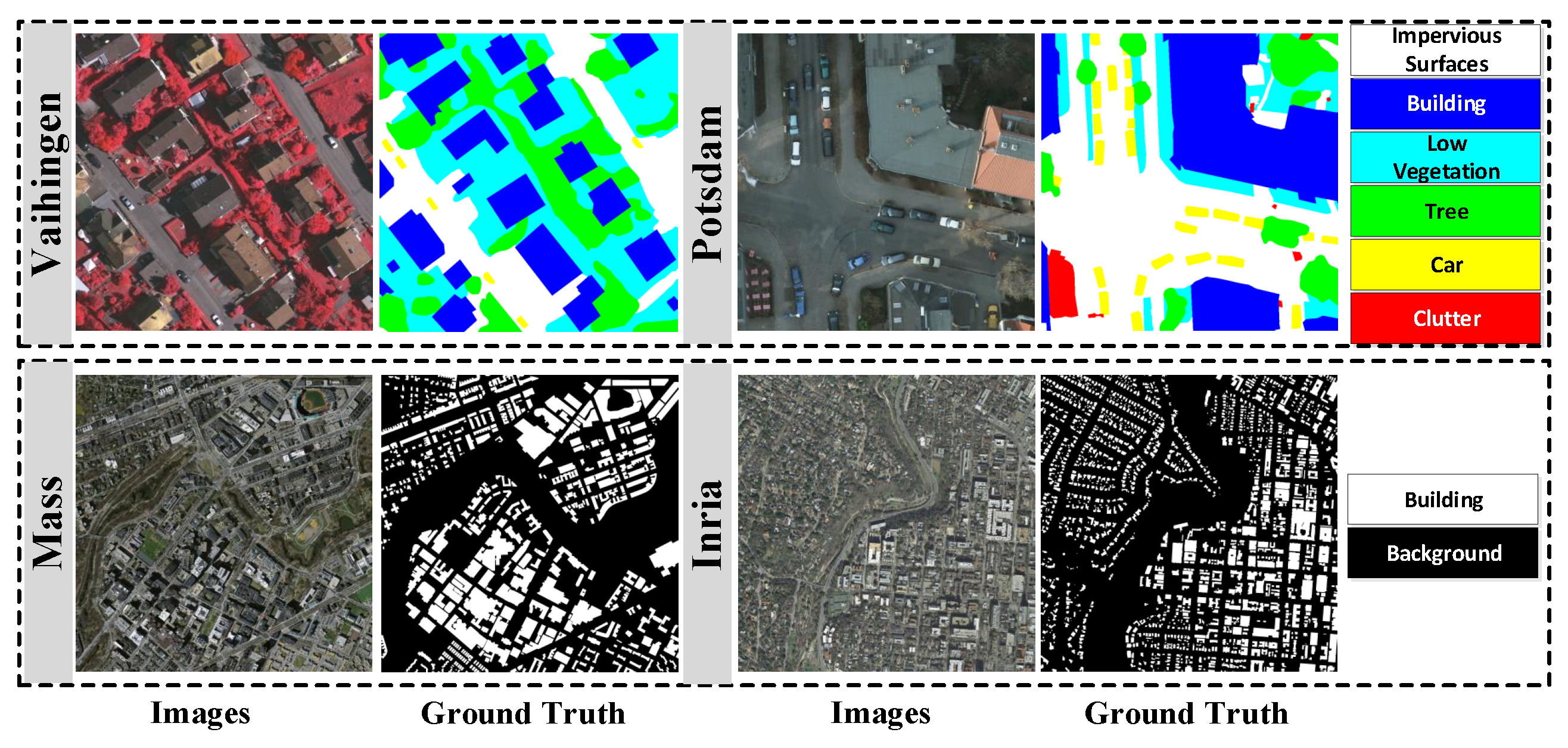

- The Massachusetts building dataset [59] comprises 151 aerial image tiles of the Boston area with a spatial resolution of 1 m/pixel and a size of pixels. The entire dataset covers about 340 square kilometers; 137 tiles are used for training, 10 for testing, and the remaining 4 for validation. Ground-truth labels are provided for each image and contain two categories: building and background.

- The Inria aerial image labeling dataset [60] consists of 360 RGB ortho-rectified aerial images with a resolution of pixels and a spatial resolution of 0.3 m/pixel. Following the previous work [36], we use images 6–36 for training and images 1–5 for validation. The corresponding ground-truth labels are provided and contain two categories: building and background.

- The ISPRS Vaihingen 2D Semantic Labeling Challenge dataset contains 33 tiles, of which 16 are used for training and the remaining 17 for testing. Each aerial image comes with the corresponding true orthophoto (TOP) and semantic label, and has an average size of pixels and a ground sampling distance of 9 cm. The ground-truth labels contain six categories: impervious surface, building, low vegetation, tree, car, and clutter/background.

- The ISPRS Potsdam 2D Semantic Labeling Challenge dataset comprises 38 tiles, of which 24 are used for training and 14 for validation. Each aerial image has an average size of pixels and a ground sampling distance of 5 cm.

4.2. Implementation Details

4.3. Experimental Results

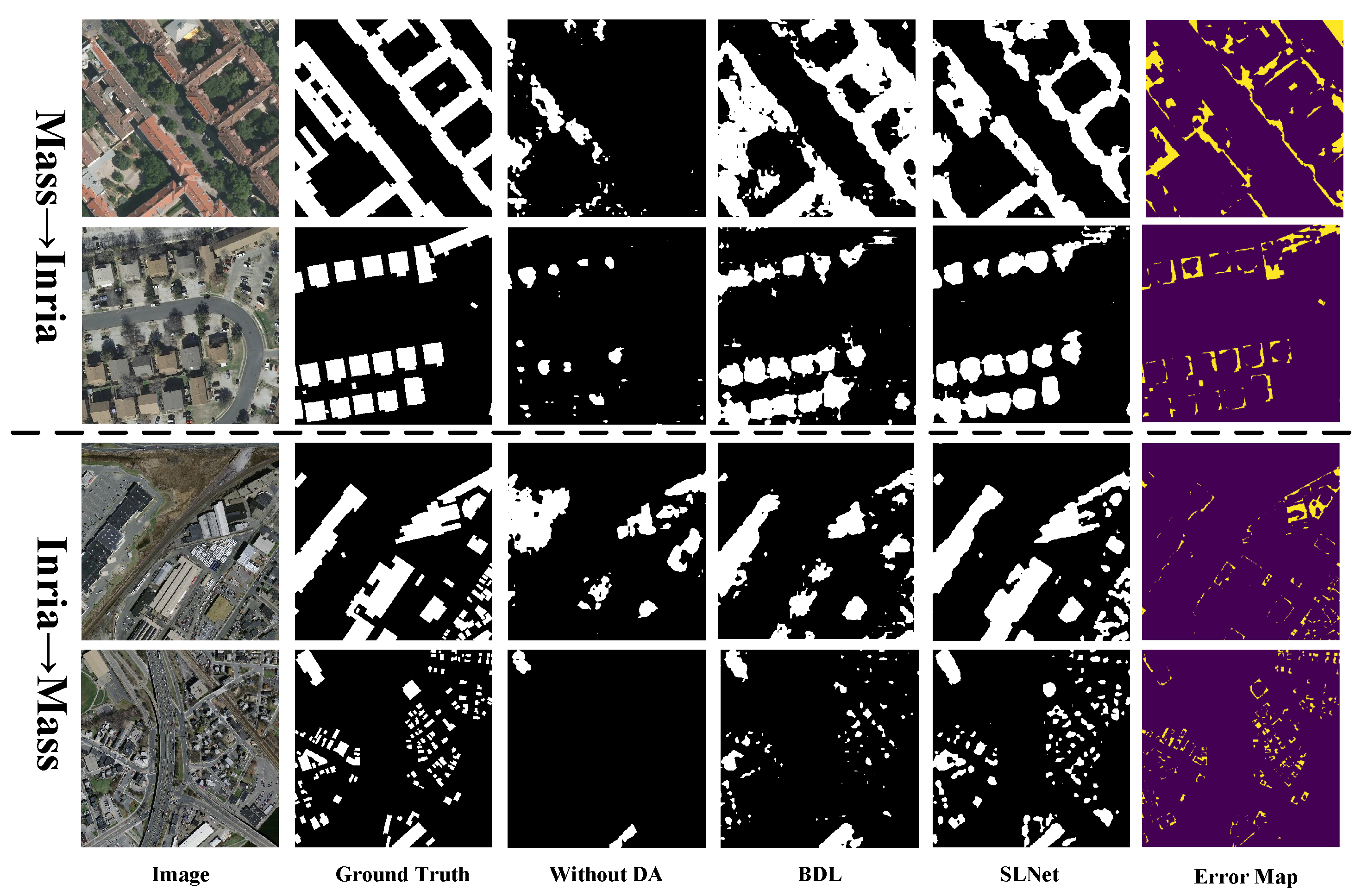

4.3.1. Results on Single-Category UDA Semantic Segmentation

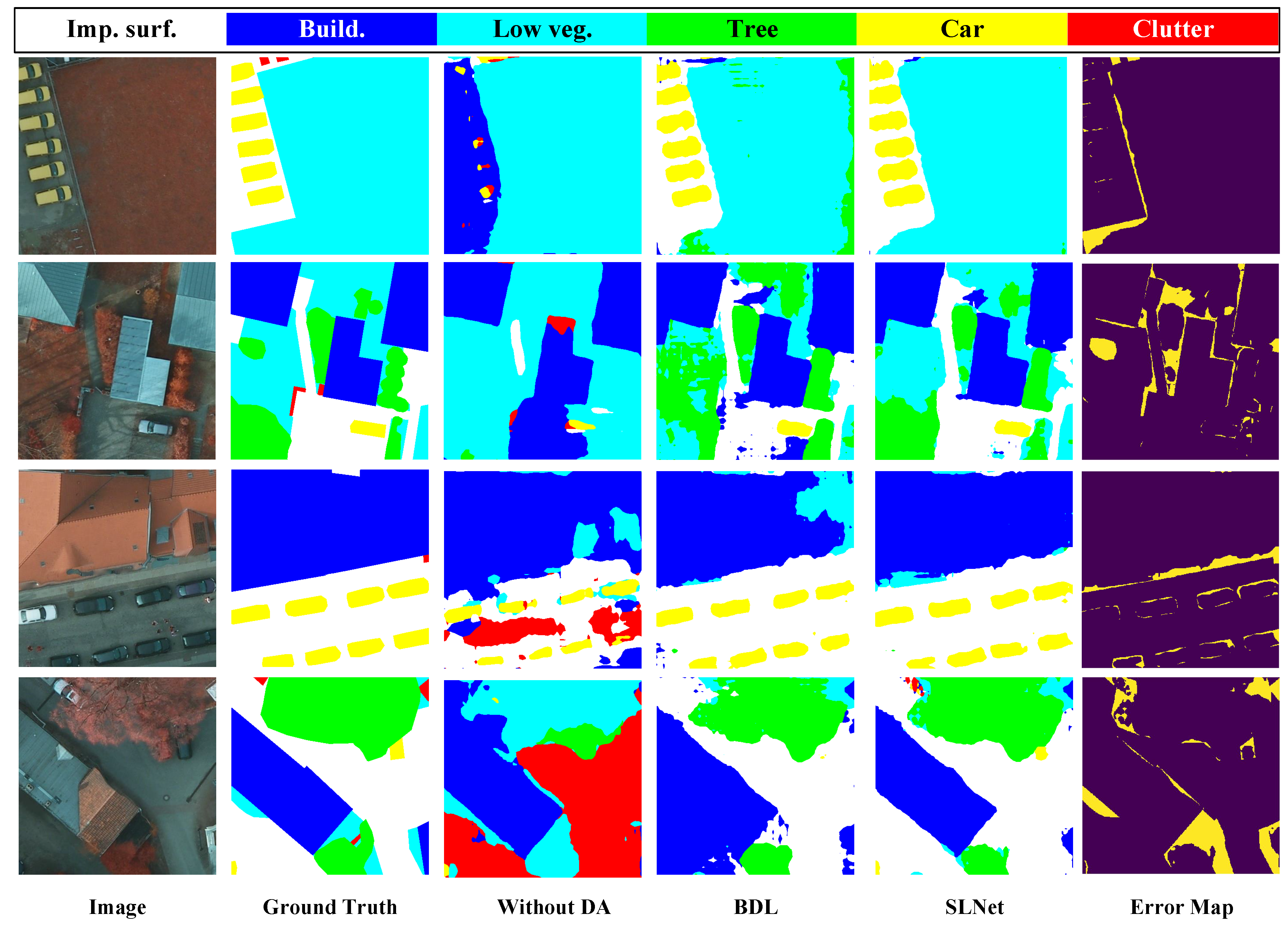

4.3.2. Results on Multi-Category UDA Semantic Segmentation

4.4. Ablation Analysis

4.4.1. Discussion

4.4.2. Analysis of Network Settings

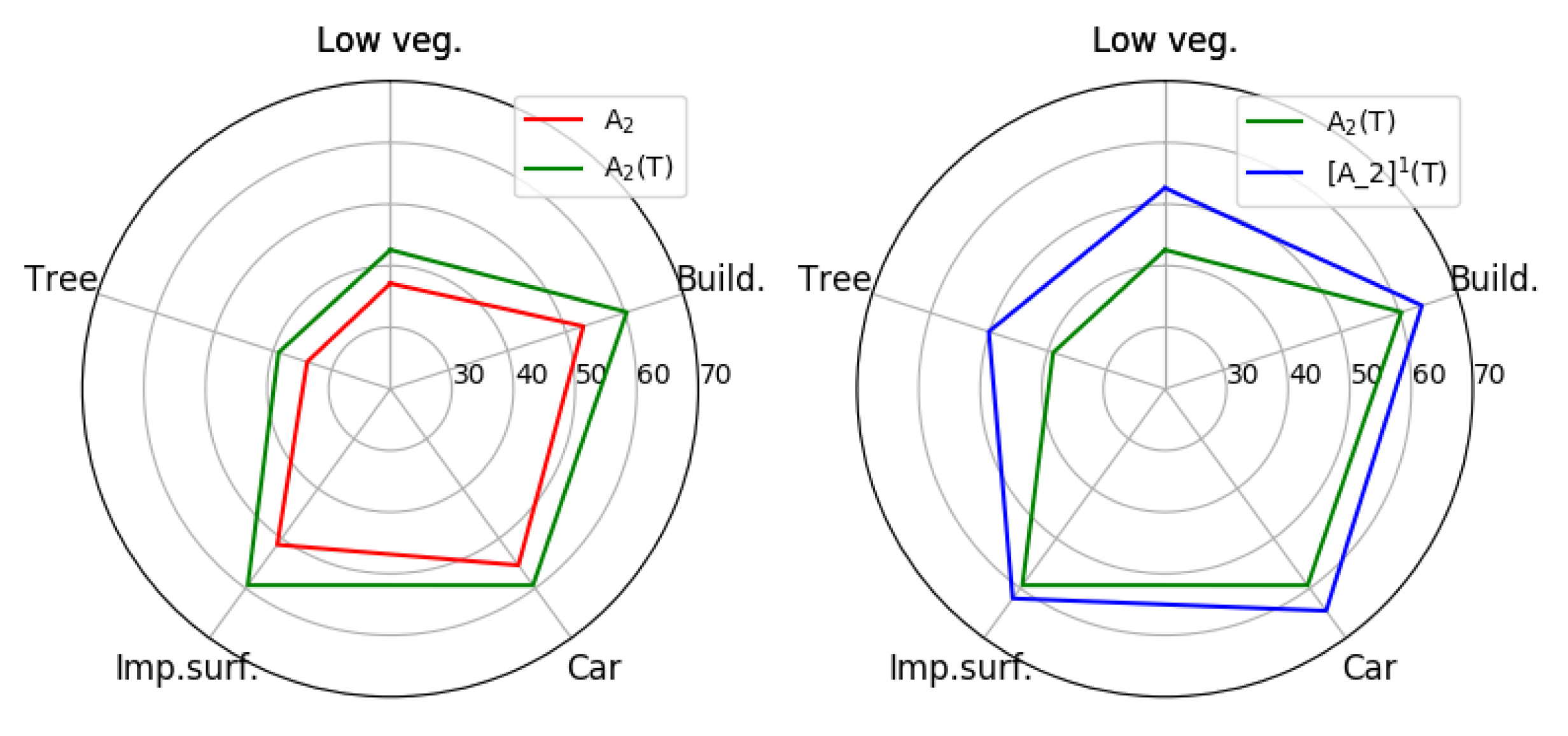

4.4.3. Effect of Spectral Space Transferring

4.4.4. Effect of Pseudo-Label Revising

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Stewart, I.D.; Oke, T.R. Local climate zones for urban temperature studies. Bull. Am. Meteorol. Soc. 2012, 93, 1879–1900.

- Matikainen, L.; Karila, K. Segment-based land cover mapping of a suburban area—Comparison of high-resolution remotely sensed datasets using classification trees and test field points. Remote Sens. 2011, 3, 1777–1804.

- Maboudi, M.; Amini, J.; Malihi, S.; Hahn, M. Integrating fuzzy object based image analysis and ant colony optimization for road extraction from remotely sensed images. ISPRS J. Photogramm. Remote Sens. 2018, 138, 151–163.

- Jin, X.; Davis, C.H. Automated building extraction from high-resolution satellite imagery in urban areas using structural, contextual, and spectral information. EURASIP J. Adv. Signal Process. 2005, 2005, 745309.

- Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199.

- Mou, L.; Hua, Y.; Zhu, X.X. Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high-resolution aerial images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7557–7569.

- Hua, Y.; Mou, L.; Zhu, X.X. Recurrently exploring class-wise attention in a hybrid convolutional and bidirectional lstm network for multi-label aerial image classification. ISPRS J. Photogramm. Remote Sens. 2019, 149, 188–199.

- Liu, W.; Sun, X.; Zhang, W.; Guo, Z.; Fu, K. Associatively segmenting semantics and estimating height from monocular remote-sensing imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5624317.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. Ccnet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-sne. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Lee, C.-Y.; Batra, T.; Baig, M.H.; Ulbricht, D. Sliced wasserstein discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10285–10295.

- Zhang, P.; Zhang, B.; Chen, D.; Yuan, L.; Wen, F. Cross-domain correspondence learning for exemplar-based image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5143–5153.

- Chang, W.-L.; Wang, H.-P.; Peng, W.-H.; Chiu, W.-C. All about structure: Adapting structural information across domains for boosting semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1900–1909.

- Xu, Y.; Du, B.; Zhang, L.; Zhang, Q.; Wang, G.; Zhang, L. Self-ensembling attention networks: Addressing domain shift for semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 5581–5588.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning PMLR, Lille, France, 6–11 July 2015; pp. 97–105.

- Geng, B.; Tao, D.; Xu, C. Daml: Domain adaptation metric learning. IEEE Trans. Image Process. 2011, 20, 2980–2989.

- Tsai, Y.-H.; Hung, W.-C.; Schulter, S.; Sohn, K.; Yang, M.-H.; Chandraker, M. Learning to adapt structured output space for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7472–7481.

- Zheng, Z.; Yang, Y. Unsupervised scene adaptation with memory regularization in vivo. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 1076–1082.

- Zhang, P.; Zhang, B.; Zhang, T.; Chen, D.; Wang, Y.; Wen, F. Prototypical pseudo label denoising and target structure learning for domain adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12414–12424.

- Zou, Y.; Yu, Z.; Kumar, B.; Wang, J. Unsupervised domain adaptation for semantic segmentation via class-balanced self-training. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 289–305.

- Zou, Y.; Yu, Z.; Liu, X.; Kumar, B.; Wang, J. Confidence regularized self-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5982–5991.

- Li, Y.; Yuan, L.; Vasconcelos, N. Bidirectional learning for domain adaptation of semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6936–6945.

- Tong, X.-Y.; Xia, G.-S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 2020, 237, 111322.

- Liu, Y.; Fan, B.; Wang, L.; Bai, J.; Xiang, S.; Pan, C. Semantic labeling in very high resolution images via a self-cascaded convolutional neural network. ISPRS J. Photogramm. Remote Sens. 2018, 145, 78–95.

- Niu, R.; Sun, X.; Tian, Y.; Diao, W.; Chen, K.; Fu, K. Hybrid multiple attention network for semantic segmentation in aerial images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5603018.

- Cao, Z.; Fu, K.; Lu, X.; Diao, W.; Sun, H.; Yan, M.; Yu, H.; Sun, X. End-to-end dsm fusion networks for semantic segmentation in high-resolution aerial images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1766–1770.

- Liu, W.; Zhang, W.; Sun, X.; Guo, Z.; Fu, K. Hecr-net: Height-embedding context reassembly network for semantic segmentation in aerial images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 9117–9131.

- Al-Najjar, H.A.; Pradhan, B.; Beydoun, G.; Sarkar, R.; Park, H.-J.; Alamri, A. A novel method using explainable artificial intelligence (xai)-based shapley additive explanations for spatial landslide prediction using time-series sar dataset. Gondwana Res. 2022.

- Hasanpour Zaryabi, E.; Moradi, L.; Kalantar, B.; Ueda, N.; Halin, A.A. Unboxing the black box of attention mechanisms in remote sensing big data using xai. Remote Sens. 2022, 14, 6254.

- Van der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (xai) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Benjdira, B.; Bazi, Y.; Koubaa, A.; Ouni, K. Unsupervised domain adaptation using generative adversarial networks for semantic segmentation of aerial images. Remote Sens. 2019, 11, 1369.

- Tasar, O.; Happy, S.; Tarabalka, Y.; Alliez, P. Colormapgan: Unsupervised domain adaptation for semantic segmentation using color mapping generative adversarial networks. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7178–7193.

- Wu, J.; Tang, Z.; Xu, C.; Liu, E.; Gao, L.; Yan, W. Super-resolution domain adaptation networks for semantic segmentation via pixel and output level aligning. Front. Earth Sci. 2020, 10, 974325.

- Deng, X.; Zhu, Y.; Tian, Y.; Newsam, S. Scale aware adaptation for land-cover classification in remote sensing imagery. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 19–25 June 2021; pp. 2160–2169.

- Liu, W.; Su, F.; Jin, X.; Li, H.; Qin, R. Bispace domain adaptation network for remotely sensed semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2020, 60, 1–11.

- Saenko, K.; Kulis, B.; Fritz, M.; Darrell, T. Adapting visual category models to new domains. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2010; pp. 213–226.

- Saltori, C.; Lathuiliére, S.; Sebe, N.; Ricci, E.; Galasso, F. Sf-uda 3d: Source-free unsupervised domain adaptation for lidar-based 3d object detection. In Proceedings of the 2020 IEEE International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020; pp. 771–780.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; Vaughan, J.W. A theory of learning from different domains. Mach. Learn. 2010, 79, 151–175.

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

- Long, M.; Wang, J.; Ding, G.; Sun, J.; Yu, P.S. Transfer feature learning with joint distribution adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2200–2207.

- Maria Carlucci, F.; Porzi, L.; Caputo, B.; Ricci, E.; Rota Bulo, S. Autodial: Automatic domain alignment layers. In Proceedings of the IEEE International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2017; pp. 5067–5075.

- Mancini, M.; Porzi, L.; Bulo, S.R.; Caputo, B.; Ricci, E. Boosting domain adaptation by discovering latent domains. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3771–3780.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.-Y.; Isola, P.; Saenko, K.; Efros, A.; Darrell, T. Cycada: Cycle-consistent adversarial domain adaptation. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1989–1998.

- Choi, J.; Kim, T.; Kim, C. Self-ensembling with gan-based data augmentation for domain adaptation in semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6830–6840.

- Hong, W.; Wang, Z.; Yang, M.; Yuan, J. Conditional generative adversarial network for structured domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1335–1344.

- Luo, Y.; Zheng, L.; Guan, T.; Yu, J.; Yang, Y. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2507–2516.

- Saito, K.; Ushiku, Y.; Harada, T. Asymmetric tri-training for unsupervised domain adaptation. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2988–2997.

- Deng, W.; Zheng, L.; Sun, Y.; Jiao, J. Rethinking triplet loss for domain adaptation. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 29–37.

- Pan, Y.; Yao, T.; Li, Y.; Wang, Y.; Ngo, C.-W.; Mei, T. Transferrable prototypical networks for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2239–2247.

- Sharma, V.; Murray, N.; Larlus, D.; Sarfraz, S.; Stiefelhagen, R.; Csurka, G. Unsupervised meta-domain adaptation for fashion retrieval. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2021; pp. 1348–1357.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. Workshop Chall. Represent. Learn. ICML 2013, 3, 896.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Cooley, J.W.; Lewis, P.A.; Welch, P.D. The fast fourier transform and its applications. IEEE Trans. Educ. 1969, 12, 27–34.

- Frigo, M.; Johnson, S.G. FFTW: An adaptive software architecture for the FFT. In Proceedings of the 1998 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP’98 (Cat. No. 98CH36181), Seattle, WA, USA, 15 May 1998; pp. 1381–1384.

- Zhou, Z.-H.; Li, M. Tri-training: Exploiting unlabeled data using three classifiers. IEEE Trans. Knowl. Data Eng. 2005, 17, 1529–1541.

- Mnih, V. Machine Learning for Aerial Image Labeling; University of Toronto: Toronto, ON, Canada, 2013.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labeling methods generalize to any city? the inria aerial image labeling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Bottou, L. Large-scale machine learning with stochastic gradient descent. In Proceedings of the COMPSTAT’2010, Paris, France, 22–27 August 2010; pp. 177–186.

- Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.

- Li, Y.; Shi, T.; Zhang, Y.; Chen, W.; Wang, Z.; Li, H. Learning deep semantic segmentation network under multiple weakly-supervised constraints for cross-domain remote sensing image semantic segmentation. ISPRS J. Photogramm. Remote Sens. 2021, 175, 20–33.

- Zhang, B.; Chen, T.; Wang, B. Curriculum-style local-to-global adaptation for cross-domain remote sensing image segmentation. ISPRS J. Photogramm. Remote Sens. 2021, 60, 1–12.

- Li, C.-L.; Chang, W.-C.; Cheng, Y.; Yang, Y.; Póczos, B. Mmd gan: Towards deeper understanding of moment matching network. Adv. Neural Inf. Process. Syst. 2017, 30, 2203–2213.

| Single-Category | Mass ⇒ Inria | | Inria ⇒ Mass | |
|---|---|---|---|---|
| Model | mIoU | mIoU Gap | mIoU | mIoU Gap |
| Source-only | 30.79 | 52.70 | 24.52 | 54.04 |
| ADDA * [64] | 43.52 | 39.97 | 33.38 | 45.18 |
| CyCADA * [46] | 45.81 | 37.69 | 39.86 | 38.70 |
| AdaptSegNet * [20] | 46.28 | 37.21 | 43.17 | 35.39 |
| ScaleNet * [37] | 50.22 | 33.27 | 46.44 | 32.12 |
| SRDA-Net [36] | 52.86 | 30.64 | - | - |
| BDL * [25] | 54.16 | 29.33 | 48.60 | 29.96 |
| SLNet | 57.32 | 26.17 | 51.16 | 27.40 |
| Target-only | 83.49 | 0.00 | 78.56 | 0.00 |

| Multi-Category | Vaihingen ⇒ Potsdam | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Model | Imp. Surf. | Build. | Low Veg. | Tree | Car | mIoU (%) | Mean (%) | mIoU Gap (%) |
| Source-only | 19.09 | 32.98 | 22.59 | 5.53 | 8.01 | 17.64 | 20.91 | 55.05 |
| GAN-RSDA [34] | 18.66 | 27.40 | 19.72 | 32.06 | 0.59 | 19.69 | 21.96 | 53.00 |
| SEANet [17] | 28.90 | 36.24 | 8.70 | 5.17 | 44.77 | 24.76 | 37.04 | 47.93 |
| AdaptSegNet * [20] | 43.18 | 44.26 | 39.86 | 21.41 | 11.26 | 31.99 | 44.94 | 40.70 |
| CyCADA * [46] | 45.36 | 33.62 | 29.13 | 37.07 | 16.49 | 32.33 | 46.58 | 40.36 |
| ADDA * [64] | 46.29 | 42.21 | 42.54 | 26.29 | 23.56 | 36.18 | 49.05 | 36.51 |
| BSANet [38] | 50.21 | 49.98 | 28.67 | 27.66 | 41.51 | 39.61 | 53.19 | 33.08 |
| Dual_GAN [65] | 45.96 | 59.01 | 41.73 | 25.80 | 39.71 | 42.44 | 58.77 | 30.25 |
| ScaleNet [37] | 49.76 | 46.82 | 52.93 | 40.23 | 42.97 | 46.54 | - | 26.15 |
| SRDA-Net [36] | 60.20 | 61.00 | 51.80 | 36.80 | 63.40 | 54.64 | - | 18.05 |
| CCDA_LGFA [66] | 64.39 | 66.44 | 47.17 | 37.55 | 59.35 | 54.98 | 70.28 | 17.71 |
| BDL * [25] | 61.56 | 59.98 | 44.06 | 45.70 | 66.05 | 55.47 | 72.04 | 17.22 |
| SLNet | 65.05 | 65.86 | 52.63 | 54.08 | 75.25 | 62.58 | 75.85 | 10.11 |
| Target-only | 77.13 | 79.45 | 60.08 | 66.56 | 80.24 | 72.69 | 83.74 | 0.00 |
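
The table does not spell out how the mIoU and mIoU Gap columns are derived. The reported numbers are consistent with mIoU being the arithmetic mean of the five per-class IoU values and the gap being the target-only mIoU minus the model's mIoU, up to rounding in the last digit. The short check below illustrates that reading for three rows of the Vaihingen ⇒ Potsdam table; the function names are hypothetical and the formulas are an inference from the numbers, not a definition taken from the paper.

```python
from statistics import mean

# Per-class IoU (%) copied from the Vaihingen => Potsdam table (five classes).
PER_CLASS_IOU = {
    "Source-only": [19.09, 32.98, 22.59, 5.53, 8.01],
    "SLNet": [65.05, 65.86, 52.63, 54.08, 75.25],
    "Target-only": [77.13, 79.45, 60.08, 66.56, 80.24],
}


def mean_iou(per_class):
    """Arithmetic mean of per-class IoU values (assumed definition of mIoU)."""
    return mean(per_class)


def miou_gap(model_miou, target_only_miou):
    """Distance to the supervised upper bound (assumed definition of mIoU Gap)."""
    return target_only_miou - model_miou


if __name__ == "__main__":
    upper_bound = mean_iou(PER_CLASS_IOU["Target-only"])
    for name, ious in PER_CLASS_IOU.items():
        value = mean_iou(ious)
        print(f"{name}: mIoU={value:.2f}, gap={miou_gap(value, upper_bound):.2f}")
```

Because the per-class values in the table are already rounded, recomputed means can differ from the reported mIoU by about 0.01.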

| Multi-Category | Potsdam ⇒ Vaihingen | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Model | Imp. Surf. | Build. | Low Veg. | Tree | Car | mIoU (%) | Mean (%) | mIoU Gap (%) |
| Source-only | 40.39 | 32.81 | 24.16 | 23.58 | 5.33 | 25.25 | 37.02 | 53.09 |
| GAN-RSDA [34] | 34.55 | 40.64 | 22.58 | 24.94 | 9.90 | 26.52 | 40.77 | 51.82 |
| ADDA * [64] | 43.28 | 52.16 | 24.39 | 28.84 | 15.26 | 32.79 | 45.68 | 45.55 |
| SEANet [17] | 41.84 | 56.80 | 20.83 | 21.86 | 31.72 | 34.61 | 49.98 | 43.73 |
| CyCADA * [46] | 48.59 | 60.04 | 27.71 | 36.84 | 13.49 | 37.33 | 53.37 | 41.01 |
| AdaptSegNet * [20] | 51.96 | 56.19 | 25.68 | 45.97 | 30.16 | 41.99 | 58.49 | 36.35 |
| BSANet [38] | 53.55 | 59.87 | 23.91 | 51.93 | 27.24 | 43.30 | 62.68 | 35.04 |
| Dual_GAN [65] | 46.19 | 65.44 | 27.85 | 55.82 | 40.31 | 47.12 | 63.01 | 31.22 |
| ScaleNet [37] | 55.22 | 64.46 | 31.34 | 50.40 | 39.86 | 47.66 | - | 30.68 |
| BDL * [25] | 60.05 | 67.48 | 46.42 | 65.40 | 37.81 | 56.67 | 71.52 | 21.67 |
| CCDA_LGFA [66] | 67.74 | 76.75 | 47.02 | 55.03 | 44.90 | 58.29 | 70.06 | 20.05 |
| SLNet | 70.62 | 76.72 | 52.22 | 68.35 | 45.62 | 62.71 | 76.42 | 15.63 |
| Target-only | 79.36 | 85.615 | 72.94 | 75.32 | 78.48 | 78.34 | 87.61 | 0.00 |

| Method | SSL | K | mIoU (%) |
|---|---|---|---|
| | N | - | 17.64 |
| | N | - | 44.87 |
| | N | - | 46.18 |
| | N | - | 41.76 |
| | N | - | 48.16 |
| | N | - | 50.81 |
| | N | - | 45.64 |
| | N | - | 50.29 |
| | N | - | 52.17 |
| | Y | 1 | 58.63 |
| | Y | 2 | 62.58 |
| | Y | 3 | 60.24 |

| Method | | mIoU (%) | ↑ |
|---|---|---|---|
| | - | 46.18 | 0.00 |
| | 0.00 | 48.19 | 2.01 |
| | 0.01 | 50.34 | 4.16 |
| | 0.05 | 51.67 | 5.49 |
| | 0.10 | 51.91 | 5.73 |
| | 0.15 | 52.17 | 5.99 |
| | 0.00 | 53.95 | 7.77 |
| | 0.01 | 55.88 | 9.70 |
| | 0.05 | 57.92 | 11.74 |
| | 0.10 | 58.63 | 12.45 |
| | 0.15 | 57.27 | 11.09 |

| Method | Threshold | mIoU (%) |
|---|---|---|
| | - | 52.17 |
| + Pseudo-label Learning | 0.95 | 53.71 |
| | 0.90 | 55.88 |
| | 0.85 | 56.42 |
| | 0.80 | 55.17 |
| | 0.75 | 55.49 |
| | 0.70 | 54.38 |
| | - | 58.63 |
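
The table contrasts pseudo-label learning under fixed confidence thresholds with rows that use no threshold, and the Introduction states that entropy minimization acts as an adaptive threshold. The sketch below shows the fixed-threshold rule in its usual form, together with a per-pixel normalized entropy map as one possible threshold-free confidence signal. It is an illustration only: how the paper's PLR approach actually uses entropy is not recoverable from this text, and `IGNORE_INDEX` and both function names are hypothetical.

```python
import numpy as np

IGNORE_INDEX = 255  # hypothetical label for pixels excluded from the loss


def fixed_threshold_pseudo_labels(probs, threshold):
    """Hard pseudo labels from softmax output `probs` of shape (C, H, W).

    Pixels whose top class probability is below `threshold` are set to
    IGNORE_INDEX so that they do not contribute to training.
    """
    labels = probs.argmax(axis=0)
    confidence = probs.max(axis=0)
    return np.where(confidence >= threshold, labels, IGNORE_INDEX)


def normalized_entropy(probs, eps=1e-12):
    """Per-pixel Shannon entropy divided by log(C), so values lie in [0, 1].

    Low values mark confident pixels, high values mark uncertain ones. A
    threshold-free scheme could use this map to down-weight uncertain pixels
    (an assumption made for illustration).
    """
    num_classes = probs.shape[0]
    entropy = -(probs * np.log(probs + eps)).sum(axis=0)
    return entropy / np.log(num_classes)
```

A fixed threshold applies the same cut-off to every pixel and every training stage, which is the hyperparameter the table sweeps from 0.95 down to 0.70.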

| Method | Metric Functions | mIoU (%) |
|---|---|---|
| | MMD [67] | 55.25 |
| | KL-divergence | 56.94 |
| | JS-divergence | 58.63 |
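
For readers comparing the metric functions in the table, the following sketch gives the standard definitions of the KL and JS divergences for discrete probability distributions. Which pair of distributions the method compares is not stated in this text, so the inputs here are generic probability vectors; the function names and the `eps` smoothing constant are illustrative choices.

```python
import numpy as np


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for discrete distributions; asymmetric and unbounded."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))


def js_divergence(p, q, eps=1e-12):
    """Jensen-Shannon divergence: symmetric, bounded above by ln 2 (natural log)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mixture = 0.5 * (p + q)
    return 0.5 * kl_divergence(p, mixture, eps) + 0.5 * kl_divergence(q, mixture, eps)
```

Unlike KL, the JS divergence gives the same value when its arguments are swapped and stays finite even when the two distributions do not overlap.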


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, W.; Zhang, W.; Sun, X.; Guo, Z. Unsupervised Cross-Scene Aerial Image Segmentation via Spectral Space Transferring and Pseudo-Label Revising. Remote Sens. 2023, 15, 1207. https://doi.org/10.3390/rs15051207
