A Lightweight Siamese Neural Network for Building Change Detection Using Remote Sensing Images

Abstract

1. Introduction

- A lightweight Siamese network, LightCDNet, is proposed for BuCD from RSIs. LightCDNet consists of a memory-efficient encoder that computes multilevel deep features and a corresponding decoder with deconvolutional layers that recovers the changed buildings.

- The MultiTFFM is designed to exploit multilevel and multiscale building change features. First, it fuses the multi-temporal low-level features (LoLeFs) and high-level features (HiLeFs) separately. Subsequently, the fused HiLeFs are processed by the atrous spatial pyramid pooling (ASPP) module to extract multiscale features. Finally, the fused LoLeFs and the multiscale HiLeFs are combined to produce the final feature maps, which capture both the locations and the semantics of diverse buildings.
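The ASPP step above follows the DeepLab family of models cited in the references: several atrous (dilated) convolutions with different rates run in parallel on the same feature map, so each branch aggregates context at a different scale. The rates used in LightCDNet are not given in this section; the sketch below assumes a DeepLabv3-style configuration of one 1×1 branch and three 3×3 branches with rates 6, 12 and 18 (the image-level pooling branch is omitted). It computes the span of each dilated kernel, k + (k - 1)(r - 1), and includes a naive 1-D atrous convolution. The names `effective_kernel`, `dilated_conv1d` and `ASPP_BRANCHES` are illustrative and not taken from the paper's code.

```python
from typing import List, Tuple


def effective_kernel(k: int, rate: int) -> int:
    """Span (in pixels) of a k-tap kernel whose taps are `rate` pixels apart."""
    return k + (k - 1) * (rate - 1)


def dilated_conv1d(x: List[float], w: List[float], rate: int) -> List[float]:
    """'Valid' 1-D atrous convolution, written as cross-correlation like DL frameworks do."""
    span = effective_kernel(len(w), rate)
    return [
        sum(w[j] * x[i + j * rate] for j in range(len(w)))
        for i in range(len(x) - span + 1)
    ]


# Assumed DeepLabv3-style ASPP branches as (kernel size, atrous rate).
ASPP_BRANCHES: List[Tuple[int, int]] = [(1, 1), (3, 6), (3, 12), (3, 18)]

if __name__ == "__main__":
    for k, r in ASPP_BRANCHES:
        print(f"{k}x{k} kernel, rate {r:2d}: spans {effective_kernel(k, r)} pixels per axis")
    print(dilated_conv1d([float(i) for i in range(10)], [1.0, 1.0, 1.0], rate=2))
```

A 3×3 kernel at rate 18 spans 37 pixels while keeping only nine weights, which is why ASPP can add multiscale context cheaply.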

2. Materials and Methods

2.1. Datasets for BuCD

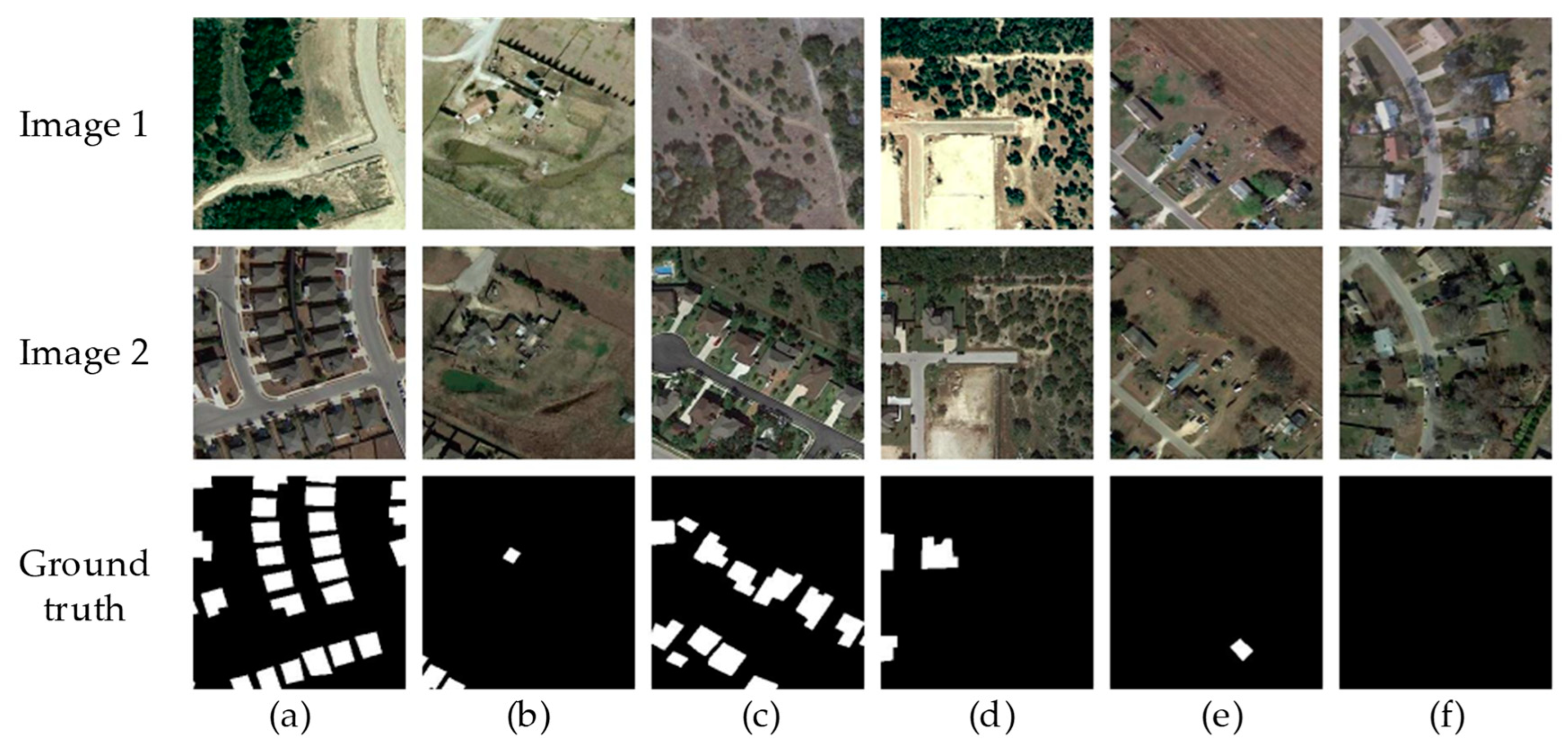

2.1.1. LEVIRCD

2.1.2. WHUCD

2.2. Methods

2.2.1. Siamese Encoder
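The ablation tables identify the memory-efficient Siamese encoder as MobileNetV2, whose building block is the inverted residual bottleneck (InvResB): a 1×1 expansion by a factor t, a 3×3 depthwise convolution, and a linear 1×1 projection. As a rough illustration of why this is cheap, the sketch below counts weights (biases and batch-norm parameters ignored) for a dense 3×3 convolution, a depthwise separable one, and an InvResB. The channel sizes are arbitrary examples and t = 6 is the MobileNetV2 default, not a value confirmed for LightCDNet. Because the two Siamese branches share weights, such counts apply once to the encoder rather than once per date.

```python
def standard_conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a dense k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a k x k depthwise convolution followed by a 1x1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


def inverted_residual_params(c_in: int, c_out: int, t: int = 6, k: int = 3) -> int:
    """Weights of a MobileNetV2-style inverted residual bottleneck (biases/BN ignored)."""
    hidden = t * c_in
    expand = c_in * hidden if t != 1 else 0  # MobileNetV2 omits the expansion when t == 1
    depthwise = k * k * hidden               # one k x k filter per hidden channel
    project = hidden * c_out                 # linear 1x1 bottleneck projection
    return expand + depthwise + project


if __name__ == "__main__":
    # A dense 3x3 conv on a 192-channel tensor vs. an InvResB that expands 32 -> 192 channels.
    print("dense 3x3, 192->192:    ", standard_conv_params(192, 192))
    print("depthwise separable 192:", depthwise_separable_params(192, 192))
    print("InvResB 32->32, t=6:    ", inverted_residual_params(32, 32, t=6))
```

In this example, an InvResB that internally works at 192 channels needs about 14 k weights, whereas a dense 3×3 convolution at that width needs about 332 k.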

2.2.2. Multi-Temporal Feature Fusion Module

2.2.3. Decoder
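The decoder recovers full-resolution change maps with deconvolutional (transposed convolution) layers. The sketch below uses the output-size formula documented for PyTorch's `ConvTranspose2d`, out = (in - 1)·s - 2p + d(k - 1) + output_padding + 1, and a naive unpadded 1-D transposed convolution in which each input value scatters a scaled copy of the kernel. The kernel size 4, stride 2, padding 1 example is a common choice for exact 2× upsampling and is an assumption, not a setting confirmed by the paper.

```python
from typing import List


def deconv_output_size(n_in: int, k: int, stride: int, padding: int = 0,
                       output_padding: int = 0, dilation: int = 1) -> int:
    """Output length of a transposed convolution (same formula as PyTorch's ConvTranspose2d)."""
    return (n_in - 1) * stride - 2 * padding + dilation * (k - 1) + output_padding + 1


def transposed_conv1d(x: List[float], w: List[float], stride: int) -> List[float]:
    """Unpadded 1-D transposed convolution: each input value adds a scaled copy of w."""
    k = len(w)
    out = [0.0] * ((len(x) - 1) * stride + k)
    for i, xi in enumerate(x):
        for j, wj in enumerate(w):
            out[i * stride + j] += xi * wj  # overlapping copies are summed
    return out


if __name__ == "__main__":
    # Assumed k=4, s=2, p=1 setting: doubles a 64-pixel feature map to 128 pixels.
    print(deconv_output_size(64, k=4, stride=2, padding=1))
    print(transposed_conv1d([1.0, 2.0], [1.0, 1.0], stride=2))
```

With the kernel [1, 1] and stride 2, the transposed convolution reproduces nearest-neighbour upsampling; learning the kernel is what distinguishes a deconvolutional decoder from the fixed upsampling variant compared in the decoder ablation.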

2.2.4. Loss Function
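The abbreviation list includes cross entropy (CE) as a loss term. As a generic reference point, the sketch below computes a pixel-wise binary CE between predicted change probabilities and 0/1 change labels, with the change map flattened to a list of pixels. Whether LightCDNet uses binary or two-class softmax CE, and whether CE is combined with another term such as the Dice loss of the cited V-Net, is an open assumption here; the function name `binary_cross_entropy` is illustrative.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-7) -> float:
    """Mean pixel-wise binary CE between change probabilities and 0/1 change labels."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and of equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


if __name__ == "__main__":
    labels = [1, 0, 0, 1]        # flattened toy change mask
    good = [0.9, 0.1, 0.2, 0.8]  # confident, mostly correct prediction
    bad = [0.2, 0.7, 0.6, 0.3]   # mostly wrong prediction
    print(binary_cross_entropy(good, labels), binary_cross_entropy(bad, labels))
```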

2.2.5. Implementation Details
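The abbreviation list includes output stride (OtS), the ratio between the input size and the spatial size of the encoder's final feature map. The sketch below shows the resulting arithmetic; the 256 × 256 input and the OtS values of 8 and 16 (common DeepLab settings) are assumptions for illustration. It also counts how many 2× stages, such as stride-2 deconvolutions, a decoder would need to return to full resolution.

```python
def feature_map_size(input_size: int, output_stride: int) -> int:
    """Spatial size of the encoder's final feature map for a given output stride."""
    if input_size % output_stride:
        raise ValueError("input size should be divisible by the output stride")
    return input_size // output_stride


def upsampling_stages(output_stride: int, factor: int = 2) -> int:
    """Number of x`factor` stages (e.g. stride-2 deconvolutions) needed to undo the stride."""
    stages, scale = 0, 1
    while scale < output_stride:
        scale *= factor
        stages += 1
    if scale != output_stride:
        raise ValueError("output stride must be a power of the upsampling factor")
    return stages


if __name__ == "__main__":
    for ots in (8, 16):  # assumed, DeepLab-style output strides
        side = feature_map_size(256, ots)
        print(f"OtS={ots}: 256x256 input -> {side}x{side} features, "
              f"{upsampling_stages(ots)} x2 stages to full resolution")
```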

2.3. Accuracy Assessment

2.4. Comparative Methods

- Cross-layer convolutional neural network (CLNet) [18]: CLNet is adapted from U-Net. Its key component is the cross-layer block (CLB), which combines multilevel and multiscale features; CLNet contains two CLBs in the encoder. Notably, the input to CLNet is the concatenation of the multi-date RSIs, i.e., a six-band image.

- Deeply supervised attention metric-based network (DSAMNet) [16]: DSAMNet uses two weight-sharing branches for feature extraction, so it receives the multi-date images separately. It also includes a change decision module (CDM) and a deeply supervised module (DSM). The CDM adopts attention modules to extract discriminative features and produces the output change maps, while the DSM enhances the feature-learning capacity of DSAMNet.

- Deeply supervised image fusion network (IFNet) [14]: IFNet adopts an encoder-decoder structure. Its encoder uses dual branches based on VGG16, and its decoder consists of attention modules with deep supervision. Specifically, the attention modules are embedded to fuse raw convolutional features with difference features.

- ICIFNet [42]: Unlike the previous three methods, ICIFNet contains two asymmetric feature-extraction branches, which employ ResNet-18 [49] and PVT v2-B1 [58], respectively. The dual branches produce four groups of features rich in local and global information. An attention mechanism then fuses the multiscale features, and the output is generated by combining three score maps.
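The comparison above distinguishes two ways of feeding a bi-temporal image pair to a network: early fusion, as in CLNet, where the two three-band images are concatenated into one six-band input, and a Siamese design, as in DSAMNet, where one encoder with shared weights processes each date separately. The sketch below contrasts the two on toy nested-list images; the band-averaging `mean_over_bands` function is a hypothetical stand-in for a real encoder, and all names are illustrative.

```python
from typing import Callable, List, Tuple

Image = List[List[List[float]]]  # indexed as [band][row][col]


def early_fusion_input(img_t1: Image, img_t2: Image) -> Image:
    """Stack two co-registered images along the band axis (e.g. 3 + 3 -> 6 bands)."""
    if (len(img_t1[0]), len(img_t1[0][0])) != (len(img_t2[0]), len(img_t2[0][0])):
        raise ValueError("both dates must have the same spatial size")
    return img_t1 + img_t2


def siamese_features(encoder: Callable[[Image], Image],
                     img_t1: Image, img_t2: Image) -> Tuple[Image, Image]:
    """Run the same encoder (i.e. shared weights) on each date independently."""
    return encoder(img_t1), encoder(img_t2)


def mean_over_bands(img: Image) -> Image:
    """Hypothetical stand-in 'encoder': averages the bands into a single feature band."""
    n, rows, cols = len(img), len(img[0]), len(img[0][0])
    return [[[sum(img[b][r][c] for b in range(n)) / n for c in range(cols)] for r in range(rows)]]


if __name__ == "__main__":
    t1 = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(3)]  # toy 3-band, 2x2 image
    t2 = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(3)]
    print(len(early_fusion_input(t1, t2)), "bands in the early-fusion input")
    f1, f2 = siamese_features(mean_over_bands, t1, t2)
    print(f1, f2)
```

In the early-fusion case the network sees both dates from its first layer; in the Siamese case the per-date features are computed independently and only fused later, which is the role a fusion module such as the MultiTFFM plays.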

3. Results

3.1. Results on LEVIRCD Dataset

3.2. Results on WHUCD Dataset

4. Discussion

4.1. Ablation Study for Siamese Encoder

4.2. Ablation Study for Decoder

4.3. Efficiency Test

4.4. Perspectives

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BuCD | Building change detection |
| RSIs | Remote sensing images |
| MultiTFFM | Multi-temporal feature fusion module |
| LEVIRCD | LEVIR Building Change Detection dataset |
| WHUCD | WHU Building Change Detection dataset |
| DNNs | Deep neural networks |
| CNNs | Convolutional neural networks |
| FCNs | Fully convolutional neural networks |
| FCEFN | Fully convolutional early fusion network |
| FCSiCN | Fully convolutional Siamese concatenation network |
| FCSiDN | Fully convolutional Siamese difference network |
| IFN | Image fusion network |
| SFCCDN | Semantic feature-constrained change detection network |
| LoLeFs | Low-level features |
| MCAN | Multiscale context aggregation network |
| LGFEM | Local and global feature extraction modules |
| ICIFNet | Intra-scale cross-interaction and inter-scale feature fusion network |
| HiLeFs | High-level features |
| ASPP | Atrous spatial pyramid pooling |
| GPU | Graphics processing unit |
| ImgPs | Image pairs |
| InvResB | Inverted residual bottleneck |
| OtS | Output stride |
| CE | Cross entropy |
| LR | Learning rate |
| F1 | F1 score |
| OA | Overall accuracy |
| IoU | Intersection over union |
| CLNet | Cross-layer convolutional neural network |
| CLB | Cross-layer block |
| DSAMNet | Deeply supervised attention metric-based network |
| CDM | Change decision module |
| DSM | Deeply supervised module |
| IFNet | Deeply supervised image fusion network |
| FLOPs | Floating point operations |
| NOPs | Number of parameters |
| ATrT | Average training time |
| ATeT | Average testing time |

Appendix A

- Our method: https://github.com/yanghplab/LightCDNet (accessed on 29 January 2023)

- CLNet: https://skyearth.org/publication/project/CLNet (accessed on 1 December 2022)

- DSAMNet: https://github.com/liumency/DSAMNet (accessed on 1 December 2022)

- IFNet: https://github.com/GeoZcx/A-deeply-supervised-image-fusion-network-for-change-detection-in-remote-sensing-images (accessed on 1 December 2022)

- ICIFNet: https://github.com/ZhengJianwei2/ICIF-Net (accessed on 1 December 2022)

References

1. de Alwis Pitts, D.A.; So, E. Enhanced change detection index for disaster response, recovery assessment and monitoring of buildings and critical facilities: A case study for Muzzaffarabad, Pakistan. Int. J. Appl. Earth Obs. Geoinf. 2017, 63, 167–177.
2. Wang, N.; Li, W.; Tao, R.; Du, Q. Graph-based block-level urban change detection using Sentinel-2 time series. Remote Sens. Environ. 2022, 274, 112993.
3. Jimenez-Sierra, D.A.; Quintero-Olaya, D.A.; Alvear-Muñoz, J.C.; Benítez-Restrepo, H.D.; Florez-Ospina, J.F.; Chanussot, J. Graph Learning Based on Signal Smoothness Representation for Homogeneous and Heterogeneous Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.
4. Reba, M.; Seto, K.C. A systematic review and assessment of algorithms to detect, characterize, and monitor urban land change. Remote Sens. Environ. 2020, 242, 111739.
5. Dong, J.; Zhao, W.; Wang, S. Multiscale Context Aggregation Network for Building Change Detection Using High Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
6. Lei, J.; Gu, Y.; Xie, W.; Li, Y.; Du, Q. Boundary Extraction Constrained Siamese Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.
7. Liu, T.; Gong, M.; Lu, D.; Zhang, Q.; Zheng, H.; Jiang, F.; Zhang, M. Building Change Detection for VHR Remote Sensing Images via Local–Global Pyramid Network and Cross-Task Transfer Learning Strategy. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.
8. Xia, H.; Tian, Y.; Zhang, L.; Li, S. A Deep Siamese PostClassification Fusion Network for Semantic Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.
9. Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.
10. Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change Detection Based on Artificial Intelligence: State-of-the-Art and Challenges. Remote Sens. 2020, 12, 1688.
11. Xiao, P.; Zhang, X.; Wang, D.; Yuan, M.; Feng, X.; Kelly, M. Change detection of built-up land: A framework of combining pixel-based detection and object-based recognition. ISPRS J. Photogramm. Remote Sens. 2016, 119, 402–414.
12. Huang, X.; Zhang, L.; Zhu, T. Building Change Detection from Multitemporal High-Resolution Remotely Sensed Images Based on a Morphological Building Index. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 105–115.
13. Sofina, N.; Ehlers, M. Building Change Detection Using High Resolution Remotely Sensed Data and GIS. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3430–3438.
14. Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.
15. Hu, M.; Lu, M.; Ji, S. Cascaded Deep Neural Networks for Predicting Biases between Building Polygons in Vector Maps and New Remote Sensing Images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021; pp. 4051–4054.
16. Liu, M.; Shi, Q. DSAMNet: A Deeply Supervised Attention Metric Based Network for Change Detection of High-Resolution Images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021; pp. 6159–6162.
17. Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building Change Detection for Remote Sensing Images Using a Dual-Task Constrained Deep Siamese Convolutional Network Model. IEEE Geosci. Remote Sens. Lett. 2021, 18, 811–815.
18. Zheng, Z.; Wan, Y.; Zhang, Y.; Xiang, S.; Peng, D.; Zhang, B. CLNet: Cross-layer convolutional neural network for change detection in optical remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 247–267.
19. Chen, P.; Zhang, B.; Hong, D.; Chen, Z.; Yang, X.; Li, B. FCCDN: Feature constraint network for VHR image change detection. ISPRS J. Photogramm. Remote Sens. 2022, 187, 101–119.
20. Du, P.; Liu, S.; Gamba, P.; Tan, K.; Xia, J. Fusion of Difference Images for Change Detection Over Urban Areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1076–1086.
21. Liu, S.; Du, Q.; Tong, X.; Samat, A.; Bruzzone, L.; Bovolo, F. Multiscale Morphological Compressed Change Vector Analysis for Unsupervised Multiple Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4124–4137.
22. Desclée, B.; Bogaert, P.; Defourny, P. Forest change detection by statistical object-based method. Remote Sens. Environ. 2006, 102, 1–11.
23. Stow, D.; Hamada, Y.; Coulter, L.; Anguelova, Z. Monitoring shrubland habitat changes through object-based change identification with airborne multispectral imagery. Remote Sens. Environ. 2008, 112, 1051–1061.
24. Bouziani, M.; Goïta, K.; He, D.-C. Automatic change detection of buildings in urban environment from very high spatial resolution images using existing geodatabase and prior knowledge. ISPRS J. Photogramm. Remote Sens. 2010, 65, 143–153.
25. Leichtle, T.; Geiß, C.; Wurm, M.; Lakes, T.; Taubenböck, H. Unsupervised change detection in VHR remote sensing imagery: An object-based clustering approach in a dynamic urban environment. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 15–27.
26. Zhang, X.; Xiao, P.; Feng, X.; Yuan, M. Separate segmentation of multi-temporal high-resolution remote sensing images for object-based change detection in urban area. Remote Sens. Environ. 2017, 201, 243–255.
27. Xiao, P.; Yuan, M.; Zhang, X.; Feng, X.; Guo, Y. Cosegmentation for Object-Based Building Change Detection from High-Resolution Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1587–1603.
28. Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building Instance Change Detection from Large-Scale Aerial Images using Convolutional Neural Networks and Simulated Samples. Remote Sens. 2019, 11, 1343.
29. Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.
30. Huang, X.; Cao, Y.; Li, J. An automatic change detection method for monitoring newly constructed building areas using time-series multi-view high-resolution optical satellite images. Remote Sens. Environ. 2020, 244, 111802.
31. Chen, H.; Li, W.; Shi, Z. Adversarial Instance Augmentation for Building Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.
32. Zheng, Z.; Zhong, Y.; Tian, S.; Ma, A.; Zhang, L. ChangeMask: Deep multi-task encoder-transformer-decoder architecture for semantic change detection. ISPRS J. Photogramm. Remote Sens. 2022, 183, 228–239.
33. Bai, B.; Fu, W.; Lu, T.; Li, S. Edge-Guided Recurrent Convolutional Neural Network for Multitemporal Remote Sensing Image Building Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.
34. Yu, B.; Chen, F.; Xu, C. Landslide detection based on contour-based deep learning framework in case of national scale of Nepal in 2015. Comput. Geosci. 2020, 135, 104388.
35. Anniballe, R.; Noto, F.; Scalia, T.; Bignami, C.; Stramondo, S.; Chini, M.; Pierdicca, N. Earthquake damage mapping: An overall assessment of ground surveys and VHR image change detection after L’Aquila 2009 earthquake. Remote Sens. Environ. 2018, 210, 166–178.
36. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.
37. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
38. Daudt, R.C.; Saux, B.L.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067.
39. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015; pp. 234–241.
40. Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 539–546.
41. Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a “Siamese” time delay neural network. In Proceedings of the 6th International Conference on Neural Information Processing Systems, Denver, CO, USA, 29 November–2 December 1993; pp. 737–744.
42. Feng, Y.; Xu, H.; Jiang, J.; Liu, H.; Zheng, J. ICIF-Net: Intra-Scale Cross-Interaction and Inter-Scale Feature Fusion Network for Bitemporal Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.
43. Shen, Q.; Huang, J.; Wang, M.; Tao, S.; Yang, R.; Zhang, X. Semantic feature-constrained multitask siamese network for building change detection in high-spatial-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 189, 78–94.
44. Li, Q.; Zhong, R.; Du, X.; Du, Y. TransUNetCD: A Hybrid Transformer Network for Change Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.
45. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.
46. Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1662.
47. Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.
48. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.
49. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
50. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the European Conference on Computer Vision 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.
51. Hariharan, B.; Arbeláez, P.; Girshick, R.; Malik, J. Hypercolumns for object segmentation and fine-grained localization. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 447–456.
52. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision 2018, Munich, Germany, 8–14 September 2018; pp. 833–851.
53. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.
54. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.
55. Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1520–1528.
56. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.
57. Kingma, D.P.; Ba, L.J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.
58. Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with Pyramid Vision Transformer. Comput. Vis. Media 2022, 8, 415–424.
59. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

| Dataset | Number of Training ImgPs | Number of Validation ImgPs | Number of Testing ImgPs |
|---|---|---|---|
| LEVIRCD | 7120 | 1024 | 2048 |
| WHUCD | 5376 | 768 | 1536 |
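As a quick check on the table above, both datasets are split roughly 7:1:2 into training, validation, and testing image pairs, and WHUCD matches this ratio exactly. The small helper below (its name is illustrative) recomputes the totals and percentages from the listed counts.

```python
SPLITS = {"LEVIRCD": (7120, 1024, 2048), "WHUCD": (5376, 768, 1536)}


def split_percentages(train: int, val: int, test: int) -> tuple:
    """Total image pairs and the train/val/test shares in percent (1 decimal)."""
    total = train + val + test
    return total, tuple(round(100 * n / total, 1) for n in (train, val, test))


if __name__ == "__main__":
    for name, counts in SPLITS.items():
        total, shares = split_percentages(*counts)
        print(f"{name}: {total} ImgPs, train/val/test = {shares} %")
```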

| Methods | Precision (%) | Recall (%) | F1 (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|
| CLNet | 83.2 | 75.4 | 79.1 | 65.4 | 98.0 |
| DSAMNet | 80.0 | 90.6 | 85.0 | 73.9 | 98.4 |
| IFNet | 84.7 | 85.5 | 85.1 | 74.0 | 98.5 |
| ICIFNet | 89.6 | 84.3 | 86.8 | 76.8 | 98.7 |
| Ours | 91.3 | 88.0 | 89.6 | 81.2 | 99.0 |
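The accuracy metrics in the table above are conventionally defined for the changed class as Precision = TP/(TP + FP), Recall = TP/(TP + FN), F1 = 2PR/(P + R), IoU = TP/(TP + FP + FN), and OA = (TP + TN)/(TP + FP + FN + TN); the paper's exact aggregation (for example, counts accumulated over the whole test set) is an assumption here. Because F1 and IoU are built from the same counts, IoU = F1/(2 - F1), which allows a cross-check of the table: for the row "Ours", P = 91.3% and R = 88.0% give F1 ≈ 89.6% and IoU ≈ 81.2%, as reported. Since P and R are rounded to one decimal, recomputed values for other rows can differ by about 0.1 point. Function names below are illustrative.

```python
def metrics_from_counts(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, F1, IoU and OA for the 'changed' class from pixel counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    oa = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou, "oa": oa}


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1: float) -> float:
    """IoU = F1 / (2 - F1), exact when both come from the same TP/FP/FN counts."""
    return f1 / (2 - f1)


if __name__ == "__main__":
    f1 = f1_from_pr(0.913, 0.880)  # row "Ours" in the table above
    print(f"F1 = {100 * f1:.1f}%, IoU = {100 * iou_from_f1(f1):.1f}%")
```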

| Methods | Precision (%) | Recall (%) | F1 (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|
| CLNet | 88.8 | 61.6 | 72.7 | 57.1 | 98.0 |
| DSAMNet | 79.0 | 91.9 | 85.0 | 73.9 | 98.6 |
| IFNet | 94.9 | 77.0 | 85.0 | 74.0 | 98.8 |
| ICIFNet | 88.0 | 74.8 | 80.9 | 67.9 | 98.5 |
| Ours | 92.0 | 91.0 | 91.5 | 84.3 | 99.3 |

| Siamese Encoder | Precision (%) | Recall (%) | F1 (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|
| ResNet-50 | 90.2 | 88.6 | 89.4 | 80.8 | 99.1 |
| Xception | 89.5 | 78.7 | 83.7 | 72.0 | 98.7 |
| Ours (MobileNetV2) | 92.0 | 91.0 | 91.5 | 84.3 | 99.3 |

| Decoder | Precision (%) | Recall (%) | F1 (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|
| Ours (Deconvolution) | 92.0 | 91.0 | 91.5 | 84.3 | 99.3 |
| Upsampling | 91.0 | 89.3 | 90.1 | 82.0 | 99.2 |

| Methods | FLOPs (M) | NOPs (M) | ATeT (s/Image) | ATrT (s/Epoch) |
|---|---|---|---|---|
| CLNet | 16.202 | 8.103 | 0.031 | 204.12 |
| DSAMNet | 301,161.792 | 16.951 | 0.045 | 842.20 |
| IFNet | 329,055.161 | 50.442 | 0.060 | 604.36 |
| ICIFNet | 101,474.886 | 23.828 | 0.129 | 1041.75 |
| Siamese Encoder (ResNet50) | 283,461.036 | 155.725 | 0.077 | 738.29 |
| Siamese Encoder (Xception) | 289,171.335 | 168.750 | 0.118 | 725.05 |
| Decoder (Upsampling) | 48,801.779 | 10.405 | 0.052 | 294.18 |
| Ours | 85,410.156 | 10.754 | 0.056 | 303.57 |
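To make the efficiency table easier to read, the helper below expresses NOPs and ATeT as the ratio of LightCDNet's value ("Ours") to each method's value, so a ratio below 1 means LightCDNet is smaller or faster. The values are copied from the table; the FLOPs column is left out here, and the helper name is illustrative. By this measure LightCDNet has about 21% of IFNet's parameters and about 7% of those of the ResNet-50 encoder variant, while CLNet remains smaller still.

```python
NOPS_M = {
    "CLNet": 8.103, "DSAMNet": 16.951, "IFNet": 50.442, "ICIFNet": 23.828,
    "Siamese Encoder (ResNet50)": 155.725, "Siamese Encoder (Xception)": 168.750,
    "Decoder (Upsampling)": 10.405, "Ours": 10.754,
}
ATET_S = {
    "CLNet": 0.031, "DSAMNet": 0.045, "IFNet": 0.060, "ICIFNet": 0.129,
    "Siamese Encoder (ResNet50)": 0.077, "Siamese Encoder (Xception)": 0.118,
    "Decoder (Upsampling)": 0.052, "Ours": 0.056,
}


def ratio_to_reference(values: dict, reference: str = "Ours", ndigits: int = 3) -> dict:
    """Reference value divided by each method's value (below 1: reference is smaller/faster)."""
    ref = values[reference]
    return {name: round(ref / v, ndigits) for name, v in values.items()}


if __name__ == "__main__":
    nops, atet = ratio_to_reference(NOPS_M), ratio_to_reference(ATET_S)
    for name in NOPS_M:
        print(f"{name:28s} NOPs ratio {nops[name]:.3f}  ATeT ratio {atet[name]:.3f}")
```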

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, H.; Chen, Y.; Wu, W.; Pu, S.; Wu, X.; Wan, Q.; Dong, W. A Lightweight Siamese Neural Network for Building Change Detection Using Remote Sensing Images. Remote Sens. 2023, 15, 928. https://doi.org/10.3390/rs15040928