A Lightweight Position-Enhanced Anchor-Free Algorithm for SAR Ship Detection

Abstract

1. Introduction

- (1) Existing SAR image detection algorithms are mainly anchor-based. However, setting anchor hyperparameters relies heavily on human experience, and generating anchors greatly reduces model training speed. In addition, anchor-based detection algorithms mostly focus on capturing target edge information, while the unclear contours in SAR images, especially for small- and medium-sized SAR targets, greatly limit their detection efficiency.

- (2) To further improve accuracy, most existing work indiscriminately adds model structures and tricks. This results in a large number of model parameters, slow inference and low efficiency in practical applications, which hinders deployment on mobile devices and greatly reduces the practicality of the model.

- (3) Existing work does not consider the scattering characteristics of SAR images or the unclear target contours. As a result, the algorithms cannot adequately suppress background clutter and emphasize the salient information of the target, which greatly reduces model performance.
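To make the anchor overhead in point (1) concrete, the following minimal sketch counts the candidate boxes a YOLO-style head has to score per image with and without anchors. The input size (640), the strides (8, 16, 32) and the three anchors per grid cell are illustrative assumptions typical of YOLO-family detectors; they are not values reported in this paper, and the function names are hypothetical.

```python
def grid_cells(input_size, strides):
    """Total number of grid cells over all output scales."""
    return sum((input_size // s) ** 2 for s in strides)


def candidate_boxes(input_size, strides, boxes_per_cell):
    """Number of boxes the detection head predicts for one image."""
    return grid_cells(input_size, strides) * boxes_per_cell


# Assumed settings (not from the paper): 640 x 640 input, three output scales.
INPUT_SIZE = 640
STRIDES = (8, 16, 32)

anchor_based = candidate_boxes(INPUT_SIZE, STRIDES, boxes_per_cell=3)
anchor_free = candidate_boxes(INPUT_SIZE, STRIDES, boxes_per_cell=1)

print(anchor_based, anchor_free)  # 25200 8400
```

Under these assumptions, an anchor-free head predicts one box per location instead of three, and no anchor sizes or aspect ratios need to be tuned by hand.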

- (1)

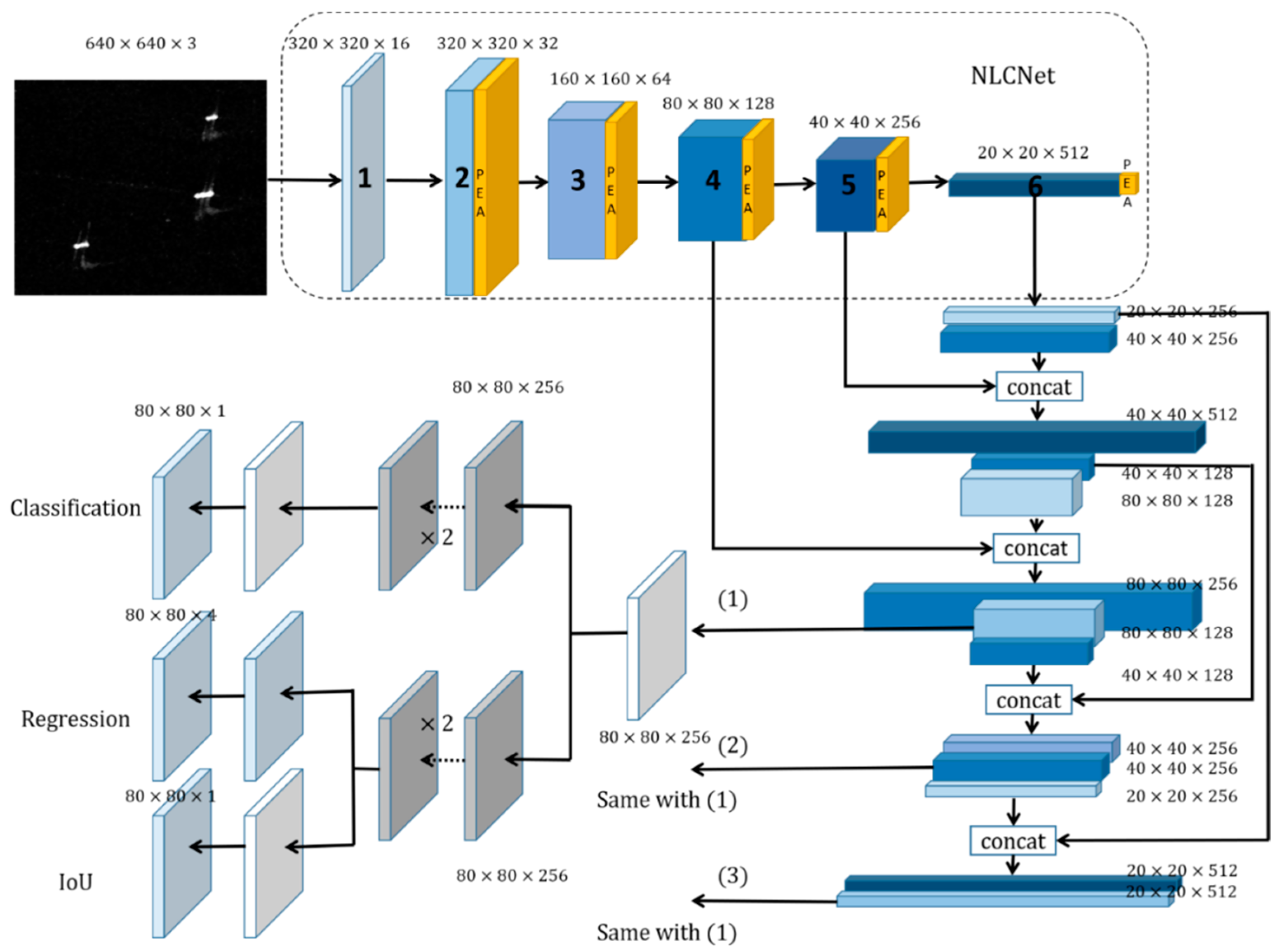

- To solve the problems that occur because anchor-based detection algorithms are highly dependent on design frameworks based on expert experience and the difficulties that occur in solving problems such as unclear contour information and complex backgrounds of SAR image ship targets, we introduced an anchor-free target detection algorithm. We introduced the latest YOLOX as the base network and, inspired by the latest lightweight backbone, LCNet [16], replaced the backbone Darknet-53 with LCNet and then optimized the design according to the SAR target characteristics.

- (2) To balance speed and model complexity, we constructed a new lightweight backbone called NLCNet through the design of depthwise separable convolutional modules and a new structural arrangement of multiple modules. Experiments show that the proposed lightweight backbone greatly improves inference speed and detection accuracy.

- (3)

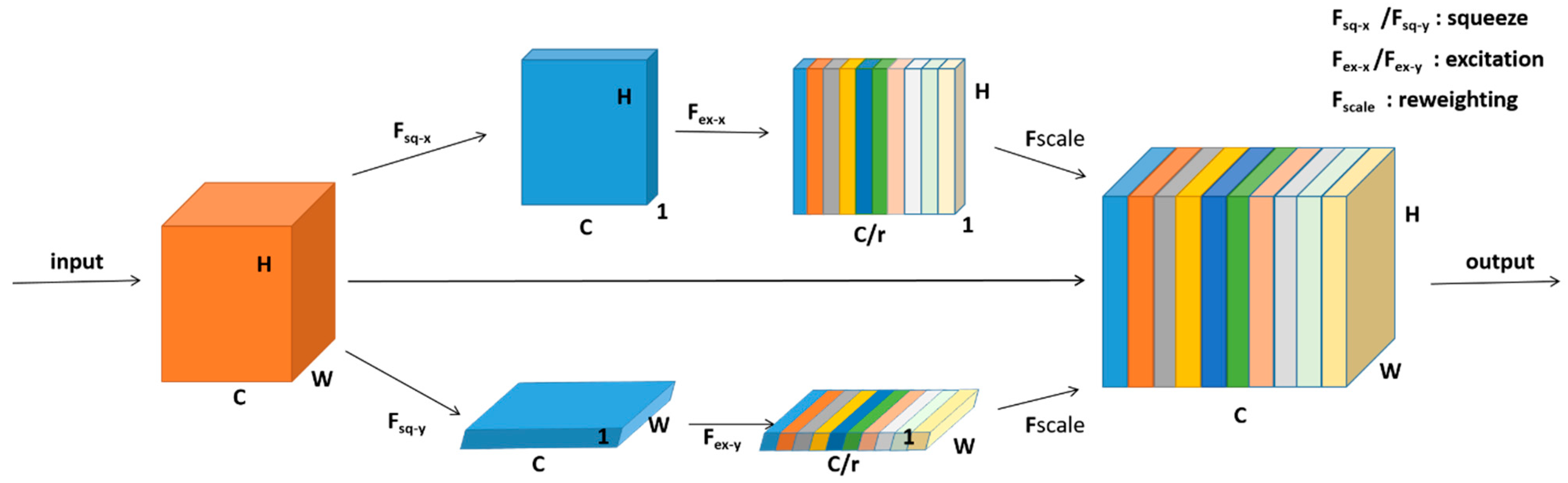

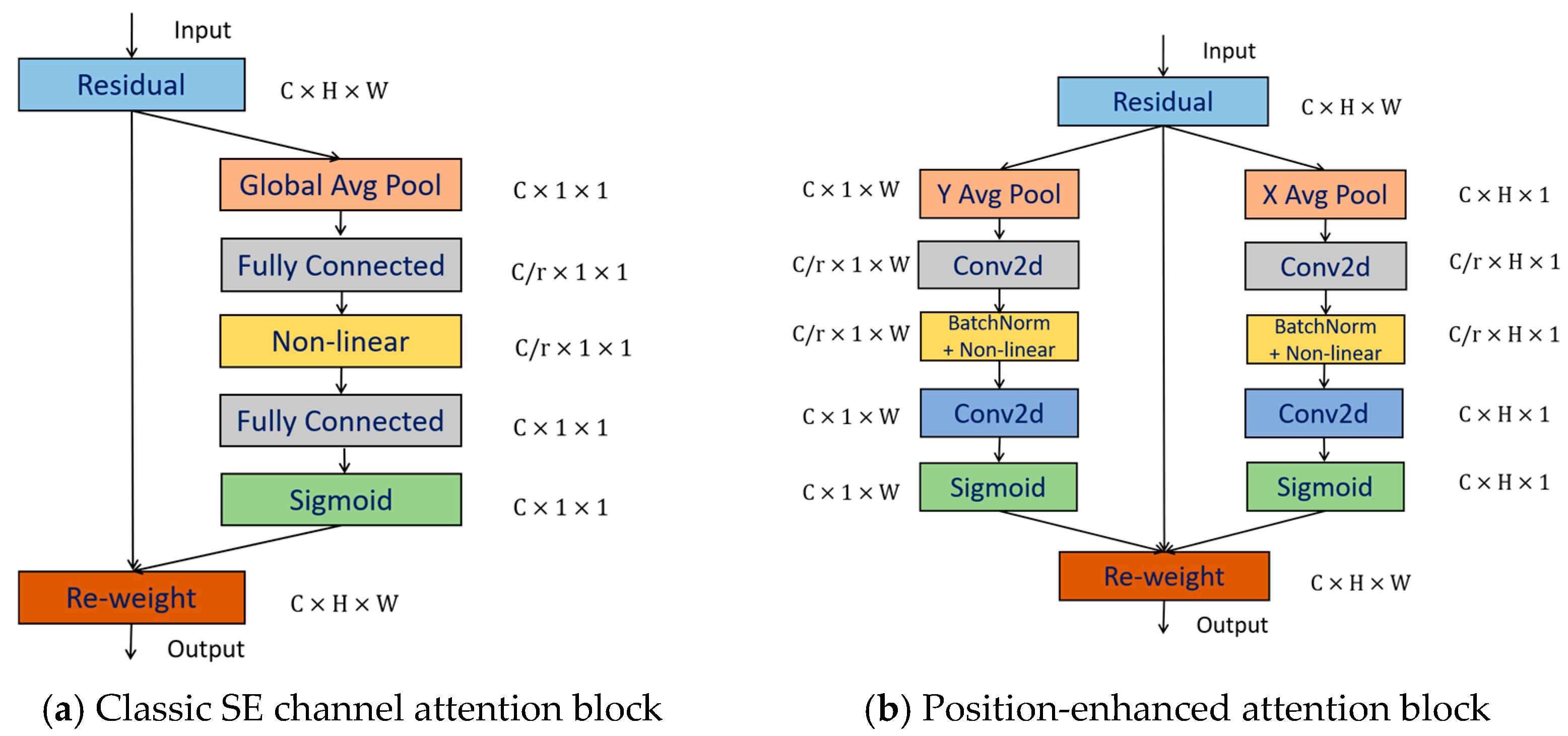

- In order to improve the SAR target localization ability against complex backgrounds, inspired by coordinate attention [17], we designed a position-enhanced attention strategy. The strategy is to add target position awareness information to guide attention to better highlight the target area, effectively suppress the problem of insufficient feature extraction caused by SAR target strong scattering and better detect targets against complex backgrounds, thereby improving detection accuracy.
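The idea behind coordinate attention [17], which contribution (3) builds on, can be illustrated with a heavily simplified sketch: the feature map is pooled separately along the width and along the height, and the two direction-aware descriptors gate each position. This is not the position-enhanced attention proposed here, and it omits the learned convolutions of coordinate attention; the function name `directional_attention` and the toy feature map are assumptions made for illustration only.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def directional_attention(feature):
    """Gate a single-channel H x W map with row-wise and column-wise descriptors.

    Simplified illustration: real coordinate attention passes the pooled
    descriptors through learned 1 x 1 convolutions before the sigmoid.
    """
    h, w = len(feature), len(feature[0])
    # Pool along the width: one descriptor per row (vertical position).
    row_desc = [sum(row) / w for row in feature]
    # Pool along the height: one descriptor per column (horizontal position).
    col_desc = [sum(feature[i][j] for i in range(h)) / h for j in range(w)]
    row_gate = [sigmoid(v) for v in row_desc]
    col_gate = [sigmoid(v) for v in col_desc]
    return [
        [feature[i][j] * row_gate[i] * col_gate[j] for j in range(w)]
        for i in range(h)
    ]


# Toy map: uniform clutter of 1.0 with one strong scatterer at row 1, column 2.
feature = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 1.0, 9.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
]
out = directional_attention(feature)

contrast_before = feature[1][2] / feature[0][0]
contrast_after = out[1][2] / out[0][0]
print(contrast_before < contrast_after)  # True
```

Because the gates depend on the row and column of each position, the response at the target location is attenuated less than the surrounding clutter, which is the position-sensitive behavior that motivates this family of attention modules.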

2. Related Work

- (1) Traditional SAR target detection algorithms. Ai et al. [18] proposed a joint CFAR detection algorithm based on gray correlation that exploits the strong correlation between adjacent pixels inside targets in SAR images. The CFAR algorithm considers only gray contrast and ignores target structure information, which leads to poor robustness and anti-interference ability and to poor detection performance under complex background clutter. Kaplan [19] used the extended fractal (EF) feature to detect vehicle targets in SAR images. This feature is sensitive not only to the contrast between the target and the background but also to the target size. Compared with the CFAR algorithm, it reduces the false alarm rate. Charalampidis and Kasparis [20] proposed the wavelet fractal (WF) feature, which can effectively segment and classify different textures in images.
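As a point of reference for the CFAR discussion above, the sketch below implements a generic one-dimensional cell-averaging CFAR detector. It is not the joint, gray-correlation-based detector of Ai et al. [18]; the window sizes, the threshold scale and the toy intensity profile are assumed values chosen only to show that the decision depends purely on intensity contrast with the local background.

```python
def ca_cfar(signal, num_train=4, num_guard=1, scale=3.0):
    """Cell-averaging CFAR on a 1-D intensity profile.

    For each cell under test, the local clutter level is the mean of
    `num_train` training cells on each side, skipping `num_guard` guard
    cells next to the cell under test. A detection is declared when the
    cell exceeds `scale` times that clutter level.
    """
    half = num_train + num_guard
    detections = []
    for i in range(half, len(signal) - half):
        left = signal[i - half : i - num_guard]
        right = signal[i + num_guard + 1 : i + half + 1]
        clutter = (sum(left) + sum(right)) / (len(left) + len(right))
        if signal[i] > scale * clutter:
            detections.append(i)
    return detections


# Toy profile (assumed data): clutter around 1.0 with a bright target at index 8.
profile = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.1, 0.9,
           10.0, 1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.0]

print(ca_cfar(profile))  # [8]
```

The detector never looks at the shape or structure of the target, only at how much brighter a cell is than its neighborhood, which is why strong clutter can easily degrade it.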

- (2) Common SAR image backbone networks based on deep learning. VGG [21] shows that a deeper network can be formed by stacking modules of the same dimension and that, for a given receptive field, stacking small convolution kernels is preferable to using a single large convolution kernel. GoogLeNet [22] adopts a modular structure (the inception structure) to enrich network receptive fields with convolution kernels of different sizes. ShuffleNetV1 [23] and ShuffleNetV2 [24] adopt two core operations, pointwise group convolution and channel shuffling, and exchange information through channel shuffling. GhostNet [25] divides the original convolution layer into two parts: a traditional convolution is first applied to the input to generate feature maps, these feature maps are then transformed using linear operations, and all the features are merged to obtain the final result. DarkNet-53 uses no pooling layers or fully connected layers and reduces the feature map size by increasing the stride of the convolution kernels. Using the idea of feature pyramid networks (FPNs), it outputs feature layers at three scales: 13 × 13, 26 × 26 and 52 × 52. Among them, 13 × 13 is suitable for detecting large targets and 52 × 52 is suitable for detecting small targets. Although the above backbone networks greatly improve detection accuracy, they also introduce a large number of parameters and their detection speed is relatively slow. MobileNetV1 [26] constructs a network from depthwise separable convolution, which consists of two steps: depthwise convolution and pointwise convolution. MobileNetV2 [27] introduces a residual structure on top of MobileNetV1 that first raises and then reduces the dimension. Although the model is lightweight, it is suitable only for large models and provides no significant improvement in accuracy in small networks.

Targets in remote sensing images are densely distributed, and it is difficult to distinguish target contours from the background environment. To address these difficulties, a new algorithm [28] was proposed that can also be used for video target recognition. It mainly uses a visual saliency mechanism to extract targets from the region of interest, and experiments demonstrate its effectiveness. In addition to SAR image target detection, research on images captured by UAVs should continue to advance, because target detection in UAV images has broad application prospects. UAV image target detection is the subject of [29], which combines deep learning target detection with existing template matching and proposes a parallel ensemble deep learning algorithm for multi-target detection.
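The saving obtained from the depthwise separable convolution described for MobileNetV1 [26] can be checked with a short parameter count. The sketch below compares a standard convolution with its depthwise-plus-pointwise factorization; the channel counts and kernel size are arbitrary illustrative values, biases are ignored, and the function names are hypothetical.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Assumed layer shape, chosen only for illustration.
C_IN, C_OUT, K = 64, 128, 3

standard = standard_conv_params(C_IN, C_OUT, K)
separable = depthwise_separable_params(C_IN, C_OUT, K)

print(standard, separable)              # 73728 8768
print(round(separable / standard, 3))   # 0.119
```

The ratio equals 1/c_out + 1/k², so for 3 × 3 kernels the factorized layer needs roughly one ninth of the weights once the number of output channels is large.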

- (3) SAR image detection algorithms based on deep learning. Jiao et al. [30] observed that the multi-scale nature of ship targets in SAR images and the background complexity of offshore ship targets are not conducive to monitoring, and proposed an improved model based on the Faster R-CNN framework. They also proposed a new training strategy so that training focuses less on simple targets, which makes the model better suited to detecting ship targets against complex backgrounds in SAR images, improves detection performance and addresses the problem of multiple scales and multiple scenes. Chen et al. [31] focused on ships that are hard to distinguish on land and on densely arranged ships at sea, and combined a model with an attention mechanism to address these two problems, which are frequently encountered in ship target detection. The attention mechanism helps locate the ship targets to be detected. In addition, the loss function is improved by introducing generalized cross loss, and soft non-maximum suppression is used in the model, so that densely arranged ship targets are handled better and detection performance improves. Cui et al. [32] considered the multi-scale problem of ship targets in SAR images and used a densely connected pyramid structure in their model. A convolutional block attention module was also used to refine the feature maps, highlight salient features and suppress fuzzy features, effectively improving the accuracy of ship target detection in SAR images. Although the above algorithms generally achieve high detection accuracy, their models are large, their inference is slow and they do not take the characteristics of SAR images into account, which greatly limits their performance. Wan et al. [14] proposed an anchor-free SAR ship detection algorithm whose backbone is the more lightweight MobileNetV2S network, and further improved the neck and head to optimize overall model performance. However, their improved strategy did not fully consider the characteristics of SAR targets against complex backgrounds, which is an issue worthy of further exploration.
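Soft non-maximum suppression, mentioned above in connection with densely arranged ships, lowers the scores of overlapping boxes instead of deleting them. The following is a minimal sketch of the Gaussian variant under assumed settings; it is not the implementation used in [31], and the boxes, scores, `sigma` and `score_threshold` values are illustrative assumptions.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian soft-NMS: decay the scores of overlapping boxes, do not drop them."""
    candidates = list(zip(boxes, scores))
    kept = []
    while candidates:
        best = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_box, best_score = candidates.pop(best)
        kept.append((best_box, best_score))
        rescored = []
        for box, score in candidates:
            # The larger the overlap with the selected box, the stronger the decay.
            score *= math.exp(-(iou(best_box, box) ** 2) / sigma)
            if score >= score_threshold:
                rescored.append((box, score))
        candidates = rescored
    return kept


# Two heavily overlapping boxes (e.g., ships moored side by side) and one isolated box.
boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]

for box, score in soft_nms(boxes, scores):
    print(box, round(score, 2))
```

With hard NMS and a typical IoU threshold of 0.5, the second box would be discarded outright; here it survives with a reduced score, which is why the technique helps when neighboring ships produce genuinely overlapping detections.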

3. Methods

3.1. Benchmark Network

3.2. Lightweight Feature Enhancement Backbone: NLCNet

3.2.1. Discarding of the Squeeze-and-Excitation Networks (SE) Module

3.2.2. Design of a Lightweight Convolution Block

3.3. Position-Enhanced Attention

4. Experiments

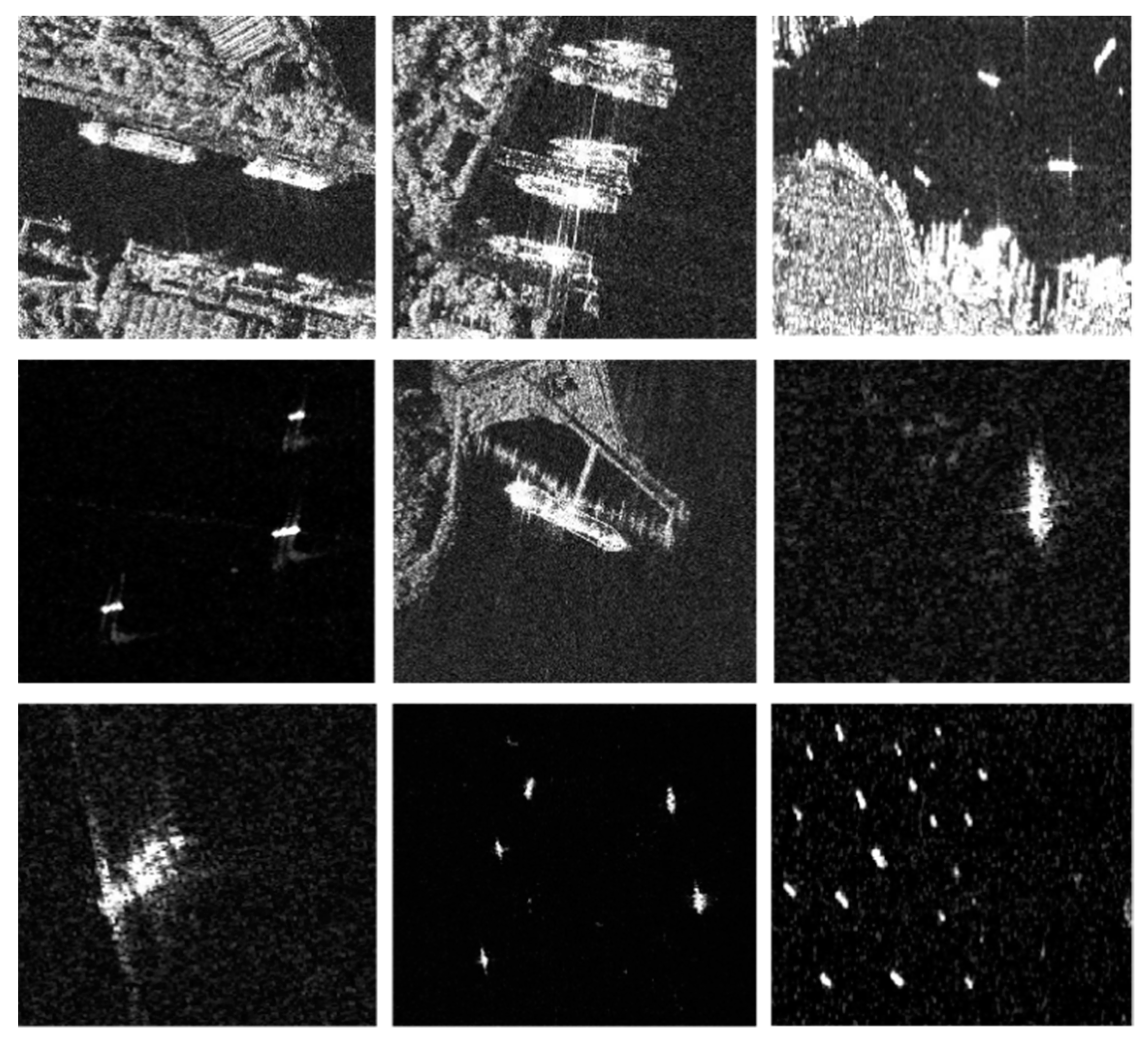

4.1. Dataset and Experimental Settings

4.2. Evaluation Indicators

4.3. Experimental Results and Analysis

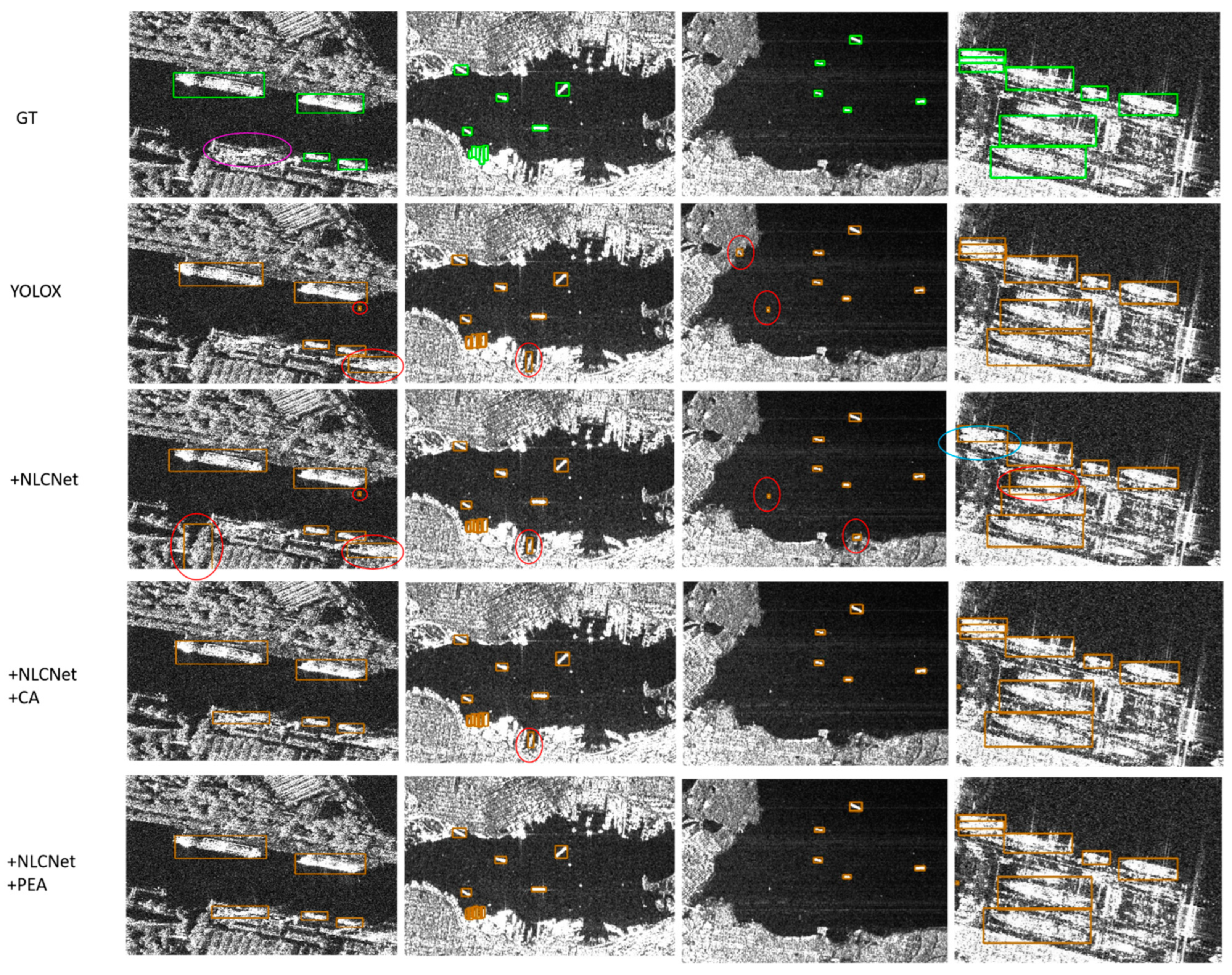

4.3.1. Ablation Experiments on SSDD Datasets

Influence of the NLCNet Backbone Network on the Experimental Results

Influence of Position-Enhanced Attention on Experimental Results

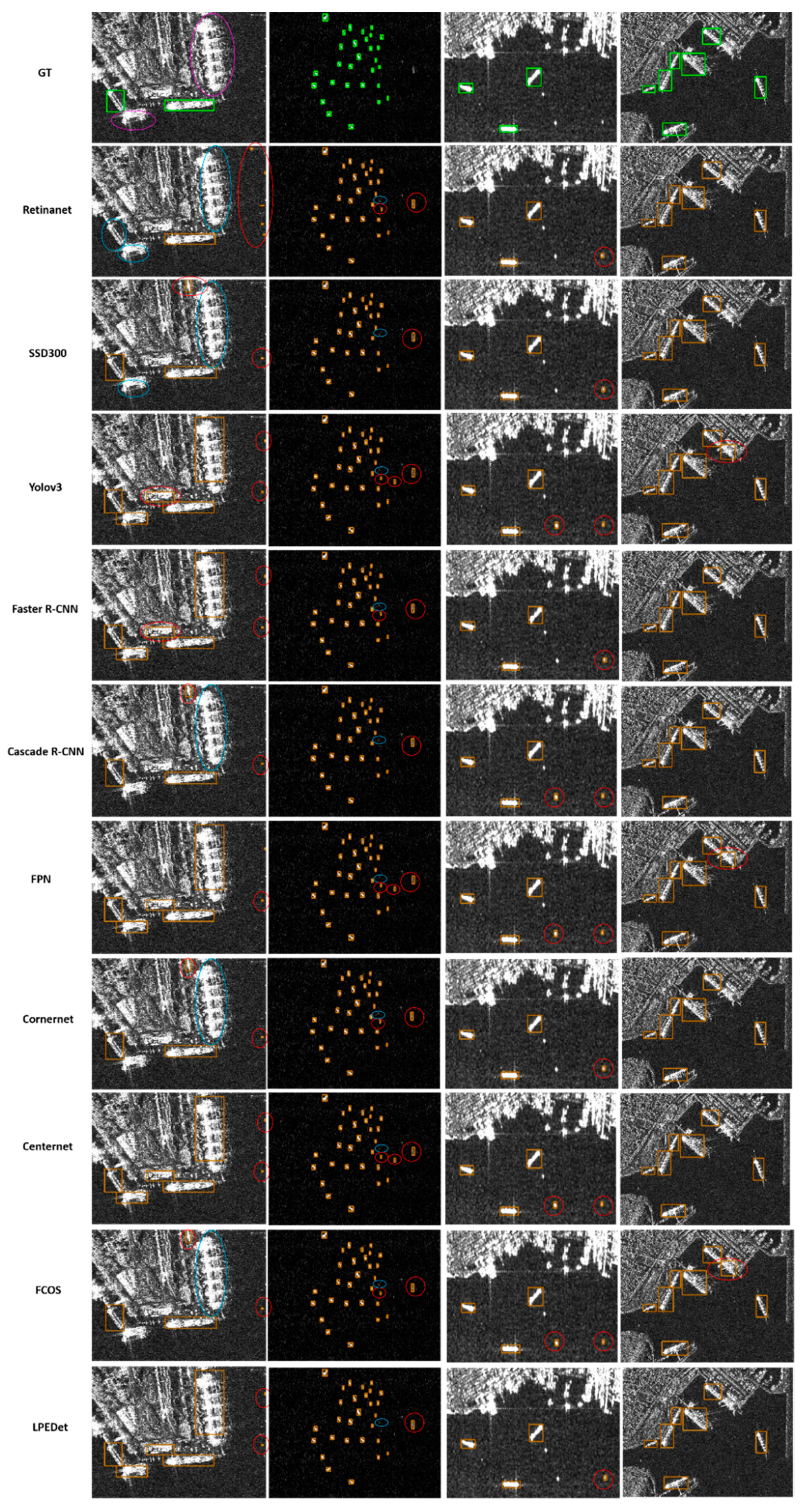

4.3.2. Comparison with the Latest Target Detection Methods Using SSDD Datasets

4.4. Comparison with the Latest SAR Ship Detection Methods Using SSDD Datasets

4.5. Comparison with the Latest SAR Ship Detection Methods Using HRSID Datasets

4.6. The Effect of the Number of Training Sets on Detection Performance

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Robey, F.C.; Fuhrmann, D.R.; Kelly, E.J. A CFAR adaptive matched filter detector. IEEE Trans. Aerosp. Electron. Syst. 1992, 28, 208–216.

- Huang, Y.; Liu, F. Detecting cars in VHR SAR images via semantic CFAR algorithm. IEEE Geosci. Remote Sens. Lett. 2016, 13, 801–805.

- Stein, G.W.; Zelnio, E.G.; Garber, F.D. Target detection using an improved fractal scheme. Proc. SPIE—Int. Soc. Opt. Eng. 2006, 6237, 19.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S.; Wang, J.; Li, J.; Su, H.; Zhou, Y. Balance scene learning mechanism for offshore and inshore ship detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Zhang, T.; Zhang, X. ShipDeNet-20: An only 20 convolution layers and <1-MB lightweight SAR ship detector. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1234–1238.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. HyperLi-Net: A hyper-light deep learning network for high-accurate and high-speed ship detection from synthetic aperture radar imagery. ISPRS J. Photogramm. Remote Sens. 2020, 167, 123–153.

- Tz, A.; Xz, A.; Chang, L.B.; Js, A.; Sw, A.; Ia, C.; Xu, Z.A.; Yue, Z.D.; Dp, E.; Jl, F. Balance learning for ship detection from synthetic aperture radar remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 182, 190–207.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise separable convolution neural network for high-speed SAR ship detection. Remote Sens. 2019, 11, 2483.

- Zhang, T.; Zhang, X. High-speed ship detection in SAR images based on a grid convolutional neural network. Remote Sens. 2019, 11, 1206.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 318–327.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- Wan, H.Y.; Chen, J.; Huang, Z.X.; Xia, R.F.; Wu, B.C.; Sun, L.; Yao, B.D.; Liu, X.P.; Xing, M.D. AFSar: An anchor-free SAR target detection algorithm based on multiscale enhancement representation learning. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

- Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 14 September 2018; pp. 385–400.

- Cui, C.; Gao, T.; Wei, S.; Du, Y.; Guo, R.; Dong, S.; Lu, B.; Zhou, Y.; Lv, X.; Liu, Q. PP-LCNet: A lightweight CPU convolutional neural network. arXiv 2021, arXiv:2109.15099.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13713–13722.

- Ai, J.; Yang, X.; Yan, H. A local Cfar detector based on gray Intensity correlation in Sar imagery. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 697–700.

- Kaplan, L.M. Improved SAR target detection via extended fractal features. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 436–451.

- Charalampidis, D.; Kasparis, T. Wavelet-based rotational invariant roughness features for texture classification and segmentation. IEEE Trans. Image Process. 2002, 11, 825–837.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 116–131.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Sun, L.; Chen, J.; Feng, D.; Xing, M. The recognition framework of deep kernel learning for enclosed remote sensing objects. IEEE Access 2021, 9, 95585–95596.

- Sun, L.; Chen, J.; Feng, D.; Xing, M. Parallel ensemble deep learning for real-time remote sensing video multi-Target detection. Remote Sens. 2021, 13, 4377.

- Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A densely connected end-to-end neural network for multiscale and multiscene SAR ship detection. IEEE Access 2018, 6, 20881–20892.

- Chen, C.; He, C.; Hu, C.; Pei, H.; Jiao, L. A deep neural network based on an attention mechanism for SAR ship detection in multiscale and complex scenarios. IEEE Access 2019, 7, 104848–104863.

- Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense attention pyramid networks for multi-scale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8983–8997.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H. Sar ship detection dataset (ssdd): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2016, 39, 1137–1149.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 6569–6578.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 9627–9636.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An anchor-free method based on feature balancing and refinement network for multiscale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1331–1344.

- Guo, H.; Yang, X.; Wang, N.; Gao, X. A CenterNet++ model for ship detection in SAR images. Pattern Recognit. 2021, 112, 107787.

- Nie, J.; Anwer, R.M.; Cholakkal, H.; Khan, F.S.; Pang, Y.; Shao, L. Enriched feature guided refinement network for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 9537–9546.

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra r-cnn: Towards balanced learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 821–830.

- Gao, S.; Liu, J.; Miao, Y.; He, Z. A High-Effective Implementation of Ship Detector for SAR Images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Zhou, X.; Koltun, V.; Krähenbühl, P. Probabilistic two-stage detection. arXiv 2021, arXiv:2103.07461.

- Mukherjee, S.; Valenzise, G.; Cheng, I. Potential of deep features for opinion-unaware, distortion-unaware, no-reference image quality assessment. In International Conference on Smart Multimedia; Springer International Publishing: Cham, Switzerland, 2019; pp. 87–95.

| Operator | Kernel Size | Stride | Input | Output | PEA |
|---|---|---|---|---|---|
| Conv2D | | 2 | | | - |
| DepthSepConv | | 1 | | | √ |
| DepthSepConv | | 2 | | | √ |
| DepthSepConv | | 1 | | | √ |
| DepthSepConv | | 2 | | | √ |
| DepthSepConv | | 1 | | | √ |
| DepthSepConv | | 2 | | | √ |
| 5 × DepthSepConv | | 1 | | | √ |
| DepthSepConv | | 2 | | | √ |
| DepthSepConv | | 1 | | | √ |

| NoS | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NoI | 725 | 183 | 89 | 47 | 45 | 16 | 15 | 8 | 4 | 11 | 5 | 3 | 3 | 0 |

| Model | Backbone | Attention | mAP (%) | FLOPs (GMac) | Params (M) | Average Inference Time (ms) |
|---|---|---|---|---|---|---|
| YOLOX | Darknet-53 | - | 96.2 | 26.64 | 8.94 | 8.20 |
| YOLOX | NLCNet | - | 96.8 | 18.27 | 5.58 | 5.27 |
| YOLOX | NLCNet | Coordinate attention | 97.1 | 18.38 | 5.68 | 7.01 |
| YOLOX | NLCNet | Position-enhanced attention | 97.4 | 18.38 | 5.68 | 7.01 |
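The ablation figures above can be turned into relative savings with a few lines of arithmetic. The sketch below uses only the first row (YOLOX with Darknet-53) and the last row (NLCNet with position-enhanced attention) of the table; the dictionary keys and the helper `relative_reduction` are hypothetical names introduced for this illustration.

```python
# Values copied from the ablation table: baseline YOLOX vs. the full model.
baseline = {"map": 96.2, "flops_gmac": 26.64, "params_m": 8.94, "time_ms": 8.20}
proposed = {"map": 97.4, "flops_gmac": 18.38, "params_m": 5.68, "time_ms": 7.01}


def relative_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return 100.0 * (before - after) / before


for key in ("flops_gmac", "params_m", "time_ms"):
    saving = relative_reduction(baseline[key], proposed[key])
    print(f"{key}: {saving:.1f}% lower")
# flops_gmac: 31.0% lower
# params_m: 36.5% lower
# time_ms: 14.5% lower

map_gain = proposed["map"] - baseline["map"]
print(f"mAP gain: {map_gain:.1f} points")  # mAP gain: 1.2 points
```

Read this way, the table shows roughly a third fewer FLOPs and parameters together with a 1.2-point mAP gain, while the attention module gives back part of the inference-time saving obtained from the backbone alone (5.27 ms versus 7.01 ms).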

| Type | Method | mAP (%) | FLOPs (GMac) | Params (M) | Average Inference Time (ms) |
|---|---|---|---|---|---|
| One-stage | RetinaNet [10] | 91.2 | 81.69 | 36.1 | 44.10 |
| One-stage | SSD300 [11] | 93.1 | 154.45 | 34.31 | 53.62 |
| One-stage | YOLOv3 [12] | 96.2 | 77.54 | 61.52 | 45.81 |
| One-stage | YOLOX | 96.2 | 26.64 | 8.94 | 8.20 |
| One-stage | YOLOv5 | 97.0 | 16.54 | 7.23 | 8.61 |
| One-stage | AFSar [14] | 96.7 | 8.66 | - | - |
| Two-stage | Faster R-CNN [36] | 96.4 | 91.41 | 41.12 | 45.36 |
| Two-stage | Cascade R-CNN [37] | 96.8 | 119.05 | 69.17 | 65.56 |
| Two-stage | FPN [38] | 96.5 | 71.65 | 63.56 | 78.30 |
| Anchor-free | CornerNet [39] | 94.7 | 707.75 | 201.04 | 95.61 |
| Anchor-free | CenterNet [40] | 95.1 | 20.4 | 14.21 | 31.54 |
| Anchor-free | FCOS [41] | 95.3 | 78.63 | 60.97 | 48.67 |
| | LPEDet | 97.4 | 18.38 | 5.68 | 7.01 |

| Methods | mAP (%) | FLOPs (GMac) | Params (M) | Average Inference Time (ms) |
|---|---|---|---|---|
| DCMSNN [30] | 89.43 | - | 41.1 | 46.2 |
| NNAM [31] | 79.8 | - | - | 28 |
| FBR-Net [42] | 94.10 | - | 32.5 | 40.1 |
| CenterNet++ [43] | 95.1 | - | - | 33 |
| EFGRNet [44] | 91.1 | - | - | 33 |
| Libra R-CNN [45] | 88.7 | - | - | 57 |
| DAPN [32] | 89.8 | - | - | 41 |
| LPEDet | 97.4 | 18.38 | 5.68 | 7.01 |

| Method | AP | AP50 | AP75 | APS | APM | APL | FLOPs (GMac) | Params (M) | Average Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv3 [12] | 50.9 | 85.0 | 53.1 | 51.0 | 56.1 | 25.5 | 121.15 | 61.52 | 136 |
| SAR-net [46] | - | 84.7 | - | - | - | - | 104.2 | 42.6 | 118 |
| CenterNet2 [47] | 64.5 | 89.5 | 73.0 | 64.7 | 69.1 | 48.3 | - | 71.6 | - |
| RetinaNet [10] | 59.8 | 84.8 | 67.2 | 60.4 | 62.7 | 26.5 | 127.91 | 36.3 | 122 |
| YOLOX [13] | 61.4 | 87.2 | 68.9 | 63.0 | 57.0 | 21.8 | 26.64 | 8.94 | 8.20 |
| LPEDet | 64.4 | 89.7 | 71.8 | 65.8 | 63.4 | 24.2 | 18.38 | 5.68 | 7.01 |

| Method | Training Data Ratio | mAP (%) |
|---|---|---|
| LPEDet | 33% | 94.6 |
| LPEDet | 66% | 96.8 |
| LPEDet | 80% | 97.4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feng, Y.; Chen, J.; Huang, Z.; Wan, H.; Xia, R.; Wu, B.; Sun, L.; Xing, M. A Lightweight Position-Enhanced Anchor-Free Algorithm for SAR Ship Detection. Remote Sens. 2022, 14, 1908. https://doi.org/10.3390/rs14081908