Abstract

Hyperspectral anomaly detection (HAD), a special type of target detection, can automatically locate anomaly objects whose spectral information is quite different from that of their surroundings, without any prior information about the background or the anomalies. In recent years, HAD methods based on the low rank representation (LRR) model have attracted much attention and achieved good results. However, LRR is a global structure model, which inevitably ignores the local geometrical information of a hyperspectral image. Furthermore, most of these methods need to construct dictionaries with a clustering algorithm in advance, and they are carried out stage by stage. In this paper, we introduce a locality constrained term inspired by manifold learning to preserve the local geometrical structure during the LRR process, and we incorporate dictionary learning into the optimization process of the LRR. Our proposed method is a one-stage algorithm, which obtains the low rank representation coefficient matrix, the dictionary matrix, and the residual matrix referring to anomalies simultaneously. One simulated and three real hyperspectral images are used as test datasets. Three metrics, namely the ROC curve, the AUC value, and the box plot, are used to evaluate the detection performance. The visualized results demonstrate that our method can not only detect anomalies accurately, but also suppress background information and noise effectively. The three evaluation metrics also show that our method is superior to other typical methods.

1. Introduction

As a 3D data cube, a hyperspectral image (HSI) contains both spatial and spectral information. It usually consists of hundreds of narrow spectral bands ranging from the visible to the infrared spectrum [1]. The abundant spectral information offers a unique ability to analyze the physicochemical characteristics of materials, which gives HSIs great advantages in many remote sensing applications such as change detection [2], scene classification [3], and target detection [4].

Hyperspectral anomaly detection (HAD) [5] is an important branch of target detection. Unlike conventional target detection [6], which matches known target information, HAD can automatically locate anomaly objects without any prior knowledge. In general, anomaly objects are considered distinctive and abnormal, with the following characteristics: a low proportion, a small size, and a large spectral difference from the entire background or from their surrounding pixels [7]. Anomaly objects take various forms depending on the application scenario. For example, anomaly objects are man-made objects in aerial reconnaissance [8,9,10], and minerals of interest in geological exploration [11].

In past decades, many HAD algorithms have been proposed. The most classical one is the Reed-Xiaoli (RX) algorithm [12], which is considered a benchmark. The RX algorithm assumes that the background data follows a multivariate Gaussian distribution, and the anomaly degree of a pixel is obtained by computing its deviation from the background distribution. There are two versions of the RX algorithm: global RX (GRX) and local RX (LRX) [12]. The LRX adopts a sliding dual-window model to find anomalies block by block. However, the multivariate Gaussian distribution is unable to model a complex background, and the background model may be contaminated by anomalies [5]. There are two common strategies to improve the RX algorithm. One is to improve the selection of background pixels or to remove anomalies so as to obtain a relatively pure background. In [13], the weighted RX algorithm assigns larger weights to suspected background pixels in order to reduce the impact of anomaly pixels. In [14], a locally adaptable iterative RX algorithm was presented, which iteratively removes suspected anomaly pixels with the RX algorithm until the results remain approximately unchanged. In [15], the spectral angle distance between each pixel vector and the mean of all pixels is computed, and the pixels with larger distances are removed to obtain a purer background. The other strategy is the kernel technique. Kwon et al. proposed the kernel RX (KRX) method [16], which enlarges the separation between the anomalies and the background by nonlinearly mapping the image into a higher-dimensional space. Because of its high computational complexity, a cluster kernel RX algorithm [17] was further proposed, which partitions the original data into several clusters and then applies the KRX method in each cluster.
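The GRX variant described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the whole image serves as the background sample and using the squared Mahalanobis distance as the anomaly degree; the function name `global_rx` is our own and is not taken from [12].

```python
import numpy as np


def global_rx(cube: np.ndarray) -> np.ndarray:
    """Global RX: squared Mahalanobis distance of each pixel to the whole-image background.

    cube: array of shape (h, w, p) with p spectral bands.
    Returns an (h, w) map; larger values indicate more anomalous pixels.
    """
    h, w, p = cube.shape
    X = cube.reshape(-1, p).astype(float)      # n x p, one pixel per row
    Xc = X - X.mean(axis=0)                    # center on the background mean
    cov = Xc.T @ Xc / (X.shape[0] - 1)         # p x p sample covariance
    cov_inv = np.linalg.pinv(cov)              # pseudo-inverse tolerates a singular covariance
    # x_c^T C^{-1} x_c for every pixel at once
    scores = np.einsum("ij,jk,ik->i", Xc, cov_inv, Xc)
    return scores.reshape(h, w)
```

A pseudo-inverse is used instead of a plain inverse so that the sketch does not fail when the band covariance is singular, which can happen when neighboring bands are highly correlated.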

Recently, representation-based HAD methods have drawn much attention. The sparse representation (SR)-based HAD method assumes that a background pixel can be linearly expressed by a dictionary with a corresponding sparse vector [18], whereas an anomaly pixel cannot. In [19], a background joint sparse representation (BJSR) method was presented, which employs a triple-window model and joint sparse representation to build a representative background dictionary. In [20], a multi-dictionary SR method with discriminative feature learning was proposed. The collaborative-representation-based detector (CRD) [21] assumes that each background pixel can be well expressed by its spatial neighbors, whereas anomalies cannot. Following that, a background refinement collaborative representation method [22] was developed, in which background pixels are selected according to the representation coefficients. Furthermore, low rank representation (LRR)-based methods have been introduced, which seek the low rank representation of an HSI. In [23], a low-rank and sparse matrix decomposition-based (LRaSMD) anomaly detection algorithm was proposed, in which the Go Decomposition algorithm [24] was used to decompose the original HSI. On the basis of the LRaSMD method, Zhang et al. [25] proposed an LRaSMD-based Mahalanobis-distance anomaly detector (LSMAD), where a robust background is obtained by the LRaSMD method. In [26], the abundance map obtained by a spectral unmixing algorithm was fed into an LRR model instead of the original HSI, and the dictionary was constructed with a clustering algorithm. In [27], a low rank and sparse representation (LRASR)-based detection method was presented. It employed a sparse regularization term to constrain the representation coefficient matrix, and the dictionary was also constructed by a clustering algorithm.
In [28], an anomaly detector using graph dictionary-based low rank decomposition with texture feature extraction was proposed, in which the dictionary was constructed by the graph Laplacian matrix.

Although LRR-based methods can effectively model the background and anomaly parts, they still have some drawbacks. Firstly, LRR is a global structure model [29,30], which inevitably ignores local geometrical information, although local structure information is known to be beneficial to target detection [31]. Secondly, most LRR-based HAD methods need to construct a dictionary in advance [26,27,28], commonly with a clustering algorithm, and in practice the number of background clusters is hard to determine. Thirdly, most HAD methods based on the LRR model are carried out in several stages rather than as a one-stage algorithm. To overcome these drawbacks, we introduce a locality constrained term to preserve the local geometrical structure. Motivated by the idea of manifold learning, an affinity matrix is used to constrain the representation coefficient matrix, forcing pixels with a small distance to have similar low rank representation vectors. Moreover, dictionary learning is integrated into the LRR model, and the dictionary is updated iteratively along with the optimization process of LRR. In other words, the low rank representation coefficient matrix, the dictionary matrix, and the residual matrix referring to anomalies are obtained simultaneously. Specifically, we propose a locality constrained low rank representation and automatic dictionary learning-based hyperspectral anomaly detector (LCLRR). The main contributions of this paper are summarized as follows.

- (1) We introduce a locality constrained low rank representation to model the background and anomaly parts of the HSI. By introducing the locality constrained term, this model encourages pixels with similar spectra to have similar representation coefficients.

- (2) Dictionary learning is integrated into the LRR model, so that a compact dictionary is learned iteratively instead of being built with the widely used clustering algorithms.

- (3) Our HAD method is a one-step algorithm: the representation coefficient matrix, the dictionary matrix, and the anomaly matrix are obtained simultaneously.

2. Proposed Method

2.1. LRR for Hyperspectral Anomaly Detection

There is high spectral correlation and spatial homogeneity among background pixels in an HSI [5], and background pixels are assumed to be sampled from a union of multiple low-dimensional subspaces. This gives the background part a low-rank characteristic. Anomalies have a low occurrence probability and a small proportion, which makes them sparse. Suppose that an HSI is denoted as $\mathcal{X} \in \mathbb{R}^{h \times w \times p}$. Generally, $\mathcal{X}$ is converted to a 2D matrix $\mathbf{X} \in \mathbb{R}^{p \times n}$, where $n = h \times w$ is the number of pixels and $p$ is the number of spectral bands. The LRR model [29] proposed by Liu et al. was developed to recover the subspace structures of a matrix, and it is usually used to decompose a matrix into a low rank part and a residual part. Based on the LRR model, $\mathbf{X}$ can be decomposed into the background and anomaly parts, which is formulated as

$$\min_{\mathbf{Z},\,\mathbf{E}}\ \operatorname{rank}(\mathbf{Z}) + \lambda \|\mathbf{E}\|_0 \quad \text{s.t.} \quad \mathbf{X} = \mathbf{D}\mathbf{Z} + \mathbf{E}, \tag{1}$$

where $\mathbf{D}\mathbf{Z}$ denotes the background part, which is expressed as the product of a background dictionary $\mathbf{D} \in \mathbb{R}^{p \times K}$ with $K$ atoms and the corresponding representation coefficient matrix $\mathbf{Z} \in \mathbb{R}^{K \times n}$. The dictionary is composed of representative background pixels. The matrix $\mathbf{E} \in \mathbb{R}^{p \times n}$ is the residual matrix referring to the anomaly part, $\|\mathbf{E}\|_0$ is the $\ell_0$ norm of $\mathbf{E}$, i.e., the number of nonzero entries in $\mathbf{E}$, and $\lambda > 0$ is a regularization parameter.

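However, the rank function and the $\ell_0$ norm are discrete and non-convex, which makes problem (1) NP-hard. Fortunately, the $\ell_1$ norm is the optimal convex approximation of the $\ell_0$ norm, and the nuclear norm is an appropriate surrogate for the rank function [29]. Problem (1) is therefore rewritten as follows:

$$\min_{\mathbf{Z},\,\mathbf{E}}\ \|\mathbf{Z}\|_* + \lambda \|\mathbf{E}\|_1 \quad \text{s.t.} \quad \mathbf{X} = \mathbf{D}\mathbf{Z} + \mathbf{E}, \tag{2}$$

where $\|\mathbf{Z}\|_*$ is the nuclear norm of $\mathbf{Z}$, i.e., the sum of its singular values, and $\|\mathbf{E}\|_1$ is the $\ell_1$ norm of $\mathbf{E}$, i.e., the sum of the absolute values of all its elements. Problems (2) and (1) are equivalent in the noise-free case [29]. Real HSIs contain considerable noise; therefore, a robust model is formulated as

$$\min_{\mathbf{Z},\,\mathbf{E}}\ \|\mathbf{Z}\|_* + \lambda \|\mathbf{E}\|_{2,1} \quad \text{s.t.} \quad \mathbf{X} = \mathbf{D}\mathbf{Z} + \mathbf{E}, \tag{3}$$

where $\|\mathbf{E}\|_{2,1} = \sum_{i=1}^{n} \|\mathbf{E}_{:,i}\|_2$ is the sum of the $\ell_2$ norms of the columns of $\mathbf{E}$. Compared with the $\ell_1$ norm, which promotes sparsity of individual entries, the $\ell_{2,1}$ norm forces entire columns of $\mathbf{E}$ to be zero vectors, which matches the assumption that anomalies occupy only a few pixels (columns). The parameter $\lambda$ balances the low-rank and sparsity terms.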
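In IALM-type solvers, the nuclear norm and the $\ell_1$/$\ell_{2,1}$ norms are usually handled through their proximal operators. The sketch below assumes the standard closed forms (singular value thresholding for the nuclear norm, element-wise soft thresholding for the $\ell_1$ norm, and column-wise shrinkage for the $\ell_{2,1}$ norm); the function names are hypothetical. It shows why the $\ell_{2,1}$ norm acts on whole columns (pixels) at once.

```python
import numpy as np


def soft_threshold(A: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau*||A||_1: shrink every entry toward zero by tau."""
    return np.sign(A) * np.maximum(np.abs(A) - tau, 0.0)


def column_shrink(A: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau*||A||_{2,1}: shrink the l2 norm of each column by tau.

    A column whose norm is at most tau becomes exactly zero; otherwise it is rescaled.
    """
    norms = np.linalg.norm(A, axis=0)                          # l2 norm of every column
    scale = np.maximum(norms - tau, 0.0) / np.maximum(norms, 1e-12)
    return A * scale                                           # broadcast the per-column scale


def svt(A: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau*||A||_*: singular value thresholding."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt
```

For example, with `tau = 1.0`, `column_shrink` maps the column `[3, 4, 0]` (norm 5) to `[2.4, 3.2, 0]` (norm 4) and sets the column `[0.2, 0.1, 0.2]` (norm 0.3) to zero, so each pixel's residual is either kept or discarded as a whole.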

Problem (3) can be solved by the Inexact Augmented Lagrange Multiplier (IALM) algorithm [32,33]. Once the decomposition is completed, the residual matrix $\mathbf{E}$ is used to obtain the final detection map. The anomaly degree of the $i$th pixel is defined as the $\ell_2$ norm of the $i$th column of $\mathbf{E}$:

$$r_i = \|\mathbf{E}_{:,i}\|_2 = \sqrt{\sum_{j=1}^{p} E_{ji}^2}, \quad i = 1, \dots, n. \tag{4}$$

If $r_i$ is larger than a given threshold, the $i$th pixel is regarded as an anomaly pixel. Moreover, the vector $\mathbf{r} = [r_1, \dots, r_n]$ is reshaped to the spatial size of the original image, yielding the detection map.
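Problem (3), together with the detection rule in Equation (4), can be sketched as follows. This is a minimal NumPy version of the standard IALM iteration for LRR, assuming the usual splitting Z = J so that every subproblem has a closed form (singular value thresholding for J, a linear solve for Z, and column-wise shrinkage for E), and assuming a fixed, user-supplied background dictionary `D`. The defaults for `rho`, `mu`, `mu_max`, `tol`, and `max_iter` are illustrative choices rather than settings reported in this paper, and all function names are hypothetical.

```python
import numpy as np


def _svt(A, tau):
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def _col_shrink(A, tau):
    norms = np.linalg.norm(A, axis=0)
    return A * (np.maximum(norms - tau, 0.0) / np.maximum(norms, 1e-12))


def lrr_ialm(X, D, lam, rho=1.1, mu=1e-2, mu_max=1e10, tol=1e-6, max_iter=500):
    """Sketch of min ||Z||_* + lam*||E||_{2,1} s.t. X = D Z + E (problem (3)).

    X: p x n data matrix (one pixel per column); D: p x K dictionary.
    An auxiliary variable J with constraint Z = J carries the nuclear norm.
    """
    K, n = D.shape[1], X.shape[1]
    Z = np.zeros((K, n)); J = np.zeros((K, n)); E = np.zeros_like(X)
    Y1 = np.zeros_like(X); Y2 = np.zeros((K, n))          # Lagrange multipliers
    M = np.linalg.inv(np.eye(K) + D.T @ D)                 # constant across iterations
    for _ in range(max_iter):
        J = _svt(Z + Y2 / mu, 1.0 / mu)                              # nuclear-norm step
        Z = M @ (D.T @ (X - E) + J + (D.T @ Y1 - Y2) / mu)           # least-squares step
        E = _col_shrink(X - D @ Z + Y1 / mu, lam / mu)               # l2,1 step
        R1 = X - D @ Z - E                                           # constraint residuals
        R2 = Z - J
        Y1 += mu * R1                                                # dual ascent
        Y2 += mu * R2
        mu = min(rho * mu, mu_max)                                   # increase the penalty
        if max(np.abs(R1).max(), np.abs(R2).max()) < tol:
            break
    return Z, E


def anomaly_map(E, h, w):
    """Equation (4): l2 norm of each residual column, reshaped to the h x w image grid."""
    return np.linalg.norm(E, axis=0).reshape(h, w)
```

Here `X` would be obtained from an (h, w, p) cube as `cube.reshape(-1, p).T` (one pixel per column, row-major pixel order), so `anomaly_map(E, h, w)` restores the original spatial layout.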

2.2. Locality Constrained LRR

Pixels in a local region belong to similar materials with high probability, so their spectral vectors are similar. Exploring this local similarity is beneficial to the HAD task, and its importance has been shown in many articles [34,35]. However, LRR is a global structure model, which inevitably neglects local geometrical information [29]. Manifold learning is an important family of dimensionality reduction methods, which attempts to recover the intrinsic distribution and geometric structure of high-dimensional data. Multi-dimensional scaling (MDS) [36] is a classical manifold learning algorithm, which keeps the geometrical structure of the original data by preserving the distances among points. Motivated by this, we adopt a distance weight matrix $\mathbf{W}$, i.e., the Euclidean distances between pairs of points, to constrain the representation coefficient matrix $\mathbf{Z}$, so that pixels that are close in the original space have similar coefficients in the representation space.

As in [37,38], we utilize the following term to preserve the local geometrical structure during the low rank decomposition.

where ⊙ denotes the Hadamard product, i.e., the element-wise product of two matrices. If two pixels are close in the HSI, the corresponding weight is small, which keeps the two pixels as close as possible in the representation space.

In the HAD task, most pixels belong to the background, which is composed of a few main materials. Pixels in an HSI can therefore be sparsely represented by a dictionary with only a few nonzero coefficients, so the coefficient matrix $\mathbf{Z}$ is sparse as well as low-rank. We use the following term to characterize this.

where this term can be seen as the $\ell_1$ norm of $\mathbf{Z}$ weighted by $\mathbf{W}$, which encourages the sparsity of $\mathbf{Z}$.
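The weighted $\ell_1$ term can be made concrete with a small NumPy sketch. Because the exact construction of the weight matrix is not spelled out here, the sketch assumes that `W` has the same shape as the coefficient matrix `Z` (K × n) and that `W[i, j]` is the Euclidean distance between dictionary atom i and pixel j. It also shows the assumed proximal step for this term, weighted soft thresholding, in which each coefficient gets its own threshold proportional to its weight. All function names are hypothetical.

```python
import numpy as np


def distance_weights(D: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Assumed W (K x n): W[i, j] = ||d_i - x_j||_2 for atom i and pixel j."""
    # ||d_i - x_j||^2 = ||d_i||^2 + ||x_j||^2 - 2 d_i^T x_j, for all pairs at once
    sq = (D ** 2).sum(axis=0)[:, None] + (X ** 2).sum(axis=0)[None, :] - 2.0 * D.T @ X
    return np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative values caused by round-off


def weighted_l1(W: np.ndarray, Z: np.ndarray) -> float:
    """||W ⊙ Z||_1: l1 norm of Z with element-wise weights W."""
    return float(np.abs(W * Z).sum())


def weighted_soft_threshold(A: np.ndarray, W: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau*||W ⊙ Z||_1: entry (i, j) is shrunk by tau * W[i, j]."""
    return np.sign(A) * np.maximum(np.abs(A) - tau * W, 0.0)
```

For example, with one-band atoms at 0 and 10 and pixels at 1 and 9, the weights are `[[1, 9], [9, 1]]`, and weighted soft thresholding of an all-ones coefficient matrix with `tau = 0.5` keeps only the coefficient that links each pixel to its nearest atom.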

Subsequently, the newly introduced locality constrained term is added to the original LRR objective function (3), which is expressed as

where the coefficient of the new term is the locality constrained regularization parameter.

2.3. Active Dictionary Learning for LRR

For anomaly detectors based on the LRR model, dictionary construction is of great importance. However, most HAD methods with the LRR model need to construct the dictionary in advance; that is to say, they consist of multiple steps. For example, in [26,27,39], the dictionary is built independently through a clustering algorithm. Since there is no prior information about the number of background materials, the number of clusters is difficult to determine, which makes dictionary construction a major problem. Different from previous methods, we attempt to learn a compact and discriminative dictionary by exploiting the local geometrical information. Thus, dictionary learning is integrated into the optimization process of problem (7), in order to learn the representation coefficients and the dictionary simultaneously. The objective function is formulated as follows,

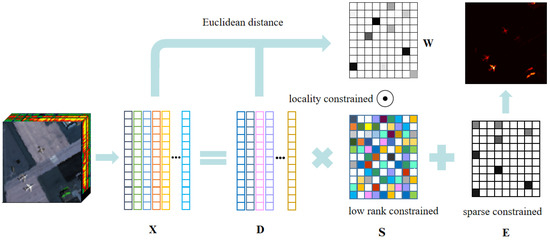

where the Frobenius norm of the dictionary $\mathbf{D}$ is penalized to avoid scale changes, and its weight, the dictionary regularization parameter, is a balance parameter. The optimization process of problem (8) is briefly introduced in Section 2.4. The procedure of our HAD method based on the LCLRR model is summarized in Algorithm 1, and the flowchart is shown in Figure 1.
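A Frobenius-norm penalty on the dictionary keeps the dictionary step well posed: with the other variables fixed, a quadratic data-fit term plus a Frobenius penalty has a closed-form ridge-regression solution. The sketch below is an assumption rather than the exact update derived from Equation (8): it supposes that the dictionary subproblem reduces to min over D of 0.5·‖A − DZ‖²_F + 0.5·reg·‖D‖²_F, where `A` stands for whatever target the data-fit term compares with DZ (for example, X − E plus a scaled multiplier in an IALM step). If the penalty is γ‖D‖²_F and the data-fit term carries weight μ/2, then `reg = 2γ/μ`; the names `A`, `reg`, γ, μ, and `update_dictionary` are our own notation.

```python
import numpy as np


def update_dictionary(A: np.ndarray, Z: np.ndarray, reg: float) -> np.ndarray:
    """Hypothetical dictionary step: argmin_D 0.5*||A - D Z||_F^2 + 0.5*reg*||D||_F^2.

    Setting the gradient -(A - D Z) Z^T + reg*D to zero gives D (Z Z^T + reg*I) = A Z^T.
    """
    K = Z.shape[0]
    G = Z @ Z.T + reg * np.eye(K)          # K x K, positive definite whenever reg > 0
    # Solve D G = A Z^T through the transposed system G D^T = Z A^T (G is symmetric)
    return np.linalg.solve(G, Z @ A.T).T
```

The positive regularization term makes `G` invertible even when some atoms are unused (rows of `Z` equal to zero), which is one practical reason to penalize the dictionary norm.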

Algorithm 1. HAD algorithm based on the LCLRR model.

Input: Hyperspectral image; the three regularization parameters; number of atoms K in the dictionary.

Output: Anomaly detection map.

Figure 1.

The flowchart of the proposed LCLRR method.

2.4. Optimization Procedure of LCLRR

To make the objective function of problem (8) separable, two auxiliary variables are introduced, and the original problem is rewritten as follows,

This constrained optimization problem (9) can be solved by the IALM algorithm [32,37]. The augmented Lagrangian function of Equation (9) is as follows,

where the Lagrange multipliers enforce the equality constraints of problem (9), a positive penalty coefficient weights the quadratic penalty terms, and tr(·) denotes the trace of a matrix. Because problem (10) has multiple variables, it can be solved iteratively by updating one variable while keeping the others fixed [27,37]. The procedure can be broken down into the following steps.

Step 1: updating with other variables fixed, the problem can be formulated as follows,

Step 2: updating with other variables fixed, the problem can be formulated as follows,

Step 3: updating with other variables fixed, the problem can be formulated as follows,

Step 4: updating with other variables fixed, the problem can be formulated as follows,

Step 5: updating with other fixed variables, the problem can be formulated as follows,

The whole optimization process is summarized in Algorithm 2.

Algorithm 2. Optimization of problem (10) by the IALM algorithm.

Input: Input matrix; the three regularization parameters; weight matrix.

Output: Representation coefficient matrix; dictionary matrix; residual matrix.

3. Experiments and Result Analysis

3.1. HSI Dataset

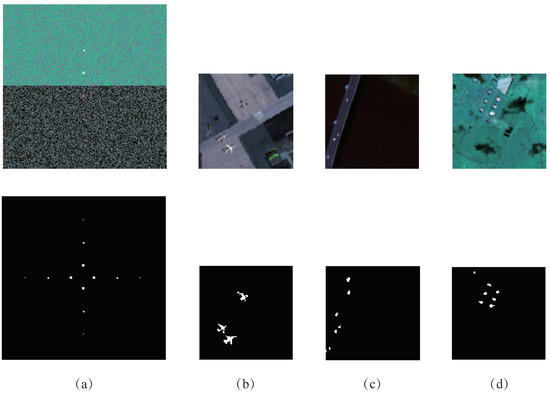

One simulated dataset and three real hyperspectral datasets are used to evaluate the performance of our method. The first is a simulated dataset [40]. It has the spatial size of and 105 spectral bands. The background is a mixture of five plants in random proportions: lawn grass, dry long grass, black shrub leaf, sage brush, and tumbleweed, whose spectral vectors can be downloaded from the U.S. Geological Survey vegetation spectral library. The anomalies are set to pure materials. There are 12 anomaly objects with the size of , , and implanted into the complicated background in the shape of a cross. The pseudo-color image and its corresponding reference map are shown in Figure 2a.

Figure 2.

The pseudo-color images composed of the 10th, 70th, and 100th spectral bands and their corresponding reference maps: (a) Simulated. (b) San Diego airport. (c) Pavia center. (d) Texas Coast urban.

The second is the San Diego airport dataset, which was captured by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) over San Diego, CA, USA. The whole size of the image is , and its spatial resolution is 3.5 m. After removing the water absorption, low-SNR, and poor-quality bands, 189 spectral bands remain, covering wavelengths from 370 to 2510 nm. In general, a sub-image of in the top-left is chosen as the test image. The background scene, which mainly contains hangars, airstrips, and meadows, is complicated. Three airplanes consisting of 57 pixels are taken as anomaly objects. The pseudo-color image and its corresponding reference map are shown in Figure 2b.

The third is the Pavia center dataset, which was captured by the Reflective Optics System Imaging Spectrometer (ROSIS) over an urban center in Pavia, Italy. This dataset has the size of , and 1.3 m spatial resolution. There are 102 spectral bands with wavelengths ranging from 430 to 860 nm. The background scene mainly includes a bridge and a river. Six vehicles on the bridge are regarded as anomaly objects. The pseudo-color image and its corresponding reference map are displayed in Figure 2c.

The fourth is the Texas Coast urban dataset, which was also captured by the AVIRIS sensor, over the Texas coast. The size of the image is , and its spatial resolution is 17.2 m. There are 204 spectral bands ranging from 450 to 1350 nm. The background scene comprises a stretch of meadow and some groves, and the houses are regarded as anomaly objects. Note that this image is contaminated by severe stripe noise; hence, recognizing all anomaly pixels while suppressing the noise is a challenging task. The pseudo-color image and its corresponding reference map are shown in Figure 2d.

3.2. Comparison Algorithm and Evaluation Metrics

Seven typical algorithms are compared with our proposed LCLRR algorithm: global RX (GRX) [12], local RX (LRX) [12], CRD [21], BJSR [19], LSMAD [25], LRASR [27], and KIFD [41]. GRX and LRX are statistical model-based methods. BJSR, CRD, LSMAD, and LRASR are representation-based methods: BJSR uses the SR model, CRD uses the CR model, and LSMAD and LRASR use low rank models. KIFD is based on the isolation forest theory [42], which isolates anomaly pixels from the background directly.

To evaluate the results of different algorithms intuitively, our experiments employ three metrics: the receiver operating characteristic (ROC) curve [43], the area under the ROC curve (AUC), and the box plot [44]. The ROC curve illustrates the relationship between the detection probability ($P_d$) and the false alarm rate ($P_f$), which are computed as

$$P_d = \frac{N_d}{N_a}, \qquad P_f = \frac{N_f}{N_b},$$

where $N_d$ denotes the number of anomaly pixels detected correctly, $N_a$ is the number of anomaly pixels in the reference map, $N_f$ is the number of background pixels misidentified as anomaly pixels, and $N_b$ is the number of background pixels. For a fixed $P_f$, the algorithm with a larger $P_d$ performs better; in other words, the algorithm whose ROC curve is closer to the top-left corner performs better. The AUC value is a quantitative evaluation metric: the algorithm with the larger AUC value achieves better detection performance.
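Given a detection map and its binary reference map, the ROC curve and AUC can be computed by sweeping a threshold over the detection scores. The NumPy sketch below is a minimal illustration rather than the evaluation code used in the experiments: the function name `roc_auc` is ours, each distinct score value is used as a threshold (so tied scores are handled as a single step), and the AUC is obtained with the trapezoidal rule over the (P_f, P_d) points.

```python
import numpy as np


def roc_auc(scores, reference):
    """Return (P_f, P_d, AUC) for a detection map and a binary reference map."""
    s = np.asarray(scores, dtype=float).ravel()
    y = np.asarray(reference, dtype=bool).ravel()
    n_a, n_b = y.sum(), (~y).sum()                  # N_a anomaly pixels, N_b background pixels
    order = np.argsort(-s, kind="mergesort")        # highest scores first
    s, y = s[order], y[order]
    # last index of each run of equal scores: one threshold per distinct value
    cut = np.r_[np.nonzero(np.diff(s))[0], s.size - 1]
    n_d = np.cumsum(y)[cut]                         # anomalies detected at each threshold
    n_f = np.cumsum(~y)[cut]                        # background pixels flagged at each threshold
    p_d = np.r_[0.0, n_d / n_a]
    p_f = np.r_[0.0, n_f / n_b]
    auc = float(np.sum(np.diff(p_f) * (p_d[1:] + p_d[:-1]) / 2.0))  # trapezoidal rule
    return p_f, p_d, auc
```

With scores `[0.9, 0.8, 0.3, 0.1]` and reference labels `[1, 0, 1, 0]`, three of the four anomaly/background pairs are ranked correctly, and the sketch returns an AUC of 0.75.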

The box plot is used to visualize anomaly-background separability. In these box plots, the red box describes the distribution of anomaly pixels, and the blue box represents the background distribution. The middle line in each box is the average value of the corresponding pixels. The larger the gap between the anomaly and background boxes, the higher the anomaly-background separation degree. Moreover, the shorter the background box, the more background information the algorithm suppresses.
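The two quantities read off a box plot here, the gap between the anomaly and background boxes and the height of the background box, can also be computed numerically. The sketch below assumes min-max normalized scores and boxes spanning the 25th to 75th percentiles, which may differ from the exact box-plot convention of [44]; only the use of the mean as the middle line follows the text. The function name `box_stats` is hypothetical.

```python
import numpy as np


def box_stats(scores, reference, lower_q=25, upper_q=75):
    """Summarize anomaly-background separability of a detection map.

    Assumptions: scores are min-max normalized to [0, 1], boxes span the
    lower_q..upper_q percentiles, and the middle line is the mean.
    """
    s = np.asarray(scores, dtype=float).ravel()
    s = (s - s.min()) / max(s.max() - s.min(), 1e-12)   # normalize to [0, 1]
    y = np.asarray(reference, dtype=bool).ravel()
    anom, back = s[y], s[~y]
    a_lo, a_hi = np.percentile(anom, [lower_q, upper_q])
    b_lo, b_hi = np.percentile(back, [lower_q, upper_q])
    return {
        "anomaly_box": (a_lo, a_hi, anom.mean()),
        "background_box": (b_lo, b_hi, back.mean()),
        "gap": a_lo - b_hi,                  # positive when the boxes do not overlap
        "background_height": b_hi - b_lo,    # shorter box suggests stronger suppression
    }
```

A positive `gap` corresponds to non-overlapping boxes, and a smaller `background_height` corresponds to a shorter background box.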

3.3. Detection Performance

For the comparison algorithms, some parameter settings need to be declared in advance. The detection performances of the LRX, BJSR, and CRD methods are closely associated with the double-window size . To find the best result, is set to , , , , , and in turn. Moreover, the regularization parameter is set to for the CRD method. For the LSMAD method, the rank is set to 28, and the cardinality is set to (p is the number of spectral bands). For the LRASR method, the parameter is set to , the parameter is set to 1, the number of clusters is set to 15, and the number of pixels in each background cluster is set to 20. For the KIFD method, the dimension parameter , the number of isolation trees q, and the size of the training dataset M are set to 300, 1000, and (n is the number of pixels), respectively.
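The double-window size used by LRX, BJSR, and CRD defines a ring of local background pixels around each test pixel. A minimal LRX sketch is shown below, assuming square windows with odd side lengths (such as the (5, 29) and (3, 23) settings listed in the figure captions) and truncating the ring at the image borders; the border handling and the function name `local_rx` are our own choices, not details given in the text.

```python
import numpy as np


def local_rx(cube: np.ndarray, w_in: int, w_out: int) -> np.ndarray:
    """Local RX with a dual (inner/outer) window centered on each pixel.

    Background statistics come from the ring between the inner w_in x w_in window
    (a guard region that is excluded) and the outer w_out x w_out window.
    """
    h, w, p = cube.shape
    ri, ro = w_in // 2, w_out // 2
    out = np.zeros((h, w))
    for r in range(h):
        for c in range(w):
            r0, r1 = max(r - ro, 0), min(r + ro + 1, h)       # outer window, clipped to the image
            c0, c1 = max(c - ro, 0), min(c + ro + 1, w)
            rows, cols = np.mgrid[r0:r1, c0:c1]
            ring = (np.abs(rows - r) > ri) | (np.abs(cols - c) > ri)  # outside the inner window
            bg = cube[rows[ring], cols[ring]]                  # (m, p) local background pixels
            mu = bg.mean(axis=0)
            cov_inv = np.linalg.pinv(np.atleast_2d(np.cov(bg, rowvar=False)))
            d = cube[r, c] - mu
            out[r, c] = d @ cov_inv @ d                        # squared Mahalanobis distance
    return out
```

Because the statistics are re-estimated for every pixel, the running time grows with both the image size and the outer window size, which is consistent with local detectors being slower than GRX.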

For our method, four parameters are involved in the experiments: the sparsity regularization parameter, the locality regularization parameter, the dictionary regularization parameter, and the number of atoms K in the dictionary. The influence of these parameters on the detection performance is discussed in detail in Section 3.4; here, we only give the optimal parameter settings used in the following experiments. For the simulated dataset, several parameter combinations enable our method to achieve an AUC value of 1. For example, the three regularization parameters are set to . For the San Diego airport dataset, the three regularization parameters are set to . For the Pavia center dataset, the three regularization parameters are set to . For the Texas Coast urban dataset, the three regularization parameters are set to . For all datasets, the number of atoms K is set to 300 as a trade-off between performance and efficiency.

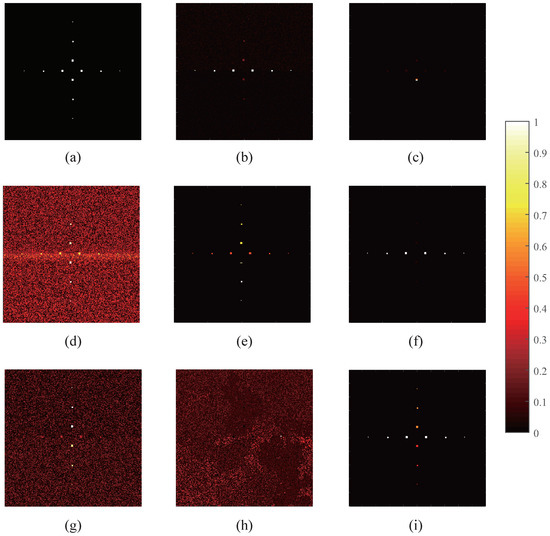

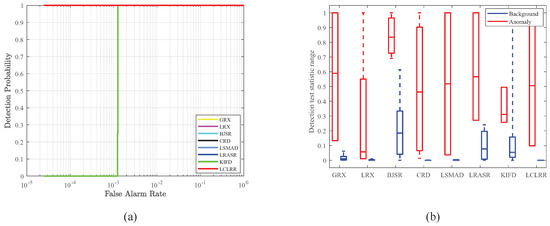

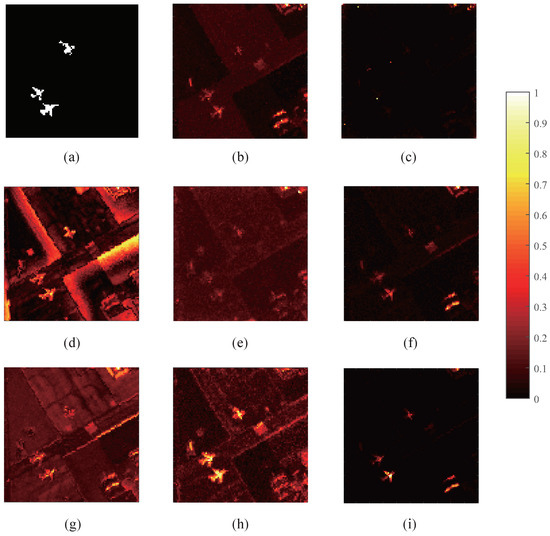

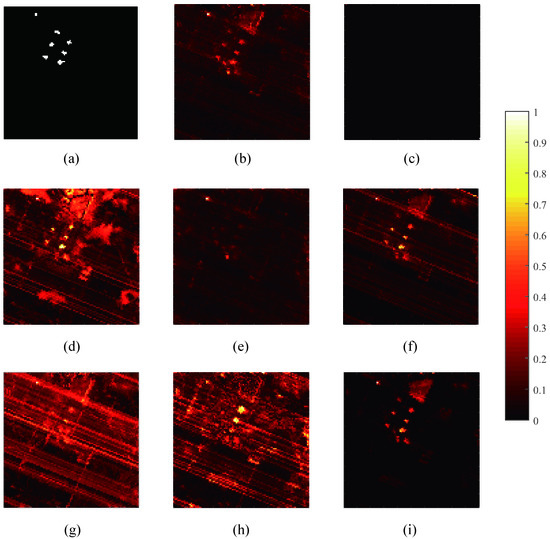

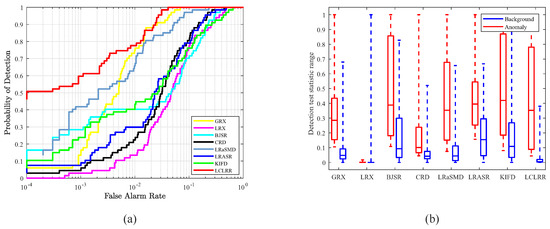

For the simulated dataset, the detection maps are displayed in Figure 3. Our method and the CRD method detect all anomaly pixels with excellent background suppression. The GRX, LRX, and LSMAD methods suppress the background information effectively, but only some anomaly objects are identified clearly. The BJSR and LRASR methods produce blurry detection maps, indicating poor background suppression. The KIFD method detects few anomaly pixels and performs poorly in both anomaly detection and background suppression. The ROC curves of different algorithms are displayed in Figure 4a. The curves of all algorithms except KIFD lie on the line whose y-coordinate is 1. The AUC values are listed in Table 1: all algorithms except the KIFD method have AUC values of 1. Therefore, the ROC curve and AUC value cannot distinguish the performance differences among the compared algorithms. Here, the box plot depicted in Figure 4b is more informative. The gap between the background and anomaly boxes of our method is the largest, which demonstrates that our method has the highest anomaly-background separation degree. Moreover, the background box of our method is shorter, which indicates good background suppression ability.

Figure 3.

The detection maps of different algorithms for the simulated dataset; the parameters in parentheses are the double-window sizes used by the corresponding algorithms. (a) Reference map. (b) GRX. (c) LRX (5, 29). (d) BJSR (3, 23). (e) CRD (3, 23). (f) LSMAD. (g) LRASR. (h) KIFD. (i) LCLRR.

Figure 4.

The ROC curves and box plots of different algorithms for the simulated dataset. (a) ROC curve. (b) Box plot.

Table 1.

The AUC values of different algorithms for four datasets including simulated, San Diego airport, Pavia center, and Texas Coast urban. The best results are in bold, and the second-best outcomes are underlined.

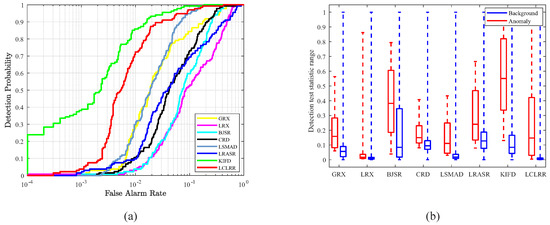

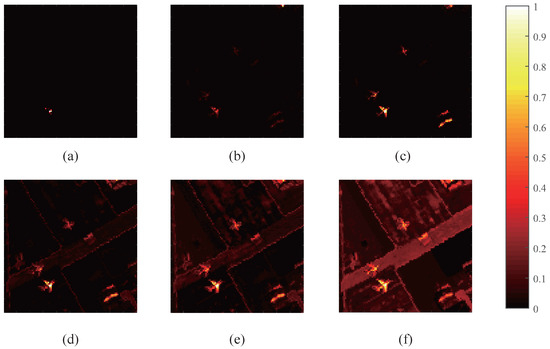

For the San Diego airport dataset, the detection maps are displayed in Figure 5. It can be observed that the GRX, LSMAD, KIFD, and our method perform better. The LRX method detects only a small number of anomaly pixels, although it suppresses the background effectively. For the BJSR method, the anomaly-background separation degree is low, resulting in a blurred boundary between the background and anomalies. This is confirmed by the box plot in Figure 6b, where the background box and anomaly box of the BJSR method overlap. The KIFD method has a higher detection probability, and its anomaly objects are clear. The ROC curves of different algorithms are shown in Figure 6a. The ROC curve of the KIFD method is the closest to the top-left corner, indicating the best performance. The AUC values are listed in the second column of Table 1: the KIFD method has the largest AUC value, and our method is the second best. In the box plot in Figure 6b, the gap between the boxes of the KIFD method is the largest; nevertheless, its anomaly box is large. This indicates that the KIFD method does not suppress the background well. In contrast, our method suppresses the background information well, as its background box is the shortest.

Figure 5.

The detection maps of different algorithms for the San Diego airport dataset; the parameters in parentheses are the double-window sizes used by the corresponding algorithms. (a) Reference map. (b) GRX. (c) LRX (5, 29). (d) BJSR (3, 23). (e) CRD (3, 23). (f) LSMAD. (g) LRASR. (h) KIFD. (i) LCLRR.

Figure 6.

The ROC curves and box plots of different algorithms for the San Diego airport dataset. (a) ROC curve. (b) Box plot.

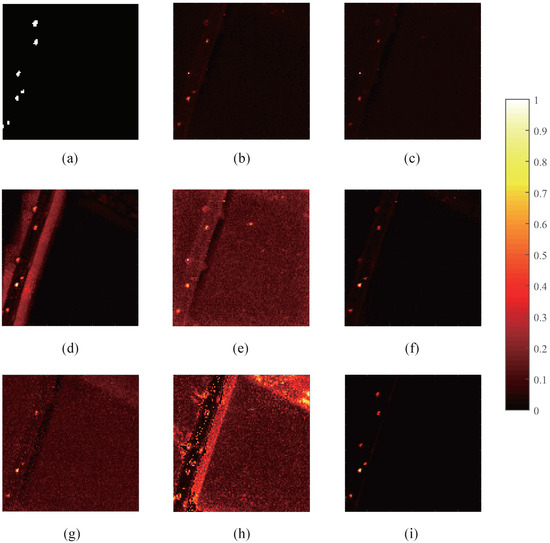

For the Pavia center dataset, the detection maps are shown in Figure 7. It can be seen that our proposed method performs best among all competitors: it not only detects all anomaly pixels accurately, but also suppresses the background information thoroughly, especially the edge of the bridge. The GRX, LRX, and LSMAD methods detect most of the anomaly pixels, but some are not clear enough. The BJSR method can locate anomaly objects but fails to suppress the edge of the bridge, leading to a high false alarm rate. The CRD and LRASR methods cannot set a clear boundary between the background and anomaly parts. The KIFD method performs poorly: it can neither locate anomaly objects nor suppress background information. The ROC curves of different algorithms are displayed in Figure 8a. The curve of our method is the closest to the top-left corner, indicating that our method is the best. The AUC values are shown in Table 1; the AUC value of our method, 0.9957, is the largest of all. The box plots in Figure 8b give more details than the other two metrics. For the BJSR, LRASR, and KIFD methods, the anomaly and background boxes overlap, indicating a low anomaly-background separation degree, which is consistent with the detection maps in Figure 7. For our method, there is a certain gap between the background and anomaly boxes. More importantly, the background box of our method is very short, showing its strong ability to suppress the background. Overall, the three metrics show that our method is better than the others.

Figure 7.

The detection maps of different algorithms for the Pavia center dataset; the parameters in parentheses are the double-window sizes used by the corresponding algorithms. (a) Reference map. (b) GRX. (c) LRX (5, 29). (d) BJSR (3, 23). (e) CRD (3, 23). (f) LSMAD. (g) LRASR. (h) KIFD. (i) LCLRR.

Figure 8.

The ROC curves and box plots of different algorithms for the Pavia center dataset. (a) ROC curve. (b) Box plot.

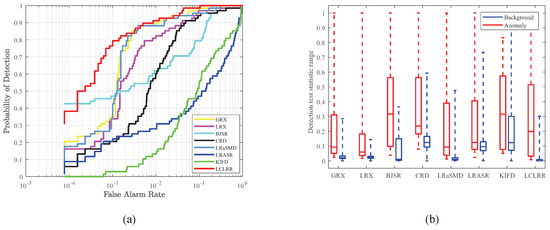

For the Texas Coast urban dataset, the detection maps are shown in Figure 9. Visually, our method performs best. Since the Texas Coast urban dataset contains severe stripe noise, the ability of an algorithm to suppress it is crucial. Our method not only detects all anomaly pixels clearly, but also suppresses both the background and the stripe noise thoroughly, yielding a clear detection map. The LRX method suppresses the background too much, so that few anomaly pixels are detected. The BJSR, LRASR, and KIFD methods cannot suppress the background and noise effectively, yielding fuzzy detection maps. The GRX and LSMAD methods suppress the background but not the stripe noise. The ROC curves are displayed in Figure 10a, where the curve of our method is the closest to the top-left corner. Our method has an AUC value of 0.9944, the largest of all, as shown in the fourth column of Table 1. The box plots are shown in Figure 10b. The larger gap between the two boxes and the shorter background box demonstrate that our method is superior to the others.

Figure 9.

The detection maps of different algorithms for the Texas Coast urban dataset; the parameters in parentheses are the double-window sizes used by the corresponding algorithms. (a) Reference map. (b) GRX. (c) LRX (5, 29). (d) BJSR (3, 23). (e) CRD (3, 23). (f) LSMAD. (g) LRASR. (h) KIFD. (i) LCLRR.

Figure 10.

The ROC curves and box plots of different algorithms for the Texas Coast urban dataset. (a) ROC curve. (b) Box plot.

In conclusion, our method performs best on the simulated, Pavia center, and Texas Coast urban datasets, and second best on the San Diego airport dataset. The detection maps on different datasets show that our method can not only detect anomaly objects accurately, but also suppress the background and noise effectively, yielding clear and accurate detection maps. In the box plots, the larger gaps between the anomaly and background boxes indicate that our method separates anomalies from the background well, and the shorter background boxes on all four datasets indicate that our method effectively suppresses background information.

3.4. Parameters Analyses and Discussion

All experiments were implemented in MATLAB R2017a on a computer with an Intel Core i7-10700F CPU @ 2.90 GHz and 16 GB of RAM. The key parameter affecting the running time of our method, K, is set to 300. The running times of different algorithms on the four datasets are shown in Table 2. The GRX method runs the fastest, and the CRD method takes the most time. The running time of our method is acceptable, considering its detection capability.

Table 2.

The running time (in seconds) of different algorithms for four datasets including the simulated, San Diego airport, Pavia center, and Texas Coast urban.

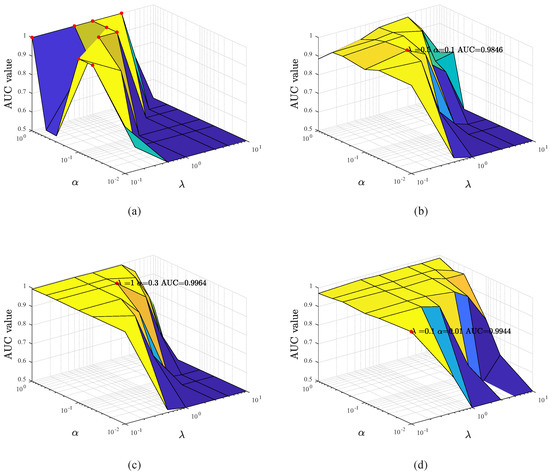

Four parameters are involved in our method, namely the sparsity regularization parameter, the locality regularization parameter, the dictionary regularization parameter, and the number of atoms K in the dictionary. We use the curves of the AUC value to assess the influence of these parameters on the detection performance.

Firstly, the effects of the sparsity and locality regularization parameters are discussed. Before the experiment, the dictionary regularization parameter and K are fixed to and 300, respectively. The value of the sparsity parameter is set to in sequence, and the value of the locality parameter is set to in sequence. We compute the AUC values of our algorithm for each parameter combination on the four datasets and plot the resulting AUC surfaces in Figure 11. The best results are labeled with red dots, and the corresponding AUC values and parameter combinations are marked nearby. Note that an AUC value of 0.5 indicates that the parameter combination is inappropriate. For the simulated dataset, is preferable, and the ratio of the two parameters is less than 10. The rule of parameter selection is similar for the other three real datasets: both parameters are preferably less than 1, and their ratio is less than 10. Overall, the range of working parameters is large, and they are easy to select. Furthermore, the locality regularization parameter is discussed separately, taking the detection maps of the San Diego dataset as an example. We fix the sparsity parameter to , and set the locality parameter to in sequence. The resulting six detection maps are depicted in Figure 12. It can be observed that the anomaly objects become more obvious as the locality parameter increases; in other words, the detection probability is positively associated with this parameter. However, the background pixels also become more evident, leading to a high false alarm rate. Considering both the detection probability and the false alarm rate, is preferable. Based on these results, we conclude that increasing the weight of the locality constrained term makes our method capture more local details.

Figure 11.

The influence of parameters and on the detection performance for the four datasets. (a) Simulated. (b) San Diego airport. (c) Pavia center. (d) Texas Coast urban.

Figure 12.

The detection maps with different settings of for the San Diego airport dataset. (a) . (b) . (c) . (d) . (e) . (f) .

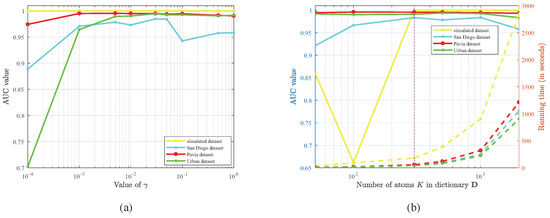

Second, the influence of the regularization parameter is discussed. The and are set according to the results in Figure 11. The parameter K is set to 300, and the value of is set to in sequence. The AUC curves for the four datasets are shown in Figure 13a. The parameter has an obvious effect on the San Diego airport and Texas Coast urban datasets, but little effect on the simulated and Pavia center datasets. The setting is an optimal choice for all four datasets.

Figure 13.

(a) The influence of parameter on the detection performance for four datasets. (b) The influence of parameter K on the detection performance and running time for four datasets.

Third, the number of atoms in the dictionary is an important parameter, which influences the results of the weight matrix and coefficient matrix . As before, the other parameters are fixed in advance. K is set to in turn, and the curves of AUC value and running time are shown in Figure 13b. The parameter K has some impact on the San Diego airport dataset, but little impact on the other three datasets. The main reason is that the background materials of the San Diego airport dataset are more complex than those of the other datasets. As expected, the running time increases rapidly with K. Considering detection performance and time complexity together, is a good choice for all four datasets.
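
The K study trades detection accuracy against cost. One way to automate that trade-off is sketched below: each candidate value is timed with `time.perf_counter`, its AUC is recorded, and the smallest candidate whose AUC is within a tolerance `tol` of the best is selected. The callable `detector(v)`, the tolerance, and this selection rule are all assumptions for illustration; the text above selects K by inspecting Figure 13b.

```python
import time
import numpy as np

def auc_score(scores, gt_mask):
    # Rank-statistic AUC: P(anomaly score > background score), ties count 1/2.
    s = np.asarray(scores, dtype=float).ravel()
    g = np.asarray(gt_mask, dtype=bool).ravel()
    d = s[g][:, None] - s[~g][None, :]
    return float(np.mean(d > 0) + 0.5 * np.mean(d == 0))

def sweep_with_timing(detector, values, gt_mask, tol=0.005):
    """Run the hypothetical detector(v) for each candidate v.

    Returns (aucs, seconds, chosen). `values` is assumed sorted by increasing
    cost (e.g. ascending K), so the first value within `tol` of the best AUC
    is the cheapest acceptable one.
    """
    aucs, seconds = [], []
    for v in values:
        start = time.perf_counter()
        scores = detector(v)
        seconds.append(time.perf_counter() - start)  # time the detector only
        aucs.append(auc_score(scores, gt_mask))
    aucs = np.asarray(aucs)
    acceptable = np.flatnonzero(aucs >= aucs.max() - tol)
    return aucs, np.asarray(seconds), values[acceptable[0]]
```

Choosing the cheapest acceptable value, rather than the arg-max, reflects the observation that running time grows quickly with K while AUC often saturates.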

4. Conclusions

In this paper, a novel hyperspectral anomaly detection method based on the LCLRR model was proposed. Unlike most existing HAD algorithms based on the LRR model, our method introduces a locality constrained LRR model to represent the background and anomaly parts. By adding the locality constrained term, our model forces pixels with small distances in the HSI to have similar representation coefficients after the low rank decomposition. Furthermore, dictionary learning and LRR are integrated into a single process, so the residual matrix referring to anomaly, the coefficient matrix, and the dictionary matrix are obtained simultaneously. Experiments on one simulated dataset and three real datasets demonstrated that our method detects anomalies accurately. Notably, our method suppresses both the background and noise effectively, yielding clear and accurate detection maps. All three metrics, namely the ROC curve, AUC value, and box plot, demonstrate that our algorithm outperforms other state-of-the-art algorithms. In future work, we plan to extend the LCLRR model to other remote sensing tasks.
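
The conclusion states that the locality constrained term pushes pixels that are close in the HSI toward similar representation coefficients. The exact term of the LCLRR model is defined earlier in the paper and is not reproduced here; a common way to express this idea, used here as an assumption, is the graph-smoothness penalty sum_ij W_ij ||z_i - z_j||^2, which equals 2 tr(Z L Z^T) with the graph Laplacian L = D - W. The sketch below builds heat-kernel weights W from pixel spectra (the kernel and `sigma` are hypothetical choices) and checks the two forms numerically.

```python
import numpy as np

def heat_kernel_weights(X, sigma=1.0):
    """W[i, j] = exp(-||x_i - x_j||^2 / (2 sigma^2)); X is bands x pixels."""
    sq = np.sum(X**2, axis=0)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    W = np.exp(-d2 / (2.0 * sigma**2))
    np.fill_diagonal(W, 0.0)  # no self-loops
    return W

def locality_penalty(Z, W):
    """Direct form sum_ij W_ij ||z_i - z_j||^2; z_i are the columns of Z.

    Builds an atoms x pixels x pixels array, so it is only for small examples.
    """
    diff = Z[:, :, None] - Z[:, None, :]
    return float(np.sum(W * np.sum(diff**2, axis=0)))

def laplacian_form(Z, W):
    """Equivalent closed form 2 * tr(Z L Z^T) with L = D - W (W symmetric)."""
    L = np.diag(W.sum(axis=1)) - W
    return float(2.0 * np.trace(Z @ L @ Z.T))
```

A large W_ij (spectrally similar pixels) makes any difference between z_i and z_j costly, which is how such a term encourages nearby pixels to share coefficients; identical coefficient columns give a penalty of zero.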

Author Contributions

Conceptualization, J.H., K.L. and X.L.; methodology, J.H., X.L. and K.L.; software, J.H.; validation, J.H., K.L. and X.L.; formal analysis, J.H.; investigation, J.H.; resources, J.H.; data curation, K.L.; writing—original draft preparation, J.H.; writing—review and editing, K.L.; visualization, J.H.; supervision, K.L.; project administration, J.H.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China under Grant 2018AAA0102201, the Basic Research Strengthening Program of China under Grant 2020-JCJQ-ZD-015-00-02, and in part by the National Natural Science Foundation of China under Grant 61871470.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ghamisi, P.; Yokoya, N.; Li, J.; Liao, W.; Liu, S.; Plaza, J.; Rasti, B.; Plaza, A. Advances in Hyperspectral Image and Signal Processing: A Comprehensive Overview of the State of the Art. IEEE Geosci. Remote Sens. Mag. 2017, 5, 37–78.

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised deep noise modeling for hyperspectral image change detection. Remote Sens. 2019, 11, 258.

- Chen, M.; Wang, Q.; Li, X. Discriminant analysis with graph learning for hyperspectral image classification. Remote Sens. 2018, 10, 836.

- Gao, Y.; Feng, Y.; Yu, X. Hyperspectral Target Detection with an Auxiliary Generative Adversarial Network. Remote Sens. 2021, 13, 4454.

- Su, H.; Wu, Z.; Zhang, H.; Du, Q. Hyperspectral Anomaly Detection: A Survey. IEEE Geosci. Remote Sens. Mag. 2021, 2–28.

- Gao, L.; Yang, B.; Du, Q.; Zhang, B. Adjusted spectral matched filter for target detection in hyperspectral imagery. Remote Sens. 2015, 7, 6611–6634.

- Matteoli, S.; Diani, M.; Corsini, G. A tutorial overview of anomaly detection in hyperspectral images. IEEE Aerosp. Electron. Syst. Mag. 2010, 25, 5–28.

- Eismann, M.T.; Stocker, A.D.; Nasrabadi, N.M. Automated hyperspectral cueing for civilian search and rescue. Proc. IEEE 2009, 97, 1031–1055.

- Theiler, J.; Ziemann, A.; Matteoli, S.; Diani, M. Spectral variability of remotely sensed target materials: Causes, models, and strategies for mitigation and robust exploitation. IEEE Geosci. Remote Sens. Mag. 2019, 7, 8–30.

- Kruse, F.A.; Boardman, J.W.; Huntington, J.F. Comparison of airborne hyperspectral data and EO-1 Hyperion for mineral mapping. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1388–1400.

- Transon, J.; d’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of hyperspectral earth observation applications from space in the sentinel-2 context. Remote Sens. 2018, 10, 157.

- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Guo, Q.; Zhang, B.; Ran, Q.; Gao, L.; Li, J.; Plaza, A. Weighted-RXD and Linear Filter-Based RXD: Improving Background Statistics Estimation for Anomaly Detection in Hyperspectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2351–2366.

- Taitano, Y.P.; Geier, B.A.; Bauer, K.W. A locally adaptable iterative RX detector. EURASIP J. Adv. Signal Process. 2010, 2010, 341908.

- Zhang, Y.; Fan, Y.; Xu, M. A Background-Purification-Based Framework for Anomaly Target Detection in Hyperspectral Imagery. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1238–1242.

- Kwon, H.; Nasrabadi, N.M. Kernel RX-algorithm: A nonlinear anomaly detector for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 388–397.

- Zhou, J.; Kwan, C.; Ayhan, B.; Eismann, M.T. A Novel Cluster Kernel RX Algorithm for Anomaly and Change Detection Using Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6497–6504.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Sparse representation for target detection in hyperspectral imagery. IEEE J. Sel. Top. Signal Process. 2011, 5, 629–640.

- Li, J.; Zhang, H.; Zhang, L.; Ma, L. Hyperspectral anomaly detection by the use of background joint sparse representation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2523–2533.

- Ma, D.; Yuan, Y.; Wang, Q. Hyperspectral anomaly detection via discriminative feature learning with multiple-dictionary sparse representation. Remote Sens. 2018, 10, 745.

- Li, W.; Du, Q. Collaborative representation for hyperspectral anomaly detection. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1463–1474.

- Hou, Z.; Li, W.; Gao, L.; Zhang, B.; Ma, P.; Sun, J. A Background Refinement Collaborative Representation Method with Saliency Weight for Hyperspectral Anomaly Detection. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2412–2415.

- Sun, W.; Liu, C.; Li, J.; Lai, Y.M.; Li, W. Low-rank and sparse matrix decomposition-based anomaly detection for hyperspectral imagery. J. Appl. Remote Sens. 2014, 8, 083641.

- Zhou, T.; Tao, D. Godec: Randomized low-rank & sparse matrix decomposition in noisy case. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 33–40.

- Zhang, Y.; Du, B.; Zhang, L.; Wang, S. A Low-Rank and Sparse Matrix Decomposition-Based Mahalanobis Distance Method for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1376–1389.

- Qu, Y.; Wang, W.; Guo, R.; Ayhan, B.; Kwan, C.; Vance, S.; Qi, H. Hyperspectral anomaly detection through spectral unmixing and dictionary-based low-rank decomposition. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4391–4405.

- Xu, Y.; Wu, Z.; Li, J.; Plaza, A.; Wei, Z. Anomaly detection in hyperspectral images based on low-rank and sparse representation. IEEE Trans. Geosci. Remote Sens. 2015, 54, 1990–2000.

- Song, S.; Yang, Y.; Zhou, H.; Chan, J.C.W. Hyperspectral Anomaly Detection via Graph Dictionary-Based Low Rank Decomposition with Texture Feature Extraction. Remote Sens. 2020, 12, 3966.

- Liu, G.; Lin, Z.; Yan, S.; Sun, J.; Yu, Y.; Ma, Y. Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 171–184.

- Wang, Q.; He, X.; Li, X. Locality and structure regularized low rank representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 911–923.

- Li, X.; Chen, M.; Nie, F.; Wang, Q. A multiview-based parameter free framework for group detection. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4147–4153.

- Lin, Z.; Chen, M.; Ma, Y. The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

- Bertsekas, D.P. Constrained Optimization and Lagrange Multiplier Methods; Academic Press: Cambridge, MA, USA, 2014.

- Li, H.; Feng, R.; Wang, L.; Zhong, Y.; Zhang, L.; Wei, L. Low-Rank Representation Incorporating Local Spatial Constraint for Hyperspectral Anomaly Detection. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021; pp. 4424–4427.

- Li, X.; Chen, M.; Nie, F.; Wang, Q. Locality adaptive discriminant analysis. In Proceedings of the IJCAI, Melbourne, Australia, 19–25 August 2017; pp. 2201–2207.

- Cox, M.A.; Cox, T.F. Multidimensional scaling. In Handbook of Data Visualization; Springer: Berlin/Heidelberg, Germany, 2008; pp. 315–347.

- Yin, H.F.; Wu, X.J.; Kittler, J. Face Recognition via Locality Constrained Low Rank Representation and Dictionary Learning. arXiv 2019, arXiv:1912.03145.

- Pan, L.; Li, H.C.; Chen, X.D. Locality constrained low-rank representation for hyperspectral image classification. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016; pp. 493–496.

- Yang, Y.; Zhang, J.; Song, S.; Liu, D. Hyperspectral anomaly detection via dictionary construction-based low-rank representation and adaptive weighting. Remote Sens. 2019, 11, 192.

- Yuan, Y.; Ma, D.; Wang, Q. Hyperspectral Anomaly Detection by Graph Pixel Selection. IEEE Trans. Cybern. 2016, 46, 3123–3134.

- Li, S.; Zhang, K.; Duan, P.; Kang, X. Hyperspectral anomaly detection with kernel isolation forest. IEEE Trans. Geosci. Remote Sens. 2019, 58, 319–329.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- Kerekes, J. Receiver operating characteristic curve confidence intervals and regions. IEEE Geosci. Remote Sens. Lett. 2008, 5, 251–255.

- Williamson, D.F.; Parker, R.A.; Kendrick, J.S. The box plot: A simple visual method to interpret data. Ann. Intern. Med. 1989, 110, 916–921.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).