Decision Fusion of Deep Learning and Shallow Learning for Marine Oil Spill Detection

Abstract

1. Introduction

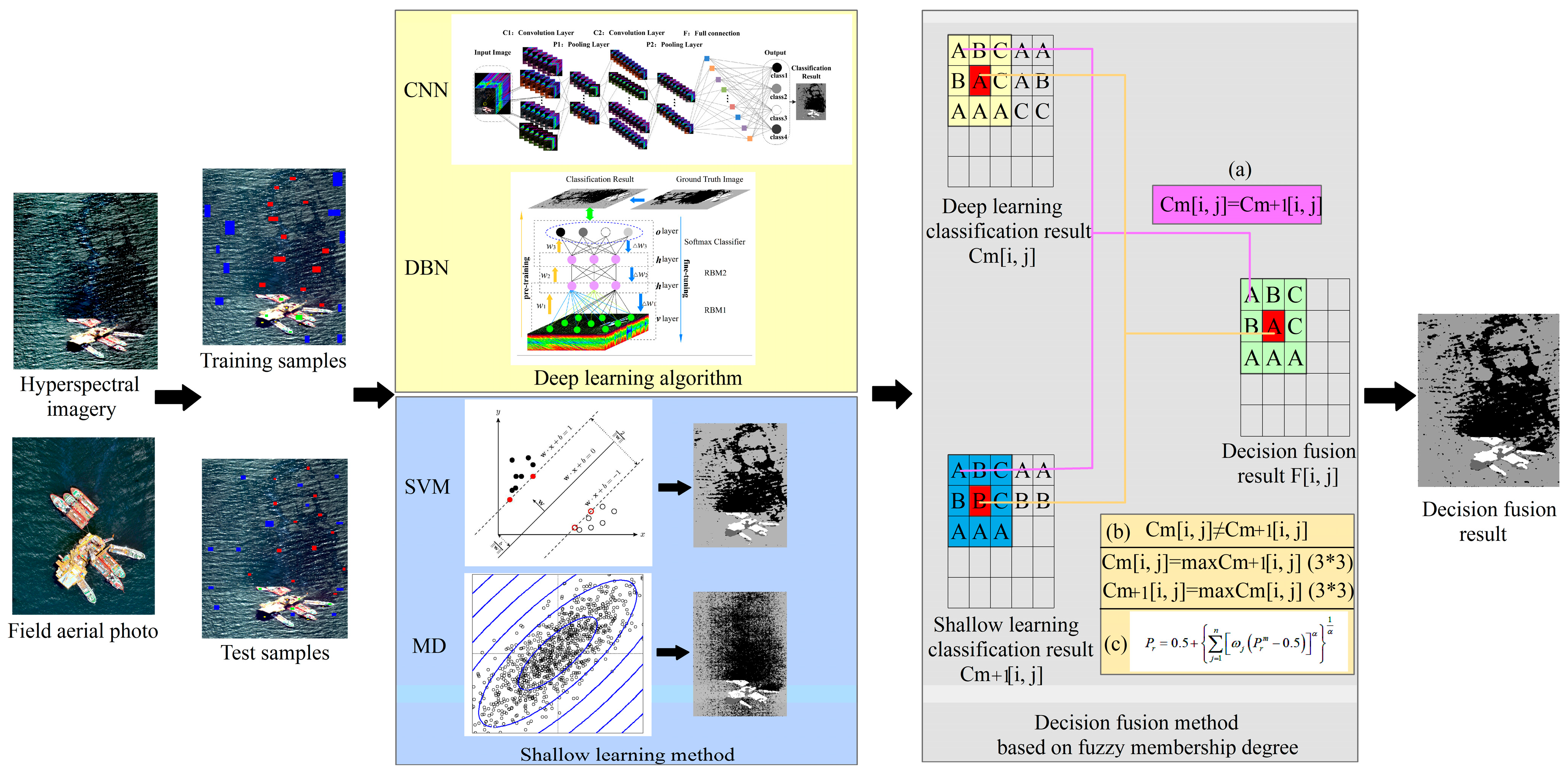

2. Data and Preprocessing

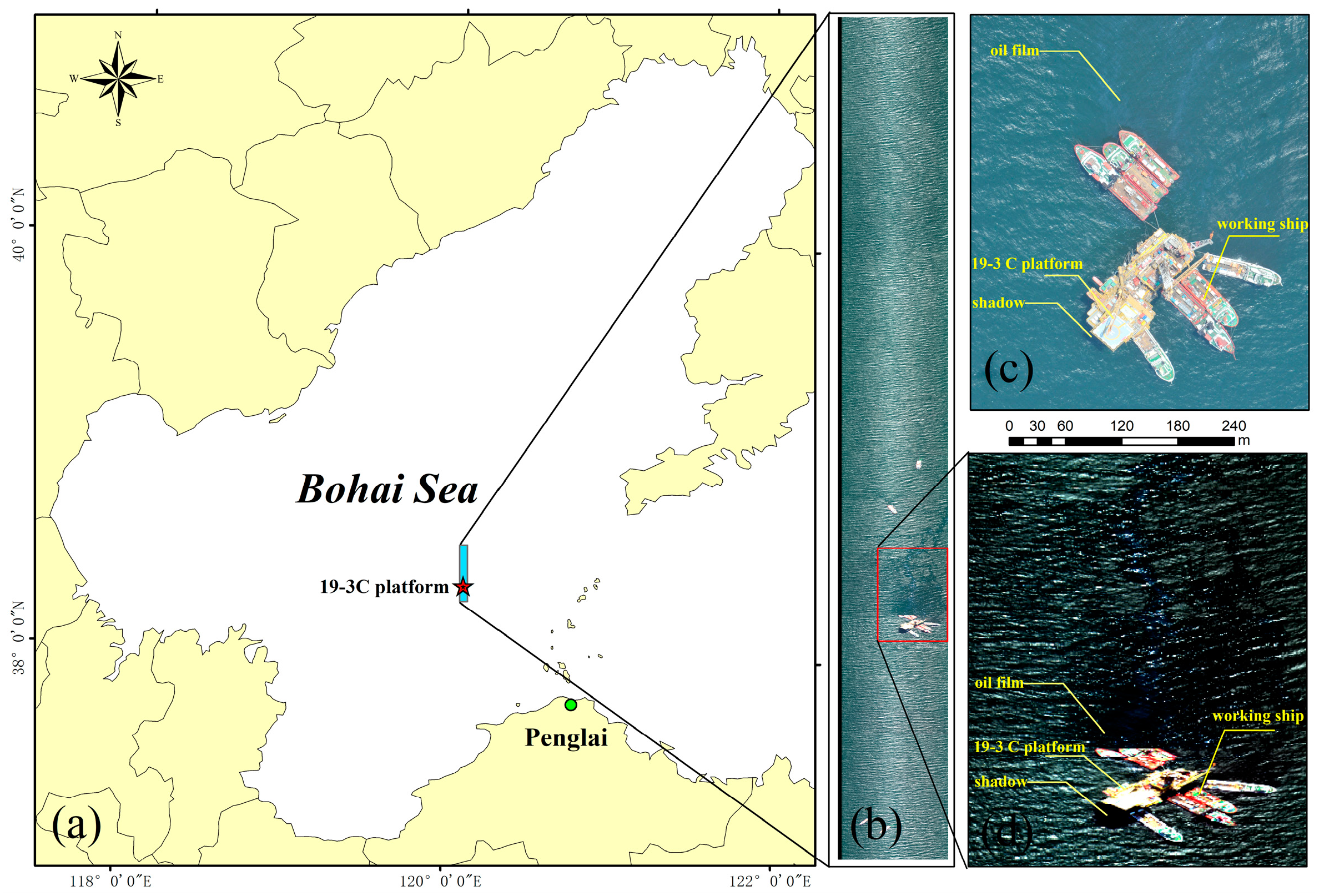

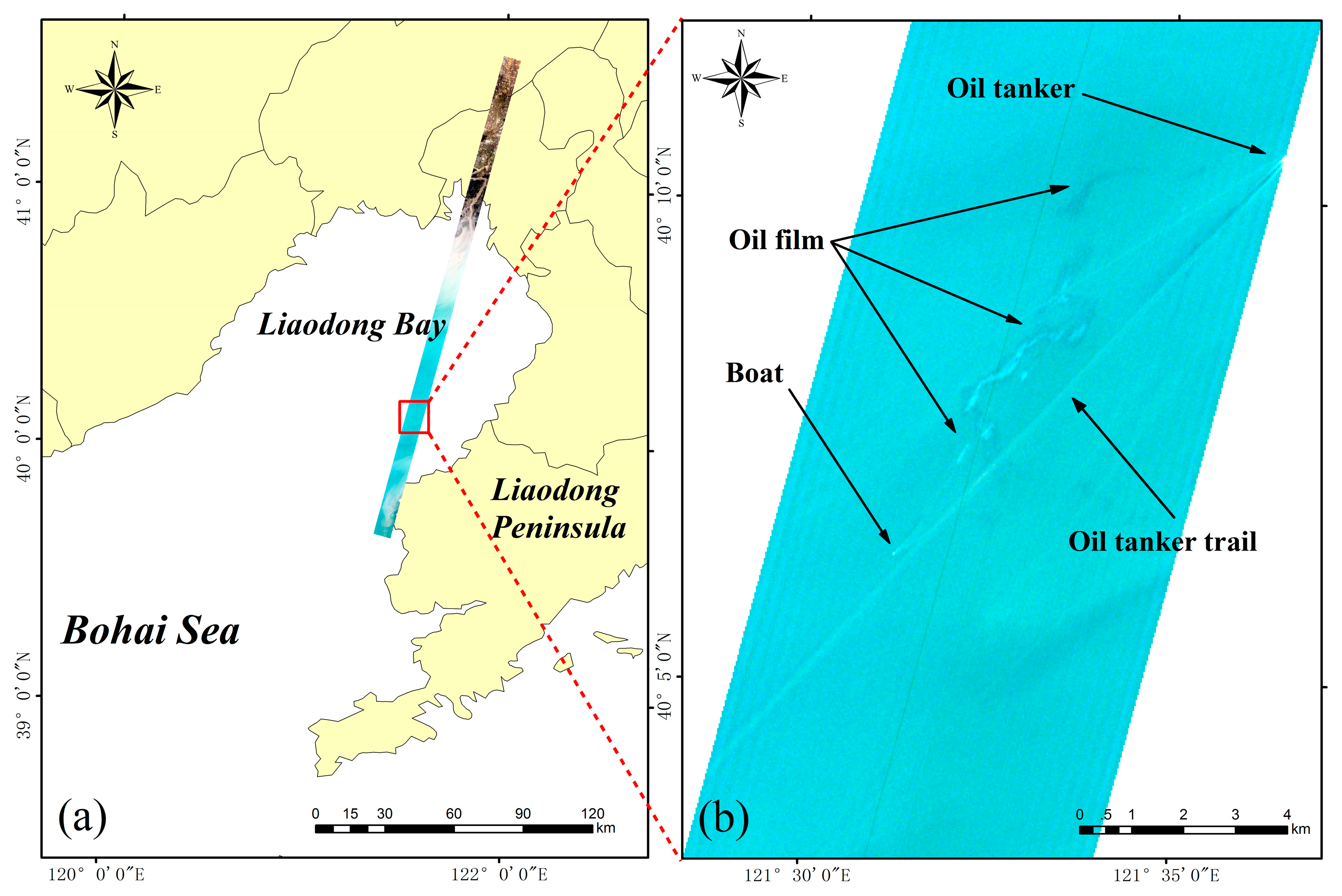

2.1. Accident Summary and AISA+ Hyperspectral Image

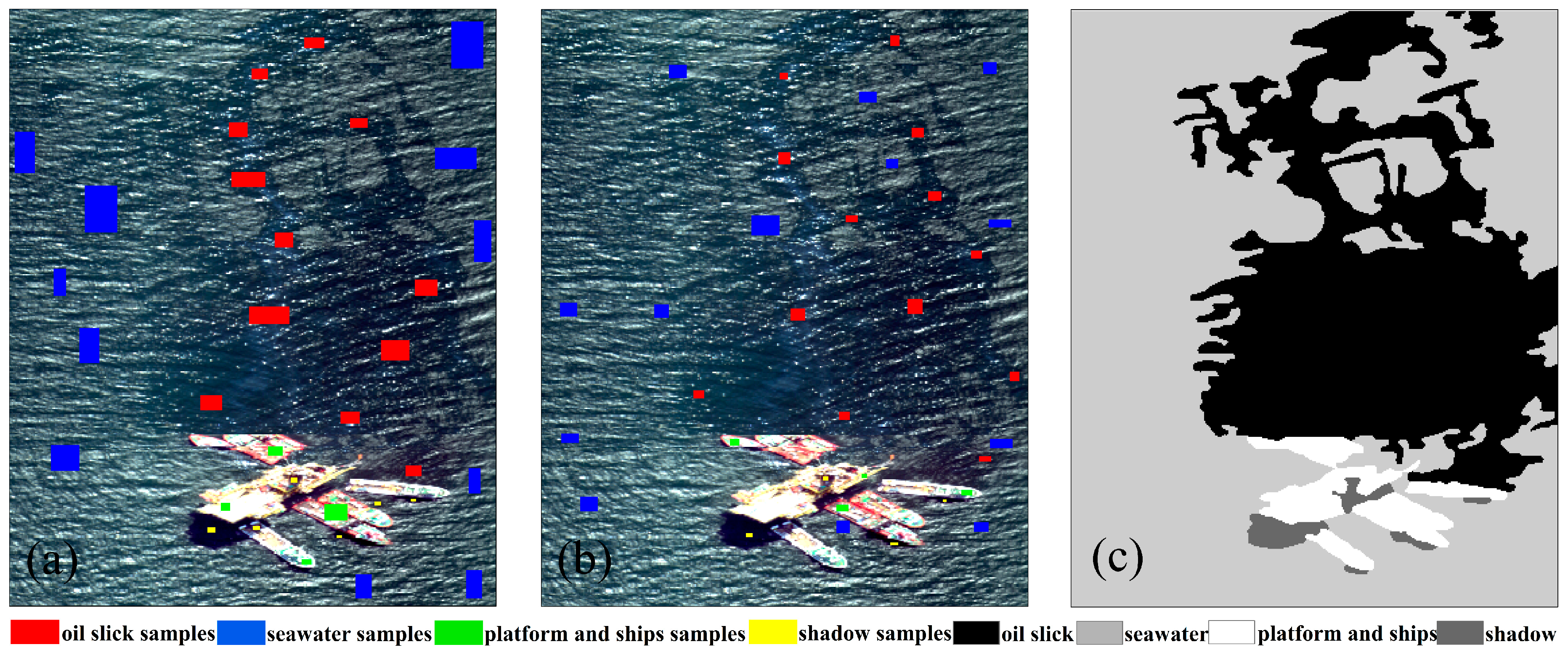

2.2. Sample Selection

3. Method

3.1. Multi-Scale Features Extraction Algorithm Based on Daubechies Wavelet

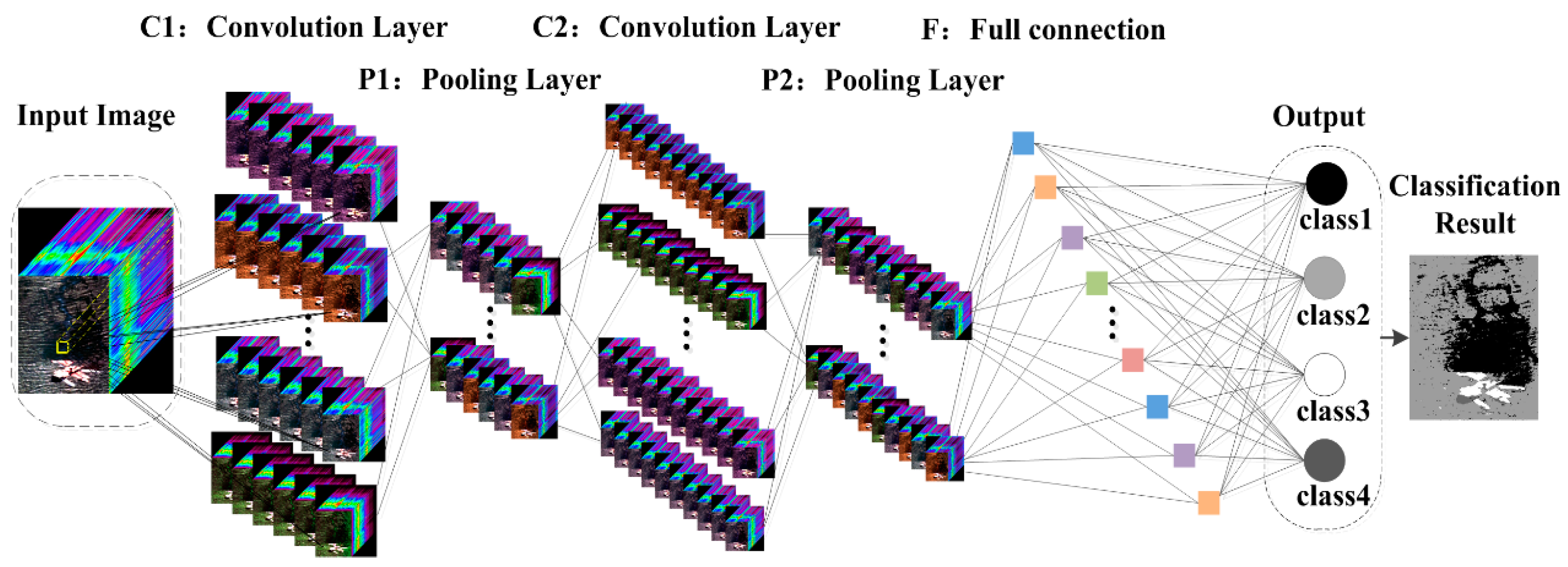

3.2. Deep Learning Oil Spill Detection Algorithms Based on Multi-Scale Features

3.2.1. Convolutional Neural Network (CNN)

- Convolutional Layer

- Pooling Layer

3.2.2. Deep Belief Network (DBN)

3.3. Classical Shallow Learning Algorithms

3.3.1. Support Vector Machine (SVM)

3.3.2. Mahalanobis Distance (MD)

3.4. Decision Fusion Method Based on Fuzzy Membership Degree

4. Results

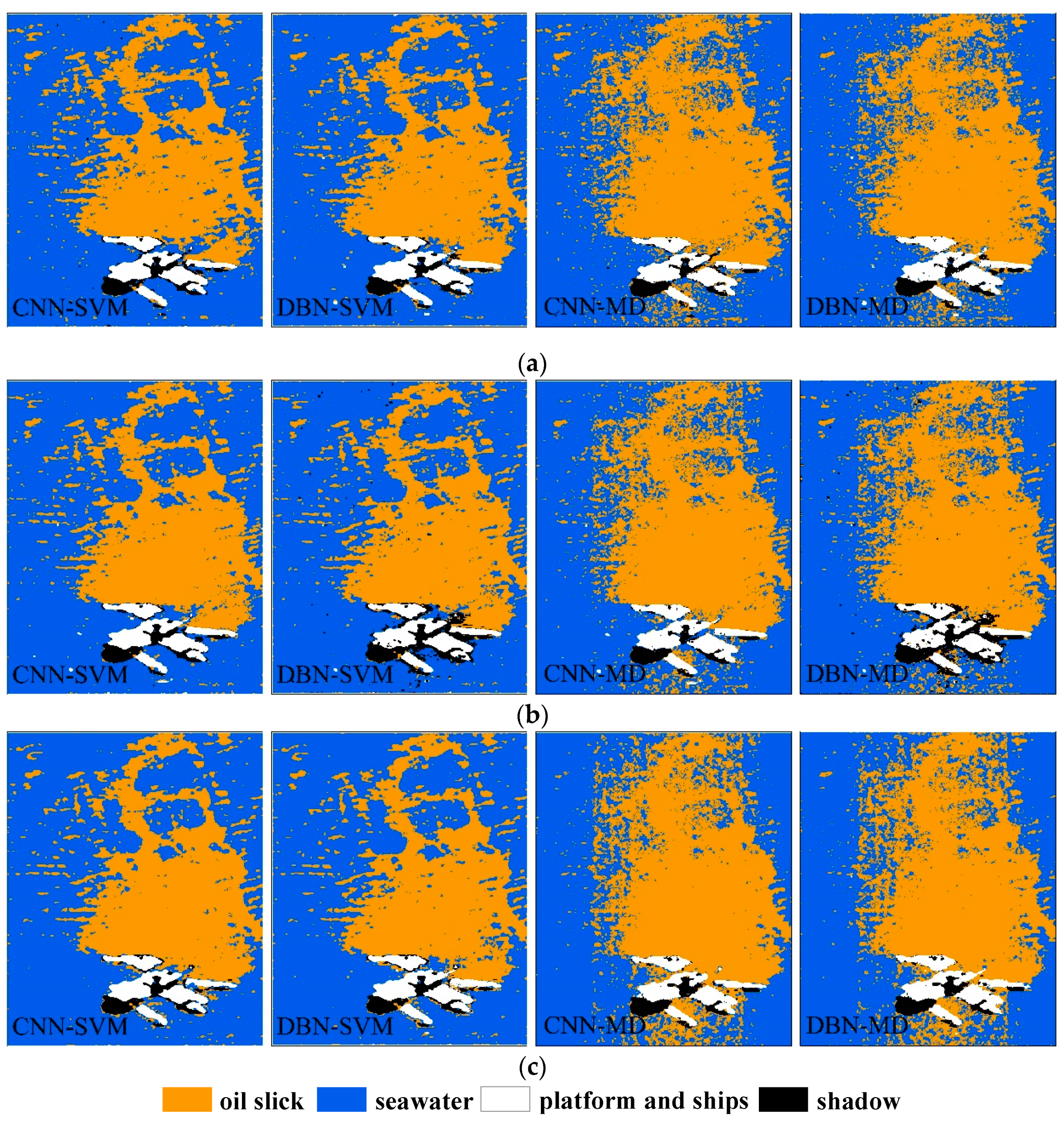

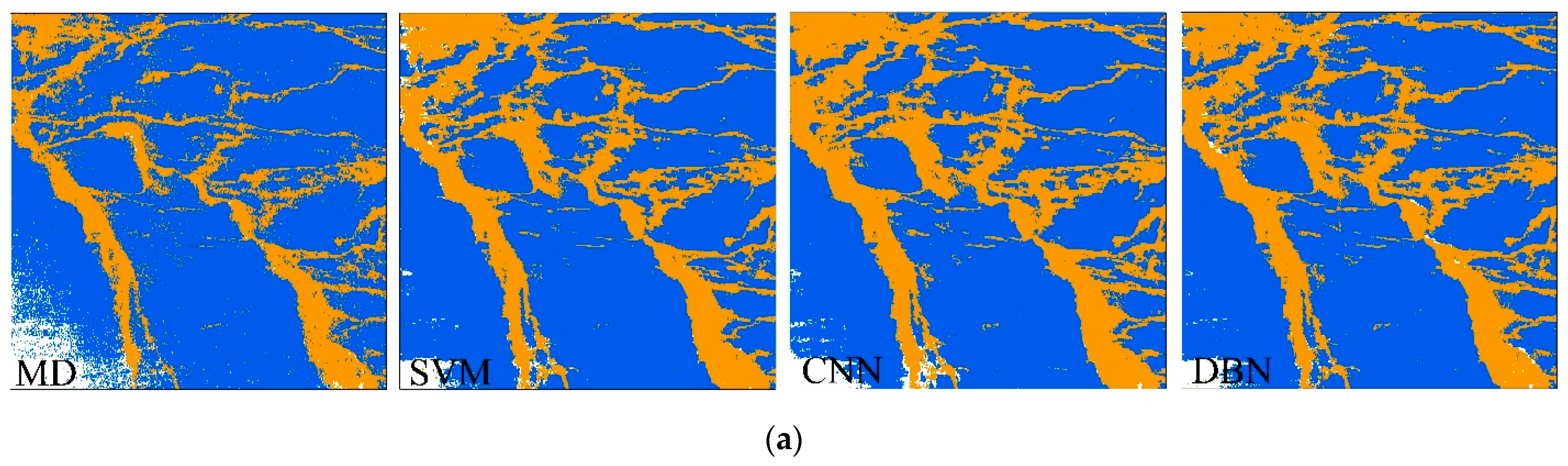

4.1. Oil Spill Detection Results of Single Classifier under Different Scales

4.2. Experimental Results of Decision Fusion

5. Discussion

5.1. Accuracy Evaluation of Oil Spill Detection

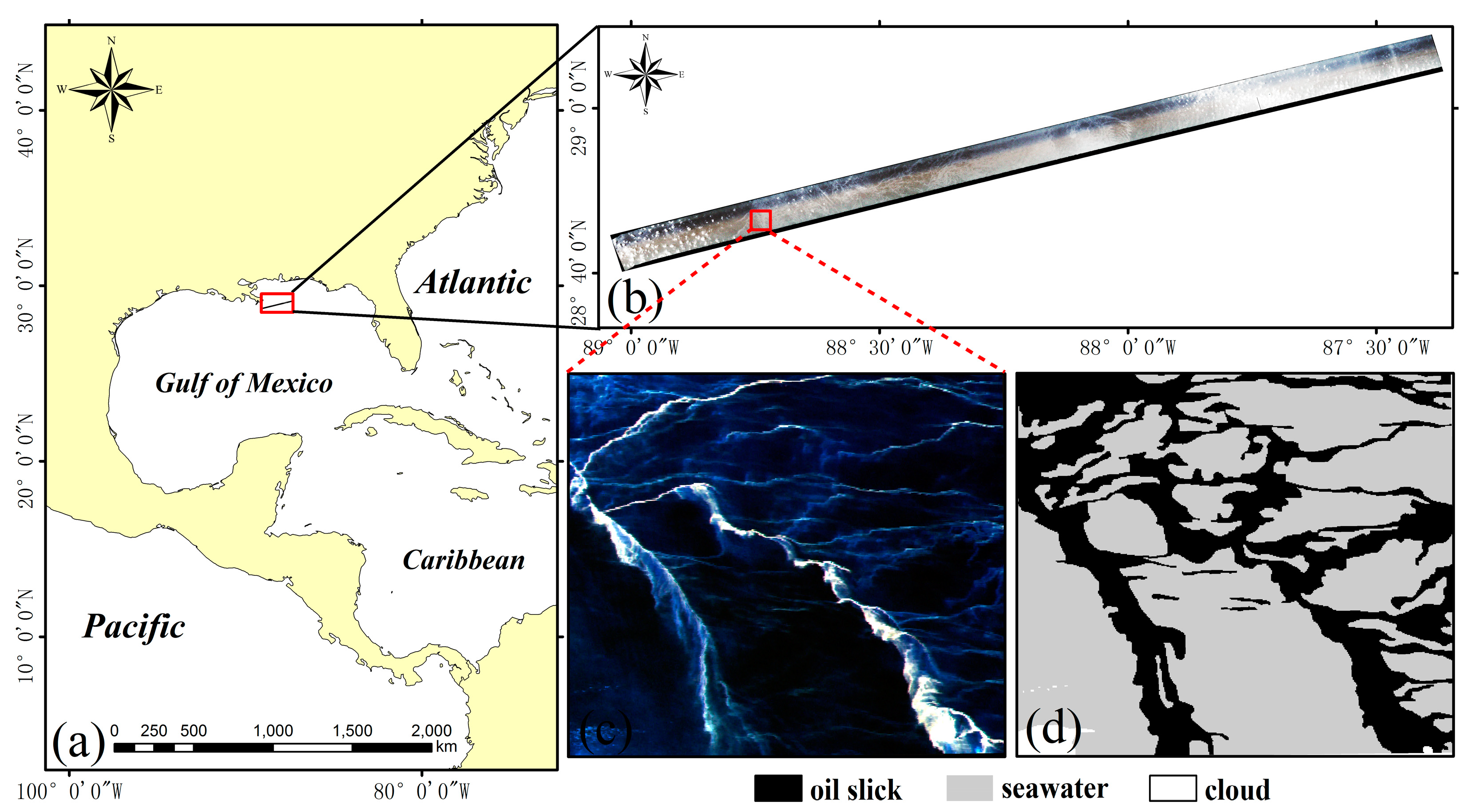

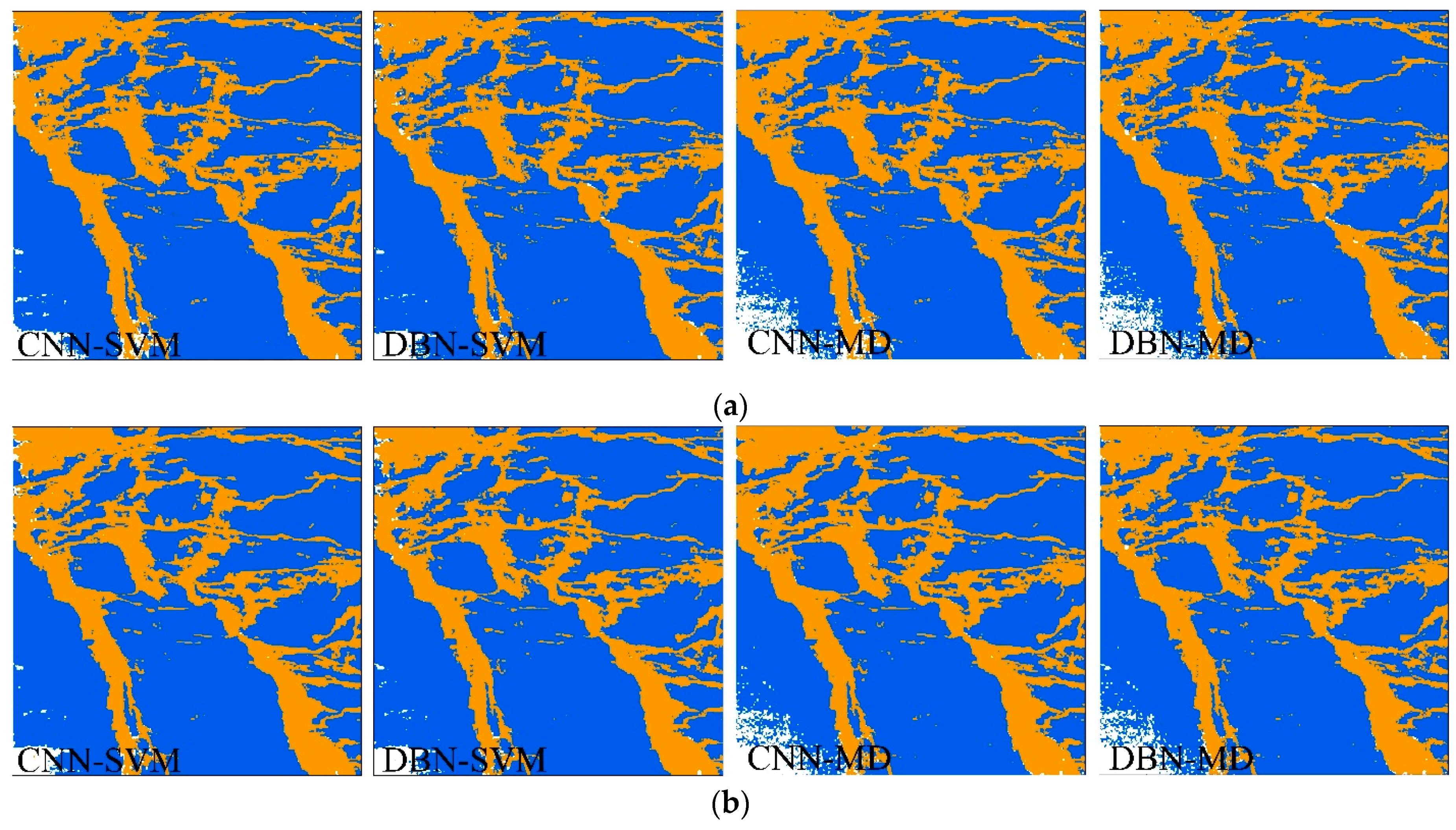

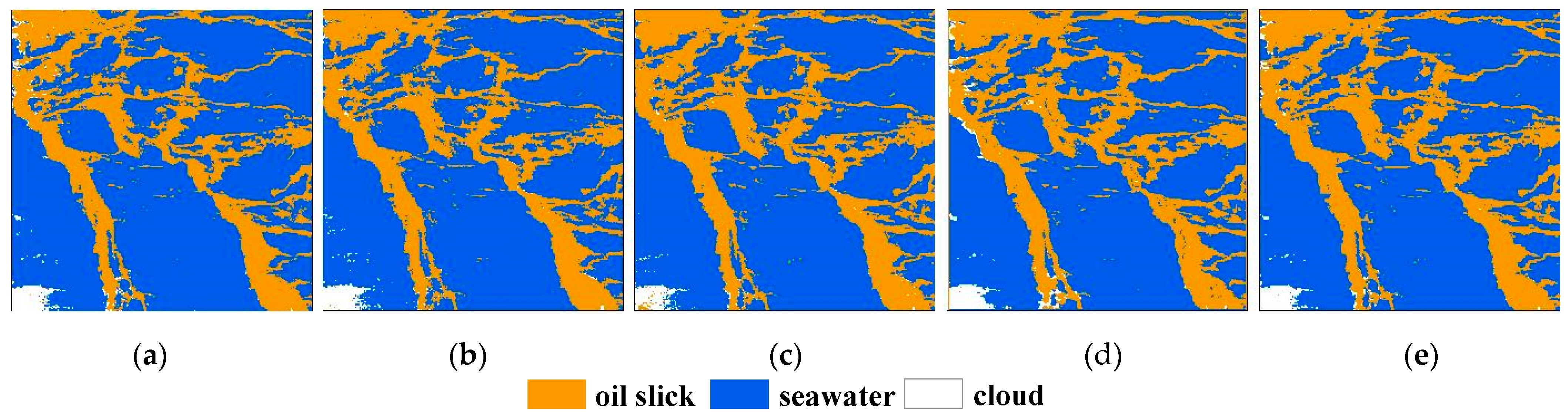

5.2. AVIRIS Hyperspectral Application of the Proposed Method

5.3. Satellite Hyperspectral Application of the Proposed Method

5.4. Comparison with Other Algorithms

5.5. Other Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Index |
|---|---|
| number of bands | 258 |
| spectral range | 400–1000 nm |
| spectral resolution | 5 nm |
| spatial resolution | 1.41 m@1 km |
| field of view | 39.7° |

| Accident | Data | Feature Types | Pixels Number of Training Samples | Pixels Number of Test Samples |
|---|---|---|---|---|
| well kick accident of platform C in Penglai 19-3 Oilfield | AISA+ hyperspectral image | oil slick | 2073 | 704 |
| | | sea water | 4381 | 1448 |
| | | platform and ships | 322 | 157 |
| | | shadow | 272 | 85 |

| Method | Scale | Correctly Detected Oil Spill Pixels | Oil Spill Pixels in Interpretation Map | Oil Spill Pixels Detected by the Classifier | Recall (%) | Precision (%) | F1 |
|---|---|---|---|---|---|---|---|
| MD | original scale | 41,890 | 53,822 | 66,752 | 77.83 | 62.75 | 0.6948 |
| MD | first-level scale | 42,646 | 53,822 | 65,397 | 79.24 | 65.21 | 0.7154 |
| MD | second-level scale | 41,263 | 53,822 | 68,561 | 76.67 | 60.18 | 0.6743 |
| SVM | original scale | 42,030 | 53,822 | 45,637 | 78.09 | 92.10 | 0.8452 |
| SVM | first-level scale | 42,785 | 53,822 | 46,694 | 79.49 | 91.63 | 0.8513 |
| SVM | second-level scale | 42,772 | 53,822 | 46,533 | 79.47 | 91.92 | 0.8524 |
| CNN | original scale | 45,872 | 53,822 | 54,252 | 85.23 | 84.55 | 0.8489 |
| CNN | first-level scale | 46,223 | 53,822 | 52,260 | 85.88 | 88.45 | 0.8715 |
| CNN | second-level scale | 45,208 | 53,822 | 51,707 | 84.00 | 87.43 | 0.8568 |
| DBN | original scale | 46,324 | 53,822 | 55,445 | 86.07 | 83.55 | 0.8479 |
| DBN | first-level scale | 45,103 | 53,822 | 50,638 | 83.80 | 89.07 | 0.8635 |
| DBN | second-level scale | 43,675 | 53,822 | 49,111 | 81.15 | 88.93 | 0.8486 |
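
The recall, precision, and F1 values in the table above appear to follow the standard definitions computed from the three pixel counts in each row. The sketch below is an illustration under that assumption, not code from the paper: it treats recall as correctly detected pixels over oil spill pixels in the interpretation map, and precision as correctly detected pixels over pixels the classifier labelled as oil spill. The function name `oil_spill_scores` is hypothetical.

```python
def oil_spill_scores(correct, reference, detected):
    """Return (recall %, precision %, F1) from three pixel counts.

    correct   -- oil spill pixels the classifier detected correctly
    reference -- oil spill pixels in the interpretation map
    detected  -- all pixels the classifier labelled as oil spill
    """
    recall = correct / reference      # share of true oil pixels that were found
    precision = correct / detected    # share of flagged pixels that are truly oil
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return round(100 * recall, 2), round(100 * precision, 2), round(f1, 4)


# MD classifier at the original scale, using the counts from the table
print(oil_spill_scores(41890, 53822, 66752))  # (77.83, 62.75, 0.6948)
```

Under this assumption the printed values match the tabulated row for MD at the original scale, which suggests the remaining rows can be checked the same way.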

| Method | Scale | Correctly Detected Oil Spill Pixels | Oil Spill Pixels in Interpretation Map | Oil Spill Pixels Detected by the Classifier | Recall (%) | Precision (%) | F1 |
|---|---|---|---|---|---|---|---|
| CNN-SVM | original scale | 46,370 | 53,822 | 52,529 | 86.15 | 88.28 | 0.8720 |
| CNN-SVM | first-level scale | 47,030 | 53,822 | 53,049 | 87.38 | 88.65 | 0.8801 |
| CNN-SVM | second-level scale | 46,917 | 53,822 | 54,083 | 87.17 | 86.75 | 0.8696 |
| DBN-SVM | original scale | 45,949 | 53,822 | 52,111 | 85.37 | 88.18 | 0.8675 |
| DBN-SVM | first-level scale | 46,193 | 53,822 | 51,904 | 85.83 | 89.00 | 0.8738 |
| DBN-SVM | second-level scale | 45,470 | 53,822 | 51,573 | 84.48 | 88.17 | 0.8628 |
| CNN-MD | original scale | 49,304 | 53,822 | 69,164 | 91.61 | 71.29 | 0.8018 |
| CNN-MD | first-level scale | 49,331 | 53,822 | 67,863 | 91.66 | 72.69 | 0.8108 |
| CNN-MD | second-level scale | 48,817 | 53,822 | 72,353 | 90.70 | 67.47 | 0.7738 |
| DBN-MD | original scale | 48,588 | 53,822 | 67,100 | 90.28 | 72.41 | 0.8036 |
| DBN-MD | first-level scale | 48,968 | 53,822 | 66,297 | 90.98 | 73.86 | 0.8153 |
| DBN-MD | second-level scale | 48,482 | 53,822 | 71,330 | 90.08 | 67.97 | 0.7748 |

| No. | Feature Types | Pixels Number of Training Samples | Pixels Number of Test Samples |
|---|---|---|---|
| 1 | oil slick | 1008 | 54,598 |
| 2 | seawater | 1282 | 103,598 |
| 3 | cloud | 246 | 1804 |

| Method | Scale | Correctly Detected Oil Spill Pixels | Oil Spill Pixels in Interpretation Map | Oil Spill Pixels Detected by the Classifier | Recall (%) | Precision (%) | F1 |
|---|---|---|---|---|---|---|---|
| MD | original scale | 34,439 | 54,598 | 36,755 | 63.08 | 93.70 | 0.7540 |
| MD | first-level scale | 36,638 | 54,598 | 39,222 | 67.11 | 93.41 | 0.7810 |
| MD | second-level scale | 37,702 | 54,598 | 41,744 | 69.05 | 90.32 | 0.7827 |
| SVM | original scale | 44,102 | 54,598 | 46,732 | 80.78 | 94.37 | 0.8705 |
| SVM | first-level scale | 44,001 | 54,598 | 46,493 | 80.59 | 94.64 | 0.8706 |
| SVM | second-level scale | 43,058 | 54,598 | 45,579 | 78.86 | 94.47 | 0.8596 |
| CNN | original scale | 47,247 | 54,598 | 52,086 | 86.54 | 90.71 | 0.8857 |
| CNN | first-level scale | 47,242 | 54,598 | 51,520 | 86.53 | 91.70 | 0.8904 |
| CNN | second-level scale | 47,361 | 54,598 | 52,699 | 86.74 | 89.87 | 0.8828 |
| DBN | original scale | 45,725 | 54,598 | 49,873 | 83.75 | 91.68 | 0.8754 |
| DBN | first-level scale | 47,498 | 54,598 | 52,260 | 87.00 | 90.89 | 0.8890 |
| DBN | second-level scale | 45,168 | 54,598 | 48,086 | 82.73 | 93.93 | 0.8797 |

| Method | Scale | Correctly Detected Oil Spill Pixels | Oil Spill Pixels in Interpretation Map | Oil Spill Pixels Detected by the Classifier | Recall (%) | Precision (%) | F1 |
|---|---|---|---|---|---|---|---|
| CNN-SVM | original scale | 47,209 | 54,598 | 51,315 | 86.47 | 92.00 | 0.8915 |
| CNN-SVM | first-level scale | 47,093 | 54,598 | 50,912 | 86.25 | 92.50 | 0.8927 |
| CNN-SVM | second-level scale | 47,323 | 54,598 | 52,428 | 86.68 | 90.26 | 0.8843 |
| DBN-SVM | original scale | 46,245 | 54,598 | 49,772 | 84.70 | 92.91 | 0.8862 |
| DBN-SVM | first-level scale | 47,346 | 54,598 | 52,031 | 86.72 | 91.00 | 0.8881 |
| DBN-SVM | second-level scale | 45,650 | 54,598 | 48,612 | 83.61 | 93.91 | 0.8846 |
| CNN-MD | original scale | 46,823 | 54,598 | 50,933 | 85.76 | 91.93 | 0.8874 |
| CNN-MD | first-level scale | 47,376 | 54,598 | 51,465 | 86.77 | 92.05 | 0.8934 |
| CNN-MD | second-level scale | 47,989 | 54,598 | 54,107 | 87.90 | 88.69 | 0.8829 |
| DBN-MD | original scale | 45,236 | 54,598 | 48,566 | 82.85 | 93.14 | 0.8770 |
| DBN-MD | first-level scale | 47,903 | 54,598 | 53,527 | 87.74 | 89.49 | 0.8861 |
| DBN-MD | second-level scale | 45,439 | 54,598 | 48,398 | 83.22 | 93.89 | 0.8823 |

| Sensor Type | Sensor | Spectral Range (nm) | Number of Bands | Spectral Resolution (nm) | Spatial Resolution (m) | Swath (km) | Platform |
|---|---|---|---|---|---|---|---|
| Airborne | AVIRIS | 350–2500 | 224 | 10 | 0.89 m@1 km | - | - |
| Airborne | AISA | 400–1000 | 258 | 5 | 1.41 m@1 km | - | - |
| Airborne | CASI | 380–1050 | 288 | 3.5 | 1.42 m@1 km | - | - |
| Airborne | HyMap | 400–2500 | 128 | VNIR: 15, SWIR: 20 | 2.25 m@1 km | - | - |
| Spaceborne | Hyperion | 400–2500 | 242 | 10 | 30 | 7.7 | EO-1 |
| Spaceborne | CHRIS | 400–1050 | 18/62 | 5–12 | 17/34 | 14 | PROBA |
| Spaceborne | AHSI | 400–2500 | 330 | VNIR: 5, SWIR: 10 | 30 | 60 | GF-5 |
| Spaceborne | HSI | 450–950 | 220 | 5 | 100 | 50 | HJ-1A |
| Spaceborne | HICO | 360–1080 | 128 | 5.7 | 90 | 42 | ISS |
| Spaceborne | PRISMA | 400–2500 | 250 | <12 | 30 | 30 | PRISMA |
| Spaceborne | HIS | 420–2450 | 262 | VNIR: 6.5, SWIR: 10 | 30 | 30 | EnMAP |

| Data | Evaluation Criterion | SVM | DBN | 1D-CNN | MRF-CNN | The Proposed Algorithm |
|---|---|---|---|---|---|---|
| AISA+ hyperspectral image | F1 | 0.8524 | 0.8635 | 0.8715 | 0.8672 | 0.8801 |
| AISA+ hyperspectral image | OA (%) | 89.90 | 89.78 | 90.61 | 91.5 | 91.93 |
| AVIRIS hyperspectral image | F1 | 0.8706 | 0.8890 | 0.8904 | 0.8668 | 0.8927 |
| AVIRIS hyperspectral image | OA (%) | 91.51 | 92.23 | 92.47 | 90.76 | 92.86 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, J.; Ma, Y.; Hu, Y.; Jiang, Z.; Zhang, J.; Wan, J.; Li, Z. Decision Fusion of Deep Learning and Shallow Learning for Marine Oil Spill Detection. Remote Sens. 2022, 14, 666. https://doi.org/10.3390/rs14030666
