Lightweight Multimechanism Deep Feature Enhancement Network for Infrared Small-Target Detection

Abstract

1. Introduction

- The target occupies only a small proportion of the whole infrared image (generally less than 0.12%) and lacks color and fine structural information (e.g., contour, shape, and texture). Therefore, blindly deepening the network to strengthen small-target feature extraction is not advisable. Doing so increases the computational burden and rapidly enlarges the model file.

- Traditional loss functions such as Soft-IoU, combined with binary label images (background 0, target 1), essentially impose a fixed threshold of 0.5. However, infrared small targets have few features, sit in complex backgrounds, and vary widely in appearance. As a result, the network may be unable to push target outputs toward 1 and background outputs toward 0. For example, the background output may be 0.2 and the target output only 0.5. The loss should therefore depend on the relative difference between background and target, simulating the effect of a step function.

- Unlike large-target segmentation, in infrared small-target segmentation the target features may be submerged in clutter or disappear altogether in the deep layers of the network. Moreover, we believe that shallow features may play a more important role in recognizing small infrared targets. Therefore, targets must be detected in low-level feature maps while high-level semantic features are enhanced.

- Infrared small-target segmentation is used primarily in military applications, which demand low computational cost and small model files. Therefore, the network must be lightweight.
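The fixed-threshold problem in the second point can be shown numerically. The sketch below is a minimal NumPy illustration, not the authors' implementation. It assumes that the normalized loss is a per-image min-max rescaling of the sigmoid output followed by the standard Soft-IoU. The helper names (`soft_iou_loss`, `min_max_normalize`, `normalized_soft_iou_loss`) are hypothetical. A training version would use the equivalent differentiable tensor operations of a deep-learning framework.

```python
import numpy as np

def soft_iou_loss(pred, target, eps=1e-6):
    # Soft-IoU: 1 - sum(p * t) / (sum(p) + sum(t) - sum(p * t))
    inter = np.sum(pred * target)
    union = np.sum(pred) + np.sum(target) - inter
    return 1.0 - (inter + eps) / (union + eps)

def min_max_normalize(pred, eps=1e-6):
    # Assumed normalization: rescale one image's probabilities with its own
    # minimum and maximum, so only relative differences between pixels matter.
    p_min, p_max = pred.min(), pred.max()
    return (pred - p_min) / (p_max - p_min + eps)

def normalized_soft_iou_loss(pred, target):
    return soft_iou_loss(min_max_normalize(pred), target)

# Example from the text: background output 0.2, target output only 0.5.
pred = np.full((4, 4), 0.2)
pred[1, 2] = 0.5
target = np.zeros((4, 4))
target[1, 2] = 1.0
raw_loss = soft_iou_loss(pred, target)              # about 0.875
norm_loss = normalized_soft_iou_loss(pred, target)  # close to 0
print(raw_loss, norm_loss)
```

In this example the target is already the brightest pixel, yet the raw Soft-IoU loss stays high because it compares absolute values with the 0/1 labels. After normalization, the same map is nearly optimal. This is the relative, step-like behavior argued for above.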

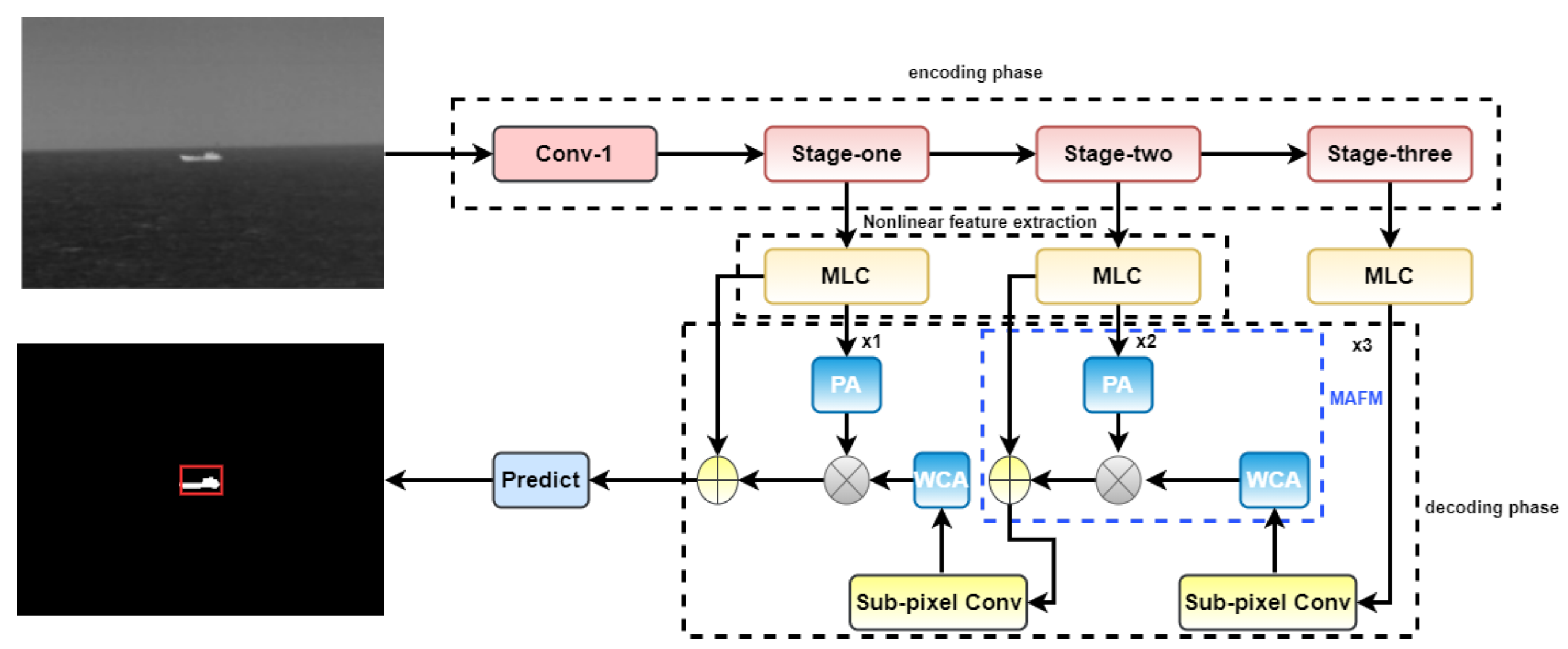

- In this paper, ResNet20 is selected as the backbone network within an encoder–decoder structure. The shortcut connections in ResNet20 fuse features of different resolutions to a certain extent. This helps prevent infrared small targets from vanishing in deep layers and keeps the gradient stable. To avoid unnecessary floating-point operations, we follow ALCNet [19] and reduce the four-stage structure of the traditional ResNet to three stages. As a result, the computational cost is kept at about 4.3 GFLOPs and the model size at 0.37 M, which makes the network suitable for embedding in aviation equipment.

- A multimechanism attention collaborative fusion module (MAFM) is proposed, comprising a weak-target channel attention mechanism and a pixel attention mechanism. Most existing modules pursue increasingly complex attention mechanisms, which are not suitable for infrared small-target detection. Lightweight attention mechanisms such as the efficient channel attention network (ECA-Net) [21] cannot effectively attend to small targets because infrared small targets lack distinctive features. To address these problems, MAFM performs multimechanism collaborative feature fusion under the guidance of attention and improves target detection in two ways:
  - First, global max pooling and a sigmoid function enhance the semantic features of small targets. A channel convolution without dimensionality reduction then extracts the channel attention, which effectively retains deep semantic information.
  - Second, guided by deep semantic features, pixel attention retains and enhances the detail features of infrared small targets. The low-level features are modulated by element-wise multiplication, so that the network dynamically selects the relevant low-level features.

- Loss functions used in general segmentation networks are no longer suitable for end-to-end detection methods that combine model- and data-driven approaches. Therefore, we propose a normalized loss function. It normalizes the network output using the maximum and minimum predicted probabilities, so that the loss focuses on the relative values between pixels. This improves the convergence efficiency of the network, which is also a key factor in making such combined model- and data-driven end-to-end methods efficient.

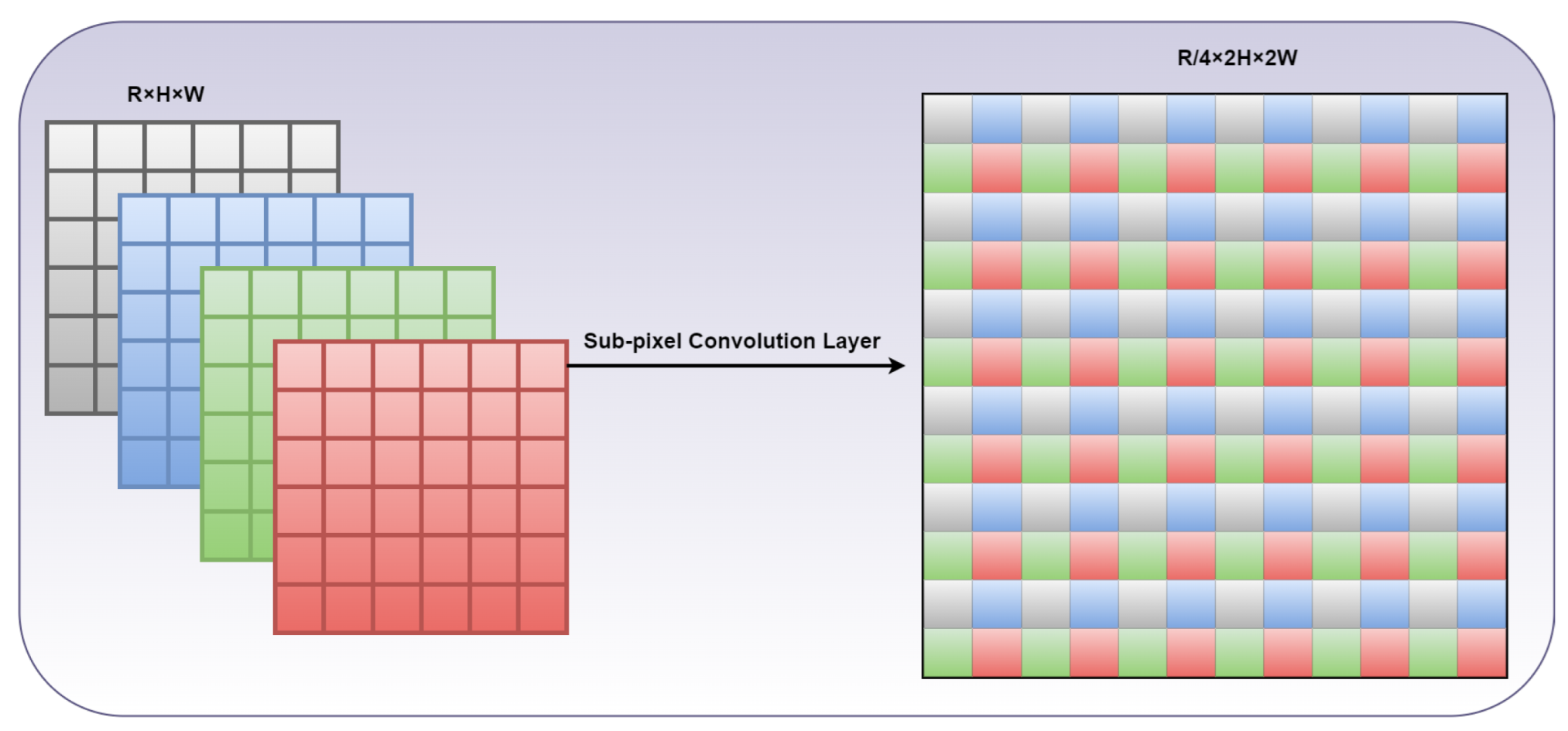

- Infrared small targets contain few pixels, which makes effective high-resolution features difficult to extract. Subpixel convolution upsampling is therefore used to improve feature utilization. It converts low-resolution feature representations into identifiable high-resolution representations, which improves the detection accuracy of the network.
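The two attention branches of MAFM described above can be outlined as a simplified, hypothetical NumPy sketch. It rests on several assumptions not stated in the text:

- the deep feature map `high` has already been upsampled to the size of the shallow map `low`, and both have the same number of channels;
- the uncompressed channel convolution is modeled as an ECA-style 1-D convolution across channels;
- the pixel attention is a 1×1 convolution followed by a sigmoid;
- the two branches are fused by addition.

All function names are illustrative, and the weights are random placeholders rather than learned parameters.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(high, kernel):
    # Global max pooling keeps each channel's strongest response, so a few
    # bright target pixels are not averaged away.
    pooled = high.max(axis=(1, 2))                               # (C,)
    # ECA-style 1-D convolution across neighboring channels; there is no
    # channel reduction, so every channel keeps its own weight.
    weights = sigmoid(np.convolve(pooled, kernel, mode="same"))  # (C,)
    return high * weights[:, None, None]

def pixel_attention(high, low, w):
    # A 1x1 convolution (weighted sum over channels) of the deep features
    # gives one gate per pixel, which modulates the shallow features.
    gate = sigmoid(np.tensordot(w, high, axes=(0, 0)))          # (H, W)
    return low * gate[None, :, :]

def mafm_sketch(high, low, kernel, w):
    # Assumed fusion: channel-enhanced deep branch + pixel-modulated shallow branch.
    return channel_attention(high, kernel) + pixel_attention(high, low, w)

rng = np.random.default_rng(0)
C, H, W = 16, 8, 8
high = rng.standard_normal((C, H, W))
low = rng.standard_normal((C, H, W))
out = mafm_sketch(high, low, kernel=rng.standard_normal(3), w=rng.standard_normal(C) / C)
print(out.shape)  # (16, 8, 8)
```

Both gates pass through a sigmoid, so they only rescale features by factors in (0, 1). This keeps the module cheap, because it adds one 1-D kernel and one 1×1 projection rather than a stack of fully connected layers.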

2. Related Work

2.1. Single-Frame Infrared Small-Target Detection

2.2. Multiscale Feature Fusion Guided by Attention Mechanism

2.3. Loss Function

3. Proposed Method

3.1. Network Structure

3.2. Multimechanism Attention Collaborative Fusion Module (MAFM)

3.2.1. Weak Target Channel Attention Mechanism

3.2.2. Pixel Attention Mechanism

3.3. Subpixel Convolution Upsampling

3.4. Normalized Loss Function

4. Experiments and Results

4.1. Experimental Settings

- Dataset: We adopted the open-source infrared small-target dataset SIRST and the IDTAT dataset from the National University of Defense Technology.
  - SIRST contains raw images and pixel-level labels, comprising 427 images and 480 instances drawn from hundreds of real videos. We divided it into training, validation, and test sets containing 50%, 20%, and 30% of the data, respectively. Examples are shown in Figure 6. Panels (c), (g), (h), (i), and (j) show that the images are generally dark and that the small targets lie in complex, blurred backgrounds with clouds, Rayleigh noise, and other clutter.
  - From IDTAT, we selected folders 6–12, 15, 17, and 21, which have relatively complex backgrounds, yielding 5993 images. Examples are shown in Figure 7; the scenes are diverse and highly complex. This dataset was divided into training and test sets at a ratio of 8:2. To make it compatible with the network's evaluation metrics, we annotated the selected images at the pixel level. Experiments show that the relabeled IDTAT can be used effectively with the network.

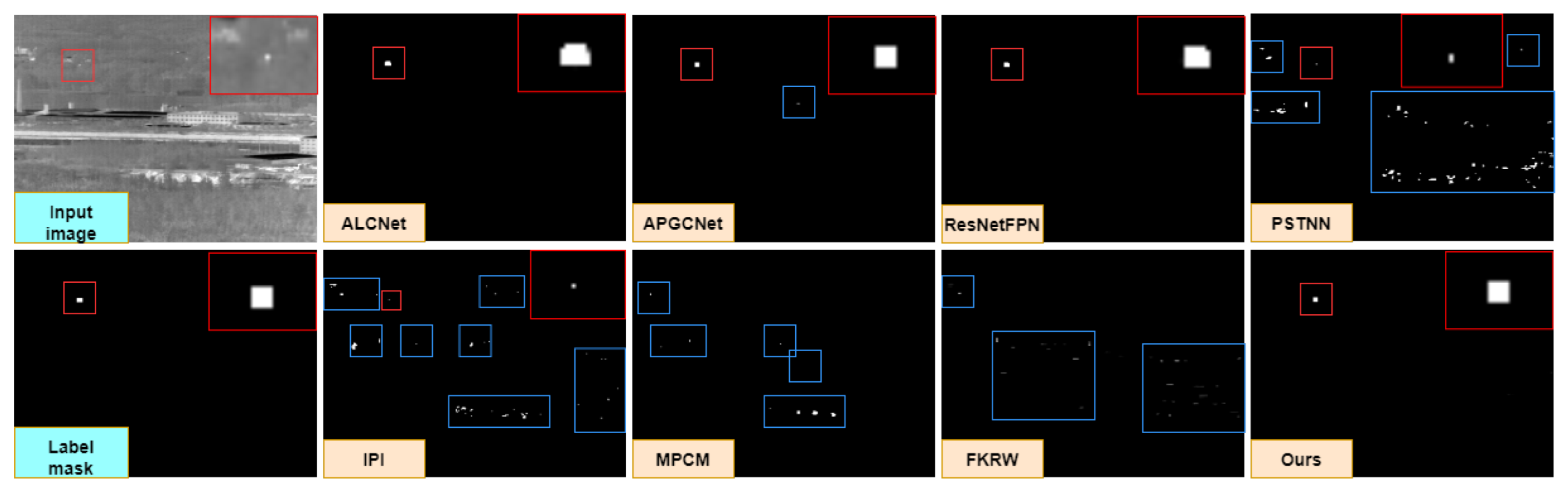

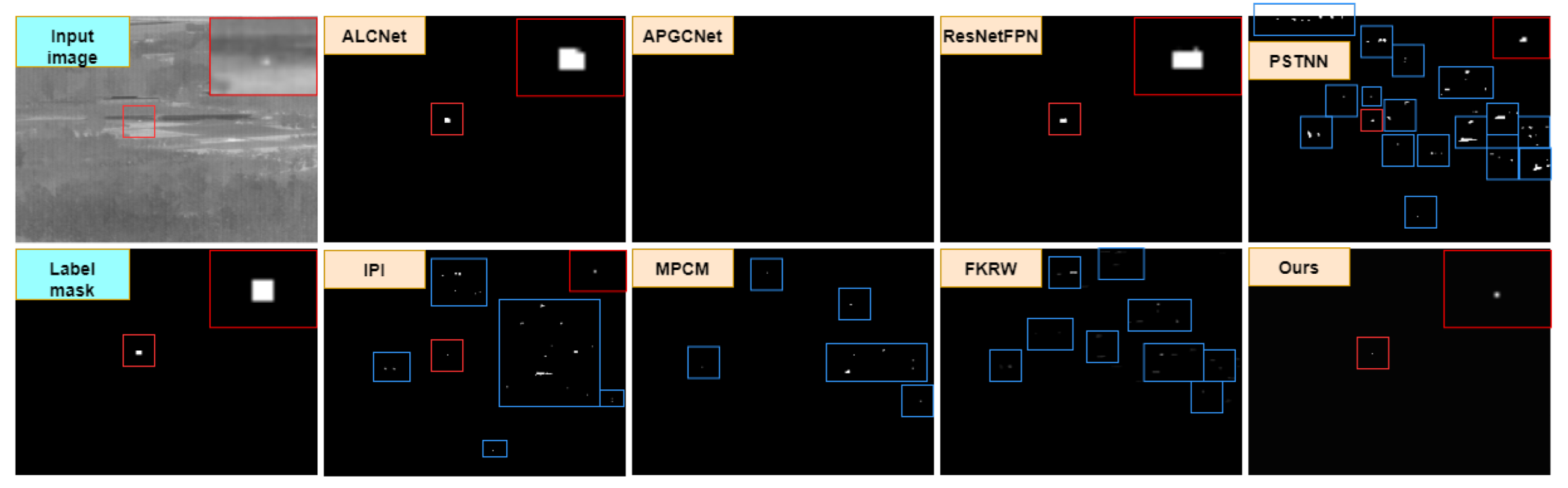

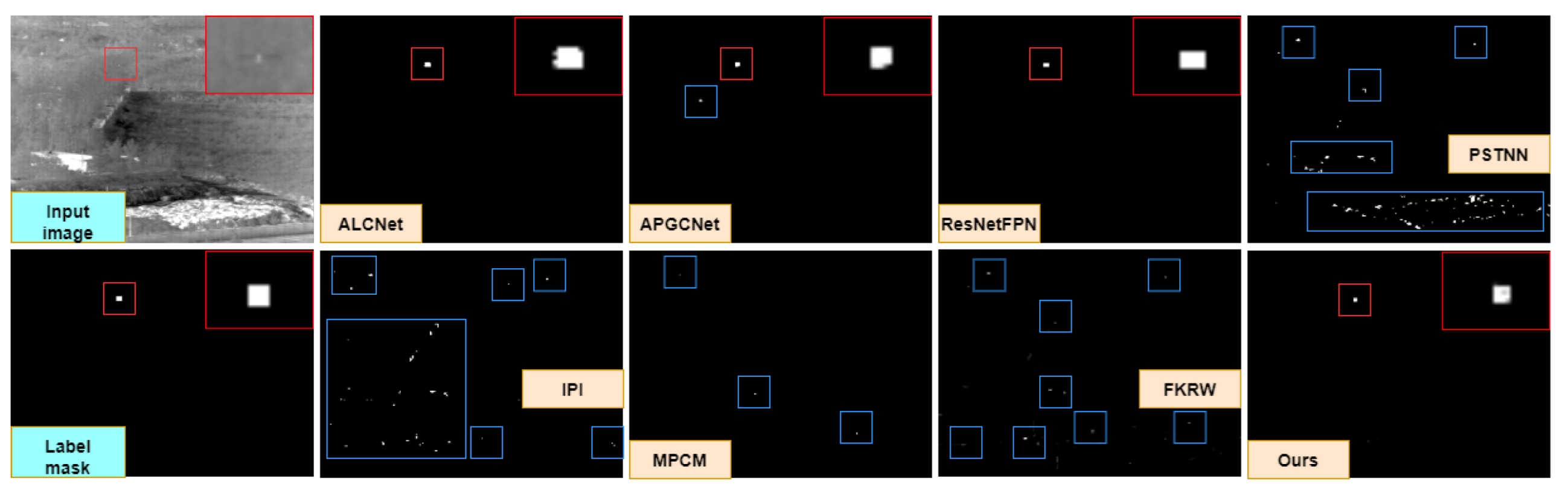
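The split proportions given in the dataset description translate into concrete set sizes as shown below. The text does not state how images were assigned to each set, so this sketch assumes a seeded random shuffle. The function name `split_indices` is hypothetical. Rounding each part down and giving the remainder to the last set is one of several reasonable conventions.

```python
import random

def split_indices(n, fractions, seed=0):
    """Shuffle range(n) and cut it into consecutive parts of the given fractions.
    Each part except the last is rounded down; the last part takes the remainder."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    parts, start = [], 0
    for frac in fractions[:-1]:
        size = int(n * frac)
        parts.append(idx[start:start + size])
        start += size
    parts.append(idx[start:])
    return parts

# SIRST: 427 images split 50% / 20% / 30%.
train, val, test_set = split_indices(427, [0.5, 0.2, 0.3])
print(len(train), len(val), len(test_set))  # 213 85 129
# IDTAT subset: 5993 images split 8:2.
idtat_train, idtat_test = split_indices(5993, [0.8, 0.2])
print(len(idtat_train), len(idtat_test))    # 4794 1199
```

These counts follow from the rounding convention above. The paper itself does not report per-set image counts.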

- Comparison Network: To demonstrate the effectiveness of MDFENet, we compared it with other model- and data-driven methods.
  - Data-driven methods: we selected FPN [31], the attentional local contrast network (ALCNet) [19], and the attention-guided pyramid context network (AGPCNet) [17]. These networks inspired our design, and our new models and ideas build on them. Using them as baselines therefore gives a fair comparison and shows the advantages of the proposed models and ideas. These methods use the same optimizer and other hyperparameters as the proposed method.
  - Model-driven methods: we selected two classical algorithms, MPCM [10] and IPI [14], and two recent ones, PSTNN [18] and FKRW [26]. MPCM and IPI are classical and widely influential in infrared small-target detection. PSTNN and FKRW are advanced model-driven methods from recent years, so comparing against them better demonstrates the superiority of our method.

- Evaluation Metrics: The proposed algorithm performs segmentation-based target detection and outputs a segmented binary image. We therefore do not consider traditional infrared small-target evaluation indicators, such as the background suppression factor and signal-to-noise ratio, to be applicable. Instead, we used FLOPs, Params, IoU, and the normalized intersection over union (nIoU) to objectively evaluate the proposed network:
  - FLOPs and Params are the key metrics for evaluating network speed and compactness.
  - IoU is an important metric in pixel-level segmentation tasks for evaluating the shape-detection ability of an algorithm.
  - nIoU is an infrared small-target detection metric proposed in [19] that better balances the evaluation between model- and data-driven methods. It is defined as

$$\mathrm{nIoU} = \frac{1}{N}\sum_{i=1}^{N}\frac{TP[i]}{T[i] + P[i] - TP[i]},$$

where N is the number of images and T, P, and TP denote the numbers of true (ground-truth target), positive (predicted target), and true-positive pixels, respectively.

- Runtime and Implementation Details: Table 2 shows the computational complexity and running time of the deep-learning methods.
  - Deep-learning methods: ResNetFPN, ALCNet, AGPCNet, and the proposed MDFENet were trained on a laptop with an Nvidia GeForce RTX 2080 Super GPU (8 GB memory). The code was written in Python 3.8 with the PyTorch 1.8 framework using the PyCharm 2021 IDE. For all of these methods, we used the Adam optimizer with a weight decay coefficient of 0.0001, an initial learning rate of 0.001, and a poly learning-rate decay schedule. The batch size was set to 8, and the loss function for each method was the normalized Soft-IoU. Training ran for 300 epochs on SIRST and 40 epochs on IDTAT.
  - Model-driven methods: MPCM, IPI, PSTNN, and FKRW were implemented in MATLAB R2021b and run on a laptop with a 2.20 GHz Intel(R) Core(TM) i7-10870H CPU and 16 GB of main memory. The specific parameter settings of each method are listed in Table 3.
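A minimal NumPy sketch of the two segmentation metrics follows. It assumes that IoU is pooled over the whole test set, whereas nIoU averages the per-image ratio TP/(T + P - TP) over N images, following the nIoU definition of [19]. The function name `iou_and_niou` and the small `eps` guard against an empty union are illustrative assumptions.

```python
import numpy as np

def iou_and_niou(preds, labels, eps=1e-9):
    """Return (IoU, nIoU) for lists of binary H x W prediction/label masks."""
    tp_sum = t_sum = p_sum = 0
    per_image = []
    for pred, label in zip(preds, labels):
        pred, label = pred.astype(bool), label.astype(bool)
        tp = int(np.logical_and(pred, label).sum())  # true-positive pixels
        t = int(label.sum())                         # ground-truth target pixels
        p = int(pred.sum())                          # predicted target pixels
        tp_sum += tp
        t_sum += t
        p_sum += p
        per_image.append(tp / (t + p - tp + eps))
    iou = tp_sum / (t_sum + p_sum - tp_sum + eps)    # pooled over the whole set
    niou = float(np.mean(per_image))                 # averaged over N images
    return iou, niou

label_a = np.zeros((4, 4), dtype=bool)
label_a[0, :2] = True                # two target pixels
pred_a = np.zeros((4, 4), dtype=bool)
pred_a[0, 0] = pred_a[1, 0] = True   # one hit, one false alarm
label_b = np.zeros((4, 4), dtype=bool)
label_b[2, 2] = True
pred_b = label_b.copy()              # perfect detection
iou, niou = iou_and_niou([pred_a, pred_b], [label_a, label_b])
print(round(iou, 3), round(niou, 3))  # 0.5 0.667
```

Because nIoU weights each image equally, a small target that is missed costs as much as a large one. This is why it complements the pixel-pooled IoU for small-target evaluation.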

4.2. Comparison to State-of-the-Art Approaches

4.3. Ablation Study

- Influence of the multimechanism attention collaborative fusion module. Tables 5 and 6 present the different feature fusion methods and strategies we adopted, and Figure 15 shows the structure of each fusion method. We compared four modules:
  - the bottom-up attentional module (BLAM);
  - asymmetric contextual modulation (ACM);
  - the multimechanism top-down attention module (MTAM), which integrates the channel attention mechanism [21];
  - the multimechanism reverse attention module (MRAM), which also integrates the channel attention mechanism [21].

  We replaced MAFM with each of these modules to verify its effectiveness. Tables 5–7 report the results on the SIRST and IDTAT datasets. The proposed MAFM achieves the best results in most settings.

- Influence of subpixel convolutional upsampling. A high-resolution prediction map can effectively improve infrared small-target detection. In this study, we adopted subpixel convolution, originally used for super-resolution reconstruction [41], to increase the resolution of the prediction map, and compared it with linear interpolation. As shown in Table 7, the network with subpixel convolution performs better. Linear interpolation uses only spatial information, whereas in a neural network the correlations and interactions between channels cannot be ignored. Subpixel convolution exploits these relations by first expanding the channel dimension and then rearranging the channels into image space. Inter-channel information is thus used effectively, giving better results than traditional upsampling.

- Influence of the normalized loss function. In the conventional IoU-based loss, the error is computed directly between the sigmoid output of the network and the label image, which does not accurately reflect the error. The normalized probability function greatly reduces redundant information in the image, suppresses the background, and enhances the target, enabling the network to learn more accurate information. As shown in Table 7, the performance of the network improved markedly after the normalized loss was adopted.
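Subpixel convolution, as discussed in the ablation above, consists of a convolution that expands the channel dimension by r² followed by a periodic rearrangement of those channels into space. The NumPy sketch below is illustrative only. It models the convolution as a 1×1 convolution with random weights, since the kernel size used in MDFENet is not stated here, and the helper names are hypothetical. The rearrangement uses the same channel ordering as PyTorch's `torch.nn.PixelShuffle`.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) into (C, H*r, W*r).
    Output pixel (c, h*r + i, w*r + j) comes from input channel c*r*r + i*r + j at (h, w)."""
    crr, h, w = x.shape
    if crr % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = crr // (r * r)
    return x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(c, h * r, w * r)

def subpixel_upsample(features, weights, r):
    """Hypothetical subpixel upsampling: a 1x1 convolution expands C_in channels
    to C_out*r*r (weights has shape (C_out*r*r, C_in)), then pixel_shuffle."""
    expanded = np.tensordot(weights, features, axes=(1, 0))  # (C_out*r*r, H, W)
    return pixel_shuffle(expanded, r)

x = np.arange(16).reshape(4, 2, 2)
print(pixel_shuffle(x, 2)[0, 0])  # [0 4 1 5]

rng = np.random.default_rng(0)
up = subpixel_upsample(rng.standard_normal((8, 5, 5)), rng.standard_normal((12, 8)), r=2)
print(up.shape)                   # (3, 10, 10)
```

Unlike linear interpolation, each upsampled pixel here is a learned combination of all input channels. This inter-channel use of information is what the ablation credits for the improvement.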

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wu, D.; Cao, L.; Zhou, P.; Li, N.; Li, Y.; Wang, D. Infrared Small-Target Detection Based on Radiation Characteristics with a Multimodal Feature Fusion Network. Remote Sens. 2022, 14, 3570.

2. Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Small infrared target detection based on weighted local difference measure. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4204–4214.

3. Sun, Y.; Yang, J.; An, W. Infrared dim and small target detection via multiple subspace learning and spatial-temporal patch-tensor model. IEEE Trans. Geosci. Remote Sens. 2020, 1, 6.

4. Wu, Z.; Fuller, N.; Theriault, D.; Betke, M. A thermal infrared video benchmark for visual analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 201–208.

5. Li, Y.; Zhang, Y. Robust infrared small target detection using local steering kernel reconstruction. Pattern Recognit. 2018, 77, 113–125.

6. Huang, S.; Peng, Z.; Wang, Z.; Wang, X.; Li, M. Infrared Small Target Detection by Density Peaks Searching and Maximum-Gray Region Growing. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1919–1923.

7. Huang, S.; Liu, Y.; He, Y.; Zhang, T.; Peng, Z. Structure-Adaptive Clutter Suppression for Infrared Small Target Detection: Chain-Growth Filtering. Remote Sens. 2020, 12, 47.

8. Bai, X.; Zhou, F. Analysis of new top-hat transformation and the application for infrared dim small target detection. Pattern Recognit. 2010, 43, 2145–2156.

9. Chen, C.L.P.; Li, H.; Wei, Y.; Xia, T.; Tang, Y.Y. A Local Contrast Method for Small Infrared Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 574–581.

10. Wei, Y.; You, X.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recognit. 2016, 58, 216–226.

11. Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Zhao, Q.; Zhang, X.; Li, N. Infrared Small Target Detection Based on the Weighted Strengthened Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1670–1674.

12. Han, J.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A Robust Infrared Small Target Detection Algorithm Based on Human Visual System. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

13. Qin, Y.; Li, B. Effective Infrared Small Target Detection Utilizing a Novel Local Contrast Method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

14. Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A.G. Infrared Patch-Image Model for Small Target Detection in a Single Image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

15. Dai, Y.; Wu, Y. Reweighted Infrared Patch-Tensor Model With Both Non-Local and Local Priors for Single-Frame Small Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3752–3767.

16. Hu, Y.; Ma, Y.; Pan, Z.; Liu, Y. Infrared Dim and Small Target Detection from Complex Scenes via Multi-Frame Spatial–Temporal Patch-Tensor Model. Remote Sens. 2022, 14, 2234.

17. Zhang, T.; Cao, S.; Pu, T.; Peng, Z. AGPCNet: Attention-Guided Pyramid Context Networks for Infrared Small Target Detection. arXiv 2021, arXiv:2111.03580.

18. Zhang, L.; Peng, Z. Infrared Small Target Detection Based on Partial Sum of the Tensor Nuclear Norm. Remote Sens. 2019, 11, 382.

19. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Attentional Local Contrast Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

20. Zuo, Z.; Tong, X.; Wei, J.; Su, S.; Wu, P.; Guo, R.; Sun, B. AFFPN: Attention Fusion Feature Pyramid Network for Small Infrared Target Detection. Remote Sens. 2022, 14, 3412.

21. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

22. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric contextual modulation for infrared small target detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 950–959.

23. Hui, B.; Song, Z.; Fan, H.; Zhong, P.; Hu, W.; Zhang, X.; Lin, J.; Su, H.; Jin, W.; Zhang, Y.; et al. A dataset for infrared detection and tracking of dim-small aircraft targets under ground/air background. China Sci. Data 2020, 5, 291–302.

24. Guo, C.; Qi, M.; Zhang, L. Spatio-temporal Saliency detection using phase spectrum of quaternion fourier transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

25. Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-Mean and Max-Median Filters for Detection of Small-Targets. Signal Data Process. Small Targets 1999, 3809, 74–83.

26. Qin, Y.; Bruzzone, L.; Gao, C.; Li, B. Infrared small target detection based on facet kernel and random walker. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7104–7118.

27. Liu, M.; Du, H.-Y.; Zhao, Y.-J.; Dong, L.-Q.; Hui, M. Image small target detection based on deep learning with SNR controlled sample generation. Curr. Trends Comput. Sci. Mech. Autom. 2018, 1, 211–220.

28. Wang, H.; Zhou, L.; Wang, L. Miss Detection vs. False Alarm: Adversarial Learning for Small Object Segmentation in Infrared Images. In Proceedings of the International Conference on Computer Vision 2019, Seoul, Republic of Korea, 27 October–2 November 2019.

29. Zhao, B.; Wang, C.; Fu, Q.; Han, Z. A Novel Pattern for Infrared Small Target Detection with Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4481–4492.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

31. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

32. Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid attention network for semantic segmentation. arXiv 2018, arXiv:1805.10180.

33. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

34. Woo, S.; Park, J.; Lee, J.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

35. Liu, J.; Hou, Q.; Cheng, M.; Wang, C.; Feng, J. Improving convolutional networks with self-calibrated convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10096–10105.

36. Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An Advanced Object Detection Network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

37. Rezatofighi, H.; Tsoi, N.; Gwak, J.Y.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 658–666.

38. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. arXiv 2019, arXiv:1911.08287.

39. Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In International Symposium on Visual Computing; Springer: Cham, Switzerland, 2016; pp. 234–244.

40. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. Proc. AAAI Conf. Artif. Intell. 2020, 34, 11908–11915.

41. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. arXiv 2016, arXiv:1609.05158.

42. Pang, D.; Shan, T.; Ma, P.; Li, W.; Liu, S.; Tao, R. A Novel Spatiotemporal Saliency Method for Low-Altitude Slow Small Infrared Target Detection. IEEE Geosci. Remote Sens. Lett. 2021, 99, 7000705.

43. Liu, H.K.; Zhang, L.; Huang, H. Small Target Detection in Infrared Videos Based on Spatio-Temporal Tensor Model. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8689–8700.

44. Du, J.; Lu, H.; Zhang, L.; Hu, M.; Chen, S.; Deng, Y.; Zhang, Y. A spatial-temporal feature-based detection framework for infrared dim small target. IEEE Trans. Geosci. Remote Sens. 2021, 60, 3000412.

45. Pang, D.; Shan, T.; Li, W.; Ma, P.; Tao, R.; Ma, Y. Facet derivative-based multidirectional edge awareness and spatial–temporal tensor model for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5001015.

| Stage | Output | Backbone |
|---|---|---|
| conv-1 | | |
| Stage-one | | |
| Stage-two | | |
| Stage-three | | |

| Metric | ResNetFPN | ALCNet | AGPCNet | MDFENet |
|---|---|---|---|---|
| FLOPs | 16.105 G | 4.336 G | 50.602 G | 4.348 G |
| Params | 0.546 M | 0.372 M | 12.360 M | 0.383 M |
| Running time | 0.0261 | 0.0223 | 0.0462 | 0.0231 |

| Method | Hyperparameter Settings |
|---|---|
| MPCM | N = 1, 3,, 9 |
| IPI | PS: 50 × 50, stride: 10, |
| PSTNN | patchSize = 40, slideStep = 40, lambdaL = 0.7 |
| FKRW | K = 4, p = 6, = 200, window size = 11 × 11 |

| Dataset | Metric | PSTNN | FKRW | MPCM | IPI | ResNetFPN | ALCNet | AGPCNet | MDFENet |
|---|---|---|---|---|---|---|---|---|---|
| SIRST | IoU | 0.596 | 0.205 | 0.493 | 0.720 | 0.757 | 0.762 | 0.799 | 0.809 |
| | nIoU | 0.577 | 0.253 | 0.351 | 0.650 | 0.751 | 0.761 | 0.795 | 0.808 |
| IDTAT | IoU | 0.019 | 0.019 | 0.013 | 0.017 | 0.761 | 0.803 | 0.779 | 0.825 |
| | nIoU | 0.010 | 0.008 | 0.009 | 0.023 | 0.759 | 0.801 | 0.778 | 0.823 |

| Manner | IoU (b = 1) | IoU (b = 2) | IoU (b = 3) | IoU (b = 4) | nIoU (b = 1) | nIoU (b = 2) | nIoU (b = 3) | nIoU (b = 4) |
|---|---|---|---|---|---|---|---|---|
| BLAM | 0.745 | 0.769 | 0.781 | 0.799 | 0.758 | 0.772 | 0.779 | 0.785 |
| ACM | 0.754 | 0.763 | 0.784 | 0.796 | 0.749 | 0.756 | 0.780 | 0.795 |
| MTAM | 0.748 | 0.773 | 0.778 | 0.788 | 0.749 | 0.771 | 0.777 | 0.781 |
| MRAM | 0.735 | 0.760 | 0.771 | 0.796 | 0.734 | 0.764 | 0.769 | 0.793 |
| MAFM | 0.756 | 0.787 | 0.802 | 0.809 | 0.757 | 0.782 | 0.799 | 0.808 |

| Manner | IoU (b = 1) | IoU (b = 2) | IoU (b = 3) | IoU (b = 4) | nIoU (b = 1) | nIoU (b = 2) | nIoU (b = 3) | nIoU (b = 4) |
|---|---|---|---|---|---|---|---|---|
| BLAM | 0.806 | 0.810 | 0.805 | 0.812 | 0.805 | 0.808 | 0.804 | 0.812 |
| ACM | 0.803 | 0.814 | 0.815 | 0.819 | 0.802 | 0.814 | 0.815 | 0.818 |
| MTAM | 0.811 | 0.815 | 0.818 | 0.820 | 0.809 | 0.814 | 0.818 | 0.819 |
| MRAM | 0.808 | 0.810 | 0.819 | 0.813 | 0.807 | 0.810 | 0.818 | 0.819 |
| MAFM | 0.810 | 0.826 | 0.823 | 0.825 | 0.808 | 0.824 | 0.821 | 0.823 |

| Backbone | BLAM | MAFM | Sub-Pixel | NL | SIRST IoU | SIRST nIoU | IDTAT IoU | IDTAT nIoU |
|---|---|---|---|---|---|---|---|---|
| ResNet-20 | ✓ | | | | 0.762 | 0.761 | 0.803 | 0.801 |
| ResNet-20 | | ✓ | | | 0.766 | 0.771 | 0.806 | 0.805 |
| ResNet-20 | | ✓ | ✓ | | 0.780 | 0.775 | 0.817 | 0.816 |
| ResNet-20 | | ✓ | ✓ | ✓ | 0.809 | 0.808 | 0.825 | 0.823 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Nian, B.; Zhang, Y.; Zhang, Y.; Ling, F. Lightweight Multimechanism Deep Feature Enhancement Network for Infrared Small-Target Detection. Remote Sens. 2022, 14, 6278. https://doi.org/10.3390/rs14246278


Chicago/Turabian style: Zhang, Yi, Bingkun Nian, Yan Zhang, Yu Zhang, and Feng Ling. 2022. "Lightweight Multimechanism Deep Feature Enhancement Network for Infrared Small-Target Detection" Remote Sensing 14, no. 24: 6278. https://doi.org/10.3390/rs14246278
