SRODNet: Object Detection Network Based on Super Resolution for Autonomous Vehicles

Abstract

1. Introduction

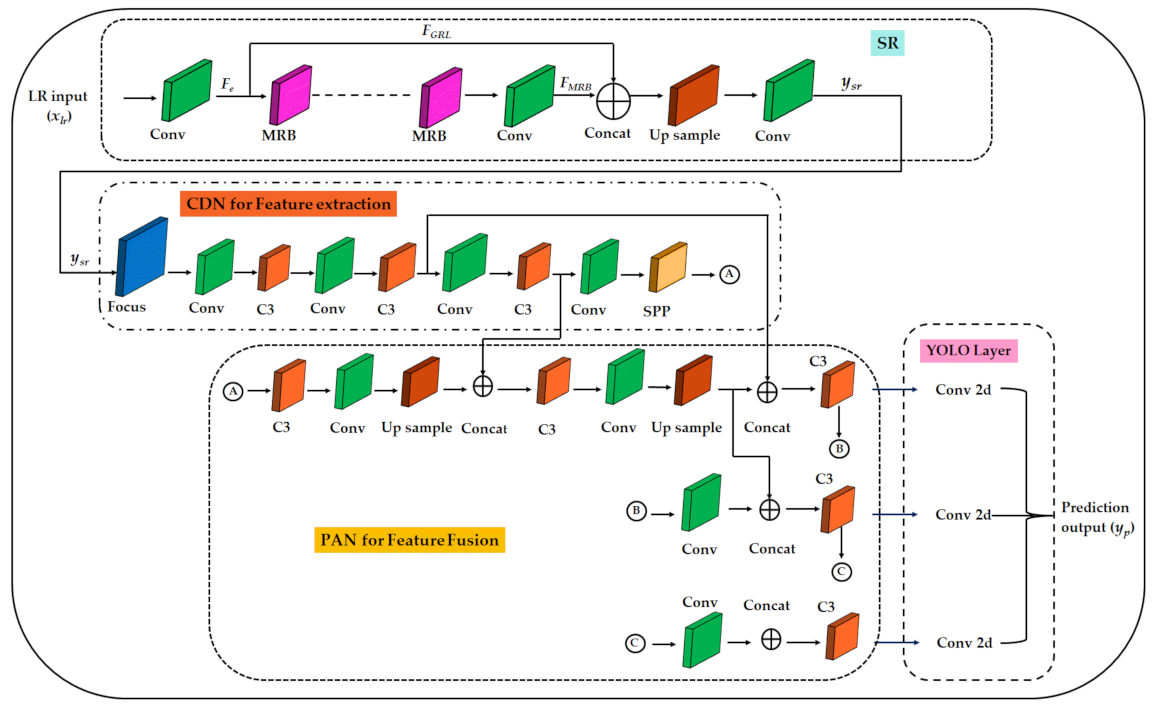

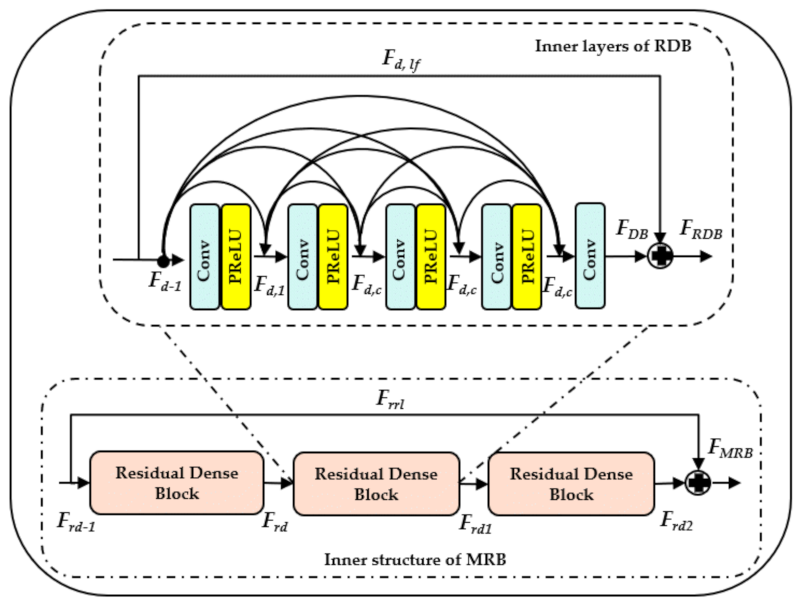

- We propose SRODNet, which couples a super-resolution (SR) network with an object detection network. The proposed SR method enhances the perceptual quality of small objects using a deep residual network built from the proposed modified residual blocks (MRBs) and dense connections. In particular, the MRBs accumulate the hierarchical features through global residual learning.

- The proposed model embeds the super-resolution network inside the object detection component, YOLOv5, so that the model functions as a single network for both super-resolution and object detection during training. This promotes better feature learning, which improves the quality of the super-resolved images obtained from low-resolution (LR) images.

- Finally, the proposed structure was jointly optimized to benefit from hierarchical features, which helped the network learn more efficiently and improved its accuracy. The structure also accumulates multiple features that help it perceive small objects, which is useful in remote sensing applications.

- We evaluated the SR model in terms of the peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and perception-based image quality evaluator (PIQE) metrics. Further, we evaluated SRODNet performance using the mean average precision (mAP) and F1 score metrics.
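
As a concrete illustration of the PSNR metric named above, the following is a minimal sketch rather than the authors' evaluation code. It assumes 8-bit grayscale images stored as nested lists with a peak value of 255; the function name `psnr` and the sample pixel values are hypothetical.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images."""
    # Flatten the row-major nested lists into flat pixel sequences.
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    if len(ref) != len(est) or not ref:
        raise ValueError("images must be non-empty and have the same size")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 3x3 patches: a high-resolution reference and a super-resolved estimate.
hr = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr = [[54, 55, 60], [59, 77, 61], [75, 62, 60]]
print(f"PSNR: {psnr(hr, sr):.2f} dB")
```

A higher PSNR means the super-resolved image is closer to the reference in the mean-squared-error sense. SSIM and PIQE measure structural and perceptual quality, which PSNR does not capture.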

2. Related Work

2.1. Single Image Super-Resolution Using Deep Learning Methods

2.2. Deep Learning-Based Object-Detection Models

3. Proposed Object Detection Network Based on SR

4. Experimental Results

4.1. Quantitative Results of Proposed Model for Generic SR Application

4.1.1. DIV2K Training Dataset

4.1.2. Public Benchmark Datasets

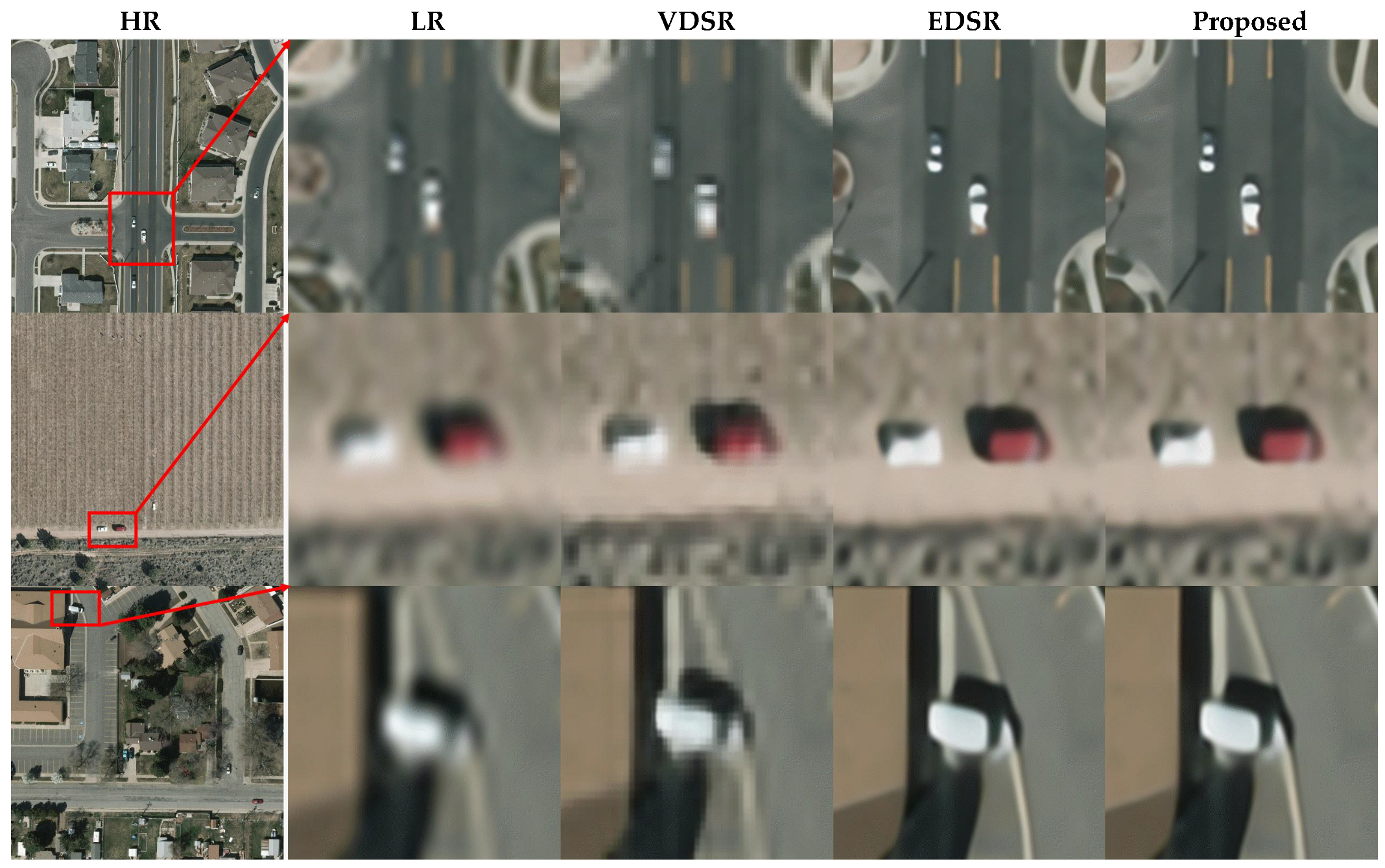

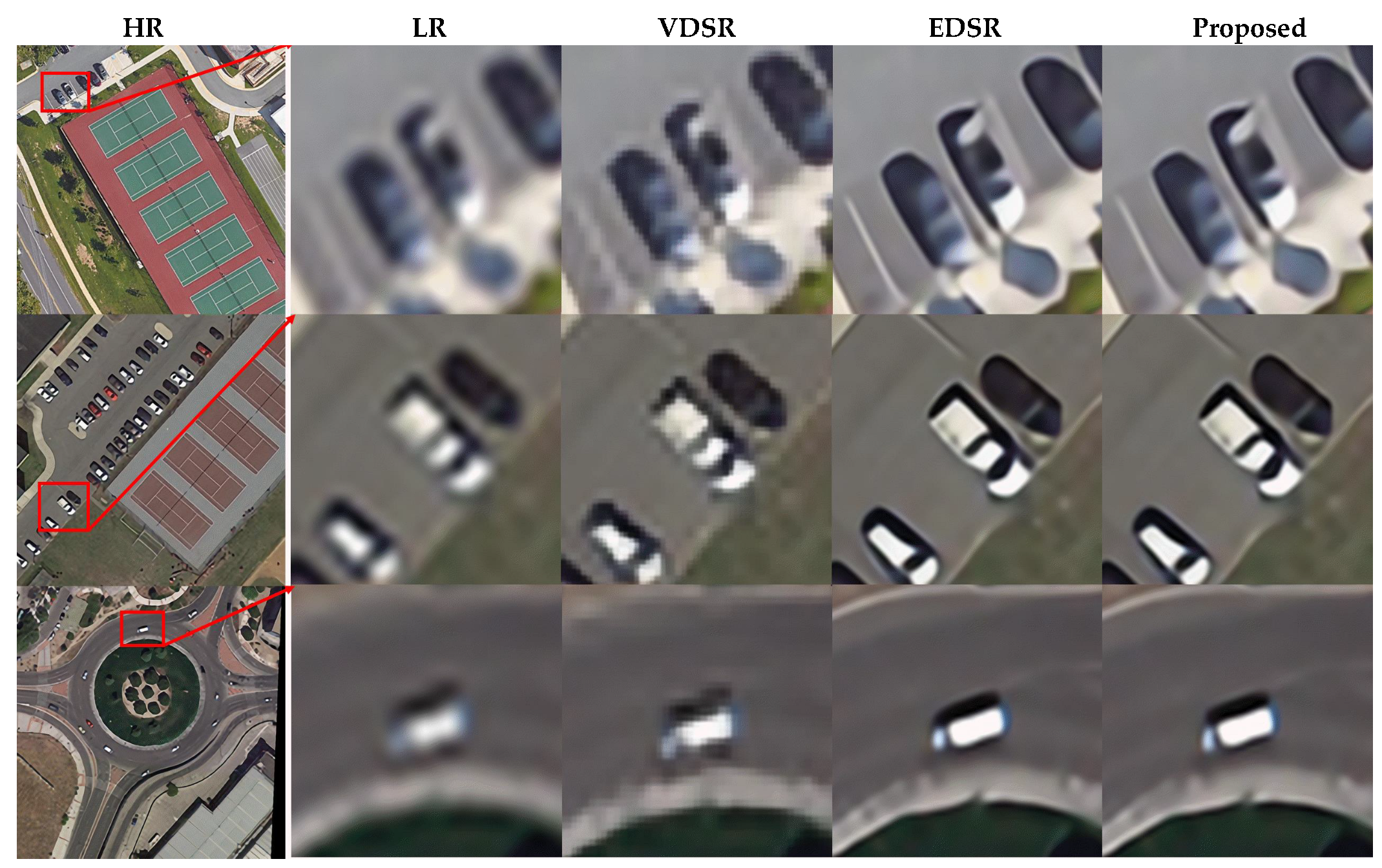

4.2. Super-Resolution Results for Remote Sensing Application

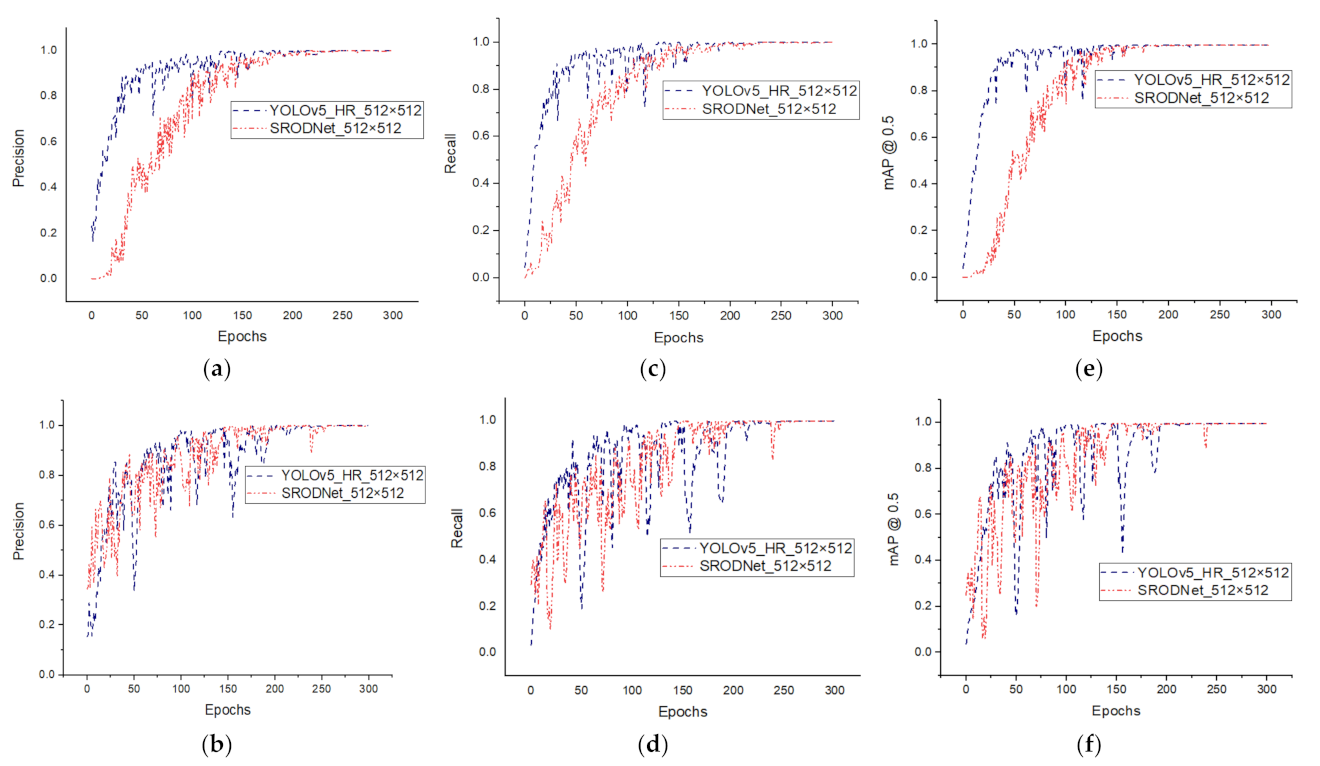

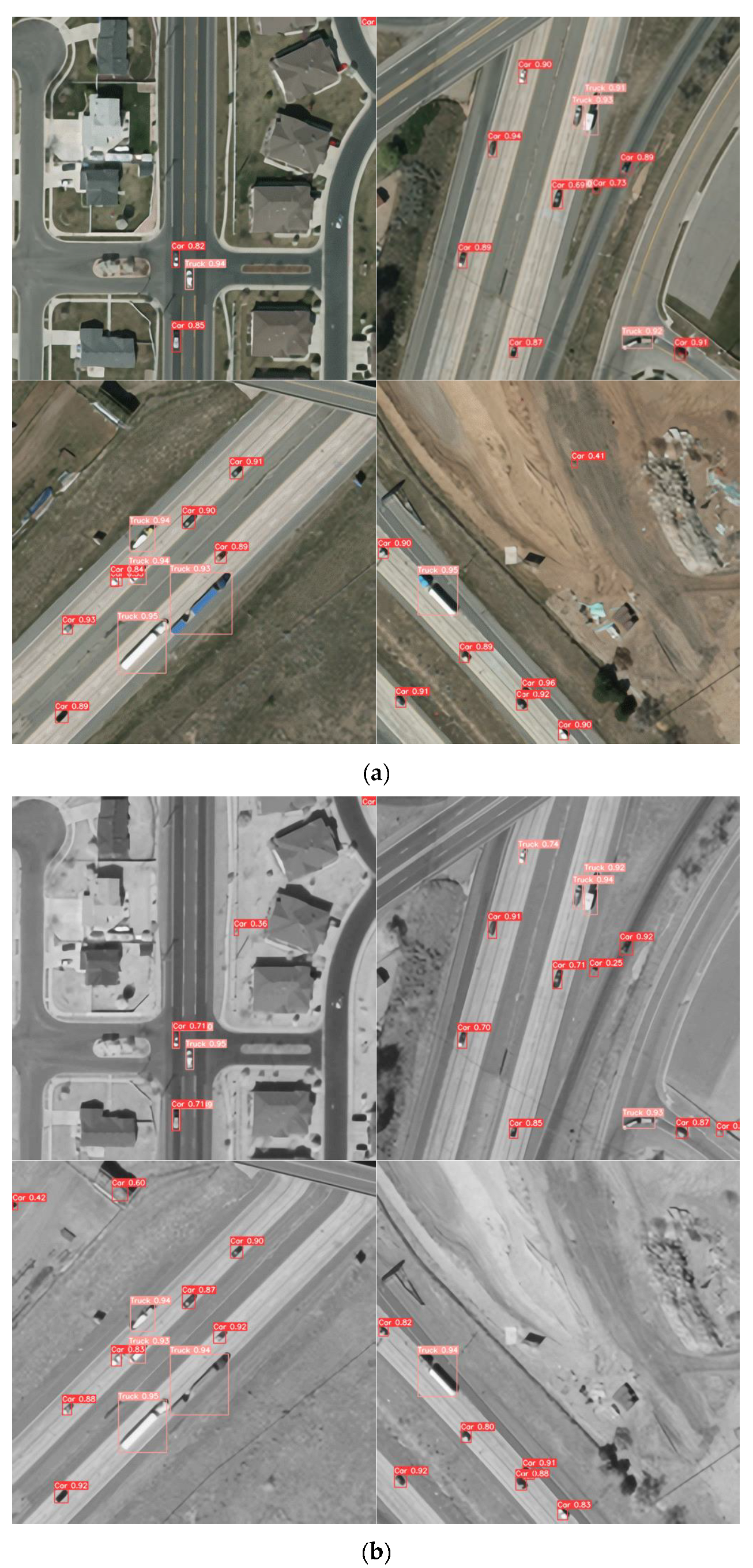

4.3. Detection Results and Performance Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Akcay, S.; Kundegorski, M.; Willcocks, C.; Breckon, T. Using Deep Convolutional Neural Network Architectures for Object Classification and Detection within X-ray Baggage Security Imagery. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2203–2215.

- Bastan, M. Multi-view object detection in dual-energy X-ray images. Mach. Vis. Appl. 2015, 26, 1045–1060.

- Mery, D.; Svec, E.; Arias, M.; Riffo, V.; Saavedra, J.; Banerjee, S. Modern Computer Vision Techniques for X-ray Testing in Baggage Inspection. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 682–692.

- Choi, K.; Yi, J.; Park, C.; Yoon, S. Deep Learning for Anomaly Detection in Time-Series Data: Review, Analysis, and Guidelines. IEEE Access 2021, 9, 120043–120065.

- Shi, X.; Li, X.; Wu, C.; Kong, S.; Yang, J.; He, L. A Real-Time Deep Network for Crowd Counting. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020.

- Zhao, P.; Adnan, K.; Lyu, X.; Wei, S.; Sinnott, R. Estimating the Size of Crowds through Deep Learning. In Proceedings of the 2020 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE), Gold Coast, Australia, 16–18 December 2020.

- Xu, J. A deep learning approach to building an intelligent video surveillance system. Multimed. Tools Appl. 2021, 80, 5495–5515.

- Wu, X.; Sahoo, D.; Hoi, S. Recent Advances in Deep Learning for Object Detection. Neurocomputing 2020, 396, 39–64.

- Mingyu, G.; Qinyu, C.; Bowen, Z.; Jie, S.; Zhihao, N.; Junfan, W.; Huipin, L. A Hybrid YOLOv4 and Particle Filter Based Robotic Arm Grabbing System in Nonlinear and Non-Gaussian Environment. Electronics 2021, 10, 1140.

- Kulshreshtha, M.; Chandra, S.S.; Randhawa, P.; Tsaramirsis, G.; Khadidos, A.; Khadidos, A. OATCR: Outdoor Autonomous Trash-Collecting Robot Design Using YOLOv4-Tiny. Electronics 2021, 10, 2292.

- Nelson, R.; Corby, J.R. Machine vision for robotics. IEEE Trans. Ind. Electron. 1983, 30, 282–291.

- Loukatos, D.; Petrongonas, E.; Manes, K.; Kyrtopoulos, I.-V.; Dimou, V.; Arvanitis, K.G. A Synergy of Innovative Technologies towards Implementing an Autonomous DIY Electric Vehicle for Harvester-Assisting Purposes. Machines 2021, 9, 82.

- Schulte, J.; Kocherovsky, M.; Paul, N.; Pleune, M.; Chung, C.-J. Autonomous Human-Vehicle Leader-Follower Control Using Deep-Learning-Driven Gesture Recognition. Vehicles 2022, 4, 243–258.

- Thomas, M.; Farid, M. Automatic Car Counting Method for Unmanned Aerial Vehicle Image. IEEE Trans. Geosci. Remote Sens. 2014, 3, 1635–1647.

- Liu, K.; Mattyus, G. Fast multi-class vehicle detection on aerial images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1938–1942.

- Shengjie, Z.; Jinghong, L.; Yang, T.; Yujia, Z.; Chenglong, L. Rapid Vehicle Detection in Aerial Images under the Complex Background of Dense Urban Areas. Remote Sens. 2022, 14, 2088.

- Xungen, L.; Feifei, M.; Shuaishuai, L.; Xiao, J.; Mian, P.; Qi, M.; Haibin, Y. Vehicle Detection in Very-High-Resolution Remote Sensing Images Based on an Anchor-Free Detection Model with a More Precise Foveal Area. Int. J. Geo-Inf. 2021, 10, 549.

- Jiandan, Z.; Tao, L.; Guangle, Y. Robust Vehicle Detection in Aerial Images Based on Cascaded Convolutional Neural Networks. Sensors 2017, 17, 2720.

- Jaswanth, N.; Chinmayi, N.; Rolf, A.; Hrishikesh, V. A Progressive Review—Emerging Technologies for ADAS Driven Solutions. IEEE Trans. Intell. Veh. 2021, 1, 326–341.

- Kim, J.; Hong, S.; Kim, E. Novel On-Road Vehicle Detection System Using Multi-Stage Convolutional Neural Network. IEEE Access 2021, 9, 94371–94385.

- Kiho, L.; Kastuv, T. LIDAR: Lidar Information based Dynamic V2V Authentication for Roadside Infrastructure-less Vehicular Networks. In Proceedings of the 2019 16th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 11–14 January 2019.

- Aldrich, R.; Wickramarathne, T. Low-Cost Radar for Object Tracking in Autonomous Driving: A Data-Fusion Approach. In Proceedings of the 2018 IEEE 87th Vehicular Technology Conference (VTC Spring), Porto, Portugal, 3–6 June 2018.

- Kwang-ju, K.; Pyong-kun, K.; Yun-su, C.; Doo-hyun, C. Multi-Scale Detector for Accurate Vehicle Detection in Traffic Surveillance Data. IEEE Access 2019, 7, 78311–78319.

- Khatab, E.; Onsy, A.; Varley, M.; Abouelfarag, A. Vulnerable objects detection for autonomous driving: A review. Integration 2021, 78, 36–48.

- Saeed, A.; Salman, K.; Nick, B. A Deep Journey into Super-resolution: A Survey. ACM Comput. Surv. 2020, 53, 1–34.

- Yogendra Rao, M.; Arvind, M.; Oh-Seol, K. Single Image Super-Resolution Using Deep Residual Network with Spectral Normalization. In Proceedings of the 17th International Conference on Multimedia Technology and Applications (MITA), Jeju, Republic of Korea, 6–7 June 2021.

- Yogendra Rao, M.; Oh-Seol, K. Deep residual dense network for single image super-resolution. Electronics 2021, 10, 555.

- Ivan, G.A.; Rafael Marcos, L.B.; Ezequiel, L.R. Improved detection of small objects in road network sequences using CNN and super resolution. Expert Syst. 2021, 39, e12930.

- Sheng, R.; Jianqi, L.; Tianyi, T.; Yibo, P.; Jian, J. Towards Efficient Video Detection Object Super-Resolution with Deep Fusion Network for Public Safety. Wiley 2021, 1, 9999398.

- Xinqing, W.; Xia, H.; Feng, X.; Yuyang, L.; Xiaodong, H.; Pengyu, S. Multi-Object Detection in Traffic Scenes Based on Improved SSD. Electronics 2018, 7, 302.

- Luc, C.; Minh-Tan, P.; Sebastien, L. Small Object Detection in Remote Sensing Images Based on Super-Resolution with Auxiliary Generative Adversarial Networks. Remote Sens. 2020, 12, 3152.

- Yunyan, W.; Huaxuan, W.; Luo, S.; Chen, P.; Zhiwei, Y. Detection of plane in remote sensing images using super-resolution. PLoS ONE 2022, 17, 0265503.

- Mostofa, M.; Ferdous, S.; Riggan, B.; Nasrabadi, N. Joint-SRVDNet: Joint Super Resolution and Vehicle Detection Network. IEEE Access 2020, 8, 82306–82319.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Chao, D.; Chen, C.L.; Xiaoou, T. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Zhaowen, W.; Ding, L.; Jianchao, Y.; Wei, H.; Thomas, H. Deep Networks for Image Super-Resolution with Sparse Prior. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the CVPR 2015, Boston, MA, USA, 7–12 June 2015.

- Wei-Sheng, L.; Jia-Bin, H.; Narendra, A.; Ming-Hsuan, Y. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Bee, L.; Sanghyun, S.; Heewon, K.; Seungjun, N.; Kyoung Mu, L. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 June 2017.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Wazir, M.; Supavadee, A. Multi-Scale Inception Based Super-Resolution Using Deep Learning Approach. Electronics 2019, 8, 892.

- Yan, L.; Guangrui, Z.; Hai, W.; Wei, Z.; Min, Z.; Hongbo, Q. An efficient super-resolution network based on aggregated residual transformations. Electronics 2019, 8, 339.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 June 2017.

- Zhiqian, C.; Kai, C.; James, C. Vehicle and Pedestrian Detection Using Support Vector Machine and Histogram of Oriented Gradients Features. In Proceedings of the 2013 International Conference on Computer Sciences and Applications, Wuhan, China, 14–15 December 2013.

- Zahid, M.; Nazeer, M.; Arif, M.; Imran, S.; Fahad, K.; Mazhar, A.; Uzair, K.; Samee, K. Boosting the Accuracy of AdaBoost for Object Detection and Recognition. In Proceedings of the 2016 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 19–21 December 2016.

- Silva, R.; Rodrigues, P.; Giraldi, G.; Cunha, G. Object recognition and tracking using Bayesian networks for augmented reality systems. In Proceedings of the Ninth International Conference on Information Visualization (IV’05), London, UK, 6–8 July 2005.

- Qi, Z.; Wang, L.; Xu, Y.; Zhong, P. Robust Object Detection Based on Decision Trees and a New Cascade Architecture. In Proceedings of the 2008 International Conference on Computational Intelligence for Modelling Control & Automation, Vienna, Austria, 10–12 December 2008.

- Fica Aida, N.; Purwalaksana, A.; Manalu, I. Object Detection of Surgical Instruments for Assistant Robot Surgeon using KNN. In Proceedings of the 2019 International Conference on Advanced Mechatronics, Intelligent Manufacture and Industrial Automation (ICAMIMIA), Batu, Indonesia, 9–10 October 2019.

- Liu, Z.; Xiong, H. Object Detection and Localization Using Random Forest. In Proceedings of the 2012 Second International Conference on Intelligent System Design and Engineering Application, Sanya, China, 6–7 January 2012.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 18 February 2016.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Mark Liao, H.-Y. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.-Y.; Bochkovskiy, A.; Mark Liao, H.-Y. Scaled-YOLOv4: Scaling Cross Stage Partial Network. arXiv 2020, arXiv:2011.08036.

- Yingfeng, C.; Tianyu, L.; Hongbo, G.; Hai, W.; Long, C.; Yicheng, L.; Miguel, S.; Zhixiong, L. YOLOv4-5D: An Effective and Efficient Object Detector for Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 4503613.

- Lian, J.; Yin, Y.; Li, L.; Wang, Z.; Zhou, Y. Small Object Detection in Traffic Scenes based on Attention Feature Fusion. Sensors 2021, 21, 3031.

- Timofte, R.; Agustsson, E.; Van Gool, L.; Yang, M.-H.; Zhang, L.; Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Ntire 2017 challenge on single image super-resolution: Methods and results. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 June 2017.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the 23rd British Machine Vision Conference (BMVC), Guildford, UK, 3–7 September 2012.

- Timofte, R.; De Smet, V.; Van Gool, L. A+: Adjusted anchored neighborhood regression for fast super-resolution. In Proceedings of the Asian Conference on Computer Vision (ACCV), Singapore, 1–2 November 2014.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the 8th International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001.

- Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015.

- Horé, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition, ICPR 2010, Istanbul, Turkey, 23–26 August 2010.

- Venkatanath, N.; Praneeth, D.; Chandrasekhar, B.M.; Channappayya, S.S.; Medasani, S.S. Blind Image Quality Evaluation Using Perception Based Features. In Proceedings of the 21st National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015.

- Chen, C.; Zhong, J.; Tan, Y. Multiple-oriented and small object detection with convolutional neural networks for aerial image. Remote Sens. 2019, 11, 2176.

| S. No | Component | Specification |
|---|---|---|
| 1 | CPU | Intel Xeon Silver 4214R |
| 2 | RAM | 512 GB |
| 3 | GPU | 2× NVIDIA RTX A6000 |
| 4 | Operating System | Windows 10 Pro 10.0.19042, 64-bit |
| 5 | CUDA | CUDA 11.2 with cuDNN 8.1.0 |
| 6 | Data Processing | Python 3.9, OpenCV 4.0 |
| 7 | Deep Learning Framework | PyTorch 1.7.0 |

| S. No | Architecture | Set 5 [61] PSNR (dB) | Set 5 [61] SSIM | Set 14 [62] PSNR (dB) | Set 14 [62] SSIM | BSD 100 [63] PSNR (dB) | BSD 100 [63] SSIM | Urban 100 [64] PSNR (dB) | Urban 100 [64] SSIM |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Bicubic [35] | 28.43 | 0.8109 | 26.00 | 0.7023 | 25.96 | 0.6678 | 23.14 | 0.6574 |
| 2 | SRCNN [35] | 30.48 | 0.8628 | 27.50 | 0.7513 | 26.90 | 0.7103 | 24.52 | 0.7226 |
| 3 | FSRCNN [25] | 30.70 | 0.8657 | 27.59 | 0.7535 | 26.96 | 0.7128 | 24.60 | 0.7258 |
| 4 | SCN [25] | 30.39 | 0.8620 | 27.48 | 0.7510 | 26.87 | 0.710 | 24.52 | 0.725 |
| 5 | VDSR [37] | 31.35 | 0.8838 | 28.02 | 0.7678 | 27.29 | 0.7252 | 25.18 | 0.7525 |
| 6 | DRCN [25] | 31.53 | 0.8854 | 28.03 | 0.7673 | 27.24 | 0.7233 | 25.14 | 0.7511 |
| 7 | LapSRN [25] | 31.54 | 0.8866 | 28.09 | 0.7694 | 27.32 | 0.7264 | 25.21 | 0.7553 |
| 8 | SRGAN [25] | 32.05 | 0.8910 | 28.53 | 0.7804 | 27.57 | 0.7354 | 26.07 | 0.7839 |
| 9 | EDSR [39] (simulated) | 32.31 | 0.8829 | 28.80 | 0.7693 | 28.60 | 0.7480 | 26.40 | 0.7805 |
| 10 | Proposed model | 32.35 | 0.8835 | 28.83 | 0.7704 | 28.59 | 0.7482 | 26.34 | 0.7796 |

| S. No | Dataset | EDSR [39] PSNR (dB) | EDSR [39] SSIM | EDSR [39] PIQE | Proposed model PSNR (dB) | Proposed model SSIM | Proposed model PIQE |
|---|---|---|---|---|---|---|---|
| 1 | VEDAI-VISIBLE | 29.543 | 0.6857 | 77.687 | 29.520 | 0.6853 | 76.573 |
| 2 | VEDAI-IR | 32.040 | 0.7442 | 78.230 | 32.040 | 0.7443 | 78.160 |
| 3 | DOTA | 26.983 | 0.7338 | 72.469 | 26.954 | 0.7316 | 67.444 |
| 4 | KoHT | 27.507 | 0.8209 | 93.879 | 27.438 | 0.8201 | 93.631 |
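
The comparison between EDSR and the proposed model is easier to read as per-dataset differences. The sketch below only redoes arithmetic on the values reported in the table above. It assumes the usual convention that a lower PIQE score indicates better perceptual quality; the names `results` and `deltas` are hypothetical.

```python
# (PSNR, SSIM, PIQE) copied from the table: EDSR first, proposed model second.
results = {
    "VEDAI-VISIBLE": ((29.543, 0.6857, 77.687), (29.520, 0.6853, 76.573)),
    "VEDAI-IR": ((32.040, 0.7442, 78.230), (32.040, 0.7443, 78.160)),
    "DOTA": ((26.983, 0.7338, 72.469), (26.954, 0.7316, 67.444)),
    "KoHT": ((27.507, 0.8209, 93.879), (27.438, 0.8201, 93.631)),
}


def deltas(dataset):
    """Return (dPSNR, dSSIM, dPIQE) as proposed minus EDSR, rounded to 4 decimals."""
    edsr, proposed = results[dataset]
    return tuple(round(p - e, 4) for e, p in zip(edsr, proposed))


for name in results:
    d_psnr, d_ssim, d_piqe = deltas(name)
    print(f"{name}: dPSNR={d_psnr:+.3f} dB, dSSIM={d_ssim:+.4f}, dPIQE={d_piqe:+.3f}")
```

Read this way, the PSNR and SSIM values of the two models stay within a few hundredths of each other, while the PIQE of the proposed model is lower on every dataset, most visibly on DOTA.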

| Architecture | VEDAI-VISIBLE mAP@0.5 (%) | VEDAI-VISIBLE F1 score | VEDAI-IR mAP@0.5 (%) | VEDAI-IR F1 score | DOTA mAP@0.5 (%) | DOTA F1 score | KoHT mAP@0.5 (%) | KoHT F1 score |
|---|---|---|---|---|---|---|---|---|
| Ren et al. (Z and F) [46] | 32.00 | 0.212 | - | - | - | - | - | - |
| Girshick et al. (VGG-16) [51] | 37.30 | 0.224 | - | - | - | - | - | - |
| Ren et al. (VGG-16) [46] | 40.90 | 0.225 | - | - | - | - | - | - |
| Zhong et al. [67] | 50.20 | 0.305 | - | - | - | - | - | - |
| Chen et al. [18] | 59.50 | 0.451 | - | - | - | - | - | - |
| YOLOv3_SRGAN_512 [33] | 62.45 | 0.591 | 70.10 | 0.687 | 86.18 | 0.837 | - | - |
| YOLOv3_MsSRGAN_512 [33] | 66.74 | 0.643 | 74.61 | 0.723 | 87.02 | 0.859 | - | - |
| YOLOv3_EDSR [39] | 74.32 | 0.754 | 70.62 | 0.727 | 91.47 | 0.889 | 91.46 | 0.926 |
| SRODNet (ours) | 81.38 | 0.819 | 79.82 | 0.800 | 92.08 | 0.892 | 93.02 | 0.928 |
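
To make the "@ 0.5" threshold and the F1 score in the table above concrete, here is a minimal sketch of how detections are commonly matched to ground truth at an IoU threshold of 0.5 and scored. It is not the authors' evaluation pipeline: the greedy one-to-one matching, the `(x1, y1, x2, y2)` box format, the function names, and the sample boxes are all assumptions, and predictions are assumed to be already sorted by confidence. Computing mAP additionally requires averaging precision over recall levels and classes, which this sketch omits.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_at_iou(predictions, ground_truth, threshold=0.5):
    """Greedily match each prediction to its best unmatched ground-truth box, then compute F1."""
    unmatched = list(ground_truth)
    tp = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)  # each ground-truth box can be matched once
            tp += 1
    fp = len(predictions) - tp  # predictions without a match
    fn = len(unmatched)         # ground-truth boxes that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical boxes: one good detection, one false positive, one missed object.
gt_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
pred_boxes = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(f"F1 @ IoU 0.5: {f1_at_iou(pred_boxes, gt_boxes):.3f}")
```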

| S. No | Model | GFLOPs (VEDAI-VISIBLE) | GFLOPs (VEDAI-IR) | GFLOPs (KoHT) | GFLOPs (DOTA) | Inference (s) | Training (hours, VEDAI-VISIBLE) | Training (hours, VEDAI-IR) | Training (hours, KoHT) | Training (hours, DOTA) | Model parameters (million) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | YOLOv3_GT [55] | 154.8 | 154.8 | 154.8 | 154.6 | 0.014 | 0.402 | 0.400 | 1.151 | 0.396 | ~61.51 |
| 2 | YOLOv3_EDSR [39] | 154.8 | 154.8 | 154.8 | 154.6 | 0.013 | 0.403 | 0.396 | 1.150 | 0.396 | ~104.6 |
| 3 | SRODNet (ours) | 15.8 | 15.8 | 16.4 | 15.8 | 0.010 | 0.143 | 0.140 | 0.382 | 0.140 | ~24.62 |
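
The complexity figures above can be expressed as relative reductions with a few lines of arithmetic. The sketch below uses only the reported values (GFLOPs from the VEDAI-VISIBLE column, inference time, and approximate parameter counts). The dictionary layout and the names `models`, `relative_reduction`, and `compare` are hypothetical.

```python
# Values copied from the complexity table; parameter counts are approximate.
models = {
    "YOLOv3_GT": {"gflops": 154.8, "inference_s": 0.014, "params_m": 61.51},
    "YOLOv3_EDSR": {"gflops": 154.8, "inference_s": 0.013, "params_m": 104.6},
    "SRODNet": {"gflops": 15.8, "inference_s": 0.010, "params_m": 24.62},
}


def relative_reduction(baseline, value):
    """Fraction by which `value` is lower than `baseline`."""
    return 1.0 - value / baseline


def compare(baseline_name, model_name="SRODNet"):
    """Per-metric relative reduction of `model_name` with respect to `baseline_name`."""
    base, model = models[baseline_name], models[model_name]
    return {key: relative_reduction(base[key], model[key]) for key in base}


for name in ("YOLOv3_GT", "YOLOv3_EDSR"):
    for metric, reduction in compare(name).items():
        print(f"{name} -> SRODNet, {metric}: {reduction:.1%} lower")
```

On these numbers, SRODNet needs roughly one tenth of the GFLOPs of either YOLOv3 baseline and well under half of their parameters.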

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Musunuri, Y.R.; Kwon, O.-S.; Kung, S.-Y. SRODNet: Object Detection Network Based on Super Resolution for Autonomous Vehicles. Remote Sens. 2022, 14, 6270. https://doi.org/10.3390/rs14246270
