DSM Generation from Multi-View High-Resolution Satellite Images Based on the Photometric Mesh Refinement Method

Abstract

1. Introduction

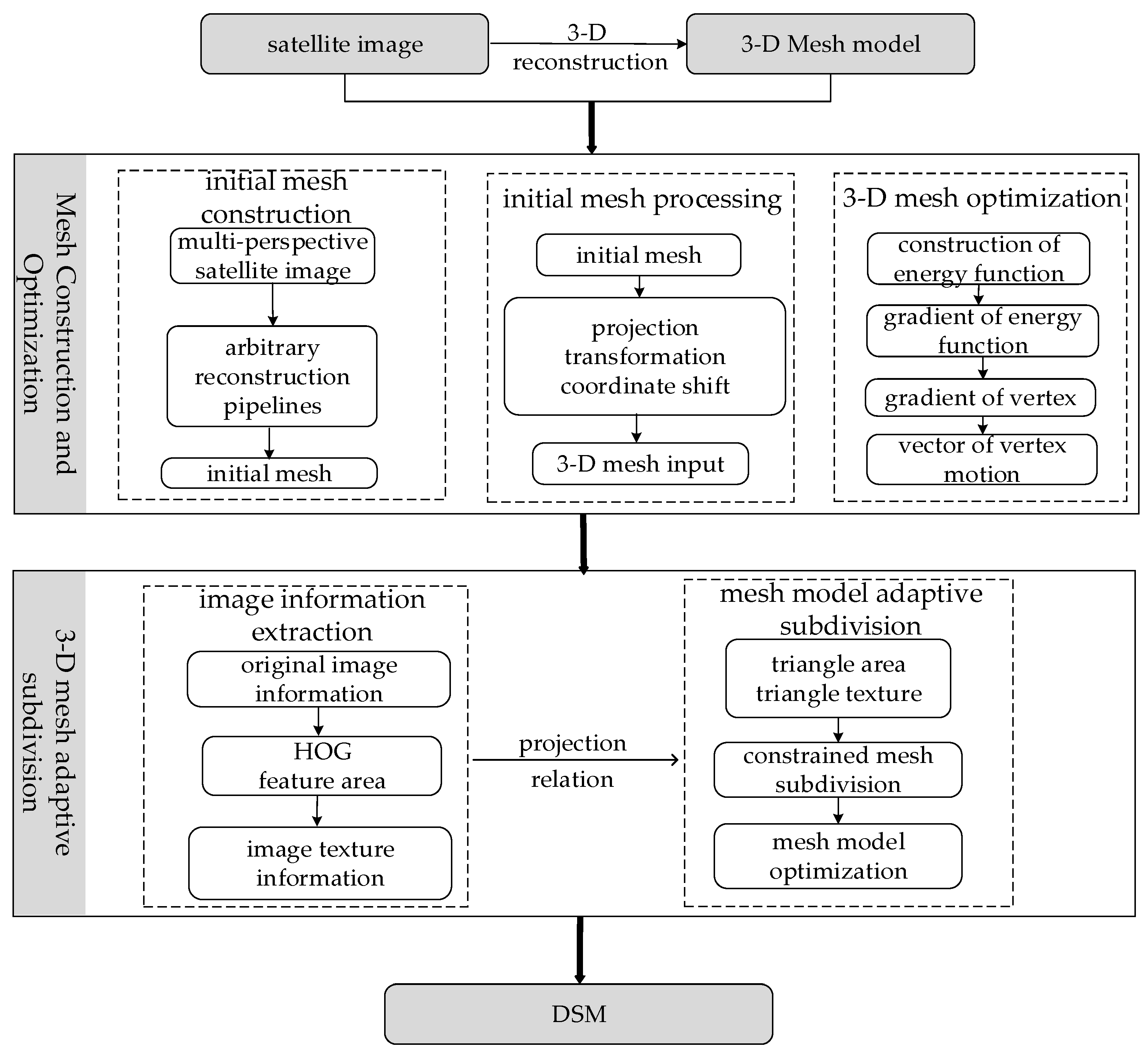

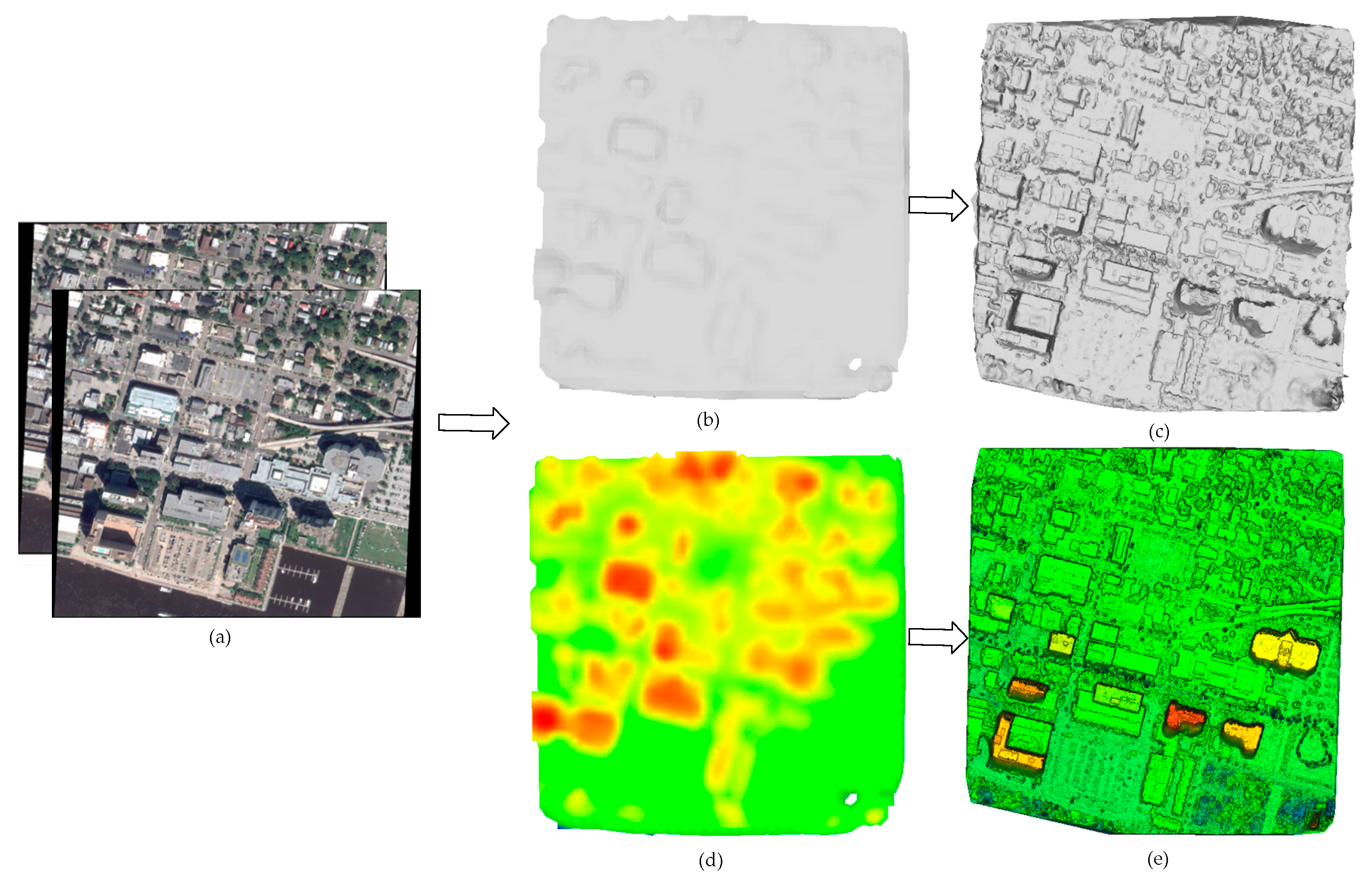

2. Methods

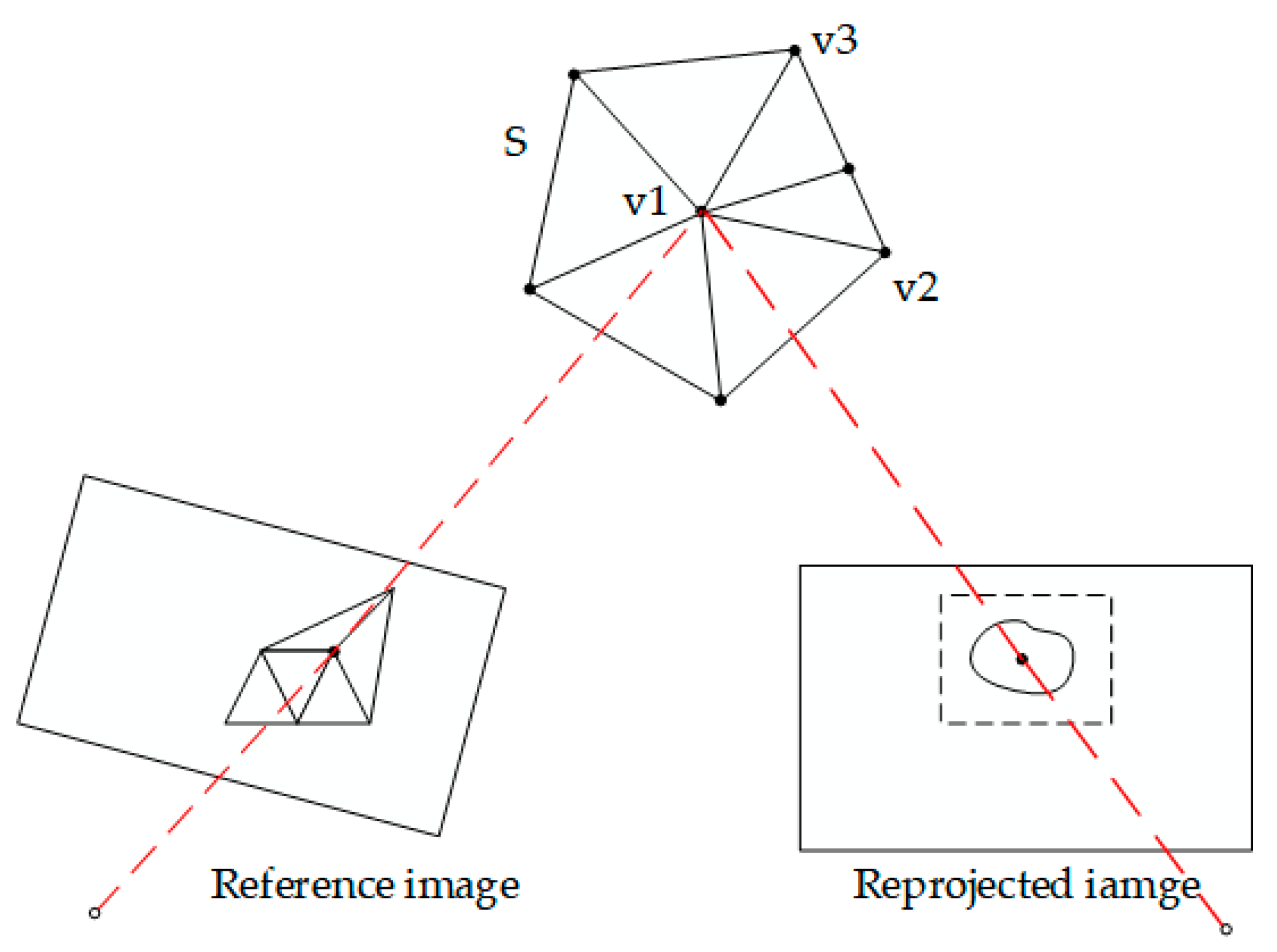

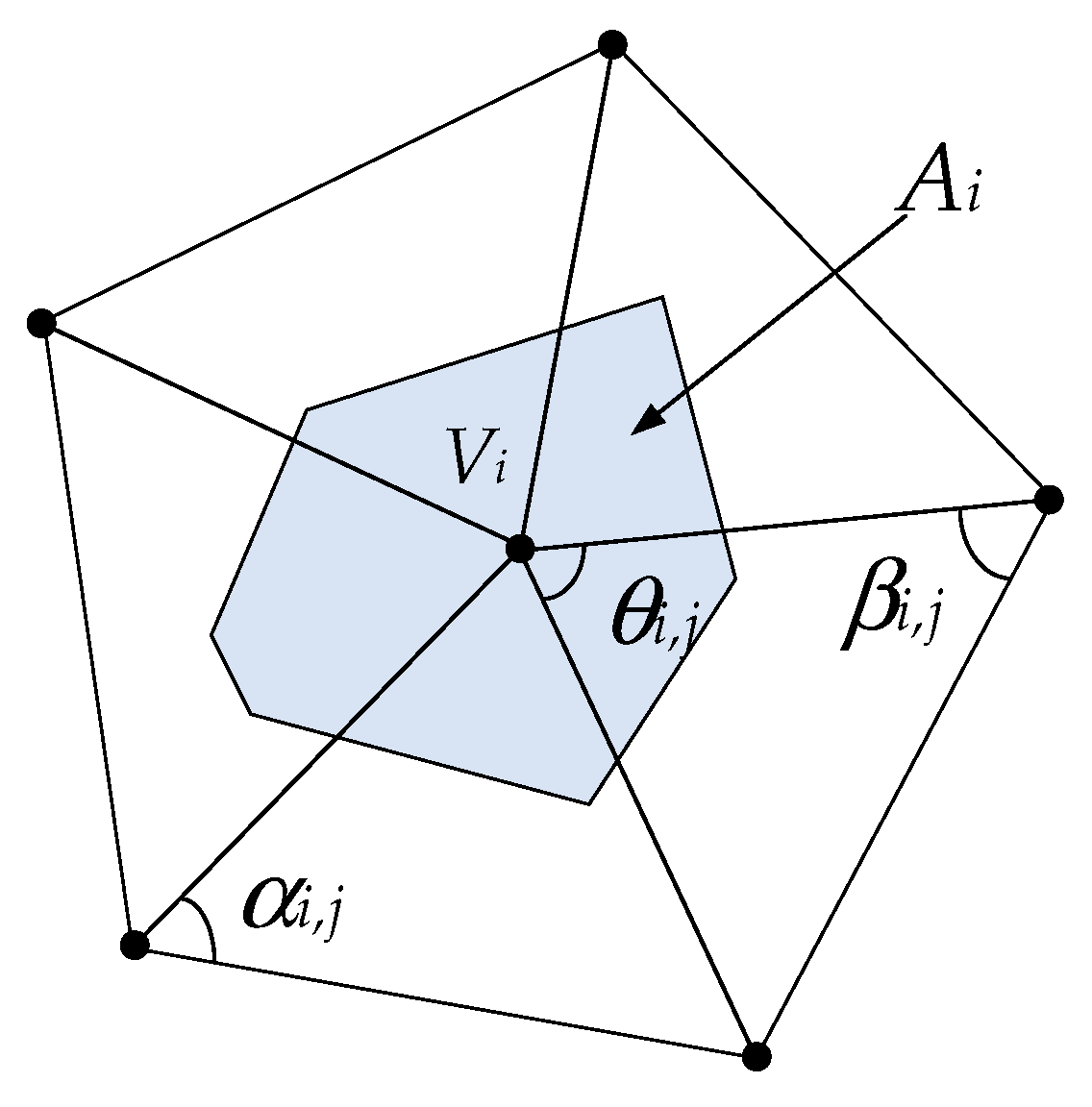

2.1. Construction of the Energy Function

2.2. Vertex Optimization Process

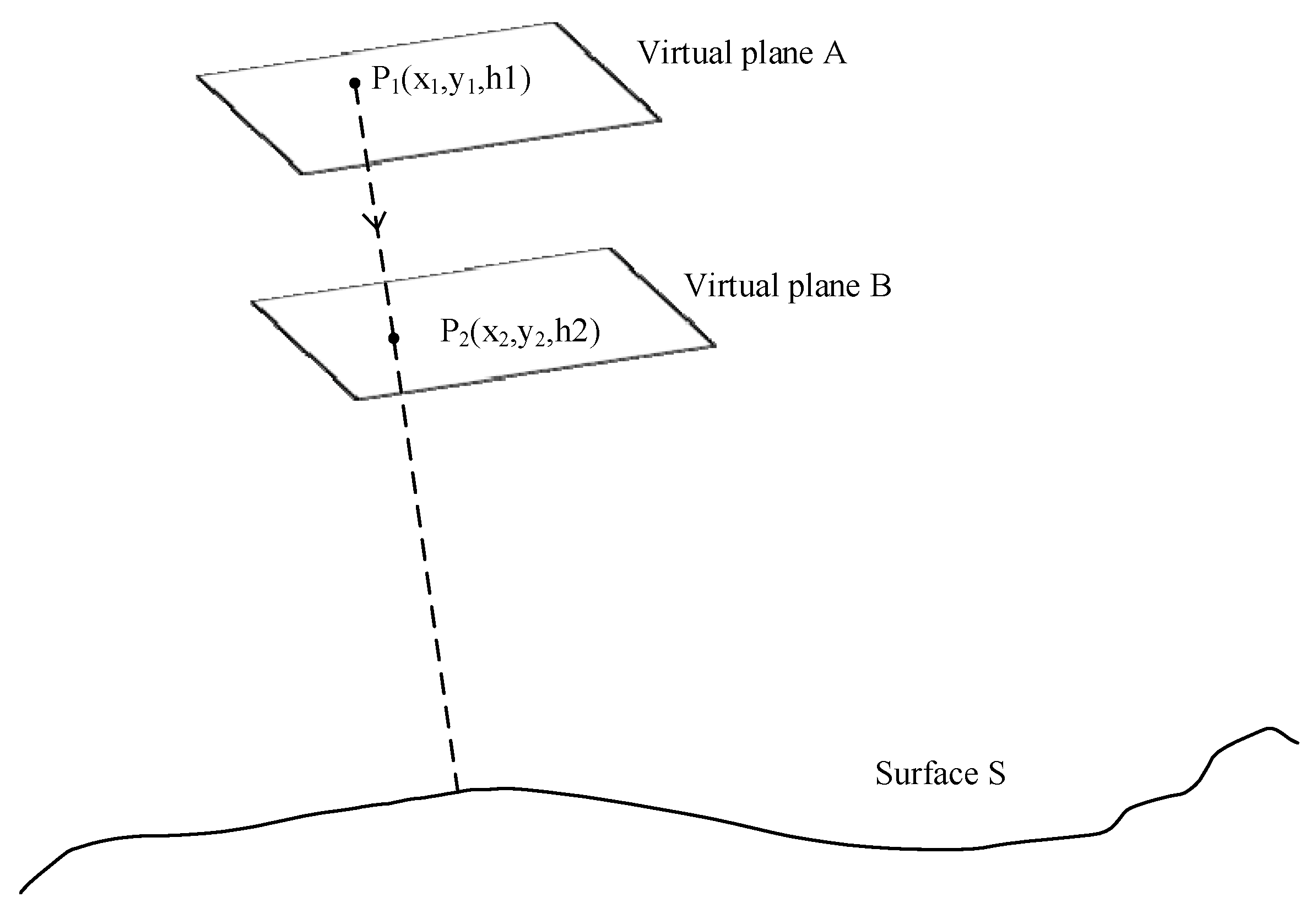

2.3. Simulation of Light

2.4. Reformulation of the Jacobian Matrix

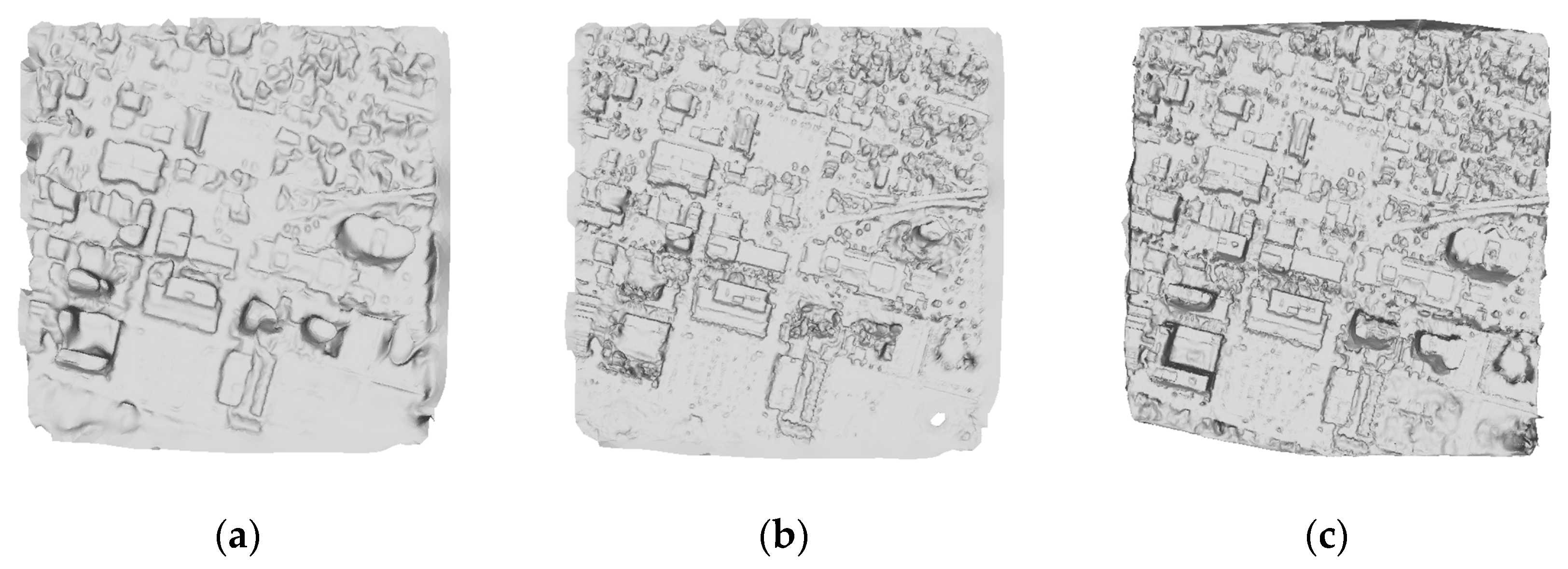

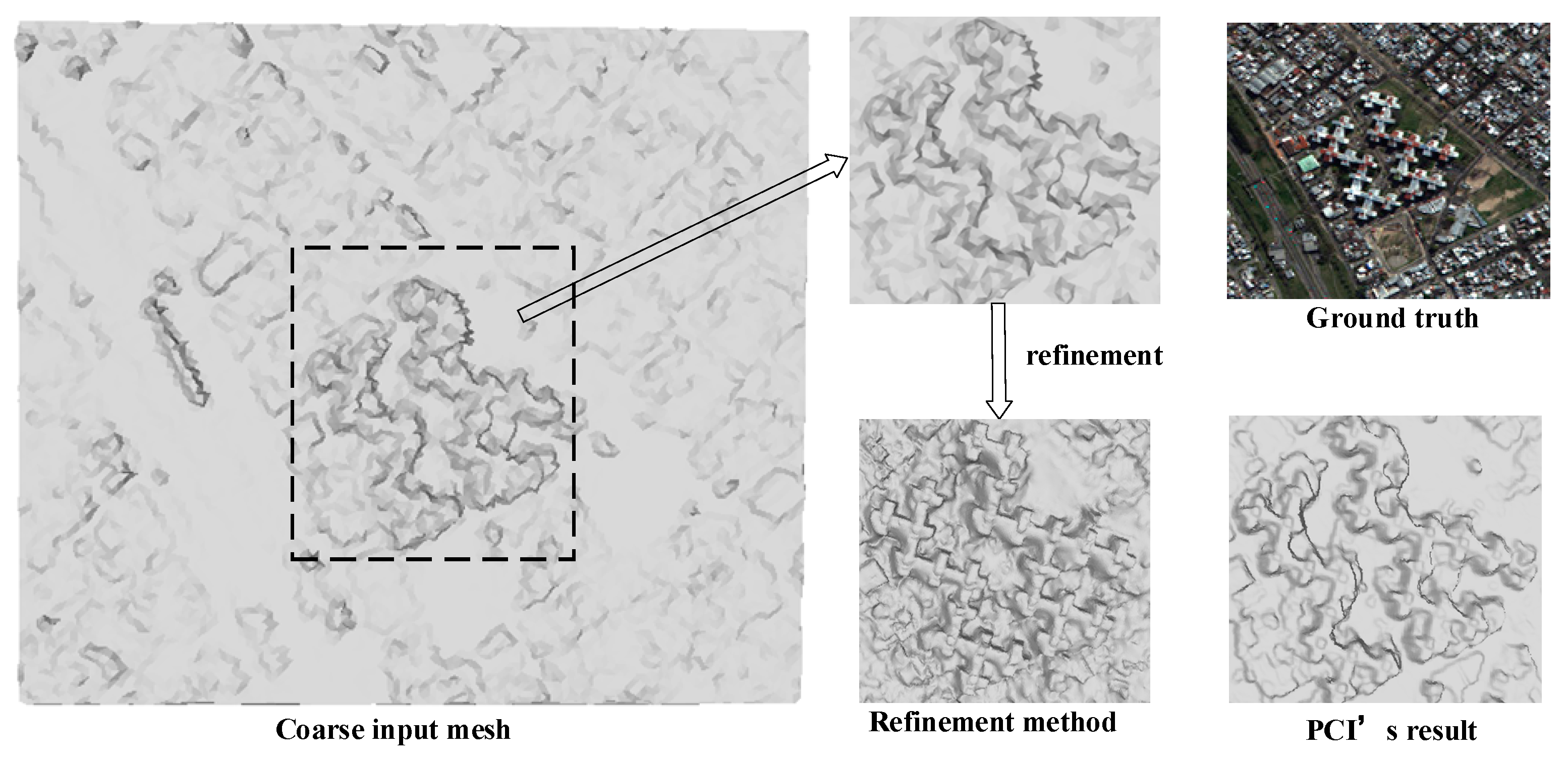

2.5. Subdivision of the Mesh

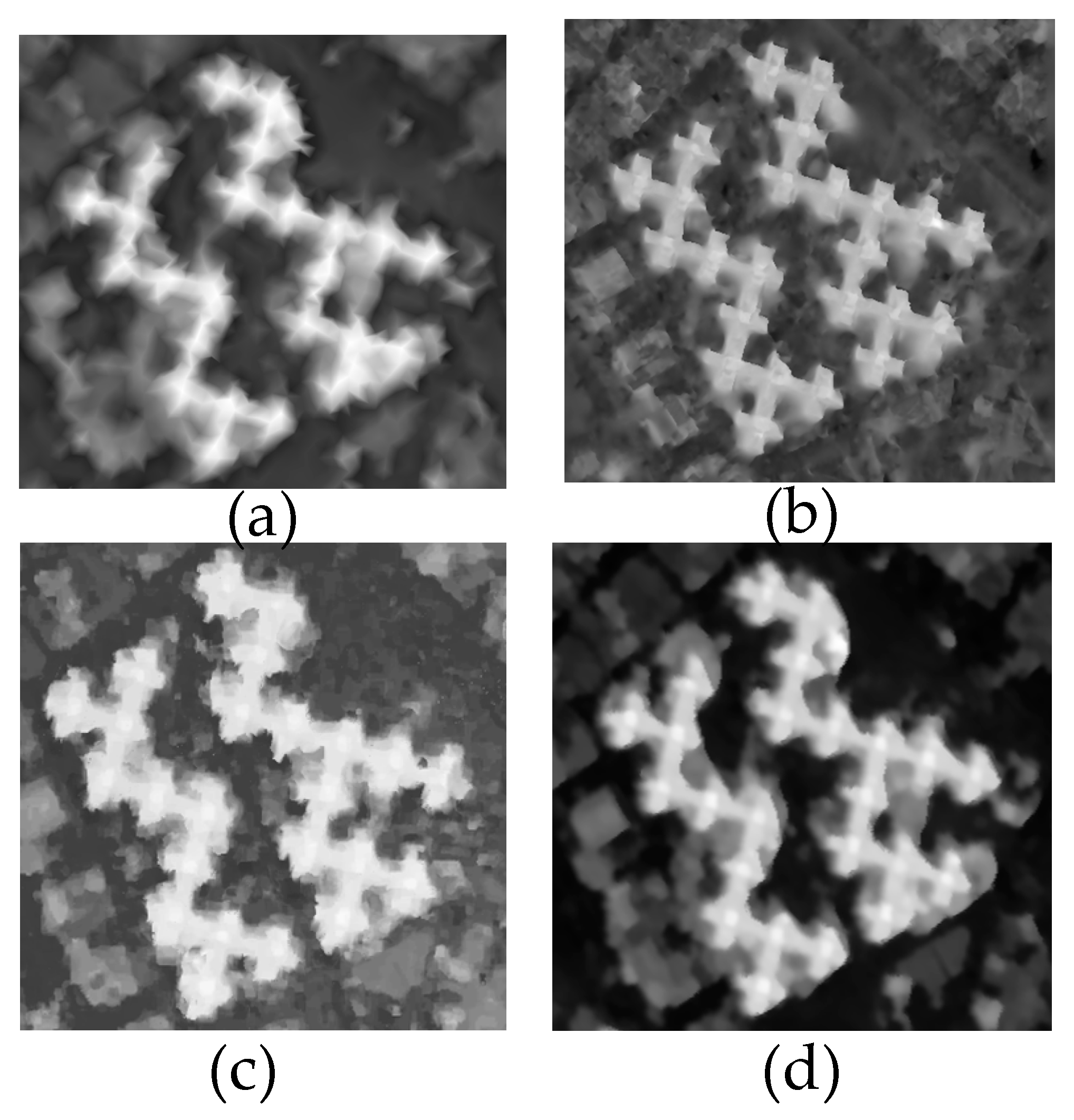

2.5.1. Projection Area Parameters of 3D Mesh Subdivision

2.5.2. Texture Complexity Parameters of 3D Mesh Subdivision

3. Results and Analysis

3.1. Implementation Details

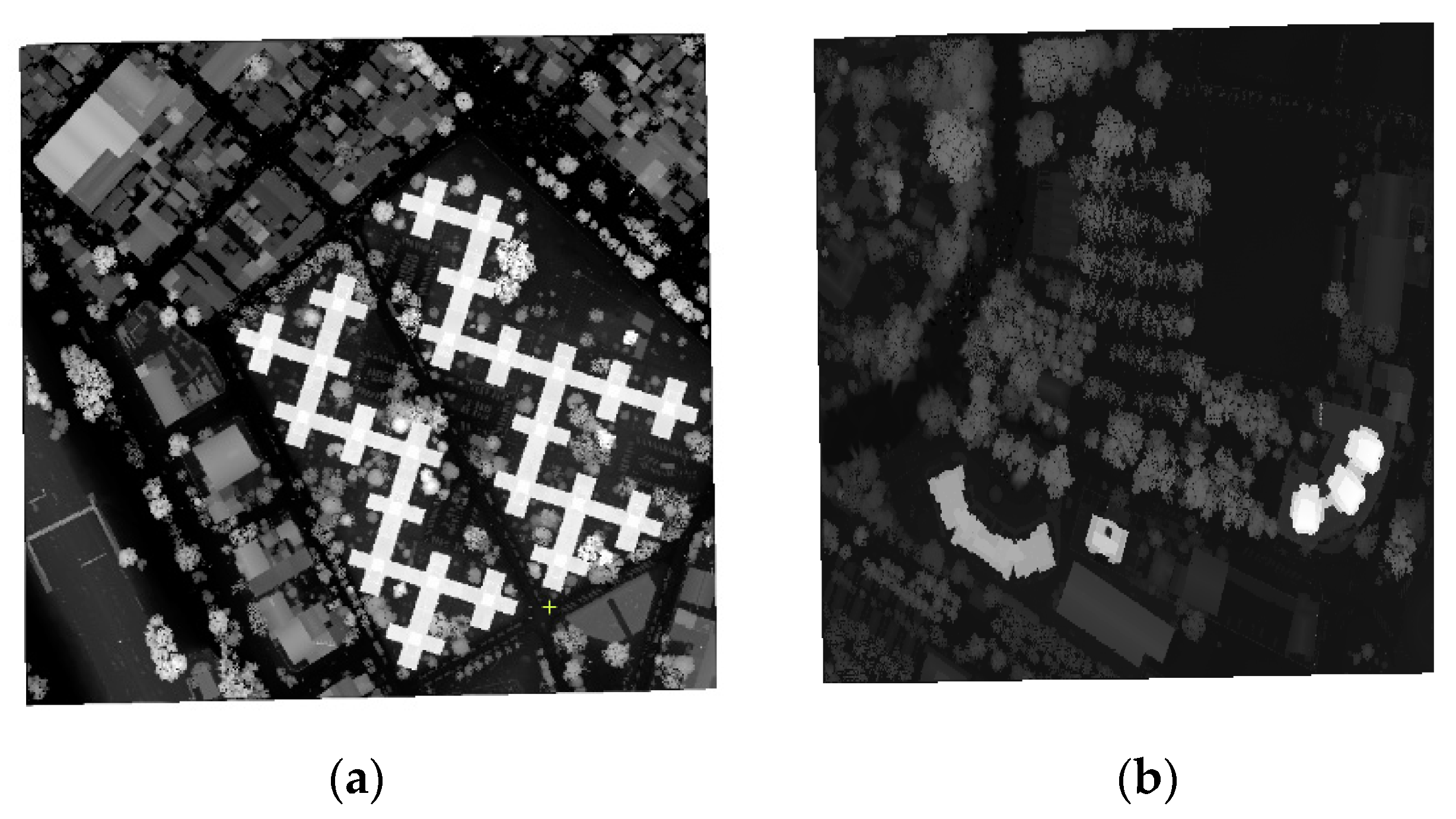

3.2. Experiments with Two Images

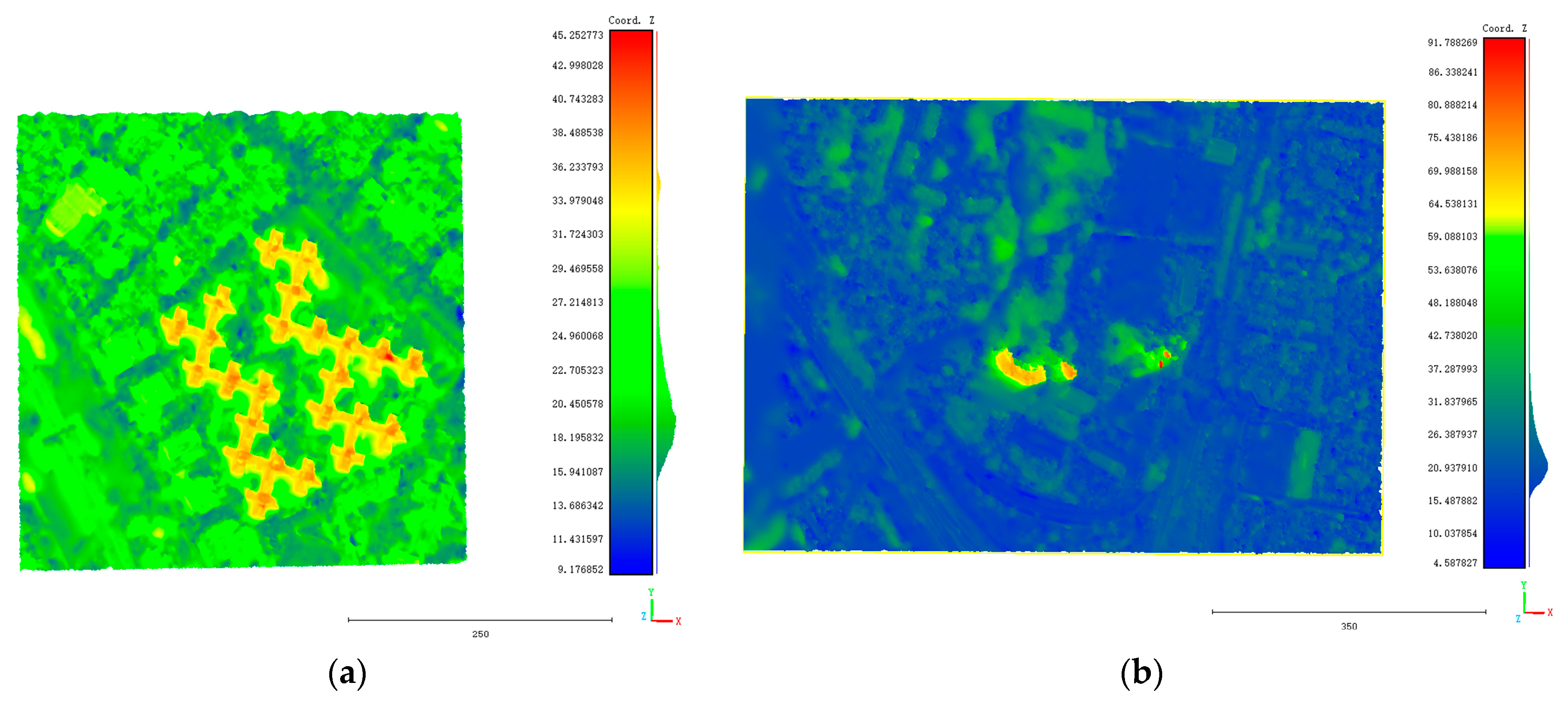

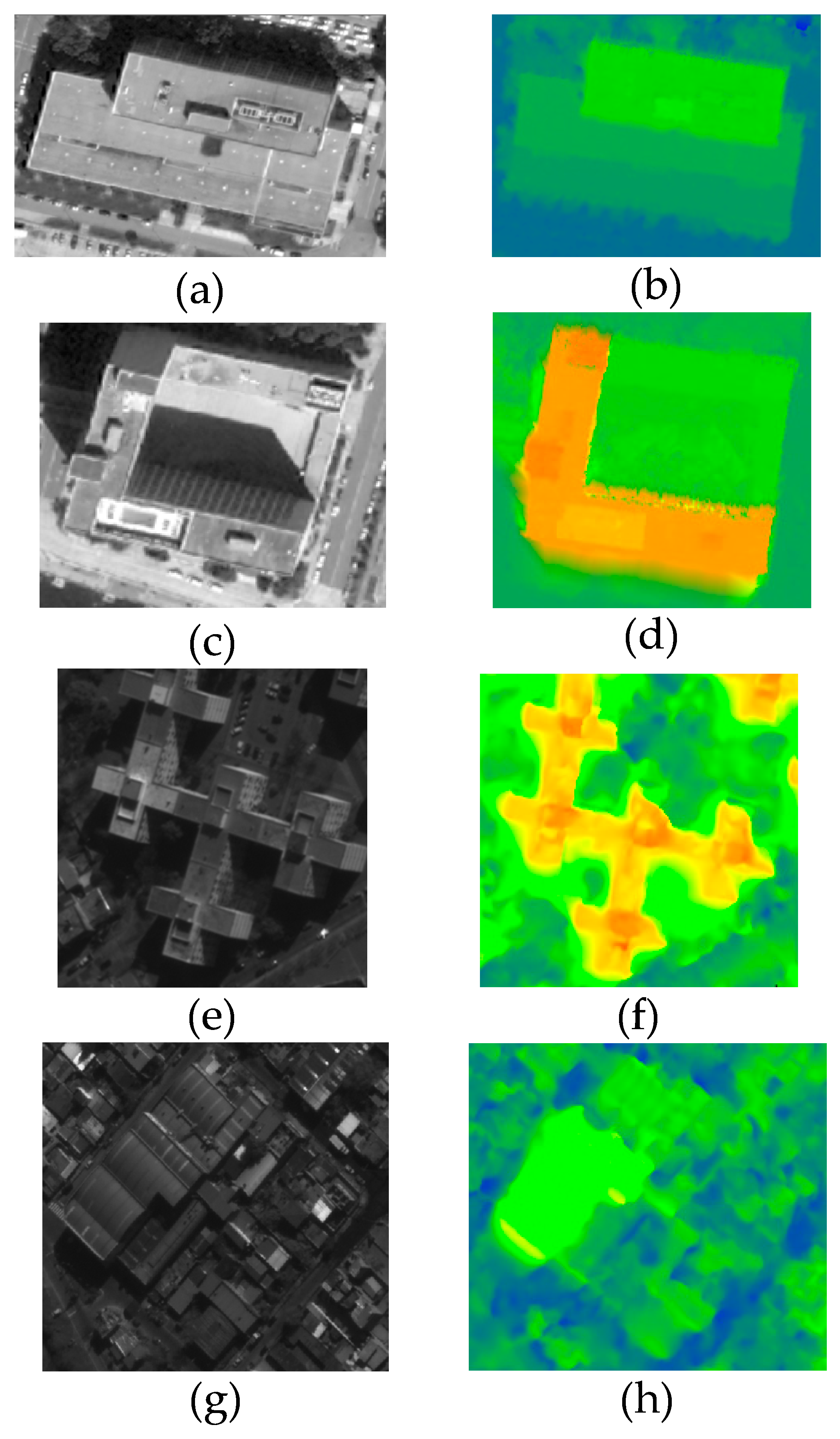

3.3. Test Sites and Results

4. Discussion

4.1. Quantitative Evaluation

4.2. Qualitative Evaluation

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| | Texture Complexity Threshold | Projection Area Threshold | Iterations |
|---|---|---|---|
| a | 0.3 | 24 | 255 |
| b | 0 | 2 | 255 |
| c | 0.3 | 2 | 255 |

| | | PCI Result | SGM | Refinement Result |
|---|---|---|---|---|
| Test site 1 | Completeness [%] | 64 | 57 | 68 |
| | RMS [m] | 2.26 | 2.78 | 2.04 |
| | NMAD [m] | 0.79 | 0.85 | 0.76 |
| Test site 2 | Completeness [%] | 52 | 46 | 49 |
| | RMS [m] | 3.36 | 4.17 | 3.88 |
| | NMAD [m] | 1.44 | 1.67 | 1.08 |
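The three measures in the table above (completeness, RMS and NMAD) can be illustrated with a minimal sketch. It uses the commonly cited definitions: RMS of the height differences, and NMAD as 1.4826 times the median absolute deviation from the median difference. The function name `dsm_metrics`, the NaN convention for empty cells, and the 1 m completeness tolerance are assumptions made for illustration; they are not taken from the article, whose exact evaluation protocol may differ.

```python
import numpy as np


def dsm_metrics(dsm, reference, completeness_tol=1.0):
    """Compare a DSM with a reference height grid of the same shape.

    NaN marks cells without a height value (assumed convention).
    completeness_tol is in metres and is an assumed threshold,
    not a value stated in the article.
    Returns (completeness in percent, RMS in metres, NMAD in metres).
    """
    dsm = np.asarray(dsm, dtype=float)
    reference = np.asarray(reference, dtype=float)

    ref_valid = ~np.isnan(reference)
    both_valid = ref_valid & ~np.isnan(dsm)
    # Height differences where both grids have a value.
    diff = dsm[both_valid] - reference[both_valid]

    # Completeness: share of reference cells reconstructed within the tolerance.
    within = np.count_nonzero(np.abs(diff) <= completeness_tol)
    completeness = 100.0 * within / np.count_nonzero(ref_valid)

    # Root mean square of the height differences.
    rms = float(np.sqrt(np.mean(diff ** 2)))

    # Normalized median absolute deviation, robust to outliers.
    nmad = float(1.4826 * np.median(np.abs(diff - np.median(diff))))

    return completeness, rms, nmad


if __name__ == "__main__":
    reference = np.zeros((2, 3))
    dsm = np.array([[0.0, 0.5, -0.5], [2.0, np.nan, 0.0]])
    print(dsm_metrics(dsm, reference))
```

Because NMAD is built on medians, a single gross error (the 2.0 m cell in the toy grid) raises the RMS far more than the NMAD, which is one reason the two measures are usually reported side by side.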

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lv, B.; Liu, J.; Wang, P.; Yasir, M. DSM Generation from Multi-View High-Resolution Satellite Images Based on the Photometric Mesh Refinement Method. Remote Sens. 2022, 14, 6259. https://doi.org/10.3390/rs14246259