Abstract

The unprecedented availability of petascale analysis-ready earth observation data has given rise to a remarkable surge in demand for regional to global environmental studies, which exploit massive amounts of data for spatiotemporal analysis at a much larger scale than ever before. Imagery mosaicking, which is critical for forming a “One Map” with a continuous view for large-scale climate research, has drawn significant attention. However, even with distributed data processing engines such as Spark, large-scale mosaicking still suffers from the staggering number of remote sensing (RS) images involved, which inevitably leads to discouraging performance. The main problem of traditional parallel mosaicking algorithms lies in the huge computational demand and the heavy data I/O burden that results from repeatedly shifting tremendous volumes of RS data back and forth between limited local memory and bulk external storage throughout the multiple processing stages. To address these issues, we propose an in-memory, Spark-enabled distributed approach to large-scale data mosaicking with geo-gridded data staging accelerated by Alluxio. It organizes enormous “messy” remote sensing datasets into geo-encoded grid groups and indexes them with multi-dimensional space-filling-curve geo-encoding assisted by GeoTrellis. All buckets of geo-gridded remote sensing data groups can be loaded directly from Alluxio with data prefetching and expressed as RDDs, which are processed concurrently as grid mosaicking tasks on a Spark-enabled cluster. Notably, an in-memory data orchestration layer is provided to facilitate in-memory big data staging among the multiple mosaicking stages, which largely eliminates the tremendous data transfer while maintaining better data locality.
As a result, benefiting from parallel processing with distributed data prefetching and in-memory data staging, this approach facilitates large-scale data mosaicking in the context of big data much more effectively. Experimental results demonstrate that our approach is much more efficient and scalable than traditional parallel implementations.

1. Introduction

Space-borne sensors continuously monitoring the earth’s surface have offered a unique and accurate approach to better understanding our planet over the past few decades [,]. With the boom in high-resolution Earth Observation (EO) [,,] and the increasing diversity of advanced sensors, remotely sensed earth observation data have grown exponentially []. The Landsat missions [] of the U.S. Geological Survey (USGS) had archived more than 4.5 petabytes of data by 2019. The Copernicus program of the European Commission can expect a daily volume of more than 10 terabytes, of which the current Sentinel series already accounts for almost 6 terabytes []. Benefiting from advanced high-resolution EO, open data policies, and the maturity of cloud computing technologies, petascale analysis-ready satellite earth observation data are freely available on the Cloud []. Consequently, earth observation data have been widely accepted as “Big Data” [,].

Notably, the unprecedented availability of enormous earth observation Analysis-Ready Data (ARD) [] has further stimulated increasing interest in global resource and environmental monitoring [,,], such as Japan’s Global Rain Forest Mapping (GRFM) project [] and the global crop production forecasting system of the Global Agriculture Monitoring (GLAM) project [], which uses MODIS near-real-time data. In short, great efforts have been invested in exploiting extremely large amounts of multi-sensor and multi-temporal satellite data for analysis at a much larger scale than ever. Under these circumstances, capturing a wide field-of-view (FOV) [] scene covering a full region of interest (ROI) [] has become both common and critical, especially given the limited imaging width of a single high-resolution sensor. In recent years, large-scale imagery mosaicking [] has drawn particular attention and become an indispensable task, not only for illustrative use but also for mapping [] and analysis []. The GRFM project [] stitched 13,000 JERS-1 SAR scenes to build a rainforest map of tropical Africa, while the USGS mosaicked an Antarctica map [] from over 1000 images. Moreover, some large-scale crop mapping [,,] and vegetation clearing mapping [] studies employ enormous amounts of EO data for online processing on Google Earth Engine (GEE).

Mosaicking stitches a collection of remotely sensed images with overlapping regions into a single, geometrically aligned composite scene with a wide field of view. However, in regional to global research, traditional mosaicking remains challenged by several cumbersome issues. First and foremost, mosaicking suffers from a staggering number of “messy” scenes when scaled to a large region. Generally, a regional-scale mosaic [] with thousands of scenes can easily exceed one terabyte, while a continental [] or global mosaic [] may reach dozens of terabytes. Moreover, to ensure a satisfactory result, mosaicking follows a complex processing chain of re-projection, radiometric normalization, seamline detection, and blending [,]. Large-scale mosaicking is therefore accepted as both data-intensive [,] and computation-intensive []. The computation required by the numerous RS datasets and the complex multi-stage procedure is far beyond the capability of a single computer, so traditional sequential mosaicking becomes remarkably time-consuming or even infeasible at the global scale. Secondly, a heavy data I/O burden results from trying to fit tremendous volumes of RS data into local memory all at once. Worse, frequent data transfer among the multiple mosaicking steps may force enormous RS datasets to shift back and forth between limited local memory and bulk external storage. Consequently, large-scale mosaicking is hampered by an unexpectedly heavy I/O load, which inevitably results in discouraging performance and scalability. An optimized data caching approach is therefore needed to keep data local and to avoid computationally poor out-of-core computing with its much higher I/O overhead.
Finally, the difficulty also lies in efficiently organizing and quickly indexing these tremendous, overlapping “messy” RS datasets.

With advances in high-performance computing (HPC), several dominant HPC approaches have been considered promising solutions to the computational challenge, such as parallel computing with MPI (Message Passing Interface)-enabled multi-core clusters [], distributed computing with Spark [], and CUDA programming on GPUs []. Notably, several open-source spatiotemporal engines and libraries, such as GeoTrellis [] and GeoMesa [,], have emerged with native support for distributed indexing and analysis of the remote sensing data deluge. Leaning on the spatial resilient distributed datasets (SRDD) abstraction provided by Spark, the distributed GeoTrellis library facilitates efficient indexing based on multi-dimensional space-filling curves and distributed mosaicking of large RS datasets across multiple processors. However, despite the distributed processing provided by Spark, GeoTrellis-enabled mosaicking is still not fully capable of frequently staging such an immense amount of data among processing steps while maintaining good data locality. The huge I/O challenge caused by intensive data throughput remains a non-negligible issue in distributed processing. In particular, the increasing demand for web-based on-the-fly processing, such as global monitoring on the GEE platform, further exacerbates the challenge of time-consuming mosaicking.

To address the issues mentioned above, we propose an in-memory distributed mosaicking approach based on Alluxio [] for large-scale remote sensing applications, with geo-gridded data staging on Spark-enabled multi-core clusters. Assisted by GeoTrellis, the enormous RS datasets are efficiently indexed with multi-dimensional space-filling-curve geo-encoding and organized into GeoHash-encoded grid groups according to the discrete global grid (DGG) division method. Buckets of geo-gridded data groups are then loaded directly from Alluxio into RDDs, which are processed concurrently as grid mosaicking tasks on the Spark-enabled cluster to fully exploit parallelism. The main contribution is the introduction of Alluxio [,], a virtual distributed file system, as an in-memory data orchestration layer that facilitates in-memory big data staging among multiple mosaicking phases and maintains better data locality. Using Alluxio’s data prefetching mechanism, the required data can be transparently loaded and orchestrated into local memory ahead of processing for each RDD task. Meanwhile, intermediate processing results can be cached in memory for staging between mosaicking stages, which largely eliminates the data transfer burden between internal memory and external storage. As a result, benefiting from distributed data prefetching and in-memory data staging, we can expect enhanced parallel processing efficiency and better scalability.

The rest of this paper is organized as follows. Section 2 discusses related work on state-of-the-art large-scale RS data mosaicking. Section 3 discusses the challenges facing current mosaicking in the context of RS big data. Section 4 details the design and implementation of the novel in-memory distributed large-scale mosaicking of RS images with geo-gridded data staging. Section 5 presents a comparative analysis of experimental performance. Finally, Section 6 concludes the paper.

2. Related Works

Most traditional remote sensing techniques focus on improving mosaic quality for small data volumes. For massive remote sensing data, the low efficiency of image mosaicking becomes even more severe. For data at the terabyte (TB), petabyte (PB), and even exabyte (EB) levels, current research tends to improve machine hardware performance and to use high-performance computing to process image mosaics in parallel for better efficiency.

2.1. Traditional Way of Mosaicking

Image mosaicking is widely used in the remote sensing field. Traditional methods improve mosaic quality and operational efficiency by improving the algorithms for the basic steps, such as re-projection, registration, radiometric balancing, seamline extraction, and image blending []. Image registration is described in detail in [,]. Overlapping areas can be extracted using geo-referencing information. Kim et al. [] compute the projective transformation in the overlapping area of two given images using four seed points. To eliminate the radiometric differences among multiple remote sensing images, an effective radiometric normalization (RN) method is crucial [,]. Moghimi et al. [] used the KAZE detector and the CPLR method to reduce the radiometric differences of unregistered multi-sensor image pairs, an approach classified among effective global-model methods. Li et al. [] proposed a pairwise gamma correction model to align intensities between images, which handles radiometric differences locally. Zhang et al. [] and Yu et al. [] proposed global-to-local radiometric normalization methods that combine global and local optimization strategies to eliminate radiometric differences between images. Seamline extraction algorithms include Dijkstra’s algorithm, the dynamic programming (DP) algorithm, and the bottleneck model []. Levin et al. [] introduce several formal cost functions for evaluating mosaicking quality. In these cost functions, the similarity to the input images and the visibility of the seam are defined in the gradient domain, so that disturbing edges along the seam are minimized. However, these traditional algorithms require fine-grained analysis of the task objects, lack robustness and scalability, and are difficult to generalize to large-scale data.
In addition, when the amount of remote sensing data increases significantly, the gains from traditional mosaic algorithms become marginal and cannot meet the processing speed requirements of large-scale images. For multiple types of remote sensing images, the mosaic quality of traditional algorithms is also unsatisfactory.
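The dynamic programming (DP) seamline search listed above can be illustrated with a minimal Python sketch. It assumes a generic minimum-cost-path formulation rather than the specific algorithms of the cited works: the per-pixel cost over the overlap region is taken to be the absolute difference between two co-registered patches, and the seam runs from top to bottom, shifting at most one column per row. The function name `dp_seamline` and the toy arrays are hypothetical.

```python
import numpy as np

def dp_seamline(cost: np.ndarray) -> list[int]:
    """Minimum-cost top-to-bottom seam through a 2-D cost image.

    Returns one column index per row. Between consecutive rows the seam
    may stay in the same column or shift by one column.
    """
    rows, cols = cost.shape
    acc = cost.astype(float)                      # accumulated cost (astype copies)
    prev_col = np.zeros((rows, cols), dtype=int)  # best predecessor column per cell
    for r in range(1, rows):
        for c in range(cols):
            lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
            k = lo + int(np.argmin(acc[r - 1, lo:hi + 1]))
            prev_col[r, c] = k
            acc[r, c] += acc[r - 1, k]
    # Backtrack from the cheapest cell in the last row.
    seam = [int(np.argmin(acc[-1]))]
    for r in range(rows - 1, 0, -1):
        seam.append(int(prev_col[r, seam[-1]]))
    return seam[::-1]

# Overlap of two co-registered patches; the seam should follow low differences.
left = np.array([[9, 1, 9], [9, 1, 9], [9, 9, 1]])
right = np.zeros_like(left)
print(dp_seamline(np.abs(left - right)))  # [1, 1, 2]
```

In practice the cost image often mixes intensity and gradient differences, in the spirit of the gradient-domain cost functions mentioned above; the pure-Python inner loop favors clarity over speed.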

2.2. Large-Scale Mosaicking with HPC

Since large-scale remote sensing mosaicking requires strong computational capability, it is a computationally intensive task []. Optimizing the hardware performance of computing equipment can effectively improve computing speed during mosaicking, and high-performance computing methods such as parallel, cloud, and distributed computing can parallelize each task to improve mosaicking efficiency. Eken et al. [] propose measuring and evaluating the hardware resources available to the machine that performs the mosaicking and scaling the image resolution accordingly. Ma et al. [] explore a reusable GPU-based parallel processing model for remote sensing images and establish a set of parallel programming templates that make writing parallel remote sensing image processing algorithms simpler and more effective. Nevertheless, for massive remote sensing data, it is hard to obtain the best mosaicking results only by improving the standalone performance of computing devices and the parallel control of logical operations. In addition, CUDA programming is oriented to computationally intensive tasks and requires fine-grained settings for different complex logic operations, which increases programming difficulty and reduces flexibility.

Parallel processing architectures such as cluster and distributed structures offer a new direction for remote sensing big data processing. Researchers have used high-performance computing frameworks such as the Message Passing Interface (MPI) [], Hadoop [], and Spark [] to deploy computing tasks on multiple machines, decomposing the serial computing task of a single node into multiple parallel subtasks, which effectively improves computational efficiency. Wang et al. [] use a shared memory programming algorithm (SMPA) based on POSIX threads and a distributed memory programming algorithm (DMPA) based on MPI to achieve parallel mosaicking. To reduce memory consumption and improve load balancing in MPI programming, Ma et al. [] propose task-tree-based mosaicking of large-scale remotely sensed images with dynamic DAG scheduling. The drawback of these works is that although MPI improves parallelism, it increases the communication overhead among nodes and the programming complexity []. When the data volume of the mosaic grows further, the complex task scheduling may cause the program to crash. Traditional parallel processing platforms are expensive and have poor scalability and fault tolerance, resulting in a data transmission bottleneck []. Spark is a fast, general-purpose computing engine designed for large-scale data processing. It has a concise interface and greater expressiveness and performance than Hadoop and MPI; moreover, it supports interactive computing and runs more complex algorithms faster []. Spark has been widely used in remote sensing image processing: Liu et al. [] and Wang et al. [] implemented remote sensing image segmentation with Spark, and Sun et al. [] established a Spark-based remote sensing data processing platform. Compared with CUDA programming and MPI, Spark is more fault-tolerant and scalable [], easing the performance limitations of other parallel platforms.
It also provides a distributed memory abstraction of the cluster (RDD, Resilient Distributed Datasets) and divides jobs into stages. Although its control is not as fine-grained as that of MPI, it supports lightweight and fast processing, and its cluster-distributed computing is more efficient for massive data.

2.3. Current Approaches to Accelerate Large-Scale Mosaicking

To support geographic information analysis and distributed computing in big data scenarios, open-source geospatial processing tools built on distributed systems, such as GeoSpark [], GeoTrellis [], and GeoMesa [], are widely used. GeoSpark combines traditional GIS with Spark and provides distributed indexing and querying of spatial vector data in Apache Spark. GeoMesa provides spatial indexing on top of the Apache Accumulo key-value store and also targets spatial vector data. The core Spark component of GeoMesa derives from the GeoSpark algorithm. GeoMesa mainly supports the storage and querying of massive spatiotemporal data, while its data processing capability is limited. GeoTrellis is a Scala library and framework that uses Spark to process raster data at network speed, which makes it an important tool for big-data satellite image processing []. As a high-performance Spark-based raster computing framework, GeoTrellis supports reading, writing, converting, and rendering remote sensing image data, and it adopts a distributed architecture to process large amounts of remote sensing data in parallel. However, frequent disk I/O of remote sensing images, intermediate data, and results causes I/O bottlenecks that limit overall computing performance. A parallel strategy that divides and coordinates the computing tasks of child nodes can improve data processing performance.

Distributed file storage systems such as the Google File System (GFS) [], the Hadoop Distributed File System (HDFS) [], Lustre [], Ceph [], GridFS [], and FastDFS [] can alleviate the I/O bottleneck while meeting the needs of distributed computing and storage [,]. With the increasing demand for large-scale mosaicking, however, frequent and inefficient I/O operations degrade overall performance, while traditional distributed file storage systems are expensive, have poor scalability and fault tolerance [], and cannot meet the performance requirements. Caching frequently accessed data avoids going back to the original data source, which improves I/O performance []. Alluxio is a memory-centric file management system that caches data in memory [] to provide a high-speed data access layer and improves I/O speed by making the best use of cluster memory. In addition, Alluxio enables computing programs to achieve memory-level I/O throughput by moving computation closer to the stored data. Combining it with computationally intensive remote sensing image mosaicking can alleviate I/O bottlenecks in the mosaicking process.
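Why a cache layer helps can be shown with a toy Python model: two mosaicking stages touch the same scenes, but only the first touch reaches the backing store. This is not Alluxio’s API. `read_from_source` is a hypothetical stand-in for a read from external storage, and the standard-library `functools.lru_cache` plays the role of the in-memory tier; unlike Alluxio, this cache is local to one Python process rather than shared across a cluster.

```python
from functools import lru_cache

source_reads = 0  # how many times the slow backing store was hit

def read_from_source(path: str) -> bytes:
    """Hypothetical read from external storage (disk or object store)."""
    global source_reads
    source_reads += 1
    return path.encode()  # placeholder for the file contents

@lru_cache(maxsize=128)
def cached_read(path: str) -> bytes:
    """Memory tier: repeated requests for a path are served from the cache."""
    return read_from_source(path)

scenes = ["scene_a.tif", "scene_b.tif", "scene_c.tif"]
for _stage in ("radiometric normalization", "seamline detection"):
    for s in scenes:
        cached_read(s)
print(source_reads, cached_read.cache_info().hits)  # 3 3
```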

3. Challenging Issues

In this section, we discuss in detail the major challenges facing traditional mosaicking, especially in large-scale to global research. The first is the difficulty of efficiently organizing and quickly indexing a staggering number of “messy” images with overlapping regions (Section 3.1). The second is the out-of-core computing problem caused by extremely intensive and heavy I/O (Section 3.2). The third is meeting the recently emerging requirement of on-the-fly processing, that is, how to exploit parallelism while overcoming the I/O bottleneck to achieve excellent performance and scalability (Section 3.3).

3.1. Difficulty in Organizing and Indexing Huge Amounts of Data

When scaled to a large region, mosaicking is challenged by a staggering number of “messy” remote sensing scenes with overlapping regions. A regional-scale mosaic [] composed of thousands of Sentinel-2 scenes may exceed one terabyte and take hours or even a couple of days to process. Meanwhile, a national or global mosaic [] with a sheer volume of dozens of terabytes may involve tens of thousands of scenes and require days or weeks. Clearly, large-scale mosaicking is overwhelmed by huge volumes of remote sensing images. In these circumstances, traditional mosaicking, which suffers from intolerably long processing times and poor scalability, is no longer applicable.

For performance, data parallelism is generally exploited by decomposing a large-scale mosaicking problem covering the entire ROI into a large group of regional mosaic tasks that process smaller bunches of data in parallel, using a divide-and-conquer data partition method. Several approaches are available for data ordering and task partitioning, such as a minimal-spanning-tree-based task organizing method [] and a DAG (Directed Acyclic Graph)-based task tree construction strategy []; they all adopt recursive task partitioning and try to express the whole processing workload as a nearly balanced task tree. Nevertheless, these recursive data partitions essentially work scene by scene, and most of the smaller jobs that are supposed to run in parallel have ordering constraints. As a result, the data parallelism of these approaches cannot be fully exploited, which inevitably leads to unsatisfactory parallel efficiency. The main reason is that the criss-cross relationships among large numbers of messy, geographically adjacent images make data ordering and task partitioning not only laborious but also difficult. Firstly, organizing the excessive number of images and sorting out the intricate relationships among them for task partitioning from their longitude and latitude coordinates is remarkably complicated and time-consuming. Secondly, no matter how the data are grouped and organized into jobs, adjacent mosaicking jobs are mostly data-dependent on each other, since they inevitably share some scenes. Thus, quickly indexing a specified image among these immense RS data and arranging tasks can become a cumbersome problem. Therefore, efficient data organization and indexing across large numbers of images is paramount for proper task partitioning and better data parallelism.

3.2. Extremely Heavy and Intensive Data I/O

Although the huge computational requirements can be tackled by exploiting data parallelism on an MPI- or Spark-enabled cluster, large-scale mosaicking is still stuck with the tremendous, intensive data I/O introduced by the immense number of images required. Despite the increasing RAM capacity of a single computer, datasets that easily measure in terabytes are far too large to fit into local memory all at once. Even in a distributed scenario running in parallel across multiple processors, many individual I/O operations are indispensable for loading each thread’s bunch of datasets from disk scene by scene.

Mainstream distributed file systems, such as HDFS (Hadoop Distributed File System) and Lustre, are dedicated to providing high-performance parallel I/O while improving data locality through data replication policies. However, although these distributed file systems may relieve the massive I/O overhead to some extent, loading a large number of images from external storage into memory all at once remains a time-consuming I/O bottleneck in large-scale mosaicking.

To further complicate matters, multi-phase mosaicking follows a composite processing chain of re-projection, radiometric normalization, seamline detection, and blending to produce a satisfactory seamless view. Due to the limited capacity of local memory, each processing step has no choice but to resort to slower bulk external storage to host most of the intermediate data it produces, instead of keeping them all in memory. Hence, the subsequent processing step can only process the small part of the intermediate data that is cached in RAM and must spend plenty of extra time on I/O operations to reload the remaining data from external storage. Consequently, frequently moving large amounts of data between limited memory and external storage not only adds a substantial I/O penalty but also leads to computationally poor out-of-core computing. As a result, despite HPC accelerators, large-scale mosaicking remains stuck with extremely heavy and intensive I/O, which inevitably leads to poor performance and discouraging scalability. Therefore, an efficient data staging strategy with better intermediate data caching and prefetching is critically needed to improve data locality and performance.
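The I/O penalty described above can be made concrete with a deliberately simplified counting model (our assumption, not a measured result): every stage hands the same number of data blocks to the next stage, and any block that does not fit in memory is written to external storage and read back. The initial load and the final write are ignored, and the block counts in the example are hypothetical.

```python
STAGES = ["re-projection", "radiometric normalization", "seamline detection", "blending"]

def intermediate_io(n_blocks: int, mem_blocks: int, n_stages: int = len(STAGES)) -> int:
    """Block transfers to and from external storage between stages (toy model).

    Each stage hands n_blocks of intermediate output to the next one. Blocks
    that do not fit in mem_blocks are written out and read back, costing two
    transfers each, at every one of the n_stages - 1 hand-offs.
    """
    spilled = max(n_blocks - mem_blocks, 0)
    return 2 * spilled * (n_stages - 1)

print(intermediate_io(1000, 200))   # 4800: memory holds only 20% of the data
print(intermediate_io(1000, 1000))  # 0: everything staged in memory
```

Under this model the intermediate traffic grows linearly with both the spilled volume and the number of stages, which is the cost that in-memory staging aims to remove.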

3.3. Performance and Scalability for On-the-Fly Processing

With the proliferation of EO data available online, there has been a remarkable increase in demand for web-based global environmental or resource monitoring on dominant cloud platforms such as GEE. These web-based applications generally perform on-the-fly processing on a large bunch of RS data inside the requested ROI and expect results in near real time. When scaled to large or even global regions, supporting such time-critical on-the-fly processing requires much higher performance and scalability. As an indispensable task of large-scale RS applications, mosaicking therefore faces a new critical performance challenge from the time-critical demands of web-based applications. Despite ongoing distributed computing efforts to accelerate large-scale mosaicking, Spark-facilitated and even GeoTrellis-enabled approaches are still not fully capable of meeting the higher performance needs and the aggravated I/O burden. Therefore, sufficiently exploiting parallelism for enhanced performance and scalability is paramount not only to large-scale mosaicking but also to on-the-fly RS data applications.

4. Distributed In-Memory Mosaicking Accelerated with Alluxio

To tackle the above challenges and achieve satisfying performance gains, we present an in-memory distributed large-scale mosaicking approach with geo-gridded data staging accelerated by Alluxio. The main idea is to adopt Spark as the basic distributed computing framework that implements large-scale mosaicking as geo-gridded tasks and to employ Alluxio to facilitate in-memory orchestration and staging of big remote sensing data throughout the entire processing chain. In practice, the RS images are too numerous to feed directly into the Spark engine, since the whole process could stall, leading to discouraging performance or even infeasibility. Assisted by GeoTrellis, this approach organizes the enormous number of “messy” RS images into GeoHash-encoded grid tasks through discrete global grid (DGG) division and orders them with multi-dimensional space-filling curves and geo-encoded indexing. Powered by Spark, it breaks the entire large-scale mosaicking down into groups of grid tasks and runs them in parallel as buckets of Spark jobs through divide and conquer. In this way, two-level parallelism can be exploited, since each geo-encoded grid task can be further split into fine-grained Spark jobs. The main contribution of this paper is a new way to break through the I/O bottleneck: Alluxio, a virtual distributed file system, enables in-memory data staging throughout all phases of the mosaicking procedure through adaptive data prefetching. Thus, it not only prefetches the data required for processing but also orchestrates the intermediate results for the next processing step through data staging and transparent prefetching. Consequently, data transmission along the processing chain is largely eliminated and better data locality is maintained.
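The two-level parallelism described above (grid tasks at the first level, fine-grained jobs inside each grid at the second) can be sketched in plain Python. This is only a structural illustration under stated assumptions: thread pools from `concurrent.futures` stand in for Spark executors, the tile mean stands in for real mosaicking work, and the grid tasks are hypothetical. Because of CPython’s global interpreter lock, threads would not actually speed up CPU-bound numeric work; the point is the nesting, not the speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def mosaic_tile(tile: list[float]) -> float:
    """Second level: a fine-grained job; the tile mean stands in for real work."""
    return sum(tile) / len(tile)

def run_grid_task(item: tuple[str, list[list[float]]]) -> tuple[str, list[float]]:
    """First level: one grid task fans its tiles out to an inner pool."""
    code, tiles = item
    with ThreadPoolExecutor(max_workers=4) as inner:
        return code, list(inner.map(mosaic_tile, tiles))

# Hypothetical grid tasks keyed by their grid codes.
grid_tasks = {
    "101001": [[1.0, 3.0], [2.0, 2.0]],
    "100010": [[4.0, 6.0]],
}
with ThreadPoolExecutor(max_workers=2) as outer:
    results = dict(outer.map(run_grid_task, grid_tasks.items()))
print(results)  # {'101001': [2.0, 2.0], '100010': [5.0]}
```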

4.1. RS Imagery Organization and Grid Task Construction

When processing cluttered large-scale remote sensing images, if the overall processing is performed without preprocessing, the retrieval time and the limited computing power of the machines will seriously drag down mosaicking performance because of the massive amount of data. Therefore, before the mosaic computation, an appropriate indexing method is set up for the remote sensing images, and parallel tasks are divided in advance and allocated to parallel computing nodes, which reduces the overall indexing time and greatly relieves the pressure on the computing nodes.

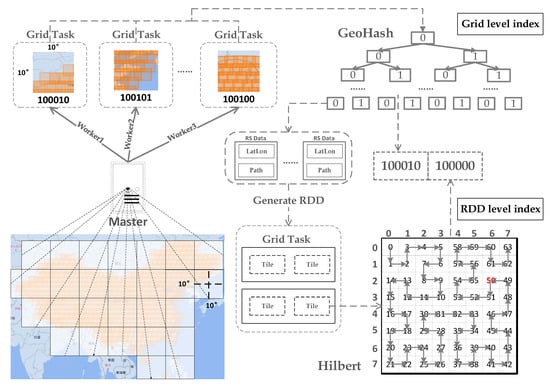

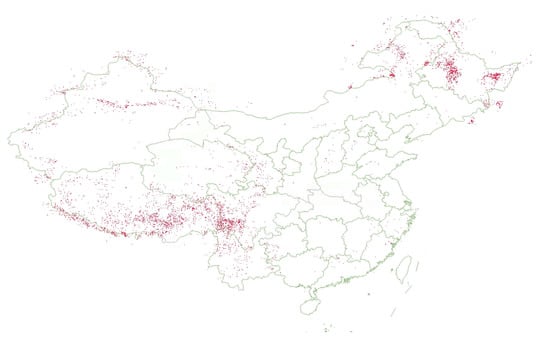

We divide the overall extent of the remote sensing images into grids and assign the images to be processed to grids according to their longitude and latitude ranges, thus building parallelizable grid tasks that greatly speed up retrieval and mosaicking. We first read the header information of all the images to be stitched, collect the latitude and longitude of the four corners of each image, and calculate the latitude and longitude range of the entire mosaic. As shown in Figure 1, this range is cut into multiple square grids of 10 degrees; each grid is a separate grid task sent to a different node for parallel computing. Based on the latitude and longitude of an image’s four corners, we determine which grids it belongs to: if any of the four corners lies within a grid, the image is assigned to that grid. Therefore, an image may be assigned to at most four adjacent grids, and at minimum it lies entirely within one grid. We require a grid to contain at least one remote sensing image; otherwise, the grid is considered empty and is not submitted to Spark for processing. In this paper, a binary tree ordered first by longitude and then by latitude stores the information of the remote sensing images in each grid, including the latitude and longitude coordinates of their four corners and their absolute paths in the file system.

Figure 1.

Remote sensing images covering China are used in the experiment and divided into grids for parallel computation. The grid is divided by 10 degrees of latitude and longitude. The Spark master node receives the grid division result and assigns each grid to a different worker node for computation. We build a double index for the remote sensing image data: an external index based on GeoHash encoding for the grids and an internal Hilbert index for the RDDs read by the Spark worker nodes.
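The corner-based grid assignment described above can be sketched in Python as follows. It assumes 10° cells measured from an assumed origin (lon0, lat0) and scenes smaller than one cell, so that each scene touches at most four cells. The `Scene` record, the function names, and the use of a sorted list searched with the standard `bisect` module in place of the binary tree are illustrative assumptions, not the paper’s implementation.

```python
from bisect import bisect_left
from dataclasses import dataclass

GRID_DEG = 10.0  # grid size used in the text

@dataclass(frozen=True)
class Scene:
    """Hypothetical scene record: file path plus four (lon, lat) corners."""
    path: str
    corners: tuple[tuple[float, float], ...]

def cell_of(lon: float, lat: float, lon0: float, lat0: float) -> tuple[int, int]:
    """(column, row) of the 10-degree cell containing a point."""
    return int((lon - lon0) // GRID_DEG), int((lat - lat0) // GRID_DEG)

def assign_to_grids(scenes, lon0, lat0):
    """Map each non-empty cell to the scenes that have at least one corner in it.

    A scene smaller than one cell touches at most four cells. Cells that
    receive no scene never appear as keys, so they are never submitted.
    """
    grids: dict[tuple[int, int], list[Scene]] = {}
    for s in scenes:
        for cell in {cell_of(lon, lat, lon0, lat0) for lon, lat in s.corners}:
            grids.setdefault(cell, []).append(s)
    return grids

def build_index(grid_scenes):
    """Per-grid index ordered by longitude, then latitude (sorted list stands in for the tree)."""
    return sorted((min(x for x, _ in s.corners), min(y for _, y in s.corners), s.path)
                  for s in grid_scenes)

def lookup(index, lon, lat):
    """Path of the scene whose lower-left corner is (lon, lat), or None."""
    i = bisect_left(index, (lon, lat))
    if i < len(index) and index[i][:2] == (lon, lat):
        return index[i][2]
    return None

a = Scene("a.tif", ((128, 12), (136, 12), (128, 18), (136, 18)))  # straddles 4 cells
b = Scene("b.tif", ((95, 20), (101, 20), (95, 23), (101, 23)))    # inside 1 cell
grids = assign_to_grids([a, b], lon0=73, lat0=4)
print(sorted(grids))  # [(2, 1), (5, 0), (5, 1), (6, 0), (6, 1)]
print(lookup(build_index(grids[(5, 1)]), 128, 12))  # a.tif
```

A scene larger than one cell could also cover cells that contain none of its corners; the four-corner rule relies on scenes being smaller than the 10° grid.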

Before mosaicking large-scale remote sensing images, the data must be organized and sorted so that the images of the corresponding area can be quickly indexed during the later parallel mosaicking tasks, thereby improving mosaicking speed. Our in-memory distributed large-scale mosaicking algorithm builds a two-layer RDD RS data index; the details are shown in Algorithm 1. The external index is based on the latitude and longitude range of the overall task: it organizes large amounts of RS images into GeoHash-encoded grid tasks through DGG division and orders them with multi-dimensional Z-curve geo-encoded indexing. The internal index uses the Hilbert curve to index the RDD slices generated by reading the remote sensing images in each grid task.
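The internal Hilbert index can be sketched with the standard iterative conversion from cell coordinates to distance along the Hilbert curve (the widely published `xy2d` formulation). The assumptions here are that the tiles (RDD slices) of one grid task are addressed by integer (column, row) positions on an n-by-n lattice with n a power of two; the function names are hypothetical and this is not the GeoTrellis API.

```python
def _rotate(n: int, x: int, y: int, rx: int, ry: int) -> tuple[int, int]:
    """Rotate/flip a quadrant so the sub-curve has the canonical orientation."""
    if ry == 0:
        if rx == 1:
            x, y = n - 1 - x, n - 1 - y
        x, y = y, x
    return x, y

def hilbert_key(n: int, x: int, y: int) -> int:
    """Distance along the Hilbert curve of cell (x, y) in an n-by-n grid (n a power of 2)."""
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if x & s else 0
        ry = 1 if y & s else 0
        d += s * s * ((3 * rx) ^ ry)
        x, y = _rotate(n, x, y, rx, ry)
        s //= 2
    return d

# Order the tiles of one grid task along the curve.
tiles = [(x, y) for y in range(4) for x in range(4)]
ordered = sorted(tiles, key=lambda t: hilbert_key(4, *t))
print(ordered[:4])  # [(0, 0), (1, 0), (1, 1), (0, 1)]
```

Consecutive keys always correspond to edge-adjacent tiles, which is the locality property that motivates a Hilbert order over a simple row-major order.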

Algorithm 1: Two-layer RDD RS data indexing construction in in-memory distributed large-scale mosaicking

Input: RS data InputPath
Output: Two-layer RDD RS data indexing

For the external grid index, we modify the GeoHash encoding method. GeoHash repeatedly bisects the longitude and latitude intervals to produce binary codes and then interleaves the longitude and latitude bits in alternating (odd and even) positions. Because our coding is not meant to control precision through code length but to define grids of a fixed size suited to the data volume, we replace the bisection with grid coding at a fixed precision of 10 degrees. All grids take the longitude and latitude of the upper left corner as the origin and, following GeoHash, are encoded in 10-degree steps of the same grid size. Each image is thus encoded along a Z-order (Z-curve) space-filling curve. Suppose a mosaic area with a longitude range of 73° to 135° and a latitude range of 4° to 24° is to be encoded. The number of code bits is determined by the axis with the larger extent: the longitude difference is 62° and the latitude difference is 20°, so the longitude axis needs ⌈62/10⌉ = 7 grid columns, i.e., 3 bits per axis, and the code length is 6 bits. Codes are computed from each grid's longitude and latitude range. Take the area in red in Figure 1 as an example: for a grid with longitude 133° to 143° and latitude 14° to 24°, the longitude code is 110 and the latitude code is 001. Finally, the longitude and latitude codes are interleaved bit by bit, with longitude bits in the odd positions and latitude bits in the even positions, giving the grid code 101,001. In Figure 1, the grid received by worker1 has the code 100,010; its odd-numbered bits form the longitude code 101, which means that the longitude range of the grid is 123°–133°, and the latitude code is decoded in the same way. The four corners of each image that is read are encoded in the same way.
If the code of any of the four corners of a remote sensing image matches a grid code, the image is assigned to that grid, and the binary tree index also allows the grid to which it belongs to be located quickly.
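The modified GeoHash coding can be sketched as follows. This is an illustrative reconstruction rather than the authors' code: `code_length`, `grid_code`, and `decode` are hypothetical names, and latitude indices are counted from the southern edge of the mosaic area, which is the convention that reproduces the worked example (a 14° to 24° grid in a 4° to 24° area gives latitude code 001).

```python
import math

GRID_DEG = 10  # fixed grid precision in degrees, replacing GeoHash bisection


def code_length(lon_min, lon_max, lat_min, lat_max, grid_deg=GRID_DEG):
    """Bits per axis, set by the axis with the larger extent."""
    cells = max(1, math.ceil(max(lon_max - lon_min, lat_max - lat_min) / grid_deg))
    return max(1, math.ceil(math.log2(cells)))


def grid_code(lon, lat, area, grid_deg=GRID_DEG):
    """Interleave longitude and latitude grid indices into one bit string.

    `area` is (lon_min, lon_max, lat_min, lat_max). Longitude bits take the odd
    positions (1st, 3rd, ...) and latitude bits the even ones, i.e. a Z-order code.
    """
    lon_min, lon_max, lat_min, lat_max = area
    bits = code_length(lon_min, lon_max, lat_min, lat_max, grid_deg)
    lon_bits = format(int((lon - lon_min) // grid_deg), f"0{bits}b")
    lat_bits = format(int((lat - lat_min) // grid_deg), f"0{bits}b")
    return "".join(x + y for x, y in zip(lon_bits, lat_bits))


def decode(code, area, grid_deg=GRID_DEG):
    """Return ((lon_lo, lon_hi), (lat_lo, lat_hi)) of the grid named by `code`."""
    lon_min, _, lat_min, _ = area
    lon_lo = lon_min + int(code[0::2], 2) * grid_deg  # odd positions: longitude
    lat_lo = lat_min + int(code[1::2], 2) * grid_deg  # even positions: latitude
    return (lon_lo, lon_lo + grid_deg), (lat_lo, lat_lo + grid_deg)
```

With the area (73, 135, 4, 24), `grid_code(133, 14, area)` yields `"101001"`, and decoding `"100010"` recovers the 123° to 133° longitude band of worker1's grid. Encoding an image corner with `grid_code` and comparing it with the grid codes gives the corner-matching rule above.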

We also refine the parallelism inside each grid task: the RDD produced when Spark reads the remote sensing images is divided again into multiple tiles for parallel computing, and we build an internal index over these tiles. To suit the distribution of remote sensing data in multi-dimensional space and time, our mosaic algorithm adopts the Hilbert curve as its index method. Suppose we want to determine the position of a point on the n = 3 Hilbert curve (an 8 × 8 grid), and let the coordinates of the point be (4,4). Starting from the first level, i.e., the first square in Figure 1, we find the quadrant in which the point lies. In this example, it is the lower right quadrant, so the first part of the point's position on the Hilbert curve is 2 (4 > 3, 4 > 3; 10 in binary). We then analyze the squares in quadrant 4 of the second level and repeat the process; the next part is 0 (4 < 6, 4 < 6; binary 00). Finally, at the third level, the point falls in the upper left sub-quadrant, so the last part of the position is 0 (4 < 5, 4 < 5; binary 00). Concatenating these parts gives the position of the point on the curve as 100,000 in binary, or 32 in decimal.
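The level-by-level quadrant walk above is the standard conversion from grid coordinates to a Hilbert index. The sketch below follows the widely published iterative formulation (x to the right, y upward), which is not necessarily the orientation used by GeoTrellis's Hilbert key index; `hilbert_index` is a hypothetical name. Under this convention, the point (4,4) on the 8 × 8 grid also maps to 32.

```python
def hilbert_index(n, x, y):
    """Position of cell (x, y) on the Hilbert curve filling an n x n grid.

    n must be a power of two (n = 2**order). Each level contributes two bits,
    taken from the quadrant (rx, ry) containing the point; the frame is then
    rotated/reflected so the next level can be read in the same way.
    """
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if x & s else 0
        ry = 1 if y & s else 0
        d += s * s * ((3 * rx) ^ ry)  # two bits for this level's quadrant
        # Rotate the sub-square so its curve has the canonical orientation.
        if ry == 0:
            if rx == 1:
                x = n - 1 - x
                y = n - 1 - y
            x, y = y, x
        s //= 2
    return d
```

Usage: `format(hilbert_index(8, 4, 4), "06b")` gives `"100000"`, the three two-bit parts 10, 00, 00 from the worked example. Sorting tile keys by this index keeps spatially adjacent tiles close together in the ordering.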

According to the external grid index, we can quickly locate the remote sensing images contained in the grid by latitude and longitude through the modified GeoHash code. For the internal index, the Tile RDD obtained after partitioning the remote sensing image RDD is encoded by the Hilbert curve so that the index construction of the RDD is realized. Using two-layer RDD RS data indexing, we improve the sorting and query efficiency of RS data.

4.2. Implement Grid Task of Mosaicking as RDD Job on Spark

To make better use of the computing resources provided by the distributed architecture, our purpose is to realize the parallelization of remote sensing image mosaicking and reduce the time consumption by allocating computing tasks to different nodes for parallel computing. This paper chooses Spark, a fast and general computing engine designed for large-scale data processing, as a distributed framework for parallel computing.

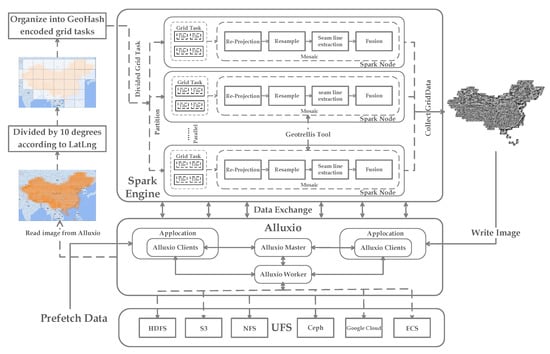

As shown in Figure 2, the program first prefetches the data from storage and divides the mosaic area into grids by longitude and latitude to obtain the grid tasks. For computation in the Spark framework, partitions are first set according to the available computing resources, so that the grid tasks are divided evenly among the worker nodes of the Spark cluster and processed in parallel. Since the cluster in our experiment has 64 cores, we set the number of RDD partitions to 64, giving a task parallelism of 64; this prevents resources from being wasted by idle worker nodes while the task runs. Mosaicking is implemented by processing the RDDs generated from the remote sensing image data. Because Spark operates on RDDs through transformations and actions, we focus on splitting the remote sensing mosaic process into transformations and actions that can run on Spark. GeoTrellis is a high-performance, Spark-based raster computing framework that supports reading, writing, converting, and rendering remote sensing image data. This paper uses the Spark interface provided by GeoTrellis to read images from storage and convert them into RDDs, the most basic data structure in Spark, and splits each parallel grid mosaic task into transformations and actions.
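To illustrate how a fixed partition count spreads grid tasks evenly, the sketch below mirrors the contiguous slicing that, to our understanding, Spark applies when a local collection is parallelized into a given number of slices (for example `sc.parallelize(tasks, 64)`). No Spark API is called here; `slice_positions` and `partition_tasks` are hypothetical helpers.

```python
def slice_positions(length, num_slices):
    """(start, end) ranges splitting `length` items into `num_slices` contiguous
    slices whose sizes differ by at most one."""
    return [((i * length) // num_slices, ((i + 1) * length) // num_slices)
            for i in range(num_slices)]


def partition_tasks(tasks, num_partitions=64):
    """Split a list of grid tasks into `num_partitions` contiguous partitions,
    one per core in the 64-core setting described above."""
    return [tasks[start:end]
            for start, end in slice_positions(len(tasks), num_partitions)]
```

For 200 tasks over 64 partitions, every partition receives three or four tasks, so no core is left with a disproportionate share.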

Figure 2.

Spark first reads the image data from Alluxio, collects the spatial extent of the tile data, establishes a geographic grid, and converts the remote sensing images into RDDs using Spark's Hadoop API and GeoTrellis. It then preprocesses the RDD of each remote sensing image, performs the mosaic computation, and finally writes the result to Alluxio to complete the mosaic operation.

Because remote sensing image data are unevenly distributed (dense in some areas and sparse in others) and a single image can be very large, the run times of the tasks in each stage of the mosaic computation can differ greatly between images. We therefore set finer-grained parallelism inside each grid task and divide the images further. The RDD generated by reading the remote sensing images is tiled into the remote sensing grid layout through raster data conversion and split into multiple tiles for parallel computing. In this process, the FloatingLayoutScheme() function specifies the tile size and thereby controls the size of the parallel tasks. By default, the tile size used for merging image data is 512 × 512. Appropriate indexes must then be built for the multiple tile RDDs generated in a grid task; GeoTrellis improves search efficiency by assigning an indexing method to the tiles inside an RDD. We set the index through KeyIndex in GeoTrellis, which supports two space-filling curve indexing methods: the Z-order curve and the Hilbert curve. Z-order curves can be used for two- and three-dimensional spaces, whereas Hilbert curves can represent n-dimensional spaces. To suit the distribution of remote sensing data in multi-dimensional space and time, our mosaic algorithm adopts the Hilbert curve index.
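The tiling arithmetic can be sketched as below, assuming square 512 × 512 tiles laid out from the image's top-left pixel. This is not GeoTrellis's `FloatingLayoutScheme`; `tile_windows` is a hypothetical helper that lists the pixel window of each tile and clips edge tiles to the image, whereas a tiled raster layer would, we assume, keep edge tiles at full size and fill the remainder with NoData.

```python
import math


def tile_windows(width, height, tile_size=512):
    """Pixel windows (col, row, x0, y0, x1, y1) covering a width x height image.

    Tiles are enumerated row by row; edge tiles are clipped to the image bounds.
    """
    cols = math.ceil(width / tile_size)
    rows = math.ceil(height / tile_size)
    windows = []
    for row in range(rows):
        for col in range(cols):
            x0, y0 = col * tile_size, row * tile_size
            windows.append((col, row, x0, y0,
                            min(x0 + tile_size, width), min(y0 + tile_size, height)))
    return windows
```

For a 10,000 × 10,000 scene, the size reported in the experiments, this gives 20 × 20 = 400 tiles, and the last row and column of tiles are 272 pixels wide. Each tile then becomes an independent unit of parallel work inside the grid task.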

To parallelize each step of the mosaic, as shown in Figure 2, we use GeoTrellis to convert reprojection, registration, seamline extraction, image blending, and the other mosaic steps into Spark transformations and actions. The Spark master node distributes the tasks to the parallel worker nodes to speed up computation. Because the mosaic requires a unified coordinate reference system, the reproject() method is used to reproject remote sensing images in different coordinate systems to EPSG:4326, so that every image read has the same georeference. The resampling methods provided by GeoTrellis include nearest-neighbor, bilinear, cubic convolution, cubic spline, and Lanczos. This paper applies histogram equalization to eliminate the radiometric differences between remote sensing images, and bilinear resampling is set as the sampling mode of the tiling process to eliminate white edges during mosaicking. Seamline extraction and image blending are completed by the Stitch() function provided by GeoTrellis. Its main idea is to perform a separate triangulation for each grid cell and fill the gaps using the cell maps of neighboring grids; 3 × 3 triangular meshes are merged repeatedly, and the mosaic is built up iteratively. This differs from general mosaic algorithms, which either simply extract the pixels to the left and right of the seamline or use a template to find the equilibrium state of local features and thereby determine the seamline position.
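The histogram equalization step can be illustrated with the textbook cumulative-distribution formulation for 8-bit (Byte) data. This is a sketch, not GeoTrellis's implementation; `equalize_histogram` is a hypothetical name, and it operates on a flat list of pixel values.

```python
def equalize_histogram(pixels, levels=256):
    """Histogram-equalize a flat sequence of integer pixel values in [0, levels).

    Each value v is mapped to round((cdf(v) - cdf_min) / (N - cdf_min) * (levels - 1)),
    which spreads the occupied grey levels over the full output range.
    """
    if not pixels:
        return []
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)  # cumulative count of the darkest value
    if n == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    lut = [round((c - cdf_min) / (n - cdf_min) * (levels - 1)) if c else 0 for c in cdf]
    return [lut[v] for v in pixels]
```

Because the lookup table is monotone, the ordering of grey levels within an image is preserved while its contrast is stretched to 0 to 255, which brings images from different scenes to a comparable brightness range before blending.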

Since adjacent grids contain overlapping images, the extent of each grid is calculated from its grid code. After a grid mosaic task is completed, the crop() function cuts the mosaic data to this extent when the grid data are collected, eliminating the overlap between grids. Finally, the RDDs are assembled and stitched into a single Raster, which can be kept in a file storage system such as HDFS or S3, or output directly as GeoTIFF data through the GeoTiff() method.
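The overlap removal reduces to intersecting each grid mosaic's extent with its grid's extent. The sketch below shows only this extent arithmetic under the grid conventions used earlier; `grid_extent` and `crop_to_grid` are hypothetical helpers, and GeoTrellis's crop() applies the equivalent operation to the raster cells themselves.

```python
def grid_extent(col, row, origin_lon, origin_lat, grid_deg=10.0):
    """(xmin, ymin, xmax, ymax) of grid (col, row), counted from the area's origin."""
    lon0 = origin_lon + col * grid_deg
    lat0 = origin_lat + row * grid_deg
    return (lon0, lat0, lon0 + grid_deg, lat0 + grid_deg)


def crop_to_grid(mosaic_extent, grid):
    """Clip a grid mosaic's extent to its grid; None if they do not intersect."""
    xmin = max(mosaic_extent[0], grid[0])
    ymin = max(mosaic_extent[1], grid[1])
    xmax = min(mosaic_extent[2], grid[2])
    ymax = min(mosaic_extent[3], grid[3])
    if xmin >= xmax or ymin >= ymax:
        return None
    return (xmin, ymin, xmax, ymax)
```

Two neighbouring grid mosaics that spill past their borders, for example one reaching 84.2° E for a grid that ends at 83° E, are clipped so that they meet exactly at the shared 83° E edge with no overlap.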

4.3. In-Memory Data Staging with Alluxio

To alleviate the poor performance caused by frequent I/O, this paper uses the Alluxio in-memory file system for temporary data storage. Alluxio is a memory-centric distributed file system that sits between the underlying storage and the upper-level computing frameworks; it unifies data access and bridges the computing frameworks and the underlying storage systems. Alluxio supports tiered storage, which manages storage media other than memory; currently supported media include memory, solid-state drives, and hard drives. Tiered storage lets Alluxio hold more data, which helps mitigate the memory capacity limits that a large number of remote sensing images impose on the mosaic process.
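The benefit of tiered storage can be seen in a toy model: data that no longer fit in memory are demoted to slower tiers instead of being dropped. The sketch below is a simplified illustration, not Alluxio's allocator or eviction policy, which are configurable and differ in detail; `TieredBlockStore` and its methods are hypothetical, and least-recently-used demotion is an assumption.

```python
from collections import OrderedDict


class TieredBlockStore:
    """Toy tiered cache: MEM first, then slower tiers.

    New or re-read blocks go to the top tier. When a tier exceeds its capacity,
    its least recently used block is demoted one tier down; a block demoted past
    the last tier leaves the cache (it still exists in the under storage).
    """

    def __init__(self, capacities):
        # capacities: [(tier_name, max_blocks), ...], fastest tier first
        self.tiers = [(name, cap, OrderedDict()) for name, cap in capacities]

    def _place(self, block_id, data, level):
        if level >= len(self.tiers):
            return  # evicted from every tier
        _, cap, blocks = self.tiers[level]
        blocks[block_id] = data
        blocks.move_to_end(block_id)
        if len(blocks) > cap:
            victim, victim_data = blocks.popitem(last=False)  # least recently used
            self._place(victim, victim_data, level + 1)

    def _remove(self, block_id):
        for _, _, blocks in self.tiers:
            if block_id in blocks:
                return blocks.pop(block_id)
        return None

    def write(self, block_id, data):
        self._remove(block_id)
        self._place(block_id, data, 0)

    def where(self, block_id):
        for name, _, blocks in self.tiers:
            if block_id in blocks:
                return name
        return None

    def read(self, block_id):
        """Return (tier the block was found in, data) and promote it; None on a miss."""
        tier = self.where(block_id)
        if tier is None:
            return None
        data = self._remove(block_id)
        self._place(block_id, data, 0)  # hot block moves back to memory
        return tier, data
```

With two blocks per tier and five image blocks written, the two newest stay in MEM, the next two spill to SSD, and the oldest to HDD, so all five remain cached even though memory holds only two.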

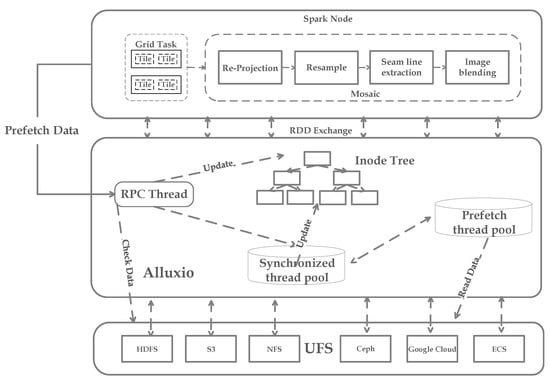

Efficient data I/O is crucial for large-scale remote sensing mosaics. Alluxio worker nodes use an internal cache mechanism to keep hot data in memory. The jobs of the mosaic algorithm are submitted to the Spark cluster and scheduled by the Cluster Manager. As shown in Figure 3, once the Alluxio master receives the cluster command, it can issue prefetch commands to cache the remote sensing data while waiting for other tasks running in the cluster to finish. Alluxio maintains a synchronization thread pool and a prefetch thread pool. During prefetching, the Remote Procedure Call (RPC) thread reads the image file information, updates the InodeTree in Alluxio, and recursively synchronizes the remote sensing images in Alluxio with the underlying file system. Alluxio submits the synchronized information to the synchronization thread pool, which traverses the images entering the cache breadth-first, while the prefetch thread pool reads the remote sensing image data from the underlying file system and thereby speeds up the synchronization thread pool. As a result, the input data have already been cached when the mosaic task starts, and the cluster schedules the mosaic job directly to the corresponding node.
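The interplay of breadth-first metadata synchronization and a separate prefetch pool can be modelled in a few lines. This is a toy analogy, not Alluxio's internals: a nested dict stands in for the underlying file system, a single thread stands in for the synchronization pool, a `ThreadPoolExecutor` stands in for the prefetch pool, and `prefetch` is a hypothetical name.

```python
import threading
from collections import deque
from concurrent.futures import ThreadPoolExecutor


def prefetch(ufs_root, workers=4):
    """Breadth-first metadata sync plus parallel data prefetch (toy model).

    ufs_root: nested dict standing in for the under file system; a dict value
    is a directory and a bytes value is a file.
    Returns (paths in the order their metadata was synced, {file path: data}).
    """
    synced = []  # stands in for the inode tree, in breadth-first order
    cache = {}
    lock = threading.Lock()

    def load(path, data):
        # Hypothetical read of one file from the under file system into the cache.
        with lock:
            cache[path] = bytes(data)

    queue = deque([("", ufs_root)])
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = []
        while queue:  # breadth-first traversal of the directory tree
            path, node = queue.popleft()
            for name in sorted(node):
                child, child_path = node[name], f"{path}/{name}"
                synced.append(child_path)
                if isinstance(child, dict):
                    queue.append((child_path, child))
                else:
                    # Data reads overlap with the ongoing metadata traversal.
                    futures.append(pool.submit(load, child_path, child))
        for future in futures:
            future.result()  # re-raise any read error
    return synced, cache
```

Metadata for every level of the tree is recorded before its children are visited, while file contents are loaded concurrently, so by the time the traversal ends the images are already in the cache.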

Figure 3.

The RPC thread reads the image information, updates the InodeTree information in Alluxio, and synchronizes the remote sensing images in Alluxio with the underlying file system. Alluxio submits the synchronized information to the synchronization thread pool, while the prefetch thread pool is responsible for reading remote sensing image information from the underlying file system.

Through its data staging function, Alluxio supports both asynchronous and synchronous caching, with strong consistency and fast metadata operations. Alluxio can combine caching strategies defined for different data types, which can significantly improve the efficiency of the entire process and reduce the I/O pressure on the underlying file system. For read caching, Alluxio can read remote sensing image files directly from local memory and also supports reading in-memory data from remote worker nodes, which is convenient for processing remote sensing data deployed on distributed file systems. In addition to improving read performance through caching, Alluxio localizes memory writes, which provides write caching for distributed systems and thereby increases the output write bandwidth.

Since Alluxio is compatible with Hadoop, Spark and MapReduce programs can run on Alluxio without modifying their code, so our in-memory distributed large-scale mosaicking program can easily be deployed on Alluxio. In our in-memory distributed large-scale mosaic algorithm, storage and computation are separated, and data transfer between Spark and Alluxio can proceed at memory speed. During computation, data in Spark that share certain characteristics (for example, the same key) must ultimately be aggregated on one computing node; this is the Shuffle process of a Spark job. These data are distributed across the storage nodes and processed by the computing units of different nodes. To prevent memory overflow and loss of partition data, Spark writes the data to multiple hard disks during this process. We use the in-memory data staging provided by Alluxio to keep the temporary Shuffle data in Alluxio memory, thereby achieving memory-level data exchange and effectively reducing the computing time. For the underlying storage system, worker nodes are scheduled by the Alluxio master to exchange data with the underlying HDFS file system at memory level. Owing to these characteristics, the efficiency of the entire process is significantly improved, and the I/O pressure on the underlying file system is reduced.

5. Experiments

5.1. Comparison of Different Types of Mosaic Methods

This paper implements the remote sensing image mosaic algorithm with Spark and uses the distributed in-memory file system Alluxio for file data storage. The input data are the processing results for nationwide fire sites together with their original remote sensing images: nearly 600 scenes of data type Byte, each about 10,000 × 10,000 pixels, including Landsat-8, Landsat-7, Landsat-5, Sentinel-1A, and other data. Details are given in Table 1. Each Landsat scene is about 60 MB, for a total of about 36 GB, and each Sentinel-1 scene is about 850 MB.

Table 1.

Summary of all kinds of data used in the experiment.

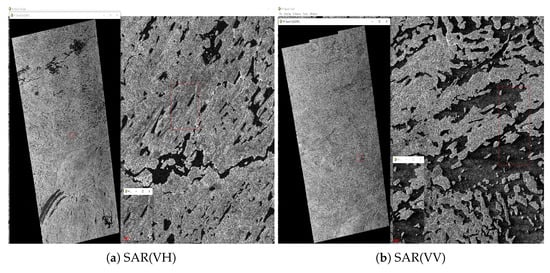

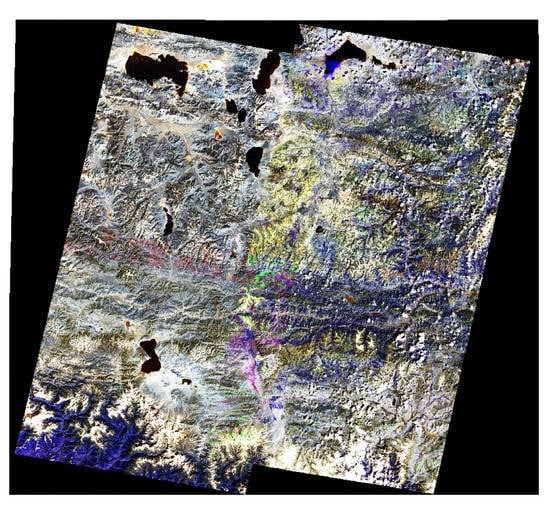

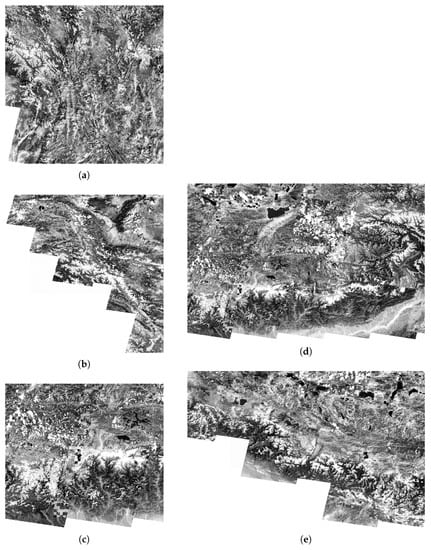

In the experiments, optical images (Landsat-5, 7, and 8) are used for single-band mosaic experiments and SAR images (Sentinel-1) for SAR mosaicking. The Sentinel-1 images used are Ground Range Detected (GRD) products in VV + VH polarization, acquired in Interferometric Wide swath (IW) mode. As shown in Figure 4, we mosaic three Sentinel-1 SAR images of 850 MB each. As shown in Figure 5, to show the mosaic effect more clearly, we mosaic four Landsat-7 images and use bands 2, 3, and 4 for RGB synthesis. The results show that, for images from the same type of sensor, the proposed algorithm handles seamline extraction well: in the mosaics of both the SAR and the Landsat-7 images, no obvious seamlines are visible in the overlapping areas. The RGB composite also shows no obvious color distortion, and the image blending is good.

Figure 4.

SAR Image Mosaic Results.

Figure 5.

RGB composite result of mosaic of optical images.

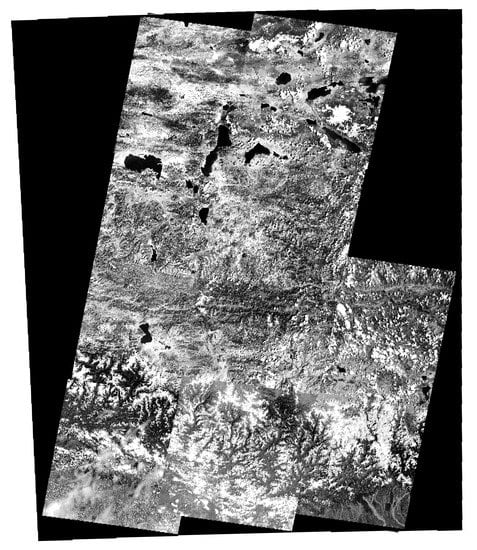

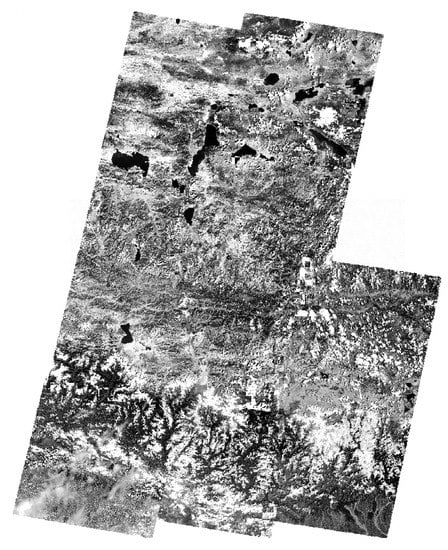

For comparison, this paper uses ENVI as a stand-alone mosaicking tool. We selected 10 Landsat-5 and Landsat-7 images for a small-scale mosaic experiment. Figure 6 shows the ENVI mosaic, for which histogram matching was used to eliminate radiometric differences and seamlines were created with Auto Generate Seamlines. Figure 7 shows the mosaic produced by our method on Spark. The ENVI result has obvious seamlines and, because the input mixes Landsat-5 and Landsat-7 images, obvious radiometric differences. The mosaic produced by the proposed algorithm is smoother, without obvious seamlines or radiometric differences, and is thus better than the ENVI result.

Figure 6.

ENVI mosaic result.

Figure 7.

Spark mosaic result.

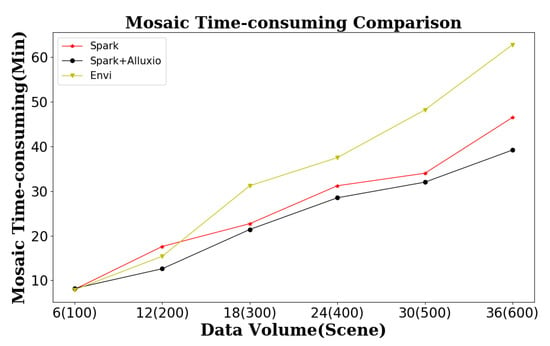

To compare time consumption, we mosaic 100 to 600 scenes and compare the total time of Spark with Alluxio as the file system against Spark alone to verify the effectiveness of the approach. Since the input data do not necessarily share the same geographic reference system, a unified coordinate system conversion is performed first; this step is included in the total time.

Figure 8 shows the mosaicking time of ENVI, Spark, and Spark+Alluxio under different data volumes. Figure 9 shows the mosaic of images covering burned areas in China, which are divided into 16 groups of 10-degree grids according to the overall coverage.

Figure 8.

Comparison of remote sensing image mosaic time for ENVI, Spark, and Spark with Alluxio.

Figure 9.

Mosaic image of forest cover products nationwide.

The experimental results show that with 100 scenes (about 6 GB), the three methods run at similar speeds, with ENVI slightly faster than the other two; that is, when the number of remote sensing images is small, Spark mosaics more slowly than ENVI. This is because ENVI's built-in mosaic algorithm is superior to the one provided by GeoTrellis, and Spark requires different computing nodes to coordinate data processing, so with little data, data transmission and synchronization waits take a relatively long time. In addition, Spark must frequently serialize and deserialize data, which makes it unsuitable for small data volumes. Although ENVI has the advantage for small mosaics, Spark's advantage emerges as the data volume grows. At about 200 scenes (about 12 GB), Spark+Alluxio is faster than Spark and nearly 18.2% faster than ENVI, whereas Spark alone is still slower than ENVI because of I/O constraints. At 300 scenes (about 18 GB), ENVI is the slowest of the three, and as the data volume increases further, its mosaicking speed drops significantly because it is limited to the resources of a single machine. If the data volume increases further still, ENVI cannot even complete the mosaicking of all the remote sensing images. We also found that the mosaicking time of pure Spark does not increase linearly with the data volume; the comparison with Spark+Alluxio indicates that an I/O bottleneck causes this. With Alluxio, Spark caches the large-scale data in memory and benefits from memory-level I/O throughput, which greatly improves mosaicking performance.
When mosaicking 600 scenes, in-memory data staging with Alluxio improves the mosaicking speed by nearly 10% compared with Spark alone.

Based on this experiment, our in-memory mosaicking algorithm accelerated with Alluxio can effectively improve processing efficiency for large-scale spatiotemporal remote sensing images.

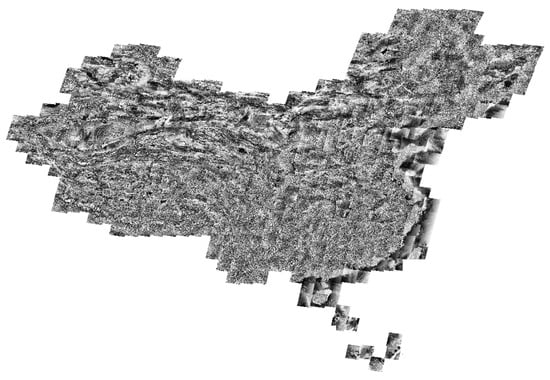

5.2. Large-Scale Data Mosaic Experiment

To test mosaic performance with different numbers of nodes, we use original Landsat-5 and Landsat-7 images. The mosaic covers the whole of China and includes 607 original remote sensing images with a total size of 28 GB. The Spark+Alluxio mosaic algorithm divides the area into 36 grids, and the total size of the mosaicked image is 20.1 GB. The mosaic result is shown in Figure 10.

Figure 10.

The mosaic result covering the entire area of China using Landsat-5 and Landsat-7 satellite images.

Figure 11 shows the mosaic results of four grids in the mosaic covering China. Since the mosaic must cover the whole of China, we selected Landsat-5 and Landsat-7 images acquired between January 1999 and December 2000. Owing to the long acquisition period and large spatial extent, many images overlap in some areas, and some original images contain cloud and haze. In addition, to compare the I/O efficiency of large-scale mosaicking, the experiments simplified color leveling, radiometric equalization, and other operations. These factors cause the radiometric differences in the mosaic results in Figure 11. Subsequent studies will use more advanced radiometric balancing methods to eliminate radiometric differences between RS images. This article focuses on parallelizing the basic stitching steps and using Alluxio to relieve I/O pressure; it does not treat color distortion and radiometric balance in detail. The mosaic algorithm proposed in this paper is therefore better suited to mosaicking preprocessed secondary remote sensing products.

Figure 11.

Five zoomed areas in the mosaic result covering the entire area of China.

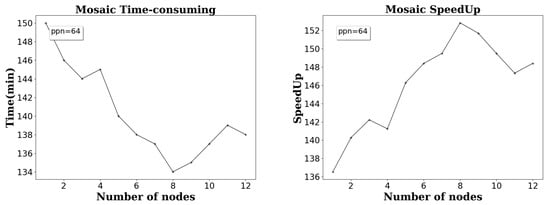

To show the parallel efficiency of the mosaic experiments and the I/O improvement brought by a distributed file system, this paper conducts a simplified experiment that uses histogram equalization to improve image contrast. Figure 12 shows how the number of nodes affects mosaicking performance in the nationwide experiment, which we ran with 1 to 12 worker nodes. As the number of nodes increases up to eight, the performance of the parallel mosaicking algorithm improves; with eight nodes, it is nearly 11% higher than with a single worker node. However, when the number of nodes increases to nine, performance decreases instead. In a distributed system, adding nodes increases the probability of errors, the communication cost between nodes, and the geographical distance between nodes, and therefore the theoretical minimum delay between them. Because of the growing communication cost and synchronization latency, the performance of the mosaic algorithm degrades with nine or more nodes.

Figure 12.

Parallel remote sensing image mosaic experiments with 1 to 12 computing nodes and a PPN (processes per node) of 64. Each computing node has a 64-core CPU and 256 GB of memory.

Experimental results show that, for our in-memory distributed large-scale mosaicking procedure, simply increasing the number of nodes does not significantly improve the mosaicking speed and can even have a negative impact.

5.3. Discussion

To verify the efficiency of our enhanced in-memory mosaicking method, we conducted both a regional comparative experiment and a large-scale performance experiment. The regional comparative experiments compare ENVI with our mosaicking method using not only Landsat-5/7 but also Sentinel-1 data. The results in Figure 6 and Figure 7 show that our method produces a smoother and more radiometrically balanced mosaic than ENVI, whose result has obvious seamlines and radiometric differences. To assess how adding nodes affects the speed of our method, we used Landsat-5/7 data covering the whole of China to measure the mosaic time with different numbers of nodes. The results in Figure 12 show that the mosaicking speed does not keep increasing linearly with the number of nodes but decreases once the number of nodes reaches nine. This is because the probability of errors, the delay, and the communication cost between nodes all grow as nodes are added to a distributed system, so the performance of the mosaicking algorithm starts to degrade. To assess how data volume affects the speed of our method, we selected the fire-burned product data of China and used the mosaicking time for different data volumes as the performance index, comparing the Spark, Spark+Alluxio, and ENVI mosaic methods. The results in Figure 8 show that when the data volume is small, ENVI is faster than Spark and Spark+Alluxio, owing to the long waits for data transmission and synchronization among the Spark nodes. However, when the data volume increases to 600 scenes, Spark+Alluxio is 24.3% faster than ENVI and 72.1% faster than Spark.
This is because our algorithm not only parallelizes the mosaicking process but also uses Alluxio for data staging, which greatly reduces the data I/O pressure and the penalty of exchanging data between internal and external memory, thus improving performance and giving it a clear advantage in massive data mosaicking.

However, our proposed method also has limitations: it lacks fast hybrid mosaicking for multi-source remote sensing data of different resolutions. The proposed mosaic algorithm is suited to large-scale mosaicking in the context of big data; in particular, for data products derived from spatiotemporal processing of large amounts of remote sensing data, it can provide fast mosaicking and framing services, which helps improve real-time processing.

6. Conclusions

With the advent of the big data era, traditional mosaic methods face serious performance limitations on massive remote sensing data. This paper therefore proposes a distributed in-memory mosaicking method accelerated by Alluxio. GeoTrellis is used to split the mosaicking algorithm into MapReduce subroutines that can run in the Spark framework, and these subroutines are distributed to the nodes of the Spark cluster, achieving efficient parallel mosaicking. Since Spark provides in-memory computing, intermediate results can be kept in memory; together with the distributed in-memory file system Alluxio, this alleviates the performance problems caused by frequent I/O operations and makes the program more computationally efficient. Experiments show that the proposed method mosaics faster than the traditional mosaicking method and can successfully mosaic remote sensing images covering the whole country or even larger areas.

In the current research, it is rare to combine remote sensing mosaicking with the Spark framework. We can further refine the mosaicking steps in parallel to improve the mosaicking efficiency. It is not common for remote sensing data to be stored in Alluxio, so the existing storage methods can be improved and optimized according to the nature of remote sensing images, such as setting more efficient indexing methods and optimizing the storage layer in Alluxio, etc.

Author Contributions

Conceptualization, Y.M.; Formal analysis, J.S.; Investigation, Z.Z.; Methodology, Z.Z.; Project administration, Y.M.; Software, Z.Z.; Validation, J.S.; Visualization, Y.M.; Writing—review and editing, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been jointly supported by the National Key R&D Program of China (Grant No. 2016YFC0600510, Grant No. 2018YFC1505501), the National Natural Science Foundation of China (Grant No. 41872253), the Youth Innovation Promotion Association of the Chinese Academy of Sciences (No. Y6YR0300QM), and the Strategic Priority Research Program of the Chinese Academy of Sciences, Project title: CASEarth (Grant No. XDA19080103).

Data Availability Statement

Not applicable.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (No. 42071413).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yamada, M.; Fujioka, A.; Fujita, N.; Hashimoto, M.; Ueda, Y.; Aoki, T.; Minami, T.; Torii, M.; Yamamoto, T. Efficient Examples of Earth Observation Satellite Data Processing Using the Jaxa Supercomputer System and the Future for the Next Supercomputer System. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 5735–5738. [Google Scholar]

- Poursanidis, D.; Topouzelis, K.; Chrysoulakis, N. Mapping coastal marine habitats and delineating the deep limits of the Neptune’s seagrass meadows using very high resolution Earth observation data. Int. J. Remote Sens. 2018, 39, 8670–8687. [Google Scholar] [CrossRef]

- Transon, J.; d’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of current hyperspectral Earth observation applications from space and synergies with Sentinel-2. In Proceedings of the 2017 9th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Brugge, Belgium, 27–29 June 2017; pp. 1–8. [Google Scholar]

- Mairota, P.; Cafarelli, B.; Labadessa, R.; Lovergine, F.; Tarantino, C.; Lucas, R.M.; Nagendra, H.; Didham, R.K. Very high resolution Earth observation features for monitoring plant and animal community structure across multiple spatial scales in protected areas. Int. J. Appl. Earth Obs. Geoinf. 2015, 37, 100–105. [Google Scholar] [CrossRef]

- Yilmaz, M.; Uysal, M. Comparing uniform and random data reduction methods for DTM accuracy. Int. J. Eng. Geosci. 2017, 2, 9–16. [Google Scholar] [CrossRef]

- Ma, Y.; Wu, H.; Wang, L.; Huang, B.; Ranjan, R.; Zomaya, A.; Jie, W. Remote sensing big data computing: Challenges and opportunities. Future Gener. Comput. Syst. 2015, 51, 47–60. [Google Scholar] [CrossRef]

- Wu, Z.; Snyder, G.; Vadnais, C.; Arora, R.; Babcock, M.; Stensaas, G.; Doucette, P.; Newman, T. User needs for future Landsat missions. Remote Sens. Environ. 2019, 231, 111214. [Google Scholar] [CrossRef]

- Soille, P.; Burger, A.; Rodriguez, D.; Syrris, V.; Vasilev, V. Towards a JRC earth observation data and processing platform. In Proceedings of the Conference on Big Data from Space (BiDS’16), Santa Cruz de Tenerife, Spain, 15–17 March 2016; pp. 15–17.

- Sudmanns, M.; Tiede, D.; Lang, S.; Bergstedt, H.; Trost, G.; Augustin, H.; Baraldi, A.; Blaschke, T. Big Earth data: Disruptive changes in Earth observation data management and analysis? Int. J. Digit. Earth 2019, 13, 832–850.

- Agapiou, A. Remote sensing heritage in a petabyte-scale: Satellite data and heritage Earth Engine© applications. Int. J. Digit. Earth 2017, 10, 85–102.

- Dwyer, J.L.; Roy, D.P.; Sauer, B.; Jenkerson, C.B.; Zhang, H.K.; Lymburner, L. Analysis ready data: Enabling analysis of the Landsat archive. Remote Sens. 2018, 10, 1363.

- Shelestov, A.; Lavreniuk, M.; Kussul, N.; Novikov, A.; Skakun, S. Large scale crop classification using Google earth engine platform. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3696–3699.

- Johansen, K.; Phinn, S.; Taylor, M. Mapping woody vegetation clearing in Queensland, Australia from Landsat imagery using the Google Earth Engine. Remote Sens. Appl. Soc. Environ. 2015, 1, 36–49.

- De Grandi, G.; Mayaux, P.; Rauste, Y.; Rosenqvist, A.; Simard, M.; Saatchi, S.S. The Global Rain Forest Mapping Project JERS-1 radar mosaic of tropical Africa: Development and product characterization aspects. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2218–2233.

- Becker-Reshef, I.; Justice, C.; Sullivan, M.; Vermote, E.; Tucker, C.; Anyamba, A.; Small, J.; Pak, E.; Masuoka, E.; Schmaltz, J.; et al. Monitoring global croplands with coarse resolution earth observations: The Global Agriculture Monitoring (GLAM) project. Remote Sens. 2010, 2, 1589–1609.

- Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73.

- Li, X.; Feng, R.; Guan, X.; Shen, H.; Zhang, L. Remote sensing image mosaicking: Achievements and challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 8–22.

- Ma, Y.; Wang, L.; Zomaya, A.Y.; Chen, D.; Ranjan, R. Task-tree based large-scale mosaicking for massive remote sensed imageries with dynamic dag scheduling. IEEE Trans. Parallel Distrib. Syst. 2013, 25, 2126–2137.

- Wei, Z.; Jia, K.; Liu, P.; Jia, X.; Xie, Y.; Jiang, Z. Large-scale river mapping using contrastive learning and multi-source satellite imagery. Remote Sens. 2021, 13, 2893.

- Corbane, C.; Syrris, V.; Sabo, F.; Politis, P.; Melchiorri, M.; Pesaresi, M.; Soille, P.; Kemper, T. Convolutional neural networks for global human settlements mapping from Sentinel-2 satellite imagery. Neural Comput. Appl. 2021, 33, 6697–6720.

- Bindschadler, R.; Vornberger, P.; Fleming, A.; Fox, A.; Mullins, J.; Binnie, D.; Paulsen, S.J.; Granneman, B.; Gorodetzky, D. The Landsat image mosaic of Antarctica. Remote Sens. Environ. 2008, 112, 4214–4226.

- Benbahria, Z.; Sebari, I.; Hajji, H.; Smiej, M.F. Intelligent mapping of irrigated areas from Landsat 8 images using transfer learning. Int. J. Eng. Geosci. 2021, 6, 40–50.

- Ahady, A.B.; Kaplan, G. Classification comparison of Landsat-8 and Sentinel-2 data in Google Earth Engine, study case of the city of Kabul. Int. J. Eng. Geosci. 2022, 7, 24–31.

- Traganos, D.; Aggarwal, B.; Poursanidis, D.; Topouzelis, K.; Chrysoulakis, N.; Reinartz, P. Towards global-scale seagrass mapping and monitoring using Sentinel-2 on Google Earth Engine: The case study of the aegean and ionian seas. Remote Sens. 2018, 10, 1227.

- Sudmanns, M.; Tiede, D.; Augustin, H.; Lang, S. Assessing global Sentinel-2 coverage dynamics and data availability for operational Earth observation (EO) applications using the EO-Compass. Int. J. Digit. Earth 2020, 13, 768–784.

- Ma, Y.; Wang, L.; Liu, P.; Ranjan, R. Towards building a data-intensive index for big data computing—A case study of remote sensing data processing. Inf. Sci. 2015, 319, 171–188.

- Buyya, R.; Vecchiola, C.; Thamarai Selvi, S. Chapter 8–Data-Intensive Computing: MapReduce Programming. In Mastering Cloud Computing; Buyya, R., Vecchiola, C., Selvi, S.T., Eds.; Morgan Kaufmann: Boston, MA, USA, 2013; pp. 253–311.

- Wu, Y.; Ge, L.; Luo, Y.; Teng, D.; Feng, J. A Parallel Drone Image Mosaic Method Based on Apache Spark. In Cloud Computing, Smart Grid and Innovative Frontiers in Telecommunications; Springer: New York, NY, USA, 2019; pp. 297–311.

- Ma, Y.; Chen, L.; Liu, P.; Lu, K. Parallel programing templates for remote sensing image processing on GPU architectures: Design and implementation. Computing 2016, 98, 7–33.

- Kini, A.; Emanuele, R. Geotrellis: Adding geospatial capabilities to spark. Spark Summit. 2014. Available online: https://docs.huihoo.com/apache/spark/summit/2014/Geotrellis-Adding-Geospatial-Capabilities-to-Spark-Ameet-Kini-Rob-Emanuele.pdf (accessed on 24 August 2022).

- Makris, A.; Tserpes, K.; Spiliopoulos, G.; Anagnostopoulos, D. Performance Evaluation of MongoDB and PostgreSQL for Spatio-temporal Data. In Proceedings of the EDBT/ICDT Workshops, Lisbon, Portugal, 26 March 2019.

- Hughes, J.N.; Annex, A.; Eichelberger, C.N.; Fox, A.; Hulbert, A.; Ronquest, M. Geomesa: A distributed architecture for spatio-temporal fusion. In Geospatial Informatics, Fusion, and Motion Video Analytics V; SPIE: Baltimore, MD, USA, 2015; Volume 9473, pp. 128–140.

- Li, H. Alluxio: A Virtual Distributed File System. Ph.D. Thesis, UC Berkeley, Berkeley, CA, USA, 2018.

- Jia, C.; Li, H. Virtual Distributed File System: Alluxio. Ph.D. Thesis, UC Berkeley, Berkeley, CA, USA, 2019.

- Dawn, S.; Saxena, V.; Sharma, B. Remote sensing image registration techniques: A survey. In Proceedings of the International Conference on Image and Signal Processing, Québec, QC, Canada, 30 June–2 July 2010; pp. 103–112.

- Zhang, Y.; Zhang, Z.; Ma, G.; Wu, J. Multi-Source Remote Sensing Image Registration Based on Local Deep Learning Feature. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3412–3415.

- Kim, D.H.; Yoon, Y.I.; Choi, J.S. An efficient method to build panoramic image mosaics. Pattern Recognit. Lett. 2003, 24, 2421–2429.

- Zhang, L.; Wu, C.; Du, B. Automatic radiometric normalization for multitemporal remote sensing imagery with iterative slow feature analysis. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6141–6155.

- Moghimi, A.; Sarmadian, A.; Mohammadzadeh, A.; Celik, T.; Amani, M.; Kusetogullari, H. Distortion robust relative radiometric normalization of multitemporal and multisensor remote sensing images using image features. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–20.

- Li, J.; Hu, Q.; Ai, M. Optimal illumination and color consistency for optical remote-sensing image mosaicking. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1943–1947.

- Zhang, X.; Feng, R.; Li, X.; Shen, H.; Yuan, Z. Block adjustment-based radiometric normalization by considering global and local differences. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Yu, L.; Zhang, Y.; Sun, M.; Zhou, X.; Liu, C. An auto-adapting global-to-local color balancing method for optical imagery mosaic. ISPRS J. Photogramm. Remote Sens. 2017, 132, 1–19.

- Levin, A.; Zomet, A.; Peleg, S.; Weiss, Y. Seamless image stitching in the gradient domain. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 377–389.

- Camargo, A.; Schultz, R.R.; Wang, Y.; Fevig, R.A.; He, Q. GPU-CPU implementation for super-resolution mosaicking of unmanned aircraft system (UAS) surveillance video. In Proceedings of the 2010 IEEE Southwest Symposium on Image Analysis & Interpretation (SSIAI), Austin, TX, USA, 23–25 May 2010; pp. 25–28.

- Eken, S.; Mert, Ü.; Koşunalp, S.; Sayar, A. Resource-and content-aware, scalable stitching framework for remote sensing images. Arab. J. Geosci. 2019, 12, 1–13.

- Borthakur, D. HDFS architecture guide. Hadoop Apache Proj. 2008, 53, 2. Available online: https://hadoop.apache.org/docs/r1.0.4/hdfs_design.html (accessed on 24 August 2022).

- Zaharia, M.; Chowdhury, M.; Das, T.; Dave, A.; Ma, J.; McCauley, M.; Franklin, M.J.; Shenker, S.; Stoica, I. Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing. In Proceedings of the 9th USENIX Symposium on Networked Systems Design and Implementation (NSDI 12), San Jose, CA, USA, 25–27 April 2012; pp. 15–28.

- Wang, H. Parallel Algorithms for Image and Video Mosaic Based Applications. Ph.D. Thesis, University of Georgia, Athens, GA, USA, 2005.

- Chen, L.; Ma, Y.; Liu, P.; Wei, J.; Jie, W.; He, J. A review of parallel computing for large-scale remote sensing image mosaicking. Clust. Comput. 2015, 18, 517–529.

- Huang, F.; Zhu, Q.; Zhou, J.; Tao, J.; Zhou, X.; Jin, D.; Tan, X.; Wang, L. Research on the parallelization of the DBSCAN clustering algorithm for spatial data mining based on the spark platform. Remote Sens. 2017, 9, 1301.

- Zaharia, M. An Architecture for Fast and General Data Processing on Large Clusters; Morgan & Claypool: Williston, VT, USA, 2016.

- Liu, B.; He, S.; He, D.; Zhang, Y.; Guizani, M. A spark-based parallel fuzzy c-Means segmentation algorithm for agricultural image Big Data. IEEE Access 2019, 7, 42169–42180.

- Wang, N.; Chen, F.; Yu, B.; Qin, Y. Segmentation of large-scale remotely sensed images on a Spark platform: A strategy for handling massive image tiles with the MapReduce model. ISPRS J. Photogramm. Remote Sens. 2020, 162, 137–147.

- Sun, Z.; Chen, F.; Chi, M.; Zhu, Y. A spark-based big data platform for massive remote sensing data processing. In Proceedings of the International Conference on Data Science, Sydney, Australia, 8–9 August 2015; pp. 120–126.

- Reyes-Ortiz, J.L.; Oneto, L.; Anguita, D. Big data analytics in the cloud: Spark on hadoop vs mpi/openmp on beowulf. Procedia Comput. Sci. 2015, 53, 121–130.

- Yu, J.; Wu, J.; Sarwat, M. Geospark: A cluster computing framework for processing large-scale spatial data. In Proceedings of the 23rd SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 3–6 November 2015; pp. 1–4.

- Moreno, V.; Nguyen, M.T. Satellite Image Processing using Spark on the HUPI Platform. In TORUS 2—Toward an Open Resource Using Services: Cloud Computing for Environmental Data; Wiley: Hoboken, NJ, USA, 2020; pp. 173–190.

- Ghemawat, S.; Gobioff, H.; Leung, S.T. The Google file system. In Proceedings of the Nineteenth ACM Symposium on Operating Systems Principles, Bolton Landing, NY, USA, 19–22 October 2003; pp. 29–43.

- Guo, W.; Gong, J.; Jiang, W.; Liu, Y.; She, B. OpenRS-Cloud: A remote sensing image processing platform based on cloud computing environment. Sci. China Technol. Sci. 2010, 53, 221–230.

- McKusick, M.K.; Quinlan, S. GFS: Evolution on Fast-forward: A discussion between Kirk McKusick and Sean Quinlan about the origin and evolution of the Google File System. Queue 2009, 7, 10–20.

- Braam, P. The Lustre storage architecture. arXiv 2019, arXiv:1903.01955.

- Weil, S.A.; Brandt, S.A.; Miller, E.L.; Long, D.D.; Maltzahn, C. Ceph: A scalable, high-performance distributed file system. In Proceedings of the 7th Symposium on Operating Systems Design and Implementation, Seattle, WA, USA, 6–8 November 2006; pp. 307–320.

- Plugge, E.; Membrey, P.; Hawkins, T. GridFS. In The Definitive Guide to MongoDB: The NoSQL Database for Cloud and Desktop Computing; Apress: New York, NY, USA, 2010; pp. 83–95.

- Liu, X.; Yu, Q.; Liao, J. FastDFS: A high performance distributed file system. ICIC Express Lett. Part B Appl. Int. J. Res. Surv. 2014, 5, 1741–1746.

- Wang, Y.; Ma, Y.; Liu, P.; Liu, D.; Xie, J. An optimized image mosaic algorithm with parallel io and dynamic grouped parallel strategy based on minimal spanning tree. In Proceedings of the 2010 Ninth International Conference on Grid and Cloud Computing, Nanjing, China, 1–5 November 2010; pp. 501–506.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).