Abstract

Accurately detecting landslides over a large area with complex background objects is a challenging task. Research in this area generally suffers from three drawbacks. First, the models are mostly modified from typical networks and are not designed specifically for landslide detection. Second, the images used to construct and evaluate landslide detection models are limited to one spatial resolution, which makes it difficult to meet the requirements of applications such as emergency response. Third, assessments are primarily carried out on different parts of the same study area from which the training data are drawn. This makes it difficult to evaluate the transferability of the model objectively, because ground objects in the same area share similar spectral characteristics. To respond to these challenges, this study proposes DeenNet, a network designed specifically for landslide detection. Unlike the widely used encoder–decoder networks, DeenNet maintains multi-scale landslide features by decoding the input feature maps to a larger scale before the encoding module. Decoding is conducted by deconvolution of the input feature maps, while encoding is conducted by convolution. Our model was trained on two earthquake-triggered landslide datasets, constructed using images with different spatial resolutions from different sensor platforms. Two other landslide datasets from different study areas with different spatial resolutions were used to evaluate the trained model. The experimental results showed that DeenNet improved the F1-measure by at least 6.17% compared with three widely used encoder–decoder-based networks. The decoder–encoder structure of DeenNet proved effective in maintaining landslide features regardless of the size of the landslides in the evaluation images, which supports its applicability in practical settings.

1. Introduction

As a frequently occurring type of geological disaster, landslides are detrimental to engineering construction, river courses, the ecology of forests, and human life and property [1,2]. They have caused significant losses in terms of damage to property and human casualties, especially in mountainous regions. For example, landslides have caused 630 people to die or go missing and have injured 216 people every year in China in the last decade (Resources 2010–2020). They have caused direct economic losses of 4.48 billion yuan in this period (Resources 2010–2020). Therefore, it is important to monitor landslides at a large scale to examine their mechanism, analyze their causes, build early warning models of the risk of landslides, accelerate emergency responses, and thus reduce the loss of life and property. Landslides can be categorized into a variety of types, such as falls, flows, and debris, according to the different substances and materials involved [3]. Our aim here is to detect landslides that caused a transformation from vegetation to bare-earth exposure.

Field survey is a traditional and reliable means of obtaining detailed information on a landslide, such as its type, depth, and other geological characteristics. However, while this is a suitable way to explore the mechanism of a specific landslide event, it struggles to provide a general landslide distribution pattern over a large area featuring thousands of landslide incidences. Owing to the rapid development of remote sensing technology, a variety of imaging sensors, including airborne and spaceborne sensors, have been developed and widely applied in different research fields [4,5], such as vehicle detection, land mapping, and natural hazards [6,7,8]. The large number of available remote sensing images makes it possible to visualize landslides in different areas at different spatial resolutions. This has prompted considerable research on developing automatic/semi-automatic methods to detect landslides [9,10].

Image enhancement operations, such as histogram equalization [11], filter convolution [12], spectral index calculation [13], image saliency enhancement [6], and color domain transformation [14], have been applied to enhance information on landslides in the presence of background objects, in order to extract the features needed for landslide detection. The extracted features are commonly used for building landslide detection models based on machine learning theory. Fuzzy logic [15], fuzzy algebraic operations [16], and the Markov random field [17] are commonly used statistical theories in this vein. Support vector machines [18], random forests [19], logistic regression [20], and clustering algorithms [21] are widely used machine learning methods for landslide detection. However, models of landslide detection are mostly constructed by using images with a background of pure vegetation, such that the spectral/textural characteristics of the landslides to be detected are relatively consistent. Moreover, most of the proposed models require manual parameter tuning, which relies heavily on the skill and experience of the technician.

To avoid feature engineering in the above methods, the advent of deep convolutional neural networks has made it possible to automatically learn the features of images by using layer-by-layer convolution filters [22,23,24,25]. Deep convolutional neural networks have achieved remarkable performances in object detection and classification using remotely sensed images from various platforms [26,27,28]. However, the amount of research on landslide detection based on deep learning methods is limited. This is possibly because the number of landslide images that can be used as training samples to train the relevant models is limited. A few typical studies on landslide detection based on optical images published in the last two to three years that have used deep neural networks are listed in Table 1. They show that three challenges are generally encountered in developing models of landslide detection. First, most relevant studies are based on modifying typical network structures from computer vision, such as the U-net [29]. These networks mostly adopt encoder–decoder structures, which may omit the features of landslides with a thin and narrow shape. Second, the images used to train models of landslide detection mostly have a single spatial resolution. In the context of applications, especially emergency response systems, it is difficult to collect a sufficient number of remotely sensed images at a specific spatial resolution to cover the entire disaster area in a short time. Therefore, constructing a landslide detection model based on multi-spatial resolution images is necessary for applications, and can enhance the robustness of the model. Third, strategies used for evaluating the proposed models in most published work involve separating the study area into training and testing areas. It is difficult to evaluate the transferability of the trained models objectively. 
This is because the images in the test area, although not used for training, still share a similar spatial distribution and similar spectral and textural characteristics with the training samples.

Table 1.

Recent studies on landslide detection using deep learning models: “RGB” indicates spectral channels of red, green, and blue, “I” is the infrared channel, “DEM” is short for digital elevation model, and “NDVI” is the normalized difference vegetation index.

In this study, we propose a deep neural network called the decoder–encoder network (DeenNet) to detect landslides from multiple images at a high spatial resolution. In contrast to the widely used U-net, which uses the typical encoder–decoder structure, DeenNet adopts a decoder–encoder structure. This design prevents small landslides from being filtered out by direct layer-wise encoding of the input image. The decoder–encoder structure learns details of objects on the ground by decoding the input image into a larger patch and then encoding this patch back to the size of the input image. We trained the proposed architecture on the datasets of two typical earthquake-triggered landslide events containing images with different spatial resolutions obtained from different platforms, and evaluated its robustness and reliability on two other datasets of landslide events.

2. Method and Materials

2.1. Proposed Architecture

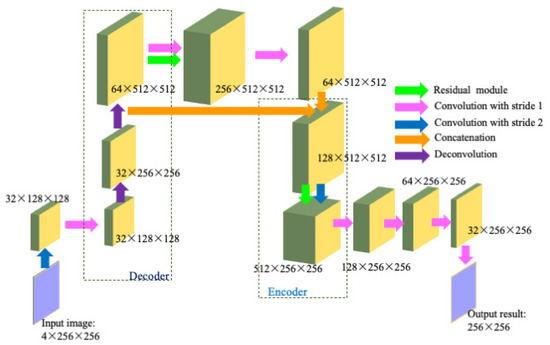

As shown in Figure 1, our proposed DeenNet consists of two modules, for the decoding and encoding phases, arranged in an order opposite to that used in recently published studies on landslide detection [31,32]. Landslides are recognized as an object of interest in the images in this work, and the geological and meteorological restrictions are not considered. Optical RGB images and DEM images are concatenated directly as model input for landslide detection. The concatenated input image is cropped to a size of 256 × 256 pixels. First, it is convoluted by a 3 × 3 convolution with a stride of two pixels to downsample it to a 32-channel feature map with a size of 128 × 128 pixels. This is then used for further feature extraction by means of a convolution operation with a stride of one. Details of the convolution operations using a stride of two and one are introduced in Figure 2. Second, the feature map obtained after residual learning is decoded by two continuous deconvolution operations (shown in Figure 3) to upscale it to a 64-channel 512 × 512-pixel feature map. Such an operation is to collect the spectral and textural details of the landslides in the images, especially for small landslides. Compared with our network structure, the continuous encoding operation in the widely used typical networks [29] will lose the features of small landslides and landslides with a thin and narrow shape. Third, the decoded feature map is concatenated with another feature map at the same scale after multi-scale residual learning. The network structure of the residual module is shown in Figure 4. Concatenation can improve performance in terms of reconstruction by promoting data flow among the layers. The concatenated feature map is further encoded to a 512-channel 256 × 256-pixel feature map by a 3 × 3 kernel convolution with a stride of two, embedded in the form of a residual module. 
Finally, four step-by-step convolutions are performed to generate the binary image used for landslide detection. The activation function is the rectified linear unit (ReLU), defined in Equation (1) as f(x) = max(0, x).
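The feature-map sizes quoted in this walkthrough can be checked with the usual output-size formulas for convolution and transposed convolution. The following is a minimal arithmetic sketch, not the authors' implementation. The one-pixel padding for the 3 × 3 convolutions follows the description of Figure 2, whereas the deconvolution settings (kernel 3, stride 2, padding 1, output padding 1) are assumptions chosen so that each deconvolution doubles the size, because the text does not state them. All function names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output width/height of a convolution (floor division, as in most frameworks)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=3, stride=2, padding=1, output_padding=1):
    """Output width/height of a transposed convolution (assumed settings)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_deennet_sizes(input_size=256):
    """Follow the spatial size through the stages described in the text."""
    sizes = {"input": input_size}
    s = conv_out(input_size, stride=2)   # 3x3 conv, stride 2: 256 -> 128
    sizes["stem"] = s
    s = conv_out(s, stride=1)            # stride-1 feature extraction keeps 128
    sizes["features"] = s
    s = deconv_out(deconv_out(s))        # two deconvolutions: 128 -> 256 -> 512
    sizes["decoded"] = s
    s = conv_out(s, stride=2)            # encoding conv, stride 2: 512 -> 256
    sizes["encoded"] = s
    return sizes


if __name__ == "__main__":
    print(trace_deennet_sizes())
```

Under these assumptions the trace reproduces the 128, 512, and 256 pixel sizes given in the text, so the output mask has the same width and height as the 256 × 256 input patch.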

Figure 1.

Architecture of the proposed DeenNet. The input image is a four-channel matrix with a size of 256 × 256 pixels, and the output image is a single-channel image with a size of 256 × 256 pixels.

Figure 2.

Mechanism of a 3 × 3 convolution operation with different strides on the input feature map N: (a) convolution with a stride of one, and (b) convolution with a stride of two.

Figure 3.

Mechanism of deconvolution operation.

Figure 4.

Residual module, where “+” indicates the addition of two feature maps.

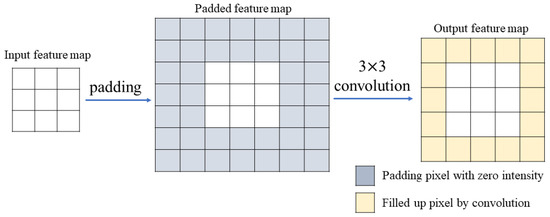

As shown in Figure 2, convolution operations with two stride values are applied differently to the input feature map N. The input feature map is first padded by one pixel along each of its four boundaries to avoid the loss of boundary information during convolution by the 3 × 3 kernel K. Each pixel of the output feature map is calculated by using Equation (2). The stride parameter determines how far the kernel moves after each convolution. If the stride is one, as shown in Figure 2a, the convolution kernel moves one pixel horizontally or vertically after each convolution until all the pixels in the input feature map have been convolved. This operation is widely used for learning multi-dimensional features in network design [28,32]. Figure 2b presents the case where the stride is two, and the convolution kernel moves two pixels after each convolution, so that an output feature map of reduced size is generated. Such a convolution, with a stride of two, encodes the information at a reduced size, so that multi-scale feature learning can detect landslides of different sizes.
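The effect of the stride can be illustrated with a small pure-Python convolution. This is a minimal sketch under stated assumptions: it uses the cross-correlation form common in deep learning frameworks, zero padding of one pixel, and a toy 4 × 4 input. The function name `conv2d` and the identity kernel are illustrative choices, not taken from the paper.

```python
def conv2d(feature_map, kernel, stride=1, padding=1):
    """Convolve a 2-D list with a square kernel (cross-correlation form)."""
    k = len(kernel)
    h, w = len(feature_map), len(feature_map[0])
    # Zero-pad every boundary so that edge pixels are not lost.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = feature_map[r][c]
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the k x k window starting at (i*stride, j*stride).
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    acc += kernel[u][v] * padded[i * stride + u][j * stride + v]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    n = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # toy input N
    k = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]                         # identity kernel K
    same = conv2d(n, k, stride=1)   # same size as the input
    half = conv2d(n, k, stride=2)   # half the width and height
    print(len(same), len(same[0]), len(half), len(half[0]))
```

With a stride of one the 4 × 4 input yields a 4 × 4 output, while a stride of two yields a 2 × 2 output that samples every second position, which is the size reduction used for encoding.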

The deconvolution module in Figure 1 is a key operation that enables the proposed network to capture multi-scale features of landslides, especially those of small landslides. It is composed of two steps: feature map padding, and convolution by a 3 × 3 kernel. According to Figure 3, the input feature map is first padded by two pixels on each boundary, so that the padded boundary pixels (marked in yellow in Figure 3) are preserved after the 3 × 3 convolution. The output feature map is enlarged by two pixels along both its width and its height compared with the input feature map, as marked in yellow in Figure 3. This enables the features to be expanded to a larger scale, which improves the detection of small landslides.
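The padding-then-convolution mechanism described for Figure 3 can be sketched as follows. This is an illustrative sketch only: it reproduces the stated behaviour (two pixels of zero padding followed by a stride-one 3 × 3 convolution, giving an output two pixels larger in each direction) and does not attempt to model how the full network reaches the doubled sizes shown in Figure 1, which this section does not specify. The function name is hypothetical.

```python
def deconv_by_padding(feature_map, kernel, padding=2):
    """Pad the map with zeros on every boundary, then apply a stride-1 convolution."""
    k = len(kernel)
    h, w = len(feature_map), len(feature_map[0])
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = feature_map[r][c]
    # No further padding here, so the output grows by 2*padding - (k - 1) pixels.
    out_h = h + 2 * padding - k + 1
    out_w = w + 2 * padding - k + 1
    return [
        [
            sum(kernel[u][v] * padded[i + u][j + v] for u in range(k) for v in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    ones = [[1.0] * 3 for _ in range(3)]
    out = deconv_by_padding([[1.0, 2.0], [3.0, 4.0]], ones)
    print(len(out), len(out[0]))  # a 2 x 2 input becomes 4 x 4
```

Each input pixel contributes to a 3 × 3 neighbourhood of the output, so information from a small object is spread over a larger area before the subsequent encoding step.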

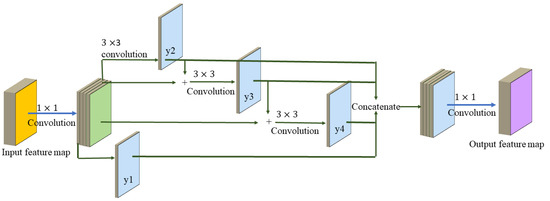

The residual learning module used to aid landslide detection in Figure 1 is adopted from Res2Net [33], which was designed to learn multi-scale features at a granular level by decomposing the feature map into different subsets, and has shown consistent improvement over recently proposed baseline models [33]. As shown in Figure 4, the input feature map is divided equally along its channel dimension into four sub-feature maps after a 1 × 1 convolution is applied. One sub-feature map is used directly as feature map y1. Each of the other three sub-feature maps is convolved by a 3 × 3 kernel to yield feature maps y2, y3, and y4. The convolution is conducted with a stride of 1 so that the sizes of the feature maps are maintained. Through the addition of feature maps between the branches (marked “+” in Figure 4), the receptive fields of y2, y3, and y4 are enlarged. The feature maps y1, y2, y3, and y4 are finally concatenated and convolved by a 1 × 1 kernel to generate the output feature map. This strategy can be used to enhance the efficiency of extracting multi-scale features [33].
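How the additions between branches enlarge the receptive field can be seen in a one-dimensional toy version of the module. The sketch below is a simplification under stated assumptions: the branch wiring follows the Res2Net formulation (each branch after the second receives the previous branch's output added to its own input), a 3-tap sum stands in for the 3 × 3 convolution, and the 1 × 1 convolutions and channel concatenation are omitted. It should be checked against Figure 4 before being relied on, and all names are hypothetical.

```python
def box_filter3(signal):
    """Stand-in for a 3 x 3 convolution: a 3-tap sum with zero padding, stride 1."""
    padded = [0.0] + list(signal) + [0.0]
    return [padded[i] + padded[i + 1] + padded[i + 2] for i in range(len(signal))]


def add(a, b):
    """Element-wise addition of two equally sized feature maps."""
    return [x + y for x, y in zip(a, b)]


def res2net_split(x1, x2, x3, x4, conv=box_filter3):
    """Hierarchical connections over four channel groups (assumed wiring)."""
    y1 = x1                     # first group is passed through unchanged
    y2 = conv(x2)               # second group: one convolution
    y3 = conv(add(x3, y2))      # third group also sees y2
    y4 = conv(add(x4, y3))      # fourth group also sees y3
    return y1, y2, y3, y4       # concatenated along channels in the real module


def support(signal):
    """Number of non-zero positions, i.e. how far an impulse has spread."""
    return sum(1 for v in signal if v != 0)


if __name__ == "__main__":
    zeros = [0.0] * 9
    impulse = [0.0] * 4 + [1.0] + [0.0] * 4
    y1, y2, y3, y4 = res2net_split(zeros, impulse, zeros, zeros)
    print(support(y2), support(y3), support(y4))
```

A single impulse placed in the second group spreads over 3, 5, and 7 positions in y2, y3, and y4, which illustrates how one module can mix several receptive-field sizes.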

2.2. Dataset Preparation

To evaluate the transferability of our proposed DeenNet, we used three large-area earthquake-triggered landslide events and one publicly available landslide dataset, the Bijie landslide dataset [10]. The three earthquake events consisted of two major earthquakes in China—the Jiuzhaigou earthquake and the Lushan earthquake—and a major earthquake event in Japan, the Iburi earthquake. Each of the earthquakes triggered several thousand landslide inventories that provide a sufficient number of samples for training or evaluating the proposed model. We generated a landslide dataset for each earthquake. Table 2 presents the general information on each landslide dataset constructed in this study.

Table 2.

General information on each landslide dataset constructed in this study.

2.2.1. Jiuzhaigou Earthquake-Triggered Landslide Dataset

On 8 August 2017, an earthquake with a magnitude of 7.0 Ms occurred in Jiuzhaigou County of Sichuan Province in southwestern China. Tian et al. [34] published a seismic landslide polygon dataset with a spatial resolution of 0.5 m. However, the images collected from GeoEye-1 for this study were captured on 14 August 2017 with a spatial resolution of 2 m. The landslide inventories developed by Tian et al. [34] were therefore adjusted for our images by removing the 94 independent landslides that could not be visually interpreted from the images collected in this study. Figure 5 shows that the landslides are heavily obscured by clouds and are densely distributed with a large variety of sizes and shapes. Bare soil was among the most disruptive background objects that hindered detection. The DEM (digital elevation model) of the corresponding study area was collected from the Shuttle Radar Topography Mission (SRTM) and resized to the same spatial resolution as that of the GeoEye-1 images, in order to supplement landslide detection with topographical information.

Figure 5.

Landslide inventories in the Jiuzhaigou earthquake-triggered landslide dataset.

2.2.2. Lushan Earthquake-Triggered Landslide Dataset

On 20 April 2013, a 7.0 Ms earthquake struck Lushan County in Ya’an, Sichuan Province, China. The deadly earthquake triggered thousands of landslides [35]. Based on aerial photographs and high spatial resolution remotely sensed images, Xu et al. constructed a landslide inventory dataset through visual interpretation and field investigation [36]. We collected RapidEye images captured on 26 May 2013 to cover most of the area affected by the Lushan earthquake. Landslide records in the dataset that fell outside the area covered by our images were removed. Moreover, the landslide inventories compiled by Xu et al. [36] were adjusted through visual interpretation to correspond to the images collected in this study. According to Figure 6, the landslides were concentrated in the central part of the study area, and varied in terms of intensity, size, and location. Moreover, the background was complicated by a variety of objects, including bare ground, bare soil, snow, and road networks. This challenging landslide dataset can improve the feasibility and transferability of the constructed model in the context of detecting landslides for different cases. As with the Jiuzhaigou earthquake-triggered landslide dataset, the corresponding DEM images were collected and resized to the same resolution.

Figure 6.

Landslide inventories in the Lushan earthquake-triggered landslide dataset.

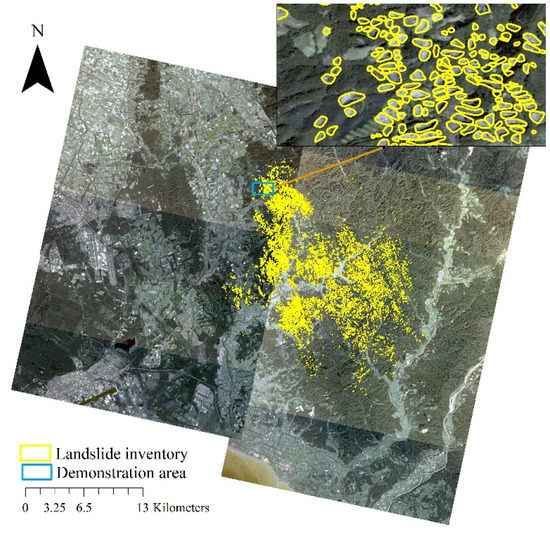

2.2.3. Iburi Earthquake-Triggered Landslide Dataset

On 5 September 2018, Iburi was hit by an earthquake with a magnitude of 6.7 Ms. The inventory of landslides triggered by the earthquake was obtained by visual interpretation of Planet satellite images at a spatial resolution of 3 m, with reference to the Google Earth platform [37]. The Planet satellite images used for interpretation in [37] were also collected for our study (as shown in Figure 7). Therefore, the landslide inventory dataset constructed by Shao et al. [37] was directly used to train our landslide detection model. It added to the complexity of the training data and could further strengthen the transferability of the model. For consistency with the datasets above, the DEM was collected and resized to the size of the satellite images.

Figure 7.

Landslide inventories of the Iburi earthquake-triggered landslide dataset.

2.2.4. Bijie Landslide Dataset

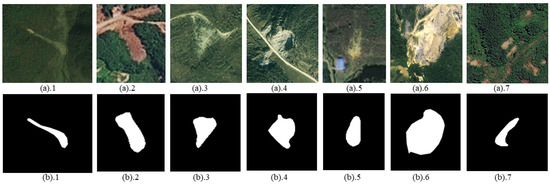

The Bijie landslide dataset is the first publicly available dataset of its kind for a large area [10]. The area is vulnerable to landslides because of intensive rain and unstable topographic conditions. As shown in Figure 8, the dataset was provided in patches of each landslide inventory at a spatial resolution of 0.8 m, as were its corresponding DEM and the binary mask image, with the landslide masks used as the ground truth. The landslide masks were manually delineated and verified through field investigations. However, in some cases, as shown in Figure 8(b).3, the landslide masks consisted of landslides covered with vegetation, as can be seen by referring to the original image in Figure 8(a).3. Since our study focuses on detecting landslides with bare-earth exposure, the landslide cases with vegetation coverage were removed to allow for a fair performance evaluation of the proposed model. The rest of the landslides in the Bijie landslide dataset have different sizes and shapes in different background objects, so it can still be a challenging dataset with which to evaluate the transferability of the model.

Figure 8.

Examples of landslide patches in the Bijie landslide dataset. (a).* RGB images of each landslide inventory. (b).* Manually delineated landslide mask.

3. Experimental Settings

3.1. Dataset and Comparison Methods

Of the four landslide datasets presented above (three earthquake-triggered landslide datasets and one publicly released landslide dataset), we used the datasets for landslides triggered by the Lushan earthquake and the Iburi earthquake to train the landslide detection model because of their large number of heterogeneous landslide samples with significant variations in size and shape, as shown in Table 2. The other two datasets, the Jiuzhaigou landslide dataset and the Bijie landslide dataset, were used to evaluate the transferability of the proposed architecture. Referring to Figure 5, Figure 6, Figure 7 and Figure 8, the landslides of the Jiuzhaigou landslide dataset and the Bijie landslide dataset exhibit similar spectral characteristics to those of the Lushan landslide dataset and the Iburi landslide dataset, respectively. The landslides in the training datasets are therefore representative of the landslides in the evaluation datasets. Owing to the differences in image spatial resolution and object distribution among the study areas, these evaluation datasets can provide a comprehensive and objective assessment of DeenNet in detecting multi-scale landslides over a large area in practical applications. To conduct a fair evaluation of the proposed network in terms of landslide detection over a large area containing complicated background objects, three widely used network structures for semantic segmentation were tested as well: U-net, SegNet [38], and DeepLabv3 [39]. Because DeenNet uses the residual module for multi-scale feature learning, U-net, SegNet, and DeepLabv3 were also modified with the residual module, in order to determine whether residual learning itself or our decoder–encoder network structure contributed to the improvement in detection performance. As with DeenNet, the residual module was embedded in the convolution operations of the encoding phases of U-net and SegNet, and in the backbone of DeepLabv3. For the sake of brevity, the modified U-net, SegNet, and DeepLabv3 are referred to as the U-net + residual module, SegNet + residual module, and DeepLabv3 + residual module, respectively.

3.2. Implementation Details

All the models were implemented in PyTorch, with Ubuntu 14.04 as the operating system. They were trained on three NVIDIA TITAN X GPUs, each with 2 GB of memory. The images used for training the landslide detection model from the two datasets, the Lushan and Iburi datasets, were cropped into patches with a size of 256 × 256 pixels from the RGB, DEM, and ground-truth mask images. Because the DEM was combined with the RGB image as the model input in this study, the input layers of U-net, SegNet, DeepLabv3, and their modifications were adjusted to four channels to match our proposed network.
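Cropping the RGB, DEM, and mask layers with the same windows keeps the three inputs aligned pixel for pixel. The sketch below shows one simple way to do this. The non-overlapping step of 256 pixels and the dropping of partial patches at the image edges are assumptions, since the text does not state how the patches were laid out, and the function names are hypothetical.

```python
def patch_windows(height, width, patch=256, step=256):
    """Yield (row, col) top-left corners of full patches that fit inside the image."""
    for r in range(0, height - patch + 1, step):
        for c in range(0, width - patch + 1, step):
            yield r, c


def crop(layer, row, col, patch=256):
    """Cut one patch out of a 2-D layer stored as a list of rows."""
    return [line[col:col + patch] for line in layer[row:row + patch]]


if __name__ == "__main__":
    # A 600 x 520 image yields four full 256 x 256 patches; the remainder is dropped.
    print(list(patch_windows(600, 520)))
```

Applying `crop` with the same `(row, col)` to each band, the DEM, and the mask gives one four-channel input patch and its matching ground-truth patch.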

The learning rate of DeenNet was initially set to 0.01 and was decayed by a factor of 10 after every 30 epochs. To accelerate convergence, the momentum used to update the weights during training was set to 0.9. The weight decay was set to 0.0001 to reduce over-fitting. We optimized the model using stochastic gradient descent (SGD) by minimizing the binary cross-entropy loss (BCELoss), as defined in Equation (3). Our model converged at the 126th training epoch.
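The schedule and loss described here can be written out in a few lines. This is a minimal sketch, assuming that the decay means dividing the learning rate by 10 every 30 epochs and that the loss is the standard mean binary cross-entropy over pixels. The function names and the clamping constant `eps` are illustrative and not taken from the paper.

```python
import math


def step_lr(epoch, base_lr=0.01, step=30, factor=10.0):
    """Learning rate after dividing by `factor` once every `step` epochs."""
    return base_lr / (factor ** (epoch // step))


def bce_loss(probabilities, targets, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probabilities, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(targets)


if __name__ == "__main__":
    print(step_lr(0), step_lr(30), step_lr(125))
    print(bce_loss([0.9, 0.2], [1, 0]))
```

In PyTorch, these settings would typically be expressed with `torch.optim.SGD` (with `lr=0.01`, `momentum=0.9`, `weight_decay=1e-4`), `torch.optim.lr_scheduler.StepLR` (with `step_size=30`, `gamma=0.1`), and `torch.nn.BCELoss`. The source does not list the exact calls that were used.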

3.3. Evaluation Metric

We calculated the recall, precision, F1-measure, and IOU to evaluate the landslide detection performance according to Equations (4)–(7). TP represents the number of ground-truth landslide pixels that were correctly detected, FP indicates the number of ground-truth background pixels that were incorrectly classified as landslides, TN is the number of ground-truth background pixels that were correctly classified as background, and FN represents the number of ground-truth landslide pixels that were incorrectly classified as background. The F1-measure and IOU are widely used to present a general evaluation, because the F1-measure balances recall and precision, whereas the IOU measures the overlap between the detected landslide polygons and the ground truth. Moreover, the FPS (frames per second) was calculated for each model to evaluate its computing efficiency.
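The four accuracy measures follow directly from the pixel counts. The sketch below uses the standard definitions, which are assumed to match Equations (4)–(7). The function name and the zero-division guards are illustrative additions.

```python
def detection_metrics(tp, fp, fn):
    """Pixel-wise recall, precision, F1-measure, and IOU as fractions in [0, 1]."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    union = tp + fp + fn  # pixels that are landslide in the prediction or the truth
    iou = tp / union if union else 0.0
    return {"recall": recall, "precision": precision, "f1": f1, "iou": iou}


if __name__ == "__main__":
    # Toy counts: 60 landslide pixels found, 20 false alarms, 40 missed.
    print(detection_metrics(tp=60, fp=20, fn=40))
```

TN does not enter any of the four measures, which is why they remain informative when background pixels greatly outnumber landslide pixels.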

4. Results

Images from the Bijie dataset and Jiuzhaigou dataset were used to assess the models. To present a visual comparison between our proposed network and other networks, we randomly selected four patches from the two datasets, and they are shown in Figure 9 and Figure 10.

Figure 9.

Visual comparison of landslide detection on images of the Bijie landslide dataset between our proposed DeenNet and the other networks: (*).1 indicate patches of the original image, (*).2 represent ground-truth image patches, (*).3–(*).8 are the results of U-net, U-net + residual module, SegNet, SegNet + residual module, DeepLabv3, and DeepLabv3 + residual module, respectively; (*).9 represent images detected by our DeenNet.

Figure 10.

Visual comparison of landslide detection on images of the Jiuzhaigou landslide dataset between our proposed DeenNet and the other networks: (*).1 indicate the original image patches, (*).2 represent the ground-truth image patches, (*).3–(*).8 are the results of U-net, U-net + residual module, SegNet, SegNet + residual module, DeepLabv3, and DeepLabv3 + residual module, respectively, and (*).9 represent images detected by our DeenNet.

The different network structures delivered significantly different results on evaluation images with different spatial resolutions. Because the models were trained on images with spatial resolutions of 3 m and 5 m, it was challenging to transfer them to images with the significantly higher spatial resolution of 0.8 m (shown in Figure 9) and to those at 2 m (shown in Figure 10). The images of the Bijie landslide dataset contained more details and featured landslides with different spectral characteristics or accompanied by bare soil, which made detection more difficult, as shown in Figure 9. DeenNet was able to detect most landslides. U-net, SegNet, DeepLabv3, and their corresponding versions with residual modules detected only some of the landslides, and either failed when the spectral characteristics of the landslides became obscured (Figure 9a) or confused landslides with bare soil (Figure 9b–d). One possible reason is that encoder–decoder networks can filter out many landslide features through continuous convolutions in the encoding phase. In contrast, DeenNet was able to capture most landslide features by decoding the input feature map before the encoding operation. Moreover, the modified U-net, SegNet, and DeepLabv3 with residual modules mostly outperformed the original models. This indicates that the residual module aids landslide detection by capturing multi-scale landslide features through learning at a finer granular level, which further supports the effectiveness of the residual module proposed in Res2Net [33].

Landslide detection on the Jiuzhaigou dataset yielded similar results. DeenNet detected most landslides, especially when they were slender or small, as shown in Figure 10c,d. The U-net + residual module, SegNet, and SegNet + residual module worked well in detecting large landslides, as shown in Figure 10a–c, but missed small and narrow landslides, as shown in Figure 10d. Taking the results in Figure 9 and Figure 10 together, SegNet and U-net perform better in detecting large landslides than small ones, whereas DeepLabv3 is likely to misclassify landslides as background objects, especially when the landslide shares similar characteristics with open space. One possible reason for the latter is that the atrous spatial pyramid pooling (ASPP) module used in DeepLabv3 omitted a considerable number of landslide-related features in the multi-scale convolution with different dilation rates. This suggests that multi-scale pyramid pooling is not well suited to landslide detection when landslide shapes and spectral characteristics vary widely over a large area.

Apart from visual comparison, the recall, precision, F1-measure, and IOU of the landslide detection results for each of the compared methods were calculated and are listed in Table 3 and Table 4. In accordance with the visual comparison, DeenNet was significantly better than U-net, SegNet, DeepLabv3, and their corresponding residual module networks on both evaluation datasets. It was superior to the other methods by at least 6.65% in terms of the F1-measure and 4.68% in terms of the IOU on the Bijie landslide dataset, and by at least 6.17% in terms of the F1-measure and 7.88% in terms of the IOU on the Jiuzhaigou landslide dataset. The margin of DeenNet over the other methods is roughly the same on both evaluation datasets. However, the performance of each of the other models varies greatly between the two datasets. The F1-measures of all the models evaluated on the Bijie landslide dataset are smaller than 40%, while on the Jiuzhaigou landslide dataset they are mostly larger than 40%. The Bijie landslide dataset is challenging for detection, because it contains 431 landslide cases of different types against different background objects. Owing to the higher spatial resolution of the images in the Bijie landslide dataset compared with the training samples, the spectral and textural characteristics of each landslide vary widely, with more ground details (as shown in Figure 8). This makes it difficult for models trained on images with fewer ground details to detect landslides shown in more detail. The large accuracy gap may be narrowed by adding more landslide samples from high spatial resolution images (<1 m) during training in a future study. DeepLabv3 performed on par with SegNet, and slightly better than U-net, in detecting landslides in the Bijie landslide dataset, but it presented the worst performance on the Jiuzhaigou landslide dataset, where it has high precision and low recall. Faced with massive connected landslides (shown in Figure 10a,b) within a vegetation background, DeepLabv3 is likely to misclassify the mixed ground objects as background objects due to continuous convolutions in the backbone and ASPP module. This suggests that stacking many convolutions is not helpful in extracting multi-scale landslide features.

Table 3.

Statistics used to assess the performance of the models in terms of landslide detection on the Bijie landslide dataset (%).

Table 4.

Statistics used to assess the performance of the models in terms of landslide detection on the Jiuzhaigou landslide dataset (%).

On both landslide datasets, the residual-module-containing versions of U-net, SegNet, and DeepLabv3 all outperformed their respective original versions. This indicates that residual learning is effective in capturing multi-scale landslide-related features. Comparing these residual-module-enhanced methods with DeenNet shows that DeenNet also had a higher computing efficiency than the residual module networks of U-net, SegNet, and DeepLabv3, by at least 30 FPS on both of the evaluation datasets. Together with the satisfactory detection performance, this computing efficiency shows the potential of DeenNet for practical applications, such as emergency response. It should be noted that the accuracies obtained here are lower than those reported in [3,13,16]. One of the main reasons is that our evaluation images are taken from different datasets than the training images, while the evaluation images in most published works [3,13,16] are taken from the same datasets as the training images, which are captured in a single place whose ground objects share similar distribution patterns and spectral characteristics. Because the ground distributions differ among our evaluation datasets, the transferability of different models can be better evaluated in our study.

5. Discussions

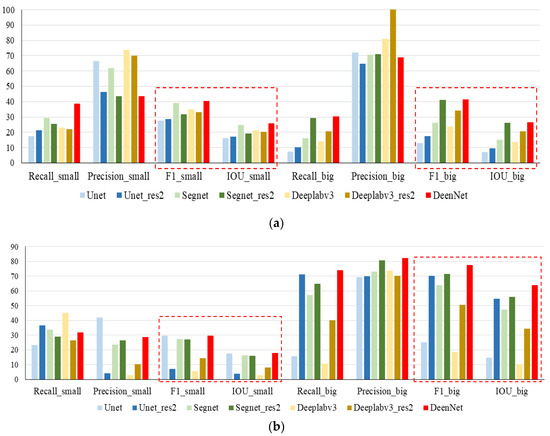

To explore how the order of the encoding and decoding phases in the network structure affects multi-scale landslide detection, we separated the landslide instances in each evaluation dataset into two categories according to the area of each landslide polygon. The area threshold was set to 1000 m² [40,41]: landslides with areas smaller than 1000 m² were grouped as small landslides, and those with areas larger than or equal to 1000 m² were categorized as large landslides. The detection statistics of all models on the test datasets are summarized in Figure 11 for small and large landslides.
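The size-based grouping described above can be sketched as follows. The 1000 m² threshold and the "smaller than" versus "larger than or equal to" rule come from the text; estimating the polygon area from a pixel count and a spatial resolution is an assumption made here for illustration, and the record fields and sample values are hypothetical.

```python
AREA_THRESHOLD_M2 = 1000.0  # threshold stated in the text


def polygon_area_m2(pixel_count, resolution_m):
    """Approximate polygon area from its pixel count and pixel size (assumed)."""
    return pixel_count * resolution_m ** 2


def split_by_size(landslides, threshold_m2=AREA_THRESHOLD_M2):
    """Group landslide ids into small (< threshold) and large (>= threshold)."""
    small, large = [], []
    for item in landslides:
        area = polygon_area_m2(item["pixels"], item["resolution_m"])
        if area < threshold_m2:
            small.append(item["id"])
        else:
            large.append(item["id"])
    return small, large


# Hypothetical landslide records (not from the evaluation datasets).
landslides = [
    {"id": "a", "pixels": 900, "resolution_m": 1.0},   # 900 m^2  -> small
    {"id": "b", "pixels": 1000, "resolution_m": 1.0},  # 1000 m^2 -> large
    {"id": "c", "pixels": 50, "resolution_m": 5.0},    # 1250 m^2 -> large
    {"id": "d", "pixels": 30, "resolution_m": 5.0},    # 750 m^2  -> small
]

small, large = split_by_size(landslides)
print(small, large)
```

The example also shows why the same physical threshold corresponds to very different pixel counts at different spatial resolutions, which is relevant when comparing small landslide detection across datasets.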

Figure 11. Performance evaluation of different model structures according to the sizes of the landslides: (a) evaluation of the Bijie landslide dataset, and (b) evaluation of the Jiuzhaigou earthquake-triggered landslide dataset.

The decoder–encoder network structure used in DeenNet clearly outperformed the widely used encoder–decoder network structure of the other models in detecting both small and large landslides on both datasets (shown in Figure 11). For small landslides, DeenNet achieved an improvement of approximately 10% over most other models. The recall and precision of most of the compared methods vary significantly when detecting small landslides in both datasets, whereas our proposed DeenNet performs robustly with comparatively high rates. Although DeenNet does not always achieve the highest recall and precision, its F1-measure and IOU are clearly higher than those of the other methods. DeenNet is thus more robust in detecting small landslides from images whose background objects and spatial resolution differ from those of the training datasets. For small landslides, the F1-measure and IOU on the Bijie landslide dataset are both significantly higher than those on the Jiuzhaigou landslide dataset. One of the most likely reasons is that, owing to the higher spatial resolution, the small landslides in the Bijie landslide dataset still occupy a fairly large proportion of the image pixels (as shown in Figure 9a), whereas in the Jiuzhaigou earthquake-triggered landslide dataset they are represented by a limited number of pixels with obscured ground information (as shown in Figure 10c), which makes them more difficult to detect. This limitation may be overcome in our future study by incorporating more attention-based modules in the network architecture, enlarging the number of training samples, and increasing the variety of spatial resolutions for small landslides.

Apart from the performance improvement in small landslide detection, the proposed DeenNet also gained an improvement of approximately 15% in F1-measure and IOU on large landslides in both evaluation datasets. It was able to capture most landslide-related information by decoding the input feature maps to a larger scale, which maintains the spatial and spectral characteristics of the landslides. For the Bijie landslide dataset, there is no significant difference between the detection performance on small and large landslides for any of the models. This is mostly due to the difficult landslide cases in the higher spatial resolution images. In contrast, for the Jiuzhaigou landslide dataset, all the models detect large landslides significantly better than small ones. Large landslides occupy a higher proportion of the image pixels, which makes their features easier to detect than those of small ones. For large landslides, the F1-measure and IOU on the Jiuzhaigou landslide dataset are both clearly higher than those on the Bijie landslide dataset. The most likely reason is the difficulty of the cases in the Bijie landslide dataset, given that the landslides occupy a similar proportion of the image pixels in both datasets. Therefore, improving the accuracy of small landslide detection is a significant step toward enhancing the practicability of the model in future studies. Given the remarkable improvement in landslide detection performance, our proposed decoder–encoder network structure can be regarded as a good starting point for the practical detection of multi-scale landslides using data from different sensors and study areas.
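The effect of decoding to a larger scale before encoding can be illustrated with the standard output-size formulas for transposed convolution (deconvolution) and strided convolution. The sketch below is only shape arithmetic: the kernel sizes, strides, and paddings are assumed values chosen for illustration and are not the actual DeenNet configuration.

```python
def deconv_output_size(size, kernel, stride, padding=0, output_padding=0):
    """Spatial size after a transposed convolution (dilation of 1 assumed)."""
    return (size - 1) * stride - 2 * padding + (kernel - 1) + output_padding + 1


def conv_output_size(size, kernel, stride, padding=0):
    """Spatial size after a convolution (dilation of 1 assumed)."""
    return (size + 2 * padding - (kernel - 1) - 1) // stride + 1


# Assumed (kernel, stride, padding) settings, for illustration only.
DECONV = (4, 2, 1)
CONV = (3, 2, 1)


def decoder_encoder_sizes(size):
    """Decode first (upscale), then encode (downscale)."""
    decoded = deconv_output_size(size, *DECONV)
    encoded = conv_output_size(decoded, *CONV)
    return decoded, encoded


def encoder_decoder_sizes(size):
    """Conventional order: encode first (downscale), then decode (upscale)."""
    encoded = conv_output_size(size, *CONV)
    decoded = deconv_output_size(encoded, *DECONV)
    return encoded, decoded


print(decoder_encoder_sizes(64))  # intermediate map is larger than the input
print(encoder_decoder_sizes(64))  # intermediate map is smaller than the input
```

With these assumed settings, both orders return a feature map of the original size, but the intermediate map is 128 pixels wide in the decoder–encoder order and 32 pixels wide in the encoder–decoder order. A small landslide that spans only a few pixels is therefore represented at a finer scale before any downscaling takes place.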

6. Conclusions

In response to the challenges encountered in detecting landslides from a large number of images, we proposed a semantic segmentation network called DeenNet that reverses the order of the commonly used encoder–decoder network structure. It first decodes the input features to a larger scale and then encodes them to learn the multi-scale features of landslides, so that the features of small landslides are retained. The proposed network was trained on two earthquake-triggered landslide datasets with different spatial resolutions, and evaluated on datasets from two other study areas containing images from different platforms with different spatial resolutions. Such an evaluation strategy is close to real application scenarios, in which satellite images with a single spatial resolution covering the entire study area are difficult to obtain. It can therefore objectively reflect the transferability and robustness of the proposed model in detecting numerous landslides over large areas. The experiments verified the robustness of our proposed decoder–encoder network structure when transferred to the evaluation datasets. The structure reduces the loss of landslide features that are filtered out by the continuous downscaling convolutions used in most typical semantic segmentation networks. Compared with widely used semantic segmentation frameworks, including U-net, SegNet, and DeepLabv3, our proposed DeenNet achieved an F1-measure at least 6% higher in detecting all the evaluated landslides, and approximately 10% higher in detecting small landslides. The remarkable progress in small landslide detection accuracy validates the effectiveness of DeenNet in maintaining landslide features. However, the model still shows limitations in extracting small landslides from complex background objects. To build a more robust model, more advanced techniques will be considered and larger landslide datasets will be constructed in our future work.

Author Contributions

Conceptualization, B.Y. and L.W.; methodology, B.Y. and L.W.; software, C.X.; validation, B.Y., N.W., and F.C.; formal analysis, B.Y.; investigation, L.W.; resources, L.W.; data curation, B.Y.; writing—original draft preparation, B.Y. and L.W.; writing—review and editing, F.C.; visualization, N.W.; supervision, C.X.; project administration, L.W.; funding acquisition, B.Y., F.C., and L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Strategic Priority Research Program of the Chinese Academy of Sciences (XDA19030101), the Youth Innovation Promotion Association, CAS (2022122) and the China-ASEAN Big Earth Data Platform and Applications (CADA, guikeAA20302022).

Data Availability Statement

The Bijie dataset was derived from the following resource available in the public domain: http://gpcv.whu.edu.cn/data/ (accessed on 17 March 2021). The Jiuzhaigou, Lushan, and Iburi datasets are openly available on Baidu disk, with the extraction code 1234, at https://pan.baidu.com/s/1iGynve6YAbkcDJh-lqG6kw (accessed on 9 April 2021), https://pan.baidu.com/s/12XCG9T4r2gj1Ld5vA60-lQ (accessed on 9 April 2021), and https://pan.baidu.com/s/1gFX5l3esyGhuyxKE4--csw (accessed on 8 April 2021), respectively.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cui, Y.; Cheng, D.; Choi, C.E.; Jin, W.; Lei, Y.; Kargel, J.S. The cost of rapid and haphazard urbanization: Lessons learned from the Freetown landslide disaster. Landslides 2019, 16, 1167–1176.

- Youssef, A.M.; Pourghasemi, H.R.; Pourtaghi, Z.S.; Al-Katheeri, M.M. Landslide susceptibility mapping using random forest, boosted regression tree, classification and regression tree, and general linear models and comparison of their performance at Wadi Tayyah Basin, Asir Region, Saudi Arabia. Landslides 2016, 13, 839–856.

- Mohan, A.; Singh, A.K.; Kumar, B.; Dwivedi, R. Review on remote sensing methods for landslide detection using machine and deep learning. Trans. Emerg. Telecommun. Technol. 2020, 32, e3998.

- Zhang, M.; Chen, F.; Guo, H.; Yi, L.; Zeng, J.; Li, B. Glacial Lake Area Changes in High Mountain Asia during 1990–2020 Using Satellite Remote Sensing. Research 2022, 2022, 9821275.

- Jia, H.; Chen, F.; Zhang, C.; Dong, J.; Du, E.; Wang, L. High emissions could increase the future risk of maize drought in China by 60–70%. Sci. Total Environ. 2022, 852, 158474.

- Chen, F.; Yu, B.; Li, B. A practical trial of landslide detection from single-temporal Landsat8 images using contour-based proposals and random forest: A case study of national Nepal. Landslides 2018, 15, 453–464.

- Lee, J.; Wang, J.; Crandall, D.; Sabanovic, S.; Fox, G. Real-Time, Cloud-Based Object Detection for Unmanned Aerial Vehicles. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017.

- Liu, W.; Yamazaki, F.; Vu, T.T. Automated Vehicle Extraction and Speed Determination from QuickBird Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 75–82.

- Catani, F. Landslide detection by deep learning of non-nadiral and crowdsourced optical images. Landslides 2020, 18, 1025–1044.

- Ji, S.; Yu, D.; Shen, C.; Li, W.; Xu, Q. Landslide detection from an open satellite imagery and digital elevation model dataset using attention boosted convolutional neural networks. Landslides 2020, 17, 1337–1352.

- Yamaguchi, Y.; Tanaka, S.; Odajima, T.; Kamai, T.; Tsuchida, S. Detection of a landslide movement as geometric misregistration in image matching of SPOT HRV data of two different dates. Int. J. Remote Sens. 2003, 24, 3523–3534.

- Yu, B.; Chen, F.; Xu, C. Landslide detection based on contour-based deep learning framework in case of national scale of Nepal in 2015. Comput. Geosci. 2020, 135, 104388.

- Tavakkoli Piralilou, S.; Shahabi, H.; Jarihani, B.; Ghorbanzadeh, O.; Blaschke, T.; Gholamnia, K.; Meena, S.R.; Aryal, J. Landslide detection using multi-scale image segmentation and different machine learning models in the Higher Himalayas. Remote Sens. 2019, 11, 2575.

- Yu, B.; Chen, F.; Muhammad, S.; Li, B.; Wang, L.; Wu, M. A simple but effective landslide detection method based on image saliency. Photogramm. Eng. Remote Sens. 2017, 83, 351–363.

- Aksoy, B.; Ercanoglu, M. Landslide identification and classification by object-based image analysis and fuzzy logic: An example from the Azdavay region (Kastamonu, Turkey). Comput. Geosci. 2012, 38, 87–98.

- Pradhan, B.; Lee, S.; Buchroithner, M.F. Use of geospatial data and fuzzy algebraic operators to landslide-hazard mapping. Appl. Geomat. 2009, 1, 3–15.

- Lu, P.; Qin, Y.; Li, Z.; Mondini, A.C.; Casagli, N. Landslide mapping from multi-sensor data through improved change detection-based Markov random field. Remote Sens. Environ. 2019, 231, 111235.

- Dou, J.; Paudel, U.; Oguchi, T.; Uchiyama, S.; Hayakawa, Y.S. Shallow and Deep-Seated Landslide Differentiation Using Support Vector Machines: A Case Study of the Chuetsu Area, Japan. Terr. Atmos. Ocean. Sci. 2015, 26, 227.

- Chen, W.; Li, X.; Wang, Y.; Chen, G.; Liu, S. Forested landslide detection using LiDAR data and the random forest algorithm: A case study of the Three Gorges, China. Remote Sens. Environ. 2014, 152, 291–301.

- Nhu, V.-H.; Mohammadi, A.; Shahabi, H.; Ahmad, B.B.; Al-Ansari, N.; Shirzadi, A.; Geertsema, M.; R Kress, V.; Karimzadeh, S.; Valizadeh Kamran, K. Landslide Detection and Susceptibility Modeling on Cameron Highlands (Malaysia): A Comparison between Random Forest, Logistic Regression and Logistic Model Tree Algorithms. Forests 2020, 11, 830.

- Musaev, A.; Wang, D.; Shridhar, S.; Lai, C.-A.; Pu, C. Toward a real-time service for landslide detection: Augmented explicit semantic analysis and clustering composition approaches. In Proceedings of the 2015 IEEE International Conference on Web Services, New York, NY, USA, 27 June–2 July 2015; pp. 511–518.

- Chen, F.; Wang, N.; Yu, B.; Wang, L. Res2-Unet, a New Deep Architecture for Building Detection from High Spatial Resolution Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1494–1501.

- Yu, B.; Yang, A.; Chen, F.; Wang, N.; Wang, L. SNNFD, spiking neural segmentation network in frequency domain using high spatial resolution images for building extraction. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102930.

- Yu, B.; Xu, C.; Chen, F.; Wang, N.; Wang, L. HADeenNet: A hierarchical-attention multi-scale deconvolution network for landslide detection. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102853.

- Yu, B.; Chen, F.; Wang, N.; Yang, L.; Wang, L. MSFTrans: A multi-task frequency-spatial learning Transformer for building extraction from high spatial resolution remote sensed images. GIScience Remote Sens. 2022, in press.

- Dey, E.K.; Awrangjeb, M.; Stantic, B. Outlier detection and robust plane fitting for building roof extraction from LiDAR data. Int. J. Remote Sens. 2020, 41, 6325–6354.

- Hamaguchi, R.; Hikosaka, S. Building detection from satellite imagery using ensemble of size-specific detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 187–191.

- Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. arXiv 2015, arXiv:1505.04597.

- Meena, S.R.; Ghorbanzadeh, O.; van Westen, C.J.; Nachappa, T.G.; Blaschke, T.; Singh, R.P.; Sarkar, R. Rapid mapping of landslides in the Western Ghats (India) triggered by 2018 extreme monsoon rainfall using a deep learning approach. Landslides 2021, 18, 1937–1950.

- Prakash, N.; Manconi, A.; Loew, S. Mapping Landslides on EO Data: Performance of Deep Learning Models vs. Traditional Machine Learning Models. Remote Sens. 2020, 12, 346.

- Liu, P.; Wei, Y.; Wang, Q.; Chen, Y.; Xie, J. Research on Post-Earthquake Landslide Extraction Algorithm Based on Improved U-Net Model. Remote Sens. 2020, 12, 894.

- Gao, S.; Cheng, M.-M.; Zhao, K.; Zhang, X.-Y.; Yang, M.-H.; Torr, P.H. Res2net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

- Tian, Y.; Xu, C.; Ma, S.; Xu, X.; Wang, S.; Zhang, H. Inventory and Spatial Distribution of Landslides Triggered by the 8th August 2017 Mw 6.5 Jiuzhaigou Earthquake, China. J. Earth Sci. 2019, 30, 206–217.

- Liu, L.; Xu, C.; Chen, J. Landslide Factor Sensitivity Analyses for Landslides Triggered by 2013 Lushan Earthquake Using GIS Platform and Certainty Factor Method. J. Eng. Geol. 2014, 22, 1176–1186.

- Xu, C.; Xu, X.; Shyu, J. Database and spatial distribution of landslides triggered by the Lushan, China Mw 6.6 earthquake of 20 April 2013. Geomorphology 2015, 248, 77–92.

- Shao, X.; Ma, S.; Xu, C.; Zhang, P.; Wen, B.; Tian, Y.; Zhou, Q.; Cui, Y. Planet Image-Based Inventorying and Machine Learning-Based Susceptibility Mapping for the Landslides Triggered by the 2018 Mw6.6 Tomakomai, Japan Earthquake. Remote Sens. 2019, 11, 978.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation; Springer: Cham, Switzerland, 2018.

- Ma, S.; Xu, C. Assessment of co-seismic landslide hazard using the Newmark model and statistical analyses: A case study of the 2013 Lushan, China, Mw6.6 earthquake. Nat. Hazards 2019, 96, 389–412.

- Marc, O.; Hovius, N. Amalgamation in landslide maps: Effects and automatic detection. Nat. Hazards Earth Syst. Sci. 2015, 15, 723–733.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).