1. Introduction

Ship detection is of great value in marine traffic management, navigation safety supervision, fishery management, ship rescue, ocean monitoring, and other civil fields. Timely acquisition of ship location, size, heading, and speed information is of great significance to ensure maritime safety. Due to the complexity of the ocean environment, high labor cost, experience dependence, and unreliable manual observation, automatic ship detection using remote sensing images (RSIs) has attracted more and more interest.

At present, remote sensing satellite images mainly include visible, infrared, and synthetic aperture radar (SAR) images. With the limited number of SAR satellites and their long revisit periods, applications based on SAR images cannot achieve real-time ship monitoring. Due to the great variation of weather and wind speed, sea surface clutter in SAR images is highly non-uniform [1], which is not conducive to ship detection based on SAR images. Ship monitoring based on spaceborne optical images works well, except under heavy cloud cover or poor illumination. Infrared imaging systems can record the radiation, reflection, and scattering information of the object to overcome some of the negative effects of thin clouds, mist, and low light. Therefore, target detection based on thermal infrared remote sensing images has become one of the important means of all-day Earth observation.

Ship detection comprises hull and wake detection [2]. However, ship wakes do not always exist; therefore, hull detection is more widely used. In recent years, researchers have proposed a variety of ship detection algorithms based on RSIs. In general, ship target features are extracted by traditional or intelligent methods. Computer-assisted ship detection methods typically involve feature map extraction and automatic localization by classifiers, thereby freeing human resources. Traditional detection methods extract middle- or low-level features describing the color, texture, and shape of targets. Intensity distribution differences between ships and water help to distinguish ship candidates from the sea, but their effectiveness varies across different sea types and states. Since the sea surface is more uniform than the target, Yang et al. [3] defined intensive metrics to distinguish anomalies from relatively similar backgrounds. Zhu et al. [4] first segmented images to obtain simple shapes, and then extracted shape and texture features from ship candidates. Finally, three classification strategies were used to classify ship candidates. In calm seas, the results of the above methods are stable. However, algorithms based on low-level features have poor robustness when waves, clouds, rain, fog, or reflections occur. In addition, manual feature selection is time consuming and strongly depends on the expertise of the user.

Consequently, later research has focused on how to extract and incorporate more ship features to detect ships more accurately and quickly. In recent years, convolutional neural networks (CNNs) have made many breakthroughs. Through a series of convolutional and pooling layers, a CNN can extract more distinguishable features. However, the accuracy of data-driven CNN detection methods largely depends on large-scale and high-quality training datasets. CNN-based intelligent methods that rely on high-level features are mainly divided into two categories. Two-stage algorithms first generate region proposals (for example, with a region proposal network) to select approximate object regions, and then a detection network classifies the candidate regions to obtain more accurate boundaries. Two-stage models mainly include R-CNN [5], Fast R-CNN [6], and Faster R-CNN [7]. One-stage detection methods include SSD [8], RFBNet [9], YOLOv1 [10], YOLO9000 [11], and YOLOv3 [12]. One-stage algorithms omit the region proposal process and directly regress the bounding boxes and assign the relevant class probabilities.

The accuracy of a supervised algorithm is closely related to the quality of its datasets. Although various public datasets such as ImageNet [13], PASCAL VOC [14], COCO [15], and DOTA [16] can be used to identify multiple general targets, they are not specifically meant for ship detection. Some large remote sensing target datasets, such as FAIR1M [17], include geographical information containing latitude, longitude, and resolution attributes to provide abundant fine-grained classification information. Qi et al. [18] designed the MLRSNet dataset for multi-label scene classification and image retrieval tasks. Zhou et al. proposed PatternNet [19], a large-scale dataset collected via Google Maps/API, which is suitable for deep learning-based image retrieval methods. These open large datasets have greatly accelerated the development of target detection. However, public datasets specific to maritime vessel detection are still not available.

To sum up, there are three main challenges for space-based thermal infrared all-day automatic ship detection research: (1) Due to the high security level of infrared data, training datasets of thermal infrared remote sensing images for ship detection are scarce. (2) During heat source imaging, the target and its boundary may be too indistinct to distinguish, which may lead to false alarms or missed detections. (3) Due to the lack of a clear connection between network parameters and approximate mathematical functions, the interpretability of CNNs is poor. A neural network can find as many ships as possible and predict accurate target positions, but it is not known which input information is useful.

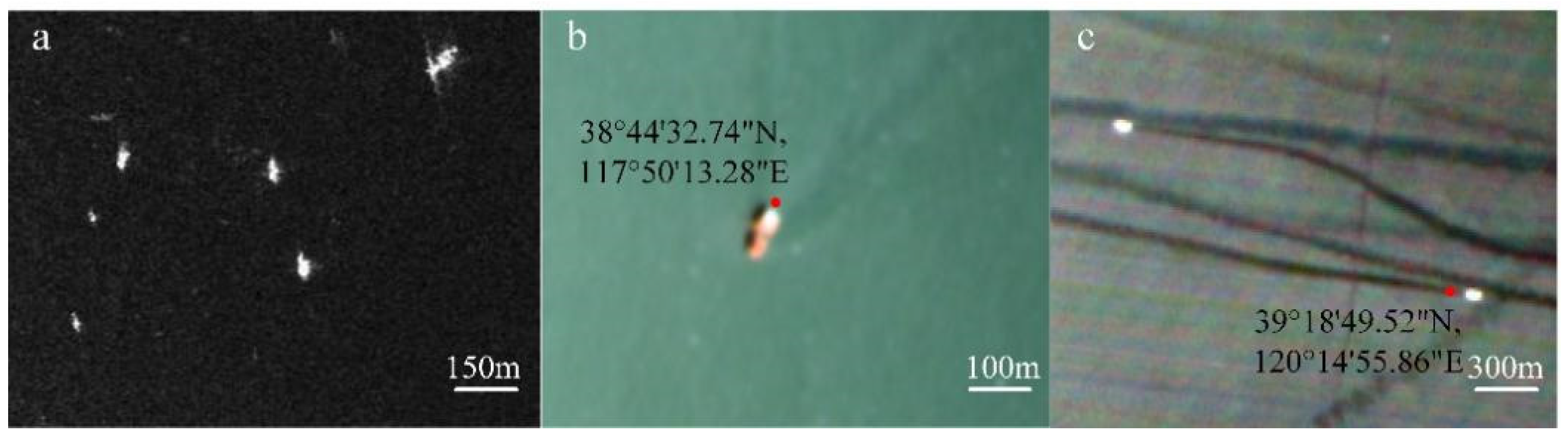

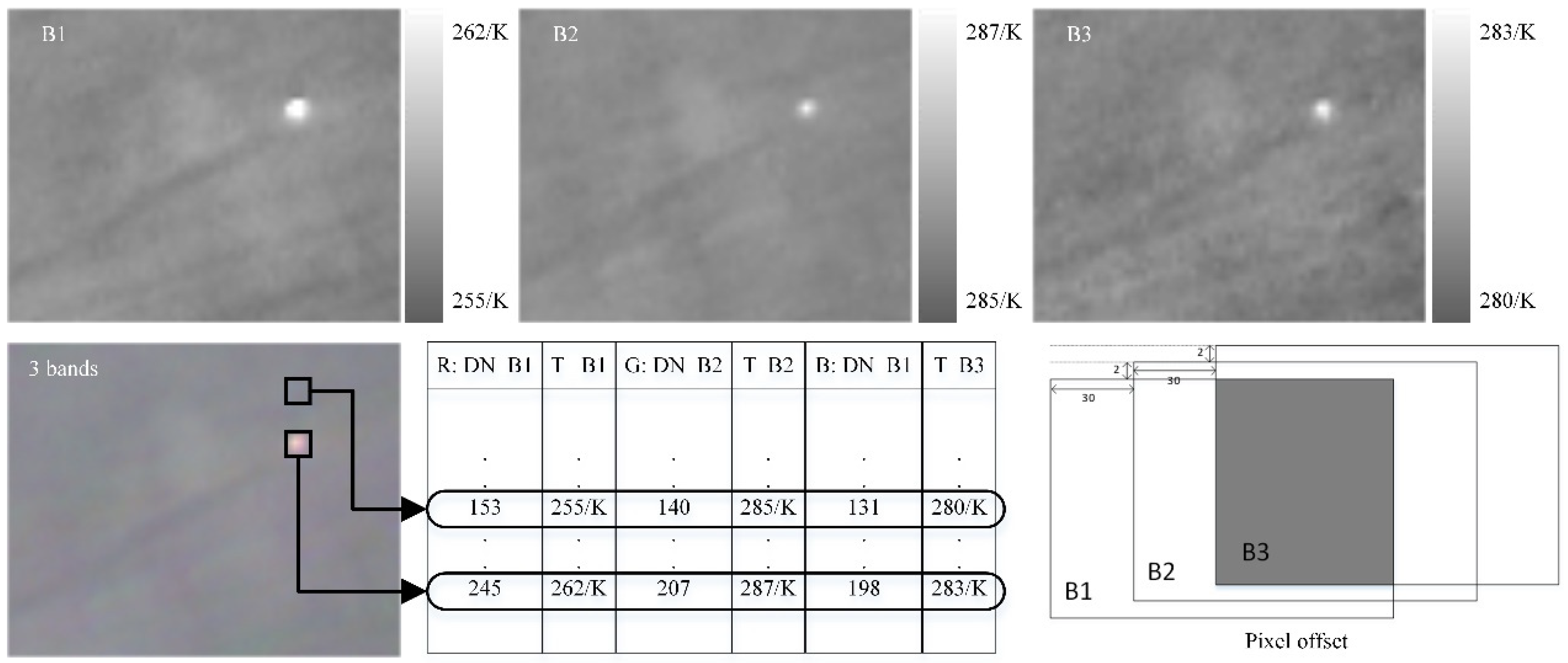

In this paper, we label a new three-band thermal infrared ship dataset (TISD) to address the above challenges. All images are real remote sensing images from the SDGSAT-1 thermal imaging system (TIS). SDGSAT-1 is designed for a sun-synchronous orbit at an altitude of 505 km (SDGSAT-1 website: http://www.sdgsat.ac.cn/, accessed on 14 October 2022). In order to describe human activities in detail, the satellite carries three payloads, namely thermal infrared, glimmer, and multispectral imagers, among which the TIS has a spatial resolution of 30 m and a swath width of 300 km. By utilizing the TISD, an advanced detector, namely the improved Yolov5s [20], is used to train the all-day ship target detection model. Combined with data feature analysis, the influence of band selection on target detection accuracy is evaluated. The all-day detection capability is verified with glimmer images. In practice, the proposed dataset is expected to promote the research and application of all-day ship detection. The main contributions of this paper are as follows:

(1) To the best of our knowledge, we are the first to annotate a three-band thermal infrared ship dataset. All images are real three-band remote sensing images from the SDGSAT-1 TIS. To enrich the proposed dataset, the selected images contain targets of different sizes and illumination levels in a variety of complex environments. TISD website: https://pan.baidu.com/s/1a9_iT-pdaSZ-hkBYU2Qciw?pwd=fgcq (accessed on 14 October 2022).

(2) Due to the lack of a clear connection between network parameters and approximate mathematical functions, it is not known which input information is useful. In order to explore the relationship between input information and detection accuracy, the optimum index factor (OIF), which reflects both the information content of and the redundancy between images of different bands, is used to evaluate the useful features in our dataset.

(3) Based on TISD, we used the state-of-the-art detector that we proposed previously, namely the improved Yolov5s [20], as the baseline to train different models on datasets built from different spectral bands. Combined with the above theoretical analysis, the influence of band combinations on detection accuracy is explored.

(4) The difficulties of existing dataset-based ship detection methods are summarized. By using up-sampling and registration as pre-processing, glimmer images are combined with thermal infrared remote sensing images to verify the all-day ship detection capability.

The organizational structure of this study is as follows. In Section 2, the related work on publicly available ship datasets is elaborated in detail. In Section 3, the description of the datasets is outlined. In Section 4, we present experiments and discuss the experimental results to validate the effectiveness of the proposed research. Finally, in Section 5, we summarize the content of this study.

2. Related Works

At present, RSIs from radar, optical, reflective infrared, and thermal infrared sensors are mainly used for ship detection, as shown in Figure 1. As an active microwave sensor, SAR can obtain high-resolution data under various weather conditions, and has been widely used in ocean surveillance [21,22]. With the development of deep learning and imaging technology, many automatic target detection algorithms for RSIs have been proposed to detect different targets. To capture the features of ships with large aspect ratios, Zhao et al. [23] proposed a new attention receptive pyramid network, which has asymmetric kernel sizes and various dilation rates. Due to the varying local clutter and low signal-to-clutter ratio in SAR images, Wang et al. [24] used a variance-weighted information entropy method to measure the local difference between a target and its neighborhood. Then, an optimal window selection mechanism based on multi-scale local contrast measures is utilized to enhance the target against the complex background. Considering the difference in gray distribution and shape between ships and clutter, Ai et al. [25] modeled the gray correlation of ship targets and the joint gray intensity distribution of a strong clutter pixel and its adjacent pixels with a two-dimensional joint lognormal distribution, which greatly reduced the false positives caused by speckle and local background non-uniformity. Gong et al. [26] presented a novel neighborhood-based ratio operator to produce a difference image for change detection in SAR images. Zhang et al. [27] proposed an unsupervised change detection method using saliency extraction; however, this method is not suitable for object detection in a single-frame image. Song et al. [28] generated robust training datasets by using synthetic SAR images and automatic identification system data; however, the acquisition of such data requires the establishment of ground base stations, which is limited by region and lacks real-time capability. Rostami et al. [29] proposed a new semi-supervised domain adaptation algorithm that uses existing optical image labels to transfer features learned from optical images to SAR images. For clarity, the existing general multi-target detection datasets that contain ship targets and the proposed TISD are summarized in Table 1.

As the only available fine-grained ship dataset, HRSC2016 [35] has been used as a baseline in many studies. Using the public ship dataset HRSC2016, Wang et al. [39] validated an improved encoder–decoder structure that added a batch normalization (BN) layer to speed up model training and introduced dilated convolutions at different rates to fuse features of different scales. However, some subcategories of HRSC2016 contain no more than ten ship instances, and some small ships were neglected during annotation. Given the lack of diversity in public datasets, Cui et al. [36] established HPDM-OSOD and proposed a novel anchor-free rotating ship detection framework, SKNet. The center key points and shape dimensions of ship targets, including width, height, and rotation angle, are utilized during modeling to avoid the many predefined anchors of rotating ship detectors. To address the limitations of fine-grained datasets, Han et al. [37] established a new twenty-class, three-level directional ship recognition dataset (DOSR). Li et al. [40] combined a classic saliency estimation algorithm with deep CNN object detection to ensure the extraction of large ships from multi-scale ships in high-resolution RSIs. Yao et al. [41] used a region proposal regression algorithm to identify ships in panchromatic images, but the large number of network parameters led to long prediction times. Due to the large size of remote sensing images, Zhang et al. [42] first utilized a support vector machine to classify water and non-water areas. However, ships close to shore are difficult to classify with this preprocessing separation method.

As opposed to SAR or spaceborne optical images, ground-based visual images can achieve better accuracy and real-time processing for ship detection, and can be widely used in port management, cross-border ship detection, autonomous shipping, and safe navigation. Li et al. [43] introduced an attention module into the YOLOv3 network to achieve real-time ship detection in real scenarios. Shao et al. [44] used the Seaships dataset [38] to train a CNN to predict the approximate position of ships, and then used salient region detection based on global contrast, together with coastline information, to correct the position of ships. For continuous video detection tasks, some accuracy has to be sacrificed to ensure real-time processing.

Due to the high secrecy of infrared remote sensing data, the supply of images is very limited; therefore, it is difficult to collect many positive samples of ships. Transfer learning is helpful when the amount of data is insufficient. Wang et al. [45] used optical panchromatic images to assist limited infrared data during auxiliary training; however, infrared and panchromatic images differ greatly in imaging principle. Song et al. [46] collected low-light ship images from infrared cameras mounted on ships. The dataset contains 3352 annotated images covering a variety of ship navigation states and interference scenarios. Li et al. [47] utilized MarDCT videos and images from fixed, mobile, and pan-tilt-zoom cameras [48], as well as the PETS2016 dataset [49], for visual performance evaluation. It must be noted that the above studies are not based on real spaceborne infrared remote sensing data [50,51], and infrared RSIs have irreplaceable value in the field of ship detection. Therefore, to make up for the lack of a public spaceborne thermal infrared dataset, we annotated a thermal infrared ship dataset in three bands based on SDGSAT-1 TIS images.

5. Discussion

Ships are important military targets, so real-time ship detection throughout the day has great military significance. Many scholars have studied the effectiveness and generalization of models using public datasets. However, due to the lack of infrared images, there are few available thermal infrared ship datasets. In this paper, a thermal infrared three-channel ship dataset is proposed, and a complete ship detection network model is designed based on a regression algorithm. Unlike visible remote sensing data, our dataset contains ships at night. As opposed to simulation data, the dataset we propose uses real remote sensing images, which is more conducive to real-time target detection on the satellite. The TISD is based on three-channel thermal infrared images from the SDGSAT-1 thermal imaging system, whereas the Landsat-8 thermal infrared sensor has only two channels. The TISD has an additional band, namely 8~10.5 µm; therefore, the proposed dataset contains rich spectral information. The dataset feature analysis in Section 3.4 and the experimental results in Table 6 show that the increase in spectral information is conducive to target detection. Instead of utilizing two-stage algorithms, our model is based on the one-stage Yolov5s, which is more conducive to fast prediction. In our model, dilated convolution can extract finer features for smaller ships, and the SE layer can pick out the more important features. As shown in Table 7, the accuracy of the proposed model is higher than that of other advanced models in sea scenes. In complex scenes, with a slight decrease in accuracy, our model parameters and floating-point operations are greatly reduced: our model's FLOPs are only 47.95% of those of the original Yolov5s. Thus, it is possible to detect ships from thermal infrared remote sensing images based on the lightweight Yolov5s model.
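The channel re-weighting idea behind an SE layer can be illustrated with a short sketch. This is a generic squeeze-and-excitation computation written in NumPy, not the implementation used in our model; the weight shapes, the omission of bias terms, and the function name `squeeze_excite` are assumptions made for illustration.

```python
import numpy as np

def squeeze_excite(features, w1, w2):
    """Generic squeeze-and-excitation re-weighting (illustrative only).

    features: array of shape (C, H, W)
    w1:       array of shape (C_reduced, C), first fully connected layer
    w2:       array of shape (C, C_reduced), second fully connected layer
    Biases are omitted for brevity (an assumption of this sketch).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = features.mean(axis=(1, 2))
    # Excitation: bottleneck with ReLU, then sigmoid gates in (0, 1).
    hidden = np.maximum(w1 @ squeezed, 0.0)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))
    # Scale: each channel is multiplied by its gate.
    return features * gates[:, None, None]

# Hypothetical example: 4 channels reduced to 2 in the bottleneck.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))
y = squeeze_excite(x, rng.normal(size=(2, 4)), rng.normal(size=(4, 2)))
```

Channels whose gate is close to 1 pass through almost unchanged, while channels with a small gate are suppressed, which is the sense in which such a layer "picks out" features.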

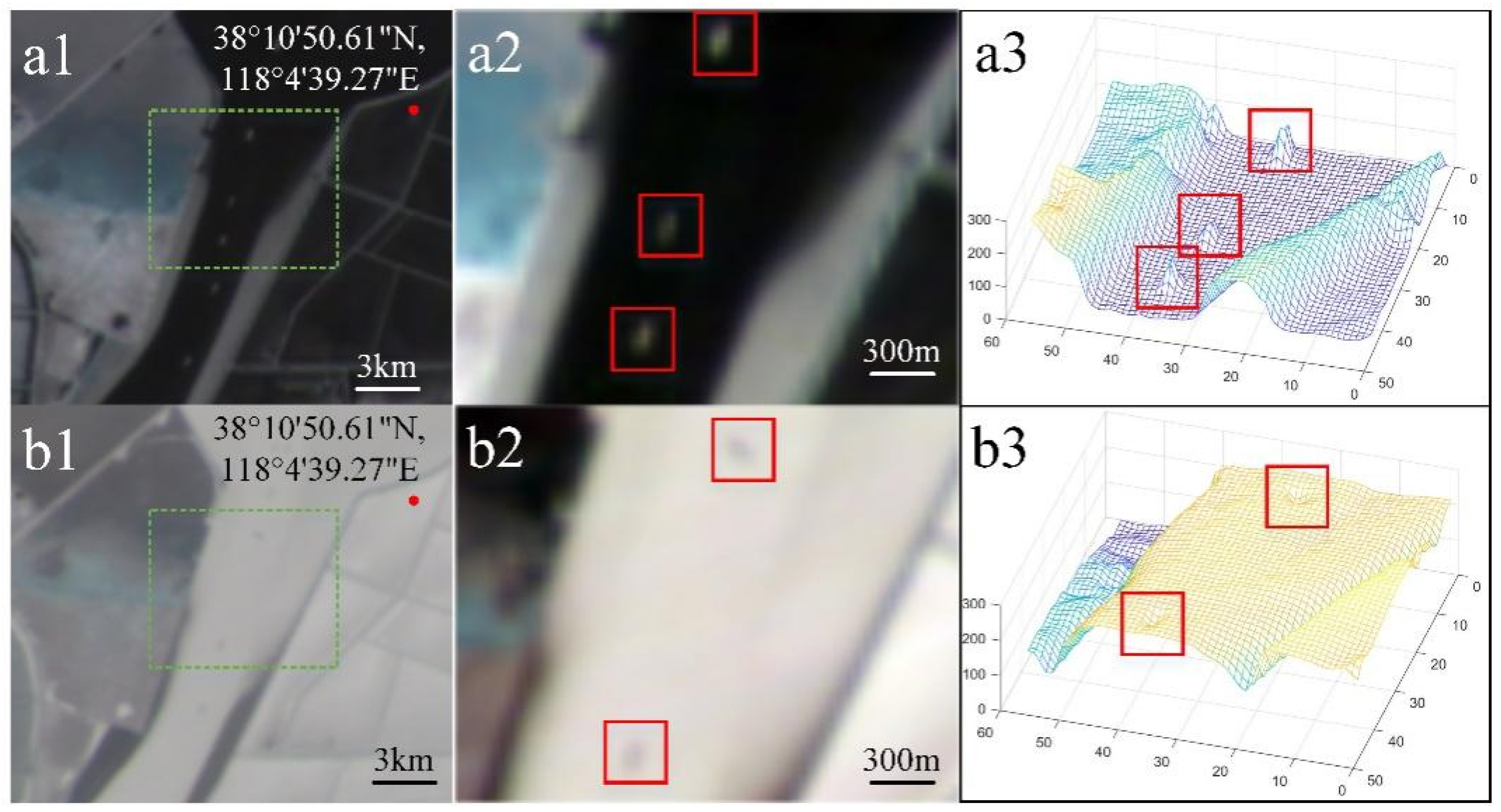

However, the following aspects can be further studied. First, an object on land is misidentified as a ship, as shown by the yellow box in Figure 11a. Because land surfaces are complex, sea-land segmentation can be applied as a preprocessing step in future work so that target detection is carried out only in the sea. Second, in Table 7, the detection accuracy at nighttime is lower than during the daytime. A possible reason is that the temperature difference between the ship and the water is too small at night, resulting in weak contrast between the intensity of the target and that of the background. In Figure 13a3, during the day, the intensity of the ship is much higher than that of the water. As shown in Figure 13b3, at night, the intensity of the ship is slightly lower than that of the water, resulting in a low signal-to-background ratio, which is not conducive to target detection. Future work should expand the nighttime ship dataset and enhance the target signal. Third, because they rely on a different imaging technology, glimmer datasets are a good complement to thermal infrared images. Our future work will focus on the expansion of glimmer datasets and on ship wake labeling to promote accurate ship detection at night.
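The day and night contrast argument above can be made concrete with a small sketch. The metric below (difference between the mean target intensity and the mean intensity of a surrounding ring, normalized by the ring's standard deviation) is an assumed definition chosen for illustration, not the measure used in our experiments; `local_contrast`, the box layout, and the `margin` parameter are hypothetical.

```python
import numpy as np

def local_contrast(image, box, margin=2):
    """Signed target-to-background contrast for one bounding box.

    box is (row_start, row_end, col_start, col_end), end-exclusive.
    The background is a ring of `margin` pixels around the box.
    Assumed metric: (mean_target - mean_ring) / std_ring.
    """
    r0, r1, c0, c1 = box
    target = image[r0:r1, c0:c1]
    # Window that contains the box plus the surrounding ring.
    wr0, wr1 = max(r0 - margin, 0), min(r1 + margin, image.shape[0])
    wc0, wc1 = max(c0 - margin, 0), min(c1 + margin, image.shape[1])
    window = image[wr0:wr1, wc0:wc1]
    # Mask out the target pixels so only the ring remains.
    ring = np.ones(window.shape, dtype=bool)
    ring[r0 - wr0:r1 - wr0, c0 - wc0:c1 - wc0] = False
    background = window[ring]
    return (target.mean() - background.mean()) / (background.std() + 1e-12)

# Synthetic water surface with mild texture (values 100 or 101).
rows, cols = np.indices((10, 10))
water = 100.0 + (rows + cols) % 2
day, night = water.copy(), water.copy()
day[4:6, 4:6] = 120.0    # ship much warmer than the water
night[4:6, 4:6] = 100.0  # ship slightly cooler than the water
day_contrast = local_contrast(day, (4, 6, 4, 6))
night_contrast = local_contrast(night, (4, 6, 4, 6))
```

In this synthetic case the daytime contrast is large and positive, while the nighttime contrast is small and negative, mirroring the behaviour described for Figure 13.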

6. Conclusions

In this paper, the difficulties of existing ship detection datasets are summarized. Due to the high secrecy level of infrared data, thermal infrared ship datasets are lacking. Moreover, both detection accuracy and speed need to be considered for ship detection.

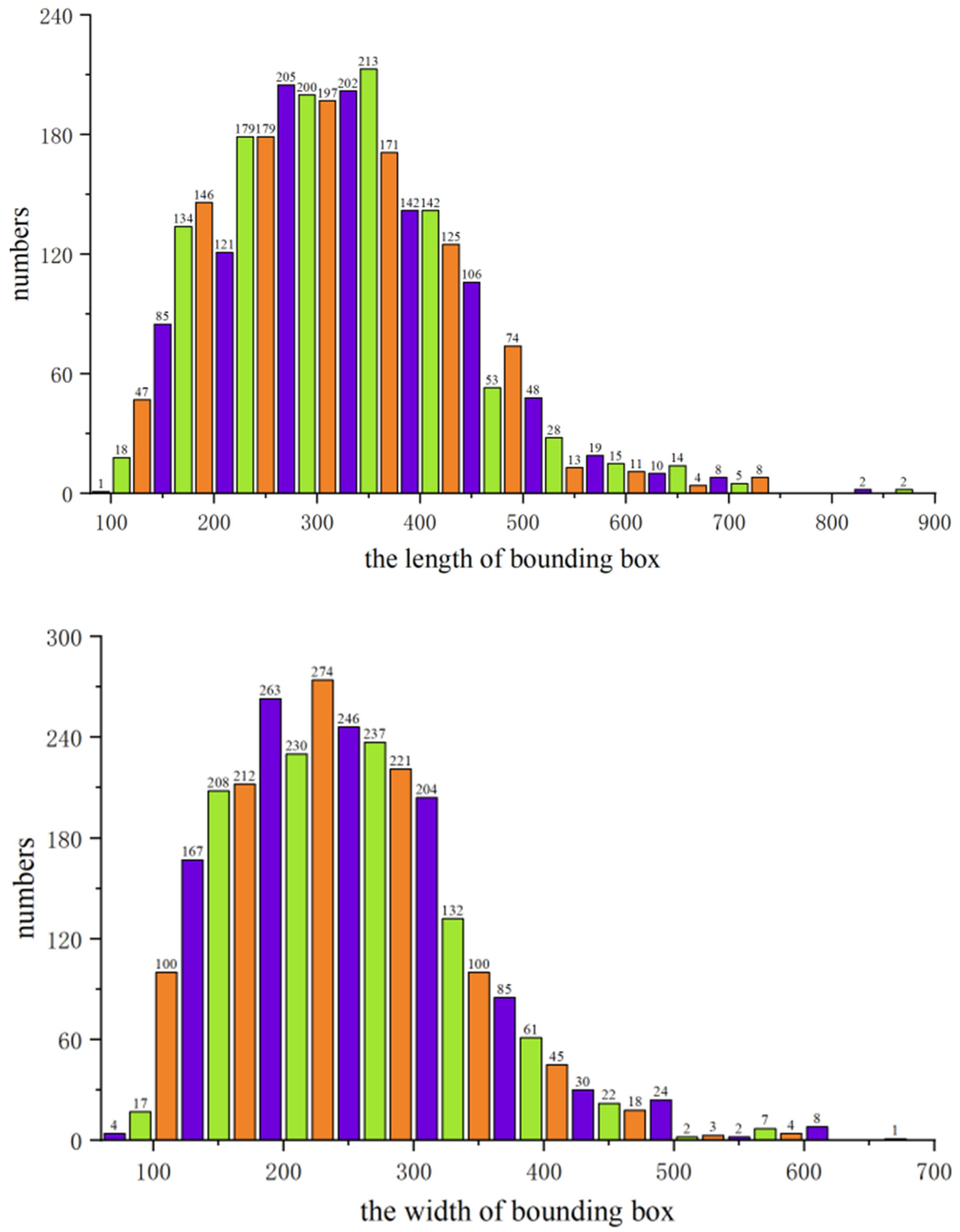

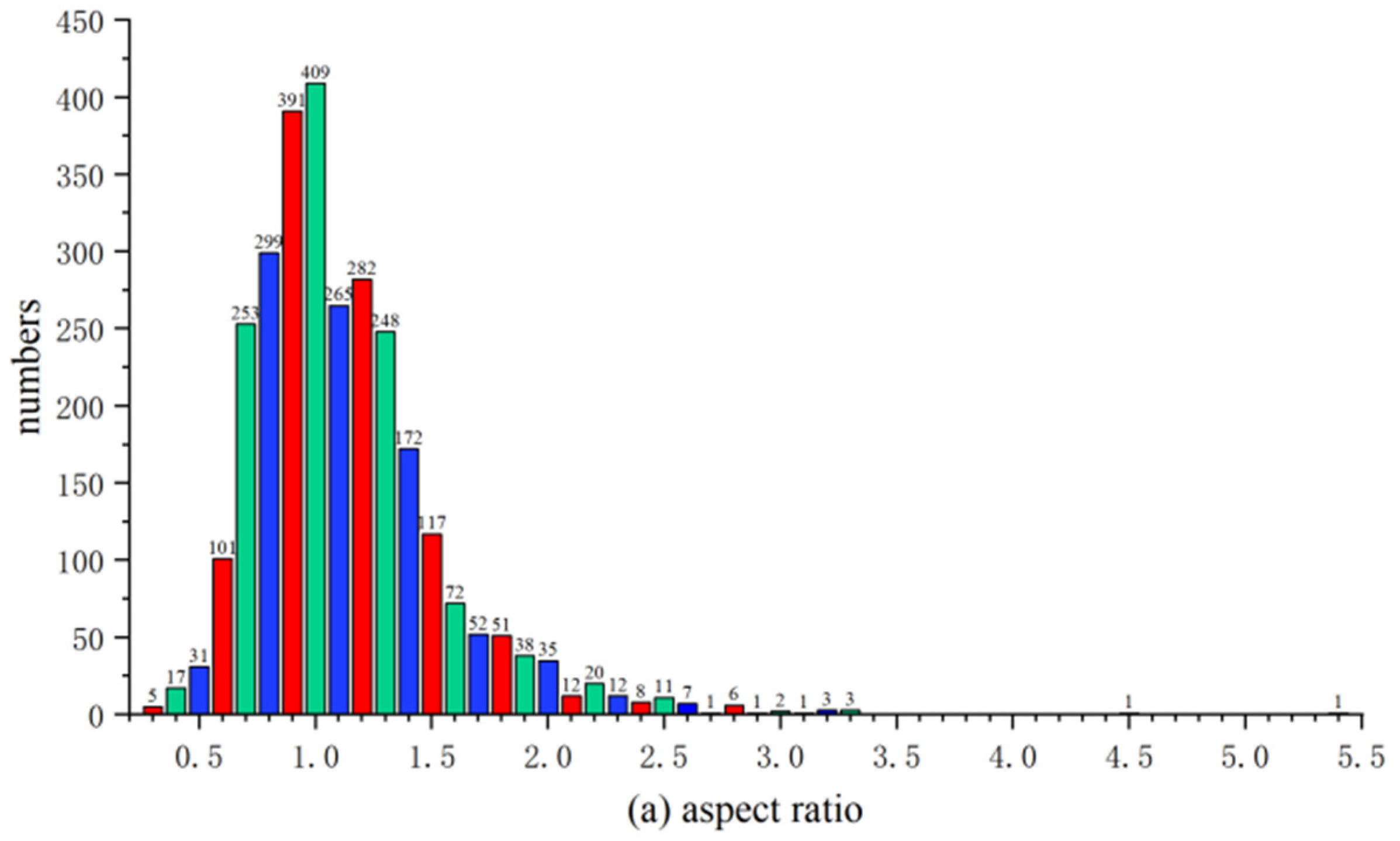

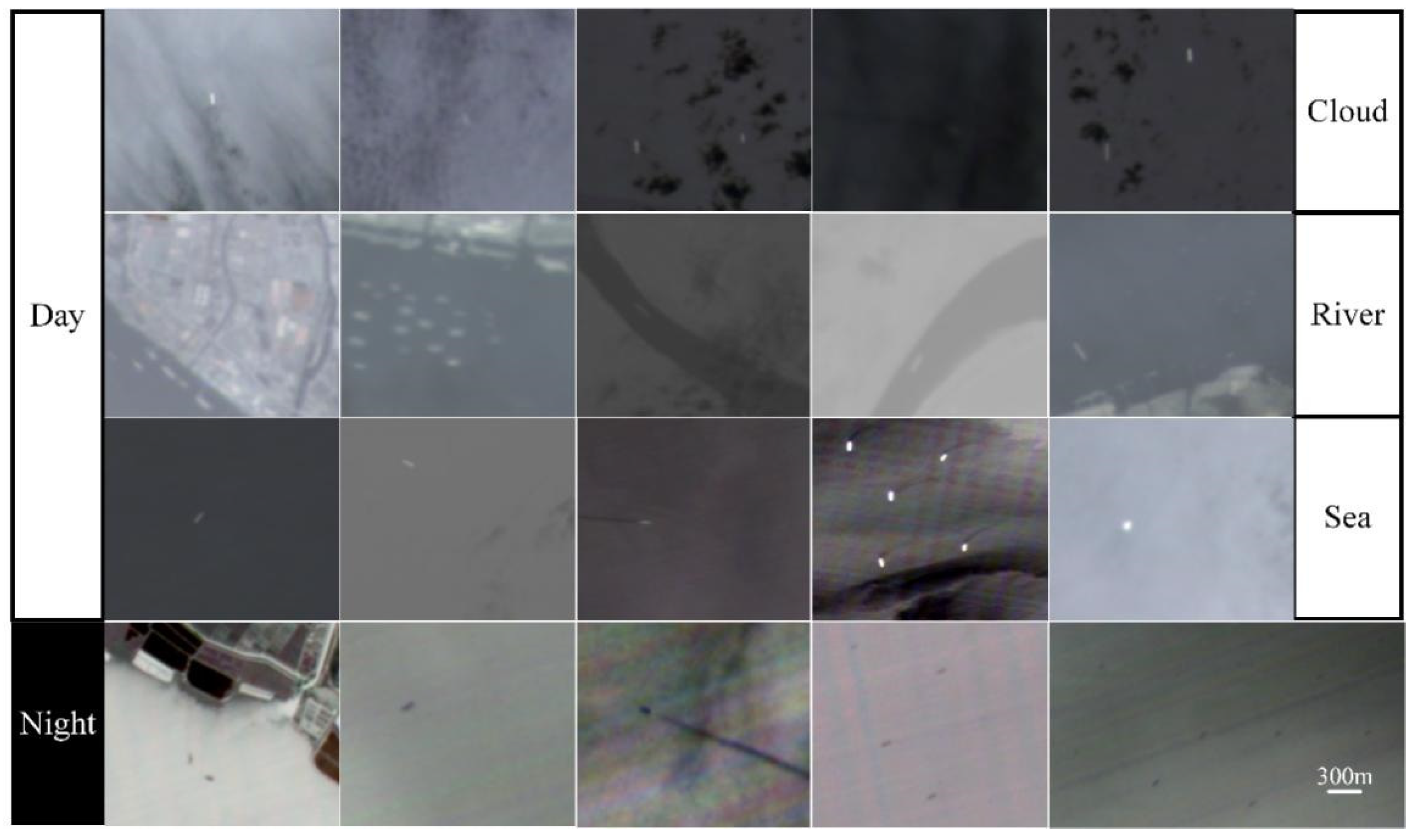

To address the above problems, we annotate what is, to the best of our knowledge, the first three-band thermal infrared ship dataset (TISD) to compensate for the lack of public spaceborne thermal infrared datasets. All images are provided by the SDGSAT-1 satellite thermal imaging system, and all ship targets are annotated with high-precision bounding boxes. After band-to-band registration and up-sampling, the TISD currently contains 2190 images with a resolution of 10 m and 12,774 targets with a wide range of aspect ratios. The dataset is carefully selected to cover river and sea scenes at different imaging times and with different amounts of cloud cover.
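The up-sampling step mentioned above can be pictured with a minimal sketch. It assumes nearest-neighbour resampling and a factor of 3 (from the 30 m TIS resolution to 10 m); the interpolation scheme actually applied to the TISD is not specified here, and `upsample_nearest` is a hypothetical helper name.

```python
import numpy as np

def upsample_nearest(band, factor=3):
    """Repeat every pixel `factor` times along rows and columns.

    Assumption: nearest-neighbour resampling. Bilinear or bicubic
    interpolation would produce smoother images.
    """
    band = np.asarray(band)
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)

coarse = np.array([[1, 2],
                   [3, 4]])
fine = upsample_nearest(coarse)  # each 30 m pixel becomes a 3 x 3 block
```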

By utilizing TISD, an all-day ship detection model is trained with an improved YOLO-based detector. Experiments show that the proposed method performs well; the detection accuracy is highest in sea scenes, reaching 81.15%. In cloudy and river scenes, with a slight decrease in accuracy, the computational complexity of the proposed algorithm is greatly reduced: our model's FLOPs are only 47.95% of those of the original Yolov5s.

Based on data feature analyses, an optimal band combination can improve detection accuracy. The standard deviation of a band reflects the amount of information it carries, and the correlation coefficient between bands reflects the redundancy between them. The optimum index factor (OIF) combines the standard deviation and the correlation coefficient. Experimental comparisons of different band combinations show that the OIF is positively correlated with detection accuracy to a certain extent.
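As an illustration of how the two statistics can be combined, the sketch below assumes the commonly used definition of the OIF, namely the sum of the band standard deviations divided by the sum of the absolute pairwise correlation coefficients. The exact variant used in our analysis is not reproduced here, and the function name and array layout are assumptions.

```python
from itertools import combinations

import numpy as np

def optimum_index_factor(bands):
    """OIF of a band combination, under the commonly used definition.

    bands: array-like of shape (n_bands, H, W).
    Returns sum(std_i) / sum(|r_ij|) over all band pairs i < j.
    A constant band has an undefined correlation and yields NaN.
    """
    flat = np.asarray(bands, dtype=np.float64)
    flat = flat.reshape(flat.shape[0], -1)
    stds = flat.std(axis=1)      # information carried by each band
    corr = np.corrcoef(flat)     # pairwise correlation matrix
    redundancy = sum(abs(corr[i, j])
                     for i, j in combinations(range(flat.shape[0]), 2))
    return stds.sum() / redundancy

# Hypothetical three-band patch.
rng = np.random.default_rng(0)
patch = rng.normal(size=(3, 32, 32))
oif = optimum_index_factor(patch)
```

Under this definition, a combination scores higher when its bands vary strongly (large standard deviations) and overlap little (small correlations).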

Combined with glimmer images, the model based on TISD is verified to be capable of all-day ship detection. In practice, the proposed dataset is expected to promote the research and application of all-day spaceborne ship detection.