1. Introduction

Synthetic aperture radar (SAR) is an active remote-sensing imaging system. Compared with optical sensors, the main advantage of SAR sensors is that they can perform observation tasks regardless of light intensity and weather conditions. The microwave signal transmitted by SAR sensors has strong penetration ability, so remote-sensing images can be obtained without occlusion by clouds or fog [1]. With the continuous increase in SAR platforms, such as aircraft and satellites, a large number of SAR images have been generated, and automatic target detection in SAR images has become an important research topic. Recently, ship detection in SAR images has received increased attention because of its wide range of applications. In the civilian field, it supports the monitoring and management of ocean freight; in the military field, it aids tactical deployment and improves the early-warning capabilities of coastal defense.

Traditional SAR target-detection methods are mainly based on the statistical distribution of background clutter, differences in contrast, and polarization information. The constant false alarm rate (CFAR) method [2], based on statistical modeling of clutter, is one of the most widely used. For a given false-alarm rate, CFAR derives an adaptive threshold from the statistical distribution of the background clutter and then compares each pixel's intensity with that threshold to separate target pixels from the background. Traditional methods rely mostly on manual design and require considerable prior information, which makes it difficult to extract complex features, and they generalize poorly.
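The thresholding step described above can be illustrated with a minimal sketch. It assumes the cell-averaging variant of CFAR, a one-dimensional power profile, and exponentially distributed clutter power; the function name `ca_cfar_1d` and its parameters are illustrative and are not taken from [2].

```python
def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1-D list of non-negative power samples.

    num_train: training cells on EACH side of the cell under test.
    num_guard: guard cells on each side, excluded from the clutter estimate.
    pfa:       desired probability of false alarm.
    """
    n = len(power)
    num_cells = 2 * num_train
    # Threshold multiplier for exponentially distributed clutter power (assumption).
    alpha = num_cells * (pfa ** (-1.0 / num_cells) - 1.0)
    half = num_train + num_guard
    detections = [False] * n
    # Cells too close to the borders are left undetected in this sketch.
    for i in range(half, n - half):
        left = power[i - half : i - num_guard]
        right = power[i + num_guard + 1 : i + half + 1]
        clutter_level = (sum(left) + sum(right)) / num_cells
        # The threshold adapts to the local clutter estimate.
        detections[i] = power[i] > alpha * clutter_level
    return detections


# Flat clutter with one bright sample standing in for a ship.
profile = [1.0] * 100
profile[50] = 50.0
hits = ca_cfar_1d(profile)
```

Because the threshold scales with the local clutter estimate, the same `pfa` setting can be used over calm and rough sea regions, which is the property that gives the method its name.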

There are also detection methods based on machine learning. These methods learn features from target images and pay more attention to feature extraction, and the features they extract are generally more interpretable. In [3], a boosting decision tree is used to complete the detection task. To obtain more rotation-invariant features, [3] also hand-designs a spatial-frequency channel feature by integrating features constructed in the frequency domain with the original spatial channel features, and refines the features by subspace learning. Although the features extracted by machine learning methods are more representative, the sliding-window operation reduces detection efficiency.

With the development of deep learning, deep convolutional neural networks (CNNs) have become the mainstream approach to object detection. Deep neural networks have a more powerful feature-extraction capability than traditional methods. At present, target-detection algorithms based on deep learning fall mainly into two categories. The first is the two-stage detector, represented by Faster R-CNN [4], which first generates candidate regions likely to contain targets and then performs detection on them. Its detection accuracy is high, but its efficiency is low. The second is the one-stage detector, mainly represented by the single-shot multibox detector (SSD) [5] and the You-Only-Look-Once (YOLO) [6] series. This type of algorithm does not generate candidate regions and detects targets directly through regression; its efficiency is high, but its accuracy is not as good as that of the first type.

Object-detection methods based on CNNs can also be divided into anchor-based and anchor-free categories. Faster R-CNN, SSD, and YOLOv3 [7] are anchor-based methods; they adopt anchors, which are a group of rectangular boxes obtained before training by clustering the boxes of the training set with methods such as K-means. Anchors represent the main distribution of the width and height scales of the objects in the dataset and help the network find region proposals. In comparison, anchor-free methods, such as CenterNet [8] and the fully convolutional one-stage object detector (FCOS) [9], use other strategies for modeling the object in the detection head instead of adopting predefined anchors. However, CNN-based object-detection methods lose some spatial information during feature extraction because of the locality of convolution and the down-sampling in pooling. Some structures, such as the feature pyramid network (FPN) [10], fuse deep and shallow features to enhance the information-capture ability of a model, but they increase the number of parameters and make the model more time-consuming.
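As an illustration of how anchors can be clustered from a training set, the sketch below runs K-means on (width, height) pairs. It assumes the 1 − IoU style of assignment popularized by the YOLO series and a deterministic initialization chosen only for reproducibility; the function names and the toy box sizes are hypothetical.

```python
def wh_iou(a, b):
    """IoU of two boxes given as (width, height), aligned at a common corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=50):
    """Cluster (width, height) pairs into k anchors using IoU as similarity."""
    boxes = sorted(boxes, key=lambda b: b[0] * b[1])
    # Deterministic start (assumption): k boxes spread over the area-sorted list.
    centers = [boxes[(2 * i + 1) * len(boxes) // (2 * k)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Assign each box to the anchor it overlaps most.
            best = max(range(k), key=lambda j: wh_iou(box, centers[j]))
            clusters[best].append(box)
        new_centers = []
        for j, members in enumerate(clusters):
            if not members:
                # An empty cluster keeps its previous center.
                new_centers.append(centers[j])
                continue
            mean_w = sum(m[0] for m in members) / len(members)
            mean_h = sum(m[1] for m in members) / len(members)
            new_centers.append((mean_w, mean_h))
        if new_centers == centers:
            break
        centers = new_centers
    return centers


# Toy ground-truth sizes: three small ships and three large elongated ones.
toy_boxes = [(10, 10), (12, 11), (11, 12), (50, 20), (52, 22), (48, 18)]
anchors = kmeans_anchors(toy_boxes, k=2)
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, which matters for datasets where most ships are small.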

In remote-sensing data processing and analysis, there are also many state-of-the-art deep learning models. These methods use multimodal remote-sensing data, including SAR data, hyperspectral (HS) data, multispectral (MS) data [11], and light-detection-and-ranging (LiDAR) data [12], to design a multimodal deep learning framework for remote-sensing image classification. In [13], the authors used a two-branch feature-fusion CNN, in which one branch is a two-tunnel CNN that extracts spectral–spatial features from HS imagery and the other is a CNN with a cascade block that extracts features from LiDAR or high-resolution visual images.

As a novel neural network structure, the transformer [14] provides a new way of thinking for vision tasks. The transformer was first used in natural-language processing (NLP). It uses a non-recurrent network structure, built from an encoder–decoder and a self-attention mechanism [15], to achieve the best performance in machine translation. The successful application of transformers in NLP has led researchers to examine this structure and test its application in computer vision. Some backbones that use a transformer structure instead of convolution, such as ViT [16] and the Swin Transformer [17], have been shown to perform better than CNNs. Through self-attention, transformers exchange information beyond a local range, which rapidly expands the effective receptive field of the features.
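To make the self-attention mechanism concrete, the following is a minimal sketch of scaled dot-product attention over a handful of tokens. It deliberately omits the learned query, key, and value projections and the multi-head structure used in [14], so it should be read as an illustration of the weighting step only; the function names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention.

    Each argument is a list of equal-length vectors (lists of floats).
    Returns the attended outputs and the attention weights per query.
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, including distant ones.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)
        weights.append(w)
        dim = len(values[0])
        outputs.append([sum(wj * v[d] for wj, v in zip(w, values)) for d in range(dim)])
    return outputs, weights


# Three toy tokens; in self-attention the same tokens act as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attn = self_attention(tokens, tokens, tokens)
```

Every query receives a non-zero weight for every key, so a single layer already mixes information across all positions, whereas a convolution only mixes within its kernel.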

However, two main problems must be addressed when using transformers as backbones for SAR ship detection. First, the background of off-shore SAR ship images is very simple, so the global relation modeling of a transformer correlates redundant background information. Second, inshore ships in SAR images resemble the coast and have blurred contours, which makes them difficult to distinguish from the background. The features extracted by a transformer therefore need to be rebuilt with more object detail so that the detector can focus on ship targets whose backgrounds look similar.

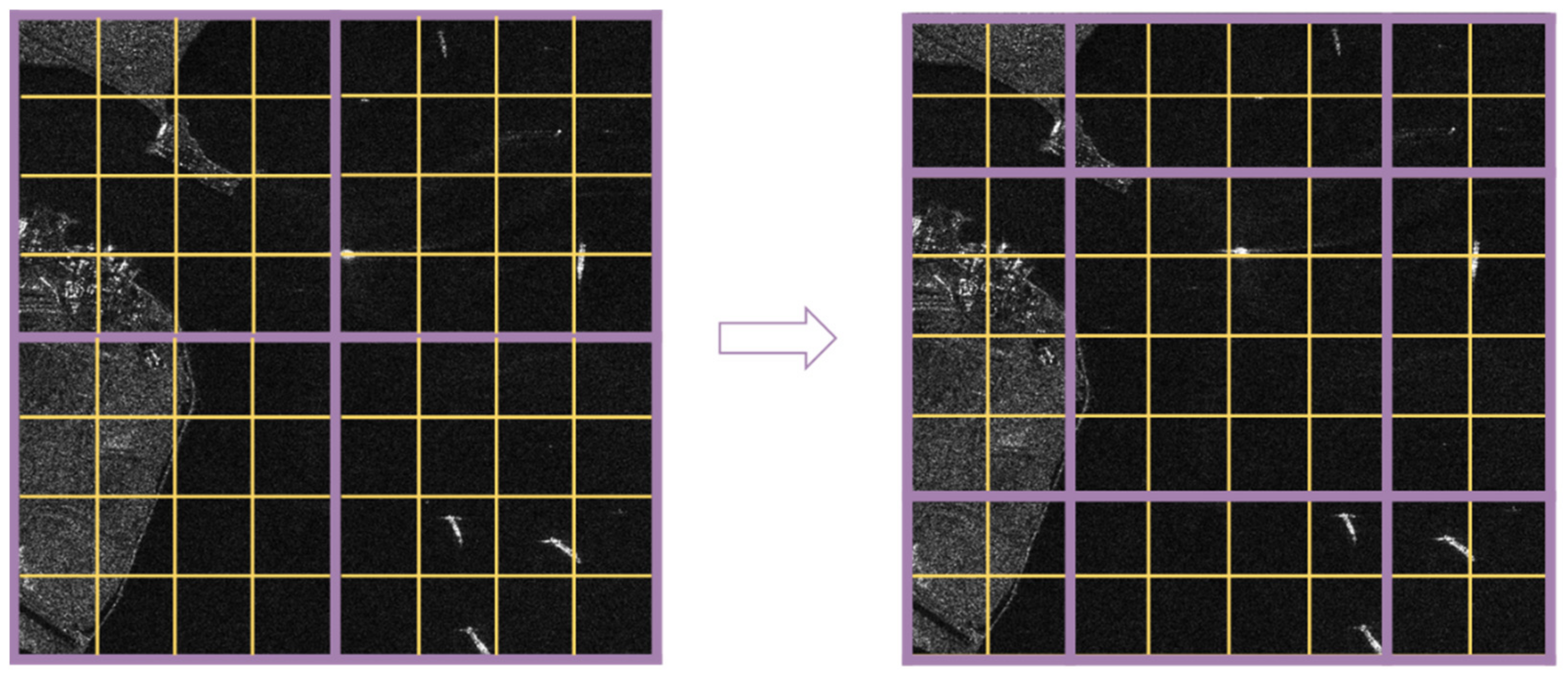

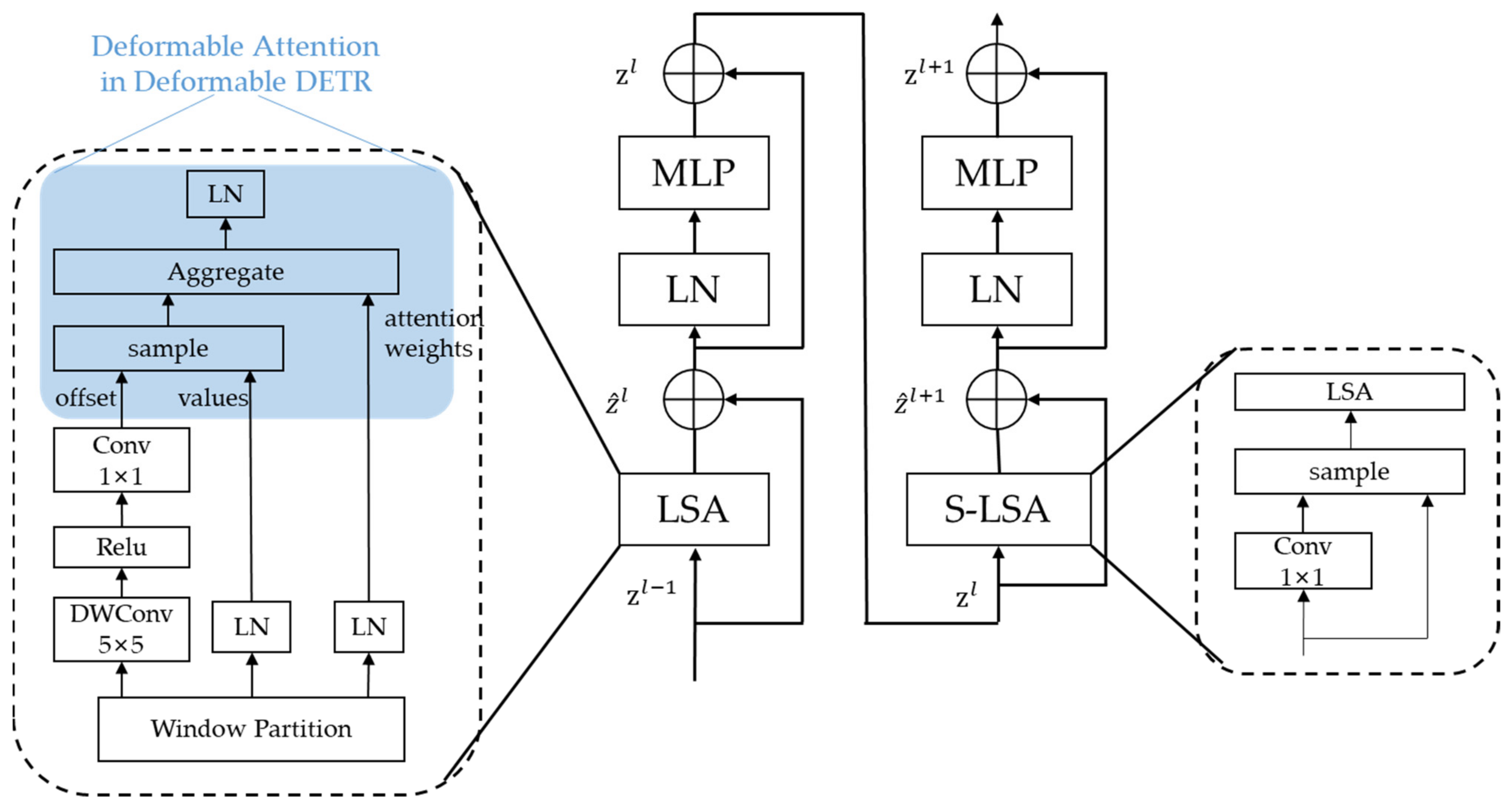

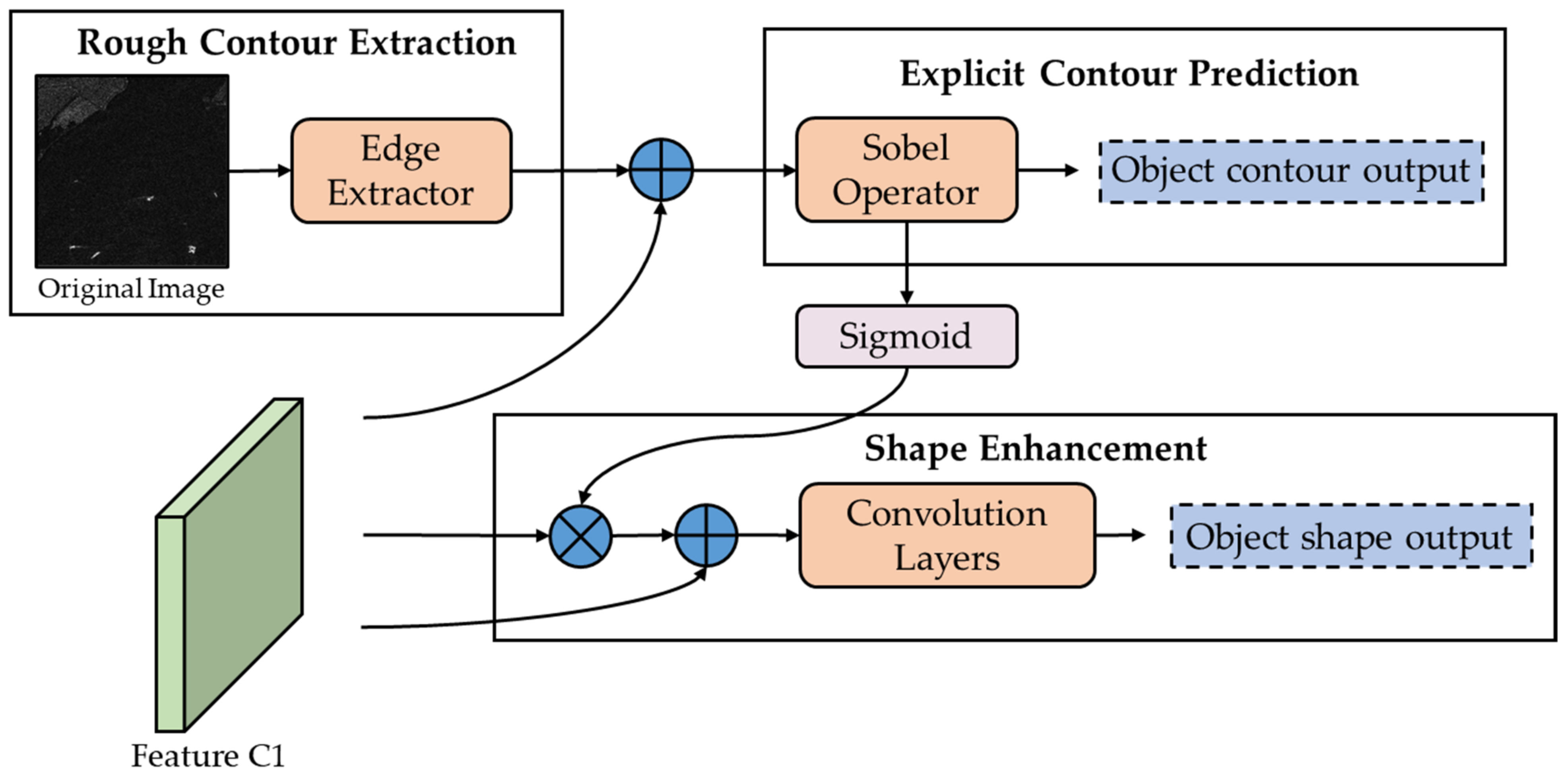

Therefore, in this paper, we propose a backbone with a local-sparse-information-aggregation transformer and a contour-guided shape-enhancement module. First, we introduce the Swin Transformer as the basic backbone architecture. To effectively aggregate the sparse meaningful cues of small-scale ships, a deformable attention mechanism replaces the original self-attention mechanism; it generates a data-dependent offset and samples more meaningful keys for each query. Because the cyclic shift in the SW-MSA of the Swin Transformer causes erroneous attention calculation, we adopt a sampled query to exchange information between adjacent windows. Second, we take the fully convolutional one-stage object detector (FCOS) framework as the basic detection network. To enforce the contour constraints on the transformer, the shallowest feature of the FPN is input into the contour-guided shape-enhancement module. The enhanced feature is then incorporated into the detection head of the FCOS framework to obtain the detection results.
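The idea of sampling keys at data-dependent offsets can be sketched as follows. This is only an illustration under stated assumptions, not the proposed module: the offset generator `toy_offsets` is a hypothetical stand-in for a learned network, a single query and a single head are used, and the sampled features serve as both keys and values.

```python
import math


def bilinear_sample(fmap, y, x):
    """Sample a feature map (rows of feature vectors) at a fractional location."""
    h, w = len(fmap), len(fmap[0])
    y = min(max(y, 0.0), h - 1.0)  # clamp to the map borders
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return [
        (1 - dy) * (1 - dx) * fmap[y0][x0][c]
        + (1 - dy) * dx * fmap[y0][x1][c]
        + dy * (1 - dx) * fmap[y1][x0][c]
        + dy * dx * fmap[y1][x1][c]
        for c in range(len(fmap[0][0]))
    ]


def toy_offsets(query, num_points):
    """Hypothetical offset generator: a fixed function of the query feature."""
    shift = (0.5 * math.tanh(query[0]), 0.5 * math.tanh(query[1]))
    return [shift] * num_points


def deformable_attention(fmap, query_pos, ref_points, offset_fn):
    """Attend from one query to features sampled at shifted reference points."""
    qy, qx = query_pos
    query = fmap[qy][qx]
    # One (dy, dx) offset per reference point, predicted from the query.
    offsets = offset_fn(query, len(ref_points))
    sampled = [
        bilinear_sample(fmap, ry + dy, rx + dx)
        for (ry, rx), (dy, dx) in zip(ref_points, offsets)
    ]
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(a * b for a, b in zip(query, s)) for s in sampled]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    out = [sum(wt * s[c] for wt, s in zip(weights, sampled)) for c in range(len(query))]
    return out, weights


# 4x4 toy map whose two channels store the row and column index.
toy_map = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
corners = [(0, 0), (0, 3), (3, 0), (3, 3)]
feature, attn = deformable_attention(toy_map, (1, 1), corners, toy_offsets)
```

Only four sampled locations enter the softmax here, instead of all sixteen positions, which is the sense in which such attention is sparse.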

The main contributions of this paper are summarized as follows:

To address the detection of small-scale ships in SAR images, we propose a local-sparse-information-aggregation transformer as the backbone, based on the Swin Transformer architecture. It effectively fuses the meaningful cues of small ships through the sparse-attention mechanism of deformable attention.

When replacing the self-attention mechanism with the deformable attention mechanism, a data-dependent offset generator is incorporated to obtain more salient features for small-scale ships in SAR images.

To better enhance the SAR-detected ships with blurred contour in the features extracted by the transformer and to distinguish them from interference, we propose a contour-guided shape-enhancement module for explicitly enforcing the contour constraints on the one-dimensional transformer architecture.

6. Conclusions

Due to the multi-view imaging principle of SAR, its imaging process is not limited by time or bad weather. The detection of ships in SAR images is a very important application in both military and civilian fields. However, background clutter, such as coasts, islands, and sea waves, causes previous object detectors to easily miss ships with blurred contours, which is a major challenge in SAR ship detection. Therefore, in this paper, we proposed a local-sparse-information-aggregation transformer with explicit contour guidance for ship detection in SAR images. This work was based on the Swin Transformer architecture and the FCOS detection framework. We used a deformable-attention mechanism with a data-dependent offset to effectively aggregate the sparse meaningful cues of small-scale ships. Moreover, a novel contour-guided shape-enhancement module was proposed to explicitly enforce the contour constraints on the one-dimensional transformer architecture.

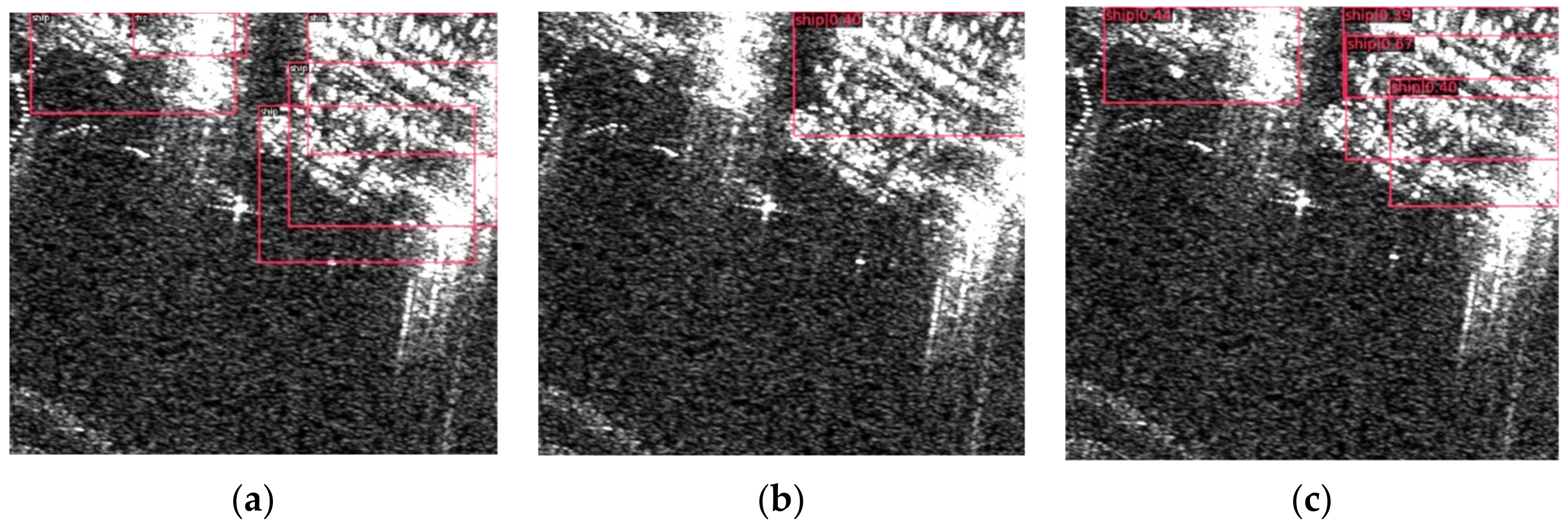

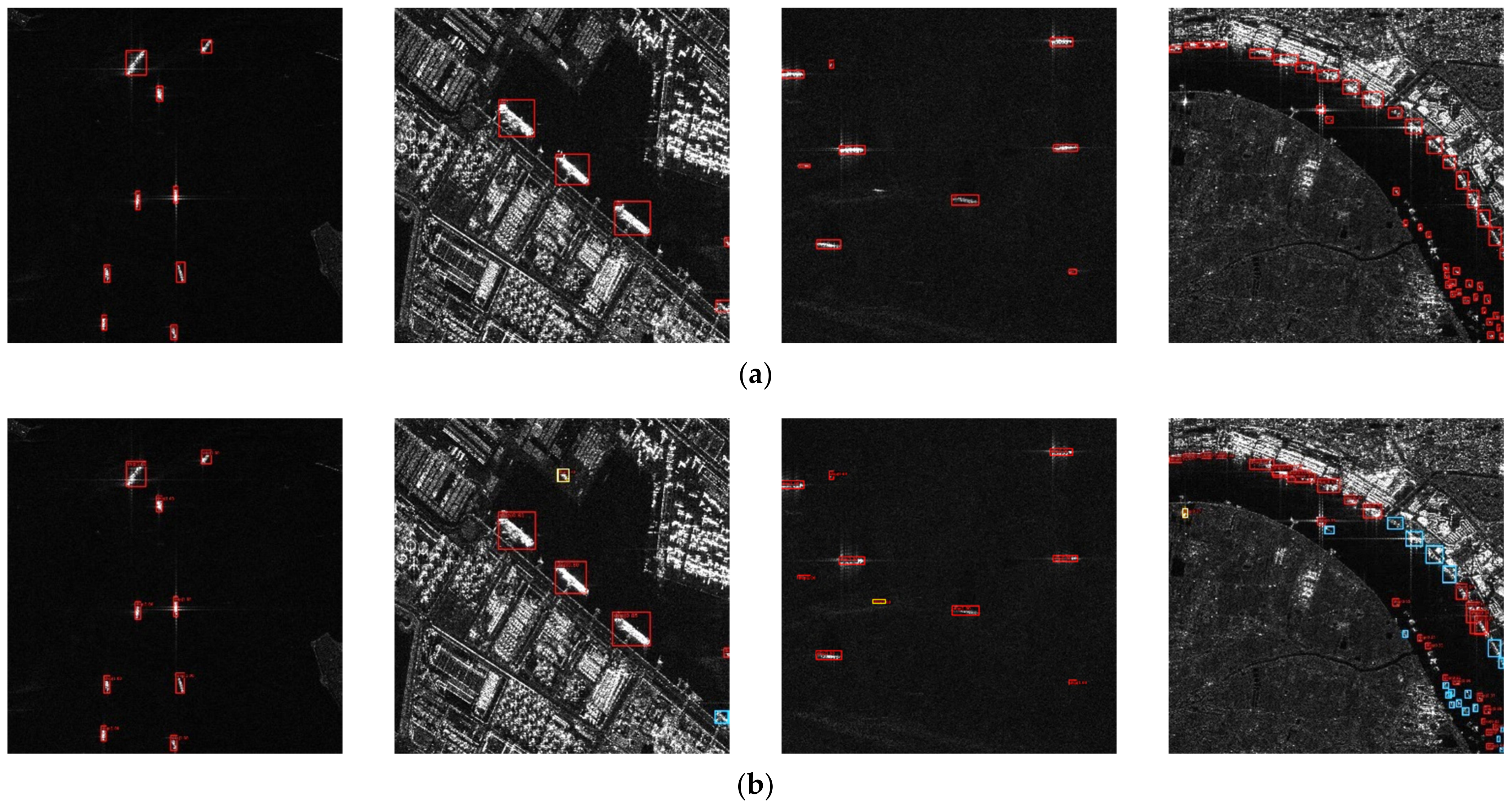

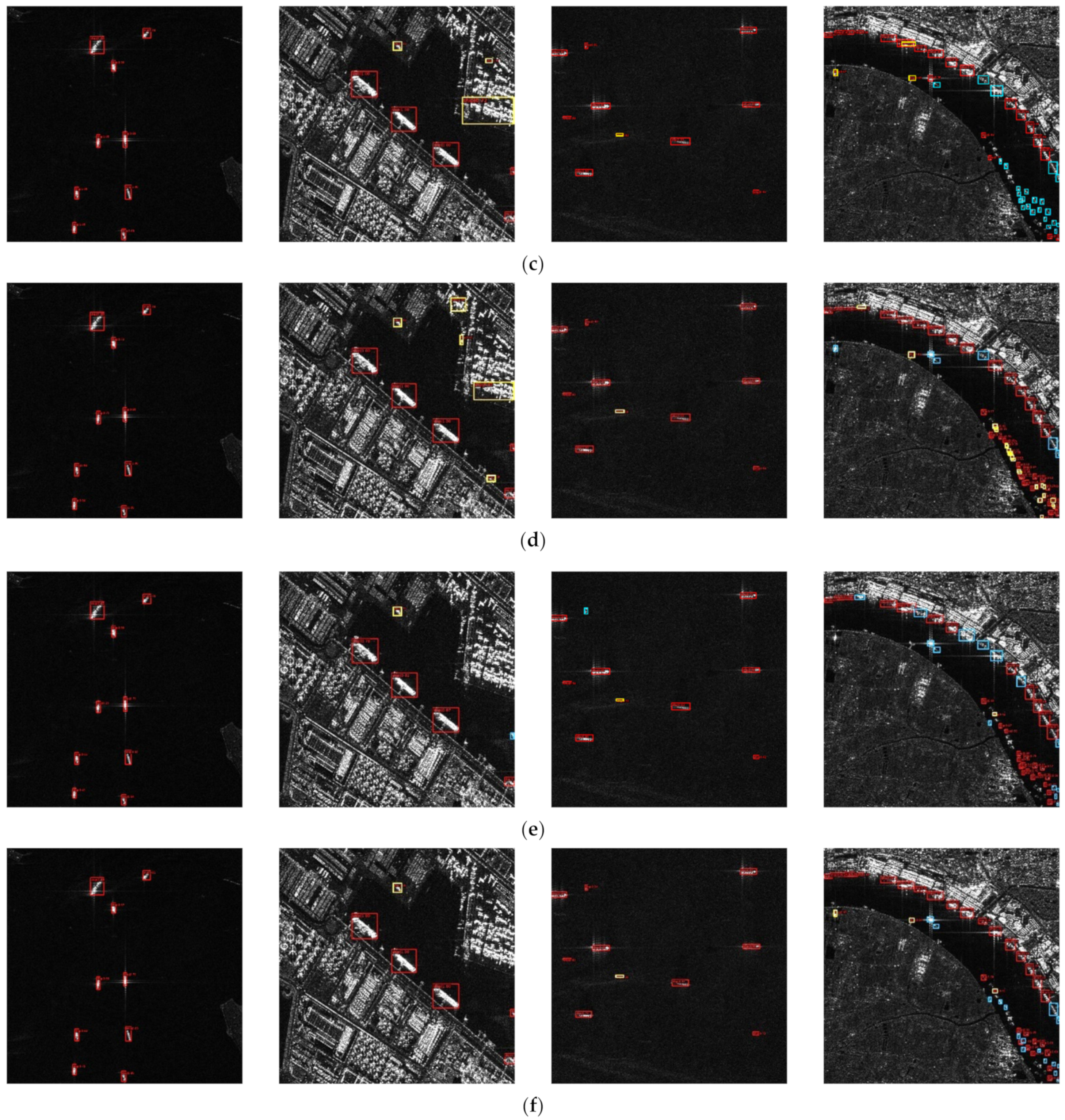

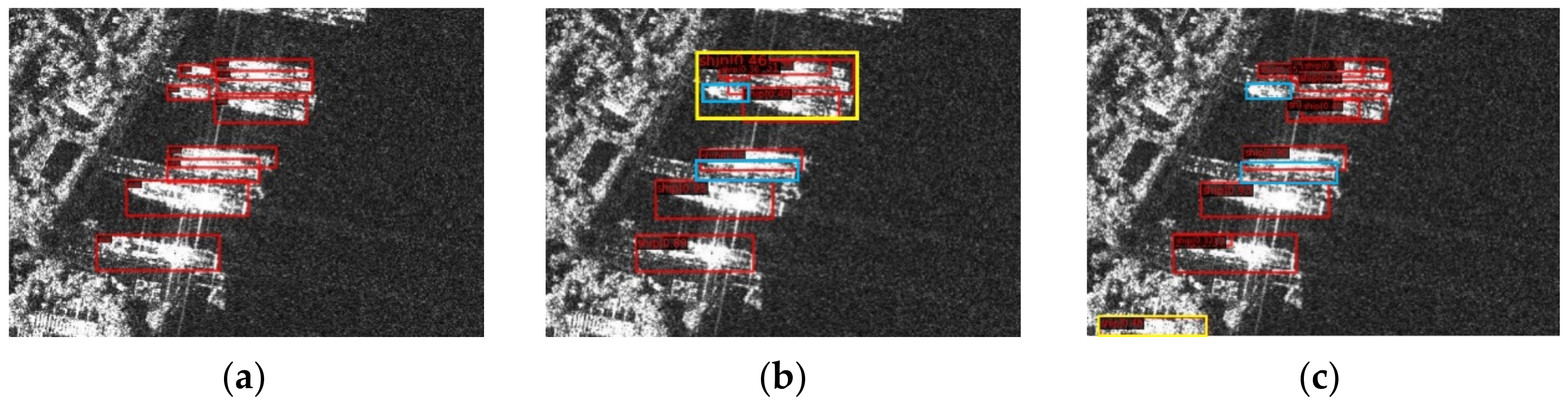

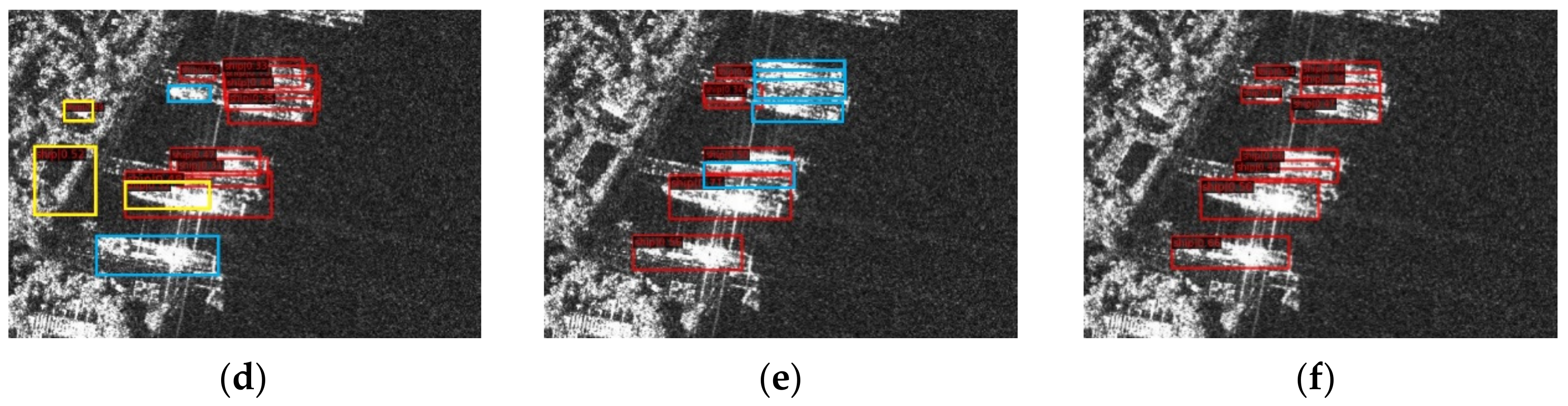

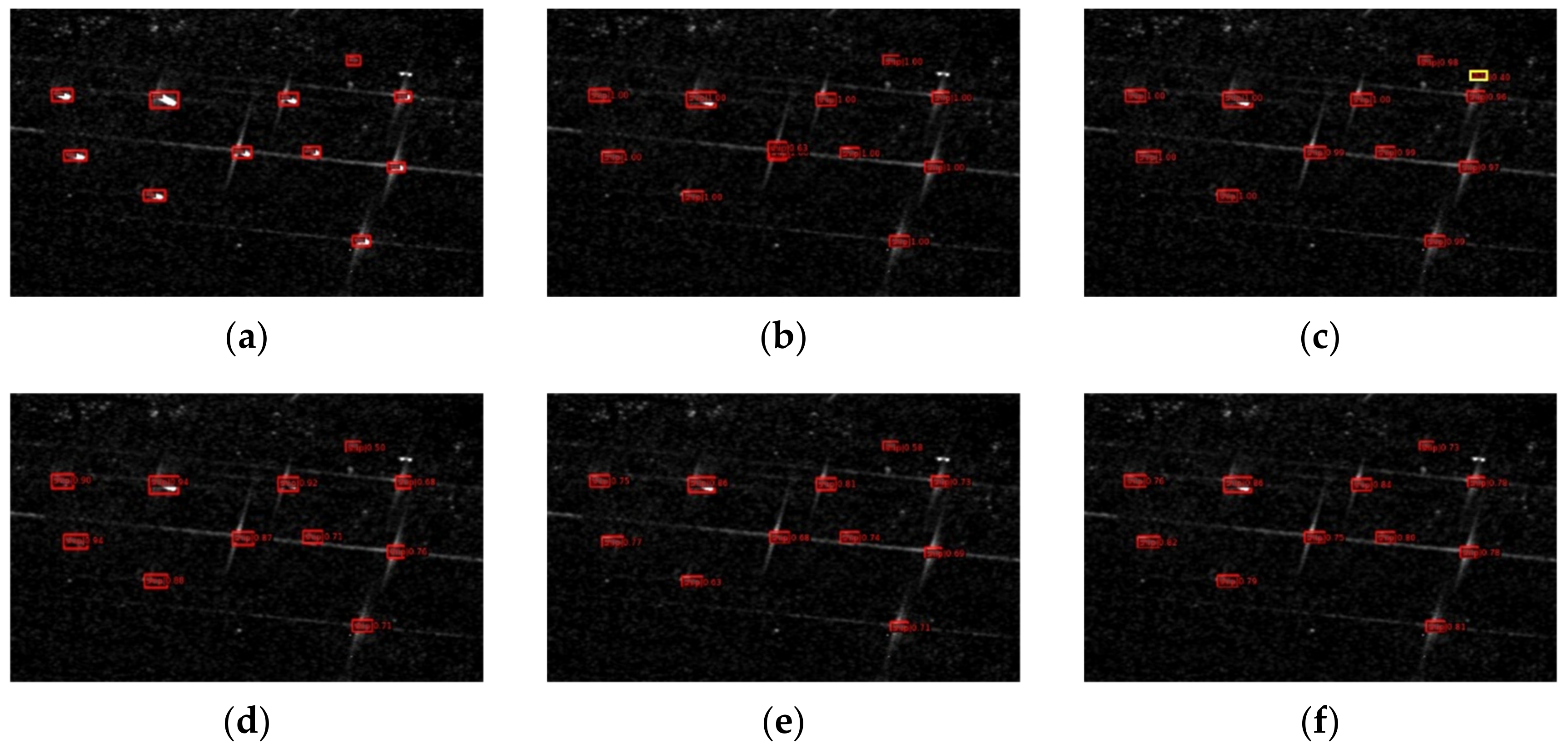

We conducted an ablation study and comparison experiments on the HRSID and SSDD datasets. The experimental results showed that the proposed local-sparse-information-aggregation transformer block and contour-guided shape-enhancement module effectively improve the performance of the transformer as a feature-extraction backbone, raising AP50 by 3.9% over the original method. Compared with existing object-detection algorithms, our method was more accurate than single-stage, two-stage, and anchor-free methods. The visualization results showed that our method can better detect ship targets in most scenes, including some scenes with strong interference. Our future work will combine the transformer structure with the convolution structure, as the combination of global and local information can extract stronger semantic features from SAR images. In addition, we will design an edge extractor aimed specifically at SAR images. A lightweight design also needs to be considered: network training currently takes a long time, and our future work will also focus on improving efficiency.
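For readers unfamiliar with the metric, AP50 denotes average precision computed with an intersection-over-union (IoU) threshold of 0.5. The sketch below shows only the matching step that decides which detections count as true positives; the greedy matching rule and the function names are illustrative assumptions and do not reproduce the evaluation code used in the experiments.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_true_positives(detections, ground_truths, iou_thr=0.5):
    """Greedy matching in descending score order; each ground truth matches once."""
    matched = set()
    true_positives = 0
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            iou = box_iou(det["box"], gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= iou_thr:
            matched.add(best_idx)
            true_positives += 1
    return true_positives


# One ship, two overlapping detections: only the higher-scoring one counts.
ships = [(0, 0, 10, 10)]
dets = [
    {"box": (1, 0, 11, 10), "score": 0.9},
    {"box": (0, 0, 10, 10), "score": 0.8},
]
num_tp = count_true_positives(dets, ships)
```

Average precision is then obtained from the precision and recall values that result from sweeping the score threshold over such matched detections.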