1. Introduction

Hyperspectral imaging sensors capture spectral information well beyond the visible range. The resulting data consist of continuous spectral signatures that can be used to accurately identify a wide variety of surface materials on Earth [1]. With the development of hyperspectral sensors, the spatial and spectral resolution of the collected hyperspectral images (HSI) keeps increasing [2]. As a result, hyperspectral remote sensing has found important applications in many fields [3,4], including mining [5], astronomy [6], agriculture [7], environmental science [8,9], wildland fire tracking, and biological threat detection [10]. HSI classification is a key technique in hyperspectral remote sensing for Earth observation. Its task is to assign each pixel in an HSI to the class of the object it represents.

Early HSI classification mostly relied on traditional machine learning and statistical methods, such as k-nearest neighbors (KNN) [11], support vector machines (SVM) [12], distance classifiers [13], and the naive Bayes classifier [14]. These methods rely on manually designed features. However, the high dimensionality of hyperspectral images and their rich spatial information make it difficult for a single traditional classification model to achieve good results. Dimensionality reduction methods have therefore been used, such as principal component analysis (PCA) [15], linear discriminant analysis [16], and band selection [17]. A spatial-spectral fusion method based on conditional random fields is proposed in [18]. In another method, the original spectral features are combined with spatial features extracted by a Gabor filter bank to form a spatial-spectral fusion feature [19]. The above methods are trained directly on the original hyperspectral samples and require considerable prior knowledge and expert experience. Although handcrafted features based on prior knowledge perform well on some datasets, they are not learned from the data and the task themselves, so their generalization ability is limited [20].

Recently, classifiers based on deep learning have been widely used in HSI classification. Deep learning extracts deep features automatically, which is more convenient and effective. For example, the stacked autoencoder (SAE) [21] and the convolutional neural network (CNN) [22] have been used to extract spectral and spatial features. A 1D-CNN operates only on the spectral vectors, yet it still achieves good classification accuracy [23]. A 3D-CNN performs convolution on three-dimensional HSI patches, taking both the spatial and spectral dimensions into account [24]. Pu et al. proposed a spatial-spectral convolutional neural network to extract spatial-spectral features [25]. Ding et al. combined spectral information and spatial coordinates to generate probability maps that fuse spectral and spatial information [26]. More recently, the transformer has shown great potential in computer vision [27]. L. Sun, G. Zhao, Y. Zheng, and Z. Wu used a CNN to obtain HSI feature maps and then fed the serialized feature maps into a transformer module [28]. Because deep features are extracted adaptively from the training set, classifiers based on deep features generally outperform conventional ones [20]. However, they typically require a large number of labeled samples to optimize their parameters, and labeling samples is expensive in hyperspectral image processing. Consequently, hyperspectral classification often has to be performed with small sample sizes.

Hyperspectral image classification with small sample sizes involves semi-supervised learning, self-supervised learning, and sample augmentation methods. Both semi-supervised and self-supervised learning try to mine information from abundant unlabeled samples. DAE-GCN [29] uses a deep autoencoder to extract relevant features from the HSI and constructs a spectral-spatial graph to train a semi-supervised graph convolutional network. Zhao et al. [30] introduced a module to generate HSI sample pairs and used the available samples to train a self-supervised model based on a Siamese network; the labeled samples are then used to fine-tune the model. Li T. et al. [31] proposed a dual-branch residual neural network with a self-supervised pre-training method that recovers the information of intermediate unlabeled pixels from artificially partitioned image cubes; the pre-trained weights and a few labeled samples are then used to train the classifier. However, semi-supervised and self-supervised methods assume that enough unlabeled samples are available. In practice, unlabeled samples can also be insufficient for some hyperspectral classification tasks. Therefore, many data augmentation methods have been proposed to increase the number of samples. Early sample augmentation methods [32] generate new samples by rotating, adding noise to, and linearly combining existing samples, assuming that the new samples share the labels of the original ones. Wang C. et al. established a data mixture model to augment the labeled training set quadratically [33]. However, the paradigm of conventional data augmentation is relatively fixed, and it cannot guarantee that the generated samples follow the correct distribution or provide useful information.

Nowadays, many researchers use generative adversarial networks (GANs) to learn the implicit distribution of the original samples and then produce new samples from the same distribution by random sampling. A traditional GAN [34] generates samples of a single class only and cannot generate labeled samples of multiple classes, so its generated samples cannot effectively improve classification accuracy. To generate labeled samples, Odena et al. proposed the Auxiliary Classifier GAN (AC-GAN) [35]. To address the multi-class problem, its discriminator also outputs class-label probabilities, and each generated sample has a corresponding class label. The objective function of the discriminator thus accounts for both whether the data are real and whether they are correctly classified. This added classification branch helps alleviate the problem of HSI classification with limited training samples. Based on these ideas, Y. Zhan et al. designed a GAN with a one-dimensional spectral structure [36], trained it with unlabeled samples, and then converted the trained discriminator into a classification network. Chen et al. designed 1D and 3D GANs for HSI classification [37], in which the generated samples are combined with the real training samples as a new class and the discriminator is fine-tuned to improve the final classification performance. Multiscale conditional adversarial networks [38] use multiple scales and stages to generate samples in a coarse-to-fine fashion, achieving high-quality data augmentation with a small number of training samples. J. Feng et al. combined a self-supervised classifier with a GAN [39]: a pretext clustering task is designed to exploit abundant unlabeled samples, and the cluster representation learned from this task is then transferred. HyperViTGAN [40] was proposed to address class imbalance in HSI data; it employs an external semi-supervised classifier that shares the task of the GAN discriminator. To address vanishing gradients and mode collapse, Liang et al. proposed an average minimization loss constrained by unlabeled data for HSI [41]. Gulrajani et al. introduced WGAN-GP, which adds a gradient penalty to make GAN training smoother and more efficient [42]. We observe that when a GAN is used to generate hyperspectral data, its inputs are often only noise or noise plus a label, lacking guidance and constraints from prior knowledge. This leads to unstable, low-quality results on multi-class tasks. Besides, although the generator may fit the distribution of real data well, a trained network with fixed parameters cannot generate data with sufficient diversity under that distribution. Additionally, some generated samples may be far from the real samples, so not all generated samples are helpful for hyperspectral image classification.

In this article, we propose a new generative network named AC-WGAN-GP based on AC-GAN and WGAN-GP. The proposed framework uses prior knowledge as guidance and improves label reliability with a separate classifier. An online generation mechanism improves the diversity of the generated sample set, and a KNN-based selection algorithm chooses more reliable samples for training. The framework thus provides diverse, realistic labeled samples to expand the training set. The contributions of this work can be summarized as follows.

We construct a new generative network named AC-WGAN-GP to generate labeled samples of different classes. We design a new generator input in which PCA features and category information guide the generation process, while noise maintains the diversity of the samples. Because the task is to generate labeled samples of multiple classes, we add a separate classifier to strengthen the differences between samples of different classes.

We study an online generation mechanism. Instead of generating samples only after the network has converged, AC-WGAN-GP periodically keeps generated samples during training, which significantly improves the diversity of the generated sample set.

We design a lightweight sample selection method that efficiently selects, from the generated sample set, the samples most similar to real ones. The algorithm also smooths the labels to reduce the impact of label errors when training with cross-entropy loss. Finally, the augmented training set is built from the selected generated samples and the original real samples.

We organize the rest of this article as follows. Section 2 reviews a series of GAN models. Section 3 presents the proposed method in detail. Section 4 evaluates the proposed method and compares it with competing methods. Finally, Section 5 concludes the paper.

3. The Proposed Method

Figure 1 shows the overall framework of the AC-WGAN-GP-based HSI classification with a small sample size, which is composed of four parts: Gaussian-smoothing-based preprocessing, the AC-WGAN-GP network, online sample generation, and a KNN-based sample selection algorithm.

3.1. Smoothing-Based Preprocessing

The spectral vectors of neighboring pixels are assumed to be correlated because neighboring pixels are likely to belong to the same semantically homogeneous region.

This paper uses a Gaussian filter to exploit neighborhood information because the Gaussian filter is rotation invariant. With a fixed Gaussian kernel, each pixel is replaced by a weighted sum of itself and its neighbors, where the weights depend on the spatial distance between each neighboring pixel and the central pixel; this yields smoothed HSI patches. As data complexity increases, the networks used for learning usually need more complex structures. The smoothed HSI patches retain spatial information while suppressing harmful information such as noise, so we expect that the generator and discriminator can be designed with simpler structures when fed smoothed inputs. Gaussian smoothing can therefore be regarded as a form of spatial feature extraction.

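After the original hyperspectral image is normalized, a patch centred on each pixel $x_{i,j}$ is taken as the input of Gaussian filtering. The image can be expressed as $X \in \mathbb{R}^{M \times N \times H}$ (where $M$ is the width, $N$ is the height, and $H$ is the number of bands), and the smoothed pixel is:

$$\tilde{x}_{i,j} = \frac{\sum_{(p,q)} \exp\left(-\frac{(p-i)^2 + (q-j)^2}{2\sigma^2}\right) x_{p,q}}{\sum_{(p,q)} \exp\left(-\frac{(p-i)^2 + (q-j)^2}{2\sigma^2}\right)}$$

where $x_{p,q}$ is a pixel in $X$, $(p,q)$ is the spatial coordinate of that pixel, and $(i,j)$ is the spatial coordinate of the central pixel $x_{i,j}$. In practice, we restrict the sums to the $s \times s$ window centred on $x_{i,j}$, since pixels farther away contribute negligibly. Here $s$ is the window size, and the standard deviation $\sigma$ is tuned experimentally and selected from the set $\{1, 1.67, 2.33, 3, 3.67, 4.33, 5\}$.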
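A minimal NumPy sketch of the spatial Gaussian smoothing described above follows. It assumes the cube is stored as an `(M, N, H)` array, pads the image border by reflection (an assumption, since the border handling is not specified), and normalizes the weights inside the `size × size` window so that they sum to one. The function names are illustrative, not part of the original method.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Normalized 2-D Gaussian weights on a size x size window centred on the pixel."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the window has a centre pixel")
    r = size // 2
    offsets = np.arange(-r, r + 1)
    dp, dq = np.meshgrid(offsets, offsets, indexing="ij")
    w = np.exp(-(dp**2 + dq**2) / (2.0 * sigma**2))
    return w / w.sum()  # weights sum to 1, so a constant image is left unchanged


def smooth_hsi(cube: np.ndarray, size: int = 11, sigma: float = 1.0) -> np.ndarray:
    """Smooth an (M, N, H) cube spatially; each band is filtered independently."""
    w = gaussian_kernel(size, sigma)
    r = size // 2
    # Reflect-pad only the two spatial axes, never the spectral axis.
    padded = np.pad(cube, ((r, r), (r, r), (0, 0)), mode="reflect")
    M, N, _ = cube.shape
    out = np.zeros(cube.shape, dtype=float)
    # Accumulate one weighted, shifted copy of the image per kernel offset.
    for a in range(size):
        for b in range(size):
            out += w[a, b] * padded[a:a + M, b:b + N, :]
    return out
```

For example, `smooth_hsi(cube, size=11, sigma=1.0)` smooths every band of a normalized cube with an 11 × 11 window; bands are never mixed, so the spectral dimension `H` is preserved.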

3.2. AC-WGAN-GP

In the proposed method, AC-WGAN-GP is built on parts of the theories of AC-GAN and WGAN-GP. We design the generator input so that the generator is guided by handcrafted features and class labels, which reduces the difficulty of generating high-quality samples of different classes. We also modify the structure by adding an auxiliary classifier that forms a separate classifier $C$, and design a corresponding optimization function. As a result, fake labels are output in parallel with the generated samples, so fake labels and fake samples can be generated simultaneously from a limited number of real training samples to expand the training set. The architecture of AC-WGAN-GP is shown in Figure 2.

Generating samples of multiple classes with different distributions using a single network is difficult. Besides adding label information to the generator input, we believe that handcrafted features can also serve as prior information. The generator input therefore includes the principal components of a real sample pixel as an additional constraint to guide $G$: the noise vector is extended by extra dimensions to accommodate the principal components extracted from the real pixel $x$ and the one-hot label. With this input, the generator is guided to generate high-quality samples in a targeted manner. Specifically, we employ principal component analysis (PCA) to reduce the dimensionality of the HSIs. The Indian Pines dataset has 200 spectral bands, the Salinas dataset 204 (of its original 224), and the KSC dataset 176. We select the first 30 principal components (PCs), denoted $p$. The generator input thus has three parts: a Gaussian noise variable $z$, one-hot class information $c$, and the 30-dimensional PC vector $p$ of a single pixel. In this way, prior knowledge of the labels and samples is added on top of the conventional Gaussian noise. The generated sample can be expressed as $G(z, c, p)$.
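The construction of the generator input $[z \mid c \mid p]$ can be sketched as follows. PCA is computed here with an SVD in NumPy; the noise dimension of 100 and the helper names `fit_pca` and `generator_input` are hypothetical choices for illustration, since the paper does not specify its PCA implementation or noise size.

```python
import numpy as np


def fit_pca(pixels: np.ndarray, n_components: int = 30):
    """Return the mean spectrum and the top principal axes of (num_pixels, H) data."""
    mean = pixels.mean(axis=0)
    # Rows of vt are principal axes ordered by decreasing explained variance.
    _, _, vt = np.linalg.svd(pixels - mean, full_matrices=False)
    return mean, vt[:n_components]


def generator_input(pixels, labels, n_classes, mean, axes, noise_dim=100, rng=None):
    """Concatenate Gaussian noise z, one-hot labels c and the PCs p of real pixels."""
    rng = np.random.default_rng() if rng is None else rng
    z = rng.standard_normal((len(pixels), noise_dim))
    c = np.eye(n_classes)[labels]      # one-hot class information
    p = (pixels - mean) @ axes.T       # principal components of each real pixel
    return np.concatenate([z, c, p], axis=1)
```

With 16 classes and 30 PCs, each row of the result has `noise_dim + 16 + 30` entries; only the noise part changes between calls for the same pixel and label.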

In our AC-WGAN-GP, an independent classifier $C$ outputs the fake one-hot labels. Refs. [39,40] show how an independent classifier can be combined with a GAN. The generator in AC-WGAN-GP must generate samples of different classes, which is difficult to control, so class-correctness constraints must be added to the objective function. To reinforce class-conditional generation, we separate out a classifier $C$ whose loss forms part of the generator's loss function. Besides, it is difficult for the discriminator to distinguish real from fake samples while also outputting the class of the generated samples. $C$ therefore serves two purposes. First, $C$ efficiently generates the labels of the fake samples. Second, when AC-WGAN-GP is optimized, the loss of $C$ ensures that the generated samples belong to the class given in the input, thereby increasing the gap between samples of different classes. A CNN-based classifier is suitable for this role. The input of $C$ is the same as that of the discriminator. $C$ is trained with real samples and their labels and then predicts the labels of fake samples. The cross-entropy loss of $C$ is formally unified with the loss functions of the generator and discriminator, so we treat AC-WGAN-GP as a single network during training.

The input of the discriminator $D$ consists of real single pixels $x$ and generated fake samples $G(z,c,p)$ labeled as $c$, and the output of $D$, $P(S \mid X)$, indicates whether the input is real or fake. The function of the discriminator is thus to judge real and fake samples. $D$ and $C$ are designed separately so that each part of AC-WGAN-GP is assigned a specific task.

In the above framework, following the Deep Convolutional Generative Adversarial Network (DCGAN) [45], $G$ adopts a fractionally-strided convolutional network, while $D$ and $C$ adopt standard CNNs. Batch normalization layers are used in the generator $G$ and the discriminator $D$ to normalize features, which speeds up and stabilizes training. The discriminator uses LeakyReLU instead of ReLU activations to avoid sparse gradients, and no activation function is applied at its last layer. The generator uses ReLU activations, with tanh only in the output layer. The classifier $C$ consists of a convolutional layer and a fully connected layer, followed by a softmax that outputs the class probabilities. During training, following the idea of fixing some parts while training another, the parameters of two of the three networks $G$, $D$, and $C$ are fixed while the remaining one is optimized, and the networks are updated alternately in this way until training is complete. The specific network parameters are given in Section 4. The input to the generator is our designed noise-label-principal-component vector. From the whole network, we can take the outputs we need, such as fake pixel vectors from $G$ or fake labels from $C$.

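After designing the architecture of AC-WGAN-GP, we propose a suitable optimization function. Multi-class generation can be realized by the Auxiliary Classifier GAN (AC-GAN) introduced by Odena et al. [35], whose loss function we take as a prototype. The objective contains the log-likelihood of the correct data source, $L_S$, and the log-likelihood of the correct class, $L_C$. The parameters of $D$ are updated by maximizing $L_S + L_C$, while those of $G$ are updated by maximizing $L_C - L_S$, where

$$L_S = \mathbb{E}\left[\log P(S = \text{real} \mid X_{\text{real}})\right] + \mathbb{E}\left[\log P(S = \text{fake} \mid X_{\text{fake}})\right]$$

$$L_C = \mathbb{E}\left[\log P(C = c \mid X_{\text{real}})\right] + \mathbb{E}\left[\log P(C = c \mid X_{\text{fake}})\right]$$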

$L_S$ and $L_C$ have a similar structure, and both are cross-entropy losses. The two terms of $L_S$ judge the real samples and the fake samples, respectively, where $P(S \mid X)$ is the judgement of the discriminator; discrimination can thus be viewed as a binary classification problem. Likewise, the two terms of $L_C$ classify the real and fake samples, where $P(C \mid X)$ is the label distribution output by the classifier and $c$ is the ground-truth label.
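For reference, the AC-GAN objectives $L_S$ and $L_C$ can be estimated on a mini-batch as below. This sketch assumes the discriminator already outputs the probability that each sample is real and a class-probability matrix; the small constant `eps` only guards against `log(0)`, and the function name is illustrative.

```python
import numpy as np


def acgan_objectives(p_src_real, p_src_fake, p_cls_real, p_cls_fake,
                     y_real, y_fake, eps=1e-12):
    """Mini-batch estimates of L_S (source) and L_C (class) log-likelihoods.

    p_src_*: P(S = real | X), shape (B,)
    p_cls_*: P(C | X), shape (B, K)
    y_*:     integer class labels, shape (B,)
    """
    # L_S: log-likelihood of the correct source (real for real, fake for fake).
    l_s = np.mean(np.log(p_src_real + eps)) + np.mean(np.log(1.0 - p_src_fake + eps))
    # L_C: log-likelihood of the correct class for real and fake samples.
    l_c = (np.mean(np.log(p_cls_real[np.arange(len(y_real)), y_real] + eps))
           + np.mean(np.log(p_cls_fake[np.arange(len(y_fake)), y_fake] + eps)))
    return l_s, l_c


# D is trained to maximize l_s + l_c; G is trained to maximize l_c - l_s.
```

A perfect discriminator (probability 1 on the correct source and class) gives $L_S = L_C = 0$, the maximum of both log-likelihoods.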

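Based on the above equations and on WGAN-GP [42], the loss minimized by the discriminator during training is

$$L_D = \mathbb{E}_{\tilde{x} \sim \mathbb{P}_g}\left[D(\tilde{x})\right] - \mathbb{E}_{x \sim \mathbb{P}_r}\left[D(x)\right] + \lambda\, \mathbb{E}_{\hat{x} \sim \mathbb{P}_{\hat{x}}}\left[\left(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\right)^2\right]$$

where $\hat{x} \sim \mathbb{P}_{\hat{x}}$ is sampled uniformly along straight lines between pairs of points drawn from the data distribution $\mathbb{P}_r$ and the generator distribution $\mathbb{P}_g$ in sample space, $x$ is a random variable following the distribution $\mathbb{P}_r$ of real samples, $\tilde{x}$ is a random variable following the distribution $\mathbb{P}_g$ of fake samples, $\nabla$ denotes the gradient, and $\lambda$ is the gradient penalty coefficient. The first and second terms judge whether a sample is real or fake, and the third term is the gradient penalty.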

As is standard, the class cross-entropy loss serves as the objective function of the classifier and also forms part of the objective function of the generator:

$$L_{\text{cls}} = -\mathbb{E}\left[\sum_{k=1}^{K} y_k \log \hat{y}_k\right]$$

where $\hat{y}$ is the label distribution output by the forward pass of the network, $y$ is the ground-truth label of the training samples, and $K$ is the number of classes.
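The WGAN-GP discriminator loss (Wasserstein terms plus gradient penalty) and the classifier cross-entropy can be sketched in NumPy as follows. Because NumPy has no automatic differentiation, this sketch assumes the caller supplies the critic's input gradient through a hypothetical `critic_grad` callback; a real implementation would obtain it from the training framework. The penalty weight `lam=10.0` is the default of the WGAN-GP paper [42] and is an assumption here, since the value used in AC-WGAN-GP is not stated.

```python
import numpy as np


def wgan_gp_critic_loss(critic, critic_grad, x_real, x_fake, lam=10.0, rng=None):
    """E[D(fake)] - E[D(real)] + lam * E[(||grad D(x_hat)||_2 - 1)^2]."""
    rng = np.random.default_rng() if rng is None else rng
    # x_hat is sampled uniformly on straight lines between real/fake pairs.
    t = rng.uniform(size=(len(x_real), 1))
    x_hat = t * x_real + (1.0 - t) * x_fake
    grad_norm = np.linalg.norm(critic_grad(x_hat), axis=1)
    penalty = np.mean((grad_norm - 1.0) ** 2)
    return np.mean(critic(x_fake)) - np.mean(critic(x_real)) + lam * penalty


def cross_entropy(probs, targets, eps=1e-12):
    """Mean categorical cross-entropy; targets may be one-hot or smoothed labels."""
    return -np.mean(np.sum(targets * np.log(probs + eps), axis=1))
```

A linear critic $D(x) = w^\top x$ with $\lVert w \rVert_2 = 1$ has unit gradient norm everywhere and therefore incurs zero penalty, which makes a convenient sanity check.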

The objective function of the generator consists of two parts. One part comes from the discriminator $D$ and ensures that the discriminator does not recognize the samples produced by the generator as fake. The other part comes from the classifier $C$ and ensures that the generated samples belong to the corresponding class $c$ as far as possible; it reinforces the connection between the class information and the generated samples. The objective function of the generator $G$ is the sum of these two parts:

$$L_G = -\mathbb{E}\left[D(G(z,c,p))\right] - \mathbb{E}\left[\sum_{k=1}^{K} c_k \log C(G(z,c,p))_k\right]$$

where $C(\cdot)_k$ is the probability that the classifier assigns to class $k$ among the $K$ classes.

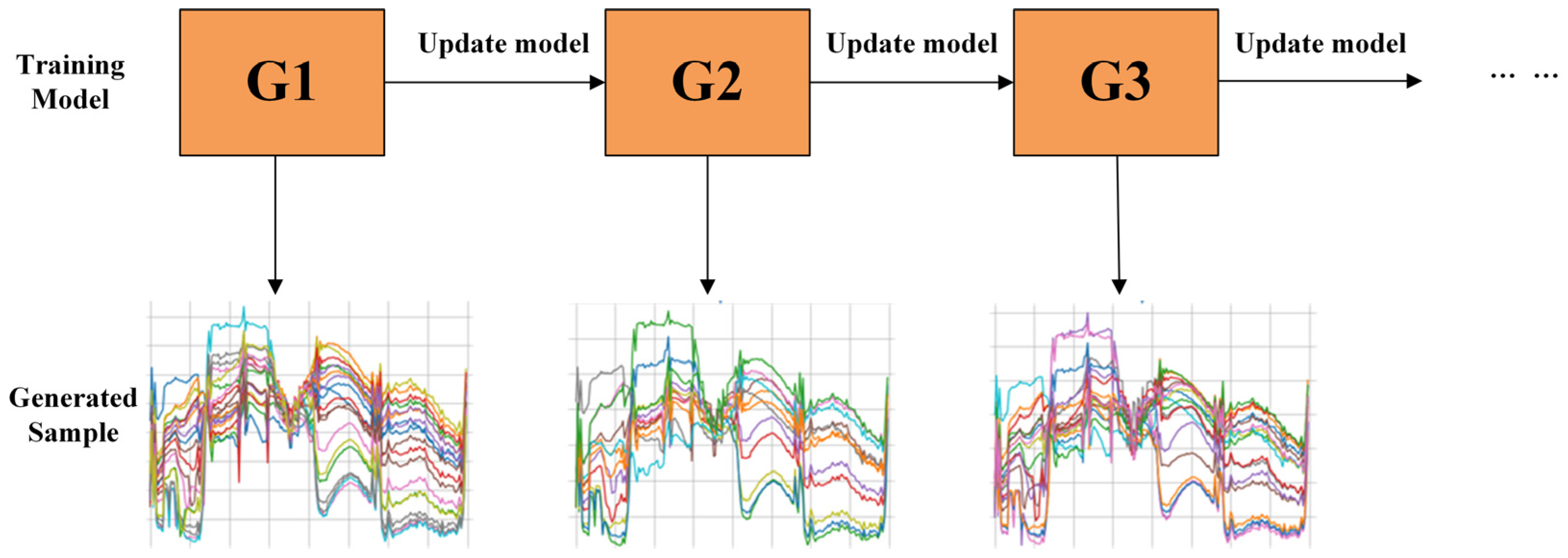
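The generator objective, an adversarial term from $D$ plus the classifier cross-entropy with respect to the intended class $c$, can be written as a short NumPy function. The equal weighting of the two terms follows the text ("the sum of these two parts"); the function name and argument layout are illustrative assumptions.

```python
import numpy as np


def generator_loss(critic_fake, class_probs_fake, onehot_c, eps=1e-12):
    """L_G = -E[D(G(z, c, p))] + cross-entropy(C(G(z, c, p)), c)."""
    adversarial = -np.mean(critic_fake)  # raising D's score on fake samples lowers L_G
    class_term = -np.mean(np.sum(onehot_c * np.log(class_probs_fake + eps), axis=1))
    return adversarial + class_term
```

When $C$ assigns the intended class with probability 1, only the adversarial term remains; any class confusion adds a positive cross-entropy penalty.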

3.3. Online Sample Generation

Generally, after a GAN is trained, the parameters of each network are fixed, and the required input data are then fed to the generator to produce fake samples. Although the trained network can fit the distributions of the training samples of different classes and the input Gaussian noise $z$ is random, the network parameters and the other inputs are fixed, so the diversity of the fake data generated by AC-WGAN-GP is poor. We therefore design a GAN-friendly mechanism named online sample generation.

First, AC-WGAN-GP is trained with the method described in Section 3.2 while we monitor the loss of the network. Once the network has partially converged, the online generation strategy starts: although the network is not yet fully trained and the loss is still decreasing, we begin collecting fake samples and labels at a chosen epoch. AC-WGAN-GP then continues training to optimize its parameters, and after a certain number of further epochs it generates samples online again. Finally, the samples generated at every online step are collected. To ensure sample diversity and balance across classes, we keep as many online-generated samples, covering all classes, as memory allows. This also reduces the effort needed to tune the parameters of the online generation mechanism. As shown in Figure 3, online sample generation exploits many intermediate models to generate samples with higher diversity. The resulting abundant fake samples and labels are then filtered in the next step.
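The online generation schedule can be sketched as a training loop that snapshots generated samples periodically instead of once after convergence. `train_one_epoch` and `generate` are hypothetical callbacks standing in for the real AC-WGAN-GP training step and for sampling from $G$ (with labels from $C$); `start_epoch` and `interval` stand for the unspecified "certain epoch" and "certain number of training epochs" in the text.

```python
from typing import Callable, Tuple

import numpy as np


def online_generation(train_one_epoch: Callable[[int], None],
                      generate: Callable[[], Tuple[np.ndarray, np.ndarray]],
                      n_epochs: int, start_epoch: int, interval: int):
    """Train for n_epochs and collect generated samples every `interval` epochs
    from `start_epoch` on, using the network parameters at that moment."""
    samples, labels = [], []
    for epoch in range(n_epochs):
        train_one_epoch(epoch)
        if epoch >= start_epoch and (epoch - start_epoch) % interval == 0:
            x, y = generate()  # parameters keep changing between snapshots
            samples.append(x)
            labels.append(y)
    if not samples:
        raise ValueError("no snapshot taken; check start_epoch and n_epochs")
    return np.concatenate(samples), np.concatenate(labels)
```

Each snapshot comes from a slightly different model, which is what gives the pooled set its extra diversity compared with sampling repeatedly from one frozen generator.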

3.4. Sample Selection Algorithm Based on KNN

Online sample generation ensures the diversity of the generated samples, but owing to the nature of GANs, not all generated samples are guaranteed to be of good quality and similar to real samples. An additional selection method is therefore needed to keep the generated samples that are close to real samples and can thus improve classification accuracy. The algorithm includes two steps: sample selection and label smoothing.

To keep the number of parameters and the running time of the whole framework reasonable, a simple and effective method is needed. First, the set of original real data $D$ is divided into a training set $A$ and a temporary set $B$. A clustering algorithm divides $B$ into $N$ clusters. The central sample of each cluster and $M$ randomly chosen samples of $B$ around it are then taken to form a mini set. We employ four clustering algorithms: k-means, DBSCAN, the EM algorithm, and mean shift. We repeat the above operations for each algorithm and take the union of the mini sets to form $B'$. Then, for each sample in $B'$, KNN selects its $k$ nearest samples from the generated sample set $G$. The selected generated samples constitute the final generated sample set $S$. The fake samples selected in this way are representative and similar to the real samples.
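A simplified sketch of the selection step follows. For brevity it uses only a small NumPy k-means as the clustering algorithm (the method described above also uses DBSCAN, EM, and mean shift and takes the union of their mini sets), treats the member closest to each cluster centre as the central sample, adds up to `M` random members, and keeps the `k` nearest generated samples of every anchor. All function names are hypothetical.

```python
import numpy as np


def kmeans(X, n_clusters, n_iter=20, rng=None):
    """Plain Lloyd iterations; returns cluster centres and assignments."""
    rng = np.random.default_rng() if rng is None else rng
    centres = X[rng.choice(len(X), n_clusters, replace=False)].astype(float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=-1)
        assign = dist.argmin(axis=1)
        for j in range(n_clusters):
            if np.any(assign == j):
                centres[j] = X[assign == j].mean(axis=0)
    return centres, assign


def anchor_set(B, n_clusters, M, rng):
    """Mini set: central sample of each cluster plus up to M random members."""
    centres, assign = kmeans(B, n_clusters, rng=rng)
    chosen = set()
    for j in range(n_clusters):
        members = np.flatnonzero(assign == j)
        if members.size == 0:
            continue
        d = ((B[members] - centres[j]) ** 2).sum(axis=1)
        chosen.add(int(members[d.argmin()]))  # sample closest to the centre
        extra = rng.choice(members, size=min(M, members.size), replace=False)
        chosen.update(int(i) for i in extra)
    return B[sorted(chosen)]


def knn_select(generated, anchors, k):
    """Indices of generated samples that are among the k nearest of some anchor."""
    d = ((anchors[:, None, :] - generated[None, :, :]) ** 2).sum(axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    return np.unique(nearest)
```

Generated samples far from every real anchor are never among the nearest neighbours and are therefore discarded, which is the filtering effect the selection step relies on.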

The samples in $S$ come from the overall generated distribution. Each sample has its own label, but it may nevertheless mix information from other classes. In deep learning, when cross-entropy is computed with one-hot labels, only the loss of the correct class (the class coded as 1) is considered, while the wrong classes (coded as 0) are ignored. This may lead to overfitting, gradient explosion, or vanishing gradients [46]. To distinguish the labels of generated samples from those of real samples and to reduce the negative impact of labeling errors during training, we use label smoothing regularization (LSR) [47] to take the distribution over the wrong classes into account. We apply LSR to the labels of the fake sample set. Let $y$ denote a generated one-hot label, $\epsilon \in (0, 1)$ the smoothing parameter, and $K$ the number of classes. The smoothing process is:

$$y^{\text{LS}} = (1 - \epsilon)\, y + \frac{\epsilon}{K}$$

After this operation, the smoothed labels improve the generalization ability of the network.
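The LSR formula is a one-line operation on the fake labels. A NumPy sketch, using the smoothing parameter 0.1 reported in the experimental settings as the default (the function name is illustrative):

```python
import numpy as np


def smooth_labels(onehot: np.ndarray, epsilon: float = 0.1) -> np.ndarray:
    """Label smoothing regularization: (1 - epsilon) * y + epsilon / K."""
    K = onehot.shape[-1]  # number of classes
    return (1.0 - epsilon) * onehot + epsilon / K
```

Each smoothed row still sums to one; with $K = 4$ and $\epsilon = 0.1$, the label [0, 1, 0, 0] becomes [0.025, 0.925, 0.025, 0.025].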

After applying the algorithm, we obtain the final fake hyperspectral samples and their labels to augment the limited training set, while the quality and diversity of the generated samples are guaranteed to a certain extent. Algorithm 1 summarizes the selection of generated samples and label smoothing.

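Algorithm 1. Samples selection and label smoothing

Input: generated sample set $G$, dataset $D$, number of categories $N$, list of clustering algorithms $L$ (k-means, DBSCAN, EM, and mean shift), hyperparameter $M$ for the size of the random set, hyperparameter $k$ for KNN
Output: selected samples and smoothed labels

Step 1: Randomly divide $D$ into a training set $A$ and a temporary set $B$.
Step 2: Union the clustering sets. For each clustering algorithm $l \in L$, do:
    cluster $B$ into $N$ clusters with $l$;
    take the central sample of each cluster and $M$ random samples around it as the mini set $B_l$.
Step 3: $B' = \bigcup_{l} B_l$, $S = \emptyset$.
Step 4: For each sample $b \in B'$, do:
    select the $k$ nearest samples to $b$ in $G$ with KNN and add them to $S$.
Step 5: Smooth the labels of $S$ with LSR and output $S$ with the smoothed labels.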

4. Experiments and Result Analysis

In this section, we first introduce basic information such as the datasets, training samples, and evaluation metrics, and then give the detailed experimental settings. The third subsection analyzes the quality of the pixels generated by the proposed method. Next, we study the effect of the ratio of real to generated pixels on the classification results and perform ablation experiments on the final sample selection module. Finally, we compare AC-WGAN-GP with the CNN classifier against classifiers based on traditional methods, convolutional networks, and GANs, demonstrating the effectiveness of the proposed method for hyperspectral classification with small samples. We implemented AC-WGAN-GP with the TensorFlow framework on a PC server with two NVIDIA GTX 1080 Ti GPUs and 22 GB of memory. The average times for training AC-WGAN-GP and for generating and classifying are 210 min and 15 s, respectively.

4.1. Hyperspectral Datasets

In this experiment, three popular hyperspectral datasets are used: the Indian Pines dataset, the Salinas dataset, and the Kennedy Space Centre (KSC) dataset.

Indian Pines: The Indian Pines dataset is a hyperspectral image of agricultural and forest areas in northwestern Indiana, USA, collected by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). The image comprises $145 \times 145$ pixels with a spatial resolution of 20 m/pixel. The dataset originally has 220 spectral bands; after removing 20 bands affected by severe water absorption, we conduct experiments on the remaining 200 bands. For this dataset, we consider 16 classes of interest, excluding background pixels.

Figure 4a shows the three-band false color image and the ground reference map of the Indian Pines image.

Salinas: The Salinas data were also acquired by the AVIRIS sensor, over the Salinas Valley, a highly productive agricultural region in California, USA. The image has a spatial resolution of 3.7 m and originally had 224 bands, of which we use only 204 for classification. We exclude the 20 water absorption bands, namely bands 108–112, 154–167, and 224. The image has a size of $512 \times 217$ pixels, which are divided into 16 classes.

Figure 4b shows the three-band false color image and ground reference map of the Salinas image.

KSC: NASA's AVIRIS sensor collected data over the Kennedy Space Centre (KSC) in Florida, USA, in 1996. The sensor acquired 224 bands, each 10 nm wide, with center wavelengths ranging from 400 nm to 2500 nm. The image consists of $512 \times 614$ pixels with a spatial resolution of 18 m/pixel. After removing the water absorption and low-SNR bands, 176 bands are used for analysis. For classification, we define 13 classes representing the various land cover types at this site.

Figure 4c shows the three-band false color image and the ground reference map of the KSC image.

For each of the three datasets, we divide the original dataset into a training set and a testing set. The training set consists of 200 randomly sampled pixels, i.e., 2.0%, 1.0%, and 3.8% of the samples of the three datasets, respectively, which satisfies the requirements of few-shot experiments. The remaining samples form the testing set.

Tables 1, 2 and 3 show the legend of each category. Tables 4, 5 and 6 present the number of training samples selected from each dataset and the total number of samples.

4.2. Experimental Setting

The training and testing samples used in the experiments are single pixels. Each pixel corresponds to a unique label and can be used as a sample to train AC-WGAN-GP and the CNN classifier. The number of classes in the dataset is denoted by $n$. All HSI data are normalized to $[-1, 1]$ at the beginning of the experiment. We repeat each experiment 10 times with randomly selected training and testing sets and report the average accuracy. For quantitative evaluation, we use the popular metrics of overall accuracy (OA), average accuracy (AA), and the kappa coefficient ($\kappa$), defined as follows.

(1) OA: the proportion of correctly classified samples among all samples,

$$\mathrm{OA} = \frac{1}{N} \sum_{i=1}^{n} N_{ii}$$

where $N$ is the total number of labeled samples, $N_{ii}$ is the number of class-$i$ samples classified as class $i$, and $n$ is the total number of categories.

(2) AA: the mean of the per-class percentages of correctly classified samples,

$$\mathrm{AA} = \frac{1}{n} \sum_{i=1}^{n} \frac{N_{ii}}{N_i}$$

where $n$ is the total number of categories, $N_{ii}$ is the number of samples of category $i$ classified as category $i$, and $N_i$ is the number of samples of category $i$.

(3) Kappa: the kappa coefficient measures inter-rater agreement for categorical variables, here between the classification result and the ground truth,

$$\kappa = \frac{\mathrm{OA} - p_e}{1 - p_e}, \qquad p_e = \frac{1}{N^2} \sum_{i=1}^{n} N_{i+} N_{+i}$$

where $N_{i+}$ and $N_{+i}$ denote, respectively, the total number of samples whose true category is $i$ and the number of samples predicted as category $i$.
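The three metrics can all be computed from a confusion matrix. The NumPy sketch below follows the formulas above; the function names are illustrative, and it assumes every class occurs at least once in the ground truth (otherwise the per-class accuracy in AA divides by zero).

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] counts samples of true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def oa_aa_kappa(cm):
    N = cm.sum()
    oa = np.trace(cm) / N                                 # sum of N_ii over N
    aa = np.mean(np.diag(cm) / cm.sum(axis=1))            # mean per-class accuracy
    pe = np.sum(cm.sum(axis=1) * cm.sum(axis=0)) / N**2   # chance agreement p_e
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa
```

For true labels [0, 0, 1, 1] and predictions [0, 1, 1, 1], OA and AA are both 0.75, $p_e = 0.5$, and $\kappa = 0.5$.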

The Gaussian smoothing kernel has a window size of 11 and a sigma of 0.1. The network structures and parameters of the generator, discriminator, and classifier in AC-WGAN-GP are described in detail in Table 7, where Deconv denotes a fractionally-strided convolutional layer, Conv a convolutional layer, Fn a fully connected layer, BN a batch normalization layer, Stride the step size of the convolution, and Padding the padding mode. The input of the generator $G$ is a vector composed of noise, a one-hot label, and 30-dimensional principal components. Each sample generated by $G$ is a single pixel with $H$ spectral bands. The inputs of the discriminator $D$ are real pixels $x$ and generated pixels $G(z,c,p)$, and its output is a scalar indicating whether the input is a real or a fake sample. The classifier $C$ has the same input as $D$, and its output is the probability that a pixel belongs to each category. The mini-batch size is set to 64 for training both AC-WGAN-GP and the CNN, the learning rate ranges from 0.1 to 0.0001, and the label smoothing parameter is 0.1.

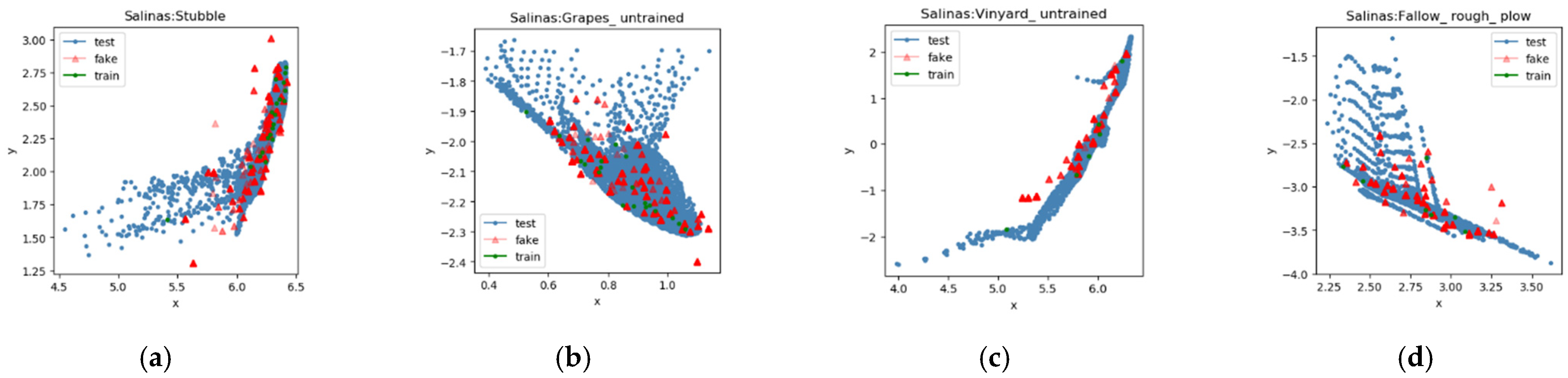

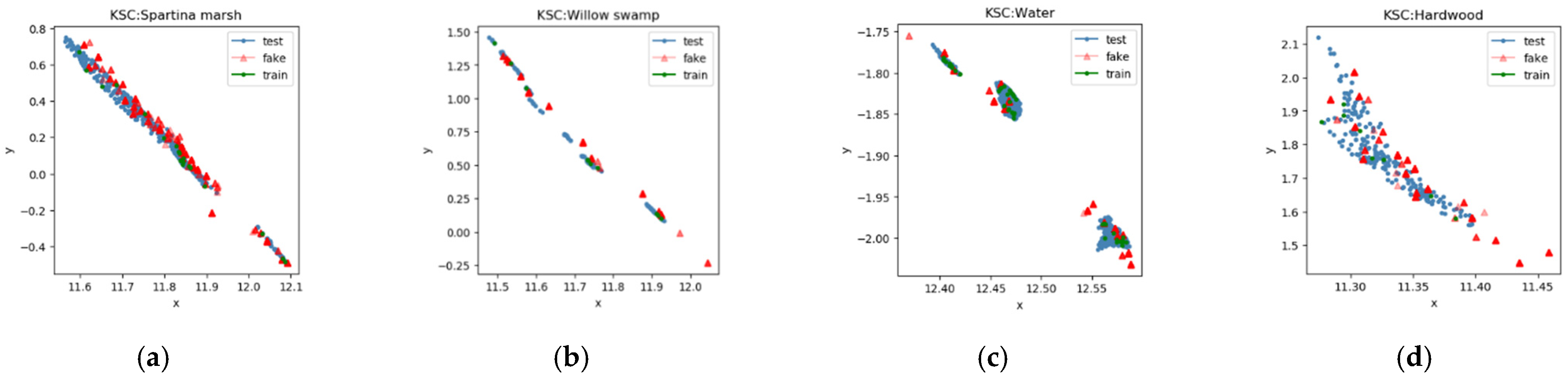

4.3. Analysis of Generated Samples

Before using the generated samples for classification, we check whether their distribution is consistent with that of the real samples. Each class has its own distribution, and our method generates labeled samples. The generated samples of a given category should therefore follow the same distribution as the real samples of that category. To verify this, we first extract the principal components of the generated and real samples with PCA, and then keep the first two principal components. In Figure 5, Figure 6 and Figure 7, red triangles represent generated samples, blue points represent real test samples, and green points represent real training samples.

The figures show that, for most categories, AC-WGAN-GP generates samples whose distribution in the feature space is similar to that of the original samples. However, for classes with too few real training samples, the generated and real distributions show some differences. For example, for the Grass-pasture-mowed class in the Indian Pines dataset, the real and fake samples differ in both number and distribution. The figures also suggest that AC-WGAN-GP imitates simple distributions well but sometimes fails to fit complex ones.

There are two reasons for this difference. First, too few training samples are fed to the AC-WGAN-GP network, so training does not fully converge. Second, the randomly selected training samples are not evenly distributed within the class and therefore cannot represent its overall distribution. For these reasons, the GAN performs poorly when generating samples for classes with few training samples, which is a common problem of GANs. Overall, the class-conditional distributions generated by AC-WGAN-GP are visually consistent with the real ones.
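The PCA projection used for Figure 5 to Figure 7 can be sketched as follows for the samples of one class. This NumPy version rests on an assumption the text does not settle: the PCA basis is fitted on the pooled real and generated samples, so that both sets share one coordinate system. The function name is hypothetical.

```python
import numpy as np


def project_first_two_pcs(real: np.ndarray, generated: np.ndarray):
    """Project real and generated spectra (n_samples x n_bands) onto the first two PCs.

    The PCA basis is fitted on the pooled samples so both sets share one coordinate system.
    """
    pooled = np.vstack([real, generated])
    mean = pooled.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(pooled - mean, full_matrices=False)
    basis = vt[:2].T                      # n_bands x 2
    return (real - mean) @ basis, (generated - mean) @ basis


rng = np.random.default_rng(1)
real_2d, gen_2d = project_first_two_pcs(rng.normal(size=(40, 10)),
                                        rng.normal(size=(30, 10)) + 0.5)
print(real_2d.shape, gen_2d.shape)
```

The two returned arrays can be scattered with different markers, one call per class, to reproduce plots of the kind shown in the figures.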

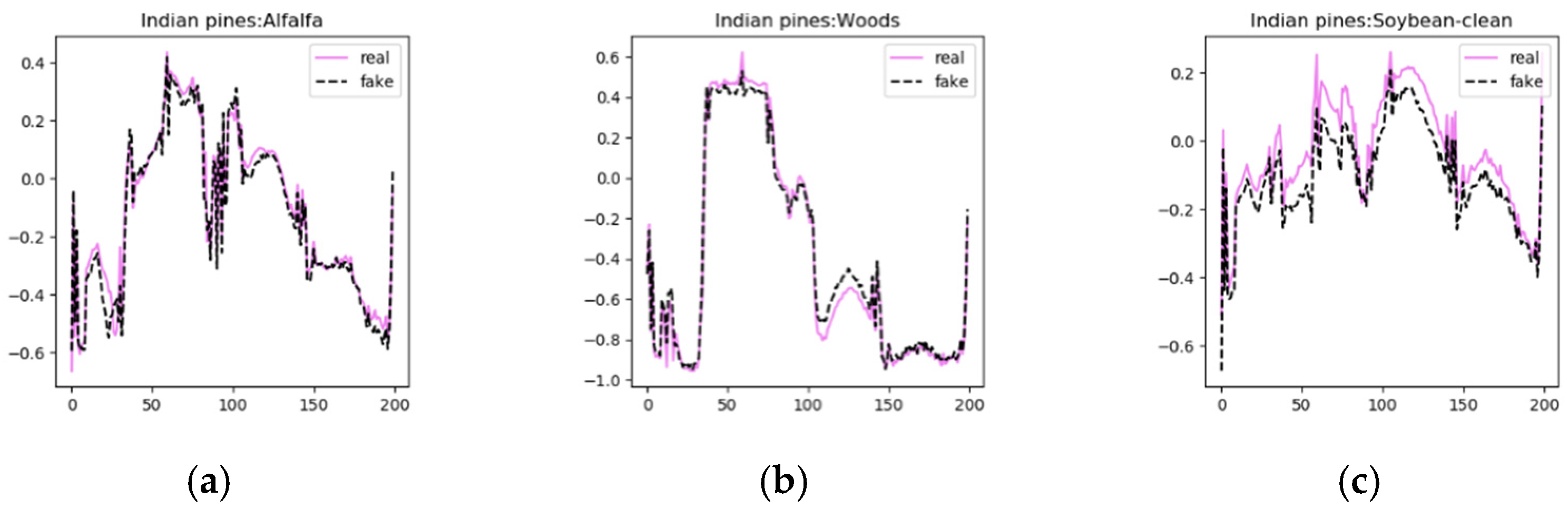

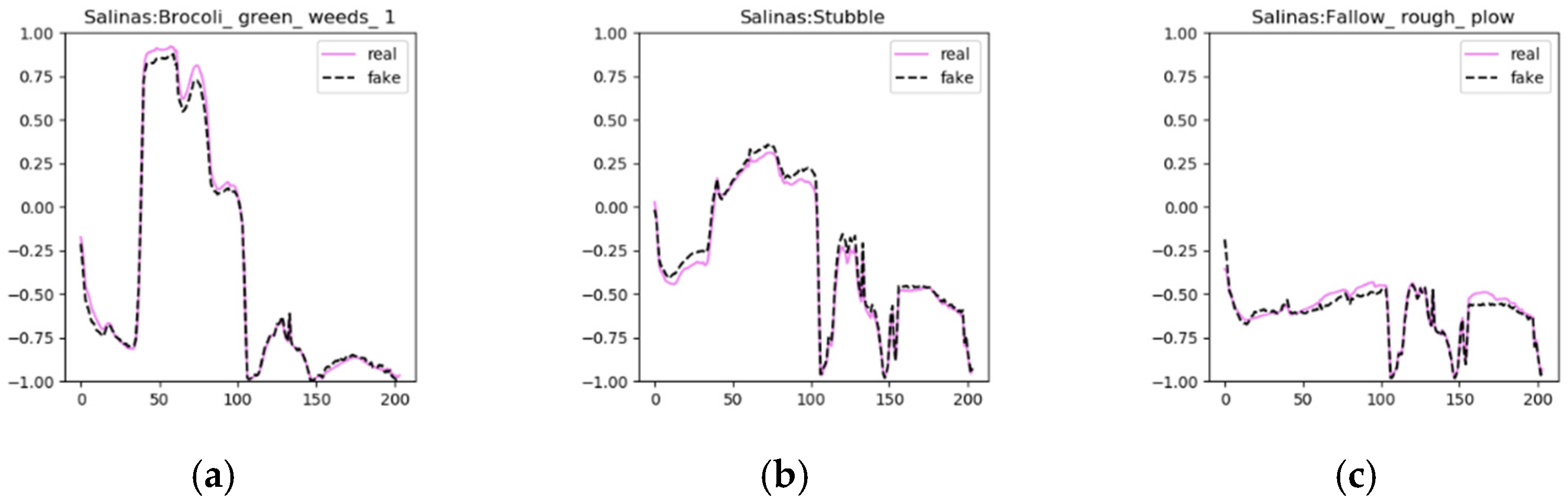

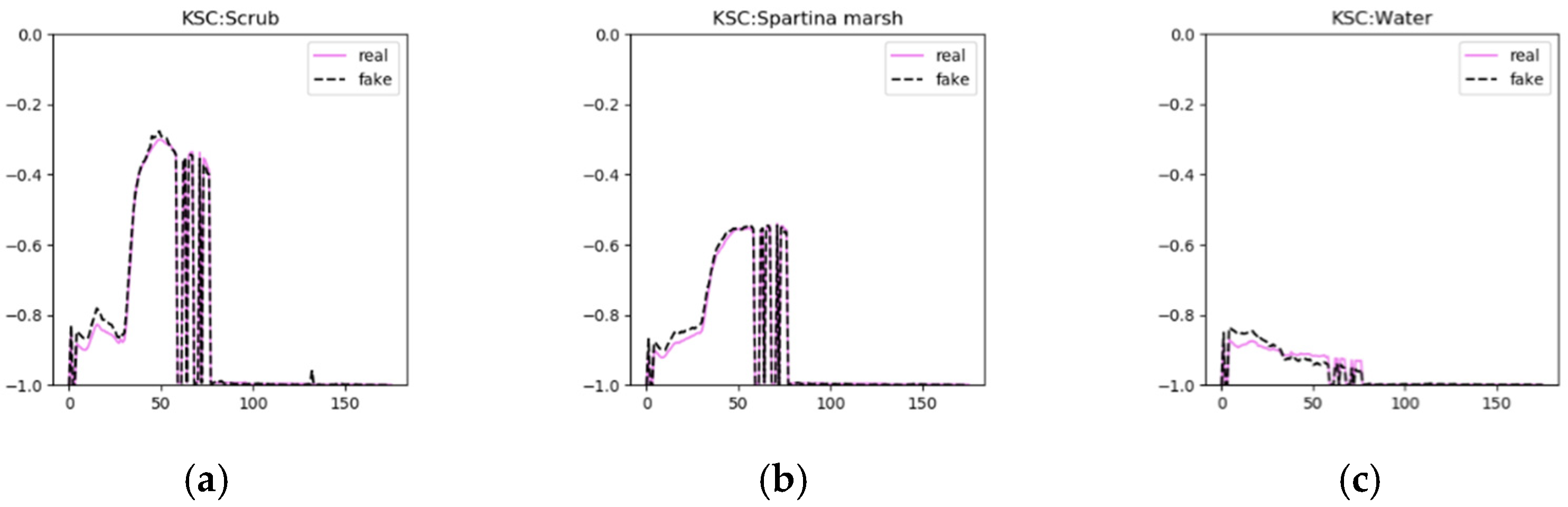

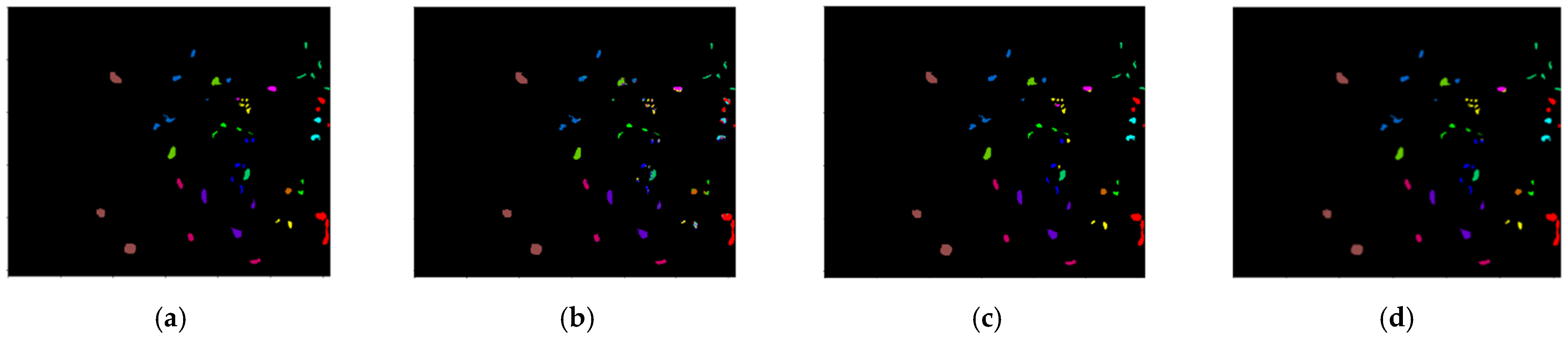

In the visualization experiment, generated (fake) and real samples of three classes were selected from the three datasets for display, as shown in Figure 8, Figure 9 and Figure 10. Solid red lines represent real samples, and dotted black lines represent generated samples. The figures indicate that the generated samples are very similar to the real samples of the corresponding class but not identical to them. This meets two requirements: the generated samples are consistent with the real sample distribution, and they retain a certain diversity. The generator learns the distinct characteristics of each class and generates different samples according to the class. From limited samples, AC-WGAN-GP thus yields a model that fits multiple class distributions and compensates for the lack of diversity in the sample space.

Beyond the per-class visual analysis of distribution differences, this subsection also gives numerical results on whether the real and generated samples are consistent in their global distribution. A 1-nearest-neighbor (1-NN) classifier is used to evaluate whether the two distributions are consistent. Given the two sets of samples, the real samples are labeled as positive and the generated samples as negative. The 1-NN classifier is built from both sets. Each sample is then used as a test sample and labeled by its nearest neighbor among the remaining samples. The resulting classification accuracy is reported as the Transfer (T) accuracy.

When the number of samples is very large, the two distributions are consistent, and the generated samples are not copies of the real ones, the T accuracy of the 1-NN classifier should be close to 50%. In contrast, when the generated samples merely replicate the real samples, the T accuracy is 0%. When the two distributions are completely separated, it is 100%.
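The T accuracy described above corresponds to a leave-one-out 1-NN two-sample test. The sketch below is a minimal NumPy illustration. It assumes Euclidean distance, since the metric is not stated in the text, and the function name is hypothetical. It computes all pairwise distances, so it suits only moderate sample counts.

```python
import numpy as np


def one_nn_two_sample_accuracy(real: np.ndarray, generated: np.ndarray) -> float:
    """Leave-one-out 1-NN accuracy for separating real (label 1) from generated (label 0)."""
    x = np.vstack([real, generated]).astype(float)
    y = np.concatenate([np.ones(len(real)), np.zeros(len(generated))])
    # Pairwise squared Euclidean distances (n x n).
    d = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d, np.inf)          # a sample may not be its own neighbor
    pred = y[d.argmin(axis=1)]           # label of the nearest other sample
    return float((pred == y).mean())


rng = np.random.default_rng(0)
real = rng.normal(size=(50, 5))
print(one_nn_two_sample_accuracy(real, real.copy()))   # exact copies
print(one_nn_two_sample_accuracy(real, real + 100.0))  # disjoint sets
```

Exact copies of the real samples give 0.0, and two well-separated sets give 1.0. These match the two extreme cases discussed above.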

Table 8 reports the T accuracy on the three HSI datasets, together with the average spectral distance between the generated and real samples. The first row of the table gives the number of samples used in the calculation. The average T accuracies on the three datasets are 57.11%, 69.70% and 63.91%, respectively, which are relatively close to the ideal value of 50%. The closer the average T accuracy is to 50% and the smaller the average spectral distance, the greater the benefit of adding the generated samples to the real training samples for data augmentation.

4.4. Generated Sample and Real Sample Mixed Ratio Analysis

The ratio of real to generated samples in the training set may affect the classification result. We therefore investigate how injecting different proportions of fake samples affects classification accuracy. We use several representative mixing ratios (real:fake): 1:0, 4:1, 2:1, 1:1, 1:2, and 1:4.
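One way to build a training set with a given real:fake ratio is sketched below. The assumptions are ours, not the text's. The ratio is applied class by class, and generated samples are drawn at random without replacement. The count is capped by the number of generated samples available for that class. The function name is hypothetical.

```python
import numpy as np


def mix_real_and_generated(real_x, real_y, gen_x, gen_y, ratio, rng):
    """Build a training set with a real:generated ratio, applied class by class.

    ratio = (r, g), e.g. (2, 1). For a class with n_c real samples, round(n_c * g / r)
    generated samples of that class are drawn without replacement, capped by availability.
    """
    r, g = ratio
    xs, ys = [real_x], [real_y]
    for c in np.unique(real_y):
        n_real = int(np.sum(real_y == c))
        pool = np.flatnonzero(gen_y == c)                 # generated samples of class c
        n_gen = min(int(round(n_real * g / r)), len(pool))
        if n_gen > 0:
            pick = rng.choice(pool, size=n_gen, replace=False)
            xs.append(gen_x[pick])
            ys.append(gen_y[pick])
    return np.concatenate(xs), np.concatenate(ys)


rng = np.random.default_rng(0)
real_x, real_y = np.zeros((12, 3)), np.repeat([0, 1], [8, 4])
gen_x, gen_y = np.ones((40, 3)), np.repeat([0, 1], [20, 20])
x, y = mix_real_and_generated(real_x, real_y, gen_x, gen_y, (2, 1), rng)
print(x.shape)   # 12 real + 6 generated samples
```

Applying the ratio per class keeps the class proportions of the augmented set close to those of the original training set.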

Table 9 lists the experimental results. The Indian Pines and KSC datasets both achieve their best classification accuracy at a real-to-fake ratio of 1:1, while the Salinas dataset achieves its best accuracy at 2:1. For all three datasets, adding fake samples improves accuracy compared with using real samples only.

This experiment provides indirect evidence that the samples generated by AC-WGAN-GP follow the correct distribution. They compensate for the lack of diversity of small training sets in the sample space and have a positive impact on classification. The best ratio is 1:1 on Indian Pines and KSC but 2:1 on Salinas, which may indicate that the samples generated on Indian Pines and KSC are of slightly higher quality. In Table 9, Table 10, Table 11, Table 12 and Table 13, bold numbers mark the best result in each comparison.

4.5. Effectiveness Analysis of Sample Selection Algorithm

To verify the function of our selection algorithm, we compare two settings: fake samples selected by KNN and fake samples selected at random.

Table 10 presents the results. On all three datasets, KNN sample selection yields higher accuracy than random selection. On the Indian Pines dataset, KNN selection raises OA, AA and Kappa by 0.2%, 1.06% and 0.21%, respectively, over random selection. The corresponding gains are 0.4%, 0.43% and 0.44% on Salinas, and 0.48%, 0.44% and 0.64% on KSC. This shows that the KNN sample selection method is effective. The proposed algorithm retains generated samples that are similar to the real samples, which benefits classification.
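The KNN selection rule itself is not restated in this section. The sketch below shows one hypothetical variant to make the idea concrete: a generated sample is kept if a strict majority of its k nearest real training samples (Euclidean distance) share its label. The rule, k = 5, and the function name are illustrative assumptions, not necessarily the proposed algorithm.

```python
import numpy as np


def knn_select(gen_x, gen_y, real_x, real_y, k=5):
    """Keep generated samples whose k nearest real samples mostly share their label.

    Hypothetical selection rule for illustration only.
    """
    # Squared Euclidean distance from every generated sample to every real sample.
    d = ((gen_x[:, None, :] - real_x[None, :, :]) ** 2).sum(axis=-1)
    nn = np.argsort(d, axis=1)[:, :k]                  # k nearest real samples per row
    votes = (real_y[nn] == gen_y[:, None]).sum(axis=1)
    keep = votes > k // 2                              # strict majority agrees with the label
    return gen_x[keep], gen_y[keep]


real_x = np.array([[0.0], [0.2], [0.4], [0.6], [0.8],
                   [10.0], [10.2], [10.4], [10.6], [10.8]])
real_y = np.array([0] * 5 + [1] * 5)
gen_x = np.array([[0.1], [0.2], [9.9]])
gen_y = np.array([0, 1, 1])   # the second sample lies among class-0 samples
print(knn_select(gen_x, gen_y, real_x, real_y))
```

In this toy example, the class-1 sample generated inside the class-0 region is discarded, while the two plausible samples are kept.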

4.6. Classification Result

As the most important performance assessment, we compare our method with several competing SVM-, CNN- and GAN-based algorithms on small training sets (see Section 4.2 for the specific numbers). Given the well-known advantages of support vector machines (SVMs), we include two SVM-based HSI classifiers for comparison, namely 3D-RBF-SVM and EMP-SVM [12]. The input of 3D-RBF-SVM is an image block, and the SVM classifiers use the radial basis function (RBF) kernel. As typical deep learning models with good classification performance, 1D-CNN [23] and 3D-CNN [24] are also compared. 3D-Aug-GAN [37] also uses a GAN to augment the training set and improve classification accuracy, so it is compared with our AC-WGAN-GP.

We also compare with 1D-S-SVM and 1D-S-CNN. Here, S stands for Gaussian smoothing, and 1D means that the input is a single pixel; the CNN has the same structure as classifier C. These variants verify the effect of the smoothing operation, and whether augmenting the training set with AC-WGAN-GP improves classification accuracy. All of the above methods use the same small training sets. In addition, we compare with AML [48], another HSI classifier designed for small training sets.

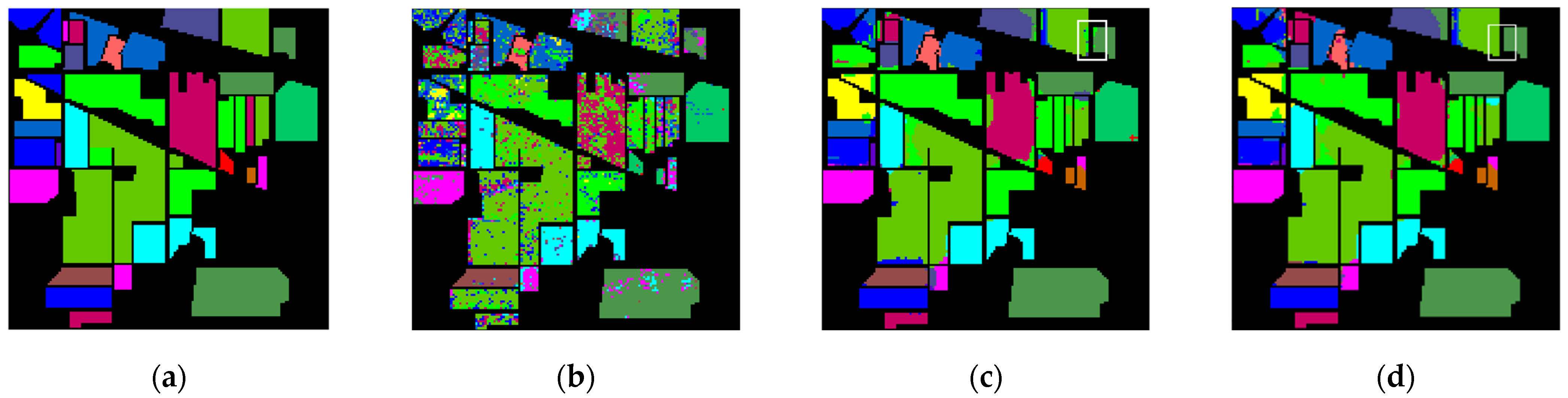

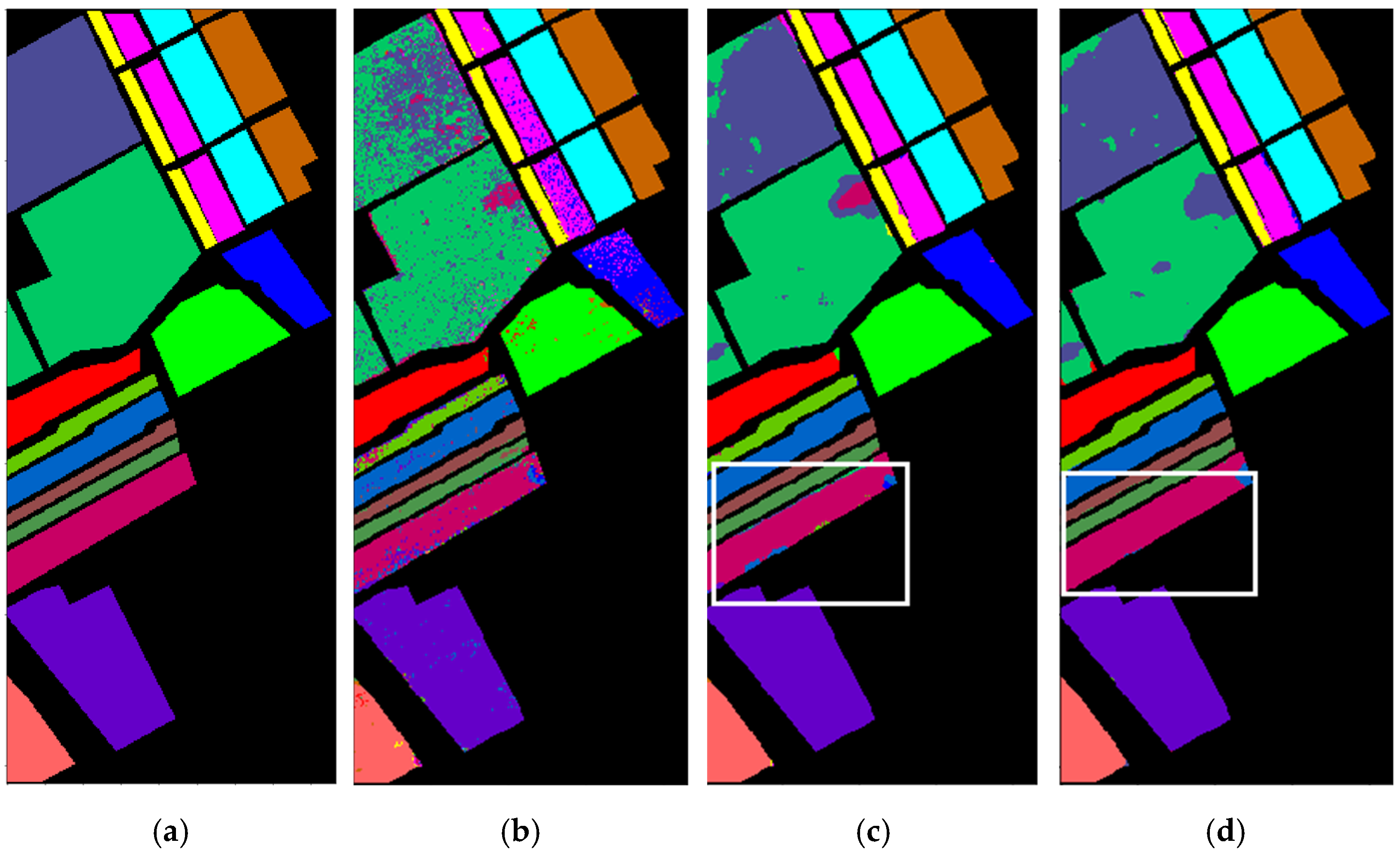

The quantitative evaluation of the various methods is shown in Table 11, Table 12 and Table 13. Table 14 compares the recent classifier AML with AC-WGAN-GP. The visual classification results are shown in Figure 11, Figure 12 and Figure 13. Based on these experimental results, we make the following observations.

First, consider the classification maps in Figure 11, Figure 12 and Figure 13. They compare the 1D-CNN classifier, the 1D-CNN classifier on Gaussian-smoothed data, and our proposed AC-WGAN-GP model. The results in the third and fourth columns are clearly better than those in the second column. Significant differences between the third and fourth columns are marked by white boxes.

In Indian Pines, 1D-S-CNN produces less noise inside classes than the method using CNN only, but more error points at class boundaries. This shows that Gaussian smoothing effectively smooths the spectral samples inside a class but tends to blur the spatial features at boundaries, producing wrong class boundaries. After AC-WGAN-GP is used to augment the data, the class boundaries in the white boxes improve significantly.

In Salinas, the algorithms confuse some samples of categories 8 and 15, producing more misclassified points. The spectral characteristics of these two classes are relatively similar, which makes them harder to separate. Many algorithms cannot completely distinguish them, but the proposed algorithm classifies these two categories better than the other two algorithms. Apart from some noise and misclassified points at the boundaries of the 10th and 5th categories, the other categories in this dataset contain fewer noise points and have more clearly located boundaries. Compared with 1D-CNN, the proposed algorithm greatly reduces the noise points. Compared with 1D-S-CNN, it has fewer areas of error points. In KSC, the pixels are relatively scattered.

Figure 13e,f shows that the main misclassification is that samples of the fifth class close to the fourth class are wrongly assigned to the fourth class. This error has two causes. In this dataset, different objects can share the same spectrum and the same object can exhibit different spectra. In addition, the number of training samples is small. Even in this region, our proposed method retains clear advantages, which demonstrates the positive effect of AC-WGAN-GP data augmentation on classification.

The tables show that our proposed method outperforms the other methods on most of the three metrics (OA, AA, and Kappa) and on most per-category classification accuracies.

Firstly, some methods use a single pixel or an image block of the original data as input (i.e., 3D-RBF-SVM, EMP-SVM, 1D-CNN). They achieve lower classification accuracy than the methods that adopt Gaussian smoothing (i.e., SVM, 1D-S-SVM, 1D-S-CNN, AC-WGAN-GP). For example, in Table 11, the OA of EMP-SVM is 18.97%, 21.94%, and 23.17% lower than that of 1D-S-SVM, 1D-S-CNN, and AC-WGAN-GP, respectively. Similar behavior can be observed in Table 12 and Table 13. This shows that Gaussian smoothing improves classification accuracy, because it does more than filter the hyperspectral pixels: it also incorporates information from neighboring pixels. At the same time, Gaussian smoothing makes the learning task easier.
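The sketch below makes the point about neighboring information concrete. It applies Gaussian smoothing to an H x W x B cube, using the window size 11 and sigma 0.1 from the experimental settings as defaults. We assume the smoothing is spatial, applied band by band, with reflect padding at image borders; the text does not confirm these choices. The function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_2d(size: int = 11, sigma: float = 0.1) -> np.ndarray:
    """Normalized size x size Gaussian kernel."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def smooth_cube(cube: np.ndarray, size: int = 11, sigma: float = 0.1) -> np.ndarray:
    """Spatially smooth an H x W x B hyperspectral cube, band by band.

    Each output pixel is a Gaussian-weighted average of its size x size neighborhood,
    so a single smoothed pixel already carries information from its neighbors.
    Borders use reflect padding (an assumption).
    """
    k = gaussian_kernel_2d(size, sigma)
    h, w, _ = cube.shape
    p = size // 2
    padded = np.pad(cube, ((p, p), (p, p), (0, 0)), mode="reflect")
    out = np.zeros(cube.shape, dtype=float)
    for i in range(size):
        for j in range(size):
            out += k[i, j] * padded[i:i + h, j:j + w, :]
    return out


impulse = np.zeros((21, 21, 1))
impulse[10, 10, 0] = 1.0
out = smooth_cube(impulse, size=11, sigma=2.0)   # larger sigma to make the spread visible
print(out[10, 10, 0], out[10, 11, 0])
```

After smoothing, a lone bright pixel spreads its value to its neighbors. The per-pixel spectrum fed to a 1D classifier is therefore a weighted blend of the pixel and its spatial neighborhood.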

Secondly, AC-WGAN-GP achieves higher classification accuracy than 1D-S-CNN trained with only the original training samples. On the KSC dataset, the OA, AA, and Kappa of AC-WGAN-GP are 1.36%, 2.03%, and 1.51% higher than those of 1D-S-CNN, respectively. The same holds on the Salinas dataset. On the Indian Pines dataset, OA and Kappa improve significantly, while AA decreases slightly. The proposed method also has an advantage over 3D-CNN. On the Indian Pines dataset, its OA, AA, and Kappa are 6.04%, 20.18%, and 7.34% higher than those of 3D-CNN, respectively. Apart from the third category, our method also outperforms 3D-CNN in individual categories. For example, on categories 12, 13 and 16, 3D-CNN falls far below its average accuracy because it has too few training samples to learn from. AC-WGAN-GP does not suffer from this problem.

Thirdly, we compare 3D-Aug-GAN with our proposed AC-WGAN-GP. Table 12 shows that the OA, AA and Kappa of AC-WGAN-GP are 19.65%, 17.02% and 0.25% higher than those of 3D-Aug-GAN, respectively. Our method also leads in individual categories, and the same results are observed on the Indian Pines and KSC data. This analysis verifies that the proposed method achieves higher classification accuracy than 3D-Aug-GAN. Compared with these earlier models, our work makes a modest contribution to GAN-based hyperspectral data augmentation and classification.

Fourthly, AML combines LSTM and attention for HSI classification with small training sets. We compare AML with our AC-WGAN-GP on training sets of different proportions. The results in Table 14 show that our method performs slightly better than AML.

Finally, we notice that high accuracy can be achieved on the KSC dataset using only 1D-CNN and the smoothing module: only categories 3, 4 and 5 fail to reach 100.00% accuracy. Even in this case, the method using the AC-WGAN-GP framework is still 1.36%, 2.03%, and 1.51% higher on OA, AA, and Kappa, respectively.

In particular, Figure 14 shows the distributions of generated samples from three categories whose generated samples are of low quality and lead to low accuracy. For Alfalfa, the real samples are scattered and irregularly distributed. This makes it difficult for AC-WGAN-GP to learn a good distribution, and the generated samples are clearly wrong. The situation is similar for Stone-Steel-Towers. For OAK/Broadleaf, the generated samples conform to the real distribution to some extent, but the network has not learned the correct sparsity and density of the real distribution. These examples show that some categories have complex and uneven distributions, which test the performance of a GAN. For such categories, the results of AC-WGAN-GP are not ideal.