CD-SDN: Unsupervised Sensitivity Disparity Networks for Hyper-Spectral Image Change Detection

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

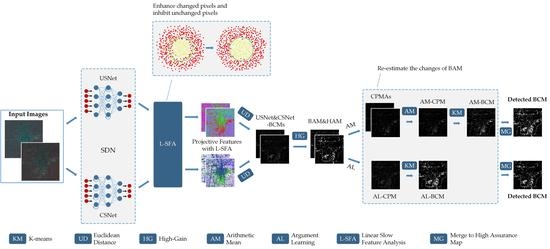

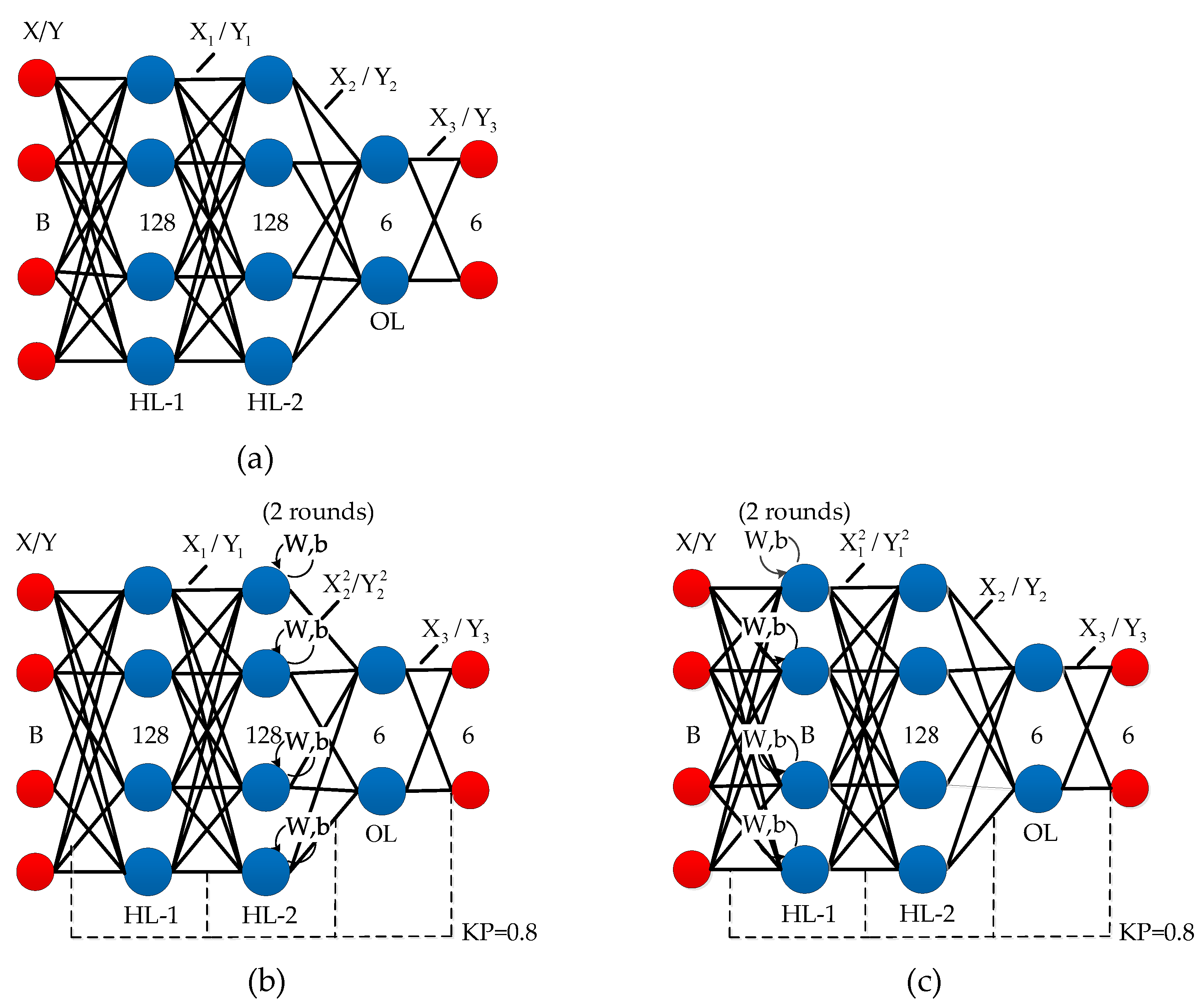

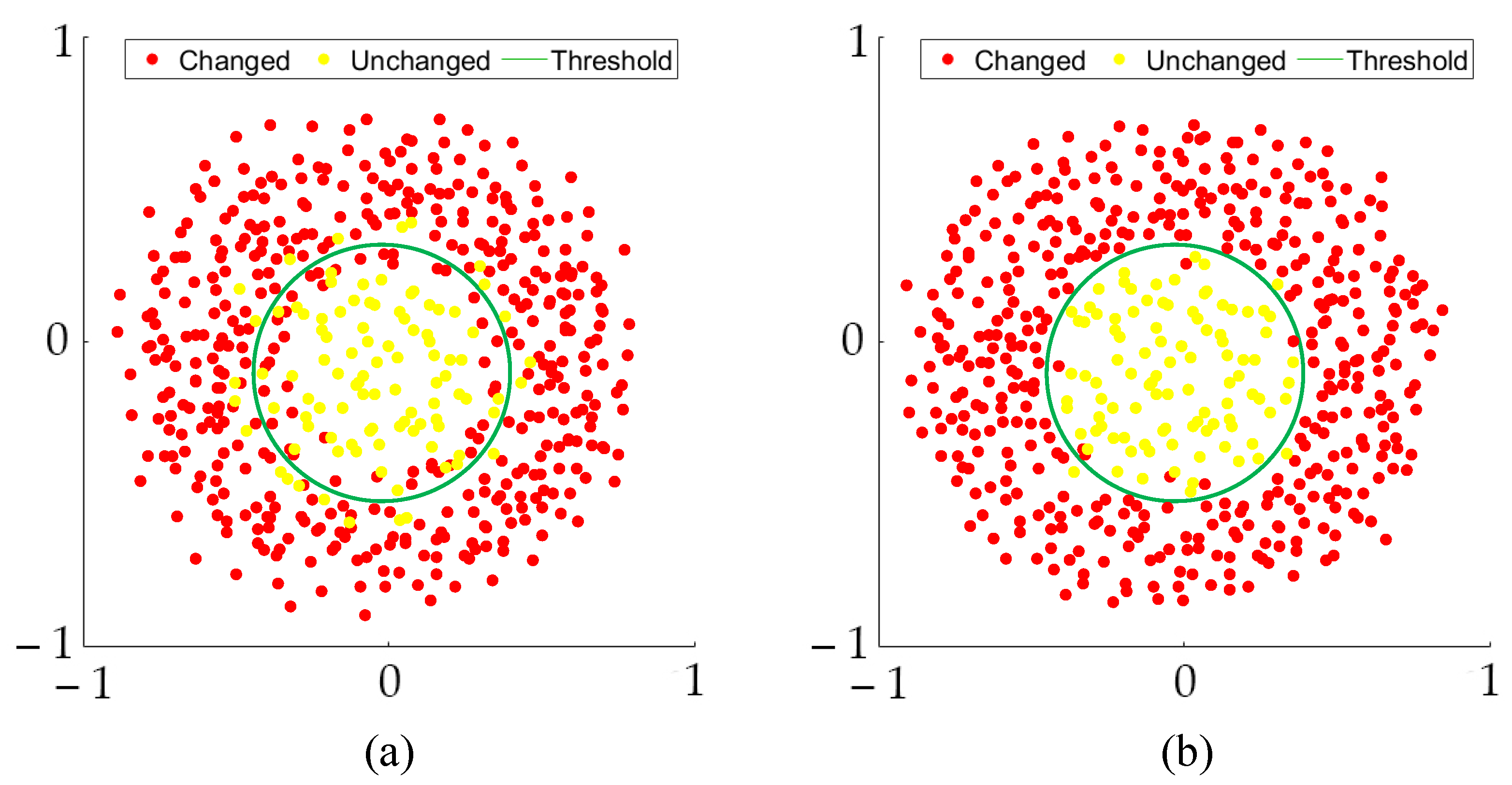

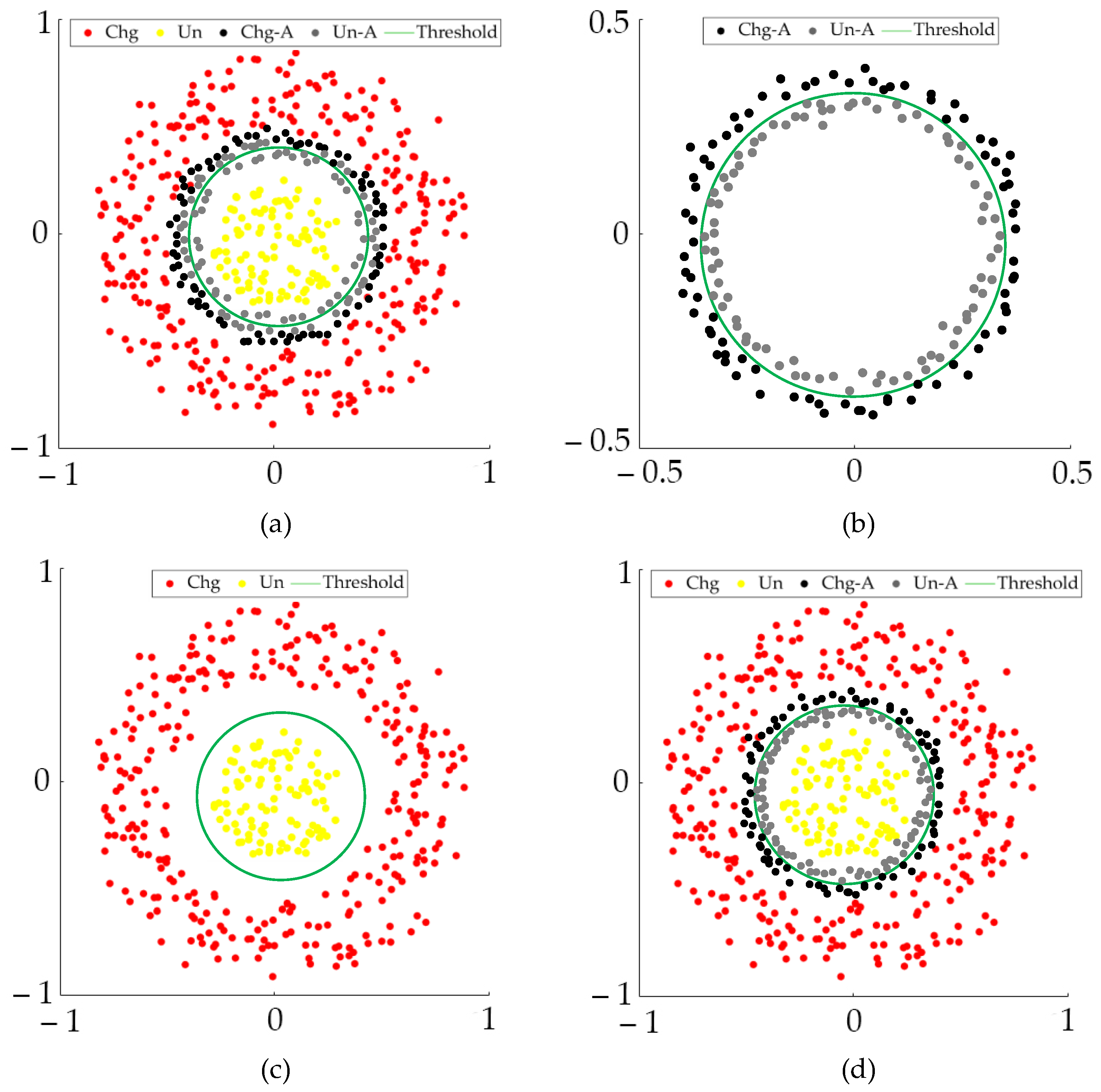

3.1. Generation of CPM & BCM

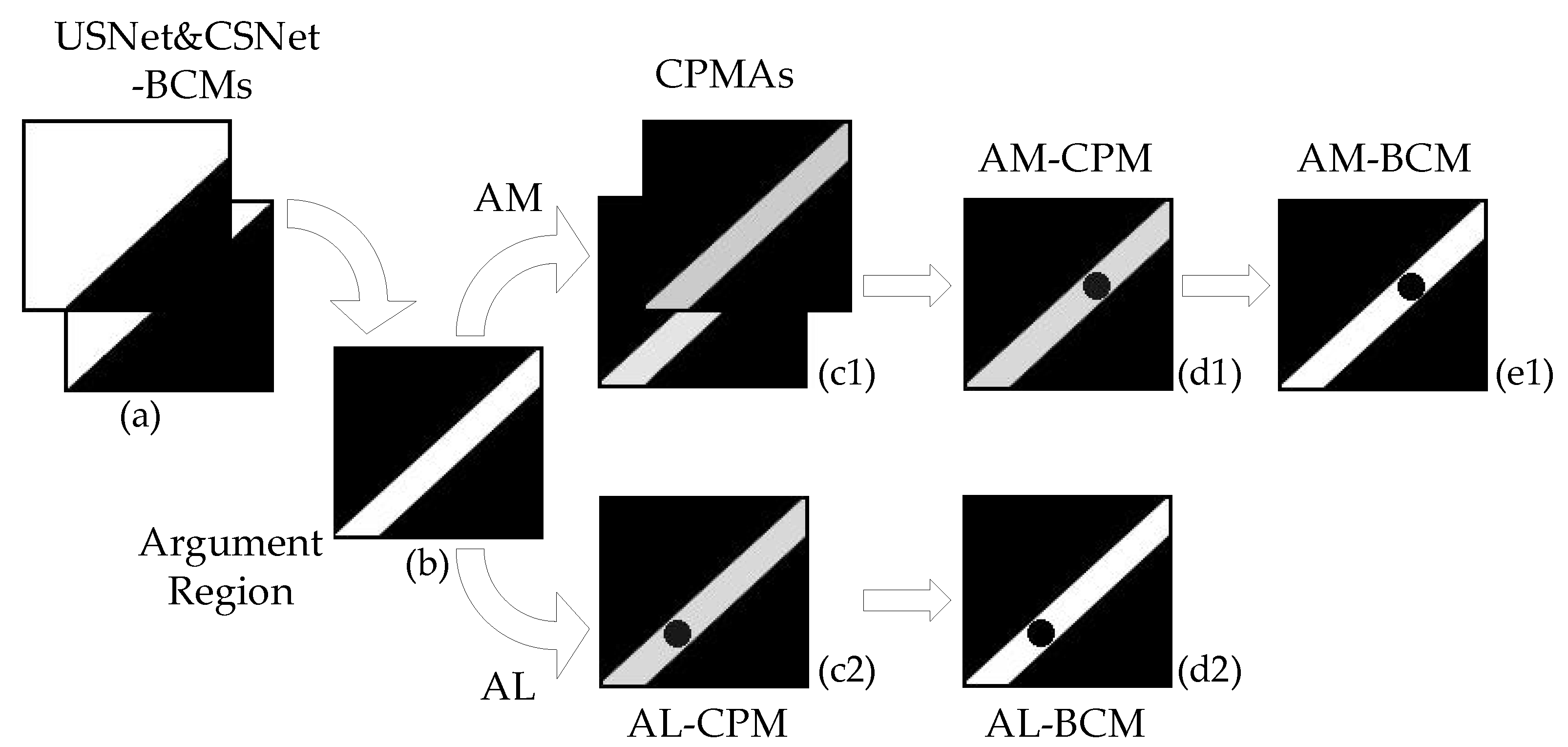

3.2. Generation of BAM & Re-Estimation of Argument Region

| Algorithm 1. The execution process of the proposed CD-SDN to generate the final binary change maps |
|---|
| Input: Bi-temporal images R and Q; |
| Output: The final binary change maps BCM1 and BCM2; |
| 1: Use the pre-detected BCM as a reference to select training samples X and Y; |
| 2: Initialize the parameters of the SDN; |
| 3: while i < epochs do |
| 4: Compute the bi-temporal projective features of the training samples by applying the network mapping f to X and Y; |
| 5: Compute the gradients of the loss function L with respect to the network parameters; |
| 6: Update the parameters; |
| 7: i++; |
| 8: end |
| 9: Use the trained model to generate the projective features of the whole bi-temporal images R and Q; |
| 10: Apply L-SFA to the two projective feature maps as post-processing; |
| 11: Compute the CPM with the Euclidean distance; |
| 12: Apply K-means to get the USNet-BCM and CSNet-BCM; |
| 13: Apply High-Gain to generate the BAM and HAM; |
| 14: Case AM approach: |
| 15: Calculate the CPMA-USNet and CPMA-CSNet by using the BAM; |
| 16: Take the arithmetic mean to get the AM-CPM; |
| 17: Apply K-means to generate the AM-BCM; |
| 18: Merge the AM-BCM with the HAM to obtain the final BCM1; |
| 19: Case AL approach: |
| 20: Apply argument learning to generate the projective features; |
| 21: Compute the AL-CPM with the Euclidean distance; |
| 22: Apply K-means to generate the AL-BCM; |
| 23: Merge the AL-BCM with the HAM to obtain the final BCM2; |
| 24: return BCM1, BCM2; |
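
Steps 11 and 12 of Algorithm 1 (Euclidean distance to obtain the CPM, then K-means to obtain a BCM) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `compute_cpm` and `two_means_binarize`, the (H, W, C) feature layout, the min/max centroid initialization, and the synthetic data are all hypothetical, and a plain NumPy two-cluster K-means on scalar values stands in for whichever K-means implementation the paper used.

```python
import numpy as np


def compute_cpm(feat_r, feat_q):
    """Per-pixel Euclidean distance between two (H, W, C) feature maps."""
    diff = feat_r.astype(np.float64) - feat_q.astype(np.float64)
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def two_means_binarize(cpm, n_iter=100):
    """Binarize a CPM with 1-D K-means (K = 2): 1 = changed, 0 = unchanged."""
    values = cpm.ravel()
    # Assumed initialization: centroids start at the smallest and largest value.
    lo, hi = values.min(), values.max()
    for _ in range(n_iter):
        changed = np.abs(values - hi) < np.abs(values - lo)
        if changed.all() or not changed.any():
            break  # one cluster is empty, nothing left to refine
        new_lo, new_hi = values[~changed].mean(), values[changed].mean()
        if new_lo == lo and new_hi == hi:
            break  # centroids stopped moving
        lo, hi = new_lo, new_hi
    changed = np.abs(values - hi) < np.abs(values - lo)
    return changed.reshape(cpm.shape).astype(np.uint8)


# Synthetic stand-in for the post-processed projective features of R and Q.
rng = np.random.default_rng(0)
feat_r = rng.normal(0.0, 0.1, size=(8, 8, 4))
feat_q = feat_r + rng.normal(0.0, 0.05, size=(8, 8, 4))
feat_q[2:5, 2:5, :] += 2.0  # a 3 x 3 block of simulated change

cpm = compute_cpm(feat_r, feat_q)
bcm = two_means_binarize(cpm)
print(bcm)
```

On this toy input the large-distance cluster coincides with the simulated 3 x 3 block. Real CPMs are rarely this cleanly separated, which is presumably why the algorithm goes on to re-estimate ambiguous regions in steps 13 to 23.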

4. Results

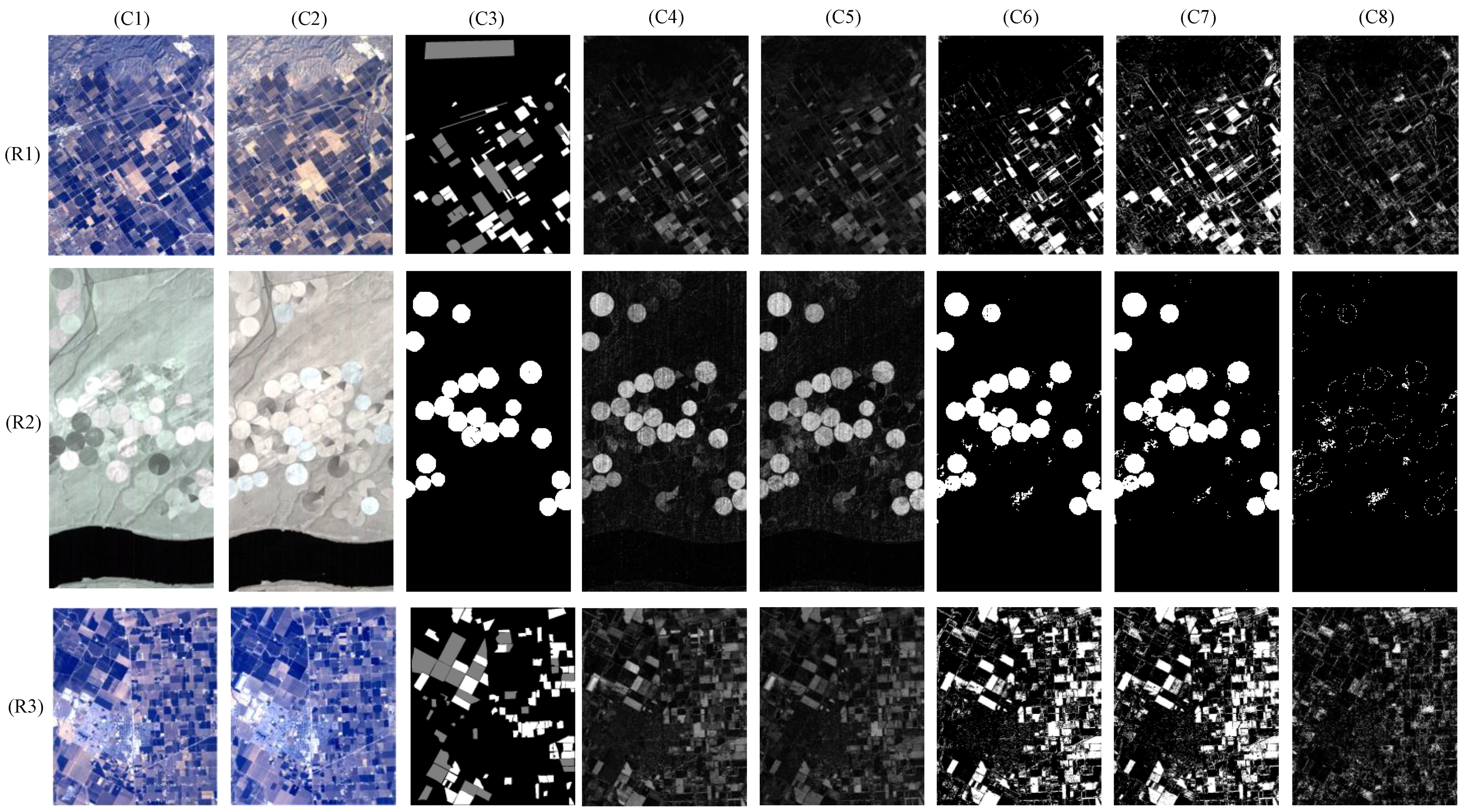

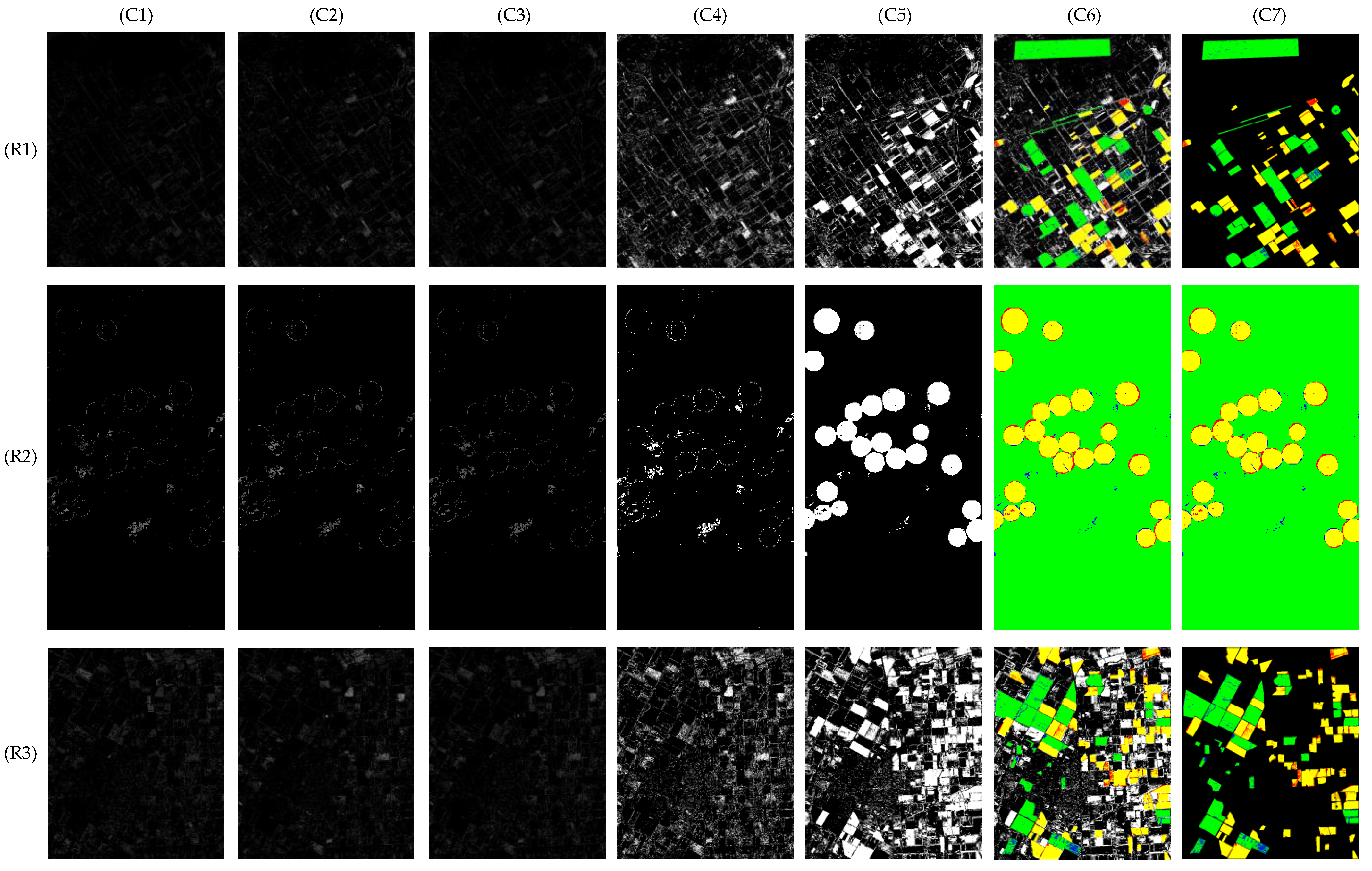

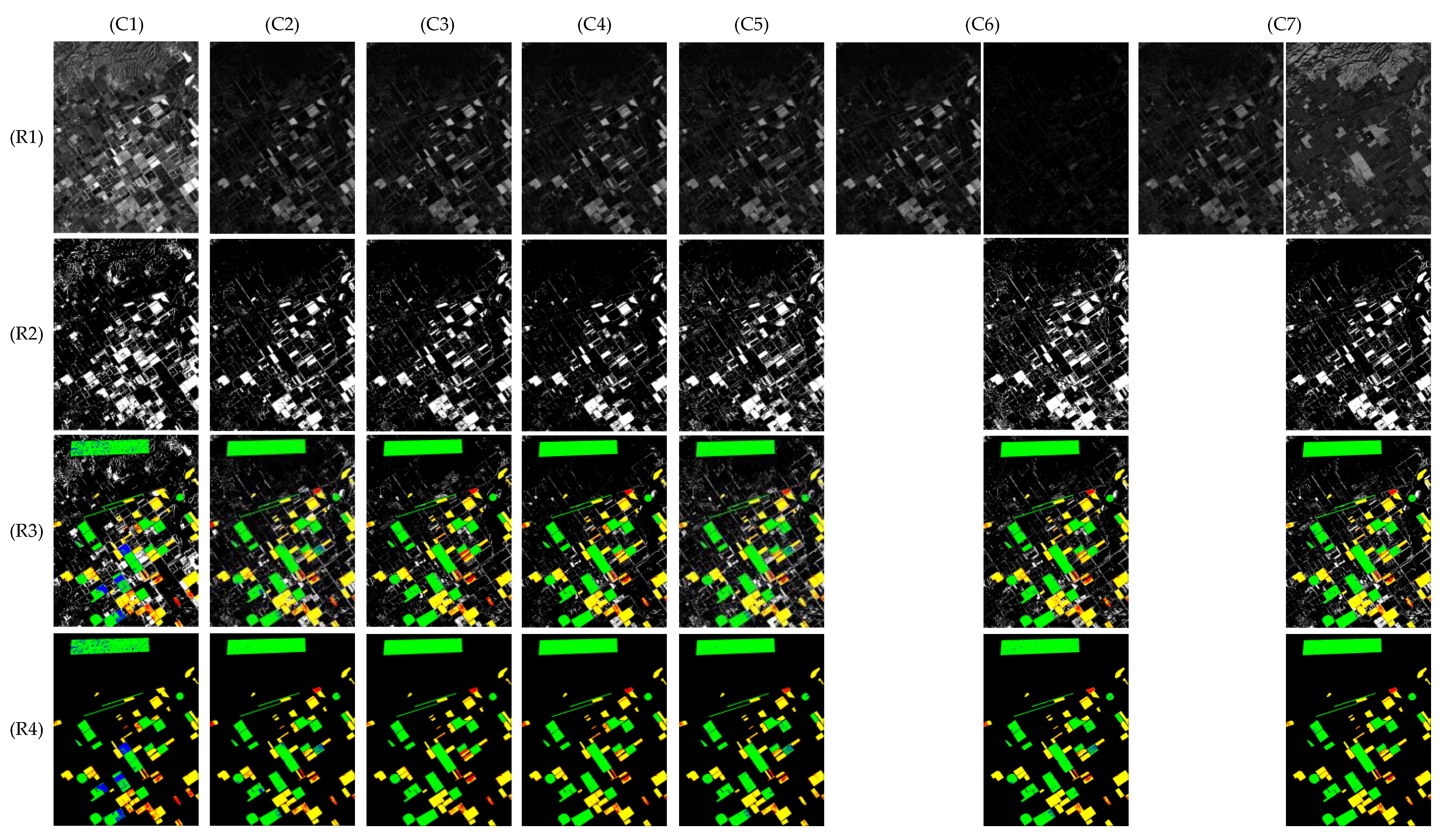

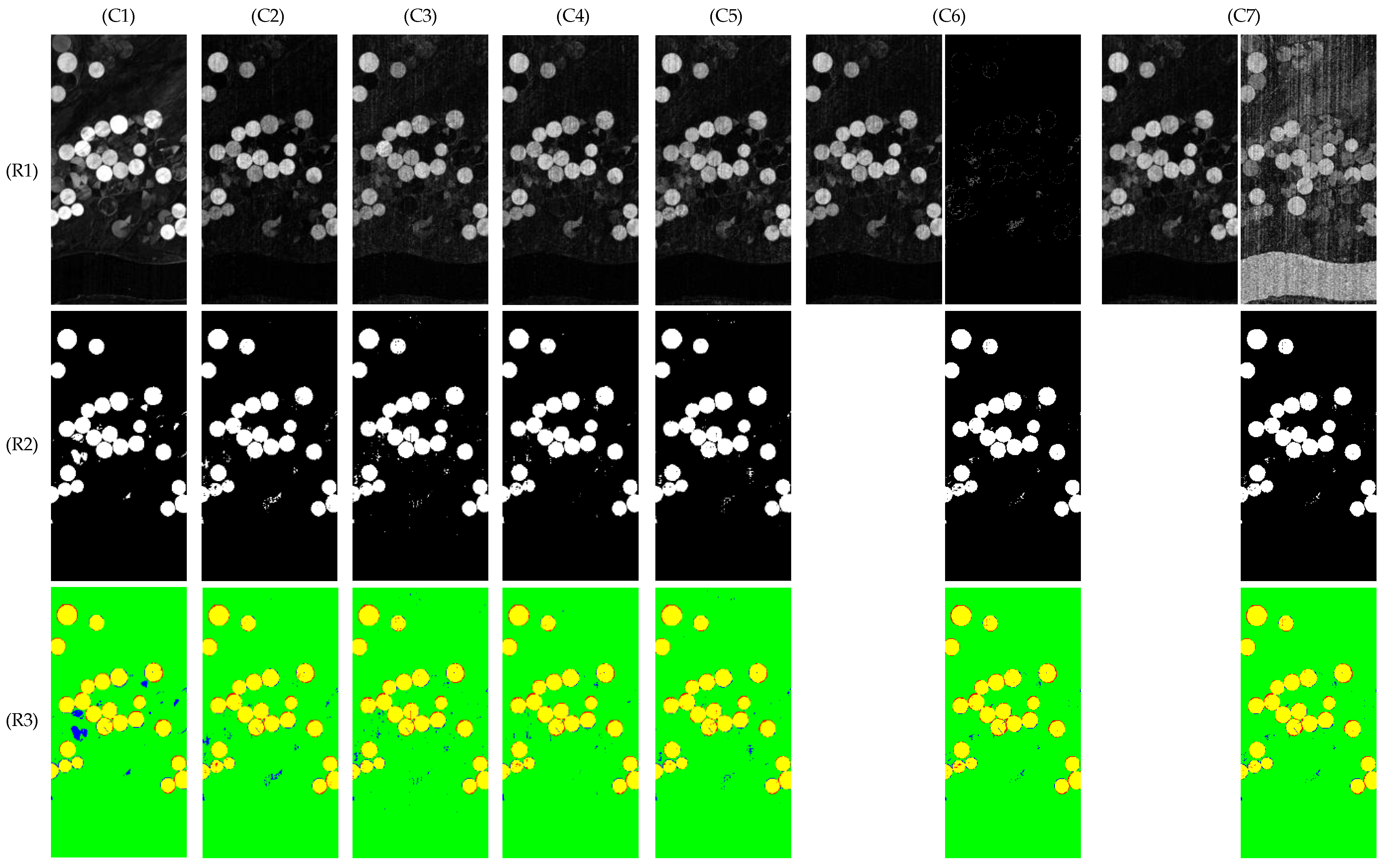

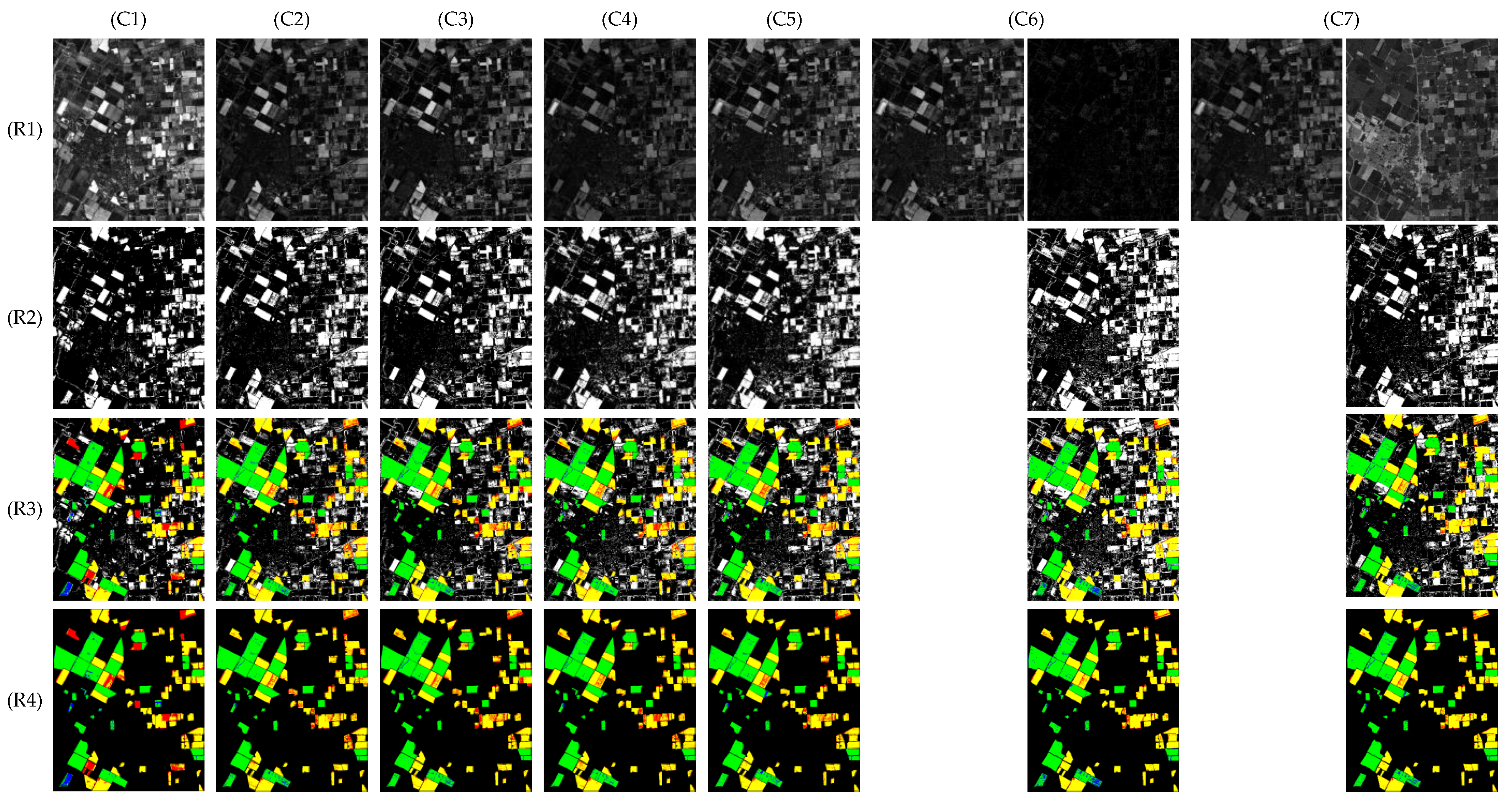

4.1. Performance of Proposed CD-SDN

4.2. Comparison with State-of-the-Art Works

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Puhm, M.; Deutscher, J.; Hirschmugl, M.; Wimmer, A.; Schmitt, U.; Schardt, M. A Near Real-Time Method for Forest Change Detection Based on a Structural Time Series Model and the Kalman Filter. Remote Sens. 2020, 12, 3135.

- Karakani, E.G.; Malekian, A.; Gholami, S.; Liu, J. Spatiotemporal monitoring and change detection of vegetation cover for drought management in the Middle East. Theor. Appl. Climatol. 2021, 144, 299–315.

- Henchiri, M.; Ali, S.; Essifi, B.; Kalisa, W.; Zhang, S.; Bai, Y. Monitoring land cover change detection with NOAA-AVHRR and MODIS remotely sensed data in the North and West of Africa from 1982 to 2015. Environ. Sci. Pollut. Res. 2020, 27, 5873–5889.

- Chang, Y.-L.; Tan, T.-H.; Lee, W.-H.; Chang, L.; Chen, Y.-N.; Fan, K.-C.; Alkhaleefah, M. Consolidated Convolutional Neural Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 1571.

- AL-Alimi, D.; Al-qaness, M.A.; Cai, Z.; Dahou, A.; Shao, Y.; Issaka, S. Meta-Learner Hybrid Models to Classify Hyperspectral Images. Remote Sens. 2022, 14, 1038.

- Chen, D.; Tu, W.; Cao, R.; Zhang, Y.; He, B.; Wang, C.; Shi, T.; Li, Q. A hierarchical approach for fine-grained urban villages recognition fusing remote and social sensing data. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102661.

- Chen, X.L.; Zhao, H.M.; Li, P.X.; Yin, Z.Y. Remote sensing image-based analysis of the relationship between urban heat island and land use/cover changes. Remote Sens. Environ. 2006, 104, 133–146.

- Lambin, E.F.; Strahler, A.H. Change-vector analysis in multitemporal space: A tool to detect and categorize land-cover change processes using high temporal-resolution satellite data. Remote Sens. Environ. 1994, 48, 231–244.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Wu, F.; Guan, H.; Yan, D.; Wang, Z.; Wang, D.; Meng, Q. Precise Geometric Correction and Robust Mosaicking for Airborne Lightweight Optical Butting Infrared Imaging System. IEEE Access 2019, 7, 93569–93579.

- Schroeder, T.A.; Cohen, W.B.; Song, C.H.; Canty, M.J.; Yang, Z.Q. Radiometric correction of multi-temporal Landsat data for characterization of early successional forest patterns in western Oregon. Remote Sens. Environ. 2006, 103, 16–26.

- Vicente-Serrano, S.M.; Pérez-Cabello, F.; Lasanta, T. Assessment of radiometric correction techniques in analyzing vegetation variability and change using time series of Landsat images. Remote Sens. Environ. 2008, 112, 3916–3934.

- Wang, S.; Wang, Y.; Liu, Y.; Li, L. SAR image change detection based on sparse representation and a capsule network. Remote Sens. Lett. 2021, 12, 890–899.

- Alcantarilla, P.F.; Simon, S.; Germán, R.; Roberto, A.; Riccardo, G. Street-view change detection with deconvolutional networks. Auton. Robots 2018, 42, 1301–1322.

- Lei, Y.; Peng, D.; Zhang, P.; Ke, Q.; Li, H. Hierarchical Paired Channel Fusion Network for Street Scene Change Detection. IEEE Trans. Image Process. 2021, 30, 55–67.

- Zhang, B.; Wang, Z.; Gao, J.; Rutjes, C.; Nufer, K.; Tao, D.; Feng, D.D.; Menzies, S.W. Short-Term Lesion Change Detection for Melanoma Screening with Novel Siamese Neural Network. IEEE Trans. Med. Imaging 2020, 40, 840–851.

- Cao, X.; Ji, Y.; Wang, L.; Ji, B.; Jiao, L.; Han, J. SAR Image Change Detection based on deep denoising and CNN. IET Image Process. 2019, 13, 1509–1515.

- Qin, D.; Zhou, X.; Zhou, W.; Huang, G.; Ren, Y.; Horan, B.; He, J.; Kito, N. MSIM: A Change Detection Framework for Damage Assessment in Natural Disasters. Expert Syst. Appl. 2018, 97, 372–383.

- Song, L.; Xia, M.; Jin, J.; Qian, M.; Zhang, Y. SUACDNet: Attentional change detection network based on siamese U-shaped structure. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102597.

- Du, B.; Ru, L.; Wu, C.; Zhang, L. Unsupervised deep slow feature analysis for change detection in multi-temporal remote sensing images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9976–9992.

- Li, J.; Yuan, X.; Feng, L. Alteration Detection of Multispectral/Hyperspectral Images Using Dual-Path Partial Recurrent Networks. Remote Sens. 2021, 13, 4802.

- Pu, R.; Gong, P.; Tian, Y.; Miao, X.; Carruthers, R.I.; Anderson, G.L. Invasive species change detection using artificial neural networks and CASI hyperspectral imagery. Environ. Monit. Assess. 2008, 140, 15–32.

- Ghosh, S.; Patra, S.; Ghosh, A. An unsupervised context-sensitive change detection technique based on modified self-organizing feature map neural network. Int. J. Approx. Reason. 2009, 50, 37–50.

- Huang, F.; Yu, Y.; Feng, T. Hyperspectral remote sensing image change detection based on tensor and deep learning. J. Vis. Commun. Image Represent. 2019, 58, 233–244.

- Moustafa, M.S.; Mohamed, S.A.; Ahmed, S.; Nasr, A.H. Hyperspectral change detection based on modification of UNet neural networks. J. Appl. Remote Sens. 2021, 15, 028505.

- Danielsson, P.-E. Euclidean distance mapping. Comput. Graph. Image Process. 1980, 14, 227–248.

- Kanungo, T.; Mount, D.M.; Netanyahu, N.S.; Piatko, C.D.; Silverman, R.; Wu, A.Y. An efficient k-means clustering algorithm: Analysis and implementation. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 881–892.

- Emran, S.M.; Ye, N. Robustness of Chi-square and Canberra distance metrics for computer intrusion detection. Qual. Reliab. Eng. Int. 2002, 18, 19–28.

- Maesschalck, R.D.; Jouan-Rimbaud, D.; Massart, D.L. The Mahalanobis distance. Chemom. Intell. Lab. Syst. 2000, 50, 1–18.

- Oike, Y.; Ikeda, M.; Asada, K. A high-speed and low-voltage associative co-processor with exact Hamming/Manhattan-distance estimation using word-parallel and hierarchical search architecture. IEEE J. Solid-State Circuits 2004, 39, 1383–1387.

- Wang, D.; Gao, T.; Zhang, Y. Image Sharpening Detection Based on Difference Sets. IEEE Access 2020, 8, 51431–51445.

- De Bem, P.P.; de Carvalho Junior, O.A.; Fontes Guimarães, R.; Trancoso Gomes, R.A. Change detection of deforestation in the Brazilian Amazon using Landsat data and convolutional neural networks. Remote Sens. 2020, 12, 901.

- Hyperspectral Change Detection Dataset. Available online: https://citius.usc.es/investigacion/datasets/hyperspectral-change-detection-dataset (accessed on 1 September 2021).

| Methods | CVA [8] | CD-FCN [20] | DSFA [20] | CD-USNet [21] | CD-CSNet (Proposed) | CD-SDN-AM (Proposed) | CD-SDN-AL (Proposed) |
|---|---|---|---|---|---|---|---|
| TP | 42,247 | 44,435 | 43,426 | 43,442 | 45,497 | 46,532 | 45,481 |
| TN | 68,093 | 77,257 | 79,333 | 79,580 | 77,087 | 76,838 | 78,892 |
| FP | 12,325 | 3161 | 1085 | 838 | 3331 | 3580 | 1526 |
| FN | 9887 | 7699 | 8708 | 8692 | 6637 | 5602 | 6653 |
| OA_CHG | 0.8104 | 0.8523 | 0.8330 | 0.8333 | 0.8727 | 0.8925 | 0.8724 |
| OA_UN | 0.8467 | 0.9607 | 0.9865 | 0.9896 | 0.9586 | 0.9555 | 0.9810 |
| OA | 0.8324 | 0.9181 | 0.9261 | 0.9281 | 0.9248 | 0.9307 | 0.9383 |
| Kappa | 0.6517 | 0.8257 | 0.8411 | 0.8452 | 0.8406 | 0.8539 | 0.8684 |
| F1 | 0.7918 | 0.8911 | 0.8987 | 0.9012 | 0.9013 | 0.9102 | 0.9175 |
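
The accuracy rows in the table above follow from the four confusion-matrix counts. The sketch below shows one way to compute them. The formulas are inferred from the tabulated numbers rather than quoted from the paper: OA_CHG is taken as the accuracy on changed pixels, OA_UN as the accuracy on unchanged pixels, and Kappa as Cohen's kappa. The function name `change_detection_metrics` is hypothetical.

```python
def change_detection_metrics(tp, tn, fp, fn):
    """Accuracy measures derived from binary change-detection counts."""
    n = tp + tn + fp + fn
    oa_chg = tp / (tp + fn)        # accuracy on changed pixels (assumed definition)
    oa_un = tn / (tn + fp)         # accuracy on unchanged pixels (assumed definition)
    oa = (tp + tn) / n             # overall accuracy
    # Chance agreement for Cohen's kappa, from the row and column totals.
    pe = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    kappa = (oa - pe) / (1.0 - pe)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return {"OA_CHG": oa_chg, "OA_UN": oa_un, "OA": oa, "Kappa": kappa, "F1": f1}


# Counts taken from the CVA column of the table above.
cva = change_detection_metrics(tp=42247, tn=68093, fp=12325, fn=9887)
for name, value in cva.items():
    print(f"{name}: {value:.4f}")
```

Applied to the CVA column, these definitions agree with the tabulated values to four decimal places, which supports the inferred formulas without confirming that they are the ones the authors used.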

| Methods | CVA [8] | CD-FCN [20] | DSFA [20] | CD-USNet [21] | CD-CSNet (Proposed) | CD-SDN-AM (Proposed) | CD-SDN-AL (Proposed) |
|---|---|---|---|---|---|---|---|
| TP | 9338 | 9284 | 9299 | 9206 | 9314 | 9195 | 9346 |
| TN | 66,953 | 67,452 | 67,467 | 67,650 | 67,535 | 67,674 | 67,632 |
| FP | 1061 | 562 | 547 | 364 | 479 | 340 | 382 |
| FN | 648 | 702 | 687 | 780 | 672 | 791 | 640 |
| OA_CHG | 0.9351 | 0.9297 | 0.9312 | 0.9219 | 0.9327 | 0.9208 | 0.9359 |
| OA_UN | 0.9844 | 0.9917 | 0.9920 | 0.9946 | 0.9930 | 0.9950 | 0.9944 |
| OA | 0.9781 | 0.9838 | 0.9842 | 0.9853 | 0.9852 | 0.9855 | 0.9869 |
| Kappa | 0.9036 | 0.9270 | 0.9287 | 0.9331 | 0.9334 | 0.9338 | 0.9407 |
| F1 | 0.9162 | 0.9363 | 0.9378 | 0.9415 | 0.9418 | 0.9421 | 0.9482 |

| Methods | CVA [8] | CD-FCN [20] | DSFA [20] | CD-USNet [21] | CD-CSNet (Proposed) | CD-SDN-AM (Proposed) | CD-SDN-AL (Proposed) |
|---|---|---|---|---|---|---|---|
| TP | 29,521 | 32,837 | 32,972 | 33,227 | 33,738 | 35,717 | 35,632 |
| TN | 32,259 | 33,016 | 32,961 | 32,761 | 32,603 | 31,815 | 32,422 |
| FP | 1952 | 1195 | 1250 | 1450 | 1608 | 2396 | 1789 |
| FN | 9749 | 6433 | 6298 | 6043 | 5532 | 3553 | 3638 |
| OA_CHG | 0.7517 | 0.8362 | 0.8396 | 0.8461 | 0.8591 | 0.9095 | 0.9074 |
| OA_UN | 0.9429 | 0.9651 | 0.9635 | 0.9576 | 0.9530 | 0.9300 | 0.9477 |
| OA | 0.8408 | 0.8962 | 0.8973 | 0.8980 | 0.9028 | 0.9190 | 0.9261 |
| Kappa | 0.6846 | 0.7934 | 0.7955 | 0.7968 | 0.8062 | 0.8377 | 0.8521 |
| F1 | 0.8346 | 0.8959 | 0.8973 | 0.8987 | 0.9043 | 0.9231 | 0.9292 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Yuan, X.; Li, J.; Huang, G.; Li, P.; Feng, L. CD-SDN: Unsupervised Sensitivity Disparity Networks for Hyper-Spectral Image Change Detection. Remote Sens. 2022, 14, 4806. https://doi.org/10.3390/rs14194806