A Low-Altitude Remote Sensing Inspection Method on Rural Living Environments Based on a Modified YOLOv5s-ViT

Abstract

1. Introduction

- (1) A rural living environment inspection dataset was established from images collected by a UAV. It contains two categories of labeled samples, random construction and random storage, and was used to train and evaluate the target detection model.

- (2) The SimAM attention mechanism was embedded into YOLOv5s to improve the model’s ability to capture distinguishing features of the targets, thereby improving detection accuracy.

- (3) A Vision Transformer structure was incorporated to recognize the two sample categories. The Vision Transformer component allowed the model to attend to the global contextual features of a target, complementing the CNN, which mainly captures local features.

2. Materials and Methods

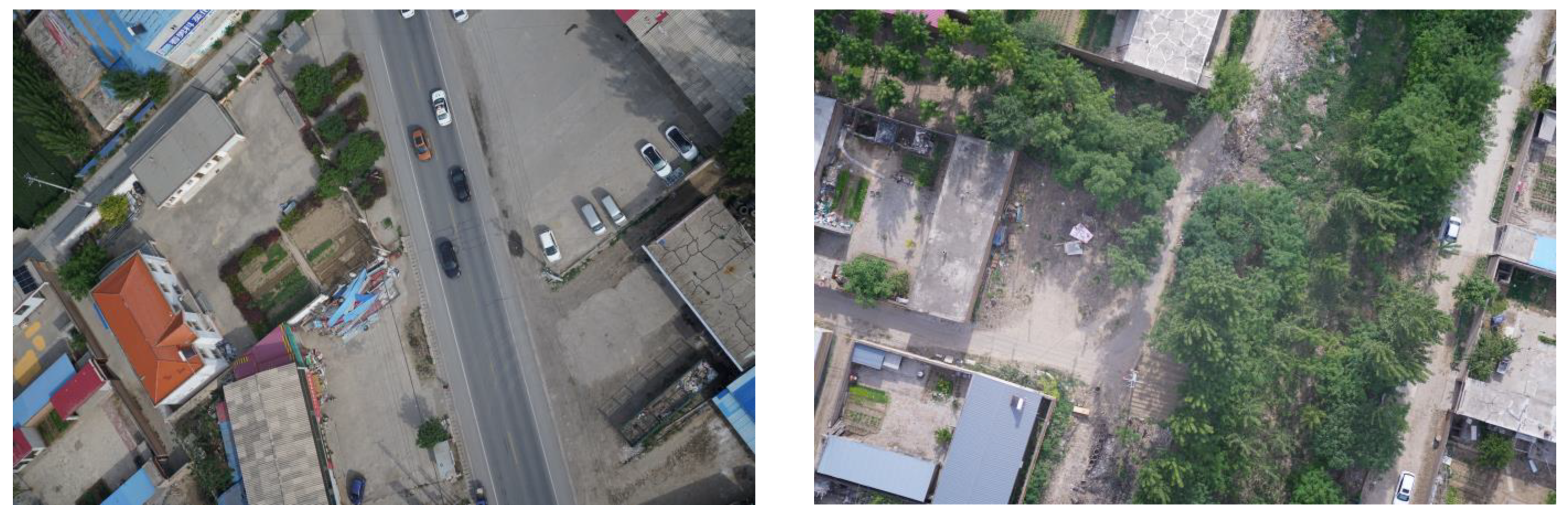

2.1. Data Collection

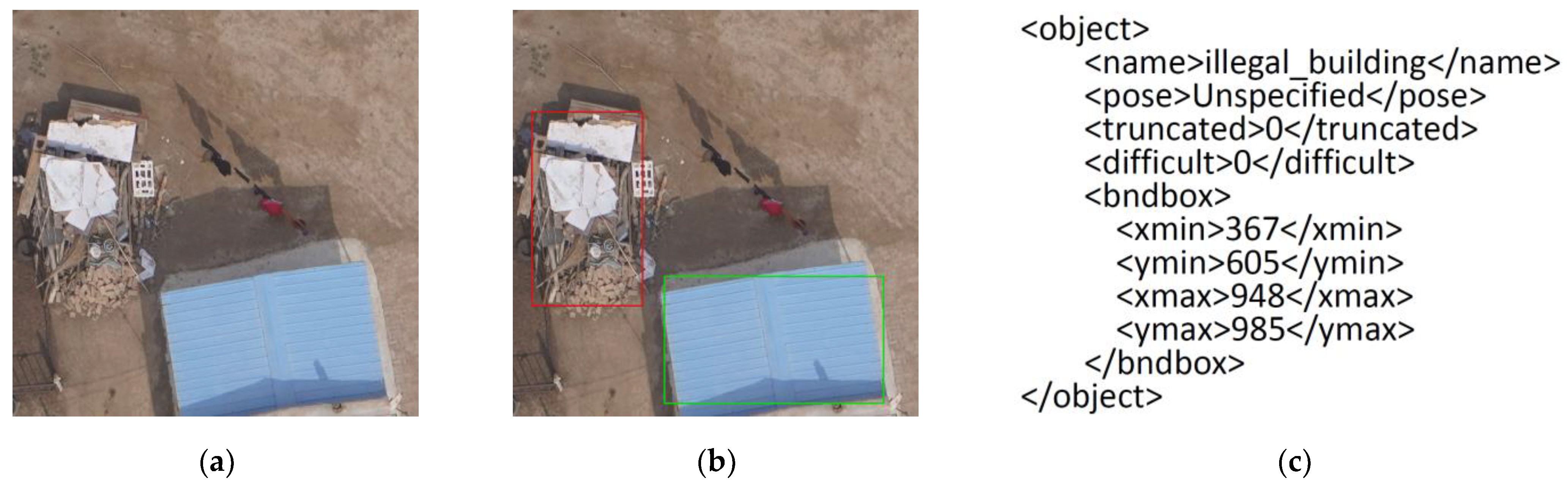

2.2. Data Processing

2.2.1. Data Preprocessing

2.2.2. Data Enhancement

3. Model Improvement

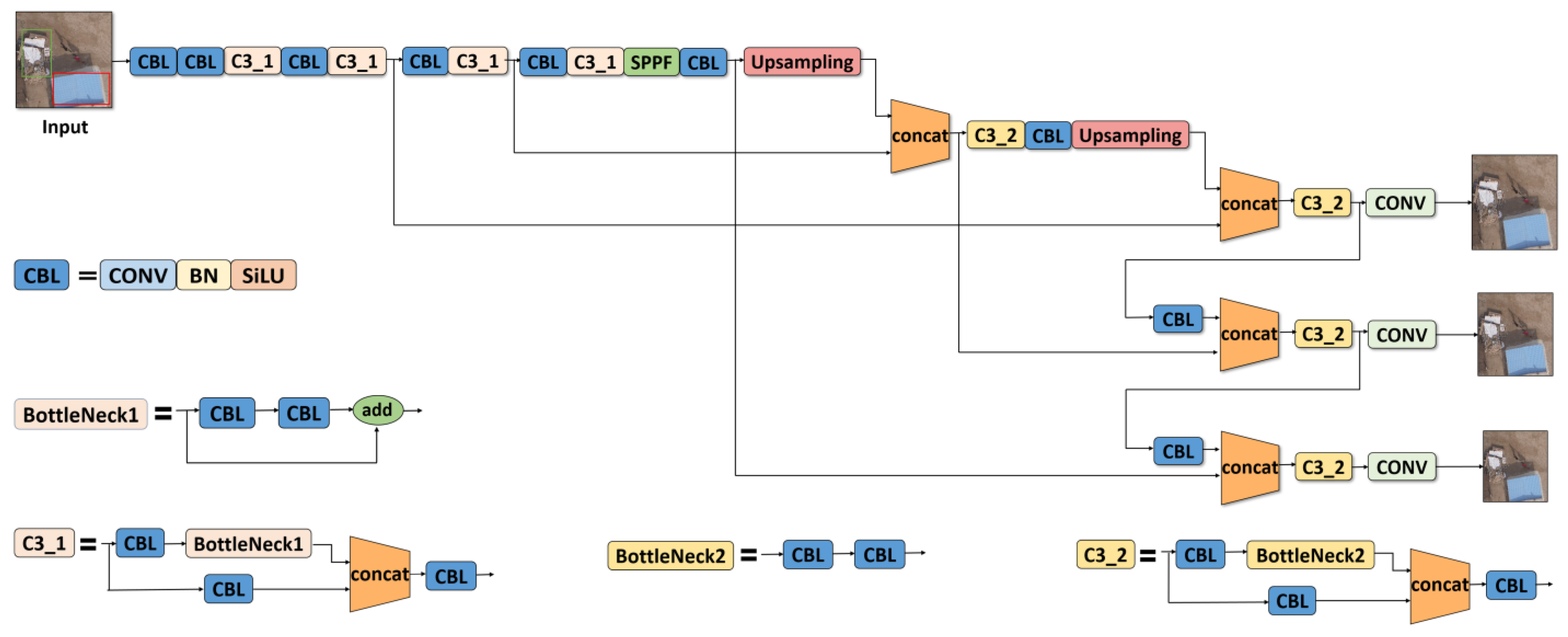

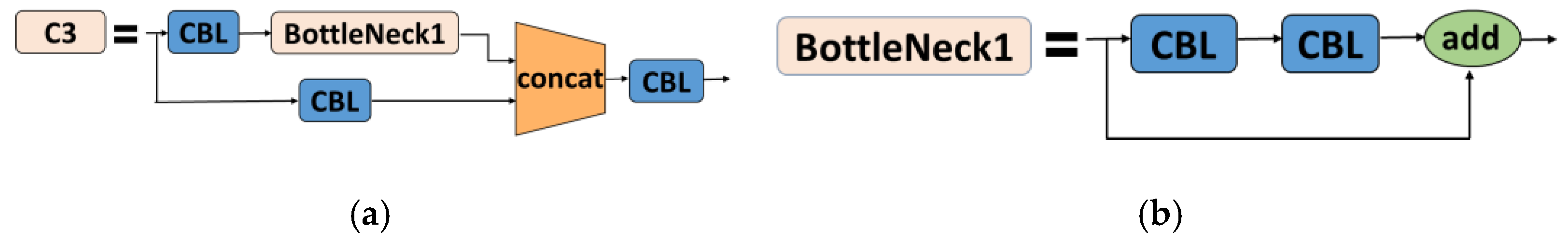

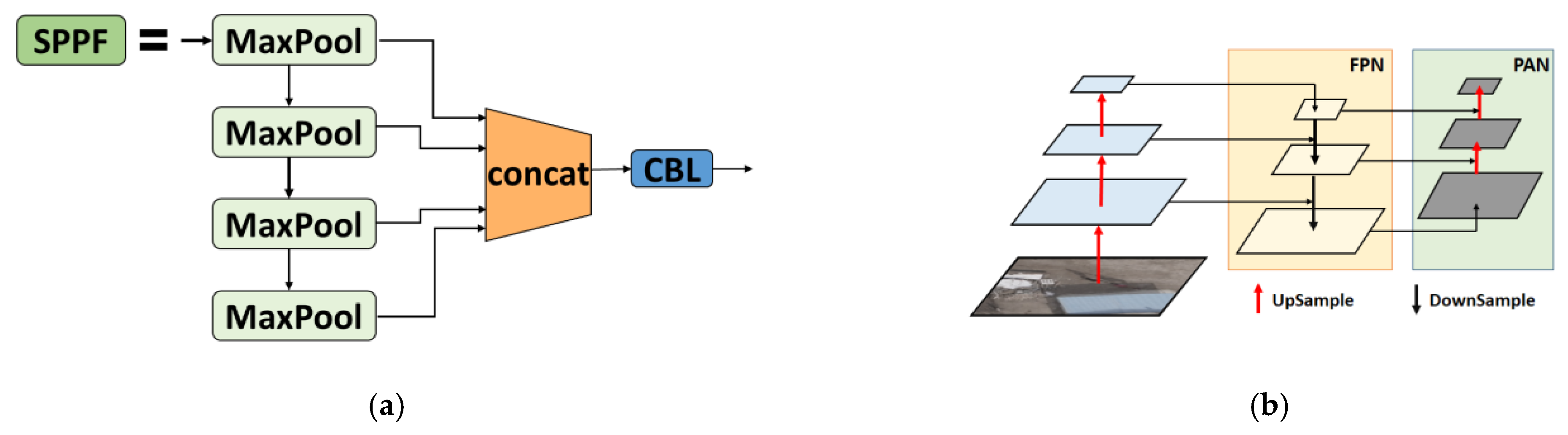

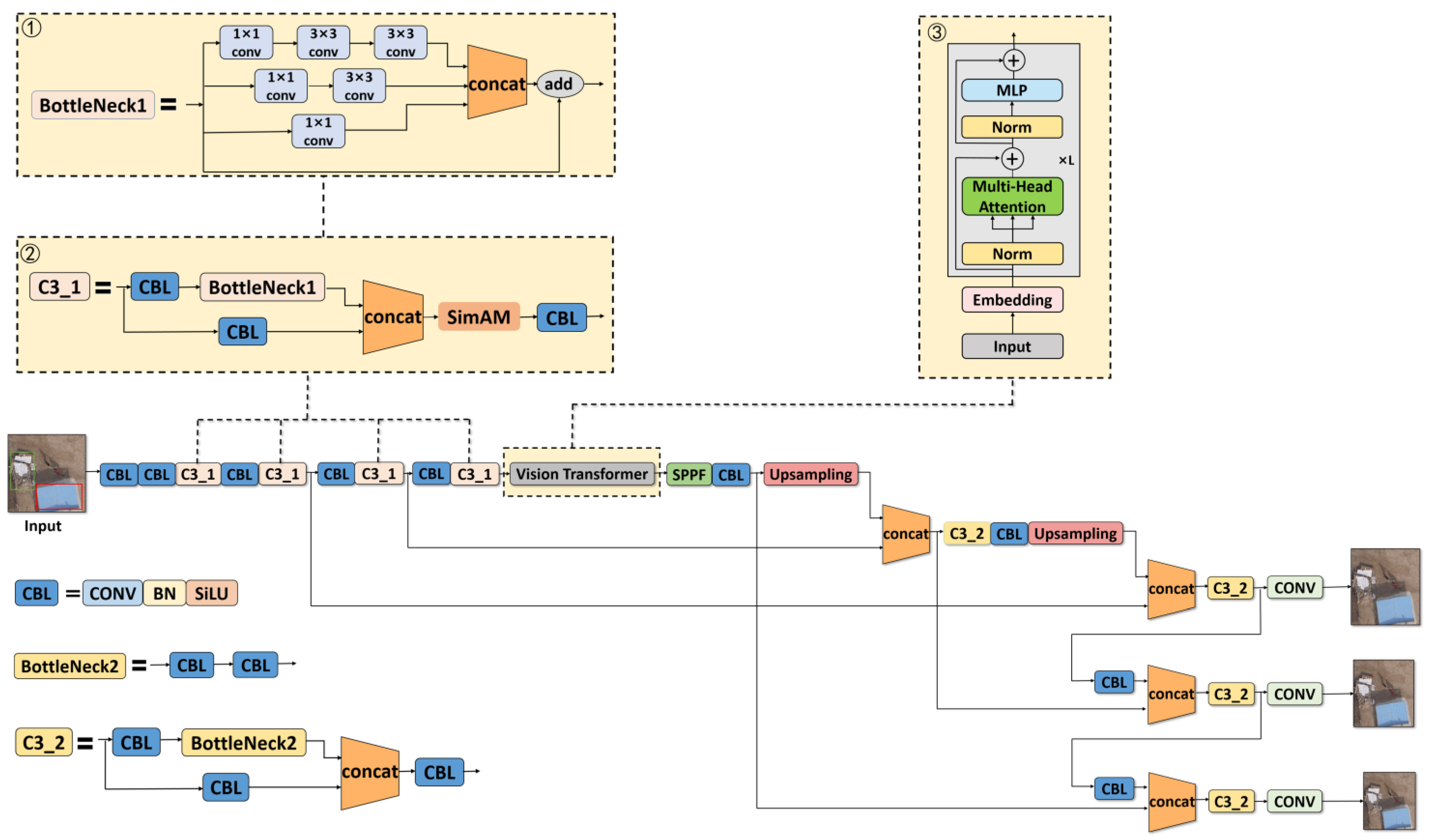

3.1. Basic YOLOv5 Network

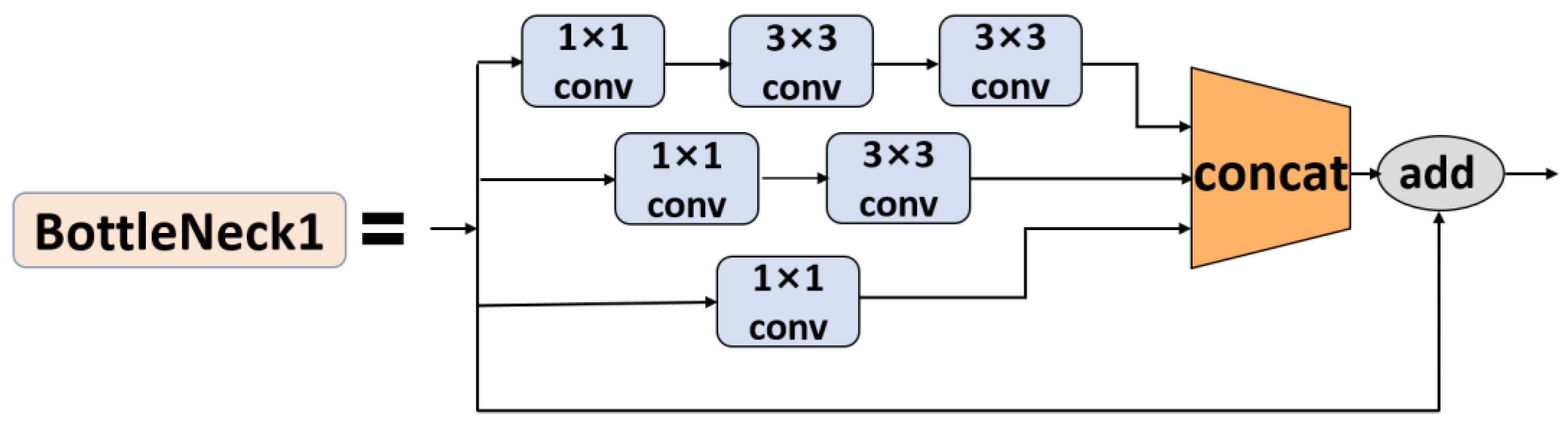
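As background for how anchor boxes are used later in this paper, the sketch below shows how the detection head of recent Ultralytics YOLOv5 releases turns one raw prediction into a box. It describes stock YOLOv5 behavior, not the authors' modifications; `decode_box` is a hypothetical helper name, and the example anchor (10, 13) is the stock YOLOv5 default for the stride-8 output, not one of the paper's anchors.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(t, cell_xy, anchor_wh, stride):
    """Turn one raw prediction (tx, ty, tw, th) into a box (x, y, w, h) in input pixels.

    cell_xy:   (cx, cy) column/row index of the grid cell
    anchor_wh: (aw, ah) anchor width/height in input pixels
    stride:    downsampling factor of the output map (8, 16 or 32 in stock YOLOv5s)
    """
    tx, ty, tw, th = t
    cx, cy = cell_xy
    aw, ah = anchor_wh
    # Centre offset spans (-0.5, 1.5) cells, so a centre may fall just outside its own cell.
    x = (2.0 * sigmoid(tx) - 0.5 + cx) * stride
    y = (2.0 * sigmoid(ty) - 0.5 + cy) * stride
    # Size is bounded to (0, 4) times the anchor, which is why well-fitted anchors matter.
    w = (2.0 * sigmoid(tw)) ** 2 * aw
    h = (2.0 * sigmoid(th)) ** 2 * ah
    return x, y, w, h


# All-zero logits give sigmoid = 0.5: centre at the cell middle, size equal to the anchor.
print(decode_box((0.0, 0.0, 0.0, 0.0), cell_xy=(10, 5), anchor_wh=(10, 13), stride=8))
# (84.0, 44.0, 10.0, 13.0)
```

Because predicted sizes can reach at most four times the anchor, anchors that match the dataset's object sizes directly limit what the head can express.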

3.2. Modification of YOLOv5s

3.2.1. Multi-Scale Feature Detection

3.2.2. SimAM Attention Mechanism

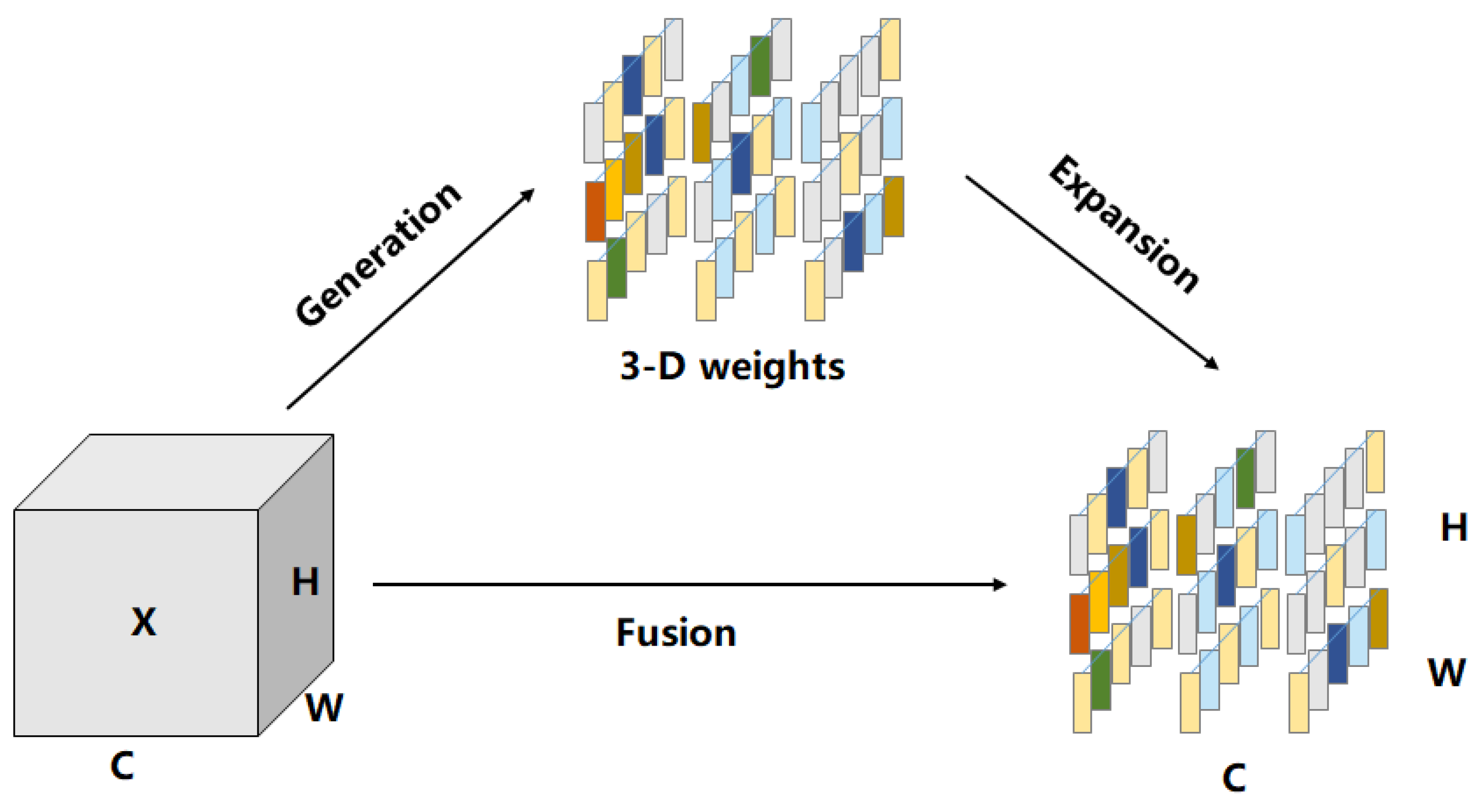

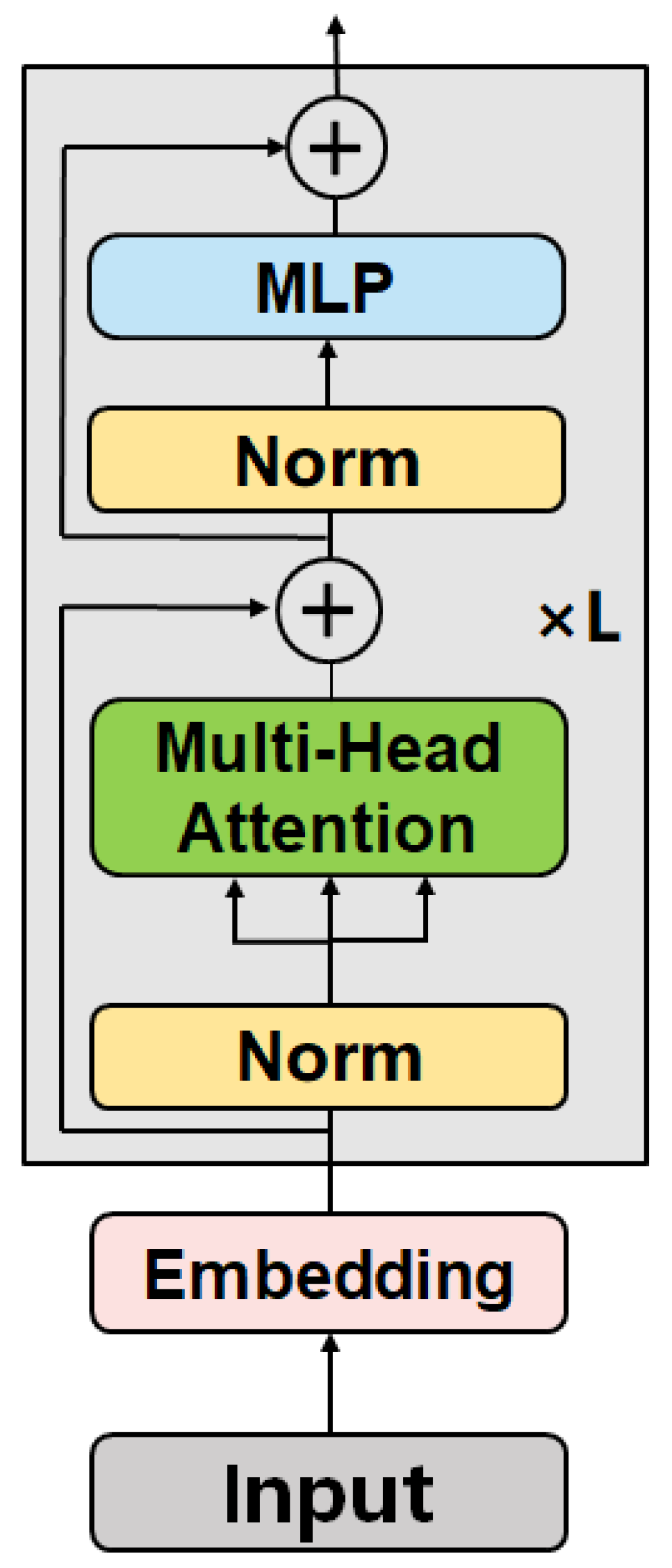
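To make contribution (2) concrete, the NumPy sketch below follows the published closed-form SimAM weighting: each neuron's weight is a sigmoid of its inverse minimal energy, which grows with the neuron's squared deviation from its channel mean, and no trainable parameters are added. Where SimAM is placed inside YOLOv5s is not recoverable from this excerpt; the regularizer λ = 1e-4 is an assumed value (the default of the public reference implementation), and the function name `simam` is illustrative.

```python
import numpy as np


def simam(x: np.ndarray, e_lambda: float = 1e-4) -> np.ndarray:
    """Parameter-free SimAM attention applied to a (B, C, H, W) feature map."""
    _, _, h, w = x.shape
    n = h * w - 1  # number of "other" neurons in each channel
    # Squared deviation of every activation from its channel's spatial mean.
    d = (x - x.mean(axis=(2, 3), keepdims=True)) ** 2
    # Per-channel variance estimated over those n neurons.
    v = d.sum(axis=(2, 3), keepdims=True) / n
    # Inverse minimal energy: larger for neurons that stand out within their channel.
    e_inv = d / (4.0 * (v + e_lambda)) + 0.5
    # Gate the original activations with a sigmoid; no learnable weights are involved.
    return x * (1.0 / (1.0 + np.exp(-e_inv)))


feat = np.ones((1, 1, 3, 3))
feat[0, 0, 1, 1] = 5.0  # one activation that differs from its neighbours
gate = simam(feat) / feat
print(gate[0, 0, 1, 1] > gate[0, 0, 0, 0])  # True: the distinctive neuron is weighted more
```

The output has the same shape as the input, so a module like this can be dropped after any convolutional block without changing the surrounding architecture.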

3.2.3. Vision Transformer Integration
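Only the heading of this subsection survives here, so where the Vision Transformer sits in YOLOv5s, how many encoder layers it has and how many heads it uses are not known from this excerpt. The NumPy sketch below only illustrates the general idea in contribution (3): a CNN feature map is flattened into tokens, one standard pre-norm Transformer encoder layer lets every position attend to every other (global context), and the result is reshaped back for the detector. Weights are random, the parameter names and `vit_block` are hypothetical, and the MLP uses ReLU instead of the GELU usual in ViT to keep it short.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalise over the channel (last) axis; learnable scale/shift omitted for brevity."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def init_params(c: int, n_tokens: int, mlp_ratio: int = 2, seed: int = 0) -> dict:
    """Random weights for illustration only (hypothetical parameter names)."""
    rng = np.random.default_rng(seed)
    s = 1.0 / np.sqrt(c)
    params = {name: rng.standard_normal((c, c)) * s for name in ("wq", "wk", "wv", "wo")}
    params["pos"] = rng.standard_normal((1, n_tokens, c)) * 0.02  # position embedding
    params["w1"] = rng.standard_normal((c, mlp_ratio * c)) * s
    params["w2"] = rng.standard_normal((mlp_ratio * c, c)) * s
    return params


def vit_block(fmap: np.ndarray, params: dict, num_heads: int = 4) -> np.ndarray:
    """One pre-norm Transformer encoder layer applied to a (B, C, H, W) feature map."""
    b, c, h, w = fmap.shape
    n, dh = h * w, c // num_heads  # C must be divisible by num_heads
    # Flatten the H x W grid into N tokens of dimension C and add position information.
    tokens = fmap.reshape(b, c, n).transpose(0, 2, 1) + params["pos"]  # (B, N, C)

    def split_heads(t: np.ndarray) -> np.ndarray:
        return t.reshape(b, n, num_heads, dh).transpose(0, 2, 1, 3)  # (B, heads, N, dh)

    # Multi-head self-attention: the (N, N) attention map links every position to every other.
    z = layer_norm(tokens)
    q, k, v = (split_heads(z @ params[key]) for key in ("wq", "wk", "wv"))
    attn = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(dh))  # (B, heads, N, N)
    mixed = (attn @ v).transpose(0, 2, 1, 3).reshape(b, n, c)
    tokens = tokens + mixed @ params["wo"]  # residual connection

    # Position-wise MLP with a second residual connection.
    z = layer_norm(tokens)
    tokens = tokens + np.maximum(z @ params["w1"], 0.0) @ params["w2"]

    # Restore the (B, C, H, W) layout expected by the rest of a CNN detector.
    return tokens.transpose(0, 2, 1).reshape(b, c, h, w)


fmap = np.random.default_rng(1).standard_normal((1, 16, 5, 5))
out = vit_block(fmap, init_params(c=16, n_tokens=25))
print(out.shape)  # (1, 16, 5, 5)
```

Because the attention map is N × N with N = H × W, its cost grows quadratically with feature-map resolution, which is why such blocks are commonly placed on the lowest-resolution map; whether the authors did so is not stated here.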

3.2.4. The K-Means++ Custom Anchor Box

4. Experiment and Analysis

4.1. Experiment Dataset

4.2. Model Training

4.3. Evaluation Indicators
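Only the heading of this subsection survives here. The result tables report Precision, Recall, F1 and mAP, and the sketch below uses the standard definitions of these indicators; the IoU threshold that makes a detection a true positive and the AP interpolation scheme are assumptions, since neither is stated in this excerpt. The F1 definition F1 = 2PR/(P + R) does reproduce every F1 value in the tables, for example 2 × 93.4 × 92.7 / (93.4 + 92.7) ≈ 93.0 for YOLOv5s-ViT. All function names are illustrative.

```python
def precision_recall(tp: int, fp: int, fn: int):
    """P = TP / (TP + FP), R = TP / (TP + FN)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether that detection matched a
    ground-truth box (e.g. at IoU >= 0.5); n_gt: number of ground-truth boxes of the class.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [0.0], [0.0]
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Area under the stepped curve: add a rectangle wherever recall increases.
    return sum((recalls[j] - recalls[j - 1]) * precisions[j] for j in range(1, len(recalls)))


def mean_average_precision(aps):
    """mAP: the mean of the per-class APs (two classes in this dataset)."""
    return sum(aps) / len(aps)


# F1 recomputed from the YOLOv5s-ViT row (P = 93.4 %, R = 92.7 %).
print(round(100 * f1_score(0.934, 0.927), 1))  # 93.0
```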

4.4. Experiment Results and Analysis

4.4.1. Comparison of Different Detection Algorithms

4.4.2. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- National Bureau of Statistics of China. 2021 China Statistical Yearbook; China Statistics Publishing Society: Beijing, China, 2021.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- Sun, W.; Dai, L.; Zhang, X.; Chang, P.; He, X. RSOD: Real-time small object detection algorithm in UAV-based traffic monitoring. Appl. Intell. 2022, 52, 8448–8463.

- Byun, S.; Shin, I.-K.; Moon, J.; Kang, J.; Choi, S.-I. Road traffic monitoring from UAV images using deep learning networks. Remote Sens. 2021, 13, 4027.

- Li, B.; Cao, R.; Wang, Z.; Song, R.-F.; Peng, Z.-R.; Xiu, G.; Fu, Q. Use of multi-rotor unmanned aerial vehicles for fine-grained roadside air pollution monitoring. Transp. Res. Rec. 2019, 2673, 169–180.

- Bolla, G.M.; Casagrande, M.; Comazzetto, A.; Dal Moro, R.; Destro, M.; Fantin, E.; Colombatti, G.; Aboudan, A.; Lorenzini, E.C. ARIA: Air pollutants monitoring using UAVs. In Proceedings of the 2018 5th IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Rome, Italy, 20–22 June 2018; pp. 225–229.

- Wong, S.Y.; Choe, C.W.C.; Goh, H.H.; Low, Y.W.; Cheah, D.Y.S.; Pang, S.C. Power transmission line fault detection and diagnosis based on artificial intelligence approach and its development in UAV: A review. Arab. J. Sci. Eng. 2021, 46, 9305–9331.

- Chen, W.; Li, Y.; Zhao, Z. Transmission Line Vibration Damper Detection Using Deep Neural Networks Based on UAV Remote Sensing Image. Sensors 2022, 22, 1892.

- Shi, L.; Zhang, F.; Xia, J.; Xie, J.; Zhang, Z.; Du, Z.; Liu, R. Identifying Damaged Buildings in Aerial Images Using the Object Detection Method. Remote Sens. 2021, 13, 4213.

- Zhang, R.; Li, H.; Duan, K.; You, S.; Liu, K.; Wang, F.; Hu, Y. Automatic detection of earthquake-damaged buildings by integrating UAV oblique photography and infrared thermal imaging. Remote Sens. 2020, 12, 2621.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Wu, X.; Sahoo, D.; Hoi, S.C.H. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64.

- Wang, W.; Lai, Q.; Fu, H.; Shen, J.; Ling, H.; Yang, R. Salient object detection in the deep learning era: An in-depth survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3239–3259.

- Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A deep learning based forest fire detection approach using UAV and YOLOv3. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019; pp. 1–5.

- Ammour, N.; Alhichri, H.; Bazi, Y.; Ben Jdira, B.; Alajlan, N.; Zuair, M. Deep learning approach for car detection in UAV imagery. Remote Sens. 2017, 9, 312.

- Li, F.; Xin, J.; Chen, T.; Xin, L.; Wei, Z.; Li, Y.; Zhang, Y.; Jin, H.; Tu, Y.; Zhou, X.; et al. An automatic detection method of bird’s nest on transmission line tower based on faster_RCNN. IEEE Access 2020, 8, 164214–164221.

- Ma, H.; Liu, Y.; Ren, Y.; Yu, J. Detection of collapsed buildings in post-earthquake remote sensing images based on the improved YOLOv3. Remote Sens. 2019, 12, 44.

- Yu, J.; Luo, S. Detection method of illegal building based on YOLOv5. Comput. Eng. Appl. 2021, 57, 236–244.

- Jiang, H.; Hu, X.; Li, K.; Zhang, J.; Gong, J.; Zhang, M. PGA-SiamNet: Pyramid feature-based attention-guided Siamese network for remote sensing orthoimagery building change detection. Remote Sens. 2020, 12, 484.

- Peng, B.; Ren, D.; Zheng, C.; Lu, A. TRDet: Two-Stage Rotated Detection of Rural Buildings in Remote Sensing Images. Remote Sens. 2022, 14, 522.

| Camera Model | Effective Pixels | Maximum Resolution | Color Space |
|---|---|---|---|
| Sony A7R IV | 61 million | 9504 × 6336 | sRGB |

| Model | Precision/% | Recall/% | F1/% | mAP/% | Total Parameters | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv5n | 92.6 | 78.8 | 85.1 | 87.4 | 1,761,871 | 4.2 |
| YOLOv5s | 91.2 | 81.2 | 85.9 | 88.5 | 7,025,023 | 15.9 |
| YOLOv5m | 95.2 | 88.1 | 91.5 | 93.2 | 20,856,975 | 48.0 |
| YOLOv5l | 96.2 | 91.7 | 93.9 | 95.2 | 46,113,663 | 107.8 |
| YOLOv5x | 97.6 | 91.4 | 94.4 | 95.5 | 86,224,543 | 204.2 |

| Feature Map Scale | Anchor Box 1 | Anchor Box 2 | Anchor Box 3 |
|---|---|---|---|
| small | (88,94) | (138,136) | (174,182) |
| medium | (245,136) | (156,303) | (250,232) |
| large | (305,332) | (424,404) | (610,643) |
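The anchor table above lists nine anchors in ascending order of area, three per feature-map scale. The plain-Python sketch below shows one common way to derive such anchors with K-Means++ from labeled box sizes. Two choices in it are assumptions rather than details taken from the paper: the distance 1 - IoU between (w, h) pairs aligned at a common centre (standard practice for YOLO anchors), and centroid updates by the mean. All function names are hypothetical.

```python
import random


def wh_iou(a, b):
    """IoU of two boxes given only as (w, h), assuming they share a centre."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def dist(box, centre):
    return 1.0 - wh_iou(box, centre)


def kmeanspp_seeds(boxes, k, rng):
    """K-Means++ seeding: spread the initial centres out in 1 - IoU distance."""
    centres = [rng.choice(boxes)]
    while len(centres) < k:
        d2 = [min(dist(b, c) for c in centres) ** 2 for b in boxes]
        if not any(d2):
            raise ValueError("need at least k distinct box sizes")
        # Next centre drawn with probability proportional to squared distance.
        centres.append(rng.choices(boxes, weights=d2, k=1)[0])
    return centres


def kmeanspp_anchors(boxes, k=9, iters=100, seed=0):
    rng = random.Random(seed)
    centres = kmeanspp_seeds(boxes, k, rng)
    for _ in range(iters):
        # Assignment step: each box joins the centre with the highest IoU.
        clusters = [[] for _ in range(k)]
        for b in boxes:
            clusters[min(range(k), key=lambda i: dist(b, centres[i]))].append(b)
        # Update step: mean (w, h) of each cluster; keep the old centre if a cluster is empty.
        updated = [
            (sum(w for w, _ in cl) / len(cl), sum(h for _, h in cl) / len(cl)) if cl else centres[i]
            for i, cl in enumerate(clusters)
        ]
        if updated == centres:
            break
        centres = updated
    # Sort by area and hand out three anchors per detection scale, smallest first.
    centres = sorted(centres, key=lambda c: c[0] * c[1])
    return [centres[i:i + 3] for i in range(0, k, 3)]


# Hypothetical box sizes; in practice these come from the training labels.
gen = random.Random(42)
boxes = [(gen.uniform(60, 650), gen.uniform(60, 650)) for _ in range(300)]
for scale, group in zip(("small", "medium", "large"), kmeanspp_anchors(boxes)):
    print(scale, [(round(w), round(h)) for w, h in group])
```

Compared with plain K-means, the K-Means++ seeding makes it unlikely that two initial centres start inside the same size group, which tends to give more stable anchors across runs.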

| Model | Precision/% | Recall/% | F1/% | mAP/% | Total Parameters | GFLOPs | Frames Per Second |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 91.2 | 81.2 | 85.9 | 88.5 | 7,025,023 | 15.9 | 46.0 |
| YOLOv3 | 69.3 | 8.7 | 15.5 | 40.5 | 61,523,119 | 198.5 | 22.4 |
| YOLOv4 | 77.5 | 31.2 | 44.5 | 55.1 | 63,943,071 | 181.0 | 16.7 |
| Faster R-CNN | 66.5 | 90.4 | 76.6 | 87.2 | 136,709,509 | 402.5 | 21.0 |
| YOLOv5x | 97.6 | 91.4 | 94.4 | 95.5 | 86,224,543 | 204.2 | 16.3 |
| YOLOv5_Building [19] | 92.9 | 29.1 | 44.3 | 73.7 | 5,286,058 | 38.4 | 40.0 |
| YOLOv5s-ViT | 93.4 | 92.7 | 93.0 | 95.0 | 27,234,559 | 34.2 | 30.1 |

| Model | Detection Result | |

|---|---|---|

| Detection of Random Construction | Detection of Random Storage | |

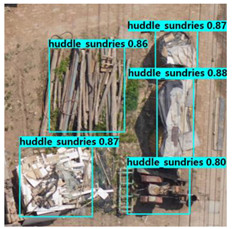

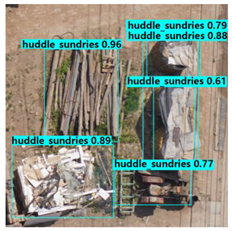

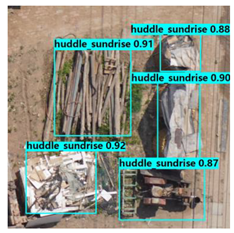

| YOLOv5s |  |  |

| YOLOv3 |  |  |

| YOLOv4 |  |  |

| Faster R-CNN |  |  |

| YOLOv5_Building [19] |  |  |

| YOLOv5s-ViT |  |  |

| Scheme | Add K-Means++ Algorithm | Add Modified Bottleneck Structure | Add SimAM | Add Vision Transformer Module |
|---|---|---|---|---|
| 0 |  |  |  |  |
| 1 | √ |  |  |  |
| 2 | √ | √ |  |  |
| 3 | √ | √ | √ |  |
| 4 | √ | √ | √ | √ |

| Scheme | Precision/% | Recall/% | F1/% | mAP/% |
|---|---|---|---|---|
| Scheme 0 | 91.2 | 81.2 | 85.9 | 88.5 |
| Scheme 1 | 94.1 | 87.2 | 90.5 | 91.8 |
| Scheme 2 | 94.5 | 88.0 | 91.1 | 92.9 |
| Scheme 3 | 93.2 | 88.8 | 90.9 | 93.2 |
| Scheme 4 | 93.4 | 92.7 | 93.0 | 95.0 |
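Reading the ablation results step by step makes each module's contribution explicit. The short script below takes the numbers from the ablation table above and the module order from the scheme table; the helper name `step_gains` is hypothetical. It shows, for example, that adding SimAM (scheme 2 to scheme 3) lowers precision by 1.3 points while raising recall by 0.8 and mAP by 0.3, and that the Vision Transformer gives the second-largest recall gain (+3.9) after the K-Means++ anchors (+6.0).

```python
# Ablation results (Precision, Recall, F1, mAP in %), copied from the table above.
ablation = {
    0: (91.2, 81.2, 85.9, 88.5),
    1: (94.1, 87.2, 90.5, 91.8),
    2: (94.5, 88.0, 91.1, 92.9),
    3: (93.2, 88.8, 90.9, 93.2),
    4: (93.4, 92.7, 93.0, 95.0),
}
added = ["K-Means++ anchors", "modified Bottleneck", "SimAM", "Vision Transformer"]
metrics = ("P", "R", "F1", "mAP")


def step_gains(results):
    """Change in each metric between consecutive schemes, in percentage points."""
    rows = [results[s] for s in sorted(results)]
    return [tuple(round(c - p, 1) for p, c in zip(prev, cur)) for prev, cur in zip(rows, rows[1:])]


for module, gains in zip(added, step_gains(ablation)):
    print(f"+ {module:<20}", "  ".join(f"{m} {g:+.1f}" for m, g in zip(metrics, gains)))
```

Summing the mAP column of the gains recovers the overall improvement from scheme 0 to scheme 4, 95.0 - 88.5 = 6.5 points.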

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, C.; Sun, W.; Wu, H.; Zhao, C.; Teng, G.; Yang, Y.; Du, P. A Low-Altitude Remote Sensing Inspection Method on Rural Living Environments Based on a Modified YOLOv5s-ViT. Remote Sens. 2022, 14, 4784. https://doi.org/10.3390/rs14194784
