Automatic Extraction of Marine Aquaculture Zones from Optical Satellite Images by R3Det with Piecewise Linear Stretching

Abstract

1. Introduction

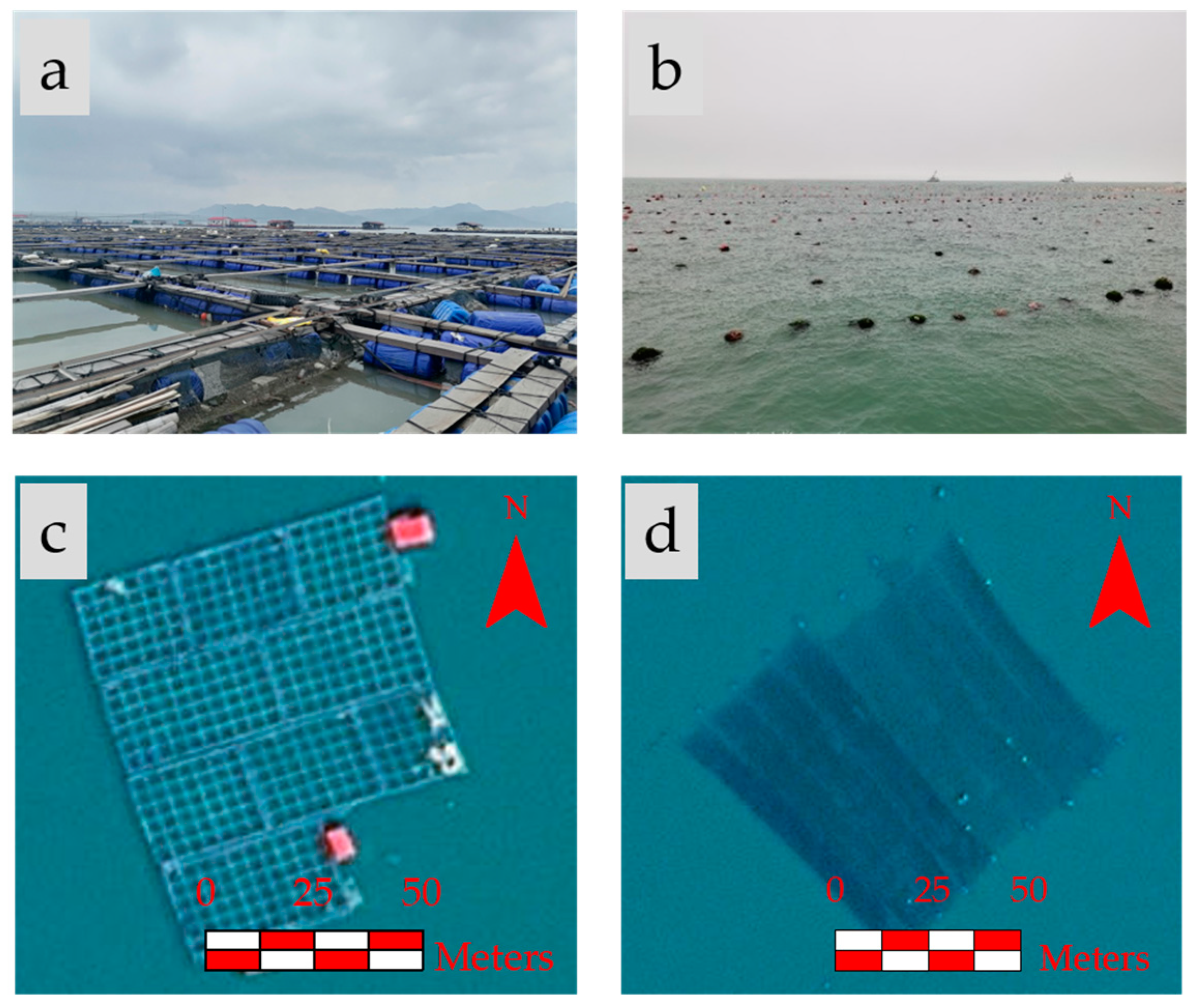

2. Study Area and Data

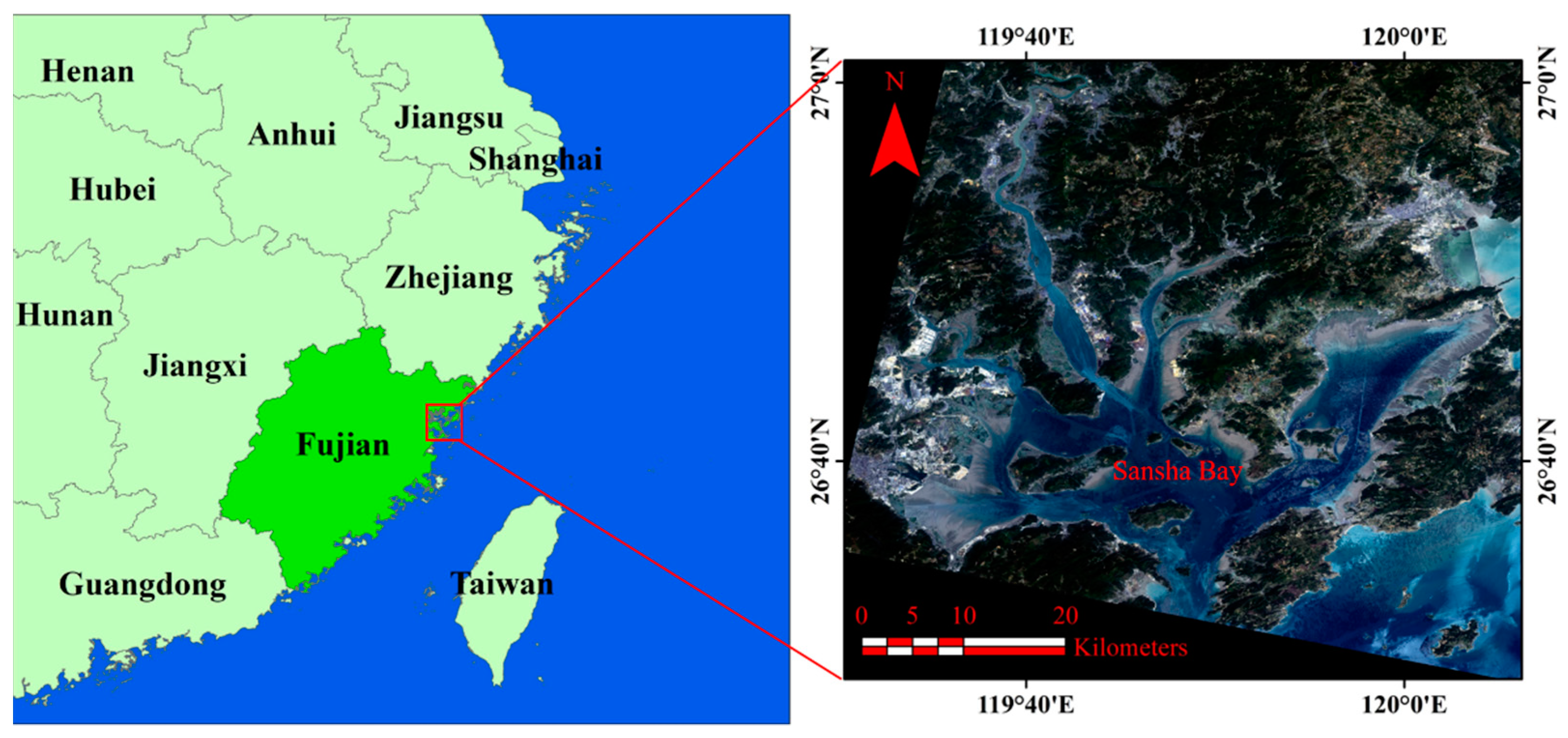

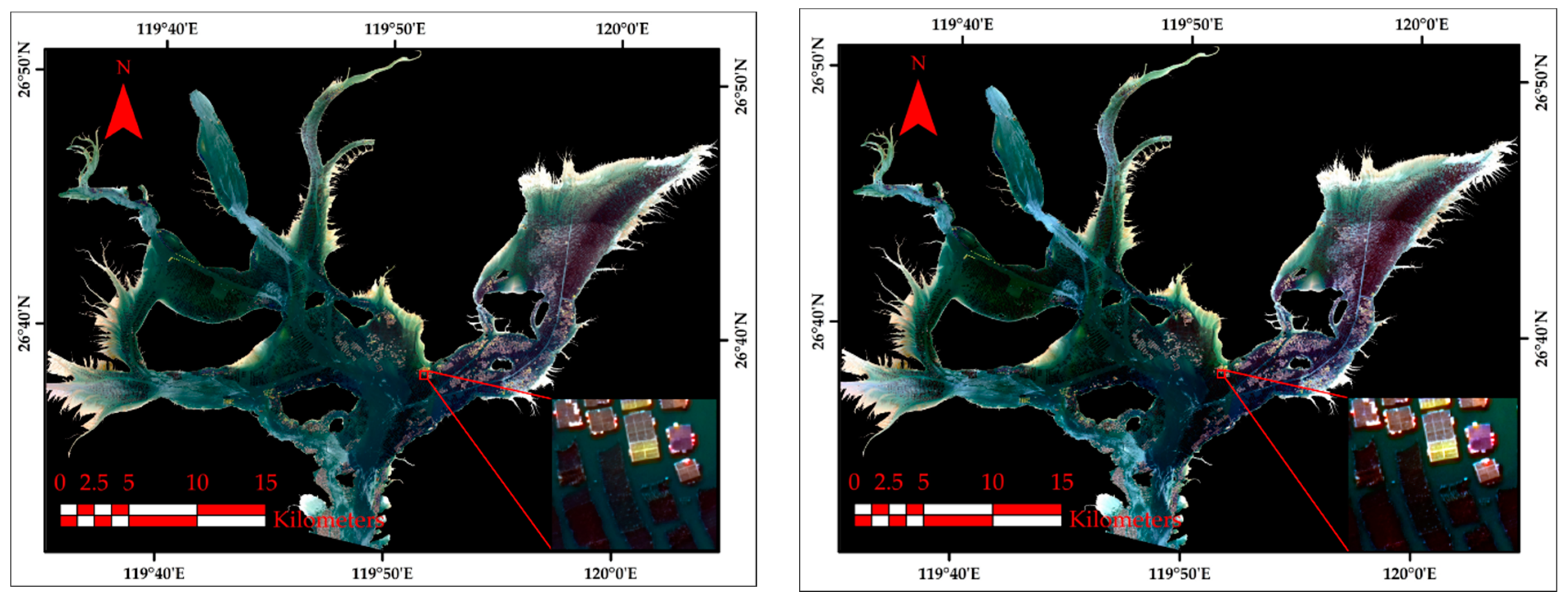

2.1. Study Area

2.2. Data and Preprocessing

3. Research Methods

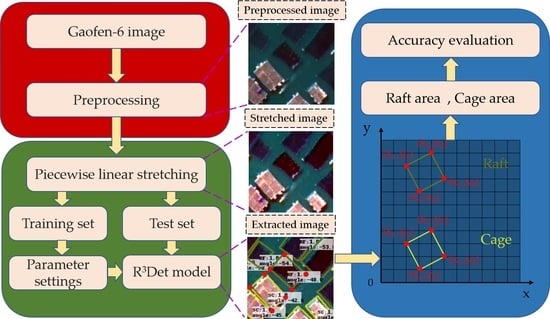

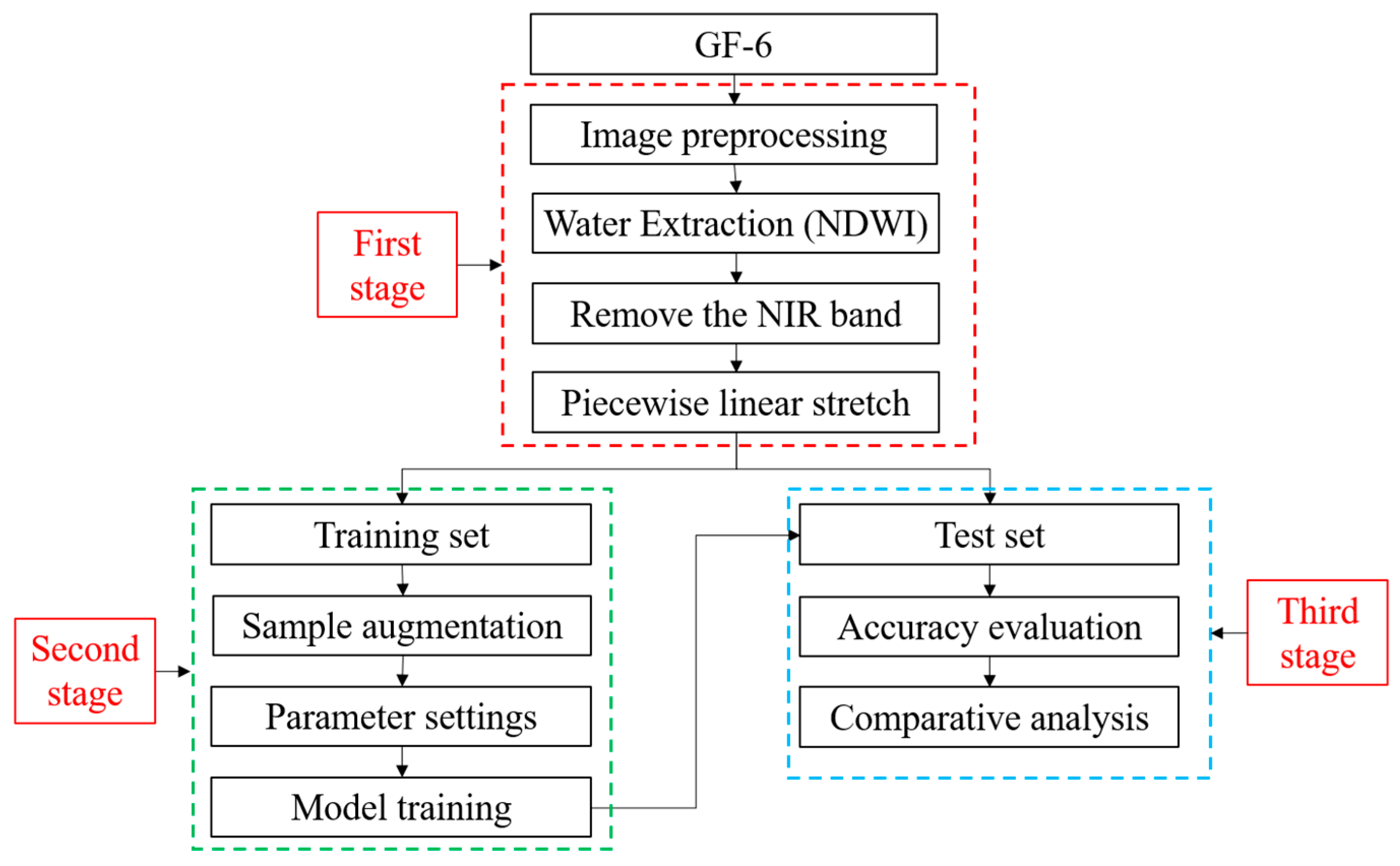

3.1. Extraction Process from Satellite Images

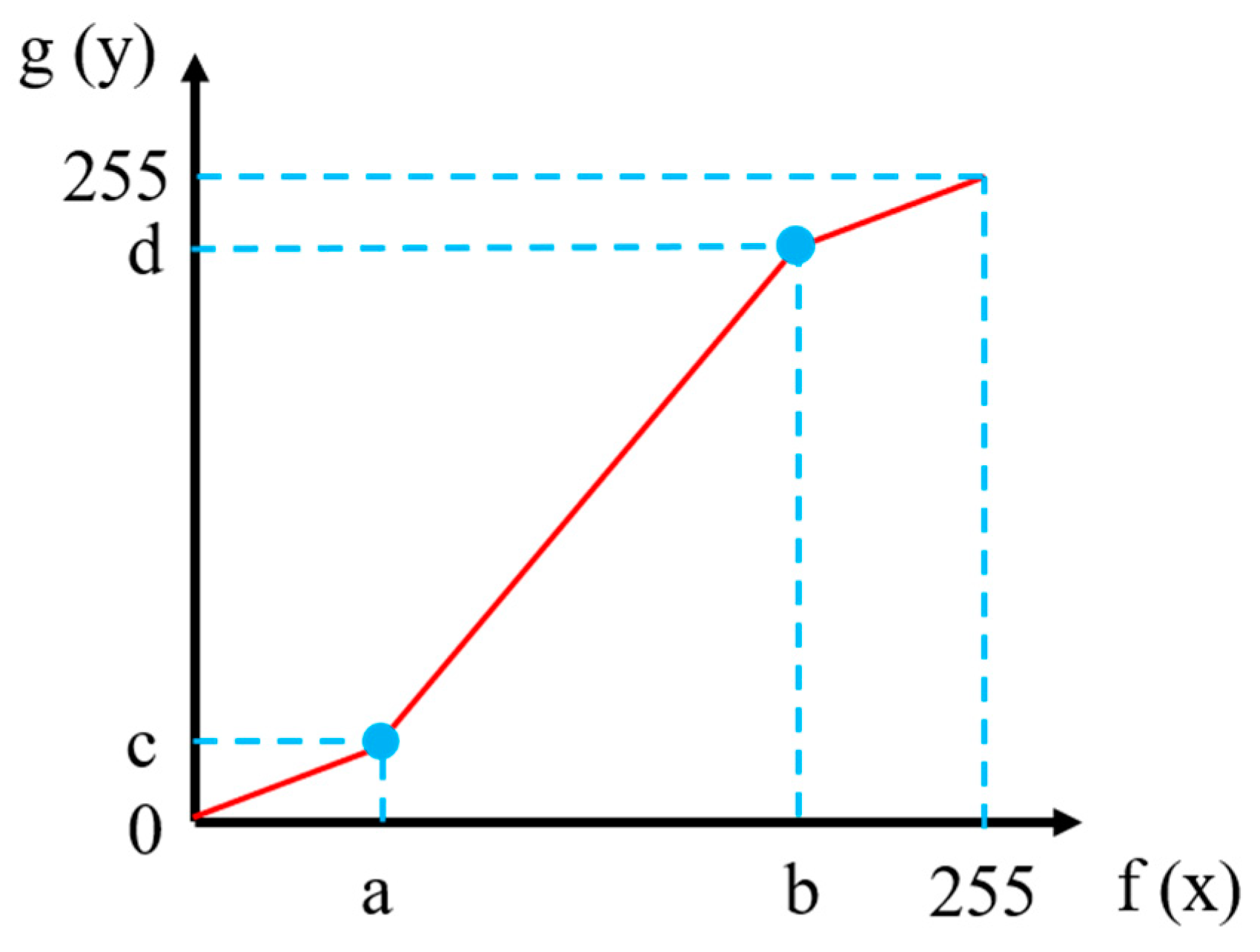

3.2. Piecewise Linear Stretching Based on Histogram

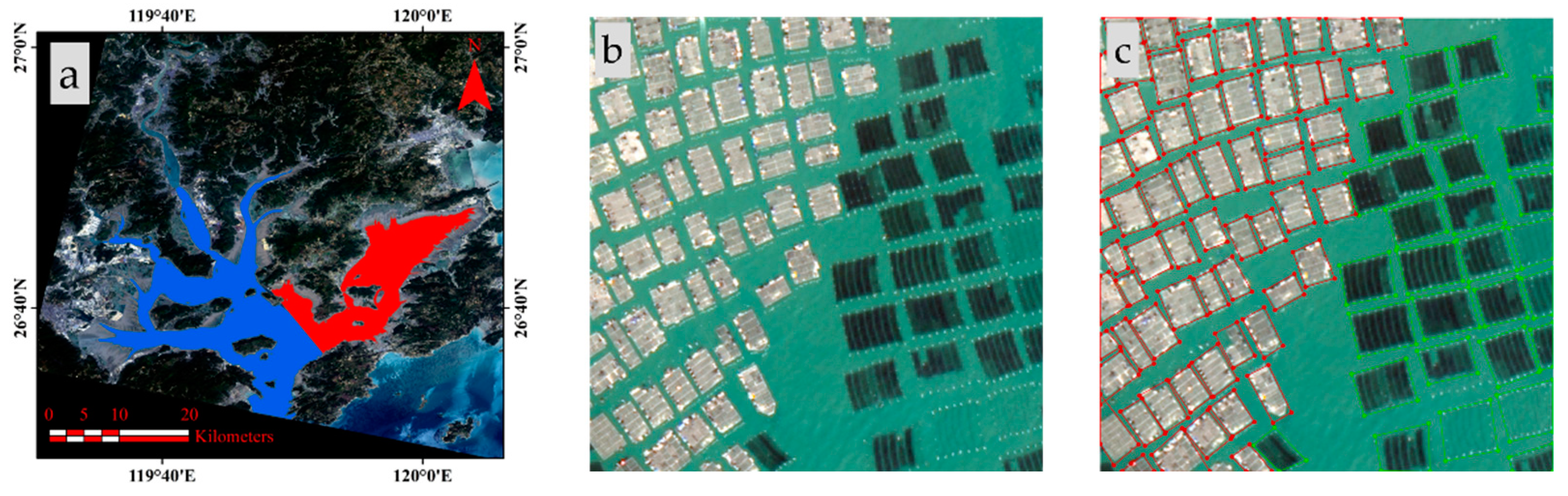
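Piecewise linear stretching remaps gray levels through a chain of linear segments, so a narrow input range can be expanded while other ranges are compressed. The following is a minimal sketch in plain Python, assuming an 8-bit band stored as nested lists. The function names and breakpoints are hypothetical and are not the values used in this study; in a histogram-based workflow they would be chosen by inspecting the band histogram.

```python
def piecewise_linear_stretch(value, breakpoints):
    """Map one gray level through linear segments.

    breakpoints: (input_level, output_level) pairs with strictly
    increasing input levels (hypothetical values, see below).
    """
    # Clamp values that fall outside the breakpoint range.
    if value <= breakpoints[0][0]:
        return breakpoints[0][1]
    if value >= breakpoints[-1][0]:
        return breakpoints[-1][1]
    # Find the segment containing the value and interpolate linearly.
    for (x0, y0), (x1, y1) in zip(breakpoints, breakpoints[1:]):
        if x0 <= value <= x1:
            return y0 + (value - x0) * (y1 - y0) / (x1 - x0)


def stretch_band(band, breakpoints):
    """Apply the stretch to every pixel and round to integer gray levels."""
    return [[round(piecewise_linear_stretch(v, breakpoints)) for v in row]
            for row in band]


# Hypothetical breakpoints: expand the 20-60 input range, compress the rest.
BREAKPOINTS = [(0, 0), (20, 10), (60, 200), (255, 255)]

band = [[0, 20, 40], [60, 157, 255]]
stretched = stretch_band(band, BREAKPOINTS)
print(stretched)  # [[0, 10, 105], [200, 227, 255]]
```

With these illustrative breakpoints, input levels between 20 and 60 are spread over output levels 10 to 200, which is the kind of contrast gain the stretch is meant to provide for dark targets on a dark sea surface.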

3.3. Dataset

3.4. R3Det

3.5. Confusion Matrix

4. Experimental Results and Analysis

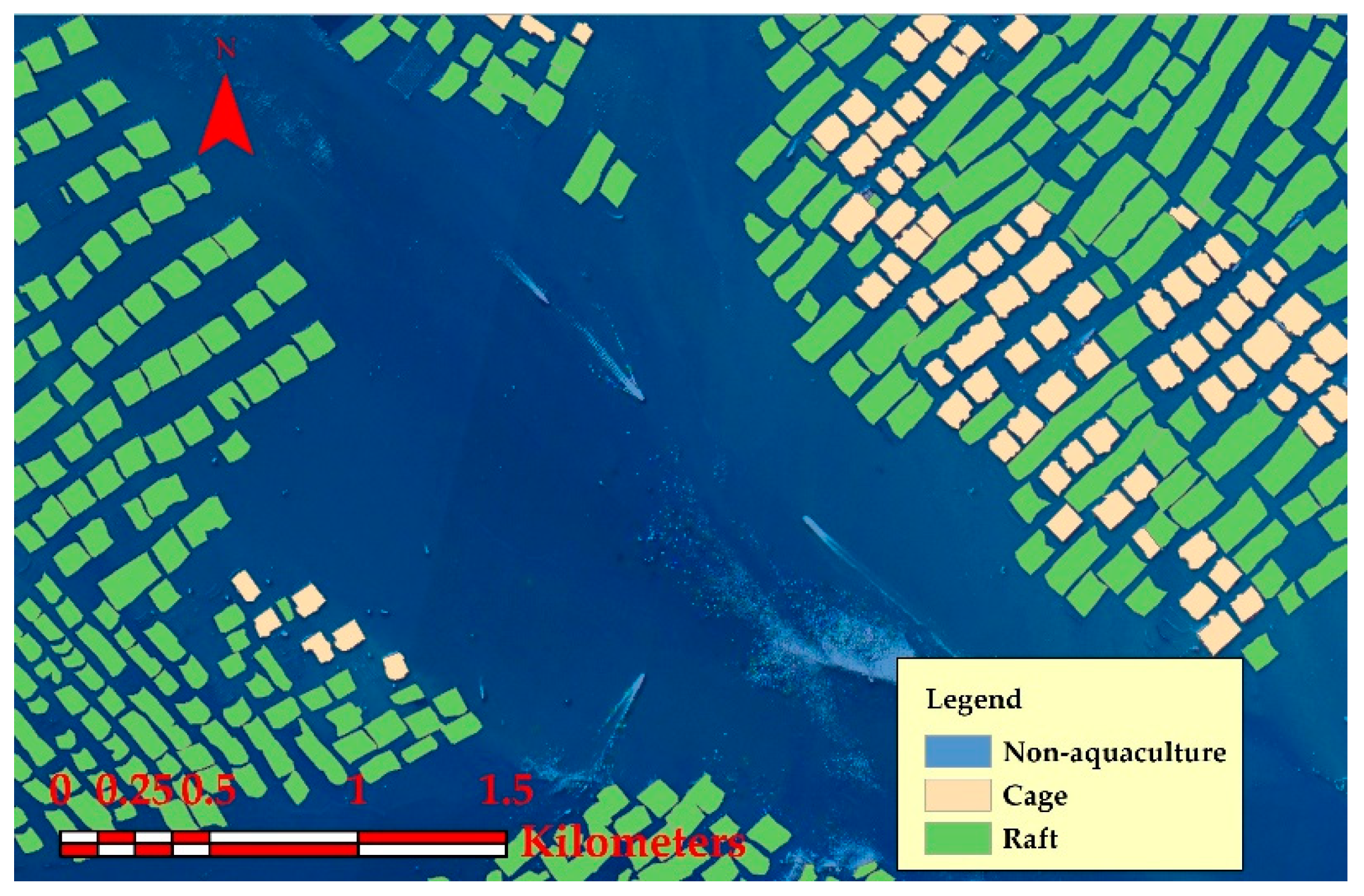

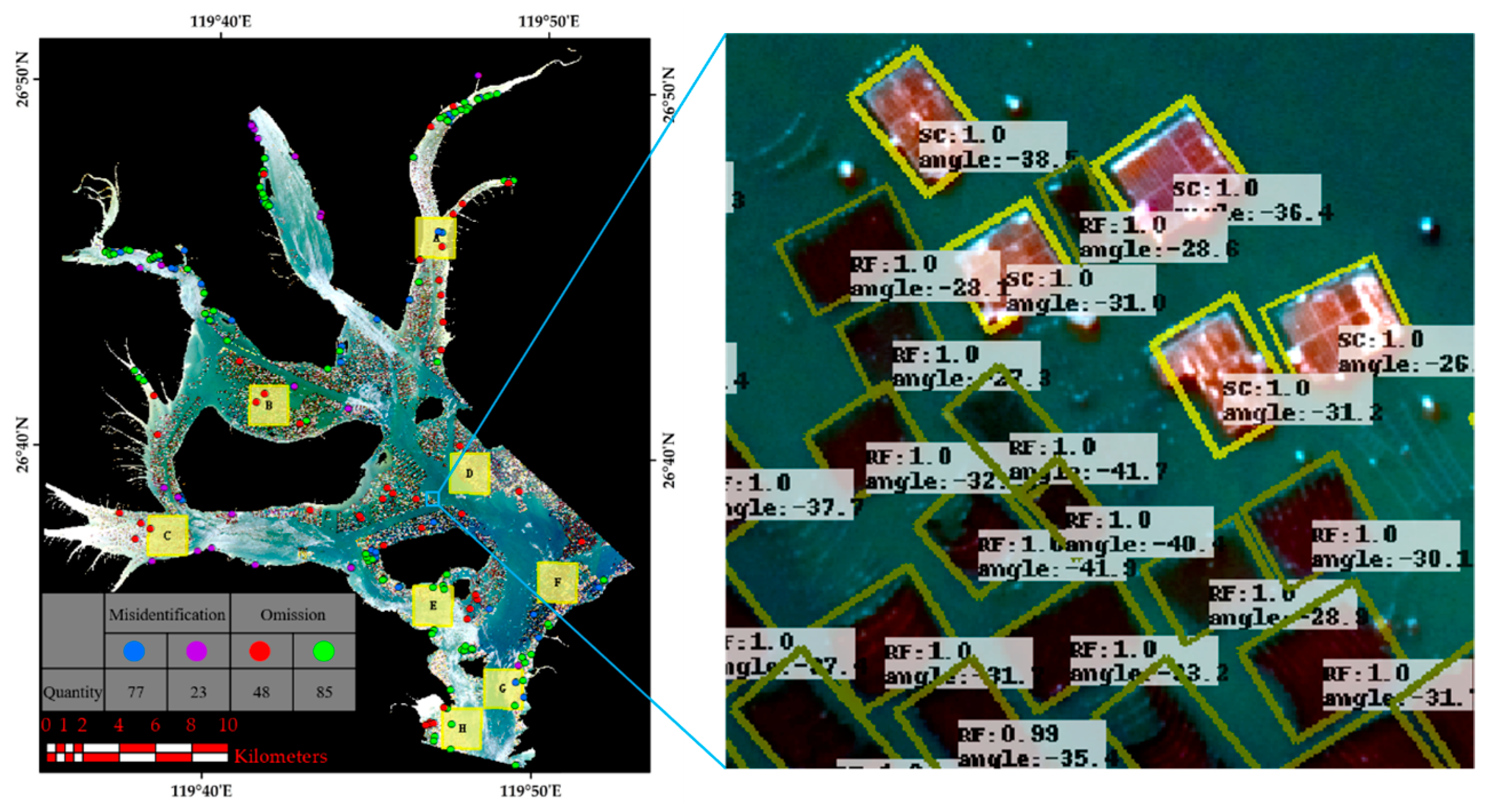

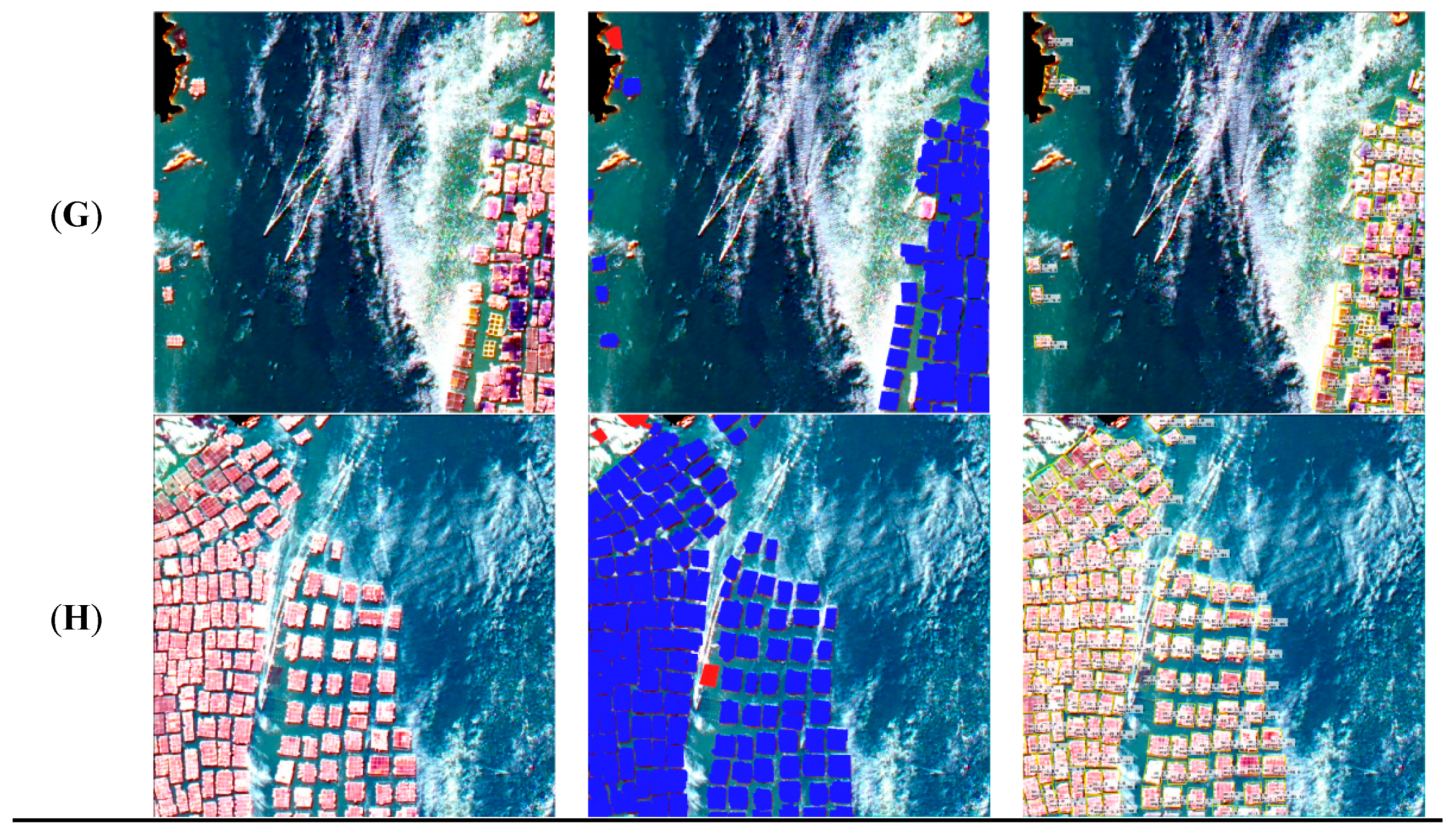

4.1. Extraction Results

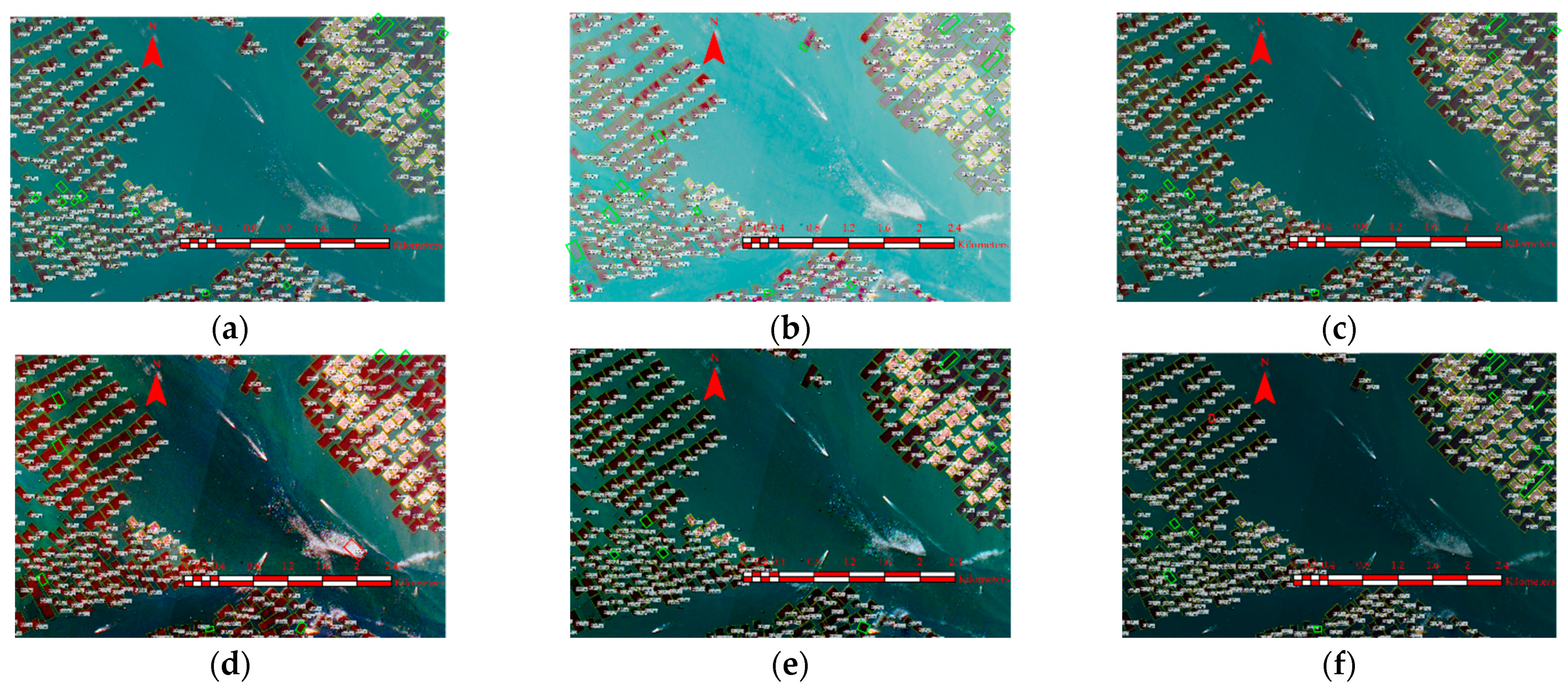

4.2. Comparisons of Accuracy of Different Stretching Conditions

4.3. Comparisons of Different Models

5. Discussion

5.1. Importance of Piecewise Linear Stretching for Extraction of Aquaculture Zones

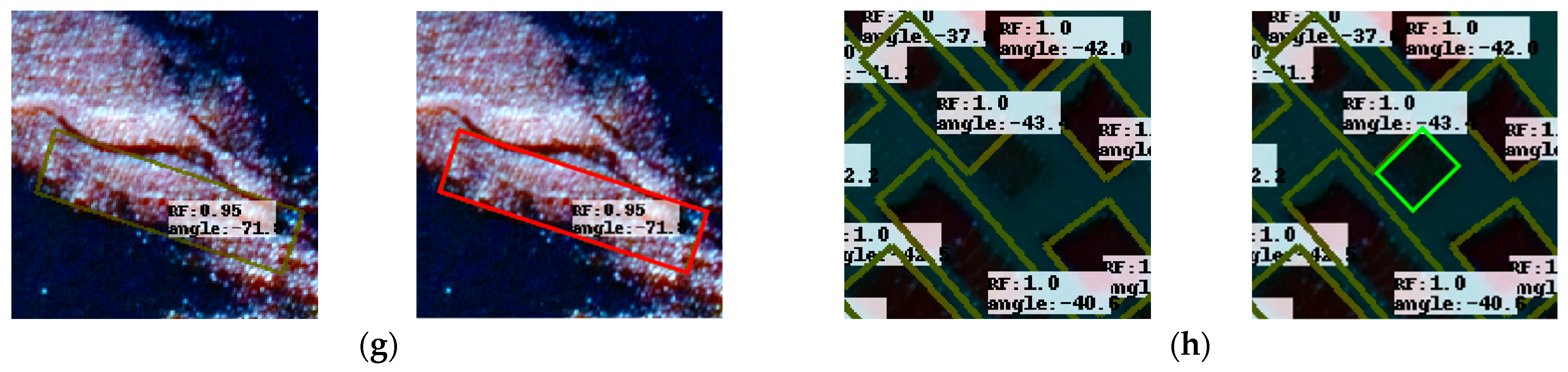

5.2. Importance of R3Det for Extraction of Aquaculture Zones

5.3. Influence of the Bounding Box on the Aquaculture Zone

5.4. Problems and Prospects

6. Conclusions

- Compared with square root, equalization, Gaussian, and logarithmic stretching, and with unstretched images, piecewise linear stretching highlighted the appearance characteristics of raft and cage aquaculture zones more effectively and improved image contrast, achieving the highest precision, recall, and F-measure for both raft and cage extraction.

- Under piecewise linear stretching, R3Det showed a higher overall extraction accuracy (F-measure) for marine aquaculture zones than R2CNN and RetinaNet. The overall extraction accuracies of R3Det for raft and cage aquaculture in Sansha Bay were 98.91% and 97.21%, respectively, and the extraction precision for the total aquaculture area was 92.08%.

- The method proposed in this study is not limited by factors such as the specific aquaculture zone or the model structure, and it can classify and extract marine aquaculture zones against large-scale, complex aquaculture backgrounds. The results can help marine aquaculture management departments carry out large-scale aquaculture monitoring and plan sea use scientifically, supporting the sustainable development of the marine aquaculture industry.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

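| Sensor Type | Band Type | Spectral Range (nm) | Spatial Resolution (m) | Swath Width (km) | Revisit Period (Day) | Coverage Period (Day) |
|---|---|---|---|---|---|---|
| PMS sensor | Panchromatic | Panchromatic: 450–900 | 2 | 95 | 4 | 41 |
| PMS sensor | Multispectral | Blue: 450–520 | 8 | 95 | 4 | 41 |
| PMS sensor | Multispectral | Green: 520–590 | 8 | 95 | 4 | 41 |
| PMS sensor | Multispectral | Red: 630–690 | 8 | 95 | 4 | 41 |
| PMS sensor | Multispectral | NIR: 770–890 | 8 | 95 | 4 | 41 |
| WFV sensor | Multispectral | Blue: 450–520 | 16 | 860 | 4 | 41 |
| WFV sensor | Multispectral | Green: 520–590 | 16 | 860 | 4 | 41 |
| WFV sensor | Multispectral | Red: 630–690 | 16 | 860 | 4 | 41 |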

| Type | Value (Red) | Value (Green) | Value (Blue) |
|---|---|---|---|
| Cage | 195.76 | 157.62 | 150.18 |
| Raft | 32.40 | 22.45 | 27.39 |
| Non-aquaculture | 12 | 72 | 70 |

| Parameter | Value |
|---|---|
| Max epoch | 10 |
| Iteration epoch | 27,000 |
| Max iteration | 270,000 |
| Batch size | 1 |
| Epsilon | 0.00005 |
| Momentum | 0.9 |
| Learning rate | 0.0005 |
| Decay weight | 0.0001 |
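The training parameters above can be collected in a small configuration object. This is a sketch only: the class and field names are hypothetical and do not come from the R3Det codebase, and it assumes that "Iteration epoch" means the number of iterations per epoch, which is consistent with 10 × 27,000 = 270,000 maximum iterations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the tabulated training parameters."""
    max_epoch: int = 10
    iterations_per_epoch: int = 27_000  # assumed meaning of "Iteration epoch"
    batch_size: int = 1
    epsilon: float = 0.00005
    momentum: float = 0.9
    learning_rate: float = 0.0005
    weight_decay: float = 0.0001  # listed as "Decay weight" in the table

    @property
    def max_iteration(self) -> int:
        # Derived value: total iterations over all epochs.
        return self.max_epoch * self.iterations_per_epoch


config = TrainConfig()
print(config.max_iteration)  # 270000
```

Deriving the maximum iteration count instead of storing it keeps the three related values from drifting apart if one of them is changed.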

| | Actual: Positive | Actual: Negative |
|---|---|---|
| Predict: Positive | True positive (TP) | False positive (FP) |
| Predict: Negative | False negative (FN) | True negative (TN) |
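The entries of the confusion matrix are usually combined into precision, recall, and the F-measure. The sketch below assumes the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and the F-measure as their harmonic mean. The function names and the example counts are hypothetical and are not taken from the study. The harmonic-mean form is in line with the reported R3Det cage result, where a precision of 98.79% and a recall of 95.67% give an F-measure of about 97.2%.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that were detected."""
    return tp / (tp + fn)


def f_measure(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# Hypothetical detection counts, for illustration only.
tp, fp, fn = 90, 10, 30
p = precision(tp, fp)
r = recall(tp, fn)
f = f_measure(p, r)
print(f"precision={p:.3f} recall={r:.3f} F={f:.3f}")

# Consistency check against the reported cage values (in percent).
reported = f_measure(98.79, 95.67)
print(f"{reported:.1f}")
```

Because the tabulated precision and recall are already rounded, the recomputed F-measure can differ from the published value in the second decimal place.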

| Type | Stretching Method | Precision (%) | Recall (%) | F-Measure (%) |
|---|---|---|---|---|
| Cage | Square root stretching | 97.88 | 89.52 | 93.51 |
| Cage | Logarithmic stretching | 98.57 | 85.09 | 91.33 |
| Cage | Gaussian stretching | 97.58 | 89.35 | 93.28 |
| Cage | Equalization stretching | 96.27 | 94.41 | 95.33 |
| Cage | Piecewise linear stretching | 98.79 | 95.67 | 97.21 |
| Cage | Unstretched | 98.28 | 88.16 | 92.91 |
| Raft | Square root stretching | 97.17 | 94.31 | 95.72 |
| Raft | Logarithmic stretching | 97.30 | 90.26 | 93.65 |
| Raft | Gaussian stretching | 96.66 | 96.41 | 96.53 |
| Raft | Equalization stretching | 97.13 | 98.61 | 97.86 |
| Raft | Piecewise linear stretching | 98.66 | 99.16 | 98.91 |
| Raft | Unstretched | 96.67 | 96.73 | 96.70 |

| Type | Model | Precision (%) | Recall (%) | F-Measure (%) |
|---|---|---|---|---|
| Cage | R2CNN | 97.59 | 95.94 | 96.76 |
| Cage | RetinaNet | 96.97 | 95.87 | 96.42 |
| Cage | R3Det | 98.79 | 95.67 | 97.21 |
| Raft | R2CNN | 97.82 | 99.10 | 98.45 |
| Raft | RetinaNet | 96.66 | 98.84 | 97.74 |
| Raft | R3Det | 98.66 | 99.16 | 98.91 |

| ID | Type | Vectorized (Hectare) | R3Det (Hectare) | Precision (%) | Type | Vectorized (Hectare) | R3Det (Hectare) | Precision (%) |
|---|---|---|---|---|---|---|---|---|
| A | Cage | 7.04 | 7.83 | 88.75 | Raft | 74.24 | 66.58 | 89.68 |
| B | Cage | 0.00 | 0.00 | - | Raft | 168.34 | 188.41 | 88.08 |
| C | Cage | 8.98 | 11.53 | 71.60 | Raft | 173.51 | 188.76 | 91.21 |
| D | Cage | 53.30 | 62.38 | 82.97 | Raft | 110.86 | 120.95 | 90.90 |
| E | Cage | 28.80 | 33.83 | 82.53 | Raft | 150.40 | 166.21 | 89.49 |
| F | Cage | 71.31 | 72.06 | 98.95 | Raft | 8.13 | 8.28 | 98.12 |
| G | Cage | 47.97 | 50.59 | 94.53 | Raft | 0.62 | 2.41 | −189.67 |
| H | Cage | 125.49 | 130.45 | 96.05 | Raft | 1.76 | 2.10 | 80.68 |
| A–H | Cage | 342.89 | 368.68 | 92.48 | Raft | 687.85 | 743.70 | 91.88 |
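The area precision in the table above appears to be one minus the relative area error against the vectorized reference. That formula is not stated in this section, so the sketch below treats it as an assumption; it does reproduce the A–H totals and the 92.08% figure for the total aquaculture area. Per-zone values may differ slightly, because the tabulated areas are rounded. The function and variable names are hypothetical.

```python
def area_precision(reference_ha: float, extracted_ha: float) -> float:
    """Percent agreement of extracted area with reference area (assumed formula)."""
    return (1 - abs(extracted_ha - reference_ha) / reference_ha) * 100


# A–H totals from the table: vectorized reference vs. R3Det extraction.
cage_ref, cage_r3det = 342.89, 368.68
raft_ref, raft_r3det = 687.85, 743.70

cage = area_precision(cage_ref, cage_r3det)
raft = area_precision(raft_ref, raft_r3det)
total = area_precision(cage_ref + raft_ref, cage_r3det + raft_r3det)
print(f"{cage:.2f} {raft:.2f} {total:.2f}")  # 92.48 91.88 92.08
```

Under this formula the value turns negative whenever the extracted area is more than twice the reference, which would explain the raft result for zone G, where the reference area is only 0.62 hectares.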

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Y.; Qu, X.; Yu, C.; Wu, L.; Zhang, P.; Huang, H.; Gui, F.; Feng, D. Automatic Extraction of Marine Aquaculture Zones from Optical Satellite Images by R3Det with Piecewise Linear Stretching. Remote Sens. 2022, 14, 4430. https://doi.org/10.3390/rs14184430
