Cloud Detection of Gaofen-2 Multi-Spectral Imagery Based on the Modified Radiation Transmittance Map

Abstract

1. Introduction

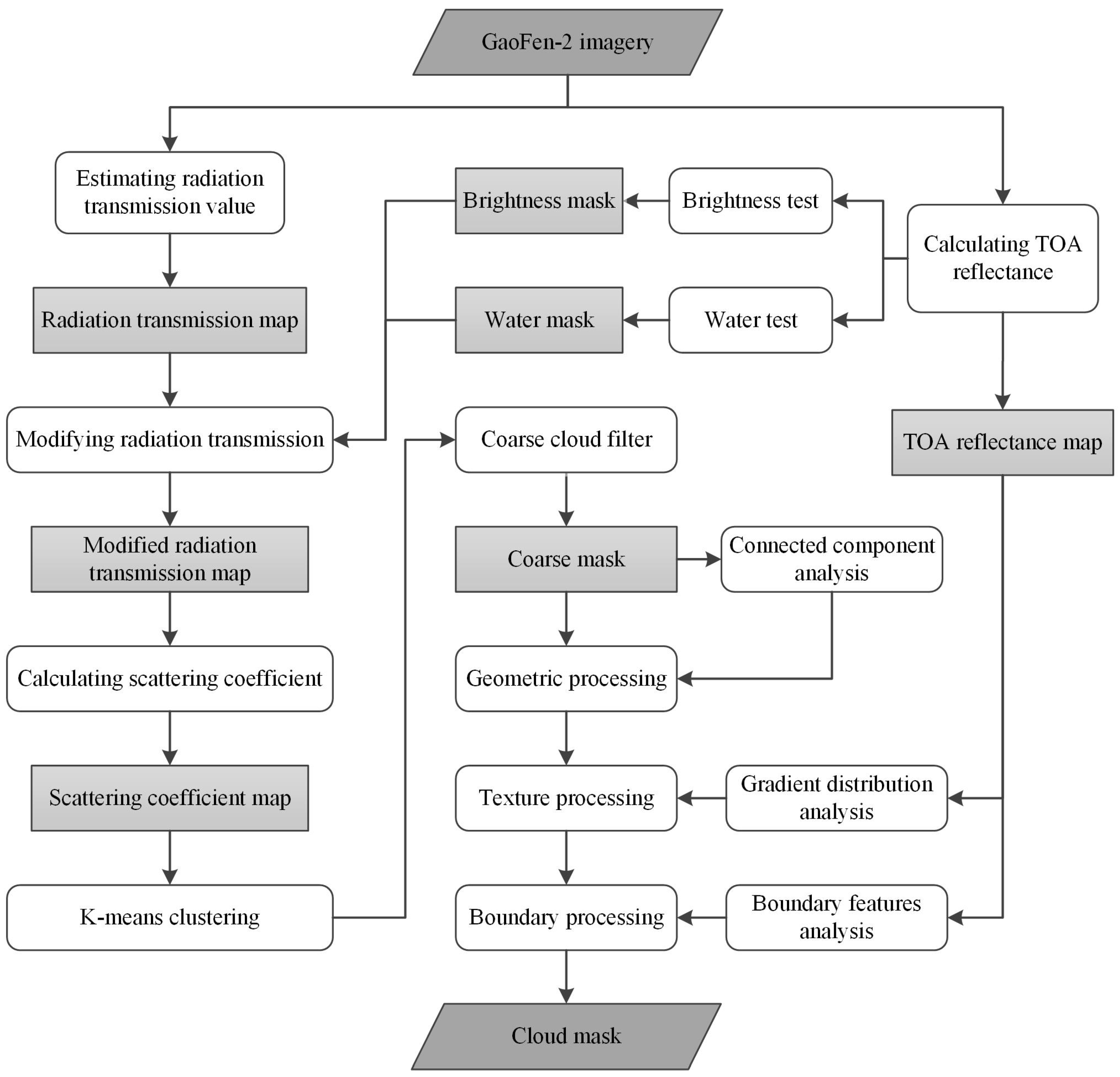

2. Methodology

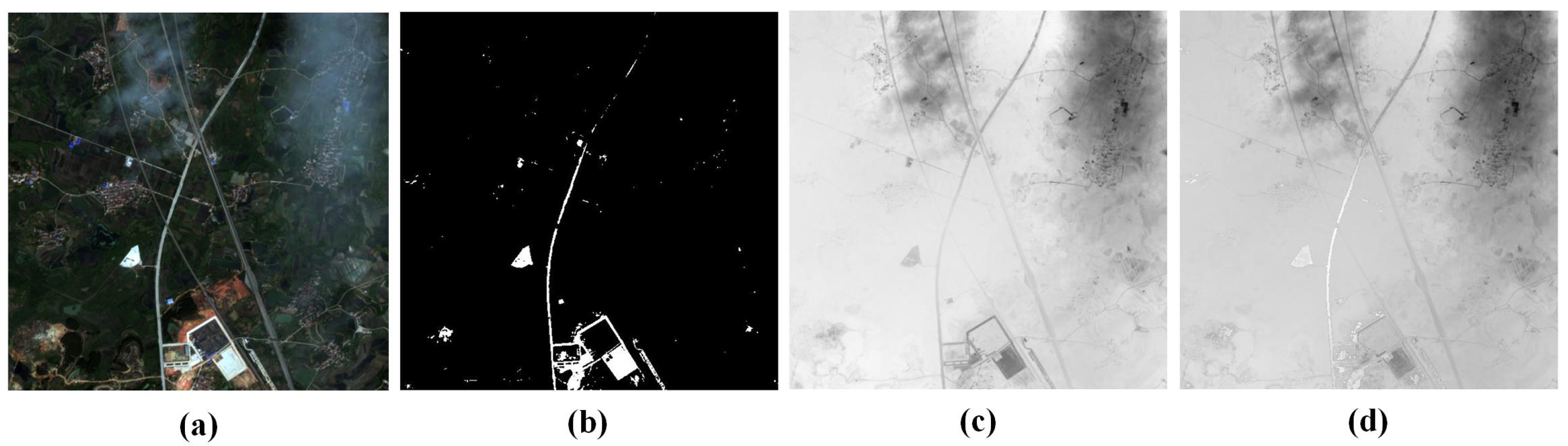

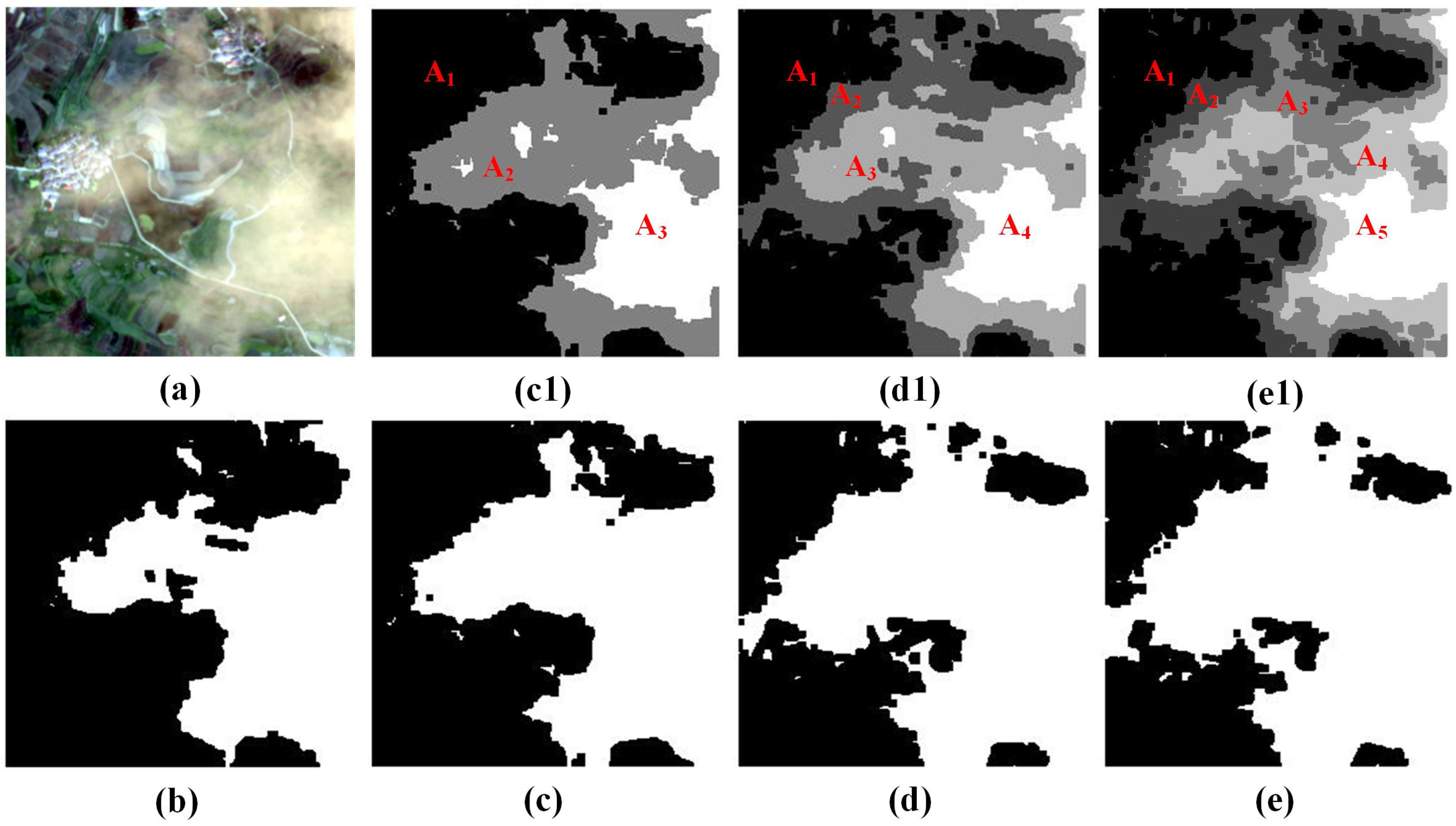

2.1. Cloud Detection

- if .

- if.

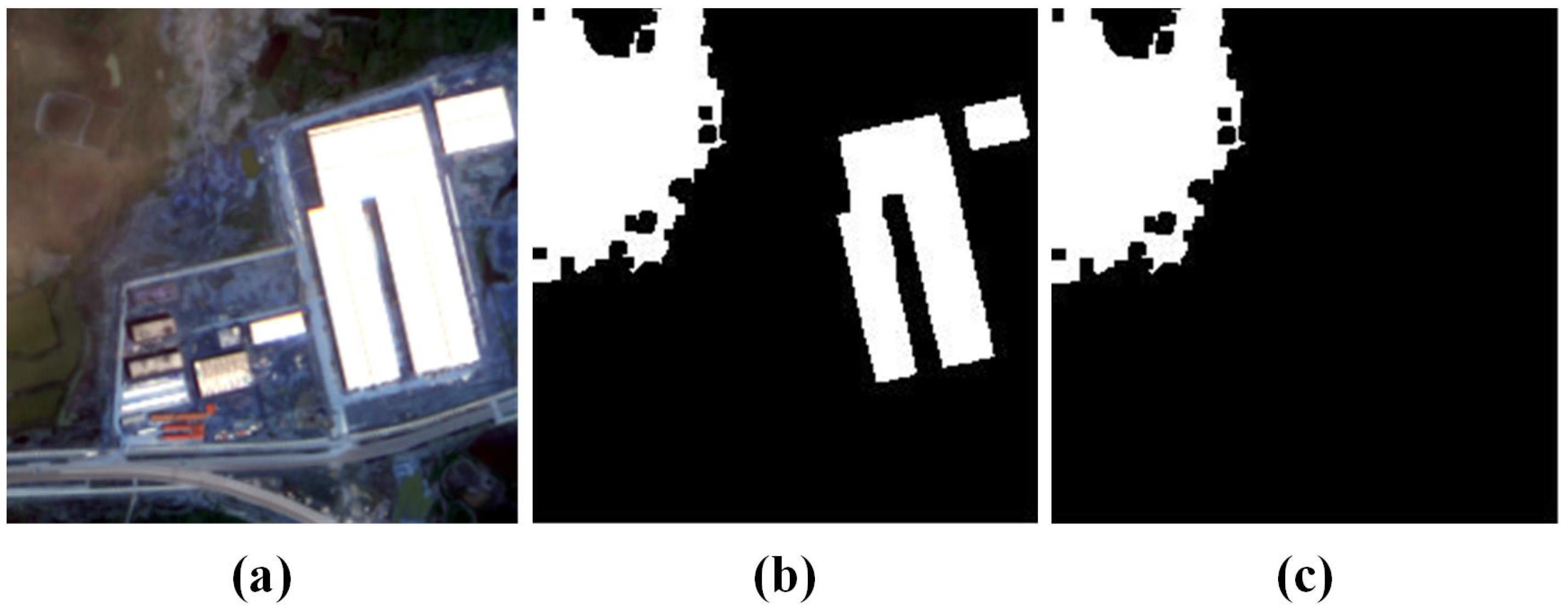

2.2. Post-Processing

2.3. Parameter Decision

2.4. Evaluation Metrics

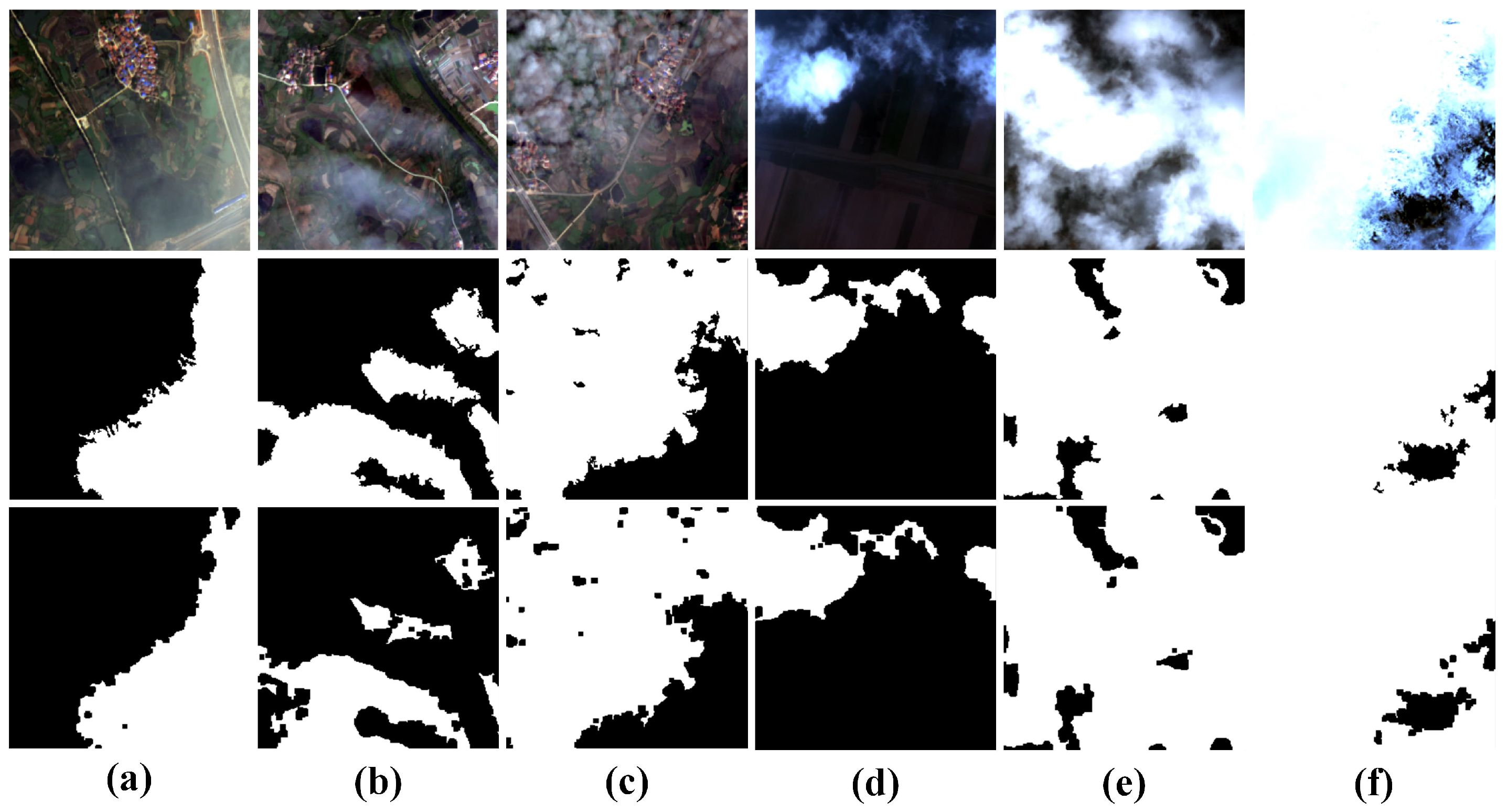

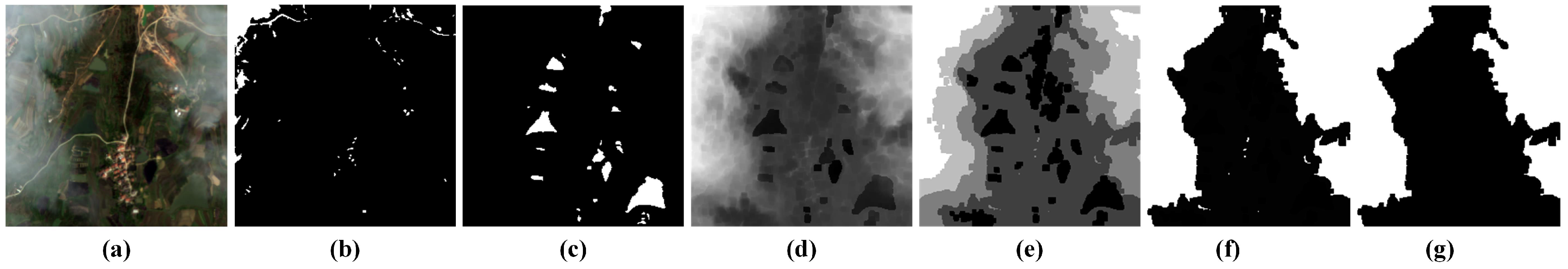

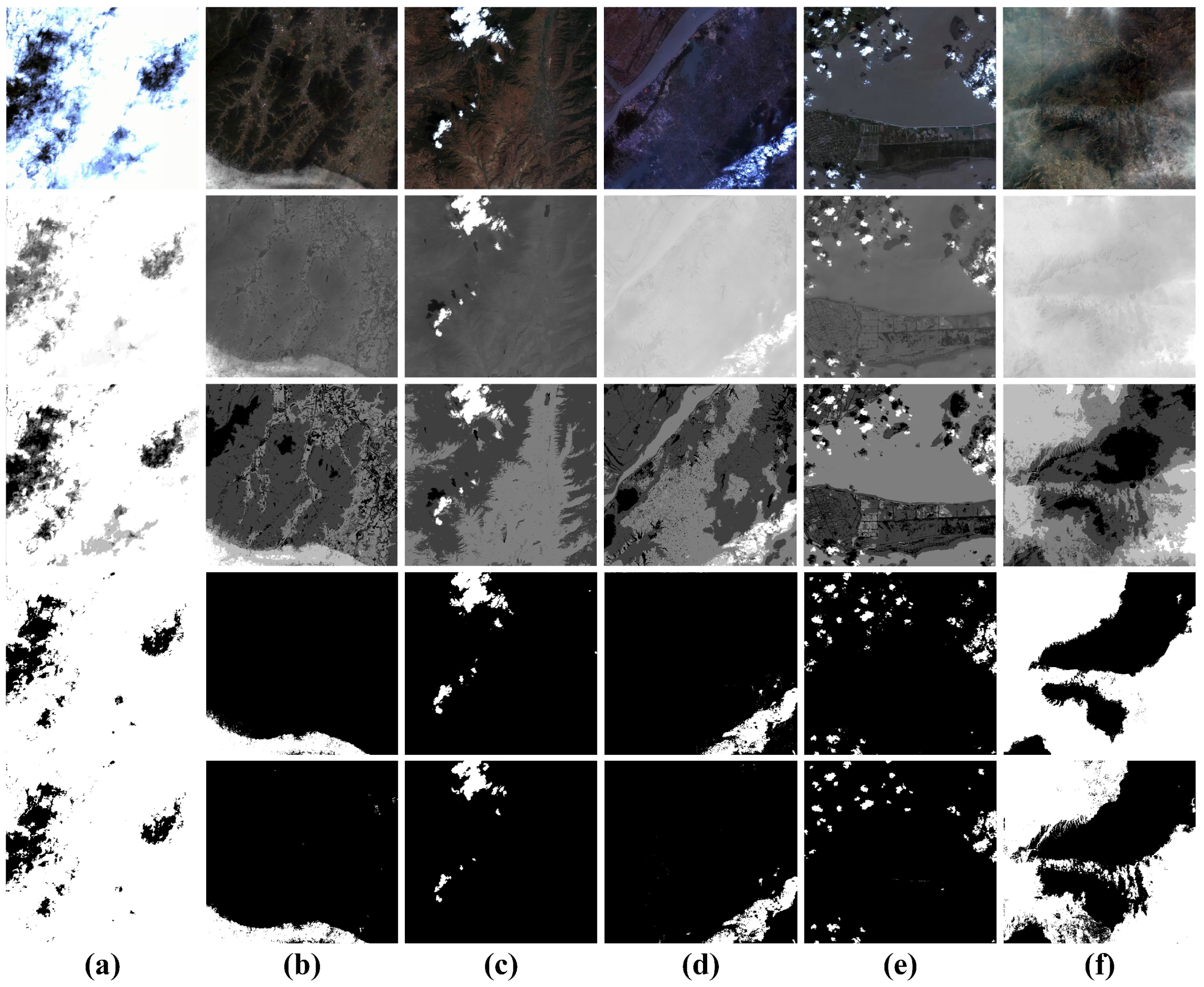

3. Results

3.1. Data Set and Experimental Setup

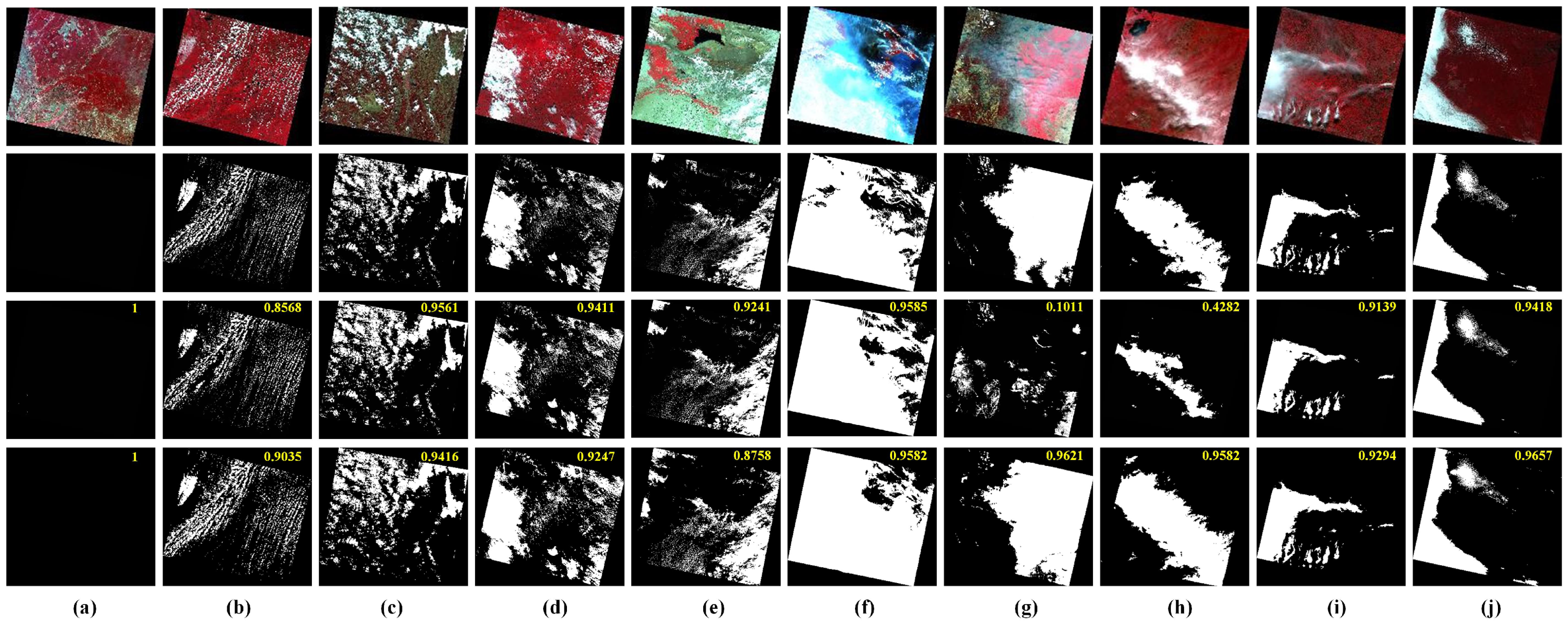

3.2. Detection Performance

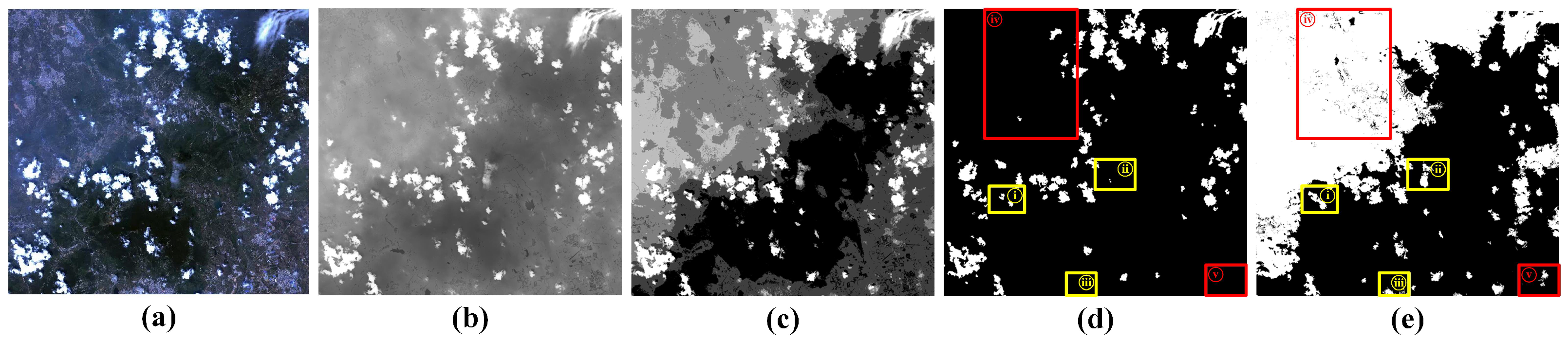

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Feature | Cloud Covered Region | Non-Cloud Covered Region | Discriminability |
|---|---|---|---|
| Correlation | 54.0932 | −14.4269 | 1.2667 |
| Uniformity of gradient distribution | 16494.3488 | 6116.9381 | 0.6291 |
| Uniformity of gray distribution | 222.2017 | 110.4711 | 0.5028 |
| Standard deviation of gradient value | 1.7466 | 2.0770 | 0.1892 |
| Mean gradient value | 1.1392 | 1.3456 | 0.1812 |
| Standard deviation of gray value | 69.2241 | 59.4468 | 0.1412 |
| Mean gray value | 530.7838 | 488.7896 | 0.0791 |
| Gradient entropy | 2.4174 | 2.3055 | 0.0463 |
| Mixing entropy | 2.9431 | 2.8349 | 0.0368 |
| Gray level entropy | 0.6391 | 0.6543 | 0.0239 |
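The tabulated discriminability values appear to match the relative difference |c − n| / |c| between the cloud-covered value c and the non-cloud value n. This reading is inferred from the numbers in the table, not taken from a stated definition, and the function name `discriminability` is hypothetical. A minimal sketch that recomputes three of the rows under that assumption:

```python
def discriminability(cloud: float, non_cloud: float) -> float:
    """Relative difference |cloud - non_cloud| / |cloud|.

    Assumed definition, inferred from the table values only.
    """
    return abs(cloud - non_cloud) / abs(cloud)


# (cloud-covered value, non-cloud value, reported discriminability)
rows = {
    "Correlation": (54.0932, -14.4269, 1.2667),
    "Uniformity of gradient distribution": (16494.3488, 6116.9381, 0.6291),
    "Mean gray value": (530.7838, 488.7896, 0.0791),
}

for name, (cloud, non_cloud, reported) in rows.items():
    computed = discriminability(cloud, non_cloud)
    print(f"{name}: computed {computed:.4f}, reported {reported:.4f}")
```

Under this reading, features whose cloud and non-cloud values differ most relative to the cloud value rank highest, which agrees with the descending order of the rows.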

| Parameter | Value | Equation | Parameter | Value | Equation |
|---|---|---|---|---|---|
| | 0.24 | Equation (9) | | 20 | Equation (12) |
| | 0.18 | Equation (9) | | 0 | Equation (13) |
| | 0.15 | Equation (10) | | 8000 | Equation (13) |
| | 0.50 | CCM Rule 1 | | 0.24 | Equation (15) |
| | 0.05 | CCM Rule 2 | | 0.22 | Equation (15) |
| | 20 | Equation (12) | | 0.20 | Equation (15) |

| Cloud Type | stratus | stratus fractus | cirrocumulus | cumulus | stratocumulus | altostratus |
|---|---|---|---|---|---|---|
| F-measure (mean ± variance) | 0.9327 ± 0.0012 | 0.9091 ± 0.0093 | 0.9214 ± 0.0072 | 0.9382 ± 0.0010 | 0.9672 ± 0.0018 | 0.9880 ± 0.0008 |
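As an illustration of how a per-cloud-type "mean ± variance" F-measure entry could be produced, the sketch below scores binary cloud masks against reference masks and summarizes the scores. It rests on assumptions not confirmed by the text: the standard F-measure with β = 1, population variance, and toy masks that are not data from the paper. The function name `f_measure` is hypothetical.

```python
from statistics import mean, pvariance


def f_measure(pred, truth, beta=1.0):
    """F-measure of a predicted binary cloud mask against a reference mask.

    Both masks are flat sequences of 0/1 values of equal length.
    beta = 1 (the balanced F1 score) is an assumption.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    if tp == 0:
        return 0.0  # no correctly detected cloud pixels
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Toy masks for two hypothetical images of the same cloud type.
scores = [
    f_measure([1, 1, 0, 0], [1, 0, 0, 0]),  # one false-positive pixel
    f_measure([1, 1, 1, 0], [1, 1, 1, 0]),  # perfect agreement
]

# Population variance is an assumption; sample variance would use statistics.variance.
print(f"{mean(scores):.4f} ± {pvariance(scores):.4f}")
```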

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Y.; He, L.; Zhang, Y.; Wu, Z. Cloud Detection of Gaofen-2 Multi-Spectral Imagery Based on the Modified Radiation Transmittance Map. Remote Sens. 2022, 14, 4374. https://doi.org/10.3390/rs14174374
