A Batch Pixel-Based Algorithm to Composite Landsat Time Series Images

Abstract

1. Introduction

2. Materials and Methods

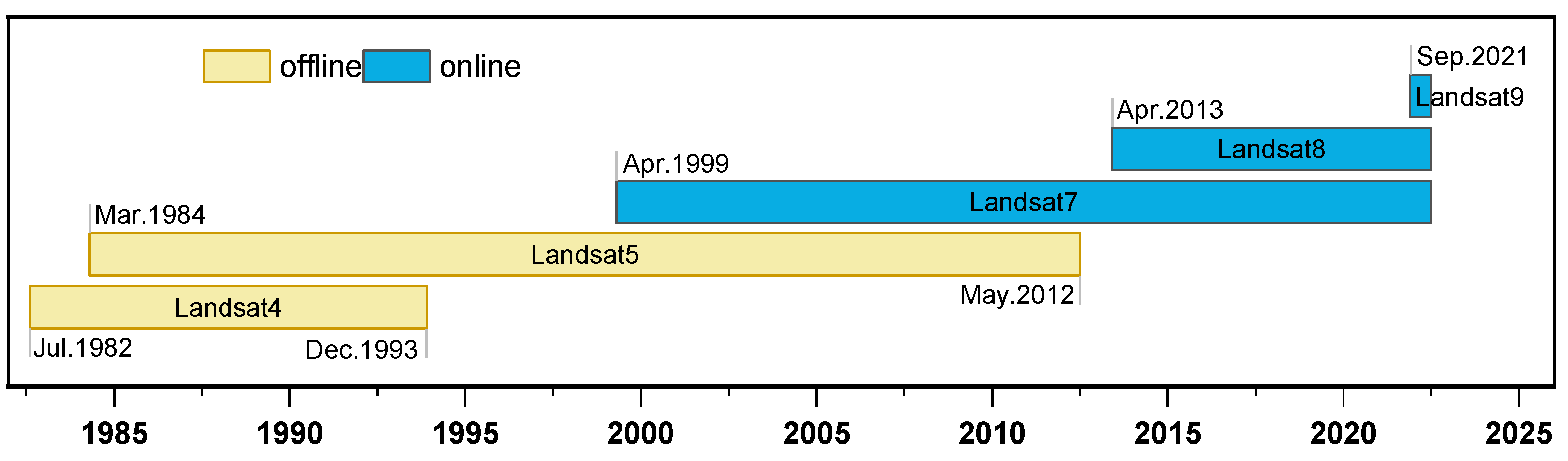

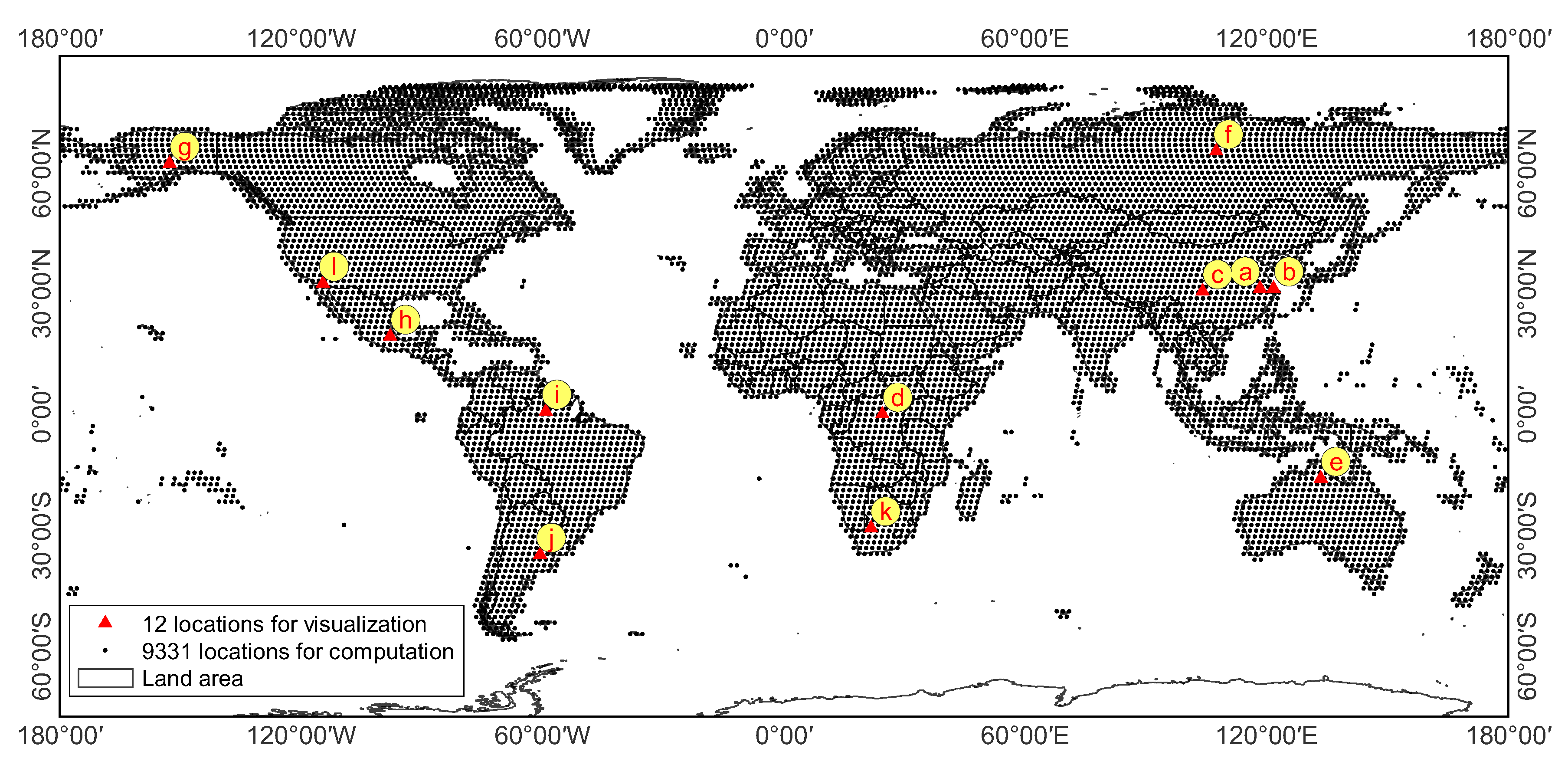

2.1. Data

2.2. Method

2.2.1. Automated Algorithm for the Batch Composition

2.2.2. Reference Image

2.2.3. Priority Index

- (1) The smaller the difference in observation date (day of year) between the substitute and the reference image, the higher the priority;

- (2) The smaller the difference in acquisition year between the substitute and the reference image, the higher the priority;

- (3) The lower the cloud coverage, the higher the priority;

- (4) Images from the same season as the reference image are preferred;

- (5) Images from the same sensor type as the reference image are preferred.
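The five criteria can be read as a ranking rule for candidate substitute scenes. The Python sketch below assumes they are applied lexicographically in the listed order, so later criteria only break ties, and that "season" means a meteorological season. The `Scene` type, the scene IDs, and the sensor labels are hypothetical, and the paper's actual priority index may combine the criteria differently, for example as a weighted score.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Scene:
    # Hypothetical scene record for illustration only
    scene_id: str
    acquired: date
    cloud_cover: float  # scene-level cloud cover (%)
    sensor: str         # hypothetical label, e.g. "OLI" or "ETM+"


def season(d: date) -> int:
    # Assumption: meteorological seasons, 0 = DJF, 1 = MAM, 2 = JJA, 3 = SON
    return (d.month % 12) // 3


def doy_distance(a: date, b: date) -> int:
    # Circular day-of-year distance (leap days ignored), so that
    # 28 December and 3 January count as 6 days apart
    diff = abs(a.timetuple().tm_yday - b.timetuple().tm_yday)
    return min(diff, 365 - diff)


def priority_key(s: Scene, ref: Scene) -> Tuple[int, int, float, bool, bool]:
    # Assumption: criteria (1)-(5) compared lexicographically; smaller ranks first
    return (
        doy_distance(s.acquired, ref.acquired),      # (1) date difference
        abs(s.acquired.year - ref.acquired.year),    # (2) year difference
        s.cloud_cover,                               # (3) less cloud first
        season(s.acquired) != season(ref.acquired),  # (4) same season first (False < True)
        s.sensor != ref.sensor,                      # (5) same sensor first
    )


def rank_substitutes(candidates: Iterable[Scene], ref: Scene) -> List[Scene]:
    # Drop the reference itself, then sort the remaining scenes by priority
    return sorted(
        (c for c in candidates if c.scene_id != ref.scene_id),
        key=lambda c: priority_key(c, ref),
    )


# Usage with hypothetical scenes
ref = Scene("REF", date(2020, 7, 1), 35.0, "OLI")
a = Scene("A", date(2019, 7, 5), 10.0, "OLI")
b = Scene("B", date(2020, 8, 20), 0.0, "OLI")
c = Scene("C", date(2018, 6, 29), 50.0, "ETM+")
print([s.scene_id for s in rank_substitutes([b, c, a, ref], ref)])  # ['A', 'C', 'B']
```

Here A and C are both 3 days from the reference date, so criterion (2) decides in favor of A; B is cloud-free but 50 days away and therefore ranks last under this strictly lexicographic reading.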

2.2.4. Composition Properties

- (a) The compositing integrity;

- (b) The number of scenes used in the composition, which corresponds to the temporal dispersion;

- (c) The acquisition time and contribution ratio of each scene used in the composition;

- (d) Whether the compositing integrity goal is achieved.
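A minimal Python sketch of how these four properties can be computed during a batch fill, assuming that "batch" means filling all remaining gaps from one substitute scene at a time, in priority order, and that the contribution ratio is the share of all composite pixels supplied by a scene. The function name `batch_composite`, the boolean clear-sky masks, and the stopping rule are illustrative assumptions, not the authors' GEE implementation.

```python
from typing import Dict, List, Optional, Sequence


def batch_composite(
    scene_ids: Sequence[str],
    clear_masks: Sequence[Sequence[bool]],
    goal: float = 0.99,
) -> Dict[str, object]:
    """Hypothetical helper. scene_ids[0] / clear_masks[0] is the reference image;
    the rest are substitutes already sorted by priority. A mask entry is True
    where that scene has a clear (cloud-free) observation of the pixel."""
    n_pixels = len(clear_masks[0])
    source: List[Optional[str]] = [None] * n_pixels  # scene that filled each pixel
    used: List[str] = []
    filled = 0
    for sid, mask in zip(scene_ids, clear_masks):
        if filled / n_pixels >= goal:
            break  # goal already reached: stop adding scenes
        newly_filled = 0
        for i, is_clear in enumerate(mask):
            if is_clear and source[i] is None:  # only fill remaining gaps
                source[i] = sid
                newly_filled += 1
        if newly_filled:
            used.append(sid)
            filled += newly_filled
    integrity = filled / n_pixels
    return {
        "integrity": integrity,                                          # (a)
        "scene_count": len(used),                                        # (b) temporal dispersion
        "contribution": {s: source.count(s) / n_pixels for s in used},   # (c) assumed: share of all pixels
        "goal_achieved": integrity >= goal,                              # (d)
        "source": source,
    }


# Usage with a hypothetical 4-pixel scene stack and a 70% integrity goal
result = batch_composite(
    ["REF", "S1", "S2"],
    [[True, True, False, False],
     [False, True, True, False],
     [True, True, True, True]],
    goal=0.7,
)
print(result["integrity"], result["scene_count"], result["goal_achieved"])  # 0.75 2 True
```

Stopping as soon as the goal is met keeps the scene count, and hence the temporal dispersion, as low as the goal allows; acquisition times for property (c) can be attached by looking up each scene ID.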

2.2.5. Methods of Sampling and Comparison

2.2.6. Implementation and Source Code of the Algorithm

3. Results

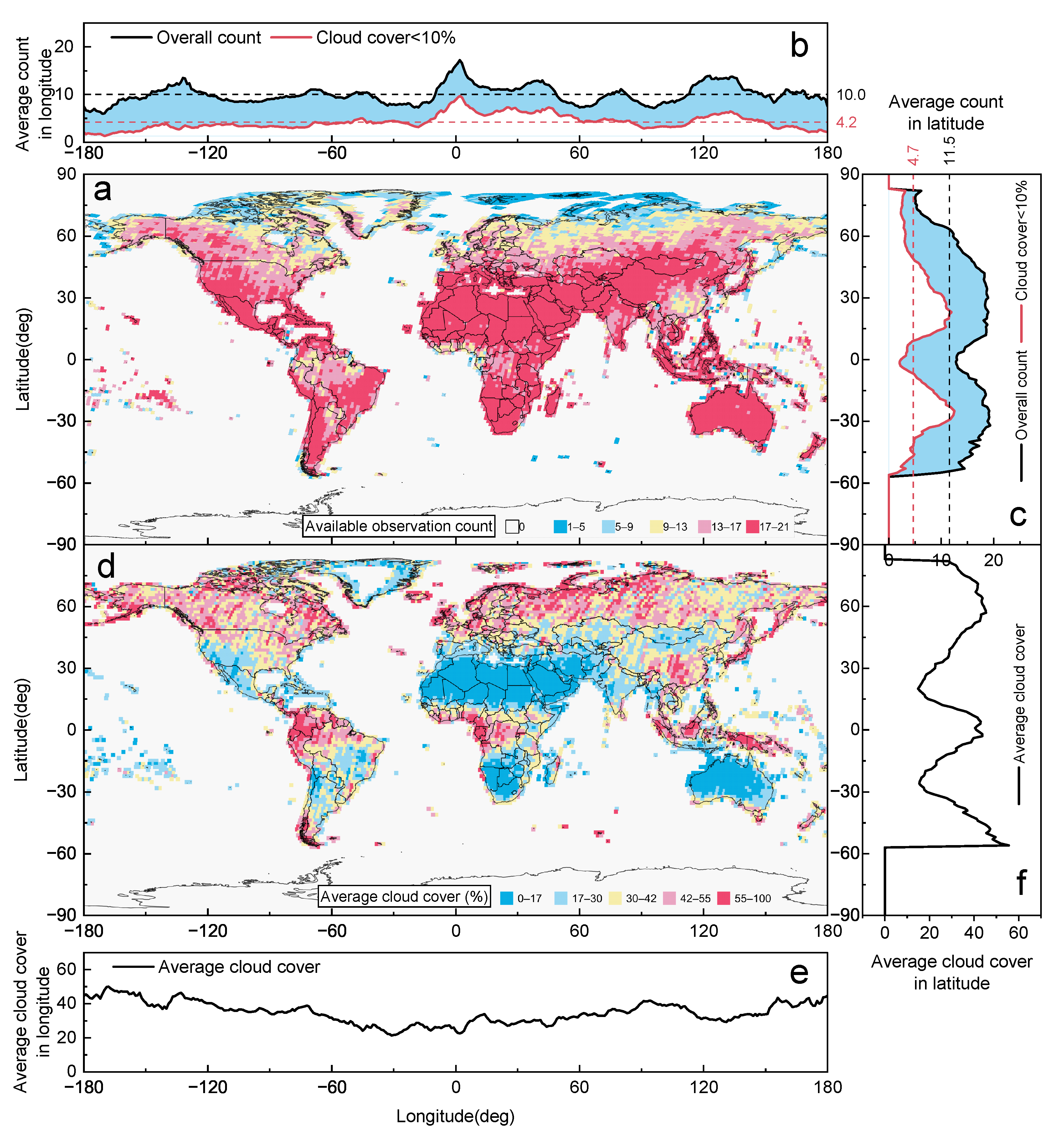

3.1. Spatial Distribution of Observation Frequencies and Cloud Coverage

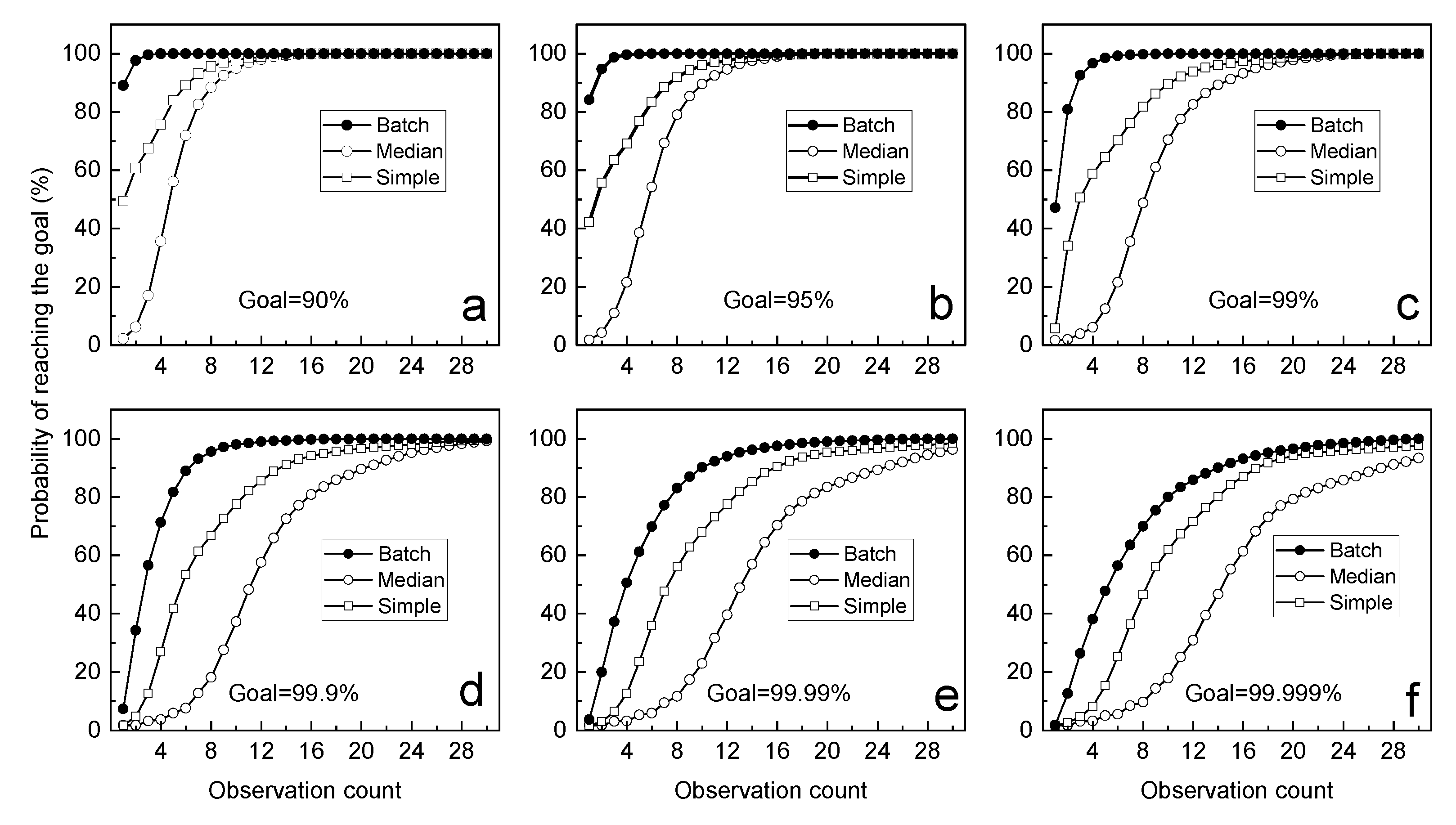

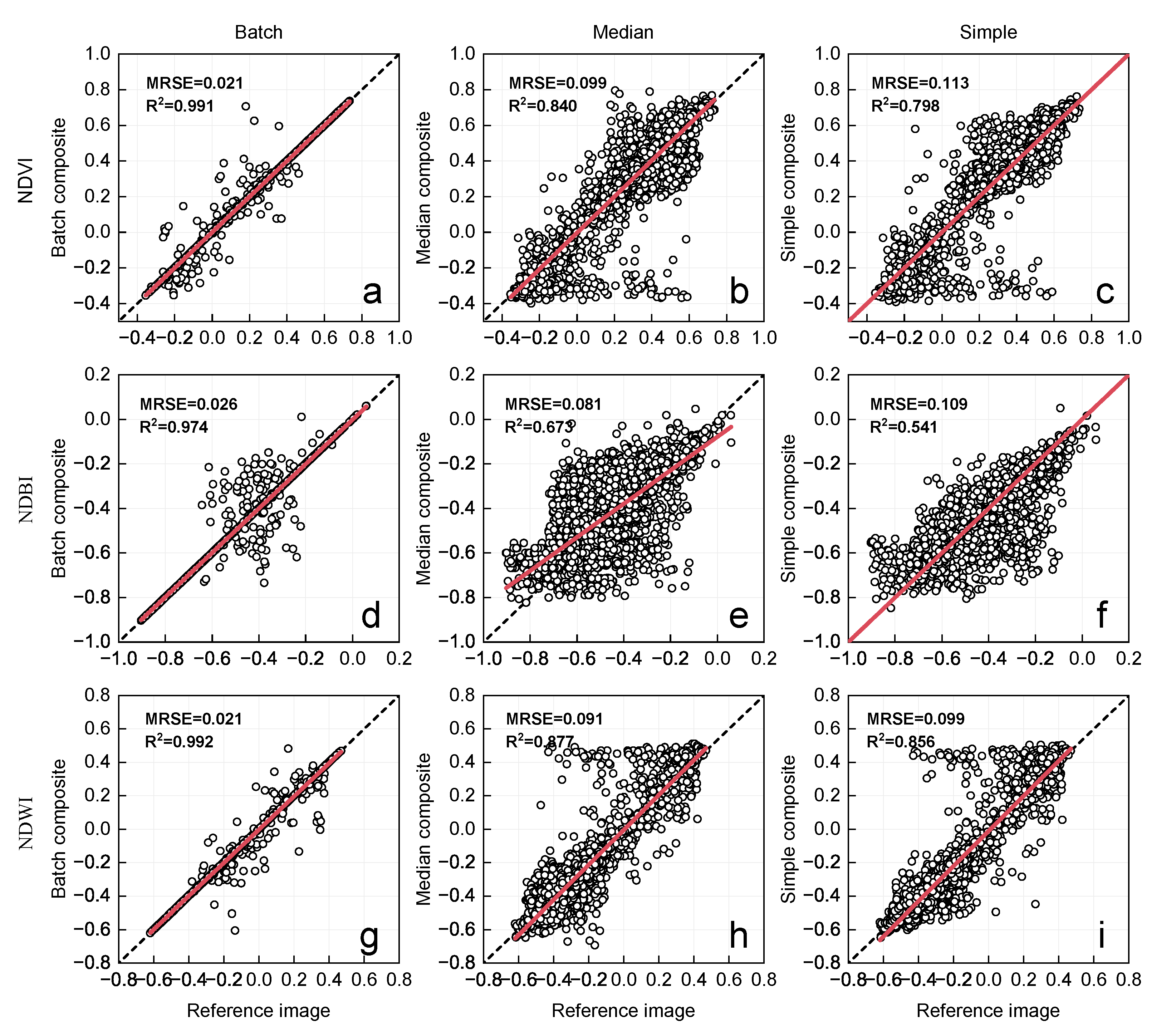

3.2. Global Comparison of the Three Composition Algorithms

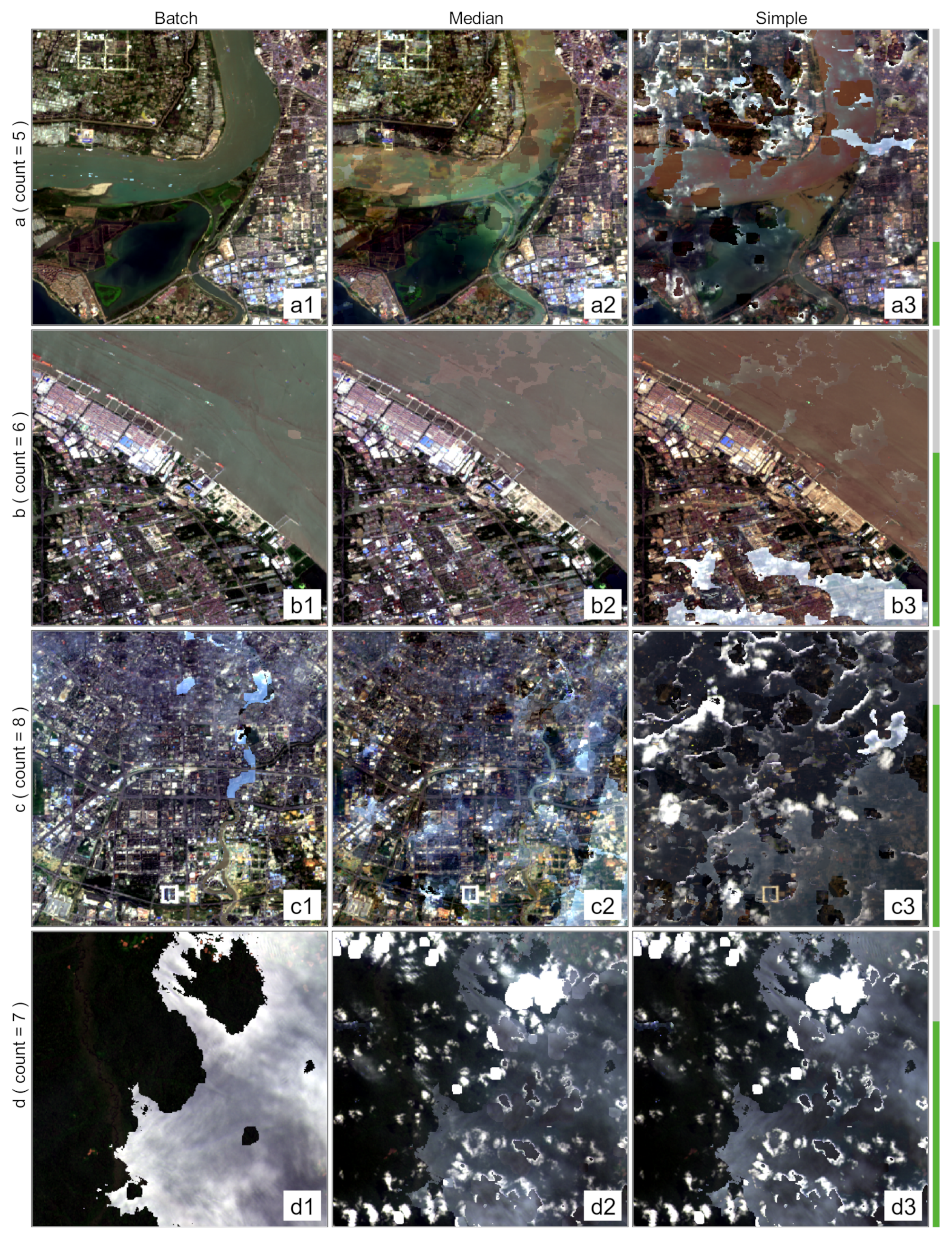

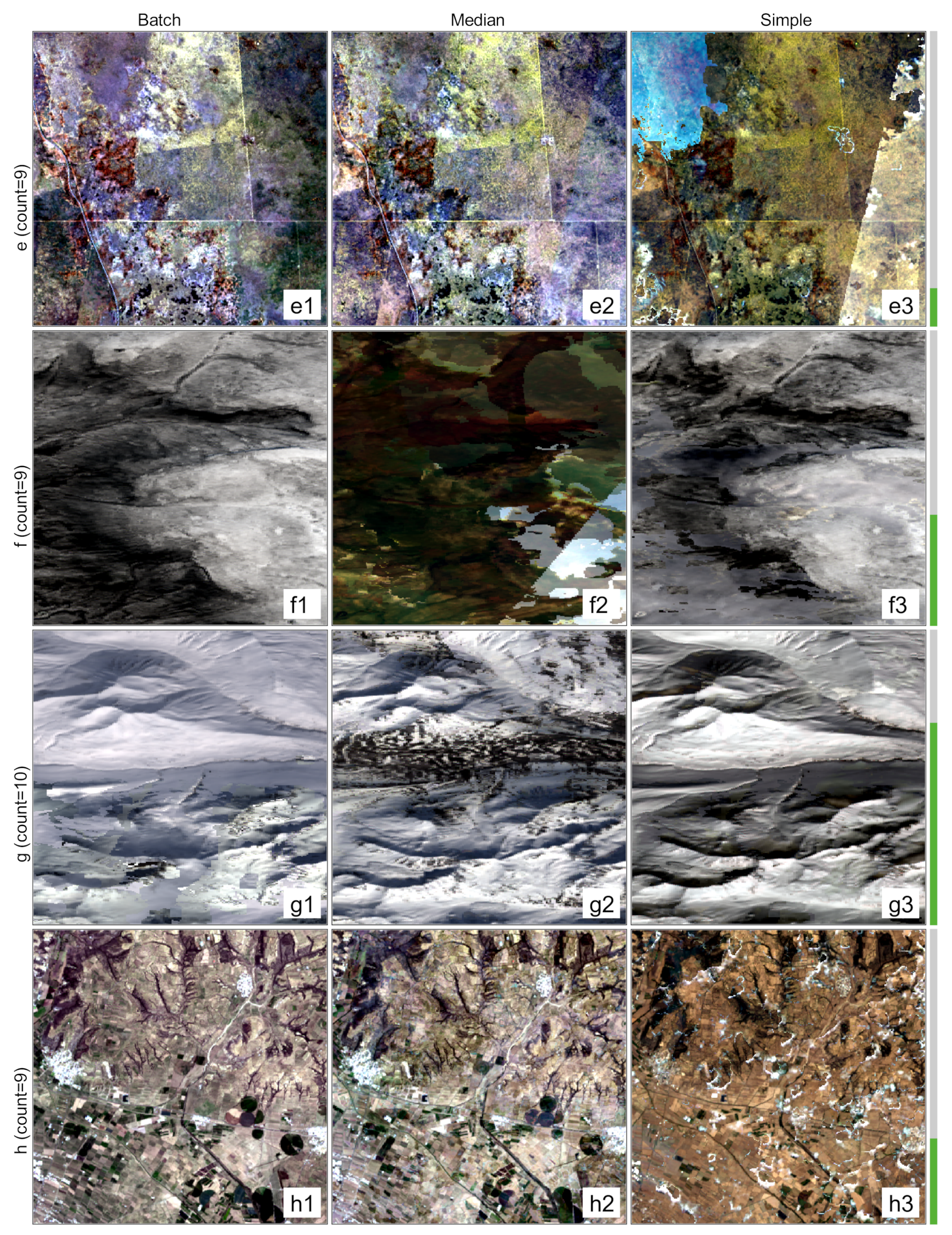

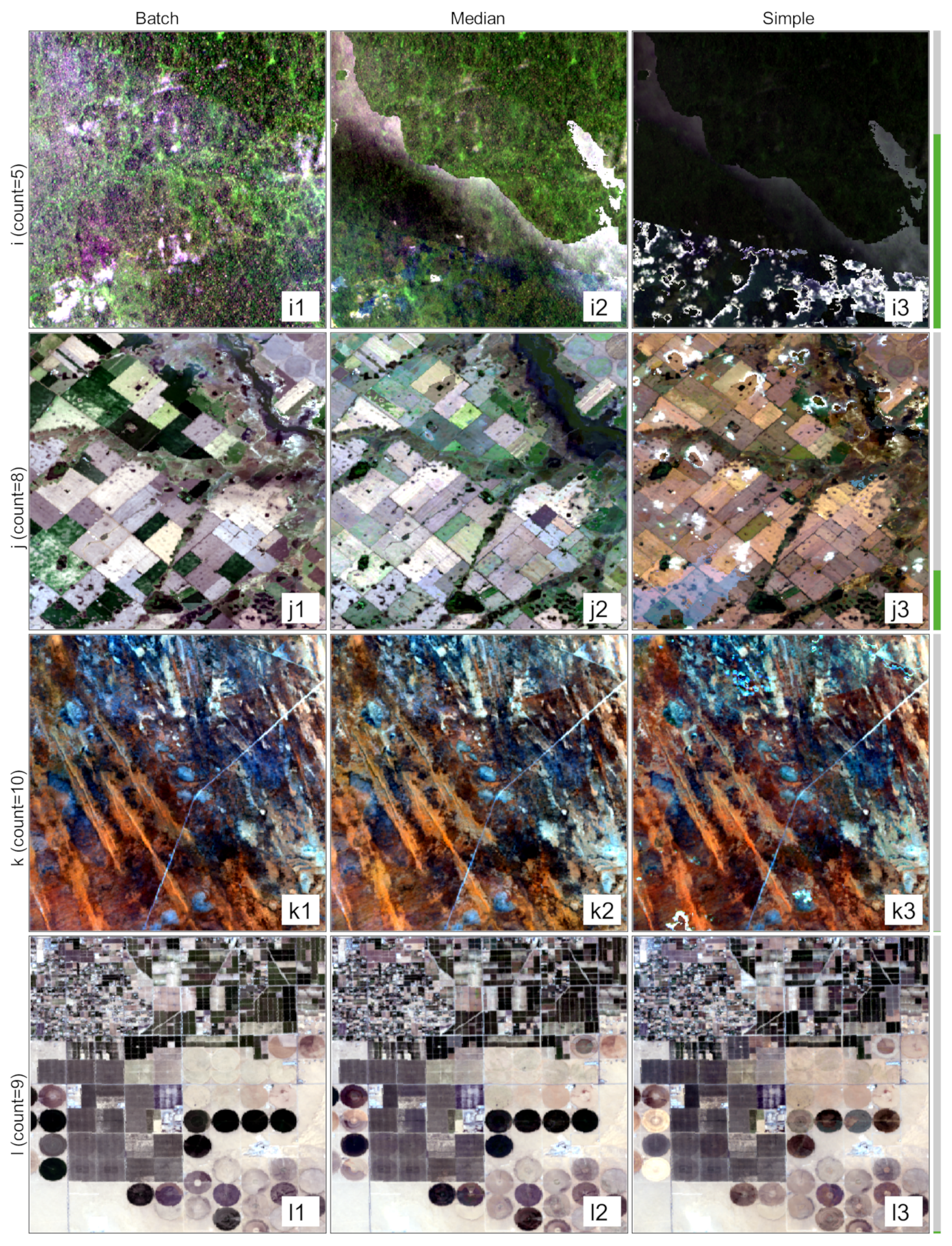

3.3. Visualization Results of Different Algorithms

4. Discussion

4.1. Principles of the Compositing Algorithms

4.2. Effects of Cloud Coverage, Latitude, and Season

4.3. Significance of This Study

- (1) The paper proposes a new automated compositing algorithm for Landsat multi-temporal image series, which is implemented on the GEE cloud platform and is characterized by wide adaptability and high temporal concentration of the source data.

- (2) It proposes a quantitative method for assessing composites based on temporal dispersion instead of visual inspection, and it addresses several shortcomings and limitations of isolated pixel-based compositing algorithms.

4.4. Limitations and Potential Improvements

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GEE | Google Earth Engine |
| DOY | day of year |
| VI | vegetation index |
| NIR | near-infrared |
| NDWI | normalized difference water index |
| NDBI | normalized difference built-up index |

Appendix A

| Location | Lon. (°) | Lat. (°) | Path/Row | Mean Cloud Cover (%) | Available Image Count |
|---|---|---|---|---|---|
| a | 118.3367136 | 31.2981391 | 120/38 | 28.35 | 5 |
| b | 121.6889709 | 31.3093027 | 118/38 | 58.53 | 6 |
| c | 104.0712565 | 30.6049022 | 129/39 | 74.93 | 8 |
| d | 24.4716735 | 0.0630941 | 176/60 | 69.55 | 7 |
| e | 133.4560485 | −15.9006582 | 104/71 | 13.01 | 9 |
| f | 107.4404235 | 65.3931218 | 138/14 | 37.65 | 9 |
| g | −152.7158265 | 62.2972684 | 72/16 | 68.59 | 10 |
| h | −97.8720765 | 19.3706768 | 25/47 | 29.22 | 9 |
| i | −59.2002015 | 0.7661963 | 231/59 | 65.27 | 5 |
| j | −60.6064515 | −34.8341590 | 226/84 | 20.20 | 8 |
| k | 21.6591735 | −28.2488155 | 174/80 | 0.30 | 10 |
| l | −114.6070330 | 32.5517492 | 38/37 | 0.80 | 9 |

Observation number to achieve the integrity goal, by integrity goal and compositing method:

| Goal (%) | Method | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | ≥15 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 90.000 | Batch | 8311 | 803 | 177 | 31 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9331 |
| | Median | 205 | 375 | 1004 | 1740 | 1914 | 1475 | 996 | 543 | 376 | 226 | 183 | 103 | 90 | 44 | 57 | 9331 |
| | Simple | 4603 | 1065 | 630 | 751 | 781 | 491 | 370 | 221 | 133 | 80 | 66 | 37 | 42 | 23 | 38 | 9331 |
| 95.000 | Batch | 7852 | 983 | 373 | 80 | 33 | 8 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9331 |
| | Median | 174 | 221 | 631 | 981 | 1589 | 1470 | 1401 | 906 | 598 | 388 | 272 | 189 | 171 | 103 | 237 | 9331 |
| | Simple | 3943 | 1263 | 719 | 525 | 720 | 619 | 476 | 304 | 240 | 140 | 102 | 60 | 63 | 37 | 120 | 9331 |
| 99.000 | Batch | 4397 | 3148 | 1098 | 371 | 177 | 71 | 26 | 21 | 11 | 4 | 4 | 1 | 1 | 0 | 1 | 9331 |
| | Median | 158 | 25 | 184 | 206 | 592 | 849 | 1306 | 1228 | 1148 | 878 | 664 | 470 | 359 | 261 | 1003 | 9331 |
| | Simple | 539 | 2642 | 1542 | 759 | 546 | 521 | 562 | 522 | 410 | 321 | 230 | 166 | 120 | 85 | 366 | 9331 |
| 99.900 | Batch | 681 | 2518 | 2082 | 1370 | 976 | 668 | 394 | 225 | 146 | 82 | 50 | 40 | 26 | 17 | 56 | 9331 |
| | Median | 158 | 3 | 136 | 43 | 211 | 163 | 477 | 506 | 873 | 900 | 1031 | 868 | 784 | 610 | 2568 | 9331 |
| | Simple | 159 | 285 | 746 | 1308 | 1408 | 1072 | 748 | 520 | 531 | 462 | 430 | 314 | 301 | 220 | 827 | 9331 |
| 99.990 | Batch | 342 | 1520 | 1616 | 1241 | 996 | 802 | 683 | 554 | 364 | 293 | 193 | 163 | 123 | 85 | 356 | 9331 |
| | Median | 158 | 0 | 129 | 14 | 191 | 60 | 325 | 204 | 537 | 524 | 805 | 744 | 871 | 750 | 4019 | 9331 |
| | Simple | 155 | 118 | 337 | 569 | 1015 | 1157 | 1097 | 778 | 632 | 483 | 493 | 403 | 410 | 300 | 1384 | 9331 |
| 99.999 | Batch | 169 | 1009 | 1284 | 1092 | 907 | 813 | 660 | 589 | 519 | 423 | 322 | 229 | 202 | 184 | 929 | 9331 |
| | Median | 158 | 0 | 127 | 7 | 182 | 39 | 273 | 118 | 438 | 329 | 672 | 538 | 803 | 675 | 4972 | 9331 |
| | Simple | 155 | 94 | 190 | 332 | 663 | 915 | 1043 | 946 | 880 | 554 | 515 | 394 | 452 | 343 | 1855 | 9331 |
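The counts above can be condensed into cumulative shares for easier comparison between methods. This Python sketch assumes each row is a histogram of the 9331 sample locations over the number of observations needed to reach the integrity goal; because the last bin is open-ended (≥15) and may also contain locations that never reach the goal, the computed mean is only a lower bound. Only the 90.000% rows are transcribed here, and the helper names are hypothetical.

```python
from typing import Dict, List

# Rows for the 90.000% integrity goal, transcribed from the table above
# (columns: 1, 2, ..., 14, >=15 observations).
COUNTS_GOAL_90: Dict[str, List[int]] = {
    "Batch": [8311, 803, 177, 31, 9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    "Median": [205, 375, 1004, 1740, 1914, 1475, 996, 543, 376, 226, 183, 103, 90, 44, 57],
    "Simple": [4603, 1065, 630, 751, 781, 491, 370, 221, 133, 80, 66, 37, 42, 23, 38],
}


def cumulative_share(counts: List[int]) -> List[float]:
    """Fraction of samples that reached the goal within k observations, k = 1..15."""
    total = sum(counts)
    shares: List[float] = []
    running = 0
    for c in counts:
        running += c
        shares.append(running / total)
    return shares


def mean_observations_lower_bound(counts: List[int]) -> float:
    """Mean observation number; the open-ended last bin (>=15) is counted as 15,
    so the result is a lower bound on the true mean."""
    total = sum(counts)
    return sum((k + 1) * c for k, c in enumerate(counts)) / total


for method, counts in COUNTS_GOAL_90.items():
    within_3 = cumulative_share(counts)[2]
    mean_lb = mean_observations_lower_bound(counts)
    print(f"{method}: {within_3:.1%} within 3 observations, mean >= {mean_lb:.2f}")
```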


| Methods | Integrity goal 90.000% | Integrity goal 95.000% | Integrity goal 99.000% | Integrity goal 99.900% | Integrity goal 99.990% | Integrity goal 99.999% |
|---|---|---|---|---|---|---|
| Batch | 99.9% | 99.5% | 96.6% | 71.3% | 50.6% | 38.1% |
| Median | 35.6% | 21.5% | 6.1% | 3.6% | 3.2% | 3.1% |
| Simple | 75.5% | 69.1% | 58.8% | 26.8% | 12.6% | 8.3% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Ma, J.; Ye, X. A Batch Pixel-Based Algorithm to Composite Landsat Time Series Images. Remote Sens. 2022, 14, 4252. https://doi.org/10.3390/rs14174252


Chicago/Turabian style: Li, Jianzhou, Jinji Ma, and Xiaojiao Ye. 2022. "A Batch Pixel-Based Algorithm to Composite Landsat Time Series Images." Remote Sensing 14, no. 17: 4252. https://doi.org/10.3390/rs14174252
