A Deep Learning Application to Map Weed Spatial Extent from Unmanned Aerial Vehicles Imagery

Abstract

1. Introduction

2. Methods

2.1. Data Collection

2.1.1. UAV Data

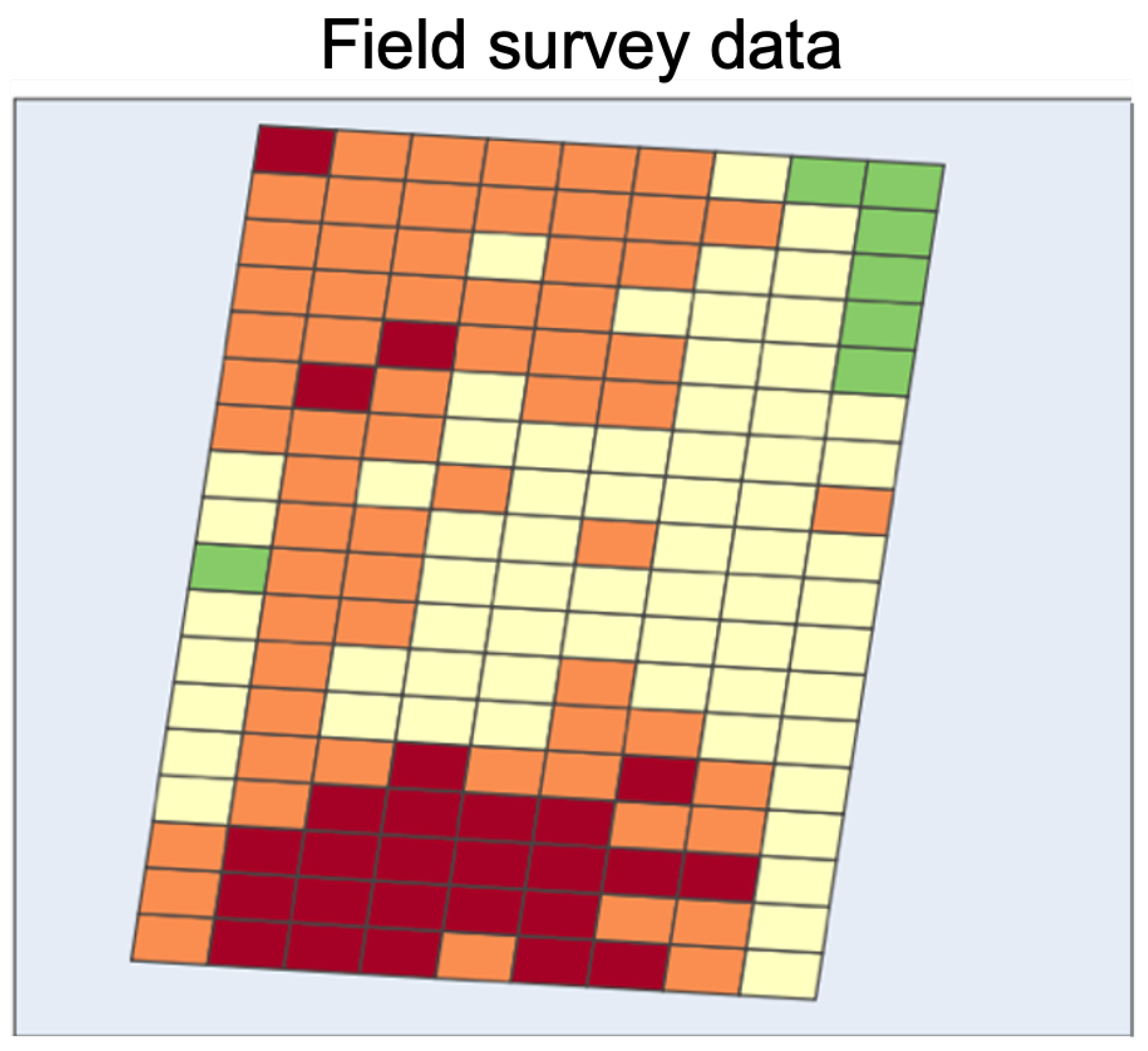

2.1.2. Field Survey Data

2.2. Data Processing

2.2.1. Data Labelling

2.2.2. Dataset Creation

2.3. Data Analysis

2.3.1. Data Modelling

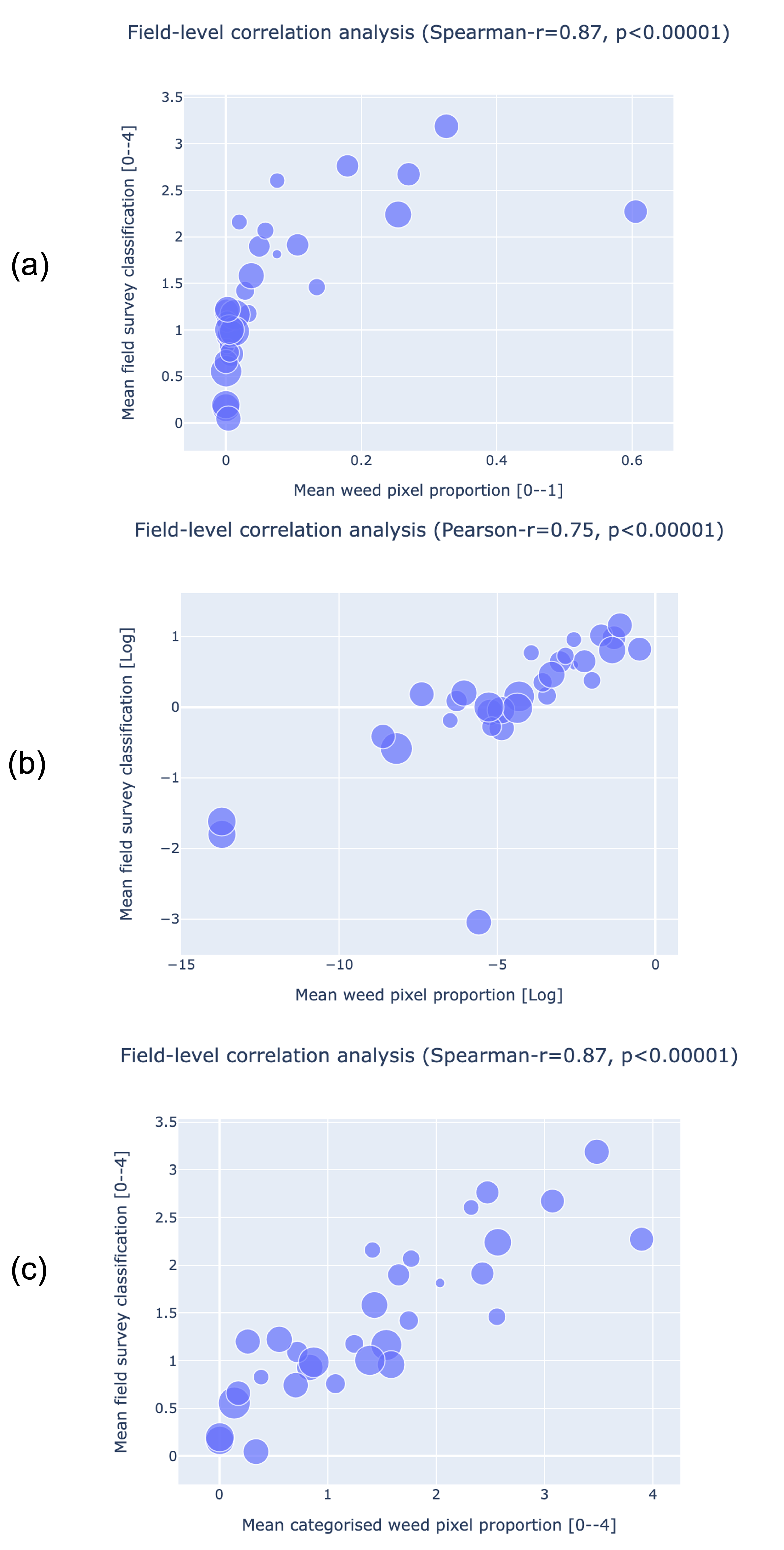

2.3.2. Comparison to Field Survey Data

3. Results

3.1. Model Performance

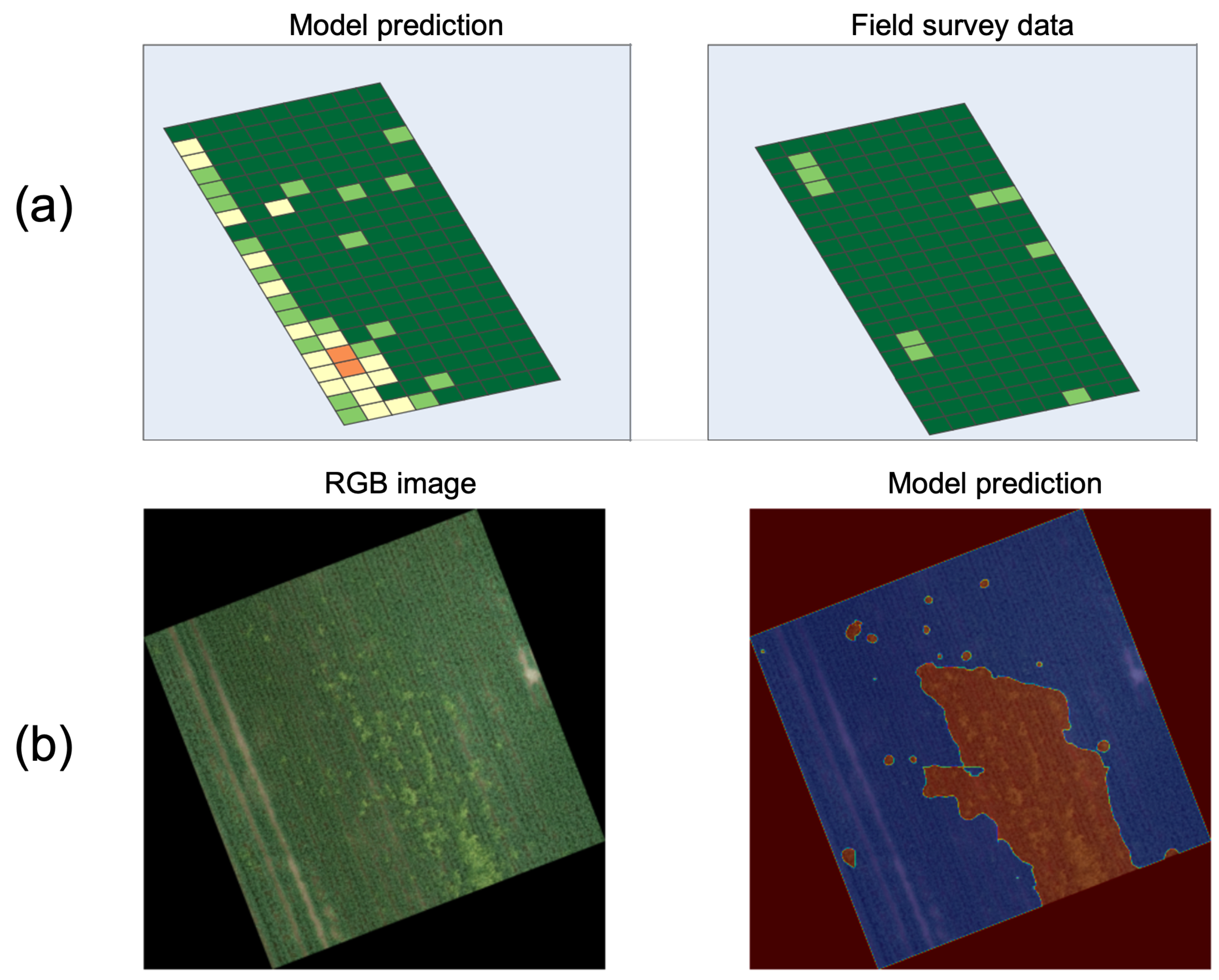

3.2. Comparison to Field Survey Data

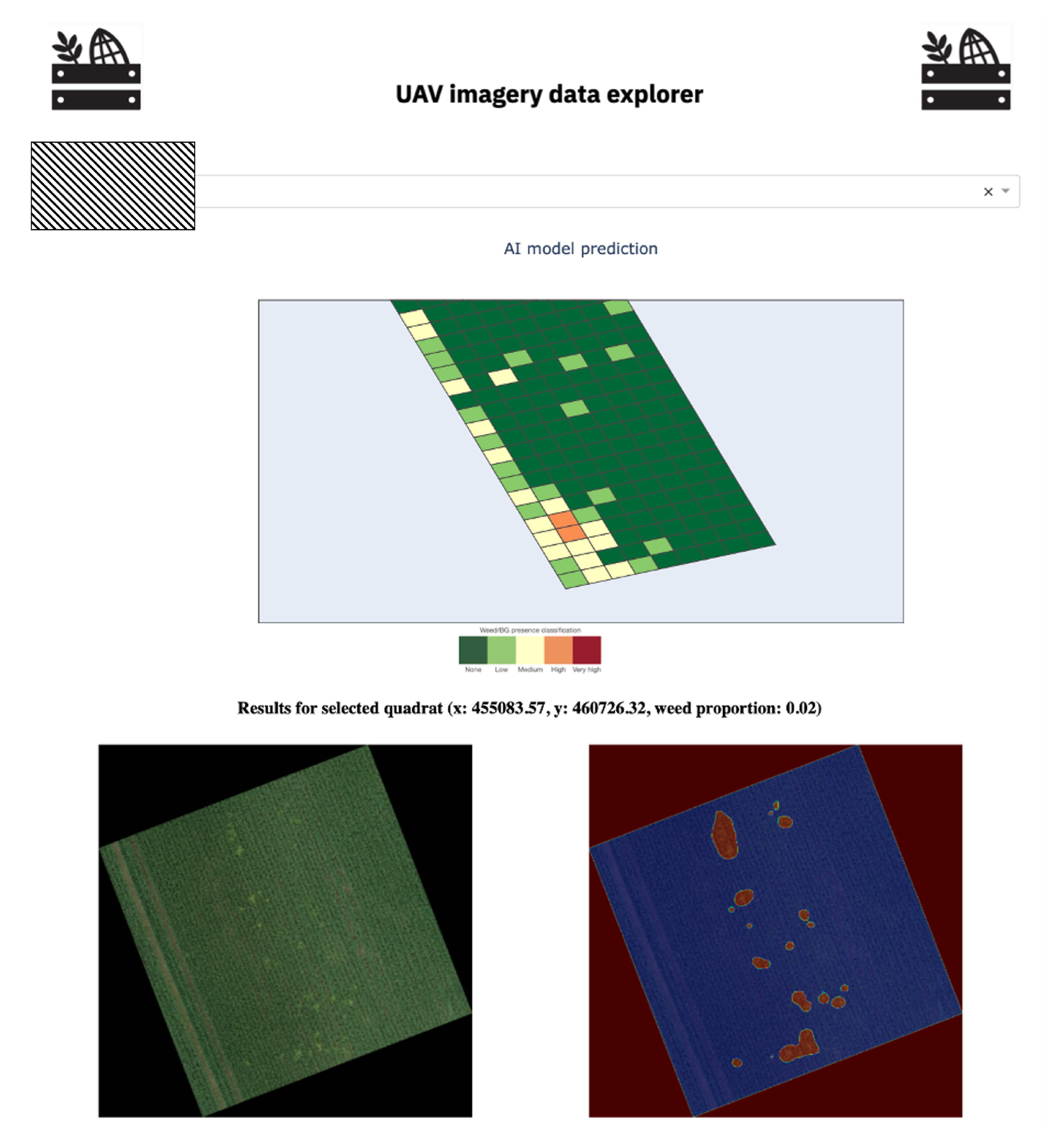

3.3. Model Deployment Pathway

4. Discussion

4.1. Summary

4.2. Comparison to Previous Literature

4.3. Lessons Learned

4.4. Limitations

4.5. Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dataset | Metric | Value (Std) |
|---|---|---|
| Test set | Accuracy | 0.92 (0.01) |
| Test set | IoU | 0.40 (0.03) |
| Test set | F1-score | 0.57 (0.03) |
| Test set | Recall | 0.89 (0.02) |
| Test set | Precision | 0.41 (0.03) |
| Out-of-bag set | Accuracy | 0.91 (0.05) |
| Out-of-bag set | IoU | 0.31 (0.09) |
| Out-of-bag set | F1-score | 0.46 (0.11) |
| Out-of-bag set | Recall | 0.72 (0.23) |
| Out-of-bag set | Precision | 0.35 (0.06) |

| Dataset | Metric | Value (Std) |
|---|---|---|
| Test set | Accuracy | 0.92 (0.01) |
| Test set | IoU | 0.40 (0.02) |
| Test set | F1-score | 0.57 (0.02) |
| Test set | Recall | 0.88 (0.04) |
| Test set | Precision | 0.42 (0.02) |
| Out-of-bag set | Accuracy | 0.91 (0.03) |
| Out-of-bag set | IoU | 0.30 (0.13) |
| Out-of-bag set | F1-score | 0.45 (0.15) |
| Out-of-bag set | Recall | 0.72 (0.27) |
| Out-of-bag set | Precision | 0.35 (0.11) |
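The five metrics reported in the tables above can all be derived from the same pixel-wise confusion counts. The sketch below is illustrative only and is not the authors' evaluation code: it assumes flattened binary masks with weed as the positive class (1) and background as 0, and the function name `segmentation_metrics` and the toy masks are hypothetical.

```python
from typing import Dict, Sequence


def segmentation_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Pixel-wise metrics for flattened binary masks (assumed: 1 = weed, 0 = background)."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")

    # Confusion counts over all pixels.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)

    def ratio(num: int, den: int) -> float:
        # Return 0.0 instead of dividing by zero when a class is absent.
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "iou": ratio(tp, tp + fp + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
    }


# Toy example (hypothetical data): 10 pixels, 3 of them truly weed.
truth = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 1, 0, 0, 0, 0, 0]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

For a single mask, F1 and IoU are tied by F1 = 2·IoU / (1 + IoU), so an IoU of 0.40 corresponds to an F1 of about 0.57, which matches the test-set rows. Values averaged over several runs need not satisfy the identity exactly. Accuracy also counts true negatives, so it can stay high while precision is low when background pixels dominate the image.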

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fraccaro, P.; Butt, J.; Edwards, B.; Freckleton, R.P.; Childs, D.Z.; Reusch, K.; Comont, D. A Deep Learning Application to Map Weed Spatial Extent from Unmanned Aerial Vehicles Imagery. Remote Sens. 2022, 14, 4197. https://doi.org/10.3390/rs14174197
