Abstract

Recently, correlation filter (CF)-based tracking algorithms have attracted extensive interest in the field of unmanned aerial vehicle (UAV) tracking. Nonetheless, existing trackers still struggle with selecting suitable features and alleviating the model drift issue for online UAV tracking. In this paper, a robust CF-based tracker with feature integration and response map enhancement is proposed. Concretely, we develop a novel feature integration method that comprehensively describes the target by leveraging auxiliary gradient information extracted from the binary representation. Subsequently, the integrated features are utilized to learn a background-aware correlation filter (BACF) for generating a response map that indicates the target location. To mitigate the risk of model drift, we introduce saliency awareness in the BACF framework and further propose an adaptive response fusion strategy to enhance the discriminating capability of the response map. Moreover, a dynamic model update mechanism is designed to prevent filter contamination and maintain tracking stability. Experiments on four public benchmarks verify that the proposed tracker outperforms several state-of-the-art algorithms and achieves a real-time tracking speed, so it can be applied efficiently in UAV tracking scenarios.

1. Introduction

Visual object tracking has emerged as a research hotspot in machine vision, as it is widely adopted in various fields, such as pedestrian tracking, traffic control, automatic navigation, human-machine interaction, and medical imaging [1]. The purpose of general tracking is to automatically estimate the location of a moving object in subsequent frames based on its initial information given in the first frame. With the continuous development of commercial unmanned aerial vehicles (UAVs), corresponding practical applications involving aerial tracking [2,3,4], bathymetric monitoring [5,6], aerial photogrammetry [7], disaster response [8] and petrographic investigation [9] have increased rapidly. However, the integration of object tracking techniques into UAV systems remains a challenging issue due to many visual interference factors and specific application constraints [10], such as inadequate sampling resolution, fast motion, severe visual occlusion, acute illumination variation, visual angle change, and scarce computation resources [11].

Traditional tracking algorithms are commonly divided into two types, i.e., generative methods [12,13,14] and discriminative methods [15,16,17,18]. Generally, generative methods focus on seeking a compact representation of the object’s appearance while ignoring background information, which leads to severe performance degradation in complex tracking scenarios. In contrast, discriminative methods employ information from both the target and background to build the object appearance model. Discriminative trackers, which treat object tracking as a binary classification task, are popular for their high accuracy and robustness. Recently, trackers based on deep learning (DL) [19,20,21,22,23,24,25] and correlation filters (CFs) [26,27,28,29,30,31,32] have emerged and developed rapidly. DL-based trackers leverage deep feature representations and large-scale offline learning to achieve state-of-the-art performance. The hierarchical convolutional features (HCF) [19] tracker takes advantage of deep features from three convolutional layers of the pre-trained network. The keyfilter-aware object tracker (KAOT) [20] also utilizes deep convolutional features to enhance the robustness of UAV tracking. The fully-convolutional Siamese network (SiamFC) [21] algorithm employs an end-to-end architecture to learn a similarity measure function offline, and detects the object that is most similar to the template online. Motivated by the success of SiamFC, some further improvements have been made, such as combining the Siamese network with a region proposal network (RPN) [22,33], adding additional branches [23], and exploiting the Transformer architecture [24,34]. In addition, a Siamese network-based algorithm with an anchor proposal network (APN) [25] module has been developed for onboard aerial tracking. However, DL-based trackers require vast amounts of data and high-end graphics processing units (GPUs) for training deep neural networks. It is worth noting that most UAVs are currently not equipped with GPUs due to considerations of cost per unit and the weight of the drone itself. Therefore, deploying deep learning models is not an ideal choice for visual object tracking tasks in aerial videos.

Although they perform slightly worse than DL-based trackers, CF-based trackers achieve a better balance between tracking precision and running speed at low cost, and the correlation filter has therefore become a prominent paradigm for resource-limited UAV platforms. The minimum output sum of squared errors (MOSSE) [26] tracker is a pioneering work in the development of the CF paradigm. Inspired by MOSSE, the kernelized correlation filter (KCF) [27] algorithm introduces the circulant matrix and kernel trick to CF-based tracking. The scale adaptive with multiple features (SAMF) [28] tracker integrates the raw pixels, histogram of oriented gradient (HOG) [35], and color names (CN) [29] features to represent the target object. Somewhat differently, the online multi-feature learning (OMFL) [30] algorithm for UAV tracking utilizes the peak-to-sidelobe ratio (PSR) to weight and fuse the response maps learned from different types of features. Recently, the interval-based response inconsistency (IBRI) [31] and the future-aware correlation filter (FACF) [32] trackers handle the model drift issue in aerial tracking scenarios using historical information and future context information, respectively. The CF paradigm has also been extended by saliency proposals [36], multi-cue fusion [37], kernel cross-correlator [38], particle filter [39] and rotation prediction [40].

In CF-based tracking, a correlation filter model is trained with the ability to classify the compact samples by minimizing a least-squares loss. Then, in consecutive frames, the target is detected on the region of interest (ROI) by applying the trained filter. There are two core characteristics of this tracking-by-detection paradigm. First, massive virtual samples can be obtained by the cyclic shift operation of the ROI. Hence, CF-based trackers have sufficient samples for online training. Second, correlation in the spatial domain can be calculated by the discrete Fourier transform (DFT) as an element-wise multiplication in the frequency domain. Such an advantage greatly accelerates the training and detection procedures of CF-based trackers, and thus real-time tracking is realized. However, learning from cyclically shifted examples in the classical CF paradigm [27] is not always valid. Specifically, since the training patches contain synthetic negative samples, periodic repetitions in such synthetic samples introduce disturbing boundary effects that may deteriorate the discriminative capability of the filters. In previous studies, several approaches have been proposed to reduce the boundary effects while offering adequate training patches [41,42,43,44]. Among these methods, the correlation filter with limited boundaries (CFLB) [41] tracker extracts real negative training samples by incorporating a binary matrix used for cropping patches from background regions. As an extended version of CFLB, the background-aware correlation filter (BACF) [43] employs the HOG [35] instead of the raw pixels in the feature extraction phase. Although the BACF tracker solves the boundary effect problem, it still struggles to adapt to various challenging scenarios because it models the targets tracked by the UAV with a single type of feature. Moreover, the BACF cannot resolve the model drift problem caused by occlusions or distractors, which limits its tracking robustness.
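The second characteristic can be checked numerically. The following is a minimal sketch, assuming NumPy and one-dimensional real signals; the function names are hypothetical and chosen only for illustration. It compares correlation against every cyclic shift, computed explicitly, with the same quantity obtained through an element-wise product in the frequency domain.

```python
import numpy as np

def circular_correlation_direct(x, h):
    """Correlate h with every cyclic shift of x (O(T^2) reference version)."""
    T = len(x)
    # np.roll(x, -j)[n] == x[(n + j) % T], i.e. the j-step cyclic shift of x.
    return np.array([np.dot(np.roll(x, -j), h) for j in range(T)])

def circular_correlation_fft(x, h):
    """Same result via the DFT: one element-wise product, O(T log T)."""
    x_hat = np.fft.fft(x)
    h_hat = np.fft.fft(h)
    return np.real(np.fft.ifft(x_hat * np.conj(h_hat)))

rng = np.random.default_rng(0)
x = rng.standard_normal(64)
h = rng.standard_normal(64)
print(np.allclose(circular_correlation_direct(x, h),
                  circular_correlation_fft(x, h)))
```

The implicit periodicity of the cyclic shifts is also the source of the boundary effects discussed above: the shifted samples wrap around the patch border instead of showing real background.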

In this paper, we propose a robust BACF-based algorithm with feature integration and response map enhancement for UAV tracking applications. The main contributions of this work are three-fold:

- A new feature integration method is presented to combine distinct gradient features in the HSV color space and their binary representation at a hierarchical level for building a discriminative appearance model of the aerial tracking object. This feature-level fusion not only makes use of the gradient and color information together in a more rational way but also delivers better anti-interference ability thanks to the binary representation.

- Saliency awareness is introduced into the correlation filter framework, enabling the tracker to focus on more salient object regions to enhance model discrimination and mitigate model drift. Furthermore, based on a consistent criterion that reveals the quality of the response map, an adaptive response fusion strategy and a dynamic model update mechanism are designed, respectively, for achieving a more reliable decision-level fusion result and avoiding model corruption.

- Exhaustive evaluations are performed on four popular UAV tracking benchmarks, i.e., UAV123@10fps [45], VisDrone2018-SOT [46], UAVTrack112 [25], and UAV20L [45]. The experimental results demonstrate that our approach achieves very competitive performance compared to state-of-the-art trackers, while delivering a real-time tracking speed of 26.7 frames per second (FPS) on a single CPU.

The rest of this paper is organized as follows: Section 2 details the tracking framework of the proposed method. Extensive experiments are conducted in Section 3. Section 4 discusses the results and analyzes the effectiveness of each component. Finally, a brief conclusion of this study is given in Section 5.

2. Proposed Approach

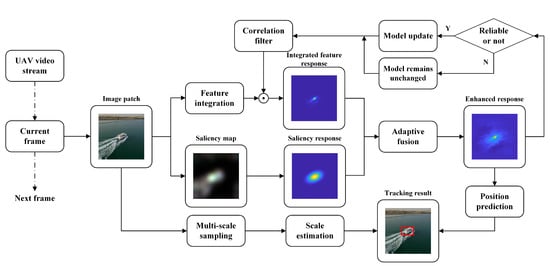

Based on the CF paradigm, a novel tracking pipeline exploiting feature integration and response map enhancement is proposed. In Section 2.1, we first illustrate the proposed feature integration method. In Section 2.2, we describe the filter learning and detection stages of the baseline framework. In Section 2.3, we explain how saliency is incorporated into the framework and provide a description of our adaptive response fusion strategy. Finally, the dynamic model update mechanism and the scale estimation method are illustrated in Section 2.4. The diagram in Figure 1 outlines the workflow of our tracker.

Figure 1.

Overall workflow of the proposed approach.

2.1. Feature Integration Method

Feature extraction can be viewed as one of the most crucial components in the tracking framework. Using adequate features can dramatically boost the tracking performance [47]. Previous works [27,48,49] have verified that gradient and color information is very effective for CF-based trackers. Moreover, the gradient and color features are favorably complementary, since the former has a good tolerance to local geometric changes, while the latter possesses a certain amount of photometric invariance. Although exploiting more powerful features, such as those extracted from convolutional neural networks (CNNs), can yield state-of-the-art performance [50,51,52], it incurs a high computational cost, which is highly undesirable for online UAV tracking. Therefore, based on the handcrafted features, we develop a feature integration method to build a robust appearance model of the tracked object.

Inspired by the work in [36], the input image is first converted from RGB to the HSV (Hue, Saturation, and Value) color space, which corresponds more closely to the manner in which humans perceive color. The elements of each channel’s colormap are in the range of 0 to 1. Secondly, the HSV descriptor is converted to a binary representation by applying a simple threshold. If a value is larger than the threshold, the corresponding feature is set to 1; otherwise, it is set to 0. Compared with the standard HSV descriptor, the binary representation is insensitive to object appearance variations in local details and background interference, which helps tracking algorithms adapt to complex UAV tracking scenarios. Further, our binary representation is extended to construct a group of HOG features [35] across each channel. It should be noted that integrating only the HOG features extracted from the binary representation is not sufficient to achieve precise localization, because these features lack fine-grained descriptions; thus, they are employed as auxiliary features in our approach. Instead, the HOG feature maps extracted from the input grayscale image (equivalent to the Value channel, V-channel for short) are regarded as the primary features. All the primary and auxiliary components are finally concatenated to constitute our integrated features. The process of feature integration is displayed in Figure 2. Thereby, with the contribution of the binary representation, a more natural fusion of color and gradient information is achieved for UAV tracking.

Figure 2.

Illustration of the feature integration process.
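The integration steps described above can be sketched as follows. This is a minimal illustration, assuming NumPy, an RGB input scaled to [0, 1], and a simplified per-cell orientation histogram as a stand-in for the full HOG descriptor [35] (no block normalization); the function names, cell size, and bin count are hypothetical choices, and only the threshold of 0.5 comes from Section 3.1.

```python
import colorsys
import numpy as np

def rgb_to_hsv_image(rgb):
    """Convert an H x W x 3 RGB image in [0, 1] to HSV with channels in [0, 1]."""
    h, s, v = np.vectorize(colorsys.rgb_to_hsv)(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    return np.stack([h, s, v], axis=-1)

def cell_orientation_histograms(channel, cell=4, bins=9):
    """Simplified HOG stand-in: magnitude-weighted orientation histogram per cell."""
    gy, gx = np.gradient(channel.astype(float))
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), np.pi)  # unsigned orientation in [0, pi)
    idx = np.minimum((ang / np.pi * bins).astype(int), bins - 1)
    rows, cols = channel.shape[0] // cell, channel.shape[1] // cell
    feat = np.zeros((rows, cols, bins))
    for i in range(rows):
        for j in range(cols):
            win = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            feat[i, j] = np.bincount(idx[win].ravel(), weights=mag[win].ravel(),
                                     minlength=bins)
    return feat

def integrated_features(rgb, threshold=0.5, cell=4, bins=9):
    """Concatenate primary (V-channel) and auxiliary (binarized HSV) gradient features."""
    hsv = rgb_to_hsv_image(rgb)
    binary = (hsv > threshold).astype(float)  # binary representation of H, S, V
    primary = cell_orientation_histograms(hsv[..., 2], cell, bins)
    auxiliary = [cell_orientation_histograms(binary[..., c], cell, bins)
                 for c in range(3)]
    return np.concatenate([primary] + auxiliary, axis=-1)

patch = np.random.default_rng(0).random((32, 32, 3))
print(integrated_features(patch).shape)  # one primary plus three auxiliary groups
```

In this sketch the primary and auxiliary groups share the same spatial grid, so channel-wise concatenation is enough to form a single multi-channel feature map for the filter.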

2.2. Filter Learning and Detection

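In this work, we utilize BACF [43] as our baseline tracker due to its effectiveness in reducing the boundary effects, as well as its real-time performance. As previously mentioned, our integrated features are exploited to enhance the robustness of the baseline tracker. More specifically, let $\mathbf{x}$ denote a training sample (image patch) with a large search region, let $T$ represent the number of training samples, and let $\mathbf{y}$ stand for the desired correlation response (predefined Gaussian-shaped labels). The correlation filter of the baseline tracker is learned by minimizing the following objective function:

$$E(\mathbf{h}) = \frac{1}{2}\sum_{j=1}^{T}\left\| \mathbf{y}(j) - \sum_{k=1}^{K}\mathbf{h}_k^{\top}\mathbf{P}\mathbf{x}_k[\Delta\tau_j] \right\|_2^2 + \frac{\lambda}{2}\sum_{k=1}^{K}\left\|\mathbf{h}_k\right\|_2^2 \tag{1}$$

where $\mathbf{h}_k$ and $\mathbf{x}_k$, respectively, denote the k-th channel of the learned filter and our vectorized fused feature, $K$ denotes the number of feature channels, $\mathbf{y}(j)$ indicates the j-th element of $\mathbf{y}$, $[\Delta\tau_j]$ stands for the circular shift operator, and $\mathbf{x}_k[\Delta\tau_j]$ applies a j-step circular shift to $\mathbf{x}_k$. $\mathbf{P}$ denotes a $D \times T$ binary matrix that crops the middle $D$ elements of $\mathbf{x}_k$, and $\mathbf{P}\mathbf{x}_k[\Delta\tau_j]$ returns all shifted patches with the size of $D$ from the original image patch. The matrix $\mathbf{P}$ plays an important role in the baseline framework, since it makes the correlation operator act on real negative samples. $\lambda$ represents the regularization parameter, and the superscript ⊤ is the conjugate transpose of a matrix. In this formulation, $\mathbf{y} \in \mathbb{R}^{T}$, $\mathbf{x}_k \in \mathbb{R}^{T}$, and $\mathbf{h}_k \in \mathbb{R}^{D}$, where $T \gg D$. For computational efficiency, Equation (1) is rewritten in the frequency domain as follows:

$$E(\mathbf{h}, \hat{\mathbf{g}}) = \frac{1}{2}\left\|\hat{\mathbf{y}} - \hat{\mathbf{X}}\hat{\mathbf{g}}\right\|_2^2 + \frac{\lambda}{2}\left\|\mathbf{h}\right\|_2^2, \quad \text{s.t.}\ \ \hat{\mathbf{g}} = \sqrt{T}\left(\mathbf{F}\mathbf{P}^{\top} \otimes \mathbf{I}_K\right)\mathbf{h} \tag{2}$$

where $\mathbf{h} = [\mathbf{h}_1^{\top}, \ldots, \mathbf{h}_K^{\top}]^{\top}$, $\hat{\mathbf{g}}$ denotes an auxiliary variable with $\hat{\mathbf{g}} = [\hat{\mathbf{g}}_1^{\top}, \ldots, \hat{\mathbf{g}}_K^{\top}]^{\top}$, the matrix $\hat{\mathbf{X}} = [\mathrm{diag}(\hat{\mathbf{x}}_1)^{\top}, \ldots, \mathrm{diag}(\hat{\mathbf{x}}_K)^{\top}]$, and the superscript $\wedge$ represents the DFT of a signal, such as $\hat{\mathbf{a}} = \sqrt{T}\mathbf{F}\mathbf{a}$. $\mathbf{F}$ denotes the orthonormal $T \times T$ matrix for mapping a vectorized signal with $T$ dimensions to the Fourier domain, $\mathbf{I}_K$ denotes a $K \times K$ identity matrix, and ⊗ stands for the Kronecker product. Employing the Augmented Lagrangian Method (ALM) and the alternating direction method of multipliers (ADMM) algorithm [53], Equation (2) can be solved by splitting it into two subproblems for $\mathbf{h}^{*}$ and $\hat{\mathbf{g}}^{*}$:

$$\begin{cases} \mathbf{h}^{*} = \arg\min\limits_{\mathbf{h}} \left\{ \dfrac{\lambda}{2}\left\|\mathbf{h}\right\|_2^2 + \hat{\boldsymbol{\zeta}}^{\top}\left(\hat{\mathbf{g}} - \sqrt{T}\left(\mathbf{F}\mathbf{P}^{\top} \otimes \mathbf{I}_K\right)\mathbf{h}\right) + \dfrac{\mu}{2}\left\|\hat{\mathbf{g}} - \sqrt{T}\left(\mathbf{F}\mathbf{P}^{\top} \otimes \mathbf{I}_K\right)\mathbf{h}\right\|_2^2 \right\} \\[2ex] \hat{\mathbf{g}}^{*} = \arg\min\limits_{\hat{\mathbf{g}}} \left\{ \dfrac{1}{2}\left\|\hat{\mathbf{y}} - \hat{\mathbf{X}}\hat{\mathbf{g}}\right\|_2^2 + \hat{\boldsymbol{\zeta}}^{\top}\left(\hat{\mathbf{g}} - \sqrt{T}\left(\mathbf{F}\mathbf{P}^{\top} \otimes \mathbf{I}_K\right)\mathbf{h}\right) + \dfrac{\mu}{2}\left\|\hat{\mathbf{g}} - \sqrt{T}\left(\mathbf{F}\mathbf{P}^{\top} \otimes \mathbf{I}_K\right)\mathbf{h}\right\|_2^2 \right\} \end{cases} \tag{3}$$

where $\mu$ represents the penalty factor and $\hat{\boldsymbol{\zeta}} = [\hat{\boldsymbol{\zeta}}_1^{\top}, \ldots, \hat{\boldsymbol{\zeta}}_K^{\top}]^{\top}$ is the Lagrangian vector in the Fourier domain. Each of the above subproblems can be resolved iteratively by the ADMM algorithm.

In the detection phase, the correlation response is calculated by applying the learned filter as:

$$\mathbf{R}_{\mathrm{int}}^{t} = \mathcal{F}^{-1}\left(\sum_{k=1}^{K} \hat{\mathbf{z}}_k^{t} \odot \hat{\mathbf{g}}_k^{t-1}\right) \tag{4}$$

where $\mathbf{R}_{\mathrm{int}}^{t}$ is the correlation response map in frame t, the subscript $\mathrm{int}$ indicates that the response map corresponds to the integrated features ($\mathbf{R}_{\mathrm{int}}$ is called the integrated feature response for short in the following parts), $\mathcal{F}^{-1}$ and ⊙ represent the inverse Fourier transform (IFT) operator and the element-wise product, respectively, $\hat{\mathbf{z}}_k^{t}$ stands for the Fourier form of the extracted feature maps in frame t, and $\hat{\mathbf{g}}_k^{t-1}$ represents the filter in the previous frame.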
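The detection step can be illustrated with a short sketch. It assumes NumPy, two-dimensional multi-channel feature maps, and a filter already available in the Fourier domain; the function name `detect` is hypothetical. Conjugating the filter is the usual correlation convention and is an assumption here, not something the text above states.

```python
import numpy as np

def detect(filters_hat, features):
    """Response map from a Fourier-domain filter and spatial feature maps.

    filters_hat: H x W x K complex array (filter of the previous frame).
    features:    H x W x K real array (features of the current frame).
    """
    z_hat = np.fft.fft2(features, axes=(0, 1))
    # Channel-wise element-wise product, summed over the K channels.
    response_hat = np.sum(np.conj(filters_hat) * z_hat, axis=2)
    return np.real(np.fft.ifft2(response_hat))

# Toy check: a matched filter built from a template localizes a shifted copy.
rng = np.random.default_rng(0)
template = rng.standard_normal((32, 32, 2))
filters_hat = np.fft.fft2(template, axes=(0, 1))
shifted = np.roll(template, shift=(3, 5), axis=(0, 1))
response = detect(filters_hat, shifted)
print(np.unravel_index(np.argmax(response), response.shape))
```

In the toy check, the peak of the response map sits at the displacement applied to the template, which is how the response map indicates the target location.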

2.3. Tracking Incorporating Saliency

Visual saliency is widely deployed in object detection [54,55] and semantic segmentation [56,57]. We assume that it may also be capable of helping the CF-based tracking algorithm to distinguish the target from its background as a supplementary feature that provides object description from another perspective. Therefore, object saliency information is incorporated to enhance correlation tracking in our framework. Starting from the input image patch $\mathbf{I}$, a simple yet effective saliency detection algorithm [58] based on the spectral residual theory is applied to this patch to efficiently generate the saliency map. Specifically, we first construct the amplitude feature $\mathcal{A}(f)$ and the phase feature $\mathcal{P}(f)$ in the frequency domain through DFT calculation. Then, the log spectrum $\mathcal{L}(f) = \log\left(\mathcal{A}(f)\right)$ is adopted to obtain the spectral residual $\mathcal{R}(f)$ by Equation (5):

$$\mathcal{R}(f) = \mathcal{L}(f) - h_n(f) \star \mathcal{L}(f) \tag{5}$$

where ⋆ stands for the convolution operator, and $h_n(f)$ represents the $n \times n$ matrix, expressed as:

$$h_n(f) = \frac{1}{n^2}\begin{pmatrix} 1 & 1 & \cdots & 1 \\ 1 & 1 & \cdots & 1 \\ \vdots & \vdots & \ddots & \vdots \\ 1 & 1 & \cdots & 1 \end{pmatrix} \tag{6}$$

The spectral residual contains the distinct information of an image. It captures a compressed representation of the noticeable objects. Through IFT calculation, the saliency map $\mathcal{S}$ can be generated in the spatial domain by Equation (7):

$$\mathcal{S} = g \star \left| \mathcal{F}^{-1}\left[ \exp\left( \mathcal{R}(f) + i\,\mathcal{P}(f) \right) \right] \right|^2 \tag{7}$$

where $g$ represents a Gaussian matrix used to smooth the saliency map.
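The spectral residual computation can be sketched in a few lines. This is a minimal illustration of the approach in [58], assuming NumPy and a single-channel patch; the averaging window size `n = 3` and the Gaussian width `sigma = 2.5` are assumed values that are not specified in the text, and the helper names are hypothetical.

```python
import numpy as np

def box_filter(img, n=3):
    """n x n mean filter with edge replication (the averaging matrix h_n)."""
    pad = n // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(n):
        for dx in range(n):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (n * n)

def gaussian_smooth(img, sigma=2.5):
    """Separable Gaussian smoothing; rows and columns must be at least as long as the kernel."""
    radius = int(3 * sigma)
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-t ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    tmp = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, tmp)

def spectral_residual_saliency(patch, n=3, sigma=2.5, eps=1e-8):
    """Saliency map of a 2-D patch via the spectral residual."""
    spectrum = np.fft.fft2(patch)
    log_amplitude = np.log(np.abs(spectrum) + eps)   # log spectrum
    phase = np.angle(spectrum)                       # phase feature
    residual = log_amplitude - box_filter(log_amplitude, n)
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    return gaussian_smooth(saliency, sigma)

patch = np.random.default_rng(0).random((64, 64)) * 0.1
patch[24:40, 24:40] += 1.0  # a bright object on a noisy background
print(spectral_residual_saliency(patch).shape)
```

Because only two FFTs and two small filters are involved, the saliency map is cheap to compute on a CPU, which fits the real-time constraint discussed in Section 1.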

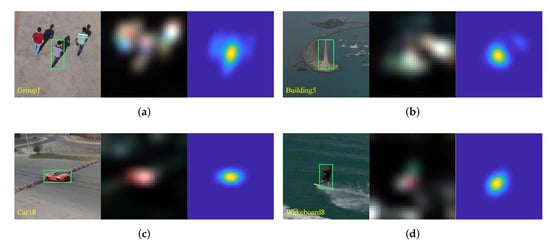

Considering the saliency map as an ordinary single-channel feature map, we train a specific correlation filter for saliency and obtain the saliency response $\mathbf{R}_{\mathrm{sal}}$ in the same way as described in Section 2.2. Figure 3 illustrates the object saliency maps and their correlation response maps in our scheme. It can be observed that embedding saliency into the BACF [43] framework is effective in highlighting the object’s corresponding region and suppressing background interference in the response map. The saliency awareness works as a spatial attention that reflects the foreground information to assist the integrated feature response and thus prevents the tracker from drifting to the background.

Figure 3.

Some visual results of the object saliency awareness. (a) Group1. (b) Building5. (c) Car18. (d) Wakeboard8. The first column is the input image patch centered on the target (with a green bounding box). The second and third columns present the saliency map and its response map, respectively. All these examples are from the UAV123@10fps [45] benchmark.

In the response fusion stage, the original saliency response is first interpolated and adjusted to the same size as the integrated feature response output. If the two response maps were then fused directly, the varying interference and the coarse-grained property of saliency would inevitably introduce unwanted noise into the fusion result. Therefore, an adaptive response fusion strategy based on the confidence of squared response map (CSRM) [59] is introduced to weigh and merge the two response maps in this work. CSRM reflects the degree of fluctuation and the confidence level of the response maps. For a response map $\mathbf{R}$ of size $M \times N$, the CSRM score is defined as:

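The numerator and the denominator of the CSRM represent the peak and the mean square value of the response map, respectively. We use Equation (8) to compute the CSRM scores $C_{\mathrm{int}}$ and $C_{\mathrm{sal}}$ for the response maps $\mathbf{R}_{\mathrm{int}}$ and $\mathbf{R}_{\mathrm{sal}}$. A higher CSRM score means better quality of the response map. Hence, we express the weight of the integrated feature response as the percentage of its CSRM score in the total score obtained by adding $C_{\mathrm{int}}$ and $C_{\mathrm{sal}}$, i.e., $w_{\mathrm{int}} = C_{\mathrm{int}} / (C_{\mathrm{int}} + C_{\mathrm{sal}})$. The weight of the saliency response, $w_{\mathrm{sal}}$, can be determined in the same way. Then, the response maps are linearly added using the adaptive weights to obtain the enhanced response map $\mathbf{R}_{\mathrm{enh}}$, as follows:

$$\mathbf{R}_{\mathrm{enh}} = w_{\mathrm{int}}\,\mathbf{R}_{\mathrm{int}} + w_{\mathrm{sal}}\,\mathbf{R}_{\mathrm{sal}} \tag{9}$$

In the enhanced response map, the coordinate location with the maximum value indicates the estimated target position.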
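The fusion rule can be sketched as follows, assuming NumPy and two response maps of equal size. The exact CSRM formula of [59] is not reproduced here: the `csrm` function below uses an assumed form (squared peak-to-minimum gap of the squared map divided by its mean squared fluctuation) that only matches the verbal description of a peak over a mean square value, and all names are hypothetical.

```python
import numpy as np

def csrm(response, eps=1e-12):
    """Assumed CSRM-style confidence: sharp single peaks score high, flat maps low."""
    sq = response ** 2
    numerator = (sq.max() - sq.min()) ** 2
    denominator = np.mean((sq - sq.min()) ** 2)
    return numerator / (denominator + eps)

def fuse_responses(r_int, r_sal):
    """Weight each map by its share of the total confidence and add them."""
    c_int, c_sal = csrm(r_int), csrm(r_sal)
    w_int = c_int / (c_int + c_sal)
    w_sal = 1.0 - w_int
    fused = w_int * r_int + w_sal * r_sal
    position = np.unravel_index(np.argmax(fused), fused.shape)
    return fused, (w_int, w_sal), position

# Toy maps: a sharp peak (integrated features) and a broad one (saliency).
yy, xx = np.mgrid[0:21, 0:21]
d2 = (yy - 10) ** 2 + (xx - 10) ** 2
r_int = np.exp(-d2 / (2 * 1.0 ** 2))
r_sal = np.exp(-d2 / (2 * 6.0 ** 2))
fused, weights, position = fuse_responses(r_int, r_sal)
print(weights, position)
```

With these toy maps the sharper response receives the larger weight, so a coarse saliency response can support the decision without dominating it.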

2.4. Model Update and Scale Estimation

Due to camera movements and variations in the viewpoint, the target appearance often changes notably in UAV tracking scenes. Consequently, the learned model is expected to adapt to such variations through an online model update mechanism. Nevertheless, when the target experiences more challenging scenarios such as occlusion and background clutter, unreliable tracking results and inopportune model update operations will contaminate the filter model over time, which can also lead to model drift if not immediately addressed. In this study, we design the dynamic model update mechanism based on the simple fact that the response map appears as a sharp peak and the corresponding CSRM score is higher if the object is not occluded by the surroundings. On the contrary, the response map may appear as a relatively flat peak or even multiple peaks and the corresponding CSRM score is lower if the target is experiencing occlusion. Therefore, we introduce a threshold $\theta$ to distinguish whether the tracking result obtained by the enhanced response map is reliable or not. To eliminate variance effects among different UAV videos, we use normalized CSRM scores for comparison with the threshold. An illustration of normalized CSRM score and response map variations with and without occlusion is shown in Figure 4. For the current frame t, a reliable tracking result can be formulated as:

$$\overline{C}_{t} = \frac{C_{t}}{C_{1}} \geq \theta \tag{10}$$

where $\overline{C}_{t}$ denotes the normalized CSRM score, and $C_{1}$ is the first CSRM score of the enhanced response map (calculated at the second frame) in a video sequence.

Figure 4.

Illustration of normalized CSRM score (bottom) and response map (top) variations with and without occlusion. The example frames (middle) are from the VisDrone2018-SOT [46] benchmark. Severe occlusion occurs in frames 100 to 120, which brings a significant reduction of the normalized CSRM scores and causes multiple peaks in the response maps.

When Equation (10) is satisfied, the object template of the current frame is considered reliable. Then, we independently update the integrated feature model to accommodate the object appearance changes, as follows:

$$\hat{\mathbf{x}}_{\mathrm{model}}^{t} = (1 - \eta)\,\hat{\mathbf{x}}_{\mathrm{model}}^{t-1} + \eta\,\hat{\mathbf{x}}^{t} \tag{11}$$

where $\hat{\mathbf{x}}_{\mathrm{model}}^{t}$ and $\hat{\mathbf{x}}_{\mathrm{model}}^{t-1}$, respectively, denote the current frame model and the previous frame model, and $\eta$ denotes the learning rate. If the current tracking result (in other words, the current object template) is unreliable, the integrated feature model is kept unchanged to prevent the degradation of the filter. Considering that the initial target information provides the most credible object template, the saliency model in our mechanism is initialized in the first frame and then remains unchanged for the subsequent frames.
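The update rule can be sketched as a small gate around a linear interpolation. This is a minimal illustration, assuming NumPy arrays for the models; the function and argument names are hypothetical, the normalization by the first CSRM score and the inclusive comparison are assumptions, and the default threshold and learning rate are the values reported in Section 3.1.

```python
import numpy as np

def update_model(model_prev, model_curr, csrm_t, csrm_first,
                 threshold=0.45, learning_rate=0.0127):
    """Return (model, updated): interpolate only when the result is reliable."""
    normalized = csrm_t / csrm_first  # normalize by the first CSRM score
    if normalized < threshold:
        # Unreliable result (e.g. occlusion): keep the previous model as is.
        return model_prev, False
    model = (1.0 - learning_rate) * model_prev + learning_rate * model_curr
    return model, True

prev = np.zeros(4)
curr = np.ones(4)
print(update_model(prev, curr, csrm_t=80.0, csrm_first=100.0))  # updated
print(update_model(prev, curr, csrm_t=30.0, csrm_first=100.0))  # frozen
```

Skipping the update on unreliable frames also saves the cost of retraining the filter on those frames.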

For the scale estimation module, we remove the saliency information for algorithmic acceleration. The correlation filter with the integrated features is applied on five scale search regions, and thus five related response maps are obtained by correlation operations. The estimated target scale is determined by the scale factor corresponding to the maximum value in all of these response maps. To sum up, the main steps of the proposed approach are shown in Algorithm 1.
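The scale selection step can be sketched as follows, assuming NumPy and that the five response maps have already been computed. The scale step of 1.01 is an assumed value for illustration; the text does not state the scale factors, and the function name is hypothetical.

```python
import numpy as np

def estimate_scale(responses, scale_factors):
    """Pick the scale factor whose response map contains the global maximum."""
    peaks = [float(np.max(r)) for r in responses]
    best = int(np.argmax(peaks))
    return float(scale_factors[best]), best

scale_step = 1.01                               # assumed step between scales
scale_factors = scale_step ** np.arange(-2, 3)  # five scales around 1.0

rng = np.random.default_rng(0)
responses = [rng.random((8, 8)) * 0.5 for _ in range(5)]
responses[3][4, 4] = 0.9                        # strongest peak at the fourth scale
print(estimate_scale(responses, scale_factors))
```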

Algorithm 1: The proposed tracker.

3. Experiments

In this section, the proposed algorithm is compared with 14 other recently proposed state-of-the-art CF-based tracking algorithms, i.e., ECO-HC [48], BACF [43], CSR-DCF [44], CACF [60], STRCF [61], MCCT-H [37], KCC [38], LDES [40], ARCF [2], OMFL [30], AutoTrack [3], DR2Track [4], IBRI [31], and SITUP [62]. We employ two benchmark metrics [63], precision and success rate, to assess tracking accuracy for all tested trackers. In addition, the average FPS is used to measure tracking speed.
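The two accuracy metrics can be sketched as follows, assuming NumPy and boxes given as (x, y, width, height) rows. The 20-pixel center error threshold for precision and the area under the success curve over overlap thresholds in [0, 1] are the conventional settings of this evaluation protocol and are assumptions here, since the text does not state them; the function names are hypothetical.

```python
import numpy as np

def center_errors(pred, gt):
    """Euclidean distance between predicted and ground-truth box centers."""
    pred_centers = pred[:, :2] + pred[:, 2:] / 2.0
    gt_centers = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_centers - gt_centers, axis=1)

def overlaps(pred, gt):
    """Intersection over union for each pair of boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def precision(pred, gt, pixel_threshold=20.0):
    """Fraction of frames whose center error is within the pixel threshold."""
    return float(np.mean(center_errors(pred, gt) <= pixel_threshold))

def success_auc(pred, gt, thresholds=np.linspace(0.0, 1.0, 21)):
    """Mean success rate over the overlap thresholds (area under the curve)."""
    iou = overlaps(pred, gt)
    return float(np.mean([np.mean(iou > t) for t in thresholds]))

gt = np.array([[10.0, 10.0, 40.0, 30.0], [12.0, 11.0, 40.0, 30.0]])
pred = gt + np.array([5.0, 0.0, 0.0, 0.0])  # boxes shifted 5 px to the right
print(precision(pred, gt), success_auc(pred, gt))
```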

3.1. Implementation Details

All compared trackers and ours are implemented in MATLAB R2017a on an Intel Core i7-8700 3.2 GHz CPU with 16 GB RAM. The main parameter settings of our tracker are presented as follows: the threshold for binary representation is chosen as 0.5, the regularization coefficient is set to 0.001, the penalty factor is set to 1, the threshold for determining the reliability of the enhanced response map is 0.45, and the learning rate is set to 0.0127. All parameter values remain fixed in our experiments.
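For reference, the reported settings can be collected in one place. The sketch below is a hypothetical Python container; the class and field names are illustrative and do not come from the authors' MATLAB implementation, while the values are those listed above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrackerParams:
    """Parameter values reported in Section 3.1 (field names are illustrative)."""
    binary_threshold: float = 0.5        # threshold for the binary representation
    regularization: float = 0.001        # regularization coefficient
    admm_penalty: float = 1.0            # penalty factor
    reliability_threshold: float = 0.45  # threshold on the normalized CSRM score
    learning_rate: float = 0.0127        # model update learning rate

params = TrackerParams()
print(params)
```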

3.2. Quantitative Evaluation

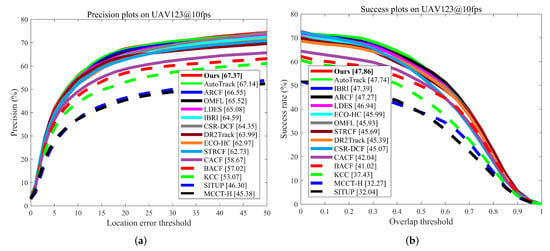

3.2.1. Overall Performance Evaluation

To comprehensively evaluate the overall performance, we first conduct the experiments on three common UAV tracking benchmarks, i.e., UAV123@10fps [45], VisDrone2018-SOT [46], and UAVTrack112 [25]. Precision and success plots of our algorithm and other compared trackers on these three benchmarks are presented in Figure 5, Figure 6 and Figure 7. Compared to the baseline tracker BACF [43], our approach has a significant advantage of 10.35% in precision and 6.84% in success rate. As shown in Figure 5, the proposed tracker achieves the best performance in both precision and success rate on the UAV123@10fps benchmark. As presented in Figure 6, our tracker also ranks first in both precision and success rate on the VisDrone2018-SOT benchmark, with advantages of 1.39% and 1.47%, respectively, over the second-best tracker. As shown in Figure 7, our tracker ranks first in precision and second in success rate on the UAVTrack112 benchmark. Subsequently, all trackers are also tested on the long-term benchmark UAV20L [45]. The evaluation results are presented in Figure 8. The results indicate that the proposed tracker achieves the best performance in success rate and the second-best performance in precision, which is slightly lower than that of OMFL [30].

Figure 5.

Overall evaluation on the UAV123@10fps [45] benchmark. (a) Precision plots. (b) Success plots.

Figure 6.

Overall evaluation on the VisDrone2018-SOT [46] benchmark. (a) Precision plots. (b) Success plots.

Figure 7.

Overall evaluation on the UAVTrack112 [25] benchmark. (a) Precision plots. (b) Success plots.

Figure 8.

Overall evaluation on the UAV20L [45] benchmark. (a) Precision plots. (b) Success plots.

Besides satisfactory tracking accuracy, the tracking speed of our algorithm is capable of meeting the real-time requirements, as shown in Table 1.

Table 1.

Real-time performance comparison on the UAV123@10fps [45] benchmark. All results are generated solely by a desktop CPU.

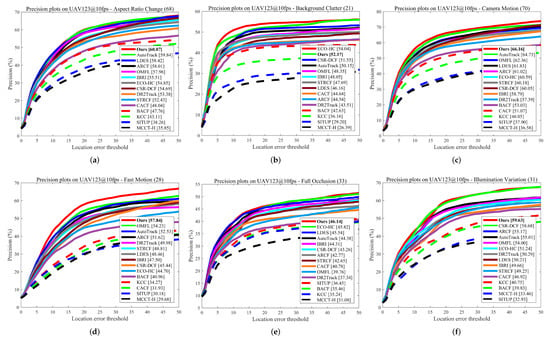

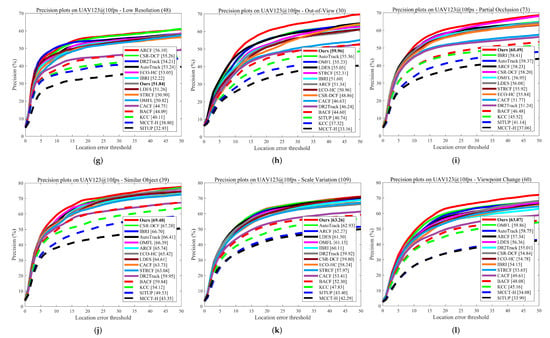

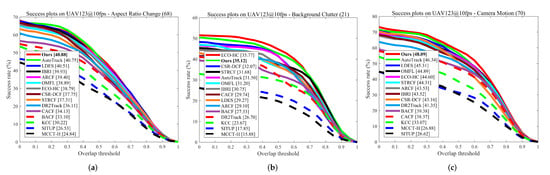

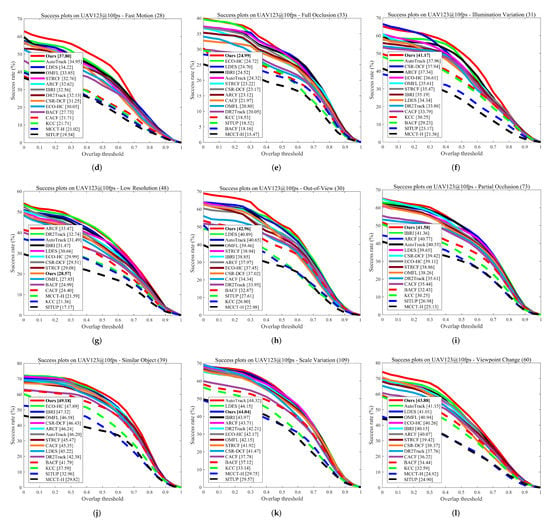

3.2.2. Attribute-Based Evaluation

To analyze the tracking performance under UAV-specific challenges in more detail, the UAV123@10fps [45] benchmark annotates the video sequences with 12 different attributes, i.e., aspect ratio change (ARC), background clutter (BC), camera motion (CM), fast motion (FM), full occlusion (FOC), illumination variation (IV), low resolution (LR), out-of-view (OV), partial occlusion (POC), similar object (SOB), scale variation (SV), and viewpoint change (VC). We report the experimental results of attribute-based performance comparisons in Figure 9 and Figure 10. The observations reveal that the proposed approach performs very competitively against the other compared methods. In terms of precision, our approach ranks first on 10 attributes, namely ARC, CM, FM, FOC, IV, OV, POC, SOB, SV, and VC. As shown in Figure 9, our tracker performs 0.23%, 1.43%, 3.61%, 0.32%, 0.95%, 4.60%, 2.08%, 2.12%, 0.33%, and 3.21% better on these attributes, respectively, than the second-best tracker. Note that the proposed algorithm also surpasses the baseline BACF [43] by a large margin (more than 10%) on these 10 attributes. In terms of success rate, Figure 10 shows that our approach obtains the best results on all attributes except BC, LR, and SV. Especially under the visual interference factors unique to UAV tracking, our tracker achieves notable improvements compared to the second-best tracker, i.e., 2.75% on CM, 2.85% on FM, 3.21% on IV, and 2.73% on VC.

Figure 9.

Precision plots on different attributes of the UAV123@10fps [45] benchmark. (a) Aspect ratio change (ARC). (b) Background clutter (BC). (c) Camera motion (CM). (d) Fast motion (FM). (e) Full occlusion (FOC). (f) Illumination variation (IV). (g) Low resolution (LR). (h) Out-of-view (OV). (i) Partial occlusion (POC). (j) Similar object (SOB). (k) Scale variation (SV). (l) Viewpoint change (VC).

Figure 10.

Success plots on different attributes of the UAV123@10fps [45] benchmark. (a) Aspect ratio change. (b) Background clutter. (c) Camera motion. (d) Fast motion. (e) Full occlusion. (f) Illumination variation. (g) Low resolution. (h) Out-of-view. (i) Partial occlusion. (j) Similar object. (k) Scale variation. (l) Viewpoint change.

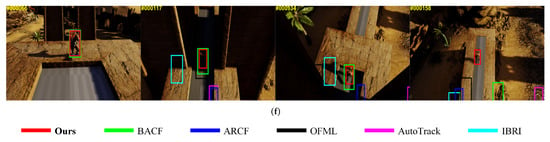

3.3. Qualitative Evaluation

For a more intuitive evaluation, qualitative comparisons are conducted between the proposed tracker and five other representative trackers, including ARCF [2], OMFL [30], AutoTrack [3], IBRI [31], and the baseline method BACF [43]. Figure 11 illustrates some keyframes with the tracking results on six video sequences (from the UAV123@10fps [45] benchmark), i.e., Car1-2, Person19, Wakeboard5, Uav1-2, Car1-s, and Person3-s. In the Car1-2 sequence, the small target is greatly disturbed by the similar objects around it. It can be observed that BACF and OMFL lose the target, while our tracker keeps tracking it successfully. In the Person19 sequence, the target moves out of view gradually. Notably, among all the trackers, only IBRI and ours can re-identify the target when it appears again. In the Wakeboard5 sequence, the target experiences significant deformation and scale variations. BACF, AutoTrack, and IBRI completely drift away from the target, while our tracker estimates both the position and scale accurately. In the Uav1-2 sequence, none of the compared trackers performs well due to the fast motion and viewpoint change. In contrast, our tracker effectively adapts to these two attributes. As for the Car1-s and Person3-s sequences, which are generated by the UE4-based simulator [45], it should be noted that all the trackers except ours fail to locate the targets in the complex scenarios with multiple interference factors including poor illumination, severe viewpoint change, and scale variation.

Figure 11.

Qualitative evaluation on 6 challenging video sequences of the UAV123@10fps [45] benchmark. (a) Car1-2. (b) Person19. (c) Wakeboard5. (d) Uav1-2. (e) Car1-s. (f) Person3-s.

4. Discussion

In general, the quantitative and qualitative experimental results demonstrate the effectiveness and robustness of the proposed algorithm in UAV tracking. As shown in Figure 5, Figure 6, Figure 7 and Figure 8, on each of the four public benchmarks [25,45,46], our tracker obtains the best or the second-best results in terms of precision and success rate. The overall evaluation verifies the superiority of our algorithm in short-term UAV tracking, as well as in long-term tracking scenarios when severe occlusion and out-of-view conditions occur. As shown in Figure 9 and Figure 10, our tracker performs well on most attributes and is applicable to almost all types of complex scenes. The attribute-based evaluation confirms the robustness and versatility of our proposed algorithm under different tracking challenges. Meanwhile, the qualitative results further validate the effectiveness of our algorithm and its capability of suppressing model drift caused by occlusion, out-of-view, or background interference.

To analyze the impact of each component in our tracking architecture, we provide the performance of our tracker with different modules on the UAV123@10fps [45] benchmark in Table 2. The notation is as follows: FI denotes feature integration, RME stands for response map enhancement, and DMU means dynamic model update. Table 2 shows that all three modules contribute to the substantial improvement over the baseline tracker [43]. The integrated features boost the tracking performance in terms of both precision and success rate by a large margin. The enhanced response map achieved by adaptively incorporating the saliency response further improves the tracking accuracy. Our simple model update mechanism can not only avoid model contamination but also effectively reduce computational complexity.

Table 2.

Ablation study of our tracker on the UAV123@10fps [45] benchmark.

5. Conclusions

In this work, a novel CF-based algorithm is proposed for UAV tracking. By virtue of an effective feature integration method, the correlation filter with integrated features delivers a significant improvement in adapting to different challenges such as object geometry changes and illumination variations. Saliency awareness is then integrated into the tracking framework, which helps mitigate model drift. Afterwards, decision-level fusion is achieved through an adaptive response fusion strategy, resulting in an enhanced response map with relatively little background interference. Finally, we utilize a dynamic model update mechanism to maintain model stability in the presence of occlusions. Extensive experiments on four UAV tracking benchmarks validate the superiority of the proposed tracker over other state-of-the-art trackers. Moreover, our tracker is capable of efficient tracking with low computational requirements, which is promising for the further development of real-time UAV applications.

Author Contributions

Conceptualization, B.L. and Y.L.; methodology, B.L. and Y.L.; software, B.L.; writing—original draft preparation, B.L.; writing—review and editing, Y.B. and B.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61871460, and in part by the Natural Science Foundation of Guangxi under Grant 2019GXNSFBA245056.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The UAV123@10fps, UAV20L, VisDrone2018-SOT, and UAVTrack112 datasets are openly available via the following links: https://cemse.kaust.edu.sa/ivul/uav123 (UAV123@10fps and UAV20L); https://github.com/VisDrone/VisDrone-Dataset (VisDrone2018-SOT); https://github.com/vision4robotics/SiamAPN (UAVTrack112) (all accessed on 6 June 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kumar, A.; Walia, G.S.; Sharma, K. Recent Trends in Multicue Based Visual Tracking: A Review. Expert Syst. Appl. 2020, 162, 113711.

- Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning Aberrance Repressed Correlation Filters for Real-Time UAV Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2891–2900.

- Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV With Automatic Spatio-Temporal Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11920–11929.

- Fu, C.; Ding, F.; Li, Y.; Jin, J.; Feng, C. DR2Track: Towards Real-Time Visual Tracking for UAV via Distractor Repressed Dynamic Regression. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1597–1604.

- Specht, M.; Stateczny, A.; Specht, C.; Widźgowski, S.; Lewicka, O.; Wiśniewska, M. Concept of an Innovative Autonomous Unmanned System for Bathymetric Monitoring of Shallow Waterbodies (INNOBAT System). Energies 2021, 14, 5370.

- Wang, D.; Xing, S.; He, Y.; Yu, J.; Xu, Q.; Li, P. Evaluation of a New Lightweight UAV-Borne Topo-Bathymetric LiDAR for Shallow Water Bathymetry and Object Detection. Sensors 2022, 22, 1379.

- Burdziakowski, P. Increasing the Geometrical and Interpretation Quality of Unmanned Aerial Vehicle Photogrammetry Products using Super-Resolution Algorithms. Remote Sens. 2020, 12, 810.

- Yuan, C.; Liu, Z.; Zhang, Y. Aerial Images-Based Forest Fire Detection for Firefighting Using Optical Remote Sensing Techniques and Unmanned Aerial Vehicles. J. Intell. Robot. Syst. 2017, 88, 635–654.

- Nikolakopoulos, K.G.; Lampropoulou, P.; Fakiris, E.; Sardelianos, D.; Papatheodorou, G. Synergistic Use of UAV and USV Data and Petrographic Analyses for the Investigation of Beachrock Formations: A Case Study from Syros Island, Aegean Sea, Greece. Minerals 2018, 8, 534.

- Fu, C.; Xu, J.; Lin, F.; Guo, F.; Liu, T.; Zhang, Z. Object Saliency-Aware Dual Regularized Correlation Filter for Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8940–8951.

- Fu, C.; Li, B.; Ding, F.; Lin, F.; Lu, G. Correlation Filters for Unmanned Aerial Vehicle-Based Aerial Tracking: A Review and Experimental Evaluation. IEEE Geosci. Remote Sens. Mag. 2022, 10, 125–160.

- Jia, X.; Lu, H.; Yang, M.H. Visual Tracking via Adaptive Structural Local Sparse Appearance Model. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1822–1829.

- Sevilla-Lara, L.; Learned-Miller, E. Distribution Fields for Tracking. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1910–1917.

- He, S.; Yang, Q.; Lau, R.W.; Wang, J.; Yang, M.H. Visual Tracking via Locality Sensitive Histograms. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2427–2434.

- Babenko, B.; Yang, M.H.; Belongie, S. Robust Object Tracking with Online Multiple Instance Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1619–1632.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

- Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust Tracking via Multiple Experts Using Entropy Minimization. In ECCV 2014: Proceedings of the Computer Vision, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 188–203.

- Hare, S.; Golodetz, S.; Saffari, A.; Vineet, V.; Cheng, M.M.; Hicks, S.L.; Torr, P.H.S. Struck: Structured Output Tracking with Kernels. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2096–2109.

- Ma, C.; Huang, J.B.; Yang, X.; Yang, M.H. Hierarchical Convolutional Features for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

- Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Intermittent Contextual Learning for Keyfilter-Aware UAV Object Tracking Using Deep Convolutional Feature. IEEE Trans. Multimed. 2021, 23, 810–822.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In ECCV 2016 Workshops: Proceedings of the Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Hua, G., Jégou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 850–865.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast Online Object Tracking and Segmentation: A Unifying Approach. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8122–8131.

- Fu, C.; Cao, Z.; Li, Y.; Ye, J.; Feng, C. Onboard Real-Time Aerial Tracking with Efficient Siamese Anchor Proposal Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In ECCV 2014 Workshops: Proceedings of the Computer Vision, Zurich, Switzerland, 6–12 September 2014; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 254–265.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; Van De Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

- Fu, C.; Lin, F.; Li, Y.; Chen, G. Correlation Filter-Based Visual Tracking for UAV with Online Multi-Feature Learning. Remote Sens. 2019, 11, 549.

- Fu, C.; Ye, J.; Xu, J.; He, Y.; Lin, F. Disruptor-Aware Interval-Based Response Inconsistency for Correlation Filters in Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6301–6313.

- Zhang, F.; Ma, S.; Yu, L.; Zhang, Y.; Qiu, Z.; Li, Z. Learning Future-Aware Correlation Filters for Efficient UAV Tracking. Remote Sens. 2021, 13, 4111.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems—NIPS’17, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Zhu, G.; Wang, J.; Wu, Y.; Zhang, X.; Lu, H. MC-HOG Correlation Tracking with Saliency Proposal. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2016.

- Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue Correlation Filters for Robust Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4844–4853.

- Wang, C.; Zhang, L.; Xie, L.; Yuan, J. Kernel Cross-Correlator. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4179–4186.

- Zhang, T.; Xu, C.; Yang, M.H. Learning Multi-Task Correlation Particle Filters for Visual Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 365–378.

- Li, Y.; Zhu, J.; Hoi, S.C.; Song, W.; Wang, Z.; Liu, H. Robust Estimation of Similarity Transformation for Visual Object Tracking. In AAAI’19/IAAI’19/EAAI’19: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Menlo Park, CA, USA, 2019.

- Galoogahi, H.K.; Sim, T.; Lucey, S. Correlation Filters with Limited Boundaries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4630–4638.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

- Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1144–1152.

- Lukežic, A.; Vojír, T.; Zajc, L.C.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4847–4856.

- Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In ECCV 2016: Proceedings of the Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 445–461.

- Wen, L.; Zhu, P.; Du, D.; Bian, X.; Ling, H.; Hu, Q.; Liu, C.; Cheng, H.; Liu, X.; Ma, W.; et al. VisDrone-SOT2018: The Vision Meets Drone Single-Object Tracking Challenge Results. In ECCV 2018 Workshops: Proceedings of the Computer Vision, Munich, Germany, 8–14 September 2018; Leal-Taixé, L., Roth, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 469–495.

- Wang, N.; Shi, J.; Yeung, D.Y.; Jia, J. Understanding and Diagnosing Visual Tracking Systems. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3101–3109.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6931–6939.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

- Dai, K.; Wang, D.; Lu, H.; Sun, C.; Li, J. Visual Tracking via Adaptive Spatially-Regularized Correlation Filters. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4665–4674.

- Bhat, G.; Johnander, J.; Danelljan, M.; Khan, F.S.; Felsberg, M. Unveiling the Power of Deep Tracking. In ECCV 2018: Proceedings of the Computer Vision, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 493–509.

- Xu, T.; Feng, Z.H.; Wu, X.J.; Kittler, J. Joint Group Feature Selection and Discriminative Filter Learning for Robust Visual Object Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7949–7959.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Foundations and Trends: Hanover, MA, USA, 2011.

- Huang, R.; Feng, W.; Sun, J. Color Feature Reinforcement for Cosaliency Detection Without Single Saliency Residuals. IEEE Signal Process. Lett. 2017, 24, 569–573.

- Tang, Y.; Zou, W.; Jin, Z.; Chen, Y.; Hua, Y.; Li, X. Weakly Supervised Salient Object Detection With Spatiotemporal Cascade Neural Networks. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1973–1984.

- Wei, Y.; Feng, J.; Liang, X.; Cheng, M.M.; Zhao, Y.; Yan, S. Object Region Mining with Adversarial Erasing: A Simple Classification to Semantic Segmentation Approach. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6488–6496.

- Wang, X.; You, S.; Li, X.; Ma, H. Weakly-Supervised Semantic Segmentation by Iteratively Mining Common Object Features. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1354–1362.

- Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Zhang, Y.; Yang, Y.; Zhou, W.; Shi, L.; Li, D. Motion-Aware Correlation Filters for Online Visual Tracking. Sensors 2018, 18, 3937.

- Mueller, M.; Smith, N.; Ghanem, B. Context-Aware Correlation Filter Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1387–1395.

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4904–4913.

- Ma, H.; Acton, S.T.; Lin, Z. SITUP: Scale Invariant Tracking Using Average Peak-to-Correlation Energy. IEEE Trans. Image Process. 2020, 29, 3546–3557.

- Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).