Evaluation of SAR and Optical Data for Flood Delineation Using Supervised and Unsupervised Classification

Abstract

1. Introduction

- How to deal efficiently with the challenges stemming from the heterogeneity and overlap of the multispectral (MS) and SAR signatures of surface water types.

- How to delineate fragmented flood water patches and correctly estimate the total flooded area.

- How to identify an optimal combination of optical, SAR, and textural signatures with regard to both accuracy and computational efficiency.

- What the comparative advantages of artificial intelligence (AI) algorithms are over relatively simple thresholding and segmentation methods.

2. Materials and Methods

2.1. Case Studies: Sesia and Enza Rivers

2.2. Datasets

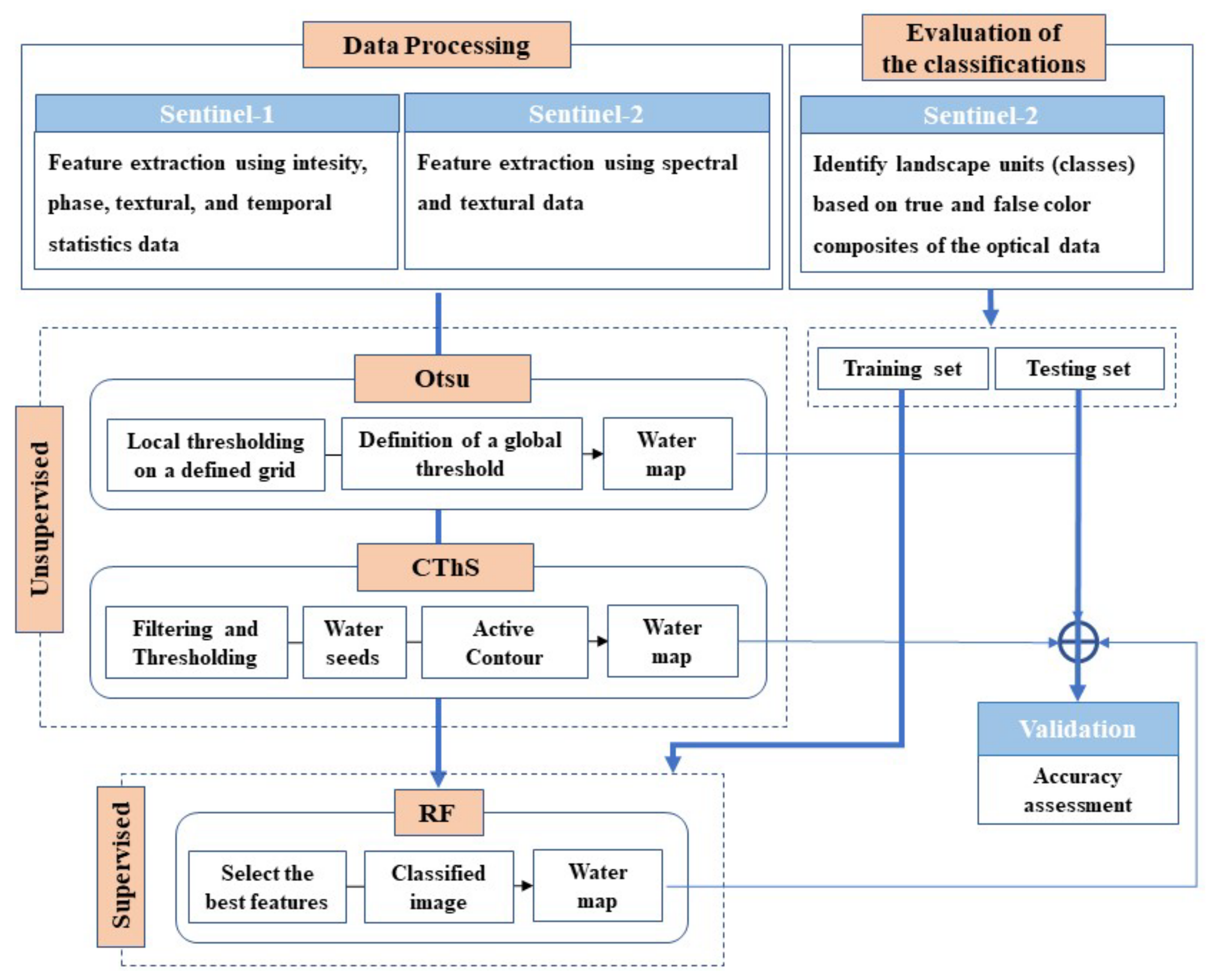

2.3. Methods

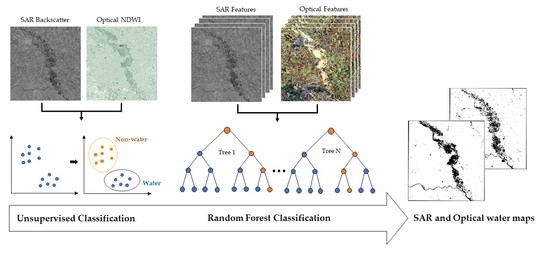

2.3.1. Overview of the Approach

2.3.2. Unsupervised Methods

- Otsu thresholding method

- The CThS method (combination of thresholding and segmentation)
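As a minimal sketch of the first unsupervised method listed above, Otsu's threshold (Otsu, 1979, cited in the References) picks the histogram split that maximises the between-class variance. The pure-Python function below is illustrative only and is not the authors' implementation: the backscatter values, the 256-bin histogram, and the convention that pixels at or below the threshold are labelled as water are all assumptions made for the example.

```python
def otsu_threshold(values, bins=256):
    """Return the split that maximises between-class variance (Otsu, 1979)."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo  # constant image: no meaningful split exists
    width = (hi - lo) / bins

    # Build a histogram with `bins` equal-width bins over [lo, hi].
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1

    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(c * h for c, h in zip(centers, hist))

    best_var, best_bin = -1.0, 0
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]                 # pixel count of the lower class
        sum0 += centers[i] * hist[i]  # intensity sum of the lower class
        w1 = total - w0               # pixel count of the upper class
        if w0 == 0 or w1 == 0:
            continue
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2  # proportional to sigma_b^2
        if var_between > best_var:
            best_var, best_bin = var_between, i

    return lo + (best_bin + 1) * width  # upper edge of the best bin


# Hypothetical VV backscatter samples in dB: a dark (water-like) cluster
# and a brighter (land-like) cluster.
water_like = [-22 + 0.1 * i for i in range(40)]
land_like = [-10 + 0.1 * i for i in range(60)]
sample = water_like + land_like

threshold = otsu_threshold(sample)
water_mask = [v <= threshold for v in sample]
```

On a clearly bimodal sample such as this one the threshold falls in the gap between the two clusters. When the water and land modes overlap, a single global threshold is less reliable.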

2.3.3. Supervised Random Forest Classification

2.3.4. Feature Generation

2.3.5. Evaluation of the Classifications

3. Results

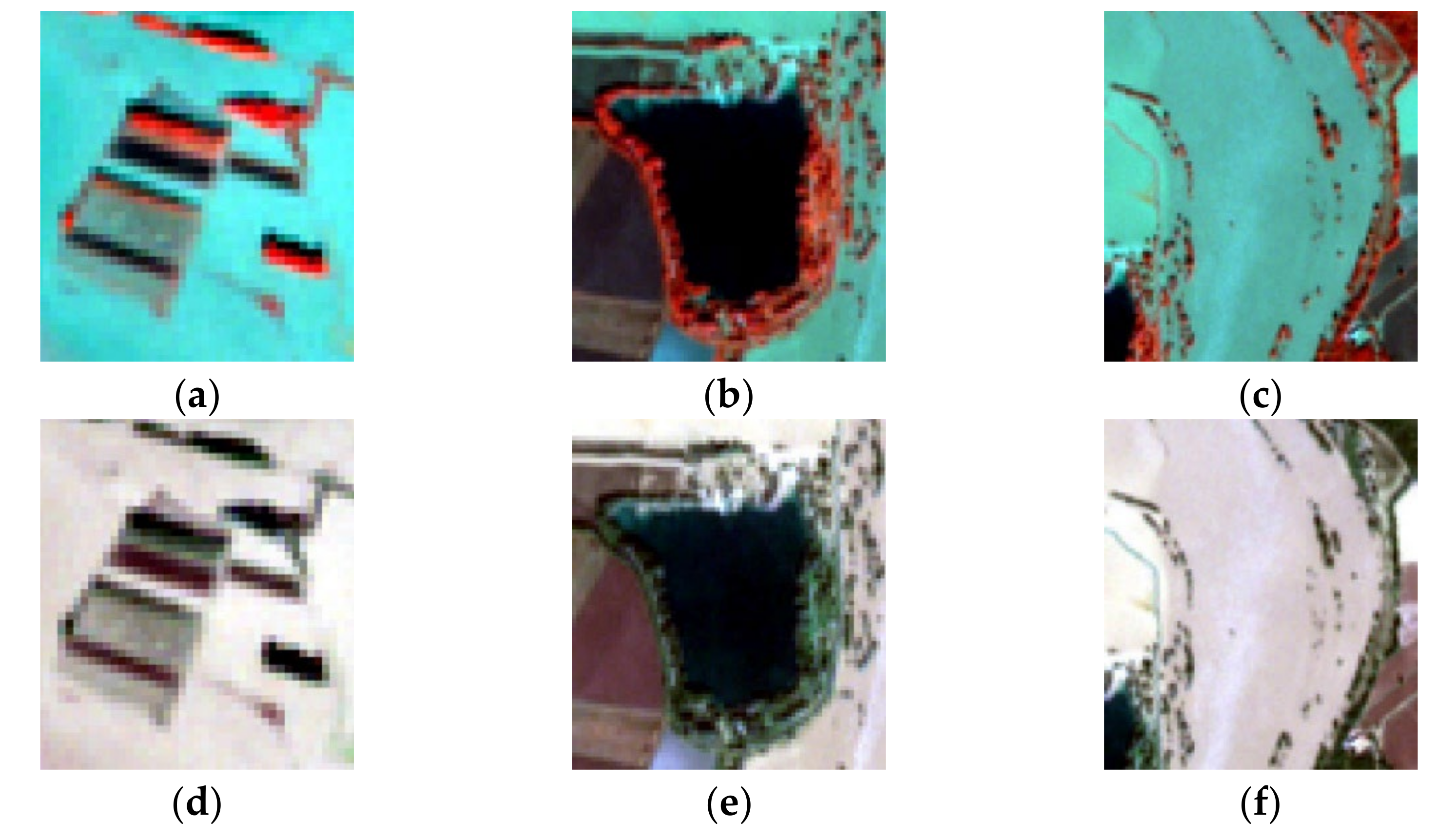

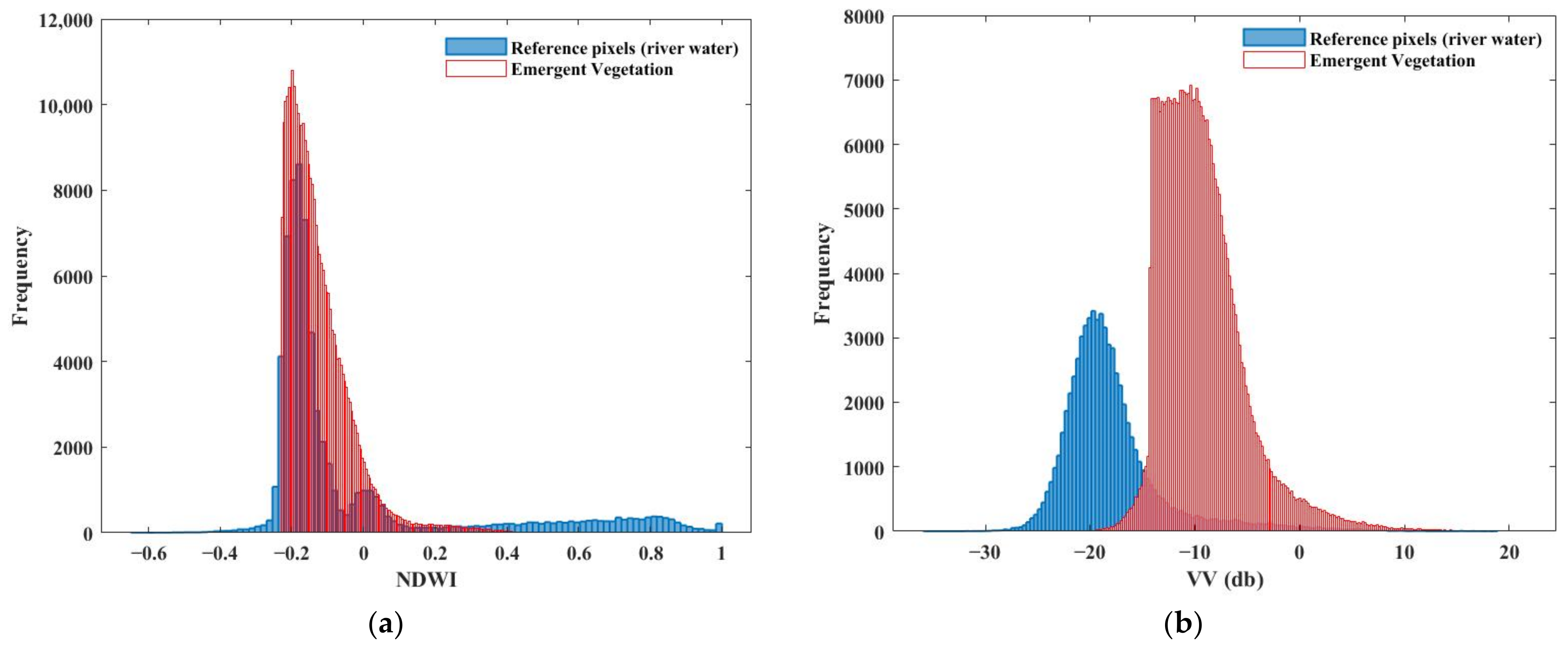

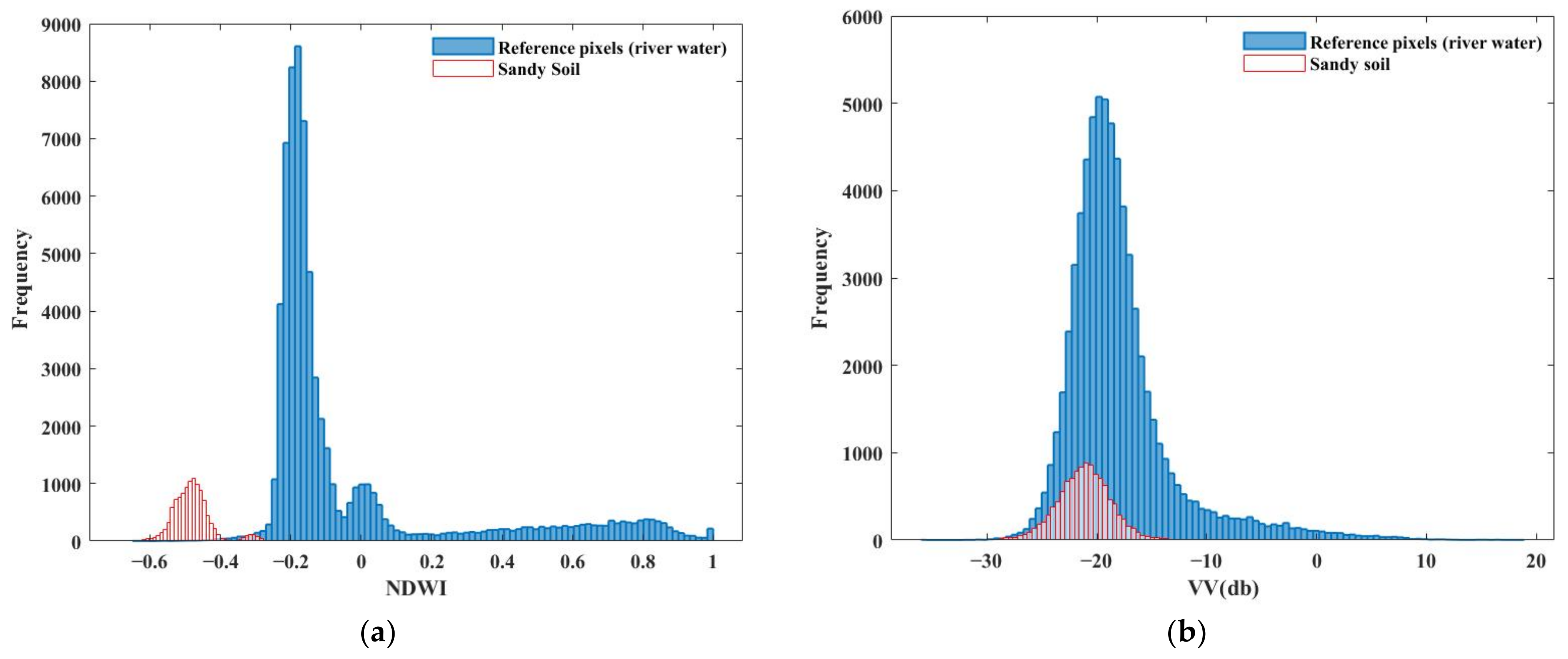

3.1. SAR and Multispectral Signatures of the Classes

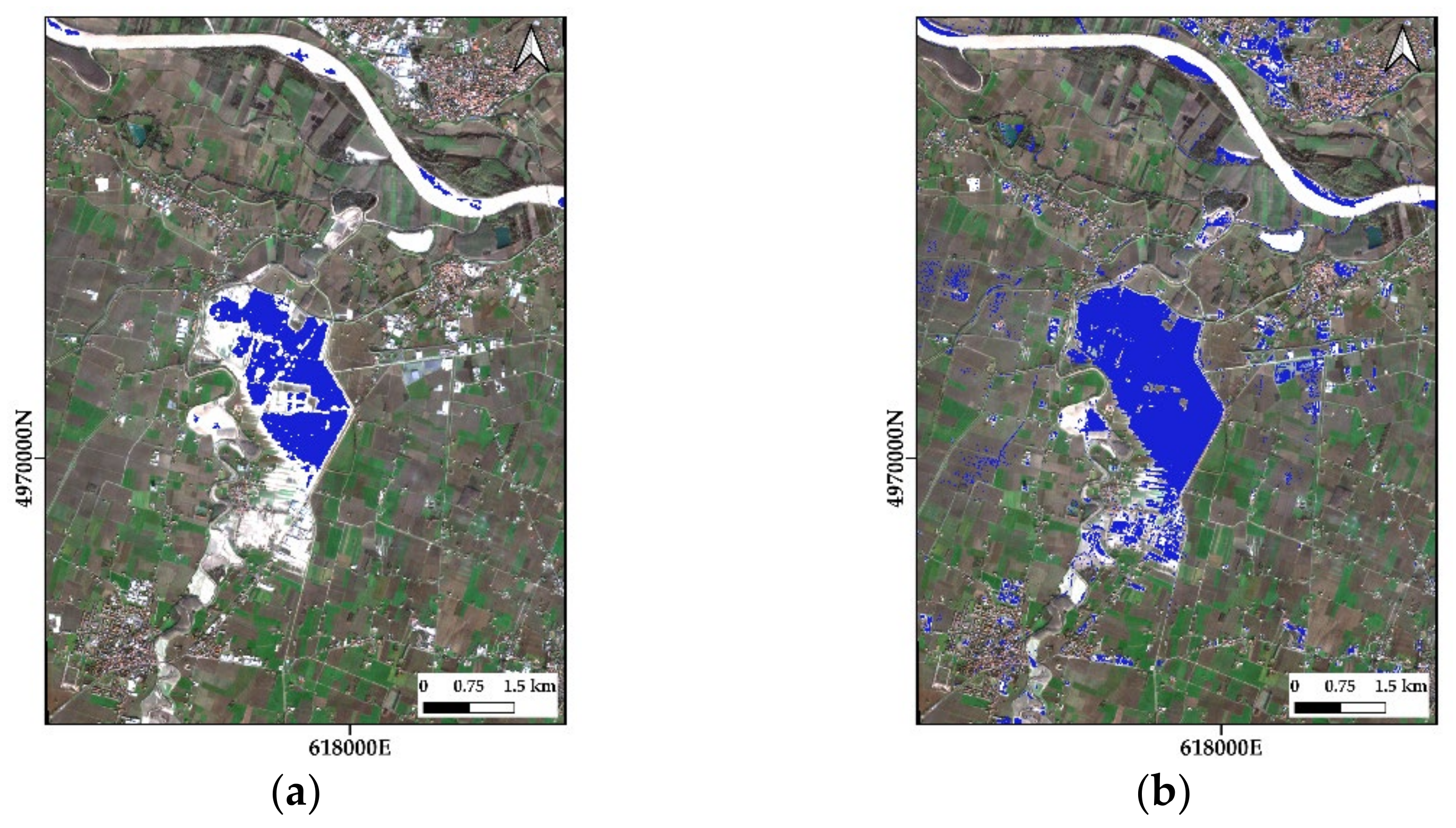

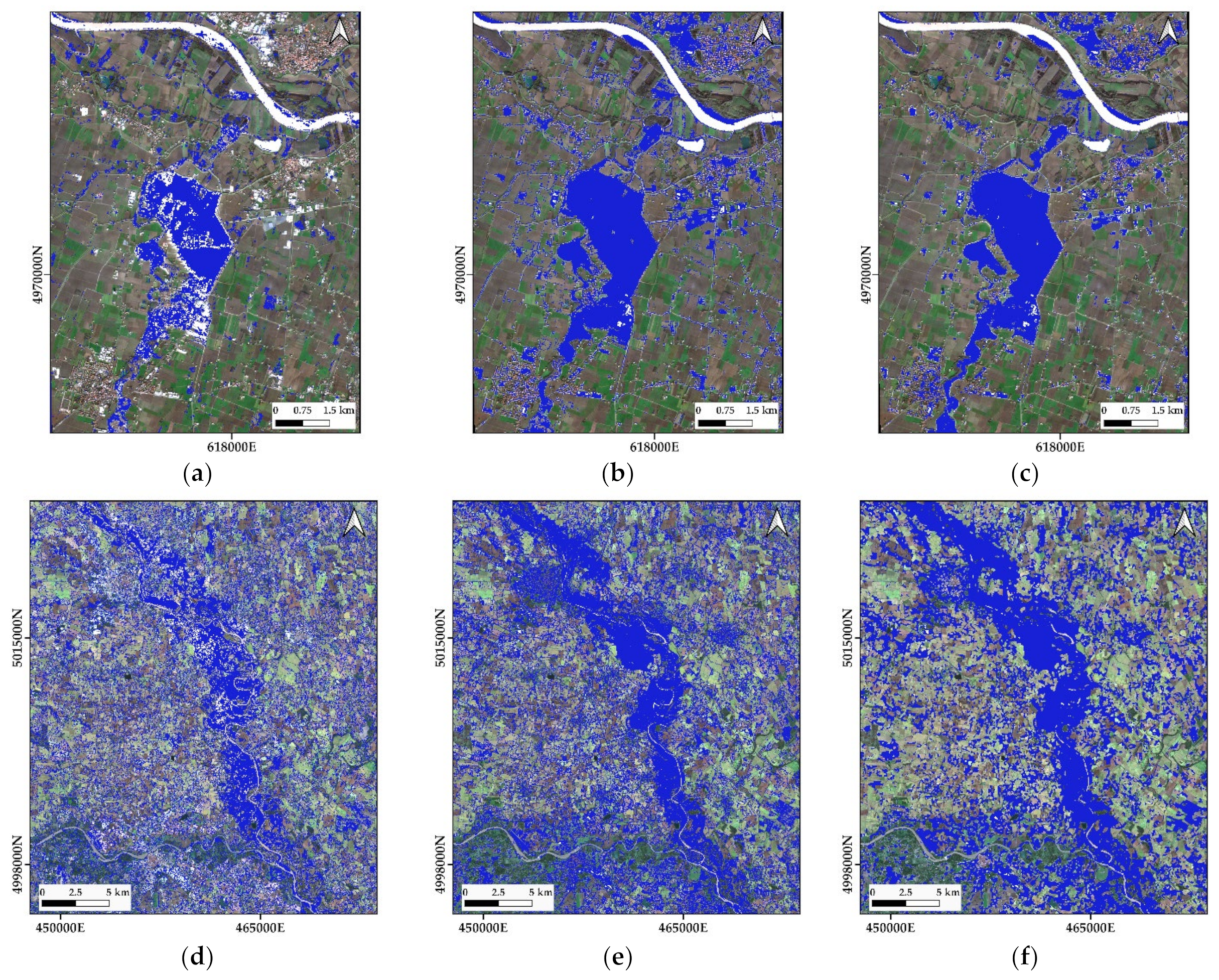

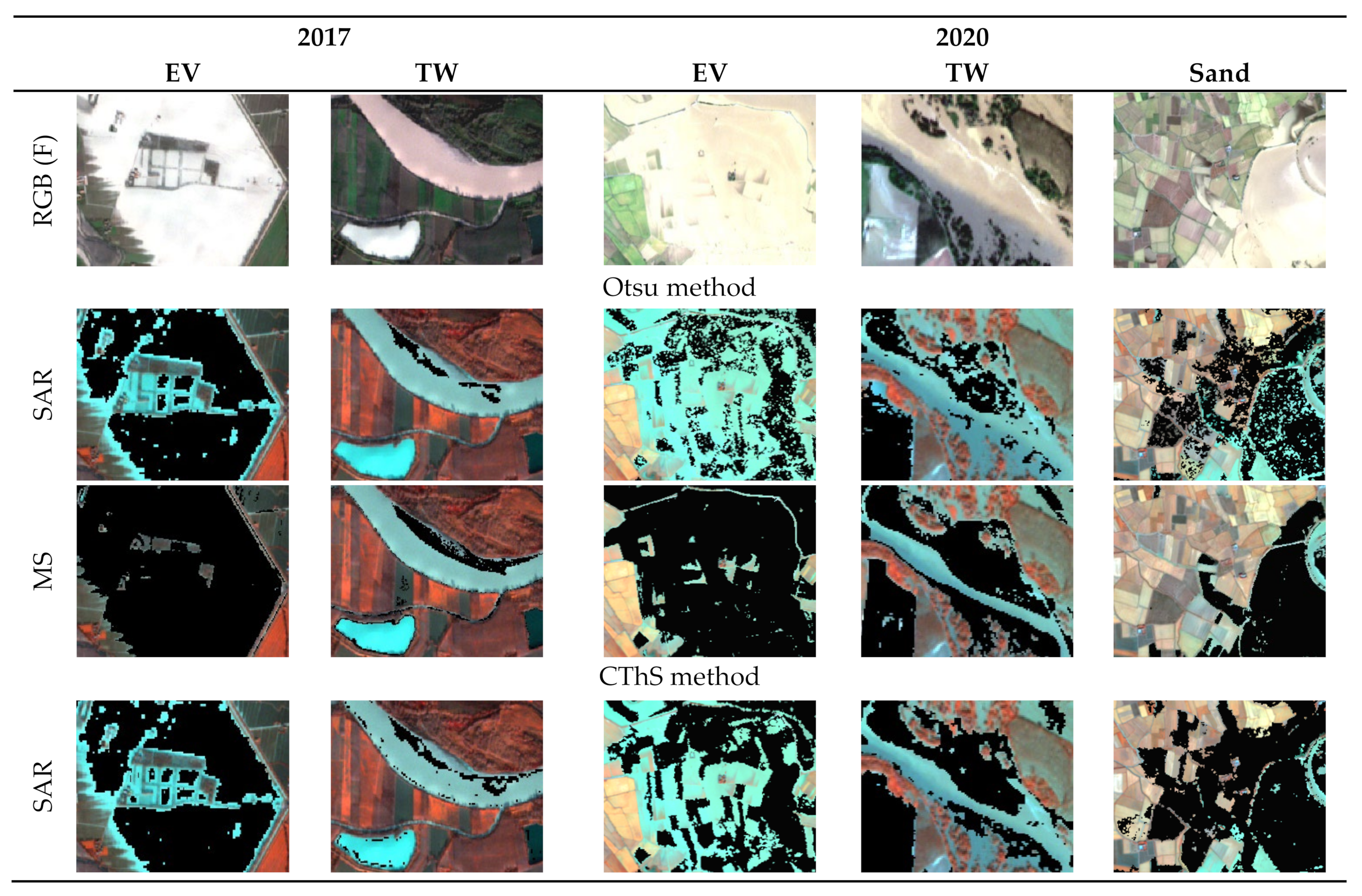

3.2. Flood Maps Derived from Unsupervised Methods: Otsu and CThS Methods

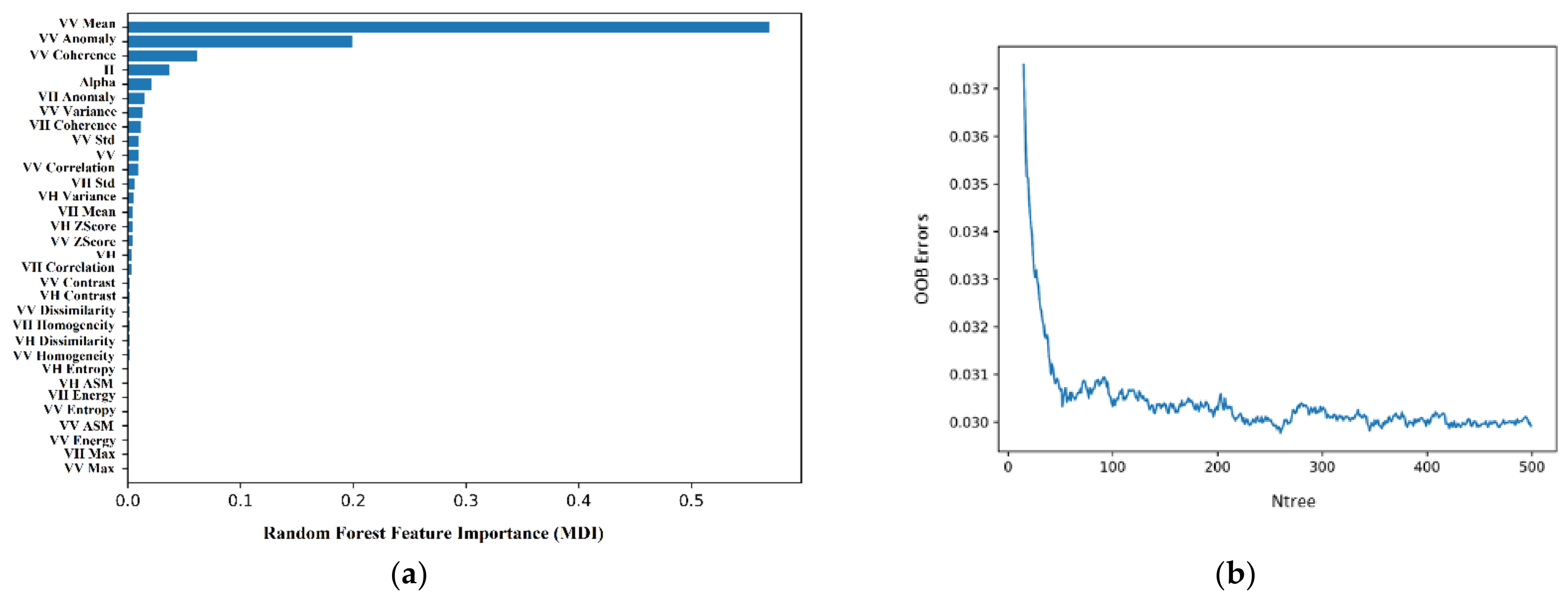

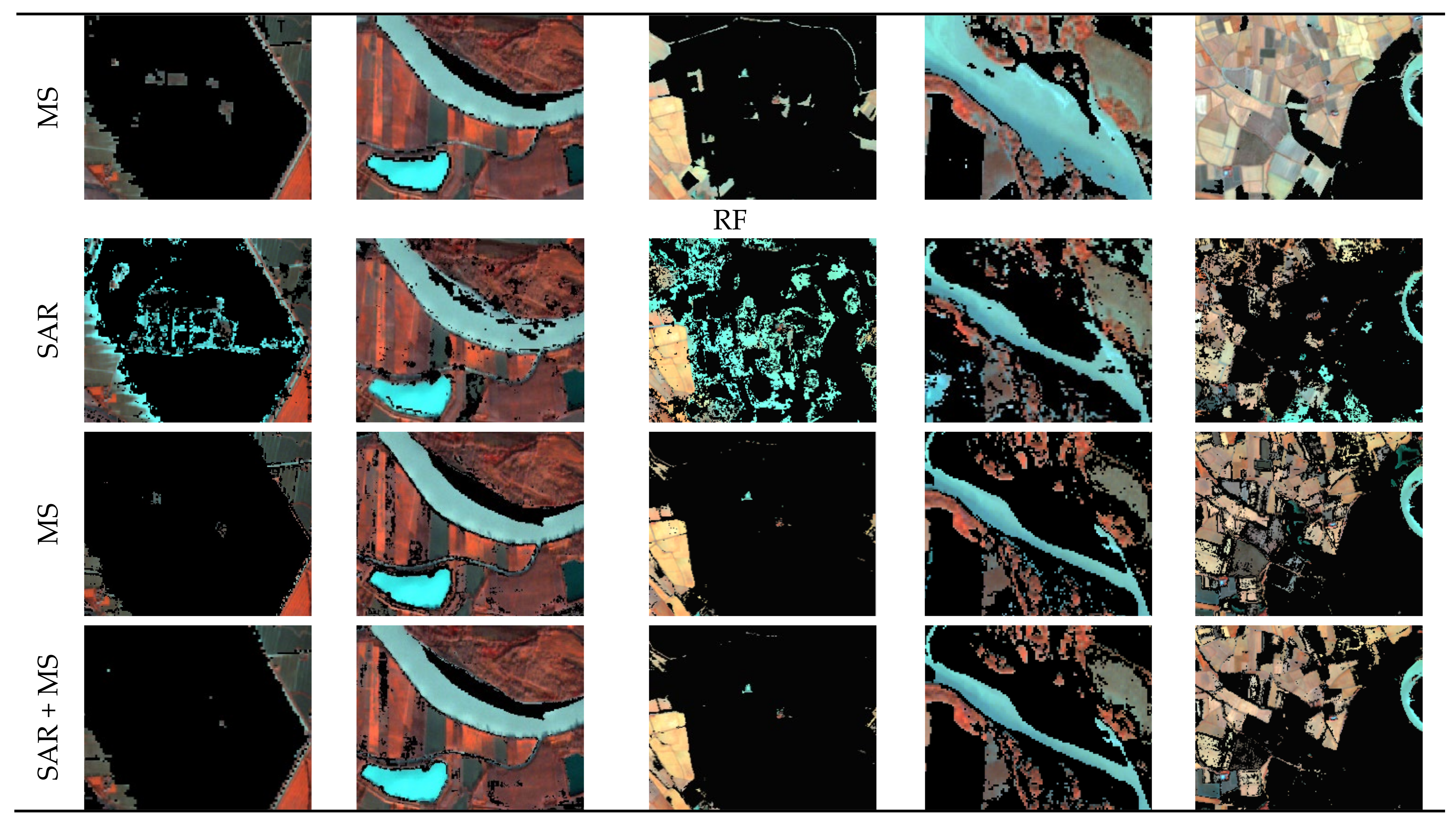

3.3. Flood Maps with Supervised Methods: Random Forest Classification

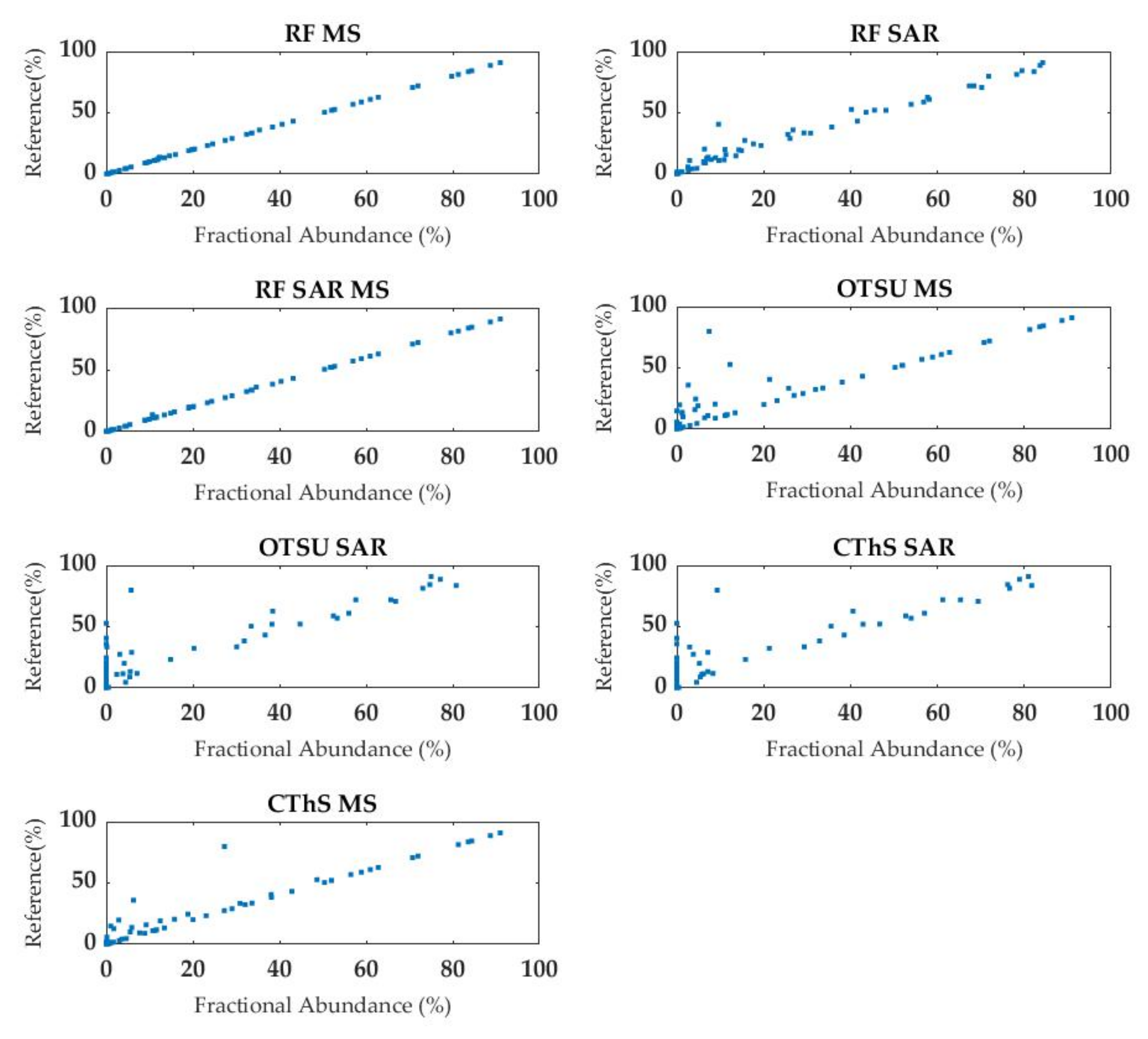

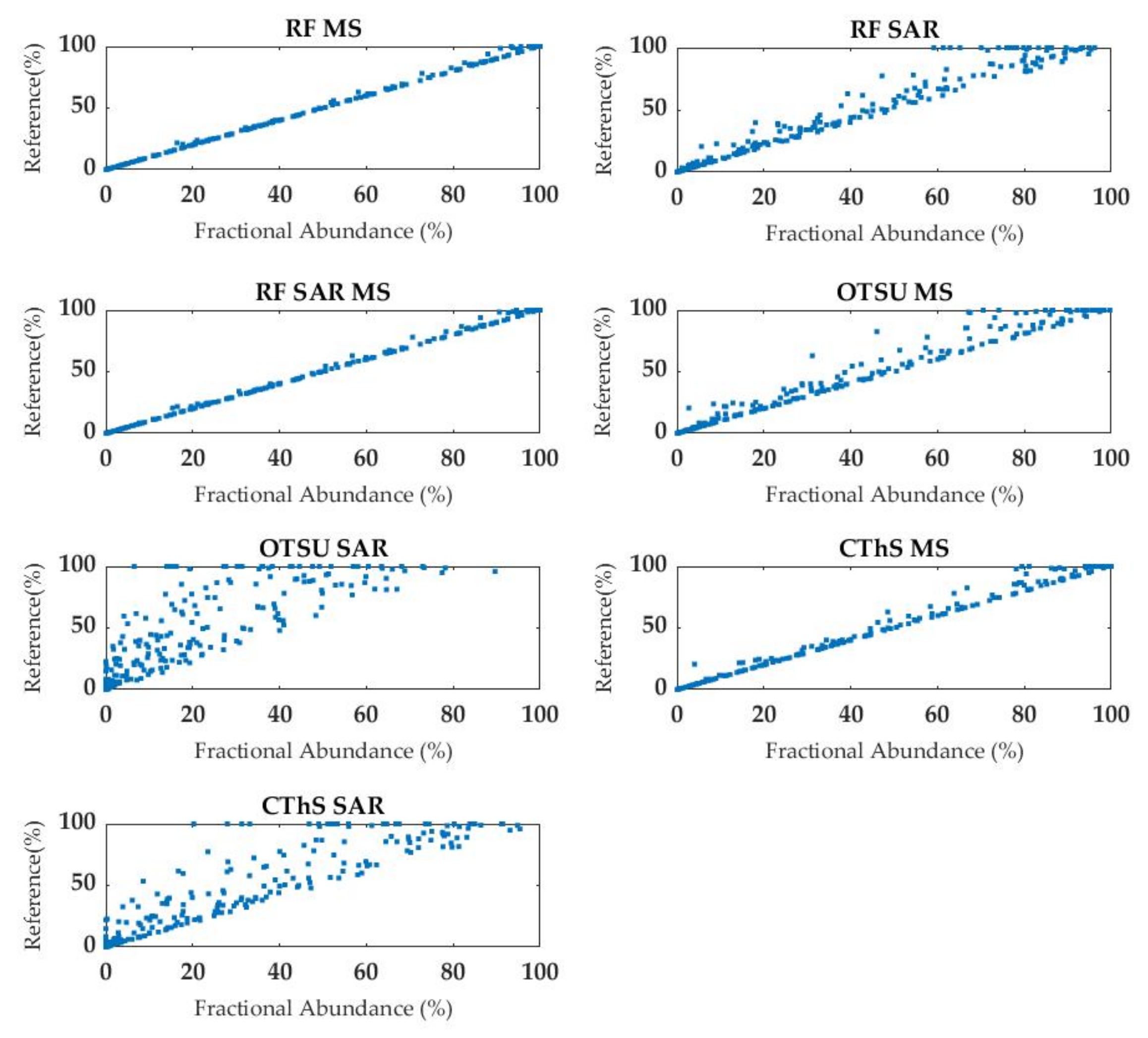

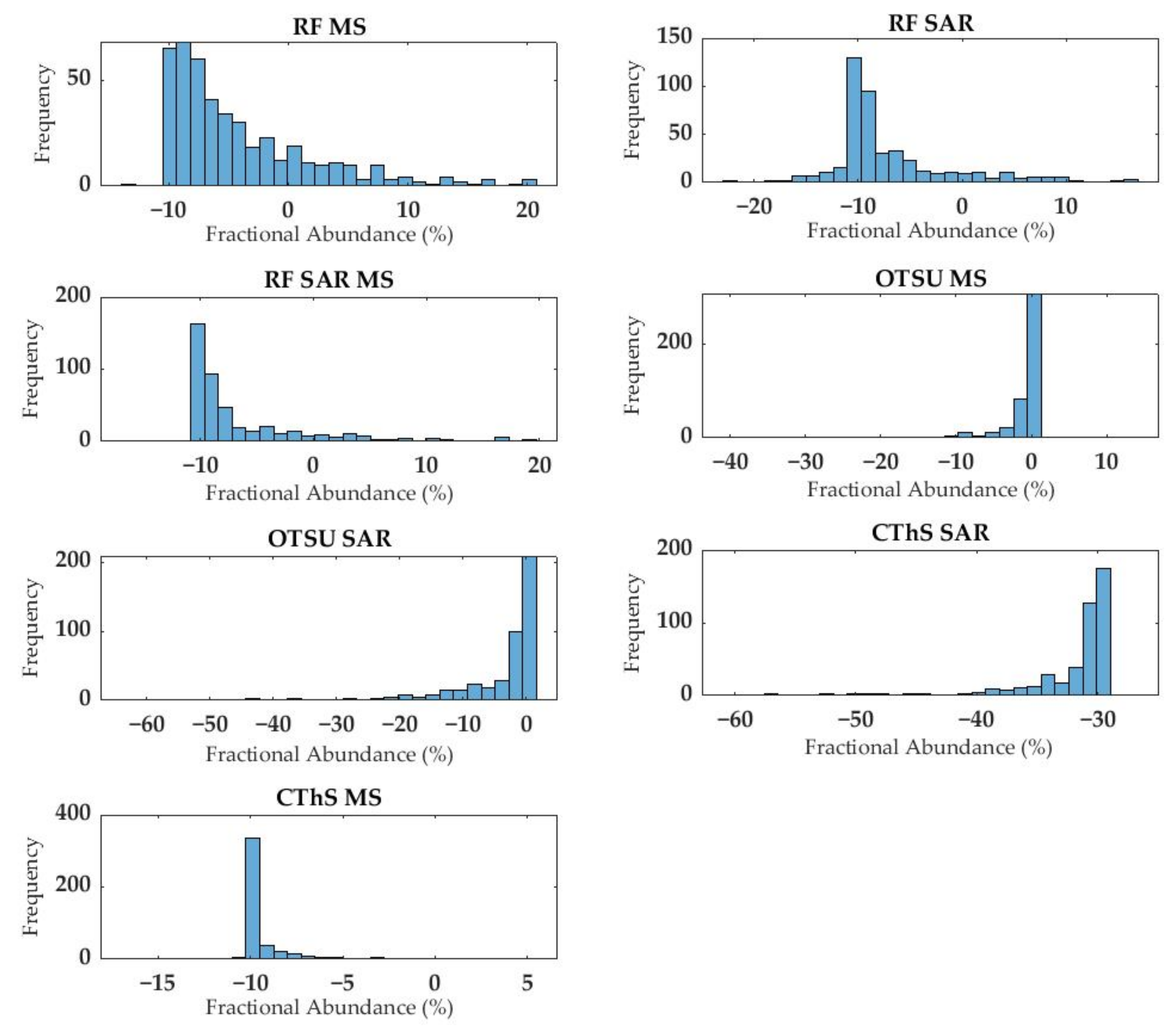

3.4. Evaluation of Flood Delineation

3.5. Sub-Cases: Emergent Vegetation, Sandy Areas, and Turbid Water

4. Discussion

- Delineation of landscape units;

- Spectral and backscatter features;

- Classification methods.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Smith, L.C. Satellite remote sensing of river inundation area, stage, and discharge: A review. Hydrol. Process. 1997, 11, 1427–1439.

- Domenikiotis, C.; Loukas, A.; Dalezios, N. The use of NOAA/AVHRR satellite data for monitoring and assessment of forest fires and floods. Nat. Earth Syst. Sci. 2003, 3, 115–128.

- Fayne, J.V.; Bolten, J.D.; Doyle, C.S.; Fuhrmann, S.; Rice, M.T.; Houser, P.R.; Lakshmi, V. Flood mapping in the lower Mekong River Basin using daily MODIS observations. Int. J. Remote Sens. 2017, 38, 1737–1757.

- Powell, S.; Jakeman, A.; Croke, B. Can NDVI response indicate the effective flood extent in macrophyte dominated floodplain wetlands? Ecol. Indic. 2014, 45, 486–493.

- Zoffoli, M.L.; Kandus, P.; Madanes, N.; Calvo, D.H. Seasonal and interannual analysis of wetlands in South America using NOAA-AVHRR NDVI time series: The case of the Parana Delta Region. Landsc. Ecol. 2008, 23, 833–848.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Abazaj, F. SENTINEL-2 Imagery for Mapping and Monitoring Flooding in Buna River Area. J. Int. Environ. Appl. Sci. 2020, 15, 48–53.

- Gao, B.-C. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

- McFeeters, S.K. Using the normalized difference water index (NDWI) within a geographic information system to detect swimming pools for mosquito abatement: A practical approach. Remote Sens. 2013, 5, 3544–3561.

- Memon, A.A.; Muhammad, S.; Rahman, S.; Haq, M. Flood monitoring and damage assessment using water indices: A case study of Pakistan flood-2012. Egypt. J. Remote Sens. Space Sci. 2015, 18, 99–106.

- Thomas, R.F.; Kingsford, R.T.; Lu, Y.; Cox, S.J.; Sims, N.C.; Hunter, S.J. Mapping inundation in the heterogeneous floodplain wetlands of the Macquarie Marshes, using Landsat Thematic Mapper. J. Hydrol. 2015, 524, 194–213.

- Yang, X.; Zhao, S.; Qin, X.; Zhao, N.; Liang, L. Mapping of urban surface water bodies from Sentinel-2 MSI imagery at 10 m resolution via NDWI-based image sharpening. Remote Sens. 2017, 9, 596.

- Fisher, A.; Flood, N.; Danaher, T. Comparing Landsat water index methods for automated water classification in eastern Australia. Remote Sens. Environ. 2016, 175, 167–182.

- Shen, L.; Li, C. Water body extraction from Landsat ETM+ imagery using adaboost algorithm. In Proceedings of the 2010 18th International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; IEEE: New York, NY, USA, 2010.

- Wilson, E.H.; Sader, S.A. Detection of forest harvest type using multiple dates of Landsat TM imagery. Remote Sens. Environ. 2002, 80, 385–396.

- Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

- Rokni, K.; Ahmad, A.; Selamat, A.; Hazini, S. Water feature extraction and change detection using multitemporal Landsat imagery. Remote Sens. 2014, 6, 4173–4189.

- Bangira, T.; Alfieri, S.M.; Menenti, M.; Van Niekerk, A. Comparing thresholding with machine learning classifiers for mapping complex water. Remote Sens. 2019, 11, 1351.

- Deijns, A.A.J.; Dewitte, O.; Thiery, W.; d’Oreye, N.; Malet, J.-P.; Kervyn, F. Timing landslide and flash flood events from SAR satellite: A new method illustrated in African cloud-covered tropical environments. Nat. Hazards Earth Syst. Sci. Discuss. 2022, 172, 1–38.

- Bhatt, C.; Thakur, P.K.; Singh, D.; Chauhan, P.; Pandey, A.; Roy, A. Application of active space-borne microwave remote sensing in flood hazard management. In Geospatial Technologies for Land and Water Resources Management; Springer: Cham, Switzerland, 2022; pp. 457–482.

- Santangelo, M.; Cardinali, M.; Bucci, F.; Fiorucci, F.; Mondini, A.C. Exploring event landslide mapping using Sentinel-1 SAR backscatter products. Geomorphology 2022, 397, 108021.

- Laugier, O.; Fellah, K.; Tholey, N.; Meyer, C.; De Fraipont, P. High temporal detection and monitoring of flood zone dynamic using ERS data around catastrophic natural events: The 1993 and 1994 Camargue flood events. In Proceedings of the third ERS Symposium, ESA SP-414, Florence, Italy, 17–21 March 1997.

- White, L.; Brisco, B.; Dabboor, M.; Schmitt, A.; Pratt, A. A collection of SAR methodologies for monitoring wetlands. Remote Sens. 2015, 7, 7615–7645.

- Martinis, S.; Plank, S.; Ćwik, K. The use of Sentinel-1 time-series data to improve flood monitoring in arid areas. Remote Sens. 2018, 10, 583.

- Martone, M.; Bräutigam, B.; Rizzoli, P.; Krieger, G. TanDEM-X performance over sandy areas. In Proceedings of the EUSAR 2014, 10th European Conference on Synthetic Aperture Radar, Berlin, Germany, 3–5 June 2014; VDE: Berlin, Germany, 2014.

- Ahmed, K.R.; Akter, S. Analysis of landcover change in southwest Bengal delta due to floods by NDVI, NDWI and K-means cluster with Landsat multi-spectral surface reflectance satellite data. Remote Sens. Appl. Soc. Environ. 2017, 8, 168–181.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. Unsupervised rapid flood mapping using Sentinel-1 GRD SAR images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3290–3299.

- Kordelas, G.A.; Manakos, I.; Aragonés, D.; Díaz-Delgado, R.; Bustamante, J. Fast and automatic data-driven thresholding for inundation mapping with Sentinel-2 data. Remote Sens. 2018, 10, 910.

- Landuyt, L.; Verhoest, N.E.; Van Coillie, F.M. Flood mapping in vegetated areas using an unsupervised clustering approach on Sentinel-1 and -2 imagery. Remote Sens. 2020, 12, 3611.

- Liang, J.; Liu, D. A local thresholding approach to flood water delineation using Sentinel-1 SAR imagery. ISPRS J. Photogramm. Remote Sens. 2020, 159, 53–62.

- Zhang, Q.; Zhang, P.; Hu, X. Unsupervised GRNN flood mapping approach combined with uncertainty analysis using bi-temporal Sentinel-2 MSI imageries. Int. J. Digit. Earth 2021, 14, 1561–1581.

- Acharya, T.D.; Subedi, A.; Lee, D.H. Evaluation of Machine Learning Algorithms for Surface Water Extraction in a Landsat 8 Scene of Nepal. Sensors 2019, 19, 2769.

- Huang, M.; Jin, S. Rapid flood mapping and evaluation with a supervised classifier and change detection in Shouguang using Sentinel-1 SAR and Sentinel-2 optical data. Remote Sens. 2020, 12, 2073.

- Nandi, I.; Srivastava, P.K.; Shah, K. Floodplain mapping through support vector machine and optical/infrared images from Landsat 8 OLI/TIRS sensors: Case study from Varanasi. Water Resour. Manag. 2017, 31, 1157–1171.

- Tong, X.; Luo, X.; Liu, S.; Xie, H.; Chao, W.; Liu, S.; Liu, S.; Makhinov, A.; Makhinova, A.; Jiang, Y. An approach for flood monitoring by the combined use of Landsat 8 optical imagery and COSMO-SkyMed radar imagery. ISPRS J. Photogramm. Remote Sens. 2018, 136, 144–153.

- Benoudjit, A.; Guida, R. A novel fully automated mapping of the flood extent on SAR images using a supervised classifier. Remote Sens. 2019, 11, 779.

- Esfandiari, M.; Abdi, G.; Jabari, S.; McGrath, H.; Coleman, D. Flood hazard risk mapping using a pseudo supervised random forest. Remote Sens. 2020, 12, 3206.

- Ji, L.; Zhang, L.; Wylie, B. Analysis of dynamic thresholds for the normalized difference water index. Photogramm. Eng. Remote Sens. 2009, 75, 1307–1317.

- Townsend, P.A.; Walsh, S.J. Modeling floodplain inundation using an integrated GIS with radar and optical remote sensing. Geomorphology 1998, 21, 295–312.

- Chapman, B.; Russo, I.M.; Galdi, C.; Morris, M.; di Bisceglie, M.; Zuffada, C.; Lavalle, M. Comparison of SAR and CYGNSS surface water extent metrics over the Yucatan lake wetland site. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: New York, NY, USA, 2021.

- Moser, L.; Schmitt, A.; Wendleder, A.; Roth, A. Monitoring of the Lac Bam wetland extent using dual-polarized X-band SAR data. Remote Sens. 2016, 8, 302.

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. Monitoring flood evolution in vegetated areas using COSMO-SkyMed data: The Tuscany 2009 case study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1807–1816.

- Chaouch, N.; Temimi, M.; Hagen, S.; Weishampel, J.; Medeiros, S.; Khanbilvardi, R. A synergetic use of satellite imagery from SAR and optical sensors to improve coastal flood mapping in the Gulf of Mexico. Hydrol. Process. 2012, 26, 1617–1628.

- Refice, A.; Capolongo, D.; Pasquariello, G.; D’Addabbo, A.; Bovenga, F.; Nutricato, R.; Lovergine, F.P.; Pietranera, L. SAR and InSAR for flood monitoring: Examples with COSMO-SkyMed data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2711–2722.

- Haralick, R.M.; Shapiro, L.G. Image segmentation techniques. Comput. Vis. Graph. Image Process. 1985, 29, 100–132.

- Druce, D.; Tong, X.; Lei, X.; Guo, T.; Kittel, C.M.; Grogan, K.; Tottrup, C. An optical and SAR based fusion approach for mapping surface water dynamics over mainland China. Remote Sens. 2021, 13, 1663.

- Chini, M.; Hostache, R.; Giustarini, L.; Matgen, P. A hierarchical split-based approach for parametric thresholding of SAR images: Flood inundation as a test case. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6975–6988.

- Bangira, T.; Alfieri, S.M.; Menenti, M.; Van Niekerk, A.; Vekerdy, Z. A spectral unmixing method with ensemble estimation of endmembers: Application to flood mapping in the Caprivi floodplain. Remote Sens. 2017, 9, 1013.

- Bovolo, F.; Bruzzone, L. A split-based approach to unsupervised change detection in large-size multitemporal images: Application to tsunami-damage assessment. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1658–1670.

- Feng, Q.; Liu, J.; Gong, J. Urban flood mapping based on unmanned aerial vehicle remote sensing and random forest classifier—A case of Yuyao, China. Water 2015, 7, 1437–1455.

- Porcù, F.; Leonardo, A. Data record on extreme events by OAL and by hazard. In Open-Air Laboratories for Nature Based Solutions to Manage Hydro-Meteo Risks (OPERANDUM); University of Bologna: Bologna, Italy, 2019; pp. 1–117.

- QN il Resto del Carlino. Meteo Reggio Emilia, la Piena del Fiume Enza sta Defluendo. 2017. Available online: https://www.ilrestodelcarlino.it/reggio-emilia/cronaca/meteo-fiume-enza-1.3603247 (accessed on 15 January 2021).

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.; Floury, N.; Brown, M. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24.

- Martinis, S.; Rieke, C. Backscatter analysis using multi-temporal and multi-frequency SAR data in the context of flood mapping at River Saale, Germany. Remote Sens. 2015, 7, 7732–7752.

- ESA. 2014. Available online: https://scihub.copernicus.eu/ (accessed on 15 January 2021).

- Richter, R.; Schläpfer, D. Atmospheric/topographic correction for satellite imagery. DLR Rep. DLR-IB 2005, 438, 565.

- Mayer, B.; Kylling, A. The libRadtran software package for radiative transfer calculations-description and examples of use. Atmos. Chem. Phys. 2005, 5, 1855–1877.

- Evans, D.L.; Farr, T.G.; Ford, J.; Thompson, T.W.; Werner, C. Multipolarization radar images for geologic mapping and vegetation discrimination. IEEE Trans. Geosci. Remote Sens. 1986, GE-24, 246–257.

- Wu, S.-T. Analysis of synthetic aperture radar data acquired over a variety of land cover. IEEE Trans. Geosci. Remote Sens. 1984, GE-22, 550–557.

- Wu, S.-T.; Sader, S.A. Multipolarization SAR data for surface feature delineation and forest vegetation characterization. IEEE Trans. Geosci. Remote Sens. 1987, GE-25, 67–76.

- Henry, J.B.; Chastanet, P.; Fellah, K.; Desnos, Y.L. Envisat multi-polarized ASAR data for flood mapping. Int. J. Remote Sens. 2006, 27, 1921–1929.

- Schumann, G.; Hostache, R.; Puech, C.; Hoffmann, L.; Matgen, P.; Pappenberger, F.; Pfister, L. High-resolution 3-D flood information from radar imagery for flood hazard management. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1715–1725.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Horritt, M.; Mason, D.; Luckman, A. Flood boundary delineation from synthetic aperture radar imagery using a statistical active contour model. Int. J. Remote Sens. 2001, 22, 2489–2507.

- Mason, D.C.; Speck, R.; Devereux, B.; Schumann, G.J.-P.; Neal, J.C.; Bates, P.D. Flood detection in urban areas using TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2009, 48, 882–894.

- Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Archer, K.J.; Kimes, R.V. Empirical characterization of random forest variable importance measures. Comput. Stat. Data Anal. 2008, 52, 2249–2260.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Nico, G.; Pappalepore, M.; Pasquariello, G.; Refice, A.; Samarelli, S. Comparison of SAR amplitude vs. coherence flood detection methods-a GIS application. Int. J. Remote Sens. 2000, 21, 1619–1631.

- Carreño Conde, F.; De Mata Muñoz, M. Flood monitoring based on the study of Sentinel-1 SAR images: The Ebro River case study. Water 2019, 11, 2454.

- Dellepiane, S.; Bo, G.; Monni, S.; Buck, C. SAR images and interferometric coherence for flood monitoring. In Proceedings of the IGARSS 2000. IEEE 2000 International Geoscience and Remote Sensing Symposium. Taking the Pulse of the Planet: The Role of Remote Sensing in Managing the Environment. Proceedings (Cat. No.00CH37120), Honolulu, HI, USA, 24–28 July 2000.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- DeVries, B.; Huang, C.; Armston, J.; Huang, W.; Jones, J.W.; Lang, M.W. Rapid and robust monitoring of flood events using Sentinel-1 and Landsat data on the Google Earth Engine. Remote Sens. Environ. 2020, 240, 111664.

- Doxaran, D.; Froidefond, J.-M.; Lavender, S.; Castaing, P. Spectral signature of highly turbid waters: Application with SPOT data to quantify suspended particulate matter concentrations. Remote Sens. Environ. 2002, 81, 149–161.

- McLaughlin, J.; Webster, K. Effects of a Changing Climate on Peatlands in Permafrost Zones: A Literature Review and Application to Ontario’s Far North; Ontario Forest Research Institute: Sault Ste. Marie, ON, Canada, 2013.

- Piemonte, R. Carta dei Suoli. 2021. Available online: https://www.regione.piemonte.it (accessed on 15 January 2021).

- Goffi, A.; Stroppiana, D.; Brivio, P.A.; Bordogna, G.; Boschetti, M. Towards an automated approach to map flooded areas from Sentinel-2 MSI data and soft integration of water spectral features. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101951.

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. An algorithm for operational flood mapping from synthetic aperture radar (SAR) data based on the fuzzy logic. Nat. Hazard Earth Syst. Sci. 2011, 11, 529–540.

- Kuppili, A. What Is the Time Complexity of a Random Forest, Both Building the Model and Classification? 2015. Available online: https://www.quora.com/What-is-the-time-complexity-of-a-Random-Forest-both-building-the-model-and-classification (accessed on 21 October 2021).

- Cohen, R. The Chan-Vese Algorithm; Technion, Israel Institute of Technology: Haifa, Israel, 2010.

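| Event 1 Enza River, 13 December 2017 | | | Event 2 Sesia River, 3 October 2020 | | |
|---|---|---|---|---|---|
| Tiles | Processing Level | Image Date | Tiles | Processing Level | Image Date |
| Asc. track number 15 | S-1 GRDH | 2 October 2017 | Asc. track number 88 | S-1 GRDH | 4 August 2020 |
| Asc. track number 15 | S-1 GRDH | 8 October 2017 | Asc. track number 88 | S-1 GRDH | 10 August 2020 |
| Asc. track number 15 | S-1 GRDH | 14 October 2017 | Asc. track number 88 | S-1 GRDH | 16 August 2020 |
| Asc. track number 15 | S-1 GRDH | 20 October 2017 | Asc. track number 88 | S-1 GRDH | 22 August 2020 |
| Asc. track number 15 | S-1 GRDH | 26 October 2017 | Asc. track number 88 | S-1 GRDH | 28 August 2020 |
| Asc. track number 15 | S-1 GRDH | 1 November 2017 | Asc. track number 88 | S-1 GRDH | 3 September 2020 |
| Asc. track number 15 | S-1 GRDH | 7 November 2017 | Asc. track number 88 | S-1 GRDH | 15 September 2020 |
| Asc. track number 15 | S-1 GRDH | 13 November 2017 | Asc. track number 88 | S-1 GRDH | 21 September 2020 |
| Asc. track number 15 | S-1 GRDH | 19 November 2017 | Asc. track number 88 | S-1 GRDH, S-1 SLC | 27 September 2020 |
| Asc. track number 15 | S-1 GRDH | 25 November 2017 | Asc. track number 88 | S-1 GRDH, S-1 SLC | 3 October 2020 |
| Asc. track number 15 | S-1 GRDH | 1 December 2017 | Asc. track number 88 | S-1 GRDH | 9 October 2020 |
| Asc. track number 15 | S-1 GRDH, S-1 SLC | 7 December 2017 | Asc. track number 88 | S-1 GRDH | 15 October 2020 |
| Asc. track number 15 | S-1 GRDH, S-1 SLC | 13 December 2017 | Asc. track number 88 | S-1 GRDH | 27 October 2020 |
| Granule T32TPQ | S-2 L2A | 24 October 2017 | Granule T32TMR | S-2 L2A | 9 August 2020 |
| Granule T32TPQ | S-2 L2A | 13 December 2017 | Granule T32TMR | S-2 L2A | 3 October 2020 |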

| Data Type | Features | Description | No. |
|---|---|---|---|
| Intensity (from GRDH) | Backscatter coefficients (VV, VH) | Log intensity in dB | 2 |
| Phase (from SLC) | Coherence (VV, VH) | Normalized cross-correlation coefficient between two interferometric images | 2 |
| Phase (from SLC) | H/Alpha Dual Decomposition (VV + VH) | Scattering mechanism information | 2 |
| Texture (from GRDH) | GLCM: Contrast, Dissimilarity, Homogeneity, Angular Second Moment, Energy, Maximum, Entropy, GLCMMean, GLCMVariance, GLCMCorrelation (VV, VH) | Gray Level Co-occurrence Matrix: second-order textural features [70] | 20 |
| Temporal statistics (from GRDH) | Std (VV, VH) | Time-series standard deviation | 2 |
| Temporal statistics (from GRDH) | Z_Scores (VV, VH) | The number of standard deviations time-series pixels lie from the mean | 2 |
| Temporal statistics (from GRDH) | Anomalies (VV, VH) | Temporal anomaly | 2 |
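The intensity and temporal-statistics rows of the feature table can be illustrated per pixel with a few lines of Python. This is a hedged sketch rather than the authors' processing chain: the table does not define the anomaly or say whether a population or sample standard deviation is used, so the code assumes the anomaly is the event value minus the time-series mean and uses the population standard deviation. The function names and the sample values are hypothetical.

```python
import math
from statistics import mean, pstdev


def to_db(linear_intensity):
    """Convert a linear backscatter intensity to decibels (log intensity)."""
    return 10.0 * math.log10(linear_intensity)


def temporal_features(series_db, event_db):
    """Per-pixel temporal statistics for one polarisation.

    series_db: backscatter (dB) of the reference time series for one pixel.
    event_db:  backscatter (dB) of the same pixel in the flood-event image.
    """
    mu = mean(series_db)
    sigma = pstdev(series_db)            # assumption: population std
    anomaly = event_db - mu              # assumption: deviation from the mean
    z_score = anomaly / sigma if sigma > 0 else 0.0
    return {"std": sigma, "anomaly": anomaly, "z_score": z_score}


# Hypothetical VV values for one pixel: stable pre-event series, dark event.
pre_event_vv = [-9.0, -10.0, -11.0, -10.0]
features = temporal_features(pre_event_vv, event_db=-17.0)
```

A strongly negative z-score means the event backscatter is far below what that pixel usually shows, which is the kind of drop that open flood water tends to produce in SAR imagery.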

| | Emergent Vegetation | Turbid Water | Clear Water | Vegetation | Soil |
|---|---|---|---|---|---|
| SAR | | | | | |
| Emergent vegetation | 324 | 319 | 11 | 511 | 304 |
| Turbid water | 117 | 2132 | 59 | 194 | 186 |
| Clear water | 0 | 158 | 492 | 9 | 1 |
| Vegetation | 366 | 640 | 24 | 4510 | 780 |
| Soil | 439 | 460 | 49 | 473 | 2588 |
| MS | | | | | |
| Emergent vegetation | 791 | 63 | 5 | 82 | 192 |
| Turbid water | 365 | 3646 | 0 | 0 | 157 |
| Clear water | 0 | 0 | 628 | 0 | 0 |
| Vegetation | 0 | 0 | 0 | 5531 | 79 |
| Soil | 90 | 0 | 2 | 84 | 3431 |
| SAR + MS | | | | | |
| Emergent vegetation | 768 | 84 | 6 | 17 | 321 |
| Turbid water | 396 | 3625 | 0 | 0 | 7 |
| Clear water | 0 | 0 | 628 | 0 | 0 |
| Vegetation | 0 | 0 | 0 | 5602 | 67 |
| Soil | 82 | 0 | 1 | 78 | 3464 |
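Per-class producer's accuracy can be derived from a confusion matrix such as the SAR + MS block above. The sketch below assumes that columns hold the reference (testing) samples and rows hold the classified labels. That orientation is not stated in the table itself; it is inferred from the column sums of the three water classes (1246, 3709, 635), which equal the "Total testing samples" row of the table that follows. The variable and function names are illustrative.

```python
CLASSES = ["Emergent vegetation", "Turbid water", "Clear water",
           "Vegetation", "Soil"]

# SAR + MS confusion matrix copied from the table above.
# Assumption: rows = classified label, columns = reference label.
SAR_MS = [
    [768, 84, 6, 17, 321],
    [396, 3625, 0, 0, 7],
    [0, 0, 628, 0, 0],
    [0, 0, 0, 5602, 67],
    [82, 0, 1, 78, 3464],
]


def column_sums(matrix):
    """Number of reference samples per class (sum down each column)."""
    return [sum(row[j] for row in matrix) for j in range(len(matrix))]


def producers_accuracy(matrix):
    """Fraction of reference samples of each class that were labelled correctly."""
    totals = column_sums(matrix)
    return [
        matrix[j][j] / totals[j] if totals[j] else float("nan")
        for j in range(len(matrix))
    ]


reference_totals = column_sums(SAR_MS)
accuracy_by_class = dict(zip(CLASSES, producers_accuracy(SAR_MS)))
```

Dividing the diagonal by the row sums instead would give the user's accuracy, under the same orientation assumption.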

| | EV | TW | CW |
|---|---|---|---|
| SAR Otsu | 58 | 1816 | 602 |
| SAR CThS | 149 | 2773 | 634 |
| SAR RF | 324 | 2132 | 492 |
| SAR + MS RF | 768 | 3625 | 628 |
| Total testing samples | 1246 | 3709 | 635 |

| | Producer’s Accuracy (%) | |
|---|---|---|
| | 2017 | 2020 |
| SAR Otsu | 78 | 44 |
| MS Otsu | 88 | 89 |
| SAR CThS | 79 | 63 |
| MS CThS | 90 | 94 |
| SAR RF | 92 | 64 |
| MS RF | 99 | 98 |
| SAR + MS RF | 99 | 98 |

| | Enza River, 2017 | | Sesia River, 2020 | |
|---|---|---|---|---|
| | R² | RMSE | R² | RMSE |
| SAR Otsu | 0.88 | 18.07 | 0.80 | 34.20 |
| MS Otsu | 0.91 | 13.23 | 1.00 | 8.10 |
| SAR CThS | 0.89 | 17.27 | 0.90 | 21.70 |
| MS CThS | 0.96 | 8.76 | 1.00 | 4.30 |
| SAR RF | 0.99 | 6.43 | 1.00 | 10.40 |
| MS RF | 1.00 | 0.23 | 1.00 | 1.40 |
| SAR + MS RF | 1.00 | 0.55 | 1.00 | 1.60 |
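For readers who want to reproduce scores of the kind reported in the table above, the two metrics can be computed as follows. This is a generic sketch under stated assumptions: the table does not specify which quantities are compared or which definition of R² is used, so the code takes paired reference and estimated values (for instance, flooded areas) and uses the coefficient of determination, 1 − SS_res/SS_tot. The function names and the sample numbers are hypothetical.

```python
import math


def rmse(reference, estimated):
    """Root-mean-square error between paired reference and estimated values."""
    n = len(reference)
    return math.sqrt(sum((r - e) ** 2 for r, e in zip(reference, estimated)) / n)


def r_squared(reference, estimated):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((r - e) ** 2 for r, e in zip(reference, estimated))
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    return 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")


# Hypothetical paired values, e.g. reference vs. mapped flooded area per unit.
reference_area = [1.0, 2.0, 3.0]
mapped_area = [1.0, 2.0, 4.0]

error = rmse(reference_area, mapped_area)
fit = r_squared(reference_area, mapped_area)
```

RMSE carries the units of the compared quantity, so values are only comparable between the two events if the same quantity and units were used for both.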

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Foroughnia, F.; Alfieri, S.M.; Menenti, M.; Lindenbergh, R. Evaluation of SAR and Optical Data for Flood Delineation Using Supervised and Unsupervised Classification. Remote Sens. 2022, 14, 3718. https://doi.org/10.3390/rs14153718